Abstract

For the free vibrations of multi-degree mechanical structures arising in structural dynamics, we solve the quadratic eigenvalue problem either by linearizing it to a generalized eigenvalue problem or by treating it directly, developing iterative detection methods for the real and complex eigenvalues. To solve the generalized eigenvalue problem, we impose a nonzero exciting vector on the eigen-equation and solve a nonhomogeneous linear system to obtain a response curve, which consists of the magnitudes of the n-vectors with respect to the eigen-parameter in a range. The n-dimensional eigenvector is supposed to be a superposition of a constant exciting vector and an m-vector, which can be obtained in terms of the eigen-parameter by solving the projected eigen-equation. In doing so, we save computational cost because the response curve is generated from data acquired in a lower-dimensional subspace. We develop a fast iterative detection method that maximizes the magnitude to locate the eigenvalue, which appears as a peak in the response curve. Through sequential zoom-in, a very accurate eigenvalue can be obtained. We reduce the number of eigen-equations by one to find the eigen-mode with a chosen component normalized to unity. The real and complex eigenvalues and eigen-modes can be determined simultaneously, quickly, and accurately by the proposed methods.

1. Introduction

In the free vibration of a q-degree mass-damping-spring structure, the system of differential equations for describing the motion is [1]

where is a time-dependent q-dimensional vector to signify the generalized displacements of the system.

In engineering applications, the mass matrix and the stiffness matrix are positive definite because they are related to the kinetic energy and the elastic strain energy, respectively. However, the damping properties of a system, reflected in the viscous damping matrix, are rarely known, which makes the matrix difficult to evaluate exactly [2,3].

In terms of the vibration mode , we can express the fundamental solution of Equation (1) as

which leads to a nonlinear eigen-equation for :

where is a matrix quadratic of the structure. Equation (3) is a quadratic eigenvalue problem (QEP) to determine the eigen-pair . In addition to the free vibrations of mechanical structures, many related systems that lead to quadratic eigenvalue problems were discussed by Tisseur and Meerbergen [4]. In the design of a linear structure, knowing the natural frequencies of the structure is vitally important for avoiding resonance with an external excitation. The natural frequency is the imaginary part of the eigenvalue in Equation (3); it is a frequency at which the structure vibrates easily [5,6].

Using the generalized Bezout method [7] to tackle Equation (3) one introduces a solvent matrix determined by

where is a zero matrix, such that a factorization of reads as

It gives us an opportunity to determine the eigenvalues of Equation (3) through the eigenvalues solved from two q-dimensional eigenvalue problems, generalized one and standard one:

The key point of the solvent matrix method is solving the nonlinear matrix Equation (4) to obtain an accurate matrix [8,9]. Most of the numerical methods that deal directly with the quadratic eigenvalue problem by solving Equations (4) and (6) are variants of Newton’s method [10,11,12,13]. These Newton variants converge only when the initial guess is close enough to the solution, and even for a good initial guess there is no guarantee that the method converges to the desired eigenvalue. Other methods for solving quadratic eigenvalue problems can be found in [14,15,16,17,18,19].

Defining

Equation (8) becomes a generalized eigenvalue problem for the n-vector :

where with . Equation (10) is used to determine the eigen-pair , which is a linear eigen-equation associated to the pencil , where is an eigen-parameter.

In the linearization from Equation (3) to Equation (10), an unsatisfactory aspect is that the dimension of the working space is doubled to . However, many powerful numerical methods are available for generalized eigenvalue problems [20,21]. The numerical computations in [22,23] revealed that methods based on the Krylov subspace, namely the Lanczos biorthogonalization algorithm and the Arnoldi algorithm, can be very effective for nonsymmetric eigenvalue problems. Among the many algorithms for solving matrix eigenvalue problems, the Arnoldi method [23,24,25,26], the nonsymmetric Lanczos algorithm [27], and the subspace iteration method [28] are well known. The affine Krylov subspace method was first developed by Liu [29] to solve systems of linear equations; it has not yet been used to solve generalized eigenvalue problems. The eig function in MATLAB is well known as an effective code for computing the eigenvalues of generalized eigenvalue problems. We will test it on the eigenvalue problem of highly ill-conditioned matrices and point out its limitation.
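The doubling of the dimension can be made concrete with a small sketch. Since the block matrices of Equation (10) are not reproduced in this text, the code below assumes the standard first companion form with the stacked vector [u; λu]; the function name `linearize_qep` and the 2-degree matrices are hypothetical and are not taken from the paper.

```python
import numpy as np

def linearize_qep(M, C, K):
    """Return (A, B) such that A x = lam B x with x = [u; lam*u]
    is equivalent to (lam^2 M + lam C + K) u = 0.
    Assumption: first companion form, not necessarily the paper's choice."""
    q = M.shape[0]
    I = np.eye(q)
    Z = np.zeros((q, q))
    A = np.block([[Z, I], [-K, -C]])
    B = np.block([[I, Z], [Z, M]])
    return A, B

# Hypothetical 2-degree data used only for illustration.
M = np.array([[2.0, 0.0], [0.0, 1.0]])
C = np.array([[0.3, -0.1], [-0.1, 0.2]])
K = np.array([[3.0, -1.0], [-1.0, 2.0]])

A, B = linearize_qep(M, C, K)
# B is invertible here because M is, so B^{-1} A shares its eigenvalues with the pencil.
eigenvalues = np.linalg.eigvals(np.linalg.solve(B, A))
# Each eigenvalue should make the quadratic matrix singular.
residuals = [abs(np.linalg.det(lam**2 * M + lam * C + K)) for lam in eigenvalues]
```

The pencil has size 2q by 2q, which is the cost of the linearization mentioned above; the residuals give a quick check that the eigenvalues of the pencil are also eigenvalues of the quadratic problem.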

The paper sets up a new detection method for determining the real and complex eigenvalues of Equation (10) in Section 2, where the ideas of the exciting vector and the excitation method (EM) are introduced and demonstrated with two examples. In Section 3, we express the generalized eigenvalue problem (10) in an affine Krylov subspace and derive a nonhomogeneous system of linear equations. To precisely locate the position of a real eigenvalue in the response curve, we develop an iterative detection method (IDM) in Section 4, where we derive new methods to compute the eigenvalue and the eigenvector. In Section 5, some examples of generalized eigenvalue problems are given. The EM and IDM are extended in Section 6 to directly solve the quadratic eigenvalue problem (3), where we derive the IDM in the eigen-parametric plane to determine the complex eigenvalue. In Section 7, several examples are given and solved either by linearizing them to generalized eigenvalue problems or by treating them in the original quadratic forms with the direct detection method. Finally, conclusions are drawn in Section 8.

2. A New Detection Method

Let be the set of all the eigenvalues of Equation (10), which may include pairs of complex conjugate eigenvalues. It is known that if then Equation (10) has only the trivial solution with and . In contrast, if then Equation (10) has a non-trivial solution with and , which is called the eigenvector. However, when n is large it is difficult to solve Equation (10) by hand to determine and . Instead, numerical methods have to be employed to solve Equation (10), from which is always obtained as a zero vector no matter what is, since the right-hand side of is zero.

To definitely determine to have a finite magnitude with , we consider a variable transformation from to by

where is a given nonzero vector, which being inserted into Equation (10) generates

It is important that the right-hand side is not zero because of , which is a given exciting vector that renders a nonzero response of ; then is available from Equation (11). We must emphasize that when is nearly singular, we cannot eliminate in Equation (12) by inverting the matrix to obtain .

2.1. Real Eigenvalue

Let the eigen-parameter in Equation (12) run over an interval , so that we can solve Equation (12) by the Gaussian elimination method to obtain and then . Hence, the response curve is formed by plotting the magnitude vs. the eigen-parameter in the interval . In contrast to Equation (10), we can now compute in Equation (12) and then by Equation (11) when is a given nonzero vector. Through this transformation, by solving the nonhomogeneous linear system (12) rather than the homogeneous linear system (10), the resultant vector generates a nonzero finite response of when the eigen-parameter tends to an eigenvalue, whereas for most eigen-parameters that are not near any eigenvalue the responses of are very small, close to zero. This technique for constructing a nonzero response curve is called the excitation method (EM).

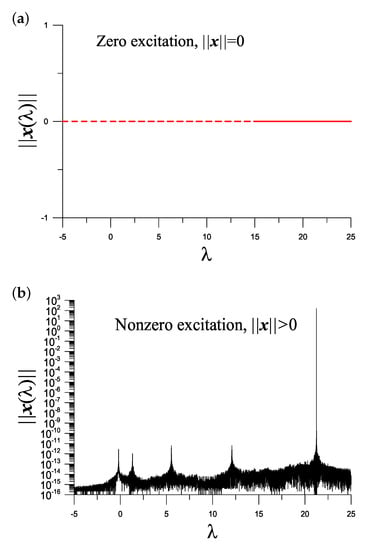

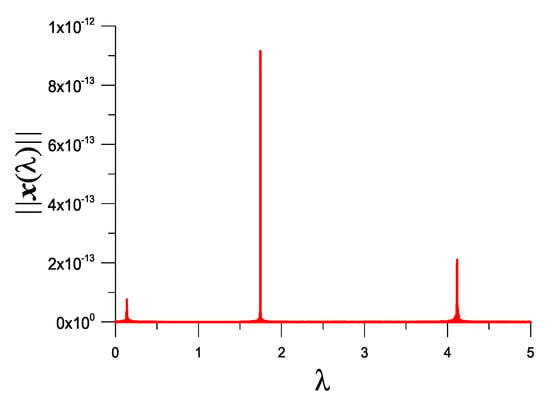

We take and , and we plot vs. the eigen-parameter in Figure 1a, wherein only zero values of appear.

Figure 1.

For a generalized eigenvalue problem (13), (a) zero response under a zero excitation, and (b) showing five peaks in the response curve under a nonzero excitation.

However, under a nonzero excitation with , we plot vs. the eigen-parameter in Figure 1b, where we can observe five peaks in the response curve, which signify the locations of the five real eigenvalues to be sought. When is not located at a peak point, is zero from the theoretical point of view; however, the value of shown in Figure 1b is not zero but very small, owing to the machine round-off error caused by using the Gaussian elimination method to solve Equation (12). In Section 4, we will develop an iterative method to precisely locate those eigenvalues based on the EM.
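The idea of reading eigenvalues off a response curve can be illustrated with a short sketch. Because Equations (11) and (12) are not reproduced in this text, the code does not claim to implement the paper's exact right-hand side; as a stated assumption it excites the system with a generic nonzero vector `f` and plots the magnitude of the solution of (A − λB) y = f, which grows without bound as λ approaches an eigenvalue whenever `f` is not orthogonal to the corresponding left eigenvector. The matrices and the names `response`, `f`, and `peaks` are hypothetical.

```python
import numpy as np

def response(A, B, f, lam):
    """Magnitude of y solving (A - lam*B) y = f.
    Returns infinity if the matrix is exactly singular at lam."""
    try:
        return np.linalg.norm(np.linalg.solve(A - lam * B, f))
    except np.linalg.LinAlgError:
        return np.inf

# Hypothetical 3 x 3 test pencil with eigenvalues 2 - sqrt(2), 2, 2 + sqrt(2).
A = np.array([[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]])
B = np.eye(3)
f = np.array([1.0, 2.0, 3.0])  # assumed exciting vector

grid = np.linspace(0.0, 4.0, 2000)
curve = np.array([response(A, B, f, lam) for lam in grid])

# Interior grid points that are larger than both neighbours mark the peaks.
is_peak = (curve[1:-1] > curve[:-2]) & (curve[1:-1] > curve[2:])
peaks = grid[1:-1][is_peak]
```

With this data the three peaks sit within one grid spacing of the three eigenvalues. A zero vector `f` would give a response that is identically zero, which mirrors the contrast between Figure 1a and Figure 1b.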

2.2. Complex Eigenvalue

Because and are real matrices, the eigenvalue may be a complex number, which is assumed to be

where , and and are, respectively, the real and imaginary parts of . Correspondingly, we take

No matter which is, since Equation (20) is a consistent linear system with a dimension , we can solve it by using the Gaussian elimination method to obtain and then by Equation (19).
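Solving a complex linear system with real arithmetic can be sketched as follows. Equation (20) is not reproduced in this text, so the block form below is the standard real splitting of (A − (α + iβ)B)(u + iv) = f + ig and is an assumption about its layout; the function name `solve_complex_as_real` and the test data are hypothetical.

```python
import numpy as np

def solve_complex_as_real(A, B, alpha, beta, f, g):
    """Solve (A - (alpha + i*beta) B)(u + i v) = f + i g as a real 2n system.
    Real part:      (A - alpha B) u + beta B v = f
    Imaginary part: -beta B u + (A - alpha B) v = g"""
    n = A.shape[0]
    R = A - alpha * B
    big = np.block([[R, beta * B], [-beta * B, R]])
    uv = np.linalg.solve(big, np.concatenate([f, g]))
    return uv[:n], uv[n:]

# Hypothetical 2 x 2 data; the eigenvalues of A are complex (1 +/- i*sqrt(6)).
A = np.array([[1.0, 2.0], [-3.0, 1.0]])
B = np.eye(2)
f = np.array([1.0, 0.0])
g = np.array([0.0, 1.0])
alpha, beta = 0.5, 1.0

u, v = solve_complex_as_real(A, B, alpha, beta, f, g)
# Reference solution using complex arithmetic directly.
z = np.linalg.solve(A - (alpha + 1j * beta) * B, f + 1j * g)
```

The real and imaginary parts of `z` agree with `u` and `v`, so either formulation can be used to evaluate the response over the eigen-parametric plane.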

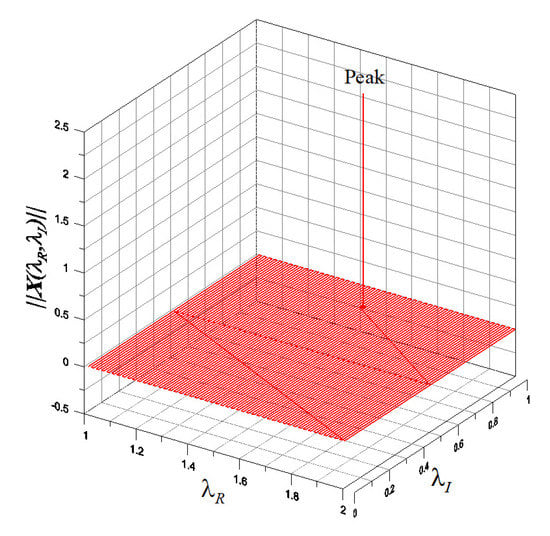

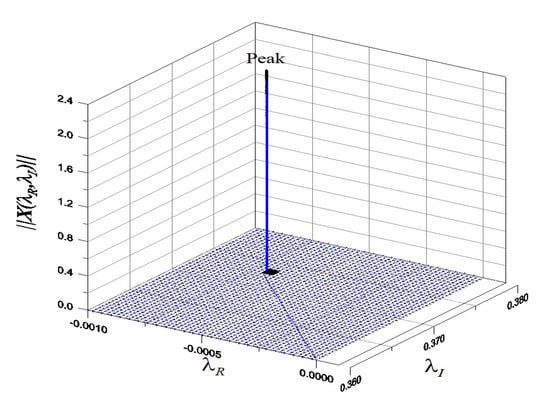

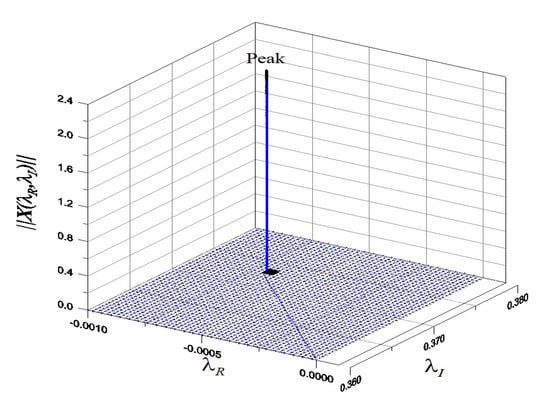

When and take values inside a rectangle by , we can plot vs. over the eigen-parametric plane, and investigate the property of the response surface.

For instance, for

we have a pair of complex eigenvalues:

We take , , and with , we plot over the plane in Figure 2, where we can observe one peak near to the point . More precise values of will be obtained by the iterative detection method to be developed in Section 6.

Figure 2.

For a 2 by 2 matrix the detection of a complex eigenvalue.

3. Generalized Eigenvalue Problem in an Affine Krylov Subspace

The detection method developed in the previous section has the weakness that we need to solve an n-dimensional linear system (12) for a real eigenvalue and a -dimensional linear system (20) for a complex eigenvalue. Therefore, at the many selected points inside the range we may spend a lot of computational time to construct the response curve for detecting real eigenvalues or the response surface for detecting complex eigenvalues. In this section, we develop detection methods in a lower-dimensional subspace instead of carrying them out in the full space.

3.1. The Krylov Subspace

When n is a large dimension, Equation (10) is a high-dimensional linear equations system. In order to reduce the dimension of the governing equation to m in an m-dimensional Krylov subspace, we begin with

The Krylov matrix is fixed by

which is an matrix with its jth column being the vector .

The Arnoldi process is used to normalize and orthogonalize the Krylov vectors , such that the resultant vectors satisfy , where is the Kronecker delta symbol. The Arnoldi procedure for the orthogonalization of can be written as follows [30].

denotes the Krylov matrix, where the subscript k in means the kth column of , which possesses the following property:
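The orthonormalization described above can be sketched in a few lines. The starting vector and the exact Krylov sequence are not reproduced in this text, so the code assumes the standard sequence v, Av, A²v, ... and uses modified Gram-Schmidt; the names `arnoldi_basis`, `A`, and `v` are illustrative only.

```python
import numpy as np

def arnoldi_basis(A, v, m):
    """Return an n x m matrix whose columns are an orthonormal basis of
    span{v, A v, ..., A^(m-1) v}, built by modified Gram-Schmidt."""
    n = v.size
    U = np.zeros((n, m))
    U[:, 0] = v / np.linalg.norm(v)
    for k in range(1, m):
        w = A @ U[:, k - 1]
        for j in range(k):
            # Remove the component of w along each earlier basis vector.
            w -= (U[:, j] @ w) * U[:, j]
        norm_w = np.linalg.norm(w)
        if norm_w < 1e-12:
            raise ValueError("Krylov subspace exhausted before dimension m")
        U[:, k] = w / norm_w
    return U

# Hypothetical data: a random 8 x 8 matrix and a 4-dimensional subspace.
rng = np.random.default_rng(0)
A = rng.standard_normal((8, 8))
v = rng.standard_normal(8)
U = arnoldi_basis(A, v, 4)
gram = U.T @ U  # should be close to the 4 x 4 identity
```

The Gram matrix of the columns is the identity up to round-off, which is the orthonormality property stated in terms of the Kronecker delta.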

3.2. Linear Nonhomogeneous Equations in an Affine Krylov Subspace

4. An Iterative Detection Method for Real Eigenvalue

It follows from Equation (29) that

which is the projection of Equation (10) into the affine Krylov subspace as a nonhomogeneous projected eigen-equation. Compared with the original eigen-equation in Equation (10), Equation (30) differs by the appearance of a nonhomogeneous term on the right-hand side. When we take on the right-hand side, is a zero vector solved by the numerical method and thus . To excite a nonzero response of , we must give a nonzero exciting vector on the right-hand side.

In Section 2, we gave an example showing that peaks of occur at the eigenvalues in the response curve of vs. . This motivates us to use a simple maximum method to determine the eigenvalue by collocating points inside an interval by

where the size of must be sufficiently large to include at least one eigenvalue . Therefore, the numerical procedures for determining the eigenvalue of a generalized eigenvalue problem are summarized as follows. (i) Select m, , a and b. (ii) Construct . (iii) For each collocating point , solve Equation (30), set , and take the optimal that satisfies Equation (31).

By repeated use of Equation (31), we gradually reduce the size of the interval centered at the previous peak point by renewing the interval to a finer one. Given an initial interval , we place the collocating points by and pick the maximal point, denoted by . Then, a finer interval is given by and , which is centered at the previous peak point and has a smaller length . In that new interval we pick the new maximum point, denoted by . Continuing this process until , we obtain the eigenvalue with high accuracy. This algorithm is abbreviated as the iterative detection method (IDM).

In order to construct the response curve, we choose a large interval of to include all eigenvalues, such that the rough locations of the eigenvalues can be observed in the response curve as peaks. Then, to precisely determine an individual eigenvalue, we choose a small initial interval that includes that eigenvalue as an interior point. A few iterations of the IDM can compute a very accurate eigenvalue.
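The zoom-in loop can be sketched as below. Several details are assumptions because the corresponding equations are not reproduced in this text: the response is evaluated in the full space with a generic exciting vector `f` rather than through the projected Equation (30), the refined interval extends two grid spacings to each side of the current peak, and the loop stops when the grid spacing falls below `tol`. The names `response` and `idm` and the test pencil are hypothetical.

```python
import numpy as np

def response(A, B, f, lam):
    """Magnitude of y solving (A - lam*B) y = f; infinite if exactly singular."""
    try:
        return np.linalg.norm(np.linalg.solve(A - lam * B, f))
    except np.linalg.LinAlgError:
        return np.inf

def idm(resp, a, b, n_points=51, tol=1e-10, max_iter=100):
    """Locate a peak of resp on [a, b] by repeated grid search and zoom-in.
    Returns the peak location and the number of iterations used."""
    for iteration in range(1, max_iter + 1):
        grid = np.linspace(a, b, n_points)
        values = np.array([resp(lam) for lam in grid])
        peak = grid[int(np.argmax(values))]
        h = (b - a) / (n_points - 1)
        if h < tol:
            return peak, iteration
        # Assumed refinement rule: new interval centered at the current peak.
        a, b = peak - 2.0 * h, peak + 2.0 * h
    return peak, max_iter

# Hypothetical pencil with smallest eigenvalue 2 - sqrt(2).
A = np.array([[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]])
B = np.eye(3)
f = np.array([1.0, 2.0, 3.0])

eigenvalue, iterations = idm(lambda lam: response(A, B, f, lam), 0.0, 1.2)
```

Each pass shrinks the interval by a fixed factor, so the number of iterations grows only logarithmically with the requested accuracy. The initial interval should contain a single eigenvalue; otherwise the loop converges to whichever peak is largest on the first grid.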

When the eigenvalue is obtained, if one wants to compute the eigenvector, we can normalize a nonzero th component of by . Let

where and are the components of and , respectively. Then, it follows from Equation (10) an -dimensional linear system:

where are constructed by

We can apply the Gaussian elimination method or the conjugate gradient method to solve in Equation (33). And then is computed by
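A minimal sketch of this normalization step is given below. Equations (32)-(35) are not reproduced in this text, so the construction is an assumption: the chosen component is set to one, its column is moved to the right-hand side, and the equation with the same index is dropped to leave a square system of dimension n − 1. The function name `eigenvector_with_unit_component` and the test pencil are hypothetical.

```python
import numpy as np

def eigenvector_with_unit_component(A, B, lam, j0):
    """Solve (A - lam*B) x = 0 with x[j0] = 1 via an (n-1)-dimensional system.
    Assumption: row j0 is the equation that is dropped."""
    n = A.shape[0]
    Cmat = A - lam * B
    keep = [i for i in range(n) if i != j0]
    reduced = Cmat[np.ix_(keep, keep)]   # delete row j0 and column j0
    rhs = -Cmat[keep, j0]                # column j0 times x[j0] = 1, moved right
    x = np.empty(n)
    x[j0] = 1.0
    x[keep] = np.linalg.solve(reduced, rhs)
    return x

# Hypothetical pencil; 2 - sqrt(2) is an exact eigenvalue of A with B = I.
A = np.array([[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]])
B = np.eye(3)
lam = 2.0 - np.sqrt(2.0)

x = eigenvector_with_unit_component(A, B, lam, j0=0)
residual = np.linalg.norm((A - lam * B) @ x)
```

For this data the result is close to (1, √2, 1). The dropped equation is satisfied automatically when `lam` is an accurate eigenvalue, so the residual of the full eigen-equation serves as an error check. The reduced matrix can itself be singular if the true eigenvector has a zero in position `j0`, in which case another index should be chosen.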

5. Examples of Generalized Eigenvalue Problems

Example 1.

We consider

and . The two smallest eigenvalues are . It is a highly ill-conditioned generalized eigenvalue problem due to Cond.

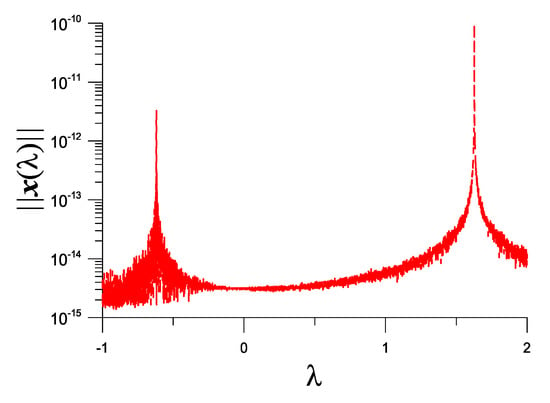

We take and plot vs. in Figure 3, where we can observe two peaks occurring at the two eigenvalues. The zigzags in the response curve are due to the ill-conditioned in Equation (10).

Figure 3.

For example 1, showing two peaks in the response curve obtained from the IDM, corresponding to eigenvalues −0.619402940600584 and 1.627440079051887.

Starting from and under a convergence criterion , with seven iterations we can obtain , which is very close to −0.619402940600584 with a difference . On the other hand, the error to satisfy Equation (10) is very small with , where is solved from Equations (32)–(35) with .

Starting from and with six iterations, we can obtain , which is very close to 1.627440079051887 with a difference , and is obtained.

Example 2.

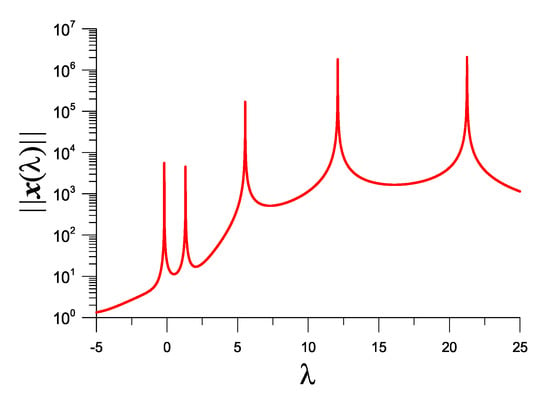

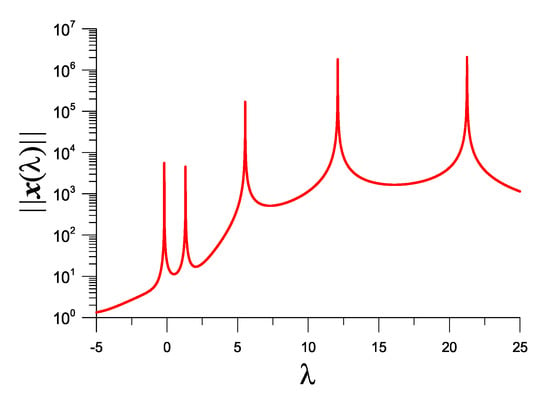

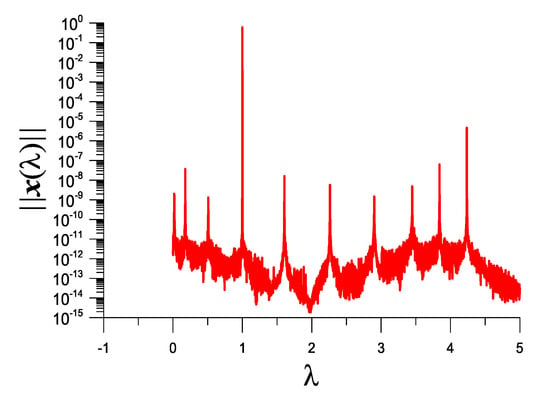

In Equation (10), and are given in Equation (13). We take and plot vs. λ in Figure 4, where five peaks in the response curve happen at five eigenvalues.

Figure 4.

For example 2, showing five peaks in the response curve obtained from the IDM, corresponding to five eigenvalues.

Starting from and under a convergence criterion , through five iterations is obtained. is obtained, where is solved from Equations (32)–(35) with .

Starting from and under a convergence criterion , we can obtain and after seven iterations.

With and seven iterations, we can obtain and .

With and seven iterations, we can obtain and .

With and seven iterations, we can obtain and .

Comparing the response curve in Figure 4 with that in Figure 1b shows that the numerical noise disappears when the IDM is used in the affine Krylov subspace.

Example 3.

Let and , . In Equation (10), we take [21]:

Other elements are all zeros, where we take . The eigenvalues are for .

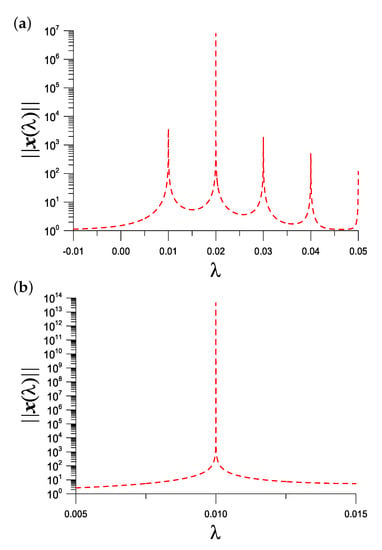

We take and plot vs. in Figure 5a, where five peaks are obtained by the IDM, which correspond to the first five eigenvalues. Within a finer interval in Figure 5b, one peak appears at a more precise position.

Figure 5.

For example 3, (a) showing five peaks of with in the interval [−0.01,0.05], and (b) a peak of enlarged in the interval [0.005,0.015], which is a process of zoom-in.

Starting from and with one iteration under a convergence criterion , the eigenvalue obtained is very close to 0.01 with an error . is obtained, where is solved from Equations (32)–(35) with .

With and , after two iterations the eigenvalue obtained is very close to 0.02 with an error . is obtained.

For this problem, if we take and to compute the eigenvalue in the full space, the computational time is increased. The CPU time spent to construct the response curve is 6.09 s. For the eigenvalue 0.01 the error is the same with , but the CPU time increases to 5.83 s. In contrast, the subspace method spent 1.05 s to construct the response curve, and the CPU time for the eigenvalue 0.01 is 1.05 s. When n is increased to , the subspace method with spent 1.05 s to construct the response curve and the CPU time for the eigenvalue 0.01 is 6.53 s; however, by using the full space method with the CPU time is 44.91 s, and the CPU time for the eigenvalue 0.01 is 44.67 s. Therefore, the m-dimensional affine Krylov subspace method is computationally more efficient than the full space method with dimension n.

To further test the efficiency of the proposed method, we consider the eigenvalue problem of Hilbert matrix [31]. In Equation (10), we take and

Due to the highly ill-conditioned nature of the Hilbert matrix, it is a quite difficult eigenvalue problem. For this problem, we take , and to compute the largest eigenvalue, which is given as 2.182696097757424. The affine Krylov subspace method converges very fast, within six iterations, and the CPU time is 1.52 s. The error of the eigen-equation is . However, when the largest eigenvalue is sought in the full space with dimension , the method does not converge within 100 iterations, and the CPU time increases to 33.12 s. By using MATLAB, we obtain 2.182696097757423, which is close to that obtained by the Krylov subspace method. However, MATLAB leads to a large error of Det, which indicates that the characteristic equation for the Hilbert matrix is highly ill-posed. Notice that the smallest eigenvalue is very difficult to compute, since it is very close to zero. Nevertheless, we can obtain the smallest eigenvalue with one iteration, and the error is obtained. For the eigenvalue problem of the Hilbert matrix, MATLAB leads to a wrong eigenvalue , which is negative and contradicts the positivity of the eigenvalues of the Hilbert matrix. The eig function in MATLAB cannot guarantee a positive eigenvalue for the positive definite Hilbert matrix: the first 41 eigenvalues are all negative, and the first 73 eigenvalues are less than . So most of these eigenvalues computed by MATLAB should be spurious. MATLAB is effective for general-purpose eigenvalue problems with normal matrices, but for highly ill-conditioned matrices its effectiveness might be lost.

In addition to the computational efficiency, the Krylov subspace method has several advantages, including easy implementation and the ability to detect all eigenvalues and compute all the corresponding eigenvectors simultaneously. One can roughly locate the eigenvalues from the peaks in the response curve and then determine precise values by using the IDM. Even for the eigenvalue problem of the highly ill-conditioned Hilbert matrix, the Krylov subspace method is reliable.

6. An Iterative Detection Method for Complex Eigenvalue

Instead of linearizing Equation (3) to a linear generalized eigenvalue problem in Equation (10), we treat the quadratic eigenvalue problem (3) directly. We now consider the detection of a complex eigenvalue of the quadratic eigenvalue problem (3). Because , and are real matrices, the complex eigenvalue is written as in Equation (14). When we take

inserting Equations (14) and (39) into Equation (3) yields

Letting

Equation (40) becomes

which is an dimensional homogeneous linear system.

The numerical procedures for determining the complex eigenvalue of the quadratic eigenvalue problem (3) are summarized as follows. (i) Give q, , a and b. (ii) For each collocating point , solve Equation (44) to obtain and take the optimal that satisfies

where the size of must be large enough to include at least one complex eigenvalue.

By Equation (45), we gradually reduce the size of the rectangle centered at the previous peak point by renewing the range to a finer one. Given an initial rectangle , we fix the collocating points by , and pick the maximal point, denoted by . Then, a finer rectangle is given by , and , and in that new rectangle we pick the new maximum point, denoted by . Continuing this process until , we obtain the complex eigenvalue with high accuracy. This algorithm is abbreviated as the iterative detection method (IDM).
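The two-dimensional zoom-in can be sketched in the same spirit. Equations (40)-(45) are not reproduced in this text, so the code rests on stated assumptions: the quadratic matrix λ²M + λC + K is excited by a generic nonzero vector `f`, complex arithmetic is used instead of the equivalent real system of doubled dimension, and the refined rectangle extends two grid spacings to each side of the current peak. The names `qep_response` and `idm_plane` and the 2-degree data are hypothetical.

```python
import numpy as np

def qep_response(M, C, K, f, alpha, beta):
    """Magnitude of u solving (lam^2 M + lam C + K) u = f at lam = alpha + i*beta."""
    lam = complex(alpha, beta)
    Q = lam**2 * M + lam * C + K
    try:
        return np.linalg.norm(np.linalg.solve(Q, f))
    except np.linalg.LinAlgError:
        return np.inf

def idm_plane(resp, a, b, c, d, n_points=21, tol=1e-10, max_iter=100):
    """Locate a peak of resp(alpha, beta) on [a, b] x [c, d] by grid search and zoom-in."""
    for iteration in range(1, max_iter + 1):
        alphas = np.linspace(a, b, n_points)
        betas = np.linspace(c, d, n_points)
        values = np.array([[resp(al, be) for be in betas] for al in alphas])
        i, j = np.unravel_index(np.argmax(values), values.shape)
        alpha0, beta0 = alphas[i], betas[j]
        h_alpha = (b - a) / (n_points - 1)
        h_beta = (d - c) / (n_points - 1)
        if max(h_alpha, h_beta) < tol:
            return complex(alpha0, beta0), iteration
        # Assumed refinement rule: new rectangle centered at the current peak.
        a, b = alpha0 - 2.0 * h_alpha, alpha0 + 2.0 * h_alpha
        c, d = beta0 - 2.0 * h_beta, beta0 + 2.0 * h_beta
    return complex(alpha0, beta0), max_iter

# Hypothetical damped system; one eigenvalue is -0.1 + i*sqrt(0.99).
M = np.eye(2)
C = 0.2 * np.eye(2)
K = np.array([[2.0, -1.0], [-1.0, 2.0]])
f = np.array([1.0, 0.5])

lam_found, iterations = idm_plane(
    lambda al, be: qep_response(M, C, K, f, al, be), -0.6, 0.4, 0.5, 1.5)
```

Restricting the rectangle to the upper half-plane picks one member of each complex conjugate pair; the other follows by conjugation because the coefficient matrices are real.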

When the complex eigenvalue is computed, we can apply the techniques in Equations (32)–(35) with replaced by and by in Equation (42) to compute the complex eigen-mode. The numerical procedures to detect the complex eigenvalue and to compute the complex eigenvector in Equations (14)–(18) for the generalized eigenvalue problem (10) are the same as those above.

7. Examples of Quadratic Eigenvalue Problems

7.1. Linearizing Method

For the quadratic eigenvalue problem (3) there are two major methods: the linearization method, which leads to a generalized eigenvalue problem as shown by Equation (10), and the decomposition method based on the generalized Bezout method as shown by Equations (4)–(6). In this section, we give two examples of the quadratic eigenvalue problem (3) solved by the iterative detection method of Section 4 applied to the resultant generalized eigenvalue problem, and we develop a direct detection method for Equation (3) and apply it to three other examples.

Example 4.

We consider the structural system (1) with [4]:

There exist four real eigenvalues and two imaginary eigenvalues i and .

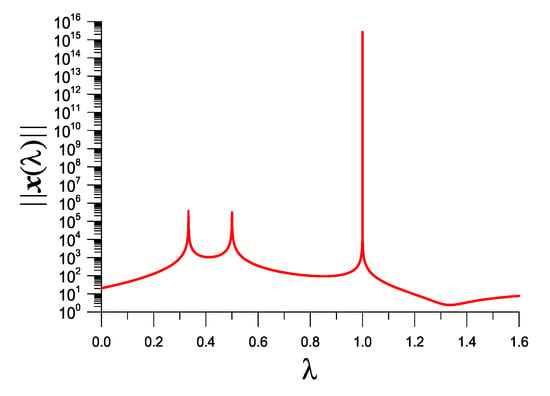

We take and plot vs. in Figure 6, where three peaks happen at . Starting from and under a convergence criterion , through six iterations is obtained with , where is solved from Equations (32)–(35) with . Starting from and after one iteration, we can obtain and is obtained. Starting from and after two iterations, we can obtain and , where is solved from Equations (32)–(35) with .

Figure 6.

For example 4, showing three peaks of in the response curve in the interval [0,1.6].

By Equation (47), we cannot recover . To remedy this defect, we notice that Equation (3) can also be expressed by Equation (10) with

Let . We can set up a new eigenvalue problem:

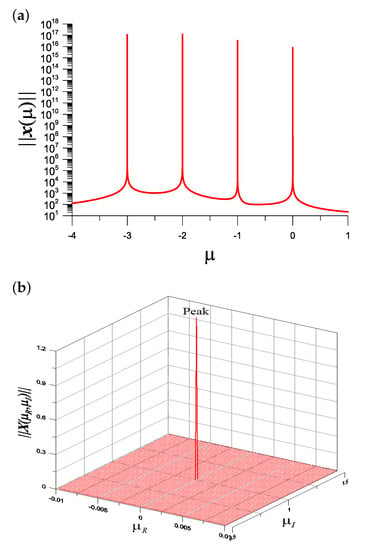

We take and plot vs. in Figure 7a, where we can observe four peaks occurring at , by which we can recover by using .

Figure 7.

For example 4, (a) showing four peaks of in the response curve in the interval [−4,1], and (b) a peak of over the plane enlarged in the interval .

Starting from and under a convergence criterion , after one iteration is obtained, and , where is solved from Equations (32)–(35) with . Starting from and after one iteration, we can obtain and . Starting from and after one iteration, we can obtain and is obtained, where is solved from Equations (32)–(35) with . Starting from and after one iteration, we can obtain .

We employ the IDM in Section 6 to locate the complex eigenvalue as shown in Figure 7b. We find that with the initial guess , we can find and with one iteration.

By the same token, when we locate the complex eigenvalue of Equation (21) with the initial guess , we can find and within ten iterations. Compared with the exact one in Equation (22), the error is , which is very accurate, and the IDM converges very fast.

In structural dynamics the coefficient matrices , and are symmetric; moreover, and are positive definite matrices. This example, with a non-positive definite matrix and a non-symmetric matrix , is borrowed from the literature [4]. This eigenvalue problem has an infinite eigenvalue.

Example 5.

We consider a free vibration problem of an MK structural system with [1]:

By inserting into Equation (1) with , we can derive

Let for . We take and plot vs. in Figure 8, where we can observe three peaks, whose precise values are 0.1391941468883, 1.745898311614913 and 4.114907541476740, respectively. Those values coincide with the roots obtained from the characteristic equation Det, that is,

Figure 8.

For example 5, showing three peaks of in the response curve in the interval [0,5].

The corresponding natural frequencies are , and , respectively.
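The relation between the eigenvalues of an undamped system and its natural frequencies can be checked with a few lines. The sketch assumes the usual convention that the eigen-parameter equals the squared frequency, so that the frequencies are the square roots of the eigenvalues of the stiffness-mass pencil; the matrices are hypothetical 3-degree data, not those of example 5, and `natural_frequencies` is an illustrative name.

```python
import numpy as np

def natural_frequencies(M, K):
    """Natural frequencies of M u'' + K u = 0, assuming K phi = omega^2 M phi.
    M and K are taken to be symmetric positive definite."""
    lam = np.linalg.eigvals(np.linalg.solve(M, K))
    lam = np.sort(lam.real)  # eigenvalues are real and positive for such M, K
    return np.sqrt(lam)

# Hypothetical 3-degree chain of masses and springs.
M = np.diag([2.0, 1.0, 1.0])
K = np.array([[3.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]])

omegas = natural_frequencies(M, K)
# Each frequency should make the characteristic determinant vanish.
char_errors = [abs(np.linalg.det(K - w**2 * M)) for w in omegas]
```

The characteristic determinant evaluated at each computed frequency is zero up to round-off, which is the same kind of check the text applies to the eigenvalues obtained by the IDM.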

Starting from and under a convergence criterion , with four iterations is obtained, where is solved from Equations (32)–(35) with . Starting from and with five iterations, we can obtain . Starting from and with four iterations, we can obtain .

The corresponding eigen-modes are given as follows:

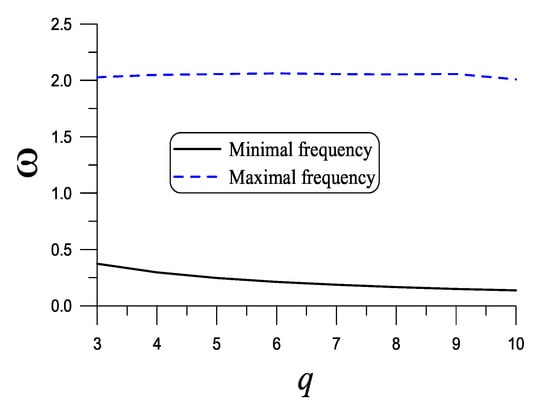

We extend the results to a ten-degree system with , , and . The response curve of vs. is plotted in Figure 9, where we can observe ten peaks. The minimal frequency is and the maximal frequency is .

Figure 9.

For example 5 with ten degrees, showing ten peaks of in the response curve in the interval [0,5].

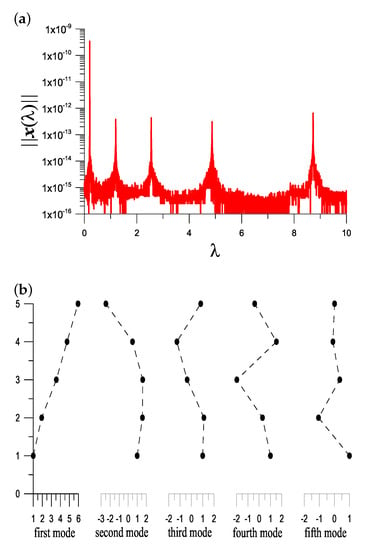

As a practical application, we consider a five-story shear building with [32]

We take and plot vs. in Figure 10a, where we can observe five peaks. Starting from and under a convergence criterion , with six iterations is obtained and is obtained, where is solved from Equations (32)–(35) with , with the first mode being shown in Figure 10b in the first column.

Figure 10.

For a five-degree MK system, (a) showing five peaks of in the response curve in the interval [0,10], and (b) displaying the five vibration modes.

Starting from and with seven iterations is obtained and is obtained, with the second mode being shown in Figure 10b at the second column.

Starting from and with six iterations is obtained and is obtained, with the third mode being shown in Figure 10b at the third column.

Starting from and with six iterations is obtained and is obtained, with the fourth mode being shown in Figure 10b at the fourth column.

Starting from and under a convergence criterion , with six iterations is obtained, and is obtained, where is solved from Equations (32)–(35) with , and the fifth mode is shown in Figure 10b at the fifth column. We have compared to the exact solution solved from the characteristic equation Det with an error , which is given by

The result presented in [32] has an error 0.1492, which is much larger than . The advantages of the presented method are fast convergence and high accuracy. The errors of the characteristic equation from the first to fifth eigenvalues are, respectively, Det, , , and .

The fundamental modes and frequencies obtained here converge faster and are more accurate than those of the Stodola iteration method described in [32]. We have checked the accuracy in satisfying the characteristic equation Det, which is of the order to for the presented method. With the Stodola method, however, the accuracy is poor, with a very large error of the order for the fifth eigenvalue in satisfying the characteristic equation. One reason for the large error is that even a small error of obtained by the Stodola method is amplified by the product of large coefficients in and with the amount , which is estimated by the product of the diagonal elements of .

7.2. Direct Detection Method

Example 6.

Let , Equation (3) can be written as

By letting

we come to a nonhomogeneous linear system:

where is a nonzero constant exciting vector.

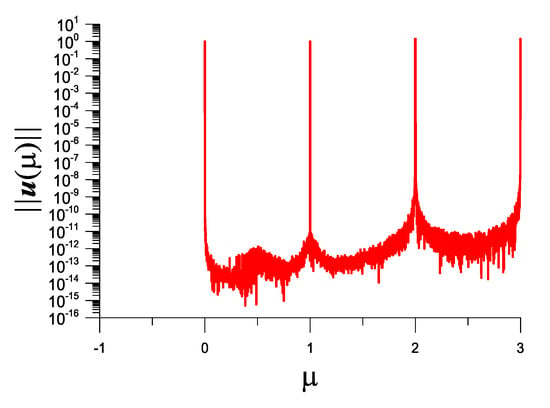

We apply the IDM in Section 4 to directly detect the real eigenvalues of example 4, and plot vs. in Figure 11, where four peaks occur at , by which we can recover by using .

Figure 11.

For example 4 solved as a quadratic eigenvalue problem, showing four peaks of in the response curve in the interval [0,3].

Starting from and under a convergence criterion , with six iterations is obtained. Starting from and with six iterations, we can obtain and . Starting from and after one iteration, we can obtain and . Starting from and after two iterations, we can obtain and .

The eigen-modes corresponding to are given as follows:

Example 7.

We consider [12,33]:

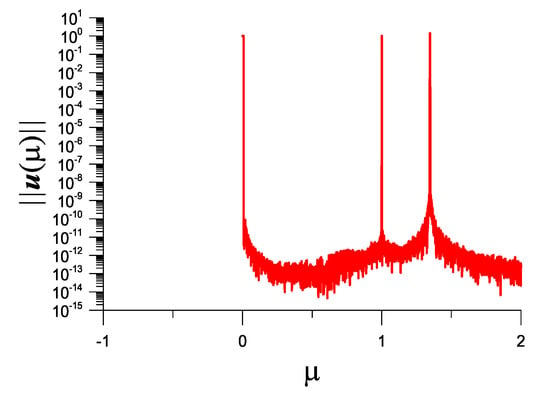

There exist three pairs of complex eigenvalues.

Beginning with , , and , respectively, and under a convergence criterion , the IDM with 11 iterations converges to the following eigenvalues:

whose corresponding complex eigen-modes are with the errors , and , respectively. The accuracy of the eigenvalues and eigen-modes is in the order of or .

Example 8.

We extend example 5 to a q-degree MCK system with , , , , and and . With , we employ the IDM in Section 6 to locate the complex eigenvalue, and over the plane there exists one peak in Figure 12. We find that with the initial guess and , we can find and with six iterations. The corresponding complex eigen-mode is given by

whose error is obtained.

Figure 12.

For example 7, showing a peak of in the response surface for complex eigenvalues.

For , we find that with the initial guess and , we can find and with seven iterations, and the error is obtained.

In Figure 13, with we plot the maximal and minimal frequencies with respect to q; it can be seen that the maximal frequencies are insensitive to the dimension q of the MCK system.

Figure 13.

For example 7 with different dimension, showing maximal and minimal frequencies.

Remark 1.

It is interesting that the original real eigenvalues are changed to three as shown in Figure 14, where we can observe three peaks. Starting from and with six iterations, we can obtain and . Starting from and with six iterations, we can obtain and .

Figure 14.

For a nonlinear perturbation of example 4, showing three peaks of in the response curve in the interval [0,2].

8. Conclusions

This paper was concerned with the fast iterative solutions of generalized and quadratic eigenvalue problems. Since the vector eigen-equation is a homogeneous linear system, the eigenvector is either a zero vector when the eigen-parameter is not an eigenvalue, or an unknown vector when the eigen-parameter is an eigenvalue. Based on the original eigen-equation, we cannot construct the response curve, which is the magnitude of the eigenvector with respect to the eigen-parameter. We transformed the eigenvector to a new vector including a nonzero exciting vector, which by inserting into the original eigen-equation yields a nonhomogeneous linear system for the new vector. By varying the eigen-parameter in a desired interval, we can construct the response curve. This is a new idea of the so-called excitation method (EM). Then, by maximizing the magnitude of the eigenvector solved from the nonhomogeneous linear system, we can quickly detect the location of the eigenvalue, which is a peak in the response curve. To precisely obtain the eigenvalue, we developed an iterative detection method (IDM) by sequentially reducing the size of the searching interval. For reducing the computational cost of the generalized eigenvalue problem, we derived the nonhomogeneous linear system in a lower m-dimensional affine Krylov subspace. If m is large enough and , the presented method can find all eigenvalues very effectively. Then, we reduced the eigen-equation with one dimension less to a nonhomogeneous linear system to determine the eigenvector with high accuracy.

In summary, the key outcomes are pointed out here.

- We transformed the eigenvector to a new vector including a nonzero exciting vector, which by inserting into the original eigen-equation yields a nonhomogeneous linear system for the new vector.

- By varying the eigen-parameter in a desired interval, we can construct the response curve.

- By maximizing the magnitude of the eigenvector solved from the nonhomogeneous linear system, we can quickly detect the location of the eigenvalue, which is a peak in the response curve.

- To obtain the eigenvalue precisely, we developed an iterative detection method (IDM) that zooms in sequentially on the search interval.

- From the peaks in the response curve, one can roughly locate the eigenvalues and then determine their precise values by using the IDM.

- By using the EM and IDM, we solved the quadratic eigenvalue problem arising in the free vibrations of multi-degree mechanical systems.

- For complex eigenvalues, we developed the IDM in the eigen-parameter plane spanned by the real and imaginary parts of the eigenvalue. Very accurate eigenvalues and eigen-modes can be obtained in only a few iterations.
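The detection in the eigen-parameter plane can be sketched in the same spirit for the quadratic problem (λ²M + λC + K)u = 0. This is a minimal illustration under stated assumptions, not the implementation used in this paper: the exciting vector b0 is taken directly as the right-hand side, the full system is solved at every grid point, and the function names, the grid size `n_grid`, the iteration count `n_iter` and the test matrices are all hypothetical.

```python
import numpy as np

def response(M, C, K, b0, lam):
    """Magnitude of x solving (lam^2 M + lam C + K) x = b0 for complex lam."""
    Q = lam**2 * M + lam * C + K
    try:
        return np.linalg.norm(np.linalg.solve(Q, b0))
    except np.linalg.LinAlgError:
        # lam coincides with an eigenvalue to machine precision
        return np.inf

def detect_complex_eigenvalue(M, C, K, b0, re_rng, im_rng, n_grid=41, n_iter=7):
    """Zoom in on a peak of the response surface over the (Re, Im) plane."""
    (a_lo, a_hi), (b_lo, b_hi) = re_rng, im_rng
    for _ in range(n_iter):
        a = np.linspace(a_lo, a_hi, n_grid)   # candidate real parts
        b = np.linspace(b_lo, b_hi, n_grid)   # candidate imaginary parts
        mags = np.array([[response(M, C, K, b0, complex(ai, bj)) for bj in b]
                         for ai in a])
        i, j = np.unravel_index(np.argmax(mags), mags.shape)
        ha, hb = a[1] - a[0], b[1] - b[0]
        # new search box: one grid step around the current maximum
        a_lo, a_hi = a[i] - ha, a[i] + ha
        b_lo, b_hi = b[j] - hb, b[j] + hb
    return complex(a[i], b[j])

# Illustrative 2-degree system (not from the paper) with proportional damping,
# chosen so that the eigenvalues are known: -0.1 +/- i*sqrt(k - 0.01), k = 1, 3.
M = np.eye(2)
C = 0.2 * np.eye(2)
K = np.array([[2.0, -1.0], [-1.0, 2.0]])
b0 = np.array([1.0, 2.0])
lam = detect_complex_eigenvalue(M, C, K, b0, (-0.5, 0.3), (0.5, 1.3))
print(lam)   # approximates -0.1 + i*sqrt(0.99)
```

The cost per iteration grows with the square of the grid size, which is why a coarse grid combined with repeated zoom-in is preferable to a single fine grid.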

Author Contributions

Conceptualization, C.-S.L.; Data curation, C.-S.L.; Formal analysis, C.-S.L.; Funding acquisition, C.-W.C.; Investigation, C.-W.C.; Methodology, C.-S.L.; Project administration, C.-W.C.; Resources, C.-S.L.; Software, C.-W.C.; Supervision, C.-S.L. and C.-W.C.; Validation, C.-W.C.; Visualization, C.-W.C. and C.-L.K.; Writing—original draft, C.-S.L.; Writing—review & editing, C.-W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Meirovitch, L. Elements of Vibration Analysis, 2nd ed.; McGraw-Hill: New York, NY, USA, 1986.

- Smith, H.A.; Singh, R.K.; Sorensen, D.C. Formulation and solution of the non-linear, damped eigenvalue problem for skeletal systems. Int. J. Numer. Meth. Engng. 1995, 38, 3071–3085.

- Osinski, Z. (Ed.) Damping of Vibrations; A.A. Balkema: Rotterdam, The Netherlands, 1998.

- Tisseur, F.; Meerbergen, K. The quadratic eigenvalue problem. SIAM Rev. 2001, 43, 235–286.

- Cakar, O. Mass and stiffness modifications without changing any specified natural frequency of a structure. J. Vib. Contr. 2011, 17, 769–776.

- Gao, W. Natural frequency and mode shape analysis of structures with uncertainty. Mech. Sys. Sig. Process 2007, 21, 24–39.

- Gantmacher, F.R. The Theory of Matrices 1; Chelsea: New York, NY, USA, 1959.

- Kublanovskaya, V.N. On an approach to the solution of the generalized latent value problem for λ-matrices. SIAM J. Numer. Anal. 1970, 7, 532–537.

- Ruhe, A. Algorithms for the nonlinear eigenvalue problem. SIAM J. Numer. Anal. 1973, 10, 674–689.

- Dennis, J.E., Jr.; Traub, J.F.; Weber, R.P. The algebraic theory of matrix polynomials. SIAM J. Numer. Anal. 1976, 13, 831–845.

- Davis, G.J. Numerical solution of a quadratic matrix equation. SIAM J. Sci. Stat. Comput. 1981, 2, 164–175.

- Higham, N.J.; Kim, H. Solving a quadratic matrix equation by Newton’s method with exact line searches. SIAM J. Matrix Anal. Appl. 2001, 23, 303–316.

- Higham, N.J.; Kim, H. Numerical analysis of a quadratic matrix equation. IMA J. Numer. Anal. 2000, 20, 499–519.

- Bai, Z.; Su, Y. SOAR: A second-order Arnoldi method for the solution of the quadratic eigenvalue problem. SIAM J. Matrix Anal. Appl. 2005, 26, 640–659.

- Qian, J.; Lin, W.-W. A numerical method for quadratic eigenvalue problems of gyroscopic systems. J. Sound Vib. 2007, 306, 284–296.

- Meerbergen, K. The quadratic Arnoldi method for the solution of the quadratic eigenvalue problem. SIAM J. Matrix Anal. Appl. 2008, 30, 1463–1482.

- Hammarling, S.; Munro, C.J.; Tisseur, F. An algorithm for the complete solution of quadratic eigenvalue problems. ACM Trans. Math. Softw. 2013, 39, 18.

- Guo, C.-H. Numerical solution of a quadratic eigenvalue problem. Linear Algebra Appl. 2004, 385, 391–406.

- Chen, C.; Ma, C. An accelerated cyclic-reduction-based solvent method for solving quadratic eigenvalue problem of gyroscopic systems. Comput. Math. Appl. 2019, 77, 2585–2595.

- Golub, G.H.; van Loan, C.F. Matrix Computations; The Johns Hopkins University Press: Baltimore, MD, USA, 2012.

- Sakurai, T.; Sugiura, H. A projection method for generalized eigenvalue problems using numerical integration. J. Comput. Appl. Math. 2003, 159, 119–128.

- Saad, Y. Variations on Arnoldi’s method for computing eigenelements of large unsymmetric matrices. Linear Algebra Its Appl. 1980, 34, 269–295.

- Saad, Y. Chebyshev acceleration techniques for solving nonsymmetric eigenvalue problems. Math. Comput. 1984, 42, 567–588.

- Arnoldi, W.E. The principle of minimized iterations in the solution of the matrix eigenvalue problem. Quart. Appl. Math. 1951, 9, 17–29.

- Saad, Y. Numerical solution of large nonsymmetric eigenvalue problems. Comput. Phys. Commun. 1989, 53, 71–90.

- Morgan, R.B. On restarting the Arnoldi method for large nonsymmetric eigenvalue problems. Math. Comput. 1996, 65, 1213–1230.

- Parlett, B.N.; Taylor, D.R.; Liu, Z.A. A look-ahead Lanczos algorithm for unsymmetric matrices. Math. Comput. 1985, 44, 105–124.

- Nour-Omid, B.; Parlett, B.N.; Taylor, R.L. Lanczos versus subspace iteration for solution of eigenvalue problems. Int. J. Num. Meth. Eng. 1983, 19, 859–871.

- Liu, C.-S. A doubly optimized solution of linear equations system expressed in an affine Krylov subspace. J. Comput. Appl. Math. 2014, 260, 375–394.

- Saad, Y. Iterative Methods for Sparse Linear Systems, 2nd ed.; SIAM: Philadelphia, PA, USA, 2003.

- Fettis, H.E.; Caslin, J.C. Eigenvalues and eigenvectors of Hilbert matrices of order 3 through 10. Math. Comput. 1967, 21, 431–441.

- Berg, G.V. Elements of Structural Dynamics; Prentice-Hall: Hoboken, NJ, USA, 1988.

- Lancaster, P. Lambda-Matrices and Vibrating Systems; Pergamon Press: Oxford, UK, 1966.

- Chiappinelli, R. What do you mean by “nonlinear eigenvalue problems”? Axioms 2018, 7, 39.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).