An Improved Lithium-Ion Battery Fire and Smoke Detection Method Based on the YOLOv8 Algorithm

Abstract

1. Research Background

2. Literature Review

2.1. Research Progress

2.2. Issues and Research Motivation

1. High false alarm and miss rates.

2. Insufficient real-time performance.

3. Inadequate feature extraction capabilities.

1. Feature Extraction Optimization

2. Specialized Dataset Design

3. Loss Function and Anchor Box Adjustment

4. Enhancement of Real-Time Performance

3. Algorithm Research

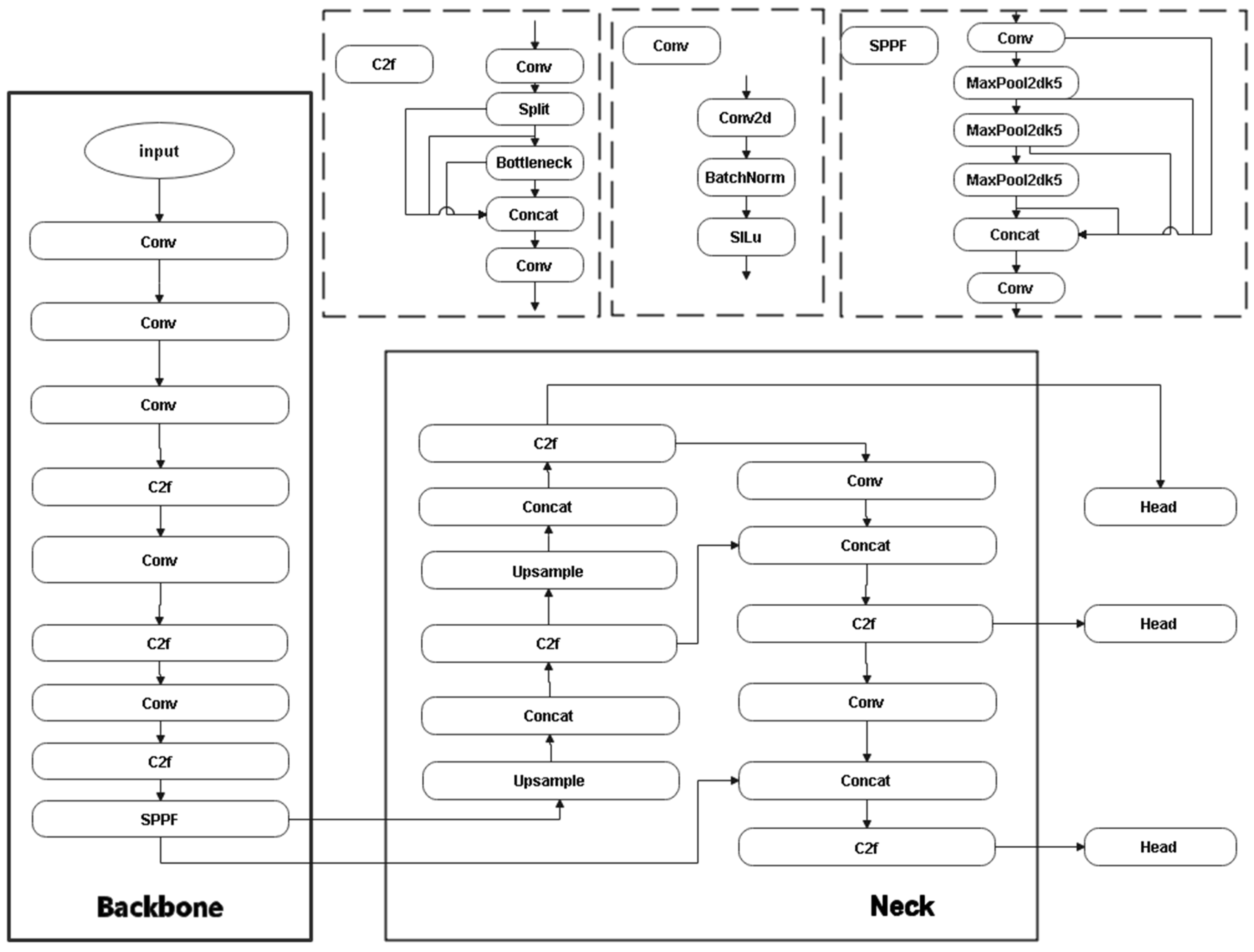

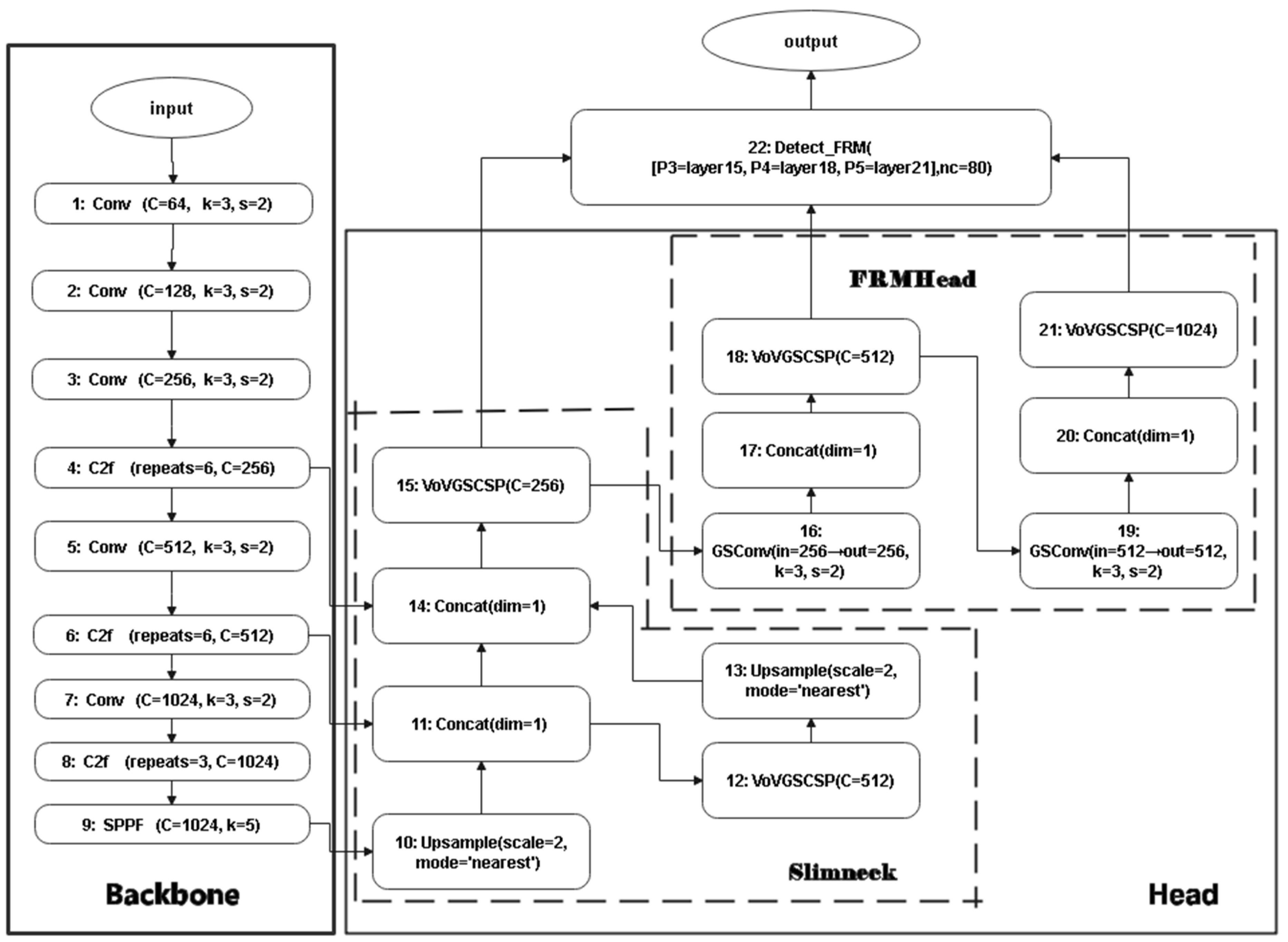

3.1. Single-Model Approach

3.2. Model Fusion

4. Experimental Preparation

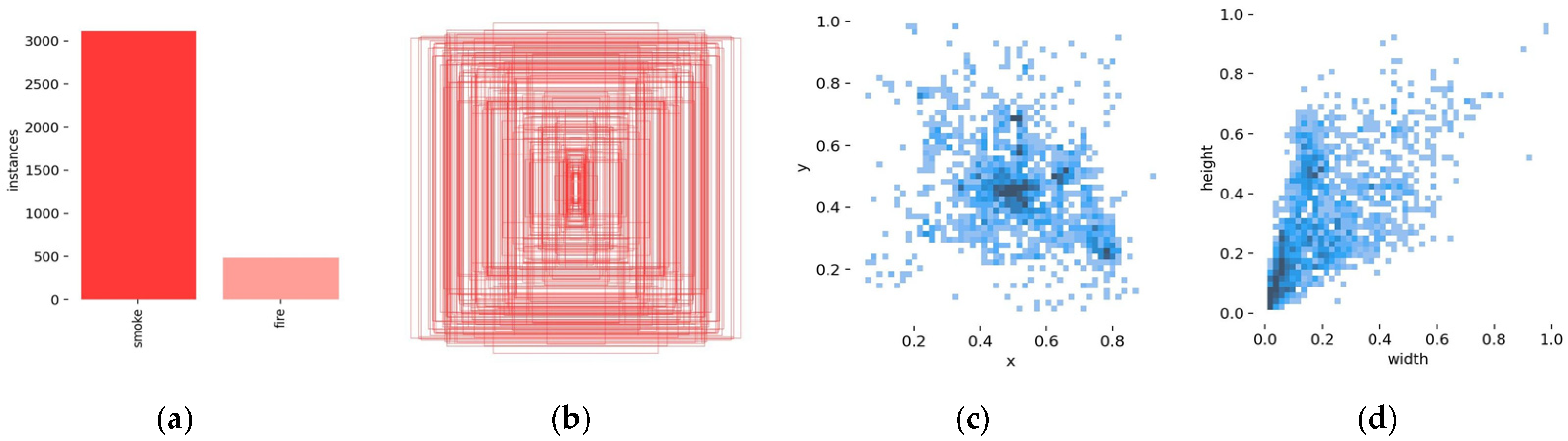

4.1. Data Collection

(1) Comprehensiveness: The dataset covers all key stages of the lithium-ion battery thermal runaway process and records its complete evolution, from the initial smoke emission to the end of the post-burning phase. This provides sufficient data to distinguish the subtle differences between the initial smoke, the subsequent fire stages, and the later combustion phases.

(2) Multi-scene: The dataset draws not only on controlled laboratory experiments but also on real-world scenarios, which improves the model’s generalization ability and robustness during testing.

(3) Uniform temporal sampling: Extracting one frame every 3 s avoids redundancy among consecutive frames while sampling state changes evenly over time, which prevents the model from becoming overly dependent on features from any specific moment during training.

(4) Strict screening: After a large number of images were initially extracted, redundant, ghosted, and low-quality images were removed, leaving 2300 high-quality images. These images provide clean and effective training samples, and their rich content and well-balanced feature distribution form a solid data foundation for the subsequent experiments.
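The uniform temporal sampling and redundancy screening steps above can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline: it assumes frames are already decoded into flat sequences of grayscale pixel values, and the function names and the redundancy threshold `min_diff` are hypothetical. It only covers the removal of near-duplicate frames; the criteria the study used for ghosting and low image quality are not specified here.

```python
from typing import Sequence


def sample_frame_indices(n_frames: int, fps: float, interval_s: float = 3.0) -> list[int]:
    """Indices of the frames kept when sampling one frame every `interval_s` seconds."""
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    step = max(1, round(fps * interval_s))  # number of frames between two samples
    return list(range(0, n_frames, step))


def mean_abs_diff(a: Sequence[int], b: Sequence[int]) -> float:
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    if not a:
        return 0.0
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def drop_near_duplicates(frames: list[Sequence[int]], min_diff: float = 2.0) -> list[int]:
    """Keep a frame only if it differs enough from the last frame that was kept.

    `min_diff` is a hypothetical threshold, not a value reported in the study.
    """
    kept: list[int] = []
    for i, frame in enumerate(frames):
        if not kept or mean_abs_diff(frame, frames[kept[-1]]) >= min_diff:
            kept.append(i)
    return kept


# A 100-second clip at 30 fps: one frame is sampled every 90 frames.
print(sample_frame_indices(n_frames=3000, fps=30)[:4])  # [0, 90, 180, 270]

# Two identical dark frames followed by a brighter one: the duplicate is dropped.
frames = [[0] * 16, [0] * 16, [50] * 16]
print(drop_near_duplicates(frames))  # [0, 2]
```

Comparing each frame with the last *kept* frame, rather than with its immediate predecessor, prevents a slow drift of nearly identical frames from all being retained.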

4.2. Experimental Setup and Configuration

4.3. Model Performance Evaluation Metrics
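The mAP50 and mAP50-95 metrics used in the experiments rely on IoU-based matching of predicted and ground-truth boxes, followed by average precision (AP). The sketch below shows one common way to compute them for a single class. It is not the evaluation code used in the study, and it assumes boxes in (x1, y1, x2, y2) format, greedy score-ordered matching, and all-point interpolated AP. Toolkits such as the COCO evaluator use 101-point interpolation, so their values can differ slightly. mAP is then the mean of these per-class AP values over the object classes.

```python
from typing import Sequence

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners
Detection = tuple[Box, float]  # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(dets: Sequence[Detection], gts: Sequence[Box], thr: float) -> list[bool]:
    """Greedy matching: higher-scoring detections claim unmatched ground truths first."""
    order = sorted(range(len(dets)), key=lambda i: -dets[i][1])
    used: set[int] = set()
    is_tp = [False] * len(dets)
    for i in order:
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(dets[i][0], gt)
            if j not in used and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used.add(best_j)
            is_tp[i] = True
    return is_tp


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], n_gt: int) -> float:
    """All-point interpolated AP: area under the precision envelope of the PR curve."""
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    precision: list[float] = []
    recall: list[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))
        recall.append(tp / n_gt)
    # Envelope: make precision non-increasing as recall grows.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def ap_at(dets: Sequence[Detection], gts: Sequence[Box], thr: float) -> float:
    """AP for one class at a single IoU threshold (thr=0.5 gives AP50)."""
    return average_precision([s for _, s in dets], match(dets, gts, thr), len(gts))


def ap50_95(dets: Sequence[Detection], gts: Sequence[Box]) -> float:
    """AP averaged over the IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * k, 2) for k in range(10)]
    return sum(ap_at(dets, gts, t) for t in thresholds) / len(thresholds)


gts = [(0, 0, 25, 20)]
dets = [((0, 0, 22, 20), 0.9)]  # IoU with the ground truth = 440 / 500 = 0.88
print(ap_at(dets, gts, 0.5))  # 1.0
print(ap50_95(dets, gts))     # 0.8 (matched at 8 of the 10 thresholds)
```

The example shows why mAP50-95 is always the stricter number: a box that is good enough at IoU 0.5 can still fail the tighter thresholds.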

5. Experimental Verification

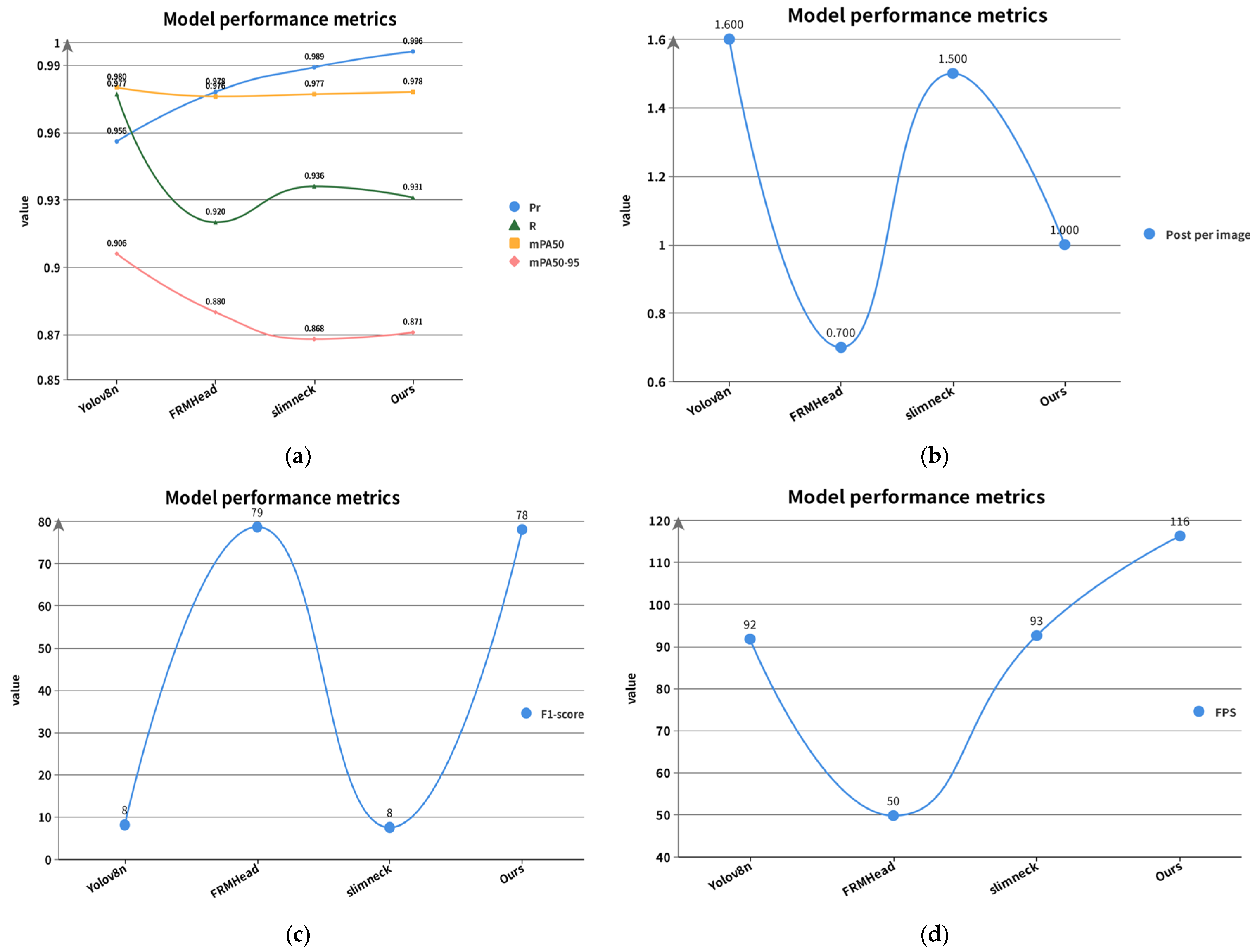

5.1. Ablation Experiment

5.1.1. YOLOv8 + FRMHead Model vs. YOLOv8 + Slimneck Model

5.1.2. YFSNet Model

1. Precision and Detail Capture

2. Recall and Information Balance

3. Compute Complexity vs. Real-Time Speed

4. Overall Consistency (F1-Score)
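The ablation analysis weighs precision against recall and summarizes the balance between them with the F1-score. Under the conventional definition, F1 is the harmonic mean of precision and recall, as in the minimal sketch below. The helper names are illustrative, and the detection counts in the first example are hypothetical, not results from the study.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 90 correct detections, 5 false alarms, 10 missed targets.
p, r = precision_recall(tp=90, fp=5, fn=10)
print(round(p, 3), round(r, 3), round(f1_score(p, r), 3))  # 0.947 0.9 0.923

# Precision and recall listed for YFSNet in the comparison table.
print(round(f1_score(0.996, 0.931), 3))  # 0.962
```

Because the harmonic mean is pulled toward the smaller value, a model cannot raise F1 much by pushing precision close to 1 while recall stays lower.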

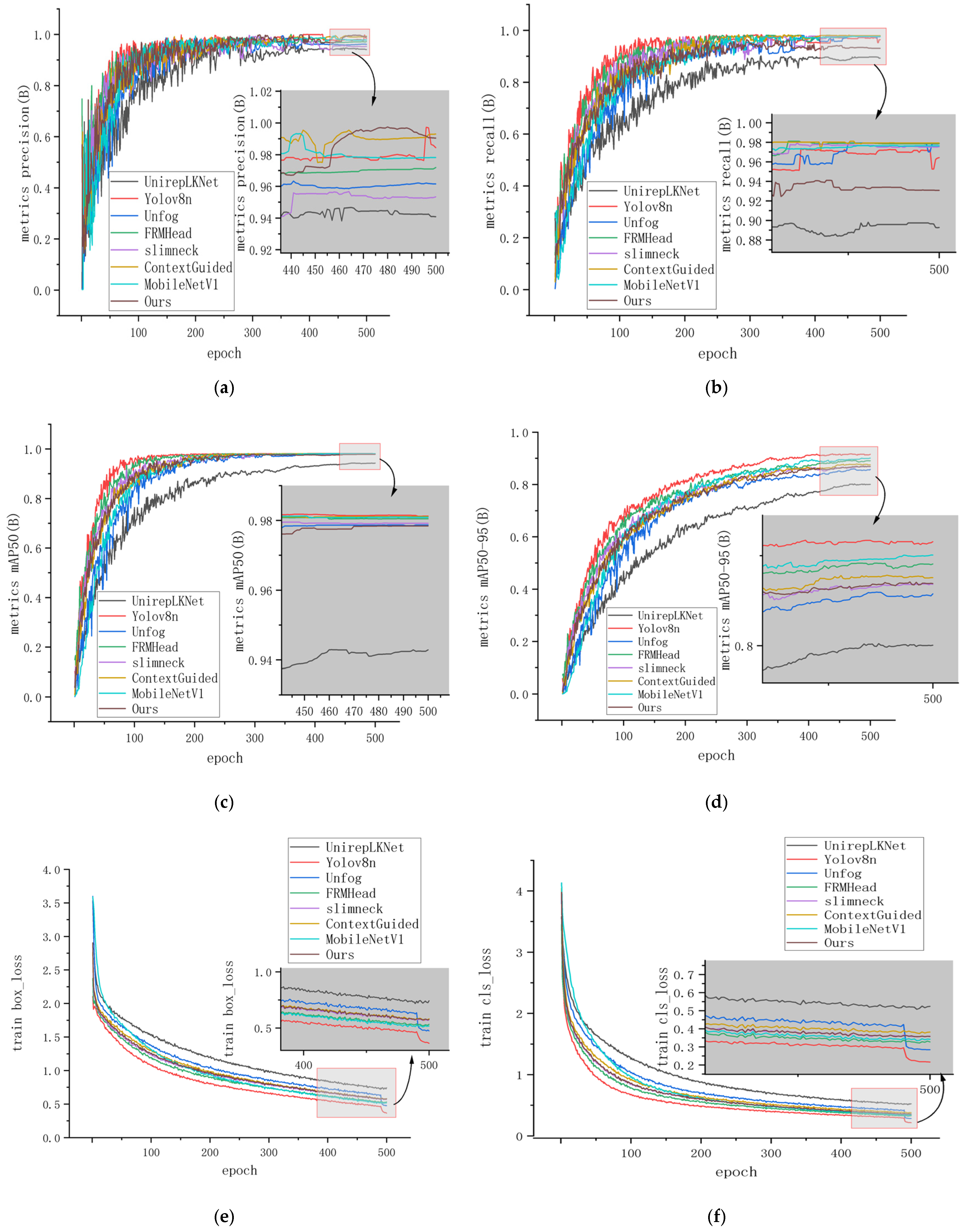

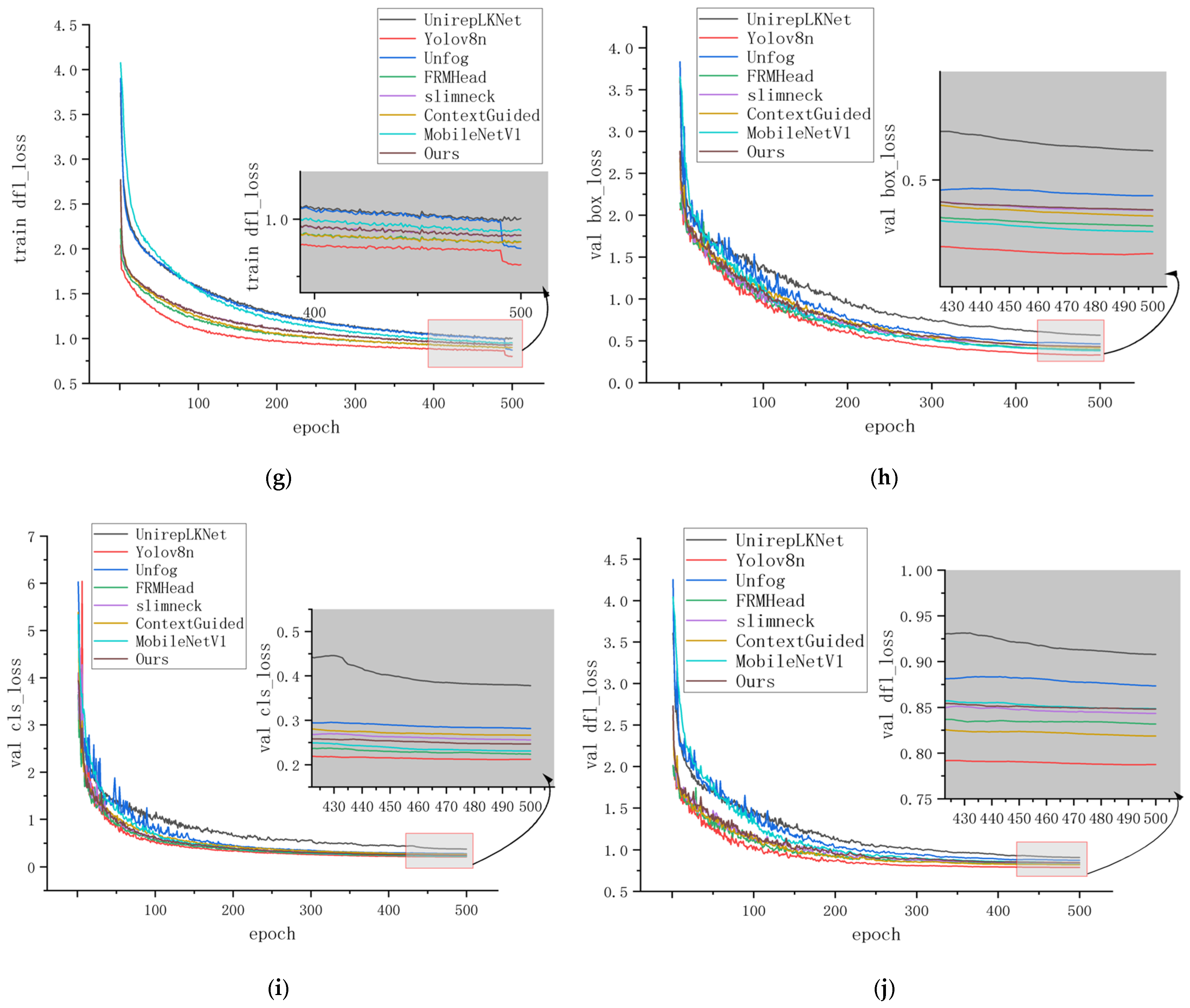

5.2. Comparison Experiment

1. Precision (Pr):

2. Recall (R):

3. mAP50 and mAP50-95:

4. Computation Load (GFLOPs) and Post-processing Time:

5. F1-score:

6. Inference Speed (FPS):

7. Comprehensive Analysis:

   - The improved model (YFSNet) reaches a precision of 0.996, about 4–5 percentage points higher than YOLOv8n (0.956) and UnirepLKNet (0.945), which indicates markedly fewer false detections.

   - Its F1-score is similar to that of high-performing models such as FRMHead, while its inference speed is about 133% higher (116.3 vs. 49.8 FPS), giving it a strong combination of accuracy and efficiency.

   - A stable mAP50 (0.978) and a low post-processing time (1.0, vs. 1.6 for YOLOv8n), at a computational load comparable to FRMHead (75.5 vs. 76.3 GFLOPs), make it well suited to industrial applications that require both high detection accuracy and operational efficiency.
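The percentages quoted above follow from simple arithmetic on the comparison-table values, which the short sketch below reproduces. It only restates reported numbers. It assumes that FPS counts frames processed per second, so that 1000/FPS gives the per-frame time in milliseconds; the helper names are illustrative.

```python
# FPS and precision values taken from the comparison table.
fps = {"FRMHead": 49.7512, "YOLOv8n": 91.7431, "YFSNet": 116.2791}
precision = {"UnirepLKNet": 0.945, "YOLOv8n": 0.956, "YFSNet": 0.996}


def relative_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


def latency_ms(frames_per_second: float) -> float:
    """Per-frame processing time in milliseconds implied by a frame rate."""
    return 1000.0 / frames_per_second


print(f"YFSNet vs FRMHead: +{relative_increase(fps['YFSNet'], fps['FRMHead']):.1f}% FPS")  # +133.7% FPS
print(f"YFSNet: {latency_ms(fps['YFSNet']):.1f} ms per frame")  # 8.6 ms per frame

# Precision gains in percentage points over the two baselines.
for name in ("YOLOv8n", "UnirepLKNet"):
    gain = (precision["YFSNet"] - precision[name]) * 100
    print(f"vs {name}: +{gain:.1f} points")  # +4.0, then +5.1
```

Expressing frame rate as per-frame latency makes the real-time budget explicit: at roughly 8.6 ms per frame, YFSNet leaves ample headroom for a typical 25–30 fps camera stream.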

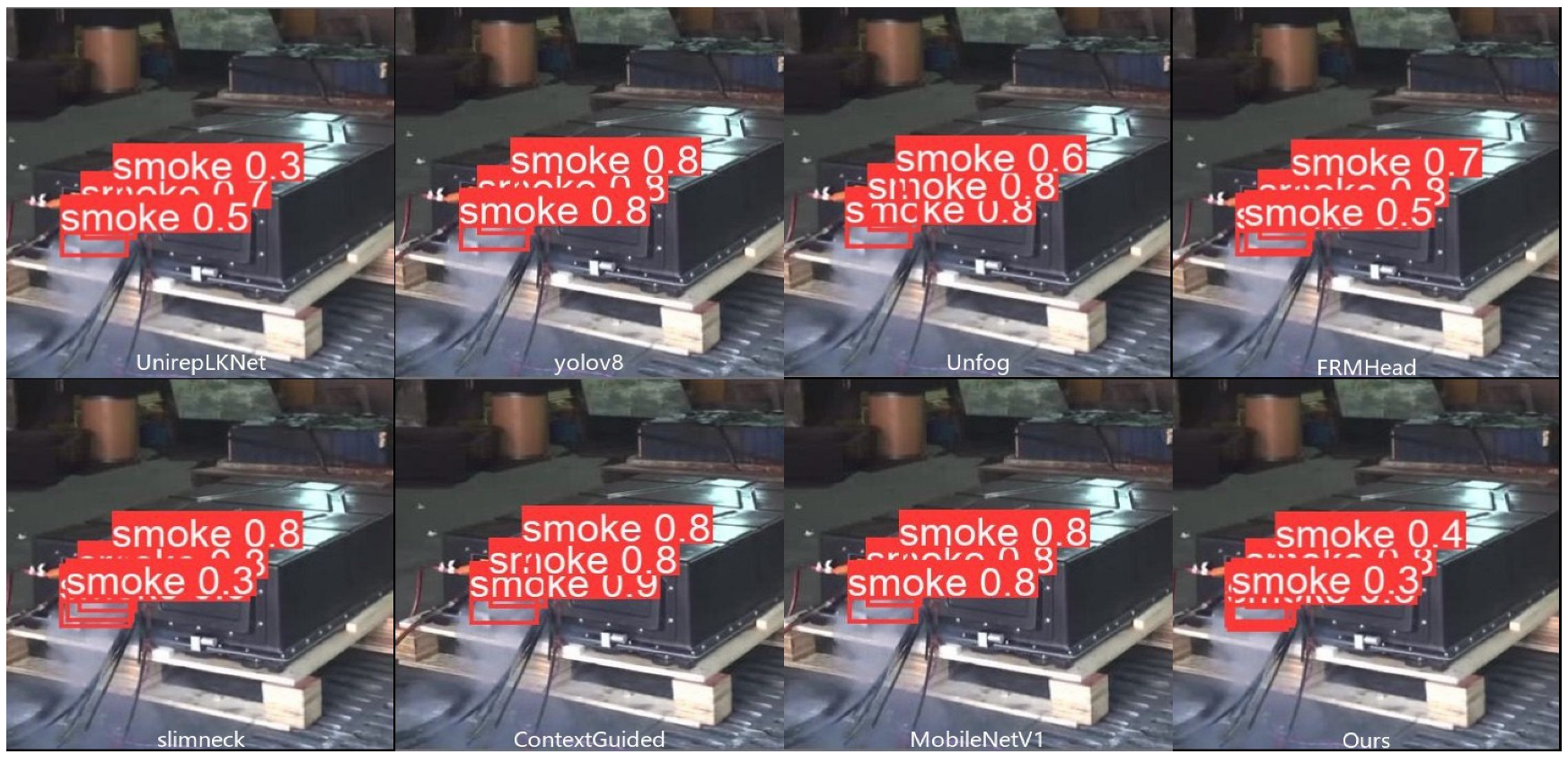

5.3. Model Performance Comparison

6. Conclusions

6.1. Summary

6.2. Improvement Directions

1. Extreme Conditions:

2. Data Diversity:

3. Scalability and Deployment:

4. Broader Applications:

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bajolle, H.; Lagadic, M.; Louvet, N. The future of lithium-ion batteries: Exploring expert conceptions, market trends, and price scenarios. Energy Res. Soc. Sci. 2022, 93, 102850.

- Tarascon, J.M.; Armand, M. Issues and challenges facing rechargeable lithium batteries. Nature 2001, 414, 359–367.

- Dunn, B.; Kamath, H.; Tarascon, J.M. Electrical energy storage for the grid: A battery of choices. Science 2011, 334, 928–935.

- Zalosh, R.; Gandhi, P.; Barowy, A. Lithium-ion energy storage battery explosion incidents. J. Loss Prev. Process Ind. 2021, 72, 104560.

- Wang, Z.; Huang, G.; Chen, Z.; An, C. Accidents involving lithium-ion batteries in non-application stages: Incident characteristics, environmental impacts, and response strategies. BMC Chem. 2025, 19, 94.

- Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Bu, F.; Gharajeh, M.S. Intelligent and vision-based fire detection systems: A survey. Image Vis. Comput. 2019, 91, 103803.

- Mozaffari, M.H.; Li, Y.; Ko, Y. Real-time detection and forecast of flashovers by the visual room fire features using deep convolutional neural networks. J. Build. Eng. 2023, 64, 105674.

- Feng, J.; Sun, Y. Multiscale network based on feature fusion for fire disaster detection in complex scenes. Expert Syst. Appl. 2024, 240, 122494.

- Jeon, M.; Choi, H.S.; Lee, J.; Kang, M. Multi-scale prediction for fire detection using convolutional neural network. Fire Technol. 2021, 57, 2533–2551.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Real-time video fire/smoke detection based on CNN in antifire surveillance systems. J. Real-Time Image Process. 2021, 18, 889–900.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Thermal infrared sensing for near real-time data-driven fire detection and monitoring systems. Sensors 2020, 20, 6803.

- Li, L.; Ye, J.; Wang, C.; Ge, C.; Yu, Y.; Zhang, Q. A fire source localization algorithm based on temperature and smoke sensor data fusion. Fire Technol. 2023, 59, 663–690.

- Nguyen, V.T.; Quach, C.H.; Pham, M.T. Video smoke detection for surveillance cameras based on deep learning in indoor environment. In Proceedings of the 2020 4th International Conference on Recent Advances in Signal Processing, Telecommunications & Computing (SigTelCom), Hanoi, Vietnam, 28–29 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 82–86.

- Jana, S.; Shome, S.K. Hybrid ensemble based machine learning for smart building fire detection using multi modal sensor data. Fire Technol. 2023, 59, 473–496.

- Han, X.; Chang, J.; Wang, K. You only look once: Unified, real-time object detection. Procedia Comput. Sci. 2021, 183, 61–72.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Amin, A.; Mumtaz, R.; Bashir, M.J.; Zaidi, S.M.H. Next-generation license plate detection and recognition system using YOLOv8. In Proceedings of the 2023 IEEE 20th International Conference on Smart Communities: Improving Quality of Life using AI, Robotics and IoT (HONET), Boca Raton, FL, USA, 4–6 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 179–184.

- Guo, T.; Xu, X. Salient object detection from low contrast images based on local contrast enhancing and non-local feature learning. Vis. Comput. 2021, 37, 2069–2081.

- Zhang, S.; Sun, Y.; Su, J.; Gan, G.; Wen, Z. Adaptive Training Strategies for Small Object Detection Using Anchor-Based Detectors. In International Conference on Artificial Neural Networks; Springer Nature: Cham, Switzerland, 2023; pp. 28–39.

- Sahoo, S.; Nanda, P.K. Adaptive feature fusion and spatio-temporal background modeling in KDE framework for object detection and shadow removal. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1103–1118.

- Tao, H.; Zheng, Y.; Wang, Y.; Qiu, J.; Stojanovic, V. Enhanced feature extraction YOLO industrial small object detection algorithm based on receptive-field attention and multi-scale features. Meas. Sci. Technol. 2024, 35, 105023.

- Cao, J.; Chen, Q.; Guo, J.; Shi, R. Attention-guided context feature pyramid network for object detection. arXiv 2020, arXiv:2005.11475.

- Huang, Y.; Li, J. Key Challenges for grid-scale lithium-ion battery energy storage. Adv. Energy Mater. 2022, 12, 2202197.

- Pu, Z.; Yang, M.; Jiao, M.; Zhao, D.; Huo, Y.; Wang, Z. Thermal Runaway Warning of Lithium Battery Based on Electronic Nose and Machine Learning Algorithms. Batteries 2024, 10, 390.

- Azzabi, T.; Jeridi, M.H.; Mejri, I.; Ezzedine, T. Multi-Modal AI for Enhanced Forest Fire Early Detection: Scalar and Image Fusion. In Proceedings of the 2024 IEEE International Conference on Advanced Systems and Emergent Technologies (IC_ASET), Hammamet, Tunisia, 27–29 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Hu, D.; Huang, S.; Wen, Z.; Gu, X.; Lu, J. A review on thermal runaway warning technology for lithium-ion batteries. Renew. Sustain. Energy Rev. 2024, 206, 114882.

- Su, L.; Lee, Y.H.; Chen, Y.L.; Tseng, H.W.; Yang, C.F. Using Edge Computing Technology in Programmable Logic Controller to Realize the Intelligent System of Industrial Safety and Fire Protection. Sens. Mater. 2023, 35, 1731–1740.

- Titu, M.F.S.; Pavel, M.A.; Michael, G.K.O.; Babar, H.; Aman, U.; Khan, R. Real-Time Fire Detection: Integrating Lightweight Deep Learning Models on Drones with Edge Computing. Drones 2024, 8, 483.

- Murthy, C.B.; Hashmi, M.F.; Bokde, N.D.; Geem, Z.W. Investigations of object detection in images/videos using various deep learning techniques and embedded platforms—A comprehensive review. Appl. Sci. 2020, 10, 3280.

- Hu, Z.; Chen, W.; Wang, H.; Tian, P.; Shen, D. Integrated data-driven framework for anomaly detection and early warning in water distribution system. J. Clean. Prod. 2022, 373, 133977.

| Equipment | Parameters |
|---|---|
| Processor | 13th Gen Intel(R) Core(TM) i7-13620H 2.40 GHz |
| RAM | 16 GB |
| Operating system | Windows 11 |
| GPU | NVIDIA GeForce RTX 4060 |
| Programming tools | PyCharm |
| Programming language | Python |

| Model | Pr | R | mAP50 | mAP50-95 | GFLOPs | PPI | F1-Score | FPS |
|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 0.956 | 0.977 | 0.98 | 0.906 | 8.2 | 1.6 | 8.1109 | 91.7431 |
| V8 + FRMHead | 0.978 | 0.92 | 0.976 | 0.88 | 76.3 | 0.7 | 78.6316 | 49.7512 |
| V8 + Slimneck | 0.989 | 0.936 | 0.977 | 0.868 | 7.3 | 1.5 | 7.5009 | 92.5926 |

| Model | Pr | R | mAP50 | mAP50-95 | GFLOPs | PPI | F1-Score | FPS |
|---|---|---|---|---|---|---|---|---|
| UnirepLKNet | 0.945 | 0.896 | 0.942 | 0.799 | 16.4 | 1.9 | 16.8365 | 44.8430 |
| YOLOv8n | 0.956 | 0.977 | 0.98 | 0.906 | 8.2 | 1.6 | 8.1109 | 91.7431 |
| Unfog | 0.96 | 0.979 | 0.979 | 0.859 | 9.6 | 0.8 | 9.5059 | 123.4568 |
| FRMHead | 0.978 | 0.92 | 0.976 | 0.88 | 76.3 | 0.7 | 78.6316 | 49.7512 |
| Slimneck | 0.989 | 0.936 | 0.977 | 0.868 | 7.3 | 1.5 | 7.5009 | 92.5926 |
| ContextGuided | 0.99 | 0.979 | 0.981 | 0.877 | 7.7 | 2.4 | 7.7430 | 222.2222 |
| MobileNetV1 | 0.993 | 0.947 | 0.979 | 0.896 | 15.7 | 2.3 | 16.0723 | 34.1297 |
| YFSNet | 0.996 | 0.931 | 0.978 | 0.871 | 75.5 | 1.0 | 78.0467 | 116.2791 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Deng, L.; Kang, D.; Liu, Q. An Improved Lithium-Ion Battery Fire and Smoke Detection Method Based on the YOLOv8 Algorithm. Fire 2025, 8, 214. https://doi.org/10.3390/fire8060214