Abstract

The purpose of the present study is to improve the efficiency of phishing web resource detection through multimodal analysis and explainable artificial intelligence methods. We propose a late fusion architecture in which independent specialized models process four modalities and are combined using weighted voting. The first branch uses CatBoost for URL features and metadata; the second uses CNN1D for symbolic-level URL representation; the third uses a Transformer based on a pretrained CodeBERT for the homepage HTML code; and the fourth uses EfficientNet-B7 for page screenshot analysis. SHAP, Grad-CAM, and attention matrices are used to interpret decisions; a local LLM generates a consolidated textual explanation. A prototype system based on a microservice architecture, integrated with the SOC, has been developed. This integration enables streaming processing and reproducible validation. Computational experiments using our own updated dataset and the public MTLP dataset show high performance: F1-scores of up to 0.989 on our own dataset and 0.953 on MTLP; multimodal fusion consistently outperforms single-modal baseline models. The practical significance of this approach for zero-day detection and false positive reduction, through feature alignment across modalities and explainability, is demonstrated. The limitations and operational aspects (data drift, adversarial robustness, LLM latency) of the proposed prototype are discussed. We also outline areas for further research.

1. Introduction

In today’s digital world, attacks on information systems using social engineering are becoming increasingly common. These attacks aim to deceive users into revealing confidential information, which the attacker then uses for personal enrichment and to harm the owner of the information resource. According to the Russian company Positive Technologies [1], the number of computer attacks in Russia increased by 26% in 2024. A substantial proportion of computer attacks, affecting both organizations (50%) and individuals (88%), are attributed to social engineering. The most common social engineering attack is phishing—an attack orchestrated by an attacker to force a user to interact with an infected (phishing) website in order to extract critical credentials (logins, passwords, payment details) and inject malware into the system. According to the international working group APWG (Anti-Phishing Working Group), the number of phishing attacks against online payments and the financial (banking) sector increased significantly in the first quarter of 2025, amounting to 30.9% of the total number of attacks [2].

There are three main methods of organizing phishing attacks [3]:

- Malicious links, which appear as impostor websites infected with malware;

- Malicious file attachments, which are infected with malware to compromise the user’s computer or files;

- Fraudulent data entry forms, which prompt the user to enter their login credentials or other sensitive information to log in.

In the past, attackers required advanced technical skills and knowledge of web programming, scripting, social engineering techniques, and so on. In recent years, a dedicated criminal industry called Phishing-as-a-Service (PaaS) has emerged, making well-organized phishing attacks accessible to a wide audience of non-professional criminals. This is driving the rapid growth of the phishing threat and highlights the urgent need for effective countermeasures.

As noted in some studies [4,5,6,7], existing traditional methods of detecting phishing web resources, such as the use of blacklists of malicious sites and analysis of uniform resource locators (URLs)—links to specific web pages or the addresses of other web resources on the internet—cannot accurately identify phishing sites, resulting in a significant number of false positives.

In this context, the use of machine learning (ML) methods, such as artificial neural networks (NNs), support vector machines (SVMs), random forest (RF) algorithms, and others, is promising. These methods allow websites to be analyzed and classified based on a set of characteristic URL features associated with phishing. Detecting phishing websites thus reduces to the binary classification of URLs in a vector feature space: determining whether a URL belongs to the legitimate or the malicious (phishing) class. The advantages of machine learning methods are higher accuracy in the presence of noisy and incomplete input data, the ability to adapt in real time and retrain when the training data change, and the ability to detect new types of phishing attacks. Thus, they ensure greater efficiency in detecting phishing websites.

Further advantages in detecting phishing websites come from modern AI technologies based on deep learning (DL) methods, including convolutional neural networks (CNNs), long short-term memory networks (LSTM), Transformers, large language models (LLMs) [8,9,10], and hybrid (multimodal) AI systems [11,12,13]. A distinctive feature of this class of AI methods and systems is that, besides a set of symbolic URL features, they use additional information about the web resource, namely its source code and a visual image of the web page. This increases the dimensionality of the feature space under study and brings additional expert knowledge about the structure of phishing websites into the classification stage, which ultimately increases the reliability of the decisions made. Such models can be classified as multimodal (modality refers to the type of input/output data and the methods of extracting features from these data for subsequent processing in a machine learning model [14]).

As noted in some studies, the approaches discussed above (machine and deep learning methods, multimodal AI systems) have one major drawback: they are implemented based on a “black box” architecture, i.e., they are not transparent to the receiver of the results (in this case, an information security (IS) specialist) and have low interpretability (there is a result, but it is unclear why this result was obtained or what evidence supports it). This explains the increased interest in creating “explainable” AI (XAI) and, in particular, in creating a new generation of phishing website detection systems based on it, which not only have high accuracy in detecting infected sites but also high interpretability (explainability) regarding the obtained results (examples illustrating the construction of such systems can be found in [15,16,17]).

It should also be noted that building phishing website detection systems based on the previously mentioned ML, DL, and XAI methods in practice is challenged by a number of open questions, such as

- The ambiguity in choosing a suitable dataset (training dataset);

- The composition of features included in the dataset;

- The architecture of the classifier model;

- The values of its hyperparameters;

- The means of selecting effective training algorithms (tuning) for the detection system;

- The mechanisms for interpreting the results, considering the natural limitations associated with the required computational costs of system implementation.

Thus, the present study is aimed at developing and experimentally evaluating an industrial-grade multimodal phishing web resource detection system designed to

- Incorporate specialized models for four heterogeneous modalities (URL features and metadata, URL symbolic representation, HTML code, and web page screenshot) within a late fusion architecture;

- Incorporate a comprehensive, end-to-end explainable AI pipeline (SHAP, Grad-CAM, attention matrix analysis) that subsequently generates human-readable explanations utilizing a local LLM;

- Guarantee operational suitability in an SOC environment by means of microservice implementation, TI/SIEM integration, and testing against zero-day links and obfuscated attacks.

The scientific novelty of this work consists of the following aspects:

- A multimodal, late fusion architecture for the detection of phishing web resources is proposed. In this architecture, independent, specialized models for four modalities (CatBoost for URL features and metadata, CNN1D for symbolic URLs, a CodeBERT Transformer for HTML code, and EfficientNet-B7 for screenshots) are combined via a trainable meta-classifier that optimises the F1-score for the “phishing” class.

- A comprehensive, end-to-end explainable AI (XAI) pipeline is developed. It includes SHAP coefficients for tabular features, Grad-CAM and integrated gradients for symbolic and visual models, Transformer attention matrix analysis for HTML code, and the subsequent aggregation of these explanations by a local LLM into structured text reports intended for SOC analysts.

- An industrial-grade prototype system based on a microservice architecture with integration into the SOC/TI/SIEM framework is implemented and experimentally tested. It can process 0.5–1.1 thousand events per second, degrading to a subset of models while maintaining acceptable detection quality.

- A quality assessment is performed, which includes

- A comparison of single-modal and multimodal configurations on a proprietary, updated dataset (3.2 million URLs) and the public MTLP dataset, where the multimodal ensemble achieves an F1-score of 0.989 and 0.953, respectively;

- An exploratory assessment of resistance to typical obfuscation techniques;

- An expert review of the explanation utility with SOC analysts, using Fleiss's kappa and Cronbach's alpha to demonstrate the XAI component's high consistency and practical applicability.

This article is structured as follows. Section 2 provides a comprehensive analysis and the systematization of relevant studies on the development of a machine and deep learning-based phishing detection system using explainable artificial intelligence mechanisms. Section 3 presents the proposed approach to building a multimodal phishing detection system based on deep learning and explainable artificial intelligence and discusses the system’s architecture, the composition of its software modules, and the tasks that it performs. Section 4 presents the results of comparative experiments that confirm the viability and effectiveness of the developed system and offers recommendations for its practical application. The Conclusions provide insights into the potential and prospective uses of multimodal systems.

2. Analysis of Relevant Works

2.1. Description of Data Collection Methodology for Review of Sources

The objective of the review was to systematize and analyze modern approaches to developing multimodal phishing detection systems using XAI methods for the period from 2020 to 2025. Peer-reviewed studies were identified and retrieved from the following indexing databases: IEEE Xplore, ACM Digital Library, Springer, ScienceDirect, arXiv, Google Scholar, eLibrary.ru, and cyberleninka.ru.

Key search queries used to find sources for the review were as follows:

- “multimodal phishing detection” AND (“explainable AI” OR “XAI”);

- “deep learning” AND “phishing detection” AND (“SHAP” OR “LIME”);

- “large language models” AND “phishing”;

- “visual phishing detection” AND “interpretability”;

- “multimodal fusion” AND “cybersecurity”.

Inclusion criteria: English or Russian peer-reviewed articles in scientific journals and conferences; technical reports from leading security organizations; open-source projects with scientific substantiation; studies with empirical results.

Exclusion criteria: publications without a quantitative assessment of performance; work not related to multimodal analysis or to XAI.

2.2. Review of Major Publications on Research into Multimodal Phishing Detection Systems

A large number of studies have examined the practical application of machine learning and deep learning methods in detecting phishing websites (see, for example, the comprehensive reviews [5,7,10,13]). Table 1 provides typical examples of the implementation of ML- and DL-based systems, listing the authors of the relevant publications, the datasets used, the ML and DL models, and the accuracy achieved in detecting phishing sites.

An analysis of the content of these publications (Table 1) leads to the following conclusions:

- There are now fairly representative open datasets containing a large number of URL samples with a wide variety of characteristics for both phishing sites (Kaggle, PhishTank, OpenPhish, etc.) and legitimate sites (Alexa Rank, Common Crawl, etc.), which can be used in the training phase for phishing web detection systems (see also [18]).

- An important stage preceding the training of such systems is a detailed study (preprocessing) of the set of features of phishing and legitimate websites contained in the dataset, including their normalization and coding, the analysis of correlations and informational significance, the possible reduction of the dimensionality of the feature space, etc., using methods of feature selection (detailed information about this can be found in [19,20,21]).

- The authors of several publications [21,22,23] pay close attention to the comparative assessment of the effectiveness of different ML models (as applied to the specific problem of phishing web addresses) using such quality metrics as accuracy, precision, recall, and F1-score (the harmonic mean of precision and recall). Based on their research, random forest algorithms, decision trees, and multilayer perceptrons are preferred.

- A hybrid ML model based on a set of classifiers [11] (for example, implemented by an RF algorithm) is particularly interesting in terms of improving the accuracy of phishing detection, where each individual classifier interacts with its own set of URL features and the final decision on the presence or absence of a phishing threat is taken by an arbitrator (MLP) using a separate voting system.

- The combination of the capabilities of natural language text analysis (natural language processing, NLP), including such features as URLs, text blocks, domains, and logs, with the processing of visual elements (screenshots) of a web page, as well as structural features of HTML code, within the framework of a multimodal concept [10,24,25], allows one not only to increase the precision and recall but also to predict the development of new types of phishing attacks, including generative adversarial attacks.
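The quality metrics named in the list above can be computed directly from confusion-matrix counts. The following is a minimal sketch, with "phishing" taken as the positive class; the function name and the example counts are hypothetical and are not taken from any of the cited studies.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 for the positive (phishing) class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, chosen only for illustration.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=870)
```

With these illustrative counts, accuracy is high (0.96) even though a quarter of the phishing samples are missed (recall 0.75), which is why the F1-score is usually reported alongside accuracy.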

Table 1.

The summary table shows the use of machine and deep learning methods in detecting phishing attacks.


| No. | Authors | Dataset | ML/DL Models | Accuracy |
|---|---|---|---|---|
| 1. | Al-Diabat M. [19] | University of California, Irvine (UCI) repository (11,000 URLs, based on PhishTank and Yahoo Directory, 30 features) | Classifiers based on C4.5 and IREP algorithms. The scikit-learn library version 1.8 was used. | C4.5: 96%; IREP: 95% |
| 2. | Mahajan R., Siddavatam I. [22] | PhishTank (19,653 URLs) + Alexa Rank (17,058 URLs) | Decision trees (DT), RF, SVM. The scikit-learn library version 1.8 was used. | RF: 97.14% |
| 3. | Almomani A., Alauthman M., Shatnawi M.T. et al. [20] | Huddersfield University Dataset 1 (2456 URLs, 30 features); Dataset 2 (10,000 URLs, 48 features) | 16 ML models (RF, classification and regression trees (CART), logistic regression (LR), SVM, NN, Bayesian additive regression trees (BART), etc.). The scikit-learn library version 1.8 was used. | RF: 96% (Dataset 1) and 98% (Dataset 2) |
| 4. | Khera M., Prasad T., Xess L.D. et al. [23] | Phishing website dataset | 9 ML models (RF, DT, LR, K-nearest neighbors (kNN), XGBoost, XBNet, etc.). The scikit-learn library version 1.8 was used. | RF: 97.3% |
| 5. | Alazaidah R., Al-Shaikh A., Almousa M.R. et al. [21] | Dataset 1 (11,055 URLs, 30 features, 2 classes); Dataset 2 (1353 URLs, 9 features, 3 classes) | 24 ML models, 6 learning strategies (Naive Bayes, LR, MLP, AdaBoost, RF, random tree, etc.). The scikit-learn library version 1.8 was used. | Filtered Classification J-48: 90.76% (Dataset 1); RF: 97.26% (Dataset 2) |
| 6. | Aslam S., Aslam H., Manzoor A. et al. [4] | Dataset 1 (DS1): benign Yandex sites; Dataset 2 (DS2): benign sites from Common Crawl and the Alexa database, and phishing sites from PhishTank | LSTM | 96.04% |
| 7. | Ghaleb F.A., Alsaedi M., Saeed F. et al. [11] | Malicious URLs dataset (651,191 URLs) | Hybrid ML model: 3 × RF + multilayer perceptron (MLP) | 96.80% |
| 8. | Krotov E.Yu. [10] | Phishing website dataset (10,000 URLs) + Common Crawl | Multimodal system: CNN + LSTM (image + text) | 92% |
| 9. | Lee J., Lim P. et al. [24] | OpenPhish (1585 URLs) + Alexa Rank (3000 URLs) | Multimodal system: GPT-4-turbo, Claude 3, Gemini Pro-Vision 1.0 | GPT-4: 92%; Claude 3: 90% |
| 10. | Syed Sh. A. [25] | PhishTank, APWG, OpenPhish | BERT (text) + ResNet + VGG16 (image) | 96.2% |

According to the review, multimodal phishing detection systems can be categorized on the basis of three main criteria: the type of modality used, the strategy for combining them, and the level of explainability integration.

2.3. Classification of Data Types for Feature Generation in the Detection of Phishing Attacks

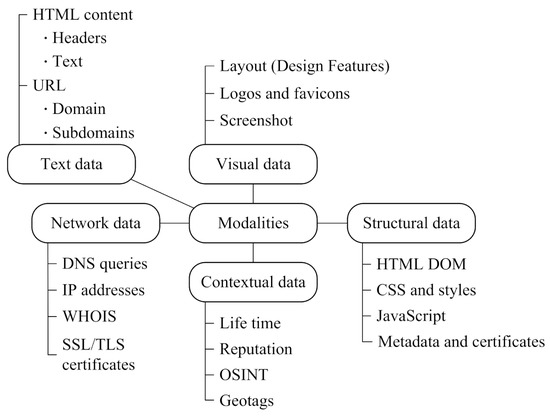

Figure 1 and Table 2 show the possible classification [13,24,26,27,28,29,30] of data types for generating features (modalities) in modern systems.

Figure 1.

Classification of data types for feature generation in phishing attack analysis.

Table 2.

Types of modalities in modern detection systems for phishing attacks.

The following itemization enumerates the features associated with various modalities [7,12,31,32,33].

- The main categories of URL features: lexical, syntactic, semantic, entropic, statistical;

- The main categories of structural HTML features: DOM structural, data entry form structure, characteristics of the page’s script component, CSS and style features of the design template, and semantic HTML features;

- The main categories of visual features: logo detection, layout analysis, color analysis, visual similarity, and image quality.
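To make the URL feature categories above more concrete, the sketch below extracts a few lexical, statistical, and entropic features from a URL using only the Python standard library. The feature names, the token list, and the example URL are illustrative assumptions; they are not the feature set used in the reviewed systems.

```python
import math
from collections import Counter
from urllib.parse import urlparse

# Hypothetical list of tokens often treated as phishing indicators.
SUSPICIOUS_TOKENS = ("verify", "secure", "account", "update", "confirm")


def shannon_entropy(text):
    """Shannon entropy (bits per character) of a string."""
    if not text:
        return 0.0
    counts = Counter(text)
    n = len(text)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def url_features(url):
    """Extract a small, illustrative set of URL features."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    lowered = url.lower()
    return {
        "url_length": len(url),                          # lexical
        "num_dots_in_host": host.count("."),             # syntactic
        "num_digits": sum(ch.isdigit() for ch in url),   # statistical
        "has_at_symbol": "@" in url,                     # syntactic
        "uses_https": parsed.scheme == "https",          # syntactic
        "host_entropy": shannon_entropy(host),           # entropic
        "suspicious_token_count": sum(tok in lowered for tok in SUSPICIOUS_TOKENS),
    }


features = url_features("http://secure-login.example.com/verify?id=123")
```

Such hand-crafted features are cheap to compute, which is consistent with the role of URL analysis as a fast first-stage filter.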

Table 3 shows the comparative characteristics of the achieved accuracy for multimodal detection systems for phishing attacks.

An analysis of publications on the classification of features shows the following:

- URL features provide fast initial analysis with relatively high accuracy (up to 93%) but are vulnerable to attacks by URL obfuscation;

- HTML features provide the most detailed information about the attack structure and are particularly effective in detecting credential phishing;

- Visual features are critical in detecting brand impersonation and visual cloning attacks, achieving 94–98% accuracy, but require significant computational resources;

- Multimodal integration outperforms single-modal approaches in the F1-score by 8–15 percent, but it increases the time to classify (up to 5–10 s);

- Feature engineering remains an important step even in the use of deep learning, as hand-selected and hand-crafted features ensure interpretability and resilience to adversarial perturbations.

Table 3.

Distribution of modalities in modern detection systems for phishing attacks.


| Combination of Modalities | Number of Systems | Average Accuracy | Typical Architectures of ML Models |
|---|---|---|---|
| URL + HTML | 18 | 96.5–99.2% | CNN + LSTM, BERT [34,35] |
| URL + Visual | 12 | 93.4–97.8% | CNN + ResNet/VGG [36,37] |
| URL + HTML + Visual | 8 | 98.0–99.4% | Transformers, LLM [27,28] |
| All 5 types | 3 | 98.4–99.6% | Agent-based LLM systems [27] |

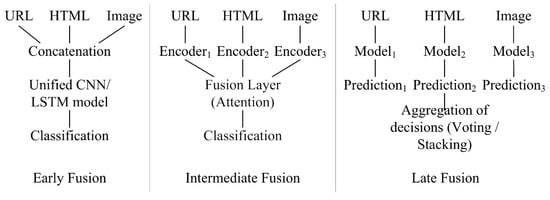

There are four main feature fusion strategies across all modalities [28,34,38] (Table 4 and Figure 2).

Table 4.

Comparative analysis of modality fusion strategies.

Figure 2.

Modality fusion strategies.

- Early fusion: Concatenates the properties of all modalities at the input level, followed by processing with one model. Advantages: Captures low-level correlations of features, simple architecture of the final model. Disadvantages: Loss of specificity of the modality, complexity of training.

- Intermediate fusion: Each modality is processed by a separate encoder and then the features are combined at an intermediate level by means of an attentional mechanism. It provides a balance between specificity and modality interaction.

- Late fusion: Independently train specialized models for each modality with decision aggregation by means of voting or stacking. Advantages: Model specialization, modularity, parallelism. Best results for heterogeneous data.

- Hybrid fusion: Combination of early and late fusion with adaptive weights. This strategy shows maximum performance with high architectural complexity.
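As an illustration of the late fusion strategy described above, the following sketch aggregates the phishing probabilities of several independently trained models by weighted voting. The function name, the weights, and the probabilities are hypothetical; in practice the weights would be tuned on validation data or replaced by a stacking meta-classifier.

```python
import numpy as np


def late_fusion(probabilities, weights, threshold=0.5):
    """Weighted soft voting over per-model phishing probabilities.

    probabilities: array-like of shape (n_models, n_samples)
    weights:       array-like of shape (n_models,), normalized internally
    """
    probs = np.asarray(probabilities, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                      # make the weights sum to 1
    fused = w @ probs                    # weighted average per sample
    labels = (fused >= threshold).astype(int)
    return fused, labels


# Hypothetical outputs of four modality-specific models for three pages.
branch_probs = [
    [0.90, 0.20, 0.55],  # URL features model
    [0.80, 0.10, 0.40],  # character-level URL model
    [0.95, 0.30, 0.60],  # HTML model
    [0.70, 0.05, 0.35],  # screenshot model
]
weights = [0.3, 0.2, 0.3, 0.2]
fused, labels = late_fusion(branch_probs, weights)
```

Because each model only contributes a probability, a branch can be retrained or swapped without touching the others, which is the modularity advantage noted for late fusion.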

2.4. Explainable Artificial Intelligence Methods for Detecting Phishing Attacks

The need for explainability (transparency, interpretability) of the decisions taken by phishing detection systems, i.e., explaining why a particular website is considered legitimate or phishing, has motivated a large number of studies in the field of explainable AI (XAI).

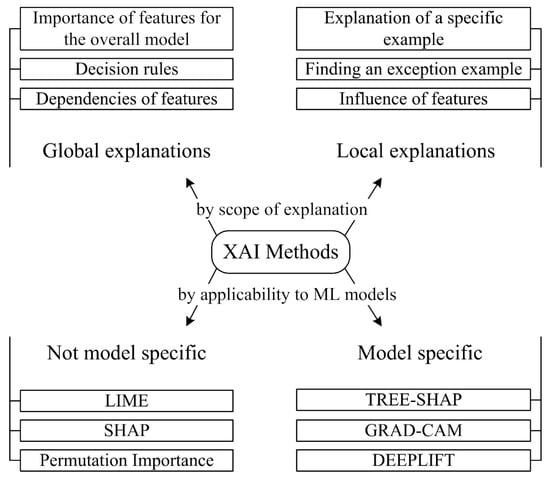

Explainable AI methods are classified according to the range of explanations (global and local) and the degree of focus on the model used (model-agnostic and model-specific [39]) (Figure 3). Global XAI methods [39] provide a general understanding of the model’s behavior across the entire dataset, including feature importance and decision rules. Local methods [40] explain a model’s predictions by showing how features affect a specific sample.

Figure 3.

Classification of XAI methods to explain decision-making process in phishing attack analysis.

Most of the research on XAI for phishing detection focuses on combining ML models with specialized XAI methods that explain the models' decisions. These methods include LIME, SHAP, decision trees, logistic regression, partial dependence plots, integrated gradients, and others [41]. LIME and SHAP are the most popular.

The Local Interpretable Model-Agnostic Explanations (LIME) method [40] is a model-agnostic interpretation method applicable to different types of ML models. It explains an individual prediction of the ML model (here, a binary classification result) by generating perturbed copies of the input, querying the model on them, and fitting a simple interpretable surrogate (typically a linear model) in the neighborhood of the prediction. The coefficients of the surrogate identify the most informative URL features, i.e., those with the greatest impact on the classification outcome. For example, the hybrid approach based on SHAP and LIME proposed in [33] increased the accuracy in the classification of phishing links for a random forest model to 97.06% (+0.65) and for an XGBoost model to 97.21% (+0.41).
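The core idea of LIME (perturb, query, fit a weighted linear surrogate) can be sketched in a few lines of NumPy. This is a simplified illustration and not the reference LIME implementation: it perturbs continuous features with Gaussian noise and uses ordinary weighted least squares, and the black-box model `toy_model` and all parameter values are hypothetical.

```python
import numpy as np


def lime_explain(predict_fn, x, n_samples=2000, scale=0.5,
                 kernel_width=1.0, seed=0):
    """Fit a locally weighted linear surrogate around instance x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # 1. Perturb the instance with Gaussian noise.
    samples = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black-box model on the perturbed samples.
    targets = predict_fn(samples)
    # 3. Weight samples by proximity to x (exponential kernel).
    distances = np.linalg.norm(samples - x, axis=1)
    weights = np.exp(-(distances ** 2) / (kernel_width ** 2))
    # 4. Weighted least squares: scale rows by sqrt(weight).
    design = np.hstack([np.ones((n_samples, 1)), samples])
    sw = np.sqrt(weights)[:, None]
    coef, *_ = np.linalg.lstsq(design * sw, targets * sw[:, 0], rcond=None)
    return coef[1:]  # drop the intercept, keep per-feature slopes


def toy_model(batch):
    """Hypothetical black box: the third feature has no influence."""
    z = 2.0 * batch[:, 0] - 1.0 * batch[:, 1] + 0.0 * batch[:, 2]
    return 1.0 / (1.0 + np.exp(-z))


importances = lime_explain(toy_model, x=[0.1, 0.2, 0.3])
```

The signs of the surrogate coefficients follow the local behavior of the black box: positive for the first feature, negative for the second, and close to zero for the unused third feature.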

Shapley Additive Explanations (SHAP) [39] is, like LIME, a model-agnostic method. It is based on game theory concepts and calculates Shapley values, which reflect the contributions of each player (in this case, URL features) to the outcome of the cooperative game (in this case, an ML prediction of the nature of the website). SHAP ensures local accuracy (the sum of the Shapley values is equal to the difference between the prediction and the average prediction), consistency (if a model change increases the contribution of a feature, its Shapley value does not decrease), and the null-feature property (features with no influence are assigned a zero value).
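The properties listed above can be checked on a small example by computing exact Shapley values through enumeration of all feature coalitions. The sketch below is illustrative only: the toy model, the instance, and the all-zero baseline (used here in place of the average prediction) are assumptions, and exact enumeration is feasible only for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating all coalitions."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley formula.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Hypothetical instance and baseline; feature 3 is never used by the model.
x = (1.0, 1.0, 1.0, 1.0)
baseline = (0.0, 0.0, 0.0, 0.0)


def value_fn(subset):
    """Model output when only the features in `subset` take their real values."""
    z = [x[j] if j in subset else baseline[j] for j in range(len(x))]
    return 3.0 * z[0] + 2.0 * z[1] * z[2]


phi = shapley_values(value_fn, n_features=4)
```

Here the contributions are 3 for the first feature, 1 each for the two interacting features, and 0 for the unused feature; they sum to 5, the difference between the prediction for `x` and the prediction for the baseline.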

The following are examples of applications of this approach (ML + XAI methods).

- Phishing website detection system [16]: base classifier model: random forest (RF); training datasets: PhishTank and Tranco; number of URL features used: 26; Lorenz Zonoids (a multivariate extension of the Gini coefficient) were used as a feature selection procedure (XAI method).

- Phishing website detection system [42]: base classifier models: SVM and RF; dataset: Ebbu 2017; number of URL features: 40; XAI methods: LIME and Explainable Boosting Machine (EBM); prediction accuracy: 94.7% (for SVM) and 97.3% (for RF).

- Phishing website detection system [43]: six ML models were studied (XGBoost, LightGBM, RF, kNN, Twin SVM, CNN) using four datasets (ISCX-URLs, P.L-URLs, Phish Guard URLs, Suspicious-URLs) and the LIME XAI method; the XGBoost model showed the highest prediction accuracy of 96.8%.

One approach to building a phishing website detection system based on XAI, proposed in [44], uses a large language model (LLM) combined with NLP processing of the input text data (URL features), Chain-of-Thought query decomposition, and one-shot prompting (a single worked example in the prompt). Here, the LLM simultaneously functions as a classifier and as an explanatory module that generates a textual explanation of the classification result. Comparative experiments conducted by the authors [44] to evaluate various LLM usage options (five LLM architectures and three datasets) showed the advantage of GPT-4 Turbo, which achieved an F1-score of 0.92.

In [40], the authors present the integration of XAI with large language models to generate natural language explanations for the analysis of phishing emails. The system includes a detection module using classical ML models, which achieves an accuracy of 98.4%; a feature importance module (computing LIME and SHAP); an explanation module (DeepSeek v3, 671B parameters); and prompt templates with four explanation modes (detailed, educational, technical, and simplified). The explanation quality metrics on the reference set prepared by the authors showed accuracy of 94.2%, completeness of 87.6%, consistency of 96.8%, and actionability of 82.1%.

In [35], the authors introduced the PhishTransformer model, which showcases the efficacy of self-attention mechanisms in URL analysis. The model accepts a URL as input; following tokenization and the implementation of convolutional layers at the character level, positional encoding and self-attention layers are used. In an experimental dataset comprising a balanced collection of 100,000 URLs, the authors achieved an F1-score of 0.99. The attention mechanism allows one to identify key tokens in a URL, based on which the decision is most often made about whether a link belongs to a legitimate (official TLD (.com, .org), known brands) or phishing (“verify”, “secure”, “account”, “update”, “confirm”) website.

Across the scenarios previously discussed, the application of XAI approaches provided logical explanations supporting the selection of a particular solution, thereby identifying the URL features that most strongly affected the prediction result (i.e., the reasons for classifying a specific website as either legitimate or phishing), which is of significant importance to the user, who is often an information security specialist.

It should also be noted that, besides the mentioned approach to detecting phishing sites based on URL feature analysis, there are known examples of solving this problem using other features of website maliciousness and other methods of interpreting the results obtained with their help. For example, to analyze the source code of a web page, the bimodal Transformer model CodeBERT [45] can be used, pretrained on a large “code documentation” dataset, which allows the generation of text comments on the program code based on a “zero-shot” scenario, i.e., without fine-tuning the model parameters. Such information is of interest to information security specialists, since the elements of the web page source code provide insight into the structure, design, and functionality of the website.

It is advisable to use XAI methods such as Gradient-Weighted Class Activation Mapping (Grad-CAM) [46] and Deep Learning Important Features (DeepLIFT) [47] when analyzing visual features (website homepage images, screenshots, logos, brand indicators) using convolutional neural networks (CNNs).

The Grad-CAM method [48] creates heatmaps showing image regions that are important for classification using the gradients of the final convolutional layer.
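The Grad-CAM computation itself is short once the activations of the final convolutional layer and the gradients of the class score with respect to them are available. The NumPy sketch below assumes both arrays have already been obtained from a deep learning framework; the function name and the tiny example arrays are hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: feature maps of the last conv layer, shape (C, H, W)
    gradients:   d(class score)/d(activations), same shape
    """
    activations = np.asarray(activations, dtype=float)
    gradients = np.asarray(gradients, dtype=float)
    # Channel weights: global average pooling of the gradients.
    channel_weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps over the channel axis -> (H, W).
    cam = np.tensordot(channel_weights, activations, axes=1)
    # ReLU keeps only regions that support the class.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical 2-channel, 2x2 example.
acts = [[[1.0, 0.0], [0.0, 0.0]],
        [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
         [[-1.0, -1.0], [-1.0, -1.0]]]  # channel 1 argues against it
heatmap = grad_cam(acts, grads)
```

In a real pipeline the low-resolution heatmap is upsampled to the screenshot size and overlaid on the image.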

The Phishpedia system proposed in [36] uses Grad-CAM to visualize critical regions on phishing web pages, detecting 1704 phishing sites in 30 days (1133 zero-days—66.5%) with a false positive rate of 1.4%. Grad-CAM detected fake bank logos, suspiciously placed login forms, and non-standard brand color schemes.

A second widely used method of interpreting CNN outputs (DeepLIFT) evaluates the influence of each input (image pixel) on the neural network output using backpropagation, considering the contributions of each feature to neural network activation.

The authors of [49] conducted a systematic comparison of ten CNN architectures on the Multitype Logo Phishing (MTLP) dataset for the visual analysis of screenshots of web resource home pages. To build neural network analysis models, the authors used transfer learning for subsequent fine-tuning on a balanced set of 15,000 examples.

The authors found that the deep NN DenseNetBlur121D provided the best balance between classification accuracy and model size (F1-score 94.39%, classification time 45 ms, size 28.1 MB); the NN MobileNetV2 was optimal (F1-score 87.6%, classification time 21 ms, size 9.2 MB) for offline use on mobile devices (edge deployment); and the NN ResNet50 demonstrated stability (F1-score 91.04%, classification time 38 ms, size 97.5 MB) on heterogeneous data.

In [37], the authors address the problem of detecting previously unknown (zero-day) phishing websites through visual similarity by training a deep CNN with a triplet-based loss function. The model achieved 81% accuracy on zero-day attacks, outperforming VGG16 (51.32%) and ResNet50 (32.21%). Key advantages of the proposed solution include the ability to train on a few examples (few-shot learning) and robustness to design variations (color palettes, element placement).
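For readers unfamiliar with triplet-based training, the sketch below shows the standard triplet loss with squared Euclidean distance. It is an assumption that this common form resembles the loss used in [37]; the margin and the example embeddings are hypothetical.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss with squared Euclidean distance.

    The loss is zero once the negative is farther from the anchor
    than the positive by at least `margin`.
    """
    anchor, positive, negative = (
        np.asarray(v, dtype=float) for v in (anchor, positive, negative)
    )
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return np.maximum(d_pos - d_neg + margin, 0.0)


# Hypothetical 2-D embeddings of page screenshots.
anchor = [0.0, 0.0]      # page of a protected brand
positive = [0.0, 1.0]    # another page of the same brand
easy_negative = [3.0, 0.0]   # clearly different page
hard_negative = [1.0, 0.0]   # visually similar page of another brand

easy = float(triplet_loss(anchor, positive, easy_negative))
hard = float(triplet_loss(anchor, positive, hard_negative))
```

Only the hard negative produces a non-zero loss, so training concentrates on separating visually similar pages, which is what makes similarity-based detection applicable to previously unseen sites.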

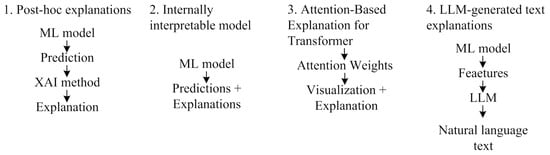

Table 5 and Figure 4 provide the characteristics of the explainable AI methods applied to the analysis of phishing attacks.

Table 5.

Summary of explainable AI methods.

Figure 4.

Options for integrating XAI with ML models for analysis of phishing attacks.

Regarding Table 5, a local visual explanation visualizes the image regions that are important for the model's decision. "Intrinsic" (internally interpretable) means that the model is inherently interpretable and does not require additional explanation methods.

Another important principle for constructing explainable AI systems is the level of integration between XAI and the ML model. A possible classification system for such integration is shown in Table 6.

Table 6.

Comparison of XAI integration levels.

2.5. Main Datasets Used to Train Multimodal ML Models for Phishing Attack Detection

Some key datasets for training multimodal ML models are shown in Table 7.

Table 7.

Key datasets for training phishing detection systems.

An analysis of the datasets and reviewed studies provides insights into certain aspects:

- Phishing sites are accessible for an average of 4–8 h before being blocked, and datasets become quickly outdated;

- The ratio of legitimate to phishing URLs in the real traffic of corporate information systems ranges from 100:1 to 1000:1;

- The percentage of annotation errors in community-driven datasets is quite high (up to 15%);

- Feature distributions are dynamic and change over time (concept drift).
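The practical effect of the class imbalance noted above can be shown with simple arithmetic: at a fixed recall and false positive rate, the share of alerts that are truly phishing drops sharply as the legitimate-to-phishing ratio grows. The recall and false positive rate below are hypothetical values chosen for illustration.

```python
def expected_precision(recall, false_positive_rate, legit_per_phish):
    """Precision on traffic with `legit_per_phish` legitimate URLs per phishing URL."""
    true_positives = recall * 1.0                          # per one phishing URL
    false_positives = false_positive_rate * legit_per_phish
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


# Hypothetical detector: 95% recall, 1% false positive rate.
precision_100 = expected_precision(0.95, 0.01, legit_per_phish=100)
precision_1000 = expected_precision(0.95, 0.01, legit_per_phish=1000)
```

Under these assumptions, roughly half of the alerts are correct at a 100:1 ratio and fewer than one in ten at 1000:1, which is why results on balanced datasets overstate performance in real traffic.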

2.6. Promising Multimodal Systems for Analysis of Phishing Attacks

A promising direction in developing systems for the detection of phishing websites involves integrating deep learning (DL), LLM, and XAI methods. This approach uses a multimodal architecture that combines (concatenates) modules designed to process and analyze diverse features of phishing websites, including URLs, HTML content, screenshots, visual images, and source code. Such an architecture provides high detection accuracy, recall, and reliability while also making the predictions highly interpretable, because the analysis considers the detection task from several perspectives (see, e.g., [52]).

The research outlined in [27] introduces the PhishAgent system, an agent-based framework for phishing detection that leverages multimodal large language models. The perception module is designed to analyze various data modalities: it processes URLs using tokenization and embeddings, extracts features from HTML via Document Object Model (DOM) parsing, and performs object detection and brand database matching for logos. The reasoning module is predicated on employing a cloud-based large language model (LLM) utilizing a Chain-of-Thought (CoT) prompting strategy. The system implements the construction of a brand knowledge graph and a database of known phishing patterns. The action module allows the generation of explanations and execution of queries to external APIs (VirusTotal, WHOIS). The system yields an F1-score of 93.7–96.2% on three datasets. Another distinctive feature is its resistance to adversarial attacks, with a decrease in accuracy of only 2.3% (the best result among the systems considered by the authors). Disadvantages include the high cost of inference (via the API of a cloud commercial LLM) and a processing delay of 5–15 s per query.

The paper [28] presents the KnowPhish system, which addresses the problem of reference-based phishing detection without a predefined list of brands through the automatic construction of a multimodal knowledge graph. A multimodal approach is used, including a combination of visual (CLIP embeddings), text (BERT), and structural (GraphSAGE) analysis. The system achieves an F1-score of 89.6% for zero-day attacks and 94.3% for known threats.

The paper [34] proposes a system that has the highest accuracy in classifying phishing resources among non-LLM approaches through the late fusion of specialized models. The system architecture includes a URL branch (character-level, CNN), another URL branch (vocabulary-level, Transformer), and an HTML branch (graph convolutional network for DOM tree analysis). This approach yielded an F1-score of 99.27% on a specialized dataset.

General-purpose LLMs can be nearly as successful as specialized phishing analysis models in terms of classification accuracy, provided that the necessary prompt data are prepared and web search capabilities are enabled, and they can also explain their decisions in natural language. In [44], the best LLM results, obtained for GPT-4 Turbo and Claude 3, included an F1-score of 0.92 in zero-shot prompt mode.

Domain-specific models for phishing analysis typically achieve over 95% F1-scores on data with a known distribution but have low generalization capacity. It was shown in [44] that URLNet and URLTran models, trained on a single dataset, experienced 10–30% decreases in their F1-scores on URLs from other sources because of data drift. Therefore, a combination of transfer learning approaches (retraining specialized high-speed models) on new data is necessary, along with models capable of explaining the classification results, providing meaningful support to the expert making the final decision.

2.7. Findings from the Systematic Review

The challenges encountered in the advancement of phishing attack detection systems involve the following.

- Contemporary machine learning models exhibit susceptibility to adversarial attacks [53], while existing certified defences impose prohibitive computational overheads. This highlights critical research needs: (1) the development of scalable certified defences for deployment in production systems, (2) the implementation of adaptive adversarial training robust to unknown attack types, and (3) the creation of robustness benchmarks tailored to the domain of phishing attacks.

- Systems based on large language models (LLMs) [26,27] demonstrate high classification accuracy; however, the latency inherent in their operation—specifically the 5–15 s delay in decision-making and explanations—presents a critical barrier to their utilization in real-time defence systems.

- The most advanced detection systems for zero-day phishing threats attain recall in the range of 87–94%, so existing systems consistently fail to detect 6–13% of unknown threats. To mitigate this vulnerability, current research efforts [26,28] are focused on advancing few-shot learning, meta-learning, and anomaly detection.

Thus, multimodal phishing detection systems presently demonstrate the following properties.

- Current hybrid architectures demonstrate good detection accuracy, achieving performance rates of 98–99.68%. The integration of large language models (LLMs) has yielded a significant advancement in zero-day detection, with recall metrics ranging from 87 to 94%, while also enabling the generation of natural language explanations. Additionally, explainable artificial intelligence (XAI) methodologies are utilized in a substantial portion (80%) of recent research.

- The adoption of multimodal systems has resulted in an improvement in accuracy metrics ranging from 5% to 12%. It has been demonstrated that late fusion techniques produce better outcomes when dealing with heterogeneous modalities. Furthermore, agent-based LLM architectures constitute a new paradigm characterized by their integrated reasoning functionalities.

- A critical challenge is ensuring the adversarial robustness of models. The open problems include a 3–9% decrease in accuracy, a temporal decay effect (an 8–15% degradation in performance without retraining), and high LLM latency, which imposes significant real-time operational constraints.

Summarizing the review results, the following research gaps can be identified. Existing multimodal solutions for detecting phishing web pages either focus solely on one or two modalities (e.g., visual similarity and logos in VisualPhishNet and subsequent works) or employ heavy, cloud-based multimodal LLM agents with access to external knowledge (PhishAgent, KnowPhish, etc.). This significantly limits their applicability in on-premise security operations center (SOC) environments with strict requirements concerning latency, inference costs, and data control. In conclusion, it should be noted that the existing literature offers practically no solutions that, at the same time,

- Utilize multiple heterogeneous modalities (URL and metadata, symbolic URL, HTML code, page screenshot) in a late fusion architecture featuring a trainable meta-classifier;

- Incorporate an end-to-end explainable AI (XAI) loop with modality-specific interpretation methods and the consolidation of natural language explanations via a local LLM;

- Are implemented as an industrial microservice prototype, integrated with SOC/TI/SIEM frameworks, and evaluated using both standard classification metrics and an assessment of explanation usefulness for real SOC analysts.

3. Design of a Multimodal Phishing Website Detection System Using Explainable Artificial Intelligence

The present section describes the design and implementation process of a phishing web resource detection system, as well as a series of experiments to evaluate the effectiveness of multimodal analysis.

3.1. Structural Diagram of the Web Resource Analysis System

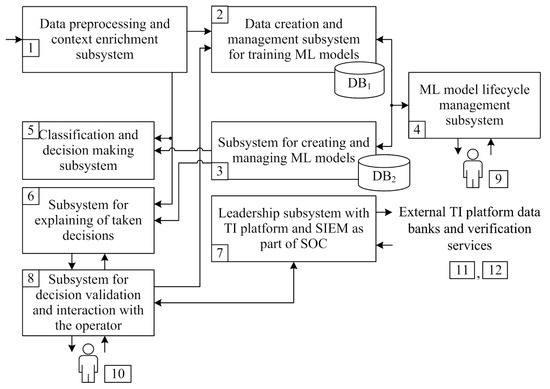

The structural diagram of the proposed web resource analysis system includes subsystems that implement a comprehensive analysis of the target resource using a multimodal set of late fusion models, with the ability to generate detailed reports in natural language for the SOC expert (Figure 5).

Figure 5.

Architecture of the web resource analysis system.

The system receives the URL of the web resource being analyzed, downloads the HTML of the home page, and also downloads an image of the home page (screenshot).

Subsystem (1) handles the preprocessing of initial data and context enrichment (Table 8).

Table 8.

Modalities of analysis of the target web resource in the task of detecting phishing attacks.

This subsystem involves the following:

- Feature extraction;

- Context enrichment with data from external sources;

- Normalization, standardization, and the generation of a multimodal context.

URL analysis involves the extraction of lexical and statistical features and the collection of associated metadata. Metadata acquisition includes checking the URL domain against known domain lists, obtaining the domain registration age (WHOIS), and collecting other data. To enrich domain attributes, the system can utilize not only classic WHOIS queries but also the Registration Data Access Protocol (RDAP), which was standardized by the IETF in RFC 7480–7484 as a modern replacement for WHOIS. Unlike the historically text-based WHOIS, RDAP provides structured responses in JSON format, a unified REST/HTTP query interface, and built-in security and access control mechanisms. This facilitates seamless integration with the system’s microservice architecture and ensures compliance with contemporary registration data protection and minimization requirements (as stipulated by the GDPR and the current ICANN policy regarding the de facto replacement of WHOIS with RDAP).
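Because RDAP responses are structured JSON, the domain registration age can be derived without text parsing. The sketch below is illustrative only: it assumes a response that has already been fetched and decoded, and that it carries the standard `events` array with a `registration` entry whose `eventDate` is an RFC 3339 timestamp. The function name and the sample response are hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional


def registration_age_days(rdap: dict, now: Optional[datetime] = None) -> Optional[int]:
    """Return the domain age in days from a decoded RDAP domain response."""
    now = now or datetime.now(timezone.utc)
    for event in rdap.get("events", []):
        if event.get("eventAction") == "registration":
            # Python 3.10's fromisoformat() does not accept a trailing "Z".
            stamp = event["eventDate"].replace("Z", "+00:00")
            return (now - datetime.fromisoformat(stamp)).days
    return None  # registration date not published by the registry


# Hypothetical decoded response for a recently registered domain.
sample = {
    "objectClassName": "domain",
    "ldhName": "paypa1-security.tk",
    "events": [{"eventAction": "registration", "eventDate": "2025-01-10T00:00:00Z"}],
}
age = registration_age_days(sample, now=datetime(2025, 1, 13, tzinfo=timezone.utc))
```

A very small age value is a typical input for a tabular model, while a `None` result can be encoded as a separate "unknown" feature rather than as zero.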

Over 89 features are generated, providing comprehensive information about the URL.
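To make the notion of lexical and statistical URL features concrete, the sketch below computes a handful of them using only the Python standard library. The specific features and their names are assumptions for illustration; they are not the feature set used in the system.

```python
import math
from collections import Counter
from urllib.parse import urlsplit


def url_lexical_features(url: str) -> dict:
    """Compute a few illustrative lexical/statistical features of a URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    counts = Counter(url)
    # Shannon entropy of the character distribution (bits per character).
    entropy = -sum((c / len(url)) * math.log2(c / len(url)) for c in counts.values()) if url else 0.0
    return {
        "url_length": len(url),
        "host_length": len(host),
        "num_dots_in_host": host.count("."),
        "num_digits": sum(ch.isdigit() for ch in url),
        "num_hyphens": url.count("-"),
        "has_at_symbol": "@" in url,
        "uses_https": parts.scheme == "https",
        "char_entropy": entropy,
    }


features = url_lexical_features("http://paypa1-security.tk/login")
```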

The subsystem (2) used to create and manage data for the training of ML models enables one to supplement the database (DB1) with new labeled data from external sources (e.g., PhishTank subscription newsletters) and internal data validated by SOC specialists.

Subsystem (2) comprises the following components:

- A module dedicated to data labeling, relabeling, and label validation;

- A database (DB1) for storing enriched and prepared data;

- A version management module for dataset tracking and synchronization with corresponding machine learning (ML) models;

- A balancing and augmentation module configured to generate training, validation, and test datasets.

The regularly updated example database allows for the further training and/or retraining of classifier models as data drift occurs. A database of explanations for URL phishing indicators is also being developed (for example, by comparing them with MITRE tactics and techniques, known APT attacks, etc.).

The subsystem for machine learning (ML) model creation and management (3) facilitates the updating of operational models deployed within the data processing pipeline. This subsystem incorporates the following modules:

- Machine learning (ML) models specifically designed for the analysis of URLs/metadata, images, and the source code of target resource pages;

- A late fusion multimodal module that operates based on a weighted model voting mechanism;

- A product model database (DB2).

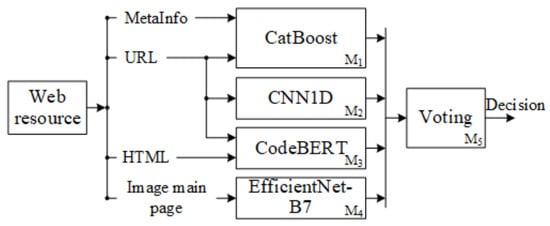

The structures of ML model interactions and the designations of specific models are shown in Figure 6 and Table 9.

Figure 6.

The structure of ML model interaction used for the analysis of phishing web resources.

Table 9.

The main ML models used in the web resource analysis system.

Data analysts manage the model lifecycle using subsystem (4). Subsystem 4 includes the following modules:

- A performance monitoring module;

- A data drift detection module, which detects decreased model classification accuracy on freshly labeled data;

- A module for retraining and/or updating models at specified intervals.
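The drift check described in this list can be sketched as a comparison between a reference F1-score and the F1-score measured on a freshly labeled batch. This is a simplified illustration: the tolerance `max_drop` and the function names are assumptions, and a production module would likely also track windowed statistics.

```python
def f1_score(y_true, y_pred):
    """F1-score for the positive class (1 = phishing)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def drift_detected(reference_f1, y_true, y_pred, max_drop=0.05):
    """Flag drift when F1 on fresh labels falls more than `max_drop` below the reference."""
    return reference_f1 - f1_score(y_true, y_pred) > max_drop


# Fresh batch labeled by analysts (toy data): the model misses two of three attacks.
fresh_labels = [1, 1, 1, 0, 0, 0]
fresh_preds = [1, 0, 0, 0, 0, 1]
needs_retraining = drift_detected(0.95, fresh_labels, fresh_preds)
```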

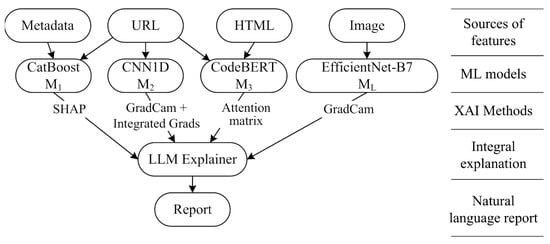

The subsystem for classification and decision-making (5) uses trained models to classify the web resource being analyzed. The subsystem for explaining the decision made (6) implements the scheme presented in Figure 7 and Table 10.

Figure 7.

The process of analyzing a web resource and generating an explanation in natural language for an SOC specialist.

Table 10.

XAI mechanisms used in combination with ML models as part of the web resource analysis system.

The subsystem implements API interaction with the locally installed LLM, the QwQ-32B reasoning model of the Qwen series [60]. Explanations of why URLs were classified as phishing links, prepared in subsystem (2), are used as a special prompt for zero-shot learning. The output of subsystem (6) is a JSON file with a class name for the URL and a short text explanation of the classification result according to the proposed template. Only those websites that the model classifies as malicious are handled by the error correction mechanisms, which keeps the overall system performance acceptable given that the LLM throughput (events per unit time) is lower by two orders of magnitude.

The SOC’s threat intelligence and SIEM integration subsystem (7) collects metadata from web sources to improve the capabilities of modules for classifying and explaining information. This subsystem automates the response to phishing attacks by creating SOAR incidents and promptly reporting them to security monitoring specialists in the SOC.

The decision verification subsystem (8) provides an interface for first- and second-line SOC monitoring experts to adjust the marking. The subsystem includes the following modules:

- SOC analytics dashboard;

- Decision verification and data markup module.

Threat intelligence feeds (PhishTank, OpenPhish) and reputation services (VirusTotal, Google Safe Browsing, Kaspersky TI) serve as external data sources.

3.2. Key ML Models in a Multimodal Web Resource Analysis System

In the base version, the ML models were trained independently of one another to implement a late fusion strategy and to allow models to be selected for different scenarios (stream event processing or back-end analysis of individual websites).

3.2.1. Developing a URL Analysis Model

—CatBoost Model for Analysis of URL-Extracted Features and Metadata

This study tested several decision tree-based models (random forest, XGBoost, CatBoost) for the analysis of tabular features extracted from URLs and metadata in a binary classification problem. Optuna was used to select the optimal hyperparameters for each model. CatBoost achieved the best ROC-AUC, indicating a strong ability to discriminate between classes (Table 11).

Table 11.

Comparative results of tree-based models on the prepared dataset.

The CatBoost model provides the best representation of categorical and quantitative features and can be efficiently multithreaded on the CPU. Translating the model into C and compiling it with LLVM increased its performance by 26% compared to the original version. To train the model, we used a composite sample of

- More than 150,000 domain names from real phishing campaigns (collected from PhishTank, OpenPhish, VirusTotal);

- 200,000 legitimate domains from Alexa Top and SSL certificates (Let’s Encrypt, Mozilla).

—CNN1D for URL Analysis at the Character Level

CNN1D (Table 12) performs the character-level analysis of local URL patterns.

Table 12.

Parameters of CNN1D URL classification model.

The choice of a CNN was justified by the following:

- The ability to extract local patterns as substrings characteristic of phishing URLs (e.g., “login,” “secure”) through the use of convolutions with kernels of varying sizes, which allows for the simultaneous analysis of short (“@,” “//”) and long (“login”) URL anomalies and improves the classification accuracy;

- High analysis efficiency on the CPU because of the reduced number of model parameters.
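The character-level input and the role of kernel size can be illustrated without a deep learning framework. The sketch below encodes a URL as a fixed-length sequence of character indices and lists the substrings that a stride-1 kernel of a given width would see. The alphabet, the maximum length of 200, and the function names are assumptions and do not reproduce the parameters in Table 12.

```python
import string

# Assumed alphabet: lowercase letters, digits, and common URL punctuation.
ALPHABET = string.ascii_lowercase + string.digits + "-._~:/?#[]@!$&'()*+,;=%"
CHAR_TO_ID = {ch: i + 2 for i, ch in enumerate(ALPHABET)}  # 0 = padding, 1 = unknown


def encode_url(url, max_len=200):
    """Map a URL to a fixed-length list of integer character indices."""
    ids = [CHAR_TO_ID.get(ch, 1) for ch in url.lower()[:max_len]]
    return ids + [0] * (max_len - len(ids))


def windows(url, kernel_size):
    """Substrings covered by a width-`kernel_size` convolution kernel (stride 1)."""
    return [url[i:i + kernel_size] for i in range(len(url) - kernel_size + 1)]


url = "http://paypa1-security.tk/login"
encoded = encode_url(url)
short_patterns = windows(url, 2)  # a narrow kernel can match "//"
long_patterns = windows(url, 5)   # a wider kernel can match "login"
```

Running kernels of several widths in parallel lets one network respond to both kinds of pattern in a single pass over the encoded sequence.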

A balanced (50% phishing and 50% legitimate web resources) MTLP dataset [61] was used to train the model. It included

- Phishing URLs (from open sources such as PhishTank and OpenPhish);

- Legitimate URLs (from popular services, including Google, Microsoft, and banking portals);

- Metadata—page source code, domain WHOIS data, screenshots, and binary labels (“phishing”/“not phishing”).

The CNN1D model for web resource symbolic name analysis demonstrated high efficiency in detecting phishing URLs, achieving an F1-score of 91.41% on the test set.

3.2.2. Development of a Model for the Analysis of Visual Images of the Main Page of a Web Resource

—CNN2D Model for Visual Image Analysis Based on Pretrained EfficientNet-B7

The analysis used a pretrained version of EfficientNet-B7, which has demonstrated high performance in computer vision tasks because of its balanced scaling of network depth, width, and input resolution. Pretrained on ImageNet, EfficientNet-B7 is able to effectively detect complex visual patterns such as logo distortions, non-standard layouts of UI elements, and other phishing indicators. To adapt the model to binary classification, the last layer was replaced by a linear classifier that estimates the probability that a web resource belongs to the “phishing” class.

The model was trained on the MTLP dataset containing phishing and legitimate website images (50/50 split). To increase the model’s robustness to data changes, data augmentation was applied; the images were scaled to a fixed size (16:9 aspect ratio) and normalized using the ImageNet statistics.

For training, the BCEWithLogitsLoss loss function was used. This function combines a sigmoid layer and binary cross-entropy loss in a single operation, ensuring numerical stability. The AdamW optimizer was used with separate learning rates for the frozen layers and for the classifier, which minimizes the risk of corrupting the pretrained weights. OneCycleLR was used to dynamically adjust the learning rate, which cycles within a fixed range over the training epochs.
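The numerical benefit of computing the loss directly from logits can be shown with a standard stable formulation, max(x, 0) − x·y + log(1 + exp(−|x|)). The sketch below is a plain-Python illustration of that formula and is not taken from any library's source code; the naive variant is included only for comparison.

```python
import math


def bce_with_logits(logit: float, target: float) -> float:
    """Stable binary cross-entropy computed from a raw logit."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def naive_bce(logit: float, target: float) -> float:
    """Sigmoid followed by cross-entropy; breaks down for large |logit|."""
    p = 1.0 / (1.0 + math.exp(-logit))
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


moderate = (bce_with_logits(0.3, 1.0), naive_bce(0.3, 1.0))  # both forms agree
extreme = bce_with_logits(1000.0, 0.0)                       # finite loss

try:
    naive_bce(1000.0, 0.0)       # sigmoid saturates to exactly 1.0
    naive_failed = False
except ValueError:               # log(0) is undefined
    naive_failed = True
```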

The developed visual image analysis model shows high performance in detecting phishing sites, with an F1-score of 93.22 percent.

3.2.3. Development of a Model for the Analysis of the HTML Code of the Main Page of a Web Resource

—Transformer for Analysis of the HTML Code of the Main Page Based on the Pretrained CodeBERT

According to the literature review, BERT variants predominate in the text analysis of HTML content. The CodeBERT Transformer is pretrained on code (six programming languages, including JavaScript) and is a powerful tool for tasks such as JavaScript obfuscation detection.

The following were extracted from the source site’s home page: JavaScript code blocks, inline handlers, and HTML structure (forms, iframes, meta-tags). The extracted blocks and URLs were combined into a single string for tokenization, with a maximum of 512 tokens.

The model was fine-tuned in batch mode on the MTLP dataset, which contained phishing and legitimate web pages. The final classifier based on the fine-tuned CodeBERT model achieved an F1-score of 0.950 on the test sample.

3.2.4. Development of a Meta-Classifier Model

Within the proposed architecture for a multimodal phishing web resource detection system, models M1–M4—specifically CatBoost for tabular URL features, CNN1D for symbolic URLs, CodeBERT for HTML code, and EfficientNet-B7 for visual page appearance—are trained independently. Each model generates probability estimates for an object belonging to the “phishing” class.

A subsequent level employs a trainable meta-classifier, M5, to execute a late fusion strategy. This architecture aligns with stacked generalization [62], where the second level (the meta-learner) takes the base model outputs as input and determines the optimal method for their combination.

The current implementation of the system incorporates a second-level tabular model (a linear classifier), functioning as a meta-classifier. This is realized as a generalized arbiter (stacking classifier) [62] that

- Utilizes estimations of class membership probabilities derived from the base models;

- Determines the weighting of modality contributions based on the validation sample to optimize the F1-score for the positive class (phishing);

- Facilitates operation with partially accessible modalities (for example, instances where screenshots are unavailable or HTML cannot be downloaded), which is essential for security operations center (SOC) environments.

Training for the M5 model is structured as a two-stage stacking process to prevent data leakage between layers.

- Training the base models. Models M1 to M4 are trained using the original features of their respective modalities. For each object within the validation set, phishing probability predictions are generated by the four models.

- Creating the training set for model M5. For each URL, a meta-feature vector is developed comprising the M1–M4 predictions and consistency aggregates—specifically, the mean, variance, and the total number of models that identified the phishing class. The target labels are consistent with those specified in the original problem.

- Training a meta-classifier. A secondary tabular classifier is developed to optimize the F1-score for the positive class, utilizing the derived meta-features. Cross-validation is employed to select hyperparameters and the decision threshold; this facilitates a reduction in unidentified phishing attacks while simultaneously managing the proliferation of false positives.

- Inference mode on new data. During the operational phase, models M1 to M4 calculate probability estimates in parallel. These probabilities are subsequently provided to model M5, which generates the final estimate.
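The meta-feature vector described in these steps can be sketched as follows: the four base-model probabilities are extended with their mean, their variance, and the number of models voting for the phishing class, and a linear scorer is applied on top. The vote threshold of 0.5, the use of population variance, and the function names are assumptions; the weights of a real meta-classifier would be learned on the validation predictions.

```python
import math
from statistics import mean, pvariance


def meta_features(probs, vote_threshold=0.5):
    """Build the meta-feature vector from base-model phishing probabilities."""
    votes = sum(p >= vote_threshold for p in probs)
    return list(probs) + [mean(probs), pvariance(probs), float(votes)]


def linear_meta_score(features, weights, bias):
    """Logistic score of a linear meta-classifier (weights assumed to be pre-trained)."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Toy predictions in a fixed order: CatBoost, CNN1D, CodeBERT, EfficientNet-B7.
x = meta_features([0.9, 0.8, 0.2, 0.7])
```

The variance and vote-count features let the second level distinguish unanimous predictions from cases where a single branch disagrees strongly.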

Within the framework of model ensemble theory [62], a trainable meta-classifier represents a more generalized case, where the weights and interactions between various modalities are determined according to the dataset. For the purpose of phishing detection, this approach is essential for several reasons.

- Adaptive modality weighting. URL-based models occasionally generate false positives for popular legitimate domains (such as vk.com and rutube.ru), whereas a visual model correctly identifies these sites as non-phishing. A meta-classifier facilitates the downweighting of the URL branch in such instances—for example, when a high-confidence URL model prediction coincides with a low-confidence visual model output. Conversely, it enhances the contributions of those modalities that provide more reliable indicators for a specific class of examples.

- Accounting for non-linear interactions between modalities. Stacking facilitates the consideration of complex scenarios—for instance, where a URL appears relatively innocuous yet the HTML code contains suspicious input forms and redirects, and the visual appearance mimics a brand logo. These combinations are characteristically found in phishing attacks. Simple voting mechanisms fail to distinguish between instances where “two moderately confident models agree” and those where “one model strongly disagrees while others remain uncertain”; conversely, a meta-classifier can be trained to assign distinct final decisions to these specific patterns.

- Prioritizing the positive class (phishing) and managing the balance between false positives and false negatives. In the training of the classifier, the primary performance metric utilized is the F1-score for the “phishing” class. This approach facilitates a strategic shift in the balance towards minimizing false negatives (missed attacks) while maintaining an acceptable false positive rate, as previously established for the CatBoost model. Furthermore, the decision threshold for M5 may be independently adjusted for positive and negative alerts, in accordance with specific SOC regulations.

- Preserving functionality during modality unavailability. In a production environment, various data sources may periodically become unavailable; for instance, a screenshot might be absent, HTML code may fail to load, or integration with external threat intelligence (TI) services could be temporarily suspended owing to security policies. The meta-classifier can be trained on incomplete feature combinations—utilizing techniques such as modality masking or dropout—enabling it to transition seamlessly from full multimodal operation to a reduced configuration. Compared to a monolithic hybrid architecture, this strategy substantially increases the system’s fault tolerance.
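One simple way to realize modality masking is to pair each base-model probability with an availability flag and to randomly drop modalities while training the meta-classifier. The sketch below illustrates this under stated assumptions: the modality keys, the neutral fill value of 0.5, and the function names are hypothetical and not taken from the prototype.

```python
import random

MODALITIES = ("url_tabular", "url_chars", "html", "screenshot")  # assumed keys


def masked_meta_input(probs, fill_value=0.5):
    """Encode each modality as (probability, availability flag); None marks a missing one."""
    features = []
    for name in MODALITIES:
        p = probs.get(name)
        features.append(fill_value if p is None else p)
        features.append(0.0 if p is None else 1.0)
    return features


def drop_modalities(probs, drop_prob, rng):
    """Training-time augmentation: hide each modality with probability `drop_prob`."""
    return {k: (None if rng.random() < drop_prob else v) for k, v in probs.items()}


# Inference when the page could not be downloaded: no HTML and no screenshot.
partial = masked_meta_input({"url_tabular": 0.9, "url_chars": 0.8, "html": None})
augmented = drop_modalities({"url_tabular": 0.9, "html": 0.4}, 0.3, random.Random(0))
```

The availability flags let the second-level model learn different weights for the full and reduced configurations instead of treating the fill value as a real prediction.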

3.3. Key XAI Technologies as Part of a Multimodal Web Resource Analysis System

In the design of the explanation subsystem, a deliberate decision was made to restrict its scope to post hoc and built-in XAI methods. These methods satisfy three essential requirements, formulated based on the findings of the review (Section 2.4, Tables 5 and 6, and Section 2.7): (1) consistency with the specific model type employed, thereby ensuring the fidelity of the resulting explanations; (2) a predictable and acceptable computational overhead, requisite for stream processing within a security operations center (SOC) environment; and (3) demonstrable efficacy in the context of security and fraud prevention tasks. The chosen methodology is as follows: for the tree-based model M1 (CatBoost applied to URLs and metadata), SHAP is utilized; for the convolutional models CNN1D (symbolic URL analysis) and CNN2D (screenshot analysis), the gradient-based methods Grad-CAM and integrated gradients are employed; and, for the Transformer model (CodeBERT applied to HTML), attention is harnessed as an intrinsic interpretation mechanism. The outputs generated by these disparate XAI components are subsequently converted into a unified intermediate representation and aggregated by a local large language model (LLM) into a concise text report, intended for the use of SOC analysts.

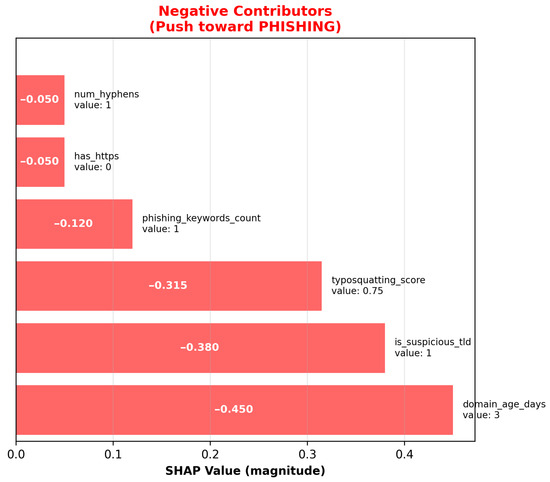

3.3.1. SHAP Coefficients for Interpreting the Performance of the M1 CatBoost Model

The CatBoost framework has a built-in implementation for extracting SHAP values for features. An example of interpreting a classification decision for a synthetic test is shown in Table 13 and Figure 8.

Table 13.

Example of interpretation of the decision of model M1.

Figure 8.

Histogram of top features determining the model’s decision.

3.3.2. Grad-CAM and Integrated Gradients for Interpreting the Performance of the M2 CNN1D Model

An adapted version of the Grad-CAM method (for CNNs with one-dimensional input layers) weights the feature maps by importance using gradients and displays the focus area of the model in the intermediate representation (Table 14).

Table 14.

Example of interpretation of the decision of model M2.
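The one-dimensional Grad-CAM computation can be sketched in plain Python: each feature map is weighted by the mean of its gradients, the weighted maps are summed per position, and negative values are clipped. The input values below are toy numbers; in practice the feature maps and gradients would come from a convolutional layer of the trained network.

```python
def grad_cam_1d(feature_maps, gradients):
    """Grad-CAM for 1D inputs.

    feature_maps, gradients: K channels, each a list of L positions, where
    gradients[k][i] is d(score)/d(feature_maps[k][i]).
    """
    # Channel importance: gradient averaged over all positions.
    weights = [sum(g) / len(g) for g in gradients]
    length = len(feature_maps[0])
    # Weighted sum over channels, followed by ReLU.
    cam = [
        max(0.0, sum(w * fmap[i] for w, fmap in zip(weights, feature_maps)))
        for i in range(length)
    ]
    peak = max(cam)
    return [v / peak for v in cam] if peak > 0 else cam


# Two channels over three positions (toy values).
heat = grad_cam_1d(
    feature_maps=[[1.0, 0.0, 2.0], [0.0, 3.0, 0.0]],
    gradients=[[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0]],
)
```

The resulting map has the resolution of the chosen intermediate layer, so it highlights regions of the URL rather than individual characters.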

The integrated gradients method works at the input level (URL symbols) and indicates the contribution of each symbol to the model’s final forecast based on an integrated gradient score (but it requires a defined baseline).
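The role of the baseline can be seen in a small numerical example. The sketch below approximates integrated gradients for a toy logistic model with a midpoint Riemann sum; the model, its weights, and the all-zero baseline are assumptions for illustration and stand in for the trained CNN1D and its input representation.

```python
import math


def model(x, w, b):
    """Toy differentiable classifier: logistic regression."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def gradient(x, w, b):
    """Analytic gradient of the toy model with respect to its input."""
    p = model(x, w, b)
    return [p * (1.0 - p) * wi for wi in w]


def integrated_gradients(x, baseline, w, b, steps=200):
    """Average the gradient along the straight path from baseline to x."""
    total = [0.0] * len(x)
    for s in range(steps):
        alpha = (s + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(gradient(point, w, b)):
            total[i] += g
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]


w, b = [2.0, -1.0, 0.5], -1.0
x, baseline = [1.0, 0.0, 1.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, w, b)
```

The attributions sum approximately to the difference between the model output at the input and at the baseline, so a different baseline yields different per-symbol contributions.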

3.3.3. Grad-CAM for Interpreting the Performance of the CNN2D Model

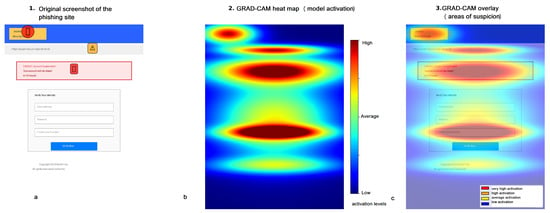

The EfficientNet-B7 neural network consists of mobile inverted bottleneck convolution blocks. The final layer prior to global average pooling was chosen for Grad-CAM operation. The MTLP dataset was previously used for the fine-tuning of the model, so a screenshot of the main page of the synthetic website, PayPA1-Security.tk, was used to illustrate the operation of XAI. The visualization results of the model’s operation are shown in Figure 9.

Figure 9.

Illustration of Grad-CAM’s operation for the analysis of an image of the main page of a web resource: (a) screenshot with markers of areas indicating phishing; (b) heatmap of model activation; (c) overlay of the Grad-CAM heatmap on the original image.

3.3.4. Attention Matrix for Interpreting the Performance of the CodeBERT Model

The attention matrix is a table that describes which HTML page elements the model considers to be related. When interpreted, this approach allows the relationship between extracted page elements and the URL to be evaluated and the final decision to be made.

For the synthetic web resource http://paypa1-security.tk/login, the HTML page size was 2985 characters, 232 tokens were extracted, and the attention matrix included 53,824 elements (232 × 232). Examples of extracted tokens are .

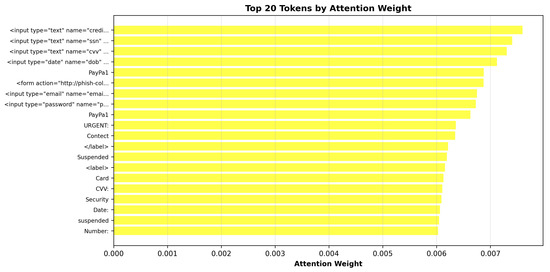

Figure 10 shows a histogram illustrating the distribution of attention values among the extracted tokens in the binary web resource classification task.

Figure 10.

Most significant tokens based on the attention matrix analysis.
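One possible way to rank tokens from an attention matrix is to average the attention each token receives from all other tokens. The sketch below assumes this column-mean aggregation, which may differ from the procedure used to produce Figure 10; the tokens and attention values are hypothetical.

```python
def attention_received(attention):
    """Mean attention each token receives (column mean of a square matrix)."""
    n = len(attention)
    return [sum(row[j] for row in attention) / n for j in range(n)]


def top_tokens(tokens, attention, k=3):
    """Return the k tokens with the highest received attention."""
    scores = attention_received(attention)
    ranked = sorted(zip(tokens, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical 3-token example; each row sums to 1, as in softmax attention.
tokens = ["<form", "password", "action"]
attention = [
    [0.2, 0.7, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.5, 0.2],
]
ranking = top_tokens(tokens, attention, k=2)
```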

3.3.5. LLM Explainer for Preparing Final Reports in Natural Language

A local LLM, Qwen2.5-32B, is used to generate the final natural language report summarizing the explanations obtained by the different approaches.

The Grad-CAM results for CNN2D are prematched with elements of the HTML page and fed into the explanatory model as an “element”-“importance” dictionary. This is achieved by semantically dividing the page and mapping the visual areas to the DOM.

Other structured explanations obtained from the XAI methods are also used to populate the prompt template. The explanation module outputs a JSON file with a class label and a Markdown document with an extended textual explanation of the classification result based on a static template.
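Populating a static prompt template with structured XAI outputs can be sketched as follows. The template wording, the field names, and the numeric values are hypothetical and are not the template used in the prototype; the call to the local LLM itself is omitted.

```python
import json

# Hypothetical static template; the real template is not reproduced here.
PROMPT_TEMPLATE = (
    "You are assisting a SOC analyst.\n"
    "URL: {url}\n"
    "Verdict: {verdict}\n"
    "Evidence from the XAI components (JSON):\n{evidence}\n"
    "Write a short explanation of the verdict that cites only this evidence."
)


def build_prompt(url, verdict, explanations):
    """Serialize the structured explanations and insert them into the template."""
    evidence = json.dumps(explanations, indent=2, sort_keys=True)
    return PROMPT_TEMPLATE.format(url=url, verdict=verdict, evidence=evidence)


prompt = build_prompt(
    "http://paypa1-security.tk/login",
    "phishing",
    {
        "shap": {"domain_age_days": 0.31},           # assumed feature name and value
        "grad_cam_elements": {"form#login": 0.82},   # "element"-"importance" dictionary
        "attention_tokens": {"password": 0.12},
    },
)
```

Serializing the evidence with sorted keys keeps the prompt deterministic for a given input, which helps when explanations need to be reproduced during an investigation.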

The LLM will generate a report (Appendix A) either for the websites of interest to the system user or only for sites that the model classifies as harmful, allowing for acceptable overall system throughput.

3.4. Software Architecture of a Web Resource Analysis System

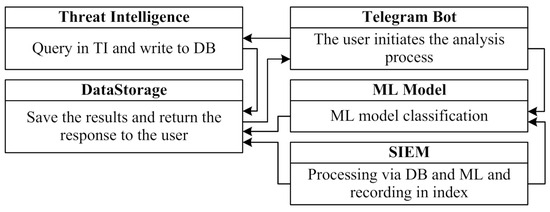

The proposed algorithms and models for the analysis of web resources were implemented in Python 3.10, and their effectiveness was assessed on real data (Figure 11).

Figure 11.

Architecture of interaction of software modules implementing key components of the system.

The project consists of a group of containers, each of which performs a separate task, and their interaction provides complete coverage of the process of the analysis, verification, and notification of potential threats.

- “Telegram bot” (App-tg_bot)—Implements the functionality of receiving incoming messages from users and serves as the first link in the value verification chain.

- “Machine learning module”—A container with a machine learning model is deployed on the basis of the “m_service” image, where the “/check_domain” endpoint is implemented via the Flask API. When a POST request with a domain is received, multistage processing occurs.

- “Threat intelligence module” (App-ti_service)—A container for integration with external reputation services such as Kaspersky TI, VirusTotal TI, and others via an API implemented on FastAPI.

- “Data storage service”—A database for the long-term storage of all system operation results; a container with a MongoDB database is used.

- Container for updating Elasticsearch (“App-update_es”)—In the test environment, the project uses Elasticsearch to analyze domains, where the “update_es” container is responsible for regularly updating the “idecoutm” index. Each time the script is run, a complete check is performed to see if the current domain has been processed before: if a match is found, the document is updated to reflect the new results, and, if not, a full check cycle is started. The update occurs every 3 s, which ensures that the data in Elasticsearch are up-to-date and enables the quick tracking of changes in the analyzed domains. With the help of Praeco (Elasticsearch alerting made simple; URL: https://github.com/johnsusek/praeco (accessed on 15 February 2025)), a correlation rule was created to notify analysts at the SOC, which allows for a prompt response to information security incidents.

- Container management component (“Portainer”)—Uses the “portainer/portainer-ce:latest” image and provides a web interface for managing all of the project’s Docker containers. Special attention was paid during the design to safety and data isolation. All containers run in a single virtual segment, with no external ports exposed except for the Telegram API and the web administration interfaces. All requests are handled over HTTPS, logged, and may be signed for investigation.

Particular attention was also given to integration with existing monitoring systems during the design process. The log structure used allows events to be transmitted to SIEM systems such as Wazuh, Splunk, and ELK-based solutions. The records are stored in MongoDB and replicated to Elasticsearch, which allows analysis views to be created quickly. The index is updated automatically, and visualization is possible via Kibana or a customized dashboard in Praeco. This enables SOC analysts to monitor suspicious activity and make informed decisions about it in real time.

The system was adapted to operate in a real-world information security center, including with regard to response procedures. The decision algorithm may be based on machine learning models or on data from external threat intelligence services. If necessary, the administrator may manually change the status of an object by adding it to the whitelist or blacklist. Scenarios for automatic action following a threat detection are described separately, e.g., sending the result to an email gateway, adding an IP address to a blocking system, or sending a notification through a corporate messaging application.
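One possible way to combine the decision sources mentioned above is sketched below. The precedence order (manual lists first, then threat intelligence, then the ML score), the 0.5 threshold, and all names are assumptions chosen for illustration; the text does not specify how the prototype orders these sources.

```python
from typing import Set, Tuple


def decide(domain: str,
           ml_score: float,
           ti_malicious: bool,
           whitelist: Set[str],
           blacklist: Set[str],
           threshold: float = 0.5) -> Tuple[str, str]:
    """Return (verdict, reason) for a domain under an assumed precedence order."""
    # Manual administrator overrides take priority in this sketch.
    if domain in whitelist:
        return "legitimate", "whitelist"
    if domain in blacklist:
        return "phishing", "blacklist"
    # External threat intelligence verdict, if any service flags the domain.
    if ti_malicious:
        return "phishing", "threat_intelligence"
    # Otherwise fall back to the machine learning score.
    if ml_score >= threshold:
        return "phishing", "ml_model"
    return "legitimate", "ml_model"


print(decide("intranet.example", 0.97, False, {"intranet.example"}, set()))
print(decide("login-update.example", 0.91, False, set(), set()))
```

Returning the reason alongside the verdict keeps the record of which source produced the decision, which fits the logging and investigation requirements described earlier.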

The prototype was deployed using a high-performance server, the parameters of which are shown in Table 15. In the isolated environment, each model used dedicated GPUs and data were exchanged between the models through RAM channels.

Table 15.

Server parameters for running the models.

Thus, the developed architecture allows the following:

- Processing queries in real time;

- Storing results and metadata for subsequent analysis;

- Scaling individual modules independently (e.g., replicating ml_service);

- Integrating with external sources via APIs and with internal security systems via SIEM interfaces;

- Providing a visual interface for both users (via Telegram) and administrators (via Portainer and Mongo Express).

4. Computational Experiment to Evaluate the Performance of the Web-Based Resource Analysis System

Several computational experiments have been performed on our own dataset and on the available MTLP dataset to provide a comparative assessment of the capabilities of the proposed solution.

The key stage in developing the automatic phishing web resource detection system was the formation of an up-to-date dataset. Datasets widely used in research (ISCX-2016 Dataset, EBBU-2017 Dataset, HISPAR-Phishstats Dataset) quickly become outdated. At the same time, the quality of the classification model, its ability to identify new attack patterns, and its sensitivity and specificity directly depend on the correctness and relevance of the data.

4.1. Computational Experiment I on the Prepared Dataset for Models and

4.1.1. First Phase of Testing: Collecting the Dataset () and Preparing Models

Data were collected from several authoritative sources (Dataset ):

- Phishing URLs were obtained from open repositories Phishing Site URLs (Kaggle), OpenPhish, URLHaus, and PhishTank, where malicious link databases are updated daily;

- Legitimate URLs were selected from the Alexa Top 1 Million and Majestic Million website lists and were also extracted from secure corporate proxy logs.

The initial sample (Table 16) contained over 4 million rows; after cleaning and filtering, approximately 3.2 million unique URLs remained, balanced across classes.

Table 16.

Estimated time costs for image processing in batch mode.

Data labeling was performed semi-automatically. Some URLs were labeled based on the source (for example, if a link was taken from OpenPhish, it was a priori phishing). In complex cases, the following were used:

- Cross-checks via the VirusTotal API;

- A built-in reputational score checking module;

- Manual checks against WHOIS data (registration date, TLD zone, domain activity);

- Validation using OpenAI and QwQ-32B models in batch mode.
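The semi-automatic labeling procedure above can be sketched as a small rule cascade. The source keys, the thresholds `min_vt_detections` and `min_reputation`, the definition of a “complex case” as a URL with conflicting or missing source evidence, and the `manual_review` outcome are all assumptions made for this illustration; the LLM validation step is omitted.

```python
from typing import Iterable, Optional

# Hypothetical source identifiers for the feeds named in the text.
PHISHING_SOURCES = {"openphish", "phishtank", "urlhaus", "kaggle_phishing"}
LEGITIMATE_SOURCES = {"alexa", "majestic", "proxy_logs"}


def label_url(sources: Iterable[str],
              vt_detections: Optional[int] = None,
              reputation: Optional[float] = None,
              min_vt_detections: int = 3,
              min_reputation: float = 0.5) -> str:
    """Label a URL from its sources; fall back to cross-checks in complex cases."""
    found = set(sources)
    in_phishing = bool(found & PHISHING_SOURCES)
    in_legitimate = bool(found & LEGITIMATE_SOURCES)
    # Unambiguous source evidence: label a priori.
    if in_phishing and not in_legitimate:
        return "phishing"
    if in_legitimate and not in_phishing:
        return "legitimate"
    # Complex case: conflicting or missing source evidence.
    if vt_detections is not None and vt_detections >= min_vt_detections:
        return "phishing"
    if reputation is not None:
        return "legitimate" if reputation >= min_reputation else "phishing"
    return "manual_review"


print(label_url(["openphish"]))
print(label_url(["alexa", "phishtank"], vt_detections=5))
print(label_url(["alexa", "phishtank"]))
```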

As a result, a dataset was formed containing the following:

- 1.6 million phishing web resources;

- 1.6 million legitimate web resources.
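The cleaning, deduplication, and class balancing that reduce the initial sample to two equal classes can be sketched as follows. The normalization rules (lowercasing the host, dropping the port and a trailing slash) and the balancing strategy (downsampling the larger class) are assumptions for illustration; the text does not describe the exact filtering rules.

```python
import random
from typing import Iterable, List, Optional, Tuple
from urllib.parse import urlsplit


def normalize(url: str) -> Optional[str]:
    """Return a canonical form of the URL, or None if it has no host."""
    parts = urlsplit(url.strip())
    host = (parts.hostname or "").lower()
    if not host:
        return None
    path = parts.path.rstrip("/")
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.scheme}://{host}{path}{query}"


def clean_and_balance(rows: Iterable[Tuple[str, int]], seed: int = 0) -> List[Tuple[str, int]]:
    """Deduplicate (url, label) rows and downsample to equal class sizes."""
    unique = {}
    for url, label in rows:
        key = normalize(url)
        if key is not None and key not in unique:
            unique[key] = label
    by_class = {0: [], 1: []}  # 0 = legitimate, 1 = phishing
    for url, label in unique.items():
        by_class[label].append(url)
    size = min(len(by_class[0]), len(by_class[1]))
    rng = random.Random(seed)
    balanced = [(u, lbl) for lbl in (0, 1) for u in rng.sample(by_class[lbl], size)]
    rng.shuffle(balanced)
    return balanced


rows = [
    ("http://Example.com/", 1), ("http://example.com", 1), ("http://c.net", 1),
    ("http://a.org/x", 0), ("http://b.org/y", 0), ("http://d.org", 0),
    ("not a url", 0),
]
balanced = clean_and_balance(rows)
print(len(balanced), sum(label for _, label in balanced))
```

In this toy input, the two spellings of `example.com` collapse into one entry and the row without a host is discarded, which leaves two phishing and three legitimate URLs before balancing.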

CatBoost also shows the best ROC-AUC, 0.924, indicating a strong ability to discriminate between classes. For the best CatBoost model, the false positive rate was 19.98% and the false negative rate was 8.66%. Because the models based on ensembles of decision trees performed comparably, the CatBoost model was selected: it had the best F1-score on the test sample, and both the preparation of its data preprocessing pipeline and its training were significantly faster. The model also makes it possible to evaluate the significance of each feature during classification. CatBoost provides the best representation of categorical and quantitative features and can be multithreaded efficiently on a CPU. After the model was translated into C and compiled with LLVM, its performance increased by 26% compared to the original version.
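For reference, the false positive and false negative rates quoted above follow directly from confusion-matrix counts. The sketch below uses illustrative counts per 10,000 samples of each class, chosen only so that the rates match the reported 19.98% and 8.66%; they are not the study's actual confusion matrix.

```python
def error_rates(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute error rates from confusion-matrix counts (phishing = positive class)."""
    fpr = fp / (fp + tn)  # legitimate resources flagged as phishing
    fnr = fn / (fn + tp)  # phishing resources that were missed
    return {
        "fpr": fpr,
        "fnr": fnr,
        "sensitivity": 1.0 - fnr,  # true positive rate (recall)
        "specificity": 1.0 - fpr,  # true negative rate
    }


# Illustrative counts only: 10,000 legitimate and 10,000 phishing samples.
rates = error_rates(tp=9134, fp=1998, tn=8002, fn=866)
print({name: round(value, 4) for name, value in rates.items()})
```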

Additionally, a classifier was built on the retrained CodeBERT model and deployed in the ONNX format in the FastAPI container. An ONNX model is a static computational graph whose vertices are computational operators and whose edges define how data flow between them, which allows the model to run 1.5–2 times faster in classification mode. However, when run on a CPU server in multithreaded mode, the model remains inferior to the CatBoost-based models.

The peak performance estimates for the number of requests processed in the security operations center (SOC) were as follows: 231 thousand per day, 11 thousand per hour, and 100 per second.

4.1.2. Second Stage of Testing—System Throughput Assessment

The second phase of the evaluation involved deploying the complete system on a test bed simulating the conditions of the SOC. The flow of incoming URLs was formed from several sources:

- Corporate mail gateway logs;

- Traffic through proxy servers and web filters;

- Specially generated requests through a Telegram bot.

The test sample comprised about 100 thousand URLs, arriving at an average rate of 500 requests per minute. Under this load, the system operated stably, with an average processing time of 47 ms per request, including preprocessing, calling the model, and writing the results to the database. The peak load reached 1100 URLs per minute, and no timeout errors or microservice freezes were recorded.
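A back-of-envelope check shows why these figures are mutually consistent. The sketch assumes that a single worker processes requests sequentially and that the 47 ms average also holds at peak load; both are simplifying assumptions, not measured properties of the prototype.

```python
def capacity_per_minute(mean_service_ms: float, workers: int = 1) -> float:
    """Maximum sustainable requests per minute for sequential workers."""
    return workers * 60_000 / mean_service_ms


def utilization(requests_per_minute: float, mean_service_ms: float, workers: int = 1) -> float:
    """Fraction of available processing time consumed by the offered load."""
    return requests_per_minute / capacity_per_minute(mean_service_ms, workers)


capacity = capacity_per_minute(47)
average_load = utilization(500, 47)
peak_load = utilization(1100, 47)
print(round(capacity), round(average_load, 3), round(peak_load, 3))
```

Under these assumptions, one worker can sustain roughly 1277 requests per minute, so the average load occupies about 39% of its time and the peak about 86%, which is compatible with the absence of timeouts.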

4.1.3. Third Stage of Testing—Testing as Part of the SOC

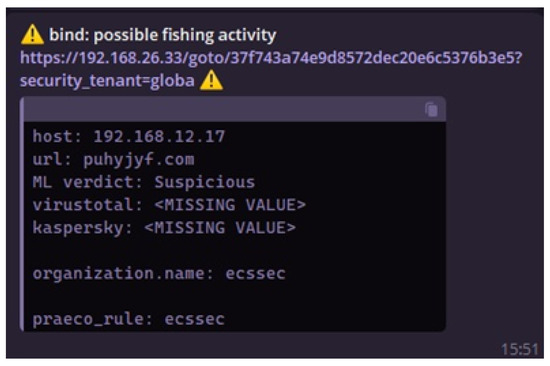

During integration with Praeco, an attack was simulated: within 5 min, the system received 20 spoofed URLs that used the secure-update-login[.]com subdomain and replaced Latin characters with Cyrillic ones. The system classified them with high accuracy, generated a trigger based on the correlation rule, and sent a notification to the SOC Telegram channel (Figure 12).

Figure 12.

ML trigger notification.
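The character substitution used in the simulated attack (Latin letters replaced with visually similar Cyrillic ones) can be detected with a simple mixed-script check. The following sketch relies only on Python's standard `unicodedata` module; it illustrates the idea and is not the feature extractor used by the models. The function names are hypothetical.

```python
import unicodedata
from typing import Set


def scripts_in_label(label: str) -> Set[str]:
    """Return the script prefixes (e.g. LATIN, CYRILLIC) of the letters in a label."""
    scripts = set()
    for ch in label:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                scripts.add(name.split(" ")[0])
    return scripts


def has_mixed_script(hostname: str) -> bool:
    """Flag hostnames in which a single label mixes letters from several scripts."""
    return any(len(scripts_in_label(label)) > 1 for label in hostname.split("."))


genuine = "secure-update-login.com"
spoofed = "secure-update-l\u043egin.com"  # U+043E is the Cyrillic small letter "o"
print(has_mixed_script(genuine), has_mixed_script(spoofed))
```

Hostnames that arrive in Punycode form (with the `xn--` prefix) would need to be decoded to Unicode before such a check is applied.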

4.1.4. Fourth Stage of Testing—Zero-Day Resource Testing

In addition, manual testing was conducted on “zero-day” links, that is, URLs that had not previously been included in the database and that imitated new phishing patterns. Of 100 such links, the system identified 92 as suspicious, including six that had no detections in the VirusTotal databases but had an anomalous structure. For an objective assessment, the system was compared with open tools:

- Google Safe Browsing;

- The OpenPhish Detection API;

- The URLhaus API.

The proposed model detected 15–20% more “fresh” phishing links, especially in cases of obfuscated addresses, short redirects, and character substitution. The explainability of classifications provided a significant advantage: the SOC analyst could immediately see which features led to a link being classified as phishing.

4.1.5. Testing the Subsystem for the Explanation of Positive Responses

The subsystem that explains why a resource was classified as phishing is demonstrated with the expanded QwQ-32B model. The detailed explanation justifies a positive classification to first-line SOC monitoring staff and lets them either agree or mark the item as a false positive so that the training data can be adjusted. SHAP coefficients were estimated only at the stage of preparing data for model training, since the results require additional interpretation before monitoring specialists can use them.

Thus, the testing confirmed not only the high accuracy of the model but also its suitability for real-time deployment. The system can analyze a large flow of incoming URLs, is scalable, and integrates with response infrastructure, making it applicable to monitoring centers, highly secure organizations, and even national CERT structures.

4.1.6. Summary Results of Computational Experiment I

Table 17 presents the summary results of computational experiment I.

Table 17.

The results of computational experiment I (M1, M2, M3).

4.2. Computational Experiment II on the Prepared Dataset for Models and

A balanced (50% phishing and 50% legitimate web resources) MTLP dataset was used to train the models (Table 18).

Table 18.

Estimated time costs for image processing in batch mode for computational experiment II.

The CNN1D model for web resource symbolic name analysis demonstrated high performance in detecting phishing URLs, achieving an F1-score of 91.14% on the test set (Table 19).

Table 19.

Summary results of computational experiment II.

The fine-tuned EfficientNet-B7 model for visual image analysis demonstrated high performance in detecting phishing websites, achieving an F1-score of 93.22% (Table 19).

4.3. Computational Experiment III on the Prepared Dataset for All Models

The final experiment assessed the multimodal performance of the system on datasets and . The results are shown in Table 20.

Table 20.

Summary results of computational experiment III.
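The late fusion step evaluated in this experiment combines the outputs of the four branches by weighted voting. A minimal sketch is given below; the branch names, the weights, and the 0.5 decision threshold are hypothetical values chosen for illustration and do not reproduce the weights used in the experiments.

```python
from typing import Dict, Tuple


def weighted_vote(probabilities: Dict[str, float],
                  weights: Dict[str, float],
                  threshold: float = 0.5) -> Tuple[float, bool]:
    """Fuse per-branch phishing probabilities into one score and a decision."""
    # Normalize over the branches that actually returned a result, so that
    # a missing modality (e.g. a failed screenshot) does not bias the score.
    total_weight = sum(weights[name] for name in probabilities)
    score = sum(weights[name] * p for name, p in probabilities.items()) / total_weight
    return score, score >= threshold


# Hypothetical weights for the four branches.
weights = {
    "catboost_url": 0.30,
    "cnn1d_url": 0.20,
    "codebert_html": 0.25,
    "efficientnet_screenshot": 0.25,
}
branch_outputs = {
    "catboost_url": 0.92,
    "cnn1d_url": 0.81,
    "codebert_html": 0.40,
    "efficientnet_screenshot": 0.88,
}
score, is_phishing = weighted_vote(branch_outputs, weights)
print(round(score, 3), is_phishing)
```

Normalizing by the weights of the available branches is one possible design choice; a trained meta-classifier over the branch outputs would be an alternative to fixed weights.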

4.4. Exploratory Assessment of Robustness of a Multimodal Architecture Against Conventional Obfuscation Techniques

In addition to the primary computational experiments, an exploratory assessment was conducted to evaluate the robustness of the multimodal architecture against conventional obfuscation techniques frequently employed in phishing campaigns. For this purpose, several subclasses of “enhanced” attacks were synthetically generated, using the initial corpus of URLs as a baseline (Table 21).

Table 21.

Assessing the system’s resistance to obfuscation techniques.

For each subsample, a comparison was conducted between the performance degradation of individual branches—specifically URL, HTML, and visual—and that of the integrated multimodal framework employing a meta-classifier.

Preliminary results indicate that, for URL and HTML obfuscation, unimodal models experience a reduction of up to 4–7 percentage points in the F1-score; in contrast, the multimodal system demonstrates a more modest performance degradation, owing to the complementary nature of the integrated modalities. A comprehensive and systematic investigation of robustness against targeted adversarial attacks remains a subject for future research.
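Synthetic obfuscated variants of the kind used in this assessment can be generated from a baseline URL with simple transformations. The three transformations below (homoglyph substitution in the host, percent-encoding of the path, and subdomain padding) are assumed examples of conventional techniques; they are not the exact subclasses listed in Table 21, and all function names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# A small Latin -> Cyrillic look-alike map (illustrative, not exhaustive).
HOMOGLYPHS = {"a": "\u0430", "e": "\u0435", "o": "\u043e"}


def homoglyph_host(url: str) -> str:
    """Replace selected Latin letters in the host with Cyrillic look-alikes."""
    p = urlsplit(url)
    host = "".join(HOMOGLYPHS.get(ch, ch) for ch in p.netloc)
    return urlunsplit((p.scheme, host, p.path, p.query, p.fragment))


def percent_encode_path(url: str) -> str:
    """Percent-encode every ASCII letter and digit in the path."""
    p = urlsplit(url)
    path = "".join(
        f"%{ord(ch):02X}" if ch.isascii() and ch.isalnum() else ch for ch in p.path
    )
    return urlunsplit((p.scheme, p.netloc, path, p.query, p.fragment))


def pad_subdomain(url: str, prefix: str = "login.secure") -> str:
    """Prepend misleading subdomain labels to the host."""
    p = urlsplit(url)
    return urlunsplit((p.scheme, f"{prefix}.{p.netloc}", p.path, p.query, p.fragment))


base = "http://example.com/login"
variants = [homoglyph_host(base), percent_encode_path(base), pad_subdomain(base)]
for variant in variants:
    print(variant)
```

Each variant keeps the label of its baseline URL, so the degradation of a branch can be measured by comparing its F1-score on the baseline and obfuscated subsamples.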

4.5. Evaluating the Quality of Explanations Provided for Resulting Decisions

During the assessment of the proposed system’s performance, a deployment scenario was evaluated on a test stand designed to simulate the operational conditions of a security operations center (SOC). Within this phase, a sample of 100 URLs was generated, all of which were classified as “zero-day” threats for the period under analysis. These URLs lacked an established negative reputation in public sources but were deemed potentially fraudulent or malicious based on internal analysis and retrospective incident reviews. This sample was processed by the system as an event stream, with the test stand replicating the standard workflow of an SOC analyst: the reception of events, the analysis of correlation rules and detector triggers alongside their corresponding explanations, and the formal recording of decisions.

The second substage was dedicated to evaluating the efficacy of the decision explanation subsystem. To assess these explanations, 30 unique cases, generated by the proposed system for specific URLs, were selected at random. For each instance, the system produced a textual explanation delineating the primary features that influenced the classification. These 30 explanations were initially appraised in basic mode (without the RAG subsystem) by three active first-line SOC analysts. The experts were asked to rate the utility of each explanation on a five-point scale: 1 = “explanation useless”; 2 = “minimal assistance”; 3 = “somewhat useful but requires significant refinement”; 4 = “useful for decision-making”; and 5 = “highly useful, satisfying the analyst’s requirements almost entirely”. This process generated a total of 90 ratings. The mean score for the URL dataset was 3.97, with the majority of ratings concentrated within the 4–5 range. On average, the experts perceived the explanations as useful, although they noted scope for further enhancement regarding completeness and structure.
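The aggregation of expert ratings described above amounts to pooling all scores and computing the mean and the share of ratings in the 4–5 range. The sketch below uses a small set of placeholder ratings (three analysts, five explanations each) purely to show the computation; these are not the 90 ratings collected in the study.

```python
from statistics import mean
from typing import Dict, List


def summarize(ratings: Dict[str, List[int]]) -> dict:
    """Pool the ratings of all analysts and compute summary statistics."""
    pooled = [score for scores in ratings.values() for score in scores]
    share_high = sum(score >= 4 for score in pooled) / len(pooled)
    return {
        "n": len(pooled),
        "mean": round(mean(pooled), 2),
        "share_4_5": round(share_high, 2),
    }


# Placeholder ratings on the five-point scale (hypothetical values).
placeholder = {
    "analyst_1": [4, 5, 4, 3, 4],
    "analyst_2": [5, 4, 4, 4, 3],
    "analyst_3": [4, 4, 5, 3, 4],
}
summary = summarize(placeholder)
print(summary)
```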