Abstract

Topic modeling is a fundamental technique in natural language processing used to uncover latent themes in large text corpora, yet existing approaches struggle to jointly achieve interpretability, semantic coherence, and scalability. Classical probabilistic models such as LDA and NMF rely on bag-of-words assumptions that obscure contextual meaning, while embedding-based methods (e.g., BERTopic, Top2Vec) improve coherence at the expense of diversity and stability. Prompt-based frameworks (e.g., TopicGPT) enhance interpretability but remain sensitive to prompt design and are computationally costly on large datasets. This study introduces VISTA (Vector-Similarity Topic Analysis), a multi-view, hierarchical, and interpretable framework that integrates complementary document embeddings, mutual-nearest-neighbor hierarchical clustering with selective dimension analysis, and large language model (LLM)-based topic labeling enforcing hierarchical coherence. Experiments on three heterogeneous corpora—BBC News, BillSum, and a mixed U.S. Government agency news + Twitter dataset—show that VISTA consistently ranks among the top-performing models, achieving the highest C_UCI coherence and a strong balance between topic diversity and semantic consistency. Qualitative analyses confirm that VISTA identifies domain-relevant themes overlooked by probabilistic or prompt-based models. Overall, VISTA provides a scalable, semantically robust, and interpretable framework for topic discovery, bridging probabilistic, embedding-based, and LLM-driven paradigms in a unified and reproducible design.

1. Introduction

Topic modelling is a critical technique in natural language processing (NLP) used to uncover latent themes in large document collections. Classical methods such as Latent Dirichlet Allocation (LDA) [1] and Non-negative Matrix Factorization (NMF) [2] have long dominated this field. These models typically rely on a bag-of-words assumption, which disregards syntactic relations and contextual dependencies. They represent topics as distributions over words, often leading to interpretability challenges due to ambiguous or incoherent word groupings [3]. Furthermore, such models largely ignore semantic context, limiting their ability to capture nuanced meanings within text [4].

Beyond these foundational approaches, subsequent research sought to enhance the robustness and flexibility of probabilistic topic models. For example, a density-based method for adaptive model selection in LDA was introduced in [5], addressing the long-standing challenge of estimating the appropriate number of topics. Later, the emergence of variational autoencoders enabled neural extensions of topic models, most notably the Autoencoding Variational Inference for Topic Models (AVITM) proposed by [6]. AVITM combined the interpretability of probabilistic modeling with the scalability and representational power of deep neural networks. These developments effectively bridged classical and neural paradigms, laying the groundwork for subsequent embedding- and transformer-based innovations.

Recent advances have introduced neural and embedding-based methods, significantly enhancing the semantic coherence of identified topics. For example, [7] systematically demonstrated that clustering pretrained word embeddings can rival LDA while being computationally more efficient. Techniques leveraging pretrained word embeddings [7,8,9] and transformer-based architectures, notably BERT [10,11,12], have proven effective in capturing semantic relationships and in generating more coherent and human-interpretable topics. For instance, BERTopic employs Sentence-BERT embeddings reduced with UMAP, clustered via HDBSCAN, and subsequently represented through a class-based TF-IDF procedure, effectively capturing nuanced thematic structures [13]. Similarly, approaches that leverage deep contextualized representations have shown improved topic coherence and interpretability [14,15,16]. Despite these advancements, existing neural methods often face limitations related to computational complexity and scalability.

Parallel to embedding-based approaches, the introduction of prompt-based frameworks such as TopicGPT has opened new avenues for flexible and interactive topic modeling [17]. These methods leverage large language models (LLMs) such as GPT-3 and GPT-4 [18,19] to generate highly interpretable and contextually grounded topics. Unlike classical models that output only top-n word lists, TopicGPT produces natural language topic labels and descriptions, reducing the need for manual interpretation. Another notable approach, Goal-Driven Explainable Clustering (GOALEX), operates through a Propose–Assign–Select (PAS) algorithm that integrates user-defined objectives and yields clusters with explicit linguistic explanations [20]. This significantly enhances the interpretability and usability of topics by aligning clustering outcomes with specific analytical goals articulated by users.

Building upon these recent innovations, this paper introduces a novel topic modelling methodology designed to overcome the limitations of both classical and contemporary techniques. Our method integrates the semantic coherence and computational efficiency of embedding-based clustering. At the same time, it incorporates the interactive, goal-driven clarity of prompt-based and explainable frameworks, which add adaptability, interactivity, and human-aligned interpretability. Through systematic benchmarking and user evaluations, we demonstrate that the approach achieves superior performance in capturing coherent topics while offering practical scalability and interpretability enhancements essential for real-world applications. In this way, our approach unifies the strengths of embedding-based clustering (speed, semantic consistency), neural contextual methods (rich transformer embeddings), and prompt-based frameworks (adaptability and interpretability).

To summarize, this work makes four primary contributions to the field of topic modelling. First, we introduce VISTA, a multi-view and hierarchically interpretable framework that integrates complementary document representations—full texts, summaries, noun-only variants, and pseudo-tweets—to enhance robustness across heterogeneous and cross-length corpora. To the best of our knowledge, VISTA is the first fully unsupervised topic-modelling framework capable of producing coherent topic labels without any predefined topic lists, manual supervision, or model training, while naturally accommodating documents of highly variable lengths. Second, we propose a selective-dimension analysis that identifies the most discriminative embedding dimensions prior to similarity computation, followed by a mutual-nearest-neighbour (MNN) hierarchical clustering procedure that operates on these refined distance estimates, thereby improving cluster stability and semantic resolution. Third, we develop a bottom-up, LLM-driven hierarchical labeling strategy that produces coherent, human-interpretable topic names while enforcing semantic alignment across parent and child nodes. Finally, VISTA provides a unified and scalable approach that bridges probabilistic, embedding-based, and LLM-based paradigms, resulting in improved semantic coherence, interpretability, and cross-domain stability.

The remainder of this manuscript is structured as follows. Section 2 introduces the methodological components of VISTA, including the multi-view embedding design, mutual-nearest-neighbor clustering, selective-dimension analysis, and hierarchical LLM-based labeling. Section 3 presents quantitative and qualitative evaluations across three heterogeneous datasets and compares VISTA with established baselines. Section 4 discusses methodological implications, contrasts VISTA with related approaches, and identifies key limitations and avenues for future work. Section 5 concludes with a summary of findings and the broader relevance of the proposed framework.

2. Materials and Methods

2.1. Overview of Our Approach

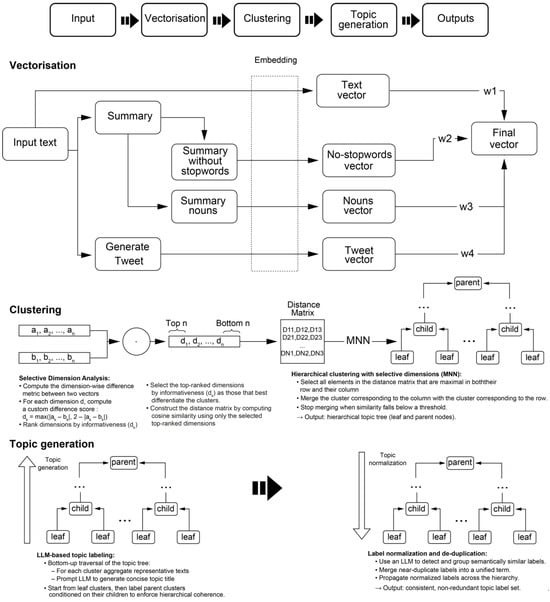

Our approach is structured into three main steps: (1) creating document vector embeddings, (2) applying hierarchical clustering, and (3) generating topic labels. First, we create semantic vector embeddings that capture the key thematic content of each document. Next, we apply hierarchical clustering to group semantically similar documents, thereby forming both broad topics and fine-grained subtopics. Finally, we leverage LLMs to automatically assign topic labels to each cluster, ensuring semantic consistency across the hierarchy. This fully automated pipeline enables scalable and interpretable topic modeling without the need for manual tuning. The main advantage of the proposed approach over existing methods such as BERTopic and Top2Vec is that it discovers and assigns explicit document topics, rather than returning only sets of associated keywords. A comprehensive schematic summarising all stages of the VISTA framework is presented in Figure 1.

Figure 1.

Integrated schematic of the VISTA framework, illustrating the complete topic-modelling pipeline from multi-view document vectorisation to hierarchical topic generation. The workflow begins with raw input documents, which are transformed into several complementary semantic representations: abstractive summaries, noun-only summaries, stopword-free summaries, noun-only full-text views, and (when applicable) pseudo-tweet variants. Each view is embedded separately, yielding a multi-view set of document vectors. These are then fused through a weighted similarity aggregation procedure. To improve semantic discriminability, VISTA applies selective-dimension analysis: vector dimensions are normalized, ranked by informativeness using a custom per-dimension difference metric, and only the top-ranked dimensions are retained when computing pairwise similarities. A mutual-nearest-neighbour (MNN) graph is constructed based on these refined similarities to suppress asymmetric or spurious links. MNN connectivity serves as the basis for hierarchical clustering: clusters merge only when they are mutual nearest neighbours and only while similarity remains above a threshold, producing a stable and interpretable topic tree. Finally, bottom-up LLM-based topic labelling generates concise topic titles and short descriptions for leaf clusters, followed by parent-cluster labels conditioned on their children to ensure hierarchical coherence. A final normalization step merges redundant labels and propagates consistent terminology across the hierarchy. The output includes a complete topic tree, human-readable topic labels, and document-level assignments.

2.2. Document Embeddings

Each document is represented through multiple complementary embeddings to capture its thematic content robustly. For every document, we first generate four abstractive summaries. This number was chosen through empirical testing of several configurations: four summaries offered a good balance between performance gains and computational cost, and generating multiple summaries improved the stability and quality of topic generation. The number is not fixed, however, and can be adjusted to corpus size, resource constraints, or specific application needs. Each summary is then transformed into two variants: (i) a version with all stop words removed, and (ii) a noun-only version. The noun-only variant is based on evidence that nouns typically carry the strongest topical signal, whereas other parts of speech such as verbs or adjectives tend to appear more uniformly across domains and thus contribute less to topic discrimination [7]. In addition, we generate a noun-only representation of the original text, yielding a compact feature set that highlights the most salient semantic information. Together, summarization, stopword removal, and noun extraction also reduce the computational cost of applying external LLM tools while preserving topical coherence. This strategy is consistent with findings from multi-view learning [21], which show that complementary representations enhance robustness, and with prior work showing that noun-based or phrase-based filtering emphasizes the most discriminative topical signals [22].
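The view construction can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the four summaries already exist and that every text has been POS-tagged with Universal POS tags by some external tagger. The short stopword list, the treatment of proper nouns (PROPN) as nouns, and the function names are our assumptions.

```python
from typing import Dict, List, Tuple

# A tagged text is a list of (token, Universal POS tag) pairs produced by an
# external POS tagger (assumed; no specific tagger is named in the text).
Tagged = List[Tuple[str, str]]

# Illustrative stopword list only; a real pipeline would use a complete list.
STOPWORDS = {"a", "an", "the", "of", "to", "in", "and", "or", "for", "on",
             "with", "by", "is", "are", "was", "were", "be", "that", "this"}

# Assumption: proper nouns are kept alongside common nouns.
NOUN_TAGS = {"NOUN", "PROPN"}


def stopword_free(tagged: Tagged) -> str:
    """Drop stop words and keep every other token in its original order."""
    return " ".join(tok for tok, _ in tagged if tok.lower() not in STOPWORDS)


def noun_only(tagged: Tagged) -> str:
    """Keep only the tokens tagged as nouns."""
    return " ".join(tok for tok, pos in tagged if pos in NOUN_TAGS)


def build_views(full_text: Tagged, summaries: List[Tagged]) -> Dict[str, List[str]]:
    """Return the text views of one document that are embedded separately."""
    return {
        "noun_full_text": [noun_only(full_text)],
        "noun_summaries": [noun_only(s) for s in summaries],
        "stopword_free_summaries": [stopword_free(s) for s in summaries],
    }


if __name__ == "__main__":
    summary = [("The", "DET"), ("government", "NOUN"), ("reforms", "VERB"),
               ("social", "ADJ"), ("care", "NOUN"), ("budgets", "NOUN")]
    views = build_views(full_text=summary, summaries=[summary])
    print(views["noun_summaries"])           # ['government care budgets']
    print(views["stopword_free_summaries"])  # ['government reforms social care budgets']
```

Each resulting string would then be embedded on its own, giving one vector for the noun-only full text and one per summary in each summary view.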

In cases where datasets include both long-form documents and short texts such as tweets, we additionally create “pseudo-tweet” versions of longer texts. Tweets are short, often contextually incomplete, and linguistically non-standard—properties that make them particularly challenging for topic modeling [23]. By generating concise tweet-like variants of longer documents, we produce a more balanced and comparable dataset. Embedding these pseudo-tweets provides an additional semantic perspective that sharpens cluster boundaries and enables fairer comparisons between short and long text forms within the same representational space.
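A minimal sketch of the pseudo-tweet step follows. `generate` is a hypothetical caller-supplied function wrapping whichever LLM produces the tweet-like rewrite; the 300-character short/long cut-off and the 280-character cap are illustrative assumptions, since the text specifies only that pseudo-tweets are created when a corpus mixes long documents with short texts. Real tweets are kept as their own tweet-like view, so that both forms can be compared in one space.

```python
from typing import Callable, List, Optional

TWEET_LIMIT = 280       # assumed cap, matching the standard tweet length
SHORT_MAX_CHARS = 300   # hypothetical cut-off between short and long texts


def is_mixed_length(texts: List[str], short_max: int = SHORT_MAX_CHARS) -> bool:
    """True if the corpus contains both short (tweet-like) and long texts."""
    has_short = any(len(t) <= short_max for t in texts)
    has_long = any(len(t) > short_max for t in texts)
    return has_short and has_long


def pseudo_tweet_views(texts: List[str],
                       generate: Callable[[str], str],
                       short_max: int = SHORT_MAX_CHARS) -> List[Optional[str]]:
    """Return a tweet-like view per document, or None when none is needed.

    Short texts (e.g. real tweets) serve as their own tweet-like view; long
    texts are rewritten by `generate` (an LLM wrapper supplied by the caller)
    and truncated to TWEET_LIMIT characters.
    """
    if not is_mixed_length(texts, short_max):
        return [None] * len(texts)
    views: List[Optional[str]] = []
    for text in texts:
        if len(text) <= short_max:
            views.append(text)
        else:
            views.append(generate(text)[:TWEET_LIMIT])
    return views
```

In practice, `generate` would send a prompt such as "rewrite this article as a single tweet" to an LLM; the truncation only guards against over-long responses.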

All embeddings in our approach are generated with OpenAI's text-embedding-ada-002 model, which has demonstrated strong performance in semantic encoding and, in our preliminary evaluations, provided a high degree of semantic resolution and coherence.

2.3. Hierarchical Clustering

In the second step, we apply hierarchical clustering to organize documents into coherent topical structures. Pairwise similarities between document vectors are first computed, and candidate links are then formed using the mutual nearest neighbor (MNN) principle, which links two elements only if each is the nearest neighbor of the other in semantic space [24]. This bidirectional confirmation suppresses spurious links that may arise from unidirectional proximity and yields more reliable clusters.
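The MNN rule can be illustrated on a precomputed pairwise similarity matrix. The plain-Python sketch below (names are ours) links i and j only when each is the other's most similar item, excluding self-similarity; ties are broken in favour of the lower index.

```python
from typing import List, Set, Tuple

Matrix = List[List[float]]


def nearest_neighbour(sim: Matrix, i: int) -> int:
    """Index of the item most similar to item i, excluding i itself."""
    others = [j for j in range(len(sim)) if j != i]
    return max(others, key=lambda j: sim[i][j])


def mutual_nearest_pairs(sim: Matrix) -> Set[Tuple[int, int]]:
    """Pairs (i, j) with i < j in which each item is the other's nearest neighbour."""
    nn = [nearest_neighbour(sim, i) for i in range(len(sim))]
    return {(i, j) for i, j in enumerate(nn) if i < j and nn[j] == i}


if __name__ == "__main__":
    sim = [[1.0, 0.9, 0.2, 0.1],
           [0.9, 1.0, 0.5, 0.2],
           [0.2, 0.5, 1.0, 0.4],
           [0.1, 0.2, 0.4, 1.0]]
    # Item 2's nearest neighbour is 1, but 1 prefers 0, so (1, 2) is not linked;
    # item 3 points to 2 without reciprocation, so it stays unlinked too.
    print(mutual_nearest_pairs(sim))  # {(0, 1)}
```

The example shows the filtering effect described above: one-directional proximity (2 to 1, 3 to 2) produces no link.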

Each MNN candidate pair is evaluated across three independent vector projections: (i) noun-only representations of full documents, (ii) noun-only representations of summaries, and (iii) stopword-free summaries. The aggregated similarity is calculated as a weighted average:

$$S = \frac{w_1 S_{\mathrm{DN}} + w_2 S_{\mathrm{SN}} + w_3 S_{\mathrm{SS}}}{w_1 + w_2 + w_3}$$

where $S_{\mathrm{DN}}$ denotes the cosine similarity between documents reduced to nouns, $S_{\mathrm{SN}}$ the cosine similarity between summaries reduced to nouns, and $S_{\mathrm{SS}}$ the cosine similarity between summaries with stop words removed.

When pseudo-tweet embeddings are included, the similarity formula is extended with a fourth component:

$$S = \frac{w_1 S_{\mathrm{DN}} + w_2 S_{\mathrm{SN}} + w_3 S_{\mathrm{SS}} + w_4 S_{\mathrm{PT}}}{w_1 + w_2 + w_3 + w_4}$$

Here, $S_{\mathrm{PT}}$ denotes the cosine similarity between pseudo-tweet representations of longer documents or actual tweets.

The weights satisfy the constraint , with the condition . In cases where pseudo-tweet embeddings are included, the formulation is extended to , with the additional condition .

In this study, the weighting scheme was kept fixed across all datasets, so that the evaluation reflects the architecture itself rather than corpus-specific hyperparameter optimization. The weights, which place higher emphasis on noun-rich representations, were selected through exploratory trials during model development, as they consistently produced stable MNN pairings and semantically coherent cluster structures. We therefore used a single global configuration, with relative weights of 1.8 for noun-only full-text embeddings, 0.8 for noun-only summaries, and 0.4 for stopword-free summaries, applied uniformly across all experiments. No dataset-specific fine-tuning was performed, both to avoid overfitting and to show that VISTA performs robustly without parameter adjustment.
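The weighted aggregation can be sketched as below. Two details are assumptions not stated in the text: the embeddings of a document's four summaries are mean-pooled within each summary view before the cosine is taken, and the weights are normalized by their sum, so the relative weights 1.8, 0.8, and 0.4 act as 0.60, 0.27, and 0.13. The pseudo-tweet weight is not given in this section, so the caller must supply it.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]

# Relative weights reported for the three-view configuration.
WEIGHTS: Dict[str, float] = {
    "noun_full_text": 1.8,
    "noun_summaries": 0.8,
    "stopword_free_summaries": 0.4,
}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average several embeddings of one view (e.g. four summaries) into one."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def combine(sims: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average: sum_i w_i * S_i / sum_i w_i."""
    return sum(w * sims[view] for view, w in weights.items()) / sum(weights.values())


def aggregated_similarity(doc_a: Dict[str, List[Vector]],
                          doc_b: Dict[str, List[Vector]],
                          weights: Optional[Dict[str, float]] = None) -> float:
    """Per-view cosine similarities combined into one score.

    Each document maps a view name to that view's embeddings. For corpora with
    pseudo-tweets, pass weights that also contain a "pseudo_tweet" entry
    (its value is not specified in the text and must be chosen by the caller).
    """
    weights = WEIGHTS if weights is None else weights
    sims = {view: cosine(mean_pool(doc_a[view]), mean_pool(doc_b[view]))
            for view in weights}
    return combine(sims, weights)


if __name__ == "__main__":
    # Worked example: (1.8*0.9 + 0.8*0.7 + 0.4*0.5) / 3.0 = 2.38 / 3.0
    example = {"noun_full_text": 0.9, "noun_summaries": 0.7,
               "stopword_free_summaries": 0.5}
    print(round(combine(example, WEIGHTS), 4))  # 0.7933
```

Because the weights are divided by their sum, rescaling all of them by a constant leaves the score unchanged; only their ratios matter.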

This weighting scheme prioritizes the semantically most informative representations while still leveraging complementary information from summarized forms. The resulting score serves as the input to the MNN algorithm, enabling a robust assessment of semantic similarity that accommodates heterogeneous text types, including very short or stylistically variable inputs such as tweets. The weights can be further tuned using evaluation metrics, allowing adaptation to corpus-specific characteristics.

Once mutual pairs are identified, hierarchical clustering progressively merges them into larger clusters. Unlike conventional approaches that continue until all items form a single cluster, our method halts merging when the similarity between the two closest clusters falls below a predefined threshold. This stopping criterion prevents the combination of semantically distant groups and preserves fine-grained topical resolution. In this study, a single threshold value was applied uniformly across all datasets. This choice was informed by exploratory experimentation, which indicated that the threshold primarily governs semantic granularity rather than scaling with corpus size. We deliberately avoided dataset-specific tuning to prevent overfitting and to evaluate the robustness of the architecture itself. As additional documents are introduced, the number of resulting topic trees grows sublinearly, since intermediate documents often bridge semantically related clusters even when pairwise similarities fall below the cutoff. This behavior supports the use of a stable, globally applied merging threshold.
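The threshold-stopped merging loop can be sketched in plain Python as follows, under stated assumptions: every mutually nearest cluster pair that clears the threshold is merged in the same round, the inter-cluster similarity is passed in as a function (average pairwise similarity is used as a swapped-in stand-in for VISTA's selective-dimension measure), and `threshold` is a free parameter because the value used in the study is not reported here.

```python
from typing import Callable, List, Tuple

Cluster = Tuple[int, ...]          # indices of the documents in a cluster
Merge = Tuple[Cluster, Cluster, float]


def average_linkage(sim: List[List[float]]) -> Callable[[Cluster, Cluster], float]:
    """Stand-in inter-cluster similarity: mean pairwise document similarity."""
    def cluster_sim(a: Cluster, b: Cluster) -> float:
        return sum(sim[i][j] for i in a for j in b) / (len(a) * len(b))
    return cluster_sim


def mnn_agglomerate(n_items: int,
                    cluster_sim: Callable[[Cluster, Cluster], float],
                    threshold: float) -> Tuple[List[Cluster], List[Merge]]:
    """Merge mutually nearest clusters until no such pair reaches `threshold`.

    Returns the surviving clusters (roots of the topic trees) and the merge
    history, from which the hierarchy can be reconstructed.
    """
    clusters: List[Cluster] = [(i,) for i in range(n_items)]
    history: List[Merge] = []
    while len(clusters) > 1:
        # Most similar other cluster for every current cluster.
        best = [max((cluster_sim(a, b), b) for b in clusters if b != a)
                for a in clusters]
        position = {c: k for k, c in enumerate(clusters)}
        merges: List[Merge] = []
        for i, (score, b) in enumerate(best):
            j = position[b]
            # Merge only mutual nearest neighbours that clear the threshold.
            if i < j and best[j][1] == clusters[i] and score >= threshold:
                merges.append((clusters[i], b, score))
        if not merges:
            break  # stopping criterion: no mutual pair is similar enough
        merged = {c for a, b, _ in merges for c in (a, b)}
        clusters = [c for c in clusters if c not in merged]
        clusters += [tuple(sorted(a + b)) for a, b, _ in merges]
        history.extend(merges)
    return clusters, history


if __name__ == "__main__":
    sim = [[1.0, 0.9, 0.2, 0.1],
           [0.9, 1.0, 0.3, 0.2],
           [0.2, 0.3, 1.0, 0.4],
           [0.1, 0.2, 0.4, 1.0]]
    roots, history = mnn_agglomerate(4, average_linkage(sim), threshold=0.35)
    print(roots)  # [(0, 1), (2, 3)]
```

In the example, the two pairs merge in the first round, but the remaining clusters have an average similarity of 0.2, below the threshold, so two separate topic trees remain instead of one all-encompassing cluster.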

A key distinction of our approach lies in the novel procedure used for calculating inter-cluster similarity. Rather than directly comparing entire vectors, as is common in conventional clustering methods, we introduce a selective dimension analysis designed to emphasize the most informative differences between clusters. In this procedure, all vector dimensions are first normalized to the interval [−1, 1], after which a custom difference metric is computed for each dimension according to the following formula:

where and denote the values of the corresponding dimension in the two vectors being compared. This formula captures differences across dimensions even though vector values are restricted to the closed interval [−1, 1], including extreme cases (e.g., –0.95 vs. 0.95) where conventional measures may fail. In doing so, it avoids circularity effects that could otherwise distort similarity estimates. After computing these per-dimension differences, we rank them and derive the final inter-cluster similarity from the subset of dimensions with the largest discrepancies, typically 10–20% of the total. This choice is motivated by prior work on unsupervised feature selection and high-dimensional distance metrics, which shows that only a small subset of embedding dimensions typically carries meaningful discriminative signal [25,26]. During model development, we experimented with several retention ratios (5%, 10%, 15%, 20%, 25%) and consistently observed that very small subsets (<10%) produced unstable merges, while larger subsets (>25%) diluted contrast and reintroduced noise. For this reason, and to avoid dataset-specific fine-tuning that could lead to overfitting, we fixed a single global value of 16.28% of embedding dimensions for all experiments. This value lies within the empirically stable interval and provides a balanced trade-off between noise suppression and semantic discriminability across heterogeneous corpora.
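The selective-dimension procedure can be sketched as follows. Because the per-dimension difference formula itself is not reproduced in this text, the code substitutes plain absolute difference as a clearly labeled stand-in, so it does not model the handling of extreme cases described above. Per-dimension min-max scaling to [−1, 1] across the vectors being clustered, applying the measure to cluster-level vectors such as centroids, and converting the retained differences into a similarity via 1 − mean/2 are also our assumptions. Only the ranking step and the 16.28% retention ratio follow the text; for 1536-dimensional text-embedding-ada-002 vectors, that ratio keeps round(0.1628 × 1536) = 250 dimensions.

```python
from typing import List

Vector = List[float]
RETAIN_FRACTION = 0.1628  # share of dimensions kept, as reported (16.28%)


def normalize_dimensions(vectors: List[Vector]) -> List[Vector]:
    """Min-max scale every dimension to [-1, 1] across the given vectors (assumed)."""
    columns = list(zip(*vectors))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [[0.0 if high == low else 2.0 * (x - low) / (high - low) - 1.0
             for x, low, high in zip(v, lows, highs)]
            for v in vectors]


def dimension_difference(a: float, b: float) -> float:
    """STAND-IN for the paper's per-dimension metric: plain absolute difference."""
    return abs(a - b)


def retained_count(n_dims: int, fraction: float = RETAIN_FRACTION) -> int:
    """Number of dimensions kept, e.g. 250 of 1536 for fraction 0.1628."""
    return max(1, round(fraction * n_dims))


def selective_similarity(a: Vector, b: Vector, fraction: float = RETAIN_FRACTION) -> float:
    """Similarity computed only from the dimensions with the largest differences.

    Inputs are assumed to lie in [-1, 1], so each absolute difference lies in
    [0, 2]; mapping the mean retained difference to 1 - mean / 2 (an assumption)
    yields a score in [0, 1].
    """
    diffs = sorted((dimension_difference(x, y) for x, y in zip(a, b)), reverse=True)
    k = retained_count(len(diffs), fraction)
    return 1.0 - (sum(diffs[:k]) / k) / 2.0
```

Restricting the average to the largest discrepancies means that many near-identical dimensions cannot mask a few strongly contrasting ones, which is the intuition the text gives for the procedure.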

By focusing only on the most discriminative dimensions, this procedure suppresses semantic noise, emphasizes the defining contrasts between topical units, and sharpens thematic boundaries. Such a selective approach is particularly effective in domains where documents share a common register, such as scientific writing, where subtle semantic distinctions are essential for meaningful topic separation. The procedure is consistent with prior work showing that selective feature weighting can enhance cluster separation [25,26], and extends this principle to embedding spaces through a novel per-dimension difference metric.

2.4. Topic Labelling with LLMs

In the final step, we assign human-readable topic labels to clusters using LLMs. The process follows a bottom-up traversal of the hierarchical clustering tree: topic labels are first generated for the leaf clusters, and subsequently for higher-level clusters by conditioning on the labels of their children. This strategy ensures hierarchical coherence, i.e., that broader cluster labels semantically encompass the more specific subtopics.

For each cluster, the LLM is prompted with representative text segments (e.g., summaries, noun-only forms, and pseudo-tweets) to generate a concise topic label and a short natural-language description. To avoid redundancy, similar labels are normalized through lexical similarity measures and merged into unified terms. The final label set is then propagated back to individual documents, ensuring that each text is directly associated with an interpretable thematic assignment rather than only a distribution over keywords.
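The bottom-up traversal can be sketched as a post-order walk over the cluster tree. `label_leaf` and `label_parent` are hypothetical callables standing in for the LLM prompts, whose wording and model are not specified here; in the usage example they are string-joining stubs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Node:
    name: str
    texts: List[str] = field(default_factory=list)        # representative segments
    children: List["Node"] = field(default_factory=list)  # empty for leaf clusters


def label_tree(node: Node,
               label_leaf: Callable[[List[str]], str],
               label_parent: Callable[[List[str]], str],
               labels: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Post-order labelling: leaves from their texts, parents from child labels."""
    if labels is None:
        labels = {}
    if not node.children:
        labels[node.name] = label_leaf(node.texts)
    else:
        for child in node.children:          # label every child first
            label_tree(child, label_leaf, label_parent, labels)
        labels[node.name] = label_parent([labels[c.name] for c in node.children])
    return labels


if __name__ == "__main__":
    tree = Node("root", children=[Node("a", texts=["nasa launch"]),
                                  Node("b", texts=["mars rover"])])
    # Stubs standing in for LLM prompts (hypothetical).
    result = label_tree(tree,
                        label_leaf=lambda texts: " / ".join(texts).title(),
                        label_parent=lambda kids: "Space: " + ", ".join(kids))
    print(result["root"])  # Space: Nasa Launch, Mars Rover
```

The post-order walk guarantees that every parent prompt sees its children's finished labels, which is what allows broader labels to be conditioned on, and encompass, their subtopics.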

To mitigate the inherent nondeterminism of LLM-generated labels, VISTA employs several mechanisms that enhance stability and reproducibility. For each cluster, multiple candidate labels are generated and subsequently merged through lexical and semantic normalization, which rapidly converges toward consistent descriptors across runs. In addition, parent-level labels are produced conditionally on the finalized set of child labels, ensuring hierarchical coherence and reducing the likelihood of contradictions between levels. Together, these procedures provide a robust safeguard against variability arising from stochastic LLM sampling.
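One possible realization of candidate merging is sketched below. It uses the standard-library `difflib.SequenceMatcher` ratio as the lexical similarity and a hypothetical 0.8 cut-off (the text names neither); candidates are normalized, grouped greedily against each group's first member, and the most frequent surface form in the largest group is returned. Semantic (embedding-based) normalization, which the text also mentions, is omitted for brevity.

```python
import re
from collections import Counter
from difflib import SequenceMatcher
from typing import List

SIMILARITY_CUTOFF = 0.8  # hypothetical cut-off for treating labels as the same


def normalize(label: str) -> str:
    """Lower-case, replace punctuation with spaces, collapse whitespace."""
    label = re.sub(r"[^\w\s]", " ", label.lower())
    return " ".join(label.split())


def consensus_label(candidates: List[str], cutoff: float = SIMILARITY_CUTOFF) -> str:
    """Pick a stable label from several LLM candidates for the same cluster."""
    groups: List[List[str]] = []
    for cand in candidates:
        key = normalize(cand)
        for group in groups:
            if SequenceMatcher(None, key, normalize(group[0])).ratio() >= cutoff:
                group.append(cand)
                break
        else:  # no sufficiently similar group: start a new one
            groups.append([cand])
    largest = max(groups, key=len)  # the first largest group wins ties
    return Counter(largest).most_common(1)[0][0]


if __name__ == "__main__":
    runs = ["Health and Social Care", "health & social care",
            "Health and Social Care", "Social Care Reform"]
    print(consensus_label(runs))  # Health and Social Care
```

Here the first three candidates are grouped as variants of one label, while "Social Care Reform" forms its own minority group and is discarded.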

This procedure offers two main advantages. First, it leverages the expressive power of LLMs to produce topic descriptors that are semantically rich and immediately interpretable, avoiding the ambiguity of keyword lists produced by conventional models. Second, by enforcing consistency across hierarchical levels, the approach produces topic taxonomies that more faithfully reflect the multi-layered organization of real-world corpora.

3. Results

3.1. Datasets

The experimental evaluation is conducted on three text corpora selected to represent distinct domains and text lengths. The BBC News Archive dataset (www.kaggle.com/code/binnyshukla/bbc-news-topic-modeling, accessed on 23 October 2025) contains news articles categorized into five sections: business, entertainment, politics, sport, and technology. It provides a well-structured collection of short- to medium-length journalistic texts, making it suitable for assessing the performance of topic models on real-world news content. The second dataset, BillSum (www.kaggle.com/datasets/akornilo/billsum, accessed on 23 October 2025), comprises more than 22,000 U.S. Congressional bills from the 103rd–115th sessions, each accompanied by a human-written summary. This corpus represents a more complex and formal legislative domain, allowing the evaluation of topic models on long-form documents characterized by rich legal and technical vocabulary. The third dataset, U.S. Agency News and Twitter Feed (https://github.com/domjanbaric/vista/tree/main/data, accessed on 23 October 2025), consists of news posts and tweets from U.S. agencies such as NASA, HHS, and NIH. Combining long-form news posts with short tweets tests how models perform when text length is heterogeneous.

3.2. Baseline Models for Comparison

For comparison, we evaluate our method against four representative topic modeling approaches that span probabilistic, embedding-based, and prompt-based paradigms.

BERTopic [13] builds on transformer-based embeddings to capture semantic relationships between documents. These embeddings are clustered using HDBSCAN, and representative keywords are extracted through a class-based TF-IDF procedure. BERTopic supports dynamic topic modeling and has demonstrated strong performance across domains with heterogeneous text lengths.

Topic2Vec [27] extends the Word2Vec framework by jointly learning continuous vector representations of words and topics in the same semantic space. This enables direct measurement of word–topic similarity and produces more fine-grained representations than probabilistic models, resulting in improved topic coherence in downstream applications.

TopicGPT [17] represents a prompt-based approach that employs LLMs to generate and refine topics from user-provided examples or automatically sampled document subsets. Its outputs are highly interpretable and human-readable, but performance is sensitive to prompt design and the specificity of initial topics, which can reduce stability.

Latent Dirichlet Allocation (LDA) [1] is the canonical probabilistic topic model that represents documents as mixtures of latent topics, with each topic modeled as a distribution over words. While it remains a widely used baseline, LDA requires manual specification of the number of topics and relies on human interpretation of topic–word distributions. It performs less effectively on short or noisy texts and struggles to capture complex semantic structures.

3.3. Evaluation Metrics

We assess model quality using five standard metrics. Topic Diversity (TD) measures the proportion of unique words among the top-ranked topic terms [14]. C_V coherence combines NPMI with cosine similarity between context vectors [28]. C_UMass coherence relies on log conditional probabilities derived from word co-occurrence counts [29]. C_NPMI coherence applies a normalized variant of PMI [30], while C_UCI (also referred to as C_PMI) employs unnormalized PMI [31]. Formal definitions and equations for all metrics are provided in the Supplementary Materials S1.
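For concreteness, two of these metrics can be computed as below. Topic diversity follows the definition above; C_UMass follows the formulation commonly attributed to Mimno et al., log((D(w_i, w_j) + 1) / D(w_j)) over ordered word pairs, which is averaged over pairs here (some implementations report the sum). Documents are treated as sets of words, and `top_k=25` is a common choice rather than a setting taken from this paper; this is an illustrative sketch, not the evaluation code used for the tables.

```python
import math
from typing import List, Sequence, Set


def topic_diversity(topics: List[Sequence[str]], top_k: int = 25) -> float:
    """Unique words divided by all words among each topic's top_k terms."""
    words = [w for topic in topics for w in topic[:top_k]]
    return len(set(words)) / len(words)


def umass_coherence(topic: Sequence[str], docs: List[Set[str]]) -> float:
    """Mean of log((D(w_i, w_j) + 1) / D(w_j)) over word pairs with j < i.

    D counts documents containing all given words; every topic word is
    assumed to occur in at least one document (otherwise D(w_j) is zero).
    """
    def doc_freq(*words: str) -> int:
        return sum(1 for d in docs if all(w in d for w in words))

    scores = [math.log((doc_freq(topic[i], topic[j]) + 1) / doc_freq(topic[j]))
              for i in range(1, len(topic)) for j in range(i)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    print(topic_diversity([["a", "b", "c"], ["a", "d", "e"]]))  # 5 unique of 6 words
    docs = [{"a", "b"}, {"a", "b", "c"}, {"c"}]
    print(umass_coherence(["a", "b"], docs))  # log(3 / 2), about 0.405
```

Because D(w_i, w_j) can never exceed D(w_j), each pair contributes at most log((D(w_j) + 1) / D(w_j)), so C_UMass values are typically zero or negative, and less negative values indicate more coherent topics.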

All experiments were conducted on a MacBook Pro equipped with an M3 Max chip and 36 GB of memory, using Python 3.10. The full code for our analyses is publicly available at: https://github.com/domjanbaric/vista (accessed on 23 October 2025).

In the following sections, we present a comparative evaluation of VISTA against LDA, BERTopic, Top2Vec, and TopicGPT across multiple corpora. Performance is assessed with metrics that jointly capture topic diversity, semantic coherence, and interpretability.

3.4. Quantitative Results

Table 1, Table 2 and Table 3 report the comparative performance of five models (LDA, BERTopic, Top2Vec, TopicGPT, and our proposed approach) on three datasets: BBC News, BillSum, and the newly introduced U.S. Government Agency News and Twitter Feed corpus. Evaluation was conducted using topic diversity and four coherence measures (C_V, C_UMass, C_NPMI, and C_UCI).

Table 1.

Comparative performance of five topic modeling approaches on the BBC News Archive dataset. Evaluation is reported across topic diversity (TD) and four coherence metrics (C_V, C_UMass, C_NPMI, and C_UCI). Higher values indicate stronger performance; for metrics reported on a negative scale, less negative scores are preferable.

Table 2.

Comparative performance of five topic modeling approaches on the BillSum dataset. The dataset consists of long-form legislative texts with complex vocabulary, providing a challenging test for topic models. Metrics include topic diversity (TD) and four coherence metrics (C_V, C_UMass, C_NPMI, and C_UCI). Higher values indicate stronger performance; for metrics reported on a negative scale, less negative scores are preferable.

Table 3.

Comparative performance of five topic modeling approaches on the U.S. Agency News and Twitter Feed dataset. This corpus combines long-format institutional news with short social media posts, testing model robustness under heterogeneous text lengths. Results are reported across topic diversity (TD) and four coherence metrics (C_V, C_UMass, C_NPMI, and C_UCI). Higher values indicate stronger performance; for metrics reported on a negative scale, less negative scores are preferable.

On the BBC corpus (Table 1), our model achieves a topic diversity of 0.66, the second-highest after Top2Vec (0.82). However, unlike Top2Vec, which often produces fragmented and noisy clusters, our model preserves semantic consistency by leveraging multi-view embeddings and the MNN-based clustering strategy. This is reflected in its strong balance across coherence scores: second-best in (0.06) and solid in (0.50), while clearly outperforming most baselines in (–2.47) and (–0.22). BERTopic attains the highest (0.69), but at the cost of a poor (–4.49), suggesting that surface-level coherence may conceal semantic instability. By contrast, our approach avoids this trade-off, providing both lexical coherence and semantic alignment, which we attribute to its selective dimension analysis and hierarchical clustering design.

The BillSum dataset (Table 2) consists of long, technical, and highly formal legislative texts—a particularly challenging domain for topic models. Here, our method demonstrates its robustness by producing the best score (–1.18) and the highest (0.14), reflecting strong internal and external coherence. It also yields competitive values in (0.46) and (0.02). Although its topic diversity (0.34) is lower than LDA (0.60) and BERTopic (0.59), this reduction mirrors the narrow, specialized nature of legislative discourse, where fewer but semantically precise topics are preferable to diffuse clusters. The effectiveness of our approach in this context illustrates how hierarchical merging with threshold-based stopping enables the detection of well-defined, domain-specific topics without overfitting or excessive fragmentation.

The agency dataset (Table 3) combines institutional news with short, informal tweets, an extreme test of heterogeneity. Despite this variability, our model remains highly effective. It achieves competitive scores across metrics, with and , close to BERTopic (0.70, 0.13) and TopicGPT (0.51, 0.16). VISTA also attains a strong value (0.50), outperforming LDA, BERTopic, and Top2Vec, though remaining below TopicGPT (1.18). Its score (–2.35) is substantially better than those of BERTopic (–6.37) and Top2Vec (–6.36), underscoring its robustness in capturing document-level coherence across divergent text types. These results suggest the value of incorporating pseudo-tweet representations, which allow longer documents to be projected into the same semantic space as short tweets, improving fairness in clustering. Unlike Top2Vec, which again maximizes diversity (0.83) at the expense of coherence, our method delivers a balanced and interpretable taxonomy of topics across texts of different lengths and registers.

Across all three datasets, our method consistently ranks among the top-performing models, typically placing first or second across key coherence metrics. In particular, it shows strong performance in coherence, where it achieves the best score on the BBC and BillSum datasets and remains highly competitive on the Agency corpus, indicating that it generates semantically rich and externally consistent topics. While some competing models excel in isolated metrics (BERTopic in , Top2Vec in topic diversity, and TopicGPT in on the Agency dataset), our approach distinguishes itself by delivering balanced, robust, and generalizable performance across domains of varying size, style, and complexity.

Beyond numerical performance, the results reflect the methodological design of our framework. Multi-view embeddings and MNN-based hierarchical clustering contribute to robustness and stability. LLM-driven topic labeling avoids the interpretability problems of probabilistic models such as LDA, while clustering guided by vector similarity counters the instability of prompt-based methods such as TopicGPT by removing the dependence on initial examples. In addition, selective dimension analysis balances surface-level coherence with deeper semantic alignment, and the integration of summaries and pseudo-tweet representations enables adaptation to heterogeneous datasets, supporting fair treatment of both short and long texts.

Taken together, these findings indicate that our method provides a scalable, semantically robust, and interpretable framework for topic modeling across diverse corpora. TopicGPT results are reported only for the BBC and Agency datasets, since the original framework does not scale to larger collections such as BillSum [17]. Within these constraints, our comparisons show that the proposed approach offers a compelling alternative that combines coherence, stability, and interpretability in a single unified model.

3.5. Qualitative Case Studies

In this section, we analyze selected examples from the BillSum, BBC, and US Government Agency News and Twitter datasets to illustrate the advantages of the proposed VISTA framework. The full texts of the documents used in these case studies are provided in Supplementary Materials S2 (Examples S1–S3) for reference and reproducibility. As outlined in the introduction, keyword-based models often struggle with documents in which the central theme is not explicitly expressed through frequent or surface-level terms. Such approaches tend to overlook latent semantic cues, frequently producing misleading or overly generic topics. By contrast, VISTA captures deeper contextual meaning, enabling it to infer coherent and accurate topics even when relevant keywords are sparse or implicit. Furthermore, unlike methods that rely on selecting the top-k keywords from clusters—often leading to fragmented or diffuse topic representations—VISTA consistently produces single, well-formed, and interpretable topics.

3.5.1. Case Study: Health Care

The following article (Supplementary Materials S2, Example S1) discusses UK government reforms designed to give elderly and disabled individuals greater control over their personal care budgets. It addresses policy proposals, funding mechanisms for social care, political responses, and broader implications for health services and personal autonomy.

Traditional keyword-based topic models fail to capture the nuanced subject matter. LDA, Top2Vec, and NMF tend to emphasize economic or partisan political terms such as budget, tax, tory, labour, and chancellor. These reflect the presence of fiscal and governmental terminology but do not reveal the article’s actual focus on social care and personal autonomy. Similarly, models such as LSA and BERTopic generate vague or generic topics, dominated by terms like say, people, and plan, which contribute little semantic specificity.

In contrast, VISTA correctly identifies the central theme of health and social care, surfacing this latent topic despite the absence of repetitive high-frequency keywords explicitly referring to “health care.” The model successfully captures the document’s underlying intent—policy-driven reform in adult social services—demonstrating its capacity to generalize from contextual cues rather than relying solely on word frequency.

This example underscores a central strength of our approach: the ability to infer coherent, domain-specific topics even when surface-level political or economic framing might mislead traditional keyword- or frequency-based models.

To further illustrate the comparison, Table 4 presents the top-ranked terms generated for this document. While VISTA correctly identifies health care as the central topic, competing methods yield keyword lists that are either overly generic, dominated by political and economic terms, or semantically diffuse.

Table 4.

Top-ranked terms generated by six topic modeling approaches for the article analyzed in Example 1 (Health Care; Supplementary Materials S2).

These outputs reinforce the limitations of keyword-based methods: BERTopic and LSA generate vague or generic descriptors (say, people, plan), while LDA, NMF, and Top2Vec overemphasize fiscal and partisan cues (budget, tax, labour, tory). None of these lists capture the document’s actual focus on adult social care reform. By contrast, VISTA produces a concise and accurate label (health care), demonstrating its ability to surface the latent semantic theme rather than relying on shallow word-frequency patterns.

3.5.2. Case Study: Rugby

This article (Supplementary Materials S2, Example S2) focuses on concerns about rising injury rates in professional rugby, particularly within the English league, as emphasized by England coach Andy Robinson. The text addresses long-term health implications for players, the impact of professionalization on physical demands, and the launch of an “injury audit” supported by major rugby organizations.

Despite this focused narrative, most baseline models fail to capture the specific topic. Top2Vec returns a noisy mix of football-related terms such as mourinho, liverpool, and referee, suggesting cross-sport contamination. LDA and NMF produce vague or generic sports topics dominated by terms like win, player, team, and training, which indicate the sports domain but not the article’s central concern of player safety and injury. Even BERTopic, though it includes rugby, dilutes the focus with less specific terms such as club, win, and wale, limiting interpretability.

In contrast, VISTA identifies Rugby union as the central topic, accurately reflecting the document’s thematic focus. Crucially, the model avoids conflating rugby with general sports or unrelated football discourse and recognizes contextual signals tied to physical toll, injury, and professional demands—even though these cues are not repeated keywords. This demonstrates how VISTA produces semantically coherent, document-specific topics by leveraging contextual embeddings and hierarchical labeling rather than relying solely on keyword frequency.

Table 5 summarizes the top-ranked terms generated for this document. While VISTA yields a precise and contextually appropriate label (Rugby union), baseline models produce outputs that are either overly generic or contaminated by unrelated sports terms.

Table 5.

Top-ranked terms generated by six topic modeling approaches for the article analyzed in Example 2 (Rugby; Supplementary Materials S2).

These results further highlight the limitations of keyword- and embedding-based methods in isolating document-specific semantics. Whereas BERTopic, LDA, NMF, and Top2Vec drift toward broad sports-related cues or cross-domain contamination, VISTA produces a concise and semantically faithful label, reinforcing its strength in identifying context-specific themes within specialized domains.

3.5.3. Case Study: Death in Custody

This article (Supplementary Materials S2, Example S3) reports on a parliamentary committee’s findings concerning deaths in custody in the UK, particularly among vulnerable individuals with histories of mental illness, substance abuse, and suicidal tendencies. The text highlights systemic failures in the prison system, criticizes the inappropriate incarceration of at-risk individuals, and calls for urgent policy reform to uphold basic human rights.

Despite the gravity and specificity of the issue, baseline models fail to identify a coherent or meaningful theme. BERTopic, Top2Vec, and LDA produce politically generic or institutionally vague keyword sets such as labour, government, election, case, and official, which reflect surface-level discourse but miss the core problem of custodial deaths and mental health failures. Similarly, NMF and LSA return uninformative or unrelated terms like say, fall, good, and play, offering little interpretive value.

In sharp contrast, VISTA accurately captures the central topic with the label death in custody, directly reflecting the article’s legal and human rights emphasis. Crucially, the model recognizes this as a distinct, high-stakes theme rather than conflating it with broad political or bureaucratic references. This demonstrates VISTA’s ability to surface nuanced, domain-relevant topics even when key terms are sparse or embedded in complex narratives.

Table 6 presents the top-ranked terms generated by each model for this document. Whereas most baselines drift toward generic political or institutional vocabulary, VISTA identifies the precise underlying theme.

Table 6.

Top-ranked terms generated by six topic modeling approaches for the article analyzed in Example 3 (Death in Custody; Supplementary Materials S2).

These results highlight the limitations of keyword- and frequency-based models, which default to broad institutional or political terms, overlooking the text’s true emphasis on custodial deaths and human rights failures. By contrast, VISTA produces a concise and contextually faithful label, underscoring its strength in capturing sensitive, domain-specific issues that require semantic depth rather than surface-level keyword overlap.

3.6. Topic Tree Visualizations

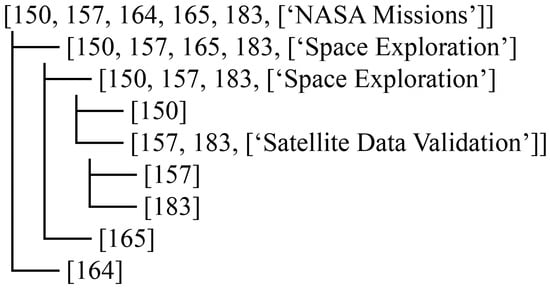

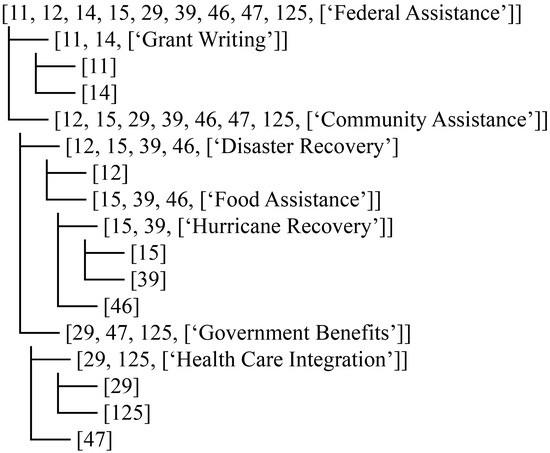

To illustrate the interpretability and structural clarity enabled by VISTA, we present two representative Topic Tree visualizations, each derived from a different dataset. Each underlying text is annotated with its topic node ID, corresponding to the labels shown in the visualizations (Figure 2 and Figure 3). For transparency and reproducibility, we deliberately retain each text in the exact format in which it was originally scraped, without additional normalization or editing. The underlying texts used to construct these trees are provided in Supplementary Material S3. These visualizations demonstrate how the model constructs hierarchies in a bottom-up fashion: beginning with fine-grained leaf topics, interpreting their semantic content, and progressively merging related clusters into broader, coherent themes.

Figure 2.

Topic Tree for NASA Missions derived from a space research corpus (source texts provided in Supplementary Material S3). The visualization shows how fine-grained leaf topics are progressively merged into broader, semantically coherent themes.

Figure 3.

Topic Tree for Federal Assistance derived from public-sector texts on aid and policy (source texts provided in Supplementary Material S3). The visualization illustrates how diverse subtopics are progressively merged into broader, coherent categories.

The first visualization, NASA Missions (Figure 2), is based on a corpus of space research (see Supplementary Material S3 for dataset details) and covers topics 150, 157, 164, 165, and 183 under the broad theme NASA Missions. The model first groups leaf nodes 157 and 183 into the coherent subtheme Satellite Data Validation. This subtopic is then merged with the related node 150 to form the broader theme Space Exploration. Finally, Space Exploration is integrated with topic 165, producing the unified higher-level concept NASA Missions. The standalone node 164 is placed outside this dominant cluster, reflecting its semantic distance from the other topics. This example highlights the model’s capacity to identify meaningful substructures within specialized domains and assemble them into intuitive hierarchies without supervision or predefined ontologies.

The second visualization, Federal Assistance (Figure 3), drawn from public-sector texts on aid and policy (dataset provided in Supplementary Material S3), is more structurally complex. The model analyzes a diverse set of topics (11, 12, 14, 15, 29, 39, 46, 47, and 125) and progressively organizes them into semantically coherent clusters. For example, 15 and 39 are grouped as Hurricane Recovery, which then merges with 46 to form Food Assistance, and subsequently with 12 to form Disaster Recovery. In parallel, 29 and 125 are aligned under Health Care Integration, which is then merged with 47 into the broader category Government Benefits. Ultimately, all branches converge into the high-level theme Federal Assistance.
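The merge sequence described for Figure 3 can be recorded as a small tree, which makes it easy to list the leaf topics under any labeled node. The sketch encodes only the merges stated in the text; topics 11 and 14 are omitted because their position in the tree is not described, and the dictionary layout and helper names are illustrative choices.

```python
# Merges stated in the text for Figure 3. Strings are labeled nodes; integers are leaf topic IDs.
# Topics 11 and 14 are omitted because their position in the tree is not described.
TREE = {
    "Federal Assistance": ["Disaster Recovery", "Government Benefits"],
    "Disaster Recovery": ["Food Assistance", 12],
    "Food Assistance": ["Hurricane Recovery", 46],
    "Hurricane Recovery": [15, 39],
    "Government Benefits": ["Health Care Integration", 47],
    "Health Care Integration": [29, 125],
}


def leaves(node) -> set[int]:
    """Collect the leaf topic IDs beneath a node."""
    if isinstance(node, int):
        return {node}
    return set().union(*(leaves(child) for child in TREE[node]))


def show(node, depth: int = 0) -> None:
    """Print the subtree rooted at `node`, indenting two spaces per level."""
    print("  " * depth + str(node))
    for child in TREE.get(node, []):  # leaf IDs have no entry, so recursion stops there
        show(child, depth + 1)


show("Federal Assistance")
print(sorted(leaves("Disaster Recovery")))  # [12, 15, 39, 46]
```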

The datasets originate from heterogeneous sources, including institutional web pages (e.g., NASA, HHS, NIH), official government reports, news portals, and social media platforms (e.g., Twitter/X, Facebook). This heterogeneity illustrates the robustness of the VISTA framework when applied to corpora that combine different styles, registers, and levels of formality.

Taken together, these two visualizations demonstrate that VISTA goes well beyond simple document clustering. Rather than producing flat sets of topics, it constructs conceptual hierarchies that evolve naturally from concrete, interpretable micro-topics to broader macro-level themes. This hierarchical organization mirrors the way humans structure knowledge and gives analysts an intuitive framework for navigating complex corpora. Crucially, it enables the detection of both fine-grained distinctions (e.g., Hurricane Recovery vs. Food Assistance) and higher-order thematic categories (e.g., Federal Assistance), offering a multi-level perspective that flat topic models such as LDA and NMF do not provide. This hierarchical interpretability is one of VISTA’s most distinctive advantages, supporting exploration, comparative analysis, and actionable insights across diverse domains such as science, policy, and public communication.

4. Discussion

4.1. Interpretation of Results and Methodological Contributions

The results presented in Section 3 highlight both the empirical performance and the methodological contributions of the VISTA framework. Across all three datasets, VISTA consistently ranked among the top-performing models, particularly excelling in C_UCI coherence, where it achieved the best results on the BBC and BillSum datasets and remained highly competitive on the Agency corpus. Because C_UCI is computed from word co-occurrence statistics, this indicates that the topics generated by VISTA are not only internally consistent but also supported by external semantic co-occurrence patterns. Importantly, the framework achieved these results while maintaining a balance between topic diversity and coherence, avoiding the trade-offs observed in models such as BERTopic (high coherence on some measures but poor scores on others) or Top2Vec (high diversity but low coherence).
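C_UCI, as described by Röder et al. [28], averages the pointwise mutual information (PMI) over all pairs of a topic’s N top words: C_UCI = 2 / (N(N − 1)) · Σ_{i<j} log[(P(w_i, w_j) + ε) / (P(w_i) P(w_j))]. The standard measure estimates these probabilities with a sliding window over a reference corpus; to keep the sketch short, the version below uses document-level co-occurrence instead, so its values are not directly comparable with those reported in this study. The toy documents are invented for illustration.

```python
import math
from itertools import combinations


def c_uci(top_words: list[str], documents: list[set[str]], eps: float = 1e-12) -> float:
    """Mean pairwise PMI of a topic's top words, using document-level co-occurrence.

    Simplification: P(w) and P(wi, wj) are the fractions of documents containing
    the word(s), not sliding-window estimates over an external corpus.
    """
    n_docs = len(documents)

    def prob(*words: str) -> float:
        return sum(all(w in doc for w in words) for doc in documents) / n_docs

    pmis = []
    for wi, wj in combinations(top_words, 2):
        p_i, p_j = prob(wi), prob(wj)
        if p_i == 0 or p_j == 0:
            pmis.append(0.0)  # assumption: pairs with an unseen word count as neutral
            continue
        pmis.append(math.log((prob(wi, wj) + eps) / (p_i * p_j)))
    return sum(pmis) / len(pmis) if pmis else 0.0


docs = [{"rugby", "injury"}, {"rugby", "injury", "club"}, {"tax", "budget"}, {"tax", "budget"}]
print(c_uci(["rugby", "injury"], docs))  # log 2, about 0.693
print(c_uci(["rugby", "tax"], docs))     # strongly negative: the words never co-occur
```

Words that co-occur more often than chance push the score up, which is why C_UCI is read as a measure of external, co-occurrence-based consistency.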

These outcomes can be directly attributed to several methodological innovations integrated into VISTA. The use of multi-view embeddings (full-text, summaries, noun-only variants, stopword-free versions, and pseudo-tweets) provides complementary perspectives on the same document, mitigating the biases of any single representation. By leveraging mutual nearest neighbor (MNN)-based clustering, the framework reduces spurious associations that commonly arise in high-dimensional semantic spaces, thereby ensuring more stable and reliable clusters. The selective dimension analysis further strengthens this stability by emphasizing the most discriminative vector dimensions, which enhances semantic contrast between clusters and prevents the merging of loosely related topics.
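The following sketch illustrates mutual nearest neighbours (MNN) in the sense of Haghverdi et al. [24]: two documents are linked only if each is among the other’s k nearest neighbours, which filters out one-sided matches. As a stand-in for selective dimension analysis, it first keeps the m dimensions with the highest variance across documents; this selection rule and the parameters k and m are illustrative assumptions, not VISTA’s actual criterion.

```python
import math


def top_variance_dims(vectors: list[list[float]], m: int) -> list[int]:
    """Indices of the m dimensions with the largest variance across vectors."""
    n, d = len(vectors), len(vectors[0])
    variances = []
    for j in range(d):
        col = [v[j] for v in vectors]
        mean = sum(col) / n
        variances.append(sum((x - mean) ** 2 for x in col) / n)
    return sorted(range(d), key=lambda j: variances[j], reverse=True)[:m]


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mutual_nearest_neighbours(vectors, k: int = 1, m=None) -> list[tuple[int, int]]:
    """Pairs (i, j), i < j, where each document is among the other's k nearest neighbours."""
    dims = top_variance_dims(vectors, m) if m else range(len(vectors[0]))
    reduced = [[v[j] for j in dims] for v in vectors]
    n = len(reduced)
    knn = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        others.sort(key=lambda j: cosine(reduced[i], reduced[j]), reverse=True)
        knn.append(set(others[:k]))
    return sorted((i, j) for i in range(n) for j in knn[i] if i < j and i in knn[j])


# Hypothetical embeddings: dimension 2 is constant and carries no discriminative signal.
vecs = [[1.0, 0.1, 5.0], [0.9, 0.2, 5.0], [0.1, 1.0, 5.0], [0.2, 0.9, 5.0]]
print(mutual_nearest_neighbours(vecs, k=1, m=2))  # [(0, 1), (2, 3)]
```

If kept, the constant third dimension would push every pairwise cosine toward 1; the variance filter drops it before neighbours are compared, so the two semantic pairs stay clearly separated.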

Another important contribution is the introduction of pseudo-tweets for corpora containing heterogeneous text lengths. This design allowed VISTA to align short and long documents within a shared semantic space, resulting in particularly strong performance on the mixed U.S. Agency News and Twitter dataset. Lacking a comparable mechanism, models such as LDA and Top2Vec either fragmented short texts into noise or overfitted to longer ones, leading to substantial losses in coherence.

Finally, LLM-driven topic labeling proved central to interpretability. By generating hierarchical labels from leaf to parent clusters, VISTA produced concise and semantically faithful descriptors such as health care, rugby union, and death in custody. These labels captured the latent intent of documents more effectively than word lists produced by probabilistic or embedding-based models, which frequently defaulted to vague or politically generic terms. The case studies demonstrated how this feature transforms ambiguous keyword sets into human-readable, domain-relevant topics that are immediately useful for analysis.
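The leaf-to-parent labeling order can be sketched as a post-order traversal in which every parent prompt is built from its children’s labels, one way to keep parent labels consistent with their subtopics. The Node structure, the prompt wording, and the label_fn callable (standing in for an LLM call such as GPT-4o) are hypothetical; VISTA’s actual prompts are not reproduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    texts: List[str] = field(default_factory=list)        # documents, used at leaves
    children: List["Node"] = field(default_factory=list)  # sub-clusters
    label: Optional[str] = None


def label_tree(node: Node, label_fn: Callable[[str], str]) -> str:
    """Label all children first, then derive the parent label from their labels."""
    if not node.children:
        prompt = "Give a short topic label for these texts:\n" + "\n".join(node.texts)
    else:
        child_labels = [label_tree(child, label_fn) for child in node.children]
        prompt = ("Give a broader topic label that covers these subtopics:\n"
                  + "\n".join(child_labels))
    node.label = label_fn(prompt)
    return node.label


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: reports which kind of prompt it received.
    return "parent" if prompt.startswith("Give a broader") else "leaf"


tree = Node(children=[Node(texts=["care budgets"]), Node(texts=["nhs waiting lists"])])
print(label_tree(tree, fake_llm), [c.label for c in tree.children])
```

Because parents only see their children’s labels, a parent label cannot drift to a theme unsupported by the subtree beneath it, at the cost of one model call per node.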

Taken together, these methodological contributions explain why VISTA achieved robust and interpretable performance across diverse datasets. More broadly, the framework demonstrates that integrating embedding-based clustering with LLM-driven interpretation is a viable path toward overcoming long-standing weaknesses in topic modeling, including keyword ambiguity, sensitivity to corpus heterogeneity, and lack of semantic depth. In this way, VISTA not only advances the technical state of the art but also contributes conceptually to the ongoing shift in topic modeling research: from purely probabilistic word distributions toward semantically grounded, hierarchically interpretable, and practically usable models.

4.2. Comparison with Other Models

Compared to traditional probabilistic topic modeling methods such as LDA [1], NMF [2], and Latent Semantic Analysis (LSA) [32], our approach offers several key advantages stemming from a fundamental paradigm shift: moving from distributional models to semantic vector representations combined with hierarchical clustering and language-based interpretation.

First, unlike LDA and related methods that represent topics as probability distributions over words—requiring manual interpretation of word lists to derive topic labels—our system employs LLMs to directly assign topic labels to each cluster. This eliminates the need for the so-called “reading tea leaves” approach [3], often cited as a weakness of probabilistic models. This advantage was reflected in our experiments: while LDA produced highly redundant or vague keyword lists, VISTA consistently yielded concise, human-readable labels (e.g., health care and death in custody), capturing latent semantic intent that keyword-based methods overlooked.

Second, our method automatically determines the number of topics from structural stability in the semantic space and the stopping criterion of hierarchical merging. This removes the need to select the number of topics manually, a parameter that is typically unintuitive and set by experimentation in LDA, NMF, and LSA. In practice, this property enabled VISTA to adapt flexibly to corpora of varying size and complexity and to produce stable taxonomies. On the BillSum dataset, for instance, VISTA generated fewer but semantically sharper topics, reflected in its superior coherence scores despite lower overall diversity.
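The idea that the number of topics falls out of the merging process, rather than being fixed in advance, can be illustrated with agglomerative clustering that stops when no pair of clusters is similar enough. This sketch uses average-linkage cosine similarity and a fixed similarity threshold, both assumptions chosen for illustration; VISTA’s own stopping criterion based on structural stability is not reproduced here.

```python
import math
from itertools import combinations


def cosine(u, v) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def agglomerate(vectors, threshold: float = 0.8) -> list[list[int]]:
    """Merge the most similar pair of clusters until no pair reaches `threshold`.

    Cluster similarity is the mean pairwise cosine (average linkage).
    Returns the final clusters as lists of document indices.
    """
    clusters = [[i] for i in range(len(vectors))]

    def linkage(a, b):
        return sum(cosine(vectors[i], vectors[j]) for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > 1:
        (i, j), best = max(
            (((i, j), linkage(clusters[i], clusters[j]))
             for i, j in combinations(range(len(clusters)), 2)),
            key=lambda pair: pair[1],
        )
        if best < threshold:
            break  # stopping criterion: no remaining pair is similar enough
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # safe because i < j, so index i is unaffected
    return clusters


vecs = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]
print(agglomerate(vecs, threshold=0.8))  # two clusters emerge without specifying k
```

Here the threshold, not a target topic count, decides when merging stops; lowering it to 0.0 would merge everything into a single cluster.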

In comparison with more recent LLM-based approaches such as TopicGPT [17], which generates high-quality topics through LLM prompting, our model does not depend on user-specified example topics. Prompt-based methods can be highly sensitive to their initial input: when the example topics are overly specific, TopicGPT often produces isolated, narrowly defined clusters for nearly every document. Our model, in contrast, requires no initial topic assumptions. It relies instead on empirical grouping based on vector similarity and hierarchical structure, supporting both stability and scalability without user intervention. The evaluation is consistent with this design: without extensive prompt engineering, VISTA achieved competitive coherence on the BBC corpus, where it outperformed TopicGPT in external consistency (C_UCI), and remained competitive on the Agency corpus; it could also be applied to the larger BillSum collection, for which TopicGPT results are not available [17].

Another important advantage over TopicGPT and related prompt-based frameworks is the ability of our model to detect and assign subtopics without redefining prompts or regenerating topics. Whereas prompt-based systems typically require iterative adjustments to capture finer thematic distinctions, our approach naturally produces a hierarchical taxonomy of topics through clustering. Semantic labels are progressively generated along the hierarchy, enabling both deductive exploration of predefined categories and inductive discovery of emerging themes. This property was highlighted in our Topic Tree visualizations, where VISTA successfully organized micro-topics (e.g., Hurricane Recovery) into broader macro-level structures (e.g., Federal Assistance).

Finally, classical probabilistic models and more recent neural or prompt-based approaches are often static and struggle to accommodate heterogeneous textual sources such as tweets, summaries, and full-length articles. Our method instead integrates multiple representations of the same document, including summaries and pseudo-tweets, into a unified framework. This design yields a robust multilayered semantic network that enables consistent comparison across texts of varying lengths, registers, and styles. On the heterogeneous U.S. Agency + Twitter dataset, this feature appears to have been decisive: VISTA achieved a strong score (0.50), substantially better than LDA, BERTopic, and Top2Vec, though below TopicGPT (1.18), demonstrating resilience in aligning short and long texts within a single semantic space.

Taken together, these results show that VISTA not only addresses long-standing limitations of probabilistic and embedding-based methods but also offers a scalable and semantically faithful alternative to LLM-driven prompt-based frameworks.

4.3. Remaining Limitations of VISTA and Future Work

The proposed approach exhibits two primary limitations: reliance on large language models (LLMs) and the latency introduced by their use in multi-step processing. These challenges are interrelated, as each LLM call, particularly when applied to full-text representations of topic groups, introduces latency, typically on the order of several seconds per query. Furthermore, the multi-step distance calculation, which requires generating multiple summaries and alternate document representations, adds further computational overhead.
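A back-of-the-envelope estimate shows why per-call latency matters. Assuming strictly binary merges, a hierarchy over n leaf clusters has 2n − 1 nodes, so labeling every node takes 2n − 1 LLM calls if each node receives exactly one call. The one-call-per-node rule, the sequential execution, and the 3-second latency (a value within the "several seconds" range mentioned above) are all assumptions for illustration.

```python
def sequential_labeling_time(n_leaves: int, seconds_per_call: float) -> float:
    """Wall-clock seconds to label a full binary topic tree, one sequential LLM call per node."""
    if n_leaves < 1:
        return 0.0
    n_nodes = 2 * n_leaves - 1  # n leaves plus n - 1 internal (merge) nodes
    return n_nodes * seconds_per_call


# Hypothetical workload: 100 leaf clusters at an assumed 3 s per call.
seconds = sequential_labeling_time(100, 3.0)
print(seconds, seconds / 60)  # 597.0 seconds, just under 10 minutes
```

Issuing calls for independent sibling subtrees concurrently would cut wall-clock time, but not the number of calls or their cost.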

Scalability represents another constraint. For very large datasets (e.g., collections exceeding 50,000 documents), the context window of current LLMs imposes strict limits on the amount of text that can be processed in a single pass. This can hinder efficiency and may necessitate partitioning or downsampling strategies.
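One possible partitioning strategy can be sketched as greedy packing of texts, in order, into batches that fit a context budget. Token counts are approximated here as characters divided by four, a rough heuristic for English; a real implementation would use the model’s own tokenizer, and the budget values in the example are hypothetical.

```python
def approx_tokens(text: str) -> int:
    # Rough heuristic (~4 characters per token for English); use a real tokenizer in practice.
    return max(1, len(text) // 4)


def pack_batches(texts: list[str], budget: int) -> list[list[str]]:
    """Greedily group texts, in order, so each batch's estimated tokens stay within budget.

    A single text larger than the budget still gets its own batch; it would need
    truncation or summarization before being sent to the model.
    """
    batches, current, used = [], [], 0
    for text in texts:
        cost = approx_tokens(text)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(text)
        used += cost
    if current:
        batches.append(current)
    return batches


texts = ["a" * 40, "b" * 40, "c" * 40]  # about 10 estimated tokens each
print([len(batch) for batch in pack_batches(texts, budget=25)])  # [2, 1]
```

Greedy packing preserves document order and is simple to audit; smarter bin-packing could reduce the number of batches but would reorder texts.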

Finally, the present study relies exclusively on OpenAI’s GPT-4o for topic labeling. Because VISTA’s labeling module is model-agnostic, future work will systematically compare GPT-4o with models from other providers and with open-source LLMs, including LLaMA, DeepSeek, and related architectures, to evaluate how differences in model families affect the semantic granularity, stability, and interpretability of generated topic labels. Such a comparison would clarify the extent to which our results depend on model-specific characteristics rather than on the general principles of the proposed framework. In addition, we did not conduct a seed-level variance analysis for the embeddings or summarizers, nor did we evaluate the effect of different LLM sampling parameters. These statistical robustness tests were left out of the present study because they would substantially expand the experimental scope and risk overfitting hyperparameters to the evaluation corpora; we consider them important directions for future work aimed at systematically assessing stochastic variability in LLM-assisted topic modelling.

In addition, several broader limitations should be acknowledged. First, reproducibility remains a challenge: as LLMs are inherently stochastic, small changes in parameters or random seeds may yield slightly different topic labels, complicating deterministic replication. Second, the present study evaluates VISTA exclusively on English-language datasets. While this follows standard practice in the topic-modelling literature and ensures comparability with established baselines, it also limits the ability to assess cross-lingual portability. Languages with richer morphology, different topical cue distributions, or non-nominal theme markers may require adaptations of the noun-only view or alternative feature selection strategies. Extending VISTA to multilingual and low-resource settings therefore represents an important direction for future research. Finally, practical considerations such as computational cost and the environmental footprint of repeated LLM queries may limit large-scale adoption, underscoring the importance of future work on efficiency and sustainability.

5. Conclusions

This study introduced VISTA, a multi-view, hierarchical, and interpretable framework for topic modeling. By combining complementary embeddings, MNN-based clustering with selective dimension analysis, pseudo-tweet representations, and LLM-driven labeling, VISTA addresses key limitations of probabilistic, embedding-based, and prompt-based approaches. Experiments across three diverse corpora demonstrated that the framework consistently achieves high semantic coherence, balanced topic diversity, and robust interpretability, with particularly strong results in C_UCI coherence. Beyond numerical performance, the case studies showed its ability to generate concise, domain-relevant labels that capture latent semantic intent more effectively than baseline models. While future work is needed to improve scalability and multilingual applicability, VISTA provides a practical and generalizable approach to discovering semantically rich topics across heterogeneous text collections.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/make7040162/s1.

- Supplementary Material S1. Evaluation Metrics. Formal definitions of the evaluation metrics used in this study (Topic Diversity and the other metrics reported in Section 3.4). While brief descriptions are given in the main text, full mathematical formulations are provided here for completeness and reproducibility.

- Supplementary Material S2. Full-text examples used in the qualitative analysis. This section provides the complete documents referenced in the case studies discussed in the main text. Each example is shown in full to allow readers to verify the context from which model outputs were derived.

- Supplementary Material S3. Datasets used to generate the Topic Tree visualizations (Figure 2 and Figure 3). The file contains source texts for the NASA Missions corpus and the Federal Assistance corpus. Each text is annotated with its topic node ID, corresponding to the labels shown in the visualizations. To ensure transparency and reproducibility, we intentionally provide each text in the exact format in which it was originally scraped, without additional normalization or editing. The datasets are drawn from diverse sources, including institutional web pages (e.g., NASA, HHS, NIH), official government reports, news portals, and social media platforms (e.g., Twitter/X, Facebook). This heterogeneity illustrates the robustness of the VISTA framework when applied to corpora that combine different styles, registers, and levels of formality.

Author Contributions

Conceptualization: T.G., D.B. and M.G.; Methodology: D.B. and M.G.; Software: T.G.; Validation: T.G. and D.B.; Formal Analysis: T.G. and D.B.; Investigation: T.G., D.B. and M.G.; Data Curation: T.G. and D.B.; Visualization: T.G. and M.G.; Supervision: D.B. and M.G.; Writing – original draft: T.G., D.B. and M.G.; Writing – review and editing: T.G., D.B. and M.G.; Funding acquisition: M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the project “Implementation of cutting-edge research and its application as part of the Scientific Center of Excellence for Quantum and Complex Systems, and Representations of Lie Algebras”, Grant No. PK.1.1.10.0004, co-financed by the European Union through the European Regional Development Fund—Competitiveness and Cohesion Programme 2021–2027.

Data Availability Statement

The datasets analyzed in Section 3.4 (Quantitative Results) are publicly available from the following sources: BBC News Archive dataset: https://www.kaggle.com/code/binnyshukla/bbc-news-topic-modeling (accessed on 23 October 2025); US Bills dataset (BillSum): https://www.kaggle.com/datasets/akornilo/billsum (accessed on 23 October 2025); U.S. Agency News and Twitter Feed dataset: https://github.com/domjanbaric/vista/tree/main/data (accessed on 23 October 2025). The full texts of the documents used in Section 3.5 (Qualitative Case Studies) are provided in Supplementary Materials S2 (Examples S1–S3). The datasets used to generate the Topic Tree visualizations in Section 3.6 (NASA Missions and Federal Assistance) are provided in Supplementary Materials S3. The VISTA implementation and usage instructions are available at https://github.com/domjanbaric/vista (accessed on 23 October 2025).

Conflicts of Interest

Author Domjan Barić was employed by the company Aras™ Digital Products. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Chang, J.; Boyd-Graber, J.L.; Gerrish, S.; Wang, C.; Blei, D.M. Reading Tea Leaves: How Humans Interpret Topic Models. Adv. Neural Inf. Process. Syst. 2009, 22, 288–296.

- Boyd-Graber, J.; Hu, Y.; Mimno, D. Applications of Topic Models. Found. Trends® Inf. Retr. 2017, 11, 143–296.

- Cao, J.; Xia, T.; Li, J.; Zhang, Y.; Tang, S. A density-based method for adaptive LDA model selection. Neurocomputing 2009, 72, 1775–1781.

- Srivastava, A.; Sutton, C. Autoencoding Variational Inference for Topic Models. arXiv 2017, arXiv:1703.01488.

- Sia, S.; Dalmia, A.; Mielke, S.J. Tired of Topic Models? Clusters of Pretrained Word Embeddings Make for Fast and Good Topics too! arXiv 2020, arXiv:2004.14914.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv 2018, arXiv:1802.05365.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv 2022, arXiv:2203.05794.

- Dieng, A.B.; Ruiz, F.J.R.; Blei, D.M. Topic modeling in embedding spaces. Trans. Assoc. Comput. Linguist. 2020, 8, 439–453.

- Meng, Y.; Huang, J.X.; Wang, G.Y.; Wang, Z.H.; Zhang, C.; Zhang, Y.; Han, J.W. Discriminative Topic Mining via Category-Name Guided Text Embedding. In Proceedings of the 29th World Wide Web Conference (WWW), Taipei, Taiwan, 20–24 April 2020; pp. 2121–2132.

- Thompson, L.; Mimno, D. Topic Modeling with Contextualized Word Representation Clusters. arXiv 2020, arXiv:2010.12626.

- Pham, C.M.; Hoyle, A.; Sun, S.; Resnik, P.; Iyyer, M. TopicGPT: A Prompt-based Topic Modeling Framework. arXiv 2024, arXiv:2311.01449.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

- Wang, Z.; Shang, J.; Zhong, R. Goal-Driven Explainable Clustering via Language Descriptions. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023.

- Xu, C.; Tao, D.; Xu, C. A Survey on Multi-view Learning. arXiv 2013, arXiv:1304.5634.

- Martin, F.; Johnson, M. More Efficient Topic Modelling Through a Noun Only Approach. In Proceedings of the Australasian Language Technology Association Workshop 2015, Parramatta, Australia, 8–9 December 2015; pp. 111–115.

- Zhao, W.X.; Jiang, J.; Weng, J.; He, J.; Lim, E.-P.; Yan, H.; Li, X. Comparing Twitter and Traditional Media Using Topic Models. In Advances in Information Retrieval, Proceedings of the 33rd European Conference on IR Research, ECIR 2011, Dublin, Ireland, 18–21 April 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 338–349.

- Haghverdi, L.; Lun, A.T.L.; Morgan, M.D.; Marioni, J.C. Batch effects in single-cell RNA-sequencing data are corrected by matching mutual nearest neighbors. Nat. Biotechnol. 2018, 36, 421–427.

- Dy, J.G.; Brodley, C.E. Feature Selection for Unsupervised Learning. J. Mach. Learn. Res. 2004, 5, 845–889.

- Aggarwal, C.C.; Hinneburg, A.; Keim, D.A. On the Surprising Behavior of Distance Metrics in High Dimensional Space. In Database Theory, Proceedings of the 8th International Conference, London, UK, 4–6 January 2001; Van den Bussche, J., Vianu, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 420–434.

- Niu, L.-Q.; Dai, X.-Y. Topic2Vec: Learning Distributed Representations of Topics. arXiv 2015, arXiv:1506.08422.

- Röder, M.; Both, A.; Hinneburg, A. Exploring the Space of Topic Coherence Measures. In Proceedings of the Eighth ACM International Conference on Web Search and Data Mining (WSDM), Shanghai, China, 2–6 February 2015; pp. 399–408.

- Mimno, D.; Wallach, H.; Talley, E.; Leenders, M.; McCallum, A. Optimizing Semantic Coherence in Topic Models. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, EMNLP 2011, Edinburgh, UK, 27–31 July 2011; pp. 262–272.

- Bouma, G.J. Normalized (pointwise) Mutual Information in Collocation Extraction. In Proceedings of the Biennial GSCL Conference 2009, Postdam, Germany, 30 September–2 October 2009. [Google Scholar]

- Newman, D.; Lau, J.H.; Grieser, K.; Baldwin, T. Automatic evaluation of topic coherence. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 2–4 June 2010; pp. 100–108. [Google Scholar]

- Deerwester, S.; Dumais, S.T.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing By Latent Semantic Analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).