Highlights

What are the main findings?

- We release RWSD, a large-scale UAV detection dataset of 14,592 real-world images covering diverse backgrounds, UAV sizes, and viewpoints to benchmark robust detectors.

- We present a lightweight feature enhancement model (LFEM) tailored for UAV detection, and extensive experimental results demonstrate the effectiveness and efficiency of our approach.

What is the implication of the main finding?

- RWSD provides the community with a challenging, openly available benchmark for evaluating UAV detectors under realistic, complex conditions.

- LFEM offers an accurate yet compact solution with the potential to be deployed on edge or mobile platforms for real-time aerial surveillance, narrowing the gap between research and field-ready anti-UAV systems.

Abstract

Real-time unmanned aerial vehicle (UAV) detection is a growing research field centered on advanced computer vision and deep learning algorithms. The rise of UAVs has sparked numerous concerns due to their potential for malicious use in illegal activities. To address these concerns, vision-based object detection approaches for UAVs have recently been developed. Nonetheless, UAV detection in real-world scenarios, such as images with diverse backgrounds and various perspectives, remains underexplored. To fill this gap, we present a new UAV detection dataset called the real-world scenarios dataset (RWSD). This dataset leverages real-world footage and is constructed under challenging conditions, including complex backgrounds, varying UAV sizes, different perspectives, and multiple UAV types. It aims to support the development of robust UAV detection algorithms that can perform well in diverse and realistic conditions. YOLO, a popular one-stage object detection approach, is widely employed for UAV detection across different environments due to its efficiency and simplicity. However, this series of detectors encounters challenges in real-world scenarios, such as excessive computation and suboptimal detection rates. In this study, we propose a lightweight feature enhancement model (LFEM) to address these limitations. Specifically, we base our model on YOLOv5, introducing the Ghost module to improve UAV detection with fewer floating-point operations (FLOPs). Additionally, we incorporate the SIMAM module to enhance feature representation, particularly for real-world scenarios. Extensive experiments on the RWSD, UAVDT, and DOTAv1.0 datasets demonstrate the effectiveness of our approach. Our proposed LFEM achieves 93.2% mAP50, outperforming baseline models while maintaining a lightweight profile. 
Comparative and ablation studies further confirm that our algorithm is a promising and efficient solution for practical UAV detection tasks.

1. Introduction

With advancements in industrial technology, unmanned aerial vehicles (UAVs) have become mainstream due to their compact size, affordability, and ease of operation [1]. UAVs have diverse applications, such as logistics [2], transportation [3], and surveillance [4]. However, their growing use raises concerns about security, privacy, and public safety. Drones can be exploited for malicious purposes, such as illegal transport and unauthorized surveillance, emphasizing the need for effective detection and identification technologies.

Currently, no fully reliable UAV detection system exists. Most available detection and warning technologies rely on radar [5], radio frequency (RF) [6], and acoustic sensors [7], but these methods have significant drawbacks, including high costs and susceptibility to noise. Additionally, their performance in detecting smaller UAVs is constrained by limited electromagnetic signal transmission [8]. Consequently, these algorithms are not widely applicable and are mainly restricted to specific environments, such as airports and other public areas.

Deep learning-based methods have recently gained significant attention in various areas [9,10,11,12,13] of computer vision, particularly in object detection, due to their powerful feature extraction capabilities. These advancements present promising opportunities for developing a high-performance anti-UAV detection system. Numerous general object detection models are currently available, including Faster-RCNN [12], RetinaNet [14], and YOLO [15]. Although two-stage detectors like Faster-RCNN achieve state-of-the-art accuracy, they require a large number of parameters and substantial computational resources, which greatly limit their real-time applicability. In contrast, single-stage networks, such as YOLO, demonstrate exceptional performance in object detection and significantly outperform traditional hand-crafted methods as well as two-stage neural networks in terms of efficiency and speed. Moreover, a primary trend is the deep integration of sampling strategies into end-to-end frameworks, superseding traditional, disjointed data augmentation to enable more adaptive feature learning and precise localization [16]. In parallel, the evolution of model architectures continues; while Transformers offer powerful global modeling, their prohibitive cost has spurred research into more efficient and task-specialized variants [11,12,13,14,15,16,17,18,19]. Addressing the challenge at its source, generative models have emerged as a state-of-the-art solution, synthesizing high-quality, diverse data to overcome the fundamental scarcity of training samples [20,21].

The YOLOv5 model [22] is the most widely recognized within the YOLO family for its robustness in practical applications. However, when applied directly to UAV detection tasks, such general-purpose methods often fall short. The YOLOv8 and YOLOv10 object detection algorithms [8,23] were subsequently introduced to enhance detection accuracy. Despite these advancements, effectively detecting small objects in real-world scenarios remains a significant challenge. Additionally, for mobile and embedded devices, the YOLOv5 model consumes substantial hardware resources and computational power, limiting the ability to run other algorithms concurrently. UAVs frequently blend into complex backgrounds with considerable noise and interference, which further complicates detection. To address these issues, several approaches, including modified YOLOv5 models that incorporate attention modules [24,25,26], have been proposed and have shown promising results.

Our primary objective is to enhance the performance and practicality of UAV detection by addressing critical challenges at both the data and methodological levels. To achieve this, our work makes two core advancements. First, at the data level, deep learning models typically require large datasets for robust performance, but existing datasets, such as AntiUAV [27] and MAV-VID [28], are limited in size and coverage, which restricts the training of high-performance models. To fully leverage existing detection methods for UAV detection at the data level and foster further advancements in this field, we introduce a new real-world scenarios dataset (RWSD), designed specifically for UAV detection. This dataset captures challenging conditions, including complex backgrounds, varying UAV sizes, diverse perspectives, and multiple UAV types, and is used to retrain multiple detection models. Second, at the methodological level, we propose a lightweight feature enhancement model (LFEM). By integrating the Ghost module into the YOLOv5 framework and introducing a dedicated feature enhancement block, our model achieves higher accuracy than the original YOLOv5 while simultaneously reducing its computational load, making it more suitable for real-world applications.

In summary, the main contributions of this work are as follows:

- (1) We introduce RWSD, a new, large-scale, and publicly available dataset for UAV detection. It is specifically designed to address real-world challenges, comprising 14,592 images across training, validation, and testing sets, and featuring diverse UAV types, sizes, and complex environmental conditions.

- (2) We present a lightweight feature enhancement model (LFEM) tailored for UAV detection. Our model achieves superior accuracy compared to the original YOLOv5 while significantly reducing computational costs. Extensive experimental results demonstrate the effectiveness and efficiency of our approach.

- (3) We benchmark state-of-the-art object detection methods on the proposed RWSD dataset. Additionally, we conduct a comprehensive investigation and analysis of the performance of various object detectors for UAV detection in real-world scenarios.

2. Related Work

This section reviews related work on UAV detection. Because our study centers on vision-based UAV detection, we focus on UAV datasets and object detection methods.

2.1. UAV Dataset

To achieve a robust model in computer vision tasks, the dataset quality is paramount. Consequently, various datasets tailored for UAV detection have been developed. Below, we review some significant related works in this area.

Object detection tasks often leverage widely available open-source datasets, such as PASCAL VOC [29] and ImageNet [30]. However, these datasets predominantly feature objects in isolation, lacking the contextual richness in which objects naturally occur. To address this limitation, the Microsoft COCO (Common Objects in Context) dataset [31] was introduced, comprising 330,000 images, 1.5 million object instances, 80 categories, pixel-level segmentation, and image captions. Despite their value for image classification, localization, and detection research, datasets like COCO, PASCAL VOC, and ImageNet offer limited content related to UAV imagery in diverse environments. This gap necessitated the creation of specialized datasets for advanced UAV detection systems.

The UAV dataset is an early drone detection dataset generated in simulated environments, widely used for academic competitions and related research [32]. The Det-Fly dataset [33], compiled by Zheng et al., consists of UAV-in-flight images captured by another UAV. This dataset incorporates various real-world conditions, including diverse backgrounds, viewing angles, object distances, flight altitudes, and lighting conditions. The Drone-vs-Bird Detection Challenge dataset [34] presents images with coexisting UAVs and birds, requiring the detector to differentiate between them accurately. The similarity in size, color, and shape between drones and birds poses a significant challenge for detection systems.

Additionally, the Quadcopter UAV [35] and Micro UAV [36] datasets focus on specific UAV types in complex environments, evaluating detection algorithms for quadcopter and micro-aircraft types. However, these datasets are limited to a single UAV type, reducing their applicability across diverse scenarios. The Anti-UAV dataset [27] includes visible and infrared dual-mode data, with videos captured in day and night settings. While offering a broad motion range, its activities are mostly concentrated in the central region with limited variance. The Complex Background Datasets [37] simulate challenging scenarios, such as intricate backgrounds and varying rainfall levels, providing performance benchmarks for adverse weather conditions.

In contrast, our dataset addresses these limitations by capturing data in diverse real-world scenarios under various environmental conditions, such as rain and day and night settings. The UAVs in our dataset are uniformly distributed across complex backgrounds both horizontally and vertically, enhancing the model’s robustness during training. Furthermore, it includes a wide variety of UAV types, such as fixed-wing aircraft and medium and miniature rotary-wing drones, making it more representative and suitable for real-world applications.

2.2. Object Detection Methodology

Object detection is a foundational task in computer vision and has been the subject of extensive research. Early methods primarily relied on traditional hand-crafted feature-based techniques, as outlined in [38]. However, with the advent of deep learning, convolutional neural networks (CNNs) have demonstrated remarkable improvements in object detection performance. Modern CNN-based detection frameworks are broadly classified into two-stage and one-stage approaches, distinguished by the inclusion or absence of a region proposal step.

Two-stage algorithms, known for their high accuracy, operate in two main phases: (1) generating candidate regions of interest and (2) refining these regions to produce final detections with bounding boxes. Notable examples include R-CNN [39], Fast R-CNN [40], and Faster R-CNN [12], introduced by Girshick and collaborators. In contrast, single-stage methods, such as SSD [41] and the YOLO series [8,15,23], are renowned for their efficiency and real-time performance. The YOLO (You Only Look Once) framework, initially introduced by Redmon et al. in 2016 [15], has undergone numerous advancements, resulting in improved versions that achieve higher detection precision. While some variants of YOLO emphasize model simplification and parameter reduction for deployment on mobile and embedded devices, others focus on boosting accuracy in complex environments [23,42]. DETR pioneered the use of the Transformer architecture in object detection, eliminating hand-designed components (e.g., anchor generation, NMS) to enable fully end-to-end training and inference [43]. Building on this, Deformable DETR [44] overcomes the original DETR’s limitations (slow convergence and poor small-object detection) by introducing a deformable attention module that focuses on sparse key sampling points, significantly accelerating convergence and improving computational efficiency. While Transformer-based detectors like DETR and Deformable DETR have achieved remarkable performance on general benchmarks, their large model size (e.g., 41M parameters for DETR) and high computational demand make them less suitable for resource-constrained applications. FRANCK [45] is a specialized framework for Source-Free Object Detection (SFOD) with DETR-based models, addressing cross-domain detection challenges when source data is unavailable.

To balance computational efficiency and detection performance, lightweight models have gained attention. For instance, MobileNetV1 [46] replaces standard convolutions with depth-wise convolutions (DW-Conv), where each input channel has its own convolution kernel. This significantly reduces model parameters and accelerates inference, setting the stage for subsequent lightweight models [47,48]. MobileNetV2 [49] further enhances feature reuse and fusion by introducing inverted residual blocks (IRBs). Similarly, ShuffleNet adopts group convolutions to replace 1 × 1 convolutions [50], achieving even lower computational costs than MobileNet. However, this method sacrifices some inter-group information exchange, making it less effective for small-object detection.

Recent studies have also explored the use of attention mechanisms or feature recalibration modules to refine feature maps. A notable example is the Squeeze-and-Excitation (SE) module, which captures global contextual information and models inter-channel relationships using fully connected layers. This process emphasizes the most critical channels, improving feature refinement. Building on this, advanced modules have incorporated global contexts via convolutional aggregators, integrated long-range dependencies, unified attention with normalization, or utilized feature style cues for more robust representations. However, many of these approaches rely on hand-crafted attention weight calculations, which are computationally intensive and thus less suitable for mobile and embedded platforms.

To address the aforementioned challenges, we first introduce the Real-World Scenarios Dataset (RWSD), specifically designed for UAV detection in complex conditions. These conditions include diverse backgrounds, varying UAV sizes, different viewing perspectives, and multiple UAV types. This dataset aims to support the development and evaluation of detection models capable of operating effectively in real-world environments. To further improve model performance and facilitate deployment on embedded devices, this paper presents a Lightweight Feature Enhancement Model (LFEM) tailored to tackle these challenges. Our model incorporates two key components into the YOLO network: the Ghost module and the SIMAM attention block. The Ghost module generates more feature maps using fewer parameters, significantly reducing floating-point operations (FLOPs) without sacrificing detection accuracy. The SIMAM feature enhancement mechanism is integrated into the neck module to enhance feature selection and extraction before the detection heads, which is critical for distinguishing relevant features.

3. Methods

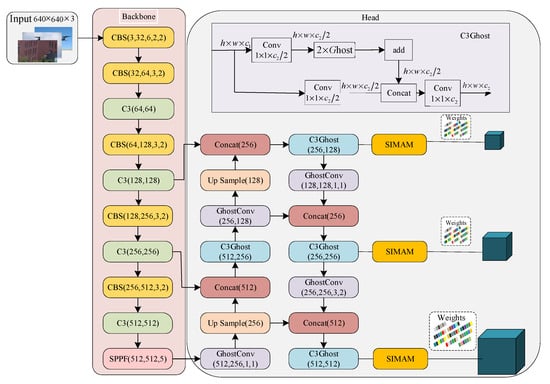

The overall architecture of the proposed Lightweight Feature Enhancement Model (LFEM) is illustrated in Figure 1. YOLOv5, a state-of-the-art and highly efficient end-to-end object detection model, is well-known for its exceptional accuracy and real-time capabilities. It comes in five variants of varying sizes, including YOLOv5s, YOLOv5m, and YOLOv5l, to meet diverse application requirements. For this study, we selected YOLOv5s as the base model, as it offers an optimal balance between model complexity and detection performance. The YOLOv5 framework comprises three primary components: Input, Backbone, and Head.

Specifically, the proposed dataset is used to train the network, where UAV RGB images are input to the detection backbone to extract feature maps. To enhance performance and reduce complexity, the standard convolution operations in the network are replaced with the Ghost convolution module. Additionally, the SIMAM module is integrated into the head component to enable efficient multi-level feature fusion. These fused and enhanced feature maps are then processed through three detection heads, which output predictions for confidence scores, object categories, and anchor boxes.

Figure 1.

Network architecture of the proposed method.

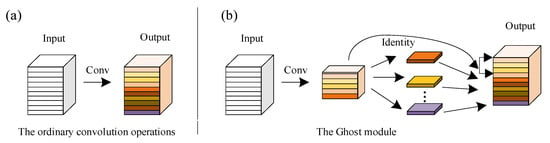

3.1. Ghost Lightweight Module

The number of parameters and FLOPs in the model are primarily influenced by convolution operations. To simplify the model, we replaced certain standard convolutions in the YOLOv5s network with Ghost modules [51]. Figure 2a,b highlight the differences between these two convolution techniques, and the detailed calculation process is as follows:

Figure 2.

The feature enhancement Ghost module. (a) The ordinary convolution operations. (b) The Ghost module.

Let the input and output feature maps be denoted as $X \in \mathbb{R}^{c \times h \times w}$ and $Y \in \mathbb{R}^{n \times h' \times w'}$, respectively. Here, $c$ and $n$ represent the number of input and output channels, $h$ and $h'$ denote the height of the input and output feature maps, and $w$ and $w'$ denote the width of the input and output feature maps. The kernel size of the convolution filters is represented as $k \times k$. In this convolution process, the required number of floating-point operations can be computed as $n \cdot h' \cdot w' \cdot c \cdot k \cdot k$, and this value typically exceeds the order of $10^5$ because $n$ and $c$ are typically quite large.

The Ghost lightweight module splits the convolutional layer into two segments, applying distinct calculation strategies to each. In the first segment, standard convolution is used, with a strictly controlled number of feature maps to keep computation manageable. In the second stage, additional feature maps are generated not by conventional convolution but through a simple “Linear Transformation”. Based on the analysis above, the total floating-point operations of the Ghost lightweight module are calculated to be $\frac{n}{s} \cdot h' \cdot w' \cdot c \cdot k \cdot k + (s-1) \cdot \frac{n}{s} \cdot h' \cdot w' \cdot d \cdot d$, where $s$ ($s \ll c$) is the number of transformation operations conducted at a low cost, and the kernel size of linear operations is denoted as $d \times d$. We quantified the Ghost module’s improvements by calculating the ratio of floating-point operations, demonstrating its theoretical speed-up over standard convolution.

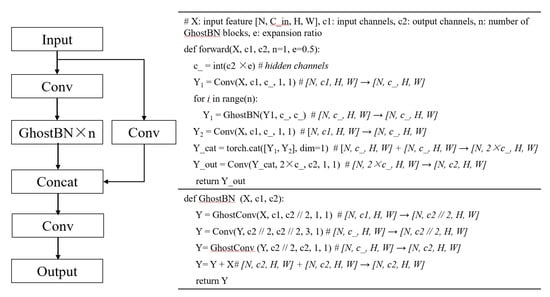

Using the formula above, the FLOPs of the Ghost module are approximately $s$ times lower than those of the ordinary convolution. Given its strong performance, we replaced standard convolutions in the neck network with GhostConv (purple in Figure 1) and C3Ghost (blue in Figure 1) modules as enhanced versions of the CBS and C3 modules, significantly reducing both computation and model complexity. Current machine learning libraries such as PyTorch 2.1.2 allow C3Ghost to be implemented in just a few lines, as shown in Figure 3.
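The FLOP comparison can be checked numerically. The sketch below is illustrative only: it assumes the notation of the Ghost module paper [51] (`c` input channels, `n` output channels, a `k` x `k` primary kernel, `s` feature maps per intrinsic map, and a `d` x `d` cheap linear kernel), and the layer sizes are hypothetical rather than taken from LFEM.

```python
def conv_flops(c, n, h_out, w_out, k):
    """Multiply-accumulate count of an ordinary k x k convolution."""
    return n * h_out * w_out * c * k * k


def ghost_flops(c, n, h_out, w_out, k, s, d):
    """Ghost module cost: a primary convolution producing n/s intrinsic maps,
    plus (s - 1) cheap d x d linear operations per intrinsic map."""
    if n % s != 0:
        raise ValueError("n must be divisible by s in this sketch")
    intrinsic = n // s
    primary = intrinsic * h_out * w_out * c * k * k
    cheap = (s - 1) * intrinsic * h_out * w_out * d * d
    return primary + cheap


# Hypothetical layer: 128 -> 256 channels, 40 x 40 output, 3 x 3 kernels.
standard = conv_flops(c=128, n=256, h_out=40, w_out=40, k=3)
ghost = ghost_flops(c=128, n=256, h_out=40, w_out=40, k=3, s=2, d=3)
speed_up = standard / ghost
print(f"standard: {standard:,}  ghost: {ghost:,}  ratio: {speed_up:.3f}")
```

With `k = d`, the ratio reduces to `s * c / (s + c - 1)`, which approaches `s` when `c` is much larger than `s`.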

Figure 3.

C3Ghost module and a PyTorch-like implementation of C3Ghost.

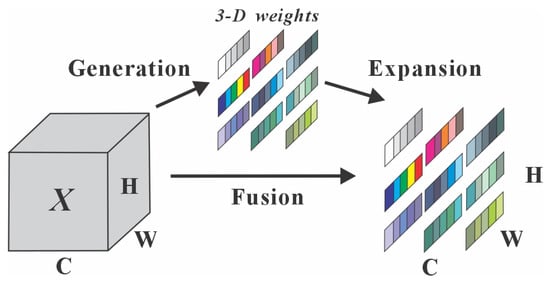

3.2. The Feature Enhancement Module

The attention module is commonly applied in deep learning to improve feature extraction and achieve high performance. However, most existing attention modules have two main limitations. Firstly, they generally enhance feature maps based on channel and spatial dimensions independently, making it challenging to learn attention weights from both dimensions simultaneously. Secondly, some attention modules rely on hyperparameters, requiring extensive expert knowledge to optimize performance. To address these issues, SIMAM [52], a parameter-free approach that uses 3D attention weights, was proposed. Figure 4 shows the feature-enhanced module SIMAM. As shown in Figure 4, the SIMAM module directly generates 3D weights from the features X and then expands the generated weights for feature enhancement. Here, the same color denotes that a single scalar is employed for each point on the feature map.

Figure 4.

The feature-enhanced module SIMAM.

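To efficiently generate 3D weights for an attention mechanism, we use the SIMAM method inspired by visual neuroscience. The core principle is that the most important neurons are those exhibiting strong spatial suppression, where an active neuron inhibits its neighbors. SIMAM identifies these high-priority neurons by measuring their linear separability from surrounding neurons; a high degree of separability indicates a strong suppression effect and thus, greater importance. Specifically, in this work, SIMAM is integrated into the proposed model to improve UAV detection performance. Inspired by neuroscience theory, SIMAM captures essential features using an energy function, which defines the energy for each neuron as follows:

$$e_t(w_t, b_t, \mathbf{y}, x_i) = \frac{1}{M-1}\sum_{i=1}^{M-1}\bigl(-1 - (w_t x_i + b_t)\bigr)^2 + \bigl(1 - (w_t t + b_t)\bigr)^2 + \lambda w_t^2 \tag{2}$$

Here, $t$ and $x_i$ represent the target neuron and other neurons within each channel of the input feature $X \in \mathbb{R}^{C \times H \times W}$, with $i$ denoting the spatial dimension index, and $\lambda$ is the regularization coefficient. $M = H \times W$ gives the number of neurons per channel. The transformation weights and bias, $w_t$ and $b_t$, are defined as follows:

$$w_t = -\frac{2(t-\mu_t)}{(t-\mu_t)^2 + 2\sigma_t^2 + 2\lambda}, \qquad b_t = -\frac{1}{2}(t+\mu_t)\,w_t \tag{3}$$

where the mean $\mu_t = \frac{1}{M-1}\sum_{i=1}^{M-1} x_i$ and variance $\sigma_t^2 = \frac{1}{M-1}\sum_{i=1}^{M-1}(x_i-\mu_t)^2$ are computed across all the neurons within a channel, excluding $t$. Based on the above operations, we can calculate the minimal energy as follows:

$$e_t^* = \frac{4(\hat{\sigma}^2+\lambda)}{(t-\hat{\mu})^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{4}$$

assuming that all pixels follow the same distribution, so that the mean $\hat{\mu}$ and variance $\hat{\sigma}^2$ are computed once over all neurons in a channel, reducing computation. According to Equation (4), the weight for each neuron is represented as $1/e_t^*$. As a result, the feature enhancement module is defined as follows:

$$\tilde{X} = \operatorname{sigmoid}\!\left(\frac{1}{E}\right) \odot X \tag{5}$$

Here, $E$ groups all $e_t^*$ across both channel and spatial dimensions. The sigmoid function is applied to prevent excessively large weight values.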
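As a minimal sketch of the weighting step, the pure-Python function below computes SIMAM-style weights for one channel. It assumes the closed-form minimal energy from the SimAM paper [52], with the channel mean and variance taken over all `M` neurons. The default `lam` and the toy activations are assumptions for illustration, not values from LFEM.

```python
import math


def simam_weights(values, lam=1e-4):
    """Return sigmoid(1 / e_t*) for every neuron t of a single channel.

    `values` is a flat list with the H*W activations of one channel.
    `lam` is the regularization coefficient (1e-4 is an assumed default).
    """
    m = len(values)
    mean = sum(values) / m
    var = sum((x - mean) ** 2 for x in values) / m
    weights = []
    for t in values:
        # Minimal energy: a low value means the neuron stands out from the rest.
        energy = 4.0 * (var + lam) / ((t - mean) ** 2 + 2.0 * var + 2.0 * lam)
        weights.append(1.0 / (1.0 + math.exp(-1.0 / energy)))
    return weights


def simam_enhance(values, lam=1e-4):
    """Scale each activation by its attention weight."""
    return [w * x for w, x in zip(simam_weights(values, lam), values)]


# Toy channel: one strongly activated neuron among three silent ones.
channel = [0.0, 0.0, 0.0, 4.0]
weights = simam_weights(channel)
enhanced = simam_enhance(channel)
```

In this toy channel the distinctive neuron receives a larger weight than its neighbors, matching the spatial-suppression intuition. No learnable parameters are involved.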

4. Proposed Dataset

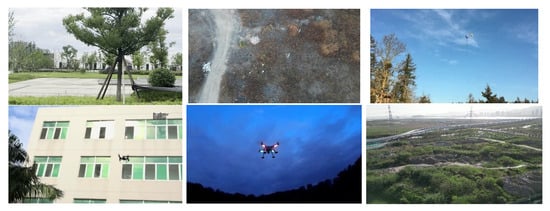

Datasets are a fundamental component of computer vision tasks, significantly contributing to the robustness and generalization capabilities of models. In this study, multiple datasets were reviewed and obtained from various publicly available or published sources. Following this review, a custom training dataset, called the Real-World Scenarios Dataset (RWSD) [53], was created by merging multiple existing datasets (e.g., QUADCOPTER, Drone Detection, and MIDGARD) and supplementing them with images captured under challenging conditions. For the acquisition of the RWSD dataset, a DJI Matrice M600 Pro UAV platform was used to collect experimental data, with the UAV flown at varying altitudes to capture the images. The data were collected from an aerial perspective, where a camera integrated into the UAV captured images of various ground objects and backgrounds. The proposed dataset comprises 14,592 images, including diverse backgrounds such as urban, indoor, outdoor, meadow, bright, and gloomy conditions, as shown in Figure 5. In addition, the UAVs in our dataset are dispersed with a relatively uniform horizontal and vertical spread, which enhances the robustness of models trained on this data, as seen in Figure 6. The resulting dataset includes multiple UAV types, such as fixed-wing, medium, and micro-rotor drones, as shown in Figure 7. Given that UAV detection methods in the literature have not been extensively investigated for multi-scale UAV detection, particularly under the influence of complex backgrounds like buildings, grass, and trees, we were motivated to consider various drone sizes from different viewing angles in the proposed dataset, as seen in Figure 8. To ensure the robustness of models trained on our dataset, we paid special attention to scale diversity. 
The RWSD dataset contains UAVs captured at various distances, resulting in a wide range of object scales from 30 × 30 to 600 × 600 pixels, which poses a significant challenge for multi-scale detection algorithms.

Figure 5.

Sample images with diverse backgrounds from RWSD dataset.

Figure 6.

Examples of UAV images in the dataset showing a relatively uniform distribution.

Figure 7.

Examples of multiple UAV types.

Figure 8.

Examples of varying UAV sizes.

5. Experiments and Results

This section primarily presents the experimental settings, benchmarks, implementation details, and results of this study. First, we evaluate the effectiveness of the improvements made to the proposed LFEM method by comparing its performance with other state-of-the-art models. Then, we conduct an ablation study to further analyze the method’s performance and validate the effectiveness of each component of the LFEM.

5.1. Implementation Details

To ensure a robust and reproducible evaluation, we adopted a meticulous data splitting strategy designed to prevent data leakage from video sequences. Specifically, all images originating from the same video sequence were kept together and exclusively assigned to one of the three subsets. These video sequences were then randomly allocated to the training, validation, and test sets at a 60%:20%:20% ratio. For the standalone images, a standard random image-level split was applied. This method guarantees that the model is tested on scenes it has never encountered during training, providing an unbiased and rigorous assessment of its generalization capabilities. The final dataset comprises 8931 images in the training set, 3047 images in the validation set, and 2614 images in the test set.
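The sequence-level split described above can be sketched as follows. This is not the authors' script: the mapping from image name to sequence id, the function name, and the fixed seed are hypothetical, and the ratios are applied to sequences, so the resulting image counts only approximate 60%:20%:20%.

```python
import random
from collections import defaultdict


def split_by_sequence(image_to_sequence, ratios=(0.6, 0.2, 0.2), seed=0):
    """Assign whole video sequences to train/val/test so no sequence is shared.

    `image_to_sequence` maps an image name to a sequence id (hypothetical format).
    """
    groups = defaultdict(list)
    for image, sequence in image_to_sequence.items():
        groups[sequence].append(image)

    sequences = sorted(groups)
    random.Random(seed).shuffle(sequences)

    n_train = round(ratios[0] * len(sequences))
    n_val = round(ratios[1] * len(sequences))
    buckets = {
        "train": sequences[:n_train],
        "val": sequences[n_train:n_train + n_val],
        "test": sequences[n_train + n_val:],
    }
    return {name: sorted(img for seq in seqs for img in groups[seq])
            for name, seqs in buckets.items()}


# Toy example: 10 sequences with 3 frames each.
frames = {f"seq{s:02d}_frame{f}.jpg": f"seq{s:02d}"
          for s in range(10) for f in range(3)}
splits = split_by_sequence(frames)
```

Because frames are grouped before shuffling, near-duplicate frames from one video can never appear on both sides of the train/test boundary.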

All experiments were conducted on the annotated RWSD dataset. The proposed method was implemented using PyTorch 2.1.2 in a deep learning environment and executed on Ubuntu 20.04 systems. The network was run on an NVIDIA RTX 4090 GPU with CUDA version 12.0. All models were trained from scratch with random initialization for 100 epochs, using a batch size of 64. The input image size was fixed at 640 × 640 pixels. The SGD optimizer with a learning rate of 0.001 was employed. We used mean average precision (mAP), precision, and recall as performance metrics to quantitatively evaluate the proposed model and other existing models, with higher values indicating better performance. To compare model complexity, we also recorded the parameter count and FLOPs for each model, where lower values for these metrics indicate a lighter model. Each experiment was repeated five times with different random seeds, with the results reported as the average performance and corresponding 95% confidence intervals.
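The text does not state how the 95% confidence intervals were computed. One common choice for five repeated runs is a Student's t interval, sketched below under that assumption. The scores are hypothetical placeholders, not results from this study.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t with 4 degrees of freedom (n = 5).
T_CRIT_DF4 = 2.776


def mean_with_ci95(scores):
    """Return (mean, half-width) of a 95% t-interval for exactly five runs."""
    if len(scores) != 5:
        raise ValueError("T_CRIT_DF4 is only valid for five runs")
    mean = statistics.mean(scores)
    half_width = T_CRIT_DF4 * statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, half_width


# Hypothetical mAP50 values from five seeds (placeholders for illustration).
runs = [93.0, 93.4, 93.1, 93.3, 93.2]
mean, half = mean_with_ci95(runs)
print(f"{mean:.1f} ± {half:.2f}")
```

The half-width is the value that would follow the ± symbol in a results table.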

5.2. Benchmarks

This study aims to investigate how real-world conditions impact the performance of UAV detection methods and evaluate the strengths and weaknesses of the selected methods. To this end, a custom test dataset, the RWSD (as described earlier), is curated to include challenging conditions such as varying UAV scales, diverse backgrounds, and multiple viewpoints. The object detection methods chosen for this evaluation include prominent one-stage detectors like YOLOv5, YOLOv8, and RetinaNet, as well as the widely used two-stage detector, Faster R-CNN. The following subsections provide an overview and description of each selected detector. A comparative summary of several object detection algorithm categories can be found in Table 1.

Faster R-CNN [12]: Faster R-CNN is a two-stage object detection network designed to address the speed limitations of the original R-CNN model. In its predecessor, Fast R-CNN, the ROI Pooling layer extracts feature vectors of uniform length from each Region of Interest (ROI) in an image, replacing R-CNN’s multi-stage pipeline with a single network so that convolutional layers are computed just once per image and shared across all ROIs. Faster R-CNN builds on this by generating the region proposals with a Region Proposal Network (RPN) that shares the same convolutional features. As a result, Faster R-CNN outperforms R-CNN, offering significant improvements in both speed and detection accuracy.

RetinaNet [14]: RetinaNet is a one-stage object detection network that incorporates a Feature Pyramid Network (FPN). The backbone network extracts features from the input images, which are then processed by the FPN to create a pyramid of multi-scale feature maps, enabling the detection of objects at various scales. Specifically designed to address challenges like imbalanced data and varying object sizes, RetinaNet employs the Focal Loss function in conjunction with the FPN. This unique architecture helps the model effectively focus on hard-to-detect objects, improving performance in these challenging scenarios.

YOLOv5 [15]: YOLOv5 consists of four main components: input, backbone network, neck network, and output. The core convolutional module, CBS, integrates batch normalization and a SiLU activation function to address the gradient vanishing problem. The backbone employs CSPn, which consists of two branches—one with a series of Bottleneck modules and the other with a CBS convolution block. This dual-branch structure enhances feature extraction by deepening the network. The input component optimizes data and adjusts anchor boxes, while the backbone network, utilizing two CSP1 and two CSP3 modules, extracts features. The neck network integrates multi-level features through successive down-sampling and up-sampling, while the output head performs bounding box regression and applies Non-Maximum Suppression (NMS) for accurate target detection.

Table 1.

Comparison and summary of different types of object detection algorithms. The source code is available at https://github.com/sunlight-622/a-lightweight-feature-enhancement-model-LFEM- (accessed on 15 October 2025).


| Model Name | Architectures | Key Features | Tasks |
|---|---|---|---|
| Faster-RCNN | CNN, Region Proposal Network, RoI Pooling, Classification & Bounding Box Regression | Two-stage detector, Anchor-based | Object Detection, Instance Segmentation |
| RetinaNet | ResNet + FPN, Classification Subnet, BBox Subnet, Anchor | One-stage detector, Focal Loss for class imbalance, Multi-scale feature fusion | Object Detection, Keypoint Estimation |
| YOLOv5 | CSPDarknet53, Path Aggregation Network, Decoupled Detection Head | Anchor-free detection, SWISH activation, PANet | Object Detection, Basic Instance Segmentation |
| YOLOv8 | CSPDarknet (C2f), Path Aggregation Network, Anchor-Free Decoupled Head | GANs, anchor-free detection | Object Detection, Instance Segmentation, Panoptic Segmentation, Keypoint Estimation |
| YOLOv10 | Enhanced CSPNet, Path Aggregation Network | Anchor-free detection, SWISH activation, PANet | Object Detection |
| LFEM | CSPDarknet53, Path Aggregation Network, Decoupled Detection Head, SIMAM, Ghost module | SIMAM feature enhancement; Ghost lightweight module | Object Detection |

YOLOv8 [8]: YOLOv8, developed by Ultralytics, is an advanced iteration of YOLOv5. Building upon the foundation of its predecessors, YOLOv8 introduces several upgrades, including an improved backbone network, an anchor-free split head, and refined loss functions. These enhancements increase the model’s performance, versatility, and efficiency, allowing it to better handle complex detection tasks and improve overall detection accuracy.

YOLOv10 [23]: YOLOv10, a next-generation real-time object detector developed by Tsinghua University, achieves a balance between accuracy and efficiency by pioneering a consistent dual assignment strategy for NMS-free, end-to-end detection. This approach, combined with a holistic efficiency- and accuracy-driven architecture that optimizes the backbone, neck, and head, allows the model to reduce parameters while maintaining superior performance.

5.3. Experimental Results

Comparative experiments: State-of-the-art methods, including Faster R-CNN, RetinaNet, YOLOv5, YOLOv8, and YOLOv10, were evaluated on the proposed RWSD UAV detection dataset, and Table 2 presents the results for each method. Performance is assessed using mean average precision (mAP), specifically mAP50 (AP at an IoU threshold of 0.5) and mAP50-95 (AP averaged over IoU thresholds from 0.5 to 0.95), which are widely used for benchmarking object detection models. All models were trained for 100 epochs to ensure a fair comparison.
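For readers less familiar with the metric, the following sketch shows one common way to compute average precision for a single class at an IoU threshold of 0.5. It uses all-point interpolation of the precision-recall curve, which is an assumption: the evaluation toolkit used in the paper may interpolate differently. The function names and the `(image_id, score, box)` detection layout are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Tuple[str, float, Box]],
                      gts: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """AP for one class: dets are (image_id, score, box), gts map image_id to boxes."""
    n_gt = sum(len(v) for v in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(b) for img, b in gts.items()}
    hits: List[bool] = []
    # Visit detections from most to least confident; each ground-truth box
    # can be matched at most once, so duplicates count as false positives.
    for img, _score, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        hit = best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]
        if hit:
            matched[img][best_j] = True
        hits.append(hit)
    # Cumulative precision and recall after each detection.
    recalls, precisions, true_pos = [], [], 0
    for k, hit in enumerate(hits, start=1):
        true_pos += int(hit)
        recalls.append(true_pos / n_gt)
        precisions.append(true_pos / k)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the enveloped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

mAP50 is the mean of this value over all classes, and mAP50-95 additionally averages it over IoU thresholds from 0.5 to 0.95 in steps of 0.05.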

Table 2.

An overview of the performance of various object detection models for UAV detection on the proposed RWSD dataset; 95% confidence intervals are reported after the ± symbol.

| Method | Param. (M) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|
| Faster R-CNN | 41.70 | 73.39 ± 0.82 | 46.49 ± 0.73 |
| RetinaNet | 36.70 | 76.20 ± 0.91 | 43.44 ± 1.11 |
| YOLOv5 | 8.10 | 90.62 ± 0.11 | 56.33 ± 0.28 |
| YOLOv8 | 11.10 | 84.96 ± 0.41 | 53.98 ± 0.32 |
| YOLOv10 | 7.02 | 88.70 ± 0.66 | 56.50 ± 0.50 |
| LFEM | 5.62 | 93.04 ± 0.54 | 58.95 ± 0.43 |

As shown in Table 2, the proposed LFEM method achieves the highest scores on both evaluation metrics (93.04% mAP50 and 58.95% mAP50-95) with the fewest parameters (5.62 M). The Faster R-CNN and RetinaNet models perform poorly on the challenging RWSD dataset, which includes various complex scenarios, and therefore fail to meet the detection requirements of real-world applications. Although YOLOv5, YOLOv8, and YOLOv10 detect UAVs more accurately than these two models, their performance still falls short of LFEM. These results indicate that a carefully designed CNN architecture can outperform these models at lower complexity, a critical advantage in real-time scenarios.

Evaluation on Public Benchmarks: To validate the effectiveness of our proposed LFEM model, we conducted experiments on two public datasets: DOTAv1.0 [54] for remote sensing scenarios and UAVDT [55] for drone-based object detection. We compared LFEM against three mainstream methods: YOLOv10, YOLOv8, and YOLOv5. Performance was evaluated using mAP50 (mean Average Precision at 50% Intersection over Union) and Recall. To ensure statistical robustness, each experiment was repeated five times with different random seeds, and the results are reported as the average performance with the corresponding 95% confidence intervals (denoted by ±), as detailed in Table 3. On the UAVDT dataset, LFEM achieved the highest mean scores, with an mAP50 of 54.79 ± 0.40% and a Recall of 52.64 ± 1.28%, although its mAP50 interval overlaps with that of YOLOv10 (53.99 ± 1.90%). On the DOTAv1.0 dataset, the performance gap between methods narrowed, but LFEM still maintained its lead with an mAP50 of 73.10 ± 0.16% and a Recall of 69.45 ± 0.40%. YOLOv8 and YOLOv10 delivered comparable performance on this dataset, with YOLOv10’s mAP50 slightly higher than YOLOv8’s, while YOLOv5 trailed both in mAP50. In summary, LFEM achieved the best mean detection performance on both datasets while using the fewest parameters. Its mAP50 confidence intervals are also the narrowest on both datasets, which suggests stable training across seeds, although its Recall intervals are not the narrowest. Collectively, these findings indicate that LFEM strikes a favorable balance between a lightweight model and high performance.

Table 3.

Performance of various object detection models on UAVDT and DOTAv1.0; 95% confidence intervals are reported after the ± symbol.

| Method | Param. (M) | UAVDT mAP50 (%) | UAVDT Recall (%) | DOTAv1.0 mAP50 (%) | DOTAv1.0 Recall (%) |
|---|---|---|---|---|---|
| YOLOv10 | 7.02 | 53.99 ± 1.90 | 50.68 ± 0.11 | 72.36 ± 0.27 | 67.63 ± 0.53 |
| YOLOv8 | 11.10 | 49.75 ± 1.33 | 48.75 ± 0.80 | 71.88 ± 0.21 | 69.19 ± 0.13 |
| YOLOv5 | 8.10 | 52.50 ± 0.77 | 48.41 ± 1.97 | 69.61 ± 0.37 | 67.61 ± 0.17 |
| LFEM | 5.62 | 54.79 ± 0.40 | 52.64 ± 1.28 | 73.10 ± 0.16 | 69.45 ± 0.40 |
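The paper does not state how the 95% confidence intervals over the five seeded runs were computed. One common choice, shown here purely as an assumption, is a two-sided Student-t interval around the sample mean. The function name `mean_ci95` and the per-seed scores below are hypothetical and are not the paper’s measurements.

```python
import math
import statistics
from typing import Sequence, Tuple

# Two-sided 95% Student-t critical values, keyed by degrees of freedom (n - 1).
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571, 9: 2.262}


def mean_ci95(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, half_width) so the interval reads mean ± half_width."""
    n = len(values)
    if n - 1 not in T_CRIT_95:
        raise ValueError(f"no tabulated critical value for n={n}")
    mean = statistics.fmean(values)
    # Standard error of the mean, using the sample standard deviation.
    sem = statistics.stdev(values) / math.sqrt(n)
    return mean, T_CRIT_95[n - 1] * sem


# Hypothetical mAP50 scores from five runs with different random seeds.
runs = [54.4, 54.6, 54.8, 55.0, 55.2]
mean, half_width = mean_ci95(runs)
print(f"{mean:.2f} ± {half_width:.2f}")  # -> 54.80 ± 0.39
```

With only five runs, the t critical value (2.776) is noticeably larger than the normal-approximation value of 1.96, so a normal-based interval would look tighter than the data justify.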

Ablation study: To assess the effectiveness of the lightweight Ghost module and the SIMAM feature enhancement module, we conducted an ablation study of the proposed LFEM algorithm. The Ghost modules replace the standard convolutions and C3 blocks in the neck to create a lightweight feature pyramid, which significantly reduces the computational load while keeping performance close to the baseline. As shown in Table 4, the number of parameters was reduced by about 20% (7.02 M to 5.62 M) and GFLOPs decreased by 15.7% (15.9 to 13.4). Compared to the baseline model, LFEM also reduces the inference time from 4.80 ms to 4.30 ms, a 10.4% reduction. Despite these reductions, the Ghost-only variant lost only about 0.3 points of mAP50 (90.62% to 90.33%) and about 0.2 points of recall (85.74% to 85.58%). The SIMAM module, placed at the end of the backbone and at the top of the detection head to refine high-level semantic features, further improved performance. It assigns 3D attention weights to the feature maps so that the network focuses on relevant target objects, which boosts detection performance, particularly in complex real-world scenarios. Notably, SIMAM introduces no additional parameters, so the overall parameter count is unchanged, although the inference time of the SIMAM-only variant rises from 4.80 ms to 5.20 ms. With SIMAM alone, precision and recall increased by 1.24 and 1.25 percentage points, respectively, and the full LFEM raises recall by 1.75 points over the baseline.
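To make the parameter-free attention concrete, the sketch below computes SIMAM-style weights for a single feature-map channel in plain Python, following the closed-form energy function described by Yang et al. It is not the LFEM code: the function names are hypothetical, the regularizer `lam = 1e-4` is an assumed default, and a real implementation would operate on batched tensors.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W feature-map channel


def simam_weights(x: Channel, lam: float = 1e-4) -> Channel:
    """Per-position attention weights for one channel; no learnable parameters."""
    flat = [v for row in x for v in row]
    n = len(flat) - 1
    if n < 1:
        raise ValueError("channel needs at least two positions")
    mu = sum(flat) / len(flat)
    var = sum((v - mu) ** 2 for v in flat) / n
    weights: Channel = []
    for row in x:
        w_row = []
        for v in row:
            # Inverse of the minimal energy: positions that deviate strongly
            # from the channel mean are treated as more informative.
            inv_energy = (v - mu) ** 2 / (4.0 * (var + lam)) + 0.5
            w_row.append(1.0 / (1.0 + math.exp(-inv_energy)))  # sigmoid
        weights.append(w_row)
    return weights


def simam_apply(x: Channel, lam: float = 1e-4) -> Channel:
    """Rescale each activation by its attention weight."""
    w = simam_weights(x, lam)
    return [[v * wv for v, wv in zip(row, w_row)] for row, w_row in zip(x, w)]


# A flat background with one salient activation in the centre.
channel = [[0.0, 0.0, 0.0],
           [0.0, 9.0, 0.0],
           [0.0, 0.0, 0.0]]
weights = simam_weights(channel)
print(round(weights[1][1], 3), round(weights[0][0], 3))
```

Because the weights depend only on channel statistics, adding the module leaves the parameter count unchanged, which matches the identical Param. (M) values of the baseline and +SIMAM rows in Table 4.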

Table 4.

Ablation results for the proposed LFEM method; 95% confidence intervals are reported after the ± symbol.

| Method | Param. (M) | GFLOPs | Inf. Time (ms) | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|---|---|---|---|
| YOLOv5 (Baseline) | 7.02 | 15.9 | 4.80 | 95.37 ± 0.70 | 85.74 ± 0.42 | 90.62 ± 0.11 | 56.33 ± 0.28 |
| +SIMAM | 7.02 | 15.9 | 5.20 | 96.61 ± 0.20 | 86.99 ± 0.60 | 92.01 ± 0.22 | 58.5 ± 0.37 |
| +Ghost | 5.62 | 13.4 | 4.10 | 95.07 ± 0.71 | 85.58 ± 0.55 | 90.33 ± 0.29 | 56.01 ± 0.31 |
| LFEM | 5.62 | 13.4 | 4.30 | 96.57 ± 0.41 | 87.49 ± 0.74 | 93.04 ± 0.54 | 58.95 ± 0.43 |
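The relative savings quoted in the ablation discussion can be rechecked from the baseline and LFEM rows of Table 4 with a few lines of arithmetic. The helper name `relative_reduction` and the dictionary layout are illustrative; the numbers are the rounded values printed in the table, so the results may differ from the authors’ figures in the last digit.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Percentage by which `new` is lower than `baseline`."""
    return 100.0 * (baseline - new) / baseline


# (baseline YOLOv5, LFEM) pairs copied from Table 4.
TABLE4 = {
    "parameters (M)": (7.02, 5.62),
    "GFLOPs": (15.9, 13.4),
    "inference time (ms)": (4.80, 4.30),
}

for name, (base, lfem) in TABLE4.items():
    print(f"{name}: {relative_reduction(base, lfem):.1f}% lower")
```

Running this gives reductions of roughly 20% in parameters, 15.7% in GFLOPs, and 10.4% in inference time.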

Visualization of the prediction results: In addition to the quantitative evaluation of the proposed LFEM method using precision, recall, and mAP metrics, sample detection results are shown in Figure 9. Figure 9a–h presents the detection results for UAVs under various challenging conditions, such as complex backgrounds, varying UAV sizes, different perspectives, and multiple UAV types. As seen in Figure 9a,b, the proposed method successfully detects small UAVs. Even in complex environments with backgrounds containing buildings, trees, and grass, as shown in Figure 9a,d, the detection algorithm maintains high accuracy. In Figure 9b, the method also effectively identifies UAVs that share similar colors and shapes with surrounding objects, such as stones in grassy areas. Furthermore, in Figure 9g, where there is significant overlap between the UAV and the background, nearly all UAVs are still detected successfully. These results collectively highlight the precision and robustness of the proposed algorithm.

Figure 9.

Examples of visualized detection results on the test data. (a) Detection of small UAVs in a complex background. (b) Identification of UAVs with colors and shapes similar to surrounding stones. (c,d) Detection in a complex environment with buildings, trees, and grass. (e,f) Detection of UAVs with scale diversity. (g,h) Robust UAV detection under varying weather conditions.

6. Discussion and Conclusions

In this work, we propose the Real-World Scenarios Dataset (RWSD) for UAV detection, which is divided into three subsets (training, validation, and testing), with all images manually annotated. This dataset contributes to the development of more robust UAV detection algorithms under realistic conditions. Furthermore, we developed a novel algorithm, LFEM, to improve UAV detection performance. The Ghost module reduces FLOPs without compromising detection performance, and the SIMAM feature enhancement module extracts key features from the feature map, improving feature representation for small objects. Notably, LFEM raises recall on the RWSD dataset from 85.74% for the YOLOv5 baseline to 87.49%, an increase of 1.75 percentage points (Table 4). Comparative experiments and visualization results also demonstrate that the proposed method outperforms other popular algorithms.

While our proposed LFEM model demonstrates promising performance, a critical analysis of its limitations is essential for a balanced understanding of its capabilities and for guiding future research. Firstly, the model’s performance is constrained by specific environmental and physical factors. Our error analysis indicates that the model struggles most with motion blur and heavy occlusion, which are prevalent when drones move at high speed. These conditions lead to a high rate of false negatives, as critical structural features become indistinct. Furthermore, although we did not test it explicitly, we anticipate that performance would degrade under adverse weather conditions such as heavy rain or fog, which are not well represented in our current dataset. Secondly, the evaluation is confined to an offline, server-based environment. Our experiments, conducted on an RTX 4090, do not fully capture the practical challenges of on-board deployment, and the model’s real-time performance (e.g., FPS) on resource-constrained, low-power hardware typical of drones (e.g., the NVIDIA Jetson series) remains to be validated. Thirdly, the model’s generalizability is limited by the data. The model was developed on a fixed split of the RWSD dataset and evaluated on only two additional public benchmarks (UAVDT and DOTAv1.0). While repeating each experiment with different random seeds provides a reasonable estimate of performance within these domains, the model’s ability to generalize to novel aerial imagery with different camera angles, altitudes, or target objects remains an open question. The reliance on a small set of fixed datasets limits the broader applicability of our findings.

Future research will focus on three primary directions aimed at enhancing the model’s robustness, reliability, and adaptability in real-world scenarios. Firstly, we will improve robustness against key environmental challenges, specifically motion blur and extreme weather. Motion blur, prevalent with fast-moving drones, obscures critical structural features and distorts the object’s shape, leading to missed detections and localization errors. We will integrate deblurring modules and leverage temporal information for dynamic detection. To address environmental limitations, we will augment our dataset using GANs and explore a multi-modal framework that fuses visual data with infrared or radar. Secondly, to strengthen the rigor of our methodology, we will adopt k-fold cross-validation to obtain a more reliable estimate of the model’s generalization ability and mitigate the biases of a fixed data split. Thirdly, we will bridge the gap between offline evaluation and real-world application by focusing on on-board integration. To validate the lightweight claim in a practical setting, a key next step is to deploy LFEM on representative drone hardware, such as an NVIDIA Jetson platform, and to measure and report real-time metrics, including frames per second (FPS), power consumption, and memory usage. This will provide a concrete demonstration of the model’s suitability for real-time on-board processing, and we will also discuss the engineering challenges associated with this integration. Beyond detection, we envision extending our framework to more complex UAV-centric tasks, such as multi-object detection, fine-grained classification for identifying specific UAV models, and temporal tracking to establish continuous flight paths, thereby broadening the practical applicability of our research.

Author Contributions

Conceptualization, Y.H. and D.B.; methodology, Y.H.; software, X.Y.; validation, D.L., Y.L. and X.L.; formal analysis, H.H.; investigation, H.H.; resources, X.L.; data curation, X.Y.; writing—original draft preparation, Y.H.; writing—review and editing, Y.H.; visualization, D.L.; supervision, D.B.; project administration, D.B. and Y.L.; funding acquisition, D.B., X.L. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Reward Funds for Research Project of Tianmushan Laboratory (grant number TK-2025-D-005), the Zhejiang Provincial Natural Science Foundation of China (grant number LZ24E050006), and the Research Start-up Funds of Hangzhou International Innovation Institute of Beihang University (grant number 2024KQ089).

Data Availability Statement

The RWSD dataset generated and analyzed during the current study is available in the Zenodo repository as the Real-World Scenarios Dataset (RWSD) at https://zenodo.org/records/17694811. The dataset is licensed under the Creative Commons Attribution 4.0 International License (CC BY 4.0).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lort, M.; Aguasca, A.; Lopez-Martinez, C.; Marín, T.M. Initial evaluation of SAR capabilities in UAV multicopter platforms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 127–140.

- Škrinjar, J.P.; Škorput, P.; Furdić, M. Application of Unmanned Aerial Vehicles in Logistic Processes New Technologies, Development and Application 4; Springer: Berlin/Heidelberg, Germany, 2019; pp. 359–366.

- Xu, Y.; Yu, G.; Wang, Y.; Wu, X.; Ma, Y. Car Detection from Low-Altitude UAV Imagery with the Faster R-CNN. J. Adv. Transp. 2017, 2017, 2823617.

- Cheng, H.; Lin, L.; Zheng, Z.; Guan, Y.; Liu, Z. An autonomous vision-based target tracking system for rotorcraft unmanned aerial vehicles. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: New York, NY, USA, 2017; pp. 1732–1738.

- Hoffmann, F.; Ritchie, M.; Fioranelli, F.; Charlish, A.; Griffiths, H. Micro-Doppler based detection and tracking of UAVs with multistatic radar. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; IEEE: New York, NY, USA, 2016; pp. 1–6.

- Abunada, A.H.; Osman, A.Y.; Khandakar, A.; Chowdhury, M.E.H.; Khattab, T.; Touati, F. Design and implementation of a RF based anti-drone system. In Proceedings of the 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT), Doha, Qatar, 2–5 February 2020; IEEE: New York, NY, USA, 2020; pp. 35–42.

- Chang, X.; Yang, C.; Wu, J.; Shi, X.; Shi, Z. A surveillance system for drone localization and tracking using acoustic arrays. In Proceedings of the 2018 IEEE 10th Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, 8–11 July 2018; IEEE: New York, NY, USA, 2018; pp. 573–577.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics Yolov8, 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 15 October 2024).

- Gao, G.; Yu, Y.; Yang, M.; Chang, H.; Huang, P.; Yue, D. Cross-resolution face recognition with pose variations via multilayer locality-constrained structural orthogonal procrustes regression. Inf. Sci. 2020, 506, 19–36.

- Gao, G.; Yu, Y.; Xie, J.; Yang, J.; Yang, M.; Zhang, J. Constructing multilayer locality-constrained matrix regression framework for noise robust face super-resolution. Pattern Recognit. 2021, 110, 107539.

- Gao, G.; Yu, Y.; Yang, J.; Qi, G.-J.; Yang, M. Hierarchical deep CNN feature set-based representation learning for robust cross-resolution face recognition. IEEE Trans. Circuits Syst. Video Technol. 2020, 32, 2550–2560.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8–10 and 15–16, 2016, Proceedings, Part II; 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 850–865.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Huang, Z.; Zhang, C.; Jin, M. Better Sampling, Towards Better End-to-End Small Object Detection. In International Conference on Computer Animation and Social Agents; Springer: Singapore, 2024; Volume 2374.

- Dai, T.; Wang, J.; Guo, H. FreqFormer: Frequency-aware transformer for lightweight image super-resolution. In Proceedings of the International Joint Conference on Artificial Intelligence, Jeju, Republic of Korea, 3–9 August 2024.

- Chun, L.; Wang, X.; Lv, F. Vit-comer: Vision transformer with convolutional multi-scale feature interaction for dense predictions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024.

- Zhang, X.; Zhang, Y.; Yu, F. HiT-SR: Hierarchical transformer for efficient image super-resolution. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024.

- Fan, L. Diffusion-based continuous feature representation for infrared small-dim target detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5003617.

- Khanna, S.; Liu, P.; Zhou, L. DiffusionSat: A Generative Foundation Model for Satellite Imagery. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Ultralytics. YOLOv5: YOLOv5 in PyTorch. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 15 October 2024).

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Munir, A.; Siddiqui, A.J.; Anwar, S.; El-Maleh, A.; Khan, A.H.; Rehman, A. Vision-based UAV Detection under Adverse Weather Conditions. Authorea Prepr. 2023.

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting tassels in RGB UAV imagery with improved YOLOv5 based on transfer learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

- Luo, X.; Shao, B.; Cai, Z.; Wang, Y. A lightweight YOLOv5-FFM model for occlusion pedestrian detection. arXiv 2024, arXiv:2408.06633.

- Jiang, N.; Wang, K.; Peng, X.; Yu, X.; Wang, Q.; Xing, J.; Li, G.; Zhao, J.; Guo, G.; Han, Z. Anti-UAV: A large multi-modal benchmark for UAV tracking. arXiv 2021, arXiv:2101.08466.

- Rodriguez-Ramos, A.; Rodriguez-Vazquez, J.; Sampedro, C.; Campoy, P. Adaptive inattentional framework for video object detection with reward-conditional training. IEEE Access 2020, 8, 124451–124466.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, 2014, Proceedings, Part V; 13; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Anon. Available online: https://www.kaggle.com/datasets/dasmehdixtr/drone-dataset-uav (accessed on 12 January 2025).

- Zheng, Y.; Chen, Z.; Lv, D.; Li, Z.; Lan, Z.; Zhao, S. Air-to-air visual detection of micro-uavs: An experimental evaluation of deep learning. IEEE Robot. Autom. Lett. 2021, 6, 1020–1027.

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Dimou, A.; Zarpalas, D.; Akyon, F.C.; Eryuksel, O.; Ozfuttu, K.A.; Altinuc, S.O. Drone-vs-bird detection challenge at IEEE AVSS2021. In Proceedings of the 17th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Washington, DC, USA, 16–19 November 2021; IEEE: New York, NY, USA, 2021; pp. 1–8.

- Pawełczyk, M.; Wojtyra, M. Real world object detection dataset for quadcopter unmanned aerial vehicle detection. IEEE Access 2020, 8, 174394–174409.

- Walter, V.; Vrba, M.; Saska, M. On training datasets for machine learning-based visual relative localization of micro-scale UAVs. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: New York, NY, USA, 2020; pp. 10674–10680.

- Munir, A.; Siddiqui, A.J.; Anwar, S. Investigation of UAV Detection in Images with Complex Backgrounds and Rainy Artifacts. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 221–230.

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; IEEE: New York, NY, USA, 2008; pp. 1–8.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I; 14; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Yao, H.; Zhao, S.; Lu, S.; Chen, H.; Li, Y.; Liu, G.; Xing, T.; Yan, C.; Tao, J.; Ding, G. Source-Free Object Detection with Detection Transformer. IEEE Trans. Image Process. 2025, 34, 5948–5963.

- Howard, A.G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q. Efficientnetv2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual Event, 18–24 July 2021; pp. 10096–10106.

- Zhang, J.; Li, X.; Li, J. Rethinking Mobile Block for Efficient Neural Models. arXiv 2023, arXiv:2301.01146.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Li, Y.; Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Yuan, L.; Liu, Z.; Zhang, L.; Vasconcelos, N. Micronet: Improving image recognition with extremely low flops. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 468–477.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual Event, 18–24 July 2021; pp. 11863–11874.

- Han. The Real-World Scenarios Dataset (RWSD) [Data Set]. 2025. Zenodo. Available online: https://zenodo.org/records/17694811 (accessed on 15 October 2025).

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Zhang, L. DOTA: A Large-scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: New York, NY, USA, 2018.

- Du, D.; Qi, Y.; Yu, H.G.; Yang, Y. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).