Highlights

What are the main findings?

- This study proposes a novel hierarchical visible-infrared fusion framework that integrates feature-level fusion with an Environment-Aware Dynamic Weighting (EADW) mechanism and decision-level fusion with D-S evidence theory for uncertainty management.

- The proposed framework significantly enhances detection capability and system robustness for Low-Slow-Small (LSS) UAV clusters in complex environments, particularly under challenging conditions such as nighttime and haze.

What are the implications of the main findings?

- The work provides an efficient and reliable technical solution for LSS-UAV cluster detection, which is critical for enhancing low-altitude security systems.

- The success of the EADW-DS fusion architecture offers a new paradigm for multi-modal information fusion, highlighting the importance of combining adaptive feature fusion with uncertainty-aware decision fusion.

Abstract

Detecting Low-Slow-Small Unmanned Aerial Vehicle (LSS-UAV) cluster targets is technically challenging because of weak target signals and the strong coupling between target features and complex environmental interference. To address these challenges, this paper proposes a deep learning-based visible-infrared multi-modal fusion detection method. Deep learning models separately identify morphological features in visible light images and thermal radiation features in infrared images. A hierarchical multi-modal fusion framework integrating feature-level and decision-level fusion is designed, incorporating an Environment-Aware Dynamic Weighting (EADW) mechanism and Dempster-Shafer (D-S) evidence theory. By exploiting the complementary advantages of feature-level and decision-level fusion, the framework enhances both the detection and recognition capability and the system robustness for LSS-UAV cluster targets in complex environments. Experimental results demonstrate that the proposed method achieves a detection accuracy of 93.5% for LSS-UAV clusters in complex urban environments, an average improvement of 18.7% over single-modal methods, while reducing the false alarm rate to 4.2%. Furthermore, the method demonstrates strong environmental adaptability, maintaining high performance under challenging conditions such as nighttime and haze. This method provides an efficient and reliable technical solution for LSS-UAV cluster target detection.

1. Introduction

In recent years, LSS-UAVs have seen increasingly widespread applications in various civil and military fields due to their small physical size (wingspan < 1 m), low flight speed (5–15 m/s), low flight altitude (<500 m), and cluster cooperative operation characteristics [1]. However, the rapid increase in LSS-UAV numbers has highlighted low-altitude security concerns, posing severe challenges to existing low-altitude detection systems [2,3,4]. Traditional single-modal detection methods exhibit significant limitations in complex environments: radar detection is susceptible to ground clutter and bird interference [5]; visible light detection is highly dependent on lighting conditions, with performance drastically declining at night or under low illumination [6]; infrared detection is significantly affected by ambient temperature, and urban heat island effects lead to increased false alarm rates [7]. Consequently, multi-modal fusion detection technology with strong environmental adaptability has become a research hotspot and inevitable trend in the field of LSS-UAV detection [8,9,10,11,12].

Current LSS-UAV detection research primarily focuses on two directions: optimization of single-modal detection technologies and multi-modal information fusion. In terms of single-modal optimization, researchers have improved radar detection performance by enhancing signal processing algorithms (e.g., wavelet denoising, pulse compression) [13], or employed deep learning models (e.g., YOLO series, Faster R-CNN) to enhance optical target detection capabilities [14,15].

Regarding multi-modal information fusion, existing fusion strategies can be categorized into three types: data-level fusion, feature-level fusion, and decision-level fusion. Common modal combinations include visible light and infrared (RGBT), vision and audio, and vision and radar. Reference [16] utilized generative adversarial network (GAN)-based image fusion techniques to enhance input image information and improved the YOLOv5 target detection model by incorporating an attention mechanism, significantly enhancing UAV detection performance. Reference [17] attempted to use satellite remote sensing maps to provide background environmental information as an auxiliary to radar signal detection, incorporating target background features into the detection decision to improve accuracy and robustness. Reference [18] proposed a multi-scale differential attention fusion detection network. By calculating image illumination information and target local contrast, it guided the multi-scale differential attention module to perform deep cross-fusion of intra-modal and inter-modal features in visible and infrared images, improving the detection and recognition accuracy of drone-to-ground targets under low-light conditions. Reference [19] proposed a fusion detection system integrating optical sensors, acoustic arrays, and radar. It used the MUSIC algorithm for acoustic array localization, enhanced system detection capability through radar technology, and combined short-wave infrared and visible light sensor images for accurate UAV positioning. Overall, multi-modal fusion methods have made significant progress in LSS-UAV detection. However, challenges remain, such as significant inter-modal information differences, multi-scale target variations, and complex, dynamic detection scenarios. 
Furthermore, many existing fusion strategies lack adaptive mechanisms to handle real-time environmental changes and effective uncertainty management to resolve decision conflicts, which are critical for robust performance in real-world applications [20].

Addressing the aforementioned issues, this paper proposes a deep learning-based visible-infrared multi-modal fusion detection framework. Its core contributions are: (1) dedicated, robust deep learning models (visible: improved ResNet-50 + DPAM; infrared: dual-branch ConvNeXt-Tiny + UNet) that extract discriminative single-modal features under complex interference; (2) a novel hierarchical fusion architecture combining feature-level and decision-level fusion, in which an Environment-Aware Dynamic Weighting (EADW) mechanism adaptively fuses multi-modal features according to the real-time environmental state, and D-S evidence theory fuses the feature-level decision with independent single-modal decisions to manage uncertainty and resolve conflicts. This design addresses the poor environmental adaptability, lack of information interaction, and sensitivity to decision conflicts of traditional fusion methods, significantly improving the accuracy and robustness of LSS-UAV cluster target detection in complex scenarios; and (3) comprehensive experimental validation and analysis on a public benchmark dataset, demonstrating superior performance and robustness compared with state-of-the-art methods.

2. Deep Learning-Based Multi-Modal Feature Extraction

2.1. Relevant Deep Learning Theories and Methods

Deep learning achieves efficient feature representation and decision inference for complex data by constructing multi-layer nonlinear neural network models. Its core idea is to learn network parameters by gradient-based optimization, with gradients computed by the backpropagation algorithm, so that increasingly abstract features are extracted layer by layer; this overcomes the reliance of traditional machine learning methods on hand-crafted feature engineering [21]. Typical deep learning network models include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformers. CNNs excel at processing image data: convolutional kernels extract local spatial features, and pooling operations reduce feature dimensionality and provide a degree of translation invariance. RNNs are suited to sequential data, capturing temporal dependencies through recurrent structures. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks improve long-term memory through gating mechanisms, making them suitable for analyzing continuous UAV motion trajectories. Transformers, based on the self-attention mechanism, remove the sequential recurrence of RNNs through global context modeling, enabling efficient association of multi-scale features and offering particular advantages in multi-modal data alignment [22].

2.2. Photoelectric Target Feature Extraction Based on Deep Learning

In complex environments, UAV cluster targets in visible light images often exhibit problems such as blurred texture features, complex scene interference, and dense target overlap, posing challenges for traditional image processing methods. Deep learning-based target detection algorithms overcome these challenges by introducing attention mechanisms and constructing multi-level feature extraction networks to extract multi-scale texture features of LSS targets, enabling efficient detection of UAV clusters in complex environments.

2.2.1. Deep Learning Network Design

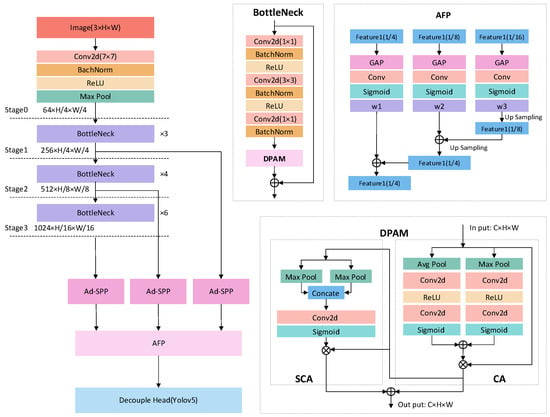

The network design employs an improved ResNet-50 (with Stage4 removed to retain higher-resolution feature maps and reduce computational cost) as the backbone, fused with an Adaptive Feature Pyramid (AFP) structure and a Dual-Path Attention Module (DPAM), as illustrated in Figure 1.

Figure 1.

Schematic diagram of the visible light multi-scale texture feature extraction network structure.

The proposed DPAM innovates upon existing dual-path attention mechanisms [23,24] by employing parallel, non-serial processing of channel and spatial attention. Unlike serial structures which may suppress features prematurely, DPAM preserves and enhances complementary information through cross-layer residual connections and a dynamic gating mechanism, which is particularly beneficial for capturing the weak and multi-scale features of LSS-UAVs.

The backbone network embeds the DPAM into Stages 1–3 of ResNet-50, preserving shallow texture details through cross-layer residual connections. The output feature map resolutions are 1/4, 1/8, and 1/16 of the input image, respectively. The feature fusion layer employs Adaptive Spatial Pyramid Pooling (Adaptive-SPP), in which the kernel size of the k-th pooling layer is adjusted dynamically according to the size of the input feature map and a learnable scale parameter.

Target detection is based on an enhanced YOLOv5 architecture, utilizing a Decoupled Head to separate classification and regression tasks, and introducing the GIoU loss function to optimize bounding box localization accuracy:

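$$\mathcal{L}_{GIoU} = 1 - \mathrm{IoU} + \frac{|C \setminus (B \cup B^{gt})|}{|C|}$$

where $C$ is the smallest enclosing box covering both the predicted box $B$ and the ground truth box $B^{gt}$, and $\mathrm{IoU}$ represents the Intersection over Union of $B$ and $B^{gt}$.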
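As an illustration of the loss above, the following sketch computes the GIoU loss for a single pair of axis-aligned boxes given as (x1, y1, x2, y2), using the standard definition GIoU = IoU − |C \ (B ∪ B^gt)|/|C| and loss = 1 − GIoU. The function name and box format are illustrative assumptions; batching and the decoupled head are omitted.

```python
def giou_loss(pred, gt):
    """GIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    area_p = (px2 - px1) * (py2 - py1)
    area_g = (gx2 - gx1) * (gy2 - gy1)
    # Intersection of the predicted and ground-truth boxes
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = area_p + area_g - inter
    iou = inter / union
    # Smallest enclosing box C covering both boxes
    area_c = (max(px2, gx2) - min(px1, gx1)) * (max(py2, gy2) - min(py1, gy1))
    giou = iou - (area_c - union) / area_c
    return 1.0 - giou


print(giou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes: 0.0
print(giou_loss((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes: loss above 1
```

Unlike a plain IoU loss, the enclosing-box term still yields a useful signal when the boxes do not overlap, which is why the loss exceeds 1 for disjoint boxes.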

To address illumination variations and background interference, and building on the serial [23] and parallel [24] attention designs discussed above, DPAM contains two sub-modules, Channel Attention (CA) and Spatial Attention (SA), which refine features along the channel and spatial dimensions, respectively, enhancing feature discriminability for small and dim targets in complex scenes.

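Channel Attention (CA): generates channel weights via Global Average Pooling (GAP) and Global Max Pooling (GMP):

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{GAP}(F)) + \mathrm{MLP}(\mathrm{GMP}(F))\big)$$

where $\sigma$ is the Sigmoid function, $F$ is the input feature map, and $\mathrm{MLP}$ denotes a shared multilayer perceptron applied to both pooled descriptors.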

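Spatial Attention (SA): based on the CA-weighted feature map $F' = M_c(F) \otimes F$, where $\otimes$ denotes element-wise multiplication, generates spatial weights by concatenating the results of average and max pooling (here applied along the channel axis) followed by convolution:

$$M_s(F') = \sigma\big(f^{7\times 7} * [\mathrm{GAP}(F');\ \mathrm{GMP}(F')]\big)$$

where $\sigma$ is the Sigmoid function, $F'$ is the CA-weighted feature map, $f^{7\times 7}$ denotes a convolutional layer with a 7 × 7 kernel, $[\cdot\,;\cdot]$ denotes concatenation, and $*$ represents the convolution operation.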
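The CA and SA computations above can be sketched in plain Python on a tiny C × H × W feature map stored as nested lists. Following the text as written, SA is computed from the CA-weighted map F'. The shared two-layer MLP with a ReLU hidden layer follows the common CBAM convention and is an assumption, as are the function names and toy weights; DPAM's cross-layer residual connections and dynamic gating are not modeled.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, w1, w2):
    """CA weights: sigmoid(MLP(GAP(F)) + MLP(GMP(F))) with an assumed shared MLP."""
    gap = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    gmp = [max(map(max, ch)) for ch in feat]

    def mlp(v):  # w1: r x C, w2: C x r, ReLU hidden layer (assumption)
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    return [sigmoid(a + b) for a, b in zip(mlp(gap), mlp(gmp))]


def spatial_attention(feat, kernel):
    """SA weights: sigmoid(conv([channel-avg; channel-max])) with zero padding."""
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    avg_map = [[sum(feat[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    max_map = [[max(feat[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps, k = (avg_map, max_map), len(kernel[0])
    pad = k // 2
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for m in range(2):  # kernel[m] is the 7 x 7 slice for the avg or max map
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < H and 0 <= jj < W:
                            s += kernel[m][di][dj] * maps[m][ii][jj]
            out[i][j] = sigmoid(s)
    return out


def ca_then_sa(feat, w1, w2, kernel):
    """Apply CA, then SA on the CA-weighted map F', returning the refined map."""
    mc = channel_attention(feat, w1, w2)
    f1 = [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(feat)]  # F'
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)] for ch in f1]
```

Because both attention maps pass through a Sigmoid, every weight lies in (0, 1), so the refinement rescales activations rather than amplifying them.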

Figure 1 illustrates the improved ResNet-50 backbone with DPAMs embedded in Stages 1–3, the Adaptive Feature Pyramid (AFP), and the decoupled detection head. Key components are labeled for clarity.

2.2.2. Dense Target Decoupling Strategy

To resolve bounding box overlap caused by the overlapping textures of clustered targets, a Density-Aware Non-Maximum Suppression (NMS) strategy is introduced, whose suppression threshold is adjusted dynamically according to the local target density. The adjusted threshold is computed from the local target density factor and a base overlap threshold.
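The paper's own density-to-threshold mapping is not reproduced here. As a stand-in, the sketch below uses the rule from Adaptive NMS (Liu et al., CVPR 2019): the effective threshold for a kept box M is max(N_t, d_M), where N_t is the base overlap threshold and d_M is the local density of M. Per-box densities are assumed to be given, and the function names are illustrative.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def density_aware_nms(boxes, scores, densities, base_thresh=0.5):
    """Greedy NMS; the threshold for each kept box M is max(base_thresh, densities[M]).

    The max() rule follows Adaptive NMS and is an assumed stand-in for the paper's rule.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        m = order.pop(0)  # highest-scoring remaining box
        keep.append(m)
        thresh = max(base_thresh, densities[m])
        # Suppress boxes whose overlap with M reaches the density-adjusted threshold
        order = [i for i in order if iou(boxes[m], boxes[i]) < thresh]
    return keep
```

In dense clusters the threshold rises, so strongly overlapping but distinct UAVs survive; in sparse regions it falls back to the base threshold and duplicates are suppressed.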

2.3. Infrared Target Feature Extraction Based on Deep Learning

UAV clusters in infrared images present challenges such as weak thermal radiation features, strong background thermal noise, and variable target scales, making it difficult for traditional infrared target detection algorithms to meet the requirements of diverse scenarios. Drawing on the small target detection approach in [25], this paper designs a dual-branch infrared small target detection architecture with a lightweight detection head after feature extraction, achieving end-to-end thermal target localization and recognition.

2.3.1. Deep Learning Network Design

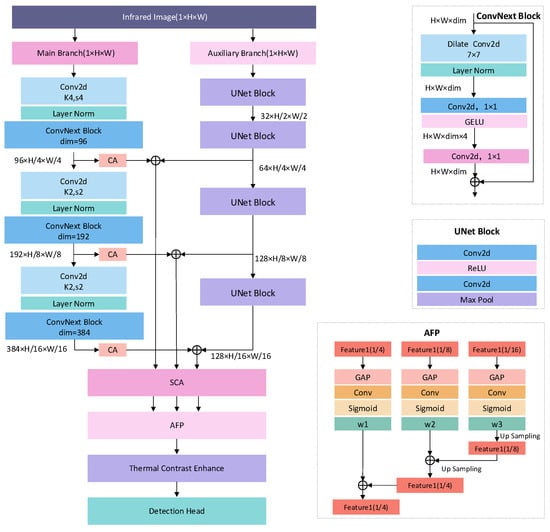

The network design adopts a dual-branch architecture, combining multi-scale thermal feature extraction with cross-modal attention fusion (fusion of features from different levels within the infrared image), as shown in Figure 2.

Figure 2.

Schematic diagram of the infrared thermal radiation feature extraction network structure.

The diagram shows the dual-branch architecture, comprising a main branch based on improved ConvNeXt-Tiny and an auxiliary branch with lightweight UNet, followed by feature fusion and a lightweight detection head.

The main branch is built upon an improved ConvNeXt-Tiny [26], incorporating dilated convolution to expand the receptive field. The output feature map resolutions range from 1/4 to 1/16 of the input image. The auxiliary branch employs a lightweight UNet structure to extract thermal gradient features, preserving low-level thermal distribution details. Its output feature map is aligned with the corresponding layers of the main branch. Features from the main branch and auxiliary branch are fused via Channel Attention (CA) and Spatial Cross-Attention (SCA), where SCA is computed as scaled dot-product attention:

$$\mathrm{SCA}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where the queries $Q$, keys $K$, and values $V$ are obtained by projecting the branch features with the learnable projection matrices $W_Q$, $W_K$, and $W_V$, and $\sqrt{d_k}$ is the dimension scaling factor.
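A minimal sketch of scaled dot-product cross-attention, in the spirit of the SCA described above, follows. As an assumption, queries come from main-branch tokens and keys/values from auxiliary-branch tokens, with each token a row of a small matrix; the matrices passed in stand for W_Q, W_K, W_V, and the function names are hypothetical. Flattening the spatial grid into tokens and the CA path are omitted.

```python
import math


def matmul(a, b):
    """Plain matrix product of nested lists a (n x p) and b (p x q)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    e = [math.exp(x - m) for x in v]
    s = sum(e)
    return [x / s for x in e]


def cross_attention(x_main, x_aux, w_q, w_k, w_v):
    """Softmax(Q K^T / sqrt(d_k)) V with Q from x_main and K, V from x_aux (assumed roles)."""
    q, k, v = matmul(x_main, w_q), matmul(x_aux, w_k), matmul(x_aux, w_v)
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        attn = softmax(scores)  # attention of this query over auxiliary tokens
        out.append([sum(a * vj[c] for a, vj in zip(attn, v)) for c in range(len(v[0]))])
    return out
```

Each output row is a convex combination of the projected auxiliary-branch values, so main-branch positions borrow thermal-gradient detail from the auxiliary positions they attend to.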

Target detection employs a lightweight thermal object detection head comprising three sub-modules: bounding box regression, object confidence, and object classification.

The head operates on the input feature tensor and is built from convolutional layers, each with its own weights and bias, together with ReLU activations, Sigmoid activations, and global average pooling; dedicated layers produce the target confidence and target classification outputs. A thermal contrast enhancement module is added before the detection head.

2.3.2. Multi-Scale Detection and Group Target Decoupling

An Adaptive Feature Pyramid (AFP) structure is adopted to optimize multi-scale feature representation by constructing a dynamic scale fusion path at the end of the backbone network. The scale weights of the feature maps from the different pyramid levels are generated by a Sigmoid-based gating mechanism.
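The gating step can be sketched as follows under stated assumptions: each level's feature map has already been resized to a common resolution and flattened, each level's gate logit is a learnable affine function of that level's mean activation, and the fused map is the gate-weighted sum of levels. Only the use of a Sigmoid gate comes from the text; the gate form and all names are hypothetical.

```python
import math


def afp_fuse(levels, gate_w, gate_b):
    """Fuse same-size, flattened feature maps with Sigmoid gates (assumed gate form)."""
    gates = []
    for feat, w, b in zip(levels, gate_w, gate_b):
        mean_act = sum(feat) / len(feat)  # global average pooling of this level
        gates.append(1.0 / (1.0 + math.exp(-(w * mean_act + b))))  # Sigmoid gate
    fused = [sum(g * feat[j] for g, feat in zip(gates, levels)) for j in range(len(levels[0]))]
    return fused, gates
```

Unlike a Softmax, independent Sigmoid gates do not force the level weights to sum to 1, so several scales can contribute strongly at once.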

To address dense overlap within cluster targets, the Density-Aware NMS strategy defined in Section 2.2.2 (Equation (5)) is also employed.

3. Multi-Modal Fusion Architecture Design

Existing multi-modal fusion strategies can be categorized into data-level, feature-level, and decision-level fusion. Data-level fusion directly combines raw data, preserving all original details. It achieves optimal fusion when the data are highly complementary and precisely aligned, but it suffers from spatiotemporal alignment issues, high computational complexity, and poor robustness to missing modalities. Feature-level fusion achieves information complementarity by extracting high-level features from each modality, balancing information retention and computational feasibility. However, it faces challenges in aligning heterogeneous features and may lose information during fusion. Decision-level fusion combines decisions made independently for each modality. It offers complete decoupling between modalities, strong fault tolerance, and the highest environmental adaptability, but it lacks information interaction between modalities and may produce conflicting decisions.

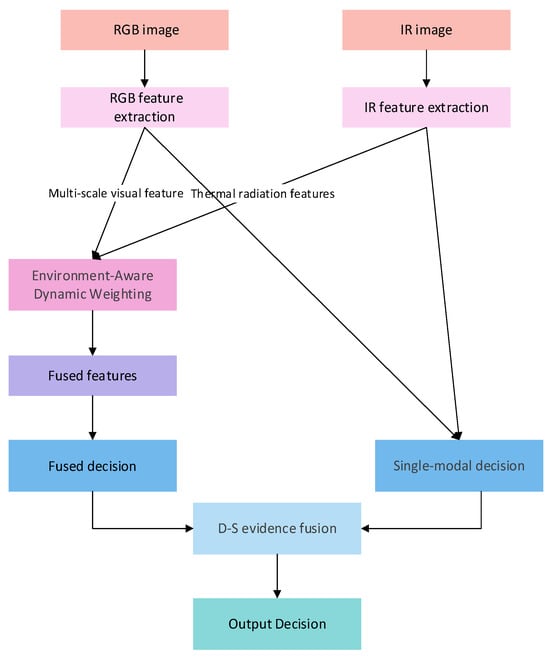

To address these issues, this paper proposes a hierarchical fusion architecture combining feature-level and decision-level fusion (as shown in Figure 3). This architecture first enhances feature discriminability at the feature-level fusion layer using an Environment-Aware Dynamic Weighting (EADW) mechanism. Subsequently, at the decision-level fusion layer, it employs D-S evidence theory to fuse the single-modal decisions with the feature-fusion layer decision. This effectively resolves the problems of missing information interaction and decision conflicts, enhancing the overall system robustness.

Figure 3.

Hierarchical feature-level + decision-level fusion architecture.

The diagram illustrates the overall pipeline: visible and infrared images are processed by their respective feature extraction networks, followed by the EADW-based feature fusion layer, and finally the D-S evidence theory-based decision fusion layer.

3.1. Feature Fusion Layer Design

Recent research [27] indicates that fusion mechanisms based on dynamic environment awareness can significantly enhance detection robustness in complex scenes. To address the failure of traditional weighted averaging methods during abrupt environmental changes, this paper proposes an Environment-Aware Dynamic Weighting (EADW) fusion mechanism. Its core idea is to adaptively adjust the contribution weight of each modal feature in the fusion based on real-time environmental states. This mechanism comprises three key components: environment perception, dynamic weight generation, and feature alignment and fusion.

3.1.1. Dynamic Environment Awareness

An environmental awareness vector is constructed from three factors: illumination, weather, and time. These factors are estimated in real time from onboard light sensors, public weather API data (for visibility and humidity), and system clock information. The illumination factor characterizes the impact of lighting on the visible light sensor [22]. It is computed from the current illuminance (in lux) relative to two reference levels: midday sunlight (e.g., 100,000 lux) and nighttime moonlight (e.g., 0.1 lux).

The weather impact factors characterize atmospheric attenuation under different weather conditions (clear, rainy, foggy). They are calculated from sensor measurements of rainfall intensity (mm/h), visibility (m), and relative humidity (%) using a learnable weight matrix. The time impact factor characterizes the influence of the time of day on background noise for the infrared sensor and is computed from the local time (in hours).
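The exact expression for the illumination factor is not given above. One plausible form, assumed here, normalizes the base-10 logarithm of the illuminance between the two reference levels (0.1 lux and 100,000 lux) and clips the result to [0, 1], so that the factor is 0 under moonlight and 1 under midday sun. The log scale is a design assumption motivated by the six orders of magnitude between the references; the function name is hypothetical.

```python
import math

L_NIGHT = 0.1       # reference illuminance of nighttime moonlight (lux)
L_DAY = 100_000.0   # reference illuminance of midday sunlight (lux)


def illumination_factor(lux):
    """Log-normalized illumination factor in [0, 1] (assumed functional form)."""
    lux = max(lux, 1e-6)  # guard against log10(0)
    f = (math.log10(lux) - math.log10(L_NIGHT)) / (math.log10(L_DAY) - math.log10(L_NIGHT))
    return min(1.0, max(0.0, f))  # clip values outside the reference range


print(illumination_factor(100.0))  # overcast-dusk level, midway on the log scale
```

A linear scale would compress everything below roughly 10,000 lux toward 0, whereas the log scale keeps dusk and urban night lighting distinguishable.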

3.1.2. Dynamic Weight Generation

For each modality $m$, an input vector $\mathbf{x}_m$ is constructed by combining the environmental awareness vector with $\bar{c}_m$, the mean detection confidence of the current frame output by the corresponding single-modal detection network. A two-layer fully connected neural network (MLP) with learnable weight matrices $\mathbf{W}_1$, $\mathbf{W}_2$ and bias vectors $\mathbf{b}_1$, $\mathbf{b}_2$ maps $\mathbf{x}_m$ to a weight score $s_m$. The dynamic fusion weight for modality $m$ is then obtained by Softmax normalization over all modalities:

$$w_m = \frac{\exp(s_m)}{\sum_{j} \exp(s_j)}$$

Because of the Softmax normalization, the dynamic weight $w_m$ computed by Equation (15) lies between 0 and 1 for each modality, and the weights of all modalities sum to 1.
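A sketch of the weight generation above: each modality's input vector concatenates the environmental awareness vector with that modality's mean confidence, an MLP shared across modalities produces a scalar score, and a Softmax across modalities yields the weights. The sharing of the MLP, the ReLU hidden activation, the layer sizes, the toy parameters, and the function names are assumptions.

```python
import math


def mlp_score(x, W1, b1, W2, b2):
    """Two-layer MLP returning a scalar score; the ReLU hidden layer is an assumption."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return sum(w * h for w, h in zip(W2, hidden)) + b2


def dynamic_weights(env, confidences, params):
    """Softmax-normalized fusion weights, one per modality."""
    scores = [mlp_score(env + [c], *params) for c in confidences]  # x_m = [env, c_m]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


env = [0.2, 0.5, 0.8]  # illustrative illumination, weather, time factors
params = ([[0.5, -0.2, 0.1, 1.0], [0.3, 0.3, -0.4, 0.5]], [0.0, 0.1], [1.0, 0.5], 0.0)
print(dynamic_weights(env, [0.9, 0.4], params))  # [visible, infrared]
```

Feeding the same environment vector to every modality means the weights shift only through each modality's confidence and through how the learned MLP combines confidence with environmental conditions.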

3.1.3. Feature Alignment and Fusion

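To avoid dimension mismatch, each modality’s feature $F_m$ is first projected (linear transformation) into a common space:

$$\tilde{F}_m = \mathbf{P}_m F_m + \mathbf{b}^{p}_m, \qquad \mathbf{P}_m \in \mathbb{R}^{d \times d_m}$$

where $\mathbf{P}_m$ is a projection matrix, $\mathbf{b}^{p}_m$ is a bias vector, $d_m$ is the original feature dimension of modality $m$, and $d$ is the target fusion feature dimension (set to 512 in our experiments). The fused feature output is then

$$F_{\mathrm{fused}} = \sum_{m} w_m \tilde{F}_m$$

where $w_m$ is the dynamic fusion weight of modality $m$.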
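The alignment-and-fusion step can be sketched as a per-modality affine projection into the shared d-dimensional space followed by a weighted sum with the dynamic weights. Tiny dimensions replace d = 512 for readability; the toy matrices and function names are hypothetical.

```python
def project(P, bias, f):
    """Affine projection P @ f + bias, with P of shape d x d_m."""
    return [sum(p * x for p, x in zip(row, f)) + b for row, b in zip(P, bias)]


def fuse(features, projections, biases, weights):
    """Weighted sum of projected modality features (weights assumed to sum to 1)."""
    projected = [project(P, b, f) for P, b, f in zip(projections, biases, features)]
    d = len(projected[0])
    return [sum(w * z[k] for w, z in zip(weights, projected)) for k in range(d)]


f_vis = [1.0, 0.0, 2.0]             # visible feature, d_m = 3
P_vis = [[1, 0, 0], [0, 0, 1]]      # 2 x 3 projection
f_ir = [4.0, 6.0]                   # infrared feature, d_m = 2
P_ir = [[1, 0], [0, 1]]             # 2 x 2 projection
print(fuse([f_vis, f_ir], [P_vis, P_ir], [[0.0, 0.0], [0.0, 0.0]], [0.25, 0.75]))
```

Projecting before weighting is what allows modalities with different native dimensions to be summed element by element.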

3.2. Decision Fusion Layer Design

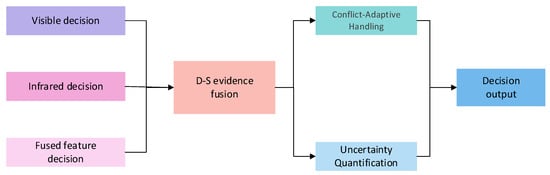

To fully leverage the global information complementarity advantage of the feature fusion decision and the local reliability advantage of single-modal decisions, and to effectively quantify uncertainty and resolve decision conflicts, this paper proposes a complementary fusion strategy combining the feature fusion decision and independent single-modal decisions. D-S evidence theory is introduced for the final decision fusion [28], as illustrated in Figure 4.

Figure 4.

Schematic diagram of the decision fusion process.

The diagram details the D-S evidence theory based fusion process: BPA construction for fusion decision and single-modal decisions, evidence combination, conflict-adaptive handling, and final decision generation.

3.2.1. Evidence Space Modeling

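D-S evidence theory, as an uncertainty reasoning framework, has been shown in recent research to handle conflicting decisions effectively [27]. Define the frame of discernment $\Theta = \{T, N\}$, where $T$ denotes “Target Present” and $N$ denotes “Target Absent”. The power set is $2^{\Theta} = \{\varnothing, \{T\}, \{N\}, \Theta\}$. Based on the decision input sources, define a three-dimensional evidence space $\mathcal{E} = \{E_{\mathrm{fus}}, E_{\mathrm{vis}}, E_{\mathrm{ir}}\}$, where each evidence source $E_i$ corresponds to a Basic Probability Assignment (BPA) function $m_i: 2^{\Theta} \to [0, 1]$ satisfying:

$$m_i(\varnothing) = 0, \qquad \sum_{A \subseteq \Theta} m_i(A) = 1$$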

3.2.2. Basic Probability Assignment (BPA) Construction

Differentiated BPA generation strategies are employed for the two types of inputs: the joint feature fusion decision and the independent single-modal (visible and infrared) decisions.

The BPA for the fusion decision is constructed using a fusion decision authority coefficient, set to 0.9 (higher than the single-modal coefficients), and a decision confidence threshold, set to 0.6 to filter out low-quality decisions.

The BPA for a single-modal decision is constructed with a Sigmoid function that maps the detection probability into (0, 1), a steepness parameter controlling the slope of the confidence curve (set to 5), a threshold offset compensating for sensor bias (set to 0.5 for visible and 0.4 for infrared), and an inherent uncertainty factor quantifying sensor reliability (set to 0.3 for visible and 0.2 for infrared).
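The paragraphs above list the BPA parameters but not the full expressions. The sketch below assumes one common construction: a single-modal decision assigns its inherent uncertainty u to Θ and splits the remaining mass between {T} and {N} using σ(k(p − θ)); the fusion decision scales its probabilities by the authority coefficient α = 0.9 and, when its confidence max(p, 1 − p) falls below τ = 0.6, is treated as uninformative by assigning all mass to Θ. These forms and the function names are assumptions, not the paper's equations.

```python
import math

T, N = frozenset({"T"}), frozenset({"N"})  # {Target Present}, {Target Absent}
THETA = T | N                               # frame of discernment


def single_modal_bpa(p, k=5.0, theta=0.5, u=0.3):
    """Assumed form: m(Θ) = u; the rest is split by sigmoid(k * (p - theta))."""
    s = 1.0 / (1.0 + math.exp(-k * (p - theta)))
    return {T: (1.0 - u) * s, N: (1.0 - u) * (1.0 - s), THETA: u}


def fusion_bpa(p, alpha=0.9, tau=0.6):
    """Assumed form: low-confidence fusion decisions put all mass on Θ."""
    if max(p, 1.0 - p) < tau:
        return {T: 0.0, N: 0.0, THETA: 1.0}
    return {T: alpha * p, N: alpha * (1.0 - p), THETA: 1.0 - alpha}


vis = single_modal_bpa(0.8, theta=0.5, u=0.3)  # visible-light parameters
ir = single_modal_bpa(0.8, theta=0.4, u=0.2)   # infrared parameters
fus = fusion_bpa(0.85)
```

Keeping an explicit mass on Θ lets each source express "don't know" instead of being forced to split all belief between the two hypotheses.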

3.2.3. D-S Evidence Combination Rule

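The constructed three-dimensional evidence space is combined using Dempster’s rule:

$$m(A) = \frac{1}{1-K}\sum_{B \cap C \cap D = A} m_{\mathrm{fus}}(B)\, m_{\mathrm{vis}}(C)\, m_{\mathrm{ir}}(D), \qquad A \neq \varnothing$$

where $A$ denotes the target proposition and $K$ is the conflict factor, i.e., the total mass of the combinations whose intersection is empty, which characterizes the degree of conflict among the pieces of evidence. A higher value of $K$ indicates more severe contradiction in the evidence. It is calculated as

$$K = \sum_{B \cap C \cap D = \varnothing} m_{\mathrm{fus}}(B)\, m_{\mathrm{vis}}(C)\, m_{\mathrm{ir}}(D)$$

Typically, small, intermediate, and large values of $K$ indicate normal status, moderate conflict, and severe conflict, respectively.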

The combination process enumerates all possible assignments across the evidence space (24 cases). A consistency check is then performed: the product of the masses of a combination is assigned to proposition $A$ only when the intersection of the focal elements chosen from all evidence sources equals $A$ (i.e., is non-empty), indicating that the pieces of evidence support a common recognition outcome. Combinations whose intersection is empty contribute to the conflict factor $K$. Normalizing by $1-K$ redistributes the conflicting mass proportionally over the non-conflicting propositions.
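Dempster's rule over the frame {T, N} can be sketched by enumerating one focal element per source, intersecting them, and accumulating the mass products; products with an empty intersection form the conflict factor K, and the remaining masses are renormalized by 1 − K. Focal elements are represented as frozensets; the function name and example masses are illustrative.

```python
from itertools import product

T = frozenset({"T"})   # target present
N = frozenset({"N"})   # target absent
THETA = T | N          # frame of discernment


def dempster_combine(bpas):
    """Combine BPAs (dicts: frozenset -> mass) with Dempster's rule; returns (m, K)."""
    combined, conflict = {}, 0.0
    for focal in product(*(b.items() for b in bpas)):  # one focal element per source
        inter, mass = THETA, 1.0
        for subset, m in focal:
            inter &= subset
            mass *= m
        if inter:
            combined[inter] = combined.get(inter, 0.0) + mass
        else:
            conflict += mass  # empty intersection: contributes to K
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {A: m / (1.0 - conflict) for A, m in combined.items()}, conflict


m_fus = {T: 0.6, N: 0.1, THETA: 0.3}
m_vis = {T: 0.5, N: 0.2, THETA: 0.3}
m_ir = {T: 0.7, N: 0.1, THETA: 0.2}
m, K = dempster_combine([m_fus, m_vis, m_ir])
```

A vacuous source (all mass on Θ) leaves the other evidence unchanged, which is a quick sanity check for any implementation of the rule.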

3.2.4. Conflict-Adaptive Handling

Referencing Murphy’s method for combining conflicting evidence [29], a conflict-adaptive handling mechanism is activated when the system detects moderate or severe conflict. First, the reliability of each evidence source is assessed to obtain a dynamic weighting factor, computed from a quality metric of the evidence (e.g., historical accuracy), the source’s inherent uncertainty factor, and a tuning factor (empirically set to 0.9). If a single-modal decision conflicts strongly with the others, the weight of the feature fusion decision is increased (×1.2); if the feature fusion decision itself has high uncertainty, its weight is decreased (×0.8). The evidence is then combined with a weighted combination rule using these adjusted weights.
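The conflict-adaptive step can be sketched as follows, assuming the reliability weights are already available: the feature-fusion weight is scaled by 1.2 or 0.8 as described above, the weights are renormalized, the BPAs are averaged with these weights, and the averaged BPA is combined with itself n − 1 times by Dempster's rule, as in Murphy's averaging approach. The renormalization, the averaging-then-combining scheme, and all names are assumptions; the paper's exact weighting formula and weighted rule are not reproduced.

```python
T, N, THETA = frozenset({"T"}), frozenset({"N"}), frozenset({"T", "N"})


def combine_two(m1, m2):
    """Dempster's rule for two BPAs (dicts: frozenset -> mass)."""
    out, conflict = {}, 0.0
    for a, x in m1.items():
        for b, y in m2.items():
            inter = a & b
            if inter:
                out[inter] = out.get(inter, 0.0) + x * y
            else:
                conflict += x * y
    return {k: v / (1.0 - conflict) for k, v in out.items()}


def conflict_adaptive_combine(bpas, weights, fusion_idx=0,
                              single_conflicts=False, fusion_uncertain=False):
    """Weighted-average BPA combined n - 1 times (Murphy-style sketch)."""
    w = list(weights)
    if single_conflicts:   # a single-modal decision conflicts strongly: trust fusion more
        w[fusion_idx] *= 1.2
    if fusion_uncertain:   # the fusion decision itself is uncertain: trust it less
        w[fusion_idx] *= 0.8
    total = sum(w)
    w = [x / total for x in w]  # renormalize (assumption)
    avg = {A: sum(wi * b.get(A, 0.0) for wi, b in zip(w, bpas)) for A in (T, N, THETA)}
    result = avg
    for _ in range(len(bpas) - 1):
        result = combine_two(result, avg)
    return result


bpas = [{T: 0.8, N: 0.1, THETA: 0.1},   # fusion decision
        {T: 0.1, N: 0.8, THETA: 0.1},   # conflicting visible decision
        {T: 0.7, N: 0.1, THETA: 0.2}]   # infrared decision
res = conflict_adaptive_combine(bpas, [0.6, 0.1, 0.3])
```

Averaging before combining keeps a single strongly conflicting source from driving the normalized result toward a counter-intuitive conclusion, which is the failure mode of applying Dempster's rule directly to highly conflicting evidence.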

3.2.5. Decision Generation and Uncertainty Management

Define the belief function $\mathrm{Bel}(A) = \sum_{B \subseteq A} m(B)$, representing the minimum credibility supporting proposition $A$ (the total mass of all subsets of $A$). Define the plausibility function $\mathrm{Pl}(A) = \sum_{B \cap A \neq \varnothing} m(B)$, representing the maximum credibility supporting proposition $A$ (the total mass of all propositions compatible with $A$).
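Given a combined BPA over {T, N}, the belief and plausibility functions defined above can be computed directly; the function names and example masses are illustrative.

```python
T, N = frozenset({"T"}), frozenset({"N"})
THETA = T | N


def belief(m, A):
    """Bel(A): total mass of all non-empty subsets of A."""
    return sum(mass for B, mass in m.items() if B and B <= A)


def plausibility(m, A):
    """Pl(A): total mass of all sets that intersect A."""
    return sum(mass for B, mass in m.items() if B & A)


m = {T: 0.76, N: 0.13, THETA: 0.11}
print(belief(m, T), plausibility(m, T))  # Bel(T) <= Pl(T)
```

The gap Pl(T) − Bel(T) equals the mass left on Θ, which gives a direct measure of the residual uncertainty behind a "target present" decision.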

Based on these definitions, the final decision is generated from the belief and plausibility values of the candidate propositions.

4. Experiments and Results Analysis

4.1. Experimental Setup

Dataset: Experiments are based on the Anti-UAV multi-modal dataset released by Jiang et al. [30], containing 318 synchronized visible-infrared video pairs. To construct a diverse and representative dataset while mitigating frame redundancy, we extracted 10 keyframes per video using a systematic sampling strategy: (1) five frames were sampled at fixed intervals to capture temporal diversity, and (2) five additional frames were selected around moments of significant target motion or appearance change, identified by optical flow analysis. This process formed our **Anti-UAV-RGBT dataset**, comprising 6360 strictly spatiotemporally aligned infrared-visible image pairs (alignment error < 3 pixels relative to 1920 × 1080 resolution). The dataset covers diverse environments, including urban high-rise clusters, suburban areas, and near-water areas, under various conditions (daytime, nighttime, haze). The dataset is split into 3200 images for training, 1820 for testing, and 1340 for validation.
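The keyframe selection can be sketched as follows, assuming a per-frame motion score has already been computed (for instance, the mean optical-flow magnitude between consecutive frames, which OpenCV's `calcOpticalFlowFarneback` can provide). Five frames are taken at fixed intervals, and the five highest-scoring remaining frames are added. The interval rule, the scoring, and the function name are assumptions.

```python
def select_keyframes(motion_scores, n_uniform=5, n_motion=5):
    """Return sorted frame indices: fixed-interval frames plus the highest-motion frames."""
    n = len(motion_scores)
    step = max(1, n // n_uniform)
    uniform = [i * step for i in range(n_uniform) if i * step < n]
    taken = set(uniform)
    remaining = [i for i in range(n) if i not in taken]  # avoid picking a frame twice
    by_motion = sorted(remaining, key=lambda i: motion_scores[i], reverse=True)
    return sorted(uniform + by_motion[:n_motion])
```

Excluding the fixed-interval frames before ranking by motion guarantees 10 distinct keyframes per video even when a motion peak coincides with a sampling point.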

Hardware/Software Environment: Hardware: NVIDIA RTX 3090 GPU (24 GB VRAM), Intel Xeon Gold 6330 CPU @ 2.5 GHz, 45 GB RAM. Software: PyTorch 2.0.0, Python 3.8 (Ubuntu 20.04), CUDA 11.8.

Single-modal Model Training: Visible: Input: 1920 × 1080 visible images. Architecture: Enhanced ResNet-50 (with FPN, DPAM, decoupled head). Optimizer: SGD (lr = 0.01, momentum = 0.9). Batch Size: 16. Epochs: 150. Augmentation: Random crop, flip, color jitter. Infrared: Input: 640 × 512 infrared images. Architecture: Dual-branch ConvNeXt-Tiny + lightweight UNet. Optimizer: Adam (lr = 5 × 10−4). Batch Size: 16. Epochs: 120. Augmentation: Brightness/contrast adjustment.

Fusion Model Training: The parameters of the feature-level fusion module (EADW) and the decision-level fusion module (D-S evidence theory) were fine-tuned jointly and end-to-end with the single-modal detection networks, using a multi-task loss (classification cross-entropy + GIoU localization loss). Optimizer: Adam (lr = 1 × 10−4). Batch Size: 8. Early stopping: training stops when the validation loss improves by less than 0.1% over 20 epochs.

Evaluation Metrics: Widely recognized object detection metrics were employed: Accuracy, Precision, Recall, False Alarm Rate (FAR), F1-Score.

Specifically, Accuracy = (TP + TN)/(TP + TN + FP + FN), Precision = TP/(TP + FP), Recall = TP/(TP + FN), FAR = FP/(FP + TN), F1-Score = 2 × Precision × Recall/(Precision + Recall), where TP, TN, FP, FN denote True Positives, True Negatives, False Positives, and False Negatives, respectively.
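The metric definitions above translate directly into code. The helper name is hypothetical, the counts in the usage line are illustrative rather than experimental results, and returning 0.0 for an empty denominator is an assumed convention.

```python
def detection_metrics(tp, tn, fp, fn):
    """Accuracy, Precision, Recall, FAR and F1-Score from confusion-matrix counts."""
    def ratio(num, den):
        return num / den if den else 0.0  # assumed convention for 0/0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "far": ratio(fp, fp + tn),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


print(detection_metrics(90, 80, 10, 20))
```

Note that FAR is normalized by the negatives (FP + TN), whereas Precision is normalized by the positive predictions (TP + FP), so the two can move independently.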

All results are reported as mean ± standard deviation over three independent runs to ensure statistical reliability.

4.2. Overall Performance Comparison

Table 1 presents the comprehensive performance comparison results on the test set between the proposed fusion method, single-modal baseline methods (Visible Light, Infrared), and six representative fusion methods (including both classical strategies and recent state-of-the-art (SOTA) methods: Cross-modal Attention Fusion [31], Feature Weighted Average Fusion [32], Voting Decision Fusion [33], Dynamic Feature Fusion [20], and the recently proposed TransFuser [23], CMX [34]).

Table 1.

Overall Performance Comparison of Different Methods on Test Set (%).

The proposed method achieves the best performance across all core metrics: accuracy of 93.5%, precision of 92.8%, recall of 94.1%, FAR as low as 4.2%, and an F1-Score of 93.4%. Table 1 reports mean values; the standard deviations across the three runs are below 0.5% for all metrics, indicating stable performance. These results support the effectiveness of the proposed visible-infrared multi-modal fusion framework and hierarchical fusion strategy.

Compared to the best-performing single-modal method (Visible Light detection), the proposed method improves accuracy by 10.4% and reduces FAR by 4.3%. This is primarily attributed to the complementarity of multi-source information and the effective management of uncertainty and decision conflicts by the D-S evidence theory, significantly reducing the risk of misjudgment caused by single sensor failure or interference.

Compared with the other fusion methods, the proposed method improves accuracy by 4.3% over Feature Weighted Average Fusion, 6.0% over Voting Decision Fusion, 3.4% over Cross-modal Attention, and 4.8% over Dynamic Feature Fusion. It also outperforms the recent SOTA method TransFuser [23] by 2.0% in accuracy and 2% in F1-Score, and its advantage in FAR control is even more pronounced. These results highlight the role of the proposed Environment-Aware Dynamic Weighting (EADW) mechanism in adaptively adjusting modal weights during feature fusion, and the advantage of D-S evidence theory over simple averaging or voting in handling the uncertainty and conflicts of heterogeneous information and in achieving complementary information fusion during decision fusion.

Computational Cost Analysis: We further report the computational complexity and inference speed in Table 2. The proposed method achieves a favorable balance between performance and efficiency. With 46.2 M parameters and 81.9 G FLOPs, it is more efficient than the transformer-based CMX [35] (55.3 M, 98.7 G) and TransFuser [34] (62.1 M, 110.5 G) methods, while delivering superior accuracy (Table 1). Its inference speed of 28 FPS, though slightly lower than the Cross-Modal Attention baseline (31 FPS) due to the sequential processing in the EADW and D-S modules, still meets real-time requirements and demonstrates a good trade-off between performance and computational cost.

Table 2.

Computational Complexity and Inference Speed Comparison.

4.3. Environmental Adaptability Analysis

To evaluate the robustness and environmental adaptability of the method, Table 3 details the detection accuracy comparison of various methods under three typical environmental conditions in the Anti-UAV-RGBT dataset.

Table 3.

Accuracy Comparison under Different Environmental Conditions (%).

The proposed method maintains high detection accuracy across all tested environmental conditions (range: 89.7–94.8%), with an average performance of 92.3%, significantly outperforming other comparison methods. This demonstrates the method’s strong adaptability and robustness in complex environments.

Nighttime Conditions: The visible light sensor is severely degraded, making infrared the primary information source. Through the EADW mechanism, the proposed method automatically reduces the fusion weight of the visible modality and increases that of the infrared modality. Crucially, D-S evidence theory fuses the decision from the feature fusion layer (which may be limited by the visible light failure) with the independent infrared decision. As a result, the proposed method performs significantly better at night than both the other fusion methods and the single-modal methods.

Haze Conditions: The EADW mechanism dynamically reduces the weight of the visible light sensor, which is severely affected by atmospheric attenuation, while effectively fusing information from the infrared sensor, which relies on thermal radiation; as a result, detection accuracy is substantially improved.

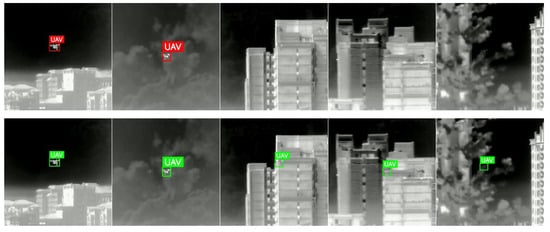

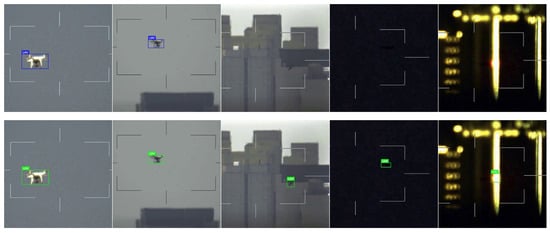

Figures 5 and 6 visually compare the detection results of the visible-light single-modal method, the infrared single-modal method, and the proposed method in different scenarios.

Figure 5.

Comparison of infrared single-modal detection and multi-modal hierarchical fusion detection results.

In Figure 5, the first row shows the results of the infrared-only method and the second row those of the proposed fusion method. Under urban thermal radiation interference, the infrared single-modal method fails to detect targets obscured by buildings and trees, whereas the proposed approach successfully identifies them.

Figure 6.

Comparison of visible light single-modal detection and multi-modal hierarchical fusion detection results.

In Figure 6, the first row shows the results of the visible-only method and the second row those of the proposed fusion method. In scenarios with backgrounds similar to the targets, extremely low light, and nighttime light interference, the visible-light single-modal method misses some targets, while the proposed method correctly detects all of them.

4.4. Ablation Study

To verify the effectiveness of the key components of the proposed fusion framework, an ablation study was conducted. The baseline (Baseline) combines feature-level average fusion (FeatAvg) with decision-level simple voting (Vote). The proposed components, namely Environment-Aware Dynamic Weighting (EADW), D-S evidence theory (D-S), and Conflict-Adaptive (CA) handling, are then introduced incrementally. The results are shown in Table 4.

Table 4.

Ablation Study Results of Key Components (%).

Role of EADW: Introducing the EADW mechanism over the baseline improves accuracy by 2.8 percentage points and reduces the false alarm rate (FAR) by 1.6 points, confirming the effectiveness of environment-aware dynamic weighting in the feature fusion stage. EADW adaptively adjusts the contribution of each modality based on real-time environmental states (illumination, weather, time) and single-modal confidence, which markedly enhances both the adaptability of feature fusion to environmental changes and its discriminative power.
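The general idea of environment-aware weighting can be illustrated with a minimal Python sketch. This is not the authors' EADW formulation: the `Environment` record, the hand-crafted reliability scores, and the temperature softmax are all assumptions chosen only to show how illumination, weather, time, and single-modal confidence could jointly shift the fusion weights between the visible and infrared branches.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Environment:
    """Hypothetical summary of the scene state used by this sketch."""
    illumination: float  # 0.0 (dark) .. 1.0 (bright daylight)
    haze: float          # 0.0 (clear) .. 1.0 (dense haze)
    is_night: bool       # stands in for the "time" factor


def eadw_weights(env: Environment, conf_vis: float, conf_ir: float,
                 temperature: float = 0.5) -> Tuple[float, float]:
    """Return (w_vis, w_ir), two positive weights that sum to 1.

    The reliability scores are illustrative assumptions, not the paper's
    formulas: visible-light reliability shrinks with low light, haze and
    night-time, while infrared is only mildly penalized by haze.
    """
    night_factor = 0.5 if env.is_night else 1.0
    vis_score = conf_vis * env.illumination * (1.0 - env.haze) * night_factor
    ir_score = conf_ir * (1.0 - 0.2 * env.haze)
    # A temperature-scaled softmax turns the two scores into fusion weights.
    exp_vis = math.exp(vis_score / temperature)
    exp_ir = math.exp(ir_score / temperature)
    total = exp_vis + exp_ir
    return exp_vis / total, exp_ir / total


def fuse_features(f_vis: List[float], f_ir: List[float],
                  w_vis: float, w_ir: float) -> List[float]:
    """Weighted element-wise sum of two equally sized feature vectors."""
    if len(f_vis) != len(f_ir):
        raise ValueError("feature vectors must have the same length")
    return [w_vis * a + w_ir * b for a, b in zip(f_vis, f_ir)]


if __name__ == "__main__":
    day = Environment(illumination=0.9, haze=0.0, is_night=False)
    night = Environment(illumination=0.1, haze=0.0, is_night=True)
    print("day  :", eadw_weights(day, conf_vis=0.9, conf_ir=0.7))
    print("night:", eadw_weights(night, conf_vis=0.4, conf_ir=0.9))
```

With these assumed inputs the visible branch receives the slightly larger weight in daylight but only a small share at night, mirroring the behavior described for the nighttime and haze scenarios. The softmax is used here only because it keeps the weights positive and normalized; the weighting function actually used by EADW is defined by the authors' method, not by this sketch.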

Role of D-S Evidence Theory: Replacing simple voting with D-S evidence theory on top of EADW further improves accuracy by 1.8 points and reduces FAR by 0.7 points, validating the advantage of D-S theory in the decision fusion stage. D-S theory quantitatively models the uncertainty and conflicting information from different evidence sources (the feature fusion decision and the single-modal decisions) and combines these heterogeneous decisions in a more principled and robust way through its evidence combination rule. This overcomes the limitations of simple voting, which lacks information interaction between sources and cannot resolve decision conflicts.

Gain of Conflict-Adaptive (CA) Mechanism: Adding the CA handling mechanism to the D-S fusion yields the best performance, that of the full method (Ours), further increasing accuracy by 1.4 points and decreasing FAR by 0.6 points. This indicates that the CA mechanism is crucial in scenarios with moderate or higher conflict (conflict coefficient K > 0.3). CA dynamically assesses the reliability of the evidence sources and adjusts their weights according to the conflict level, maintaining the reliability and decision accuracy of the fusion system even when the evidence sources contradict each other significantly.
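The decision-level behavior described in the D-S and CA paragraphs can be sketched in Python. The code implements Dempster's rule together with the conflict coefficient K over `frozenset` focal elements. For the CA step it substitutes a different, well-known technique, Murphy's averaging rule [29], optionally weighted by per-source reliabilities, whenever the largest pairwise K exceeds 0.3. The function names, the `reliabilities` argument, and the use of Murphy's rule are assumptions for illustration; this is not the authors' CA mechanism.

```python
from itertools import product
from typing import Dict, FrozenSet, List, Optional

# A basic probability assignment: focal element -> mass.
Mass = Dict[FrozenSet[str], float]


def conflict(m1: Mass, m2: Mass) -> float:
    """Conflict coefficient K: mass assigned to pairs of disjoint focal elements."""
    return sum(v1 * v2 for (a, v1), (b, v2) in product(m1.items(), m2.items())
               if not a & b)


def dempster(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule of combination, normalized by 1 - K."""
    k = conflict(m1, m2)
    if k >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    combined: Mass = {}
    for (a, v1), (b, v2) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:  # empty intersections are counted in K instead
            combined[inter] = combined.get(inter, 0.0) + v1 * v2
    return {s: v / (1.0 - k) for s, v in combined.items()}


def weighted_average(masses: List[Mass], weights: List[float]) -> Mass:
    """Reliability-weighted average of several mass functions."""
    total = sum(weights)
    avg: Mass = {}
    for m, w in zip(masses, weights):
        for s, v in m.items():
            avg[s] = avg.get(s, 0.0) + v * w / total
    return avg


def conflict_adaptive_combine(masses: List[Mass], threshold: float = 0.3,
                              reliabilities: Optional[List[float]] = None) -> Mass:
    """Plain Dempster fusion at low conflict, Murphy-style averaging above it."""
    if len(masses) == 1:
        return dict(masses[0])
    max_k = max(conflict(a, b) for i, a in enumerate(masses) for b in masses[i + 1:])
    if max_k <= threshold:
        result = masses[0]
        for m in masses[1:]:
            result = dempster(result, m)
        return result
    # High conflict: average the evidence (optionally weighted by assumed
    # per-source reliabilities), then combine the average with itself n - 1 times.
    weights = reliabilities if reliabilities else [1.0] * len(masses)
    avg = weighted_average(masses, weights)
    result = avg
    for _ in range(len(masses) - 1):
        result = dempster(result, avg)
    return result


if __name__ == "__main__":
    UAV, BG = frozenset({"UAV"}), frozenset({"BG"})
    theta = UAV | BG  # frame of discernment
    m_feature = {UAV: 0.5, BG: 0.2, theta: 0.3}  # hypothetical masses
    m_infrared = {UAV: 0.8, BG: 0.1, theta: 0.1}
    print("K =", round(conflict(m_feature, m_infrared), 2))
    print(conflict_adaptive_combine([m_feature, m_infrared]))
```

Below the threshold the sketch reduces to ordinary Dempster combination; above it, averaging first avoids the counter-intuitive results that Dempster's rule can produce when sources conflict strongly, which is the situation conflict-adaptive handling is meant to address.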

Overall Effect: The ablation study traces a clear performance path from the baseline to the final method, with accuracy rising from 87.5% to 90.3% (+EADW), 92.1% (+D-S), and finally 93.5% (+CA), a total gain of 6.0 percentage points. Each component brings a clear gain, demonstrating the necessity and effectiveness of the proposed EADW, D-S, and CA mechanisms for building a high-performance, highly robust multi-modal fusion detection system.

5. Conclusions and Outlook

This paper addresses the challenge of detecting Low-Slow-Small (LSS) UAV cluster targets by proposing a visible-infrared multi-modal fusion detection method based on deep learning. A hierarchical multi-modal fusion framework integrating feature-level and decision-level fusion is designed, effectively enhancing the detection and recognition capability and the system robustness for LSS-UAV cluster targets in complex environments. The core contributions are as follows:

- (1) Dedicated deep feature extraction networks were designed for the visible and infrared modalities: an improved ResNet-50 with DPAM for visible images and a dual-branch ConvNeXt-Tiny with UNet for infrared images. These networks address blurred texture features, complex scene interference, and varying target scales, effectively enhancing the feature discrimination capability of each single modality under complex interference.

- (2) An EADW-DS hierarchical fusion framework combining feature-level and decision-level fusion was proposed. The EADW mechanism dynamically adjusts fusion weights by perceiving real-time environmental states (illumination, weather, time) and single-modal confidence, significantly improving the environmental adaptability of feature fusion. D-S evidence theory then fuses the decision of the feature fusion layer with the independent single-modal decisions. By quantifying uncertainty and introducing a Conflict-Adaptive (CA) handling mechanism, it addresses the lack of information interaction and the decision conflicts between sources, substantially enhancing overall system robustness.

On the Anti-UAV-RGBT dataset, the proposed method achieved a detection accuracy of 93.5%, representing an average improvement of 18.7% compared with single-modal methods, while reducing the false alarm rate to 4.2%. Performance improvements were particularly large (>8%) under harsh conditions such as haze and nighttime. Ablation studies validated the effectiveness of the EADW, D-S, and CA components.

Future research will focus on the following aspects:

- (1) Incorporating Additional Modalities: Introduce further modalities such as radar, radio-frequency (RF), and acoustic signals to explore LSS target detection with more modalities under complex scene interference. The proposed hierarchical fusion architecture is designed to be extensible to more than two modalities.

- (2) Enhancing Dynamic Weighting: Refine the dynamic weighting mechanism within EADW using reinforcement learning or online learning, enabling more intelligent and fine-grained adjustment of modality contributions as the scene changes in real time (e.g., sudden interference, target maneuvers). This could further improve the system’s autonomy and long-term adaptation in dynamic environments.

- (3) Robustness to Adversarial Attacks: Investigate the vulnerability of the proposed multi-modal system to adversarial attacks and develop corresponding defense mechanisms [35], enhancing the security and reliability of the detection system in potentially contested environments.

- (4) Theoretical Analysis: Conduct a deeper theoretical analysis of the relationship between environmental parameters, fusion weights, and final performance to provide a stronger theoretical foundation for the EADW mechanism.

Author Contributions

Conceptualization, Z.L., Y.Z. and B.R.; methodology, Z.L. and Y.Z.; software, Z.L. and Z.H.; validation, Z.L., Z.H. and H.X.; formal analysis, Z.L. and Z.H.; investigation, Z.L. and H.X.; writing—original draft preparation, Z.L. and M.L.; writing—review and editing, Z.L. and M.L.; visualization, Z.L. and H.X.; supervision, B.R.; project administration, B.R.; funding acquisition, B.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62471508.

Data Availability Statement

The Anti-UAV dataset was obtained from the website https://github.com/ucas-vg/Anti-UAV (accessed on 5 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Na, Z.; Cheng, L.; Sun, H.; Lin, B. Survey of UAV detection and recognition research based on deep learning. Signal Process. 2024, 40, 609–624.

- Adnan, W.H.; Khamis, M.F. Drone use in military and civilian application: Risk to national security. J. Media Inf. Warf. 2022, 15, 60–70.

- Qiu, X.; Luo, B.; Fu, Z.; Tan, X.; Xiong, C. Review of anti-UAV technology development at home and abroad. Tact. Missile Technol. 2024, 63–73, 98.

- Liu, L.; Liu, D.; Wang, X.; Wang, F.; Li, Y.; He, Y.; Liao, M. Development status and prospect of UAV swarm and anti-UAV swarm. Acta Aeronaut. Astronaut. Sin. 2022, 43, 4–20.

- Cheng, Y.; Zou, R.; Chen, J.; Wu, H.; Hua, X. LSS-Ku-1.0: A radar low-slow-small UAV detection dataset under ground clutter background. Signal Process. 2025, 41, 807–820.

- Zhao, J.; Zhang, J.; Li, D.; Wang, D. Vision-based anti-UAV detection and tracking. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25323–25334.

- Yang, F.; Wang, M. Infrared small target detection method for low-altitude surveillance systems. Opt. Tech. 2024, 50, 120–128.

- Xu, S.; Chen, X.; Li, H.; Liu, T.; Chen, Z.; Gao, H.; Zhang, Y. Airborne small target detection method based on multimodal and adaptive feature fusion. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5637215.

- Song, H. Small target detection based on multimodal data fusion. In Proceedings of the IEEE International Symposium on Computer Applications and Information Technology (ISCAIT), Xi’an, China, 21–23 March 2025; pp. 537–540.

- Ouyang, J.; Jin, P.; Wang, Q. Multimodal feature-guided pretraining for RGB-T perception. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2024, 17, 16041–16050.

- Li, M.; Zhou, M.; Zhi, R. Survey of UAV recognition research based on multi-modal fusion. Comput. Eng. Appl. 2025, 61, 1–14. Available online: http://kns.cnki.net/kcms/detail/11.2127.TP.20250523.1539.008.html (accessed on 10 April 2025).

- Han, Z.; Yue, M.; Zhang, C.; Gao, Q. Multi-modal fusion detection for UAV targets based on Siamese network. Infrared Technol. 2023, 45, 739–745.

- Hu, N.; Tian, X. OFDM-MIMO radar signal design and processing method for small UAVs. Signal Process. 2024, 40, 878–886.

- Kaleem, Z. Lightweight and computationally efficient YOLO for rogue UAV detection in complex backgrounds. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 5362–5366.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Li, R.; Peng, Y.; Yang, Q. Fusion enhancement: UAV target detection based on multi-modal GAN. In Proceedings of the IEEE Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 15–17 September 2023; pp. 1953–1957.

- Gao, M.; Lin, S. Deep learning LSS target detection algorithm based on radar signal and remote sensing map fusion. Signal Process. 2024, 40, 82–93.

- Guo, R.; Sun, B.; Sun, X.; Bu, D.; Su, S. Multi-modal fusion target detection method for UAVs under low-light conditions. Chin. J. Sci. Instrum. 2025, 46, 338–350.

- Hengy, S.; Laurenzis, M.; Schertzer, S.; Hommes, A.; Kloeppel, F.; Shoykhetbrod, A.; Geibig, T.; Johannes, W.; Rassy, O.; Christnacher, F. Multimodal UAV detection: Study of various intrusion scenarios. In SPIE Electro-Optical Remote Sensing XI; SPIE: Bellingham, WA, USA, 2017; Volume 10434, pp. 203–212.

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep Multi-Modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1341–1360.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762. Available online: https://arxiv.org/abs/1706.03762 (accessed on 8 December 2025).

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

- Chi, W.; Liu, J.; Wang, X.; Feng, R.; Cui, J. DBGNet: Dual-branch gate-aware network for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5003714.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. Drone-based RGB-infrared cross-modality vehicle detection via uncertainty-aware learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6700–6713.

- Zhao, G.; Chen, A.; Lu, G.; Liu, W. Data fusion algorithm based on fuzzy sets and D-S theory of evidence. Tsinghua Sci. Technol. 2020, 25, 12–19.

- Murphy, C.K. Combining belief functions when evidence conflicts. Decis. Support Syst. 2000, 29, 1–9.

- Jiang, N.; Wang, K.; Peng, X.; Yu, X.; Wang, Q.; Xing, J.; Li, G.; Guo, G.; Ye, Q.; Jiao, J.; et al. Anti-UAV: A large-scale benchmark for vision-based UAV tracking. IEEE Trans. Multimed. 2023, 25, 486–500.

- Xu, K.; Wang, B.; Zhu, Z.; Jia, Z.; Fan, C. A contrastive learning enhanced adaptive multimodal fusion network for hyperspectral and LiDAR data classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4700319.

- Liu, Z.; Shen, Y.; Lakshminarasimhan, V.B.; Liang, P.P.; Zadeh, A.B.; Morency, L.-P. Efficient low-rank multimodal fusion with modality-specific factors. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), Melbourne, Australia, 15–20 July 2018; pp. 2247–2256.

- Perez-Rua, J.-M.; Vielzeuf, V.; Pateux, S.; Baccouche, M.; Jurie, F. MFAS: Multimodal fusion architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 6959–6968.

- Zhang, J.; Liu, H.; Yang, K.; Hu, X.; Liu, R.; Stiefelhagen, R. CMX: Cross-Modal Fusion for RGB-X Semantic Segmentation with Transformers. IEEE Trans. Intell. Transp. Syst. 2023, 24, 14679–14694.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).