Abstract

Building on the iterative methods of convergence orders three and five designed by Zhongli Liu et al. in 2016, this paper presents an extension to order six. The study of high-convergence-order iterative methods under weak conditions is important, because a higher order means that fewer iterations are needed to reach a predetermined error tolerance. To enhance the practicality of these methods, the convergence analysis is carried out without Taylor expansion and requires the operator to be only twice differentiable, unlike earlier studies. A semilocal convergence analysis is also provided. Furthermore, numerical experiments verifying the convergence criteria, comparative studies and the dynamics of the methods are discussed.

MSC:

49M15; 47H99; 65D99; 65J15; 65G99

1. Introduction

Driven by the needs of applications in applied mathematics [1,2,3,4,5,6], finding a solution of the equation

is considered significant, where is a nonlinear Fréchet differentiable operator. Here and below, T and are Banach spaces and is an open convex set in Due to the complexity in finding closed form solutions to (1), it is often advised to adopt iterative methods to find . Newton’s method has been modified in numerous ways in the studies found in [7,8,9,10,11,12,13,14]. This paper is based on the Newton–Gauss iterative method studied by Zhongli Liu et al. in [15]. Precisely, in [15], Zhongli Liu et al. constructed the following method (see (2) below) by employing the two-point Gauss quadrature formula, given for each as

where ,

This was further extended to a method of order five given by

Recall [1] that a sequence converges to with convergence order if for

where c is called the rate of convergence.
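The definition of convergence order above can be checked numerically. The following is a minimal Python sketch, not the authors' implementation: it applies a scalar Newton-type step that averages the derivative at the two Gauss nodes between the current point and the Newton predictor, and then estimates the order from three consecutive errors. The test function f(x) = x^3 - 2, the starting point 1.5, the working precision, and the helper names `newton_gauss_step`, `errors_of` and `estimate_order` are all assumptions made for illustration, and the step may differ in detail from Method (2) of [15].

```python
from decimal import Decimal, getcontext

# High working precision so the errors stay resolvable for several steps.
getcontext().prec = 400


def f(x):
    # Illustrative scalar test function (an assumption, not from the paper).
    return x**3 - 2


def df(x):
    # Derivative of the illustrative test function.
    return 3 * x**2


def newton_gauss_step(x):
    """One step of a Newton-type scheme using the two Gauss nodes on [x, y]."""
    y = x - f(x) / df(x)                          # Newton predictor
    mid = (x + y) / 2                             # midpoint of [x, y]
    half = (y - x) / (2 * Decimal(3).sqrt())      # offset of the Gauss nodes
    avg = (df(mid - half) + df(mid + half)) / 2   # quadrature mean of f'
    return x - f(x) / avg


def errors_of(step, x0, root, n_steps):
    """Return the absolute errors |x_n - root| for n = 0, ..., n_steps."""
    errs, x = [abs(x0 - root)], x0
    for _ in range(n_steps):
        x = step(x)
        errs.append(abs(x - root))
    return errs


def estimate_order(e0, e1, e2):
    """Estimate p from e_{n+1} ~ c * e_n**p using three consecutive errors."""
    return float((e2 / e1).ln() / (e1 / e0).ln())


root = Decimal(2) ** (Decimal(1) / Decimal(3))    # cube root of 2
errs = errors_of(newton_gauss_step, Decimal("1.5"), root, 5)
p = estimate_order(*errs[-3:])
print(f"estimated order: {p:.3f}")
```

For this hypothetical test problem the estimate should be close to three. High-precision arithmetic is used because, in double precision, a third-order iteration reaches machine accuracy after very few steps, leaving too few meaningful errors for the ratio of logarithms.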

The current state of research is established in [7,8,9,10,11,12,13,14,15]. In these references, the convergence order was established using higher-order derivatives. Thus, these results cannot be applied to equations involving operators that are not sufficiently many times differentiable (at least five times, if the order of the method is four). This limits their applicability, and other problems also exist:

- (a) No computable error bounds are provided;

- (b) There is no information on the uniqueness domain of the solution;

- (c) The local convergence analysis is provided only when ;

- (d) The more interesting (than the local) semilocal convergence analysis is not provided.

We address all of these concerns in the more general setting of a Banach space using generalized conditions and majorizing sequences. Moreover, our extended method (see (4)) is of order six, not five.

The study on the order of convergence of (2) and (3) in [15] involves Taylor expansion. The major concern in [15] is the necessity of assumptions on the derivatives of up to order five, reducing the utility of the above methods. As an example, consider given by

It follows that

Observe that is not bounded.

Our study is solely based on obtaining the required order of convergence of the above methods without using Taylor expansion and assumptions only on and This enhances the applicability of the considered iterative method to a wider range of practical problems. Our approach can be used to study other similar methods [5,6,16,17,18].

Furthermore, we have extended this to a method of order six with the ideas in [3,4] defined for given by

The outline of the article is as follows. We discuss the convergence of Methods (2), (3) and (4) in Sections 2, 3 and 4, respectively. The semilocal convergence of the methods is developed in Section 5. Section 6 deals with examples. Section 7 is dedicated to the dynamics and the basins of attraction of Methods (2), (3) and (4). Section 8 gives the conclusion.

2. Convergence Analysis of (2)

Hereafter, and for some

The following assumptions are made in our study:

- (A1)

- a simple solution of (1) exists and ;

- (A2)

- (A3)

- ;

- (A4)

- ;

- (A5)

- (A6)

- for parameter to be specified in what follows and and are scalars.

We define the functions by

and let Observe that is a continuous nondecreasing function. Furthermore, and Therefore, there exists a smallest zero for

Let the functions be defined by

and Observe that is a nondecreasing and continuous function, and Therefore, has a smallest zero

Let

Then, and ∀

For convenience, we use the notation and

Theorem 1.

Proof.

This is proved utilizing induction. Let Using (A2) gives

By the Banach result on invertible operators [1,2], it follows that

Thus, by our assumptions,

So, the iterate and the result holds for In addition, is well defined. In fact,

where we also used

and

That is Therefore, by Banach lemma on invertible operators [1] exists and

From (2) and (9), we obtain

Let

3. Convergence Analysis of (3)

Let be given as

and Then, and Therefore, has a smallest zero Let

Then,

Set For Method (3), we have the following theorem:

Theorem 2.

Proof.

Note that (21) and (22) follow as in Theorem 1, by letting and in Theorem 1 and hence Observe

So, by (A5), we get

□

4. Convergence Analysis of (4)

Let be given by

and Then, and Therefore, has a smallest zero

Let

Then,

Set Similarly, for Method (4), we develop:

Theorem 3.

Next, we provide a uniqueness result of the solution

Proposition 1.

Suppose:

(1) such that for some and such that

for each

(2) such that

Let Then, the Equation (1) has a unique solution

5. Semilocal Convergence

Generalized continuity conditions, as well as real majorizing sequences, are utilized to show the semilocal convergence of the three methods under the same set of conditions [1,2,5].

Suppose: ∃ a nondecreasing and continuous real function defined on such that the function has a minimal root Let be a nondecreasing and continuous real function on

We shall show that the following scalar functions are majorizing for Method (2), Method (3) and Method (4), respectively, for and each

and

and

and

We choose in practice the smallest version of the possible sequences

Next, the convergence is developed for these sequences.

Lemma 1.

Proof.

It follows from the definitions and the condition (33) that these sequences are nondecreasing and bounded from above by Hence, they are convergent to some □

Remark 1.

The parameter is an upper bound of each of these scalar sequences, and it is not the same in general. Moreover, it is the least and unique.

The functions the parameter and the scalar sequences are associated with the operator as follows:

- (E1)

- and a parameter such that the operator is well defined and

- (E2)

- for each Set

- (E3)

- for each

- (E4)

- The conditions of Lemma 1 hold and

- (E5)

Next, we first present the convergence of Method (2).

Theorem 4.

Under the conditions (E1)–(E5), the sequence generated by the formula (2) is convergent to some satisfying and

Proof.

The assertions

and

shall be established by induction. The assertion (35) holds for by (E1) and the first substep of Method (2). Then, we also have that

Similarly, for

thus

We also need the estimate

or

By using (20) and (37)–(41), we get

and

Thus, the iterate and the assertions (36) and (38) (for ) hold. Furthermore, by the first substep of Method (2), the iterate is well defined and

Notice that

Then, by (20), (43), (44) and (38) (for ),

and

Hence, the induction for the assertions (35), (36) is completed and the iterates By the condition (E4), the sequence is Cauchy. It follows from (42) and (45) that the sequence is also fundamental in so In view of (44) and the continuity of the operator it follows that (if ). Let be an integer. Then, we have the estimate

By letting in (48), we show the assertion (36). □

Theorem 5.

Proof.

The assertions (49), (50) and (52) are given in Theorem 4. We have from the last substep of Method (3)

However,

so we get

Consequently, we obtain

□

Theorem 6.

Proof.

The proof is as in the proof of Theorem 4, but we use Method (4) to obtain instead

□

Next, a region is specified for the solution.

Proposition 2.

Suppose:

(1) such that for some ;

(2) The condition (E2) holds on the ball ;

(3) There exists such that

Define the region . Then the unique solution of the equation in the region is .

Proof.

Therefore, we get

and consequently, □

Remark 2.

(1) Under all the conditions (E1)–(E5), we can choose and

(2) The limit point in the condition (E5) can be replaced by λ or μ given in the Lemma 1.

6. Numerical Examples

This section gives two examples for the verification of parameters used in the above discussed theorems and an example comparison of this method with a Noor–Waseem type method [19] and a Newton–Simpson type method [7].

Example 1.

Let equipped with max norm and Consider on Ω defined for as

it follows that

and

Then, (A1)–(A5) hold if and The parameters are:

Example 2.

Let Define the function on Ω by

We have that

Then, for we can take and and The parameters are:

In the next two examples [15,17], we compare the Noor–Waseem-type method studied in [19] and the Newton–Simpson-type method in [7] with Methods (2), (3) and (4).

Example 3.

Let We solve the system

The solutions are: and

We consider for approximating using these methods (2), (3) and (4) with initial guess The obtained results are displayed in Table 1, Table 2 and Table 3.

Table 1.

Methods of order 3.

Table 2.

Methods of order 5.

Table 3.

Methods of order 6.

Remark 3.

7. Basins of Attraction

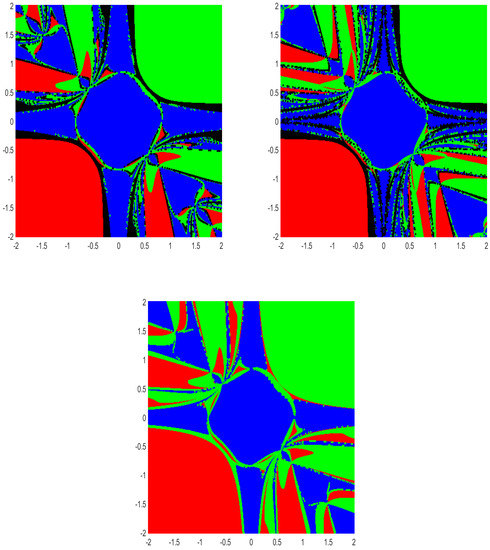

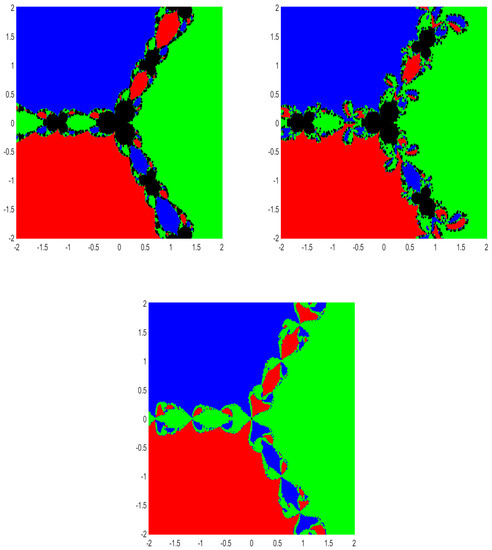

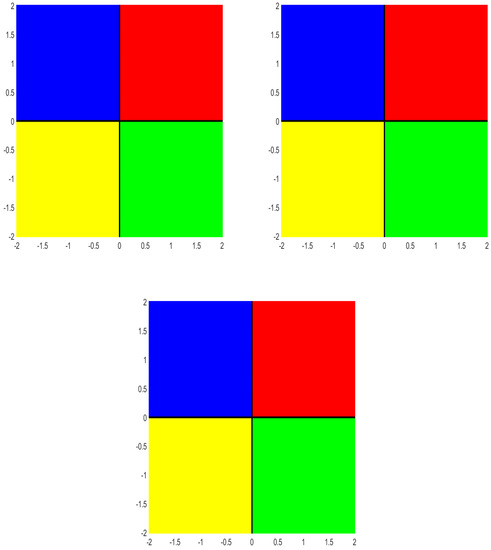

To obtain the convergence region of Methods (2), (3) and (4), we study the Basins of Attraction (BA) (i.e., the collection of all initial points from which the iterative method converges to a solution of a given equation) and Julia sets (JS) (i.e., the complement of basins of attraction) [18]. In fact, we study the BA associated with the roots of the three systems of equations given in Examples 5–7.

Example 5.

The solutions are and

Example 6.

The solutions are and

Example 7.

The solutions are and

The region which contains all the roots of Examples 5–7 is used to plot BA and JS. We choose an equidistant grid of points in as the initial guess , for Methods (2), (3) and (4). A tolerance level of and a maximum of 50 iterations are used. A color is assigned to each attracting basin corresponding to each root, and if we do not obtain the desired tolerance with the fixed iterations, we assign the color black (i.e., we decide that the iterative method starting at does not converge to any of the roots). In this way, we distinguish each BA by their respective colors for the distinct roots of each method.
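The grid procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the equation z^3 = 1 in the complex plane and the plain Newton step stand in for the systems of Examples 5–7 and for Methods (2), (3) and (4); the square [-2, 2] x [-2, 2], the tolerance 1e-8 and the names `newton_step`, `basin_index` and `basin_grid` are hypothetical choices, since the corresponding values are not reproduced in the text. Only the limit of 50 iterations is taken from the description above.

```python
import cmath

# Roots of the stand-in equation z**3 = 1 (an assumption for illustration).
ROOTS = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]


def newton_step(z):
    """Plain Newton step for z**3 - 1, used here as a stand-in iteration."""
    return z - (z**3 - 1) / (3 * z**2)


def basin_index(z0, step=newton_step, tol=1e-8, max_iter=50):
    """Return the index of the root reached from z0, or -1 (colored black)."""
    z = z0
    for _ in range(max_iter):
        try:
            z = step(z)
        except (ZeroDivisionError, OverflowError):
            return -1                      # the iteration broke down
        for k, root in enumerate(ROOTS):
            if abs(z - root) < tol:
                return k                   # converged to root number k
    return -1                              # tolerance not reached in max_iter


def basin_grid(n=41, lo=-2.0, hi=2.0):
    """Classify an equidistant n-by-n grid of initial points in [lo, hi]^2."""
    h = (hi - lo) / (n - 1)
    return [
        [basin_index(complex(lo + j * h, lo + i * h)) for j in range(n)]
        for i in range(n)
    ]


grid = basin_grid()
not_converged = sum(row.count(-1) for row in grid)
print(f"{not_converged} of {len(grid) ** 2} initial points were not assigned to a root")
```

Each integer in `grid` can then be mapped to a color, with -1 drawn in black. Replacing `newton_step` by another iteration function of one argument reuses the same classification loop.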

Figure 1, Figure 2 and Figure 3 demonstrate the BA corresponding to each root of the above examples (Examples 5–7) for Methods (2), (3) and (4). The JS (black region), which contains all the initial points from which the iterative method does not converge to any of the roots, can easily be observed in the figures.

All the calculations in this paper were performed on a 16-core 64-bit Windows machine with an Intel Core i7-10700 CPU @ 2.90 GHz, using MATLAB.

In Figure 1 (corresponding to Example 5), the red region is the set of all initial points from which the iterates (2), (3) and (4) converge to the blue region is the set of all initial points from which the iterates (2), (3) and (4) converge to and the green region is the set of all initial points from which the iterates (2), (3) and (4) converge to The black region represents the Julia set.

In Figure 2 (corresponding to Example 6), the red region is the set of all initial points from which the iterates (2), (3) and (4) converge to the blue region is the set of all initial points from which the iterates (2), (3) and (4) converge to and the green region is the set of all initial points from which the iterates (2), (3) and (4) converge to The black region represents the Julia set.

In Figure 3 (corresponding to Example 7), the red region is the set of all initial points from which the iterates (2), (3) and (4) converge to the blue region is the set of all initial points from which the iterates (2), (3) and (4) converge to the green region is the set of all initial points from which the iterates (2), (3) and (4) converge to and the yellow region is the set of all initial points from which the iterates (2), (3) and (4) converge to The black region represents the Julia set.

8. Conclusions

In this article, Method (2) is extended to methods with better orders of convergence. The analyses of the local and semilocal convergence criteria for these methods, Methods (2), (3) and (4), are carried out with the assumptions imposed only on the first and second derivatives of the operator involved and without the application of Taylor series expansion. Additionally, the Fatou and Julia sets corresponding to these methods, including appropriate comparisons and examples, are displayed, thus verifying the theoretical approach.

Author Contributions

Conceptualization, R.S., S.G., I.K.A. and J.P.; methodology, R.S., S.G., I.K.A. and J.P.; software, R.S., S.G., I.K.A. and J.P.; validation, R.S., S.G., I.K.A. and J.P.; formal analysis, R.S., S.G., I.K.A. and J.P.; investigation, R.S., S.G., I.K.A. and J.P.; resources, R.S., S.G., I.K.A. and J.P.; data curation, R.S., S.G., I.K.A. and J.P.; writing—original draft preparation, R.S., S.G., I.K.A. and J.P.; writing—review and editing, R.S., S.G., I.K.A. and J.P.; visualization, R.S., S.G., I.K.A. and J.P.; supervision, R.S., S.G., I.K.A. and J.P.; project administration, S.G., J.P., R.S. and I.K.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors Jidesh Padikkal and Santhosh George wish to thank the SERB, Govt. of India, for the Project No. CRG/2021/004776.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

References

- Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; Engineering Series; CRC Press, Taylor and Francis Group: Boca Raton, FL, USA, 2022.

- Argyros, I.K.; Magreñán, A.A. A Contemporary Study of Iterative Schemes; Elsevier, Academic Press: New York, NY, USA, 2018.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 2012, 25, 2369–2374.

- Cordero, A.; Martínez, E.; Torregrosa, J.R. Iterative methods of order four and five for systems of nonlinear equations. J. Comput. Appl. Math. 2012, 231, 541–551.

- Magreñán, A.A.; Argyros, I.K.; Rainer, J.J.; Sicilia, J.A. Ball convergence of a sixth-order Newton-like method based on means under weak conditions. J. Math. Chem. 2018, 56, 2117–2131.

- Shakhno, S.M.; Gnatyshyn, O.P. On an iterative method of order 1.839... for solving nonlinear least squares problems. Appl. Math. Applic. 2005, 161, 253–264.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Darvishi, M.T.; Barati, A. A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 188, 257–261.

- Darvishi, M.T.; Barati, A. A third-order Newton-type method to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 187, 630–635.

- Frontini, M.; Sormani, E. Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl. Math. Comput. 2004, 149, 771–782.

- Homeier, H.H.H. A modified Newton method with cubic convergence: The multivariable case. J. Comput. Appl. Math. 2004, 169, 161–169.

- Khirallah, M.Q.; Hafiz, M.A. Novel three order methods for solving a system of nonlinear equations. Bull. Math. Sci. Appl. 2012, 2, 1–14.

- Noor, M.A.; Waseem, M. Some iterative methods for solving a system of nonlinear equations. J. Comput. Math. Appl. 2009, 57, 101–106.

- Podisuk, M.; Chundang, U.; Sanprasert, W. Single-step formulas and multi-step formulas of integration method for solving the initial value problem of ordinary differential equation. Appl. Math. Comput. 2007, 190, 1438–1444.

- Liu, Z.; Zheng, Q.; Huang, C. Third- and fifth-order Newton–Gauss methods for solving nonlinear equations with n variables. Appl. Math. Comput. 2016, 290, 250–257.

- Behl, R.; Maroju, P.; Martínez, E.; Singh, S. A study of the local convergence of a fifth order iterative scheme. Indian J. Pure Appl. Math. 2020, 51, 439–455.

- Iliev, A.; Iliev, I. Numerical method with order t for solving system nonlinear equations. In Proceedings of the Collection of Works from the Scientific Conference Dedicated to 30 Years of FMI, Plovdiv, Bulgaria, 1–3 November 2000; pp. 105–112.

- Magreñán, A.A.; Gutiérrez, J.M. Real dynamics for damped Newton’s method applied to cubic polynomials. J. Comput. Appl. Math. 2015, 275, 527–538.

- George, S.; Sadananda, R.; Jidesh, P.; Argyros, I.K. On the Order of Convergence of Noor-Waseem Method. Mathematics 2022, 10, 4544.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).