Abstract

One of the main tasks in machine learning and curve fitting is to develop suitable models for given data sets. This requires generating a function that approximates data arising from some unknown function. The class of kernel regression estimators is one of the main types of nonparametric curve estimation. On the other hand, fractal theory provides new techniques for constructing complicated irregular curves in many practical problems. In this paper, we investigate fractal curve-fitting problems with the help of kernel regression estimators. For a given data set that arises from an unknown function m, one of the well-known kernel regression estimators, the Nadaraya–Watson estimator , is applied. We consider the case that m is Hölder-continuous of exponent with , and the graph of m is irregular. An estimation for the expectation of is established. Then a fractal perturbation corresponding to is constructed to fit the given data. The expectations of and are also estimated.

1. Introduction

One of the main tasks in machine learning, curve fitting, signal analysis, and many statistical applications is to develop suitable models for given data sets. In many real-world applications, this requires generating a function that interpolates or approximates data arising from some unknown function. In data-fitting problems, interpolation is usually applied when the data are noise-free, and regression is used when the observations are noisy.

The theory of nonparametric regression modeling has been developed by many researchers. Several types of estimators and their statistical properties have been studied in the literature. The class of kernel estimators is one of the main types of nonparametric curve estimation, and the Nadaraya–Watson estimator, the Priestley–Chao estimator, and the Gasser–Müller estimator are widely used in applications. See [1,2,3,4,5,6] and the references given in these books. In [7,8], the authors investigated the differences between several types of kernel regression estimators; no single estimator is best, since each of them has advantages and disadvantages.

Fractal theory provides another technique for constructing complicated curves and fitting experimental data. A fractal interpolation function (FIF) is a continuous function interpolating a given set of points, and the graph of a FIF is the attractor of an iterated function system. The concept of FIFs was introduced by Barnsley ([9,10]), and it has been developed into the basis of an approximation theory for nondifferentiable functions. FIFs can also be applied to model discrete sequences ([11,12,13]). Various types of FIFs and their approximation properties were discussed in [14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44] and the references therein. See also the book [45] for recent developments. In [46,47,48,49,50], FIFs for random data sets were constructed, and some statistical properties of such FIFs were investigated. In [51], the authors made a topological–geometric contribution to the development and applications of fractal models that present periodic changes.

For a given data set that arises from an unknown function m, the purpose of this paper is not to establish a fractal function that interpolates the points in the data set; instead, we aim to find a fractal function that approximates these data points well. In [52], the authors trained an SVM on chosen training data and then applied the SVM model to calculate the interpolation points used to construct a linear FIF. In this paper, we consider the Nadaraya–Watson estimator for some sample data chosen from a given data set, and establish an estimation for the expectation of . Then a FIF corresponding to is constructed to fit the given data set, and the expectations of and are also estimated.

Throughout this paper, let be a given data set, where N is an integer greater than or equal to 2, and . We take and for convenience. Let and for . Let denote the set of all real-valued continuous functions defined on I. The set of functions in that interpolate all points in is denoted by . Define for . It is known that is a Banach space, and is a complete metric space, where the metric is induced by .

2. Construction of Fractal Interpolation Functions

In this section, we establish a fractal perturbation of a given function in . The construction given here has been treated in the literature (see [47]). We show the details here to make our paper more self-contained.

Let and , where . Assume that the data points in are non-collinear. For , let be a homeomorphism such that and . Define by

where and p is a continuous function on I such that and . Then , , and

Define by for . For , let . Then . Since is a homeomorphism, can be written as

Hence is the graph of the continuous function defined by . Define a mapping by

By we see that, for and ,

Then

Here . Since , we have the following theorem ([47], Theorem 2.1).

Theorem 1.

The operator T given by is a contraction mapping on .

Definition 1.

The fixed point of T in is called a fractal interpolation function (FIF) on I corresponding to the continuous function u.

The FIF given in Definition 1 satisfies the following equation for :

If for all i, then . Therefore, can be treated as a fractal perturbation of u.
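As a numerical illustration of the fixed-point description above, the following Python sketch approximates a FIF by applying the operator a fixed number of times, starting from u. It assumes the commonly used self-referential form f(t) = u(t) + s_i [f(L_i^{-1}(t)) - p(L_i^{-1}(t))] on the i-th subinterval, with affine maps L_i and scaling factors |s_i| < 1. The function name `fif_eval`, the truncation depth, and the sample choices of u, p, and the scaling factors are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def fif_eval(t, nodes, s, u, p, depth=20):
    """Approximate a FIF at the points t by `depth` applications of the operator.

    Assumed form (hypothetical notation): on [nodes[i], nodes[i+1]],
        f(t) = u(t) + s[i] * (f(x) - p(x)),  x = L_i^{-1}(t),
    where L_i is the affine map from [nodes[0], nodes[-1]] onto that subinterval.
    """
    t = np.asarray(t, dtype=float)
    if depth == 0:
        return u(t)  # start the iteration from u itself
    # index of the subinterval containing each t
    i = np.clip(np.searchsorted(nodes, t, side="right") - 1, 0, len(nodes) - 2)
    # inverse of the affine map L_i
    x = nodes[0] + (t - nodes[i]) * (nodes[-1] - nodes[0]) / (nodes[i + 1] - nodes[i])
    return u(t) + s[i] * (fif_eval(x, nodes, s, u, p, depth - 1) - p(x))

# Illustrative choices only: u(t) = t^2 on [0, 1], and a linear p that
# agrees with u at both endpoints.
nodes = np.array([0.0, 0.5, 1.0])
s = np.array([0.3, -0.2])            # scaling factors, |s_i| < 1
u = lambda t: t ** 2
p = lambda t: t

grid = np.linspace(0.0, 1.0, 201)
f_grid = fif_eval(grid, nodes, s, u, p)
f_nodes = fif_eval(nodes, nodes, s, u, p)
f_zero = fif_eval(grid, nodes, np.zeros(2), u, p)
```

In this sketch the values at the nodes agree with those of u, and setting all scaling factors to zero returns u itself, which matches the interpretation of the FIF as a fractal perturbation of u. The truncation error decays geometrically in `depth` because the scaling factors are smaller than 1 in absolute value.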

3. The Nadaraya–Watson Estimator

Let be a given data set, where . Suppose that

$$y_i = m(t_i) + \varepsilon_i, \tag{5}$$

where $m$ is an unknown function, and each $y_i$ is an observation of $m(t_i)$. Here, all $\varepsilon_i$ are independent stochastic disturbance terms with zero expectation, $E(\varepsilon_i)=0$, and finite variance, $\mathrm{Var}(\varepsilon_i)=\sigma^2$. In this section, we consider the Nadaraya–Watson estimator for $m$ and establish an estimation for the expectation of .

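Consider the case that $m$ is Hölder continuous of exponent $\beta$ with $0<\beta<1$, and the graph of $m$ is irregular. Then, $m$ satisfies the following inequality with $\lambda>0$ and $0<\beta<1$:

$$|m(t)-m(t')| \le \lambda\,|t-t'|^{\beta} \quad \text{for all } t, t' \in I. \tag{6}$$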

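The Nadaraya–Watson estimator of $m$ is defined by

$$\widehat{m}(t) = \frac{\sum_{i} y_i\, k\!\left(\frac{t-t_i}{d}\right)}{\sum_{i} k\!\left(\frac{t-t_i}{d}\right)}, \tag{7}$$

where the sums run over all points of the data set. Here $d>0$ is a bandwidth, and $k$ is an integrable function defined on $\mathbb{R}$.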

The function k is called a kernel and is usually assumed to be bounded and to satisfy some integrability conditions. Some widely used kernels are given in ([2], p. 41) and ([5], p. 3), and the estimates obtained with different kernels are usually numerically similar (see [6]). In this paper, we assume that there are positive numbers , , , and R such that the kernel k satisfies the condition

Condition (8) and its multidimensional form were considered in ([5], Theorem 1.7) and ([1], Theorem 5.1).
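For readers who want to experiment with the estimator, the following Python sketch evaluates a Nadaraya–Watson estimate as a kernel-weighted average of the observations. It uses the Epanechnikov kernel (the kernel chosen later in Example 1) and returns 0 wherever the denominator vanishes; that convention, the function names, and the bandwidth value are assumptions made here for illustration and are not specified by the text.

```python
import numpy as np

def epanechnikov(x):
    """Epanechnikov kernel: 0.75 * (1 - x^2) on [-1, 1], zero elsewhere."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) <= 1.0, 0.75 * (1.0 - x ** 2), 0.0)

def nadaraya_watson(t, t_obs, y_obs, d, kernel=epanechnikov):
    """Kernel-weighted average of y_obs at the points t, with bandwidth d.

    Assumption: the estimate is set to 0 where no observation
    receives positive weight (zero denominator).
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    t_obs = np.asarray(t_obs, dtype=float)
    y_obs = np.asarray(y_obs, dtype=float)
    w = kernel((t[:, None] - t_obs[None, :]) / d)   # weights, shape (len(t), len(t_obs))
    denom = w.sum(axis=1)
    num = w @ y_obs
    return np.divide(num, denom, out=np.zeros_like(denom), where=denom > 0)

# Illustrative check: constant observations are reproduced exactly
# wherever the denominator is positive.
t_obs = np.linspace(0.0, 1.0, 11)
y_const = np.full(t_obs.size, 2.0)
est_const = nadaraya_watson(np.linspace(0.0, 1.0, 50), t_obs, y_const, d=0.15)
est_far = nadaraya_watson([5.0], t_obs, y_const, d=0.15)
```

Because the weights are nonnegative and are normalized by their sum, the estimate at each point is a convex combination of the observations, so it always lies between the smallest and largest observed values.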

A new estimation for the bias of was obtained in [53]. Here, we give an estimation for E in the following Theorem 2. Similar results were studied in [1,2,5] and elsewhere in the literature. The convergence rate of the upper estimate obtained in Theorem 2 is the same as in the known results.

The Nadaraya–Watson estimator given in (7) can be written in the form

Then for all t and

In the following lemma, we give a lower bound for . Define

Lemma 1.

Let . Suppose that and there are positive numbers and η such that for . Let and let and be defined in (11) and (7), respectively. Assume that and for some . Then for ,

Proof.

For , the condition implies that

where and is the number of elements of . Since if and only if , we have }.

For , we have , and by the condition , we see that and this implies . For , we have and . Hence and . For , we have and . Hence and . Then the condition implies . □

Theorem 2.

Let be a given data set and assume that m satisfies . Suppose that k satisfies and is defined by . Assume that and for some . Then we have

Proof.

We see that

By (6) and (9)–(10), we have

Condition implies that if . Therefore,

On the other hand, by and , we also have

By (9), (10) and (5), we have

Since all are independent and satisfy and , the condition and estimation imply that

Then by (14) and (15), we have (13). □

For a given kernel k which satisfies , estimation shows that and should be chosen so that is as large as possible. The minimizer with respect to d of the right-hand side of can be obtained by setting , and then solving the equation

We have

and the upper estimate given in can be reduced to , where depends on , , , , , R, , and .

4. Fractal Perturbation of the Nadaraya–Watson Estimator

In this section, we consider FIFs corresponding to the function and we establish estimations for the expectation of and . Suppose that k is continuous and we replace each in by . Then . By the construction given in Section 2 with , we have a FIF on I that satisfies the equation for :

Here, p is chosen to be the linear polynomial such that and . Then we replace by for each i and consider a random variable for every . We are interested in estimations for .

Theorem 3.

Suppose that k is continuous and k satisfies with and . Suppose that m satisfies and is defined by . Let . Assume that , , and for some and , where and are defined in . Suppose that and . Then we have

Proof.

For , implies

and we have

Then

and therefore

Since p is the linear polynomial with and , we have

and then

The convexity of the square function implies that

and therefore

By , . By with , we see that if , then and . This implies . For , if , then and . This implies . Then

where . Let . Then the number of elements in is less than .

By and with , we have

By we also have for . Condition shows that m is continuous and therefore m is bounded on I. Then for ,

We also have the same estimate for .

By the condition , we have , and then can be reduced to

Thus, can be obtained by and . Moreover, we have by , , and the inequality

□

For a given kernel k, which satisfies condition , estimation shows that and should be chosen so that is as large as possible. If we choose , where is given by with and , then can be reduced to

where and D depends on , , , , , , , and depends on , , , , , . Moreover, the constant M can be estimated by .

The right-hand side of tends to A when . In fact, if d is chosen so that and as , then the right-hand side of tends to A as . Moreover, as .

Example 1.

The data set used in this example consists of the Crude Oil WTI Futures daily highest prices from 2021/7/19 to 2022/8/17. These data are publicly available and can be obtained from the website https://www.investing.com/commodities/crude-oil-historical-data. There are 287 raw data points, and we chose 11 of them as our sample subset . These data points are shown in Figure 1. We set , where and are the Crude Oil WTI Futures daily highest prices on 2021/7/19, 8/26, 10/6, 11/16, 12/28, 2022/2/2, 3/9, 4/19, 5/30, 7/5, and 8/17.

Figure 1.

Raw data and sample data.

Let be defined by , with each being replaced by ,

and choose k to be the Epanechnikov kernel $k(x)=\frac{3}{4}(1-x^2)$ for $|x|\le 1$ and $k(x)=0$ otherwise. Let and choose , , , in . We estimate M by , and set and in Theorem 3. Assume that in this example. The values of and λ are estimated by the sample variance and , respectively. By , we set .

We construct a FIF by the method given in Section 2 with linear functions and the linear polynomial p such that , , and , . The chosen values are given in Table 1.

Table 1.

The values of .

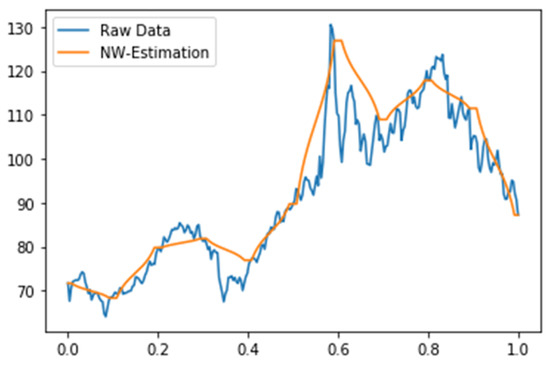

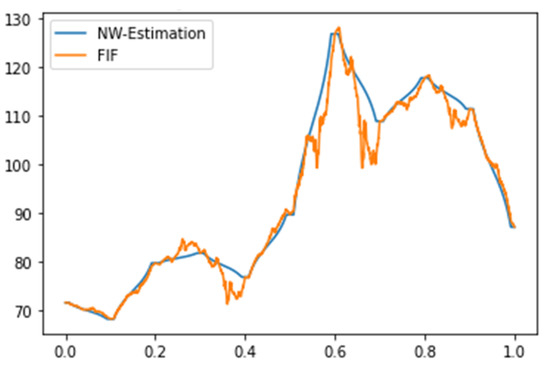

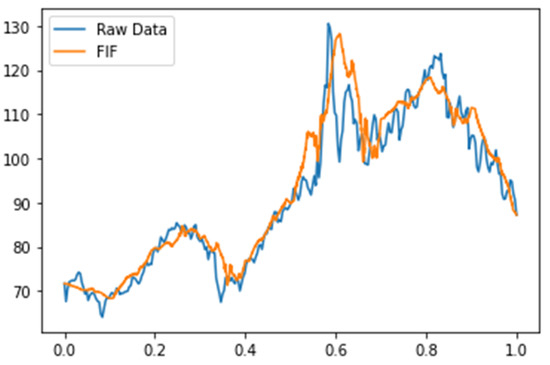

The graphs of raw data and are shown in Figure 2. The graphs of and are shown in Figure 3. The graphs of raw data and are shown in Figure 4.

Figure 2.

Raw data and .

Figure 3.

and .

Figure 4.

Raw data and .
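The workflow of Example 1 can be imitated on synthetic data. The Python sketch below does not use the crude oil data or the parameter values of Table 1: the noisy sine series, the bandwidth, and the randomly drawn scaling factors are all hypothetical stand-ins. It selects 11 of 287 points, computes a Nadaraya–Watson estimate from the sample, builds a fractal perturbation of that estimate under the assumed form f(t) = u(t) + s_i [f(L_i^{-1}(t)) - p(L_i^{-1}(t))] with affine L_i and linear p, and compares both curves with the raw data.

```python
import numpy as np

def epanechnikov(x):
    return np.where(np.abs(x) <= 1.0, 0.75 * (1.0 - x ** 2), 0.0)

def nw(t, t_obs, y_obs, d):
    """Nadaraya-Watson estimate; assumed to be 0 where the denominator vanishes."""
    w = epanechnikov((np.atleast_1d(t)[:, None] - t_obs[None, :]) / d)
    denom = w.sum(axis=1)
    return np.divide(w @ y_obs, denom, out=np.zeros_like(denom), where=denom > 0)

def fif(t, nodes, s, u, p, depth=25):
    """Truncated iteration of the assumed FIF equation, started from u."""
    if depth == 0:
        return u(t)
    i = np.clip(np.searchsorted(nodes, t, side="right") - 1, 0, len(nodes) - 2)
    x = nodes[0] + (t - nodes[i]) * (nodes[-1] - nodes[0]) / (nodes[i + 1] - nodes[i])
    return u(t) + s[i] * (fif(x, nodes, s, u, p, depth - 1) - p(x))

rng = np.random.default_rng(0)

# Synthetic raw data (NOT the data of Example 1): 287 noisy observations.
t_raw = np.linspace(0.0, 1.0, 287)
y_raw = np.sin(2.0 * np.pi * t_raw) + 0.1 * rng.standard_normal(t_raw.size)

# Sample subset: 11 roughly equally spaced raw points.
idx = np.round(np.linspace(0, t_raw.size - 1, 11)).astype(int)
t_s, y_s = t_raw[idx], y_raw[idx]

d = 0.15                                   # hypothetical bandwidth
u = lambda t: nw(t, t_s, y_s, d)           # kernel estimate from the sample

# Linear p matching u at the two endpoints of the interval.
ya, yb = u(t_s[[0]])[0], u(t_s[[-1]])[0]
p = lambda t: ya + (yb - ya) * (t - t_s[0]) / (t_s[-1] - t_s[0])

s = 0.2 * rng.uniform(-1.0, 1.0, size=t_s.size - 1)   # hypothetical scalings, |s_i| < 1

f_raw = fif(t_raw, t_s, s, u, p)
mse_nw = np.mean((u(t_raw) - y_raw) ** 2)    # estimator vs. raw data
mse_fif = np.mean((f_raw - y_raw) ** 2)      # fractal perturbation vs. raw data
```

In this sketch the perturbed curve agrees with the kernel estimate at the sample nodes, and the two mean squared errors give a rough empirical counterpart of the quantities bounded in Theorems 2 and 3. How the two errors compare depends on the chosen scaling factors, so no particular ordering should be expected.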

5. Conclusions

The purpose of this paper is to construct a fractal interpolation function (FIF) that approximates a given data set well. We consider the Nadaraya–Watson estimator for some sample data chosen from a given data set, and then apply to construct a FIF to fit the given set of data points. The Nadaraya–Watson estimator is widely used in data-fitting problems, and its fractal perturbation is considered in our paper. The expectations of the mean squared errors of such approximations are also estimated. The figures given in Example 1 illustrate the quality of curve fitting by a FIF, which is constructed from with 11 sample points to fit the 287 raw data points. We see that the error of approximation can be decreased by choosing more sample data.

In this paper, we construct a FIF to fit a given data set with the help of the Nadaraya–Watson estimator. In fact, the Priestley–Chao estimator, the Gasser–Müller estimator, and other types of kernel regression estimators can also be used in our approach. Nonparametric regression has been studied for a long time, and several types of models, together with their theoretical results and applications, have been developed by many researchers. Fractal perturbations of these models are worth investigating in the field of fractal curve fitting.

Author Contributions

Conceptualization, D.-C.L.; methodology, D.-C.L.; software, C.-W.L.; validation, D.-C.L. and C.-W.L.; formal analysis, D.-C.L.; investigation, C.-W.L.; resources, C.-W.L.; data curation, C.-W.L.; writing—original draft preparation, C.-W.L.; writing—review and editing, D.-C.L.; project administration, D.-C.L.; funding acquisition, D.-C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology, R.O.C., grant number MOST 110-2115-M-214-002.

Data Availability Statement

The data set used in this paper can be obtained from the webpage https://www.investing.com/commodities/crude-oil-historical-data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Györfi, L.; Kohler, M.; Krzyzak, A.; Walk, H. A Distribution-Free Theory of Nonparametric Regression; Springer: New York, NY, USA, 2002.

- Härdle, W.; Müller, M.; Sperlich, S.; Werwatz, A. Nonparametric and Semiparametric Models; Springer: New York, NY, USA, 2004.

- Hart, J.D. Nonparametric Smoothing and Lack-of-Fit Tests; Springer: New York, NY, USA, 1997.

- Li, Q.; Racine, J.S. Nonparametric Econometrics; Princeton University Press: Mercer County, NJ, USA, 2007.

- Tsybakov, A.B. Introduction to Nonparametric Estimation; Springer: New York, NY, USA, 2009.

- Wasserman, L. All of Nonparametric Statistics; Springer: New York, NY, USA, 2006.

- Chu, C.-K.; Marron, J.S. Choosing a kernel regression estimator. Stat. Sci. 1991, 6, 404–436.

- Jones, M.C.; Davies, S.J.; Park, B.U. Versions of kernel-type regression estimators. J. Amer. Statist. Assoc. 1994, 89, 825–832.

- Barnsley, M.F. Fractal functions and interpolation. Constr. Approx. 1986, 2, 303–329.

- Barnsley, M.F. Fractals Everywhere; Academic Press: Orlando, FL, USA, 1988.

- Marvasti, M.A.; Strahle, W.C. Fractal geometry analysis of turbulent data. Signal Process. 1995, 41, 191–201.

- Mazel, D.S. Representation of discrete sequences with three-dimensional iterated function systems. IEEE Trans. Signal Process. 1994, 42, 3269–3271.

- Mazel, D.S.; Hayes, M.H. Using iterated function systems to model discrete sequences. IEEE Trans. Signal Process. 1992, 40, 1724–1734.

- Balasubramani, N. Shape preserving rational cubic fractal interpolation function. J. Comput. Appl. Math. 2017, 319, 277–295.

- Balasubramani, N.; Guru Prem Prasad, M.; Natesan, S. Shape preserving α-fractal rational cubic splines. Calcolo 2020, 57, 21.

- Barnsley, M.F.; Elton, J.; Hardin, D.; Massopust, P. Hidden variable fractal interpolation functions. SIAM J. Math. Anal. 1989, 20, 1218–1242.

- Barnsley, M.F.; Massopust, P.R. Bilinear fractal interpolation and box dimension. J. Approx. Theory 2015, 192, 362–378.

- Chand, A.K.B.; Kapoor, G.P. Generalized cubic spline fractal interpolation functions. SIAM J. Numer. Anal. 2006, 44, 655–676.

- Chand, A.K.B.; Navascués, M.A. Natural bicubic spline fractal interpolation. Nonlinear Anal. 2008, 69, 3679–3691.

- Chand, A.K.B.; Navascués, M.A. Generalized Hermite fractal interpolation. Rev. Real Acad. Cienc. Zaragoza 2009, 64, 107–120.

- Chand, A.K.B.; Tyada, K.R. Constrained shape preserving rational cubic fractal interpolation functions. Rocky Mt. J. Math. 2018, 48, 75–105.

- Chand, A.K.B.; Vijender, N.; Viswanathan, P.; Tetenov, A.V. Affine zipper fractal interpolation functions. BIT Numer. Math. 2020, 60, 319–344.

- Chand, A.K.B.; Viswanathan, P. A constructive approach to cubic Hermite fractal interpolation function and its constrained aspects. BIT Numer. Math. 2013, 53, 841–865.

- Chandra, S.; Abbas, S.; Verma, S. Bernstein super fractal interpolation function for countable data systems. Numer. Algorithms 2022.

- Dai, Z.; Wang, H.-Y. Construction of a class of weighted bivariate fractal interpolation functions. Fractals 2022, 30, 2250034.

- Katiyar, S.K.; Chand, A.K.B. Shape preserving rational quartic fractal functions. Fractals 2019, 27, 1950141.

- Katiyar, S.K.; Chand, A.K.B.; Kumar, G.S. A new class of rational cubic spline fractal interpolation function and its constrained aspects. Appl. Math. Comput. 2019, 346, 319–335.

- Luor, D.-C. Fractal interpolation functions with partial self similarity. J. Math. Anal. Appl. 2018, 464, 911–923.

- Massopust, P.R. Fractal Functions, Fractal Surfaces, and Wavelets; Academic Press: San Diego, CA, USA, 1994.

- Massopust, P.R. Interpolation and Approximation with Splines and Fractals; Oxford University Press: New York, NY, USA, 2010.

- Miculescu, R.; Mihail, A.; Pacurar, C.M. A fractal interpolation scheme for a possible sizeable set of data. J. Fractal Geom. 2022.

- Navascués, M.A. Fractal approximation. Complex Anal. Oper. Theory 2010, 4, 953–974.

- Navascués, M.A. Fractal bases of Lp spaces. Fractals 2012, 20, 141–148.

- Navascués, M.A.; Chand, A.K.B. Fundamental sets of fractal functions. Acta Appl. Math. 2008, 100, 247–261.

- Navascués, M.A.; Pacurar, C.; Drakopoulos, V. Scale-free fractal interpolation. Fractal Fract. 2022, 6, 602.

- Prasad, S.A. Super coalescence hidden-variable fractal interpolation functions. Fractals 2021, 29, 2150051.

- Ri, S.; Drakopoulos, V. Generalized fractal interpolation curved lines and surfaces. Nonlinear Stud. 2021, 28, 427–488.

- Tyada, K.R.; Chand, A.K.B.; Sajid, M. Shape preserving rational cubic trigonometric fractal interpolation functions. Math. Comput. Simul. 2021, 190, 866–891.

- Vijender, N. Fractal perturbation of shaped functions: Convergence independent of scaling. Mediterr. J. Math. 2018, 15, 211.

- Viswanathan, P. A revisit to smoothness preserving fractal perturbation of a bivariate function: Self-Referential counterpart to bicubic splines. Chaos Solitons Fractals 2022, 157, 111885.

- Viswanathan, P.; Chand, A.K.B. Fractal rational functions and their approximation properties. J. Approx. Theory 2014, 185, 31–50.

- Viswanathan, P.; Chand, A.K.B. α-fractal rational splines for constrained interpolation. Electron. Trans. Numer. Anal. 2014, 41, 420–442.

- Viswanathan, P.; Navascués, M.A.; Chand, A.K.B. Associate fractal functions in Lp-spaces and in one-sided uniform approximation. J. Math. Anal. Appl. 2016, 433, 862–876.

- Wang, H.-Y.; Yu, J.-S. Fractal interpolation functions with variable parameters and their analytical properties. J. Approx. Theory 2013, 175, 1–18.

- Banerjee, S.; Gowrisankar, A. Frontiers of Fractal Analysis Recent Advances and Challenges; CRC Press: Boca Raton, FL, USA, 2022.

- Kumar, M.; Upadhye, N.S.; Chand, A.K.B. Linear fractal interpolation function for data set with random noise. Fractals, 2022; accepted.

- Luor, D.-C. Fractal interpolation functions for random data sets. Chaos Solitons Fractals 2018, 114, 256–263.

- Luor, D.-C. Statistical properties of linear fractal interpolation functions for random data sets. Fractals 2018, 26, 1850009.

- Luor, D.-C. Autocovariance and increments of deviation of fractal interpolation functions for random datasets. Fractals 2018, 26, 1850075.

- Luor, D.-C. On the distributions of fractal functions that interpolate data points with Gaussian noise. Chaos Solitons Fractals 2020, 135, 109743.

- Caldarola, F.; Maiolo, M. On the topological convergence of multi-rule sequences of sets and fractal patterns. Soft Comput. 2020, 24, 17737–17749.

- Wang, H.-Y.; Li, H.; Shen, J.-Y. A novel hybrid fractal interpolation-SVM model for forecasting stock price indexes. Fractals 2019, 27, 1950055.

- Tosatto, S.; Akrour, R.; Peters, J. An upper bound of the bias of Nadaraya-Watson kernel regression under Lipschitz assumptions. Stats 2021, 4, 1–17.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).