Overcoming Domain Shift in Violence Detection with Contrastive Consistency Learning

Abstract

1. Introduction

- We investigate the pervasive domain shift problem in violence detection, which has received little prior attention. To address it, we propose CoMT-VD, a contrastive Mean Teacher model designed to improve model adaptability and performance across diverse target-domain distributions.

- We introduce a dual-strategy contrastive learning (DCL) module that combines two distinct positive-negative pair matching strategies to compute complementary consistency losses. This helps the model learn more discriminative features for violence detection under challenging domain shift conditions.

- We conduct comprehensive evaluations of violence detection under challenging domain shift scenarios. The results show consistent and notable performance improvements when CoMT-VD is integrated with five baseline models (VGG16 + LSTM, C3D, ViViT, ActionNet, and SCTF).

2. Related Work

2.1. Violence Detection

2.2. Knowledge Distillation and Mean Teacher

2.3. Contrastive Learning (CL)

2.4. Domain Adaptation (DA)

3. Proposed Method

3.1. Problem Definition

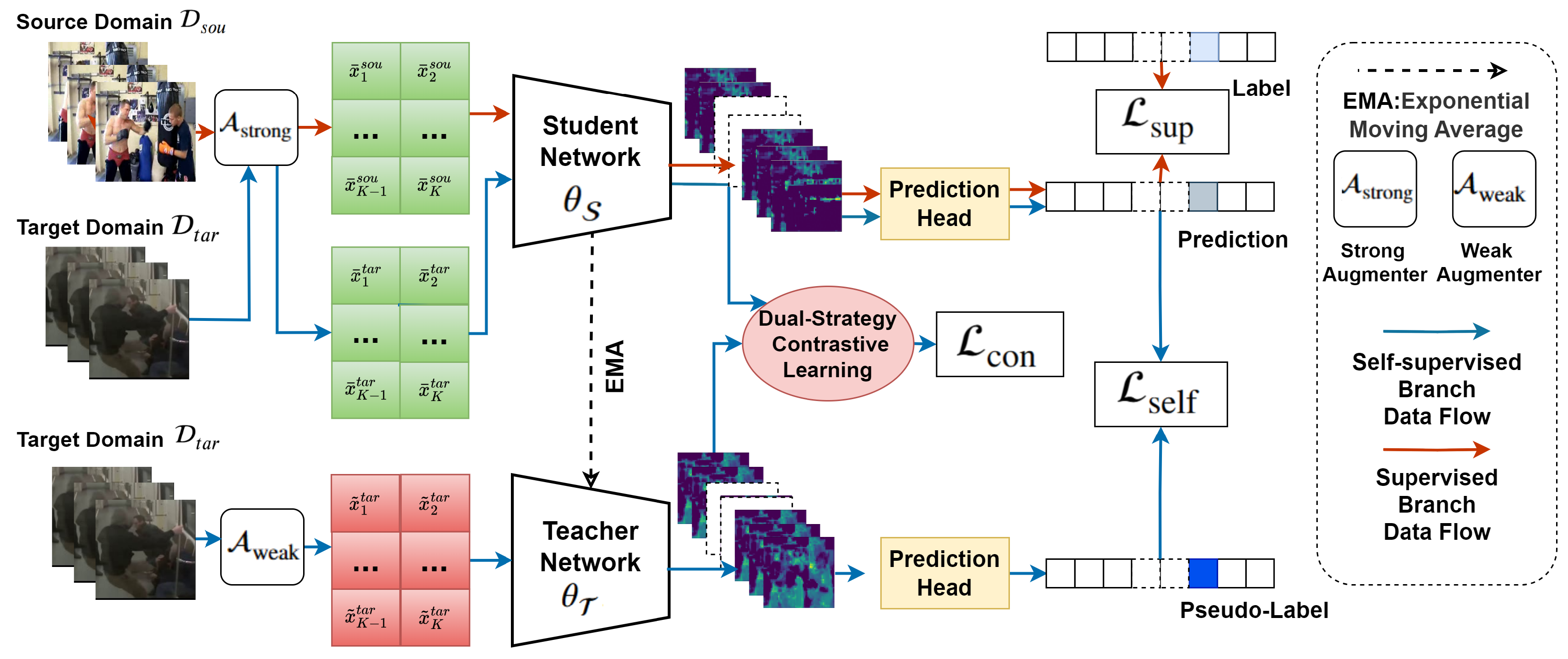

3.2. Contrastive Mean Teacher Violence Detection

3.2.1. Method Overview

3.2.2. Teacher–Student Framework

3.2.3. Cross-Domain Augmentation

- Subtle Brightness Adjustment: Pixel intensities are moderately modulated, for example, $x' = \alpha \cdot x$, where the brightness factor $\alpha$ is sampled from a narrow interval around 1.

- Gentle Frame Blending: Subtle temporal alterations or mild blending with non-violent frames are applied using a small blending coefficient $\lambda$, so that the original content remains dominant: $x' = (1 - \lambda)\, x + \lambda\, x_{n}$, where $x_{n}$ denotes a non-violent frame.

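- Significant Occlusion: Larger or more strategically disruptive occlusion masks are applied: $x' = x \odot (1 - M)$, where $M$ is a binary mask marking the occluded region and $\odot$ denotes element-wise multiplication.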

- Major Brightness and Contrast Shifts: Pixel intensities and contrast are altered dramatically. For instance, brightness [46,47] may be scaled as $x' = \alpha \cdot x$, where $\alpha$ is sampled from a much wider range than in the subtle brightness adjustment, and contrast adjustments are similarly intensified to simulate challenging real-world lighting conditions (e.g., very dark or overexposed scenes).
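The four augmentations above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' implementation: clips are assumed to be float arrays of shape (T, H, W, C) in [0, 255], all helper names are hypothetical, and the default values (brightness interval [0.9, 1.1] for the weak view and [0.5, 1.5] for the strong view, loosely following the default row of the brightness-interval ablation table, plus blending coefficient 0.1, occluded area 0.3 and contrast factor in [0.5, 1.5]) are illustrative assumptions.

```python
import numpy as np


def adjust_brightness(clip, low, high, rng):
    """Scale all pixels by one factor alpha ~ U(low, high) and clip to [0, 255]."""
    alpha = rng.uniform(low, high)
    return np.clip(clip * alpha, 0.0, 255.0)


def blend_frames(clip, other, lam):
    """Convex blend x' = (1 - lam) * x + lam * x_other; a small lam keeps x dominant."""
    return (1.0 - lam) * clip + lam * other


def occlude(clip, area_ratio, rng):
    """Zero one random rectangle covering about `area_ratio` of each frame (same box in all frames)."""
    _, h, w = clip.shape[:3]
    side = np.sqrt(area_ratio)
    oh, ow = max(1, int(h * side)), max(1, int(w * side))
    y = rng.integers(0, h - oh + 1)
    x = rng.integers(0, w - ow + 1)
    out = clip.copy()
    out[:, y:y + oh, x:x + ow] = 0.0
    return out


def adjust_contrast(clip, beta):
    """Scale deviations from the clip mean by `beta` (beta > 1 raises contrast)."""
    mean = clip.mean()
    return np.clip((clip - mean) * beta + mean, 0.0, 255.0)


def weak_view(clip, non_violent_clip, rng, lam=0.1):
    """Hypothetical weak pipeline: subtle brightness change, then gentle blending."""
    x = adjust_brightness(clip, 0.9, 1.1, rng)
    return blend_frames(x, non_violent_clip, lam)


def strong_view(clip, rng, area_ratio=0.3):
    """Hypothetical strong pipeline: large occlusion, then major brightness/contrast shifts."""
    x = occlude(clip, area_ratio, rng)
    x = adjust_brightness(x, 0.5, 1.5, rng)
    return adjust_contrast(x, rng.uniform(0.5, 1.5))
```

Keeping the weak and strong pipelines as separate functions mirrors the split between the two augmentation families, so the intervals can be varied independently, as in the ablation on augmentation intensities.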

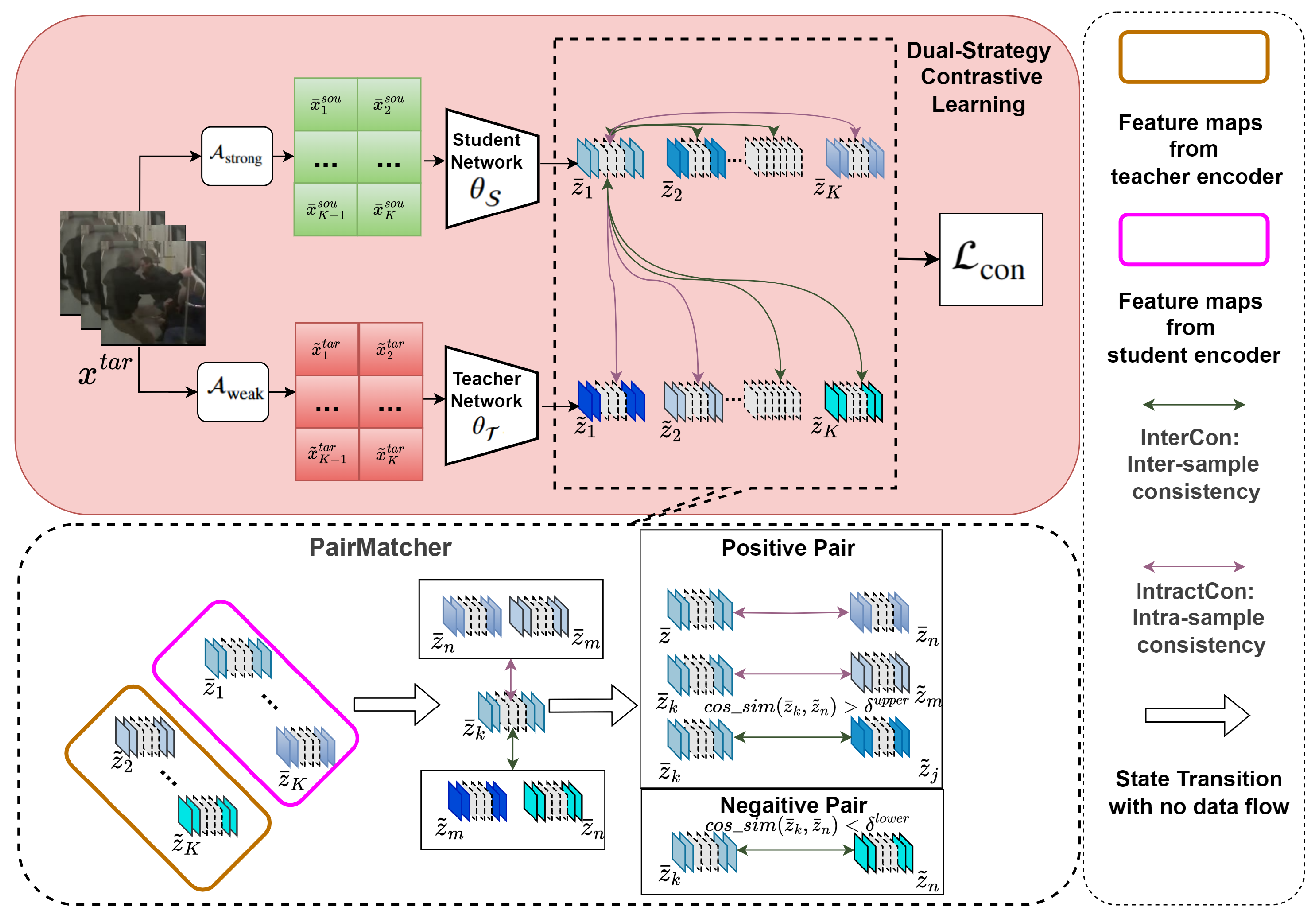

3.2.4. Dual-Strategy Contrastive Learning

- Intra-Sample Consistency: To keep the features of a sample invariant across different scenarios, we construct positive pairs from the features of the same sample under different augmentation methods, and treat features from other samples as negatives. This encourages the model to extract consistent features across varying environments and improves its robustness to noise and perturbations.

- Inter-Sample Consistency: Standard sample-level consistency forms positive pairs by matching a sample with its differently augmented views and treats other instances as negatives, the classic positive-negative pairing strategy. This encourages the network to preserve feature consistency across diverse transformations and real-world variations. However, relying solely on this approach under-utilizes the semantic relationships among distinct instances that share the same class label. To exploit the information shared by different samples of the same class within a batch, we design an inter-sample feature pairing strategy.
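Both pairing strategies can be illustrated with NumPy. The sketch below assumes L2-normalized features and a temperature τ; the intra-sample term is written as an InfoNCE (NT-Xent) loss in the style of SimCLR [24], and the inter-sample term borrows the supervised contrastive formulation of Khosla et al., in which other samples with the same label act as positives. Function names and the default τ = 0.1 are hypothetical, and the exact consistency losses used in CoMT-VD may differ.

```python
import numpy as np


def _normalize(z):
    """L2-normalize each row so dot products become cosine similarities."""
    return z / np.linalg.norm(z, axis=1, keepdims=True)


def _log_softmax_rows(logits):
    """Numerically stable row-wise log-softmax (entries of -inf get probability 0)."""
    m = logits.max(axis=1, keepdims=True)
    shifted = logits - m
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def intra_sample_loss(z_a, z_b, tau=0.1):
    """InfoNCE over 2N views: row i of z_a and row i of z_b come from the same sample.

    Positive for each view: the other view of the same sample.
    Negatives: every view of every other sample in the batch.
    """
    n = z_a.shape[0]
    z = _normalize(np.concatenate([z_a, z_b], axis=0))  # (2N, D)
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)  # a view is never its own positive or negative
    log_prob = _log_softmax_rows(sim)
    anchors = np.arange(2 * n)
    positives = (anchors + n) % (2 * n)  # index of the other view of the same sample
    return -log_prob[anchors, positives].mean()


def inter_sample_loss(z, labels, tau=0.1):
    """Supervised-contrastive style loss: other samples with the same label are positives.

    Anchors without any same-label partner in the batch are skipped.
    """
    z = _normalize(z)
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)
    log_prob = _log_softmax_rows(sim)
    same = labels[:, None] == labels[None, :]
    np.fill_diagonal(same, False)
    counts = same.sum(axis=1)
    valid = counts > 0
    # Mean log-probability of each anchor's positives, averaged over valid anchors.
    pos_sum = np.where(same, log_prob, 0.0).sum(axis=1)
    return -(pos_sum[valid] / counts[valid]).mean()
```

The two terms are complementary: the first only ever treats a sample's own views as positives, while the second also pulls together different clips that share a label.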

3.2.5. Mean Teacher Optimization

| Algorithm 1. Training Procedure of CoMT-VD |
|---|
| 1. Initialize the student network and the teacher network; |
| 2. For each training iteration do: |
| 3–4. Generate the strong augmentations; |
| 5. Generate the weak augmentations; |
| 6. Compute the features of the strongly augmented samples; |
| 7. Calculate the quantity defined in Equation (1); |
| 8. Calculate the quantity defined in Equation (2); |
| 9. Calculate the quantity defined in Equation (7); |
| 10. Calculate the overall loss; |
| 11. Update the student network using Equation (8); |
| 12. Update the teacher network using Equation (10); |
| 13. End For |
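In Mean Teacher [13], the teacher is not trained by gradient descent; its weights are an exponential moving average (EMA) of the student's weights. The sketch below shows that standard update with parameters stored as a dict of NumPy arrays, assuming Equation (10) follows this standard form; the function name and the smoothing coefficient are illustrative assumptions.

```python
import numpy as np


def ema_update(teacher, student, alpha=0.99):
    """In-place Mean Teacher update: theta_t <- alpha * theta_t + (1 - alpha) * theta_s."""
    for name, w_student in student.items():
        teacher[name] *= alpha
        teacher[name] += (1.0 - alpha) * w_student
    return teacher


# Toy run: the student is frozen at 1.0, so after k updates the teacher equals 1 - alpha**k.
student = {"w": np.ones(3)}
teacher = {"w": np.zeros(3)}
for _ in range(10):
    ema_update(teacher, student, alpha=0.9)
```

Because the teacher averages over many student states, its predictions change slowly, which is what makes them usable as consistency targets for the student.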

4. Experiments

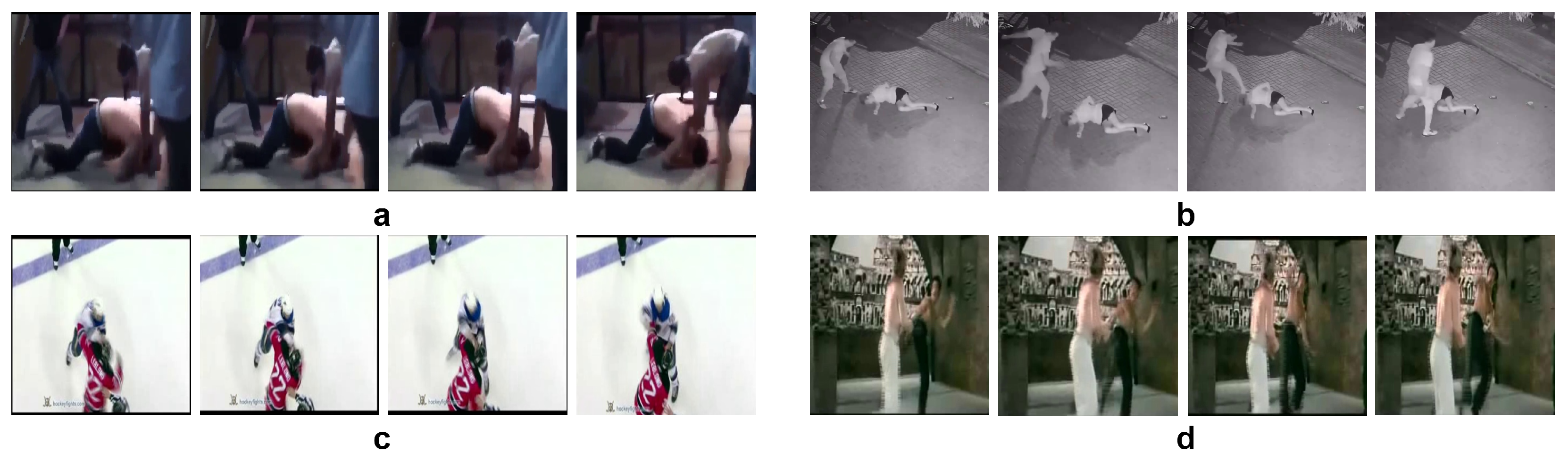

4.1. Benchmark Datasets

- RLVS [7] is a large-scale dataset captured in real-world settings, containing 1000 violent and 1000 non-violent video clips. Its videos cover diverse scenarios, including streets, classrooms, courtyards, corridors, and sports fields. Most footage is captured from a third-person perspective and predominantly shows fights between multiple individuals, with a small number of group violence scenes.

- RWF-2000 [52] is designed for real-world violent behavior detection under surveillance cameras. The videos in this dataset are collected from raw surveillance footage on YouTube, segmented into clips of up to 5 s at 30 frames per second, with each clip labeled as either violent or non-violent behavior.

- Hockey Fight [53] comprises 1000 real video clips from ice hockey matches, evenly split between 500 fight scenes and 500 normal segments. A notable limitation of this dataset is its lack of scene diversity, as all videos are confined to ice hockey rinks during matches.

- Movies [53] is relatively small, consisting of 200 video clips from action movies: 100 depicting violent scenes and 100 depicting non-violent scenes.

- Violent Flow [54] contains violent and non-violent group behaviors in real-world scenarios. The samples originate from surveillance cameras and monitoring devices and capture large-scale crowd behaviors in public spaces such as stadiums, streets, and squares. The videos fall into two categories, violent and normal, with a total of 246 video clips (123 violent and 123 non-violent).

4.2. Baselines

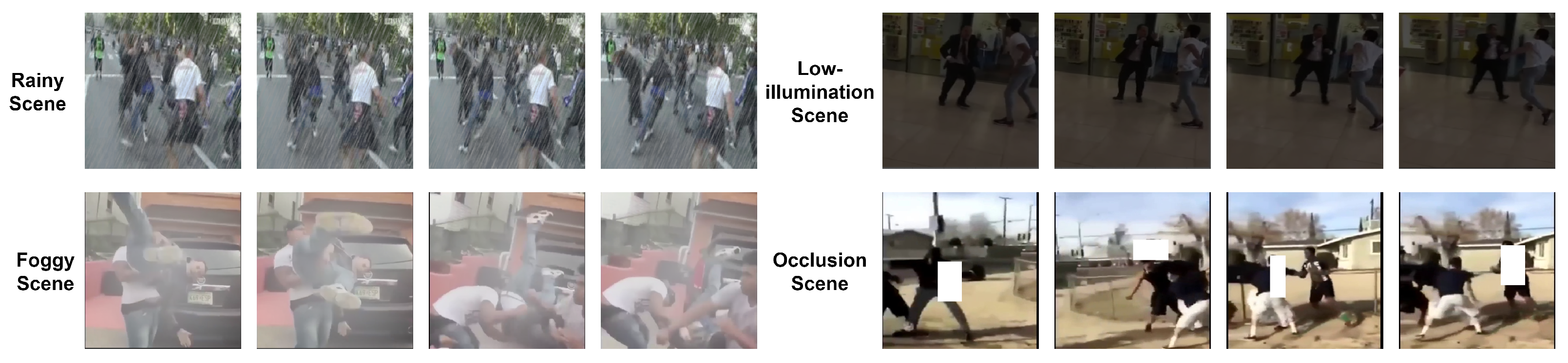

4.3. Experimental Setup

- Rain Simulation: Gaussian noise is injected into the original images, with elongated noise points that simulate raindrops. The raindrop length is set to 10 pixels and the count to 500 per frame, giving a consistent and reproducible simulation of rainy scenes [16].

- Fog Simulation: A fog-like color template [16] (fixed at an RGB value of 200) is overlaid onto the original image at 50% intensity. This process emulates the reduced visibility and contrast of foggy conditions.

- Low-Light Simulation: The brightness of each frame is systematically reduced to 40–70% of its original level. This simulates varying degrees of low-light conditions, a frequent challenge in surveillance and monitoring applications.
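The three test-time shifts can be approximated with NumPy as follows. This is a sketch under stated assumptions: frames are float arrays of shape (H, W, C) in [0, 255]; raindrops are drawn as vertical streaks whose intensity is Gaussian-distributed (mean 60 and standard deviation 20 are hypothetical values), which is only one way to realize the elongated noise described above; fog is a 50% alpha blend with a constant value of 200; and the low-light factor is drawn once per clip from [0.4, 0.7]. Function names are hypothetical.

```python
import numpy as np


def simulate_rain(frame, rng, n_drops=500, length=10, mean=60.0, std=20.0):
    """Add `n_drops` vertical streaks of `length` pixels with Gaussian intensities."""
    h, w = frame.shape[:2]
    out = frame.astype(np.float64)  # astype returns a copy, so `frame` is untouched
    ys = rng.integers(0, max(1, h - length + 1), size=n_drops)
    xs = rng.integers(0, w, size=n_drops)
    values = rng.normal(mean, std, size=n_drops)
    for y, x, v in zip(ys, xs, values):
        out[y:y + length, x] += v  # one elongated streak in column x
    return np.clip(out, 0.0, 255.0)


def simulate_fog(frame, fog_value=200.0, strength=0.5):
    """Overlay a constant fog color at the given opacity (alpha blending)."""
    return (1.0 - strength) * frame + strength * fog_value


def simulate_low_light(clip, rng, low=0.4, high=0.7):
    """Scale a whole clip to 40-70% of its original brightness (one factor per clip)."""
    return clip * rng.uniform(low, high)
```

Drawing one low-light factor per clip, rather than per frame, avoids introducing frame-to-frame flicker that is not part of the intended shift.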

4.4. Experimental Results

4.4.1. Performance for Unseen Conditions

4.4.2. Performance for Cross Datasets

5. Ablation Studies

5.1. Different Augmentation Intensities

5.2. The Impact of Other Factors on Performance

6. Conclusions

6.1. Discussion

6.2. Limitation

6.3. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Clarin, C.; Dionisio, J.; Echavez, M.; Naval, P. DOVE: Detection of movie violence using motion intensity analysis on skin and blood. PCSC 2005, 6, 150–156.

2. De Souza, F.D.; Chavez, G.C.; do Valle Jr, E.A.; Araújo, A.d.A. Violence detection in video using spatio-temporal features. In Proceedings of the 2010 23rd SIBGRAPI Conference on Graphics, Patterns and Images, Gramado, Brazil, 30 August–3 September 2010; pp. 224–230.

3. Khan, H.; Yuan, X.; Qingge, L.; Roy, K. Violence Detection from Industrial Surveillance Videos Using Deep Learning. IEEE Access 2025, 13, 15363–15375.

4. Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6479–6488.

5. Maqsood, R.; Bajwa, U.I.; Saleem, G.; Raza, R.H.; Anwar, M.W. Anomaly recognition from surveillance videos using 3D convolution neural network. Multimed. Tools Appl. 2021, 80, 18693–18716.

6. Wang, Z.; She, Q.; Smolic, A. Action-net: Multipath excitation for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13214–13223.

7. Soliman, M.M.; Kamal, M.H.; Nashed, M.A.E.M.; Mostafa, Y.M.; Chawky, B.S.; Khattab, D. Violence recognition from videos using deep learning techniques. In Proceedings of the 2019 Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019; pp. 80–85.

8. Pandey, B.; Sinha, U.; Nagwanshi, K.K. A multi-stream framework using spatial–temporal collaboration learning networks for violence and non-violence classification in complex video environments. Int. J. Mach. Learn. Cybern. 2025, 16, 4737–4766.

9. Sun, S.; Gong, X. Multi-scale bottleneck transformer for weakly supervised multimodal violence detection. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–6.

10. Rendón-Segador, F.J.; Álvarez-García, J.A.; Salazar-González, J.L.; Tommasi, T. Crimenet: Neural structured learning using vision transformer for violence detection. Neural Netw. 2023, 161, 318–329.

11. Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

12. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

13. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

14. Xu, Y.; Cao, H.; Mao, K.; Chen, Z.; Xie, L.; Yang, J. Aligning correlation information for domain adaptation in action recognition. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 6767–6778.

15. Da Costa, V.G.T.; Zara, G.; Rota, P.; Oliveira-Santos, T.; Sebe, N.; Murino, V.; Ricci, E. Dual-head contrastive domain adaptation for video action recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1181–1190.

16. Li, J.; Xu, R.; Liu, X.; Ma, J.; Li, B.; Zou, Q.; Ma, J.; Yu, H. Domain adaptation based object detection for autonomous driving in foggy and rainy weather. arXiv 2023, arXiv:2307.09676.

17. Dasgupta, A.; Jawahar, C.; Alahari, K. Source-free video domain adaptation by learning from noisy labels. Pattern Recognit. 2025, 161, 111328.

18. Xu, Y.; Cao, H.; Xie, L.; Li, X.L.; Chen, Z.; Yang, J. Video unsupervised domain adaptation with deep learning: A comprehensive survey. ACM Comput. Surv. 2024, 56, 1–36.

19. Gao, Z.; Zhao, Y.; Zhang, H.; Chen, D.; Liu, A.A.; Chen, S. A novel multiple-view adversarial learning network for unsupervised domain adaptation action recognition. IEEE Trans. Cybern. 2021, 52, 13197–13211.

20. Huang, S.W.; Lin, C.T.; Chen, S.P.; Wu, Y.Y.; Hsu, P.H.; Lai, S.H. Auggan: Cross domain adaptation with gan-based data augmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 718–731.

21. Choi, J.; Sharma, G.; Chandraker, M.; Huang, J.B. Unsupervised and semi-supervised domain adaptation for action recognition from drones. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1717–1726.

22. Cen, F.; Zhao, X.; Li, W.; Wang, G. Deep feature augmentation for occluded image classification. Pattern Recognit. 2021, 111, 107737.

23. Wang, X.; Zhang, R.; Shen, C.; Kong, T.; Li, L. Dense contrastive learning for self-supervised visual pre-training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 3024–3033.

24. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

25. Guo, Y.; Ma, S.; Su, H.; Wang, Z.; Zhao, Y.; Zou, W.; Sun, S.; Zheng, Y. Dual mean-teacher: An unbiased semi-supervised framework for audio-visual source localization. Adv. Neural Inf. Process. Syst. 2023, 36, 48639–48661.

26. Mahmoodi, J.; Nezamabadi-pour, H. A spatio-temporal model for violence detection based on spatial and temporal attention modules and 2D CNNs. Pattern Anal. Appl. 2024, 27, 46.

27. Mahmoodi, J.; Nezamabadi-Pour, H. Violence Detection in Video Using Statistical Features of the Optical Flow and 2D Convolutional Neural Network. Comput. Intell. 2025, 41, e70034.

28. Buciluǎ, C.; Caruana, R.; Niculescu-Mizil, A. Model compression. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 535–541.

29. Wu, M.C.; Chiu, C.T.; Wu, K.H. Multi-teacher knowledge distillation for compressed video action recognition on deep neural networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2202–2206.

30. Kumar, A.; Mitra, S.; Rawat, Y.S. Stable mean teacher for semi-supervised video action detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 4419–4427.

31. Wang, X.; Hu, J.F.; Lai, J.H.; Zhang, J.; Zheng, W.S. Progressive teacher-student learning for early action prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3556–3565.

32. Xiong, B.; Yang, X.; Song, Y.; Wang, Y.; Xu, C. Modality-Collaborative Test-Time Adaptation for Action Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 26732–26741.

33. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

34. Singh, A.; Chakraborty, O.; Varshney, A.; Panda, R.; Feris, R.; Saenko, K.; Das, A. Semi-supervised action recognition with temporal contrastive learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10389–10399.

35. Shah, K.; Shah, A.; Lau, C.P.; de Melo, C.M.; Chellappa, R. Multi-view action recognition using contrastive learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 3381–3391.

36. Lorre, G.; Rabarisoa, J.; Orcesi, A.; Ainouz, S.; Canu, S. Temporal contrastive pretraining for video action recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 662–670.

37. Zheng, S.; Chen, S.; Jin, Q. Few-shot action recognition with hierarchical matching and contrastive learning. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 297–313.

38. Nguyen, T.T.; Bin, Y.; Wu, X.; Hu, Z.; Nguyen, C.D.T.; Ng, S.K.; Luu, A.T. Multi-scale contrastive learning for video temporal grounding. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 6227–6235.

39. Dave, I.; Gupta, R.; Rizve, M.N.; Shah, M. Tclr: Temporal contrastive learning for video representation. Comput. Vis. Image Underst. 2022, 219, 103406.

40. Altabrawee, H.; Noor, M.H.M. STCLR: Sparse Temporal Contrastive Learning for Video Representation. Neurocomputing 2025, 630, 129694.

41. Sohn, K.; Liu, S.; Zhong, G.; Yu, X.; Yang, M.H.; Chandraker, M. Unsupervised domain adaptation for face recognition in unlabeled videos. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3210–3218.

42. Kim, D.; Tsai, Y.H.; Zhuang, B.; Yu, X.; Sclaroff, S.; Saenko, K.; Chandraker, M. Learning cross-modal contrastive features for video domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 13618–13627.

43. Chen, M.H.; Kira, Z.; AlRegib, G.; Yoo, J.; Chen, R.; Zheng, J. Temporal attentive alignment for large-scale video domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6321–6330.

44. Aich, A.; Peng, K.C.; Roy-Chowdhury, A.K. Cross-domain video anomaly detection without target domain adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 2579–2591.

45. Valois, P.H.V.; Niinuma, K.; Fukui, K. Occlusion Sensitivity Analysis With Augmentation Subspace Perturbation in Deep Feature Space. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 1–6 January 2024; pp. 4829–4838.

46. Wang, Z.; Jiang, J.x.; Zeng, S.; Zhou, L.; Li, Y.; Wang, Z. Multi-receptive field feature disentanglement with Distance-Aware Gaussian Brightness Augmentation for single-source domain generalization in medical image segmentation. Neurocomputing 2025, 638, 130120.

47. Kandel, I.; Castelli, M.; Manzoni, L. Brightness as an augmentation technique for image classification. Emerg. Sci. J. 2022, 6, 881–892.

48. Choi, J.; Sharma, G.; Schulter, S.; Huang, J.B. Shuffle and attend: Video domain adaptation. In Computer Vision–ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XII 16; Springer: Cham, Switzerland, 2020; pp. 678–695.

49. Sahoo, A.; Shah, R.; Panda, R.; Saenko, K.; Das, A. Contrast and mix: Temporal contrastive video domain adaptation with background mixing. Adv. Neural Inf. Process. Syst. 2021, 34, 23386–23400.

50. Yuan, J.; Liu, Y.; Shen, C.; Wang, Z.; Li, H. A simple baseline for semi-supervised semantic segmentation with strong data augmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 8229–8238.

51. Sohn, K. Improved deep metric learning with multi-class n-pair loss objective. In Proceedings of the Advances in Neural Information Processing Systems 29 (NIPS 2016), Barcelona, Spain, 5–10 December 2016; Volume 29.

52. Cheng, M.; Cai, K.; Li, M. RWF-2000: An open large scale video database for violence detection. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 4183–4190.

53. Bermejo Nievas, E.; Deniz Suarez, O.; Bueno García, G.; Sukthankar, R. Violence detection in video using computer vision techniques. In Computer Analysis of Images and Patterns, Proceedings of the 14th International Conference, CAIP 2011, Seville, Spain, 29–31 August 2011; Proceedings, Part II 14; Springer: Cham, Switzerland, 2011; pp. 332–339.

54. Hassner, T.; Itcher, Y.; Kliper-Gross, O. Violent flows: Real-time detection of violent crowd behavior. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 1–6.

55. Song, W.; Zhang, D.; Zhao, X.; Yu, J.; Zheng, R.; Wang, A. A novel violent video detection scheme based on modified 3D convolutional neural networks. IEEE Access 2019, 7, 39172–39179.

56. Tan, Z.; Xia, Z.; Wang, P.; Wu, D.; Li, L. SCTF: An efficient neural network based on local spatial compression and full temporal fusion for video violence detection. Multimed. Tools Appl. 2024, 83, 36899–36919.

57. Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 6836–6846.

| Dataset (ACC, %) | VGG16 + LSTM [7] | VGG16 + LSTM + CoMT-VD | C3D [55] | C3D + CoMT-VD | ViViT [57] | ViViT + CoMT-VD | ActionNet [6] | ActionNet + CoMT-VD | SCTF [56] | SCTF + CoMT-VD |
|---|---|---|---|---|---|---|---|---|---|---|
| *Rainy scenes* | | | | | | | | | | |
| RLVS [7] | 71.5 | 78.3 (+6.8) | 70.3 | 78.5 (+8.2) | 69.5 | 79.3 (+9.8) | 73.3 | 80.3 (+7.0) | 74.0 | 82.8 (+8.8) |
| RWF-2000 [52] | 69.5 | 78.8 (+9.3) | 70.8 | 79.3 (+8.5) | 68.8 | 76.3 (+7.5) | 70.0 | 79.5 (+9.5) | 73.5 | 79.8 (+6.3) |
| Hockey Fight [53] | 72.3 | 83.5 (+9.2) | 71.5 | 82.3 (+10.8) | 70.5 | 78.0 (+7.5) | 73.5 | 82.3 (+8.8) | 76.3 | 85.0 (+9.7) |
| Movies [53] | 75.0 | 80.0 (+5.0) | 72.5 | 80.0 (+7.5) | 72.5 | 77.5 (+5.0) | 75.0 | 80.0 (+5.0) | 77.5 | 85.0 (+7.5) |
| Violent Flow [54] | 72.0 | 84.0 (+12.0) | 76.0 | 82.0 (+6.0) | 70.0 | 78.0 (+8.0) | 76.0 | 86.0 (+10.0) | 78.0 | 84.0 (+6.0) |
| *Foggy scenes* | | | | | | | | | | |
| RLVS [7] | 71.8 | 77.5 (+5.7) | 69.3 | 76.3 (+7.0) | 69.8 | 75.0 (+5.2) | 74.5 | 84.5 (+10.0) | 73.0 | 83.3 (+10.3) |
| RWF-2000 [52] | 67.3 | 78.5 (+11.2) | 69.8 | 78.3 (+8.5) | 69.5 | 75.8 (+6.3) | 72.3 | 80.3 (+8.0) | 73.8 | 81.5 (+7.8) |
| Hockey Fight [53] | 69.5 | 78.0 (+8.5) | 71.0 | 77.5 (+6.5) | 68.0 | 77.0 (+9.0) | 73.0 | 82.0 (+9.0) | 75.5 | 83.5 (+8.0) |
| Movies [53] | 72.5 | 77.5 (+5.0) | 69.0 | 80.0 (+11.0) | 68.5 | 77.5 (+9.0) | 75.0 | 85.0 (+10.0) | 75.0 | 82.5 (+7.5) |
| Violent Flow [54] | 70.0 | 76.0 (+6.0) | 72.0 | 80.0 (+8.0) | 68.0 | 78.0 (+10.0) | 78.0 | 84.0 (+6.0) | 78.0 | 86.0 (+8.0) |
| *Low-light scenes* | | | | | | | | | | |
| RLVS [7] | 70.0 | 75.5 (+5.5) | 69.3 | 77.0 (+7.7) | 67.5 | 76.8 (+9.3) | 70.3 | 81.3 (+11.0) | 72.0 | 81.8 (+9.8) |
| RWF-2000 [52] | 67.0 | 79.3 (+12.3) | 68.5 | 78.8 (+9.3) | 66.5 | 75.3 (+8.8) | 69.0 | 79.0 (+10.0) | 71.3 | 79.3 (+8.0) |
| Hockey Fight [53] | 69.0 | 78.5 (+9.5) | 68.0 | 78.0 (+10.0) | 67.5 | 77.0 (+9.5) | 71.5 | 80.5 (+9.0) | 75.5 | 82.0 (+6.5) |
| Movies [53] | 70.0 | 77.5 (+7.5) | 70.0 | 77.5 (+7.5) | 70.0 | 75.0 (+5.0) | 70.0 | 80.0 (+10.0) | 75.0 | 82.0 (+7.0) |
| Violent Flow [54] | 68.0 | 78.0 (+10.0) | 72.0 | 80.0 (+10.0) | 70.0 | 76.0 (+6.0) | 76.0 | 86.0 (+10.0) | 76.0 | 84.0 (+8.0) |
| *Occlusion scenes* | | | | | | | | | | |
| RLVS [7] | 68.8 | 76.8 (+8.0) | 70.3 | 78.8 (+8.5) | 67.5 | 77.3 (+9.8) | 71.3 | 82.5 (+11.2) | 72.0 | 84.3 (+12.3) |
| RWF-2000 [52] | 67.3 | 78.5 (+11.2) | 68.3 | 79.0 (+10.7) | 66.8 | 77.0 (+11.2) | 72.0 | 80.3 (+10.3) | 71.8 | 81.3 (+9.5) |
| Hockey Fight [53] | 68.5 | 79.0 (+10.5) | 69.0 | 80.5 (+11.5) | 67.5 | 77.5 (+10.0) | 72.5 | 81.0 (+8.5) | 75.5 | 82.5 (+7.0) |
| Movies [53] | 70.0 | 77.5 (+7.5) | 67.5 | 80.0 (+12.5) | 67.5 | 77.5 (+10.0) | 72.5 | 80.0 (+7.5) | 72.5 | 82.5 (+10.0) |
| Violent Flow [54] | 66.0 | 78.0 (+12.0) | 68.0 | 76.0 (+8.0) | 66.0 | 76.0 (+10.0) | 78.0 | 84.0 (+6.0) | 76.0 | 86.0 (+10.0) |

| Dataset (ACC, %) | VGG16 + LSTM | VGG16 + LSTM + CoMT-VD | C3D | C3D + CoMT-VD | ViViT | ViViT + CoMT-VD | ActionNet | ActionNet + CoMT-VD | SCTF | SCTF + CoMT-VD |
|---|---|---|---|---|---|---|---|---|---|---|
| RLVS | 67.4 | 75.2 (+7.8) | 69.3 | 75.1 (+5.8) | 68.7 | 74.8 (+6.1) | 72.9 | 78.6 (+5.7) | 74.1 | 79.5 (+5.4) |
| RWF-2000 | 68.3 | 75.8 (+7.5) | 70.0 | 76.7 (+6.7) | 69.4 | 78.6 (+9.2) | 74.9 | 80.0 (+5.1) | 73.5 | 81.7 (+8.2) |
| RLVS + RWF-2000 | 71.3 | 78.7 (+7.4) | 72.3 | 79.5 (+7.2) | 71.3 | 78.9 (+7.6) | 75.8 | 82.9 (+7.1) | 75.0 | 82.3 (+7.3) |

| Dataset (ACC, %) | VGG16 + LSTM | VGG16 + LSTM + CoMT-VD | C3D | C3D + CoMT-VD | ViViT | ViViT + CoMT-VD | ActionNet | ActionNet + CoMT-VD | SCTF | SCTF + CoMT-VD |
|---|---|---|---|---|---|---|---|---|---|---|
| RLVS | 72.5 | 78.0 (+5.5) | 71.5 | 76.5 (+5.0) | 69.5 | 76.0 (+6.5) | 76.0 | 82.0 (+6.0) | 74.5 | 82.0 (+7.5) |
| RWF-2000 | 69.0 | 77.5 (+8.5) | 72.0 | 79.5 (+7.5) | 68.0 | 74.0 (+6.0) | 74.0 | 83.5 (+9.5) | 76.0 | 84.0 (+8.0) |
| RLVS + RWF-2000 | 72.0 | 80.0 (+8.0) | 70.0 | 82.0 (+12.0) | 72.0 | 78.0 (+6.0) | 78.0 | 86.5 (+8.5) | 76.5 | 86.0 (+9.5) |

| Methods (ACC, %) | | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|
| [0.5, 1.5] | [0.9, 1.1] | 81.8 | 79.3 | 82.3 | 81.5 | 84.0 |
| [0.5, 1.5] | [0.9, 1.2] | 79.0 (−2.8) | 80.1 (−2.2) | 79.6 (−1.9) | 78.8 (−2.7) | 80.2 (−3.8) |
| [0.5, 1.5] | [1.0, 1.1] | 78.4 (−3.4) | 79.2 (−3.1) | 78.1 (−4.2) | 77.8 (−3.7) | 78.8 (−5.2) |
| [0.5, 1.5] | [0.8, 1.0] | 79.5 (−2.3) | 76.3 (−3.0) | 78.5 (−3.8) | 77.0 (−4.5) | 78.0 (−6.0) |
| [0.5, 1.4] | [0.9, 1.1] | 77.2 (−4.6) | 76.4 (−2.9) | 78.0 (−4.3) | 76.5 (−5.0) | 79.3 (−4.7) |
| [0.6, 1.5] | [0.9, 1.1] | 76.8 (−5.0) | 75.0 (−4.3) | 79.6 (−1.9) | 77.8 (−3.7) | 78.2 (−5.8) |
| [0.4, 1.5] | [0.9, 1.1] | 78.0 (−3.8) | 78.8 (−2.7) | 79.3 (−2.2) | 78.2 (−3.3) | 80.1 (−3.9) |

| Methods (ACC, %) | | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|
| 0.3 | 0.1 | 81.8 | 79.3 | 82.3 | 81.5 | 84.0 |
| 0.3 | 0.2 | 77.8 (−4.0) | 76.3 (−3.0) | 79.8 (−2.5) | 78.5 (−3.0) | 79.7 (−4.3) |
| 0.2 | 0.1 | 76.2 (−5.6) | 75.0 (−4.3) | 77.5 (−4.8) | 76.3 (−5.2) | 79.1 (−5.9) |
| 0.4 | 0.1 | 78.3 (−3.5) | 77.0 (−5.3) | 78.6 (−3.7) | 78.3 (−3.2) | 80.0 (−4.0) |

| Methods (ACC, %) | | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|
| 0.4 | 0.1 | 81.8 | 79.3 | 82.3 | 83.5 | 84.0 |
| 0.4 | 0.2 | 79.3 (−2.5) | 76.3 (−3.0) | 78.6 (−3.7) | 80.6 (−2.9) | 80.2 (−3.8) |
| 0.3 | 0.1 | 78.5 (−3.3) | 78.2 (−1.1) | 77.9 (−4.4) | 80.6 (−2.7) | 79.3 (−4.7) |
| 0.5 | 0.1 | 77.3 (−4.5) | 74.5 (−3.8) | 77.2 (−5.1) | 79.2 (−4.3) | 79.8 (−4.2) |

| Baseline | Mean Teacher | DCL | RLVS [7] | RWF-2000 [52] | RLVS + RWF-2000 |
|---|---|---|---|---|---|
| C3D | × | × | 69.3 | 70.0 | 72.3 |
| C3D | √ | × | 73.3 (+4.0) | 75.5 (+5.5) | 76.0 (+3.7) |
| C3D | × | √ | 71.4 (+2.1) | 74.5 (+2.1) | 74.25 (+1.75) |
| C3D | √ | √ | 75.1 (+5.8) | 76.7 (+6.7) | 79.5 (+7.2) |
| SCTF | × | × | 74.1 | 73.5 | 75.0 |
| SCTF | √ | × | 76.3 (+2.2) | 78.0 (+4.5) | 78.3 (+3.3) |
| SCTF | × | √ | 76.2 (+2.1) | 76.5 (+6.0) | 77.5 (+2.5) |
| SCTF | √ | √ | 79.5 (+5.4) | 81.7 (+8.2) | 82.3 (+7.3) |

| Methods | Dataset | ||||

|---|---|---|---|---|---|

| Baseline | Mean Teacher | DCL | RLVS [7] | RWF-2000 [52] | RLVS + RWF-2000 |

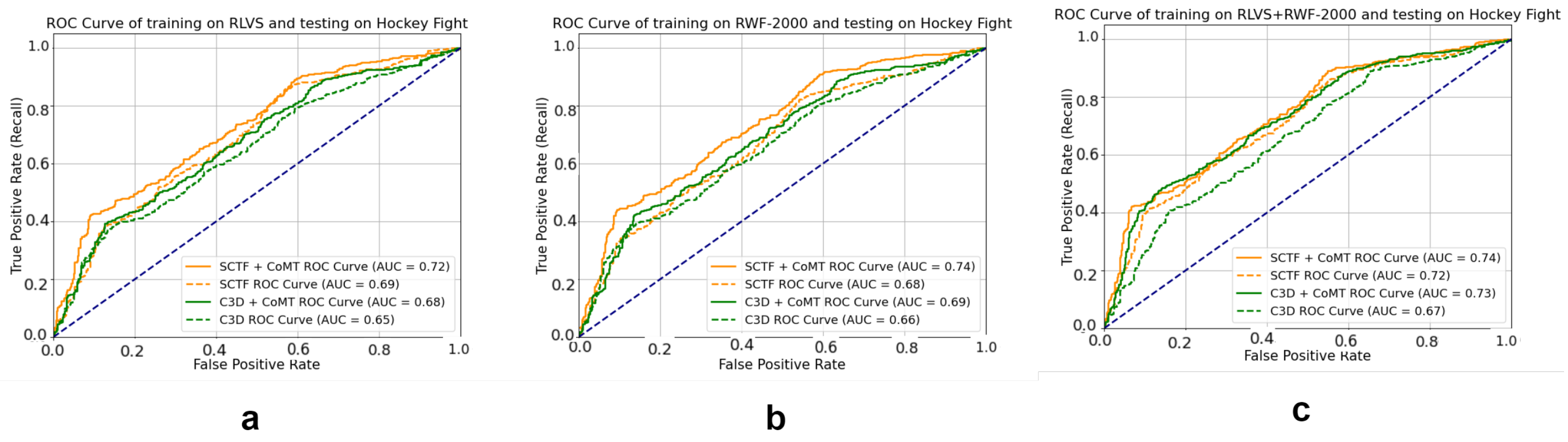

| C3D | × | × | 0.709 | 0.715 | 0.713 |

| √ | × | 0.728 (+0.019) | 0.749 (+0.034) | 0.742 (+0.029) | |

| × | √ | 0.716 (+0.007) | 0.734 (+0.021) | 0.729 (+0.013) | |

| √ | √ | 0.748 (+0.039) | 0.756 (+0.041) | 0.757 (+0.044) | |

| SCTF | × | × | 0.712 | 0.724 | 0.728 |

| √ | × | 0.731 (+0.019) | 0.732 (+0.008) | 0.738 (+0.010) | |

| × | √ | 0.728 (+0.016) | 0.729 (+0.005) | 0.736 (+0.008) | |

| √ | √ | 0.769 (+0.057) | 0.773 (+0.049) | 0.786 (+0.058) | |

| Baseline | Mean Teacher | DCL | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|---|
| C3D | × | × | 70.3 | 69.4 | 70.6 | 71.6 | 72.0 |
| C3D | √ | × | 74.0 (+3.7) | 75.3 (+5.9) | 76.0 (+5.4) | 78.5 (+7.5) | 76.0 (+3.3) |
| C3D | × | √ | 72.5 (+2.2) | 73.8 (+4.4) | 74.4 (+3.8) | 72.5 (+4.5) | 70.0 (+4.0) |
| C3D | √ | √ | 77.3 (+7.0) | 78.8 (+10.3) | 79.5 (+11.0) | 79.0 (+11.0) | 78.0 (+12.0) |
| SCTF | × | × | 72.6 | 72.8 | 75.5 | 75.7 | 76.2 |
| SCTF | √ | × | 79.5 (+6.9) | 78.0 (+5.2) | 80.0 (+4.5) | 79.5 (+3.8) | 82.6 (+6.4) |
| SCTF | × | √ | 75.5 (+2.9) | 74.6 (+1.8) | 78.3 (+2.8) | 77.3 (+1.6) | 79.3 (+3.1) |
| SCTF | √ | √ | 83.8 (+11.2) | 80.2 (+7.4) | 82.7 (+6.3) | 81.5 (+5.8) | 85.7 (+9.5) |

| Baseline | Mean Teacher | DCL | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|---|
| C3D | × | × | 0.712 | 0.721 | 0.723 | 0.719 | 0.726 |
| C3D | √ | × | 0.726 (+0.014) | 0.737 (+0.016) | 0.742 (+0.019) | 0.751 (+0.032) | 0.748 (+0.022) |
| C3D | × | √ | 0.719 (+0.007) | 0.729 (+0.008) | 0.738 (+0.015) | 0.727 (+0.008) | 0.740 (+0.014) |
| C3D | √ | √ | 0.732 (+0.019) | 0.743 (+0.022) | 0.748 (+0.025) | 0.756 (+0.037) | 0.751 (+0.025) |
| SCTF | × | × | 0.723 | 0.730 | 0.739 | 0.732 | 0.741 |
| SCTF | √ | × | 0.743 (+0.020) | 0.755 (+0.025) | 0.762 (+0.023) | 0.786 (+0.054) | 0.791 (+0.050) |
| SCTF | × | √ | 0.736 (+0.013) | 0.742 (+0.012) | 0.769 (+0.030) | 0.751 (+0.019) | 0.773 (+0.032) |
| SCTF | √ | √ | 0.802 (+0.079) | 0.781 (+0.051) | 0.793 (+0.054) | 0.810 (+0.078) | 0.797 (+0.056) |

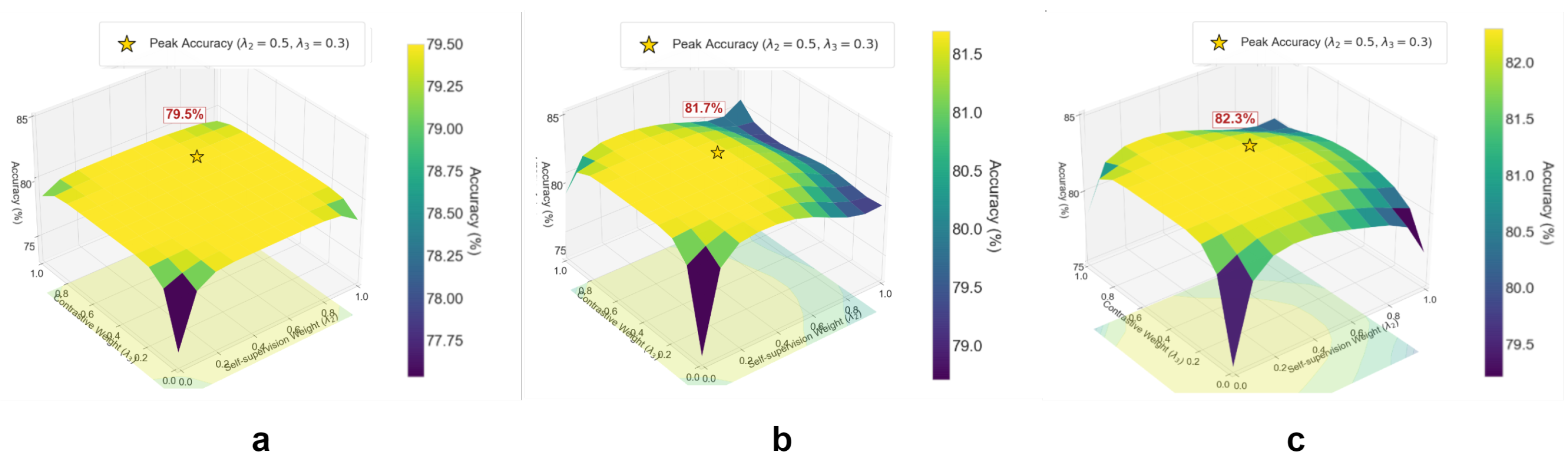

| Methods (ACC, %) | | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|
| 0.7 | 0.3 | 81.8 | 79.3 | 82.3 | 81.5 | 84.0 |
| 0.7 | 0.4 | 79.0 (−2.8) | 78.5 (−2.8) | 79.6 (−2.7) | 79.3 (−2.2) | 82.6 (−1.4) |
| 0.7 | 0.2 | 78.9 (−2.9) | 79.0 (−0.3) | 79.8 (−2.5) | 78.9 (−2.6) | 80.9 (−3.1) |
| 0.6 | 0.3 | 79.8 (−2.0) | 78.2 (−1.1) | 79.7 (−1.6) | 79.2 (−2.3) | 82.2 (−1.8) |
| 0.8 | 0.3 | 78.3 (−3.5) | 77.3 (−2.6) | 80.2 (−2.1) | 80.4 (−1.1) | 81.2 (−2.8) |

| Augmenter in CoMT-VD | Rotation | Cropping | RLVS [7] | RWF-2000 [52] | Hockey Fight [53] | Movies [53] | Violent Flow [54] |
|---|---|---|---|---|---|---|---|
| Occlusion, Brightness, Blending | × | × | 83.8 | 80.2 | 82.7 | 81.5 | 85.7 |
| Occlusion, Brightness, Blending | × | √ | 81.6 (−2.2) | 79.3 (−0.9) | 81.5 (−1.2) | 79.0 (−2.5) | 83.6 (−2.1) |
| Occlusion, Brightness, Blending | √ | × | 80.3 (−3.5) | 79.1 (−1.1) | 80.9 (−1.8) | 81.8 (−1.9) | 84.5 (−1.2) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xia, Z.; Tan, Z.; Zhang, B. Overcoming Domain Shift in Violence Detection with Contrastive Consistency Learning. Big Data Cogn. Comput. 2025, 9, 286. https://doi.org/10.3390/bdcc9110286

Xia Z, Tan Z, Zhang B. Overcoming Domain Shift in Violence Detection with Contrastive Consistency Learning. Big Data and Cognitive Computing. 2025; 9(11):286. https://doi.org/10.3390/bdcc9110286

Chicago/Turabian Style: Xia, Zhenche, Zhenhua Tan, and Bin Zhang. 2025. "Overcoming Domain Shift in Violence Detection with Contrastive Consistency Learning" Big Data and Cognitive Computing 9, no. 11: 286. https://doi.org/10.3390/bdcc9110286

APA Style: Xia, Z., Tan, Z., & Zhang, B. (2025). Overcoming Domain Shift in Violence Detection with Contrastive Consistency Learning. Big Data and Cognitive Computing, 9(11), 286. https://doi.org/10.3390/bdcc9110286