1. Introduction

With the rapid development of Artificial Intelligence (AI) and multimodal learning [1,2,3], Emotion Recognition (ER) [4], particularly Bimodal Emotion Recognition (BER) [5] and Multimodal Emotion Recognition (MER) [6], has become a core component of effective Human-Computer Interaction (HCI) [7,8,9]. By leveraging multiple modalities such as audio, text, and visual data, MER systems can capture the complex and subtle nature of human affective states, which are rarely conveyed through a single channel [10,11]. These systems are increasingly adopted in domains such as mental health monitoring, personalized learning, and affect-aware virtual assistants [12,13,14]. Among them, BER systems based on audio–text analysis are particularly widely adopted, as they combine linguistic content with paralinguistic features (prosody, tone). Their practicality in privacy-sensitive applications (e.g., voice assistants, telehealth) further favors them over systems that rely on visual modalities [15,16].

The development of ER models [17,18] is grounded in psychological theories of emotion classification. An influential framework is Ekman’s basic emotions theory [19], which identifies six universal emotions: happiness, sadness, anger, fear, disgust, and surprise. Another widely used model is Russell’s circumplex model [20], which maps emotions onto a two-dimensional plane defined by arousal (high to low) and valence (pleasant to unpleasant). These psychological theories provide a conceptual foundation for computational methods used in emotion classification tasks [21].

Recent developments in ER show a strong shift toward multimodal [22] and Transformer-based [23] architectures that leverage pre-trained encoders for audio and text, with increasingly sophisticated attention-based or graph-based fusion mechanisms. These models demonstrate impressive performance on benchmark corpora and continue to evolve with the integration of newer techniques such as State-Space Models (SSMs) (e.g., Mamba) [24], adaptive attention [25,26], and unsupervised [27,28,29] or weakly supervised learning [30,31]. However, despite their success, several key challenges persist. First, many State-of-the-Art (SOTA) models are trained and evaluated on English corpora, which limits their effectiveness in multilingual contexts. The ability to handle multiple languages simultaneously is important for a wide variety of multilingual human–computer interaction systems [32]. Second, modality fusion often lacks fine-grained alignment: most methods either fuse at a high level or focus on short-range dependencies, failing to capture nuanced cross-modal dynamics over time. Third, corpora suffer from class imbalance, variation in utterance lengths, and limited emotional diversity, which can lead to overfitting and poor generalization in real-world applications. Finally, current data augmentation strategies tend to be generic, lacking task-specific adaptation or emotional semantics, particularly in low-resource or cross-lingual settings. To the best of our knowledge, no existing method jointly addresses linguistic diversity, fine-grained cross-modal alignment across variable-length utterances, and context-specific data augmentation within a unified framework.

To address these limitations, a BER method is proposed that integrates audio and text modalities using cross-lingual encoders fine-tuned jointly on the Russian Emotional Speech Dialogs (RESD) (Russian) [33] (https://huggingface.co/datasets/Aniemore/resd, accessed on 28 September 2025) and Multimodal EmotionLines Dataset (MELD) (English) [34] (https://github.com/declare-lab/MELD, accessed on 28 September 2025) corpora. To combine the outputs of the unimodal encoders, a fusion model is introduced, which is based on a Transformer architecture with cross-modal attention, enabling feature interaction across the time and feature axes. To mitigate overfitting and improve generalization, three complementary data augmentation strategies are developed and systematically evaluated.

First, two sampling-based augmentation methods are introduced. The Stacked Data Sampling (SDS) method concatenates short utterances into longer, semantically diverse samples, which reduces the variability in sequence lengths and enriches the corpus. The Template-based Utterance Generation (TUG) method applies generative augmentation using Large Language Models (LLMs) to synthesize emotionally relevant text samples, which are then converted to speech using high-fidelity Text-to-Speech (TTS) models. This method improves class balance and enhances emotional diversity in training. Second, an LLM-guided label smoothing strategy is proposed that replaces conventional uniform smoothing with semantically aware target distributions. Rather than assigning equal weight to non-target emotions, an LLM generates soft labels that reflect nuanced emotional co-occurrence based on the input context.

This leads to the following research questions:

RQ1: Can a cross-lingual BER model improve generalization across languages?

RQ2: Can a hierarchical cross-modal attention mechanism effectively handle variable utterance lengths?

RQ3: Do corpus-specific augmentation strategies improve the generalization of cross-lingual BER models?

The main contributions of the article are as follows:

A Cross-Lingual BER model is proposed that integrates Mamba-based cross-lingual unimodal encoders with a Transformer-based cross-modal fusion model, achieving model generalization across English and Russian corpora.

Two complementary data sampling methods are introduced: segment-level SDS and TUG based on emotional TTS generation, both designed to enrich emotional variability and mitigate class imbalance.

A label smoothing method, Label Smoothing Generation based on LLM (LS-LLM), is developed that uses an LLM to produce context-dependent soft labels, reducing model overconfidence by accounting for the complex nature of emotional co-occurrence.

The rest of the article is organized as follows: In Section 2, SOTA ER methods and data augmentation strategies are described. Section 3 provides a detailed explanation of the proposed method. Section 4 outlines the research corpora, performance measures, and experimental results. Section 5 discusses the advantages and limitations of the proposed method, its applicability in intelligent systems, and the research questions. Finally, Section 6 summarizes the key findings and outlines future research directions.

2. Related Work

This section reviews SOTA ER methods, covering unimodal, bimodal, and multimodal methods, with a focus on multimodal fusion techniques. It also discusses modern data augmentation strategies that mitigate overfitting to the training data and address class imbalance.

2.1. State-of-the-Art Methods for Emotion Recognition

Early ER methods primarily focused on single modalities such as video (e.g., facial expressions [35], body language [36]), text (e.g., sentiment [37] and affective language [38]), or audio (e.g., prosody [39] and speech patterns [40,41]). Although these methods have demonstrated effectiveness in controlled settings, they often struggle to generalize to real-world scenarios where emotional expression is inherently multimodal.

To overcome these limitations, recent research [4,42,43] has increasingly focused on BER systems that integrate complementary information from multiple channels. These systems exploit the dual nature of spoken language: while text captures semantic content, audio encodes how speech is delivered through intonation, rhythm, pitch, and other prosodic features. Crucially, these modalities exhibit complementary strengths: text-based models often struggle when emotion is conveyed through linguistically neutral content, whereas audio-based systems are sensitive to speaker variability and noise. By fusing both, BER models create richer and more robust emotional representations [44,45]. More broadly, ER has emerged as a core task in affective computing [46], driven by advances in deep learning [17,18] and representation learning [47,48].

Various multimodal corpora have been developed to support the training and evaluation of ER models. Table 1 presents an overview of commonly used corpora in English and Russian. Despite their differences in size and modality, many of these corpora face recurring issues such as class imbalance, annotation noise, and limited linguistic diversity, all of which restrict the generalizability of trained models. The generalizability of ER depends not only on corpus selection but also on robust preprocessing and feature extraction, which are critical steps that shape emotion modeling. As each modality (audio, text) encodes emotions differently, each requires specialized techniques to extract information. To align and integrate features across modalities, attention mechanisms [18,49,50] have become a standard design element in BER architectures. Self-attention is effective for capturing long-range dependencies in text, while cross-modal attention allows the system to relate semantic and prosodic cues to improve emotional inference.

A comparative overview of ER methods is shown in Table 2. Although earlier methods relied on hand-crafted features [57,58,59], current methods leverage deep neural encoders trained directly on raw or minimally processed data [60,61,62]. Textual encoders are commonly based on large pre-trained Transformer models such as Bidirectional Encoder Representations from Transformers (BERT) [63], Robustly optimized BERT approach (RoBERTa) [64], A Lite BERT (ALBERT) [65], or multilingual embeddings such as Jina-v3 [66] and Jina-v4 (https://huggingface.co/jinaai/jina-embeddings-v4, accessed on 28 September 2025). For audio, recent methods rely on large pre-trained speech models such as the self-supervised Wav2Vec [32,67,68,69] and Extended HuBERT (ExHuBERT) [70], and the weakly supervised Whisper [71,72,73]. For modality fusion, Transformer-based [74,75,76,77], graph-based [42,78,79,80], and Mamba-based [81,82,83,84] models are predominantly employed, as they allow dynamic integration of information from both linguistic and acoustic modalities.

2.1.1. Transformer-Based Methods

Transformer-based architectures represent one of the most prominent directions in BER, utilizing attention mechanisms to align and integrate linguistic and acoustic characteristics. These models differ in their fusion strategies, ranging from early attention pooling to deep co-attentional encoding, and in how they refine input representations [103].

Several recent models enhance speech processing through modality-specific attention. DropFormer [92], for instance, applies Drop Attention to emphasize emotion-relevant segments and includes a Token Dropout Module to suppress irrelevant information. Similarly, Zhao et al. [93] propose a dynamically refined multimodal framework that integrates Sliding Adaptive Window Attention (SAWA), a Gated Context Perception Unit (GCPU), and Dynamic Frame Convolution (DFC) to mitigate information misalignment and improve acoustic–textual interaction. García et al. [94] investigate deep learning-based beamforming methods using simulated acoustic data in Human-Robot Interaction (HRI) scenarios. Sun et al. [95] present the Transformer-based Multi-perspective Fusion Search Network (MFSN), which separates and optimally fuses textual and speech-related emotional content.

Other Transformer-based methods focus on cross-modal encoding. Ryumina et al. [18] present a gated attention fusion mechanism that aggregates emotion features from RoBERTa and Wav2Vec2.0 across multiple corpora, achieving strong cross-corpus generalization. Delbrouck et al. [86] design a modular Transformer encoder with co-attention and a glimpse layer to jointly model emotional signals from text and audio. Phukan et al. [97] conduct a comprehensive evaluation of paralinguistic pre-trained models, demonstrating that TRILLsson, a model trained on non-semantic speech tasks, consistently outperforms others such as Whisper [71], XLS-R [104], and Waveform-based Language Model (WavLM) [105].

Extending attention-based fusion to video and context modeling, Huang et al. [101] introduce MM-NodeFormer, a Transformer model that dynamically reweights textual, acoustic, and visual inputs according to their emotional salience, treating audio and video as auxiliary to text. Luo et al. [76] present a cross-modal attention consistency framework that regularizes representations from different modalities through a consistency loss. Zhao et al. [77] propose R1-Omni (https://huggingface.co/StarJiaxing/R1-Omni-0.5B, accessed on 28 September 2025), a Reinforcement Learning (RL)-enhanced model that optimizes fusion weights for better robustness to distribution shifts and improves interpretability by modeling modality-specific contributions. Similarly, Kim and Cho [74] target audio–text interaction using a Cross-modal Transformer and Focus-Attention, built on RoBERTa and an acoustic stack combining a Convolutional Neural Network (CNN) with a Bidirectional Long Short-Term Memory network (BiLSTM), representing one class of attention-based fusion methods. Leem et al. [75] propose a dual-branch attention fusion model that dynamically weighs acoustic and linguistic inputs based on their relevance.

2.1.2. Graph-Based Methods

Beyond Transformers, several works explore graph-based architectures to explicitly model the relational structure of multimodal features [106]. These methods [107,108] often aim to model both intra- and inter-modality interactions using graph convolutions or message-passing mechanisms.

Li et al. [78] introduce a decoupled multimodal distillation framework that separates shared and modality-specific features, applying graph-based knowledge transfer to improve fusion. Focusing on conversational BER, Shi et al. [79] introduce a two-stage fusion strategy: the first stage extracts emotional cues from text using Knowledge-based Word Relation Tagging (KWRT), while the second enriches speech input through a prosody-aware acoustic module. FrameERC [80] applies framelet-based multi-frequency graph convolution to model emotional dynamics in conversations, and incorporates a dual-reminder fusion mechanism to balance high- and low-frequency emotional cues, reducing overreliance on textual signals.

2.1.3. Mamba-Based Methods

Recent research directions [24,109,110,111] explore alternatives to attention-based architectures using linear SSMs, such as Mamba, which offer improved scalability and efficiency for long-context emotion modeling. Mamba and its successors replace explicit attention with input-dependent recurrence, enabling linear-time inference and reduced memory usage [112,113].

Building on this idea, Coupled Mamba [81] introduces inter-modal state coupling, allowing synchronized state transitions across modalities while preserving their individual dynamics. Wang et al. [82] further apply model distillation to transfer knowledge from large Transformer models to compact Mamba-based Recurrent Neural Networks (RNNs) with minimal performance loss. OmniMamba [83] (https://huggingface.co/hustvl/OmniMamba, accessed on 28 September 2025) extends this paradigm into a unified multimodal framework, incorporating decoupled vocabularies, task-specific Low-Rank Adaptation (LoRA) [114], and a two-stage training scheme. Finally, Gated DeltaNet [84] enhances Mamba2 [113] by combining gated recurrence with biologically inspired delta-rule updates and memory erasure, achieving improved long-sequence modeling.

2.2. State-of-the-Art Methods for Data Augmentation

Data augmentation strategies have become essential for improving the generalizability and robustness of ER models [115]. These techniques help alleviate issues such as data imbalance [116], noise [117], and incomplete modalities [102] by artificially generating diverse training examples. Recent studies propose several innovative augmentation strategies [118,119,120] that can be directly applied to both unimodal and multimodal models.

For example, Wang et al. [45] introduce IMDer, a diffusion-based model that recovers missing modalities by mapping noise back into their original distribution space, using available modalities as semantic conditions. Similarly, Wang et al. [89] propose a method based on Mixup [121] to enhance emotional representations in speech data by learning robust features from unbalanced corpora. Several studies have explored more feature-centric methods. Malik et al. [122] present a modality dropout strategy, randomly omitting modalities during training to improve model robustness against missing or noisy inputs, while Gong et al. [91] introduce LanSER, which derives weak emotion labels from speech transcripts via LLM analysis. Zhang and Li [44] explore modality-aware data augmentation by perturbing features within and between modalities to create synthetic samples.

Recently, diffusion-based augmentation has emerged as a powerful tool for generating realistic and diverse emotional samples, particularly under low-resource conditions. Methods such as Diff-II [123] and DiffEmotionVC [124] have achieved significant improvements in affective recognition benchmarks through the synthesis of expressive multimodal signals.

In addition, several studies address the challenge of data imbalance in ER. Ritter-Gutierrez et al. [98] propose a Dataset Distillation method using a Generative Adversarial Network (GAN), which generates a smaller synthetic corpus while preserving key discriminative features. Leem et al. [99] develop the Keep or Delete framework, which selectively removes noisy frames during training, improving robustness in real-world scenarios. Other methods focus on handling domain shifts in cross-corpus tasks. For example, Hierarchical Distribution Adaptation [28] mitigates domain shifts by employing nested Transformers to align emotion features across source and target corpora.

Continuing the exploration of data augmentation strategies, Stanley et al. [125] investigate pitch shifting, time stretching, and vocal tract length perturbation to generate more varied emotion representations. The Emotion Open Deep Network (EODN) [100] addresses data imbalance and unseen emotional categories by combining an open-set recognition strategy with a mapping into the Pleasure-Arousal-Dominance (PAD) emotional space. Furthermore, Purohit and Magimai-Doss [126] propose a framework that enhances data efficiency and generalization through curriculum learning and adversarial data augmentation, focusing on pitch and formant perturbations.

On the other hand, Wu et al. [127] introduce a novel method for cross-lingual ER that models inter-rater ambiguity in emotion labels through a new loss function called Belief Mismatch Loss. This method improves noise robustness by representing emotion labels as probability distributions, enabling more flexible label matching. Similarly, Khan et al. [128] present a semi-automated framework for ER on user feedback from low-rated software applications, using ChatGPT (https://chatgpt.com, accessed on 28 September 2025) as both an annotator and a mediator to improve emotion annotation quality based on a grounded emotion taxonomy. The SemEval-2025 [129] shared task on multilingual ER further highlights this trend. It covers over 30 languages and continues to expand its corpora, underscoring the growing need for balanced data and robust annotation strategies across diverse linguistic contexts.

Focusing on unsupervised ER, Franceschini et al. [130] propose a novel method based on modality-pairwise contrastive learning that eliminates the need for explicit emotion labels or conventional data augmentation. Their method outperforms supervised and unsupervised baselines by fusing text, audio, and visual features only at inference time. Padi et al. [87] combine BERT-based text encoders with a ResNet-based speech encoder pre-trained on speaker recognition, incorporating transfer learning and spectrogram augmentation. Additionally, Dutta et al. [131] introduce EmoComicNet, a multi-task model that uses emotion-oriented feature augmentation and a fusion strategy adaptable to missing modalities, including text and images.

Finally, Lee [90] proposes an MER framework that employs a sampling strategy prioritizing emotionally ambiguous samples and uses contrastive learning with modality-aware hard sample mining, thus improving discriminative feature learning across audio and text inputs. Sun et al. [102] present an ER model that combines pseudo-label-based contrastive learning with modality dropout to enhance representation learning under noisy and incomplete modality conditions.

Together, these diverse methods demonstrate the growing importance of data augmentation in improving the performance and generalization of ER models, particularly in situations with incomplete or noisy data.

3. Proposed Method

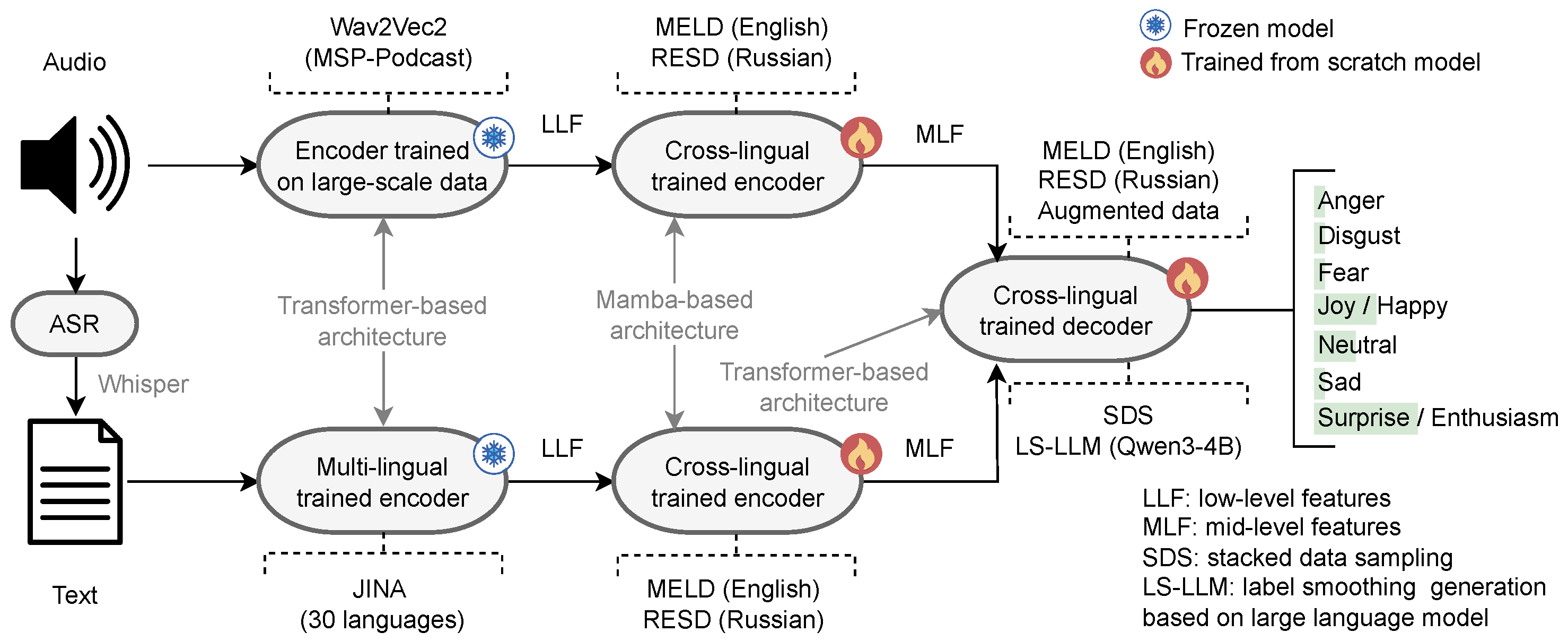

The pipeline of the proposed Cross-Lingual Bimodal Emotion Recognition method is illustrated in Figure 1. The method takes an audio recording as input. Whisper [71] is used for Automatic Speech Recognition (ASR) [132] to extract orthographic transcriptions from the audio. Then both audio and text signals are passed through their respective frozen encoders for low-level feature extraction. A distinctive characteristic of the method is its cross-lingual unimodal encoders, which are trained to recognize emotions in two different languages and cultures: English and Russian. These encoders produce mid-level features that serve as inputs to the bimodal fusion model. The final bimodal model shares similar characteristics with the unimodal encoders and is also trained on augmented data to improve its generalization capability. To achieve this, utterances shorter than a target length are supplemented with other utterances bearing the same label using the SDS method. The target labels are then softened using an LLM-based method (LS-LLM), enabling the model to detect complex compound emotions. The method outputs probability distributions over seven emotions: anger, disgust, fear, joy/happy, neutral, sad, and surprise/enthusiasm.

This section describes the full methodological process of the proposed cross-lingual BER method. To ensure replicability and justify design decisions, ablation studies comparing alternative components are included. The final configuration used in the main experiments is explicitly indicated in each subsection. The method components are described in detail in Section 3.1, Section 3.2, Section 3.3, Section 3.4 and Section 3.5.

3.1. Audio-Based Emotion Recognition

In the research corpora, audio signals vary in sampling rate and duration [133]. To ensure consistency, all audio signals are resampled to a uniform rate of 16 kHz. To account for varying durations, zero-padding is applied to the embeddings during batch formation, aligning them to the length of the longest sequence in the batch. Acoustic embeddings are extracted using two pre-trained models, and their performance is compared across four different temporal models.
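The batch-level zero-padding described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: embeddings are plain Python lists, the helper name `pad_batch` is hypothetical, and the returned 0/1 mask (not mentioned in the text) is an assumed convenience so that padded frames can be excluded downstream. Passing `max_len` switches to the fixed-length padding used for text in Section 3.2 (e.g., 95 tokens).

```python
from typing import List, Optional, Tuple

Frame = List[float]  # one embedding vector, e.g. 1024 values per audio frame


def pad_batch(
    seqs: List[List[Frame]], max_len: Optional[int] = None
) -> Tuple[List[List[Frame]], List[List[int]]]:
    """Zero-pad variable-length embedding sequences to a common length.

    max_len=None pads to the longest sequence in the batch (audio case);
    an integer pads or truncates every sequence to max_len (fixed-length case).
    Returns the padded batch and a mask with 1 for real steps, 0 for padding.
    """
    target = max_len if max_len is not None else max(len(s) for s in seqs)
    dim = len(seqs[0][0])  # assumes the first sequence is non-empty
    padded, mask = [], []
    for s in seqs:
        s = s[:target]  # only shortens anything when max_len is given
        n_pad = target - len(s)
        padded.append(s + [[0.0] * dim for _ in range(n_pad)])
        mask.append([1] * len(s) + [0] * n_pad)
    return padded, mask
```

In a deep learning framework the same result is usually obtained with a built-in padding utility; the explicit loop only makes the padding rule visible.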

The first embedding extractor is based on the Wav2Vec2.0 emotion model fine-tuned on the MSP-Podcast corpus [32,134] (https://huggingface.co/audeering/wav2vec2-large-robust-12-ft-emotion-msp-dim, accessed on 28 September 2025). The second extractor uses the ExHuBERT emotion model trained on 37 benchmark corpora [70] (https://huggingface.co/amiriparian/ExHuBERT, accessed on 28 September 2025). Both models generate embeddings of size $T \times 1024$, where $T$ is dynamically determined by the duration of the input audio signal. The embeddings are fed into four temporal models: Long Short-Term Memory network (LSTM) [135], Extended LSTM (xLSTM) [136], Transformer [137] and Mamba [112]. The LSTM model captures temporal dependencies through recurrent connections, effectively modeling short- and mid-term contextual dynamics. However, its sequential nature limits parallelization and long-range dependency modeling. The xLSTM model modifies this structure by introducing exponential gating and revised memory structures, which enhance gradient stability and allow more efficient propagation of information over time. In contrast, the Transformer leverages self-attention to capture global dependencies between all time steps simultaneously, enabling efficient context integration across long utterances, albeit with a computational cost that grows quadratically with sequence length. Mamba is a recent selective state-space model that replaces attention with input-dependent linear state updates, providing efficient long-context modeling with time complexity linear in sequence length. For each model, the architecture-specific and training hyperparameters are optimized on the validation subsets.
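To make the linear-time claim for state-space models concrete, the sketch below implements a heavily simplified, scalar-state selective scan. It is an illustration of the general idea only, not the Mamba block used in this work: real Mamba layers use multi-channel states, a short convolution, gating, and a hardware-aware parallel scan. The names `selective_scan`, `w_delta`, `w_b`, and `w_c` are hypothetical, and the zero-order-hold discretization is one standard choice.

```python
import math
from typing import List


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(
    x: List[float], A: float, w_delta: float, w_b: float, w_c: float
) -> List[float]:
    """Scalar selective state-space recurrence over a 1-D input sequence.

    The step size and the input/output projections depend on the current
    input, which is what makes the scan 'selective'. A single pass over x
    gives O(T) time and O(1) state memory, versus O(T^2) pairwise scores in
    self-attention. A > 0 is the decay rate of dh/dt = -A*h + B*x.
    """
    if A <= 0:
        raise ValueError("A must be positive for a decaying (stable) state")
    h = 0.0
    ys: List[float] = []
    for x_t in x:
        delta = softplus(w_delta * x_t)   # input-dependent step size (> 0)
        b_t = w_b * x_t                   # input-dependent input projection
        c_t = w_c * x_t                   # input-dependent output projection
        a_bar = math.exp(-delta * A)      # zero-order-hold discretization of -A
        b_bar = (1.0 - a_bar) / A * b_t   # zero-order-hold discretization of B
        h = a_bar * h + b_bar * x_t       # linear state update
        ys.append(c_t * h)                # readout at step t
    return ys
```

Because each output depends only on the current input and the carried state, the scan is causal: truncating the input does not change earlier outputs.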

3.2. Text-Based Emotion Recognition

In the research corpora, utterance length varies between samples. To ensure uniform input dimensions during vectorization, zero-padding is applied to the embeddings up to a maximum token count. This threshold is set to 95 (sub)tokens for the Jina-v3 [66] and XLM-RoBERTa [64] models and to 329 tokens for the CANINE-c model [138], which operates on character-level rather than subword-level tokens. For each token, XLM-RoBERTa and CANINE-c extract 768 features, while Jina-v3 produces 1024 features. The extracted embeddings are fed into four temporal models: LSTM [135], xLSTM [136], Transformer [137], and Mamba [112]. Each model is trained with architecture-specific hyperparameters, which are optimized on the validation subsets. Among the evaluated temporal models, Mamba is selected for both audio and text modalities in the final model due to its superior performance on long sequences and its computational efficiency, as detailed in Section 4.3 and Section 4.4.

3.3. Data Sampling Method

In this research, data sampling refers to the generation of new training samples to improve model performance. Two different data sampling strategies are investigated, each designed to address specific challenges inherent in the corpus.

The first strategy focuses on audio data sampling. New audio samples are generated during training by setting a segment length of T seconds. If the input sample is shorter than the target duration, it is concatenated with another randomly selected sample that shares the same label. The corresponding text transcription is then extracted using the Whisper ASR model [71]. This strategy, referred to as SDS, ensures uniform input lengths while preserving label consistency. In addition, it mitigates potential biases that may arise from uneven sample durations between classes.
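A minimal sketch of SDS on raw waveforms is given below, under several assumptions not fixed by the text: the target length is expressed in samples (T seconds × 16,000 at 16 kHz), short samples keep receiving same-label partners until they reach the target and are then cropped to exactly that length, and augmentation is triggered with probability `prob` (mirroring the tuned proportion of augmented data). The function name is hypothetical, and re-transcribing the stacked audio with Whisper is not shown.

```python
import random
from typing import Dict, List, Tuple

Sample = Tuple[List[float], str]  # (16 kHz waveform, emotion label)


def stacked_data_sampling(
    sample: Sample,
    pool_by_label: Dict[str, List[List[float]]],
    target_len: int,
    prob: float,
    rng: random.Random,
) -> Sample:
    """Concatenate a short utterance with same-label utterances up to target_len."""
    wave, label = sample
    if len(wave) >= target_len or rng.random() >= prob:
        return sample  # already long enough, or augmentation not triggered
    candidates = [w for w in pool_by_label.get(label, []) if w]  # skip empty clips
    if not candidates:
        return sample  # no same-label partner available
    out = list(wave)
    while len(out) < target_len:
        out.extend(rng.choice(candidates))  # same label keeps the target valid
    return out[:target_len], label  # crop so all stacked samples share one length
```

Restricting partners to the same label is what keeps the stacked sample's target unchanged; mixing labels would instead require a mixed target.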

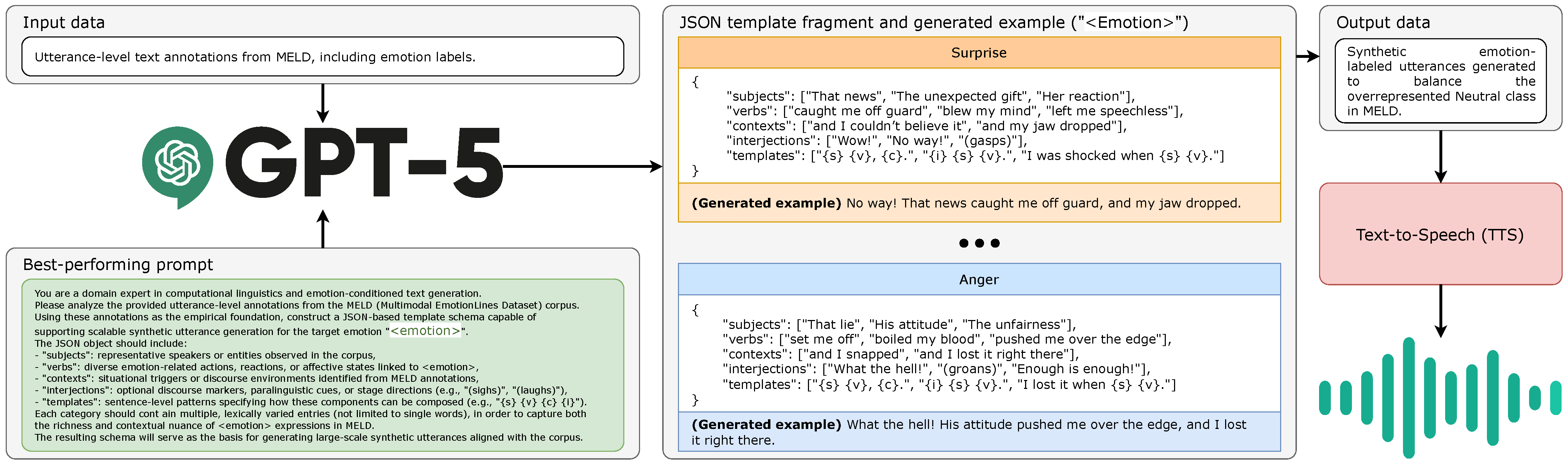

The second method employs TTS generation. In this pipeline, an emotion label is randomly sampled, after which a textual utterance is generated using ChatGPT-5 (https://openai.com/index/introducing-gpt-5/, accessed on 28 September 2025), following a predefined template-based prompt. The prompt is designed to produce short, emotionally rich utterances that match the expressive, conversational style of the MELD corpus [34], as illustrated in Figure 2. The generated text, paired with its non-verbal tag (e.g., sighs, laughs), is then fed into the DIA-TTS model (https://huggingface.co/nari-labs/Dia-1.6B, accessed on 28 September 2025) to synthesize the corresponding audio signal, as illustrated in Figure 3. This strategy, denoted as TUG, facilitates controlled data augmentation by targeting emotional and linguistic imbalances in the corpus. Notably, it allows affective speech parameters to be adjusted without relying on additional human annotation.
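The request-building side of TUG can be sketched as follows. Everything here is illustrative: the prompt template and the emotion-to-cue mapping are hypothetical stand-ins (the prompt actually used is the one shown in Figure 2), the calls to the text generator and to the TTS model are omitted, and the `[S1] text (cue)` script format is an assumption modeled on the speaker-tag and parenthesized non-verbal convention shown on the Dia model card.

```python
import random
from typing import Dict, List, Tuple

EMOTIONS = ["anger", "disgust", "fear", "joy", "neutral", "sadness", "surprise"]

# Hypothetical emotion -> non-verbal cue mapping, for illustration only.
NONVERBAL: Dict[str, List[str]] = {
    "anger": ["groans", "exhales"],
    "disgust": ["groans", "sniffs"],
    "fear": ["gasps", "inhales"],
    "joy": ["laughs", "chuckle"],
    "neutral": ["clears throat"],
    "sadness": ["sighs", "sniffs"],
    "surprise": ["gasps"],
}

# Hypothetical prompt template, not the prompt used in the experiments.
PROMPT_TEMPLATE = (
    "Write one short line of casual TV-sitcom dialogue that clearly expresses "
    "{emotion}. Return only the line, without quotes."
)


def make_tug_request(rng: random.Random) -> Tuple[str, str, str]:
    """Sample an emotion, fill the prompt template, and pick a matching cue."""
    emotion = rng.choice(EMOTIONS)
    return emotion, PROMPT_TEMPLATE.format(emotion=emotion), rng.choice(NONVERBAL[emotion])


def build_tts_script(utterance: str, tag: str) -> str:
    """Join generated text and non-verbal cue into one script line (assumed format)."""
    return f"[S1] {utterance.strip()} ({tag})"
```

Because the emotion is sampled before generation, it directly serves as the training label of the synthesized clip, which is why no extra annotation is needed.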

Both sampling strategies are applied exclusively to the training subset to ensure that the evaluation measures reflect true generalization performance. To achieve a balance between data diversity and the model’s performance, the probability of generated samples (the proportion of augmented to original data) is iteratively tuned during experimentation.

3.4. Label Smoothing Method

Previous studies [17,139,140,141,142] show that label smoothing improves generalization and stabilizes training by reducing model overconfidence. Conventional label smoothing achieves this by mixing the one-hot target vector with a uniform distribution over the classes.
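For reference, conventional (uniform) label smoothing over $K$ classes with smoothing factor $\varepsilon$ can be written as below. The symbols are introduced here for illustration, and $\varepsilon = 0.1$ in the worked example is only an example value, not a setting reported for the experiments.

$$
\tilde{y}_k = (1 - \varepsilon)\, y_k + \frac{\varepsilon}{K}, \qquad k = 1, \dots, K,
$$

where $y$ is the one-hot target. With $K = 7$ emotions and $\varepsilon = 0.1$, the target class receives $0.9 + 0.1/7 \approx 0.914$ and each of the other six classes receives $0.1/7 \approx 0.014$, so the smoothed vector still sums to $1$.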

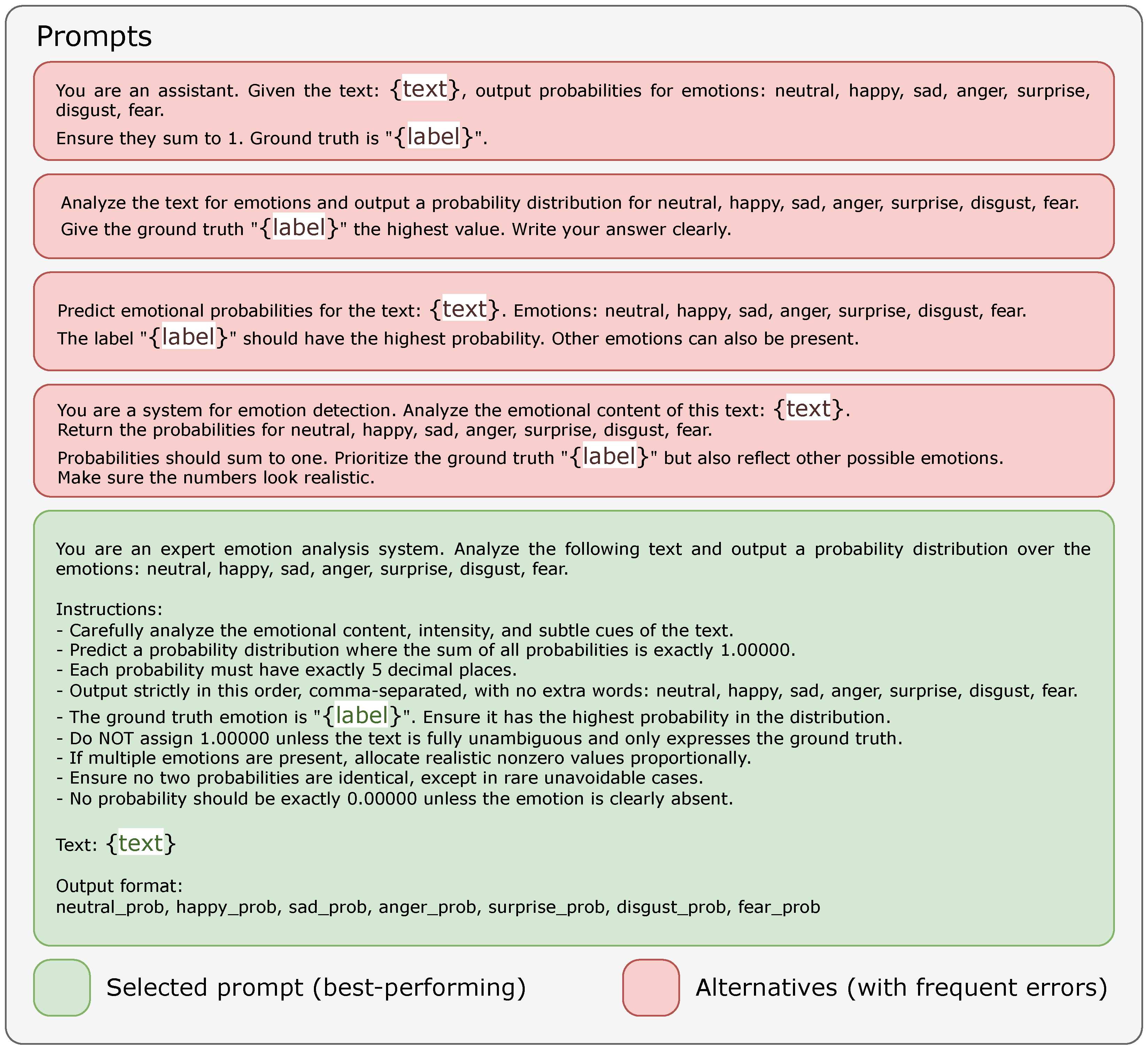

Instead of assigning uniform probabilities to non-target classes, as in conventional label smoothing, zero-shot [143] LLMs are used to generate semantically informed soft labels. During training, each ground-truth label is smoothed using the emotion probabilities predicted by an LLM from the input text. This method ensures that the target emotion receives the highest probability, while the remaining probability mass is realistically distributed among other relevant emotions.
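One simple way to realize this is a convex mixture of the one-hot target with the LLM's distribution, sketched below. The mixing rule and the weight `alpha` are assumptions for illustration, since the exact smoothing formula is not given here. Under this rule the target class is guaranteed to stay on top whenever `alpha < 0.5`: it receives at least $1 - \alpha$, while any other class receives at most $\alpha$.

```python
from typing import List


def llm_smoothed_target(target: int, llm_probs: List[float], alpha: float) -> List[float]:
    """Mix a one-hot target with an LLM-predicted emotion distribution.

    y = (1 - alpha) * one_hot(target) + alpha * q, with q = normalized llm_probs.
    alpha < 0.5 keeps the ground-truth class as the arg-max of y.
    """
    if not 0.0 <= alpha < 0.5:
        raise ValueError("alpha must lie in [0, 0.5) to keep the target class dominant")
    total = sum(llm_probs)
    if total <= 0:
        raise ValueError("LLM distribution must have positive mass")
    q = [p / total for p in llm_probs]  # renormalize in case the LLM output is off
    return [
        (1.0 - alpha) * (1.0 if k == target else 0.0) + alpha * q_k
        for k, q_k in enumerate(q)
    ]
```

Unlike uniform smoothing, the non-target mass follows the LLM's reading of the text, so, for example, an utterance labeled joy that the LLM also finds surprising passes most of the smoothed mass to surprise rather than spreading it evenly.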

This method, termed LS-LLM, produces context-aware label distributions that capture the subtleties and overlaps inherent in emotional expression, providing a more informative supervision signal. By aligning training labels with plausible emotional interpretations, LS-LLM encourages better generalization and robustness in ambiguous or multi-emotion cases. To generate these semantically informed soft labels, various prompts for guiding the LLMs were tested, as illustrated in Figure 4. Prompt formulations enforcing strict probability formatting and distributional constraints produced the most reliable outputs, whereas simpler variants often resulted in invalid or less informative distributions.
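Because LLM outputs can be malformed, the returned distribution has to be validated before it is used as a target. The sketch below assumes a hypothetical output format, a JSON object mapping the seven emotion names to probabilities embedded somewhere in the reply; the actual prompts are those in Figure 4. Invalid replies yield `None`, so the caller can fall back to the one-hot (or uniformly smoothed) target.

```python
import json
from typing import List, Optional

EMOTIONS = ["anger", "disgust", "fear", "joy", "neutral", "sadness", "surprise"]


def parse_llm_distribution(raw: str, tol: float = 0.05) -> Optional[List[float]]:
    """Extract and validate an emotion distribution from raw LLM text.

    Returns probabilities in EMOTIONS order, renormalized to sum to 1, or None
    if the JSON is missing, a class is absent, a value is out of [0, 1], or
    the total deviates from 1 by more than tol.
    """
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        obj = json.loads(raw[start : end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(obj, dict):
        return None
    probs = []
    for emo in EMOTIONS:
        v = obj.get(emo)
        if isinstance(v, bool) or not isinstance(v, (int, float)) or not 0.0 <= v <= 1.0:
            return None
        probs.append(float(v))
    total = sum(probs)
    if abs(total - 1.0) > tol:
        return None
    return [p / total for p in probs]
```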

Eight lightweight LLMs are compared: Falcon3-3B-Instruct (https://huggingface.co/tiiuae/Falcon3-3B-Instruct, accessed on 28 September 2025), Phi-4-mini-instruct (https://huggingface.co/microsoft/Phi-4-mini-instruct, accessed on 28 September 2025), Gemma-3-4b-it (https://huggingface.co/google/gemma-3-4b-it, accessed on 28 September 2025), Qwen3-4B (https://huggingface.co/Qwen/Qwen3-4B, accessed on 28 September 2025), Falcon-H1-3B-Instruct (https://huggingface.co/tiiuae/Falcon-H1-3B-Instruct, accessed on 28 September 2025), Gemma-3n-E2B-it (https://huggingface.co/google/gemma-3n-E2B-it, accessed on 28 September 2025), SmolLM3-3B (https://huggingface.co/HuggingFaceTB/SmolLM3-3B, accessed on 28 September 2025) and Qwen3-4B-Instruct-2507 (https://huggingface.co/Qwen/Qwen3-4B-Instruct-2507, accessed on 28 September 2025). The characteristics of the selected LLMs are presented in Table 3. These models were selected because they are small enough to run efficiently (approximately 3B to 5.5B parameters) yet powerful enough to understand emotionally rich text. All the models are openly available, perform well on language tasks, and differ in their architectures and number of layers, which allows their performance to be compared. To improve model performance, the proportion (probability) of LLM-generated soft labels relative to one-hot vectors was iteratively optimized during experimentation.

3.5. Bimodal Emotion Recognition

Audio–text fusion is performed on both prediction-level and feature-level representations. For prediction-level fusion, a weighted combination of modality-specific probabilities is computed:

$$\hat{p} = w_a\, p_a + w_t\, p_t,$$

where $\hat{p}$ is the final predicted probability distribution; $p_a$ and $p_t$ are the audio and text modality predictions, respectively; and $w_a$ and $w_t$ are learnable modality weights (constrained to $w_a, w_t \ge 0$ and $w_a + w_t = 1$ via softmax normalization). This model is referred to as Weighted Probability Fusion (WPF).
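The forward pass of prediction-level fusion can be sketched as below, assuming the two modality weights are obtained by a softmax over two learnable logits. Only the forward computation is shown; in training the logits would be framework parameters updated by gradient descent. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def weighted_probability_fusion(
    p_audio: Sequence[float], p_text: Sequence[float], logits: Tuple[float, float]
) -> List[float]:
    """Convex combination of audio and text class-probability vectors.

    `logits` stand in for two learnable parameters; the softmax keeps the
    modality weights non-negative and summing to 1, so the output is again
    a probability distribution over the emotion classes.
    """
    w_audio, w_text = softmax(logits)
    return [w_audio * a + w_text * t for a, t in zip(p_audio, p_text)]
```

Equal logits reduce WPF to simple averaging; learning the logits lets the model lean toward whichever modality is more reliable on the training data.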

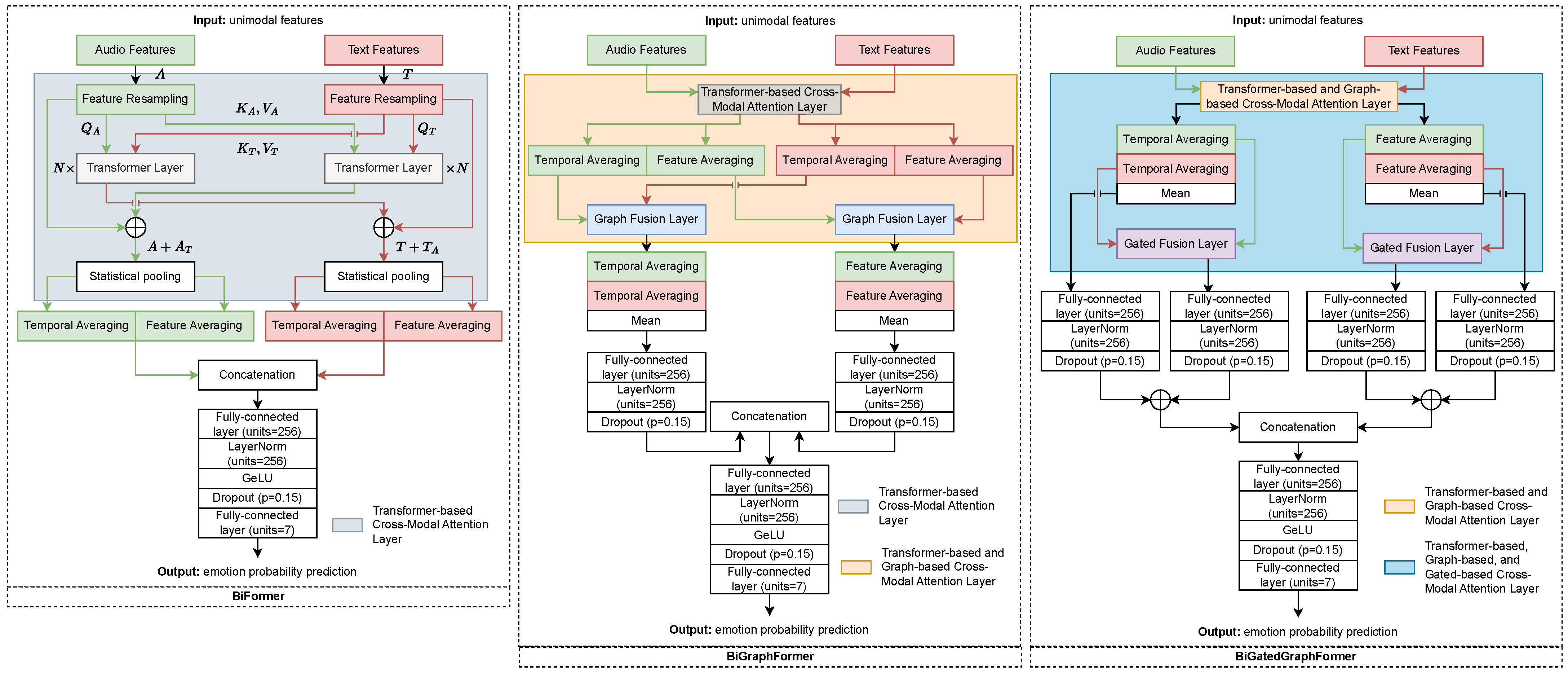

For feature-level fusion, several model architectures are proposed that dynamically account for the importance of features across modalities. The architectures of the proposed BER models are shown in

Figure 5. The components of the proposed architectures are shown in

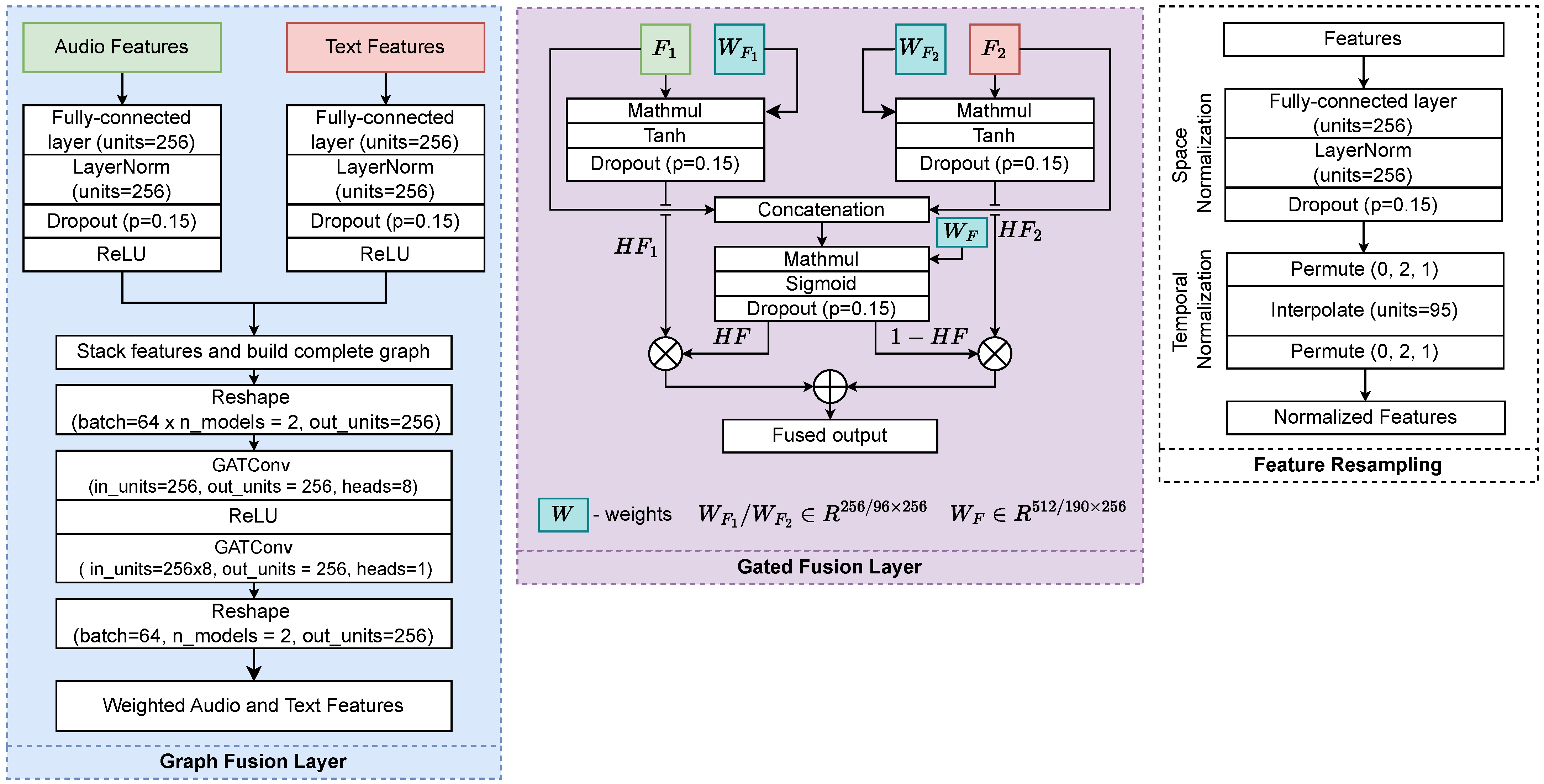

Figure 6. A key characteristic of these architectures is their ability to calculate statistical information from contextual representations at both: (1) the feature-level, which identifies the most informative features for the model’s final prediction; (2) the temporal-level, which determines the most relevant timesteps (for audio) or word sequences (for text) in the final prediction.

The intra- and inter-modal relationships between the two modalities are implemented through several distinct models:

Cross-modal Transformer (BiFormer). The input data consist of audio and text features with different dimensionalities. A feature resampling block is applied to map the inputs to a unified dimensionality. This block consists of (1) a fully connected layer that transforms the feature dimensions, with the number of neurons determined during training; and (2) a temporal alignment layer that standardizes the length of the audio features to match that of the text features (fixed at 95 timesteps) using linear interpolation. The modified input is passed through two Transformer blocks configured for cross-modal attention. In the first block, audio features act as queries ($\mathbf{Q}_a$) and text features serve as keys/values ($\mathbf{K}_t$ and $\mathbf{V}_t$). The second block reverses this configuration, with text features as queries ($\mathbf{Q}_t$) and audio features as keys/values ($\mathbf{K}_a$ and $\mathbf{V}_a$). The number of Transformer layers in each block is optimized during training. Each block produces an attention-weighted value matrix in which the query–key interactions dynamically weight the importance of features, enhancing discriminative features and suppressing less informative ones. These transformed matrices are then combined with their original unimodal representations ($\mathbf{X}_a$ or $\mathbf{X}_t$). The resulting features undergo statistical pooling that averages along both the temporal (sequence length) and feature dimensions. The pooled vectors are concatenated and fed into a classifier that predicts probabilities over seven emotion categories.
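The BiFormer data flow can be sketched in plain Python as follows. This is a simplified illustration under several assumptions not stated in the text: a single attention head without learned query/key/value projections, one attention layer per direction, residual addition as the way attention outputs are combined with the original unimodal representations, and audio and text features that have already been projected to a common dimensionality by the fully connected layer. The interpolation aligns the first and last audio frames with the first and last target steps, which is one common variant. All function names are hypothetical.

```python
import math


def interpolate_time(seq, target_len):
    """Linearly resample a (T, D) sequence to (target_len, D) along the time axis."""
    src_len = len(seq)
    out = []
    for j in range(target_len):
        # Map target step j to a fractional source position (endpoints aligned).
        pos = j * (src_len - 1) / (target_len - 1) if target_len > 1 else 0.0
        lo = int(pos)
        hi = min(lo + 1, src_len - 1)
        frac = pos - lo
        out.append([(1.0 - frac) * a + frac * b for a, b in zip(seq[lo], seq[hi])])
    return out


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention: each query attends over all keys."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))])
    return out


def add_residual(x, y):
    """Element-wise sum of two (T, D) matrices (assumed combination with the input)."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


def stat_pool(seq):
    """Concatenate the mean over time (length D) and the mean over features (length T)."""
    n_steps, dim = len(seq), len(seq[0])
    temporal_mean = [sum(row[c] for row in seq) / n_steps for c in range(dim)]
    feature_mean = [sum(row) / dim for row in seq]
    return temporal_mean + feature_mean


def biformer_features(audio, text):
    """Audio and text are (T, D) lists with a shared D; audio is aligned to the text length."""
    audio = interpolate_time(audio, len(text))
    a2t = add_residual(audio, cross_attention(audio, text, text))  # audio queries, text keys/values
    t2a = add_residual(text, cross_attention(text, audio, audio))  # text queries, audio keys/values
    return stat_pool(a2t) + stat_pool(t2a)  # vector passed on to the classifier
```

With the settings described above, `text` would have 95 timesteps, so the returned vector would have length 2 × (D + 95).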

BiGraphFormer. This model introduces a second level of modality fusion using two pairs of graph-based layers. One pair takes as input the temporally averaged audio and text features, and the other takes the feature-wise averaged audio and text representations. Each Graph Fusion Layer outputs a set of weighted representations, where the weights result from the mutual interactions between all node pairs in a fully connected graph. The outputs (weighted temporal and feature-based representations) are averaged, passed through fully connected layers, and concatenated. The resulting vector is fed into a classifier that predicts probabilities over seven emotion categories.
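The Graph Fusion Layer is described only at a high level. The following sketch shows one plausible instantiation under explicit assumptions: each input vector is a node, edge weights between all node pairs (including self-loops) come from a softmax over scaled dot-product similarities, and each node’s output is the weighted sum of all nodes. The learned projections of the actual layer are omitted, and the names and toy values are hypothetical.

```python
import math


def graph_fusion(nodes):
    """Weighted message passing on a fully connected graph (assumed formulation).

    nodes: list of equal-length vectors, e.g., [audio_vec, text_vec].
    """
    dim = len(nodes[0])
    fused = []
    for x_i in nodes:
        # Edge scores from node i to every node j (self-loop included).
        scores = [sum(a * b for a, b in zip(x_i, x_j)) / math.sqrt(dim) for x_j in nodes]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        fused.append([sum(w * x_j[c] for w, x_j in zip(weights, nodes)) for c in range(dim)])
    return fused


def average_nodes(nodes):
    """Average the fused node representations into a single vector."""
    return [sum(col) / len(nodes) for col in zip(*nodes)]


audio_temporal_mean = [0.5, -0.2, 0.1]  # toy temporally averaged audio features
text_temporal_mean = [0.3, 0.4, -0.1]   # toy temporally averaged text features
print(average_nodes(graph_fusion([audio_temporal_mean, text_temporal_mean])))
```

In this formulation, each node’s output is a convex combination of all nodes, so more similar nodes exchange more information.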

BiGatedGraphFormer. This model adds a third level of modality fusion using two pairs of gate-based layers. Each layer implements an attention mechanism with learnable forget/amplify gates that automatically suppress or enhance input features according to their relevance to the prediction task. Unlike the previous attention mechanisms, these gate-based layers generate new temporal and feature-based representations that account for the varying importance of features from both modalities. These representations are passed through fully connected layers and concatenated with the averaged graph-based outputs. The resulting vector is fed into a classifier that predicts probabilities over seven emotion categories.
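A minimal sketch of a gated fusion step is given below under stated assumptions: each gate is computed from the concatenated audio and text vectors by a linear layer followed by a sigmoid scaled to the range (0, 2), so values below 1 suppress a feature and values above 1 amplify it. This range, the linear gate parameterization, and the summation of the gated modalities are illustrative choices, not details given in the text.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def linear(weights, bias, x):
    """y = W x + b for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def gated_fusion(x_a, x_t, w_a, b_a, w_t, b_t):
    """Gate each modality with factors in (0, 2) computed from both modalities (assumed form)."""
    joint = x_a + x_t  # list concatenation: both modalities drive every gate
    g_a = [2.0 * sigmoid(z) for z in linear(w_a, b_a, joint)]  # < 1 suppresses, > 1 amplifies
    g_t = [2.0 * sigmoid(z) for z in linear(w_t, b_t, joint)]
    return [ga * a + gt * t for ga, a, gt, t in zip(g_a, x_a, g_t, x_t)]


dim = 2
zero_w = [[0.0] * (2 * dim) for _ in range(dim)]
zero_b = [0.0] * dim
# With all-zero parameters every gate equals 1, so the output is x_a + x_t.
print(gated_fusion([1.0, 2.0], [3.0, 4.0], zero_w, zero_b, zero_w, zero_b))  # [4.0, 6.0]
```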

BiMamba. A simple feature concatenation method using the Mamba-based architecture is also implemented. This architecture efficiently models long-range dependencies in the joint feature space while maintaining linear computational complexity.

The BiFormer model is adopted as the primary fusion mechanism in the final model, as it achieves the best trade-off between performance and complexity across both research corpora. BiGraphFormer, BiGatedGraphFormer, and BiMamba are included for completeness and are not part of the main pipeline. Additionally, for the best-performing models, WPF is applied, which takes into account: (1) the importance of unimodal probability predictions (audio-only and text-only); (2) the multimodal probability predictions derived from cross-modal interactions. Finally, different data sampling and augmentation strategies (SDS, TUG, and LS-LLM) are applied to the final best-performing model. For all models, the architecture-specific and training hyperparameters are optimized during training on validation subsets.

4. Experimental Research

The experiments are conducted in several stages. First, the unimodal encoders are fine-tuned. Unlike many SOTA solutions, the proposed method trains the encoders on two corpora covering Russian and English, with the aim of improving generalization to new corpora. The knowledge captured by the SOTA pre-trained encoders is preserved, and new temporal models are trained on top of them.

Multi-corpus unimodal features are then extracted from the temporal models and used as input to the bimodal models. This multi-stage training process yields the final BER model, which demonstrates strong generalization to new data, including different languages and cultural forms of emotional expression.

In this article, the best model configurations and training hyperparameters are selected based on performance on the development subsets, and the performance measures obtained on the test subsets are reported.

4.1. Research Corpora

For this research, two corpora are selected: MELD [34] and RESD [33]. Both corpora pertain to the domain of conversational interaction; however, they differ in language (English and Russian). This choice supports the development of multilingual models capable of analyzing cross-cultural and linguistic communication patterns.

The MELD corpus contains audiovisual data extracted from the TV series Friends, annotated for seven emotions: joy, sad, fear, anger, surprise, disgust, and neutral. Its audio recordings include considerable background noise (e.g., applause and laughter). In contrast, the RESD corpus consists exclusively of laboratory-recorded acoustic data, also annotated for seven emotions: happy, sad, fear, anger, enthusiasm, disgust, and neutral. To enable joint model training on both corpora, two pairs of emotions are aligned: joy with happy, and enthusiasm with surprise. The distribution of emotion utterances across the subsets is presented in Figure 7. Both corpora use fixed subsets; however, RESD contains only training and test subsets, so its test subset is also used for validation. MELD exhibits a pronounced class imbalance, whereas in RESD the distribution of utterances across classes is generally balanced.
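Aligning the two label sets amounts to a small lookup into a shared label space. The sketch below assumes the label strings used in this section and an arbitrary class order; the actual annotation files may spell labels differently (for example, as nouns), in which case the alias table would need extending. The names `SHARED_LABELS`, `ALIASES`, and `to_shared_index` are hypothetical.

```python
# Shared label space for joint MELD + RESD training (class order is an assumption).
SHARED_LABELS = ["neutral", "happy", "sad", "anger", "surprise", "fear", "disgust"]

# Corpus-specific names that differ from the shared names.
ALIASES = {
    "MELD": {"joy": "happy"},
    "RESD": {"enthusiasm": "surprise"},
}


def to_shared_index(corpus, label):
    """Map a corpus-specific emotion label to its index in SHARED_LABELS."""
    name = label.strip().lower()
    name = ALIASES.get(corpus, {}).get(name, name)
    if name not in SHARED_LABELS:
        raise KeyError(f"unmapped label {label!r} in corpus {corpus!r}")
    return SHARED_LABELS.index(name)


print(to_shared_index("MELD", "joy"), to_shared_index("RESD", "enthusiasm"))  # 1 4
```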

Table 4 provides a summary of key statistics for the MELD and RESD corpora. MELD contains substantially more utterances (9989 in Train, 1109 in Development, and 2610 in Test) than RESD (1116 in Train and 280 in Test). MELD utterances tend to be shorter (averaging 14–15 (sub)tokens and 3.1–3.3 s in duration), whereas RESD utterances are longer on average, with 24 (sub)tokens and durations of 6.0–6.1 s. Notably, MELD’s Test subset includes a 305 s outlier. Within each corpus, utterance length distributions are similar across subsets, although MELD shows greater variability in maximum durations.

Data augmentation using the TUG method was restricted to the MELD corpus due to the DIA-TTS model’s incompatibility with Russian. Approximately 23,000 synthetic utterances and corresponding audio clips were generated. The number of samples was determined according to the class distribution imbalance in the original corpus, with minority classes augmented until their size equaled that of the dominant (neutral) class. To enhance emotional expressiveness, supported inline tags (e.g., sighs, laughs, and others) were inserted into the generated utterances to guide the DIA-TTS model in producing prosodically varied and affectively rich speech outputs.
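The generation budget described above (augmenting each minority class until it matches the largest class) can be computed directly from the training labels. As a rough consistency check, applying it to the commonly reported MELD training-set class counts (neutral 4710, joy 1743, surprise 1205, anger 1109, sadness 683, disgust 271, fear 268; 9989 utterances in total, matching Table 4) gives 22,981 synthetic utterances, in line with the approximately 23,000 reported here. The function name is illustrative.

```python
from collections import Counter


def tug_generation_budget(labels):
    """Synthetic samples needed per class so every class matches the largest one."""
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}


# Commonly reported MELD training-set class counts (used here only as a sanity check).
meld_train_counts = {"neutral": 4710, "joy": 1743, "surprise": 1205, "anger": 1109,
                     "sadness": 683, "disgust": 271, "fear": 268}
labels = [name for name, n in meld_train_counts.items() for _ in range(n)]
budget = tug_generation_budget(labels)
print(budget["fear"], sum(budget.values()))  # 4442 22981
```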

4.2. Performance Measures and Loss Function

To evaluate the effectiveness of the proposed methods, several performance measures are used: Unweighted Average Recall (UAR), Weighted Average Recall (WAR), Macro F1-score (MF1), and Weighted F1-score (WF1). These measures are calculated as follows:

$$\mathrm{UAR} = \frac{1}{C}\sum_{i=1}^{C}\frac{TP_i}{TP_i + FN_i}, \qquad \mathrm{WAR} = \sum_{i=1}^{C}\frac{N_i}{N}\cdot\frac{TP_i}{TP_i + FN_i},$$

$$\mathrm{MF1} = \frac{1}{C}\sum_{i=1}^{C}\frac{2\,TP_i}{2\,TP_i + FP_i + FN_i}, \qquad \mathrm{WF1} = \sum_{i=1}^{C}\frac{N_i}{N}\cdot\frac{2\,TP_i}{2\,TP_i + FP_i + FN_i},$$

where $C$ is the number of classes; $TP_i$ is the number of true positives for class $i$; $FN_i$ is the number of false negatives for class $i$; $N_i$ is the number of utterances in class $i$; $N$ is the total number of utterances; and $FP_i$ is the number of false positives for class $i$. Both weighted (WAR and WF1) and unweighted (UAR and MF1) measures are used because the class distributions are imbalanced. While weighted measures primarily reflect model performance on majority classes, unweighted measures give a more balanced assessment of overall performance, capturing predictions for both majority and minority classes.
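The four measures can be computed from a confusion matrix as sketched below, assuming rows are true classes and columns are predictions. Note that WAR reduces to overall accuracy, since the weights $N_i/N$ cancel the per-class recall denominators ($TP_i + FN_i = N_i$). Classes with no samples or no predictions are assigned a score of 0 here, which is one common convention; library implementations may handle such cases differently.

```python
def classification_measures(cm):
    """UAR, WAR, MF1 and WF1 from a confusion matrix.

    Assumed convention: cm[i][j] counts utterances of true class i predicted as class j.
    """
    n_classes = len(cm)
    n_i = [sum(row) for row in cm]  # utterances per true class
    n_total = sum(n_i)
    recalls, f1s = [], []
    for i in range(n_classes):
        tp = cm[i][i]
        fn = n_i[i] - tp
        fp = sum(cm[j][i] for j in range(n_classes)) - tp
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return {
        "UAR": sum(recalls) / n_classes,
        "WAR": sum(n * r for n, r in zip(n_i, recalls)) / n_total,  # equals accuracy
        "MF1": sum(f1s) / n_classes,
        "WF1": sum(n * f for n, f in zip(n_i, f1s)) / n_total,
    }


# Imbalanced toy example: the minority class drags UAR well below WAR.
print(classification_measures([[9, 1], [3, 1]]))
```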

The weighted cross-entropy is employed as the loss function, defined as

$$\mathcal{L} = -\sum_{i=1}^{C} w_i\, y_i \log \hat{y}_i,$$

where $C$ is the number of classes; $w_i$ is the weight assigned to class $i$ to mitigate class imbalance; $y_i$ is the ground-truth label of emotion class $i$; and $\hat{y}_i$ is the predicted probability of class $i$. The weighting addresses class imbalance by assigning higher weights to underrepresented classes, thereby preventing the model from being biased towards the majority classes.
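A per-utterance sketch of the weighted cross-entropy follows. The text does not state how the class weights $w_i$ are obtained, so inverse-frequency weighting is assumed here purely for illustration; how per-utterance losses are averaged over a batch is also left unspecified. Because $y$ may be a soft distribution, the same function also accommodates LLM-smoothed targets.

```python
import math


def inverse_frequency_weights(counts):
    """One common weighting choice (assumed, not from the source): w_i = N / (C * N_i)."""
    n_total, n_classes = sum(counts), len(counts)
    return [n_total / (n_classes * n) for n in counts]


def weighted_cross_entropy(y_true, y_pred, weights, eps=1e-12):
    """L = -sum_i w_i * y_i * log(p_i) for one utterance; y_true may be one-hot or soft."""
    return -sum(w * y * math.log(max(p, eps)) for w, y, p in zip(weights, y_true, y_pred))


weights = inverse_frequency_weights([300, 100])  # majority class gets the smaller weight
print(weights)
print(weighted_cross_entropy([0, 1], [0.8, 0.2], weights))
```

The `eps` floor only guards against `log(0)` when a predicted probability underflows to zero.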

4.3. Audio-Based Emotion Recognition

Experiments are conducted with several temporal models: LSTM [135], xLSTM [136], Transformer [137], and Mamba [112]. The optimal configurations are determined through a grid search over combinations of model hyperparameters and training settings. The best-performing configurations of the audio-based models are presented in Table 5. Among the audio-based models, Transformer has the best computational efficiency, with the fewest parameters (0.53 M) and the fastest training time (50 s/epoch), despite its larger disk space requirement (9.6 MB). LSTM has the longest training time (91 s/epoch) and the largest number of parameters (1.58 M). xLSTM reduces both the size (1.18 M) and the training time (73 s/epoch) compared to LSTM, making it more efficient. Mamba offers a smaller model (0.79 M parameters, 3 MB) and faster training (51 s/epoch) than LSTM, closely matching Transformer’s efficiency while using significantly less storage space.

The audio-based experimental results are summarized in Table 6. Across all tested temporal models, Wav2Vec2.0-based embeddings consistently outperform ExHuBERT embeddings. This suggests that increasing the volume of training data (250 h vs. 150 h) may be more beneficial for building generalizable embeddings than expanding domain coverage (1 vs. 37 domains).

The comparative analysis of temporal models shows no substantial performance differences on MELD, whereas the Mamba architecture clearly outperforms the LSTM, xLSTM, and Transformer models on RESD. This disparity may arise from Mamba’s enhanced capacity to model long-range temporal dependencies, which matters more for RESD’s longer utterances.

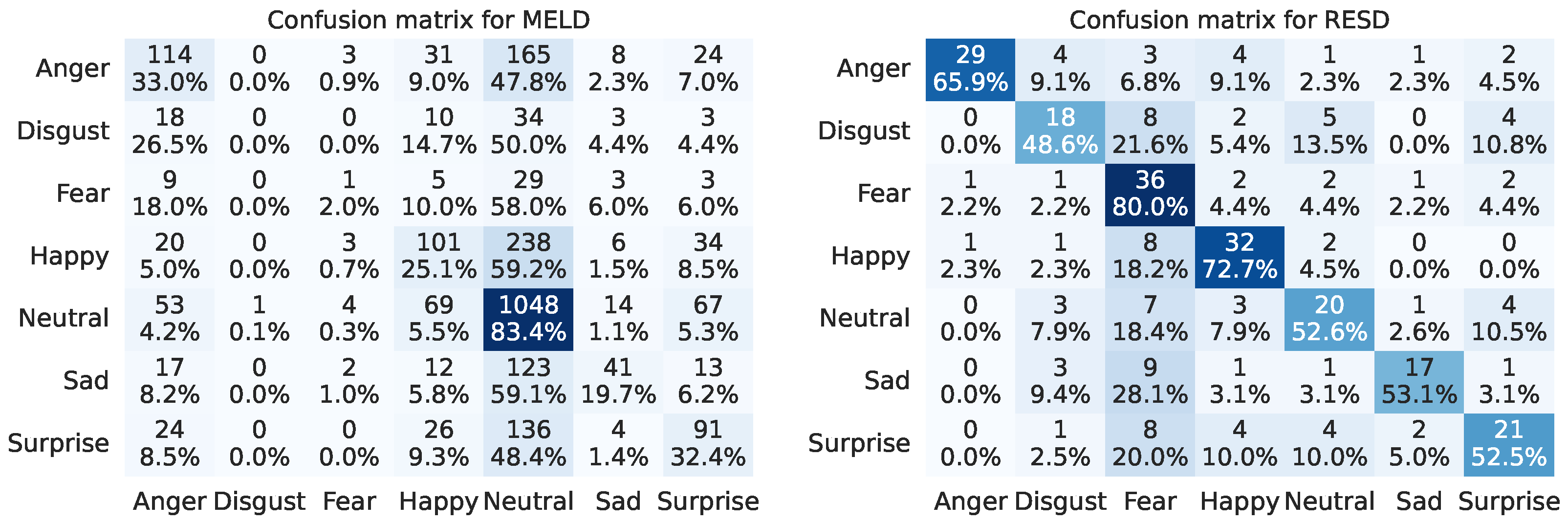

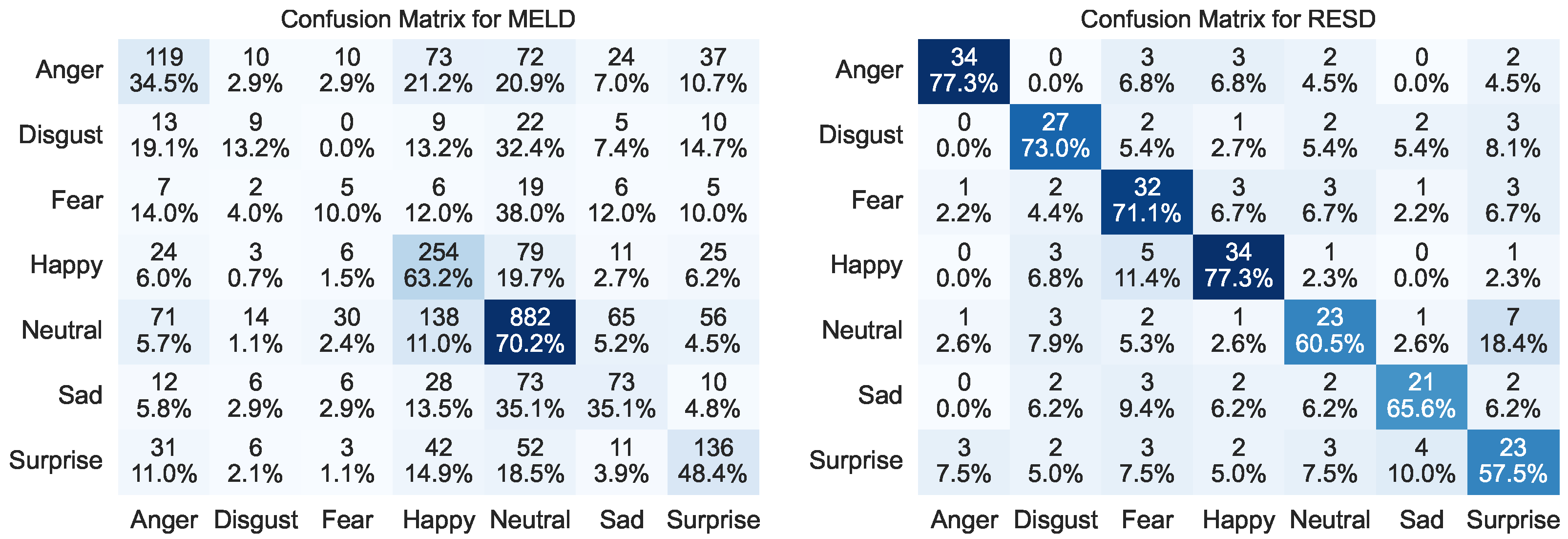

Figure 8 shows the confusion matrices obtained by the audio-based model. Despite joint training, the performance of the model correlates with the distribution of training samples. In MELD, classes such as Disgust, Fear, and Sad, which are the least frequent, achieve the lowest recall values (0.0%, 2.0%, and 19.7%, respectively). Although RESD is a more balanced corpus, class imbalance still affects the recall for the following emotions: Disgust (48.6%), Neutral (52.6%), Sad (53.1%) and Surprise (52.5%). Regarding cross-lingual differences in acoustic expression, emotions in English tend to be confused with Anger and Happy more often, while in Russian, they are often misclassified as Fear. This may be due to language-specific prosodic patterns, such as the use of a higher pitch or more distinct intonation to convey emotional intensity.

For bimodal fusion, the feature matrices extracted from Mamba’s final hidden states are employed in all subsequent experiments.

Table 6 also presents the performance of SOTA audio-based methods on the research corpora. To date, only one method (https://github.com/aniemore/Aniemore, accessed on 28 September 2025) has been reported for RESD. This method exceeds 80% on all measures for RESD, while the same model falls below 20% on MELD. This discrepancy points to overfitting to a single corpus.

The proposed method achieves comparable or superior performance relative to other methods on MELD while maintaining about 60% accuracy on RESD. Thus, among the compared SOTA audio-based methods, the proposed audio-based method shows the best overall ER performance and also demonstrates multilingual generalization capabilities.

4.4. Text-Based Emotion Recognition

The best configurations of the text-based models, determined using the same methodology as for the audio-based models, are presented in Table 7. A comparison of the computational cost of the text-based models shows clear trade-offs. Transformer has the smallest size (1.58 M parameters, 6.03 MB), followed by Mamba (3.28 M, 12.50 MB), xLSTM (8.15 M, 31.08 MB), and LSTM (12.61 M, 48.09 MB). Surprisingly, Mamba, despite being smaller than both recurrent models, is the slowest to train (392 s/epoch), significantly slower than the recurrent models and the Transformer (45 s/epoch). Although Mamba is often considered computationally efficient owing to its linear-time complexity, the obtained results do not support this claim under the current experimental setup.

The text-based experimental results are shown in Table 8. On average, Jina-v3-based and XLM-RoBERTa-based embeddings demonstrate comparable performance, while CANINE-c underperforms on all measures regardless of the temporal model employed. These results suggest that character-level tokenization is less effective than (sub)word-level tokenization for this task. Furthermore, despite being fine-tuned on only 30 languages, Jina-v3 slightly outperforms XLM-RoBERTa, which was pre-trained on 100 languages. This suggests that training on a smaller but more carefully selected set of languages may enable the model to capture more robust linguistic patterns and generalize better.

The results for the temporal models reveal distinct patterns across corpora: LSTM is the most effective on MELD, while Mamba outperforms the others (including xLSTM and Transformer) on RESD. This divergence likely arises from differences in token distribution between the corpora. In MELD, most words are in-vocabulary tokens, resulting in shorter informative sequences (with zero-padding beyond the actual content), which LSTM handles effectively. In RESD, texts contain more out-of-vocabulary words that are split into multiple (sub)tokens, creating longer sequences. Mamba plausibly performs better here owing to (1) efficient long-sequence processing and (2) better handling of (sub)token patterns.

Figure 9 shows the confusion matrices obtained by the text-based model. The impact of class imbalance remains. In MELD, Disgust, Fear, and Sad achieve the lowest recall values (11.8%, 14.0%, and 23.1%, respectively), although these are higher than for the audio-based model. In RESD, Sad and Surprise are recognized less reliably, with recall values of 25.0% and 17.5%, respectively. Cross-class confusions differ across languages: in MELD, the model mainly confuses Fear with Sad and Anger with Happy, whereas in RESD it confuses Fear with Surprise and Anger with Fear. These patterns suggest that language- and culture-specific ways of expressing emotions affect model performance beyond mere data imbalance, particularly through lexical and phrasing conventions that link distinct emotions to similar textual cues in each language.

For bimodal fusion, the 95 × 512 feature matrices extracted from Mamba’s final hidden states are employed in all subsequent experiments.

Table 8 also presents the performance of SOTA text-based methods on the MELD corpus. To the best of the authors’ knowledge, there are no published results for the text modality on the RESD corpus. The results on MELD show that the proposed model’s performance remains below that of SOTA models. This gap is likely due to the challenges of multilingual processing, as emotional patterns in Russian and English diverge considerably. Despite this limitation, the proposed model offers significant practical advantages: (1) it maintains operational capacity across languages where monolingual models typically fail; and (2) it eliminates language-specific tuning requirements.

4.5. Bimodal-Based Emotion Recognition

The best model configurations for BER are presented in Table 9. The optimal hyperparameters reveal key trends across the bimodal architectures. The Transformer-based models (BiFormer, BiGraphFormer, and BiGatedGraphFormer) use five layers, a hidden size of 256, and eight attention heads. In contrast, BiMamba reaches peak performance with fewer layers (four) but a larger hidden size (512). The training hyperparameters remain consistent across the models. In terms of computational cost, BiFormer is the most efficient (9 s/epoch, 4.40 M parameters). The graph-based variants require more computational resources (10–11 s/epoch, up to 6.37 M parameters). BiMamba is the slowest to train (16 s/epoch) and uses more parameters (4.89 M) than BiFormer.

The bimodal experimental results are shown in Table 10. These results demonstrate that bimodal models consistently outperform the unimodal baselines. Among them, BiFormer achieves the best (lowest) rank of 5.88, outperforming the text- and audio-based baselines (14.50 and 14.88, respectively), which corresponds to rank reductions of 8.62 and 9.00. The WPF strategy, which aggregates predictions from both unimodal (audio/text) and bimodal models, is not equally effective across corpora. On MELD, WPF clearly enhances performance (BiMamba-WPF reaches a UAR of 41.47%), suggesting that shorter utterances may benefit from a weighted consensus between modalities. On RESD, however, this strategy performs worse. The BiGraphFormer model performs well on RESD, achieving a UAR of 68.51%. It uses a fully connected graph structure to process all audio–text feature pairs; this structure explicitly models cross-modal interactions and may be more effective for longer sequences. Notably, the gated fusion variant (BiGatedGraphFormer) does not improve performance on either corpus, which indicates that Transformer- and graph-based attention mechanisms may be sufficient for cross-modal integration in the current experimental setting. Similarly, the Mamba-based architecture (BiMamba) shows limited effectiveness on the research corpora, as its simple feature concatenation lacks the explicit cross-modal attention mechanisms required for robust multimodal fusion.
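The text reports mean ranks without spelling out how they are computed. One reading that matches the reported two-decimal values (for example, 5.88 ≈ 47/8 and 2.63 ≈ 21/8) is that each model is ranked separately on each of eight columns (four measures × two corpora) and the ranks are averaged. The sketch below implements this reading, with tied scores sharing the mean of the ranks they span; the aggregation is an assumption, not the authors’ documented procedure.

```python
def average_ranks(scores):
    """Mean rank per model across metric columns (rank 1 = best, higher score is better).

    scores: dict mapping model name -> list of metric values, one per column.
    Assumed aggregation: tied scores receive the mean of the ranks they span.
    """
    models = list(scores)
    n_cols = len(scores[models[0]])
    totals = {m: 0.0 for m in models}
    for c in range(n_cols):
        ordered = sorted(models, key=lambda m: scores[m][c], reverse=True)
        i = 0
        while i < len(ordered):
            j = i
            # Extend j over every model tied with ordered[i] in this column.
            while j + 1 < len(ordered) and scores[ordered[j + 1]][c] == scores[ordered[i]][c]:
                j += 1
            shared_rank = (i + j) / 2 + 1  # 1-based mean of ranks i+1 .. j+1
            for m in ordered[i:j + 1]:
                totals[m] += shared_rank
            i = j + 1
    return {m: totals[m] / n_cols for m in models}


# Toy example with two columns (e.g., UAR on two corpora).
print(average_ranks({"A": [0.9, 0.8], "B": [0.8, 0.9], "C": [0.7, 0.7]}))
```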

BiFormer’s superior performance over the graph-based models on MELD suggests that standard attention mechanisms are sufficient to capture modality interactions in brief utterances. In contrast, RESD’s longer sequences benefit from explicit relational modeling through graph structures. Interestingly, WPF’s effectiveness appears to be inversely related to utterance length and complexity: it is effective on MELD’s concise dialogues but struggles with the more elaborate interactions in RESD, where joint bimodal learning becomes crucial. These observations are consistent with the corpus statistics: MELD contains shorter, more uniform utterances (on average 14 (sub)tokens and 3.1 s), which favor probabilistic fusion, whereas RESD consists of longer sequences (on average 24 (sub)tokens and 6.0 s), which require deeper architectural integration of modalities. The results demonstrate that while WPF provides a straightforward yet effective enhancement for simpler corpora, more sophisticated bimodal architectures are essential for learning complex multimodal interactions.

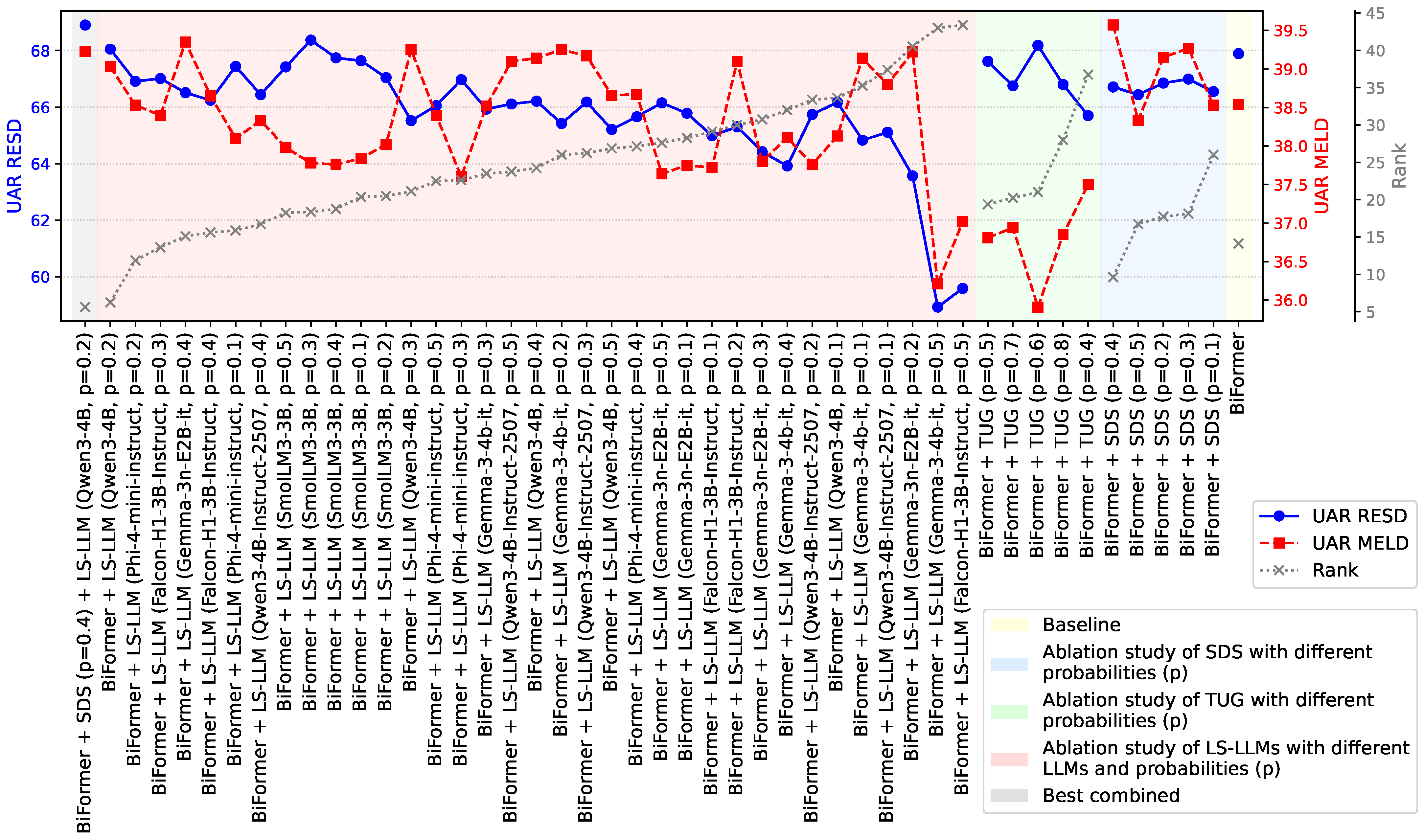

Table 10 also shows the best results for various augmentation techniques in combination with the BiFormer model. More detailed results for all augmentation techniques with varying probabilities are presented in Figure 10. The BiFormer model was selected for this evaluation because of its consistently high rank across corpora. The results demonstrate distinct effects of the various sampling and label smoothing strategies on the MELD and RESD corpora. The SDS method yields the most notable improvement with a sampling probability of p = 0.4, achieving a rank of 5.00 compared with the baseline value of 5.88. The gain is particularly evident on MELD, where SDS increases UAR from 38.54% to 39.57%. Given MELD’s elevated background noise and the high variability and brevity of its utterances, these results suggest that structured audio concatenation combined with Whisper-based transcription helps mitigate acoustic inconsistencies. As expected, no improvement was observed on RESD, which is consistent with SDS being most effective when utterance duration varies substantially.
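The SDS idea of padding short utterances with same-emotion material up to a fixed duration can be sketched as below. The sample representation (mono waveforms as lists of floats with a sampling rate), the random choice of fillers, trimming to the target duration, and leaving longer utterances untouched are assumptions for illustration; the Whisper-based re-transcription of the stacked audio described in the text is not shown. The function name is hypothetical.

```python
import random


def stack_same_emotion(sample, pool_by_label, target_sec, p, rng=random):
    """Hypothetical SDS-style augmentation for one training sample.

    sample: dict with 'audio' (list of floats), 'sr' (Hz) and 'label'.
    pool_by_label: dict mapping label -> list of samples in the same format.
    With probability p, a sample shorter than target_sec is extended with randomly
    chosen same-label clips and trimmed to exactly target_sec.
    """
    sr = sample["sr"]
    target_len = int(target_sec * sr)
    if len(sample["audio"]) >= target_len or rng.random() >= p:
        return sample  # long enough already, or not selected for augmentation
    candidates = [s for s in pool_by_label.get(sample["label"], []) if s["sr"] == sr and s["audio"]]
    audio = list(sample["audio"])
    while len(audio) < target_len and candidates:
        audio.extend(rng.choice(candidates)["audio"])
    # The transcript of the stacked audio would then be regenerated (e.g., with Whisper).
    return {"audio": audio[:target_len], "sr": sr, "label": sample["label"]}


short = {"audio": [0.0, 0.0], "sr": 4, "label": "sad"}
pool = {"sad": [{"audio": [1.0, 1.0, 1.0], "sr": 4, "label": "sad"}]}
print(stack_same_emotion(short, pool, target_sec=1.0, p=0.4, rng=random.Random(0)))
```

Here `p` plays the role of the sampling probability discussed above; only the selected fraction of short utterances is extended.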

In contrast, the TUG method failed to yield performance gains on either corpus. This finding highlights the limitations of current publicly available TTS models, such as DIA-TTS, in generating emotionally expressive and authentic-sounding speech. Moreover, the artificial nature of the TUG-generated utterance–audio pairs may introduce artifacts that negatively affect downstream performance, particularly in tasks that require fine-grained affective interpretation.

Using LLMs for label smoothing (LS-LLM) affects performance differently across corpora, with the most notable improvements observed on RESD. The Qwen3-4B variant achieves its best rank of 4.00 at p = 0.2, increasing UAR on RESD from 67.89% to 68.05%. This suggests that soft labels derived from LLMs enable more accurate detection of compound emotions in clean, laboratory-recorded corpora such as RESD, where emotional distinctions tend to be more subtle and less obscured by noise. Other LLMs, such as Phi-4-mini-instruct and Falcon-H1-3B-Instruct, also offer benefits over the baseline model, especially on MELD, but they underperform relative to Qwen3-4B. This difference is likely due to Qwen3-4B’s stronger instruction-following ability and its more nuanced representation of emotional context, which lead to higher-quality soft labels. This suggests that the quality of label smoothing depends on the LLM’s semantic understanding capabilities. Moreover, the relatively low optimal smoothing probability (p = 0.2) suggests that excessive label interpolation may weaken the contribution of ground-truth annotations.

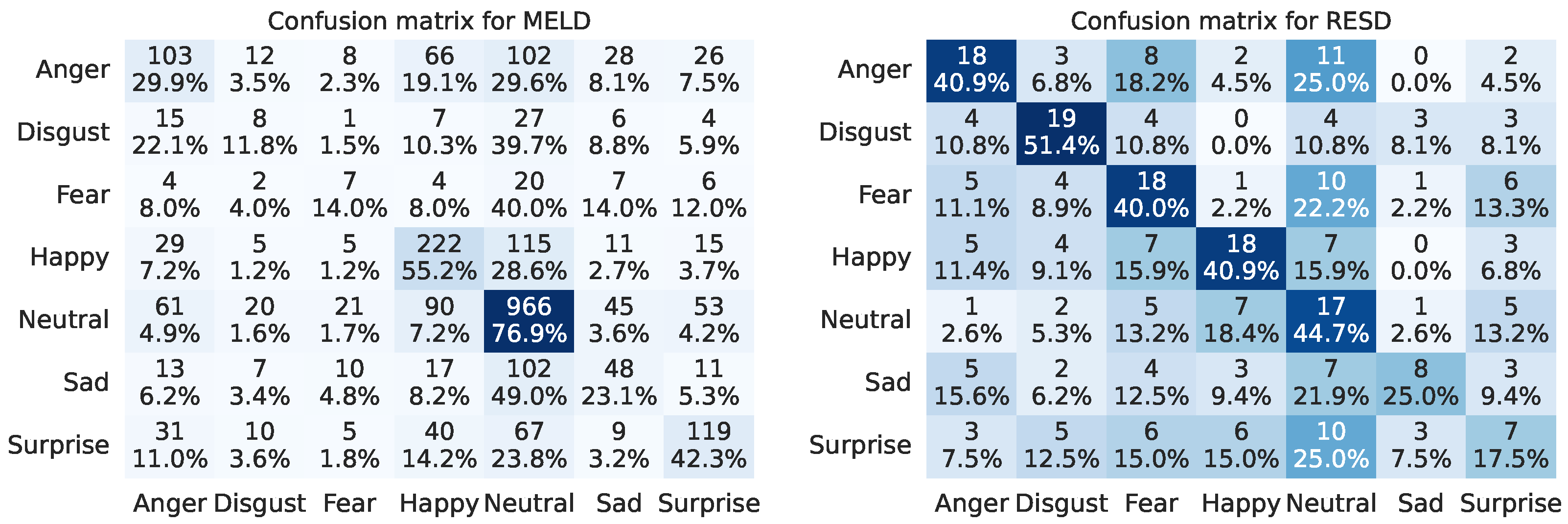

The combination of the two best augmentation methods (SDS with p = 0.4 and LS-LLM with p = 0.2) yielded a rank of 2.63, an improvement of 3.25 over the baseline (2.63 vs. 5.88). Specifically, the UAR gain was 0.69 percentage points on MELD (39.23% vs. 38.54%) and 1.01 percentage points on RESD (68.90% vs. 67.89%). These results demonstrate pronounced corpus-specific effects of the data augmentation methods. The integration of the audio and text modalities improves overall performance on both corpora, as shown by the higher diagonal values and improved recall in Figure 11 compared to Figure 8 and Figure 9. For instance, Sad recall increases from 19.7% (audio) and 23.1% (text) to 35.1% (bimodal) on MELD, and from 53.1% (audio) and 25.0% (text) to 62.5% (bimodal) on RESD. However, language-specific confusion patterns persist: on MELD, Fear is confused with Anger, and on RESD, Anger and Fear continue to be confused. This suggests that bimodal fusion helps but does not completely eliminate emotion confusions caused by language-specific differences in emotional expression.

Therefore, when corpus utterances exhibit duration imbalance, simply extending shorter utterances by concatenating additional samples of the same emotion proves beneficial. This method offers two advantages: (1) it normalizes utterance lengths; and (2) it generates novel training utterances. Together, these effects improve model performance. For corpora annotated under strict single-label protocols, LLM-based label smoothing helps uncover complex compound emotions. This method enhances prediction accuracy by reducing the model’s overconfidence and incorporating distributional patterns identified by the LLM. The best performance on both corpora is achieved by combining multiple augmentation strategies: while individual methods show corpus-specific effectiveness, their combination compensates for their respective limitations and balances overall performance.

5. Discussion

In this research, a Cross-Lingual Bimodal Emotion Recognition method is proposed that uses two modality-specific encoders. Each encoder uses a pre-trained, frozen Transformer-based model to extract low-level features (Wav2Vec2.0 for audio and Jina-v3 for text). These features are then processed by Mamba-based temporal encoders, trained from scratch, to capture mid-level representations. Finally, the modality-specific embeddings are integrated by a Transformer-based cross-modal fusion model, also trained from scratch, which produces the final emotion probabilities.

To enhance the model’s generalization capability across corpora with differing linguistic and acoustic characteristics, two data augmentation strategies are introduced: (1) SDS, which concatenates short utterances with others sharing the same emotion to create more context-rich emotional expressions of fixed duration; and (2) LS-LLM, which smooths the emotion distribution for ambiguous or multi-emotion utterances that are likely to have been mislabeled due to limitations in the annotation process.

The sequential application and comprehensive evaluation of all proposed solutions enabled the model to achieve strong performance on both corpora under joint training. For MELD, UAR improved by 11.23 percentage points in absolute terms over the weakest unimodal model (audio-only) (28.00% audio-only, 36.17% text-only, 38.54% bimodal, and 39.23% bimodal with data augmentation). Similarly, for RESD, the absolute UAR gain over the weakest unimodal model (text-only) was 31.70 percentage points (60.79% audio-only, 37.20% text-only, 67.89% bimodal, and 68.90% bimodal with data augmentation).

However, the proposed method has several limitations. The effectiveness of label smoothing via LLMs depends on careful prompt engineering, which is difficult to generalize. Although lightweight Mamba encoders improve efficiency, they may be less accurate than larger models. The use of LLMs increases the computational cost and training latency. Finally, extending the model to include the visual modality and temporal uncertainty could further improve its robustness in real-world settings.

The computational cost of the models is also analyzed. The total size of the models used in the proposed method is approximately 19.10 GB, corresponding to 257.53 M parameters. Only about 3% of these parameters belong to the models trained in this work; the remaining parameters come from the pre-trained Whisper, Jina-v3, and Wav2Vec2.0 models. All models were trained on an NVIDIA A100 with 80 GB of graphics processing unit (GPU) memory [147]. Training a single epoch of the audio encoder takes approximately 120 s with a batch size of 16, while the text encoder takes around 80 s with a batch size of 32. Training the BiFormer model requires approximately 70 s per epoch with a batch size of 64. The proposed method supports real-time inference: processing 1 s of audio–text data takes 0.11 s on a GPU (NVIDIA GeForce RTX 4080) and 0.70 s on a CPU (Intel Core i7), i.e., real-time factors of 0.11 and 0.70, respectively, so the method processes audio faster than its duration. The inference time scales linearly with the length of the input audio.
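The real-time factor (RTF) is the ratio of processing time to input duration, so values below 1 indicate faster-than-real-time processing. Under the linear scaling stated above, the expected processing time of a longer clip follows directly from the RTF. The helper names below are illustrative.

```python
def real_time_factor(processing_sec, audio_sec):
    """RTF = processing time / input duration (dimensionless)."""
    if audio_sec <= 0:
        raise ValueError("audio duration must be positive")
    return processing_sec / audio_sec


def expected_processing_sec(audio_sec, rtf):
    """Processing time for a clip, assuming time scales linearly with duration."""
    return rtf * audio_sec


gpu_rtf = real_time_factor(0.11, 1.0)  # RTX 4080 figure reported above
cpu_rtf = real_time_factor(0.70, 1.0)  # Intel Core i7 figure reported above
for name, rtf in (("GPU", gpu_rtf), ("CPU", cpu_rtf)):
    print(f"{name}: 10 s clip processed in about {expected_processing_sec(10.0, rtf):.2f} s")
```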

5.1. RQ1: Can a Cross-Lingual BER Model Improve Generalization Across Languages?

Figure 12 shows the word attention distribution of the proposed BER model on semantically equivalent Russian and English sentence pairs, revealing different lexical salience patterns. In the top pair, despite the language differences, the model predicts Neutral (75.5% for Russian, 99.4% for English), with attention concentrated on lexically aligned terms, suggesting partial cross-lingual alignment. In contrast, the bottom pair exposes a critical limitation: although both sentences express Sad, the model attends to literal, morphologically salient components rather than recognizing idiomatic or culturally entrenched expressions of grief. Notably, the Russian phrase, while semantically rich, lacks the conventional emotional framing found in the model’s training data. Its literal interpretation of “bleeding heart” evokes physical trauma rather than psychological sorrow, leading to a misattribution of emotion. This highlights the challenges of cross-lingual emotion modeling, which arise not only from structural mismatches between languages but also from the lack of culturally specific idioms in the research corpora.

These results indicate that while the cross-lingual BER model achieves partial alignment for structurally similar expressions, it struggles with native-speaker-specific emotional idioms that do not appear in research corpora. Thus, cross-lingual generalization is possible but remains fragile without explicit modeling of linguistic and cultural diversity.

It should be noted that the model’s performance was evaluated only on English and Russian, which are both Indo-European languages with relatively similar syntactic and semantic structures. Extending the model to other languages (e.g., Arabic or Korean) would require emotion-annotated data and dedicated experiments, which is an important direction for future research. Nevertheless, the proposed model is based on the multilingual Jina-v3 encoder [66], which was pre-trained on over 30 languages and aligns semantically similar words across languages in a shared embedding space. Thus, the proposed cross-lingual BER model can, in principle, be applied to any supported language. However, without emotion-labeled data, it cannot reliably interpret culture-specific emotional expressions.

5.2. RQ2: Can a Hierarchical Cross-Modal Attention Mechanism Effectively Handle Variable Utterance Lengths?

This research question is addressed by the results in Table 10 and the corresponding analysis in Section 4.5. On MELD, which consists of short utterances (approximately 3.6 s long, with around 8 words each), the BiFormer model with a single attention mechanism outperforms the other models. Simpler fusion techniques, such as WPF, also work well in this setting. This implies that for short and fragmented expressions, global attention is sufficient to detect cross-modal emotions, and adding a hierarchical cross-modal attention mechanism using graph- or gate-based fusion introduces unnecessary complexity. In contrast, on RESD, which contains longer and more expressive utterances (average duration 6.0 s, approximately 24 words), the hierarchical graph-based BiGraphFormer model outperforms the simpler BiFormer model. This suggests that modeling pairwise audio–text interactions in two stages can be beneficial when emotional signals develop over time and require deeper integration. Mamba-based models perform poorly on both corpora, despite Mamba’s reputation for efficiently handling long sequences. This suggests that linear state-space modeling alone is not sufficient for accurate BER.

In summary, it is important to adapt the fusion strategy depending on the length of the utterances. For short utterances, a lightweight cross-modal fusion model is more effective, while for longer utterances, a hierarchical cross-modal fusion model leads to improved performance. This finding supports the need for adaptive or hierarchical model architectures in real-world ER systems, which can adapt to different dialogue styles and contexts.

5.3. RQ3: Do Corpus-Specific Augmentation Strategies Improve the Generalization of Cross-Lingual BER Models?

This question is addressed by the results in Table 10 and Figure 10, which evaluate augmentation strategies using the BiFormer model as a consistent backbone. On the noisy and fragmented MELD corpus, SDS, which extends short utterances by stacking same-emotion samples, improves performance by stabilizing input length and reducing acoustic variability. However, it brings no gain on the cleaner and more structured RESD corpus. Conversely, LS-LLM is particularly effective on RESD, as longer utterances often contain nuanced or mixed emotional cues that a single-label annotation scheme may not capture. By generating a context-aware distribution of soft labels, LS-LLM can help recover this affective complexity. On MELD, however, utterances are short and noisy, so the contextual signal is less reliable, which limits the benefit of label smoothing. The TUG method, which creates emotional speech using TTS, fails to improve performance on either corpus. This suggests that current SOTA TTS models cannot yet reliably generate emotional speech. Combining SDS and LS-LLM yields complementary improvements on both corpora, showing that corpus-aware augmentation, especially when multiple strategies are used together, enhances cross-lingual generalization.
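The two complementary strategies discussed above can be sketched as follows. This section describes SDS only as stacking same-emotion samples to extend short utterances, and LS-LLM only as producing a context-aware distribution of soft labels with an LLM. The concrete choices below are assumptions made for illustration: concatenating raw waveforms and transcripts until a hypothetical minimum length `min_samples` is reached, and blending the one-hot gold label with an LLM-provided distribution through a hypothetical weight `alpha`. The LLM query itself is out of scope, so its output is simply passed in.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Sample:
    audio: List[float]  # mono waveform samples
    text: str           # transcript
    label: int          # index of the gold emotion class


def stack_same_emotion(seed: Sample, pool: Sequence[Sample], min_samples: int,
                       rng: random.Random) -> Sample:
    """SDS-style sketch: append randomly drawn same-label samples from `pool`
    until the stacked waveform has at least `min_samples` samples."""
    # Skip empty clips so the loop below is guaranteed to terminate.
    candidates = [s for s in pool if s.label == seed.label and s.audio]
    audio, texts = list(seed.audio), [seed.text]
    if not candidates:  # nothing to stack with: return an unchanged copy
        return Sample(audio, seed.text, seed.label)
    while len(audio) < min_samples:
        extra = rng.choice(candidates)
        audio.extend(extra.audio)
        texts.append(extra.text)
    return Sample(audio, " ".join(texts), seed.label)


def blend_soft_labels(label: int, llm_dist: Sequence[float],
                      alpha: float = 0.2) -> List[float]:
    """LS-LLM-style sketch: mix the one-hot gold label with an
    LLM-estimated emotion distribution (assumed to be given)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if any(p < 0 for p in llm_dist) or sum(llm_dist) <= 0:
        raise ValueError("llm_dist must be non-negative with a positive sum")
    if not 0 <= label < len(llm_dist):
        raise ValueError("label index out of range")
    total = sum(llm_dist)
    norm = [p / total for p in llm_dist]  # renormalize defensively
    return [(1.0 - alpha) * (1.0 if i == label else 0.0) + alpha * p
            for i, p in enumerate(norm)]
```

The two functions act on different parts of a training example (the input and the target, respectively), which is consistent with the complementary gains reported above when both strategies are combined.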

In summary, the choice of augmentation strategies should be based on the utterance characteristics. SDS can be used for variable-length or noisy utterances, while LS-LLM can be used for long or nuanced emotional utterances. Combining these strategies can improve overall performance, as it allows for adaptation and context-awareness in data augmentation, which benefits generalization.

5.4. Comparison with State-of-the-Art Methods

The SOTA methods are predominantly developed under a single-corpus setup, which limits their generalization to unseen data. In contrast, this research focuses on a multi-corpus setup to enhance cross-domain robustness. Nevertheless, the proposed method was also compared with the SOTA methods in a single-corpus setup. The results of this comparison, presented in Table 11, show that the proposed method outperforms the SOTA methods on both target measures, highlighting the advantages of the Transformer-based bimodal fusion model and the proposed data augmentation strategies.

5.5. Application of the Proposed Method in Intelligent Systems

To demonstrate the practical applicability of the proposed Cross-Lingual Bimodal Emotion Recognition system, an interactive prototype was implemented using the Gradio library (https://www.gradio.app, accessed on 28 September 2025). Figure 13 illustrates the user interface of the developed system. The system accepts audio input recorded via a microphone. The transcript is extracted with the Whisper model, which provides accurate and robust transcription across multiple languages. The recognized emotions are visualized as bar plots showing the predictions of the unimodal models (text-only and audio-only) as well as of the bimodal model.

This system serves as a proof of concept and can be integrated into a range of real-world applications, including customer support services, voice assistants, emergency dispatch systems, and other interactive platforms requiring emotional awareness [149,150,151]. Such a deployment highlights the potential of BER technologies to enhance cross-lingual HCI.

6. Conclusions

This research addresses the task of Cross-Lingual Bimodal Emotion Recognition by proposing a novel method that integrates multilingual Mamba-based encoders with multilingual Transformer-based cross-modal fusion. The proposed method achieves robust generalization across two linguistically and structurally diverse corpora: MELD (English) and RESD (Russian). To enhance its generalizability, two data augmentation strategies are introduced: SDS and LS-LLM. These improvements raise performance above the SOTA methods: for MELD, the gain is 0.58 percentage points (68.31% vs. 67.73%), and for RESD, it reaches 4.32 percentage points (85.25% vs. 80.93%). These results confirm the effectiveness of the proposed method in both cross-lingual and monolingual setups.

Unimodal feature extractors are systematically evaluated using various temporal encoders (LSTM, xLSTM, Transformer, and Mamba). In audio-based experiments, Wav2Vec2.0 embeddings outperform ExHuBERT embeddings, and Mamba achieves superior performance on longer utterances. For text, subword-level models such as Jina-v3 and XLM-RoBERTa outperform the character-level CANINE-c model. Mamba again shows better efficiency than the other temporal models.

To perform bimodal fusion, three attention mechanisms are evaluated: Transformer-, graph-, and gate-based attention. Among these, the Transformer-based model (BiFormer) shows the best average performance, with relative improvements of 16.23% and 7.14% over the text and audio baselines, respectively. The choice of attention mechanism proves corpus-dependent: Transformer-based attention is sufficient for short utterances (MELD), whereas longer and more complex sequences (RESD) benefit from combined Transformer- and graph-based cross-modal integration.
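The relative improvements quoted above differ from the absolute gains reported for the SOTA comparison. Assuming the standard definition (the exact formula is not restated in this section), for a bimodal score $m_{\text{bi}}$ and a unimodal baseline score $m_{\text{uni}}$ measured with the same metric:

```latex
\Delta_{\text{rel}} = \frac{m_{\text{bi}} - m_{\text{uni}}}{m_{\text{uni}}} \times 100\%
```

For example, applying the same definition to the RESD comparison with SOTA gives $(85.25 - 80.93)/80.93 \times 100\% \approx 5.34\%$, whereas the absolute difference is 4.32 percentage points.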

The proposed data augmentation strategies also demonstrate clear, corpus-specific benefits. SDS improves performance on the noisy, short, and variable-duration utterances of MELD, while LS-LLM enhances recognition on the cleaner and emotionally nuanced data of RESD. Notably, combining both strategies yields complementary gains, underscoring their synergistic potential. A third augmentation strategy, TUG, which synthesizes emotional audio–text pairs to mitigate class imbalance, is also evaluated. However, it proves ineffective, likely because current TTS models cannot yet reliably generate emotionally expressive speech.

In future work, the framework will be expanded to incorporate visual modalities, enabling comprehensive affective computing in dynamic, real-world environments.

Author Contributions

Conceptualization, E.R. and D.R.; methodology, E.R., A.A., T.A., D.K. and D.R.; software, D.R.; validation, E.R., A.A., T.A. and D.K.; formal analysis, E.R. and A.A.; investigation, E.R., A.A., T.A. and D.K.; resources, E.R., A.A., T.A., D.K. and D.R.; data curation, D.R.; writing—original draft preparation, E.R. and A.A.; writing—review and editing, E.R. and D.R.; visualization, E.R. and A.A.; supervision, D.R.; project administration, D.R.; funding acquisition, D.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Research Program at the National Research University Higher School of Economics (HSE University) and made use of computational resources provided by the university’s HPC facilities.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| HCI | Human-Computer Interaction |
| MER | Multimodal Emotion Recognition |
| ER | Emotion Recognition |
| BER | Bimodal Emotion Recognition |
| SER | Speech Emotion Recognition |
| UAR | Unweighted Average Recall |
| WAR | Weighted Average Recall |
| MF1 | Macro F1-score |
| WF1 | Weighted F1-score |
| SDS | Stacked Data Sampling |
| GDS | Generated Data Sampling |
| TUG | Template-based Utterance Generation |
| GAN | Generative Adversarial Network |
| CER | Continuous Emotion Recognition |
| SOTA | State-of-the-Art |
| WPF | Weighted Probability Fusion |
| LLM | Large Language Model |
| LS-LLM | Label Smoothing Generation based on LLM |
| TTS | Text-to-Speech |
| RESD | Russian Emotional Speech Dialogs |
| MELD | Multimodal EmotionLines Dataset |
| ASR | Automatic Speech Recognition |
| SAWA | Sliding Adaptive Window Attention |
| GCPU | Gated Context Perception Unit |
| DFC | Dynamic Frame Convolution |
| MCFN | Modality-Collaborative Fusion Network |
| SSM | State-Space Model |
| BERT | Bidirectional Encoder Representations from Transformers |
| RoBERTa | Robustly optimized BERT approach |
| ALBERT | A Lite BERT |
| HuBERT | Hidden-unit BERT |
| ExHuBERT | Extended HuBERT |
| CNN | Convolutional Neural Network |
| DCNN | Deep CNN |
| LSTM | Long Short-Term Memory network |
| BiLSTM | Bidirectional LSTM network |
| WavLM | Waveform-based Language Model |
| HRI | Human-Robot Interaction |
| MTL | Multi-Task Learning |
| DANN | Domain-Adversarial Neural Network |
| GPT | Generative Pre-trained Transformer |
| CM-ARR | Cross-Modal Alignment, Reconstruction, and Refinement |
| MFSN | Multi-perspective Fusion Search Network |
| MFCC | Mel-Frequency Cepstral Coefficients |
| RL | Reinforcement Learning |
| GloVe | Global Vectors for Word Representation |
| COVAREP | COllaborative Voice Analysis REPository |
| BiGRU | Bidirectional Gated Recurrent Unit |
| CLIP | Contrastive Language-Image Pretraining |
| KWRT | Knowledge-based Word Relation Tagging |
| RNN | Recurrent Neural Network |
| LoRA | Low-Rank Adaptation |
| E-ODN | Emotion Open Deep Network |
| PAD | Pleasure-Arousal-Dominance model of emotion |
| xLSTM | Extended LSTM |
| TelME | Teacher-leading Multimodal fusion network for Emotion recognition in conversation |
| GPU | Graphics Processing Unit |
| TCN | Temporal Convolutional Network |

References

- Liu, W.; Qiu, J.L.; Zheng, W.L.; Lu, B.L. Comparing Recognition Performance and Robustness of Multimodal Deep Learning Models for Multimodal Emotion Recognition. IEEE Trans. Cogn. Dev. Syst. 2021, 14, 715–729.

- Geetha, A.; Mala, T.; Priyanka, D.; Uma, E. Multimodal Emotion Recognition with Deep Learning: Advancements, Challenges, and Future Directions. Inf. Fusion 2024, 105, 102218.

- Wu, Y.; Mi, Q.; Gao, T. A Comprehensive Review of Multimodal Emotion Recognition: Techniques, Challenges, and Future Directions. Biomimetics 2025, 10, 418.

- Ai, W.; Zhang, F.; Shou, Y.; Meng, T.; Chen, H.; Li, K. Revisiting Multimodal Emotion Recognition in Conversation from the Perspective of Graph Spectrum. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 11418–11426.

- Dikbiyik, E.; Demir, O.; Dogan, B. BiMER: Design and Implementation of a Bimodal Emotion Recognition System Enhanced by Data Augmentation Techniques. IEEE Access 2025, 13, 64330–64352.

- Khan, M.; Tran, P.N.; Pham, N.T.; El Saddik, A.; Othmani, A. MemoCMT: Multimodal Emotion Recognition using Cross-Modal Transformer-based Feature Fusion. Sci. Rep. 2025, 15, 5473.

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A Review of Affective Computing: From Unimodal Analysis to Multimodal Fusion. Inf. Fusion 2017, 37, 98–125.

- Dixit, C.; Satapathy, S.M. Deep CNN with Late Fusion for Real Time Multimodal Emotion Recognition. Expert Syst. Appl. 2024, 240, 122579.

- Huang, C.; Lin, Z.; Han, Z.; Huang, Q.; Jiang, F.; Huang, X. PAMoE-MSA: Polarity-Aware Mixture of Experts Network for Multimodal Sentiment Analysis. Int. J. Multimed. Inf. Retr. 2025, 14, 7.

- Li, Q.; Gkoumas, D.; Sordoni, A.; Nie, J.Y.; Melucci, M. Quantum-Inspired Neural Network for Conversational Emotion Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 13270–13278.

- Li, B.; Fei, H.; Liao, L.; Zhao, Y.; Teng, C.; Chua, T.S.; Ji, D.; Li, F. Revisiting Disentanglement and Fusion on Modality and Context in Conversational Multimodal Emotion Recognition. In Proceedings of the ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5923–5934.

- Tapaswi, M.; Zhu, Y.; Stiefelhagen, R.; Torralba, A.; Urtasun, R.; Fidler, S. MovieQA: Understanding Stories in Movies through Question-Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4631–4640.

- Lin, Z.; Madotto, A.; Shin, J.; Xu, P.; Fung, P. MoEL: Mixture of Empathetic Listeners. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 121–132.

- Zhang, F.; Chai, L. A Review of Research on Micro-Expression Recognition Algorithms based on Deep Learning. Neural Comput. Appl. 2024, 36, 17787–17828.