Abstract

Customer satisfaction is not just a significant factor but a cornerstone for smart cities and the organizations within them that offer services to people. It enhances an organization’s reputation and profitability and substantially raises the chances of customers returning. Unfortunately, customers often do not rate customer support conversations conducted through online chat, depriving organizations of feedback that could help improve the service. This study employs artificial intelligence and data augmentation to predict customer satisfaction ratings from conversations by analyzing the responses of both customers and service providers. The authors obtained actual conversations between customers and real agents from the call center database of Jeddah Municipality, each rated by the customer on a scale of 1–5. They trained and tested five prediction models: three built with approaches based on logistic regression, random forest, and ensemble-based deep learning, and two obtained by fine-tuning recent pre-trained models, ArabicT5 and SaudiBERT. They then repeated the training and testing after applying a data augmentation technique based on the generative artificial intelligence model GPT-4 to reduce the class imbalance in the customer conversation data. The study found that the ensemble-based deep learning approach performed best for the five-, three-, and two-class classifications. Moreover, data augmentation improved accuracy by 1.69% for the ensemble-based deep learning model and by 3.84% for the logistic regression model. This study contributes to the advancement of Arabic opinion mining, as it is the first to report the performance of determining customer satisfaction levels using Arabic conversation data. The findings can be applied to improve customer service in various organizations.

1. Introduction

The concept of smart cities refers to cities that use technology and data to improve their residents’ quality of life [1]. Smart cities are therefore concerned with customer satisfaction: when city residents receive enhanced services that satisfy them, they become more loyal and more involved in urban development [2]. In addition, customer satisfaction ratings are essential because product and service providers and their decision-makers use them as a metric for managing and improving their products or services and for deciding on the organization’s continuity or a product’s life cycle [3]. Satisfied customers sustain sales and attract new customers by referring others, which supports business continuity [4]. Furthermore, since acquiring new customers costs business owners five to seven times more than retaining current ones [5], ensuring customer loyalty and satisfaction is crucial.

Organizations can use different channels to gauge customer satisfaction, such as review websites, social media, or online chat software. Review websites and online chat software, whether live chat or chatbot, can have an advantage over social media in that customers can rate their reviews or conversations, indicating how close to or far from being satisfied they are. Improving customer support through online chat software requires knowing the satisfaction ratings of the people served. However, customers often leave customer support conversations without rating the service, as they are not obligated to do so. At the same time, businesses need to know how customers rate their customer support services so that they can make decisions that improve their products, services, or customer support; for example, they can enhance services or products and create offers based on clients’ needs. If customers are satisfied with customer support teams, businesses can promote themselves by showing that they care about customer satisfaction and that their customers appreciate them. Organizations can also reward dedicated service providers who frequently obtain the highest conversation ratings with financial or non-financial incentives so that they maintain their excellent service. Conversely, organizations can investigate the reasons for poor ratings: if a particular service provider is responsible for customers’ irritation, they can consider replacing that provider, and if the service was slow, they can respond by developing a chatbot that transfers the customer to a human agent when necessary. These measures help organizations and businesses increase profit and achieve success and eventual growth.

The common practice for rating customer satisfaction in online chat software is to ask users to rate the provided service manually by selecting a suitable rating or writing their opinion about it. Some online chat software offers only two options for rating a conversation, satisfactory or unsatisfactory, while others offer more levels. A three-level rating classifies the conversation as satisfactory, neutral, or unsatisfactory, and a five-level rating classifies it as poor, fair, average, good, or excellent [6]. Identifying five levels of customer satisfaction can be more beneficial than determining only two statuses, positive or negative, because business owners and service providers can then measure how close they are to satisfying their customers. Finding effective methods for automatically estimating customer satisfaction in customer support chat services remains an essential research challenge [7]. Such methods add a significant feature to online chat software, on the assumption that user satisfaction can serve as a measure of the software’s service performance [8]. Moreover, after extensive research, the authors found no studies on predicting customer satisfaction with online chat software in the Arabic language. The absence of publicly available Arabic datasets of rated customer support conversations is likely due to the need to protect client confidentiality, as some clients share personal information during conversations [9].

This study aims to use artificial intelligence techniques and data augmentation to perform an automated rating of customer satisfaction with customer service conversations, focusing on the Arabic language.

The main contributions of this study are as follows:

- (1) This study presents the first reported results for predicting customer satisfaction using Arabic conversational data, unlike the current focus of Arabic opinion mining literature, which normally uses reviews, news, and posts from the X platform (formerly Twitter). The data, collected from the call center database of Jeddah Municipality and rated by its customers, offer a unique perspective on customer satisfaction in Arabic. These findings illuminate a previously unexplored area of research, underscoring the importance of understanding customer satisfaction in diverse linguistic contexts.

- (2) The study applies five different approaches to two-, three-, and five-level ratings using customer service conversations from Jeddah Municipality: logistic regression [10], random forest [11], ensemble-based deep learning [12], ArabicT5 [13], and SaudiBERT [14]. These approaches were chosen for their previously reported performance. Comparing several approaches on the same data provides a solid foundation for the findings.

- (3) The study is the first in the literature to apply data augmentation to conversational data using GPT-4 [15]. In this approach, the five models are retrained on the augmented data and tested on the original test data, adding a new dimension to the study of customer satisfaction prediction.

The remainder of this study comprises sections on related work, methodology, results with discussion, and conclusion and future work.

2. Related Work

Nowadays, online chat software is a popular communication tool for customer service [16], so it is vital to measure its performance to guarantee customer satisfaction. Because it is assumed that the performance of online chat software can be measured through user satisfaction, multiple studies have attempted to estimate customer satisfaction [8]. The conversational datasets used in the following studies consist of either task-oriented or non-task-oriented conversations [17,18]. Task-oriented conversations belong to a closed domain and are short, goal-oriented dialogues with specific tasks. Non-task-oriented conversations belong to an open domain, cover a broad range of topics, and involve a wide variety of natural language expressions [19]. The authors in [8,18,20,21,22,23,24,25,26,27,28] used task-oriented conversations, while the authors in [7,29,30,31,32] used non-task-oriented conversations. These studies are discussed further in Section 2.2 and Section 2.3.

When datasets are not diverse enough for model development, authors can expand them using data augmentation techniques. This review of related work therefore begins with an overview of relevant data augmentation concepts and studies.

2.1. Data Augmentation

The goal of developing machine learning models for supervised learning tasks is to enable the model to predict unseen data based on previously observed data. One method that can improve the generalization capability of machine learning models is data augmentation, a technique that increases the variety of training sets without explicitly gathering new data [33]. It can help overcome the challenges of imbalanced datasets [34] and text data scarcity [35]. The use of data augmentation in text processing tasks is still limited, though growing, compared with its use in image processing tasks, and not all applications of the technique in text processing have been successful [33]. Accordingly, the results of applying data augmentation to text processing tasks need to be reported to indicate whether it succeeds [36], especially in cases such as this one, where self-reported user satisfaction is predicted from rated conversations. The performance of models trained with data augmentation can be measured using the standard metrics of accuracy, precision, recall, and F1-score, in addition to the confusion matrix [37]. A confusion matrix is a tabular representation of a classification model’s correct and incorrect predictions on test data within each class [38]. For instance, for a model that predicts the five rating classes of customer service (poor, fair, average, good, and excellent), the confusion matrix shows, for each true rating, how many conversations were assigned to each predicted rating. It thus provides a detailed analysis of true positive, true negative, false positive, and false negative predictions, which makes it a crucial tool for examining the classification results achieved by the models and measuring their effectiveness under varying class distributions [39].
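To make these metrics concrete, the following minimal sketch computes a confusion matrix, accuracy, per-class precision, recall, and F1-score, and macro-averaged F1 for the five rating classes. It is written in plain Python so that the true positive, false positive, and false negative counts are explicit; the toy labels are invented for illustration, and in practice scikit-learn’s `confusion_matrix` and `classification_report` provide the same quantities.

```python
LABELS = [1, 2, 3, 4, 5]  # poor, fair, average, good, excellent


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_metrics(matrix):
    """(precision, recall, F1) for every class, read off the matrix."""
    n = len(matrix)
    metrics = []
    for k in range(n):
        tp = matrix[k][k]
        fp = sum(matrix[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(matrix[k]) - tp                        # truly k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics.append((precision, recall, f1))
    return metrics


def accuracy(matrix):
    total = sum(sum(row) for row in matrix)
    return sum(matrix[k][k] for k in range(len(matrix))) / total


def macro_f1(matrix):
    """Unweighted mean of per-class F1, so minority classes count equally."""
    scores = per_class_metrics(matrix)
    return sum(f1 for _, _, f1 in scores) / len(scores)


y_true = [1, 1, 5, 5, 3, 4, 2]
y_pred = [1, 5, 5, 5, 3, 5, 1]
matrix = confusion_matrix(y_true, y_pred, LABELS)
print(matrix)
print(f"accuracy={accuracy(matrix):.3f}, macro-F1={macro_f1(matrix):.3f}")
```

For these toy labels the model gets 4 of 7 conversations right (accuracy about 0.571), but its macro-F1 is only about 0.433 because the minority classes 2 and 4 are never predicted correctly, which is why per-class views matter for unbalanced rating data.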

Data augmentation can be performed with different techniques and applied at different levels. These techniques can be classified into two categories: word level and sentence level.

2.1.1. Word Level Data Augmentation

This method generates new synthetic data by randomly swapping, deleting, inserting, and replacing at least one word in the text [36], where each operation is implemented as follows:

- Random Swap: Two words are randomly selected from the sentence, and their positions are exchanged. This process can be repeated multiple times.

- Random Delete: Words are randomly removed from the sentence with a particular probability p.

- Synonym Replacement: Words that are not stop words are randomly selected from the sentence and replaced with their synonyms; a stop-word list must therefore be identified first so that stop words are excluded from the selection.

- Random Insert: After the stop-word list is identified, a synonym of a randomly selected word that is not a stop word is inserted at a random position in the sentence, rather than replacing the original word in place. This operation can be repeated multiple times [40].

During the synonym replacement and random insert operations, synonyms can be obtained with different techniques, such as embedding-based approaches (e.g., GloVe and Word2Vec), language dictionaries (e.g., WordNet), and deep learning-based embeddings (e.g., Transformers) [41].
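The four word-level operations listed above can be sketched in plain Python as follows. This is a minimal illustration in the spirit of [40], not the cited implementation: the English toy sentence, the small stop-word list, and the `SYNONYMS` dictionary are hypothetical stand-ins for an Arabic stop-word list and a synonym source such as WordNet or embedding nearest neighbors.

```python
import random

# Hypothetical toy resources (assumptions, not from the study): a real setup
# would use an Arabic stop-word list and a synonym source such as WordNet.
STOP_WORDS = {"the", "a", "is", "was", "and"}
SYNONYMS = {
    "reply": ["response", "answer"],
    "quick": ["fast", "rapid"],
    "helpful": ["useful"],
}


def random_swap(words, n, rng):
    """Exchange the positions of two randomly chosen words, n times."""
    words = list(words)
    for _ in range(n):
        if len(words) < 2:
            break
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words


def random_delete(words, p, rng):
    """Remove each word independently with probability p."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    # Keep one random word so the augmented example is never empty.
    return kept or [rng.choice(words)]


def replaceable(words):
    """Non-stop words for which a synonym is available."""
    return [w for w in words if w not in STOP_WORDS and w in SYNONYMS]


def synonym_replace(words, n, rng):
    """Replace up to n distinct non-stop words with a random synonym."""
    words = list(words)
    targets = list(dict.fromkeys(replaceable(words)))  # unique, order kept
    rng.shuffle(targets)
    for target in targets[:n]:
        synonym = rng.choice(SYNONYMS[target])
        words = [synonym if w == target else w for w in words]
    return words


def random_insert(words, n, rng):
    """Insert a synonym of a random non-stop word at a random position, n times."""
    words = list(words)
    for _ in range(n):
        targets = replaceable(words)
        if not targets:
            break
        synonym = rng.choice(SYNONYMS[rng.choice(targets)])
        words.insert(rng.randint(0, len(words)), synonym)
    return words


rng = random.Random(42)
sentence = "the reply was quick and helpful".split()
for operation, arg in [(random_swap, 1), (random_delete, 0.2),
                       (synonym_replace, 1), (random_insert, 1)]:
    print(operation.__name__, " ".join(operation(sentence, arg, rng)))
```

Each function copies its input, so several augmented variants can be generated from the same original sentence.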

2.1.2. Sentence Level Data Augmentation

Implementation is more challenging at the sentence level than at the word level, since sentence-level data augmentation alters whole sentences to produce synthetic data, resulting in paraphrased sentences. One frequently employed technique uses machine translation models [36]. Back-translation translates the original text data several times, through a chain of target languages, and then back into the source language [42]. In its simplest form, the text is translated from the source language into another language once and then back into the original language [43]. Although the resulting sentences differ from the originals, the meaning of the synthetic text data remains the same. The most sophisticated strategy is to use a generative model, which generates new data word by word based on the probability of each word given the preceding words. This technique further enriches the training data, enabling the synthetic data to have a sentence structure distinct from that of the original data [36]. Both back-translation and generative data augmentation are classified as neural approaches [44]. Automatic paraphrase generation tools, including the GPT-4 model, produce sentences that are semantically close to a single input sentence [45]. Paraphrasing can be used for many natural language processing tasks [46], particularly when the target dataset is in a low-resource language such as Arabic [47].
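The back-translation chain described above can be sketched as a small function that does not depend on any particular translation system. The `translate(text, source, target)` hook is a hypothetical placeholder for whatever machine translation model is used; with one pivot language the function performs the simplest round trip, and with several pivots it performs the multi-language chain.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical hook: translate(text, source_lang, target_lang) -> translated text.
Translator = Callable[[str, str, str], str]


def back_translate(text: str, translate: Translator, source: str,
                   pivots: Sequence[str]) -> str:
    """Translate source -> pivots[0] -> ... -> pivots[-1] -> source."""
    current_text, current_lang = text, source
    for pivot in pivots:
        current_text = translate(current_text, current_lang, pivot)
        current_lang = pivot
    # The final hop back into the original language yields the paraphrase.
    return translate(current_text, current_lang, source)


# A fake translator that only records the hops, to show the call order.
hops: List[Tuple[str, str]] = []


def recording_translator(text: str, source: str, target: str) -> str:
    hops.append((source, target))
    return f"{text} [{source}->{target}]"


print(back_translate("شكرا على المساعدة", recording_translator, "ar", ["en"]))
print(back_translate("شكرا على المساعدة", recording_translator, "ar", ["en", "fr"]))
print(hops)
```

The recorded hops are ar→en→ar for the single-pivot call and ar→en→fr→ar for the two-pivot call; swapping in a real translation model only requires a function with the same three-argument signature.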

2.2. Studies Within Open-Domain

Dealing with the complexity of open-domain conversational context, such as live chat, presents a significant challenge for models. Unlike closed-domain conversations with a chatbot, these conversations are more informal and have a denser context covering multiple topics. In [29], the authors used a dataset of 4967 open-domain spoken conversations between Amazon Alexa users and their conversational agent, Roving Mind, to investigate whether it is feasible to learn evaluation models without any annotations beyond the conversation rating. This dataset is unbalanced and rated from 1 to 5. The researchers developed a Support Vector Regression (SVR) model for automatic dialogue evaluation. The resulting root mean square error (RMSE) of 1.350 underscores the difficulty of estimating subjective user ratings in open-domain conversations.

The user conversation rating is not the only indicator for predicting customer satisfaction. The authors of [30] evaluated customer satisfaction directly from system logs, without manual annotation, using self-reported satisfaction surveys in a supervised classification paradigm. At the end of each conversation, the survey asked clients how likely they would be, based on their contact with customer service, to recommend the company to family or friends; this question reflects general appreciation rather than satisfaction with specific aspects such as the quality of explanations, support, advice, or solutions. The authors compared different classification schemes for classifying customers as promoters, passives, or detractors. The highest accuracy with the three-label scheme was 57.5%, with a macro-averaged F1-score of 46.2%, obtained using a convolutional neural network (CNN) model. The chat conversations for this experiment, 79,476 in total, were extracted from the contact center logs of Orange customer services.

Predicting customer satisfaction from an unbalanced dataset is common in the literature. Ref. [31] developed a Paradise model to predict the performance of Athena’s dialogue system, which had conducted thousands of conversations with real users. The authors used their corpus of 32,235 Athena conversations, unevenly distributed over a five-point rating scale. They applied two metrics of conversation quality, user ratings and conversation length, and experimented with estimating these metrics using system-dependent and system-independent automatic features. User ratings proved difficult to predict: the best model, based on DistilBERT, achieved an R² of 0.135. In contrast, the best model for predicting conversation length, a random forest, achieved an R² of 0.862.

Some researchers apply a two-step approach to estimate the client rating. For instance, [7] proposed a counterfactual long short-term memory network (CF-LSTM) approach that predicts ratings from turn-level features using multiple regressors and then projects these features to the dialogue level; it achieved an accuracy of 48.2% on 128,000 open-domain conversations gathered from interactions with an Alexa Prize SocialBot. In [32], the researchers developed a customer satisfaction estimation approach in which an LSTM generated sentiment scores for each user utterance in a conversation; these embeddings were then used by regression models to estimate overall customer satisfaction at the conversation level. Using 6308 unannotated conversations from the Alexa Prize SocialBot data, the proposed bidirectional LSTM (BiLSTM)-based temporal regression model achieved a Spearman’s correlation of 0.2366, outperforming a static SVR model.
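Several of the studies above report regression-style metrics rather than accuracy: RMSE in [29], R² in [31], and Spearman’s correlation in [32]. The sketch below shows, in plain Python, how each is computed from true and predicted ratings; the toy ratings are invented, and the formulas are the standard definitions rather than code from the cited studies.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted ratings."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman(x, y):
    """Spearman's correlation is Pearson's correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


true_ratings = [1, 5, 3, 4, 2]
predicted = [2, 4, 3, 5, 1]
print(rmse(true_ratings, predicted), r2(true_ratings, predicted),
      spearman(true_ratings, predicted))
```

For these toy ratings every prediction is off by at most one point, giving an RMSE of about 0.894, an R² of 0.6, and a Spearman’s correlation of 0.8; RMSE is in rating units, whereas the other two are unitless.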

2.3. Studies Within Closed-Domain

Most studies that classify user opinions based on conversations between clients and customer support use datasets with specific topics, such as movies or phone services. In [20], the authors proposed an objective and automatic approach that uses sentiment scores as a proxy for measuring satisfaction with chatbot service encounters. They used five open-source and commercial sentiment analysis methods through APIs: AFINN and VADER, which depend on sentiment lexicons, and the sentiment analysis methods of IBM, Microsoft, and Google, which rely on machine learning algorithms such as support vector machines or Naive Bayes. AFINN and VADER are lexicons attuned to sentiment in microblogging, such as on the X platform (formerly Twitter) [48,49]. The authors tested for a possible correlation between the sentiment scores obtained from the five methods and the chatbot service encounter satisfaction reported in a survey during an experiment. The experiment produced the ExpCorpus dataset, a task-oriented dataset about finding the most appropriate mobile phone plan. Using Pearson’s correlation, they found a strong positive correlation between sentiment scores and chatbot service encounter satisfaction for IBM’s method and a moderate positive correlation for the other four methods (AFINN, VADER, and the methods of Microsoft and Google). In [28], the authors proposed a Multi-round Long Dialogue Sentiment Prediction model with Multidimensional Attention (MLDSPMA), which merges local and global attention to predict whether a conversation’s sentiment is negative, neutral, or positive. The model achieved an accuracy of 46.77% on the KdConv dataset, which covers topics involving music, travel, and movies.

Experts may be involved in the data preparation phase of rating classification for user opinions. For instance, in [24], the researchers proposed a user satisfaction estimation approach that jointly predicts turn-level response quality labels annotated by experts and conversation-level ratings self-reported by end users. The proposed BiLSTM-based model weighs the contribution of each turn to the estimated conversation-level rating. In binary classification over 2133 conversations sampled from 24 Alexa domains, the BiLSTM-based model with an attention mechanism reached an F-score of 71.07% for the dissatisfactory class.

No public conversational dataset is available for the Arabic language. One effort to create a public English-based dataset was made by [21], who proposed a user satisfaction annotation dataset called User Satisfaction Simulation (USS). It contains 6800 conversations from different domains, including e-commerce and movie recommendation conversations and task-oriented dialogues constructed through Wizard-of-Oz experiments, and it is based on five existing datasets: CCPE, JDDC, MultiWOZ 2.1, ReDial, and SGD. A BERT-based model used to estimate the ratings of task-oriented conversation systems achieved the best cross-domain generalization, whereas a hierarchical Gated Recurrent Unit (HiGRU)-based model performed best for in-domain user satisfaction estimation on CCPE, with an F1-score of 27.4% for the dissatisfactory class. The CCPE dataset consists of 500 unbalanced text-based conversations.

Likewise, the authors of [27] used an unbalanced dataset to evaluate customer satisfaction, with the aim of improving service quality. Their methodology involved pre-processing, feature extraction, and classification using a support vector machine (SVM). The best result, an accuracy of 74.3%, was achieved by classifying 2364 recordings from the KONECTADB dataset into satisfied and unsatisfied customers using the articulation feature set. Their data consisted of voice recordings of customers’ opinions about the service provided through call center agents. Another study [22] used an unbalanced dataset with its proposed schema-guided user satisfaction modeling (SG-USM) approach, which predicts the user’s satisfaction level by modeling the degree to which the user’s preferences concerning the task attributes are fulfilled. In experiments on benchmark datasets, the model achieved 64.7% accuracy on the SGD dataset.

Besides the English datasets, researchers in [25] constructed a dataset of Chinese customer service conversations from an e-commerce platform. They annotated all utterances with sentiment labels and used the dataset with their proposed Context-Assisted Multiple Instance Learning (CAMIL) model for service satisfaction analysis. The approach achieved a macro-averaged F1-score of 78.6% and an accuracy of 78.5% on the Makeup dataset of 3540 balanced conversations. Furthermore, [26] used two unbalanced Japanese recorded call datasets, one acted and one real, classified as positive, neutral, or negative. The authors created a Hierarchical Multi-Task (HMT) model based on long short-term memory recurrent neural networks to capture contextual information. On the real dataset of 391 conversations, HMT with bidirectional LSTM-RNN (BLSTM) and unidirectional LSTM (ULSTM) achieved an accuracy of 74% and a macro-averaged F1-score of 57.1% for conversation-level estimation.

Datasets can also be annotated with dialogue acts, which indicate the purpose the speaker intends to achieve with their words [50], to help estimate customer satisfaction. For instance, [23] proposed a framework that estimates user satisfaction in goal-oriented conversational systems by jointly learning dialogue act recognition and user satisfaction estimation. The best user satisfaction estimation performance within this framework was achieved by the USDA (CLU) model, which jointly learns user satisfaction estimation and dialogue act identification through a latent subspace clustering network. USDA (CLU) reached an accuracy of 65.1% on a subset of the JDDC dataset consisting of 3300 Chinese e-commerce conversations classified as dissatisfied, neutral, or satisfied. In [18], the authors examined the feasibility of predicting user intent and satisfaction with movie recommendations by comparing multiple machine learning methods; their system integrates dialogue behavior features to predict user satisfaction with the recommendations. For user satisfaction prediction on an unbalanced set of 336 conversations selected from the ReDial movie recommendation dataset and classified as satisfactory or unsatisfactory, leveraging dialogue behavior features enabled a Multilayer Perceptron (MLP) classifier to achieve competitive results: a precision of 89.90%, a recall of 56.81%, and an F1-score of 68.84%. Unlike the authors of [18], the authors of [8] used the entire ReDial dataset, classified into three classes, with their proposed estimator, sAtisfaction eStimation via HAwkes Process (ASAP), in which a Hawkes process models the dynamics of user satisfaction across interaction turns. ASAP predicted user ratings of these closed-domain conversations with an accuracy of 66.0% on ReDial.

2.4. Summary

The classification of conversation ratings can take different forms: binary, three-class, five-class, and seven-class. Classifying conversations based on user opinion into five or more classes is more fine-grained than classifying them into two or three classes, as it helps to organize and differentiate the data [51]. Most studies on predicting customer satisfaction from conversations classify the conversations into three classes and use small closed-domain datasets, mainly in English. Although over 300 million people speak Arabic as a first language, no Arabic conversational datasets were found [52]. Additionally, text-based conversational data receive more attention than voice-based data. Finally, no study was found in the literature that uses data augmentation to develop models predicting conversation ratings reported by end users. The previous studies are summarized in Table 1.

Table 1.

Summary of results from studies related to customer satisfaction based on conversational datasets.

3. Methodology

This section describes the dataset, the relevant methods, and the approach proposed in this study for classifying customer satisfaction ratings of customer service conversations using original and augmented Arabic conversational data.

3.1. Dataset

This study used Arabic text-based conversations, each rated by the customer on a five-class scale, from the live customer support (JMCS) service of Jeddah Municipality. The conversations took place between customers and real agents and concern city management-related queries. They were extracted from the call center database of Jeddah Municipality for the period from 6 October 2019 to 11 January 2024. Each entry in the dataset consists of personal data of the customer and service provider, the conversation between them, the duration of the conversation, the rating, and messages, interleaved with the responses, that indicate the reason for each disconnection. A conversation can be disconnected because the service provider or the customer intentionally closed it or because of a sudden technical error. The dataset contains 4005 conversations, distributed across classes as follows: 1504 in class 1 (poor), 144 in class 2 (fair), 190 in class 3 (average), 322 in class 4 (good), and 1845 in class 5 (excellent). The training set comprises 80% of the data (3204 conversations), and the test set comprises 20% of each class (801 conversations in total).
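As a quick check of these figures, the sketch below recomputes the per-class split. It assumes, since the section does not say, that each class’s test share is 20% rounded to the nearest whole conversation; under that assumption the per-class test sizes are 301, 29, 38, 64, and 369, which reproduce the reported totals of 3204 training and 801 test conversations. It also reports the ratio between the largest and smallest classes, which illustrates the imbalance that motivates data augmentation.

```python
# Class counts of the JMCS dataset as reported above.
CLASS_COUNTS = {1: 1504, 2: 144, 3: 190, 4: 322, 5: 1845}
TEST_FRACTION = 0.2


def stratified_sizes(counts, test_fraction):
    """Per-class (train, test) sizes; rounding each class's share is an assumption."""
    sizes = {}
    for label, n in counts.items():
        n_test = round(n * test_fraction)
        sizes[label] = (n - n_test, n_test)
    return sizes


sizes = stratified_sizes(CLASS_COUNTS, TEST_FRACTION)
n_train = sum(train for train, _ in sizes.values())
n_test = sum(test for _, test in sizes.values())
imbalance_ratio = max(CLASS_COUNTS.values()) / min(CLASS_COUNTS.values())
print(sizes, n_train, n_test, round(imbalance_ratio, 2))
```

The largest class (excellent, 1845 conversations) is about 12.8 times the size of the smallest (fair, 144 conversations).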

3.2. Relevant Methods

Two methods were used in this study to develop and test prediction models: training from scratch, with approaches based on logistic regression (LR) [10], random forest (RF) [11], and ensemble-based deep learning (EDL) [12], and fine-tuning recent pre-trained models, namely ArabicT5 [13] and SaudiBERT [53]. The approaches trained from scratch showed improved results in previous studies of rating classification on the widely known Arabic benchmark dataset HARD [10,11,12]. In [10], the authors proposed the HARD dataset, which is suitable for opinion mining applications. It consists of 409,562 unbalanced hotel reviews, each associated with a rating from one to five and written in standard and colloquial Arabic, including punctuation, emojis, and emoticons. The authors compared multiple models, and the highest accuracy, 76.1%, was obtained by the LR approach with bag-of-words features for both unigrams and bigrams combined with TF-IDF weighting. In [11], the study developed shallow and deep learning models for opinion mining without extracting any features. The RF model reached an accuracy of 74.2% in predicting the five ratings on a balanced version of the HARD dataset, with precision, recall, and F1-score of 74.0%. In [12], the study improved five-level rating classification using the EDL approach on the HARD dataset. The authors used multiple resources, including the AraSenTi lexicon [54], a list of negation words, and a list of booster words, to extract the following features: the numbers of positive words, negative words, negation words, booster words, repeated characters, question marks, exclamation marks, periods, and commas, as well as the emotions score, the total weight of positive words, and the total weight of negative words. They then developed an ensemble of three classifiers based on CNN, BiLSTM, and CNN-BiLSTM with FastText embeddings. To incorporate the extracted features, they combined the ensemble model’s predictions with these features using an MLP classifier. They achieved an accuracy of 76.58% on the balanced dataset and 77.75% on the unbalanced dataset.
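The unigram-plus-bigram TF-IDF features used by the LR approach in [10] can be illustrated with the short sketch below. It is not the cited implementation: whitespace tokenization is an assumption, and the weighting uses the smoothed inverse document frequency log((1 + N) / (1 + df)) + 1 followed by L2 normalization, which is the default scheme of scikit-learn’s `TfidfVectorizer`.

```python
import math
from collections import Counter


def ngrams(tokens, sizes=(1, 2)):
    """Unigrams and bigrams; a bigram is two adjacent tokens joined by a space."""
    grams = []
    for n in sizes:
        grams.extend(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return grams


def tfidf(documents):
    """Return one {n-gram: weight} dictionary per document."""
    counts = [Counter(ngrams(doc.split())) for doc in documents]
    n_docs = len(documents)
    # Document frequency: in how many documents each n-gram occurs.
    df = Counter(gram for doc_counts in counts for gram in doc_counts)
    idf = {gram: math.log((1 + n_docs) / (1 + d)) + 1 for gram, d in df.items()}
    vectors = []
    for doc_counts in counts:
        weights = {gram: tf * idf[gram] for gram, tf in doc_counts.items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({gram: w / norm for gram, w in weights.items()})
    return vectors


docs = ["الخدمة ممتازة", "الخدمة سيئة جدا"]
for vector in tfidf(docs):
    print(vector)
```

The n-gram shared by both documents ("الخدمة", "the service") receives a lower weight than the distinctive ones, and bigrams such as "سيئة جدا" ("very bad") let a linear model such as LR see short phrases rather than isolated words.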

Table 2 details the differences in the data cleaning and normalization steps used in each compared study for the LR, RF, and EDL approaches. The EDL approach has the advantage of handling emojis and emoticons, which it replaces with their equivalent meanings, and of removing emails and URLs. In contrast, the RF approach has the advantage of removing repeated letters.

Table 2.

Comparison of pre-processing steps within the data cleaning and normalization between the three approaches.

3.3. Proposed Approach

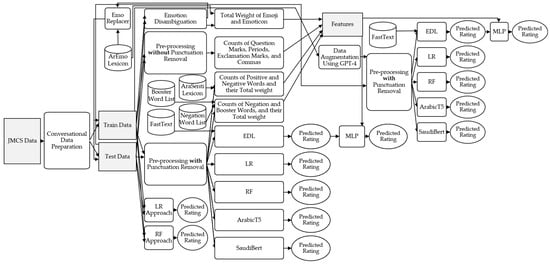

The study proposes a multi-faceted approach, consisting of several experiments, to select the optimal model for classifying customer satisfaction ratings of customer service conversations in the JMCS dataset. The approach starts by extracting the following information from each conversation: the responses exchanged between the service provider and the client, the rating, and conversational features. These features are the duration of the conversation, counters indicating who ended the conversation as recorded by the live chat (the service provider, the customer, or a technical error), and the numbers of client and service provider responses. They were extracted to explore whether they would affect performance positively or negatively. To divide the data into training and testing sets, the authors did not use the common train_test_split function to split the data randomly anew for each developed model. The issue with that practice is that, unless the same random seed is fixed, each new split moves some of the previous model’s training data into the test set and some of its test data into the training set, so the models are not evaluated on the same unseen data. Instead, this study assigned a random number to each data entry in a new column, sorted the entries by these numbers, and then divided them into training and testing data stored in two separate, isolated files. The test data were never involved in the training process and were used only to evaluate each model after it had been developed using the training data; they were therefore treated as unseen data. Figure 1 illustrates the use of training and testing data in the conducted experiments and the main processes of the methodology.
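The splitting procedure described above can be sketched as follows. The function names, the `rating` column, the fixed seed, and the per-class selection (which follows the 20%-per-class split stated in Section 3.1) are assumptions made for illustration; the essential point is that the split is computed once and written to two files, so every model is trained and tested on exactly the same partition.

```python
import csv
import os
import random
import tempfile


def split_by_random_key(rows, label_key, test_fraction, seed):
    """Give each row a random key, sort by it, and send the first
    test_fraction of every class to the test set."""
    rng = random.Random(seed)
    keyed = [(rng.random(), row) for row in rows]
    keyed.sort(key=lambda pair: pair[0])  # sort on the random key only

    by_class = {}
    for _, row in keyed:
        by_class.setdefault(row[label_key], []).append(row)

    train, test = [], []
    for class_rows in by_class.values():
        n_test = round(len(class_rows) * test_fraction)
        test.extend(class_rows[:n_test])
        train.extend(class_rows[n_test:])
    return train, test


def write_rows(path, rows, fieldnames):
    """Write one split to its own CSV file (UTF-8 keeps Arabic text intact)."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)


# Placeholder rows: 100 conversations, 20 per rating class.
rows = [{"id": i, "rating": 1 + i % 5, "text": f"conversation {i}"} for i in range(100)]
train, test = split_by_random_key(rows, "rating", 0.2, seed=2024)
with tempfile.TemporaryDirectory() as folder:
    write_rows(os.path.join(folder, "train.csv"), train, ["id", "rating", "text"])
    write_rows(os.path.join(folder, "test.csv"), test, ["id", "rating", "text"])
print(len(train), len(test))
```

Once written, the test file is only read at evaluation time, which keeps it isolated from every training run regardless of how many models are developed.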

Figure 1.

A multi-faceted approach for choosing the model for the JMCS dataset.

Training and testing for all approaches were done for three class arrangements: predicting five, three, and two classes. For the five-class task, the rating values were used unchanged, because the original data already had five ratings. For the three- and two-class tasks, ratings one and two were merged into an unsatisfactory class, and ratings four and five were merged into a satisfactory class. The three-class task used these unsatisfactory and satisfactory classes plus rating three as a neutral class. For the two-class task, conversations rated three were removed, because this task distinguishes only unsatisfactory from satisfactory conversations.
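The rating-to-class mapping can be expressed as a small function. In this sketch the label strings and the names `map_rating` and `relabel` are hypothetical. Only the merging rules come from the text.

```python
from typing import Iterable, List, Optional, Tuple

def map_rating(rating: int, n_classes: int) -> Optional[str]:
    """Map an original 1-5 rating to a label for the 5-, 3-, or 2-class task.

    Returns None when the conversation is excluded (rating 3 in the 2-class task).
    """
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError(f"rating must be 1-5, got {rating}")
    if n_classes == 5:
        return str(rating)                  # ratings are used unchanged
    if rating <= 2:
        label = "unsatisfactory"            # ratings 1 and 2 merged
    elif rating >= 4:
        label = "satisfactory"              # ratings 4 and 5 merged
    else:
        label = "neutral"                   # rating 3
    if n_classes == 3:
        return label
    if n_classes == 2:
        return None if label == "neutral" else label
    raise ValueError(f"n_classes must be 5, 3, or 2, got {n_classes}")

def relabel(rows: Iterable[Tuple[str, int]], n_classes: int) -> List[Tuple[str, str]]:
    """Relabel (text, rating) pairs, dropping conversations excluded from the task."""
    out = []
    for text, rating in rows:
        label = map_rating(rating, n_classes)
        if label is not None:
            out.append((text, label))
    return out
```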

Then, the five models (LR, RF, EDL, ArabicT5, and SaudiBERT) were developed and compared using the same pre-processing steps. Only the three models that are not fine-tuned pre-trained models (LR, RF, and EDL) have their own pre-processing steps. The authors therefore replaced the pre-processing steps of LR and RF with those of the EDL approach, because the EDL model achieved higher performance. The EDL model was trained with learning_rate = 0.001, epochs = 25, and batch_size = 128. After this comparison, LR and RF were also trained and tested on the JMCS data with their own pre-processing steps to see whether their performance would change. These experiments were run for all three class arrangements: predicting two, three, and five ratings.

Applying the EDL approach required generating emoji and emoticon lexicons for each of the two-, three-, and five-class datasets, and then using multiple existing resources to generate text-based features. The generated ArEmo lexicon is used with the Emo replacer algorithm to replace every emoji and emoticon in the text with its meaning. Before this step, the text is prepared with the emoticon disambiguation algorithm, which removes enclosing brackets and uniform resource locators (URLs) so that fragments of them are not mistaken for all or part of an emoticon. For the same reason, the ArEmo lexicon was used to calculate the total weight of emojis and emoticons only after the emoticon disambiguation algorithm had been applied. The AraSenti lexicon was applied to count positive and negative words separately and to compute their total weights. The lists of booster and negation words were used to count booster and negation words. Finally, the data were pre-processed without removing punctuation in order to count four further features: question marks, periods, exclamation marks, and commas.
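The sketch below illustrates emoticon disambiguation, emoticon replacement, emoticon weighting, and punctuation counting. It is not the authors' Emo replacer or disambiguation algorithm. The five-entry lexicon and its weights are hypothetical stand-ins for ArEmo, and the regular expressions are simplifying assumptions. Counting the Arabic question mark (؟) and comma (،) alongside their Latin forms is also an assumption. The test string shows why the order of the steps matters: without disambiguation, the bracket closing ‘(order 12)’ is read as part of the sad face ‘) :’.

```python
import re
from typing import Dict, Tuple

# Hypothetical mini-lexicon: symbol -> (meaning, weight). The real ArEmo lexicon
# maps each symbol to an Arabic meaning and a rating-dependent emotion score.
EMO_LEXICON: Dict[str, Tuple[str, float]] = {
    ":)": ("happy", 1.0),
    ":(": ("sad", -1.0),
    ": (": ("sad", -1.0),
    ") :": ("sad", -1.0),  # right-to-left variant of the sad face
    "😊": ("happy", 1.0),
}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
# A bracket pair around plain text such as "(order 12)"; emoticon brackets are left alone.
ENCLOSED_RE = re.compile(r"\((\w[^()]*\w|\w)\)")
# Try longer symbols first so ": (" wins over shorter overlapping symbols.
EMO_RE = re.compile("|".join(re.escape(s) for s in sorted(EMO_LEXICON, key=len, reverse=True)))

def disambiguate(text: str) -> str:
    """Drop URLs and enclosing brackets so they cannot be read as emoticons."""
    text = URL_RE.sub(" ", text)
    return ENCLOSED_RE.sub(r"\1", text)

def replace_emos(text: str) -> str:
    """Replace each lexicon symbol with its meaning."""
    return EMO_RE.sub(lambda m: " " + EMO_LEXICON[m.group(0)][0] + " ", text)

def emo_weight(text: str) -> float:
    """Sum the weights of all lexicon symbols found in the text."""
    return sum(EMO_LEXICON[m.group(0)][1] for m in EMO_RE.finditer(text))

def punctuation_counts(text: str) -> Dict[str, int]:
    """Count question marks, periods, exclamation marks, and commas (Latin and Arabic forms)."""
    return {
        "question": text.count("?") + text.count("؟"),
        "period": text.count("."),
        "exclamation": text.count("!"),
        "comma": text.count(",") + text.count("،"),
    }
```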

The complete pre-processing steps were applied to the data used to train and test the five models. The study implemented the same EDL model architecture as in [12]: an ensemble of three models (CNN, BiLSTM, and CNN-BiLSTM) with FastText embeddings. The prediction from the ensemble model, together with a text-based feature, a conversational feature, or all features, is fed to an MLP classifier to train and test the EDL-MLP model. In this study, the conversational features were tested in the EDL-MLP model for the first time.
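One plausible way to assemble the MLP input is sketched below. It assumes that each of the three base models (CNN, BiLSTM, and CNN-BiLSTM) outputs a class-probability vector per conversation, and that the chosen features are appended to the concatenated probabilities. The function name, the feature names, and the concatenation scheme are assumptions, and the exact stacking used in [12] may differ. The MLP itself is not shown.

```python
from typing import Dict, List, Sequence

def build_mlp_inputs(
    base_probs: Sequence[Sequence[Sequence[float]]],
    extra_features: Dict[str, Sequence[float]],
    selected: Sequence[str],
) -> List[List[float]]:
    """Concatenate each base model's class probabilities with the selected features, row by row."""
    if not base_probs:
        raise ValueError("at least one base model is required")
    n_rows = len(base_probs[0])
    if any(len(p) != n_rows for p in base_probs):
        raise ValueError("all base models must score the same conversations")
    rows = []
    for i in range(n_rows):
        vector: List[float] = []
        for model_probs in base_probs:      # e.g. CNN, BiLSTM, CNN-BiLSTM
            vector.extend(model_probs[i])
        for name in selected:               # text-based or conversational features
            vector.append(float(extra_features[name][i]))
        rows.append(vector)
    return rows
```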

Regarding the outlier removal step of the EDL approach, the LR, RF, and EDL models did not require shortening the JMCS texts; only ArabicT5 and SaudiBERT did. For these two models, the average character length of the responses was computed (763 characters), and all characters beyond this length were manually removed from the middle of each conversation. The authors chose the middle because they noticed that the most critical sentiments appeared at the beginning and end of each conversation, whereas the middle part contained details of the customer's request.
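The middle trimming was done manually in the study. The function below is a hypothetical automated equivalent. It assumes the 763-character budget is split evenly between the start and the end of the conversation, which the section does not specify.

```python
def trim_middle(text: str, max_len: int = 763) -> str:
    """Keep the beginning and end of a long conversation, dropping characters from the middle."""
    if max_len < 0:
        raise ValueError("max_len must be non-negative")
    if len(text) <= max_len:
        return text
    head = (max_len + 1) // 2          # characters kept from the start
    tail = max_len - head              # characters kept from the end
    # Slice from len(text) - tail rather than -tail so that tail == 0 keeps nothing.
    return text[:head] + text[len(text) - tail:]
```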

Training and testing of the five models (LR, RF, EDL, ArabicT5, and SaudiBERT) for predicting two, three, and five ratings were repeated after applying data augmentation through paraphrasing with generative artificial intelligence, GPT-4, to reduce the class imbalance in the customer conversation data. When augmented data are used for training, testing is done only on the original test data, because test data should remain unseen, actual original data [55]. To prepare an augmented dataset for each class arrangement (two, three, or five classes), the authors first compared the number of samples for each rating. They then took the size of the largest class as the target and increased every other class to that size. For instance, the largest class in the five-class training data was rating 5, with 1476 samples. The data of every other class were therefore increased by repeatedly generating paraphrases of its rows until the class reached 1476 samples. Table 3 shows the detailed data distribution used in this study's experiments.
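The balancing loop can be sketched as follows. The `paraphrase` argument is a hypothetical stand-in for a GPT-4 paraphrasing call; the section does not describe the prompt or the client code. Only the training rows are passed in, so the original test set stays untouched.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Dict, List

Row = Dict[str, object]

def augment_to_majority(train_rows: List[Row], paraphrase: Callable[[str], str]) -> List[Row]:
    """Top up every rating class to the size of the largest class with paraphrased rows."""
    counts = Counter(row["rating"] for row in train_rows)
    target = max(counts.values())
    augmented = list(train_rows)            # keep all original rows first
    for rating, n in counts.items():
        originals = [row for row in train_rows if row["rating"] == rating]
        source = cycle(originals)           # take the class's rows in turn, repeatedly
        for _ in range(target - n):
            src = next(source)
            augmented.append({"text": paraphrase(str(src["text"])), "rating": rating})
    return augmented
```

Cycling through each class's original rows spreads the paraphrases across all of them. A real generator would return a different paraphrase on each call, whereas a deterministic stub produces repeats.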

Table 3.

Data distribution before and after augmentation for the three rating ranges: one to five, one to three, and one to two.

The models were developed on Google Colab with Python 3.8. The EDL approach was run with the runtime shape set to High-RAM, ArabicT5 and SaudiBERT required an A100 GPU with High-RAM, and the LR and RF approaches ran on the CPU. To evaluate the models' performance, the study used the classification_report function from scikit-learn (sklearn), which reports accuracy, precision, recall, and F1-score. In addition, the Matplotlib library was used to plot the confusion matrices, and the Seaborn library was used to render them as heatmaps.

4. Results and Discussion

The EDL approach required generating a lexicon for each of the five-, three-, and two-class datasets. The three lexicons generated from the original data contained identical entries except for the emotion scores, so they were merged, as shown in Table 4. Examining the merged lexicon, the study found that customers do not use Eastern emoticons, also called vertical emoticons, such as ‘(^_^)’, in their conversations. The study compared the emojis and emoticons in the ArEmo lexicon, whose majority sentiment is negative, with the rated conversations, whose majority sentiment is positive. This comparison showed that unsatisfied customers use pictorial symbols to express their views on the service more than satisfied customers do. The study also found that Arabs may write the same Western emoticon differently from Westerners. This difference reflects the right-to-left direction of Arabic script, as opposed to the left-to-right direction of English. For instance, a Western sad face normally depicts the eyes with a colon on the left and the mouth with an opening bracket, as ‘:(’, or with a space between the eyes and mouth, as ‘: (’. Arabs either write the sad face the same way or place the colon on the right and depict the mouth with a closing bracket instead of an opening bracket, as ‘) :’.
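The right-to-left variant described above is the horizontal mirror image of the Western emoticon: reverse the characters and swap each bracket for its mirror. The helper below sketches how such variants could be added to an emoticon lexicon. It is a hypothetical illustration, not part of the ArEmo construction described in the section.

```python
from typing import Dict

# Mirror-image pairs for the characters that form the mouth of bracket-style emoticons.
_MIRROR = str.maketrans({"(": ")", ")": "(", "[": "]", "]": "["})

def mirror(emoticon: str) -> str:
    """Return the right-to-left form of a bracket-style emoticon, e.g. ': (' -> ') :'."""
    return emoticon[::-1].translate(_MIRROR)

def add_rtl_variants(lexicon: Dict[str, str]) -> Dict[str, str]:
    """Add the mirrored form of each entry, keeping any existing entry unchanged."""
    extended = dict(lexicon)
    for symbol, meaning in lexicon.items():
        extended.setdefault(mirror(symbol), meaning)
    return extended
```

The rule only suits emoticons whose mouth is a bracket. Mirroring ‘:D’ gives ‘D:’, which conventionally expresses dismay rather than joy, so entries of that kind would need to be checked by hand.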

Table 4.

A sample from the ArEmo lexicon that displays emotion scores according to ratings.

Table 5 shows the results of the five models (LR, RF, EDL, ArabicT5, and SaudiBERT) developed with the data cleaning and normalization methods of the EDL approach on the original data. In Table 5 and the following tables, A, P, R, F1, WA, MA, AraT5, and SaBert denote accuracy, precision, recall, F1-score, weighted average, macro average, ArabicT5, and SaudiBERT, respectively. All values are percentages. For imbalanced data, where the class distribution is skewed, the weighted average is more relevant because it accounts for the different contribution of each class and thus gives an overview of overall model performance [56]. The macro average is the arithmetic mean of a per-class metric (precision, recall, or F1-score) [57]. The weighted average is computed the same way except that each class is weighted by its number of samples, which gives a more accurate value when class sizes differ widely [56,58].
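A short pure-Python computation makes the difference between the macro and weighted averages concrete. It follows the per-class definitions behind the ‘macro avg’ and ‘weighted avg’ rows of scikit-learn's classification_report, and treats undefined ratios as 0.0, which matches that function's default zero_division behavior. It is an illustrative sketch, not the code used in the study. The function name and the toy labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

Triple = Tuple[float, float, float]  # (precision, recall, F1)

def prf_report(y_true: Sequence, y_pred: Sequence) -> Tuple[Dict, Triple, Triple, float]:
    """Per-class P/R/F1 plus macro average, support-weighted average, and accuracy."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)
    per_class = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = (precision, recall, f1)
    n = len(y_true)
    # Macro average: unweighted mean over classes.
    macro = tuple(sum(m[i] for m in per_class.values()) / len(labels) for i in range(3))
    # Weighted average: each class weighted by its support (number of true samples).
    weighted = tuple(sum(per_class[c][i] * support[c] for c in labels) / n for i in range(3))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return per_class, macro, weighted, accuracy
```

Each class's recall is weighted by its own support, so the weighted-average recall in such a report always matches accuracy.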

Table 5.

Comparison of the five models for predicting user satisfaction using the original data.

Overall, the EDL approach achieved the highest performance of the five models in all three classification settings (five, three, and two ratings), compared with the other four models (LR, RF, ArabicT5, and SaudiBERT). No single model achieved the lowest performance in all settings. For predicting five ratings, the EDL model improved accuracy by 10.76% over the next-best model, LR. For three ratings, it improved accuracy by 7.62% over ArabicT5, and for two ratings by 1.20% over SaudiBERT. These are relative increases, computed as |Old value − New value| / Old value × 100 [59]. The EDL model also achieved the highest accuracy and the highest weighted-average precision, recall, and F1-score in all three settings.
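The reported increases are relative changes rather than differences in percentage points. The sketch below shows the distinction with illustrative values that are not taken from Table 5. The function names are hypothetical.

```python
def relative_change_pct(old: float, new: float) -> float:
    """|old - new| / old * 100: the relative increase or decrease used in this section."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return abs(old - new) / old * 100

def point_difference(old: float, new: float) -> float:
    """Plain difference in percentage points, shown for contrast."""
    return new - old
```

For example, a rise in accuracy from 60% to 66% is a 10% relative increase but only a 6-point difference.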

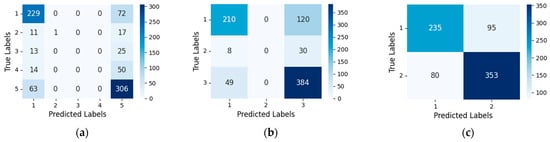

Figure 2 gives a more detailed assessment, visualizing the confusion matrices for predicting the different numbers of rating classes with the original data. The color bar to the right of each confusion matrix shows the scale of the cell counts. For a given rating, precision above 50% means that most conversations predicted as that rating actually have it, so false positives (the off-diagonal cells for that predicted rating) are few. Likewise, recall above 50% means that the model correctly identifies most conversations that have that rating, so false negatives (the off-diagonal cells for that true rating) are few [60]. Notably, the model predicts every rating class only in the two-class setting. There, the negative and positive classes are close in size, so the predictions are not biased toward a majority class. The authors observed that prediction on small, imbalanced data is more effective when the difference in the amount of text data between classes is not too large. Furthermore, pre-trained language models such as SaudiBERT and ArabicT5 do not guarantee better performance than traditional NLP models on a small dataset, a finding also reported in [61].
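The pure-Python sketch below shows how to read false positives and false negatives off a confusion matrix. It follows scikit-learn's layout, in which rows are true labels and columns are predicted labels. The function names and toy labels are hypothetical. The `never_predicted` helper flags classes whose predicted column is empty, which is how a model that makes no predictions for some classes appears in a matrix.

```python
from typing import Hashable, List, Sequence, Tuple

def confusion_matrix(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                     labels: Sequence[Hashable]) -> List[List[int]]:
    """Rows are true labels and columns are predicted labels (scikit-learn's layout)."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def errors_for_class(matrix: List[List[int]], k: int) -> Tuple[int, int]:
    """Return (false positives, false negatives) for the class at index k."""
    n = len(matrix)
    fp = sum(matrix[r][k] for r in range(n) if r != k)  # predicted k, truly another class
    fn = sum(matrix[k][c] for c in range(n) if c != k)  # truly k, predicted another class
    return fp, fn

def never_predicted(matrix: List[List[int]], labels: Sequence[Hashable]) -> List[Hashable]:
    """Labels whose predicted-label column is all zeros, i.e. the model never chose them."""
    return [label for k, label in enumerate(labels) if all(row[k] == 0 for row in matrix)]
```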

Figure 2.

Visualization of the confusion matrices for predicting five, three, and two ratings using JMCS original data. (a) Classification of five ratings; (b) Classification of three ratings; (c) Classification of two ratings.

To examine whether the lower results of the LR and RF models relative to the EDL model were caused by the cleaning and normalization pre-processing used, both LR and RF were developed again for all three rating classifications. This time they used the pre-processing methods of [10,11] and the same original data, and Table 6 shows the results. Compared with Table 5, where LR and RF used the pre-processing phase of the EDL approach, the results decreased with the pre-processing methods of [10,11]. Accuracy dropped by 17.76%, 16.66%, and 4.25% for predicting five, three, and two ratings with LR, and by 6.71%, 4.79%, and 4.96% with RF. These decreases were calculated with the same formula as the increases [62]. Accordingly, the study ruled out the possibility that the EDL approach's pre-processing steps negatively affected these models.

Table 6.

Results of predicting customer satisfaction using LR and RF approaches with their pre-processing methods and original data.

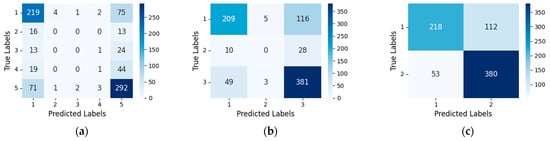

It should be noted that training with augmented data does not necessarily improve results [63]. Indeed, as Table 7 shows, the five models (LR, RF, EDL, ArabicT5, and SaudiBERT) were developed and tested again on augmented instead of original data for all three class arrangements. Accuracy did not improve, except for a 1.69% improvement with the EDL model and a 3.84% improvement with the LR model. These results indicate that the effect of augmented data on model performance is not uniform and may vary with the model type and the nature of the data. Notably, the augmented training sets of the models that improved over training on original data contain no class with more augmented samples than original samples.

Table 7.

Comparison of five models for predicting user satisfaction using augmented data.

Interestingly, the models behave differently with original and augmented data. The models trained on original data (Figure 2) made no predictions for some classes. By contrast, the models trained on augmented data predicted all rating classes, as depicted in the confusion matrices in Figure 3.

Figure 3.

Visualization of the confusion matrices for predicting five, three, and two ratings using augmented data from JMCS. (a) Classification of five ratings; (b) Classification of three ratings; (c) Classification of two ratings.

However, the models remain weak on ratings two, three, and four when classifying five ratings, as shown in Figure 2a, and on class two when classifying three ratings, as shown in Figure 2b. The cause is that customers rated only a limited number of conversations with these values, which makes it difficult for the models to learn the characteristics of these classes. When rating conversations on a scale from one to five, customers tend to be either extremely satisfied (rating the service as excellent) or highly unsatisfied (rating it as poor).

The classes with the fewest original samples, as shown in Table 2, are those in which augmented samples outnumber original ones. Their augmented data are therefore less varied, because most generated samples are near-synonymous paraphrases that are very close in meaning, and this hampers model learning. The opposite holds for classes with more original than augmented samples, which is the case for the highest and lowest classes in each data distribution. Their data are more varied, better reflect reality, and contribute to better predictions.
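The condition discussed above can be checked before augmentation: does a class end up with more augmented than original samples when every class is topped up to the largest one? In this sketch the class counts are hypothetical. The `threshold` parameter is an illustrative knob for the kind of per-class limit that is left to future work.

```python
from typing import Dict, List

def synthetic_ratios(class_counts: Dict[str, int]) -> Dict[str, float]:
    """Synthetic-to-original ratio per class when every class is topped up to the largest one."""
    if any(n <= 0 for n in class_counts.values()):
        raise ValueError("every class needs at least one original sample")
    target = max(class_counts.values())
    return {label: (target - n) / n for label, n in class_counts.items()}

def classes_dominated_by_synthetic(class_counts: Dict[str, int], threshold: float = 1.0) -> List[str]:
    """Classes whose synthetic samples would exceed threshold times their original samples."""
    return sorted(label for label, r in synthetic_ratios(class_counts).items() if r > threshold)
```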

Accordingly, because customers have tended over the years to choose either excellent or poor ratings, the most robust setting for model prediction is the two-class task: satisfactory versus unsatisfactory. However, establishing a threshold on the minimum and maximum acceptable difference between the original and augmented data sizes within each class would require many further experiments and is left for future work.

Experiments with eighteen text-based and conversational features, and with all features combined, used EDL models trained on the original data. None of these features improved the results when fed individually to the combined MLP model, as presented in Table 8. The features were the following:

- the number of positive words, negative words, negation words, booster words, repeated characters, question marks, exclamation marks, periods, and commas;
- the emotion score;
- the total weight of positive words and the total weight of negative words;
- the duration of the conversation;
- counters for the number of client and service provider (SP) responses;
- three counters for who caused the connection to be cut during the conversation: the service provider, the customer, or a technical error.

Using each feature in isolation was better than using all features together, similar to [12]. Most features achieved the same highest accuracy: 66.79%, 74.16%, and 77.06% for the five-, three-, and two-class tasks, respectively. Only the duration feature generally led to the lowest performance among the individually used features. This is probably because of its weak relationship with the rating, as indicated by the Pearson correlation coefficient between these two variables. In Table 8, the hash symbol (#) denotes ‘number of’.
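The Pearson correlation coefficient mentioned above is the covariance of two variables divided by the product of their standard deviations. A minimal pure-Python version follows. The intended use is on per-conversation durations and ratings, but the function name and the toy inputs are illustrative. On Python 3.10 and later, statistics.correlation computes the same coefficient.

```python
import math
from typing import Sequence

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)
```

A coefficient near 0, as in the last test case, indicates the kind of weak linear relationship the text attributes to duration and rating.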

Table 8.

Results of the EDL model with different applied features using original data.

Training with original data and features gave no improvement. With augmented data, by contrast, the performance of the EDL model in predicting five ratings improved with each of five features, as illustrated in Table 9: the weight of positive words, the number of exclamation marks, the number of connections cut due to technical error, the number of service provider responses, and the total number of responses from both the client and the service provider. The remaining features did not improve the results. The highest of these accuracies, 64.42%, was obtained with the number of service provider responses and with the total number of responses. This is an increase of 0.78% over the EDL model trained on augmented data without any features. Even so, for predicting five or three ratings, using original data without features still outperformed using augmented data with or without features. Predicting two ratings with the EDL model on augmented data was different, as explained earlier: it was the most effective setting for predicting customer satisfaction across all results from all models. Therefore, when the available original data for opinion prediction have a highly skewed class distribution, the authors recommend reducing the number of predicted classes by merging the positive classes and merging the negative classes. This allows the EDL model to predict these classes better and improves overall performance after GPT-4 data augmentation is applied to the conversational data.

Table 9.

Results of the EDL model with different applied features using augmented data.

5. Conclusions and Future Work

The literature on predicting customer satisfaction ratings from Arabic conversational data indicates that this field of study is still in its infancy. This study used data mainly related to city management queries to serve digital governance and help improve the customer support services of organizations and businesses in cities. It presented an approach that employs data augmentation to build a model that can be integrated into customer support services to provide an automatic rating feature. In practice, this feature can help decision-makers improve customer satisfaction. This initiative aligns with the smart city goal of enhanced service delivery and ultimately improves the quality of life for residents.

The EDL model consistently outperformed the other models in predicting customer satisfaction ratings on five-, three-, and two-rating scales, with both original and augmented data. It also proved robust, showing consistently high performance on two datasets with different sizes, domains, and characteristics: the one used in this study and the one used in [12]. This ability to work well with different datasets suggests that it can handle various data distributions and may perform effectively on new data in the future. Other theoretical implications include new perspectives on the Arabic linguistic context and an effective automatic method for predicting customer satisfaction in customer support chat services, which adds to the literature and advances Arabic machine learning.

Future directions for predicting customer satisfaction using Arabic conversational data include the following suggestions:

- Collect and publicly release text-based, balanced, non-acted Arabic conversational data with at least five rating levels, gathered from one or more customer support services, after removing personal and private data while keeping emojis and emoticons.

- Develop an EDL-MLP model using conversational datasets in a language other than Arabic. This requires the following changes: replace the AraSenti lexicon with a word lexicon for the target language; replace the FastText pre-trained embeddings with embeddings for the target language; create a list of booster and negation words for the target language if none exists; and skip the Arabic-specific part of the pre-processing phase. Note also that the conversational features available in JMCS may be absent from other datasets. Their availability depends on the online chat software used for customer support, as not all services support these features.

- Have experts re-rate all the Arabic conversations in the selected dataset, then compare two models on test data rated by users: one trained on expert-rated data and one trained on user-rated data.

- Predict customer satisfaction using Arabic conversational data with additional models, data, and features.

Author Contributions

Conceptualization, R.F.A.-M. and A.Y.A.-A.; methodology, R.F.A.-M. and A.Y.A.-A.; software, R.F.A.-M.; validation, R.F.A.-M. and A.Y.A.-A.; formal analysis, R.F.A.-M. and A.Y.A.-A.; investigation, R.F.A.-M. and A.Y.A.-A.; resources, R.F.A.-M. and A.Y.A.-A.; data curation, R.F.A.-M.; writing—original draft, R.F.A.-M.; writing—review and editing, R.F.A.-M. and A.Y.A.-A.; visualization, R.F.A.-M.; supervision, A.Y.A.-A.; project administration, R.F.A.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Due to privacy concerns, the data used in this study is available upon request from the Jeddah Municipality.

Acknowledgments

The authors express their sincere gratitude to Jeddah Municipality, its General Supervisor of Digital Transformation, Yasser Mohammed Alshareef, and Halah Ali Dan for their generous support in supplying the data for this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gupta, S.; Alharbi, F.; Alshahrani, R.; Arya, P.K.; Vyas, S.; Elkamchouchi, D.H.; Soufiene, B.O. Secure and Lightweight Authentication Protocol for Privacy Preserving Communications in Smart City Applications. Sustainability 2023, 15, 5346.

- Żywiołek, J.; Schiavone, F. Perception of the Quality of Smart City Solutions as a Sense of Residents’ Safety. Energies 2021, 14, 5511.

- Burity, J. The Importance of Logistics Efficiency on Customer Satisfaction. J. Mark. Dev. Compet. 2021, 15, 26–35.

- Ghoumrassi, A.; Țigu, G. The impact of the logistics management in customer satisfaction. Proc. Int. Conf. Bus. Excell. 2017, 11, 292–301.

- Czinkota, M.R.; Kotabe, M.; Vrontis, D.; Shams, S.M.R. Direct Marketing, Sales Promotion, and Public Relations. In Marketing Management; Springer Nature: Cham, Switzerland, 2021; pp. 607–647.

- Battineni, G.; Chintalapudi, N.; Amenta, F. AI Chatbot Design during an Epidemic like the Novel Coronavirus. Healthcare 2020, 8, 154.

- Le, C.P.; Dai, L.; Johnston, M.; Liu, Y.; Walker, M.; Ghanadan, R. Improving Open-Domain Dialogue Evaluation with a Causal Inference Model. arXiv 2023, arXiv:2301.13372.

- Ye, F.; Hu, Z.; Yilmaz, E. Modeling User Satisfaction Dynamics in Dialogue via Hawkes Process. arXiv 2023, arXiv:2305.12594.

- Lu, Y.; Huang, C.; Zhan, H.; Zhuang, Y. Federated Natural Language Generation for Personalized Dialogue System. arXiv 2021, arXiv:2110.06419.

- Elnagar, A.; Khalifa, Y.S.; Einea, A. Hotel Arabic-reviews dataset construction for sentiment analysis applications. In Intelligent Natural Language Processing: Trends and Applications; Springer: Cham, Switzerland, 2018; pp. 35–52.

- Nassif, A.B.; Darya, A.M.; Elnagar, A. Empirical evaluation of shallow and deep learning classifiers for Arabic sentiment analysis. Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 21, 1–25.

- Al-Mutawa, R.F.; Al-Aama, A.Y. User Opinion Prediction for Arabic Hotel Reviews Using Lexicons and Artificial Intelligence Techniques. Appl. Sci. 2023, 13, 5985.

- Arabic Text Classification Using Deep Learning (ArabicT5). 2024. Available online: https://huggingface.co/Hezam/ArabicT5_Classification (accessed on 25 February 2024).

- SaudiBERT. 2024. Available online: https://huggingface.co/faisalq/SaudiBERT (accessed on 19 May 2024).

- GPT-4 Is OpenAI’s Most Advanced System, Producing Safer and More Useful Responses. 2023. Available online: https://openai.com/index/gpt-4/ (accessed on 27 April 2024).

- Behera, R.K.; Bala, P.K.; Ray, A. Cognitive chatbot for personalised contextual customer service: Behind the scene and beyond the hype. Inf. Syst. Front. 2024, 26, 899–919.

- Mendez, J.A.; Geramifard, A.; Ghavamzadeh, M.; Liu, B. Assistant Reinforcement Learning of Multi-Domain Dialog Policies via Action Embeddings. arXiv 2022, arXiv:2207.00468.

- Cai, W.; Chen, L. Predicting User Intents and Satisfaction with Dialogue-based Conversational Recommendations. In UMAP 2020, Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization, Genoa, Italy, 12–18 July 2020; Association for Computing Machinery, Inc.: New York, NY, USA, 2020; pp. 33–42.

- Spiliotopoulos, D.; Kotis, K.; Vassilakis, C.; Margaris, D. Semantics-Driven Conversational Interfaces for Museum Chatbots. In Culture and Computing. HCII 2020; Rauterberg, M., Ed.; Springer: Cham, Switzerland, 2020; Volume 12215, pp. 255–266.

- Feine, J.; Morana, S.; Gnewuch, U. Measuring Service Encounter Satisfaction with Customer Service Chatbots using Sentiment Analysis. In Proceedings of the Internationale Tagung Wirtschaftsinformatik (WI2019), Siegen, Germany, 24–27 February 2019; p. 1115.

- Sun, W.; Zhang, S.; Balog, K.; Ren, Z.; Ren, P.; Chen, Z.; de Rijke, M. Simulating User Satisfaction for the Evaluation of Task-oriented Dialogue Systems. In SIGIR 2021, Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event Canada, 11–15 July 2021; Association for Computing Machinery, Inc.: New York, NY, USA, 2021; pp. 2499–2506.

- Feng, Y.; Jiao, Y.; Prasad, A.; Aletras, N.; Yilmaz, E.; Kazai, G. Schema-Guided User Satisfaction Modeling for Task-Oriented Dialogues. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 2079–2091.

- Deng, Y.; Zhang, W.; Lam, W.; Cheng, H.; Meng, H. User Satisfaction Estimation with Sequential Dialogue Act Modeling in Goal-Oriented Conversational Systems. In WWW’22, Proceedings of the ACM Web Conference 2022, Lyon France, 25–29 April 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 2998–3008.

- Bodigutla, P.K.; Tiwari, A.; Matsoukas, S.; Valls-Vargas, J.; Polymenakos, L. Joint Turn and Dialogue level User Satisfaction Estimation on Multi-Domain Conversations. In Findings of the Association for Computational Linguistics: EMNLP 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 3897–3909.

- Song, K.; Bing, L.; Gao, W.; Lin, J.; Zhao, L.; Wang, J.; Sun, C.; Liu, X.; Zhang, Q. Using customer service dialogues for satisfaction analysis with context-assisted multiple instance learning. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing (EMNLP 2019), Hong Kong, China, 3–7 November 2019; p. 198.

- Ando, A.; Masumura, R.; Kamiyama, H.; Kobashikawa, S.; Aono, Y.; Toda, T. Customer Satisfaction Estimation in Contact Center Calls Based on a Hierarchical Multi-Task Model. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 715–728.

- Parra-Gallego, L.F.; Orozco-Arroyave, J.R. Classification of emotions and evaluation of customer satisfaction from speech in real world acoustic environments. Digit. Signal Process. 2022, 120, 103286.

- Yin, Y.; Zou, C.; Yuan, Z.; Bao, X. MLDSP-MA: Multidimensional Attention for Multi-Round Long Dialogue Sentiment Prediction. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 11405–11414.

- Cervone, A.; Gambi, E.; Tortoreto, G.; Stepanov, E.A.; Riccardi, G. Automatically Predicting User Ratings for Conversational Systems. CEUR Workshop Proc. 2018, 2253, 99–104.

- Auguste, J.; Charlet, D.; Damnati, G.; Béchet, F.; Favre, B. Can We Predict Self-reported Customer Satisfaction from Interactions? In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7385–7389.

- Walker, M.A.; Harmon, C.; Graupera, J.; Harrison, D.; Whittaker, S. Modeling Performance in Open-Domain Dialogue with PARADISE. arXiv 2021, arXiv:2110.11164.

- Kim, Y.; Levy, J.; Liu, Y. Speech Sentiment and Customer Satisfaction Estimation in Socialbot Conversations. Proc. Interspeech 2020, 1833–1837.

- Pellicer, L.F.A.O.; Ferreira, T.M.; Costa, A.H.R. Data augmentation techniques in natural language processing. Appl. Soft Comput. 2023, 132, 109803.

- Ashraf, M.T.; Dey, K.; Mishra, S. Identification of high-risk roadway segments for wrong-way driving crash using rare event modeling and data augmentation techniques. Accid. Anal. Prev. 2023, 181, 106933.

- Peng, S.; Qu, D.; Zhang, W.L.; Zhang, H.; Li, S.; Xu, M. Easy and Effective! Data Augmentation for Knowledge-Aware Dialogue Generation Via Multi-Perspective Sentences Interaction. Neurocomputing 2025, 614, 128724.

- Sujana, Y.; Kao, H.Y. LiDA: Language-Independent Data Augmentation for Text Classification. IEEE Access 2023, 11, 10894–10901.

- Muaad, A.Y.; Raza, S.; Heyat, M.B.B.; Alabrah, A. An Intelligent COVID-19-Related Arabic Text Detection Framework Based on Transfer Learning Using Context Representation. Int. J. Intell. Syst. 2024, 2024, 8014111.

- Lin, S.; Zhao, B.; Zhan, Y.; Yu, J.; Bian, X.; Li, D. Non-intrusive residential load identification based on load feature matrix and CBAM-BiLSTM algorithm. Front. Energy Res. 2024, 12, 1443700.

- Islam, R.; Imran, A.; Rabbi, M.F. Prostate Cancer Detection from MRI Using Efficient Feature Extraction with Transfer Learning. Prostate Cancer 2024, 2024, 1588891.

- Zhang, Z.; Poguda, A. Research on the Development of Data Augmentation Techniques in the Field of Machine Translation. Int. J. Open Inf. Technol. 2023, 11, 33–40.

- Azam, U.; Rizwan, H.; Syed, A.K.; Ali, B. Exploring Data Augmentation Strategies for Hate Speech Detection in Roman Urdu. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2022; pp. 4523–4531. Available online: https://aclanthology.org/2022.lrec-1.481 (accessed on 3 June 2023).

- Li, G.; Wang, H.; Ding, Y.; Zhou, K.; Yan, X. Data augmentation for aspect-based sentiment analysis. Int. J. Mach. Learn. Cybern. 2023, 14, 125–133. [Google Scholar] [CrossRef]

- Li, S.; Wang, Y.; Hu, H.; Ding, K.; Wang, Z.; Na, C. Daerbt: An Easy-to-Use and Effective Data Augmentation Method for Chinese Financial Textual Resources. SSRN Electron. J. 2023. [Google Scholar] [CrossRef]

- Ganganwar, V.; Rajalakshmi, R. MTDOT: A Multilingual Translation-Based Data Augmentation Technique for Offensive Content Identification in Tamil Text Data. Electronics 2022, 11, 3574. [Google Scholar] [CrossRef]

- Razaq, A.; Halim, Z.; Rahman, A.U.; Sikandar, K. Identification of paraphrased text in research articles through improved embeddings and fine-tuned BERT model. Multimed. Tools Appl. 2024, 83, 74205–74232. [Google Scholar] [CrossRef]

- Le, K.M.; Pham, T.; Quan, T.; Luu, A.T. LAMPAT: Low-Rank Adaption for Multilingual Paraphrasing Using Adversarial Training. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 18435–18443. [Google Scholar]

- Sweidan, A.H.; El-Bendary, N.; Elhariri, E. Autoregressive Feature Extraction with Topic Modeling for Aspect-based Sentiment Analysis of Arabic as a Low-resource Language. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 1–18. [Google Scholar] [CrossRef]

- Kim, J.; Lim, C. Customer complaints monitoring with customer review data analytics: An integrated method of sentiment and statistical process control analyses. Adv. Eng. Inform. 2021, 49, 101304. [Google Scholar] [CrossRef]

- Chakraborty, K.; Bhatia, S.; Bhattacharyya, S.; Platos, J.; Bag, R.; Hassanien, A.E. Sentiment Analysis of COVID-19 tweets by Deep Learning Classifiers—A study to show how popularity is affecting accuracy in social media. Appl. Soft Comput. 2020, 97, 106754. [Google Scholar] [CrossRef] [PubMed]

- Licea-Haquet, G.L.; Reyes-Aguilar, A.; Alcauter, S.; Giordano, M. The Neural Substrate of Speech Act Recognition. Neuroscience 2021, 471, 102–114. [Google Scholar] [CrossRef] [PubMed]

- Babu, N.V.; Kanaga, E.G.M. Sentiment Analysis in Social Media Data for Depression Detection Using Artificial Intelligence: A Review. SN Comput. Sci. 2021, 3, 74. [Google Scholar] [CrossRef]

- El Idrysy, F.Z.; Hourri, S.; El Miqdadi, I.; Hayati, A.; Namir, Y.; Ncir, B.; Kharroubi, J. Unlocking the language barrier: A Journey through Arabic machine translation. Multimed. Tools Appl. 2024. [Google Scholar] [CrossRef]

- Tursunova, M. The Importance of Teaching English as a Second Language. Mod. Sci. Res. 2024, 3, 196–199. [Google Scholar]

- Al-Twairesh, N.; Al-Khalifa, H.; AlSalman, A. AraSenTi: Large-scale twitter-specific Arabic sentiment lexicons. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Volume 1, pp. 697–705.

- Farady, I.; Lin, C.Y.; Chang, M.C. PreAugNet: Improve data augmentation for industrial defect classification with small-scale training data. J. Intell. Manuf. 2024, 35, 1233–1246.

- Harnadi, B.; Widiantoro, A.D. Evaluating the Performance and Accuracy of Supervised Learning Models on Sentiment Analysis of E-Wallet. In Proceedings of the 2023 7th International Conference on Information Technology (InCIT), Chiang Rai, Thailand, 16–17 November 2023; pp. 175–180.

- Suryavanshi, A.; Mehta, S.; Jain, A.; Thapliyal, S.; Hariharan, S. Deep Learning Dermoscopy: Unveiling CNN-SVM Synergy in Skin Lesion Detection. In Proceedings of the 2023 4th International Conference on Intelligent Technologies (CONIT), Bangalore, India, 21–23 June 2024; pp. 1–5.

- Banerjee, D.; Sharma, N.; Upadhyay, D.; Singh, V. Hybrid CNN-RF Model for Accurate Casting Defect Forecasting. In Proceedings of the 2024 Asia Pacific Conference on Innovation in Technology (APCIT), Mysore, India, 26–27 July 2024; pp. 1–6.

- Al-Juboori, S.A.; Albtoosh, M. Improving the mechanical properties of conventional materials by nano-coating, Part-1. Mater. Sci. Non-Equilib. Phase Transform. 2019, 5, 112–119.

- Asif, M.; Al-Razgan, M.; Ali, Y.A.; Yunrong, L. Graph convolution networks for social media trolls detection use deep feature extraction. J. Cloud Comput. 2024, 13, 33.

- Yang, E.; Li, M.D.; Raghavan, S.; Deng, F.; Lang, M.; Succi, M.D.; Huang, A.J.; Kalpathy-Cramer, J. Transformer versus traditional natural language processing: How much data is enough for automated radiology report classification? Br. J. Radiol. 2023, 96, 20220769.

- Caluag, R.J.L.; Gervacio, A.G.M.B.; Juco, A.P.M.T.; Santos, I.M.M.; Oabel, N.A.A.; Aniano, S.M.; Amores, W., III. Antihyperglycemic Effect of Combined Pomelo (Citrus maxima) and Banana (Musa × paradisiaca L.) Peel Extract Against Induced Diabetic Sprague Dawley Rats. Res. Arch. Rising Sch. 2023.

- Jahan, M.S.; Oussalah, M.; Beddia, D.R.; Arhab, N. A Comprehensive Study on NLP Data Augmentation for Hate Speech Detection: Legacy Methods, BERT, and LLMs. arXiv 2024, arXiv:2404.00303.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).