Individual Differences in Multisensory Interactions: The Influence of Temporal Phase Coherence and Auditory Salience on Visual Contrast Sensitivity

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

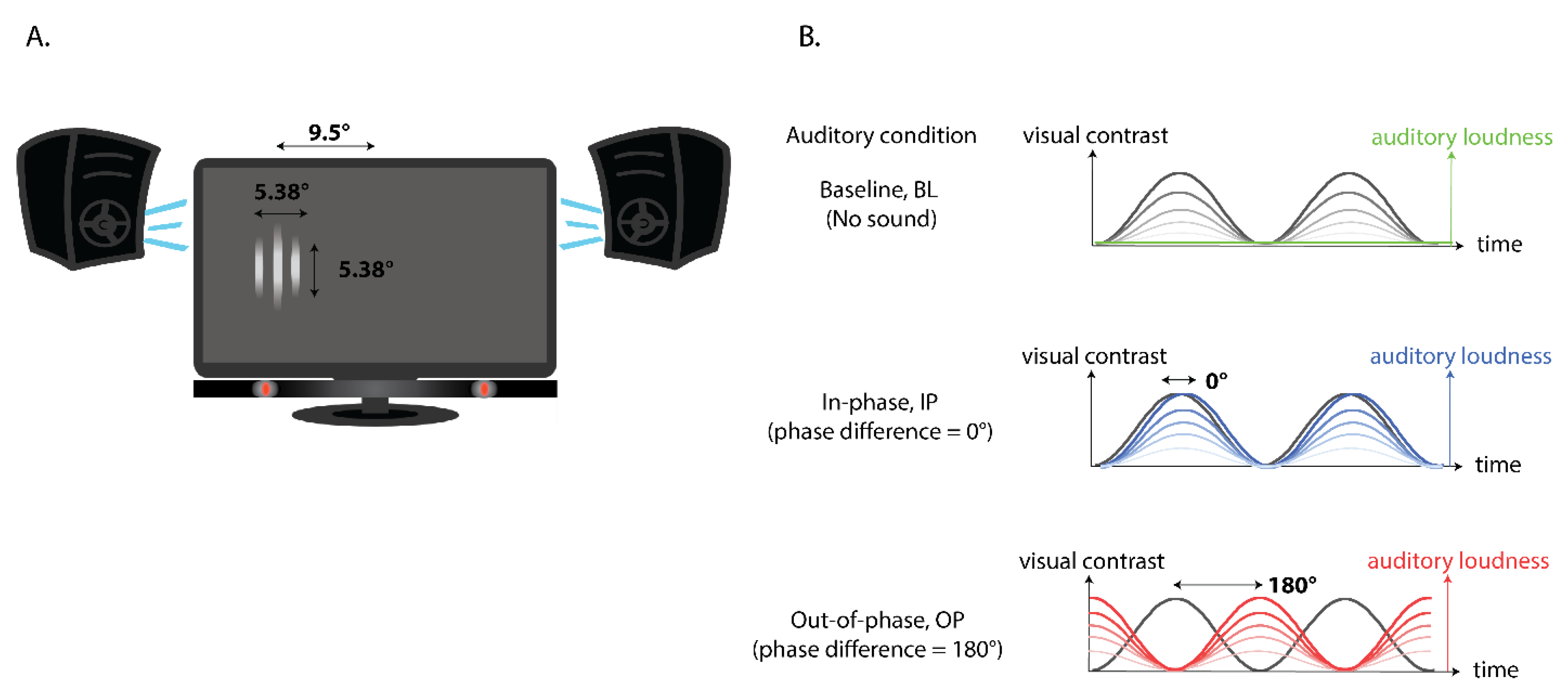

2.2. Apparatus and Stimuli

2.3. Procedure

2.4. Data Analysis

3. Results

3.1. Data Exclusion

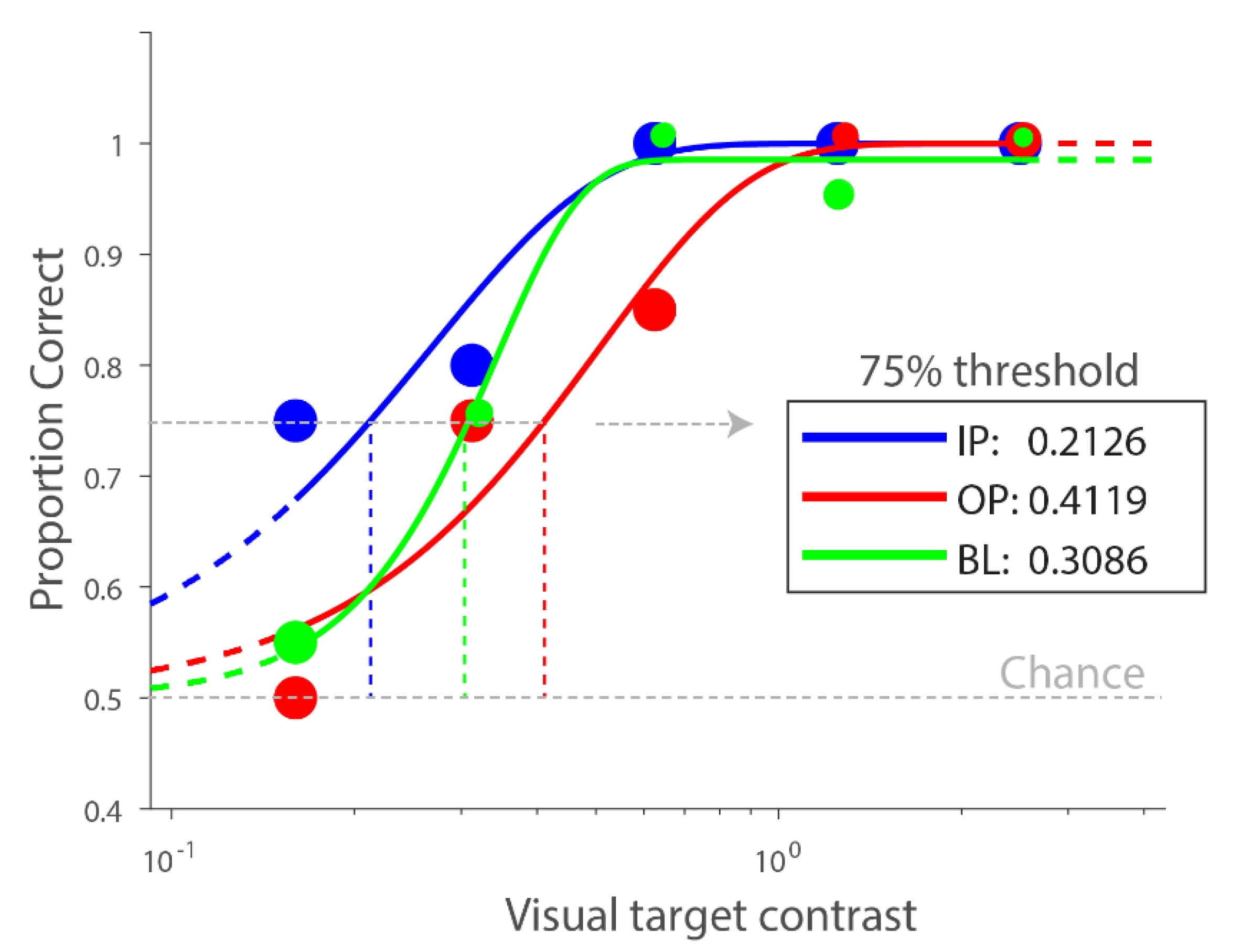

3.2. Multisensory Interactions without Accounting for Individual Differences

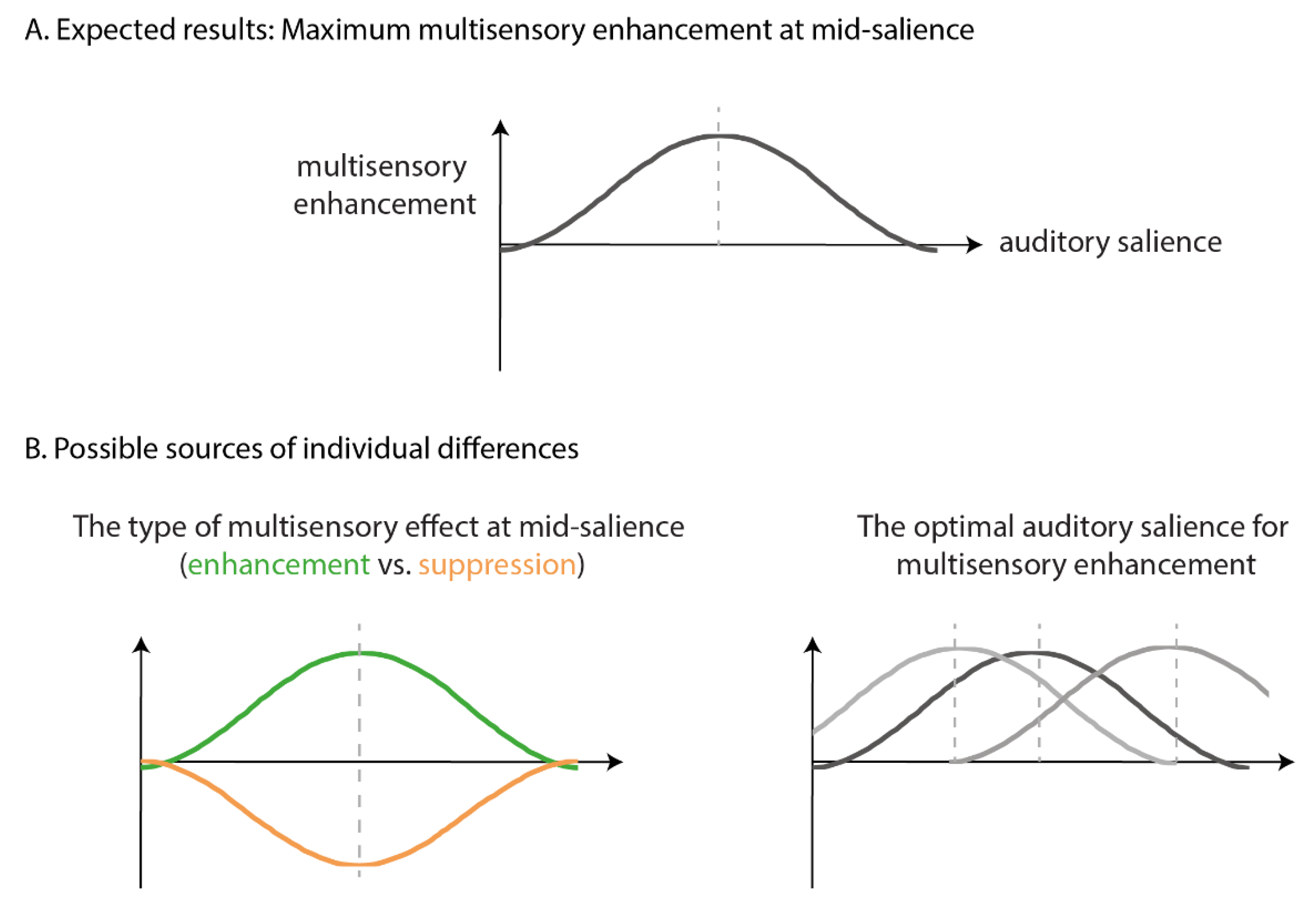

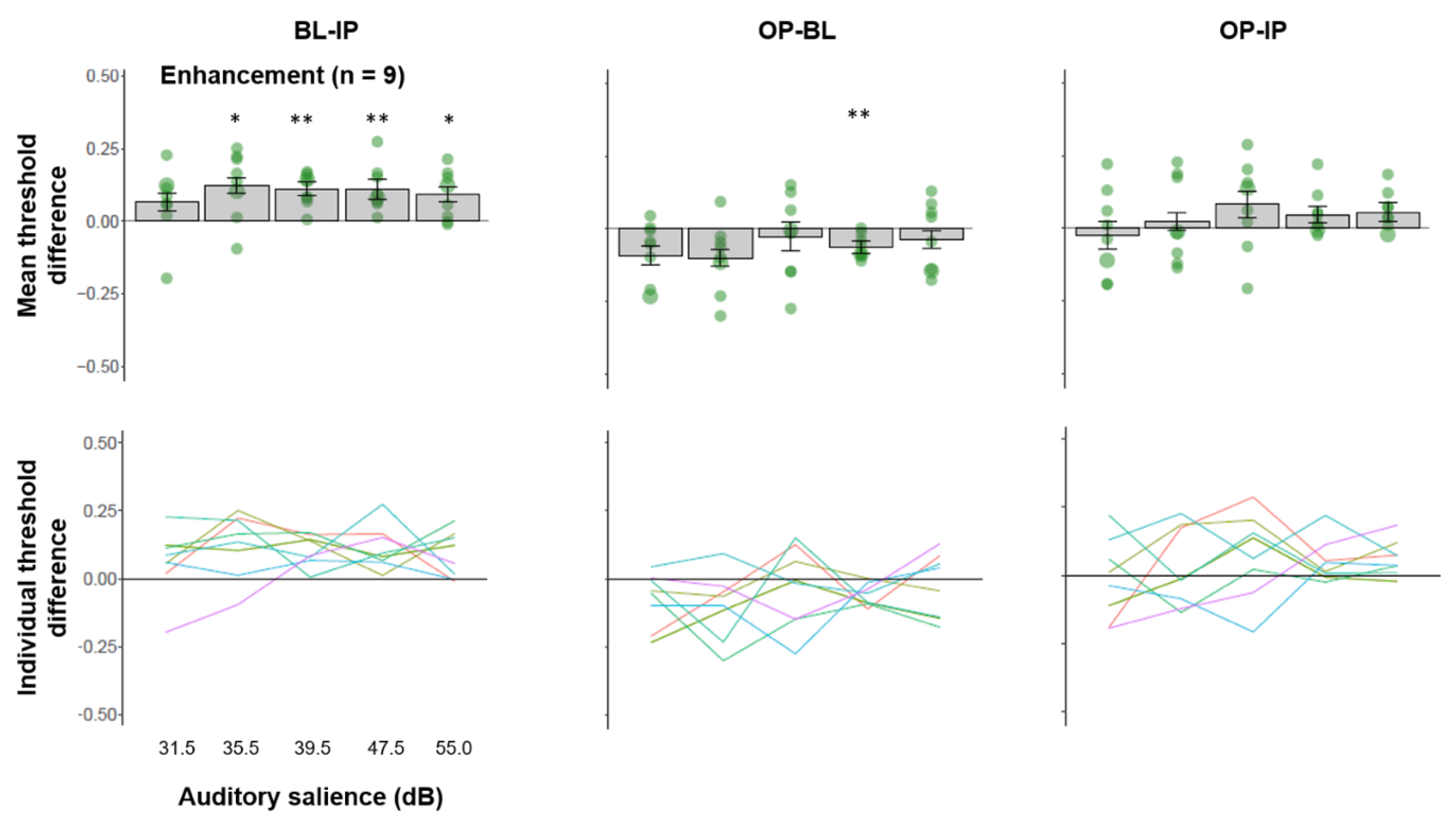

3.3. Multisensory Interactions in Individuals Showing Facilitated Visual Processing by IP Sound

3.4. Multisensory Interactions in Individuals Showing Suppressed Visual Processing by IP Sound

3.5. Multisensory Interactions Accounting for Individual Differences in Optimal Auditory Salience

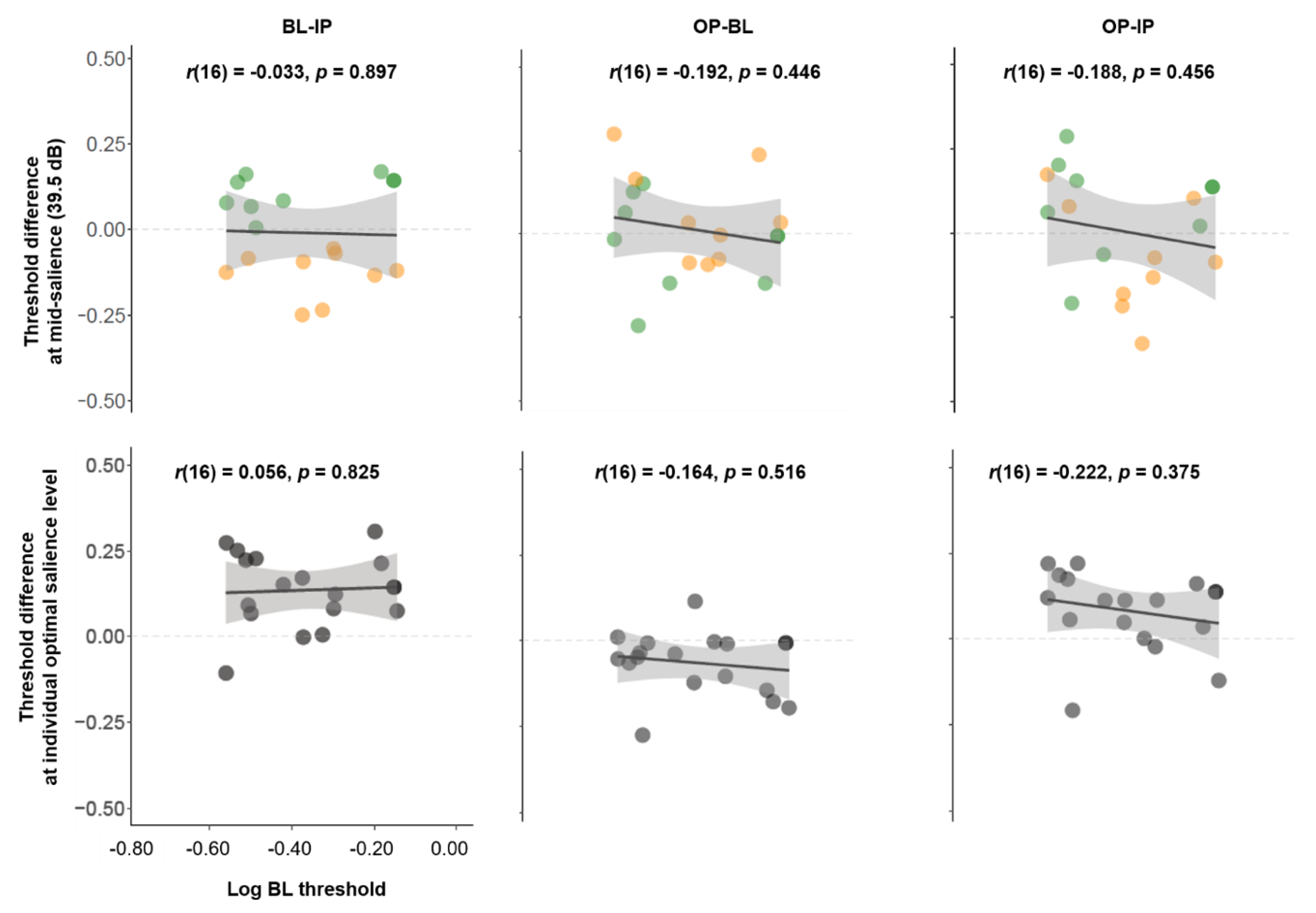

3.6. The Relationship between Unisensory Visual Performance and Multisensory Effects

4. Discussion

4.1. Individual Differences in Multisensory Enhancement

4.2. Underlying Mechanisms of Multisensory Enhancement

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Data Availability


| Optimal Salience (Salience with Greatest BL-IP Threshold Difference) | 31.5 dB | 35.5 dB | 39.5 dB | 47.5 dB | 55.0 dB |
|---|---|---|---|---|---|
| Number of participants | 5 | 4 | 3 | 2 | 4 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chow, H.M.; Leviyah, X.; Ciaramitaro, V.M. Individual Differences in Multisensory Interactions: The Influence of Temporal Phase Coherence and Auditory Salience on Visual Contrast Sensitivity. Vision 2020, 4, 12. https://doi.org/10.3390/vision4010012
