Abstract

With the development of science and technology, many real-world optimization problems have become high-dimensional. Meta-heuristic optimization algorithms are regarded as an effective way to solve such problems. However, traditional meta-heuristic algorithms generally suffer from low solution accuracy and slow convergence on high-dimensional problems. To address this, this paper proposes an adaptive dual-population collaborative chicken swarm optimization (ADPCCSO) algorithm, which offers a new approach to high-dimensional optimization. First, to balance the algorithm's search abilities in breadth and depth, the value of parameter G is set by an adaptive dynamic adjustment method. Second, a foraging-behavior-improvement strategy is used to improve the algorithm's solution accuracy and depth-optimization ability. Third, the artificial fish swarm algorithm (AFSA) is introduced to construct a dual-population collaborative optimization strategy based on chicken swarms and artificial fish swarms, which improves the algorithm's ability to escape local extrema. Simulation experiments on 17 benchmark functions preliminarily show that the ADPCCSO algorithm outperforms several swarm-intelligence algorithms, such as AFSA, the artificial bee colony (ABC) algorithm, and the particle swarm optimization (PSO) algorithm, in terms of solution accuracy and convergence performance. In addition, the ADPCCSO algorithm is applied to the parameter estimation problem of the Richards model to further verify its performance.

1. Introduction

High-dimensional optimization problems generally refer to problems of high complexity with many dimensions (more than 100). They are often non-linear and highly complex. Many real-life problems can be expressed as high-dimensional optimization problems, such as large-scale job-shop scheduling [1], vehicle routing [2], feature selection [3], satellite autonomous observation mission planning [4], economic environmental dispatch [5], and parameter estimation. As the dimension of such problems increases, the performance of optimization algorithms often degrades sharply, making the global optimal solution extremely difficult to obtain. This poses a technical challenge for many practical problems. Therefore, the study of high-dimensional optimization problems has important theoretical and practical significance [6,7].

The meta-heuristic optimization algorithm is a class of random search algorithms proposed by simulating biological intelligence in nature [8], and has been successfully applied in various fields, such as the Internet of Things [9], network information systems [10,11], multi-robot space exploration [12], and so on. At present, hundreds of algorithms have emerged, such as the particle swarm optimization (PSO) algorithm, the artificial bee colony (ABC) algorithm, the artificial fish swarm algorithm (AFSA), the bacterial foraging algorithm (BFA), the grey wolf optimizer (GWO) algorithm, and the sine cosine algorithm (SCA) [13]. These algorithms have become effective methods for solving high-dimensional optimization problems because of their simple structure and strong exploration and exploitation abilities. For example, Huang et al. proposed a hybrid optimization algorithm by combining the frog’s leaping optimization algorithm with the GWO algorithm and verified the performance of the algorithm on 10 high-dimensional complex functions [14]. Gu et al. proposed a hybrid genetic grey wolf algorithm for solving high-dimensional complex functions by combining the genetic algorithm and GWO and verified the performance of the algorithm on 10 high-dimensional complex test functions and 13 standard test functions [15]. Wang et al. improved the grasshopper optimization algorithm by introducing nonlinear inertia weight and used it to solve the optimization problem of high-dimensional complex functions. Experiments on nine benchmark test functions show that the algorithm has significantly improved convergence speed and convergence accuracy [16].

The chicken swarm optimization (CSO) algorithm is a meta-heuristic optimization algorithm proposed by Meng et al. in 2014 that simulates the foraging behavior of chickens in nature [17]. The algorithm achieves rapid optimization through information interaction and collaborative sharing among roosters, hens, and chicks. Because of its good solution accuracy and robustness, it has been widely used in network engineering [18,19], image processing [20,21,22], power systems [23,24], parameter estimation [25,26], and other fields. For example, Kumar et al. used the CSO algorithm to select the best peer in a P2P network and proposed an optimal load-balancing strategy; the experimental results show that it balances load better than other methods [18]. Cristin et al. applied the CSO algorithm to classify brain tumor severity in magnetic resonance imaging (MRI) images and proposed a brain-tumor image-classification method based on the fractional CSO algorithm; the experimental results show that this method performs well in terms of accuracy, sensitivity, and other metrics [20]. Liu et al. developed an improved CSO–extreme-learning machine model by improving the CSO algorithm, applied it to predict the photovoltaic power of a power system, and obtained satisfactory results [23]. Alisan applied the CSO algorithm to the parameter estimation of a proton exchange membrane fuel cell model, where it exhibited particularly good performance [25].

Although the CSO algorithm has been successfully applied in various fields and has solved many practical problems, the above applications all target low-dimensional optimization problems. As the dimension of the optimization problem increases, the CSO algorithm is prone to premature convergence. To address high-dimensional complex function optimization, Yang et al. constructed a genetic CSO algorithm by introducing the idea of the genetic algorithm into the CSO algorithm and verified its performance on 10 benchmark functions [27]. Although the convergence speed and stability were improved, the solution accuracy was still unsatisfactory. Gu et al. constructed an adaptive simplified CSO algorithm for high-dimensional complex function optimization by removing the chicks from the chicken swarm and introducing an inverted S-shaped inertia weight [28]. Although this algorithm is significantly better than several other algorithms in solution accuracy, its convergence speed leaves room for improvement. Han introduced a dissipative structure and a differential mutation operation into the basic CSO algorithm to construct a hybrid CSO algorithm that avoids premature convergence on high-dimensional complex problems, and verified its performance on 18 standard functions [29]. Although its convergence performance was improved, its solution accuracy still needs further enhancement.

To address the aforementioned issues, we propose an adaptive dual-population collaborative CSO (ADPCCSO) algorithm in this paper. The algorithm solves high-dimensional complex problems by using an adaptive adjustment strategy for parameter G, an improvement strategy for foraging behaviors, and a dual-population collaborative optimization strategy. Specifically, the main technical features and originality of this paper are given below.

(1) The value of parameter G is given using an adaptive dynamic adjustment method, so as to balance the breadth and depth of the search abilities of the algorithm.

(2) To improve the solution accuracy and depth optimization ability of the CSO algorithm, an improvement strategy for foraging behaviors is proposed by introducing an improvement factor and adding a kind of chick’s foraging behavior near the optimal value.

(3) A dual-population collaborative optimization strategy based on the chicken swarm and artificial fish swarm is constructed to enhance the global search ability of the whole algorithm.

The simulation experiments on the selected standard test functions and the parameter estimation problem of the Richards model show that the ADPCCSO algorithm is better than some other meta-heuristic optimization algorithms in terms of solution accuracy, convergence performance, etc.

The rest of this paper is organized as follows. Section 2 briefly introduces the principle and characteristics of the standard CSO algorithm. Section 3 describes the proposed ADPCCSO algorithm in detail, including its improvement strategies and main implementation steps. Section 4 presents simulation experiments and analysis to verify the performance of the ADPCCSO algorithm. Finally, Section 5 concludes the paper.

2. The Basic CSO Algorithm

The CSO algorithm is a random search algorithm based on the collective intelligent behavior of chicken swarms during foraging. Randomly generated positions in the search range are regarded as chickens, and the chickens' fitness function values are regarded as food sources. According to the fitness function values, the whole swarm is divided into roosters, hens, and chicks. Roosters have the best fitness values, hens the next best, and chicks the worst. Through continual information interaction and cooperative sharing among roosters, hens, and chicks, the algorithm finally finds the best food source [30,31]. Its characteristics are as follows:

(1) The whole chicken swarm is divided into several subgroups, and each subgroup is composed of a rooster, at least one hen and several chicks. The hens and chicks look for food under the leadership of the roosters in their subgroups, and they will also obtain food from other subgroups.

(2) In the basic CSO algorithm, once the hierarchical relationship and dominance relationship between roosters, hens, and chicks are determined, they will remain unchanged for a certain period until the role update condition is met. In this way, they achieve information interaction and find the best food source.

(3) The whole algorithm realizes parallel optimization through the cooperation between roosters, hens, and chicks. The formulas corresponding to their foraging behaviors are as follows:

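The roosters’ foraging behavior:

$$x_{i,j}^{t+1} = x_{i,j}^{t} \times \left(1 + \mathrm{Randn}(0, \sigma^{2})\right) \tag{1}$$

$$\sigma^{2} = \begin{cases} 1, & f_i \le f_k, \\ \exp\left(\dfrac{f_k - f_i}{\lvert f_i \rvert + \varepsilon}\right), & \text{otherwise}, \end{cases} \tag{2}$$

where $x_{i,j}^{t}$ stands for the position of the ith rooster in dimension j at iteration t, with j = 1, 2, …, Dim. Dim is the dimension of the problem to be solved. $\mathrm{Randn}(0, \sigma^{2})$ is a random number matrix with a mean value of 0 and a variance of $\sigma^{2}$. $\varepsilon$ is the smallest positive normalized floating-point number in IEEE double precision. $f_k$ is the fitness function value of any other rooster k, and $k \neq i$.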

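The hens’ foraging behavior is described by

$$x_{i,j}^{t+1} = x_{i,j}^{t} + S_1 \times \mathrm{Rand} \times \left(x_{r1,j}^{t} - x_{i,j}^{t}\right) + S_2 \times \mathrm{Rand} \times \left(x_{r2,j}^{t} - x_{i,j}^{t}\right) \tag{3}$$

$$S_1 = \exp\left(\frac{f_i - f_{r1}}{\lvert f_i \rvert + \varepsilon}\right) \tag{4}$$

$$S_2 = \exp\left(f_{r2} - f_i\right) \tag{5}$$

where $x_{i}^{t}$ is the individual position of the ith hen, $x_{r1}^{t}$ is the position of the ith hen's group-mate rooster, $x_{r2}^{t}$ is the position of a randomly selected chicken (rooster or hen), Rand is a uniform random number in [0, 1], and $r1 \neq r2$.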

The chicks’ foraging behavior is described by

$$x_{i,j}^{t+1} = x_{i,j}^{t} + FL \times \left(x_{m,j}^{t} - x_{i,j}^{t}\right) \tag{6}$$

where i is the index of the chick, m is the index of the ith chick's mother, and FL is a follow coefficient.
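To make Equations (1)–(6) concrete, the following Python sketch implements the three basic CSO update rules for a minimization problem. It is an illustration under stated assumptions, not the authors' MATLAB code. Positions are plain lists, the `rng` argument defaults to Python's `random` module, and the cap inside `_safe_exp` is an added guard against overflow that is not part of the equations.

```python
import math
import random
import sys

# ε in Eqs. (2) and (4): the smallest positive normalized IEEE double.
EPS = sys.float_info.min


def _safe_exp(z):
    # Cap the exponent to avoid OverflowError for very large fitness gaps;
    # this cap is an implementation guard, not part of Eqs. (4)-(5).
    return math.exp(min(z, 700.0))


def rooster_update(x_i, f_i, f_k, rng=random):
    """Eqs. (1)-(2): Gaussian perturbation of rooster i, whose variance
    depends on another randomly chosen rooster k (minimization)."""
    if f_i <= f_k:
        sigma2 = 1.0
    else:
        sigma2 = math.exp((f_k - f_i) / (abs(f_i) + EPS))
    sd = math.sqrt(sigma2)  # random.gauss expects a standard deviation
    return [x * (1.0 + rng.gauss(0.0, sd)) for x in x_i]


def hen_update(x_i, f_i, x_r1, f_r1, x_r2, f_r2, rng=random):
    """Eqs. (3)-(5): hen i moves toward its group-mate rooster r1 and a
    randomly selected chicken r2 (r1 != r2)."""
    s1 = _safe_exp((f_i - f_r1) / (abs(f_i) + EPS))
    s2 = _safe_exp(f_r2 - f_i)
    u1, u2 = rng.random(), rng.random()  # the two Rand terms, uniform in [0, 1)
    return [x + s1 * u1 * (a - x) + s2 * u2 * (b - x)
            for x, a, b in zip(x_i, x_r1, x_r2)]


def chick_update(x_i, x_m, fl):
    """Eq. (6): chick i follows its mother m with follow coefficient FL."""
    return [x + fl * (m - x) for x, m in zip(x_i, x_m)]
```

For example, a chick at the origin following a mother at (2, 4) with FL = 0.5 moves to (1, 2).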

3. ADPCCSO Algorithm

To address the premature convergence of the basic CSO algorithm on high-dimensional optimization problems, an ADPCCSO algorithm is proposed. First, to balance the breadth and depth search abilities of the basic CSO algorithm, an S-shaped function is used to adaptively adjust the value of parameter G. Then, to improve the solution accuracy, an improvement factor inspired by Reference [32] is used to dynamically adjust the foraging behaviors of the chickens. At the same time, when the role-update condition is met, the chicks are made to search for food near the global optimal value, which enhances the depth-optimization ability of the algorithm. Finally, the AFSA has unique behavior patterns that help an algorithm quickly escape local optima on high-dimensional problems. It is therefore integrated into the CSO algorithm to construct a dual-population collaborative optimization strategy based on chicken swarms and artificial fish swarms, which enhances the global search ability and speeds up the optimization.

3.1. The Improvement Strategy for Parameter G

In the basic CSO algorithm, the parameter G determines how often the hierarchical relationships and role assignments of the chicken swarm are updated. An appropriate value of G is crucial for balancing the breadth and depth search abilities of the algorithm. If G is too large, information interaction between individuals is slow, which hinders the breadth search ability. If G is too small, information interaction becomes too frequent, which hinders the depth-optimization ability. Because G is a constant in the basic CSO algorithm, this balance cannot change during the search. We therefore use Equation (7) to adjust G adaptively. In the early stage of the iteration, G takes a smaller value to enhance the breadth-optimization ability. In the late stage, G takes a larger value to enhance the depth-optimization ability.

where t represents the current iteration number and round(·) rounds its argument to the nearest integer.
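Equation (7) itself is not reproduced in this text. The following is therefore only a hypothetical S-shaped schedule with the qualitative behavior described above: small G early and large G late. The logistic form and the parameters `g_min`, `g_max`, and `steepness` are assumptions chosen for illustration.

```python
import math


def adaptive_g(t, max_iter, g_min=2, g_max=20, steepness=10.0):
    """Hypothetical S-shaped schedule for G (not the paper's Eq. (7)).

    G rises from about g_min early in the run to about g_max late in the run.
    Roles are therefore re-assigned often at first (breadth) and rarely
    later (depth).
    """
    progress = t / max_iter                                   # 0 at start, 1 at end
    s = 1.0 / (1.0 + math.exp(-steepness * (progress - 0.5)))  # logistic curve in (0, 1)
    return max(1, round(g_min + (g_max - g_min) * s))         # round to nearest integer
```

With `max_iter = 1000`, this sketch gives G = 2 at t = 1, G = 11 at t = 500, and G = 20 at t = 1000.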

3.2. The Improvement Strategy for Foraging Behaviors

To improve the solution accuracy and depth-optimization ability of the algorithm, this section constructs an improvement strategy for foraging behaviors. An improvement factor is introduced into the position-update formulas of the chickens. In addition, a foraging behavior in which the chicks search near the optimal value is added.

3.2.1. Improvement Factor

To enhance the optimization ability of the algorithm, a learning factor was integrated into the foraging formula of roosters in Reference [32], which can be shown as follows:

where M is the maximum number of iterations, and the maximum and minimum values of the learning factor are 0.9 and 0.4, respectively.
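Equations (8) and (9) are not reproduced in this text. As a hypothetical sketch only, a factor that decreases linearly from its maximum to its minimum over M iterations could be computed as follows. The linear shape is an assumption. The two pairs of bounds are the ones stated for Reference [32] and for the improvement factor in the next paragraph.

```python
def decreasing_factor(t, max_iter, w_max, w_min):
    """Hypothetical linear schedule: w_max at t = 0, falling to w_min at t = max_iter."""
    if not 0 <= t <= max_iter:
        raise ValueError("t must lie in [0, max_iter]")
    return w_max - (w_max - w_min) * t / max_iter


# Bounds stated in the text: 0.9 / 0.4 for the learning factor of Reference [32],
# and 0.7 / 0.1 for the improvement factor used in this paper.
LEARNING_BOUNDS = (0.9, 0.4)
IMPROVEMENT_BOUNDS = (0.7, 0.1)
```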

The method in Reference [32] improved the optimization ability of the algorithm to a certain degree, but it only modified the position update formula of roosters, which is not conducive to further optimization of the algorithm. Therefore, we slightly modified the learning factor in Reference [32] and named it the improvement factor; that is, through trial and error, we set the maximum and minimum values of the improvement factor to be 0.7 and 0.1, respectively, and then used them in the foraging formulas of roosters, hens, and chicks. The experimental results have demonstrated that the solution accuracy and convergence performance are significantly improved. The modified foraging formulas for roosters, hens, and chicks are shown in Equations (10)–(12):

3.2.2. Chicks’ Foraging Behavior near the Optimal Value

To enhance the depth optimization ability of the CSO algorithm, when the role update condition is met, chicks are allowed to search for food directly near the current optimal value. The corresponding formula is as follows:

where the equations use the global optimal individual position at iteration t, and lb and ub are the lower and upper bounds of an interval set near the current optimal value.
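Equations (13)–(15) are likewise not reproduced here. The sketch below shows one hypothetical reading of the step: each chick is placed uniformly at random in a box around the current global best. In this reading, lb = best − radius and ub = best + radius, clipped to the search range. The `radius` parameter and the clipping are assumptions.

```python
import random


def chicks_near_best(x_best, n_chicks, radius, lower, upper, rng=random):
    """Place each chick uniformly at random in a box around the global best.

    Hypothetical: lb = x_best - radius and ub = x_best + radius, clipped to
    the search range [lower, upper]. Eqs. (13)-(15) may define lb and ub
    differently.
    """
    chicks = []
    for _ in range(n_chicks):
        chick = []
        for b in x_best:
            lb = max(lower, b - radius)       # lower bound of the local interval
            ub = min(upper, b + radius)       # upper bound of the local interval
            chick.append(rng.uniform(lb, ub))
        chicks.append(chick)
    return chicks
```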

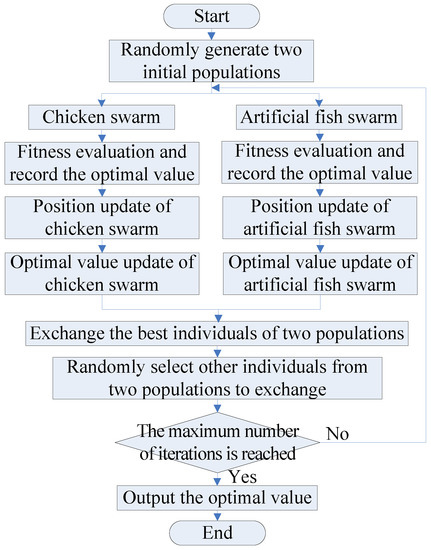

3.3. The Dual-Population Collaborative Optimization Strategy

AFSA has good robustness and global search ability. We therefore introduce it to construct a dual-population collaborative optimization strategy based on the chicken swarm and the artificial fish swarm, which helps the algorithm escape local extrema faster and converge quickly to the global optimal value. In this strategy, the best individuals and several random individuals of the two populations are exchanged. This breaks the equilibrium state within each population, so that the algorithm jumps out of local extrema. The flow chart of the dual-population collaborative optimization strategy is shown in Figure 1.

Figure 1.

The flow chart of the dual-population collaborative optimization strategy.

The main steps are as follows:

(1) Population initialization. Randomly generate two initial populations, each of size N: the chicken swarm and the artificial fish swarm.

(2) Chicken swarm optimization. Calculate the fitness function values of the entire chicken swarm and record the optimal value.

(a) Update the positions of the chickens.

(b) Update the optimal value of the current chicken swarm.

(3) Artificial fish swarm optimization. Calculate the fitness function values of the entire artificial fish swarm and record the optimal value.

(i) Update the positions of the artificial fish swarm. To do so, simulate the preying, swarming, and following behaviors of fish, compare the resulting fitness function values to find the best behavior, and execute it. The corresponding formulas are as follows.

The preying behavior:

where Xi is the position of the ith artificial fish. Step and Visual represent the step length and visual field of an artificial fish, respectively.

The swarming behavior:

where nf represents the number of partners within the visual field of the artificial fish, and Xc is the center position of these partners.

The following behavior:

where Xmax is the position of an artificial fish with the optimal food concentration that can be found within the current artificial fish’s visual field.

(ii) Update the optimal value of the current artificial fish swarm.

(4) Interaction. To realize information interaction and thus break the equilibrium state within each population, first exchange the optimal individuals of the chicken swarm and the artificial fish swarm. Then randomly select Num (Num < N) of the remaining individuals in each population for exchange.

(5) Repeat steps (2)–(4) until the specified maximum number of iterations is reached, and then output the optimal value.
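The following Python sketch illustrates two pieces of the strategy for a minimization problem. The first is the preying behavior in its commonly cited textbook AFSA form: probe up to try_number random points within Visual, move a random fraction of Step toward the first better one, and otherwise take a random step. The second is the exchange in step (4). The paper's own AFSA equations are not reproduced here. The helper names, the uniform probing, and the random choice of the Num swapped individuals are assumptions.

```python
import math
import random


def move_toward(x_i, target, step, rng):
    """Move a random fraction of Step along the unit vector from x_i to target."""
    dist = math.dist(x_i, target)
    if dist == 0.0:
        return list(x_i)
    r = rng.random()
    return [x + (g - x) / dist * step * r for x, g in zip(x_i, target)]


def prey(x_i, f, visual, step, try_number, rng=random):
    """Textbook preying behavior (minimization)."""
    f_i = f(x_i)
    for _ in range(try_number):
        # Probe a random point within the visual field.
        x_j = [x + visual * rng.uniform(-1.0, 1.0) for x in x_i]
        if f(x_j) < f_i:
            return move_toward(x_i, x_j, step, rng)
    # No better point found: take a random step.
    return [x + step * rng.uniform(-1.0, 1.0) for x in x_i]


def exchange(chickens, fish, f, num, rng=random):
    """Step (4), in place: swap the best individuals of the two swarms, then
    swap num randomly chosen pairs among the remaining individuals."""
    bc = min(range(len(chickens)), key=lambda k: f(chickens[k]))
    bf = min(range(len(fish)), key=lambda k: f(fish[k]))
    chickens[bc], fish[bf] = fish[bf], chickens[bc]
    rest_c = [k for k in range(len(chickens)) if k != bc]
    rest_f = [k for k in range(len(fish)) if k != bf]
    for a, b in zip(rng.sample(rest_c, num), rng.sample(rest_f, num)):
        chickens[a], fish[b] = fish[b], chickens[a]
```

Swarming and following could reuse `move_toward` with Xc or Xmax as the target.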

3.4. The Design and Implementation of the ADPCCSO Algorithm

To address the premature convergence of the basic CSO algorithm on high-dimensional optimization problems, the ADPCCSO algorithm is proposed. First, the algorithm adjusts parameter G adaptively and dynamically to balance its breadth and depth search abilities. Then, its solution accuracy and depth-optimization ability are enhanced by the improvement strategy for foraging behaviors described in Section 3.2. Finally, the dual-population collaborative optimization strategy is introduced to help the algorithm escape local extrema faster. The specific process is as follows:

(1) Parameter initialization. The numbers of roosters, hens, and chicks are 0.2 × N, 0.6 × N, and N − 0.2 × N − 0.6 × N, respectively.

(2) Population initialization. Initialize the two populations according to the method described in Section 3.3.

(3) Chicken swarm optimization. Calculate the fitness function values of the chickens and record the optimal value of the current population.

(4) Conditional judgment. If t = 1, go to step (c); otherwise, execute step (a).

(a) Judgment of the information interaction condition in the chicken swarm. If t % G = 1, execute step (b); otherwise, go to step (d).

(b) Chicks' foraging behavior near the optimal value. Chicks search for food according to Equations (13)–(15) in Section 3.2.2.

(c) Information interaction. The dominance and hierarchical relationships of the whole population are updated according to the current fitness function values of the entire chicken swarm.

(d) Foraging behavior. The chickens with different roles search for food according to Equations (10)–(12).

(e) Update of the optimal value in the chicken swarm. After each iteration, the optimal value of the whole chicken swarm is updated.

(5) Artificial fish swarm optimization. Calculate the fitness function values of the artificial fish swarm and record the optimal value of the current population.

(i) Execute the swarming, following, preying, and random-movement behaviors in the artificial fish swarm to find the optimal food.

(ii) Update the optimal value of the whole artificial fish swarm.

(6) Exchange. Exchange the optimal individuals of the two populations, and then exchange several other individuals between them.

(7) Judgment of the ending condition. If the specified maximum number of iterations is reached, output the optimal value and terminate the program. Otherwise, go to step (3).
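The control flow of steps (1) and (4) can be sketched in Python as follows. The role counts use the floor of 0.2N and 0.6N. The random pairing of hens with roosters and of chicks with mothers follows the usual basic-CSO convention. Both are assumptions of this sketch. `chicken_phase` returns the action sub-steps among (b)–(e) that run at iteration t.

```python
import random


def role_counts(n):
    """Step (1): floor(0.2N) roosters, floor(0.6N) hens, and the remainder chicks."""
    n_r = n // 5          # 0.2 * N, using integer arithmetic to avoid float rounding
    n_h = 3 * n // 5      # 0.6 * N
    return n_r, n_h, n - n_r - n_h


def assign_roles(fitness, n_r, n_h, rng=random):
    """Step (4)(c): rank by fitness (smaller is better) and rebuild the hierarchy.

    Each hen gets a random group-mate rooster and each chick a random mother hen.
    """
    order = sorted(range(len(fitness)), key=lambda k: fitness[k])
    roosters = order[:n_r]
    hens = order[n_r:n_r + n_h]
    chicks = order[n_r + n_h:]
    hen_rooster = {h: rng.choice(roosters) for h in hens}
    chick_mother = {c: rng.choice(hens) for c in chicks}
    return roosters, hens, chicks, hen_rooster, chick_mother


def chicken_phase(t, g):
    """Step (4): which action sub-steps (b)-(e) run at iteration t (1-based).

    With g == 1, t % g is always 0, so the role update after t = 1 never fires;
    the step list implicitly assumes G >= 2.
    """
    if t == 1:
        return ["c", "d", "e"]            # first iteration: assign roles, then forage
    if t % g == 1:
        return ["b", "c", "d", "e"]       # chicks near best, re-rank, forage, update best
    return ["d", "e"]                     # ordinary iteration: forage, update best
```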

3.5. The Time Complexity Analysis of the ADPCCSO Algorithm

For the standard CSO algorithm, assume that the population size of the chicken swarm is N, the dimension of the solution space is d, the number of iterations is M, and the hierarchical relationship of the chicken swarm is updated every G iterations. The numbers of roosters, hens, and chicks are Nr, Nh, and Nc, respectively, so that Nr + Nh + Nc = N. The time to calculate the fitness function value of each chicken is tf. The time complexity of the CSO algorithm then consists of two stages, namely, the initialization stage and the iteration stage [30,32].

In the initialization stage (including parameter initialization and population initialization), assume that the time to set the parameters is t1, the time required to generate a random number is t2, and the time to sort the fitness function values is t3. Then, the time complexity of the initialization stage is T1 = t1 + N × d × t2 + t3 + N × tf = O(N × d + N × tf).

In the iteration stage, let the time for each rooster, hen, and chick to update its position on each dimension be tr, th, and tc, respectively. The time it takes to compare the fitness function values between two individuals is t4, and the time it takes for the chickens to interact with information is t5. Therefore, the time complexity of this stage is as follows.

Therefore, the time complexity of the standard CSO algorithm is as follows.

T′ = T1 + T2 = O(N × d + N × tf) + O(N × M × d + N × M × tf) = O(N × M × d + N × M × tf).

Building on the standard CSO algorithm, the ADPCCSO algorithm adds three components: the improvement factor in the position-update formulas of the chicken swarm, the foraging behavior of chicks near the optimal value, and the artificial fish swarm optimization strategy. Assume that the population size of the artificial fish swarm is also N, and that the maximum number of tentative moves in the foraging (preying) behavior is try_number. In the swarming and following behaviors, friend_number counting operations are needed to calculate nf and Xmax. The time to calculate the improvement factor is t6, and the times to perform the foraging, swarming, and following behaviors are t7, t8, and t9, respectively.

Therefore, the time complexity of adding the improvement factor in the position updating formula is T3 = M × N × t6 = O(M × N). The time complexity of the chicks’ foraging behavior near the optimal value is T4 = × d × Nc × tc = O( × d × Nc).

The time complexity of the artificial fish swarm optimization strategy mainly consists of three parts: the foraging, swarming, and following behaviors. Its time complexity is as follows [33], where the last step treats try_number and friend_number as constants.

T5 = M × N × try_number × t7 × d + M × N × t8 × friend_number × d + M × N × friend_number × t9 × d = O(M × N × try_number × d) + O(M × N × friend_number × d) + O(M × N × friend_number × d) = O(M × N × d).

Therefore, the time complexity of the ADPCCSO algorithm is as follows.

It can be seen that the time complexity of the ADPCCSO and standard CSO algorithms is still in the same order of magnitude.
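The combined expression is not reproduced in this text. The following is a hedged sketch of how the terms add up. It assumes that the chicks' near-optimum step runs at most once per iteration, so that T4 is bounded by O(M × d × Nc). It also uses Nc ≤ N and treats try_number and friend_number as constants:

$$
\begin{aligned}
T &= T' + T_3 + T_4 + T_5 \\
  &= O(NMd + NMt_f) + O(MN) + O(MdN) + O(MNd) \\
  &= O(NMd + NMt_f).
\end{aligned}
$$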

4. Simulation Experiment and Analysis

4.1. The Experimental Setup

In this study, the experiments were conducted on a desktop computer with an Intel® Pentium® G4500 CPU @ 3.5 GHz, 12 GB of RAM, and the Windows 7 operating system, using MATLAB R2016a as the programming environment.

To verify the performance of the ADPCCSO algorithm on high-dimensional complex optimization problems, we selected 17 standard high-dimensional test functions from Reference [28] for experimental comparison; they are listed in Table 1. (Because functions f18~f21 in Reference [28] are fixed low-dimensional functions, only functions f1~f17 were selected.) Functions f1~f12 are unimodal. Because their global optimal solutions are difficult to obtain, they are often used to test the solution accuracy of algorithms. Functions f13~f17 are multimodal and are often used to verify the global optimization ability of algorithms.

Table 1.

The description of the test functions.

To compare the algorithms fairly, all of them must use the same number of function evaluations (FEs). In this paper, FEs = population size × maximum number of iterations. The population size and the maximum number of iterations of GCSO [27] and DMCSO [29] are 100 and 1000, respectively. We therefore set these two parameters to 100 and 1000 for the remaining algorithms as well, giving 100 × 1000 = 100,000 FEs per run. The experimental data in this paper are obtained by running each algorithm independently 30 times on each function. Other parameter settings are shown in Table 2.
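The evaluation protocol can be sketched as a small harness. Each run gets a budget of FEs = population size × maximum number of iterations, and the mean and standard deviation are computed over independent runs. The sphere function and the random-search optimizer below are hypothetical placeholders that stand in for a Table 1 function and for the compared algorithms. The harness uses `statistics.stdev` (the sample standard deviation), which is an assumption about how "Std" was computed.

```python
import random
import statistics


def sphere(x):
    """Hypothetical stand-in benchmark: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search(f, dim, lower, upper, fes, rng):
    """Placeholder optimizer that spends exactly `fes` function evaluations."""
    best = float("inf")
    for _ in range(fes):
        best = min(best, f([rng.uniform(lower, upper) for _ in range(dim)]))
    return best


def run_trials(optimizer, f, dim, lower, upper,
               pop_size=100, max_iter=1000, runs=30, seed=0):
    """Run `runs` independent trials under FEs = pop_size * max_iter.

    Returns (mean, sample standard deviation) of the final best values.
    """
    fes = pop_size * max_iter
    results = [optimizer(f, dim, lower, upper, fes, random.Random(seed + r))
               for r in range(runs)]
    return statistics.mean(results), statistics.stdev(results)
```

With the paper's settings (`pop_size=100`, `max_iter=1000`, `runs=30`), each run would spend 100,000 evaluations. Smaller values keep a quick check fast.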

Table 2.

The parameter settings of all algorithms.

In Table 2, c1 and c2 are the two learning factors of PSO, and the upper and lower bounds of its inertia weight are given alongside them. hPercent and rPercent are the proportions of hens and roosters in the whole chicken swarm, respectively. Nc, Nre, and Ned are the numbers of chemotactic, reproduction, and elimination-dispersal operations, respectively. Visual, Step, and try_number are the visual field, step length, and maximum tentative number of the artificial fish swarm, respectively. Limit is the control parameter that determines when bees abandon a food source. Pc and Pm are the crossover and mutation probabilities.

In Table 2, the parameters of AFSA were set by trial and error on the basis of Reference [31]. The parameters of ABC were set according to the study that proposed ABC [34]. The parameters of PSO, CSO, ASCSO-S [28], GCSO [27], and DMCSO [29] were set according to their corresponding references (namely, [27,28,29]).

4.2. The Effectiveness Test of Two Improvement Strategies

To verify the effectiveness of the two improvement strategies proposed in Sections 3.1 and 3.3, we compared the ACSO, DCCSO, and CSO algorithms on the 17 test functions in terms of solution accuracy and convergence performance. Here, ACSO is an adaptive CSO algorithm that only uses Equation (7) to adjust parameter G dynamically. DCCSO is the CSO algorithm with only the dual-population collaborative optimization strategy of Section 3.3.

The experimental results of the three algorithms on the 17 test functions are listed in Table 3, where the best results are marked in bold. In Table 3, “Dim” is the dimension of the problem, “Mean” is the mean value, and “Std” is the standard deviation. “↑”, “↓”, and “=” signify that the results of the ACSO or DCCSO algorithm are superior to, inferior to, and equal to those of the basic CSO algorithm, respectively.
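The “↑/↓/=” labels can be expressed as a small comparison function for minimization problems. Comparing only the means, and the optional tolerances, are assumptions, because the text does not say how ties or near-ties were judged.

```python
import math


def compare_to_baseline(mean_new, mean_base, rel_tol=0.0, abs_tol=0.0):
    """Label a result against the basic CSO for a minimization problem.

    '↑': smaller mean (better), '↓': larger mean (worse), '=': equal within
    the given tolerances (exact equality by default).
    """
    if math.isclose(mean_new, mean_base, rel_tol=rel_tol, abs_tol=abs_tol):
        return "="
    return "↑" if mean_new < mean_base else "↓"
```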

Table 3.

The experimental comparison of two improvement strategies with Dim = 100.

It can be seen from Table 3 that the optimization results of the ACSO and DCCSO algorithms on almost all benchmark test functions are far superior to those of the CSO algorithm (on only function f2, the optimization results of DCCSO algorithm are slightly inferior to those of CSO algorithm); in particular, the experimental data on functions f10 and f11 reached the theoretical optimal values. This shows the effectiveness of the two improvement strategies proposed in Section 3.1 and Section 3.3 in terms of solution accuracy.

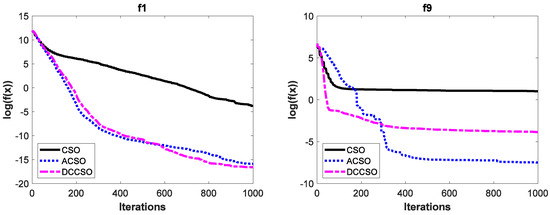

To verify the convergence performance of the ACSO and DCCSO algorithms relative to the CSO algorithm, the convergence curves of the three algorithms on some functions are shown in Figure 2. For brevity, we only show the curves on functions f1, f9, f13, and f16, where f1 and f9 are unimodal and f13 and f16 are multimodal. To make the curves clearer, we plot the logarithm of the average fitness values.
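The logarithmic processing of the convergence curves can be sketched as follows. At each iteration, the best-so-far fitness is averaged over runs, and log10 is taken of the result. A mean fitness of exactly 0 (for example, when a zero-valued theoretical optimum is reached) has no logarithm. The sketch therefore clamps values to a hypothetical `floor`. The base-10 logarithm is also an assumption.

```python
import math


def log_mean_curve(curves, floor=1e-300):
    """Average per-iteration best fitness over runs, then take log10 for plotting.

    curves[r][t] is the best fitness of run r at iteration t (minimization,
    non-negative). Means below `floor` are clamped so the log stays finite.
    """
    n_runs = len(curves)
    n_iters = len(curves[0])
    means = [sum(run[t] for run in curves) / n_runs for t in range(n_iters)]
    return [math.log10(max(m, floor)) for m in means]
```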

Figure 2.

The convergence curves of three algorithms on functions f1, f9, f13 and f16.

As can be seen from Figure 2, the convergence performance of both ACSO and DCCSO algorithms is significantly superior to that of the CSO algorithm, which proves the effectiveness of the two improvement strategies proposed in this paper in terms of convergence performance.

4.3. The Effectiveness Test of Improvement Strategy for Foraging Behaviors

To test the effectiveness of the improvement strategy proposed in Section 3.2, we compare it with the learning-factor-based foraging-behavior improvement strategy of Reference [32]. For a more objective and fair comparison, we let the ADPCCSO algorithm use each of the two strategies on the 17 test functions. The experimental results are listed in Table 4, where ADPCCSO [32] denotes the ADPCCSO algorithm using the foraging-behavior improvement strategy of Reference [32]. Table 4 also shows the number of optimal results obtained by each algorithm based on the mean value.

Table 4.

The experimental results of improvement strategy for foraging behaviors.

As can be seen from Table 4, the ADPCCSO [32] only obtained optimal values on 5 functions, while the ADPCCSO algorithm obtained optimal values on 16 functions and the theoretical optimal values were obtained on 13 functions. Only on function f5 were the results of ADPCCSO algorithm slightly inferior to those of the ADPCCSO [32]. This shows the effectiveness of the improvement strategy proposed in Section 3.2 in terms of solution accuracy.
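The “number of optimal results” can be counted per algorithm as the number of functions on which its mean is the smallest. Ties appear to count for every tied algorithm, since 5 + 16 exceeds the 17 functions in Table 4. The sketch follows that reading, which remains an assumption.

```python
def count_best(means_by_alg):
    """Count, per algorithm, the functions on which its mean is the smallest.

    means_by_alg maps algorithm name -> list of mean values, one per test
    function. Tied algorithms each receive a count.
    """
    names = list(means_by_alg)
    n_funcs = len(means_by_alg[names[0]])
    counts = {name: 0 for name in names}
    for j in range(n_funcs):
        best = min(means_by_alg[name][j] for name in names)
        for name in names:
            if means_by_alg[name][j] == best:
                counts[name] += 1
    return counts
```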

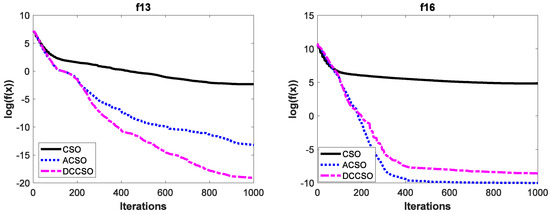

To test the effectiveness of the improvement strategy proposed in Section 3.2 in terms of convergence performance, the convergence curves of the two algorithms are also given. For brevity, only the curves on functions f9 and f15 are shown in Figure 3. As before, we plot the logarithm of the average fitness values to make the curves clearer.

Figure 3.

The convergence curves of two algorithms on functions f9 and f15.

It is evident from Figure 3 that, overall, the convergence performance of the ADPCCSO algorithm is better than that of ADPCCSO [32]. The advantage is most obvious on function f15, where the ADPCCSO algorithm begins to converge stably at around the 18th generation.

4.4. Performance Comparison of Several Swarm Intelligence Algorithms

To test the advantages of the proposed ADPCCSO algorithm over other algorithms in solving high-dimensional optimization problems, this section compares it with five other algorithms, namely ASCSO-S [28], ABC, AFSA, CSO, and PSO. The best values, worst values, mean values, and standard deviations they obtained on the 17 standard benchmark test functions are shown in Table 5, Table 6 and Table 7, where the best values are shown in bold. These tables also report, for each algorithm, the number of functions on which it obtains the optimal result based on the mean value.
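The paper does not state how ties are handled when counting optimal results. A plausible reading, sketched below under that assumption, is that every algorithm attaining the smallest mean on a function is credited. Under this reading the counts can sum to more than the number of functions, which is consistent with Table 4, where 5 + 16 = 21 exceeds 17. The function name and the data are hypothetical.

```python
from typing import Dict, List

def count_best_by_mean(means: Dict[str, List[float]]) -> Dict[str, int]:
    """Count, for each algorithm, the test functions on which its mean value is the best (smallest).

    `means` maps an algorithm name to its mean fitness on each test function.
    Tied algorithms are all credited, so the counts may sum to more than the number of functions.
    """
    names = list(means)
    n_funcs = len(means[names[0]])
    counts = {name: 0 for name in names}
    for j in range(n_funcs):
        best = min(means[name][j] for name in names)
        for name in names:
            if means[name][j] == best:
                counts[name] += 1
    return counts

# Hypothetical means on four functions (not taken from the paper's tables).
example = {
    "A": [0.0, 0.0, 1.2e-3, 5.0],
    "B": [0.0, 3.1e-8, 4.0e-4, 7.5],
}
print(count_best_by_mean(example))  # {'A': 3, 'B': 2}
```

Here the two counts sum to 5 for only 4 functions because both algorithms reach 0 on the first function.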

Table 5. The experimental results of several algorithms on the 17 test functions with Dim = 30.

Table 6. The experimental results of several algorithms on the 17 test functions with Dim = 100.

Table 7. The experimental results of several algorithms on the 17 test functions with Dim = 500.

It is not difficult to see from Table 5, Table 6 and Table 7 that the ADPCCSO and ASCSO-S algorithms are far superior to the other four swarm intelligence algorithms in terms of solution accuracy and stability. Of the two, the ADPCCSO algorithm performs best: in particular, when Dim = 500, it obtained the optimal values on all 17 functions, whereas the ASCSO-S algorithm did so on 14. Additionally, on function f5, the results of the ADPCCSO algorithm at Dim = 100 and Dim = 500 are far better than those at Dim = 30, which suggests, to a certain extent, that the ADPCCSO algorithm is better suited to higher-dimensional complex optimization problems.

Although the ABC algorithm obtained the optimal values on three functions in Table 5 (Dim = 30), its optimization ability deteriorates as the dimension of the problem increases. By contrast, AFSA retains a stronger optimization ability at higher dimensions (when Dim = 500, its results on 11 functions are much better than those of the ABC algorithm), which is one of the reasons why we constructed a dual-population collaborative optimization strategy based on a chicken swarm and an artificial fish swarm to solve high-dimensional optimization problems. Note that the results of the PSO algorithm on function f8 are not given in Table 7. This is because, when Dim = 500, its fitness function values often exceed the largest positive floating-point value that the computer can represent, so the algorithm cannot produce meaningful results. This also shows that the PSO algorithm is not suitable for handling higher-dimensional complex optimization problems.
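The definition of f8 is not reproduced in this section, so the following is only a hypothetical illustration of how a fitness value can overflow at Dim = 500. It uses Schwefel's Problem 2.22 as a stand-in because its product term grows exponentially with the dimension. Python floats are IEEE 754 doubles whose largest finite value (`sys.float_info.max`) is about 1.8e308, so a product of 500 factors of 10 overflows to infinity.

```python
import math
import sys

def schwefel_2_22(x):
    """Schwefel's Problem 2.22, f(x) = sum|x_i| + prod|x_i| (hypothetical stand-in for f8)."""
    total = sum(abs(v) for v in x)
    product = 1.0
    for v in x:
        product *= abs(v)  # float multiplication silently overflows to inf
    return total + product

for dim in (30, 100, 500):
    value = schwefel_2_22([10.0] * dim)
    print(dim, math.isinf(value), value > sys.float_info.max)
```

At Dim = 30 and Dim = 100 the value stays finite; at Dim = 500 it becomes `inf`, after which comparisons and averages over such values are no longer meaningful.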

Below, we discuss why the solution accuracy of the ADPCCSO and ASCSO-S algorithms is better than that of the other four algorithms. This may be because both algorithms introduce an improvement factor, called an inertia weight, into the position update formula of the chicken swarm. The former may outperform the latter in solution accuracy because it also uses an improvement strategy for foraging behaviors, which improves both the depth-optimization ability and the solution accuracy of the algorithm.
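As a hypothetical sketch only: the inertia-weighted update formulas of ADPCCSO and ASCSO-S are not reproduced in this section, so the code below takes the rooster update of the basic CSO algorithm [17], x ← x(1 + N(0, σ²)), and multiplies it by an inertia weight `w`. Where exactly the weight enters the actual formulas, and the small constant `EPS`, are assumptions.

```python
import math
import random
from typing import List

EPS = 1e-30  # assumed small constant that guards against division by zero

def rooster_sigma2(f_i: float, f_k: float) -> float:
    """Variance of the Gaussian step in basic CSO (minimization): 1 if rooster i is no worse than rooster k."""
    if f_i <= f_k:
        return 1.0
    return math.exp((f_k - f_i) / (abs(f_i) + EPS))

def rooster_update(x_i: List[float], f_i: float, f_k: float, w: float,
                   rng: random.Random) -> List[float]:
    """Basic CSO rooster move x * (1 + N(0, sigma^2)), scaled by a hypothetical inertia weight w."""
    sigma = math.sqrt(rooster_sigma2(f_i, f_k))
    # Every dimension draws its own Gaussian perturbation.
    return [w * x * (1.0 + rng.gauss(0.0, sigma)) for x in x_i]

rng = random.Random(0)
print(rooster_sigma2(1.0, 2.0))  # 1.0
print(rooster_update([1.0, -2.0], f_i=5.0, f_k=3.0, w=0.9, rng=rng))
```

Because this move is multiplicative, a weight w < 1 also contracts positions toward the origin, and a coordinate that is exactly 0 stays at 0.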

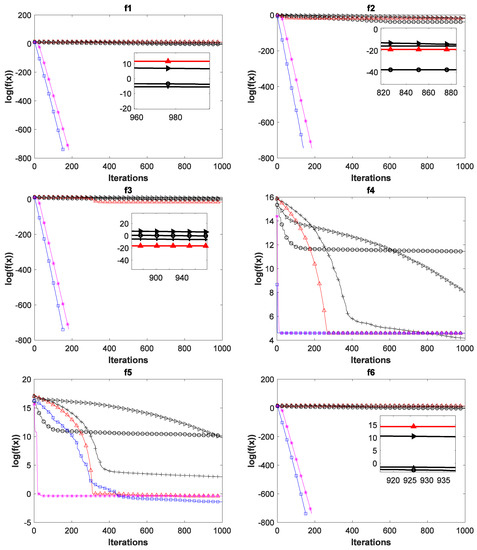

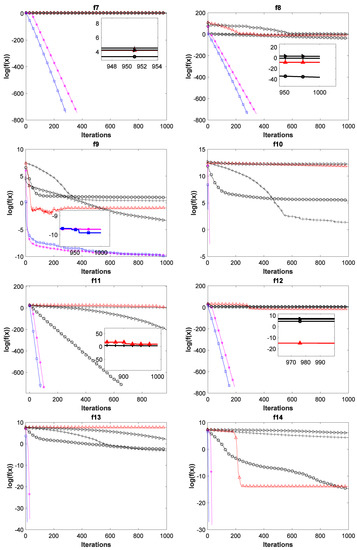

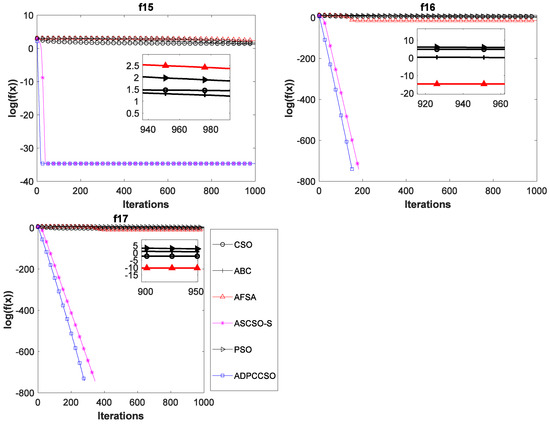

To verify the superiority of the ADPCCSO algorithm over the other algorithms in terms of convergence performance, this paper presents the convergence curves of the above six algorithms on all 17 test functions with Dim = 100, as shown in Figure 4. The ordinates in Figure 4 also show the logarithm of the average fitness values. In addition, to present the convergence behavior more clearly, some convergence curves are locally enlarged, which is why some panels contain inset subgraphs.

Figure 4. Convergence curves of six swarm intelligence algorithms on 17 functions.

As can be seen from Figure 4, the ADPCCSO algorithm has the best convergence performance on 16 functions; only on function f4 is its convergence slightly inferior to that of the ABC algorithm. ASCSO-S ranks second in terms of convergence performance, and AFSA and CSO are tied for third place. (This is another reason why we constructed a dual-population collaborative optimization strategy based on the chicken swarm and the artificial fish swarm.)

Below, we discuss why the overall convergence performance of the ADPCCSO and ASCSO-S algorithms is better than that of the other four algorithms. This may be because both algorithms use adaptive dynamic adjustment strategies. The former converges better than the latter, possibly because the dual-population collaborative optimization strategy used in the ADPCCSO algorithm further improves its convergence performance. In addition, careful observation of Figure 4 shows that, on functions f1–f3, f6–f8, f10–f14, and f16–f17, the convergence curves of the ADPCCSO and ASCSO-S algorithms appear to be cut off in the late iteration stage. This is because both algorithms have found the theoretical optimal value of 0 on these functions, and the logarithm of 0 is undefined.

4.5. Friedman Test of Algorithms

The Friedman test, or Friedman’s method for randomized blocks, is a non-parametric test that does not require the samples to follow a normal distribution; it uses only ranks to judge whether there are significant differences among multiple population distributions. The method was proposed by Friedman in 1937. Because it is simple to apply and places no strict requirements on the data, it is often used to compare the performance of algorithms [28,35].

To further test the performance of the proposed ADPCCSO algorithm, this section uses the Friedman test to compare the above six algorithms from a statistical perspective. For minimization problems, the smaller an algorithm’s average ranking, the better its performance. The SPSS software is used to calculate the average ranking values of all algorithms, and the statistical results are shown in Table 8. It is evident from Table 8 that the ADPCCSO algorithm has the lowest average ranking, 1.5, and therefore the best performance.
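The paper uses SPSS; as a minimal, self-contained sketch of what such software computes, the code below ranks the algorithms on each problem (rank 1 for the smallest value, tied values sharing the mean of their ranks), averages the ranks, and evaluates the Friedman statistic χ²_F = 12N/(k(k+1)) · (Σ_j R_j² − k(k+1)²/4) without a tie correction. The function names and data are hypothetical. For comparison, `scipy.stats.friedmanchisquare` computes the same statistic with an additional correction for ties.

```python
from typing import Dict, List

def average_ranks(scores: Dict[str, List[float]]) -> Dict[str, float]:
    """Average Friedman ranks: on each problem rank the algorithms (1 = smallest value,
    tied values share the mean of their ranks), then average over all problems."""
    names = list(scores)
    n_problems = len(scores[names[0]])
    totals = {name: 0.0 for name in names}
    for j in range(n_problems):
        ordered = sorted(names, key=lambda name: scores[name][j])
        start = 0
        while start < len(ordered):
            end = start  # extend over the block of algorithms tied with ordered[start]
            while end + 1 < len(ordered) and scores[ordered[end + 1]][j] == scores[ordered[start]][j]:
                end += 1
            shared_rank = (start + end) / 2.0 + 1.0  # mean of ranks start+1 .. end+1
            for k in range(start, end + 1):
                totals[ordered[k]] += shared_rank
            start = end + 1
    return {name: totals[name] / n_problems for name in names}

def friedman_chi2(avg: Dict[str, float], n_problems: int) -> float:
    """Friedman statistic without tie correction: 12N/(k(k+1)) * (sum_j R_j^2 - k(k+1)^2/4)."""
    k = len(avg)
    sum_sq = sum(r * r for r in avg.values())
    return 12.0 * n_problems / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4.0)

# Hypothetical mean values of three algorithms on four problems.
scores = {"A": [0.0, 0.0, 1.0, 2.0],
          "B": [0.0, 1.0, 2.0, 1.0],
          "C": [5.0, 3.0, 3.0, 3.0]}
avg = average_ranks(scores)
print(avg)                    # {'A': 1.375, 'B': 1.625, 'C': 3.0}
print(friedman_chi2(avg, 4))  # 6.125
```

A quick sanity check is that the average ranks always sum to k(k+1)/2, which is 6 for the three algorithms here.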

Table 8. Friedman test results of algorithms.

4.6. Performance Comparison of Several Improved CSO Algorithms

To further verify the performance of the proposed ADPCCSO algorithm, it is also compared with two improved CSO algorithms from the literature, namely GCSO [27] and DMCSO [29]. The experimental results are shown in Table 9; the data for both comparison algorithms are taken from the corresponding references. Note that all three algorithms use a population size of 100 and a maximum of 1000 iterations, which makes the experimental comparison fairer and more reasonable. The other parameter settings are shown in Table 2.

Table 9. The experimental results of three improved CSO algorithms with Dim = 100.

In Table 9, GCSO [27] reports results for only 6 of the 17 test functions, and it obtains the optimal values only for the standard deviation on function f4 and the best values on functions f13 and f14. DMCSO [29] reports results for 12 of the 17 test functions and obtains the optimal values only on function f4. Overall, the results of the ADPCCSO algorithm are better than those of both algorithms; only on function f4 are they worse than those of DMCSO [29]. This shows the advantages of the ADPCCSO algorithm.

4.7. Performance Test of ADPCCSO Algorithm for Solving Higher-Dimensional Problems

To further verify the performance of the ADPCCSO algorithm in solving higher-dimensional optimization problems, the relevant experiments for the proposed algorithm on 17 benchmark test functions with Dim = 1000 are also presented in this section. The corresponding experimental results are shown in Table 10.

Table 10. The experimental results of the ADPCCSO algorithm with Dim = 1000.

As can be seen from Table 10, even when the dimension of the optimization problem is increased to 1000, the proposed algorithm still achieves satisfactory optimization accuracy on most test functions; only on functions f4, f5, and f9 do the experimental data fluctuate slightly. This indicates that the proposed algorithm is not greatly affected by the increase in dimension and that the ADPCCSO algorithm remains competitive in dealing with higher-dimensional optimization problems.

4.8. Parameter Estimation Problem of Richards Model

To verify the performance of the ADPCCSO algorithm in solving practical problems, it is applied to the parameter estimation problem of the Richards model in this section. The Richards model is a growth curve model with four unknown parameters that can adequately describe the whole process of biological growth. Its mathematical formula is as follows [28,36,37]:

where stands for the growth amount at time t, and are four unknown parameters.

The core problem in applying the ADPCCSO algorithm to the parameter estimation of the Richards model is the design of the fitness function. In this paper, the fitness function design method from the studies [28,36] is adopted; that is, the sum of the squared differences between the observed and predicted values is used as the fitness function. The mathematical formula is as follows:

$$\mathrm{fit} = \sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ is the actual growth amount observed at time i, $\hat{y}_i$ is the corresponding growth amount predicted by the Richards model, and n is the number of observations. In this section, the actual growth concentrations of glutamate listed in the studies [28,36] are used as the observed values, which are shown in Table 11. The optimal solutions obtained by the different algorithms over 30 independent runs are listed in Table 12, where the experimental data of ASCSO-S [28] and VS-FOA [36] come from the corresponding references. The data in Table 13 are the growth concentrations of glutamate obtained by substituting the parameter values in Table 12 into Equation (21). In Table 13, “fit” denotes the fitness function value.
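Equation (21) is not reproduced in this text, so the sketch below assumes one four-parameter form of the Richards curve found in the nonlinear-regression literature, y(t) = α/(1 + e^(β − γt))^(1/δ); this exact form and the parameter names are assumptions and may differ from the paper's. The fitness is the sum of squared differences between observed and predicted values described above, and it returns infinity for parameter vectors that overflow or divide by zero, so that an optimizer simply treats them as very poor candidates.

```python
import math
from typing import Sequence

def richards(t: float, alpha: float, beta: float, gamma: float, delta: float) -> float:
    """Assumed Richards parameterization: y(t) = alpha / (1 + exp(beta - gamma*t)) ** (1/delta)."""
    return alpha / (1.0 + math.exp(beta - gamma * t)) ** (1.0 / delta)

def fitness(params: Sequence[float], times: Sequence[float], observed: Sequence[float]) -> float:
    """Sum of squared differences between observed and predicted growth amounts (to be minimized)."""
    alpha, beta, gamma, delta = params
    try:
        return sum((y - richards(t, alpha, beta, gamma, delta)) ** 2
                   for t, y in zip(times, observed))
    except (OverflowError, ZeroDivisionError):
        return math.inf  # invalid or numerically extreme parameters

# Synthetic observations from hypothetical "true" parameters (not the glutamate data of Table 11).
true_params = (10.0, 2.0, 0.5, 1.0)
times = [float(t) for t in range(11)]
observed = [richards(t, *true_params) for t in times]
print(fitness(true_params, times, observed))                # 0.0
print(fitness((9.0, 2.0, 0.5, 1.0), times, observed) > 0)   # True
```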

Table 11. The observed growth concentration of glutamate.

Table 12. The experimental results of optimal solutions obtained by various algorithms.

Table 13. The growth concentration of glutamate predicted by each algorithm.

To evaluate the performance of VS-FOA [36], ASCSO-S [28], and ADPCCSO in the parameter estimation of the Richards model, we select the root mean square error, the mean absolute error, and the coefficient of determination as evaluation indexes. The formulas are as follows:

(1) The root mean square error:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ is the actual value observed at time i, $\hat{y}_i$ is the predicted value at time i, and n is the number of observed values. The root mean square error measures the deviation between the predicted and observed values; the smaller its value, the better the prediction.

(2) The mean absolute error:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

The mean absolute error is the mean of the absolute errors. Because the errors are not squared, it is less sensitive to a few large deviations than the root mean square error. The smaller its value, the more precise the predictions.

(3) The coefficient of determination:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $\bar{y}$ is the mean of the observed values. The coefficient of determination is generally used to evaluate how well the predicted values agree with the actual values; the closer its value is to 1, the better the prediction.
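The three indexes can be computed directly from their standard definitions; the minimal sketch below uses hypothetical data, not the values of Tables 11 to 13. The two candidate predictions also illustrate that the prediction with the smaller sum of squared errors, and therefore the smaller RMSE and larger R², can still have the larger MAE.

```python
import math
from typing import Sequence

def rmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Root mean square error."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(obs, pred)) / len(obs))

def mae(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(obs, pred)) / len(obs)

def r_squared(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((y - p) ** 2 for y, p in zip(obs, pred))
    ss_tot = sum((y - mean_obs) ** 2 for y in obs)
    return 1.0 - ss_res / ss_tot

# Hypothetical observations and two candidate predictions.
obs = [1.0, 2.0, 3.0, 4.0]
pred_a = [2.0, 2.0, 3.0, 4.0]  # one error of 1.0:     SSE = 1.0,  MAE = 0.25
pred_b = [1.5, 2.5, 3.5, 4.0]  # three errors of 0.5:  SSE = 0.75, MAE = 0.375
for name, pred in (("a", pred_a), ("b", pred_b)):
    print(name, rmse(obs, pred), mae(obs, pred), r_squared(obs, pred))
```

Prediction b wins on RMSE and R² but loses on MAE, because squaring penalizes the single large error of prediction a more heavily.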

The comparison results of the above three algorithms in the three evaluation indexes are shown in Table 14, where the optimal values are marked in bold.

Table 14. The comparison results of three algorithms.

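As can be seen from Table 14, the ADPCCSO algorithm obtains the best values on two of the three indexes, namely the root mean square error and the coefficient of determination. Although it is slightly inferior to the other two algorithms in terms of the mean absolute error, its fitness function value is the best of the three algorithms, as Table 13 shows. These results are consistent: for the same observed data, the root mean square error and the coefficient of determination are both monotone functions of the fitness value (the sum of squared errors), whereas the mean absolute error is not. This preliminarily shows that the ADPCCSO algorithm can effectively solve the parameter estimation problem of the Richards model.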

5. Conclusions

In view of the premature convergence that the basic CSO algorithm is prone to when solving high-dimensional complex optimization problems, an ADPCCSO algorithm is proposed in this paper. The algorithm first uses an adaptive dynamic adjustment method to set the value of parameter G, so as to balance the algorithm’s depth and breadth search abilities. Then, the solution accuracy and depth-optimization ability of the algorithm are improved by a foraging-behavior-improvement strategy. Finally, a dual-population collaborative optimization strategy is constructed to improve the algorithm’s global search ability. The experimental results preliminarily show that the proposed algorithm has obvious advantages over the comparison algorithms in terms of solution accuracy and convergence performance. This provides new ideas for the study of high-dimensional optimization problems.

However, although the proposed algorithm achieves obvious advantages over the comparison algorithms on most of the given benchmark test functions, there is still a gap between the solutions it obtains on several functions and their theoretical optimal solutions. Therefore, how to further improve the algorithm’s performance on more complex large-scale optimization problems requires further research. Moreover, applying the algorithm to other problems, such as constrained optimization, multi-objective optimization, and vehicle-routing problems, is a promising direction for future work.

Author Contributions

Conceptualization, J.L. and L.W.; methodology, J.L.; software, J.L.; validation, J.L., L.W. and M.M.; formal analysis, J.L.; investigation, J.L.; resources, J.L.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, J.L.; visualization, J.L.; supervision, J.L.; project administration, J.L.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Education Department of Hainan Province of China (Hnkyzc2023-3, Hnky2020-3), the Natural Science Foundation of Hainan Province of China (620QN230), and the National Natural Science Foundation of China (61877038).

Data Availability Statement

All data used to support the findings of this study are included within the article. Color versions of all figures in this paper are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yang, D.; Wu, M.; Li, D.; Xu, Y.; Zhou, X.; Yang, Z. Dynamic opposite learning enhanced dragonfly algorithm for solving large-scale flexible job shop scheduling problem. Knowl.-Based Syst. 2022, 238, 107815.

- Li, J.; Duan, Y.; Hao, L.; Zhang, W. Hybrid optimization algorithm for vehicle routing problem with simultaneous delivery-pickup. J. Front. Comput. Sci. Technol. 2022, 16, 1623–1632.

- Tran, B.; Xue, B.; Zhang, M. Variable-Length Particle Swarm Optimization for Feature Selection on High-Dimensional Classification. IEEE Trans. Evol. Comput. 2019, 23, 473–487.

- Gao, X.; Guo, Y.; Ma, G.; Zhang, H.; Li, W. Agile satellite autonomous observation mission planning using hybrid genetic algorithm. J. Harbin Inst. Technol. 2021, 53, 1–9.

- Larouci, B.; Ayad, A.N.E.I.; Alharbi, H.; Alharbi, T.E.; Boudjella, H.; Tayeb, A.S.; Ghoneim, S.S.; Abdelwahab, S.A.M. Investigation on New Metaheuristic Algorithms for Solving Dynamic Combined Economic Environmental Dispatch Problems. Sustainability 2022, 14, 5554.

- Yang, Q.; Zhu, Y.; Gao, X.; Xu, D.; Lu, Z. Elite Directed Particle Swarm Optimization with Historical Information for High-Dimensional Problems. Mathematics 2022, 10, 1384.

- Yang, Q.; Chen, W.N.; Gu, T.; Jin, H.; Mao, W.; Zhang, J. An Adaptive Stochastic Dominant Learning Swarm Optimizer for High-Dimensional Optimization. IEEE Trans. Cybern. 2022, 52, 1960–1976.

- Pellerin, R.; Perrier, N.; Berthaut, F. A survey of hybrid metaheuristics for the resource-constrained project scheduling problem. Eur. J. Oper. Res. 2020, 280, 395–416.

- Forestiero, A. Heuristic recommendation technique in Internet of Things featuring swarm intelligence approach. Expert Syst. Appl. 2022, 187, 115904.

- Forestiero, A.; Mastroianni, C.; Spezzano, G. Antares: An ant-inspired P2P information system for a self-structured grid. In Proceedings of the 2007 2nd Bio-Inspired Models of Network, Information and Computing Systems, Budapest, Hungary, 10–13 December 2007.

- Forestiero, A.; Mastroianni, C.; Spezzano, G. Reorganization and discovery of grid information with epidemic tuning. Future Gener. Comput. Syst. 2008, 24, 788–797.

- Gul, F.; Mir, A.; Mir, I.; Mir, S.; Islaam, T.U.; Abualigah, L.; Forestiero, A. A Centralized Strategy for Multi-Agent Exploration. IEEE Access 2022, 10, 126871–126884.

- Brajević, I.; Stanimirović, P.S.; Li, S.; Cao, X.; Khan, A.T.; Kazakovtsev, L.A. Hybrid Sine Cosine Algorithm for Solving Engineering Optimization Problems. Mathematics 2022, 10, 4555.

- Huang, C.; Wei, X.; Huang, D.; Ye, J. Shuffled frog leaping grey wolf algorithm for solving high dimensional complex functions. Control Theory Appl. 2020, 37, 1655–1666.

- Gu, Q.H.; Li, X.X.; Lu, C.W.; Ruan, S.L. Hybrid genetic grey wolf algorithm for high dimensional complex function optimization. Control Decis. 2020, 35, 1191–1198.

- Wang, Q.; Li, F. Two Novel Types of Grasshopper Optimization Algorithms for Solving High-Dimensional Complex Functions. J. Chongqing Inst. Technol. 2021, 35, 277–283.

- Meng, X.; Liu, Y.; Gao, X.; Zhang, H. A New Bio-Inspired Algorithm: Chicken Swarm Optimization; Springer International Publishing: Cham, Switzerland, 2014; pp. 86–94.

- Kumar, D.; Pandey, M. An optimal load balancing strategy for P2P network using chicken swarm optimization. Peer-Peer Netw. Appl. 2022, 15, 666–688.

- Basha, A.J.; Aswini, S.; Aarthini, S.; Nam, Y.; Abouhawwash, M. Genetic-Chicken Swarm Algorithm for Minimizing Energy in Wireless Sensor Network. Comput. Syst. Sci. Eng. 2022, 44, 1451–1466.

- Cristin, D.R.; Kumar, D.K.S.; Anbhazhagan, D.P. Severity Level Classification of Brain Tumor based on MRI Images using Fractional-Chicken Swarm Optimization Algorithm. Comput. J. 2021, 64, 1514–1530.

- Bharanidharan, N.; Rajaguru, H. Improved chicken swarm optimization to classify dementia MRI images using a novel controlled randomness optimization algorithm. Int. J. Imaging Syst. Technol. 2020, 30, 605–620.

- Liang, J.; Wang, L.; Ma, M. A new image segmentation method based on the ICSO-ISPCNN model. Multimed. Tools Appl. 2020, 79, 28131–28154.

- Liu, Z.F.; Li, L.L.; Tseng, M.L.; Lim, M.K. Prediction short-term photovoltaic power using improved chicken swarm optimizer—Extreme learning machine model. J. Clean. Prod. 2020, 248, 119272.

- Othman, A.M.; El-Fergany, A.A. Adaptive virtual-inertia control and chicken swarm optimizer for frequency stability in power-grids penetrated by renewable energy sources. Neural Comput. Appl. 2021, 33, 2905–2918.

- Ayvaz, A. An improved chicken swarm optimization algorithm for extracting the optimal parameters of proton exchange membrane fuel cells. Int. J. Energy Res. 2022, 46, 15081–15098.

- Maroufi, H.; Mehdinejadiani, B. A comparative study on using metaheuristic algorithms for simultaneously estimating parameters of space fractional advection-dispersion equation. J. Hydrol. 2021, 602, 126757.

- Yang, X.; Xu, X.; Li, R. Genetic chicken swarm optimization algorithm for solving high-dimensional optimization problems. Comput. Eng. Appl. 2018, 54, 133–139.

- Gu, Y.; Lu, H.; Xiang, L.; Shen, W. Adaptive Simplified Chicken Swarm Optimization Based on Inverted S-Shaped Inertia Weight. Chin. J. Electron. 2022, 31, 367–386.

- Han, M. Hybrid chicken swarm algorithm with dissipative structure and differential mutation. J. Zhejiang Univ. (Sci. Ed.) 2018, 45, 272–283.

- Zhang, K.; Zhao, X.; He, L.; Li, Z. A chicken swarm optimization algorithm based on improved X-best guided individual and dynamic hierarchy update mechanism. J. Beijing Univ. Aeronaut. Astronaut. 2021, 47, 2579–2593.

- Liang, J.; Wang, L.; Ma, M. An Improved Chicken Swarm Optimization Algorithm for Solving Multimodal Optimization Problems. Comput. Intell. Neurosci. 2022, 2022, 5359732.

- Gu, Y.C.; Lu, H.Y.; Xiang, L.; Shen, W.Q. Adaptive Dynamic Learning Chicken Swarm Optimization Algorithm. Comput. Eng. Appl. 2020, 56, 36–45.

- Fei, T. Research on Improved Artificial Fish Swarm Algorithm and Its Application in Logistics Location Optimization; Tianjin University: Tianjin, China, 2016.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Song, X.; Zhao, M.; Yan, Q.; Xing, S. A high-efficiency adaptive artificial bee colony algorithm using two strategies for continuous optimization. Swarm Evol. Comput. 2019, 50, 100549.

- Wang, J. Parameter estimation of Richards model based on variable step size fruit fly optimization algorithm. Comput. Eng. Des. 2017, 38, 2402–2406.

- Smirnova, A.; Pidgeon, B.; Chowell, G.; Zhao, Y. The doubling time analysis for modified infectious disease Richards model with applications to COVID-19 pandemic. Math. Biosci. Eng. 2022, 19, 3242–3268.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).