Abstract

To enhance the convergence efficiency and solution precision of the Red-billed Blue Magpie Optimizer (RBMO), this study proposes a Multi-Strategy Enhanced Red-billed Blue Magpie Optimizer (MRBMO). The principal methodological innovations are twofold: (1) a dynamic boundary constraint handling mechanism that repairs the out-of-bound dimensions of an individual with the corresponding dimensions of the current best solution, instead of simply clipping them to the bounds, which reuses historical search information and strengthens the algorithm’s exploitation capability; (2) an elite guidance strategy in the predation (prey-attack) phase, which establishes a guided search framework that integrates each individual’s historical best information and uses Lévy Flight to modulate the search step size, thereby balancing global exploration and local exploitation. Comprehensive experiments on the CEC2017 and CEC2022 benchmark suites (41 standardized test functions in total) show that MRBMO significantly outperforms classical enhanced algorithms and is competitive with state-of-the-art optimizers. The practical efficacy of the algorithm is further validated through successful applications to four classical engineering design problems, confirming its robust problem-solving capability.

1. Introduction

Optimization problems are widespread [] and can be defined as the process of identifying the best solution among the feasible solutions so as to minimize or maximize a given objective []. They are generally characterized by large scale, numerous constraints, complex parameter control, and high computational cost []. In many cases, an optimal solution to a problem with highly complex constraints must be found within a reasonable time []. Effective methods are therefore required for optimization problems involving numerous decision variables, complex nonlinear constraints, and complex objective functions []. Traditional optimization methods, such as gradient descent and the Lagrange multiplier method, depend strictly on mathematical properties such as the differentiability and convexity of the objective function and on an explicit formulation of the constraints []. However, optimization problems encountered in real-world production and daily life are typically large-scale, high-dimensional, and nonlinear [], so conventional methods often struggle to obtain acceptable solutions within a feasible time frame []. Specifically, such problems present the following challenges:

- Multimodal: Many optimization problems exhibit multiple local optima, causing algorithms to easily become trapped in local optima and to fail to obtain satisfactory solutions.

- High-dimensional: As the number of decision variables increases, the problem’s dimensionality grows accordingly. The search space expands exponentially with increasing dimensions, leading to significantly higher problem complexity.

- Nonlinear: The objective functions of many problems are frequently nonlinear and may even be non-differentiable, rendering certain algorithms inapplicable due to their strict requirements on objective function properties.

- Multi-objective: Some problems require simultaneous optimization of multiple objectives that often exhibit conflicting relationships, making it difficult or impossible to find solutions that satisfy all objectives concurrently.

In contrast to conventional methods, heuristic algorithms [] can identify a feasible, near-optimal solution within a reasonable time or under limited computational resources. They achieve this computational feasibility by deliberately trading solution accuracy for efficiency []. However, because their search patterns are relatively simple, they often converge to local optima and have limited ability to explore complex solution spaces. Metaheuristic algorithms address this by combining stochastic search with the local search of traditional heuristics, which improves their ability to escape local optima. Drawing on natural and biological principles, they generally obtain better solutions than heuristic algorithms [].

Swarm Intelligence (SI) [] is a significant branch of Artificial Intelligence (AI) that is grounded in the intelligent collective behavior observed in social groups in nature []. Researchers draw inspiration from various species and natural phenomena, leading to the development of various metaheuristic optimization algorithms based on SI []. The Particle Swarm Optimization Algorithm (PSO) [] conceptualizes feasible solutions to optimization problems as particles within a search space. Each particle possesses a specific velocity and position, updating these attributes based on its historical optimal position and the best position identified within the group, thereby facilitating the search for superior solutions. In this context, each particle represents a candidate solution within the solution space. Ant Colony Optimization (ACO) [] is another metaheuristic optimization algorithm inspired by the foraging behavior of natural ant colonies. Its fundamental principle involves addressing combinatorial optimization problems by simulating the collaborative behavior of ants as they release and perceive pheromones during food-searching activities. This bio-inspired algorithm, governed by simple rules that emulate biological swarm intelligence, provides an effective means of tackling complex optimization challenges. The Firefly Algorithm (FA) [] is a metaheuristic driven by swarm intelligence inspired by the bioluminescent attraction mechanisms of fireflies. It utilizes brightness-mediated interactions to guide individuals toward optimal solutions, achieving a balance between global exploration and local exploitation through adaptive attraction dynamics and stochastic movement. Grey Wolf Optimization (GWO) [] establishes an optimization search framework based on biological group intelligence by simulating the hierarchy, collaborative predation strategies, and group decision-making mechanisms of grey wolf populations in nature. 
The algorithm categorizes individual grey wolves into a four-level hierarchy with a strict social division of labor: α wolves serve as population leaders responsible for global decision-making; β wolves act as secondary coordinators assisting in decision execution; δ wolves form the base population unit and engage in local searches; and ω wolves function as followers, completing comprehensive explorations of the solution space. The Whale Optimization Algorithm (WOA) [] mimics the feeding behavior of whales, particularly humpback whales, and addresses complex optimization problems through distributed search, autonomous decision-making, and adaptive adjustment. The Sparrow Search Algorithm (SSA) [] simulates the role differentiation of foraging sparrows, distinguishing between leaders (sparrows that locate food) and followers (sparrows that trail the leaders). Leaders primarily search for food, while followers stay within a certain range of the leaders to help locate it. In addition, sparrows adjust their positions to evade predators; these behaviors are abstracted into the key steps the algorithm uses to optimize the objective function.

However, as asserted by the “No Free Lunch” (NFL) theorem [], every algorithm has inherent limitations, and no single algorithm can solve all optimization problems. Consequently, many researchers are dedicated to proposing new algorithms or enhancing existing ones. For instance, Zhu Fang et al. [] introduced a good point set strategy during the initialization phase of the dung beetle optimization algorithm to increase population diversity. They also proposed a new nonlinear convergence factor to balance exploration and exploitation, a dynamic strategy for balancing the numbers of spawning and foraging dung beetles, and a perturbation strategy based on quantum computation and the t-distribution to strengthen the algorithm’s search capability. Ya Shen et al. [] proposed an improved whale optimization algorithm based on multi-population evolution to address the slow convergence of the whale optimization algorithm and its tendency to fall into local optima. The population is divided by fitness value into three sub-populations (exploratory, exploitative, and fitness), each of which performs a different update strategy; experiments verified that this multi-swarm co-evolutionary strategy effectively enhances the algorithm’s search capability. Yiying Zhang et al. [] proposed the EJaya algorithm, whose local exploitation strategy enhances local search by defining upper and lower local attraction points (combining the current best, the current worst, and the population mean), while its global exploration strategy expands the search scope by randomly perturbing historical population information to avoid stagnation in the later stages. Dhargupta et al. [] proposed a Selective Opposition-based Grey Wolf Optimizer (SOGWO) that applies dimension-selective opposition learning to improve exploration efficiency. By identifying ω-wolves through Spearman’s correlation analysis, the algorithm targets low-status individuals for opposition learning, thereby reducing redundant search and improving convergence speed while maintaining the exploration–exploitation balance. Shuang Liang et al. [] proposed an Enhanced Sparrow Search Swarm Optimizer (ESSSO). First, ESSSO introduces an adaptive sinusoidal walking strategy (SLM) based on the von Mises distribution, which enables individuals to adjust their learning rate dynamically to improve evolutionary efficiency; second, it adopts a learning strategy with roulette wheel selection (LSR) to maintain population diversity and prevent premature convergence; third, it uses a two-stage evolutionary strategy (TSE) that combines a mutation mechanism (AMM) to enhance local search with a selection mechanism (SMS) to accelerate convergence. These studies show that appropriately improved intelligent algorithms can be more adaptable and effective in complex applications and hold certain advantages over other heuristic algorithms.

The Red-billed Blue Magpie Optimizer (RBMO) [] is a novel swarm intelligence-based metaheuristic algorithm inspired by the hunting behavior of red-billed blue magpies, which rely on community cooperation for activities such as searching for food, attacking prey, and storing food. The original paper applied RBMO to various domains, including numerical optimization, engineering design problems, and UAV path planning. Although RBMO has a simple structure, few parameters, and effective optimization performance, it still struggles to achieve optimal results on complex optimization problems. To address these challenges, we propose an improved version of the algorithm, termed the Multi-Strategy Enhanced Red-billed Blue Magpie Optimizer (MRBMO). The enhancement consists of a new boundary constraint handling method and a new prey attack model, which together improve the algorithm’s exploration–exploitation balance and overall performance. The primary contributions of this paper are as follows:

- A new boundary constraint handling method is designed and shown to accelerate convergence and improve the exploitation ability of the algorithm. The method can also be applied to other algorithms that require boundary handling.

- Inspired by particle swarm optimization (PSO), the update rule of the exploitation (prey-attack) stage of RBMO is redesigned to introduce each individual’s historical best information, guiding the algorithm to search the solution space more thoroughly. The step size is controlled by Lévy Flight to help the algorithm avoid falling into local optima.

- The proposed MRBMO is evaluated on 41 benchmark functions, and its optimization performance is compared with that of eight other advanced, high-performance algorithms. MRBMO is also successfully applied to four classical engineering design problems.

This paper is organized as follows. Section 2 introduces the foundational principles of the Red-billed Blue Magpie Optimizer (RBMO). Section 3 proposes a multi-strategy enhanced version of the algorithm (MRBMO) to address the limitations of the original RBMO. Section 4 presents a comprehensive experimental comparison between MRBMO and eight other state-of-the-art optimization algorithms. To further validate the practical utility of the proposed improvements, Section 5 applies MRBMO to real-world engineering design problems. Finally, Section 6 summarizes the key contributions and findings of this study.

2. Red-Billed Blue Magpie Optimizer

In metaheuristic algorithms, exploration (diversification) and exploitation (intensification) work in tandem to determine the performance and efficiency of the algorithm []. On the one hand, the algorithm must identify promising regions by searching a broad space; on the other hand, it must concentrate its resources on exploiting these promising regions in depth. Striking a balance between exploration and exploitation is often one of the primary challenges in designing intelligent optimization algorithms []. An excessive inclination toward exploration can result in slow convergence and high computational cost, while an overemphasis on exploitation may cause premature convergence to local optima, preventing the algorithm from discovering the global optimum. In the Red-billed Blue Magpie Optimizer (RBMO), exploration and exploitation correspond to searching for prey and attacking prey, respectively.

2.1. Searching for Prey

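When searching for prey, red-billed blue magpies usually operate in small groups (2–5 individuals) or collectively (more than 10 individuals) to exchange information. Therefore, two update models were designed, with small-group and collective actions corresponding to Equations (1) and (2), respectively.

$$X_i(t+1) = X_i(t) + \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_{rs}(t)\right) \times Rand_1 \quad (1)$$

$$X_i(t+1) = X_i(t) + \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_{rs}(t)\right) \times Rand_2 \quad (2)$$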

where t denotes the current iteration number; $X_i(t+1)$ denotes the new position of the i-th search agent; p and q denote the numbers of individuals randomly selected from the population, with p ranging from 2 to 5 and q ranging from 10 to n, where n is the population size; $X_m(t)$ denotes the m-th randomly selected individual; $X_i(t)$ denotes the i-th individual; $X_{rs}(t)$ denotes a randomly selected search agent in the current iteration; and $Rand_1$ and $Rand_2$ denote two independent random variables uniformly distributed over the interval [0, 1).
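The two search models can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors’ implementation: positions are plain lists, the uniform factor Rand is drawn independently for every dimension, and the function name `search_prey_update` is hypothetical. Passing a group size p in 2..5 gives Equation (1); passing q in 10..n gives Equation (2).

```python
import random


def search_prey_update(population, i, group_size, rng=random):
    """Candidate position for agent i under Eq. (1) (group_size = p) or Eq. (2) (group_size = q)."""
    dim = len(population[i])
    # Average the positions of `group_size` distinct, randomly selected individuals.
    selected = rng.sample(population, group_size)
    mean = [sum(ind[d] for ind in selected) / group_size for d in range(dim)]
    # X_rs: a randomly selected search agent from the current population.
    x_rs = rng.choice(population)
    # Rand ~ U[0, 1), drawn per dimension here (an assumption of this sketch).
    return [population[i][d] + (mean[d] - x_rs[d]) * rng.random() for d in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(20)]
    p = rng.randint(2, 5)            # small group of 2-5 magpies
    q = rng.randint(10, len(pop))    # collective of 10..n magpies
    print(search_prey_update(pop, 0, p, rng))
    print(search_prey_update(pop, 0, q, rng))
```

Because the move is driven by the difference between a group mean and a random agent, a fully converged population (all positions equal) produces no movement, so diversity in this phase comes entirely from the population itself.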

2.2. Attacking the Prey

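In small-group operations, the main targets are small prey or plants; the corresponding mathematical model is shown in Equation (3). When acting collectively, red-billed blue magpies can target larger prey, such as large insects or small vertebrates; this behavior is modeled by Equation (4).

$$X_i(t+1) = X_{food}(t) + CF \times \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_i(t)\right) \times Randn_1 \quad (3)$$

$$X_i(t+1) = X_{food}(t) + CF \times \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_i(t)\right) \times Randn_2 \quad (4)$$

$$CF = \left(1 - \frac{t}{T}\right)^{2 \times \frac{t}{T}}$$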

where $X_{food}(t)$ represents the position of the food, i.e., the solution with the minimum fitness value in the current iteration (the current best solution); CF is a coefficient that decreases nonlinearly with the number of iterations to adaptively control the search step; T denotes the maximum number of iterations; and $Randn_1$ and $Randn_2$ represent random numbers drawn from the standard normal distribution (mean 0, standard deviation 1).
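A corresponding sketch of the prey-attack step is given below, again as an illustration rather than the reference code. It assumes the coefficient form $CF = (1 - t/T)^{2t/T}$, which we take from the original RBMO formulation, draws the standard normal factor independently per dimension, and uses hypothetical function names.

```python
import random


def cf(t, T):
    """Step coefficient CF = (1 - t/T) ** (2 * t / T): 1 at t = 0, decaying nonlinearly to 0 at t = T."""
    return (1 - t / T) ** (2 * t / T)


def attack_prey_update(population, i, x_food, group_size, t, T, rng=random):
    """Candidate position for agent i under Eq. (3) (group_size = p) or Eq. (4) (group_size = q)."""
    dim = len(x_food)
    selected = rng.sample(population, group_size)
    mean = [sum(ind[d] for ind in selected) / group_size for d in range(dim)]
    coef = cf(t, T)
    # Randn ~ N(0, 1), drawn per dimension (an assumption of this sketch).
    return [x_food[d] + coef * (mean[d] - population[i][d]) * rng.gauss(0.0, 1.0)
            for d in range(dim)]


if __name__ == "__main__":
    T = 500
    for t in (0, 125, 250, 375, 499):
        print(t, round(cf(t, T), 4))
```

Since CF shrinks toward zero, candidates collapse onto the food position late in the run, which is what makes this phase exploitative.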

2.3. Food Storage

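Let $X_i^{new}$ denote the candidate position generated by Equations (1)–(4). In the original RBMO, the food storage behavior is defined as a greedy rule: the candidate is retained only when its fitness value is better than that of the current position, as shown in Equation (5).

$$X_i(t+1) = \begin{cases} X_i^{new}, & fitness_{new}^{i} < fitness_{old}^{i} \\ X_i(t), & \text{otherwise} \end{cases} \quad (5)$$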

where $fitness_{old}^{i}$ and $fitness_{new}^{i}$ represent the fitness values of the i-th red-billed blue magpie before and after the position update, respectively.
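The greedy rule amounts to a one-line comparison. The sketch assumes minimization and, as an assumption not stated in the text, keeps the previous position on a tie; the function name is illustrative.

```python
def food_storage(x_old, f_old, x_new, f_new):
    """Greedy food-storage rule of Eq. (5) for minimization.

    The candidate replaces the stored position only if its fitness is strictly
    better; on a tie the previous position is kept (assumed tie-break).
    """
    if f_new < f_old:
        return x_new, f_new
    return x_old, f_old
```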

2.4. Detailed Process of the RBMO

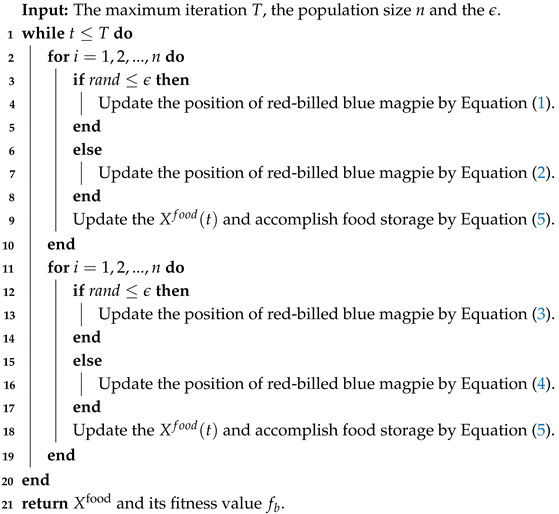

A control parameter is incorporated into the RBMO algorithm to switch between the small-group and collective update strategies. The overall framework of RBMO is shown in Algorithm 1.

Algorithm 1: The framework of the RBMO algorithm.
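Because the pseudocode of Algorithm 1 is not reproduced in this text, the following compact Python sketch shows one plausible way of assembling Equations (1)–(5) into a complete loop. It rests on our own assumptions, not on the reference implementation: the control parameter is called `epsilon` (default 0.5, an assumed value) and is compared with a uniform random number to choose between a small group and a collective; out-of-bound values are clipped as in conventional boundary handling; the food position is refreshed once per iteration; and the population size must be at least 10.

```python
import random


def rbmo(f, lb, ub, n=30, T=500, epsilon=0.5, seed=None):
    """Minimal RBMO sketch for minimization (requires n >= 10); returns (best_position, best_fitness)."""
    rng = random.Random(seed)
    dim = len(lb)

    def clip(x):
        # Conventional boundary handling: clamp each dimension to [lb_d, ub_d].
        return [min(max(x[d], lb[d]), ub[d]) for d in range(dim)]

    def group_mean():
        # Small group (2-5) with probability epsilon, otherwise a collective (10..n).
        size = rng.randint(2, 5) if rng.random() < epsilon else rng.randint(10, n)
        chosen = rng.sample(pop, size)
        return [sum(x[d] for x in chosen) / size for d in range(dim)]

    def store(i, cand):
        # Food storage (Eq. (5)): keep the candidate only if it is strictly better.
        fc = f(cand)
        if fc < fit[i]:
            pop[i], fit[i] = cand, fc

    pop = [[rng.uniform(lb[d], ub[d]) for d in range(dim)] for _ in range(n)]
    fit = [f(x) for x in pop]
    best = min(range(n), key=fit.__getitem__)
    x_food, f_food = pop[best][:], fit[best]

    for t in range(T):
        # Searching for prey (Eqs. (1)/(2)).
        for i in range(n):
            mean, x_rs = group_mean(), rng.choice(pop)
            store(i, clip([pop[i][d] + (mean[d] - x_rs[d]) * rng.random() for d in range(dim)]))
        # Attacking prey (Eqs. (3)/(4)) around the current food position.
        cf = (1 - t / T) ** (2 * t / T)
        for i in range(n):
            mean = group_mean()
            store(i, clip([x_food[d] + cf * (mean[d] - pop[i][d]) * rng.gauss(0.0, 1.0)
                           for d in range(dim)]))
        best = min(range(n), key=fit.__getitem__)
        if fit[best] < f_food:
            x_food, f_food = pop[best][:], fit[best]
    return x_food, f_food


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    print(rbmo(sphere, [-100.0] * 10, [100.0] * 10, n=30, T=200, seed=42))
```

Clipping is used here only because it is the conventional rule the original algorithm relies on; the MRBMO modifications in Section 3 replace exactly this step and the attack update.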

3. The Multi-Strategy Red-Billed Blue Magpie Optimizer

3.1. A New Way to Handle Boundary Constraints

In the original algorithm, when a dimension of a solution vector exceeds its bounds during the iterative process, it is reset to the violated bound, as shown in Equation (6); most intelligent algorithms handle boundary violations in the same way []. However, this method makes no use of historical search information and can easily cause a premature loss of population diversity, which hinders convergence.

$$X_{i,d}(t+1) = \begin{cases} ub_d, & X_{i,d}(t+1) > ub_d \\ lb_d, & X_{i,d}(t+1) < lb_d \\ X_{i,d}(t+1), & \text{otherwise} \end{cases} \quad (6)$$

This study introduces a dynamic boundary correction strategy that incorporates elite-guided dimensional adaptation. As formalized in Equation (7), the mechanism replaces each out-of-bound dimension value with the corresponding dimension of the global best solution $X_{best}(t)$ (the best solution found so far). By leveraging dimensional information from the elite individual, this approach offers three advantages: (1) it provides directional guidance through preferential dimension inheritance; (2) it promotes dimensional collaboration through cross-dimensional information exchange; and (3) it enhances exploitation through adaptive recombination of advantageous dimensional traits. The strategy thus turns isolated boundary handling into a coordinated optimization step, enabling more efficient exploitation of promising search regions while maintaining exploration diversity.

$$X_{i,d}(t+1) = \begin{cases} X_{best,d}(t), & X_{i,d}(t+1) > ub_d \ \text{or}\ X_{i,d}(t+1) < lb_d \\ X_{i,d}(t+1), & \text{otherwise} \end{cases} \quad (7)$$

Here, d is the dimension index ($d = 1, 2, \ldots, D$), where D is the total number of dimensions of the search space, and $ub_d$ and $lb_d$ denote the upper and lower bounds of the d-th dimension, respectively, which keep the solution within the feasible search region.
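The difference between the conventional rule of Equation (6) and the elite-guided rule of Equation (7) can be seen in a small sketch. It assumes that the repair source is the best solution found so far and that this solution itself lies inside the bounds; the function names are illustrative.

```python
def clamp_to_bounds(x, lb, ub):
    """Conventional handling (Eq. (6)): move each out-of-bound dimension onto the violated bound."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]


def elite_boundary_repair(x, x_best, lb, ub):
    """Elite-guided handling (Eq. (7)): an out-of-bound dimension d inherits x_best[d];
    dimensions that are already feasible are left unchanged."""
    return [b if (v < lo or v > hi) else v for v, b, lo, hi in zip(x, x_best, lb, ub)]


if __name__ == "__main__":
    lb, ub = [-5.0] * 3, [5.0] * 3
    x = [6.0, -1.0, -7.0]          # dimensions 0 and 2 are out of bounds
    x_best = [0.5, 0.2, -0.3]      # best solution found so far (assumed feasible)
    print(clamp_to_bounds(x, lb, ub))                # [5.0, -1.0, -5.0]
    print(elite_boundary_repair(x, x_best, lb, ub))  # [0.5, -1.0, -0.3]
```

Clamping piles violating coordinates onto the bounds, whereas the elite repair moves them into the neighborhood of the incumbent best, which is the exploitation effect described above.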

3.2. Individual Optimal Value Guidance

In the classical Particle Swarm Optimization (PSO) framework, the position update integrates a dual guidance strategy that uses both the individual historical best solution (pbest) and the group historical best solution (gbest). This memory-driven approach balances global exploration with local exploitation and provides a theoretical basis for the convergence of the algorithm. In contrast, the position update in the original prey attack model of RBMO considers only one-way guidance from the global best solution. This limitation has two significant drawbacks: (1) ignoring individual cognitive experience leads to a premature loss of population diversity; and (2) the single-source guidance mode is prone to search stagnation.

To address these challenges, this study introduces an individual optimal value guidance strategy based on Lévy Flight [] into the prey-attack phase. The strategy has two characteristics: first, it maintains a memory bank of each individual’s historical best solution; second, it uses the Lévy Flight distribution to generate the search step size. The heavy-tailed power-law distribution of step sizes accommodates both fine local search and large global jumps. The combination of frequent short-range moves and occasional long-range jumps produced by Lévy Flight significantly increases the probability of escaping local optima while preserving population diversity. In this study, Mantegna’s algorithm is used to simulate Lévy Flight efficiently, and the step calculation is shown in Equation (8).

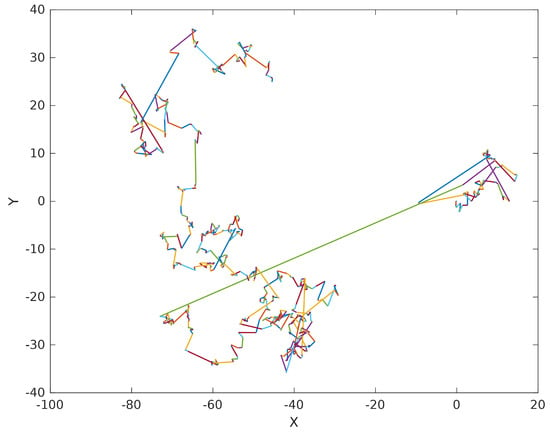

where β denotes the shape parameter (commonly set to 1.5), Γ represents the gamma function, and s is the step size. Figure 1 illustrates a Lévy Flight trajectory; its random walk, which alternates between long and short steps, can enhance the algorithm’s global search efficiency and its ability to avoid local optima []. The updated prey-attack position is then determined by Equations (9) and (10).

Figure 1.

Lévy Flight Random Walk.
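Mantegna’s algorithm can be sketched as follows. We assume Equation (8) takes the standard form $\sigma_u = \left[\frac{\Gamma(1+\beta)\sin(\pi\beta/2)}{\Gamma\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}}\right]^{1/\beta}$, $u \sim N(0, \sigma_u^2)$, $v \sim N(0, 1)$, $s = u/|v|^{1/\beta}$; β = 1.5 is used only as a default, and the function names are hypothetical.

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of u in Mantegna's algorithm for shape parameter beta (typically 0 < beta < 2)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(dim, beta=1.5, rng=random):
    """One Lévy-distributed step vector: s = u / |v| ** (1 / beta), u ~ N(0, sigma_u^2), v ~ N(0, 1)."""
    sigma_u = mantegna_sigma(beta)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma_u)
        v = rng.gauss(0.0, 1.0)
        step.append(u / abs(v) ** (1 / beta))
    return step


if __name__ == "__main__":
    rng = random.Random(7)
    print(round(mantegna_sigma(1.5), 4))   # 0.6966
    steps = sorted(abs(s) for s in levy_step(10000, rng=rng))
    # Heavy tail: the median step is short, but the largest steps are far longer.
    print(steps[len(steps) // 2], steps[-1])
```

Comparing the median with the maximum of many sampled steps makes the "many short, few long" behavior shown in Figure 1 visible numerically.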

Here, $pbest_i(t)$ denotes the historical best position of the i-th individual at the t-th iteration, and its update rule is defined in Equation (11), where $f(\cdot)$ denotes the objective function.

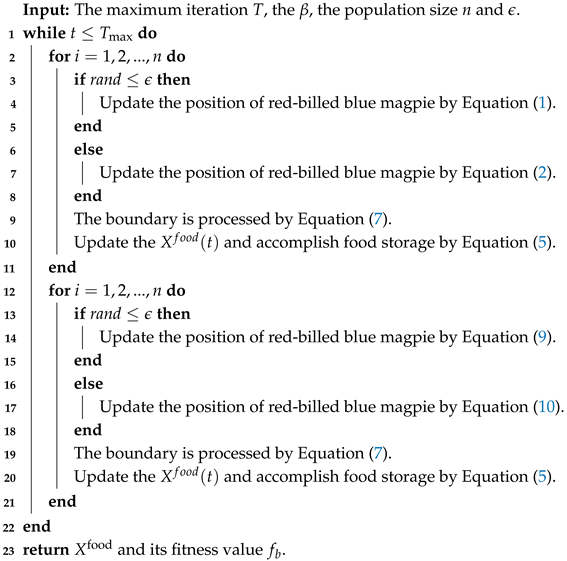
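The memory bank of Equation (11) can be maintained with a simple greedy update. This sketch assumes minimization, a strict improvement test, and that the fitness values of the new positions are already available from the main loop, so maintaining the memory needs no extra evaluations. How pbest enters Equations (9) and (10) is not reproduced here, and the function name is hypothetical.

```python
def update_personal_best(pop, fit, pbest, pbest_fit):
    """Eq. (11) for the whole population (minimization), updating pbest/pbest_fit in place.

    pbest_i is replaced by X_i(t+1) only when f(X_i(t+1)) < f(pbest_i(t)); `fit`
    holds f(X_i(t+1)) values computed elsewhere, so no evaluations happen here.
    Returns the number of individuals whose memory improved.
    """
    improved = 0
    for i, (x, fx) in enumerate(zip(pop, fit)):
        if fx < pbest_fit[i]:
            pbest[i], pbest_fit[i] = list(x), fx   # copy, so later moves do not alter the memory
            improved += 1
    return improved


if __name__ == "__main__":
    pbest, pbest_fit = [[0.0, 0.0], [1.0, 1.0]], [4.0, 2.0]
    pop, fit = [[0.5, 0.5], [3.0, 3.0]], [0.5, 18.0]
    print(update_personal_best(pop, fit, pbest, pbest_fit))  # 1
    print(pbest, pbest_fit)  # [[0.5, 0.5], [1.0, 1.0]] [0.5, 2.0]
```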

3.3. Detailed Process of the MRBMO

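The overall framework of the Multi-strategy Red-billed Blue Magpie Optimizer (MRBMO) is presented in Algorithm 2. The added operations (the elite-guided boundary repair, the Lévy step generation, and the personal-best update) each cost O(n × D) per iteration, where n is the population size and D the dimension; this is the same order as the existing position updates, so the overall time complexity remains equivalent to that of the baseline RBMO.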

Algorithm 2: The framework of the MRBMO algorithm.

4. Numerical Experiment

The CEC2017 [] test suite is a set of benchmark functions for evaluating and comparing the performance of intelligent optimization algorithms. It consists of 29 single-objective functions of varying complexity, designed to simulate real-world optimization problems and provide a fair evaluation platform. The suite includes unimodal functions (F1–F2), which have a single global optimum and no local optima and are mainly used to evaluate the convergence speed and exploitation ability of an algorithm; simple multimodal functions (F3–F9), which contain multiple local optima and are used to evaluate the ability to avoid local optima; hybrid functions (F10–F19), which are composed of several basic functions, have complex search spaces, and test performance on nonlinear and discontinuous problems; and composition functions (F20–F29), which are generated from multiple basic functions through rotation, shifting, and other operations, have higher complexity, and are used to evaluate performance on high-dimensional complex problems. The CEC2022 [] benchmark suite is similar to CEC2017 and contains 12 test functions grouped into unimodal, basic, hybrid, and composition functions. CEC2022 further improves function complexity, diversity, and real-world problem simulation, enabling a more comprehensive evaluation of algorithm performance across different types of optimization problems. The CEC2017 and CEC2022 test functions are detailed in Table A1 and Table A2.

4.1. Parameters Sensitivity Testing

In the prey-attack stage, Lévy Flight is used to generate the step size. Its shape parameter β is a constant whose value significantly influences the performance of the algorithm. To select β and the control parameter of the original algorithm, a set of experiments was designed over several candidate values of each parameter (listed in Table 1). The CEC2022 benchmark suite was used with dimension D = 20, a maximum of 500 iterations, and a population size of 30, and each configuration was run 30 times independently. Table 1 reports the average performance and the corresponding rankings of each parameter configuration over the 30 trials. To evaluate overall performance statistically, the Friedman rank sum test was applied to the ranked results, with lower average ranks indicating better performance. One parameter combination consistently achieved the best performance on multiple benchmark functions and is therefore recommended as the parameter setting of the proposed algorithm.

Table 1.

The influence of MRBMO’s parameters (the Lévy shape parameter β and the RBMO control parameter) tested on CEC2022.
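The Friedman ranking used here and in Section 4.3 can be reproduced from a table of mean results. The sketch below assumes a layout of `results[f][a]` (function f, algorithm or configuration a, lower is better), ranks each row with ties sharing the average rank, averages the ranks, and computes the classical Friedman chi-square statistic without tie correction. The numbers in the demo are toy values, not data from Table 1.

```python
def rank_row(values):
    """1-based ranks of `values` (lower is better); tied values share their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    k = 0
    while k < len(order):
        j = k
        while j + 1 < len(order) and values[order[j + 1]] == values[order[k]]:
            j += 1
        for m in range(k, j + 1):
            ranks[order[m]] = (k + j) / 2 + 1   # mean of positions k+1 .. j+1
        k = j + 1
    return ranks


def friedman_average_ranks(results):
    """results[f][a]: mean result of algorithm a on function f. Returns the average rank per algorithm."""
    rows = [rank_row(row) for row in results]
    return [sum(r[a] for r in rows) / len(rows) for a in range(len(results[0]))]


def friedman_statistic(avg_ranks, n_functions):
    """Friedman chi-square: 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4), no tie correction."""
    k = len(avg_ranks)
    return 12 * n_functions / (k * (k + 1)) * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4)


if __name__ == "__main__":
    results = [[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 5.0]]  # toy data: 3 functions x 3 algorithms
    avg = friedman_average_ranks(results)
    print(avg)                                    # [1.5, 1.5, 3.0]
    print(friedman_statistic(avg, len(results)))  # 4.5
```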

4.2. Ablation Experiment

To assess the impact of each proposed strategy on performance, an ablation experiment is designed in this section. Starting from the original RBMO, the first variant, RBMO1, replaces only the out-of-bounds handling method. The second variant, RBMO2, replaces only the prey-attack (hunting) update with the new update mechanism. Combining both enhancements yields MRBMO, the final algorithm proposed in this study. RBMO1, RBMO2, and MRBMO are compared with the original RBMO to assess the magnitude of the improvements.

The CEC2022 benchmark suite was used to evaluate performance, and the minimum value, average value, standard deviation, and Friedman ranking were calculated for each test function (Table 2). For the unimodal function F1, both RBMO1 and RBMO2 achieved better values than RBMO, indicating that each strategy improves RBMO to some degree. Moreover, RBMO2 obtained not only smaller values but also more stable results than RBMO1, suggesting that the second strategy contributes more to the improvement. For the hybrid function F6, the average values show that applying either strategy alone weakened the algorithm’s ability to escape local optima, whereas combining them yielded the best performance and the solutions closest to the theoretical optimum. On the composition functions F9–F12, combining the two strategies improved the convergence performance of RBMO, although a gap to the theoretical optimum remains. The final mean ranks show that each strategy improves the overall performance of RBMO to some degree and that their combination yields the best results.

Table 2.

Ablation experiment of CEC2022.

4.3. Comparison of MRBMO with State-of-the-Art and Well-Established Algorithms

The comparison algorithms selected for this study cover a diverse range of optimization techniques. They include the original Red-billed Blue Magpie Optimizer (RBMO) and two advanced variants: the Selective Opposition-based Grey Wolf Optimizer (SOGWO) [] and the Transient Trigonometric Harris Hawks Optimizer (TTHHO) []. The classical Particle Swarm Optimization (PSO) [] is also included, along with more recently developed methods such as the Sparrow Search Algorithm (SSA) [] and the RIME Optimization Algorithm (RIME) []. LSHADE_SPACMA [], a top-performing algorithm from the CEC competitions, is also incorporated. The parameters of each algorithm were set strictly according to the recommendations in the respective original publications.

To further verify the effectiveness of the improved algorithm, the CEC2017 test suite was used. The mean value, standard deviation, and ranking of MRBMO and the comparison algorithms over 30 independent runs were recorded for dimensions 30, 50, and 100, as shown in Table 3, Table 4 and Table 5, respectively.

Table 3.

Statistics results of 30D.

Table 4.

Statistics results of 50D.

Table 5.

Statistics results of 100D.

- When the dimension is 30, MRBMO achieved the best average values on the unimodal functions F1 and F2, demonstrating strong exploitation capability. On the simple multimodal functions F3–F9, MRBMO also achieved the best average values, outperforming all other algorithms, which indicates a strong ability to avoid local optima. However, the standard deviations on some of these functions reveal noticeable fluctuations in MRBMO’s results. On the hybrid function F17, MRBMO (2.56E+03) is slightly inferior to RBMO (2.42E+03) but significantly superior to the other algorithms. Overall, MRBMO has a lower standard deviation on most functions and ranks second or third in standard deviation on only a few, which indicates that its performance is stable across different problems, adapts well to problem characteristics, and is robust.

- We further investigate how dimensionality affects the performance of MRBMO. In the 50- and 100-dimensional experiments, MRBMO consistently achieves the first-place average ranking on most test functions, indicating that its relative performance does not deteriorate as dimensionality increases. Overall, MRBMO remains highly competitive on complex high-dimensional optimization problems.

The rankings of each algorithm on the 29 benchmark functions in 30 dimensions were analyzed statistically. As illustrated in Figure 2, MRBMO ranks first on 26 test functions and second on the remaining three (F17, F21, and F28). We attribute this to its multi-strategy framework, which balances exploration and exploitation through dynamic boundary constraint handling and elite-guided Lévy Flight. In contrast, other algorithms, such as SSA and PSO, show large performance fluctuations: they are competitive on specific functions but less effective on others.

Figure 2.

Radar chart of the rankings of all algorithms.

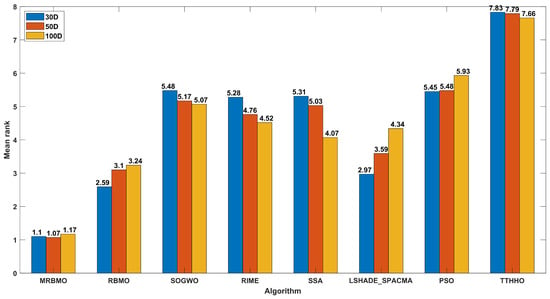

Furthermore, to evaluate the overall performance of all algorithms rigorously, the Friedman rank-sum test was employed. Figure 3 presents a bar chart of the mean ranks of all algorithms, where lower ranks indicate better performance. Across all tested dimensionalities, MRBMO consistently attained the lowest average rank, indicating that it is robust and insensitive to changes in dimensionality.

Figure 3.

The results of the Friedman rank sum test of CEC2017.

In this study, the Wilcoxon rank sum test [], a nonparametric statistical method, was used at a significance level of 0.05 to evaluate whether the performance differences between the improved method and the comparison algorithms are statistically significant. A p-value below 0.05 indicates a significant difference between the two algorithms, whereas a p-value of 0.05 or higher indicates that the difference is not significant and the two algorithms can be considered comparable. The test results are presented in Table A3, Table A4 and Table A5, with p-values greater than 0.05 shown in bold. Table 6 summarizes, for each comparison algorithm, the number of comparisons with and without statistically significant differences, and its final row gives the aggregate results. The proposed method differs significantly from the competing algorithms on most test functions; together with the mean rankings reported above, this supports its superiority.

Table 6.

Overall results of the Wilcoxon rank sum test on CEC2017.

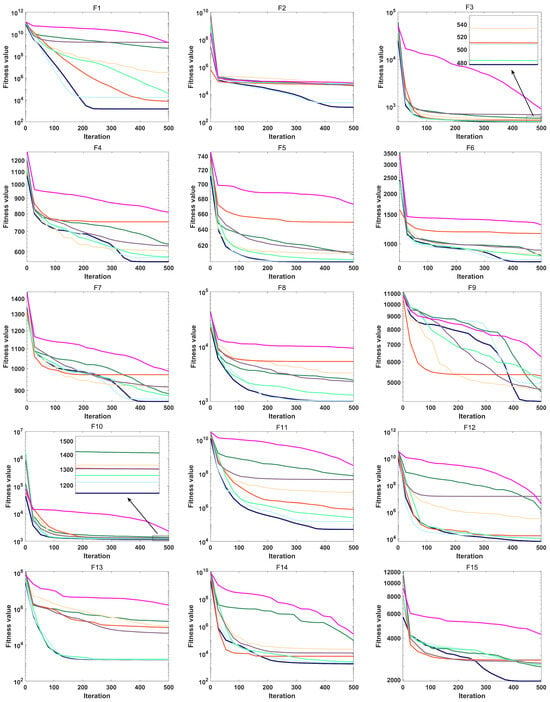

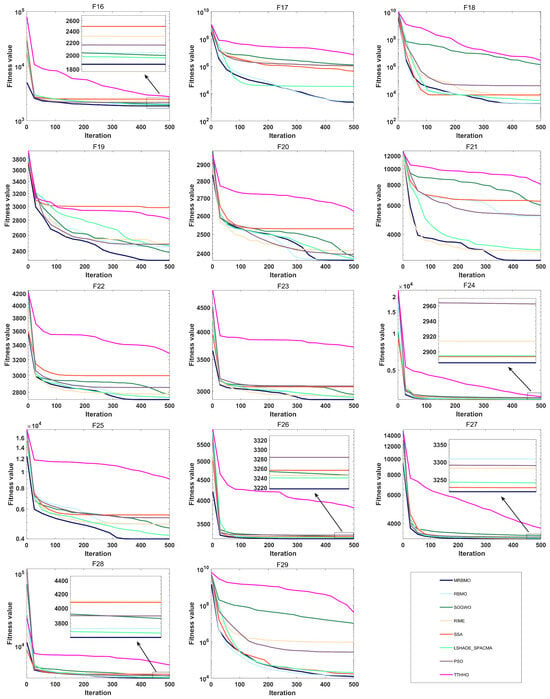

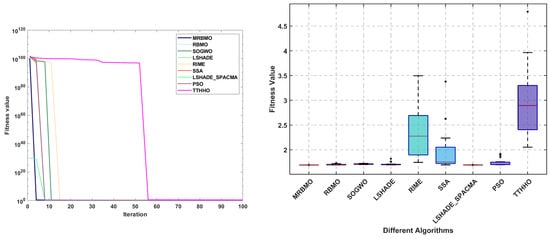
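For readers who want to reproduce the significance tests, here is a stdlib-only sketch of the two-sided Wilcoxon rank-sum test. It uses the large-sample normal approximation without tie correction, which is the same approximation `scipy.stats.ranksums` applies; the function names and the toy samples are illustrative and are not results from Tables A3–A5.

```python
import math


def average_ranks(values):
    """1-based ranks of the pooled sample; tied values share their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    k = 0
    while k < len(order):
        j = k
        while j + 1 < len(order) and values[order[j + 1]] == values[order[k]]:
            j += 1
        for m in range(k, j + 1):
            ranks[order[m]] = (k + j) / 2 + 1
        k = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).

    Returns (z, p); z < 0 means x tends to take smaller values (better, for minimization) than y.
    """
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                              # rank sum of sample x
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))             # equals 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    a = [1.0, 2.0, 3.0, 4.0, 5.0]     # toy final errors of a hypothetical algorithm A
    b = [6.0, 7.0, 8.0, 9.0, 10.0]    # toy final errors of a hypothetical algorithm B
    z, p = rank_sum_test(a, b)
    print(round(z, 4), round(p, 4), "significant" if p < 0.05 else "not significant")
```

With 30 runs per algorithm, as in the experiments above, the normal approximation is usually considered adequate; exact tests are preferable for very small samples.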

4.4. Assessing Convergence Performance

To assess both the accuracy and the convergence speed of the algorithms, convergence curves of MRBMO and the other algorithms at dimension 30 are plotted in Figure 4 and Figure 5. In each subplot, the horizontal axis is the number of iterations and the vertical axis is the best fitness value averaged over 30 runs.
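Assuming each run logs the best fitness found at every iteration (minimization), an averaged convergence curve can be built as sketched below. Averaging best-so-far values arithmetically, rather than plotting medians or log-scaled values, is an assumption about how the figures were produced; the function names are hypothetical.

```python
def best_so_far(fitness_per_iter):
    """Turn raw per-iteration fitness values into a monotone best-so-far curve."""
    curve, best = [], float("inf")
    for f in fitness_per_iter:
        best = min(best, f)
        curve.append(best)
    return curve


def mean_convergence_curve(runs):
    """Average the best-so-far curves of equal-length independent runs."""
    curves = [best_so_far(run) for run in runs]
    n_runs, n_iters = len(curves), len(curves[0])
    return [sum(c[t] for c in curves) / n_runs for t in range(n_iters)]


print(mean_convergence_curve([[5, 3, 4, 1], [6, 6, 2, 2]]))  # [5.5, 4.5, 2.5, 1.5]
```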

Figure 4.

Convergence curves of F1–F15.

Figure 5.

Convergence curves of F16–F29.

- On the unimodal function F1, MRBMO converges faster than the other algorithms. Benefiting from its enhanced global search capability, it also obtains higher-quality solutions. The same advantage is observed on the other unimodal function, F2.

- On the simple multimodal functions F3 and F8, MRBMO shows no significant competitive advantage. On F4, F5, F6, and F7, however, while the other algorithms stagnate in local optima, MRBMO not only avoids stagnation but also converges markedly faster to better solutions, supporting the effectiveness of its local-optimum avoidance mechanism. On F9, MRBMO converges more slowly than SSA, RIME, and PSO during the initial phase (fewer than 300 iterations), but these algorithms then converge prematurely because they cannot escape local optima. MRBMO, in contrast, keeps improving in the later stage (beyond 300 iterations) and gradually approaches the global optimum, highlighting its advantage in long-term convergence. These phase-dependent differences reflect the balance MRBMO maintains between exploration and exploitation, which gives it stronger global convergence on complex optimization problems.

- On the hybrid functions, MRBMO shows clear performance advantages. On F11, F12, F14, F15, and F19, it outperforms all reference algorithms in both convergence rate and solution accuracy. On F10, MRBMO converges fastest during the initial phase (fewer than 100 iterations), and its solution quality rapidly approaches the theoretical optimum. On F13, F17, and F18, MRBMO performs comparably to RBMO and LSHADE_SPACMA, and all three outperform the remaining algorithms. On F16, MRBMO merely keeps pace with the other algorithms in the early iterations, but through continued exploration it finds higher-quality solutions in the later stage.

- MRBMO converges faster on several composition functions, particularly F20, F21, F22, F23, F25, and F29. On F24, F26, F27, and F28, its convergence performance is comparable to that of the other algorithms.

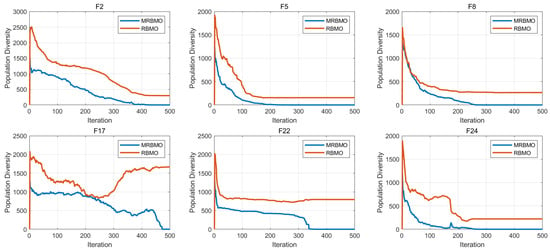

4.5. Population Diversity Analysis

Maintaining adequate population diversity throughout the optimization process is a critical factor influencing the performance of metaheuristic optimization algorithms, as highlighted in recent studies. Population diversity refers to the distribution of individuals within the search space and plays a pivotal role in balancing exploration and exploitation. When diversity is relatively high, individuals are more broadly distributed across the search space. This enhances the algorithm’s ability to explore various regions, thereby reducing the likelihood of becoming trapped in local optima. Conversely, lower levels of diversity may result in premature convergence, where the algorithm stagnates around suboptimal solutions due to insufficient exploration.

This section employs Equation (12) [] to quantify population diversity. The equation measures the dispersion of the population around its center of mass at each iteration t, computed from the value of the d-th dimension of the i-th individual at iteration t, where n is the population size and D is the number of dimensions of the search space. By aggregating the distance of each individual from the center of mass, this metric provides a numerical measure of how dispersed the population is at any point in the optimization process.
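A minimal sketch of such a center-of-mass diversity measure, assuming the common form I_C(t) = sqrt(Σ_i Σ_d (x_id(t) − c_d(t))²), where c_d(t) is the mean of the d-th dimension over the n individuals. Some papers normalize by n or omit the square root, so the exact scaling of Equation (12) is an assumption here, as is the function name.

```python
import math


def population_diversity(pop):
    """Dispersion of a population (list of n individuals, each with D values)
    around its center of mass; assumed form of Eq. (12)."""
    n, dim = len(pop), len(pop[0])
    center = [sum(ind[d] for ind in pop) / n for d in range(dim)]
    total = sum((ind[d] - center[d]) ** 2 for ind in pop for d in range(dim))
    return math.sqrt(total)


# Four points on the corners of a square around (1, 1).
print(population_diversity([[0, 0], [2, 0], [0, 2], [2, 2]]))  # sqrt(8)
```

Tracking this value once per iteration yields a diversity curve of the kind compared in Figure 6; a fully collapsed population gives 0.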

Figure 6 compares the population diversity dynamics of RBMO and MRBMO on the CEC2017 benchmark functions in 30 dimensions. Population diversity generally declines throughout the optimization process, which is expected as the algorithm increasingly focuses on refining solutions near the optimal region. However, MRBMO maintains consistently lower diversity than RBMO. This is likely attributable to the boundary constraint strategy of MRBMO, which guides individuals back toward feasible regions of the search space and can thereby accelerate convergence toward the current best solution. While this enhances exploitation around promising areas, it also narrows the breadth of exploration.

Figure 6.

Population diversity analysis.

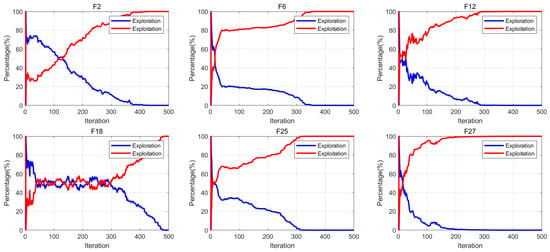

4.6. Exploration and Exploitation Evaluation

During evolutionary optimization, metaheuristic algorithms employ distinct mechanisms to coordinate exploration and exploitation among population members. This section adopts a methodology [] that quantifies search behavior by tracking dimension-wise variations in the population, enabling a quantitative assessment of exploration–exploitation tendencies throughout the evolutionary process []. The balance is evaluated using Equation (13). Here, X denotes the population matrix at the t-th iteration, with one row per individual and one column per dimension, and the dimensional diversity metric is defined in terms of the median of the d-th dimension across all individuals at iteration t and the d-th dimensional value of the i-th individual. The exploration and exploitation percentages (ERP and ETP, respectively) are derived from these measurements. The results are shown in Figure 7. As the iterations proceed, the exploitation curve rises progressively toward 100% while the exploration curve decreases to zero, indicating that the algorithm predominantly explores in the early stages and shifts its emphasis to exploitation in later stages.
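A minimal sketch, assuming Equation (13) follows the widely used dimension-wise diversity measure: Div_d is the mean absolute deviation of dimension d from its median, Div is the average of Div_d over dimensions, and the percentages are ERP = 100·Div/Div_max and ETP = 100·|Div − Div_max|/Div_max, where Div_max is the largest diversity observed during the run. The function names are hypothetical.

```python
import statistics


def dimension_wise_diversity(pop):
    """Average over dimensions of the mean absolute deviation from the per-dimension median."""
    n, dim = len(pop), len(pop[0])
    total = 0.0
    for d in range(dim):
        column = [ind[d] for ind in pop]
        med = statistics.median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / dim


def exploration_exploitation(div_history):
    """Convert a per-iteration diversity history into (ERP, ETP) percentage lists."""
    div_max = max(div_history)
    if div_max == 0:  # population never spread out: treat as pure exploitation
        return [0.0] * len(div_history), [100.0] * len(div_history)
    erp = [100.0 * div / div_max for div in div_history]
    etp = [100.0 * abs(div - div_max) / div_max for div in div_history]
    return erp, etp


print(exploration_exploitation([4.0, 2.0, 1.0]))  # ([100.0, 50.0, 25.0], [0.0, 50.0, 75.0])
```

With this definition ERP and ETP always sum to 100%, so the two curves in a plot like Figure 7 mirror each other.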

Figure 7.

Exploration and exploitation evaluation.

5. Engineering Design Application

Engineering design optimization seeks optimal system performance through mathematical modeling and algorithmic solution while satisfying physical constraints and performance requirements. To validate the efficacy of the proposed method, four engineering design problems are adopted in this section. For a fair comparison, all algorithms use identical settings: a population size of 30, a maximum of 100 iterations, and 30 independent runs to reduce the influence of randomness. The best value, mean, median, worst value, and standard deviation are recorded for each algorithm, and convergence curves and box plots are generated to visualize the solution distributions and search efficiency of each approach.
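The five statistics recorded for each algorithm can be computed from the final best values of the 30 runs as sketched below. Whether the paper reports the sample (n − 1) or population standard deviation is not stated, so the sample version used here is an assumption; the function name is hypothetical.

```python
import statistics


def summarize_runs(best_values):
    """Summary statistics of the final results of independent runs (minimization)."""
    return {
        "best": min(best_values),
        "mean": statistics.fmean(best_values),
        "median": statistics.median(best_values),
        "worst": max(best_values),
        "std": statistics.stdev(best_values),  # sample standard deviation (n - 1)
    }


print(summarize_runs([1.0, 2.0, 3.0, 4.0]))
```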

5.1. Extension/Compression Spring Design (TCSD)

The extension/compression spring design problem [], illustrated in Figure 8, seeks to minimize the spring weight by optimizing the wire diameter (d), the mean coil diameter (D), and the number of active coils (N). The goal is to find the parameter combination that meets the required performance at minimum weight, enabling efficient and lightweight spring designs for diverse applications. The mathematical model can be found in Appendix B.1.
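The sketch below assumes the formulation most often used for this benchmark (objective (N + 2)·D·d², four inequality constraints g ≤ 0, bounds 0.05 ≤ d ≤ 2, 0.25 ≤ D ≤ 1.3, 2 ≤ N ≤ 15), which may differ in notation or bounds from Appendix B.1. The static quadratic penalty and its weight are illustrative assumptions, not necessarily how the compared algorithms handled constraints, and all function names are hypothetical. The reference point is a near-optimal design commonly quoted in the literature, with a weight of about 0.012665.

```python
BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]  # d, D, N


def tcsd_objective(x):
    d, D, N = x  # wire diameter, mean coil diameter, number of active coils
    return (N + 2) * D * d ** 2


def tcsd_constraints(x):
    """Inequality constraints; the design is feasible when every value is <= 0."""
    d, D, N = x
    g1 = 1 - (D ** 3 * N) / (71785 * d ** 4)
    g2 = (4 * D ** 2 - d * D) / (12566 * (D * d ** 3 - d ** 4)) + 1 / (5108 * d ** 2) - 1
    g3 = 1 - 140.45 * d / (D ** 2 * N)
    g4 = (D + d) / 1.5 - 1
    return [g1, g2, g3, g4]


def clip_to_bounds(x):
    return tuple(min(max(v, lo), hi) for v, (lo, hi) in zip(x, BOUNDS))


def penalized_fitness(x, penalty=1e6):
    """Objective plus a static quadratic penalty on constraint violations."""
    violation = sum(max(0.0, g) ** 2 for g in tcsd_constraints(x))
    return tcsd_objective(x) + penalty * violation


x_ref = (0.051689, 0.356718, 11.288966)
print(tcsd_objective(x_ref), max(tcsd_constraints(x_ref)))
```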

Figure 8.

Curve and box plots of TCSD.

The statistical results of 30 independent runs are presented in Table 7. Compared with RBMO, the proposed method improves the mean value, the worst value, and the standard deviation. Nevertheless, it still trails LSHADE_SPACMA and LSHADE on some indicators. As shown in Figure 8, all algorithms start with relatively high fitness values and converge rapidly as the iterations advance. The box plots show that MRBMO, RBMO, LSHADE, and LSHADE_SPACMA have compact interquartile ranges (IQRs), indicating stable optimization processes. Notably, MRBMO has the fewest outliers, highlighting its superior control of solution quality.

Table 7.

Statistical results of TCSD.

5.2. Reducer Design Problem (RDP)

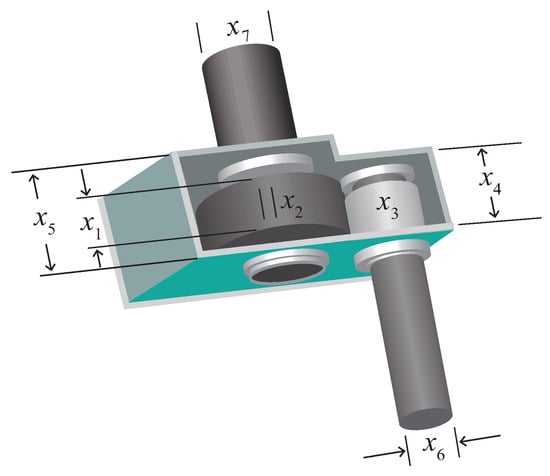

The schematic diagram of the speed reducer design problem [] is depicted in Figure 9. The problem involves seven design variables: the face width, the tooth module, the number of teeth in the pinion, the length of the first shaft between the bearings, the length of the second shaft between the bearings, the diameter of the first shaft, and the diameter of the second shaft. The objective is to minimize the total weight of the gearbox by optimizing these seven variables. The mathematical model can be found in Appendix B.2.

Figure 9.

Structure diagram of RDP.

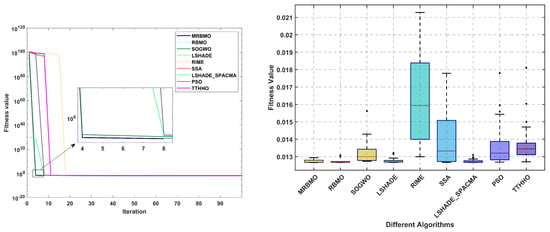

Table 8 presents the statistical results, with the best outcomes highlighted in bold. MRBMO achieves the best results in terms of the best, mean, and median values, and ranks second only to LSHADE on the worst value and standard deviation. The convergence curves and box plots are shown in Figure 10. Except for TTHHO, which converges significantly more slowly, all compared algorithms converge at comparable speeds. The box plots summarize the distribution of fitness values over 30 independent runs for each algorithm, including the median, quartiles, and outliers. TTHHO has a substantially larger box with numerous outliers, indicating high performance variability and many results that deviate markedly from the central values. The other algorithms have more compact boxes, reflecting more stable performance.

Table 8.

Statistical results of RDP.

Figure 10.

Curve and box plots of RDP.

5.3. Welded Beam Design Problem (WBD)

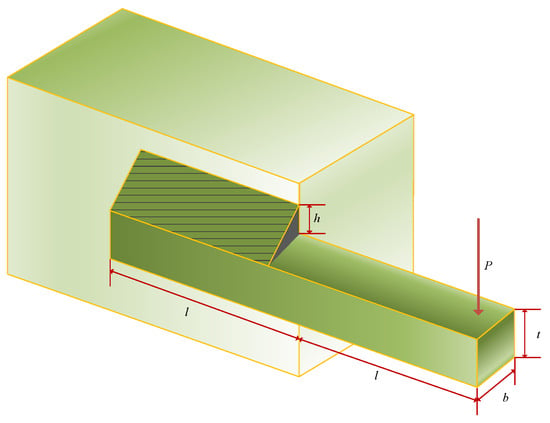

The objective of the welded beam design problem [] is to minimize the cost of the welded beam. As shown in Figure 11, the problem has four design variables: the weld thickness (h), the length of the welded portion of the bar (l), the height of the bar (t), and the thickness of the reinforcement bar (b). The mathematical model can be found in Appendix B.3.

Figure 11.

Structure diagram of WBD.

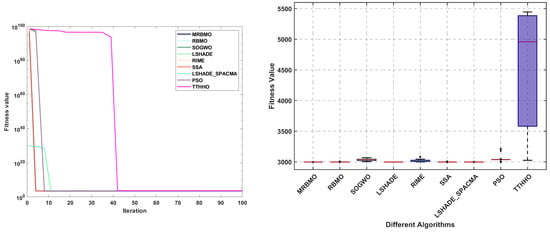

The statistical results of 30 independent runs are shown in Table 9. MRBMO achieves the best value on all statistical metrics, demonstrating excellent stability. Figure 12 presents the convergence curves and box plots of all algorithms. The cost is relatively high at initialization, but most algorithms subsequently converge rapidly to better values. In the box plots, MRBMO and LSHADE_SPACMA have relatively short boxes, indicating that their results over 30 independent runs are more concentrated and suggesting higher stability. Conversely, RIME, SSA, and TTHHO show noticeable outliers (marked with '+'), implying that they occasionally yield exceptionally good or poor fitness values.

Table 9.

Statistical results of WBD.

Figure 12.

Curve and box plots of WBD.

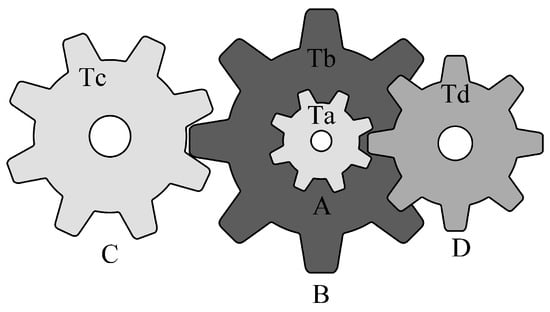

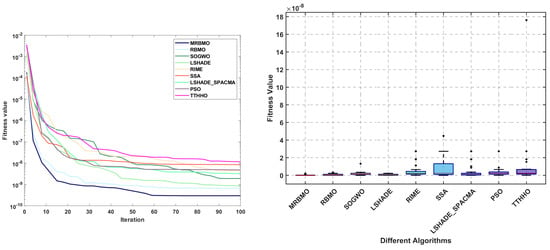

5.4. Gear Train Design Problem (GTD)

The primary objective of the gear train design problem [] is to minimize the specific cost of the transmission. As illustrated in Figure 13, the design variables are the numbers of teeth on the four gears. The mathematical model is presented in Appendix B.4.
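The sketch below assumes the formulation commonly used for this benchmark: minimize the squared deviation of the gear ratio from the required 1/6.931, (1/6.931 − (T_b·T_d)/(T_a·T_f))², over integer tooth counts between 12 and 60. The names `ta`, `tb`, `td`, `tf`, and the choice to round and clip continuous candidates before evaluation, are illustrative assumptions rather than details taken from Appendix B.4.

```python
def gear_ratio_error(teeth, lo=12, hi=60):
    """Squared deviation of the gear ratio from 1/6.931.
    Continuous candidates are rounded to integers and clipped to [lo, hi]."""
    ta, tb, td, tf = (min(max(round(t), lo), hi) for t in teeth)
    return (1 / 6.931 - (tb * td) / (ta * tf)) ** 2


# A well-known near-optimal tooth combination.
print(gear_ratio_error((43, 16, 19, 49)))  # about 2.70e-12
```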

Figure 13.

Structure diagram of GTD.

The statistical results of the algorithms are presented in Table 10. All algorithms reach comparable best values over the 30 independent trials. In terms of the mean, median, and standard deviation, MRBMO is more stable in locating high-quality solutions. Notably, the worst values of MRBMO and RBMO are of the same order of magnitude and significantly better than those of the other algorithms, further confirming their stability on the GTD problem. Figure 14 shows the convergence curves and box plots: MRBMO converges faster throughout the process and has the fewest outliers, which supports its superior stability.

Table 10.

Statistical results of GTD.

Figure 14.

Curve and box plots of GTD.

6. Conclusions

This study proposes a Multi-Strategy Enhanced Red-billed Blue Magpie Optimizer (MRBMO) to address the limitations of convergence efficiency and solution precision inherent in the original RBMO algorithm. The primary innovations of MRBMO include the design of a novel dynamic boundary constraint processing mechanism, the introduction of an elite guidance strategy during the predation stage, and the implementation of a Lévy Flight strategy to adjust the search step size. These synergistic modifications effectively reconcile the exploration–exploitation trade-off in metaheuristic optimization.

Comprehensive experimental evaluations using the standardized CEC2017 and CEC2022 benchmark suites demonstrate that MRBMO outperforms classical optimizers (SOGWO, TTHHO, PSO) and achieves competitive results against a state-of-the-art algorithm (LSHADE_SPACMA). Nonparametric statistical analyses (the Friedman rank-sum test and the Wilcoxon rank-sum test) confirm MRBMO's superiority across multiple dimensional configurations (30D, 50D, 100D) and its algorithmic robustness. To quantify the contribution of each methodological enhancement, an ablation study was conducted to measure the individual effects of the proposed components on overall performance. Furthermore, experiments were carried out to assess how the method balances exploration and exploitation and to analyze its population diversity characteristics and evolutionary dynamics.

To validate the performance of the proposed method in practical applications, MRBMO was applied to four engineering design optimization problems: extension/compression spring design, reducer design, welded beam design, and gear train design. The experimental results demonstrate that MRBMO generally achieves superior convergence accuracy and speed while also exhibiting enhanced stability and robustness.

Author Contributions

Conceptualization, B.H. and X.W.; methodology, Z.G.; software, L.W.; validation, M.Y., X.W. and L.W.; formal analysis, M.Y.; investigation, Z.G.; resources, B.H.; data curation, M.Y.; writing—original draft preparation, M.Y.; writing—review and editing, L.W.; visualization, Z.G.; supervision, B.H.; project administration, X.W.; funding acquisition, B.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Test Function Suite Details and Results of the Rank Sum Test

Table A1.

CEC2017 Test function details.


| Type | No. | Functions | Minimum Value |
|---|---|---|---|
| Unimodal functions | 1 | Shifted and Rotated Bent Cigar Function | 100 |
| | 2 | Shifted and Rotated Zakharov Function | 200 |
| Simple multimodal functions | 3 | Shifted and Rotated Rosenbrock’s Function | 300 |
| | 4 | Shifted and Rotated Rastrigin’s Function | 400 |
| | 5 | Shifted and Rotated Expanded Schaffer’s F6 Function | 500 |
| | 6 | Shifted and Rotated Lunacek Bi_Rastrigin Function | 600 |
| | 7 | Shifted and Rotated Non-Continuous Rastrigin’s Function | 700 |
| | 8 | Shifted and Rotated Levy Function | 800 |
| | 9 | Shifted and Rotated Schwefel’s Function | 900 |
| Hybrid functions | 10 | Hybrid Function 1 (N = 3) | 1000 |
| | 11 | Hybrid Function 2 (N = 3) | 1100 |
| | 12 | Hybrid Function 3 (N = 3) | 1200 |
| | 13 | Hybrid Function 4 (N = 4) | 1300 |
| | 14 | Hybrid Function 5 (N = 4) | 1400 |
| | 15 | Hybrid Function 6 (N = 4) | 1500 |
| | 16 | Hybrid Function 7 (N = 5) | 1600 |
| | 17 | Hybrid Function 8 (N = 5) | 1700 |
| | 18 | Hybrid Function 9 (N = 5) | 1800 |
| | 19 | Hybrid Function 10 (N = 6) | 1900 |
| Composition functions | 20 | Composition Function 1 (N = 3) | 2000 |
| | 21 | Composition Function 2 (N = 3) | 2100 |
| | 22 | Composition Function 3 (N = 4) | 2200 |
| | 23 | Composition Function 4 (N = 4) | 2300 |
| | 24 | Composition Function 5 (N = 5) | 2400 |
| | 25 | Composition Function 6 (N = 5) | 2500 |
| | 26 | Composition Function 7 (N = 6) | 2600 |
| | 27 | Composition Function 8 (N = 6) | 2700 |
| | 28 | Composition Function 9 (N = 3) | 2800 |
| | 29 | Composition Function 10 (N = 3) | 2900 |

Table A2.

CEC2022 Test function details.


| Type | No. | Functions | Minimum Value |
|---|---|---|---|
| Unimodal functions | 1 | Shifted and full Rotated Zakharov Function | 300 |
| Basic functions | 2 | Shifted and full Rotated Rosenbrock’s Function | 400 |
| | 3 | Shifted and full Rotated Expanded Schaffer’s f6 Function | 600 |
| | 4 | Shifted and full Rotated Non-Continuous Rastrigin’s Function | 800 |
| | 5 | Shifted and full Rotated Levy Function | 900 |
| Hybrid functions | 6 | Hybrid Function 1 (N = 3) | 1800 |
| | 7 | Hybrid Function 2 (N = 6) | 2000 |
| | 8 | Hybrid Function 3 (N = 5) | 2200 |
| Composition functions | 9 | Composition Function 1 (N = 5) | 2300 |
| | 10 | Composition Function 2 (N = 4) | 2400 |
| | 11 | Composition Function 3 (N = 5) | 2600 |
| | 12 | Composition Function 4 (N = 6) | 2700 |

Table A3.

The results of Wilcoxon rank sum test p-value on CEC2017 when D = 30.


| Function | RBMO | SOGWO | RIME | SSA | LSHADE_SPACMA | PSO | TTHHO |
|---|---|---|---|---|---|---|---|
| F1 | 9.211E-05 | 3.020E-11 | 3.020E-11 | 5.692E-01 | 2.195E-08 | 3.020E-11 | 3.020E-11 |
| F2 | 2.499E-03 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F3 | 5.264E-04 | 6.722E-10 | 3.010E-07 | 3.042E-01 | 7.245E-02 | 1.430E-05 | 3.020E-11 |
| F4 | 2.838E-01 | 5.092E-08 | 3.474E-10 | 3.020E-11 | 8.564E-04 | 1.429E-08 | 3.020E-11 |
| F5 | 8.663E-05 | 3.338E-11 | 3.690E-11 | 3.020E-11 | 1.850E-08 | 3.020E-11 | 3.020E-11 |
| F6 | 2.416E-02 | 4.077E-11 | 6.066E-11 | 3.020E-11 | 3.965E-08 | 3.690E-11 | 3.020E-11 |
| F7 | 2.510E-02 | 1.254E-07 | 2.371E-10 | 3.020E-11 | 1.106E-04 | 6.722E-10 | 3.020E-11 |
| F8 | 1.873E-07 | 4.504E-11 | 3.020E-11 | 3.020E-11 | 2.371E-10 | 8.993E-11 | 3.020E-11 |
| F9 | 2.388E-04 | 6.669E-03 | 2.236E-02 | 7.119E-09 | 3.501E-03 | 3.778E-02 | 3.338E-11 |
| F10 | 1.174E-03 | 3.020E-11 | 8.993E-11 | 8.993E-11 | 2.879E-06 | 9.260E-09 | 3.020E-11 |
| F11 | 9.049E-02 | 3.020E-11 | 3.020E-11 | 7.389E-11 | 6.526E-07 | 3.020E-11 | 3.020E-11 |
| F12 | 7.483E-02 | 3.020E-11 | 3.020E-11 | 3.592E-05 | 8.315E-03 | 2.959E-05 | 3.020E-11 |
| F13 | 1.606E-06 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 4.077E-11 | 3.020E-11 | 3.020E-11 |
| F14 | 9.514E-06 | 3.020E-11 | 5.573E-10 | 5.494E-11 | 5.092E-08 | 5.072E-10 | 3.020E-11 |
| F15 | 7.288E-03 | 4.118E-06 | 3.352E-08 | 6.518E-09 | 4.744E-06 | 4.943E-05 | 3.690E-11 |
| F16 | 1.175E-04 | 3.368E-05 | 7.119E-09 | 1.957E-10 | 9.883E-03 | 6.010E-08 | 3.020E-11 |
| F17 | 3.403E-01 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.338E-11 | 3.020E-11 | 3.020E-11 |
| F18 | 4.639E-05 | 3.020E-11 | 3.020E-11 | 3.690E-11 | 4.444E-07 | 2.371E-10 | 3.020E-11 |
| F19 | 3.339E-03 | 1.529E-05 | 3.352E-08 | 2.669E-09 | 2.783E-07 | 1.988E-02 | 2.371E-10 |
| F20 | 2.772E-01 | 4.118E-06 | 3.825E-09 | 3.020E-11 | 2.052E-03 | 1.102E-08 | 5.573E-10 |
| F21 | 7.695E-08 | 7.380E-10 | 2.196E-07 | 2.154E-10 | 6.528E-08 | 1.174E-09 | 4.504E-11 |
| F22 | 4.353E-05 | 2.317E-06 | 3.820E-10 | 3.338E-11 | 1.297E-01 | 6.066E-11 | 3.020E-11 |
| F23 | 3.965E-08 | 2.572E-07 | 4.975E-11 | 3.020E-11 | 5.971E-05 | 3.020E-11 | 3.020E-11 |
| F24 | 1.174E-03 | 1.777E-10 | 3.197E-09 | 9.941E-01 | 1.302E-03 | 1.028E-06 | 3.020E-11 |
| F25 | 5.264E-04 | 6.526E-07 | 2.154E-10 | 5.092E-08 | 6.787E-02 | 5.561E-04 | 3.820E-10 |
| F26 | 1.765E-02 | 1.547E-09 | 9.533E-07 | 4.573E-09 | 4.639E-05 | 1.857E-09 | 3.020E-11 |
| F27 | 1.857E-09 | 3.020E-11 | 9.919E-11 | 3.034E-03 | 7.043E-07 | 9.756E-10 | 3.020E-11 |
| F28 | 3.835E-06 | 2.154E-06 | 3.820E-10 | 6.066E-11 | 6.520E-01 | 1.493E-04 | 3.020E-11 |
| F29 | 1.680E-03 | 3.020E-11 | 3.020E-11 | 9.334E-02 | 3.848E-03 | 7.599E-07 | 3.020E-11 |

Bold indicates values less than 0.05.

Table A4.

The results of Wilcoxon rank sum test p-value on CEC2017 when D = 50.


| Function | RBMO | SOGWO | RIME | SSA | LSHADE_SPACMA | PSO | TTHHO |
|---|---|---|---|---|---|---|---|
| F1 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F2 | 9.049E-02 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 4.504E-11 | 3.020E-11 | 3.020E-11 |
| F3 | 1.698E-08 | 3.020E-11 | 4.975E-11 | 4.676E-02 | 2.610E-10 | 7.389E-11 | 3.020E-11 |
| F4 | 5.092E-08 | 5.494E-11 | 3.690E-11 | 3.020E-11 | 2.439E-09 | 6.066E-11 | 3.020E-11 |
| F5 | 1.407E-04 | 3.338E-11 | 3.020E-11 | 3.020E-11 | 3.324E-06 | 3.020E-11 | 3.020E-11 |
| F6 | 1.311E-08 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 5.494E-11 | 3.020E-11 | 3.020E-11 |
| F7 | 2.377E-07 | 2.610E-10 | 1.411E-09 | 3.020E-11 | 6.010E-08 | 3.690E-11 | 3.020E-11 |
| F8 | 2.133E-05 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 1.957E-10 | 3.020E-11 | 3.020E-11 |
| F9 | 9.833E-08 | 9.926E-02 | 2.236E-02 | 2.380E-03 | 7.695E-08 | 1.154E-01 | 3.690E-11 |
| F10 | 2.371E-10 | 3.020E-11 | 3.020E-11 | 7.119E-09 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F11 | 4.573E-09 | 3.020E-11 | 3.020E-11 | 6.722E-10 | 1.287E-09 | 3.020E-11 | 3.020E-11 |
| F12 | 5.462E-06 | 3.020E-11 | 3.020E-11 | 3.497E-09 | 3.256E-07 | 1.777E-10 | 3.020E-11 |
| F13 | 2.126E-04 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F14 | 4.515E-02 | 3.020E-11 | 3.020E-11 | 7.739E-06 | 1.120E-01 | 7.483E-02 | 3.020E-11 |
| F15 | 6.203E-04 | 2.062E-01 | 7.043E-07 | 4.183E-09 | 1.175E-04 | 1.441E-02 | 3.020E-11 |
| F16 | 3.183E-03 | 1.809E-01 | 7.695E-08 | 1.102E-08 | 6.913E-04 | 6.377E-03 | 5.494E-11 |
| F17 | 6.414E-01 | 3.020E-11 | 3.020E-11 | 5.494E-11 | 1.337E-05 | 7.389E-11 | 3.020E-11 |
| F18 | 4.825E-01 | 3.020E-11 | 3.020E-11 | 3.953E-01 | 6.952E-01 | 1.988E-02 | 3.020E-11 |
| F19 | 2.499E-03 | 3.917E-02 | 6.765E-05 | 9.756E-10 | 3.159E-10 | 8.120E-04 | 2.227E-09 |
| F20 | 1.606E-06 | 3.820E-10 | 1.464E-10 | 3.020E-11 | 2.015E-08 | 3.690E-11 | 3.020E-11 |
| F21 | 3.825E-09 | 2.755E-03 | 4.676E-02 | 4.714E-04 | 1.254E-07 | 5.369E-02 | 3.020E-11 |
| F22 | 1.957E-10 | 2.371E-10 | 1.206E-10 | 3.020E-11 | 3.825E-09 | 3.020E-11 | 3.020E-11 |
| F23 | 1.070E-09 | 2.439E-09 | 2.154E-10 | 3.020E-11 | 7.773E-09 | 3.020E-11 | 3.020E-11 |
| F24 | 1.102E-08 | 3.020E-11 | 1.850E-08 | 4.427E-03 | 1.287E-09 | 8.891E-10 | 3.020E-11 |
| F25 | 1.529E-05 | 3.474E-10 | 5.494E-11 | 1.442E-03 | 6.518E-09 | 8.663E-05 | 3.020E-11 |
| F26 | 2.491E-06 | 4.077E-11 | 5.072E-10 | 2.371E-10 | 1.102E-08 | 2.154E-10 | 3.020E-11 |
| F27 | 8.993E-11 | 3.020E-11 | 2.610E-10 | 8.883E-06 | 1.957E-10 | 1.957E-10 | 3.020E-11 |
| F28 | 7.599E-07 | 3.159E-10 | 4.077E-11 | 5.494E-11 | 1.171E-02 | 2.669E-09 | 3.020E-11 |
| F29 | 6.528E-08 | 3.020E-11 | 3.020E-11 | 3.848E-03 | 1.777E-10 | 5.967E-09 | 3.020E-11 |

Bold indicates values less than 0.05.

Table A5.

The results of Wilcoxon rank sum test p-value on CEC2017 when D = 100.


| Function | RBMO | SOGWO | RIME | SSA | LSHADE_SPACMA | PSO | TTHHO |
|---|---|---|---|---|---|---|---|
| F1 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F2 | 4.119E-01 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 9.833E-08 | 3.020E-11 | 6.696E-11 |
| F3 | 3.020E-11 | 3.020E-11 | 4.077E-11 | 1.857E-09 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F4 | 1.202E-08 | 8.993E-11 | 8.993E-11 | 3.020E-11 | 1.464E-10 | 3.020E-11 | 3.020E-11 |
| F5 | 3.520E-07 | 6.696E-11 | 3.338E-11 | 3.020E-11 | 1.892E-04 | 3.020E-11 | 3.020E-11 |
| F6 | 3.197E-09 | 3.020E-11 | 3.690E-11 | 3.020E-11 | 3.338E-11 | 3.020E-11 | 3.020E-11 |
| F7 | 4.504E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 7.389E-11 | 3.020E-11 | 3.020E-11 |
| F8 | 3.256E-07 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 7.389E-11 | 3.020E-11 | 3.020E-11 |
| F9 | 1.957E-10 | 1.624E-01 | 1.383E-02 | 3.835E-06 | 7.389E-11 | 2.624E-03 | 3.020E-11 |
| F10 | 1.329E-10 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F12 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F13 | 9.587E-01 | 3.020E-11 | 3.020E-11 | 2.610E-10 | 9.533E-07 | 4.077E-11 | 3.020E-11 |
| F14 | 3.831E-05 | 3.020E-11 | 3.020E-11 | 2.922E-09 | 4.200E-10 | 4.504E-11 | 3.020E-11 |
| F15 | 9.533E-07 | 8.841E-07 | 1.613E-10 | 8.663E-05 | 7.088E-08 | 8.663E-05 | 3.020E-11 |
| F16 | 1.492E-06 | 5.570E-03 | 1.311E-08 | 3.825E-09 | 3.835E-06 | 5.573E-10 | 3.020E-11 |
| F17 | 4.637E-03 | 4.077E-11 | 3.020E-11 | 3.010E-07 | 2.068E-02 | 3.020E-11 | 3.020E-11 |
| F18 | 5.555E-02 | 3.020E-11 | 3.020E-11 | 3.770E-04 | 1.206E-10 | 3.020E-11 | 3.020E-11 |
| F19 | 4.207E-02 | 1.988E-02 | 5.607E-05 | 2.390E-08 | 4.504E-11 | 5.607E-05 | 7.389E-11 |
| F20 | 3.338E-11 | 3.690E-11 | 3.338E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F21 | 3.646E-08 | 9.470E-01 | 3.147E-02 | 3.671E-03 | 4.077E-11 | 1.635E-05 | 3.020E-11 |
| F22 | 4.077E-11 | 1.957E-10 | 7.389E-11 | 3.020E-11 | 2.610E-10 | 3.020E-11 | 3.020E-11 |
| F23 | 3.020E-11 | 3.020E-11 | 3.690E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F24 | 3.020E-11 | 3.020E-11 | 4.504E-11 | 3.825E-09 | 3.020E-11 | 3.020E-11 | 3.020E-11 |
| F25 | 9.063E-08 | 1.411E-09 | 5.462E-09 | 9.833E-08 | 9.756E-10 | 2.371E-10 | 3.020E-11 |
| F26 | 2.254E-04 | 3.020E-11 | 3.338E-11 | 3.835E-06 | 4.975E-11 | 4.077E-11 | 3.020E-11 |
| F27 | 3.020E-11 | 3.020E-11 | 4.077E-11 | 1.429E-08 | 3.020E-11 | 4.077E-11 | 3.020E-11 |
| F28 | 5.600E-07 | 1.613E-10 | 3.020E-11 | 2.597E-05 | 7.599E-07 | 3.010E-07 | 3.020E-11 |
| F29 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 | 3.020E-11 |

Bold indicates values less than 0.05.

Appendix B. Engineering Application Design Issues

Appendix B.1. Extension/Compression Spring Problem

Minimize:

Subject to:

Appendix B.2. Reducer Design Problem

Minimize:

Subject to:

Appendix B.3. Welded Beam Problem

Minimize:

Subject to:

where:

Appendix B.4. Gear Train Design Problem

Minimize:

Subject to:

References

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643. [Google Scholar] [CrossRef]

- Nadimi-Shahraki, M.H.; Taghian, S.; Javaheri, D.; Sadiq, A.S.; Khodadadi, N.; Mirjalili, S. MTV-SCA: Multi-trial vector-based sine cosine algorithm. Clust. Comput. 2024, 27, 13471–13515. [Google Scholar] [CrossRef]

- Ming, F.; Gong, W.; Li, D.; Wang, L.; Gao, L. A competitive and cooperative swarm optimizer for constrained multiobjective optimization problems. IEEE Trans. Evol. Comput. 2022, 27, 1313–1326. [Google Scholar] [CrossRef]

- Gharehchopogh, F.S.; Gholizadeh, H. A comprehensive survey: Whale Optimization Algorithm and its applications. Swarm Evol. Comput. 2019, 48, 1–24. [Google Scholar] [CrossRef]

- Chakraborty, S.; Saha, A.K.; Sharma, S.; Mirjalili, S.; Chakraborty, R. A novel enhanced whale optimization algorithm for global optimization. Comput. Ind. Eng. 2021, 153, 107086. [Google Scholar] [CrossRef]

- Wang, J.; Lin, Y.; Liu, R.; Fu, J. Odor source localization of multi-robots with swarm intelligence algorithms: A review. Front. Neurorobot. 2022, 16, 949888. [Google Scholar] [CrossRef]

- Jin, Y.; Wang, H.; Chugh, T.; Guo, D.; Miettinen, K. Data-driven evolutionary optimization: An overview and case studies. IEEE Trans. Evol. Comput. 2018, 23, 442–458. [Google Scholar] [CrossRef]

- Ji, X.F.; Zhang, Y.; He, C.L.; Cheng, J.X.; Gong, D.W.; Gao, X.Z.; Guo, Y.N. Surrogate and autoencoder-assisted multitask particle swarm optimization for high-dimensional expensive multimodal problems. IEEE Trans. Evol. Comput. 2023, 28, 1009–1023. [Google Scholar] [CrossRef]

- Bouaouda, A.; Sayouti, Y. Hybrid meta-heuristic algorithms for optimal sizing of hybrid renewable energy system: A review of the state-of-the-art. Arch. Comput. Methods Eng. 2022, 29, 4049–4083. [Google Scholar] [CrossRef]

- Vinod Chandra, S.S.; Anand, H.S. Nature inspired meta heuristic algorithms for optimization problems. Computing 2022, 104, 251–269. [Google Scholar]

- Dokeroglu, T.; Sevinc, E.; Kucukyilmaz, T.; Cosar, A. A survey on new generation metaheuristic algorithms. Comput. Ind. Eng. 2019, 137, 106040. [Google Scholar] [CrossRef]

- Ma, J.; Hao, Z.; Sun, W. Enhancing sparrow search algorithm via multi-strategies for continuous optimization problems. Inf. Process. Manag. 2022, 59, 102854. [Google Scholar] [CrossRef]

- Gad, A.G. Particle swarm optimization algorithm and its applications: A systematic review. Arch. Comput. Methods Eng. 2022, 29, 2531–2561. [Google Scholar] [CrossRef]

- Slowik, A.; Kwasnicka, H. Nature inspired methods and their industry applications—Swarm intelligence algorithms. IEEE Trans. Ind. Inform. 2017, 14, 1004–1015.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2007, 1, 28–39.

- Yang, X.S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: Frome, UK, 2010.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control. Eng. 2020, 8, 22–34.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Zhu, F.; Li, G.; Tang, H.; Li, Y.; Lv, X.; Wang, X. Dung beetle optimization algorithm based on quantum computing and multi-strategy fusion for solving engineering problems. Expert Syst. Appl. 2024, 236, 121219.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.S.; Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 2023, 215, 119269.

- Zhang, Y.; Chi, A.; Mirjalili, S. Enhanced Jaya algorithm: A simple but efficient optimization method for constrained engineering design problems. Knowl.-Based Syst. 2021, 233, 107555.

- Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective opposition based grey wolf optimization. Expert Syst. Appl. 2020, 151, 113389.

- Liang, S.; Yin, M.; Sun, G.; Li, J.; Li, H.; Lang, Q. An enhanced sparrow search swarm optimizer via multi-strategies for high-dimensional optimization problems. Swarm Evol. Comput. 2024, 88, 101603.

- Fu, S.; Li, K.; Huang, H.; Ma, C.; Fan, Q.; Zhu, Y. Red-billed blue magpie optimizer: A novel metaheuristic algorithm for 2D/3D UAV path planning and engineering design problems. Artif. Intell. Rev. 2024, 57, 1–89.

- Črepinšek, M.; Liu, S.H.; Mernik, M. Exploration and exploitation in evolutionary algorithms: A survey. ACM Comput. Surv. (CSUR) 2013, 45, 1–33.

- Jia, H.; Lu, C. Guided learning strategy: A novel update mechanism for metaheuristic algorithms design and improvement. Knowl.-Based Syst. 2024, 286, 111402.

- Gandomi, A.H.; Yang, X.S. Evolutionary boundary constraint handling scheme. Neural Comput. Appl. 2012, 21, 1449–1462.

- Seyyedabbasi, A. WOASCALF: A new hybrid whale optimization algorithm based on sine cosine algorithm and levy flight to solve global optimization problems. Adv. Eng. Softw. 2022, 173, 103272.

- Wu, L.; Wu, J.; Wang, T. Enhancing grasshopper optimization algorithm (GOA) with levy flight for engineering applications. Sci. Rep. 2023, 13, 124.

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; Technical Report; National University of Defense Technology: Changsha, China; Kyungpook National University: Daegu, Republic of Korea; Nanyang Technological University: Singapore, 2017.

- Yazdani, D.; Branke, J.; Omidvar, M.N.; Li, X.; Li, C.; Mavrovouniotis, M.; Nguyen, T.T.; Yang, S.; Yao, X. IEEE CEC 2022 competition on dynamic optimization problems generated by generalized moving peaks benchmark. arXiv 2021, arXiv:2106.06174.

- Abdulrab, H.; Hussin, F.A.; Ismail, I.; Assad, M.; Awang, A.; Shutari, H.; Arun, D. Energy efficient optimal deployment of industrial wireless mesh networks using transient trigonometric Harris Hawks optimizer. Heliyon 2024, 10, e28719.

- Su, H.; Zhao, D.; Heidari, A.A.; Liu, L.; Zhang, X.; Mafarja, M.; Chen, H. RIME: A physics-based optimization. Neurocomputing 2023, 532, 183–214.

- Mohamed, A.W.; Hadi, A.A.; Fattouh, A.M.; Jambi, K.M. LSHADE with semi-parameter adaptation hybrid with CMA-ES for solving CEC 2017 benchmark problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia, Spain, 5–8 June 2017; pp. 145–152.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Nadimi-Shahraki, M.H.; Zamani, H. DMDE: Diversity-maintained multi-trial vector differential evolution algorithm for non-decomposition large-scale global optimization. Expert Syst. Appl. 2022, 198, 116895.

- Hussain, K.; Salleh, M.N.M.; Cheng, S.; Shi, Y. On the exploration and exploitation in popular swarm-based metaheuristic algorithms. Neural Comput. Appl. 2019, 31, 7665–7683.

- Hu, G.; Huang, F.; Seyyedabbasi, A.; Wei, G. Enhanced multi-strategy bottlenose dolphin optimizer for UAVs path planning. Appl. Math. Model. 2024, 130, 243–271.

- Ahmadianfar, I.; Bozorg-Haddad, O.; Chu, X. Gradient-based optimizer: A new metaheuristic optimization algorithm. Inf. Sci. 2020, 540, 131–159.

- Zhou, L.; Liu, X.; Tian, R.; Wang, W.; Jin, G. A multi-strategy enhanced reptile search algorithm for global optimization and engineering optimization design problems. Clust. Comput. 2025, 28, 1–41.

- Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

- Chen, P.; Zhou, S.; Zhang, Q.; Kasabov, N. A meta-inspired termite queen algorithm for global optimization and engineering design problems. Eng. Appl. Artif. Intell. 2022, 111, 104805.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).