Towards Biologically-Inspired Visual SLAM in Dynamic Environments: IPL-SLAM with Instance Segmentation and Point-Line Feature Fusion

Abstract

1. Introduction

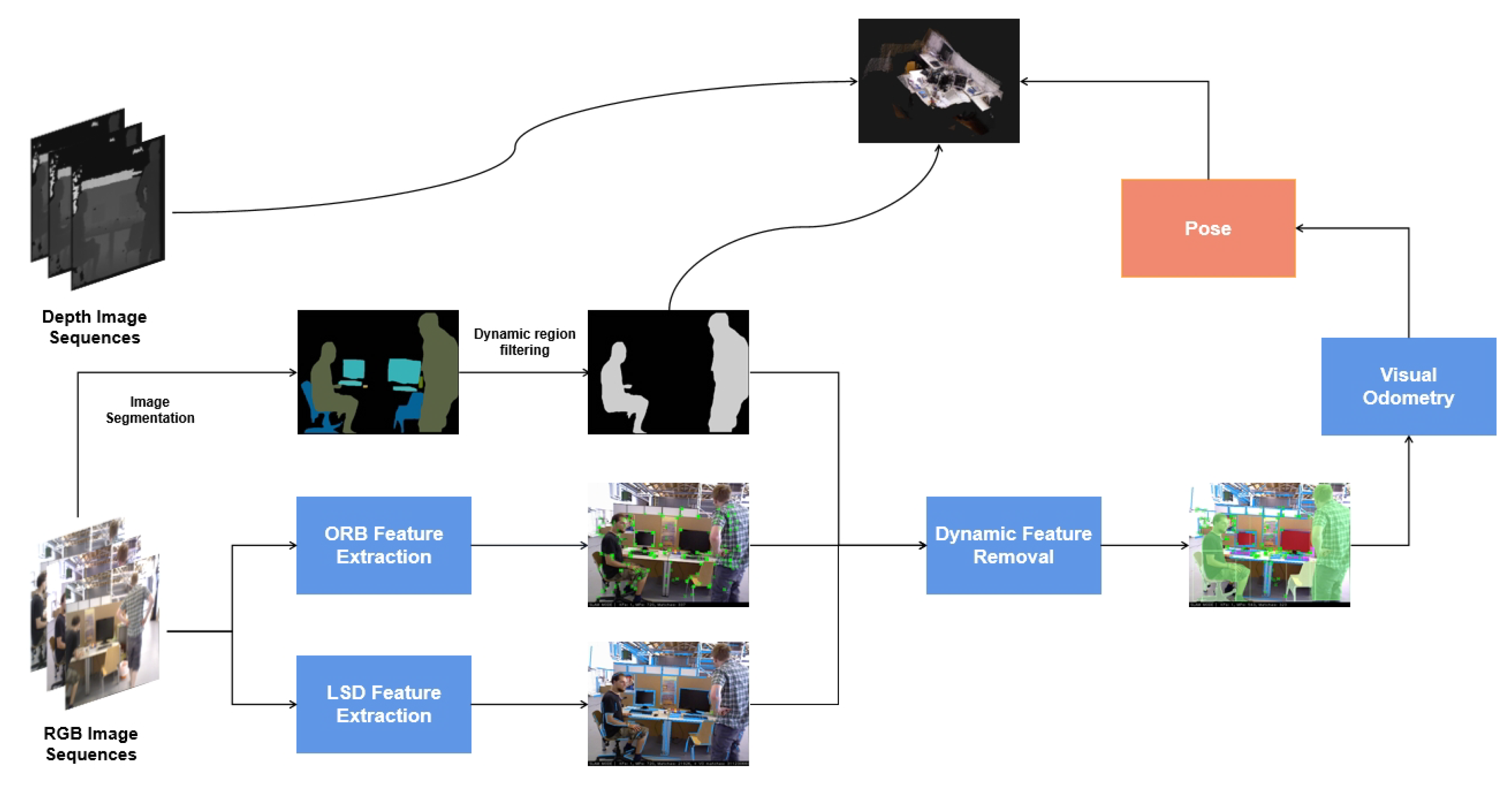

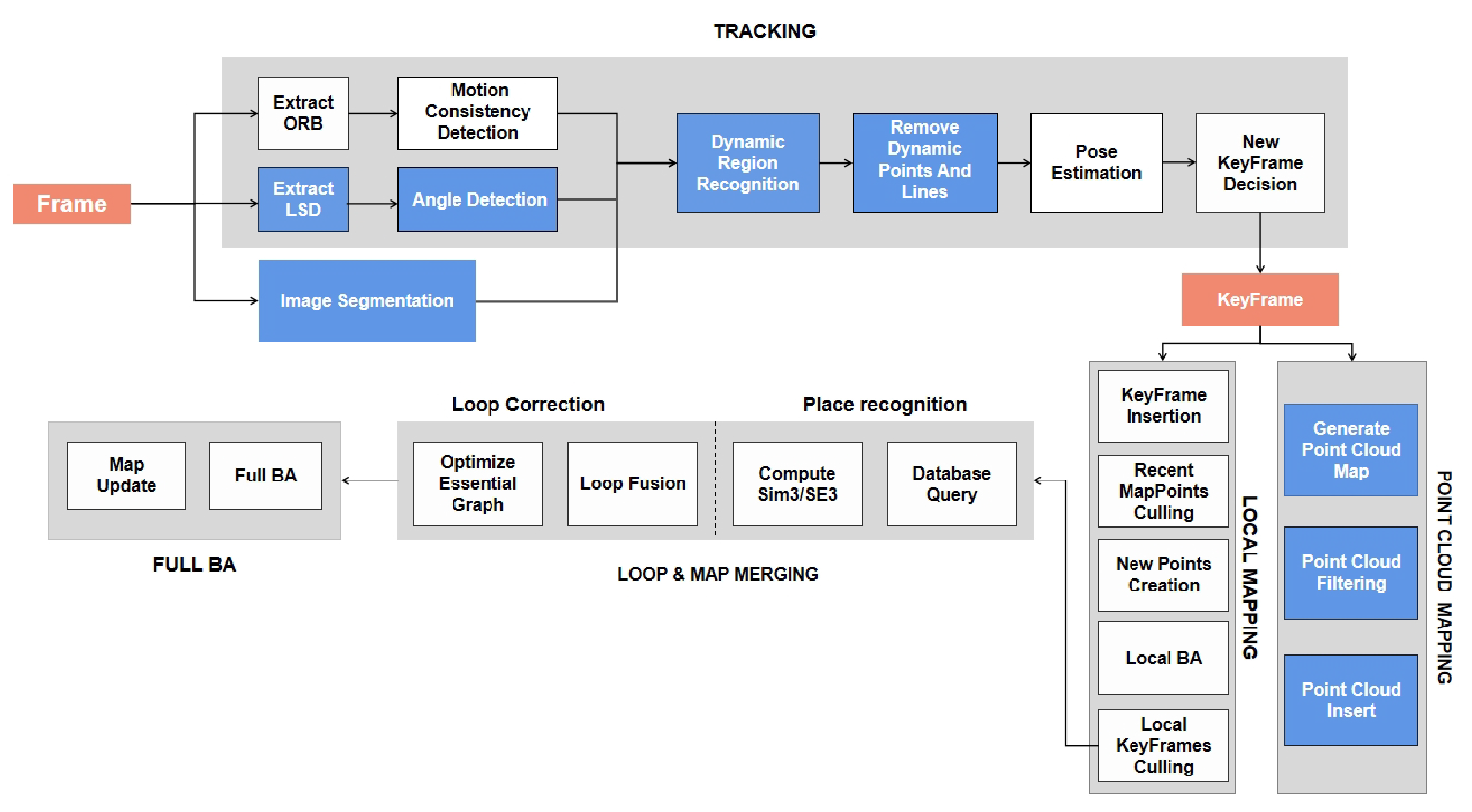

- A biologically-inspired visual SLAM framework is presented that integrates instance segmentation with geometric feature fusion. The YOLOv8-seg model provides contour-level masks of dynamic objects, which serve as semantic priors for robust dynamic-region filtering.

- An angle-based filtering module is developed for line features extracted with the Line Segment Detector (LSD), and an adaptive-weight reprojection error function is introduced. Jointly optimizing point and line constraints improves the accuracy and robustness of camera pose estimation in dynamic environments.

- The proposed framework is implemented as a complete system, IPL-SLAM, and evaluated on the TUM RGB-D dataset. Its trajectory error is lower than that of ORB-SLAM2 on all tested sequences and competitive with existing dynamic SLAM methods, with the lowest RMSE among the compared methods on fr3/w/xyz and fr3/s/rpy. The system also produces high-quality semantic maps.
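
The first contribution uses instance masks as semantic priors for discarding features on dynamic objects. The sketch below shows one simple way to apply such masks to point features. It assumes the masks are already available as boolean arrays at image resolution. For example, they could be decoded from a YOLOv8-seg model through the Ultralytics package, although that wiring is not shown. The function name `filter_keypoints_by_masks` and the choice of which classes count as dynamic are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def filter_keypoints_by_masks(keypoints, masks, class_ids, dynamic_classes):
    """Drop keypoints that fall inside any instance mask of a dynamic class.

    keypoints:       (N, 2) array of (u, v) pixel coordinates (u = column, v = row).
    masks:           (M, H, W) boolean array, one mask per detected instance.
    class_ids:       length-M sequence of class labels, aligned with `masks`.
    dynamic_classes: set of labels treated as potentially moving (hypothetical choice).
    Returns the kept keypoints and a boolean keep-flag per input keypoint.
    """
    keypoints = np.asarray(keypoints, dtype=float)
    masks = np.asarray(masks, dtype=bool)
    if masks.shape[0] == 0:
        # No detections: every keypoint is kept.
        return keypoints, np.ones(len(keypoints), dtype=bool)

    # Union of the masks whose class is considered dynamic.
    dynamic = np.zeros(masks.shape[1:], dtype=bool)
    for mask, cls in zip(masks, class_ids):
        if cls in dynamic_classes:
            dynamic |= mask

    # Round sub-pixel coordinates to the nearest pixel and clamp to the image.
    h, w = dynamic.shape
    u = np.clip(np.round(keypoints[:, 0]).astype(int), 0, w - 1)
    v = np.clip(np.round(keypoints[:, 1]).astype(int), 0, h - 1)

    keep = ~dynamic[v, u]
    return keypoints[keep], keep


if __name__ == "__main__":
    masks = np.zeros((2, 4, 6), dtype=bool)
    masks[0, 1:3, 1:3] = True   # instance labelled "person" (treated as dynamic)
    masks[1, 0:2, 4:6] = True   # instance labelled "chair" (treated as static)
    kps = np.array([[1.0, 1.0], [2.2, 2.4], [5.0, 0.0], [0.0, 3.0]])
    kept, keep = filter_keypoints_by_masks(kps, masks, ["person", "chair"], {"person"})
    print(keep.tolist())  # [False, False, True, True]
```

Features on instances of classes outside `dynamic_classes` are kept. In the example, these are the points on the `chair` mask. Restricting rejection to selected classes lets the segmentation act as a prior rather than removing every detected object.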

2. Related Work

2.1. Visual SLAM

2.2. Dynamic VSLAM

3. System Introduction

3.1. Overview of IPL-SLAM

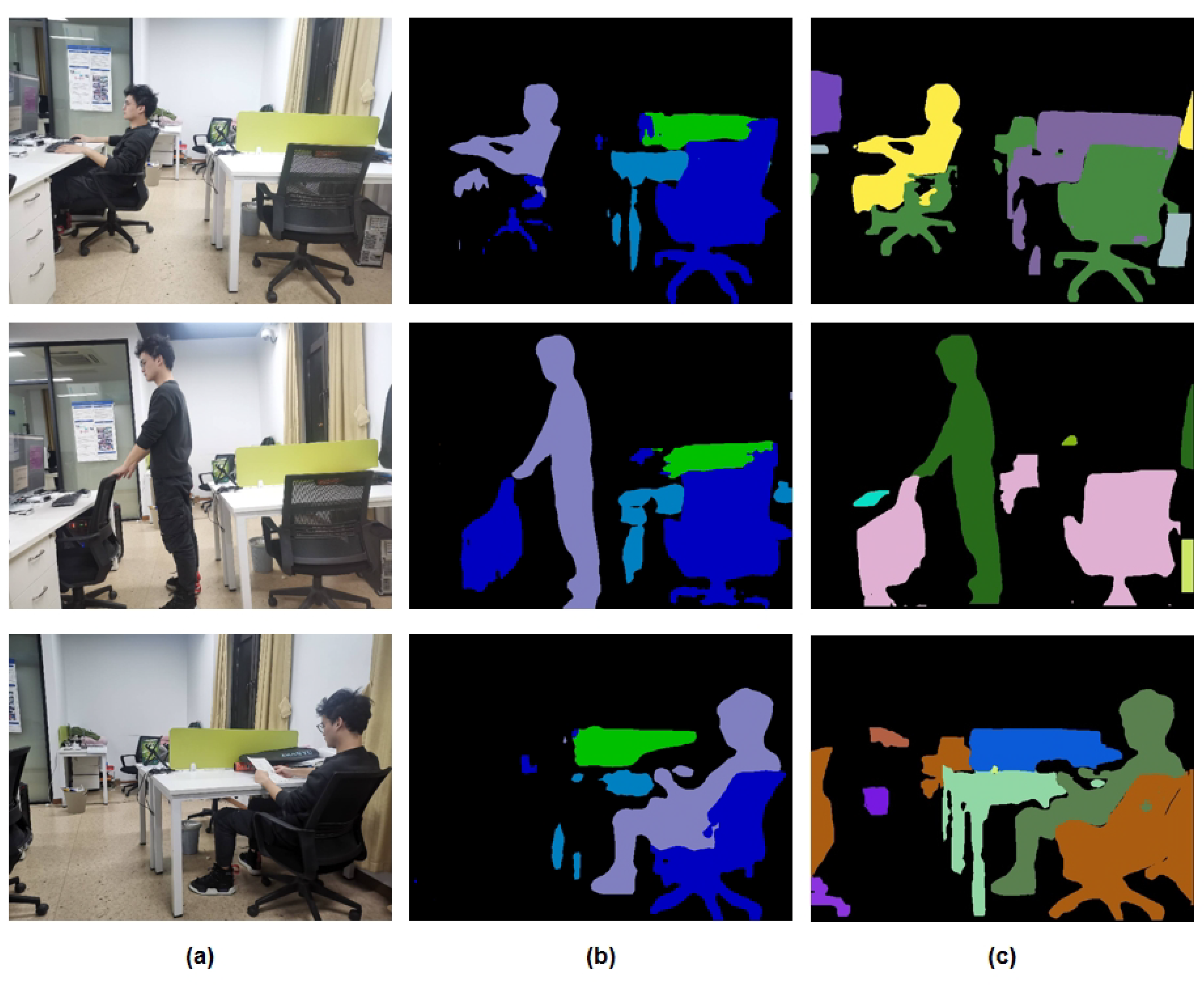

3.2. YOLOv8 Instance Segmentation

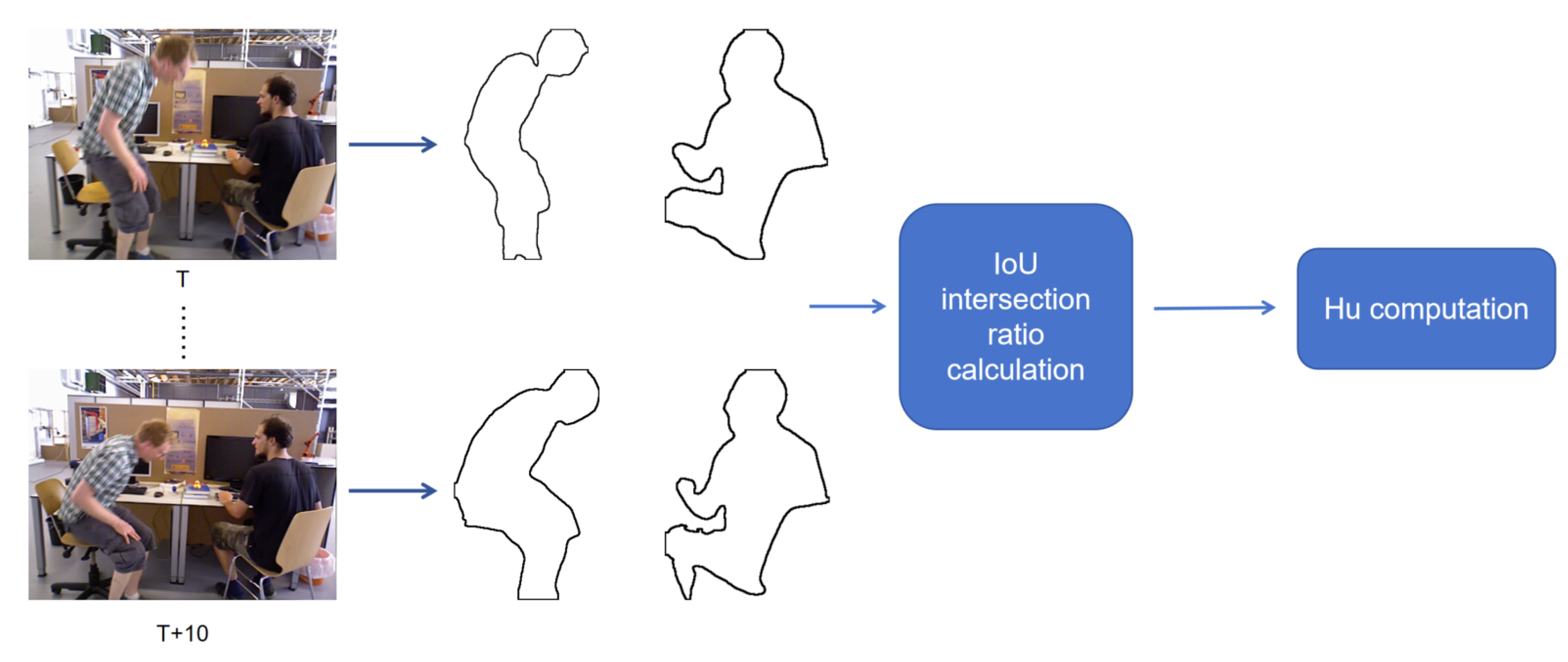

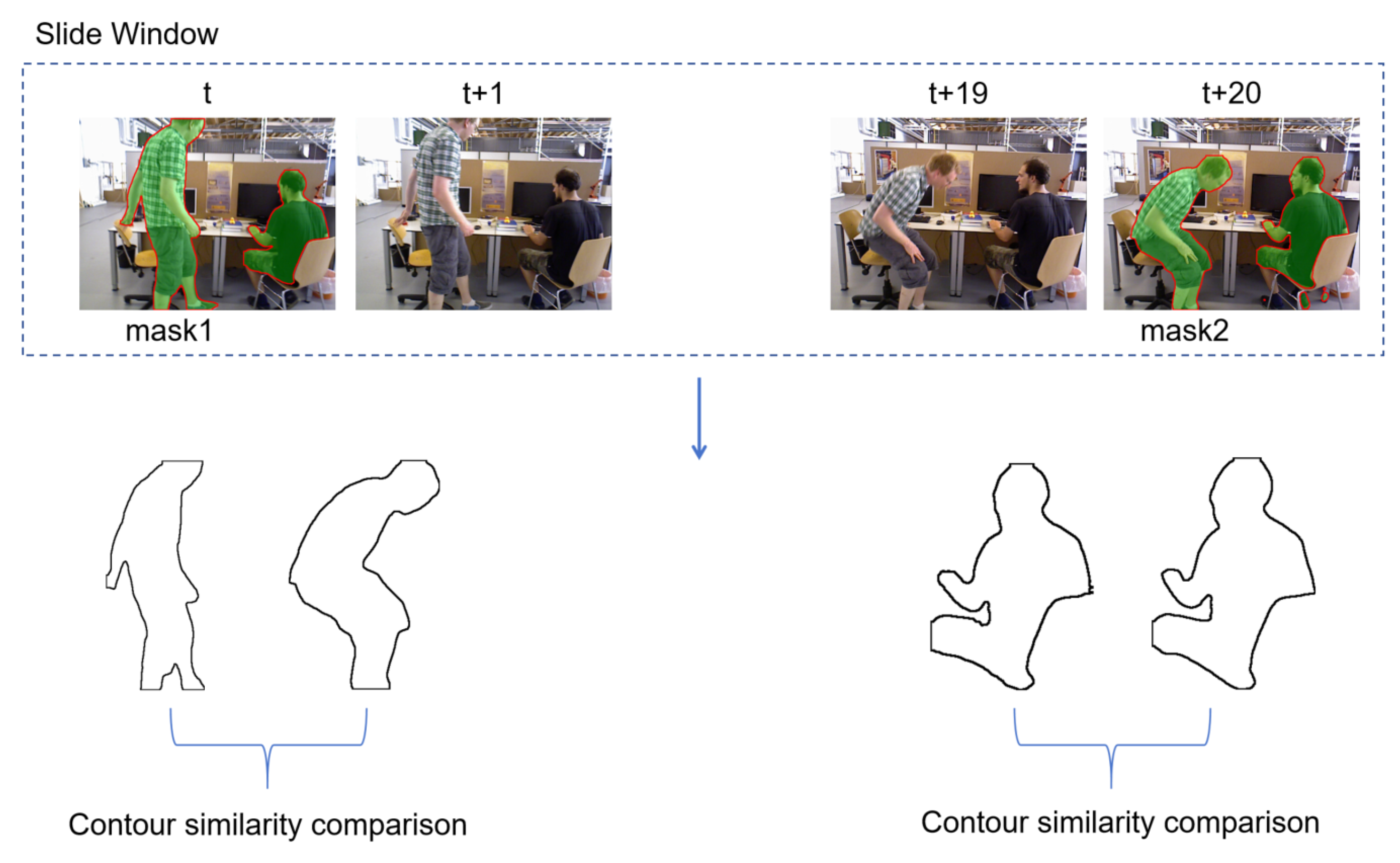

3.3. Dynamic Feature Filtering in Images Under Continuous Image Sequences

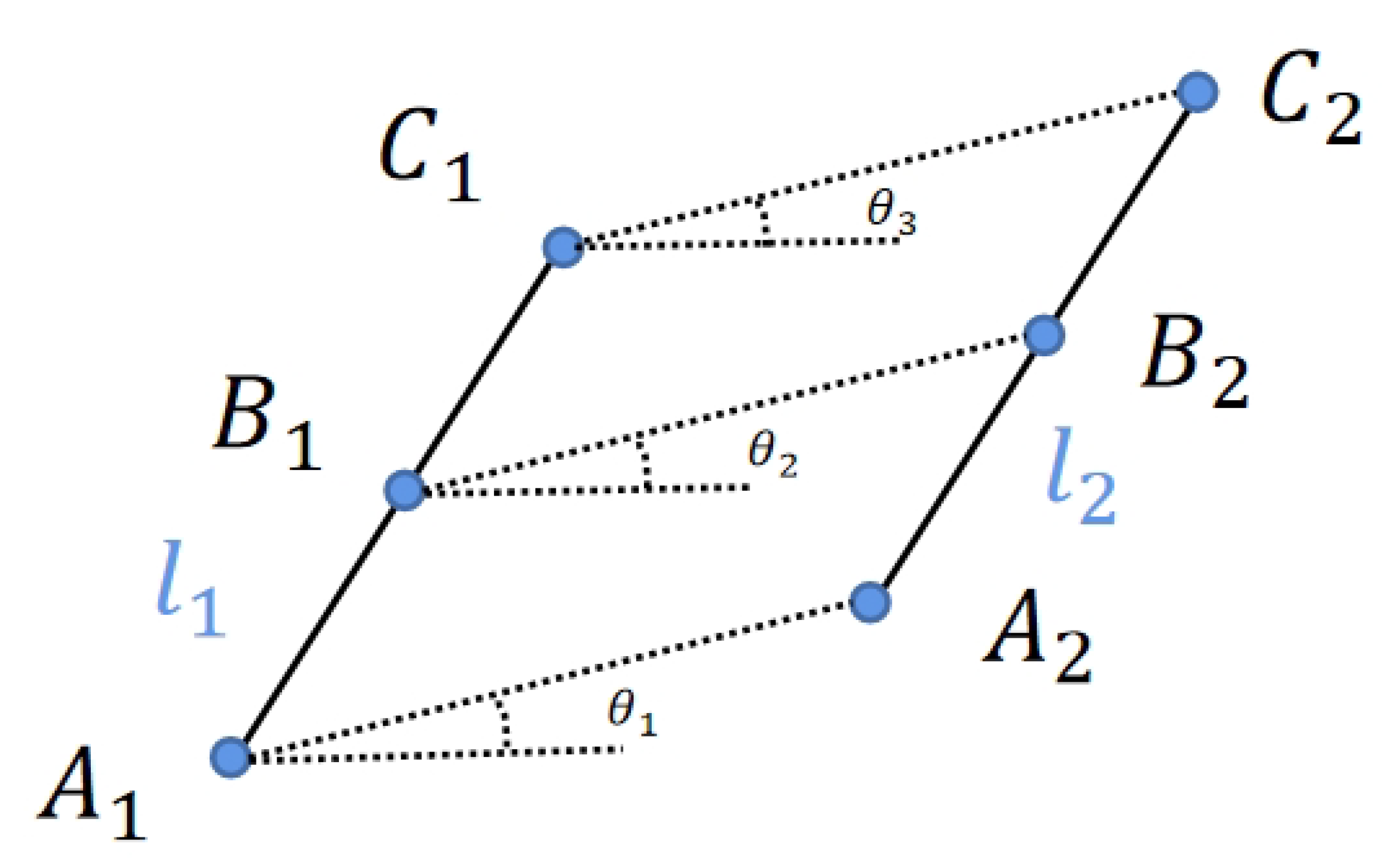

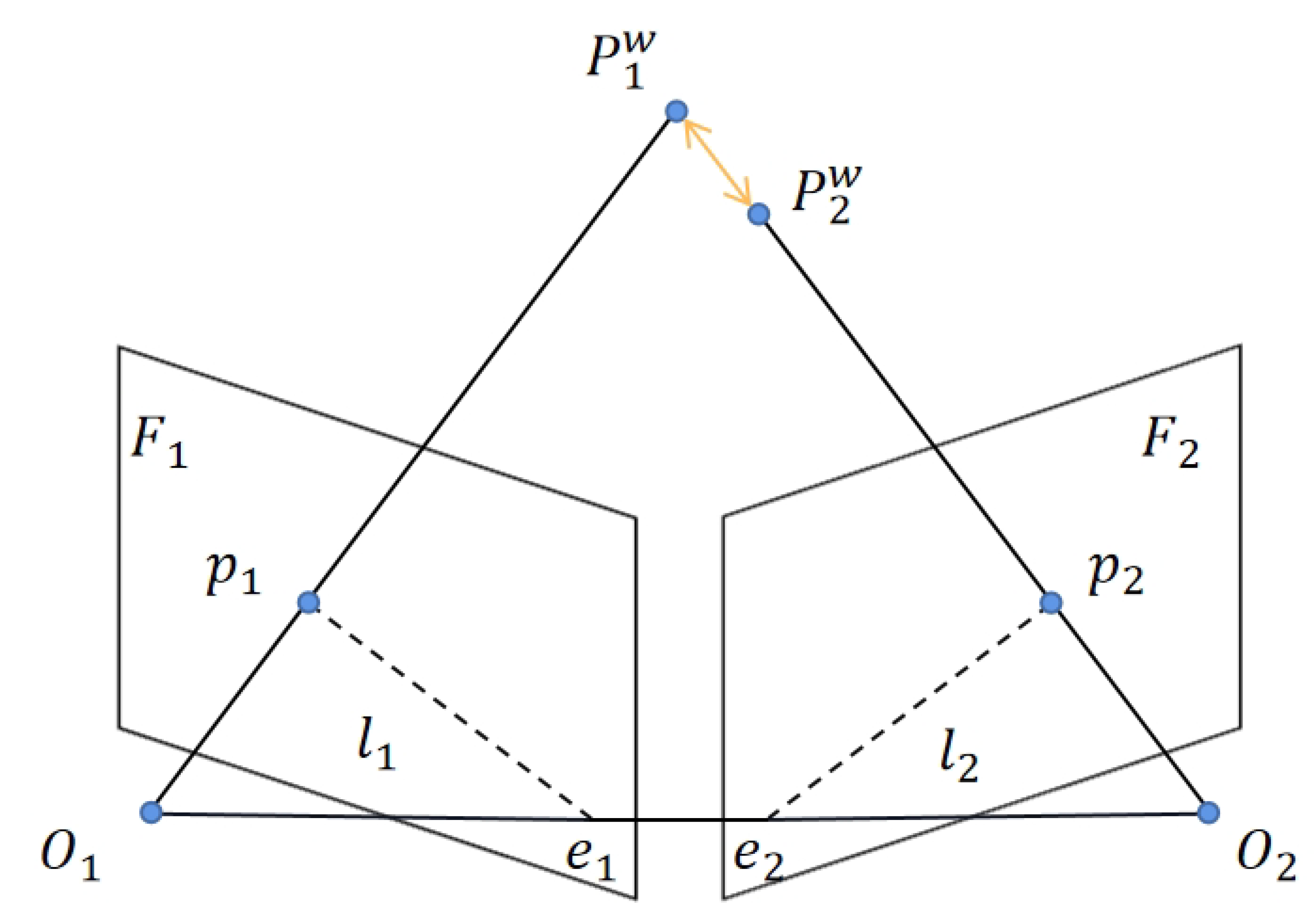

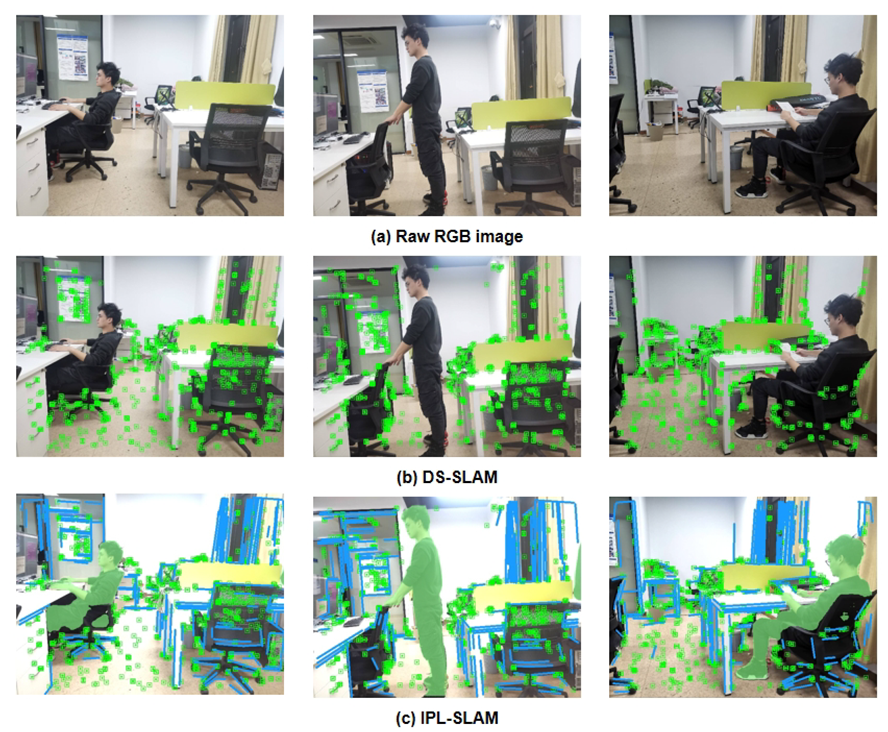
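
Section 3.3 filters dynamic features across consecutive frames, and the ablation table lists a "Motion Consistency Checks" variant. This excerpt does not specify the exact test that IPL-SLAM uses. A common choice, used for example by DS-SLAM, is the epipolar distance test. A static point matched between two frames should lie on the epipolar line induced by its match, so a large point-to-line distance suggests independent motion. The sketch assumes that the fundamental matrix `F` is already known. In practice, it is usually estimated from all matches with RANSAC, for example with OpenCV's `cv2.findFundamentalMat`. The 1-pixel threshold and all function names are hypothetical.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ x == np.cross(t, x)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def fundamental_from_pose(K, R, t):
    """F such that x2^T F x1 = 0 for pixels x1, x2 of a static point with X2 = R X1 + t."""
    K_inv = np.linalg.inv(K)
    return K_inv.T @ skew(t) @ R @ K_inv


def epipolar_distances(F, pts1, pts2):
    """Pixel distance of each pts2[i] to the epipolar line F @ [pts1[i], 1]."""
    ones = np.ones((len(pts1), 1))
    x1 = np.hstack([pts1, ones])
    x2 = np.hstack([pts2, ones])
    lines = x1 @ F.T                      # row i is (F @ x1[i])
    num = np.abs(np.sum(lines * x2, axis=1))
    den = np.hypot(lines[:, 0], lines[:, 1])
    return num / den


def motion_inconsistent(F, pts1, pts2, thresh_px=1.0):
    """True where a match violates the epipolar constraint (likely dynamic)."""
    return epipolar_distances(F, pts1, pts2) > thresh_px


def project(K, X):
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixels."""
    uv = X @ K.T
    return uv[:, :2] / uv[:, 2:3]


if __name__ == "__main__":
    K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
    R, t = np.eye(3), np.array([0.1, 0.0, 0.0])   # camera moves 10 cm sideways
    X1 = np.array([[0.0, 0.0, 2.0], [0.5, 0.2, 3.0], [-0.3, 0.1, 2.5], [0.2, -0.4, 4.0]])
    X2 = X1 @ R.T + t
    X2[1] += [0.0, 0.3, 0.0]                       # point 1 also moves by itself
    F = fundamental_from_pose(K, R, t)
    print(motion_inconsistent(F, project(K, X1), project(K, X2)).tolist())
    # [False, True, False, False]
```

With a purely sideways baseline, the epipolar lines are image rows. As a result, the point that moves vertically by itself ends up 50 px away from its epipolar line, while the static points lie on their lines.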

3.4. Remove Dynamic Point and Line Features

Algorithm 1. Dynamic region recognition.
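
Section 3.4 removes dynamic line features as well as dynamic points. This excerpt does not state how the angle-based filter named in the contributions is defined. The sketch below therefore assumes two hypothetical criteria for a matched pair of LSD segments from consecutive frames. First, a segment is discarded if more than a fraction `max_mask_ratio` of the points sampled along it fall inside the dynamic mask. Second, it is discarded if its orientation changes by more than `max_angle_deg` between the two frames. Segments are given as `(x1, y1, x2, y2)` in pixels. Both thresholds and all function names are illustrative.

```python
import numpy as np


def segment_mask_ratio(seg, dynamic_mask, n_samples=20):
    """Fraction of points sampled along the segment that lie inside the mask."""
    x1, y1, x2, y2 = seg
    s = np.linspace(0.0, 1.0, n_samples)
    h, w = dynamic_mask.shape
    u = np.clip(np.round(x1 + s * (x2 - x1)).astype(int), 0, w - 1)
    v = np.clip(np.round(y1 + s * (y2 - y1)).astype(int), 0, h - 1)
    return float(dynamic_mask[v, u].mean())


def orientation_diff(seg_a, seg_b):
    """Smallest angle (radians) between two undirected segments, in [0, pi/2]."""
    ta = np.arctan2(seg_a[3] - seg_a[1], seg_a[2] - seg_a[0])
    tb = np.arctan2(seg_b[3] - seg_b[1], seg_b[2] - seg_b[0])
    d = abs(ta - tb) % np.pi            # segment direction is ambiguous up to pi
    return min(d, np.pi - d)


def keep_static_lines(prev_segs, curr_segs, dynamic_mask,
                      max_mask_ratio=0.3, max_angle_deg=5.0):
    """prev_segs[i] and curr_segs[i] are assumed to be a matched pair."""
    keep = []
    for a, b in zip(prev_segs, curr_segs):
        in_mask = segment_mask_ratio(b, dynamic_mask) > max_mask_ratio
        rotated = np.degrees(orientation_diff(a, b)) > max_angle_deg
        keep.append(not (in_mask or rotated))
    return np.array(keep)


if __name__ == "__main__":
    mask = np.zeros((100, 100), dtype=bool)
    mask[40:60, 40:60] = True                      # one dynamic instance
    prev = [(10, 10, 30, 10), (42, 45, 58, 45), (70, 70, 90, 70)]
    curr = [(11, 10, 31, 10), (43, 46, 57, 46), (70, 70, 88, 80)]
    print(keep_static_lines(prev, curr, mask).tolist())  # [True, False, False]
```

In the example, the second segment lies entirely on the dynamic instance, and the third rotates by about 29 degrees between frames. Both are therefore rejected.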

3.5. Optimization Model Based on Static Point and Line Features
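
Section 3.5 optimizes the camera pose over the remaining static points and lines. A standard formulation in point-line SLAM has two error terms. The point error is the pixel offset between an observed keypoint and its reprojected map point. The line error is the pair of distances from the two reprojected 3D endpoints to the observed 2D line l = (a, b, c), normalized so that a² + b² = 1. The sketch below evaluates such a cost for a pose (R, t). The adaptive weight is an assumption for illustration only: `adaptive_line_weight` raises the line weight when few static points remain, bounded by `w_max`. The paper's actual weighting function is not reproduced here. Minimizing the cost over the pose would normally be handed to a nonlinear least-squares solver, such as g2o, which ORB-SLAM2 uses.

```python
import numpy as np


def project(K, R, t, X):
    """Project (N, 3) world points with pose (R, t) into (N, 2) pixels."""
    Xc = X @ R.T + t                     # world -> camera frame
    uv = Xc @ K.T
    return uv[:, :2] / uv[:, 2:3]


def point_residuals(K, R, t, X, obs):
    """Observed keypoint minus reprojected map point, (N, 2) in pixels."""
    return obs - project(K, R, t, X)


def line_residuals(K, R, t, P, Q, seg_obs):
    """Signed pixel distances of reprojected 3D endpoints P, Q to the observed 2D lines.

    seg_obs: (M, 4) observed segments (x1, y1, x2, y2); P, Q: (M, 3) world endpoints.
    """
    ones = np.ones((len(seg_obs), 1))
    a = np.hstack([seg_obs[:, 0:2], ones])
    b = np.hstack([seg_obs[:, 2:4], ones])
    lines = np.cross(a, b)                                        # line through a and b
    lines /= np.linalg.norm(lines[:, :2], axis=1, keepdims=True)  # l . x is now a pixel distance
    p = np.hstack([project(K, R, t, P), ones])
    q = np.hstack([project(K, R, t, Q), ones])
    return np.stack([np.sum(lines * p, axis=1), np.sum(lines * q, axis=1)], axis=1)


def adaptive_line_weight(n_points, n_ref=150, w_max=4.0):
    """Hypothetical rule: weight lines more when few static points remain."""
    return float(np.clip(n_ref / max(n_points, 1), 1.0, w_max))


def pose_cost(K, R, t, X, obs, P, Q, seg_obs):
    """Weighted sum of squared point and line reprojection errors for pose (R, t)."""
    e_p = point_residuals(K, R, t, X, obs)
    e_l = line_residuals(K, R, t, P, Q, seg_obs)
    w_l = adaptive_line_weight(len(X))
    return float(np.sum(e_p ** 2) + w_l * np.sum(e_l ** 2))
```

Because the weight depends only on feature counts and not on the pose, the cost remains a well-defined function of (R, t) during a solve. A weight recomputed from the residuals at every evaluation would not have this property.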

4. Experiments

4.1. Experiments in TUM RGB-D Dataset

4.2. Assessing the Efficiency of Time Utilization

4.3. Experiments in Real-World Environments

4.4. Point Cloud Map

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Andrew, A.M. Multiple view geometry in computer vision. Kybernetes 2001, 30, 1333–1341.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1271–1278.

- Smith, R.C.; Cheeseman, P. On the representation and estimation of spatial uncertainty. Int. J. Robot. Res. 1986, 5, 56–68.

- Kohlbrecher, S.; von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 155–160.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the Computer Vision – ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part II 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 834–849.

- Endres, F.; Hess, J.; Engelhard, N.; Sturm, J.; Cremers, D.; Burgard, W. An evaluation of the RGB-D SLAM system. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1691–1696.

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 15–22.

- Cheng, J.; Wang, C.; Meng, M.Q.H. Robust visual localization in dynamic environments based on sparse motion removal. IEEE Trans. Autom. Sci. Eng. 2019, 17, 658–669.

- Cheng, J.; Zhang, H.; Meng, M.Q.H. Improving visual localization accuracy in dynamic environments based on dynamic region removal. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1585–1596.

- Xu, G.; Yu, Z.; Xing, G.; Zhang, X.; Pan, F. Visual odometry algorithm based on geometric prior for dynamic environments. Int. J. Adv. Manuf. Technol. 2022, 122, 235–242.

- Wang, R.; Wan, W.; Wang, Y.; Di, K. A new RGB-D SLAM method with moving object detection for dynamic indoor scenes. Remote Sens. 2019, 11, 1143.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- Yu, C.; Liu, Z.; Liu, X.J.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A semantic visual SLAM towards dynamic environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1168–1174.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 225–234.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Proceedings of the Computer Vision – ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; Proceedings, Part IV 11. Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2564–2571.

- Wang, S.; Zheng, D.; Li, Y. LiDAR-SLAM loop closure detection based on multi-scale point cloud feature transformer. Meas. Sci. Technol. 2024, 35, 036305.1–036305.16.

- Zhong, J.; Qian, H. Dynamic point-line SLAM based on lightweight object detection. Appl. Intell. 2025, 55, 260.

- Li, C.; Jiang, S.; Zhou, K. DYR-SLAM: Enhanced dynamic visual SLAM with YOLOv8 and RTAB-Map. J. Supercomput. 2025, 81, 718.

- Fang, Y.; Dai, B. An improved moving target detecting and tracking based on optical flow technique and Kalman filter. In Proceedings of the 2009 4th International Conference on Computer Science & Education, Nanning, China, 25–28 July 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1197–1202.

- Li, S.; Lee, D. RGB-D SLAM in dynamic environments using static point weighting. IEEE Robot. Autom. Lett. 2017, 2, 2263–2270.

- Sun, Y.; Liu, M.; Meng, M.Q.H. Improving RGB-D SLAM in dynamic environments: A motion removal approach. Robot. Auton. Syst. 2017, 89, 110–122.

- Mu, X.; He, B.; Zhang, X.; Yan, T.; Chen, X.; Dong, R. Visual navigation features selection algorithm based on instance segmentation in dynamic environment. IEEE Access 2019, 8, 465–473.

- Elayaperumal, D.; KS, S.S.; Jeong, J.H.; Joo, Y.H. Semi-supervised one-shot learning for video object segmentation in dynamic environments. Multimed. Tools Appl. 2025, 84, 3095–3115.

- Zhang, L.; Wei, L.; Shen, P.; Wei, W.; Zhu, G.; Song, J. Semantic SLAM based on object detection and improved OctoMap. IEEE Access 2018, 6, 75545–75559.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Xu, Z.; Song, Y.; Pang, B.; Xu, Q.; Yuan, X. Deep learning-based visual SLAM for indoor dynamic scenes. Appl. Intell. 2025, 55, 434.

- Zhu, H.; Bai, S.; Shi, J.; Lu, J.; Zuo, X.; Huang, S.; Yao, X. Ellipsoid-SLAM: Enhancing dynamic scene understanding through ellipsoidal object representation and trajectory tracking. Vis. Comput. 2025, 41, 7493–7508.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Liu, Y.; Miura, J. RDS-SLAM: Real-time dynamic SLAM using semantic segmentation methods. IEEE Access 2021, 9, 23772–23785.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Meng, W.; He, Z.; Lin, Z.; Shi, D.; Guo, Z.; Huang, J.; Park, B.; Wang, Y. Image-free Hu invariant moment measurement by single-pixel detection. Opt. Laser Technol. 2025, 181, 111581.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 573–580.

- Hu, X.; Zhang, Y.; Cao, Z.; Ma, R.; Wu, Y.; Deng, Z.; Sun, W. CFP-SLAM: A Real-time Visual SLAM Based on Coarse-to-Fine Probability in Dynamic Environments. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 4399–4406.

- Wu, W.; Guo, L.; Gao, H.; You, Z.; Liu, Y.; Chen, Z. YOLO-SLAM: A semantic SLAM system towards dynamic environment with geometric constraint. Neural Comput. Appl. 2022, 34, 6011–6026.

- Zhang, C.; Huang, T.; Zhang, R.; Yi, X. PLD-SLAM: A new RGB-D SLAM method with point and line features for indoor dynamic scene. ISPRS Int. J. Geo-Inf. 2021, 10, 163.

| Seq. | DS-SLAM RMSE | DS-SLAM Mean | DS-SLAM Median | DS-SLAM STD | IPL-SLAM RMSE | IPL-SLAM Mean | IPL-SLAM Median | IPL-SLAM STD |
|---|---|---|---|---|---|---|---|---|
| fr3/w/xyz | 0.0288 | 0.0206 | 0.0166 | 0.0201 | 0.0129 | 0.0112 | 0.0105 | 0.0068 |
| fr3/w/rpy | 0.4752 | 0.4051 | 0.2856 | 0.2484 | 0.0827 | 0.0592 | 0.0526 | 0.0415 |
| fr3/w/half | 0.0283 | 0.0242 | 0.0209 | 0.0147 | 0.0315 | 0.0269 | 0.0226 | 0.0164 |
| fr3/w/static | 0.0076 | 0.0069 | 0.0063 | 0.0036 | 0.0113 | 0.0104 | 0.0098 | 0.0043 |
| fr3/s/rpy | 0.0213 | 0.0155 | 0.0118 | 0.0146 | 0.0198 | 0.0149 | 0.0109 | 0.0122 |
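
In TUM RGB-D evaluations, RMSE, mean, median and STD columns like these usually summarize the absolute trajectory error (ATE); the sketch below assumes that is the case here. It follows the approach of the TUM benchmark's `evaluate_ate.py` script. The estimated positions are rigidly aligned to ground truth (rotation and translation, no scale, solved in closed form with an SVD), and the per-pose translational errors are then summarized. The sketch assumes that both trajectories are already time-associated (N, 3) position arrays. The function names are illustrative.

```python
import numpy as np


def align_rigid(est, gt):
    """Rotation R and translation t minimizing sum ||R @ est_i + t - gt_i||^2 (Kabsch/Horn)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)           # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(3)
    D[2, 2] = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against a reflection
    R = Vt.T @ D @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_stats(est, gt):
    """RMSE, mean, median and (population) STD of translational error after alignment."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    err = np.linalg.norm(aligned - gt, axis=1)
    return {
        "rmse": float(np.sqrt(np.mean(err ** 2))),
        "mean": float(err.mean()),
        "median": float(np.median(err)),
        "std": float(err.std()),
    }
```

Because the alignment removes any global rotation and offset, only the shape of the estimated trajectory contributes to the error. With the population STD, the identity RMSE² = mean² + STD² holds for each sequence.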

| Seq. | ORB-SLAM2 | Dyna-SLAM | CFP-SLAM | YOLO-SLAM | PLD-SLAM | DPL-SLAM | IPL-SLAM |
|---|---|---|---|---|---|---|---|
| fr3/w/xyz | 0.5359 | 0.0158 | 0.0141 | 0.0194 | 0.0144 | 0.0627 | 0.0129 |
| fr3/w/rpy | 0.7408 | 0.0402 | 0.0368 | 0.0933 | 0.2212 | 0.0310 | 0.0827 |
| fr3/w/half | 0.3962 | 0.0274 | 0.0237 | 0.0268 | 0.0261 | 0.0242 | 0.0315 |
| fr3/w/static | 0.3540 | 0.0080 | 0.0066 | 0.0094 | 0.0065 | 0.0068 | 0.0113 |
| fr3/s/rpy | 0.0214 | 0.0302 | 0.0253 | – | 0.0222 | – | 0.0198 |
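
To express the comparison table in relative terms, the snippet below computes the percentage RMSE reduction of IPL-SLAM relative to ORB-SLAM2. It uses only the values in the table.

```python
# RMSE values copied from the comparison table: (ORB-SLAM2, IPL-SLAM).
rmse = {
    "fr3/w/xyz": (0.5359, 0.0129),
    "fr3/w/rpy": (0.7408, 0.0827),
    "fr3/w/half": (0.3962, 0.0315),
    "fr3/w/static": (0.3540, 0.0113),
    "fr3/s/rpy": (0.0214, 0.0198),
}


def reduction_pct(baseline, ours):
    """Percentage by which `ours` lowers the baseline RMSE."""
    return 100.0 * (baseline - ours) / baseline


for seq, (orb, ipl) in rmse.items():
    print(f"{seq:<13} {reduction_pct(orb, ipl):5.1f} %")
```

For the five sequences in table order, the reductions are about 97.6 %, 88.8 %, 92.0 %, 96.8 % and 7.5 %. The gain is smallest on fr3/s/rpy, a "sitting" sequence in which ORB-SLAM2 already performs well. Extending the dictionary with the other columns yields comparisons against the dynamic methods. For example, on fr3/w/rpy, DPL-SLAM (0.0310) and CFP-SLAM (0.0368) report a lower RMSE than IPL-SLAM (0.0827).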

| Seq. | YOLOv8 | YOLOv8 + LSD | YOLOv8 + Motion Consistency Checks | YOLOv8 + LSD + Adaptive Weights |
|---|---|---|---|---|
| fr3/w/xyz | 0.012976 | 0.012945 | 0.012923 | 0.012909 |
| fr3/w/rpy | 0.082932 | 0.082801 | 0.082795 | 0.082748 |
| fr3/w/half | 0.031625 | 0.031523 | 0.031567 | 0.031549 |
| fr3/w/static | 0.011356 | 0.011332 | 0.011341 | 0.011324 |

| Seq. | ORB Feature Extraction (DS-SLAM) | SegNet (DS-SLAM) | ORB + LSD Feature Extraction (IPL-SLAM) | YOLOv8 (IPL-SLAM) |
|---|---|---|---|---|
| fr3/w/xyz | 15.65 | 75.25 | 56.35 | 125.18 |
| Real-world scene | 18.23 | 78.36 | 58.56 | 127.35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Yao, D.; Liu, N.; Yuan, Y. Towards Biologically-Inspired Visual SLAM in Dynamic Environments: IPL-SLAM with Instance Segmentation and Point-Line Feature Fusion. Biomimetics 2025, 10, 558. https://doi.org/10.3390/biomimetics10090558
