3.1. The Eye as a Rotating Rigid Ball

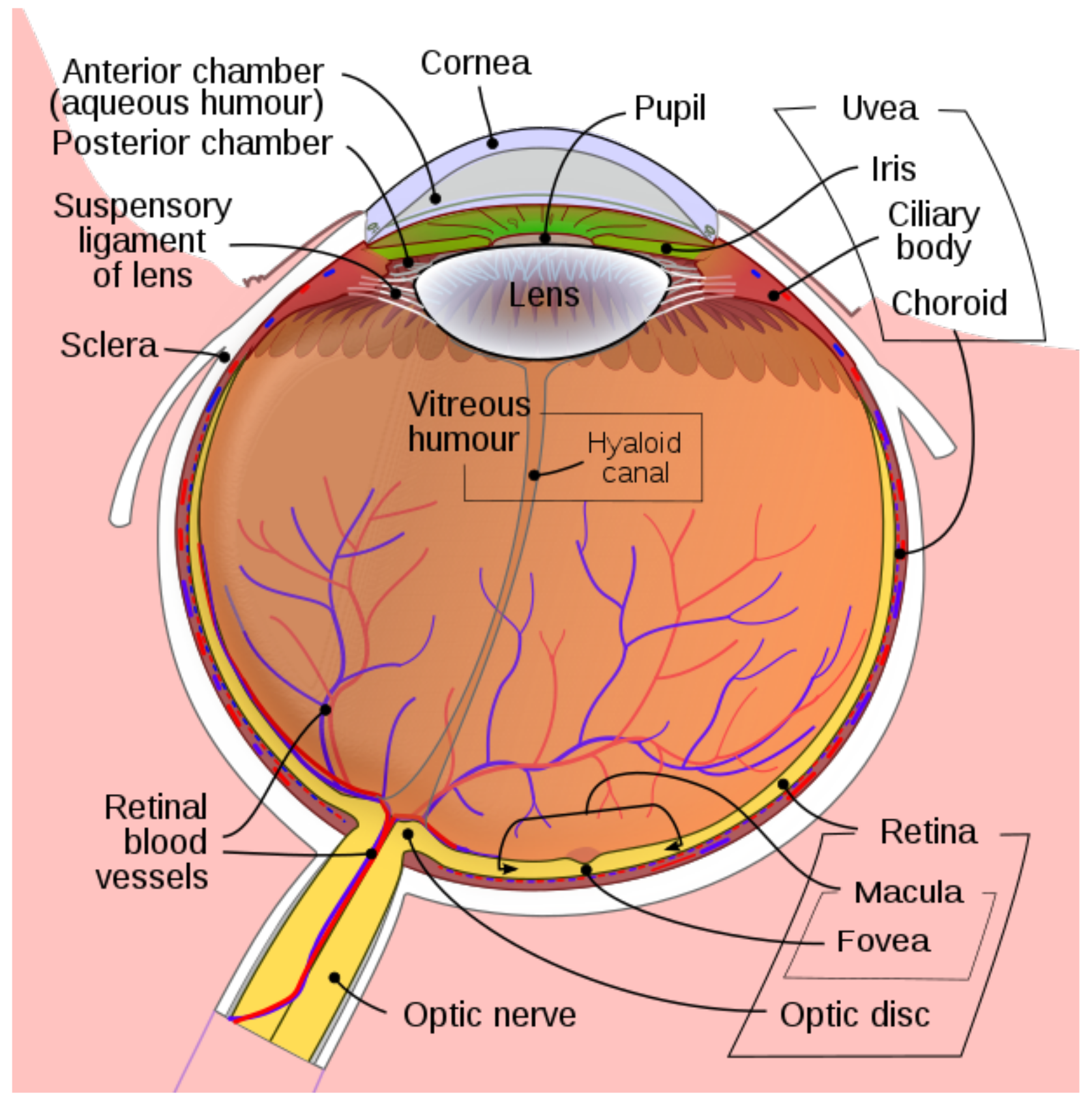

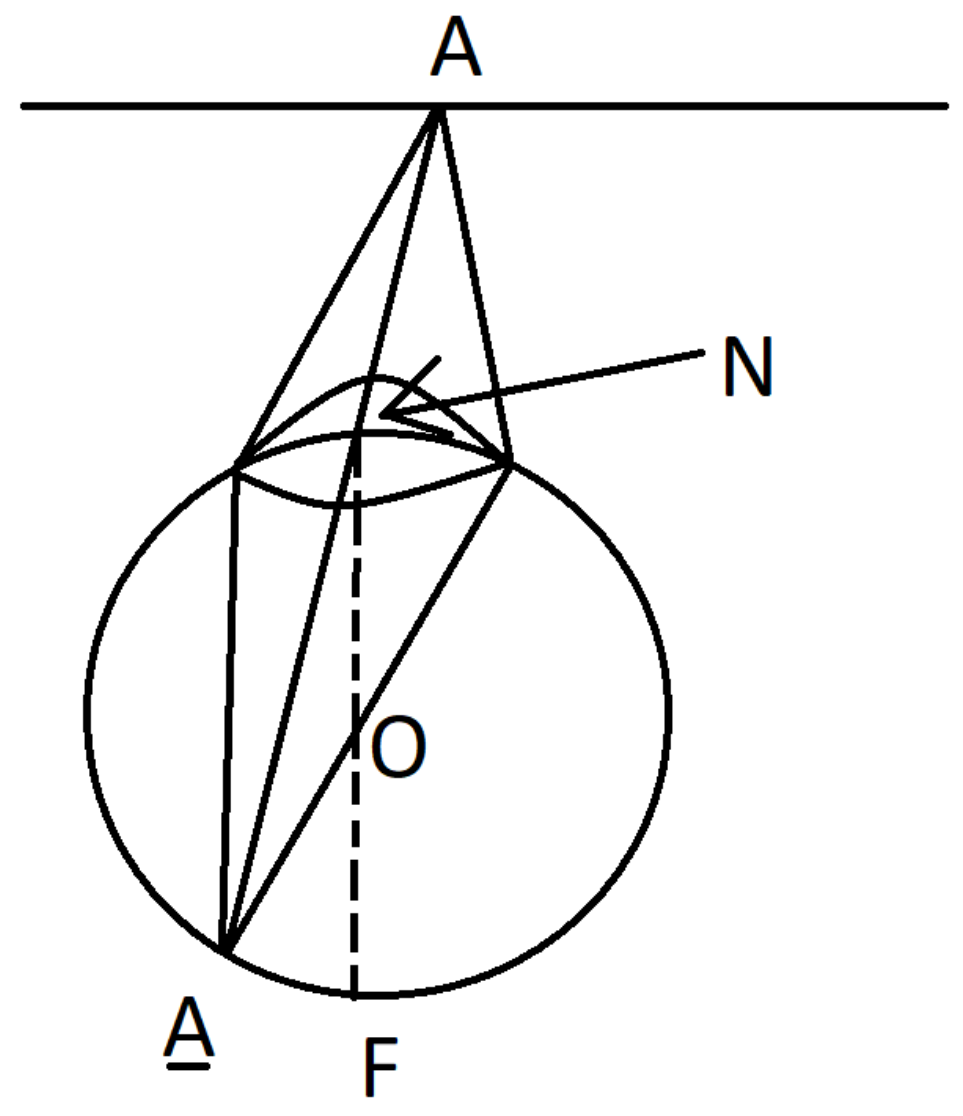

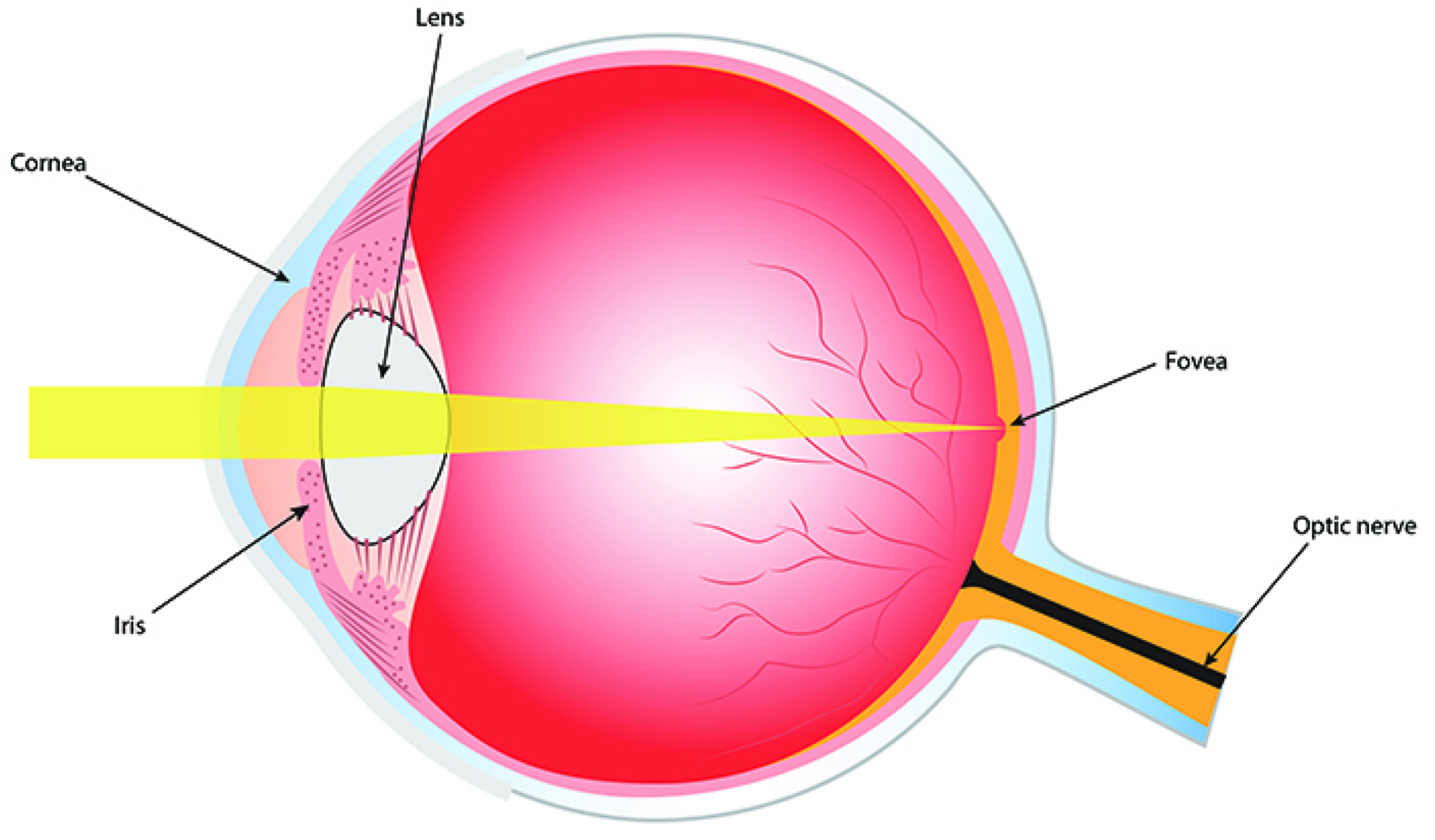

From a mechanical point of view, the eye is a rigid ball which can rotate around its center O. The retina occupies only part of the eye sphere, but for simplicity we identify it with the whole eye sphere. We assume that the nodal point N (or optical center) of the eye belongs to the eye sphere and that the opposite point F of the sphere is the center of the fovea.

For a fixed position of the head, there is a standard initial position of the eye sphere, described by the canonical orthonormal frame $(i, j, k)$, which determines the standard coordinates of the Euclidean space with center O. We will consider these coordinates as the spatiotopic (or world-centered) coordinates and at the same time as the head-centered coordinates. Here $i$ indicates the standard frontal direction of the gaze, $j$ is the lateral direction from right to left, which is orthogonal to $i$, and $k$ is the vertical direction up.

Any other position of the eye is described by an orthogonal transformation $R \in SO(3)$ which maps the frame $(i, j, k)$ into another frame $(Ri, Rj, Rk)$, where $Ri$ is the new direction of the gaze. Recall that any such transformation is a rotation about some axis through some angle.
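The rotation in the last paragraph can be made concrete with Rodrigues' formula, $Rx = x\cos\theta + (u\times x)\sin\theta + u\langle u, x\rangle(1-\cos\theta)$ for a unit axis $u$. The following is a minimal plain-Python sketch; the helper names (`rotate`, `cross`, `dot`) and the sample axis and angle are illustrative assumptions, not taken from the text. It applies the formula to the standard frame and checks that the result is again a right-handed orthonormal frame whose first vector is the new gaze direction.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1]*b[2] - a[2]*b[1],
            a[2]*b[0] - a[0]*b[2],
            a[0]*b[1] - a[1]*b[0])

def dot(a, b):
    """Euclidean scalar product of two 3-vectors."""
    return sum(x*y for x, y in zip(a, b))

def rotate(x, u, theta):
    """Rodrigues' formula: rotate x about the unit axis u through the angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    ux, ud = cross(u, x), dot(u, x)
    return tuple(x[m]*c + ux[m]*s + u[m]*ud*(1 - c) for m in range(3))

# Standard frame: i = frontal gaze direction, j = lateral, k = vertical up.
I, J, K = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

# Hypothetical eye movement: 20 degrees about a unit axis in the (j, k)-plane.
axis = (0.0, math.cos(0.3), math.sin(0.3))
angle = math.radians(20.0)

frame = [rotate(e, axis, angle) for e in (I, J, K)]  # the new frame (Ri, Rj, Rk)
gaze = frame[0]                                      # the new gaze direction Ri
```

Because the chosen axis is orthogonal to $i$, the gaze moves by exactly the rotation angle. That is, $\langle Ri, i\rangle = \cos\theta$.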

Definition of a Straight Line by Helmholtz

If the frontal plane (orthogonal to the line of sight) is far enough away compared to the size of the eyeball, then the central projection can be considered as a conformal map.

H. von Helmholtz gave the following physiological definition of a straight line:

A straight line is a curve ℓ which is characterized by the following property: when the gaze moves along the curve ℓ, the retinal image of ℓ does not change.

Indeed, given a straight line ℓ, let us denote by $\Pi$ the plane through ℓ and the center O of the eyeball, and by $n$ its normal vector. Assume that for the standard position $S^2$ of the eye, the gaze is concentrated on a point $A \in \ell$, so that the gaze direction $i$ lies in $\Pi$. The retinal image of ℓ belongs to the intersection $\Pi \cap S^2$ between $\Pi$ and the standard position $S^2$ of the eye sphere. When the gaze moves along ℓ, the eye rotates about the axis $n$. At each moment $t$, the new position of the eye sphere is obtained from $S^2$ by a rotation $R_t$ about $n$, which maps $\Pi$ onto itself. Therefore the retinal image $R_t(\Pi \cap S^2) = \Pi \cap S^2$ remains the same for all $t$.
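The key step of this argument is that a rotation about the normal $n$ maps the plane $\Pi$ and the great circle $\Pi\cap S^2$ onto themselves. The sketch below checks this numerically. The normal $n$ is an arbitrary hypothetical value, and `max_offplane` is a helper introduced only for this illustration. Rotating samples of the great circle about $n$ keeps every point in $\Pi$ and only slides the points along the circle.

```python
import math

def cross(a, b):
    return (a[1]*b[2] - a[2]*b[1], a[2]*b[0] - a[0]*b[2], a[0]*b[1] - a[1]*b[0])

def dot(a, b):
    return sum(x*y for x, y in zip(a, b))

def normalize(v):
    r = math.sqrt(dot(v, v))
    return tuple(c/r for c in v)

def rotate(x, u, theta):
    """Rodrigues' formula: rotate x about the unit axis u through the angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    ux, ud = cross(u, x), dot(u, x)
    return tuple(x[m]*c + ux[m]*s + u[m]*ud*(1 - c) for m in range(3))

# Hypothetical plane Pi through the eye center O, given by its unit normal n.
n = normalize((0.2, -0.5, 1.0))
e1 = normalize(cross(n, (1.0, 0.0, 0.0)))  # orthonormal basis (e1, e2) of Pi
e2 = cross(n, e1)

# Samples of the great circle Pi intersect S^2, which carries the retinal image of the line.
circle = [tuple(math.cos(s)*e1[m] + math.sin(s)*e2[m] for m in range(3))
          for s in (2*math.pi*q/12 for q in range(12))]

def max_offplane(t):
    """Largest |<R x, n>| over the sampled circle after a rotation R about n by angle t."""
    return max(abs(dot(rotate(x, n, t), n)) for x in circle)
```

For every rotation angle, `max_offplane` stays at rounding level. A sample point such as `e1` moves to $\cos t\,e_1 + \sin t\,e_2$, which is a point on the same circle.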

We will see that saccades correspond to such movements along the straight lines.

3.3. The Geometry of the Quaternions

Now we recall the basic facts about quaternions and the Hopf bundle which we need to reformulate Donders’ and Listing’s laws in terms of Listing’s section of the Hopf bundle.

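Let $\mathbb{H} = \mathbb{R}1 + E$ be the algebra of quaternions with the unit 1. Here the space $E = \operatorname{span}(i, j, k)$ of imaginary quaternions is the standard Euclidean vector space with the orthonormal basis $i, j, k$. The product of two elements $x, y \in E$ is the sum of minus their scalar product and their cross-product:

$$xy = -\langle x, y\rangle + x \times y.$$

The group $Sp(1) = \{q \in \mathbb{H} : |q| = 1\}$ of unit quaternions is naturally identified with the three-dimensional sphere $S^3$. Its Lie algebra is the algebra $sp(1) = E$ of imaginary quaternions, with the cross-product as the Lie bracket (up to the factor 2, since $[x, y] = xy - yx = 2\,x \times y$).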
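The product rule can be checked numerically. The following is a minimal plain-Python sketch. Storing a quaternion as a tuple `(w, x, y, z)` for $w + xi + yj + zk$, and the helper names, are choices made here for illustration rather than notation from the text. The sketch checks $ij = k$, $i^2 = -1$, and the formula $xy = -\langle x, y\rangle + x\times y$ for imaginary quaternions.

```python
def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z) = w + x i + y j + z k."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

ONE, I, J, K = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)

def pure_product_formula(x, y):
    """-<x, y> + x cross y for imaginary quaternions x, y in E, given as 3-vectors."""
    dot = x[0]*y[0] + x[1]*y[1] + x[2]*y[2]
    cross = (x[1]*y[2] - x[2]*y[1], x[2]*y[0] - x[0]*y[2], x[0]*y[1] - x[1]*y[0])
    return (-dot,) + cross

x, y = (1.0, -2.0, 0.5), (0.3, 0.7, -1.2)
lhs = qmul((0.0,) + x, (0.0,) + y)   # product computed in H
rhs = pure_product_formula(x, y)     # product predicted by the formula
```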

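Denote by $\lambda: Sp(1) \to SO(\mathbb{H})$, $\lambda_q x = qx$, the (faithful) left representation. Denote by $\rho: Sp(1) \to SO(\mathbb{H})$, $\rho_q x = xq^{-1}$, the (faithful) right representation, which commutes with the left representation. Together they define the representation

$$Sp(1) \times Sp(1) \to SO(4), \qquad (p, q) \mapsto \lambda_p \rho_q,$$

with the kernel $\{(1, 1), (-1, -1)\}$.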

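The representation

$$\mathrm{Ad}: Sp(1) \to SO(4), \qquad \mathrm{Ad}_q x = q x q^{-1},$$

is called the adjoint representation. It has the kernel $\{\pm 1\}$, acts trivially on the real line $\mathbb{R}1$ and defines the isomorphism

$$Sp(1)/\{\pm 1\} \cong SO(E) = SO(3),$$

which shows that the group $Sp(1)$ is the universal covering of the orthogonal group $SO(3)$.

The standard scalar product $\langle p, q\rangle = \operatorname{Re}(p\bar q)$ in $\mathbb{H}$ induces the standard Riemannian metric of the unit 3-sphere $S^3 = Sp(1)$. Here, for $q = q_0 + x$ with $x \in E$, the quaternion $\bar q = q_0 - x$ is the conjugate quaternion. This metric is invariant with respect to the (transitive) action of the group $Sp(1) \times Sp(1)$.

The group $\mathrm{Ad}_{Sp(1)}$ preserves the points $\pm 1$, which will be considered as the poles of $S^3$. It acts transitively on the equator $S^2 = S^3 \cap E$, which is the standard Euclidean unit sphere of the Euclidean space $E$. The geodesics of $S^3$ are the great circles, i.e., the intersections of $S^3$ with 2-dimensional subspaces of $\mathbb{H}$.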
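The properties of $\mathrm{Ad}$ listed above can be checked numerically: the kernel $\{\pm 1\}$, the preservation of the norm, the trivial action on $\mathbb{R}1$ and the homomorphism property. The sketch stores quaternions as tuples `(w, x, y, z)`. The helper names and the sample quaternions are hypothetical and chosen for illustration only.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z) = w + x i + y j + z k."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    """Conjugate quaternion; equals the inverse for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])

def normalize(q):
    r = math.sqrt(sum(c*c for c in q))
    return tuple(c/r for c in q)

def ad(q, v):
    """Ad_q v = q v q^{-1} for a unit quaternion q and v in E given as a 3-vector."""
    w = qmul(qmul(q, (0.0,) + tuple(v)), conj(q))
    return w[1:]  # the real part vanishes for imaginary v

p = normalize((0.3, -0.8, 0.1, 0.5))
q = normalize((0.9, 0.2, -0.3, 0.4))
v = (0.5, -1.0, 2.0)
```

In this sketch, `ad(q, v)` and `ad(-q, v)` coincide, which is the $\mathbb{Z}_2$ ambiguity that makes $Sp(1)$ a double cover of $SO(3)$.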

The following simple facts are important for us, and we state them as a lemma.

Lemma 1.

- (i)

Any point $a \in S^3$ different from $\pm 1$ belongs to a unique 1-parameter subgroup $e^{tp} = \cos t + \sin t\,p$ (the meridian). It can be canonically represented as $a = \cos\theta + \sin\theta\,p$, $0 < \theta < \pi$, where $p \in S^2$ is the point of the equator closest to $a$.

- (ii)

Points $p \in S^2$ bijectively correspond to oriented 1-parameter subgroups $e^{tp}$ of $Sp(1)$, parametrized by arclength.

- (iii)

Any orbit of the left action (as well as of the right action) of a 1-parameter subgroup is a geodesic of the sphere $S^3$. All geodesics are exhausted by such orbits.

3.3.1. The Adjoint Action of the Group $Sp(1)$

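Lemma 2.

- (i)

The 1-parameter subgroup $e^{tv} = \cos t + \sin t\,v$ of $Sp(1)$ generated by a unit vector $v \in S^2$ acts on the sphere $S^2$ as the 1-parameter group of rotations about the axis $v$:

$$\mathrm{Ad}_{e^{tv}} = R_v(2t),$$

where $R_v(\alpha)$ denotes the rotation about $v$ through the angle $\alpha$.

- (ii)

More generally, let $\gamma(t) = e^{tv}a$, $a \in Sp(1)$, be a geodesic of $S^3$, considered as the orbit of a 1-parameter subgroup $e^{tv}$. Then, for the adjoint action on $x \in S^2$, the curve $\mathrm{Ad}_{\gamma(t)}x$ is given by

$$\mathrm{Ad}_{\gamma(t)}x = R_v(2t)\,\mathrm{Ad}_a x.$$

In other words, the orbit is the circle obtained from the point $\mathrm{Ad}_a x$ by the action of the group of rotations about the axis $v$.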

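Proof.

- (i)

The adjoint image $\mathrm{Ad}_{e^{tv}}$ of the 1-parameter subgroup $e^{tv}$ is a 1-parameter subgroup of $SO(3)$. It preserves the vector $v$, since $e^{tv}$ commutes with $v$, and hence it is the group of rotations about $v$. To calculate the angle of the rotation, we apply $\mathrm{Ad}_{e^{tv}}$ to a unit vector $w \perp v$, which anticommutes with $v$:

$$\mathrm{Ad}_{e^{tv}}w = e^{tv}we^{-tv} = e^{tv}e^{tv}w = e^{2tv}w = \cos 2t\,w + \sin 2t\,vw = \cos 2t\,w + \sin 2t\,(v \times w).$$

This shows that $\mathrm{Ad}_{e^{tv}} = R_v(2t)$.

- (ii)

follows from $\mathrm{Ad}_{e^{tv}a} = \mathrm{Ad}_{e^{tv}}\mathrm{Ad}_a$ and (i).

□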
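The factor 2 in Lemma 2 (i) is easy to confirm numerically. For $w \perp v$, $\mathrm{Ad}_{e^{tv}}w$ should equal $\cos 2t\,w + \sin 2t\,(v\times w)$, and $v$ itself should stay fixed. A plain-Python sketch follows. The tuple representation `(w, x, y, z)`, the helper names and the sample vectors are assumptions made for illustration.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def ad(q, v):
    """Ad_q v = q v q^{-1} for a unit quaternion q and a 3-vector v."""
    return qmul(qmul(q, (0.0,) + tuple(v)), conj(q))[1:]

def cross(a, b):
    return (a[1]*b[2] - a[2]*b[1], a[2]*b[0] - a[0]*b[2], a[0]*b[1] - a[1]*b[0])

def exp_unit(t, v):
    """e^{tv} = cos t + sin t v for a unit imaginary quaternion v (given as a 3-vector)."""
    return (math.cos(t),) + tuple(math.sin(t)*c for c in v)

v = (1/3, 2/3, 2/3)    # unit rotation axis
w = (2/3, 1/3, -2/3)   # unit vector orthogonal to v, hence anticommuting with v

def lemma2_error(t):
    """Largest component of Ad_{e^{tv}} w - (cos 2t w + sin 2t v x w)."""
    lhs = ad(exp_unit(t, v), w)
    vw = cross(v, w)
    rhs = tuple(math.cos(2*t)*w[m] + math.sin(2*t)*vw[m] for m in range(3))
    return max(abs(a - b) for a, b in zip(lhs, rhs))
```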

3.3.2. The Hopf Bundle and Listing’s Sphere

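The Hopf bundle is defined as the natural projection

$$\chi: S^3 = Sp(1) \to S^2 = \mathrm{Ad}_{Sp(1)}i, \qquad q \mapsto \mathrm{Ad}_q i = q\,i\,q^{-1},$$

of $Sp(1)$ to the $\mathrm{Ad}_{Sp(1)}$-orbit $S^2$ of the point $i$.

The base sphere $S^2$ is called the Euclidean 2-sphere. The points $\pm i$ will be considered the north and south poles of $S^2$. We denote by $S^1 = S^2 \cap \operatorname{span}(j, k)$ the equator of $S^2$.

The Hopf bundle is a nontrivial bundle and has no global section. However, we remove just one point $-i$, together with its preimage $\chi^{-1}(-i)$, from the base sphere $S^2$. Over the punctured sphere $S^2 \setminus \{-i\}$ we will construct the canonical section $s: S^2 \setminus \{-i\} \to S^3$ of the bundle $\chi$.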
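The Hopf map and its fibers can be checked in a few lines. We check three things: $\chi(1) = i$, $\chi(j) = -i$, and invariance of $\chi$ along a fiber $q\,e^{ti}$. The last holds because $e^{ti}$ commutes with $i$. A plain-Python sketch follows; the names `chi` and `fiber_point` and the sample quaternion are hypothetical.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def normalize(q):
    r = math.sqrt(sum(c*c for c in q))
    return tuple(c/r for c in q)

def ad(q, v):
    """Ad_q v = q v q^{-1} for a unit quaternion q and a 3-vector v."""
    return qmul(qmul(q, (0.0,) + tuple(v)), conj(q))[1:]

def chi(q):
    """Hopf map chi(q) = Ad_q i, the gaze direction of the eye position q."""
    return ad(q, (1.0, 0.0, 0.0))

def exp_unit(t, v):
    return (math.cos(t),) + tuple(math.sin(t)*c for c in v)

def fiber_point(q, t):
    """The point q e^{ti} of the Hopf fiber through q (same gaze, different twist)."""
    return qmul(q, exp_unit(t, (1.0, 0.0, 0.0)))

q = normalize((0.7, -0.2, 0.5, 0.4))
```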

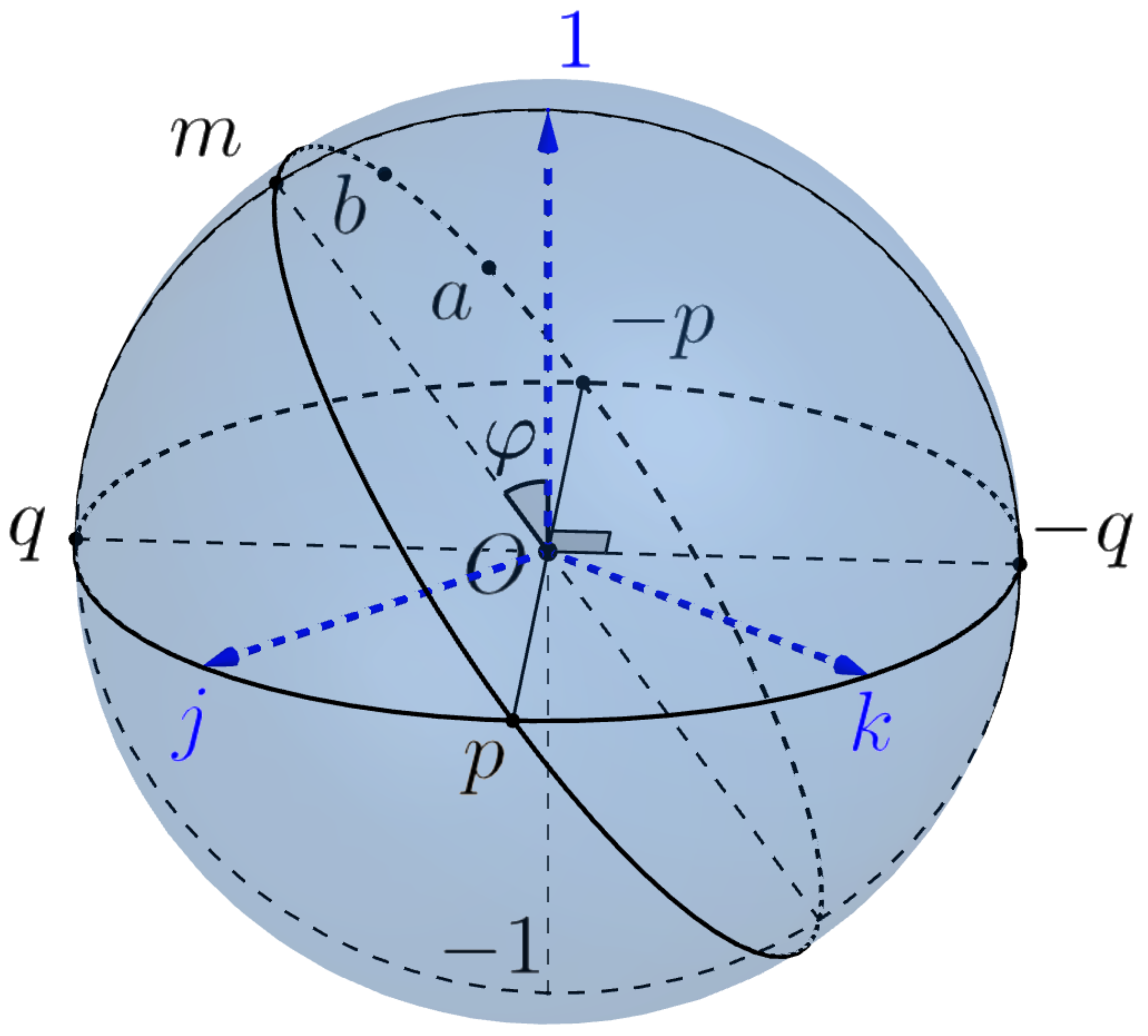

First of all, we define Listing’s sphere and Listing’s hemisphere, which play a central role in the geometry of saccades. Listing’s sphere is the intersection

$$S_L = S^3 \cap \operatorname{span}(1, j, k)$$

of the 3-sphere with the subspace spanned by the vectors $1, j, k$. In other words, it is the equator of the 3-sphere $S^3$ with respect to the poles $\pm i$, see Figure 9.

We consider the point $1$ (resp., $-1$) as the north (resp., south) pole of Listing’s sphere. We denote by $S_L^+$ (resp., $S_L^-$) the open north (resp., south) hemisphere and by $\bar S_L^+$ (resp., $\bar S_L^-$) the closed hemisphere. Note that the equator of Listing’s sphere coincides with the equator $S^1$ of the Euclidean sphere $S^2$.

3.3.3. Geometry of Listing’s Hemisphere

We consider Listing’s sphere $S_L$ as the Riemannian sphere with the induced metric of curvature 1, equipped with the polar coordinates centered at the north pole $1$. The geodesics of $S_L$ are great circles. Any point of $S_L \setminus \{\pm 1\}$ belongs to a unique 1-parameter subgroup of $Sp(1)$.

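Any point $a \in S_L$, different from $\pm 1$, can be canonically represented as

$$a = \cos\theta + \sin\theta\,p, \qquad p = \cos\varphi\,j + \sin\varphi\,k \in S^1.$$

Here $0 < \theta < \pi$ is the polar radius, i.e., the geodesic distance to the pole $1$, so that $\pi/2 - \theta$ is the geographic latitude. The angle $\varphi$ is the geographic longitude of the point $a$. The point $p$ is the geodesic projection of $a$ to the equator, i.e., the point of the intersection of the meridian $e^{tp}$ with the equator $S^1$ closest to $a$.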

Note that the coordinate lines $\varphi = \mathrm{const}$ are great circles (meridians); in particular, $\varphi = 0$ is the zero ("Greenwich") meridian. The coordinate lines $\theta = \mathrm{const}$ are parallels. The only parallel which is a geodesic is the zero parallel, i.e., the equator $S^1$.

The open Listing’s hemisphere $S_L^+$ is geodesically convex. This means that any two distinct points $a, b \in S_L^+$ determine a unique (oriented) geodesic $\gamma_{ab}$ of the sphere $S_L$ and are joined by a unique geodesic segment $[a, b] \subset S_L^+$.

Canonical Parametrization of Geodesics

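Let $a, b \in S_L^+$ be two distinct points and $\gamma_{ab}$ the oriented geodesic through them. Denote by $p$ the first point of intersection of $\gamma_{ab}$ with the equator $S^1$.

If $1 \in \gamma_{ab}$, then the geodesic is a 1-parameter subgroup, and

$$\gamma(t) = e^{tp} = \cos t + \sin t\,p$$

is its canonical parametrization.

If $1 \notin \gamma_{ab}$, the unique top point $m \in \gamma_{ab}$ with the maximal latitude has the form $m = \cos\theta_0 + \sin\theta_0\,q$. Here $q \in S^1$ is the geodesic projection of $m$ to $S^1$, and $0 < \theta_0 < \pi/2$. A great circle attains its maximal latitude at the distance $\pi/2$ from its intersection with the equator, hence $\langle m, p\rangle = 0$ and $q \perp p$. Then

$$\gamma(t) = \cos t\,p + \sin t\,m, \qquad t \in \mathbb{R},$$

is the canonical parametrization of the geodesic $\gamma_{ab}$.

The intersection $\gamma_{ab}^+ = \gamma_{ab} \cap S_L^+ = \{\gamma(t) : 0 < t < \pi\}$ of the geodesic with Listing’s hemisphere is called Listing’s semicircle.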

3.3.4. Properties of the Restriction of the Hopf Map to Listing’s Sphere

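Theorem 1. The restriction of the Hopf map χ to Listing’s sphere $S_L$ is a branched covering. More precisely:

- (i)

It maps the poles $\pm 1$ of $S_L$ into the pole $i$ of the sphere $S^2$ and the equator $S^1$ of $S_L$ into the south pole $-i$.

- (ii)

Any point $a \in S_L$ different from $\pm 1$ belongs to a unique 1-parameter subgroup $e^{tp} = \cos t + \sin t\,p$ (the meridian of Listing’s sphere), where $p \in S^1$ is the equatorial point of the meridian.

The map $\chi: e^{tp} \mapsto \cos 2t\,i + \sin 2t\,(p \times i)$ is a 2-fold covering of the meridian of Listing’s sphere onto the meridian of the Euclidean sphere $S^2$ through the point $p \times i$. It is locally isometric up to the factor 2, i.e., it doubles arclength. The restriction of χ to the open semicircle $\{e^{tp} : |t| < \pi/2\}$ is a diffeomorphism onto this meridian with the point $-i$ removed.

- (iii)

More generally, let $\gamma_{ab}$ be a geodesic through points $a, b \in S_L^+$, not passing through $\pm 1$, with the canonical parametrization $\gamma(t) = \cos t\,p + \sin t\,m$. It is the orbit $\gamma(t) = e^{tn}p$ of the 1-parameter group $e^{tn}$, where $n = m\bar p \in S^2$. The Hopf mapping χ maps it into the orbit $\chi(\gamma(t)) = R_n(2t)(-i)$ of the 1-parameter group of rotations $R_n(2t)$. In other words, the circle $\chi(\gamma_{ab})$ is obtained by rotating the point $-i$ about the axis $n$.

- (iv)

The restriction of the map χ to Listing’s hemisphere $S_L^+$ is a diffeomorphism $\chi: S_L^+ \to S^2 \setminus \{-i\}$.

Proof. (i)–(ii) follow from the remark that the quaternions $\pm 1$ commute with $i$, while the quaternions from $S^1 \subset \operatorname{span}(j, k)$ anticommute with $i$. Hence $\chi(p) = p\,i\,p^{-1} = -i$ for $p \in S^1$. (iii) follows from $\cos t\,p + \sin t\,m = (\cos t + \sin t\,m\bar p)\,p$ and Lemma 2.

(iv) follows from (ii) or from Lemma 2. □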
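Theorem 1 (i) and (iii) can be checked numerically on one example. Take the equatorial point $p = j$ and a top point $m = \cos\theta_0 + \sin\theta_0\,k$. The geodesic $\cos t\,p + \sin t\,m$ of Listing's sphere should then be mapped by χ onto the circle obtained by rotating $-i$ about $n = m\bar p$ through the angle $2t$. The values of $p$ and $\theta_0$ and all helper names are hypothetical choices for this plain-Python sketch.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def chi(q):
    """Hopf map chi(q) = q i q^{-1}, returned as a 3-vector."""
    return qmul(qmul(q, (0.0, 1.0, 0.0, 0.0)), conj(q))[1:]

def cross(a, b):
    return (a[1]*b[2] - a[2]*b[1], a[2]*b[0] - a[0]*b[2], a[0]*b[1] - a[1]*b[0])

def dot(a, b):
    return sum(x*y for x, y in zip(a, b))

def rotate(x, u, theta):
    """Rodrigues' formula: rotate x about the unit axis u through the angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    ux, ud = cross(u, x), dot(u, x)
    return tuple(x[m]*c + ux[m]*s + u[m]*ud*(1 - c) for m in range(3))

theta0 = 0.6
p = (0.0, 0.0, 1.0, 0.0)                              # equatorial point p = j
m = (math.cos(theta0), 0.0, 0.0, math.sin(theta0))    # top point, q = k is orthogonal to p

def gamma(t):
    """Canonical parametrization cos t p + sin t m of the Listing geodesic."""
    return tuple(math.cos(t)*x + math.sin(t)*y for x, y in zip(p, m))

n_quat = qmul(m, conj(p))    # n = m p-bar; its real part <m, p> vanishes
n = n_quat[1:]

def theorem_error(t):
    """Largest component of chi(gamma(t)) - R_n(2t)(-i)."""
    lhs = chi(gamma(t))
    rhs = rotate((-1.0, 0.0, 0.0), n, 2*t)
    return max(abs(a - b) for a, b in zip(lhs, rhs))
```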

3.3.5. Listing Section

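According to Theorem 1, the Hopf map defines a diffeomorphism

$$\chi|_{S_L^+}: S_L^+ \to S^2 \setminus \{-i\}.$$

The preimage $\chi^{-1}(-i) \cap S_L$ is the equator $S^1$ of Listing’s sphere $S_L$. Therefore the inverse map

$$s = (\chi|_{S_L^+})^{-1}: S^2 \setminus \{-i\} \to S_L^+, \qquad s(x) = \frac{1 - x\,i}{|1 - x\,i|} = \frac{1 + x_0 + i \times x}{\sqrt{2(1 + x_0)}},$$

where $x = x_0 i + x_1 j + x_2 k$, is a section of the principal bundle $\chi: S^3 \to S^2$ over $S^2 \setminus \{-i\}$.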

We call the section s the Listing section.
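A closed form of the Listing section can be checked numerically. For a unit gaze vector $x \neq -i$, the quaternion $(1 - x\,i)/|1 - x\,i| = (1 + x_0 + i\times x)/\sqrt{2(1+x_0)}$ is the rotation taking $i$ to $x$ about an axis in Listing's plane $\operatorname{span}(j, k)$. It has no $i$-component and a positive real part, and χ maps it back to $x$. The function name `listing_section` and the sample gazes are hypothetical; this is a plain-Python sketch, not the authors' implementation.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def chi(q):
    """Hopf map chi(q) = q i q^{-1}, returned as a 3-vector."""
    return qmul(qmul(q, (0.0, 1.0, 0.0, 0.0)), conj(q))[1:]

def normalize(v):
    r = math.sqrt(sum(c*c for c in v))
    return tuple(c/r for c in v)

def listing_section(x):
    """(1 - x i)/|1 - x i| for a unit gaze vector x != -i: a point of Listing's hemisphere."""
    x0, x1, x2 = x
    if x0 <= -1.0 + 1e-12:
        raise ValueError("Listing's section is not defined at the south pole -i")
    r = math.sqrt(2.0*(1.0 + x0))
    return ((1.0 + x0)/r, 0.0, -x2/r, x1/r)   # 1 + x0 + (i x x), normalized

gazes = [normalize((0.6, 0.5, -0.3)), normalize((-0.2, 0.9, 0.4)),
         (0.0, 1.0, 0.0), (1.0, 0.0, 0.0), normalize((-0.9, 0.1, -0.2))]
```

The formula degenerates only as $x \to -i$, where the denominator $\sqrt{2(1+x_0)}$ vanishes. This matches the removed point of the punctured sphere.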

3.4. The Physiological Interpretation: Donders’ and Listing’s Laws and Geometry of Saccades

We use the developed formalism to give an interpretation of Donders’ and Listing’s laws and to study the saccades and drifts.

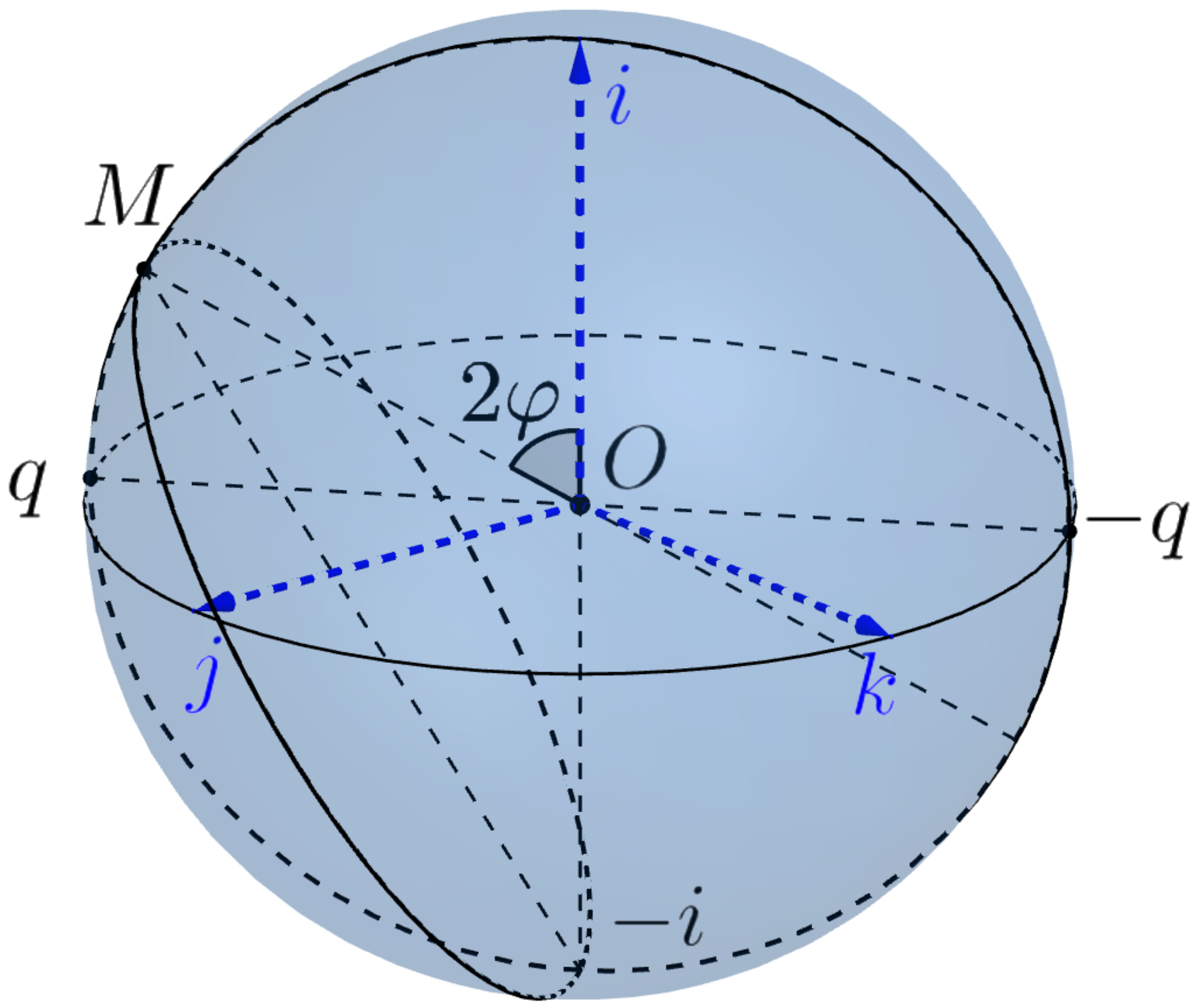

We consider the Euclidean sphere $S^2$ as the model of the eye sphere (the boundary of the eyeball), with the center at the origin 0, see Figure 10. We assume that the head is fixed and that the standard basis $(i, j, k)$ determines the standard initial position of the eye. The first vector $i$ (the gaze vector) indicates the standard frontal direction of the gaze. The second vector $j$ gives the lateral direction from right to left, and $k$ is the vertical direction up.

The coordinates associated with the standard basis are the head-centered and spatiotopic (or world-centered) coordinates. A general position of the eye, which can rotate around the center 0, is determined by an orthonormal moving (retinotopic) frame. This frame determines the (moving) retina-centered coordinates.

The configuration space of the rotating sphere is identified with the orthogonal group $SO(3)$: an orthogonal transformation $R \in SO(3)$ defines the frame $(Ri, Rj, Rk)$.

It is more convenient to identify the configuration space with the group $Sp(1)$ of unit quaternions, which is the universal cover of $SO(3)$. The corresponding $\mathbb{Z}_2$-covering is given by the adjoint representation

$$\mathrm{Ad}: Sp(1) \to SO(3), \qquad q \mapsto \mathrm{Ad}_q.$$

A unit quaternion $q$ gives rise to the orthogonal transformation $\mathrm{Ad}_q$ and to the frame $(\mathrm{Ad}_q i, \mathrm{Ad}_q j, \mathrm{Ad}_q k)$, which defines the new position of the eye. We have to remember that opposite quaternions $\pm q$ represent the same frame and the same eye position. Note that the direction of the gaze $\mathrm{Ad}_q i$ determines the position of the eye only up to a rotation about the axis $\mathrm{Ad}_q i$. Such a rotation is called the twist.

Donders’ law states that if the head is fixed, then there is no twist. More precisely, the position of the gaze $x = \chi(q) \in S^2$ determines the position of the eye. That is, there is a (local) section

$$s: S^2 \supset U \to S^3$$

of the Hopf bundle $\chi: S^3 \to S^2$. In other words, the admissible configuration space of the eye is two-dimensional.

This surprised physiologists. Even the great physiologist and physicist Hermann von Helmholtz doubted the validity of this law and accepted it only after his own experiments. However, from the point of view of modern control theory, the law is natural and sensible. Controlling motion in a 3-dimensional configuration space, compared with control on a surface, is like piloting a plane compared with driving a car.

Listing’s law specifies the section s. In our language, it can be stated as follows.

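Listing’s law. The section of Donders’ law is Listing’s section

$$s: S^2 \setminus \{-i\} \to S_L^+,$$

which is the inverse diffeomorphism to the restriction $\chi|_{S_L^+}: S_L^+ \to S^2 \setminus \{-i\}$ of the Hopf projection to Listing’s hemisphere.

In other words, a gaze direction $x \in S^2 \setminus \{-i\}$ determines the position $s(x) \in S_L^+$ of the eye as follows: the eye frame is $(\mathrm{Ad}_{s(x)}i, \mathrm{Ad}_{s(x)}j, \mathrm{Ad}_{s(x)}k)$, where $\mathrm{Ad}_{s(x)}i = x$.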

Saccades

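We define a saccade as a geodesic segment $[a, b]$ of the geodesic semicircle $\gamma_{ab}^+$. Recall the notation: $p$ is the first point of the intersection of the oriented geodesic $\gamma_{ab}$ with the equator $S^1$; $m = \cos\theta_0 + \sin\theta_0\,q$ is the top point of $\gamma_{ab}$; and $q$ is the equatorial point of the meridian of the point $m$. With this notation, the semicircle $\gamma_{ab}^+$ has the natural parametrization

$$\gamma(t) = \cos t\,p + \sin t\,m,$$

where $0 < t < \pi$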

. We may choose the vector

q, defined up to a sign, such that

.

The image $\chi(\gamma_{ab}^+)$ is the circle $\chi(\gamma_{ab})$ without the point $-i$. It is obtained by rotating the point $-i$ about the axis $n = m\bar p$. In other words, it is the section of the punctured sphere $S^2 \setminus \{-i\}$ by the plane through $-i$ with the normal vector $n$. The segment $\chi([a, b])$ is the gaze curve, i.e., the curve which describes the evolution of the gaze during the saccade $[a, b]$.

A natural question arises. If the gaze circle is not a meridian, it is not a geodesic of $S^2$, and the gaze curve is not the shortest curve on the sphere joining the gaze points $A = \chi(a)$ and $B = \chi(b)$. Why does the eye not rotate so that the gaze curve is a geodesic?

The answer is the following. If all gaze curves during saccades were geodesics, a twist would appear and the configuration space of the eye would become three-dimensional. Assume that the gaze curves of three consecutive saccades form a geodesic triangle which starts and finishes at the north pole $i$. The sphere is a symmetric space, and moreover a space of constant curvature, so movement along geodesics induces parallel translation of tangent vectors. Therefore, after the saccadic movements along the triangle, the initial position of the eye is rotated about the normal axis $i$ through an angle equal to the area of the triangle (by the Gauss-Bonnet theorem for the unit sphere). Hence a twist appears.

Fortunately, during FEM the retinal image of the fixation point remains in the fovea, whose center is F. Hence the gaze curve remains in a small neighborhood of the standard position $i$. In this case, the deviation of the gaze curve during an MS from the geodesic is very small. This matters for energy minimization: during wakefulness, 2–3 saccades occur every second, so more than 100,000 saccades occur during the day.
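The twist argument can be illustrated numerically. The sketch assumes each "geodesic" saccade is realized by the shortest-arc rotation of the gaze, i.e. a rotation about the axis orthogonal to the great circle, which parallel-transports the eye frame. It composes three such saccades $i \to B \to C \to i$ and compares the resulting rotation angle about $i$ with the area of the triangle. The area is computed here with the Van Oosterom-Strackee solid-angle formula, a standard identity not taken from the text. The points $B$, $C$ and the helper names are hypothetical; this is a plain-Python sketch.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def ad(q, v):
    return qmul(qmul(q, (0.0,) + tuple(v)), conj(q))[1:]

def cross(a, b):
    return (a[1]*b[2] - a[2]*b[1], a[2]*b[0] - a[0]*b[2], a[0]*b[1] - a[1]*b[0])

def dot(a, b):
    return sum(x*y for x, y in zip(a, b))

def normalize(v):
    r = math.sqrt(dot(v, v))
    return tuple(c/r for c in v)

def shortest_arc(a, b):
    """Unit quaternion of the rotation about a x b taking the unit vector a to b (a != -b)."""
    return normalize((1.0 + dot(a, b),) + cross(a, b))

def spherical_excess(a, b, c):
    """Area of the geodesic triangle abc on the unit sphere (Van Oosterom-Strackee formula)."""
    return 2.0*math.atan2(abs(dot(a, cross(b, c))), 1.0 + dot(a, b) + dot(b, c) + dot(c, a))

I = (1.0, 0.0, 0.0)
B = normalize((1.0, 0.4, 0.1))
C = normalize((1.0, 0.1, 0.5))

# Hypothetical geodesic saccades i -> B -> C -> i, composed in space coordinates.
q = (1.0, 0.0, 0.0, 0.0)
for a, b in ((I, B), (B, C), (C, I)):
    q = qmul(shortest_arc(a, b), q)

# The final rotation fixes i, so it is a pure twist about i; extract its angle.
twist = 2.0*math.atan2(math.sqrt(q[1]**2 + q[2]**2 + q[3]**2), abs(q[0]))
```

The gaze returns to $i$, but the eye has acquired a nonzero twist equal to the enclosed area. Under Listing's law this twist is excluded by construction.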

Consider the stereographic projection of the sphere $S^2$ from the point $-i$ onto the tangent plane $T_iS^2$ at the point $i$. It is a conformal diffeomorphism of $S^2 \setminus \{-i\}$ onto the plane. It maps any gaze circle (a circle through $-i$) onto a straight line. It maps any gaze curve of a saccade onto an interval $[A', B']$, where $A'$ is the point of intersection of the tangent plane with the line through $-i$ and $A$, and similarly for $B'$. More precisely, $A' = -i + 2(A + i)/(1 + \langle A, i\rangle)$. The spherical n-gon $A_1 \dots A_n$ formed by the gaze curves of saccades maps onto an n-gon in the plane, and the angles between adjacent sides are preserved.
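The claim that gaze curves become straight segments can be tested on one saccade. By Theorem 1, every gaze circle passes through $-i$, the image of the equator of Listing's sphere. Stereographic projection from $-i$ sends circles through $-i$ to lines. The sketch takes two hypothetical points $a$, $b$ of Listing's hemisphere and samples the great-circle arc between them with `slerp`. It maps the samples by χ and projects them onto the tangent plane at $i$ in its $(j, k)$ coordinates. All names and values are illustrative assumptions.

```python
import math

def qmul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw*qw - px*qx - py*qy - pz*qz,
            pw*qx + px*qw + py*qz - pz*qy,
            pw*qy - px*qz + py*qw + pz*qx,
            pw*qz + px*qy - py*qx + pz*qw)

def conj(q):
    return (q[0], -q[1], -q[2], -q[3])

def chi(q):
    """Hopf map chi(q) = q i q^{-1}, returned as a 3-vector."""
    return qmul(qmul(q, (0.0, 1.0, 0.0, 0.0)), conj(q))[1:]

def normalize(q):
    r = math.sqrt(sum(c*c for c in q))
    return tuple(c/r for c in q)

def slerp(a, b, u):
    """Point at fraction u of the great-circle arc from a to b on S^3 (a != +-b)."""
    omega = math.acos(max(-1.0, min(1.0, sum(x*y for x, y in zip(a, b)))))
    sa, sb = math.sin((1 - u)*omega)/math.sin(omega), math.sin(u*omega)/math.sin(omega)
    return tuple(sa*x + sb*y for x, y in zip(a, b))

def stereo(x):
    """Stereographic projection from -i onto the tangent plane at i, in (j, k) coordinates."""
    return (2*x[1]/(1 + x[0]), 2*x[2]/(1 + x[0]))

# Hypothetical saccade endpoints in Listing's hemisphere (no i-component, positive real part).
a = normalize((0.95, 0.0, 0.2, -0.1))
b = normalize((0.90, 0.0, -0.1, 0.3))

gaze_curve = [chi(slerp(a, b, u/10)) for u in range(11)]
plane_pts = [stereo(g) for g in gaze_curve]

def max_collinearity_defect(pts):
    """Largest 2D cross product between (pt - first) and (last - first)."""
    (x0, y0), (x1, y1) = pts[0], pts[-1]
    return max(abs((x1 - x0)*(y - y0) - (y1 - y0)*(x - x0)) for x, y in pts)
```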

3.5. Listing’s Section and Fixation Eye Movements

Below we propose an approach to the description of information processing in dynamics.

3.5.1. Retinotopic Image of a Stable Stimulus during Eye Movements

Recall that the direction of the gaze determines the position of the eye, which determines the frame and associated retinotopic coordinates.

Let the eye look for some time at a stationary surface, for example a plane $\Pi$. Let the gaze describe a curve $x(t) \in S^2$, so that it is directed to the points $A(t)$ of the stimulus. Then the eye position is defined by the curve $a(t) = s(x(t)) \in S_L^+$. We call $a(t)$ Listing’s curve.

The retinal image of the points forms the curve .

Moreover, let $\beta$ be the retinal image of a point B at $t = t_0$. Then, due to the eye movement, the retinal image $\beta(t)$ of the same point B at the moment $t$ will be

$$\beta(t) = \mathrm{Ad}_{a(t)^{-1}a(t_0)}\,\beta.$$

Hence the retinal curve $\beta(t)$ is the retinal image of the external point B. Indeed, in retinotopic coordinates the eye is stable and the external plane rotates in the opposite direction. At the moment $t$ the plane takes the position $\mathrm{Ad}_{a(t)^{-1}a(t_0)}\Pi$, and the point $\beta(t)$ is the new position of the point $\beta$.

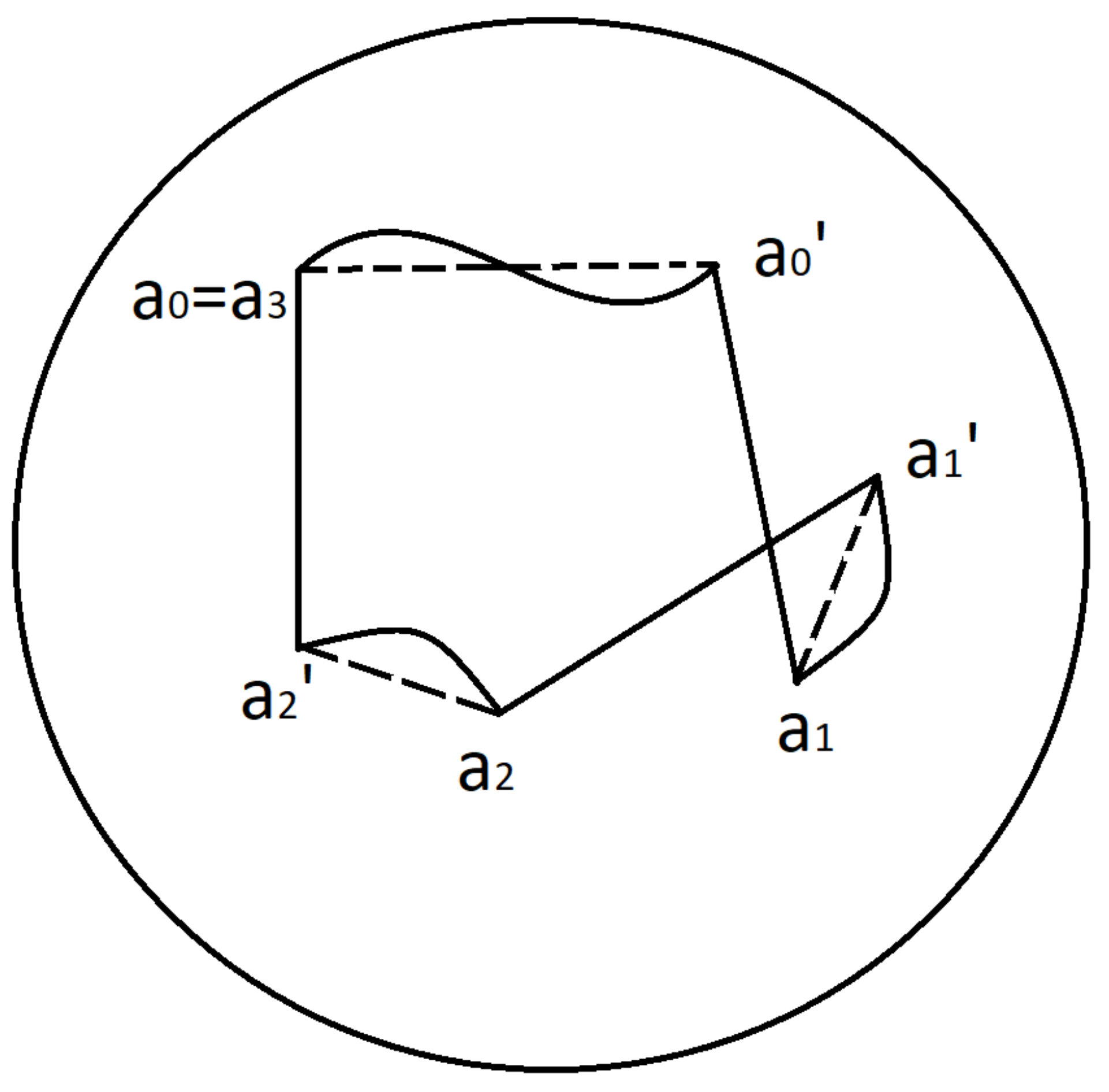

3.5.2. n-Cycles of Fixation Eye Movements

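We define a fixation eye movement n-cycle as a FEM which starts and finishes at the standard eye position $1 \in S_L^+$ and consists of n drifts $D_1, \dots, D_n$ and n microsaccades between them. We will assume that MSs are instantaneous movements and occur at the times $t_1 < t_2 < \dots < t_n$. The corresponding Listing’s curve $a(t)$, $t_0 \le t \le t_n$, is then the concatenation of the drifts $D_k$ from $a_{k-1}$ to $b_k$ on the intervals $[t_{k-1}, t_k]$. The drifts are interrupted by the saccades $[b_k, a_k]$ at the times $t_k$, with $a_0 = a_n = 1$.

We associate with the n-cycle the spherical polygon with $2n$ vertices (a $2n$-gon)

$$P = a_0 b_1 a_1 b_2 \dots a_{n-1} b_n.$$

The sides $[b_k, a_k]$ represent saccades, and the sides $a_{k-1}b_k$ correspond to the drifts $D_k$.

Using the stereographic projection of Listing’s sphere from the south pole $-1$ onto the tangent plane $T_1S_L$, we can identify P with a $2n$-gon in the tangent plane $T_1S_L$.

In the case of a saccade, Listing’s curve is a segment $[b_k, a_k]$ of a Listing semicircle. Hence all saccades of an n-cycle are determined by the positions of their initial and final points in Listing’s hemisphere, i.e., by the points $b_k, a_k$.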

For example, a 3-cycle is characterized by the hexagon $P = a_0 b_1 a_1 b_2 a_2 b_3$ and consists of 3 drifts and 3 MSs:

$$D_1,\ [b_1, a_1],\ D_2,\ [b_2, a_2],\ D_3,\ [b_3, a_0].$$

An example of a 3-cycle and the associated hexagon is depicted in Figure 11.

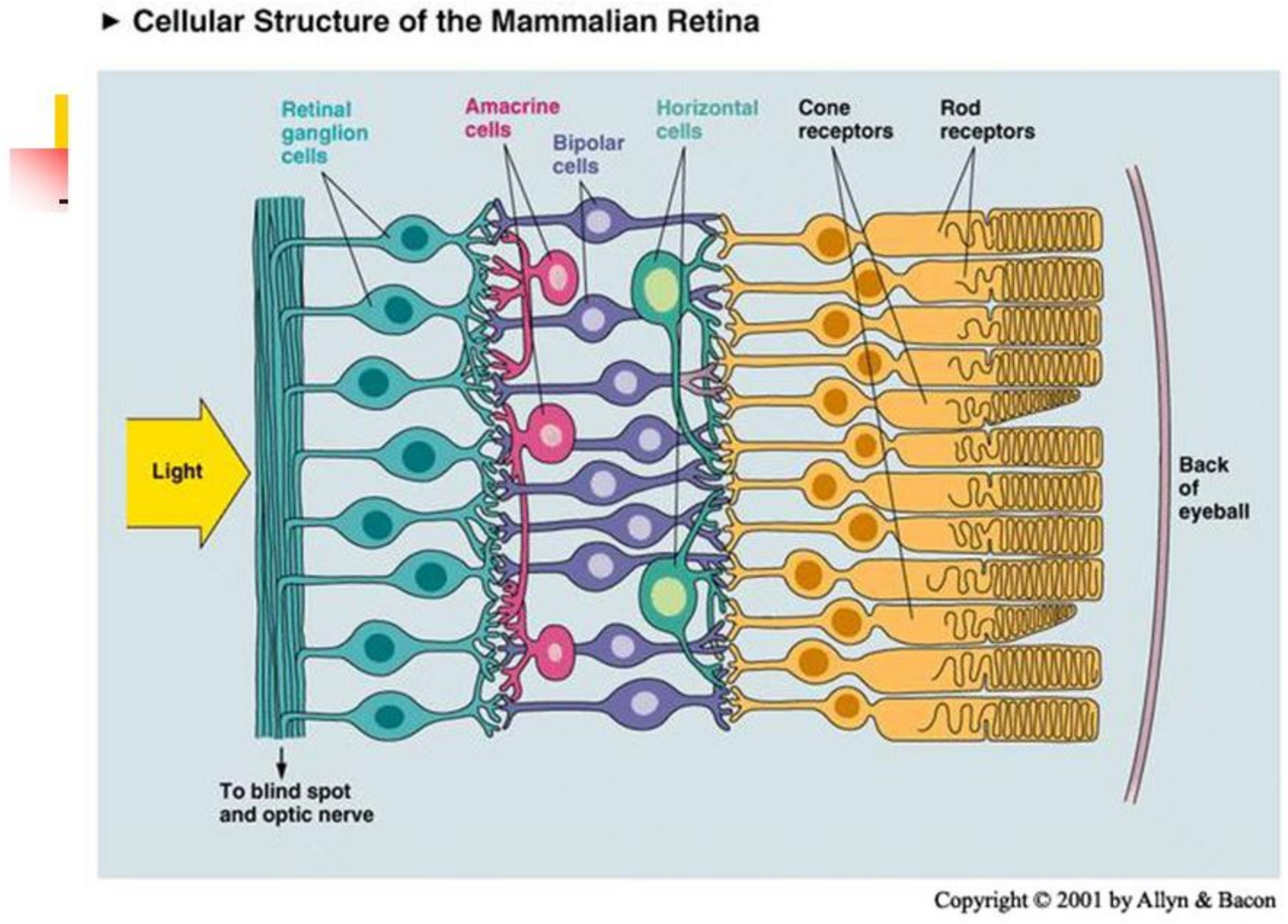

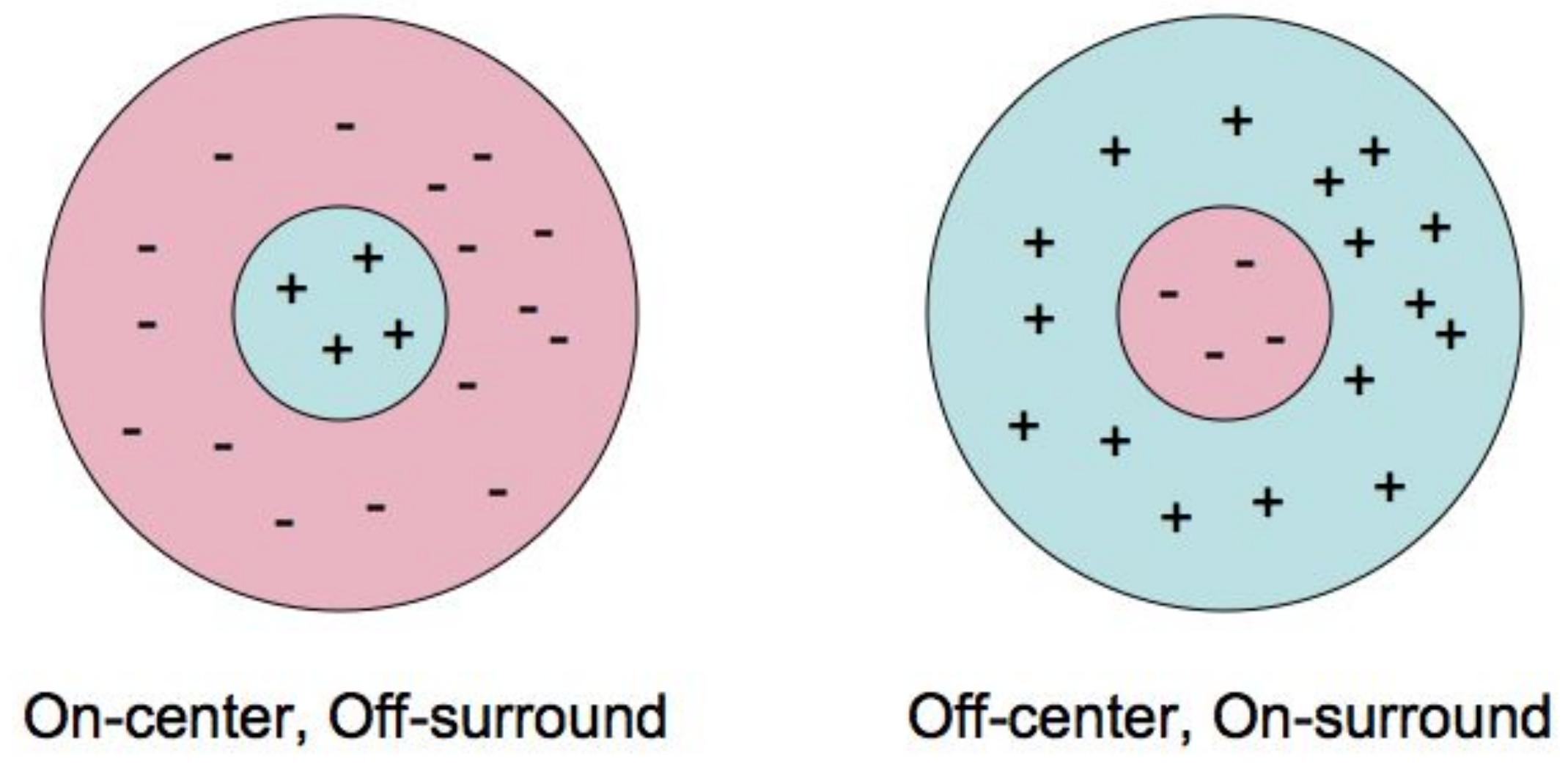

We suppose that during an n-cycle with Listing’s curve $a(t)$ the visual system perceives local information about the stimulus. More precisely, it perceives information about the points B whose retinal images belong to the fovea. The information needed for such local pattern recognition during a FEM cycle consists of two parts:

- (a)

The dynamical information about Listing’s curve $a(t)$, coded in oculomotor command signals. A copy of these signals (corollary discharge (CD)) is sent from the superior colliculus through the MD thalamus to the frontal cortex. It is responsible for visual stability, that is, the compensation of the eye movements and the perception of stable objects as stable.

- (b)

The visual information about the characteristics of a neighborhood of points B of the stimulus. This information is primarily encoded into the chain of photoreceptors along the closed retinal curve $\beta_B(t)$ which represents the point B during FEM. It is then sent for decoding through the LGN to the primary visual cortex and to higher-order visual structures. In particular, let $x(t) = \chi(a(t))$ be the gaze curve with the initial direction to the external point A. Then the point of fixation A is represented by the retinal curve $\beta_A(t)$ with $\beta_A(t_0) = F$.

3.6. A Model of Fixation Eye Movements

At first, we consider a purely deterministic scheme for processing the information encoded in the CD and in the visual cortex.

Then we discuss the problem of extending this model to a stochastic model. We state our main assumptions. Unless otherwise stated, we work in the spatiotopic coordinates associated to the standard frame $(i, j, k)$.

1. We assume that the CD contains information about the eye positions $b_k$ and $a_k$ at the beginning and the end of the saccades $[b_k, a_k]$, and about the corresponding times $t_k$. This is equivalent to information about the gaze positions $\chi(b_k)$ and $\chi(a_k)$.

2. We also assume that the CD has information about Listing’s curve $D_k$ of the drift from the point $a_{k-1}$ to the point $b_k$. (This assumption is not realistic, and later we will revise it.)

3. Let B be a point of the stable stimulus and $\beta_B(t_{k-1})$ its retinal image at the time $t_{k-1}$. Then during the drift $D_k$ the image of B is the retinal curve $\beta_B(t) = \mathrm{Ad}_{a(t)^{-1}a_{k-1}}\beta_B(t_{k-1})$, $t_{k-1} \le t \le t_k$. We denote by $f_B(t)$ the characteristics of this image of B. They are recorded in the activation of photoreceptors along the retinal curve $\beta_B(t)$ during the drift $D_k$, and then in the firing of visual neurons in the V1 cortex and higher-order visual subsystems. Note that the information about the external stable point B is encoded into the time-dependent vector function $f_B(t)$. This is a manifestation of a phenomenon that E. Ahissar and A. Arieli [12] aptly named ‘figuring space by time’.

4. We assume that (most of) the information about the drift $D_k$ encoded in Listing’s curve, and about the characteristic functions $f_B(t)$, is encoded in the coordinate system associated to the end point $a_{k-1}$ of the preceding saccade. We remark the following. If $a_{k-1} = \cos\varphi + \sin\varphi\,p$ with $p \in S^1$, then the coordinate system associated with $a_{k-1}$ is obtained from the spatiotopic coordinates by the rotation about the axis $p$ of Listing’s plane $\operatorname{span}(j, k)$ through the angle $2\varphi$. (These coordinates are the retinotopic coordinates at the time $t_{k-1}$.)

5. Let C be another point of the stable stimulus, with the retinal image $\beta_C(t_{k-1})$ at $t_{k-1}$. Let $f_C(t)$ be the characteristic function of the retinal image $\beta_C(t)$ of C during the drift $D_k$. Then the visual system is able to calculate the visual distance between the points B and C during the drift $D_k$ as an appropriate distance between their characteristic functions $f_B$ and $f_C$.

6. We assume that the change of coordinates (remapping) occurs during each saccade. For example, during a 3-cycle the system uses the coordinates associated to the following points of Listing’s hemisphere:

$$a_0 \ \text{on}\ [t_0, t_1], \qquad a_1 \ \text{on}\ [t_1, t_2], \qquad a_2 \ \text{on}\ [t_2, t_3].$$

Here each interval indicates the time of the drift during which the coordinates are used.

7. In particular, consider the information about the characteristic function $f_B$ of the external point B along the retinal curve $\beta_B(t)$ during the drift $D_k$. This information is encoded into the coordinates associated to the end point $a_{k-1}$ of the preceding saccade, which are the retinotopic coordinates at the time $t_{k-1}$.

To recalculate the characteristic function in terms of the spatiotopic coordinates associated to $(i, j, k)$, it is sufficient to know the point $a_{k-1}$.

8. Following M. Zirnsak and T. Moore [46], we suppose that during the drift $D_k$ the visual system chooses an external salient point A as the target for the next gaze position. More precisely, it fixes the retinal image $\alpha$ of this point with respect to the coordinates associated with $a_{k-1}$ (which are the retinotopic coordinates at the moment $t_{k-1}$). After the next saccade $[b_k, a_k]$ (at the moment $t_k$), the point $\alpha$ will become the point F (the center of the fovea). The point A will then be the target point of the gaze vector $x(t) = \chi(a(t))$, $t \ge t_k$.

9. This allows us to give an explanation of the presaccadic shift of receptive fields.

The above assumption means that, before the time $t_k$ of the saccade, the visual system knows the future gaze vector $\chi(a_k)$ with respect to the coordinates associated with $a_{k-1}$. Of course, this information can be obtained only through the collaboration of the visual system with the oculomotor system. At some moment about 100 ms before the saccade, these subsystems recalculate the characteristic functions from the coordinates associated with $a_{k-1}$ into the new coordinates associated to the future gaze point. They then send this information to neurons of different visual systems.

This leads to the shift of receptive fields discovered in [45]. The information about the future characteristic functions will contain some mistakes, since the real position of the eye at the moment of recalculation is different from the future position $a_k$. This is observed as a dislocation (compression) of the image in space and time [16,17,18,19]. After the saccade, these mistakes are corrected. One way to reduce such dislocation is to increase the frequency of microsaccades.

3.6.1. Diffusion Maps and Stochastic Model of FEM

Assumption 1, that the CD contains information about the eye position at the beginning and the end of each saccade, seems rather reasonable. However, assumption 2 must be clarified. Since the drift trajectory (Listing’s curve) can be arbitrary, it is difficult to believe that the CD stores information about its shape even for a short time. It is natural to assume that the drift is a random walk and that the oculomotor system and the CD store information about the random trajectory of the drift. Similarly, the characteristic functions $f_B$ become random functions. These functions contain the information about the stable stimulus B recorded by photoreceptors during the drift.

3.6.2. Diffusion Map by R.R. Coifman and S. Lafon

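We briefly recall the basic ideas of the diffusion maps (or diffusion geometry) of R.R. Coifman and S. Lafon [34,35], which we will need.

Consider a (compact oriented) manifold M with a volume form $\mu$ such that $\int_M \mu = 1$. The diffusion geometry on M is determined by a kernel, i.e., a nonnegative and symmetric ($k(x, y) = k(y, x)$) function $k$ on $M \times M$. Examples of a kernel are the Gauss kernel $k(x, y) = e^{-|x - y|^2/\varepsilon}$, $\varepsilon > 0$, of the Euclidean space and the heat kernel of a Riemannian manifold. The normalization of the kernel gives the transition Markov kernel

$$p(x, y) = \frac{k(x, y)}{d(x)}, \qquad d(x) = \int_M k(x, y)\,\mu(y),$$

which defines a random walk on M. The value $p(x, y)$ is considered as the probability to jump in one step from the point x to the point y.

The associated diffusion operator P on the space of functions is defined by

$$(Pf)(x) = \int_M p(x, y)\,f(y)\,\mu(y).$$

Then the probability density to move from x to y in t steps is described by the kernel $p_t(x, y)$ associated to the power $P^t$ of the operator P, such that

$$(P^t f)(x) = \int_M p_t(x, y)\,f(y)\,\mu(y).$$

It can be defined for any $t > 0$ in terms of the eigenvalues and eigenfunctions of the operator P [34]. So any point $x \in M$ determines a family of bump functions $p_t(x, \cdot)$ on M, which characterize the local structure of a small neighborhood of x. We call $p_t(x, \cdot)$ the trajectory of the random walk (or random trajectory) started from x during the time interval t.

R.R. Coifman and S. Lafon [34] define the diffusion distance $D_t(x, y)$ between points $x, y \in M$ as the (weighted) $L^2$-distance between the bump functions (or random trajectories) $p_t(x, \cdot)$ and $p_t(y, \cdot)$ started from these points:

$$D_t(x, y)^2 = \int_M \frac{\bigl(p_t(x, z) - p_t(y, z)\bigr)^2}{\pi(z)}\,\mu(z),$$

where $\pi(z) = d(z)/\int_M d\,\mu$ is the stationary density of the random walk.

Let $1 = \lambda_0 \ge |\lambda_1| \ge |\lambda_2| \ge \dots$ be the eigenvalues of the diffusion operator P. Let $\psi_0 = 1, \psi_1, \psi_2, \dots$ be the associated eigenfunctions, normalized in $L^2(\pi)$. Then for a sufficiently big number m, the diffusion distance $D_t(x, y)$ is approximated by the function $\|\Psi_t(x) - \Psi_t(y)\|$ given by

$$D_t(x, y)^2 \approx \sum_{l=1}^{m} \lambda_l^{2t}\bigl(\psi_l(x) - \psi_l(y)\bigr)^2.$$

In other words, consider the map (called the diffusion map)

$$\Psi_t: M \to \mathbb{R}^m, \qquad x \mapsto \bigl(\lambda_1^t\psi_1(x), \dots, \lambda_m^t\psi_m(x)\bigr).$$

It is close to an isometric map of the manifold M with the diffusion metric $D_t$ to the Euclidean space $\mathbb{R}^m$. If the manifold M is approximated by a finite system of points $X = \{x_1, \dots, x_N\}$, the diffusion map gives a dimensional reduction of the system X.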
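The construction above can be sketched for a finite point set. There, the kernel becomes a matrix, the Markov operator is $P = D^{-1}K$, and $p_t$ is the $t$-th matrix power. The following plain-Python sketch computes $D_t$ directly from the rows of $P^t$, weighted by the stationary distribution $\pi = d/\sum d$. The function name `diffusion_distances`, the Gaussian width `eps` and the toy data are assumptions for illustration. The sketch does not compute the spectral approximation by the diffusion map $\Psi_t$, which would require an eigensolver.

```python
import math

def diffusion_distances(points, eps, t):
    """Diffusion distances D_t(x, y) for a finite point set (a sketch of the Coifman-Lafon construction).

    points: list of coordinate tuples; eps: Gaussian kernel width; t: number of steps (int >= 1).
    """
    n = len(points)
    # Symmetric, non-negative Gaussian kernel k(x, y) = exp(-|x - y|^2 / eps).
    k = [[math.exp(-sum((u - v)**2 for u, v in zip(x, y))/eps) for y in points] for x in points]
    d = [sum(row) for row in k]                      # degrees d(x) = sum_y k(x, y)
    total = sum(d)
    pi = [dx/total for dx in d]                      # stationary distribution of the walk
    P = [[k[r][c]/d[r] for c in range(n)] for r in range(n)]  # Markov matrix, rows sum to 1
    Pt = [row[:] for row in P]
    for _ in range(t - 1):                           # Pt = P^t: t-step transition probabilities
        Pt = [[sum(Pt[r][s]*P[s][c] for s in range(n)) for c in range(n)] for r in range(n)]
    # D_t(x, y)^2 = sum_z (p_t(x, z) - p_t(y, z))^2 / pi(z)
    return [[math.sqrt(sum((Pt[x][z] - Pt[y][z])**2/pi[z] for z in range(n)))
             for y in range(n)] for x in range(n)]

# Toy data: two well-separated clusters on a line.
pts = [(0.0,), (0.1,), (0.2,), (3.0,), (3.1,), (3.2,)]
D = diffusion_distances(pts, eps=1.0, t=3)
```

Points in the same cluster reach almost the same distribution after a few steps, so their diffusion distance is small. Points in different clusters keep nearly disjoint bump functions and stay far apart.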

3.6.3. Remarks on Stochastic Description of Drift as Random Walk and Possible Application of Diffusion Distance

The idea that FEM is a stochastic process that may be described as a random walk has a long history [29,30,31,32,33,42].

1. We assume that the drift is a random walk on Listing’s hemisphere $S_L^+$ defined by some kernel. The question is how to choose an appropriate kernel. The first guess is to take the heat kernel of the round (hemi)sphere, whose short-time asymptotics are known, see [47]. However, the functional structure of the retina, which records light information, is very important for choosing the kernel. The inhomogeneity of the retina shows that the first guess is not very reasonable. A more natural assumption seems to be that the system uses the heat kernel of a metric on Listing’s hemisphere corresponding to the physiological metric of the retina. Recall that this metric is the pullback of the physical metric of the V1 cortex with respect to the retinotopic mapping.

2. We assume that the drift is a random walk in Listing’s hemisphere, defined by some kernel. Then by the drift trajectory $D_k$ from the point $a_{k-1}$ we may understand the random trajectory on $S_L^+$ (or the bump function) $p_\tau(a_{k-1}, \cdot)$ during the time interval $\tau = t_k - t_{k-1}$. It has no fixed end point, but it allows us to calculate the probability that the end point belongs to any neighbourhood of the point $b_k$. The situation is similar to Feynman’s path integral formulation of quantum mechanics.

Moreover, by a point we may understand not a mathematical point but a small domain. An example is the domain which corresponds to the receptive field of a visual neuron in the V1 cortex, or the composite receptive field of a V1 column, which is 2–4 times larger [37]. Then we may speak about a random drift $D_k$ from the point $a_{k-1}$ to the point $b_k$ with a bump function $\rho_k(s, z)$ (“the random trajectory”). Roughly speaking, this function gives the probability that the random drift from the point $a_{k-1}$ to the point $b_k$ comes to the point z after s steps.

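3. Due to the diffeomorphism $\chi: S_L^+ \to S^2 \setminus \{-i\}$ defined by the Hopf map, we may identify the random walk in $S_L^+$ with a random walk on the eye sphere $S^2$. A drift $a(t)$, $t \ge t_0$, in $S_L^+$ induces the “drift” of a point $\beta \in S^2$ given by

$$\beta(t) = \mathrm{Ad}_{a(t)^{-1}a(t_0)}\,\beta.$$

Let A be the fixation point of the gaze at the initial moment $t_0$, such that its retinal image is the center F of the fovea. Then the retinal image of the point A during the drift $a(t)$ is the curve $\mathrm{Ad}_{a(t)^{-1}a(t_0)}F$. More generally, let $\beta$ be the retinal image at $t_0$ of any other point B of the stimulus. Then the retinal image of B during the drift is

$$\beta(t) = \mathrm{Ad}_{a(t)^{-1}a(t_0)}\,\beta.$$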

In the stochastic case, the drift

is characterized by the random trajectory

, and associated “drift” of points in

by the random trajectory

where

and

is Listing’s section. Note that the right hand side does not depend on the point

.

We conjecture that the oculomotor control system detects information about random trajectories in $S_L^+$ and $S^2$, and that the corollary discharge gets a copy of this information.

It seems that the proposed explanation for shifting receptive fields may be generalized to the stochastic case.

4. Let B be a stable stimulus, $\beta_0$ its retinal image at $t_0$ and $\beta(t)$ its retinal image at the time t. Denote by $f_B(t)$ the characteristic function which describes the visual information about a stable stimulus point B with the retinal image $\beta(t)$ during the drift. If the drift is considered as a random walk, the information about the drift curve is encoded in the bump function $p_t(a_0, \cdot)$. The characteristic function then becomes a random function described by a bump function on $S^2$. We suppose that the visual system calculates the visual distance between external points as the diffusion distance between the associated bump functions.

5. We also conjecture that, as in the deterministic case, two sources of information are sufficient for the stabilization of visual perception. The first is the information about the random trajectory of the drift encoded in the CD. The second is the information about the characteristic bump functions encoded in different structures of the visual cortex. The problem reduces to the recalculation of all information in the spatiotopic coordinates associated with the point $1 \in S_L^+$.
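Items 1 and 2 leave the drift kernel open. As a crude illustration only, the sketch below uses the "first guess" from item 1: an isotropic random walk on the round Listing sphere. Each step is a Gaussian tangent step in $\operatorname{span}(1, j, k)$ followed by renormalization, which only approximates the heat kernel. It does not use the retina-adapted kernel that the text argues for. The functions `random_drift` and `prob_end_near` are hypothetical names, and the step size, number of steps and trial count are arbitrary. The Monte Carlo estimate illustrates item 2: a random drift has no fixed end point, but one can estimate the probability that it ends in a given neighbourhood.

```python
import math
import random

def random_drift(a0, steps, sigma, rng):
    """Hypothetical discrete drift on Listing's sphere (unit quaternions in span{1, j, k}).

    Each step adds an isotropic Gaussian tangent step and renormalizes, a crude
    approximation of Brownian motion on the round sphere.
    """
    a = list(a0)
    path = [tuple(a)]
    for _ in range(steps):
        step = [rng.gauss(0.0, sigma), 0.0, rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)]
        c = sum(s*x for s, x in zip(step, a))           # remove the radial component
        step = [s - c*x for s, x in zip(step, a)]
        a = [x + s for x, s in zip(a, step)]
        r = math.sqrt(sum(x*x for x in a))
        a = [x/r for x in a]                             # back onto the unit sphere
        path.append(tuple(a))
    return path

def prob_end_near(a0, target, radius, steps, sigma, trials, seed=0):
    """Monte Carlo estimate of the probability that the drift ends within `radius` of `target`."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        end = random_drift(a0, steps, sigma, rng)[-1]
        cos_dist = max(-1.0, min(1.0, sum(x*y for x, y in zip(end, target))))
        hits += math.acos(cos_dist) <= radius           # geodesic distance on S^3
    return hits/trials

start = (1.0, 0.0, 0.0, 0.0)   # the standard eye position
path = random_drift(start, 200, 0.01, random.Random(1))
```

Every step stays in $\operatorname{span}(1, j, k)$, because both the step and the current point have zero $i$-component. Mapping the path through the Hopf map would give the corresponding random gaze drift.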