Abstract

This paper presents an extension of work from our previous study by investigating the use of Local Quinary Patterns (LQP) for breast density classification in mammograms on various neighbourhood topologies. The LQP operators are used to capture the texture characteristics of the fibro-glandular disk region () instead of the whole breast area as the majority of current studies have done. We take a multiresolution and multi-orientation approach, investigate the effects of various neighbourhood topologies and select dominant patterns to maximise texture information. Subsequently, the Support Vector Machine classifier is used to perform the classification, and a stratified ten-fold cross-validation scheme is employed to evaluate the performance of the method. The proposed method produced competitive results up to and accuracy based on 322 and 206 mammograms taken from the Mammographic Image Analysis Society (MIAS) and InBreast datasets, which is comparable with the state-of-the-art in the literature.

1. Introduction

In 2014, there were more than 55,000 malignant breast cancer cases diagnosed in the United Kingdom (UK), with more than 11,000 mortalities [1]. In the United States (US), it was estimated that more than 246,000 malignant breast cancer cases were diagnosed in 2016, with approximately 16% of women expected to die [2]. Many studies have indicated that breast density is a strong risk factor for developing breast cancer [3,4,5,6,7,8,9,10,11,12] because breast cancer has a very similar appearance to dense tissues, which makes it difficult to detect in mammograms. Keller et al. [13] investigate the associations between breast cancer and the Laboratory for Individualized Breast Radiodensity Assessment (LIBRA) tool. Their study found that there is a significant association between breast cancer and the Gail risk factor plus Body Mass Index (BMI).

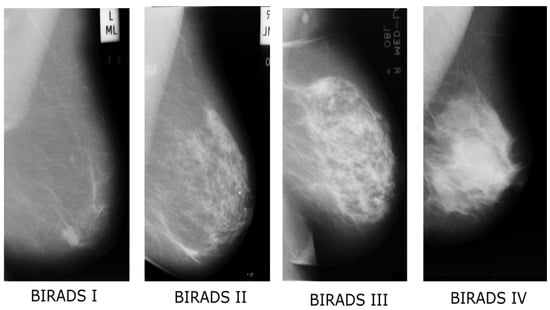

Therefore, an accurate breast density estimation is an important step during the screening procedure because women with dense breasts can be six times more likely to develop breast cancer [1,2]. Although most experienced radiologists can do this task, manual density classification is impractical, tiring and time-consuming, and results often vary between radiologists. Computer Aided Diagnosis (CAD) systems can reduce these problems by providing robust, reliable, fast and consistent diagnosis results. Currently, in the US, breast density is visually assessed by radiologists who classify density according to the fourth edition of the Breast Imaging-Reporting and Data System (BI-RADS) into four classes based on the following criteria: (a) BI-RADS I (0–25% dense tissues, predominantly fat); (b) BI-RADS II (26–50% dense tissues, fat with some fibro-glandular tissue); (c) BI-RADS III (51–75% dense tissues, heterogeneously dense); and (d) BI-RADS IV (above 75% dense tissues, extremely dense). The same four classes are used in this study. Figure 1 shows four examples of breasts with different BI-RADS classifications.

Figure 1.

Examples of breast density according to Breast Imaging-Reporting and Data System classes (BI-RADS).

In this paper, we present a fully automatic method for breast density classification using local pattern information extracted using Local Quinary Patterns (LQP) operators from the fibro-glandular region instead of from the whole breast area as all of the studies in the literature have done. In particular, this paper extends our previous work in [14] by investigating the various neighbourhood topologies and different dominant local patterns. The contributions of our study are:

- To the best of our knowledge, this is the first study attempting to use local patterns extracted using the LQP operators in the application of breast density classification.

- We investigate the effects of the local pattern information when obtained using different resolutions, various neighbourhood topologies, different orientations and different dominant local patterns.

- We show the importance of extracting features from the fibro-glandular region instead of from the whole breast area as all of the current studies in the literature have done.

This paper adds the following extensions to our initial work in [14]:

- We have made a significant extension to the literature review of this study.

- We have extended the evaluation results for the circle topology, which was originally presented in [14] covering different aspects such as parameter selection, orientations and quantitative comparison.

- We investigate the topology aspect of the proposed method such as ellipse, parabola and hyperbola.

- We further evaluated the proposed method on the InBreast [15] dataset.

The remainder of the paper is organised as follows: Section 2 summarises related work in the literature; we present the technical details of the proposed method in Section 3 and present experimental results in Section 4 including the quantitative evaluation and comparisons with existing studies in the literature. Finally, we discuss and present possible future work in Section 5 and conclude the study in Section 6.

2. Literature Review

One of the earliest approaches to breast density assessment was a study by Boyd et al. [16] using interactive thresholding known as Cumulus, where regions with dense tissue were segmented by manually tuning the grey-level threshold value. The most popular approaches are based on the first and second-order (e.g., Grey Level Co-occurrence Matrix) statistical features as used by Oliver et al. [3], Bovis and Singh [4], Muštra et al. [17] and Parthaláin et al. [6]. Texture descriptors such as local binary patterns (LBP) were employed in the studies of Chen et al. [7] and Bosch et al. [8], and Textons were used by Chen et al. [7], Bosch et al. [8] and Petroudi et al. [12]. Other texture descriptors have also been evaluated, such as fractal-based [5,10], topography-based [9], morphology-based [3] and transform-based features (e.g., Fourier, Discrete Wavelet and Scale-invariant feature) [4,8].

There are many breast density classification methods in the literature, but only a few studies have achieved accuracies above 80%. The methods of Oliver et al. [3] and Parthaláin et al. [6] extract a set of features from dense and fatty tissue regions segmented using a fuzzy c-means clustering technique for input into the classifier. Oliver et al. [3] achieved 86% accuracy, and Parthaláin et al. [6], who used a sophisticated feature selection framework, achieved 91.4% accuracy based on the Mammographic Image Analysis Society (MIAS) database [18]. Bovis and Singh [4] produced 71.4% accuracy based on a combined classifier paradigm in conjunction with a combination of the Fourier and Discrete Wavelet Transforms, and first and second-order statistical features. Chen et al. [7] made a comparative study on the performance of local binary patterns (LBP), local grey-level appearance (LGA), Textons and basic image features (BIF) and reported accuracies of 59%, 72%, 75% and 70%, respectively. Later, they proposed a method by modelling the distribution of the dense region in topographic representations and reported a slightly higher accuracy of 76%. Petroudi et al. [12] implemented the Textons approach based on the Maximum Response 8 (MR8) filter bank. The distribution was used to compare each of the resulting histograms from the training set with all the learned histogram models from the training set and reported 75.5% accuracy. He et al. [11] achieved an accuracy of 70% using the relative proportions of the four Tabár building blocks. Muštra et al. [17] captured the characteristics of the breast region using multi-resolution of first and second-order statistical features and reported 79.3% accuracy.

Tamrakar and Ahuja [19] studied a two-class (benign versus malignant), patch-based density classification problem by considering each of the BI-RADS classes. They investigated various texture descriptors and proposed a new feature extraction method called Histogram of Orientated Texture to achieve more than classification accuracy. Ergin and Kilinc [20] used the Histogram of Oriented Gradients (HOG), Dense Scale Invariant Feature Transform (DSIFT), and Local Configuration Pattern (LCP) methods to extract the rotation- and scale-invariant features for all tissue patches to achieve above accuracy. Gedik [21] used the fast finite Shearlet transform as a feature extraction technique and t-test statistics as a feature selection procedure. The author reported an average of more than accuracy for two-class classification across two different datasets.

With the advent of deep learning techniques achieving performance similar to human readers [22], they are gaining attention from computer scientists across different research fields. In medical image analysis, deep learning techniques have been used in segmentation and classification problems related to the brain, eye, chest, digital pathology, breast, cardiac, abdomen and musculoskeletal imaging [23]. In the application of breast imaging, particularly in mammography, Kallenberg et al. [24] showed the potential of unsupervised deep learning methods applied to breast density segmentation and mammographic risk scoring on three different clinical datasets, and a strong positive correlation was found compared with manual annotations from expert radiologists. In a study of Ahn et al. [25], a Convolutional Neural Network (CNN) was used to learn the characteristics of dense and fatty tissues from 297 mammograms. Based on the 100 mammograms used as a testing dataset, the authors reported the correlation coefficient between the breast estimates by the CNN and those by the expert’s manual measurement to be 0.96. Arevalo et al. [26] proposed a hybrid CNN method to segment breast mass and reported 0.83 in the area under the curve (AUC) value. Qui et al. [27] proposed three pairs of convolution-max-pooling layers that contain 20, 10, and five feature maps to predict short-term breast cancer risk and achieved 71.4%. Using a deeper network approach, Cheng et al. [28] proposed an eight multi-layer deep learning algorithm to employ three pairs of convolution-max-pooling layers for mammographic masses classification and reported AUC of 0.81. In a similar problem domain (mass classification), Jiao et al. [29] used CNN and a decision mechanism along with intensity information and reported an average of 95% accuracy on different sizes of datasets.

Based on the results reported in the literature, the majority of the proposed methods have used traditional machine learning algorithms tested on 4-class breast density classification and achieved below 80% accuracy. This indicates that breast density classification is a challenging task due to the complexity of tissue appearance in the mammograms such as wide variations in texture and ambiguous texture patterns within the breast region. Although numerical results from deep learning based methods are quite promising, none of these studies has attempted the 4-class density classification problem. In fact, the majority of the deep learning based methods are based on 2-class classification problems (e.g., malignant versus benign, and fatty versus dense tissues). In this paper, texture features were extracted from the fibro-glandular region () only instead of from the whole breast region in order to obtain more specific descriptive information. The motivation for this approach is that, in most cases, the non- contains mostly fatty tissues regardless of its BI-RADS class, and most dense tissues are located and start to develop within the . Therefore, extracting features from the whole breast region means obtaining multiclass texture information, which makes the extracted features less discriminant for the BI-RADS classes.

3. Methodology

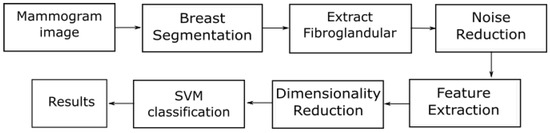

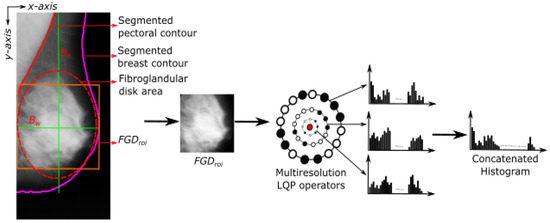

Figure 2 shows an overview of the proposed methodology. Firstly, we segment the breast area and estimate the area. Subsequently, we use a simple median filter using a sliding window for noise reduction and employ the multiresolution LQP operators with different neighbourhood topologies and orientations to capture the microstructure information within the . For dimensionality reduction, we select only dominant patterns in the feature space to remove redundant or unnecessary information. Finally, we train the Support Vector Machine (SVM) classifier to build a predictive model and use it to test each unseen case.

Figure 2.

An overview of the proposed breast density methodology.

3.1. Pre-Processing

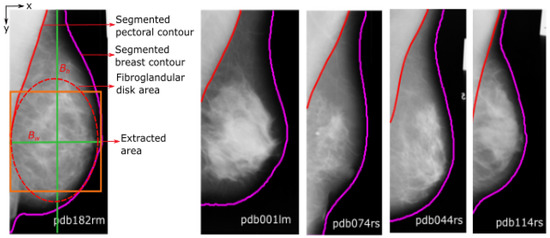

To segment the breast and pectoral muscle region, we used the method in [30], which is based on Active Contours without edges for the breast boundary estimation and contour growing with edge information for the pectoral muscle boundary estimation. The leftmost image in Figure 3 shows the estimated area. To extract , we find , which is the longest perpendicular distance between the y-axis and the breast boundary (magenta line in Figure 3). The width and the height of the square area of the (amber line Figure 3) can be computed as with the centre located at the intersection point between and lines. is the height of the breast, which is the longest perpendicular distance between the x-axis and the breast boundary. is then relocated to the middle of to get the intersection point. The size of the varies depending on the width of the breast. Figure 3 shows examples of segmentation results using our method in [30].

Figure 3.

Example of segmentation results using our method in [30].

3.2. Feature Extraction

In the feature extraction stage, we used the LQP mathematical operators to extract local pattern information within the , which is a variant of Local Binary Pattern (LBP). The LBP operators were first proposed by Ojala et al. [31,32] to encode pixel-wise information. The value of the LBP code of the pixel is given by:

where R is the radius of the circle that forms the neighbourhood, P is the number of pixels in the neighbourhood, is the grey level value of the centre pixel, and is the grey level value of the neighbour. Later, Tan and Triggs [33] modified the approach by introducing Local Ternary Pattern (LTP) operators, which threshold the neighbouring pixels using a three-value encoding system based on a constant threshold set by the user. In our previous study [34], experimental results suggested that the LTP operators using a combination of uniform and nonuniform mapping patterns () produced robust texture descriptors that can be used for density classification. Once the LTP code is generated, it is split into two binary patterns by considering its positive and negative components:

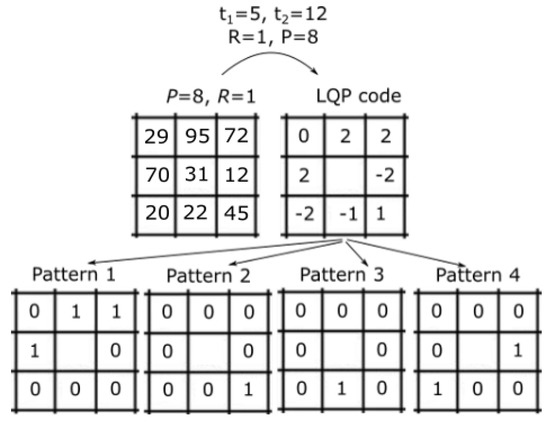

where is a threshold value set by the user. Nanni et al. [35] introduced a five-value encoding system called LQP. The LBP, LTP and LQP are similar in terms of architecture as each is defined using a circle centred on each pixel and the number of neighbours. The main difference is that the LBP, LTP and LQP threshold the neighbouring pixels into two (1 and 0), three (, 0 and 1) and five (2, 1, 0, and ) values, respectively. This means that, for LQP, the difference between the grey-level value of the centre pixel () and a neighbour’s grey level () can assume five values, whereas LBP and LTP consider two and three values, respectively. The value of LQP code of the pixel is given by:

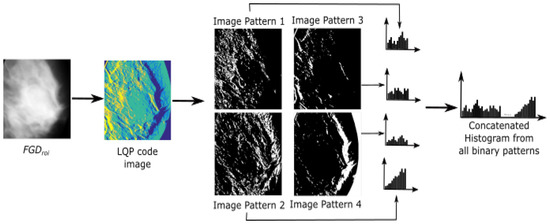

where and are threshold values set by the user, and . Once the LQP code is generated, it is split into four binary patterns by considering its positive, zero and negative components, as illustrated in Figure 4, using the following conditions:

Figure 4.

An illustration of computing the Local Quinary Pattern (LQP) code using and , resulting in four binary patterns.

In this case, feature extraction is the process of computing the frequencies of all binary patterns and presenting the occurrences in a histogram, which represents the number of appearances of edges, corners, spots, and lines within the . This means that the size of the histogram is . To enrich texture information, we compute texture information at different resolutions, which can be achieved by concatenating histogram features extracted using different values of R and P in the LQP operators. In this paper, resolution is controlled by the radius of the circle (e.g., different window sizes) and a different number of neighbours. Figure 4 shows an example of converting neighbouring pixels to an LQP code and binary code, resulting in four binary patterns. In the LQP code, there are five numerical values generated based on Equation (5), whereas binary patterns 1, 2, 3 and 4 are generated based on Equations (6)–(9), respectively.
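The quinary coding and its split into four binary patterns can be sketched as follows. This is a minimal illustration, assuming the usual five-level thresholding of Nanni et al. [35] with two thresholds. The names `quinary_code`, `split_binary_patterns`, `tau1` and `tau2`, the example grey levels and threshold values, and the handling of values that fall exactly on a threshold are all assumptions made for the example, not the settings used in our experiments.

```python
def quinary_code(neighbour, centre, tau1, tau2):
    """Map one neighbour to a level in {2, 1, 0, -1, -2} (assumes 0 < tau1 < tau2)."""
    diff = neighbour - centre
    if diff >= tau2:
        return 2
    if diff >= tau1:
        return 1
    if diff >= -tau1:
        return 0
    if diff >= -tau2:
        return -1
    return -2


def split_binary_patterns(codes):
    """Split a quinary code into four binary patterns, one per non-zero level."""
    patterns = {}
    for level in (2, 1, -1, -2):
        bits = [1 if c == level else 0 for c in codes]
        # Neighbour p contributes 2**p when its bit is set.
        patterns[level] = sum(bit << p for p, bit in enumerate(bits))
    return patterns


# Hypothetical 8-neighbour example with illustrative thresholds.
centre = 50
neighbours = [60, 52, 45, 20, 50, 58, 41, 70]
codes = [quinary_code(g, centre, tau1=5, tau2=12) for g in neighbours]
patterns = split_binary_patterns(codes)
print(codes)
print(patterns)
```

Each of the four decimal values indexes one bin in the histogram of the corresponding binary pattern.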

Figure 5 shows an example of the feature extraction process using multiresolution LQP operators. Note that each resolution produces four binary patterns (see Figure 4) resulting in four histograms. Subsequently, these four histograms are concatenated into a single histogram, which represents the feature occurrences of a single resolution. The illustration in Figure 5 uses three resolutions, producing three primary histograms (note that each main histogram is a result of concatenation from four histograms of four binary patterns) that are merged into a single histogram as the actual representation of the feature occurrences in the .
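The concatenation step can be sketched in a few lines. The sketch assumes that each resolution has already been reduced to four lists of decimal pattern codes (one list per binary pattern) and that each histogram has one bin per possible code; the function names and the toy data are illustrative only.

```python
def histogram(codes, n_bins):
    """Count how often each pattern code occurs."""
    counts = [0] * n_bins
    for code in codes:
        counts[code] += 1
    return counts


def multiresolution_feature(resolutions):
    """Concatenate the four pattern histograms of every resolution.

    `resolutions` is a list of (P, pattern_maps) pairs, where P is the number
    of neighbours and pattern_maps holds four lists of pattern codes.
    """
    feature = []
    for n_neighbours, pattern_maps in resolutions:
        for codes in pattern_maps:
            feature.extend(histogram(codes, 2 ** n_neighbours))
    return feature


# Toy data: two resolutions with P = 2 and P = 3 neighbours.
toy = [
    (2, [[0, 1, 1, 3], [2, 2, 2, 2], [0, 0, 0, 0], [3, 3, 1, 0]]),
    (3, [[7, 0], [1, 1], [2, 5], [4, 4]]),
]
feature = multiresolution_feature(toy)
print(len(feature))
```

With P = 8 neighbours at each of three resolutions, the same procedure would give 3 × 4 × 256 bins before any dominant pattern selection.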

Figure 5.

An overview of the feature extraction using multiresolution LQP operators. Black dots in multiresolution LQP operators mean neighbours with a lower value than the central pixel (red dot). Note that the outer circle with bigger dots represents a larger R value.

Figure 6 shows four images of binary patterns generated based on the conditions in Equations (6)–(9). The LQP code image is generated using the term in Equation (5). All histograms from binary patterns are concatenated to produce a single histogram. This process is repeated depending on the number of resolutions chosen by the user. In the proposed approach, this process was repeated three times as we used three different resolutions such as , , .

Figure 6.

Four images of binary patterns generated from the LQP code image.

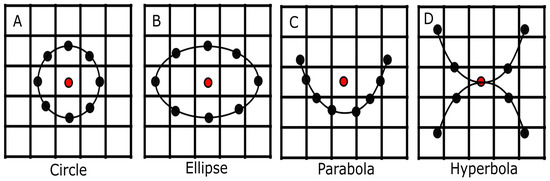

3.3. Neighbourhood Topology

The typical neighbourhood topology used in the LBP operators is a circle. In this study, we investigate how different neighbourhood topologies affect the proposed method. For this purpose, we employed three further topologies, namely ellipse, parabola and hyperbola, as illustrated in Figure 7, where eight neighbours (black dots) were used and distributed equally. The central point is represented by the red dot in each topology. The value for each neighbour is the grey level value of the pixel that is located nearest to it. We summarise the equation and parameters involved in Table 1 for each topology as defined by Nanni et al. [35].
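As an illustration of how neighbours can be placed on a topology and mapped to the nearest pixel, the sketch below samples an axis-aligned ellipse (a circle is the special case a = b = R). It assumes the neighbours are spaced equally in the ellipse's parametric angle, which may differ from the exact equations in Table 1; `ellipse_offsets` and `sample_neighbours` are hypothetical helper names.

```python
import math


def ellipse_offsets(a, b, n_neighbours=8):
    """Return (dx, dy) pixel offsets of neighbours placed on an ellipse.

    a and b are the semi-axis lengths along x and y. Each continuous
    position is rounded to the nearest pixel.
    """
    offsets = []
    for p in range(n_neighbours):
        t = 2.0 * math.pi * p / n_neighbours
        x = a * math.cos(t)
        y = b * math.sin(t)
        offsets.append((round(x), round(y)))
    return offsets


def sample_neighbours(image, row, col, offsets):
    """Read the grey level of every neighbour of the pixel at (row, col)."""
    return [image[row + dy][col + dx] for dx, dy in offsets]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
circle = ellipse_offsets(1, 1)    # circle with R = 1
ellipse = ellipse_offsets(2, 1)   # ellipse with a = 2, b = 1
print(sample_neighbours(image, 1, 1, circle))
```

The parabola and hyperbola topologies would need their own parametrisations and are not covered by this sketch.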

Figure 7.

Four different neighbourhood topologies employed in our study.

Table 1.

Parameter descriptions for all neighbourhood topologies. Note that a and b are the semimajor and semiminor axis lengths, and c is the distance between vertex and focus.

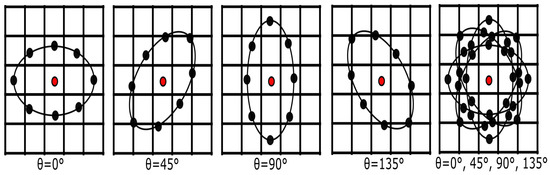

3.4. Topology Orientations

In this study, we further investigate the effects on the classification results when using local pattern information extracted at different orientations (). For this purpose, we investigated three other directions: , and (note that is the default orientation). Furthermore, these orientations also can be combined to create a new topology as illustrated in the rightmost image in Figure 8.

Figure 8.

Ellipse topology at different orientations and its combination.

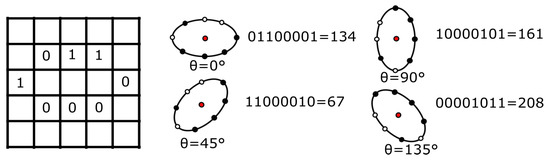

Topology orientation can be achieved through spatial rotation of the binary pattern. To illustrate this process, consider Figure 9, which represents a binary pattern in an ellipse topology (for simplicity, assume that the pattern was generated after the process in Figure 6 was implemented). In this example, the initial decimal value for the binary pattern 01100001 is 134 but rotating the pattern at produces a new decimal value of 67 (binary pattern becomes 11000010). Similarly, rotation at and result in 161 and 208 decimal values, respectively. The same rotation process applies to the circle, parabola and hyperbola topologies.
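The decimal values in this example can be reproduced with a circular shift. In the sketch below, the first character of the pattern string is treated as the least significant bit, which is the reading under which 01100001 evaluates to 134, and each orientation step is modelled as a one-position circular shift of the string. The function names are illustrative.

```python
def pattern_value(bits):
    """Decimal value of a pattern string, first character = least significant bit."""
    return sum(int(bit) << position for position, bit in enumerate(bits))


def rotate(bits, steps):
    """Circularly shift the pattern string to the left by `steps` positions."""
    steps %= len(bits)
    return bits[steps:] + bits[:steps]


pattern = "01100001"
for steps in range(4):
    rotated = rotate(pattern, steps)
    print(steps, rotated, pattern_value(rotated))
```

Shifting by zero to three positions gives the values 134, 67, 161 and 208 quoted above.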

Figure 9.

Spatial rotation in an ellipse topology resulting in different decimal values. The decimal value is calculated in a clockwise direction.

3.5. Dominant Patterns

Selecting dominant patterns is an important process for dimensionality reduction purposes due to the large number of features (e.g., concatenation of several histograms). According to Guo et al. [36], a set of dominant patterns for an image is the minimum set of pattern types that can cover of all patterns of an image. In other words, dominant patterns are patterns that occur frequently in training images. Therefore, to find the dominant patterns, we apply the following procedure. Let be images in the training set. Firstly, we compute the multiresolution histogram feature () for each training image. Secondly, we perform a bin-wise summation for all the histograms to find the pattern’s distribution from the training set. Subsequently, the resulting histogram () is sorted in descending order, and the patterns corresponding to the first D bins are selected, where D can be calculated using the following equation:

Here, N is the total number of patterns and n is the threshold value set by the user. For example, means removing patterns that have less than 3% occurrence in . This means only the most frequently occurring patterns will be retained for training and testing. The smaller the value of n, the smaller the number of patterns selected.
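A minimal sketch of this selection procedure is given below. It assumes that D is the fraction n/100 of the N bins, rounded to the nearest integer, which matches the description of N and n above but is not confirmed by the text as extracted here; the function names and the toy histograms are hypothetical.

```python
def select_dominant_patterns(histograms, n):
    """Return the indices of the D most frequent bins over the training set.

    histograms: list of equal-length histograms, one per training image.
    n: percentage of bins to keep (assumed rule: D = round(N * n / 100)).
    """
    n_bins = len(histograms[0])
    # Bin-wise summation over all training histograms.
    summed = [sum(h[b] for h in histograms) for b in range(n_bins)]
    # Bin indices sorted by decreasing total occurrence.
    order = sorted(range(n_bins), key=lambda b: summed[b], reverse=True)
    d = round(n_bins * n / 100)
    return order[:d]


def keep_bins(histogram, bins):
    """Project a histogram onto the selected bins."""
    return [histogram[b] for b in bins]


train = [[5, 0, 3, 1],
         [4, 1, 2, 0]]
dominant = select_dominant_patterns(train, n=50)
reduced = [keep_bins(h, dominant) for h in train]
print(dominant, reduced)
```

The bin indices are chosen from the training set only and then applied unchanged to the histograms of unseen test images.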

3.6. Classification

Once the feature extraction is completed, the SVM is employed as our classification approach using a polynomial kernel. The GridSearch technique is used to explore the best two parameters (complexity (C) and exponent (e)). We test all possible values of C and e ( and with interval ) and select the best combination based on the highest accuracy in the training phase. The SVM classifier was trained, and, in the testing phase, each unseen from the testing set is classified as BI-RADS I, II, III or IV.
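The parameter search can be sketched generically as an exhaustive loop over the grid. The example does not train an SVM: `toy_score` is a stand-in for the training accuracy of a polynomial-kernel SVM with complexity C and exponent e, and the grid values are placeholders, not the ranges used in our experiments.

```python
from itertools import product


def grid_search(c_values, e_values, score_fn):
    """Try every (C, e) pair and keep the one with the highest score."""
    best_params, best_score = None, float("-inf")
    for c, e in product(c_values, e_values):
        score = score_fn(c, e)
        if score > best_score:
            best_params, best_score = (c, e), score
    return best_params, best_score


def toy_score(c, e):
    """Placeholder for the training accuracy of an SVM with parameters (C, e)."""
    return 1.0 - 0.01 * ((c - 3) ** 2 + (e - 2) ** 2)


best_params, best_score = grid_search(range(1, 6), range(1, 4), toy_score)
print(best_params, best_score)
```

In practice `score_fn` would train the classifier on the training folds and return its accuracy.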

4. Experimental Results

To test the performance of the method, we used the Mammographic Image Analysis Society (MIAS) dataset [18], which consists of 322 mediolateral-oblique (MLO) mammograms of 161 women. The spatial resolution of the images is and quantised to eight bits with a linear optical density in the range 0 to . The density distribution for BI-RADS classes is as follows: 60 (BI-RADS I), 105 (BI-RADS II), 129 (BI-RADS III) and 31 (BI-RADS IV). The second database used in this study is the InBreast dataset [15], which consists of 410 images of 115 women. Since our study concentrates on breast representation in MLO views, only the 206 MLO mammograms were included in our experiment. The pixel size of all images is 70 µm (microns) with 14-bit contrast resolution. The image matrix was or pixels. The density distribution for BI-RADS classes is as follows: 69 (BI-RADS I), 74 (BI-RADS II), 49 (BI-RADS III) and 14 (BI-RADS IV). Each image contains BI-RADS information (e.g., BI-RADS class I, II, III or IV) provided by an expert radiologist. A stratified 10-fold cross-validation scheme with ten runs was employed: in each fold, the patients were randomly split into 90% for training and 10% for testing, giving 100 train/test repetitions in total. Accuracy () is used to measure the performance of the method, which represents the total number of correctly classified images as a proportion of the total number of images. Both datasets were annotated by expert radiologists based on the fourth edition of the BI-RADS system. Therefore, evaluation of the proposed method is based on the fourth edition guidelines. All images in both datasets are in .tif format (8 bits) with a grey-level range from 0 to 255.
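The evaluation protocol can be illustrated with a small sketch of stratified fold assignment and the accuracy measure. It works at the image level for simplicity, whereas the split described above is made per patient; the class counts are those of the InBreast MLO subset, and `stratified_folds` and `accuracy` are illustrative names.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Assign sample indices to k folds so each class is spread evenly."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        # Deal the shuffled members of this class round-robin over the folds.
        for position, index in enumerate(members):
            folds[position % k].append(index)
    return folds


def accuracy(y_true, y_pred):
    """Correctly classified images as a proportion of all images."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


labels = ["I"] * 69 + ["II"] * 74 + ["III"] * 49 + ["IV"] * 14
folds = stratified_folds(labels, k=10, seed=0)
print([len(fold) for fold in folds])
```

Repeating the assignment with ten different seeds and using each fold once as the test set gives the 100 train/test repetitions.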

4.1. Results on Different Multiresolutions

This section presents quantitative results for the proposed method when using different parameter values (e.g., P, R, a, b and c) in the LQP operators. We evaluated the method using the following three different multiresolutions and tested it on various neighbourhood topologies:

- Small multiresolution (),

- Medium multiresolution (),

- Large multiresolution ().

All associated parameters are summarised in Table 2. Note that these parameter values were determined empirically, as the aim of our study is not to optimise the LQP parameters but to investigate the effects of neighbourhood topology in the feature extraction process using the LQP operators. Moreover, since there are many possible combinations of parameter values in each topology, testing all the values is impractical and time-consuming. Nevertheless, we selected several values empirically to show the effects of these parameters in our study.

Table 2.

Parameters values for different multiresolutions on different neighbourhood topologies.

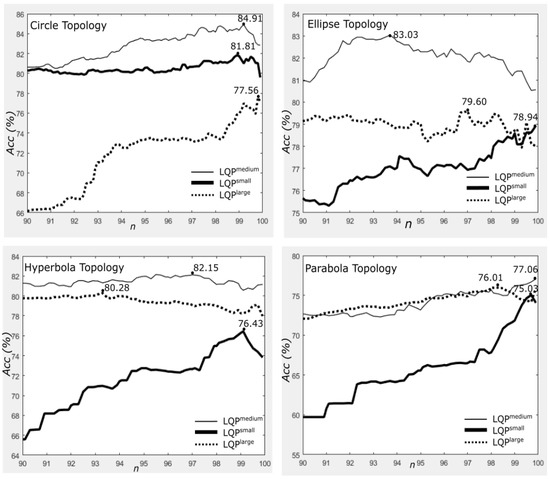

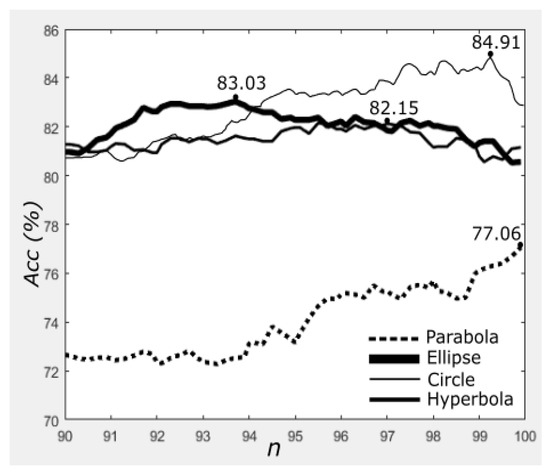

We present quantitative results for the proposed method in Figure 10 using small, medium and large multiresolutions covering four different neighbourhood topologies. Experimental results suggest that the outperformed and regardless of its topology and the number of dominant patterns selected, with the best accuracy of , followed by the approach at . The achieved the third best accuracy and the outperformed both and . This suggests that using a combination of medium sizes of R captures more discriminant features regardless of the topology. Previous studies [37,38,39] on texture descriptors across different window sizes found that using a small value of R does not capture sufficient information about the regions due to the limited intensities and grey level variations. On the other hand, using a large R tends to alter the actual representation of the area, especially when one class dominates over another class. For example, if there is a small dense region within the large circle area that mostly contains fatty tissues, combining different multi-resolutions such as does not provide significant improvement in the classification accuracy () due to insufficient textural information in and over-representation of the actual textural information from . We further tested and achieved an accuracy of .

Figure 10.

Quantitative results using different multiresolutions based on different neighbourhood topologies. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

The results in Figure 10 also indicate that the parameter n plays an important role in the performance of the method. It can be observed that the best classification results were achieved when for . For and , the best classification results were achieved when and , respectively. Moreover, selecting reduces the classification accuracy significantly, especially for , and compared with their best accuracies. Nevertheless, produced on average across different values of n () and different topologies. The produced better results (on average across different values of n) than , , and . Regarding accuracy, the produced just under 2% higher compared to the but approximately 1% higher than the result of . For the parabola topology, none of the multiresolution approaches achieved , and the highest accuracy was obtained using , with just over 77% correct classification.

4.2. Results on Different Thresholds

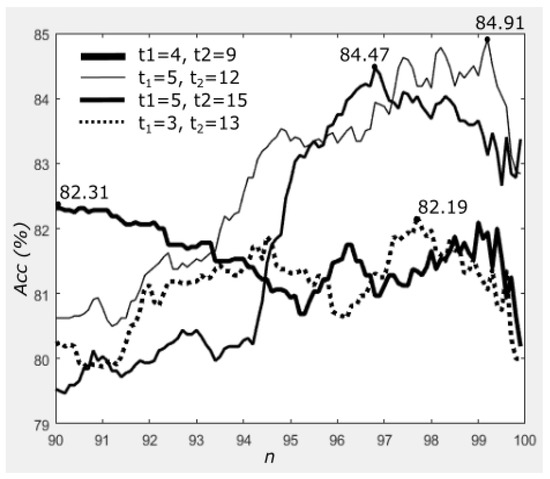

For the threshold values, we have investigated several combinations of and found that and produced the best accuracy for all experiments. However, for comparison, we report the performance of the method using , and for the circle topology. We also show the effect on performance when varying n from 90 to 99.9 with intervals of 0.1.

Figure 11 shows quantitative results for using the following thresholds: (): , , and . Note that these parameters are determined empirically, and there are many possible values that can be tested. It can be observed that threshold values of produced the best accuracy of 84.91% with followed by with . The other threshold values still produced good results compared with most of the proposed methods in the literature. Once again, it can be observed that selecting dominant patterns plays a significant role in the classification accuracy. In Figure 11, the proposed method produced very good results when for both and .

Figure 11.

Quantitative results using different values of and on circle neighbourhood topology. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

4.3. Fibro-Glandular Region versus Whole Breast Region

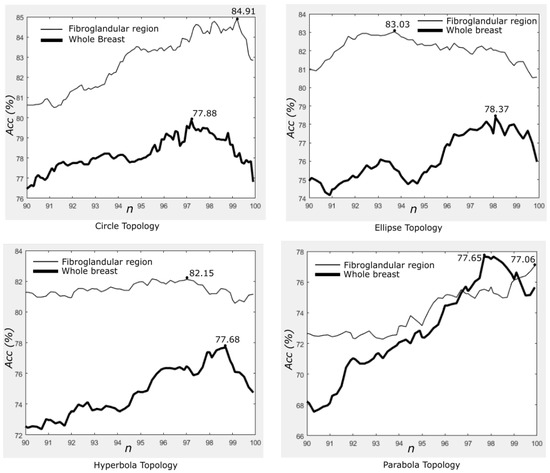

In this section, we present quantitative results when classifying unseen cases based on local pattern information extracted from the entire breast area (as all of the current studies in the literature have done) versus pattern information obtained from the only.

For this purpose, we conducted an experiment using with . We selected medium multiresolution, as this approach produces the best results compared with small and large multiresolution methods. The results in Figure 12 (top left) suggest that textures from the fibro-glandular disk region are sufficient to differentiate breast density. Extracting features from the whole breast produced up to 77.88% accuracy with , which is lower than when features are extracted from the only. To further support these results, we conducted additional experiments using , and . As can be observed in Figure 12, regardless of topology, features extracted from the once again produced more discriminant descriptors than the ones obtained from the whole breast region.

Figure 12.

Quantitative results based on features extracted from the whole breast versus fibro-glandular disk region covering four different topologies. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

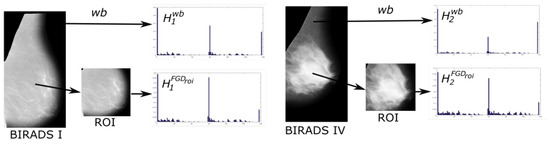

Our explanation for these results is that, in many cases, non-fibro-glandular disk areas predominantly contain fatty tissues regardless of their BI-RADS class because most dense tissues start to develop within the . Therefore, capturing texture information outside the means extracting similar information resulting in less discriminative features across BI-RADS classes [40]. In cases where the non-fibro-glandular disk region is dominated by dense tissue, the mostly contains dense masses. For example, Figure 13 shows histograms extracted from the whole breast regions () and the . To measure the difference quantitatively, we used the distance (d) to gauge the difference between these histograms and found for and , and for and . This means that is more similar to than and .

Figure 13.

Histograms extracted from the versus region of interest (ROI) with BIRADS class I and IV.

4.4. Results on Different Neighbourhood Topologies

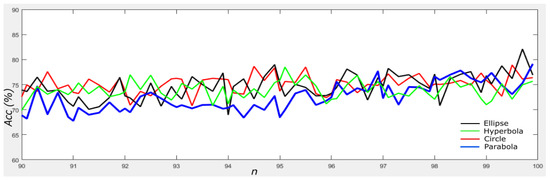

To summarise the performance of the proposed method using different neighbourhood topologies, we present the results evaluated based on the MIAS dataset [18], all in a single graph as shown in Figure 14. The results are based on medium size multiresolution (e.g., ). All associated parameters remain the same as in Table 2. Experimental results in Figure 14 suggest that three of the topologies employed, namely circle, ellipse and hyperbola, produced consistent results () across different values of n (), whereas failed to achieve an accuracy above 80%. The circle topology achieved the highest accuracy of 84.91% followed by ellipse and hyperbola with and 82.15%, respectively. In terms of accuracy, the main difference between the and the is that the tends to produce higher results when and for .

Figure 14.

Quantitative results (MIAS dataset [18]) of based on features extracted from the fibro-glandular disk region on different neighbourhood topologies. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

We further tested the proposed method using the InBreast dataset [15] and present the results in Figure 15. All associated parameters are the same as in Table 2. It can be observed that the ellipse topology performed the best with over accuracy at , whereas the circle topology produced close to at and . The parabola topology achieved the highest accuracy () at and at for the hyperbola topology. On average, the proposed method achieved , , and accuracy for the circle, ellipse, hyperbola and parabola, respectively, across different n.

Figure 15.

Quantitative results (InBreast dataset [15]) of based on features extracted from the fibro-glandular disk region on different neighbourhood topologies. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

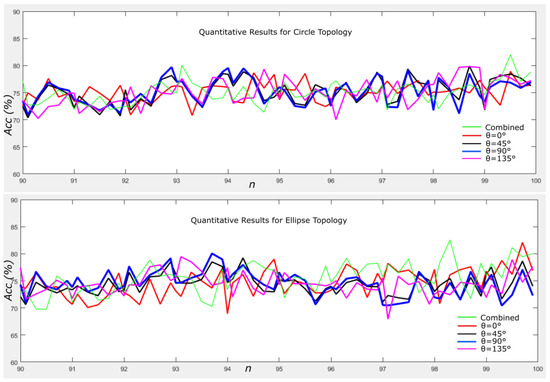

4.5. Results on Different Orientations

To investigate the effect on the performance at various orientations (), we conducted several experiments by varying with the following values: (which is the default orientation), , and . The parameter values remain the same for all medium size multiresolution approaches with []. Therefore, the new parameter settings are presented in Table 3. Note that only the circle and ellipse topologies are tested due to their promising results presented in Section 4.4. Combining different orientations for the ellipse results in the neighbourhood architecture becoming more complex as presented in the rightmost image in Figure 8. To assess the effect of a single orientation, first, we set a constant value of across different resolutions. For example, for the circle (), (from now on, we call it , where ). Secondly, we vary the value of (as presented in Table 3), hence the parameters are (from now on, we call it , where * indicates all four orientations).

Table 3.

Parameter values for different neighbourhood topologies and orientations.

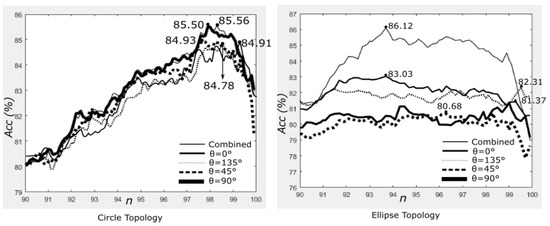
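To make the role of the orientation parameter concrete, the following sketch generates neighbour offsets on a circle and on a rotated ellipse around a central pixel. It is an illustration under our own assumptions, not the implementation used in the experiments: the function names, the semi-axis parameters `a` and `b`, and the example values are hypothetical, and a full operator would additionally interpolate grey levels at these non-integer positions.

```python
import math


def ellipse_neighbours(a, b, p, theta_deg=0.0):
    """Return p (x, y) offsets sampled on an ellipse with semi-axes a and b,
    rotated by theta_deg degrees about the central pixel."""
    theta = math.radians(theta_deg)
    offsets = []
    for k in range(p):
        t = 2.0 * math.pi * k / p  # angular position of neighbour k
        x, y = a * math.cos(t), b * math.sin(t)
        # Rotate the sampling point by the orientation angle.
        xr = x * math.cos(theta) - y * math.sin(theta)
        yr = x * math.sin(theta) + y * math.cos(theta)
        offsets.append((xr, yr))
    return offsets


def circle_neighbours(r, p, theta_deg=0.0):
    """A circle is the special case of an ellipse with equal semi-axes."""
    return ellipse_neighbours(r, r, p, theta_deg)


# Illustrative values only.
circle = circle_neighbours(r=2, p=8)
ellipse_rotated = ellipse_neighbours(a=3, b=1, p=8, theta_deg=90)
```

Concatenating the offsets produced for several orientations of the ellipse gives a denser and more complex neighbourhood than any single orientation, which is the effect described for the rightmost image in Figure 8.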

The left image of Figure 16 shows classification accuracies using the circle neighbourhood topology. Individually, experimental results suggest that the produced the best accuracy of , which indicates density patterns are more visible at this orientation followed by with accuracy . Overall results suggest that multiresolution can produce consistent results (>) regardless of the orientation with . Combining all orientations suggests that produced 85.56%, which is very similar to using a single orientation. On the other hand, the right image in Figure 16 shows that produced 86.12% accuracy, which is noticeable compared to the second best accuracy of (83.03%). The , and produced the best accuracy of 80.68%, 81.37% and 82.31%, respectively, when tested using .

Figure 16.

Quantitative results using different orientations evaluated on the MIAS dataset [18]. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

Figure 17 shows classification accuracies using the circle and ellipse neighbourhood topologies for the InBreast [15] dataset. Note that we used the same parameter values as presented in Table 3. It can be observed that combining all orientations (, , , ) produced the highest accuracy of just over for both topologies. However, each orientation tends to produce an accuracy between to , which is lower than the results when tested on the MIAS dataset [18]. This might be due to the smaller number of samples and an imbalanced number of instances across different classes, particularly BI-RADS IV (only ). The accuracy variations shown in Figure 17 indicate that the selection of n is crucial in order to optimise the performance of the proposed method. Individually, the ellipse topology produced the second best accuracy of at , whereas the circle topology produced an accuracy of at .

Figure 17.

Quantitative results using different orientations evaluated on the InBreast dataset [15]. Note that the x-axis and y-axis represent the accuracy (percentage of correctly classified cases) and the percentage of dominant patterns, respectively.

4.6. Summary of Quantitative Results

The quantitative results for the sensitivity (), specificity () and area under the curve (AUC) are summarised in Table 4 and Table 5 for the MIAS [18] and InBreast [15] datasets, respectively. We only tested the for circle and ellipse topologies because they were shown to be more effective than the other topologies in Section 4.1, Section 4.2, Section 4.3, Section 4.4 and Section 4.5. Since the classification procedure in this study is a non-binary (multiclass) [41] problem, we used the pairwise analysis approach [42] to calculate the sensitivity, specificity and AUC values to further evaluate the proposed method. Sensitivity measures the proportion of actual positives that are correctly identified, and specificity represents the proportion of actual negatives that are correctly identified. The AUC (also known as the value) indicates the trade-off between the true positive rate and the false positive rate.

Table 4.

Overall quantitative results for MIAS [18] database using circle and ellipse topologies. The parameters R and P are based on the values in Table 3.

Table 5.

Overall quantitative results for InBreast [15] database using circle and ellipse topologies. The parameters R and P are based on the values in Table 3.

Note that the standard deviations in Table 4 and Table 5 are quite large due to the imbalance in the number of instances across different classes. For example, the proportion of samples for BI-RADS IV is quite small in both datasets. In a case where the testing set contains only a small number of samples from BI-RADS IV (e.g., 4 samples) and only two of them are correctly classified, this results in 50% sensitivity, which not only increases the standard deviation but also affects the average sensitivity, specificity and AUC. Overall, the proposed method achieved the highest () with () and () when evaluated on the MIAS dataset [18]. Nevertheless, the results are lower when tested on the InBreast dataset [15] with the best values of , and for , and , respectively.
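As a worked illustration of how sensitivity and specificity behave for a small class, the sketch below computes both measures per class from a multiclass confusion matrix. It uses a simple one-versus-rest reading of the matrix, which is a simplification and not the pairwise analysis of [42]; the function name and the confusion matrix are made up for illustration.

```python
def per_class_rates(confusion):
    """Return {class_index: (sensitivity, specificity)}, treating each class in
    turn as positive and all other classes as negative (one-versus-rest).
    Rows are true classes and columns are predicted classes."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    rates = {}
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn) if tp + fn else float("nan")
        specificity = tn / (tn + fp) if tn + fp else float("nan")
        rates[c] = (sensitivity, specificity)
    return rates


# Made-up test fold: classes 0-3 stand for BI-RADS I-IV; the last class has
# only 4 samples, of which 2 are classified correctly.
confusion = [
    [8, 2, 0, 0],
    [1, 7, 2, 0],
    [0, 2, 7, 1],
    [0, 0, 2, 2],
]
rates = per_class_rates(confusion)
```

With only four samples in the last class, each misclassification moves its sensitivity by 25 percentage points, which is why fold-to-fold variation for BI-RADS IV can inflate the reported standard deviations.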

5. Discussion

We present this section as three subsections that cover quantitative comparison with current methods in the literature, effects of parameter selection on the proposed method and future work.

5.1. Quantitative Comparisons

For quantitative comparison with other methods in the literature, to minimise bias, we selected those studies that have used the MIAS database [18], four-class classification, and used the same evaluation technique (10-fold cross validation) as in this study. Table 6 shows studies that have been conducted using the same evaluation technique on four-class classification. Note that all studies have used all of the 322 mammograms in the MIAS database [18].
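Since the comparison hinges on a shared evaluation protocol, a minimal sketch of a stratified ten-fold split may be useful. It is written in plain Python under our own assumptions (round-robin dealing of shuffled indices, a fixed seed) and is not the implementation used in this study; the class sizes are the MIAS counts for BI-RADS I to IV reported in this section.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Assign each sample index to one of k folds so that every fold
    receives a near-equal share of each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal the shuffled indices round-robin across the folds.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


# MIAS class sizes for BI-RADS I, II, III and IV.
class_sizes = {"I": 87, "II": 103, "III": 95, "IV": 37}
labels = [name for name, size in class_sizes.items() for _ in range(size)]
folds = stratified_folds(labels, k=10)
iv_per_fold = [sum(1 for i in fold if labels[i] == "IV") for fold in folds]
```

Each fold serves once as the test set while the remaining nine are used for training. With 37 BI-RADS IV cases, every test fold contains only three or four samples of that class.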

Table 6.

Quantitative comparison of the current studies in the literature.

Based on the classification results presented in Table 6, Parthaláin et al. [6] achieved the best accuracy of 91.4% using a combination of first and second-order statistical features and morphological features. Our proposed method achieved up to 86.13% accuracy, which is better than the majority of the methods presented in Table 6. Although Parthaláin et al. [6] achieved the best accuracy, our method is simpler because we use only the LQP operators to extract local pattern information, whereas the method in [6] used several feature extraction techniques. Moreover, Fuzzy C-means (FCM) clustering is time-consuming, as every pixel needs to be classified as belonging to the fatty or non-fatty class. Similarly, the study of Oliver et al. [3] employed the FCM clustering technique and used the sequential forward selection (SFS) algorithm to select a set of discriminant features. Once again, this process is more complicated because each feature is compared with the other available features when calculating discriminant features. In contrast, our method selects dominant patterns based on the number of occurrences. It does not quantitatively compare each feature vector to the other features, which reduces computational time.

Previously, Rampun et al. [40] used the LTP operator to extract local information from the fibro-glandular disk region and achieved a promising result of 82.33% accuracy. However, this method suffers from having to deal with a large number of features due to the multi-orientation approach (e.g., eight histograms from eight orientations were concatenated). The main difference compared with the proposed method is that the method in [40] concatenated eight orientations and no feature selection was performed, whereas our method performed feature selection by selecting dominant patterns only and investigated different neighbourhood topologies and different resolutions. Muštra et al. [17] developed a method using first and second-order statistical features and employed a complex feature selection framework (e.g., combining several feature selection techniques). Nevertheless, considering the types of features used, they achieved comparable results of almost 80% accuracy. Chen et al. [7,9] investigated several ‘bag-of-words’ techniques (e.g., LBP, BIF, Textons and LGA) and reported that the Textons (based on MR8) and the LGA approaches achieved the highest accuracies of 76% and 75%, respectively.

Bovis and Singh [4] reported 71.4% accuracy based on a combined classifier paradigm and He et al. [11] produced 70% accuracy using the proportion of four Tabár building blocks. In an unsupervised approach, Petroudi et al. [12] achieved 75.5% classification accuracy using a Textons (MR8) approach to characterise breast appearance. Based on the numerical results presented in the literature, there is still scope for improvement regarding accuracy, which indicates that four-class classification is a challenging task. In our case (based on the MIAS database [18]), this might be due to: (a) strong artefacts because the images were scanned, which may also have altered the actual representation of the breast tissues, and (b) an imbalanced number of cases across the different classes. The MIAS database [18] contains 87, 103, 95 and 37 cases for BI-RADS I, II, III and IV, respectively. The number of cases for BI-RADS IV is small, which may cause the prediction model to under-represent this class.

5.2. Effects of Parameter Selection

There are many parameters involved in this study, and all of them were determined empirically. The aim of our study is not to optimise these parameters in our classification method but to investigate whether the LQP operators can produce discriminant features that can represent breast density based on different neighbourhood topologies. Based on the experimental results shown in Section 4.1, Section 4.2, Section 4.3, Section 4.4 and Section 4.5, all parameters have a significant effect on the performance results. However, the circle and ellipse topologies produced better results than the other topologies. This may be due to the texture appearance in breasts being mostly anisotropic, and these topologies are better at dealing with these types of structural information (due to their anisotropic shapes) than the parabola and hyperbola. In terms of the selection of R, a large value of R (e.g., 15 or 17) caused one class to be over-represented compared with the other classes, whereas a small value of R (e.g., 1 or 2) limits the texture information within the region. On the other hand, a large value of P (e.g., 22) increases the number of patterns but at the same time increases the information complexity in the feature space, and a small P (e.g., 4 or 6) results in insufficient texture patterns. In terms of and values, we found that the results in our study are higher than the ones found in the study of Nanni et al. [35].
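Because every parameter discussed here ultimately feeds the LQP encoding, a minimal sketch of the five-level coding step may help. It assumes the usual two-threshold formulation of local quinary patterns [35], in which each quinary pattern is split into four binary patterns; the names `tau1` and `tau2`, the boundary conventions and the example values are illustrative assumptions and not the settings used in the experiments.

```python
def quinary_code(diff, tau1, tau2):
    """Map a neighbour-minus-centre grey-level difference to one of five
    levels (-2, -1, 0, 1, 2) using two thresholds with 0 < tau1 < tau2."""
    if diff >= tau2:
        return 2
    if diff >= tau1:
        return 1
    if diff >= -tau1:
        return 0
    if diff >= -tau2:
        return -1
    return -2


def split_into_binary(codes):
    """Split a quinary pattern into four binary patterns, one per non-zero
    level, so that each can be histogrammed like an LBP code."""
    return {level: [1 if c == level else 0 for c in codes]
            for level in (2, 1, -1, -2)}


# Illustrative differences for five neighbours and hypothetical thresholds.
diffs = [20, 7, 0, -6, -30]
codes = [quinary_code(d, tau1=5, tau2=12) for d in diffs]
binary_patterns = split_into_binary(codes)
```

The sketch also shows why P matters: each additional neighbour adds one position to all four binary patterns, so the number of possible patterns grows quickly with P.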

Using a multiresolution approach increases the opportunity for capturing textural information that is not represented on a single scale. The same holds for textures that are more visible across multiple orientations than in a single orientation. Nevertheless, multiresolution and multi-orientation approaches also have their disadvantages, such as increasing the number of features, which also increases the possibility of capturing similar information. One way to reduce this problem is to select dominant patterns by taking only features that have high occurrences, but this still does not solve the problem of retaining two sets of similar features with high occurrences. In this study, before selecting dominant features, the number of features is approximately 200. Performing feature selection results in a much smaller number of features (between 50 and 80). For example, selecting results in 70 to 80 dominant features, whereas retains 55 to 65 dominant features. The main reason for the significant reduction is the sparsity of the features (a large percentage of zeros), which has been reported in [7,9,35]. Since the LQP operators produced sparse features in our case, this causes significant variation in the classification accuracies. As can be observed in Figure 10, Figure 11 and Figure 12, even a small change in n can alter the results significantly because a small n can remove a large number of features.
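The occurrence-based selection described above can be sketched as follows. The sketch assumes that n is the percentage of all pattern occurrences that the retained bins must cover, counted over the training histograms; this reading, the function names and the toy histograms are our assumptions for illustration only.

```python
def dominant_pattern_indices(histograms, n_percent):
    """Return the bin indices of the most frequent patterns that together
    account for at least n_percent of all occurrences in `histograms`."""
    if not 0 < n_percent <= 100:
        raise ValueError("n_percent must be in (0, 100]")
    num_bins = len(histograms[0])
    totals = [sum(h[b] for h in histograms) for b in range(num_bins)]
    grand_total = sum(totals)
    # Most frequent bins first; ties keep their original bin order.
    order = sorted(range(num_bins), key=lambda b: -totals[b])
    selected, covered = [], 0
    for b in order:
        if covered >= grand_total * n_percent / 100.0:
            break
        selected.append(b)
        covered += totals[b]
    return sorted(selected)


def project(histogram, indices):
    """Keep only the selected bins of a histogram."""
    return [histogram[b] for b in indices]


# Two sparse toy training histograms with made-up counts.
train = [
    [50, 0, 30, 0, 15, 5, 0, 0],
    [40, 0, 35, 0, 20, 5, 0, 0],
]
keep = dominant_pattern_indices(train, 90)
reduced = [project(h, keep) for h in train]
```

In this toy case, three of the eight bins already cover 90% of the occurrences, which illustrates how sparse histograms lead to a large reduction in the number of features. The selection only counts occurrences, so two retained bins may still carry similar information.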

When combining all four orientations, we found that the ellipse topology produced the highest accuracy, over 3% better than using a single orientation. In contrast, the circle topology produced only 0.06% improvement over . This is probably because the topology of the neighbourhood distribution has become more flexible, combining ‘circle-like’ and ‘ellipse-like’ shapes (see the rightmost image in Figure 8, where the central region is ‘circle-like’ and the outer shape is ‘ellipse-like’). In this case, the topology is able to capture information using both circle and ellipse neighbourhood distributions.

5.3. Future Work

For future work, we plan to develop a method that can automatically estimate and as well as combine multiresolution LQP features with ‘bag-of-words’ features such as Textons and MR8. Furthermore, we plan to use a deep learning approach to find discriminant features and combine them with handcrafted features (e.g., local patterns from LQP operators). Although several studies [22,23,24,25,26,27,28,29] have investigated the use of deep learning in breast density classification, to the best of our knowledge, the separation was only based on two- or three-class classification (none of the deep learning based approaches has been applied to four-class classification). In fact, no study has been conducted combining non-handcrafted and handcrafted features in breast density classification.

6. Conclusions

In conclusion, we have developed a breast density classification method using multiresolution LQP operators applied only within the fibro-glandular disk area, which is the most prominent region of the breast, instead of the whole breast region as used in other current studies [3,4,5,6,7,8,9,11,12]. Experimental results indicate that the multiresolution LQP features are robust compared with other methods such as LBP, Textons based approaches and LTP due to the five-level encoding system that generates more texture patterns. Moreover, the multiresolution approach provides complementary information from different parameters that cannot be captured in a single resolution. We further studied the effects on performance when using different neighbourhood topologies (e.g., ellipse, parabola and hyperbola), and found that the circle topology outperformed the ellipse, parabola and hyperbola topologies. Nevertheless, when combining four orientations (), the proposed method achieved the best accuracy, up to 86.12% (), based on the MIAS dataset [18], which is better than the other LQP approaches employed in this study. Finally, the proposed method produced competitive results based on two datasets (MIAS [18] and InBreast [15]) compared with some of the best accuracies reported in the literature.

Acknowledgments

This research was undertaken as part of the Decision Support and Information Management System for Breast Cancer (DESIREE) project. The project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 690238.

Author Contributions

In this study, Andrik Rampun did all the experiments and evaluations discussed. Bryan W. Scotney, Philip J. Morrow, Hui Wang and John Winder supervised the project and contributed equally in preparation of the final version of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AUC | Area Under the Curve |
| BIF | Basic Image Features |
| BI-RADS | Breast Imaging-Reporting and Data System |
| CAD | Computer Aided Diagnosis |
| CNN | Convolutional Neural Network |
| FCM | Fuzzy C-means |
| LBP | Local Binary Pattern |
| LGA | Local Grey-level Appearance |
| LTP | Local Ternary Pattern |
| LQP | Local Quinary Pattern |
| MR8 | Maximum Response 8 filter bank |
| SFS | Sequential Forward Selection |
| SVM | Support Vector Machine |
| | Fibro Glandular Region |

References

- Cancer Research UK. Breast cancer statistics. 2014. Available online: http://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/breast-cancer (accessed on 6 January 2017).

- Breast Cancer. U.S. Breast Cancer Statistics. 2016. Available online: http://www.breastcancer.org/symptoms/understand_bc/statistics (accessed on 6 January 2017).

- Oliver, A.; Freixenet, J.; Martí, R.; Pont, J.; Perez, E.; Denton, E.R.E.; Zwiggelaar, R. A Novel Breast Tissue Density Classification Methodology. IEEE Trans. Inf. Technol. Biomed. 2008, 12, 55–65.

- Bovis, K.; Singh, S. Classification of Mammographic Breast Density Using a Combined Classifier Paradigm. In Proceedings of the 4th International Workshop on Digital Mammography, Nijmegen, Netherlands, 7–10 June 2002; pp. 177–180.

- Oliver, A.; Tortajada, M.; Lladó, X.; Freixenet, J.; Ganau, S.; Tortajada, L.; Vilagran, M.; Sentís, M.; Martí, R. Breast Density Analysis Using an Automatic Density Segmentation Algorithm. J. Digit. Imaging 2015, 28, 604–612.

- Parthaláin, N.M.; Jensen, R.; Shen, Q.; Zwiggelaar, R. Fuzzy-rough approaches for mammographic risk analysis. Intell. Data Anal. 2010, 14, 225–244.

- Chen, Z.; Denton, E.; Zwiggelaar, R. Local feature based mammographic tissue pattern modelling and breast density classification. In Proceedings of the 4th International Conference on Biomedical Engineering and Informatics (BMEI), Shanghai, China, 15–17 October 2011; pp. 351–355.

- Bosch, A.; Munoz, X.; Oliver, A.; Martí, J. Modeling and Classifying Breast Tissue Density in Mammograms. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; pp. 1552–1558.

- Chen, Z.; Oliver, A.; Denton, E.; Zwiggelaar, R. Automated Mammographic Risk Classification Based on Breast Density Estimation. In Pattern Recognition and Image Analysis; Volume 7887 of the series Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; pp. 237–244.

- Wolfe, J.N. Risk for breast cancer development determined by mammographic parenchymal pattern. Cancer 1976, 37, 2486–2492.

- He, W.; Denton, E.; Stafford, K.; Zwiggelaar, R. Mammographic Image Segmentation and Risk Classification Based on Mammographic Parenchymal Patterns and Geometric Moments. Biomed. Signal Process. Control 2011, 6, 321–329.

- Petroudi, S.; Kadir, T.; Brady, M. Automatic Classification of Mammographic Parenchymal Patterns: A Statistical Approach. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No.03CH37439), Cancun, Mexico, 17–21 September 2003; Volume 1, pp. 798–801.

- Keller, B.M.; Chen, J.; Daye, D.; Conant, E.F.; Kontos, D. Preliminary evaluation of the publicly available Laboratory for Breast Radiodensity Assessment (LIBRA) software tool: Comparison of fully automated area and volumetric density measures in a case-control study with digital mammography. Breast Cancer Res. 2015, 17, 1–17.

- Rampun, A.; Morrow, P.J.; Scotney, B.W.; Winder, R.J. Breast density classification in mammograms using local quinary patterns. In Proceedings of the Annual Conference on Medical Image Understanding and Analysis MIUA 2017: Medical Image Understanding and Analysis, Edinburgh, UK, 11–13 July 2017; pp. 365–376.

- Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J.S. INbreast: Toward a full-field digital mammographic database. Acad. Radiol. 2012, 19, 236–248.

- Byng, J.W.; Boyd, N.F.; Fishell, E.; Jong, R.A.; Yaffe, M.J. Automated analysis of mammographic densities. Phys. Med. Biol. 1996, 41, 909–923.

- Muštra, M.; Grgić, M.; Delać, K. Breast Density Classification Using Multiple Feature Selection. Automatika 2012, 53, 362–372.

- Suckling, J.; Parker, J.; Dance, D.; Astley, S.; Hutt, I.; Boggis, C.; Ricketts, I. The mammographic image analysis society digital mammogram database. Proc. Excerpta Med. Int. Congr. Ser. 1994, 375–378.

- Tamrakar, D.; Ahuja, K. Density-Wise Two Stage Mammogram Classification Using Texture Exploiting Descriptors. arXiv, 2017; arXiv:1701.04010.

- Ergin, S.; Kilinc, O. A new feature extraction framework based on wavelets for breast cancer diagnosis. Comput. Biol. Med. 2015, 51, 171–182.

- Gedik, N. A new feature extraction method based on multiresolution representations of mammograms. Appl. Soft Comput. 2016, 44, 128–133.

- Kooi, T.; Litjens, G.; van Ginneken, B.; Gubern-Mérida, A.; Sánchez, C.I.; Mann, R.; den Heeten, A.; Karssemeijer, N. Large scale deep learning for computer aided detection of mammographic lesions. Med. Image Anal. 2017, 35, 303–312.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Kallenberg, M.; Petersen, K.; Nielsen, M.; Ng, A.; Diao, P.; Igel, C.; Vachon, C.; Holland, K.; Karssemeijer, N.; Lillholm, M. Unsupervised deep learning applied to breast density segmentation and mammographic risk scoring. IEEE Trans. Med. Imaging 2016, 35, 1322–1331.

- Ahn, C.K.; Heo, C.; Jin, H.; Kim, J.H. A Novel Deep Learning-based Approach to High Accuracy Breast Density Estimation in Digital Mammography. In Proceedings of the SPIE Medical Imaging 2017: Computer-Aided Diagnosis, Orlando, FL, USA, 3 March 2017; Volume 10134.

- Arevalo, J.; González, F.A.; Ramos-Pollán, R.; Oliveira, J.L.; Lopez, M.A.G. Representation learning for mammography mass lesion classification with convolutional neural networks. Comput. Methods Program. Biomed. 2016, 127, 248–257.

- Qiu, Y.; Wang, Y.; Yan, S.; Tan, M.; Cheng, S.; Liu, H.; Zheng, B. An initial investigation on developing a new method to predict short-term breast cancer risk based on deep learning technology. In Proceedings of the SPIE Medical Imaging 2016: Computer-Aided Diagnosis, San Diego, CA, USA, 24 March 2016; Volume 9785.

- Cheng, J.Z.; Ni, D.; Chou, Y.H.; Qin, J.; Tiu, C.M.; Chang, Y.C.; Huang, C.S.; Shen, D.; Chen, C.M. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci. Rep. 2016, 6, 24454.

- Jiao, Z.; Gao, X.; Wang, Y.; Li, J. A deep feature based framework for breast masses classification. Neurocomputing 2016, 197, 221–231.

- Rampun, A.; Morrow, P.J.; Scotney, B.W.; Winder, R.J. Fully Automated Breast Boundary and Pectoral Muscle Segmentation in Mammograms. Artif. Intell. Med. 2017, 79, 28–41.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Hadid, A.; Pietikainen, M.K.; Zhao, G.; Ahonen, T. Computer Vision Using Local Binary Patterns; Springer: London, UK, 2011; pp. 13–47.

- Tan, X.; Triggs, B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. In Analysis and Modelling of Faces and Gestures; Springer: Berlin/Heidelberg, Germany, 2007; pp. 168–182.

- Rampun, A.; Morrow, P.J.; Scotney, B.W.; Winder, J. A Quantitative Study of Local Ternary Patterns for Risk Assessment in Mammography. In Proceedings of the International Conference on Innovation in Medicine and Healthcare, Vilamoura, Portugal, 21–23 June 2017; pp. 283–286.

- Nanni, L.; Lumini, A.; Brahnam, S. Local binary patterns variants as texture descriptors for medical image analysis. Artif. Intell. Med. 2010, 49, 117–125.

- Guo, Y.; Zhao, G.; Pietikäinen, M. Discriminative features for texture description. Pattern Recognit. 2012, 45, 3834–3843.

- Rampun, A.; Wang, L.; Malcolm, P.; Zwiggelaar, R. A quantitative study of texture features across different window sizes in prostate T2-weighted MRI. Procedia Comput. Sci. 2016, 90, 74–79.

- Rampun, A.; Tiddeman, B.; Zwiggelaar, R.; Malcolm, P. Computer aided diagnosis of prostate cancer: A texton based approach. Med. Phys. 2016, 43, 5412–5425.

- Rampun, A.; Zheng, L.; Malcolm, P.; Tiddeman, B.; Zwiggelaar, R. Computer-aided detection of prostate cancer in T2-weighted MRI within the peripheral zone. Phys. Med. Biol. 2016, 61, 4796–4825.

- Rampun, A.; Morrow, P.J.; Scotney, B.W.; Winder, R.J. Breast density classification in mammograms using local ternary patterns. In Proceedings of the International Conference Image Analysis and Recognition ICIAR 2017: Image Analysis and Recognition, Montreal, QC, Canada, 5–7 July 2017; pp. 463–470.

- Aly, M. Survey on Multiclass Classification Methods. 2005. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.175.107 (accessed on 4 December 2017).

- Landgrebe, T.C.W.; Duin, R.P.W. Approximating the multiclass ROC by pairwise analysis. Pattern Recognit. Lett. 2007, 28, 1747–1758.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).