Unified Multi-Modal Object Tracking Through Spatial–Temporal Propagation and Modality Synergy

Abstract

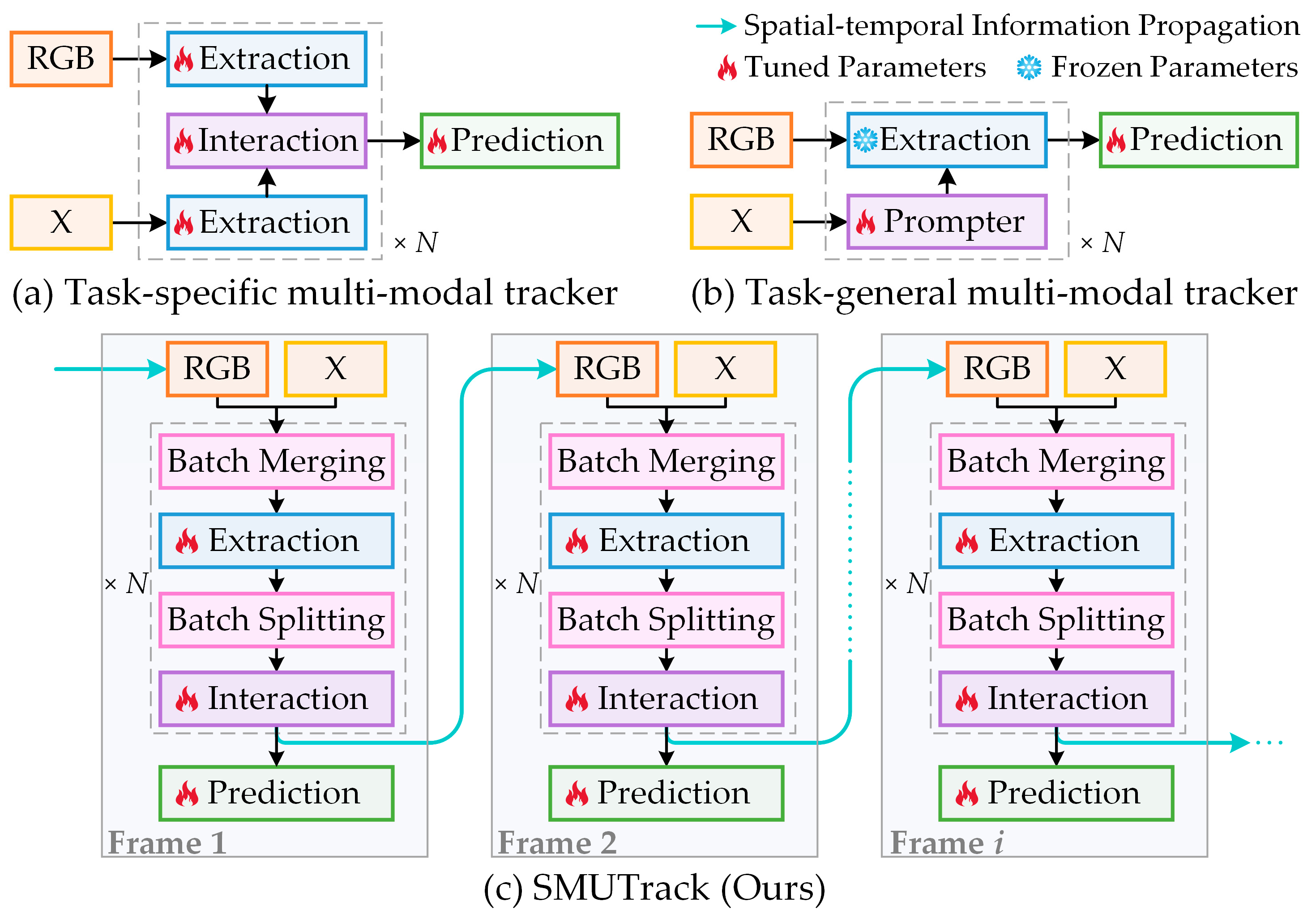

1. Introduction

2. Related Works

2.1. Multi-Modal Object Tracking

2.2. Spatial–Temporal Modeling

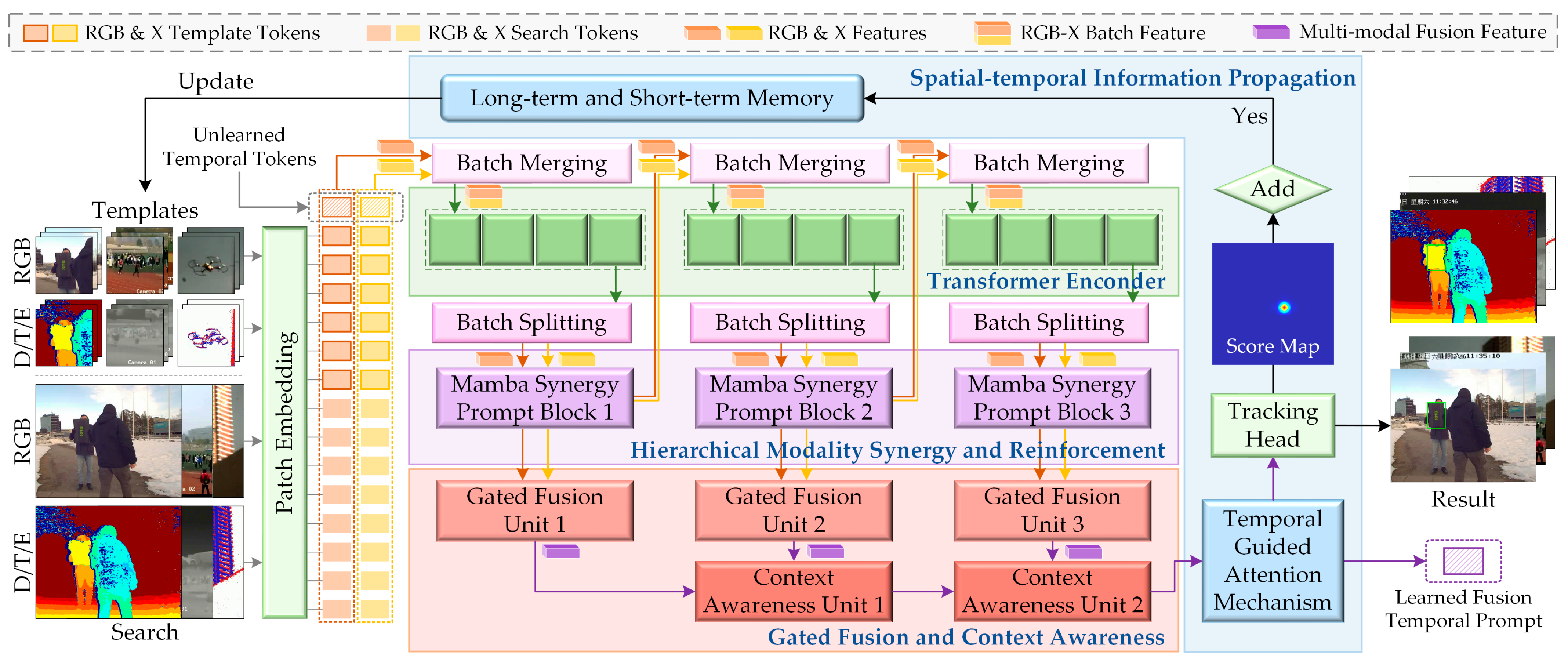

3. Methods

3.1. Feature Encoding

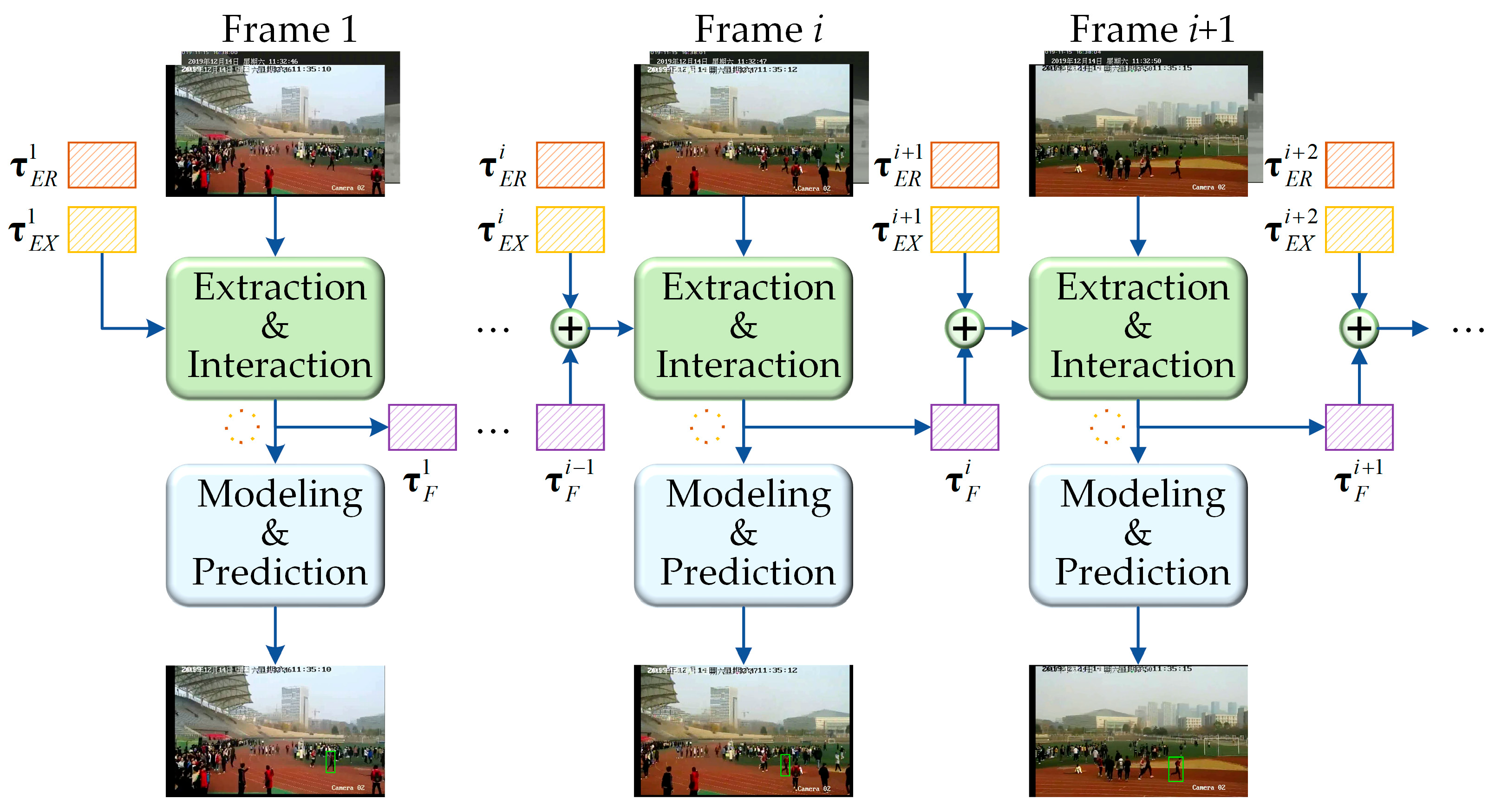

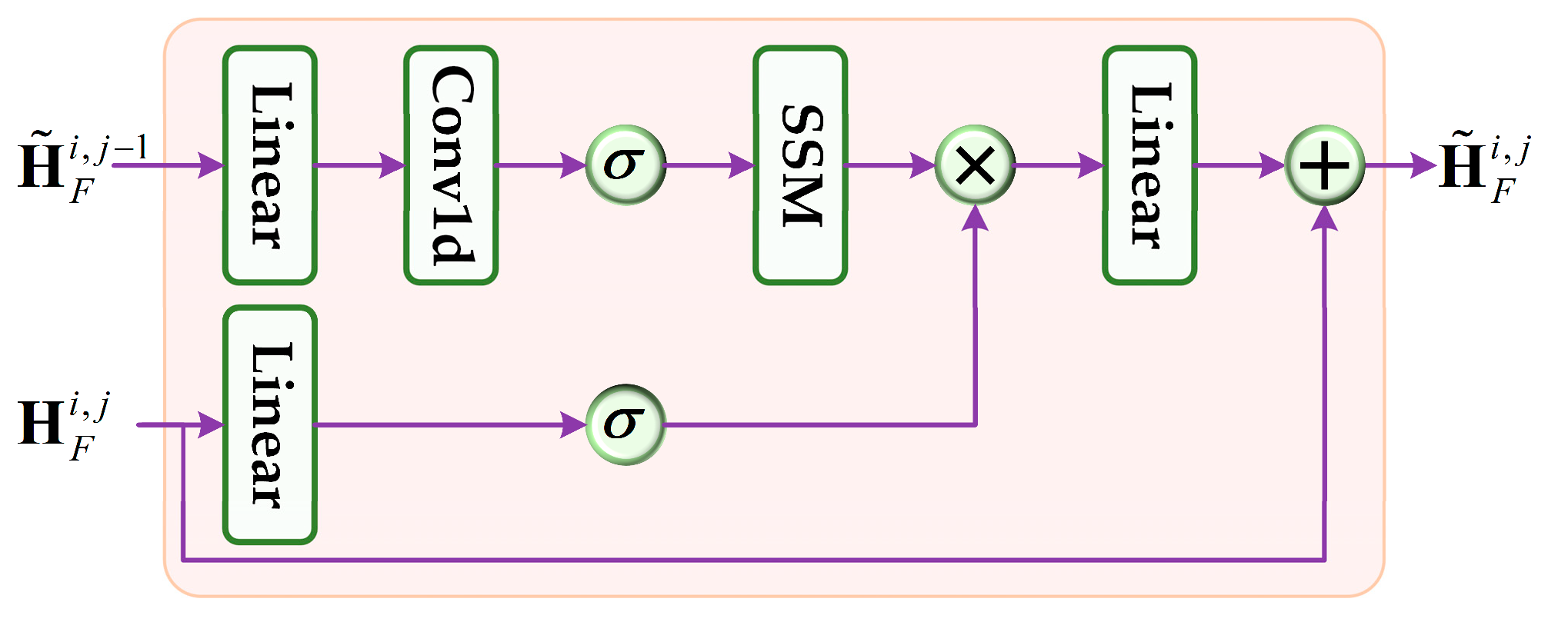

3.2. Spatial–Temporal Information Propagation

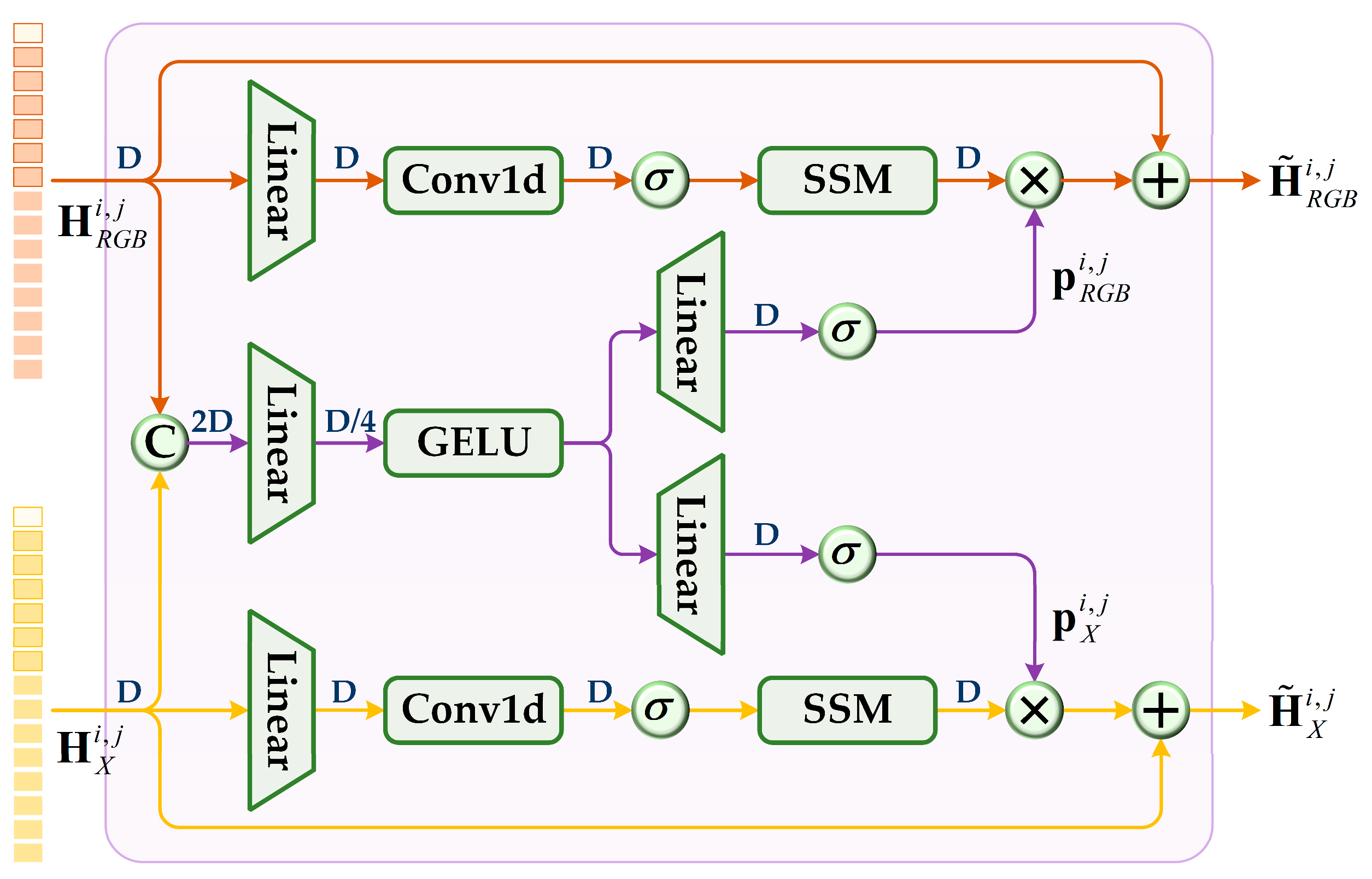

3.3. Hierarchical Modality Synergy and Reinforcement

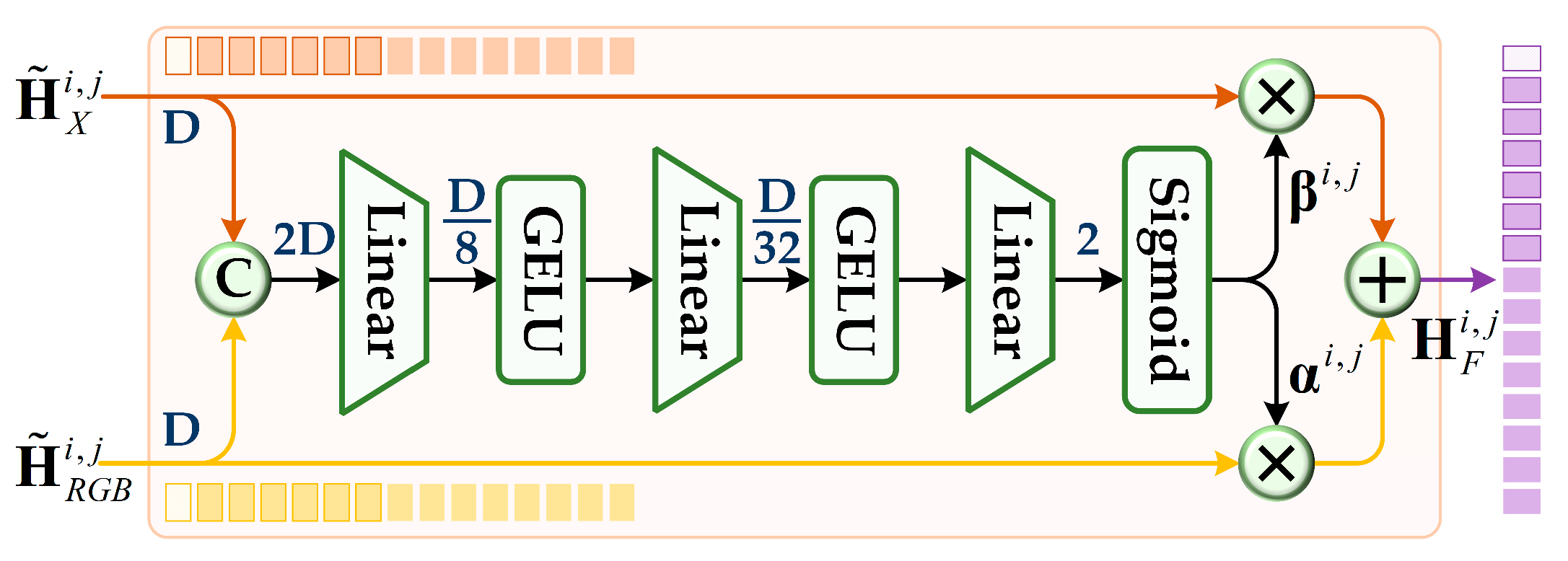

3.4. Gated Fusion and Context Awareness

4. Experiments and Results

4.1. Implementation Settings

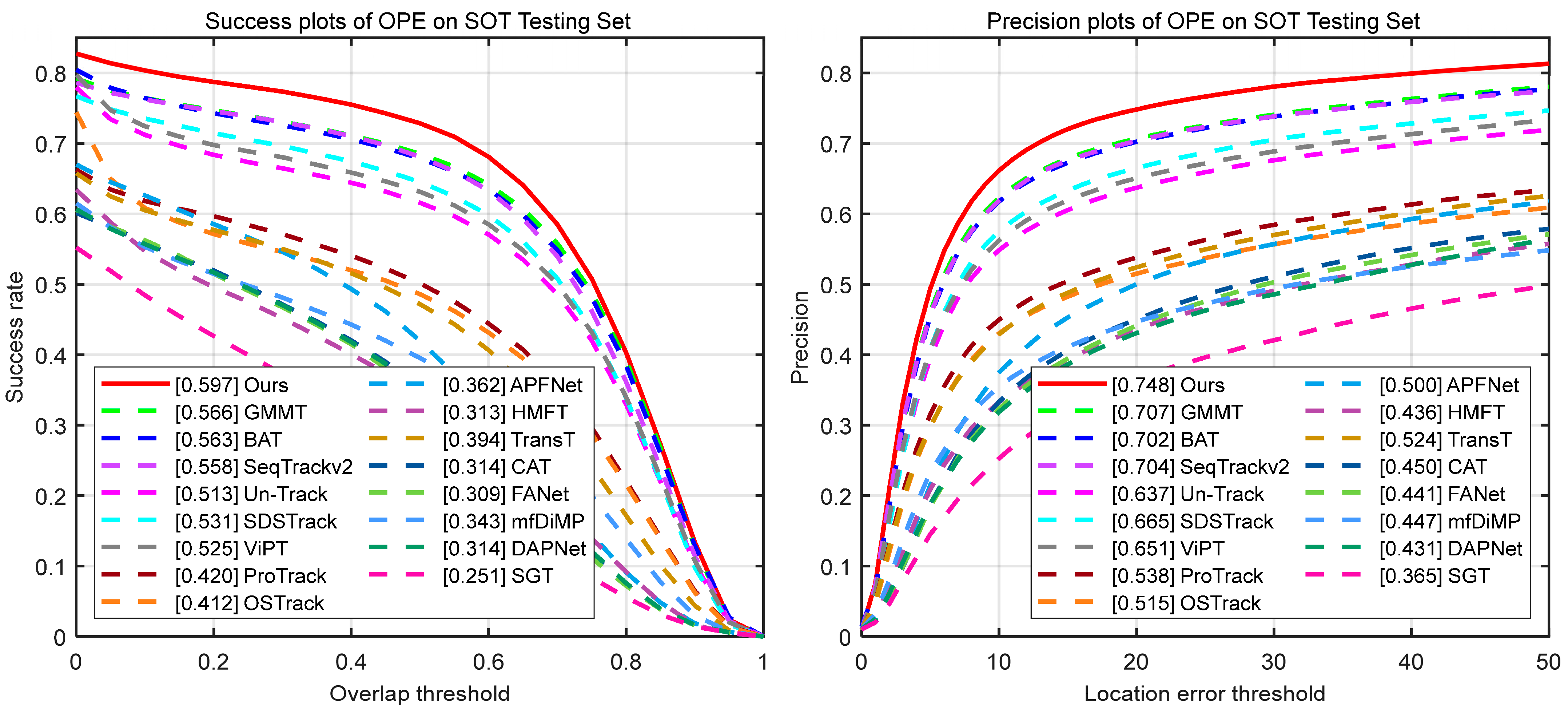

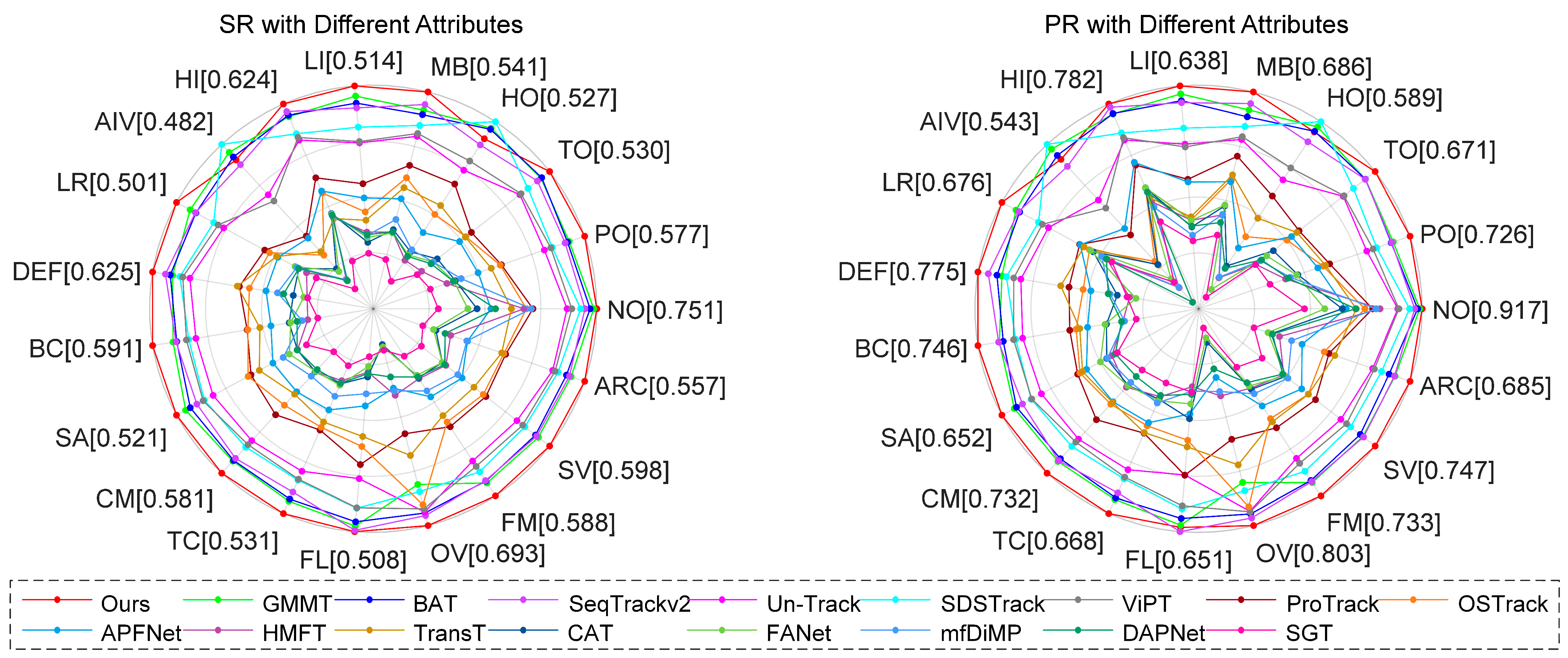

4.2. Comparison with State-of-the-Art Methods

4.2.1. Comparison on RGB-D Datasets

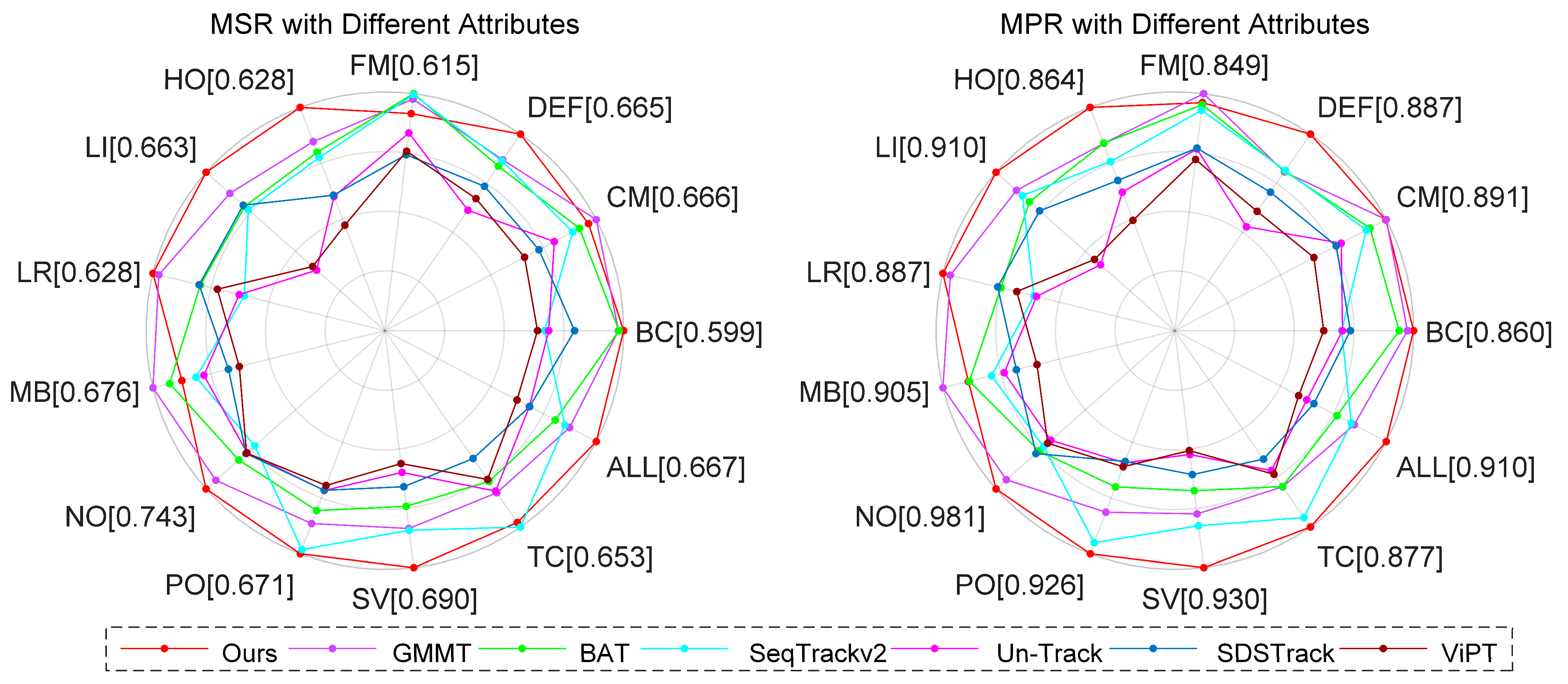

4.2.2. Comparison on RGB-T Datasets

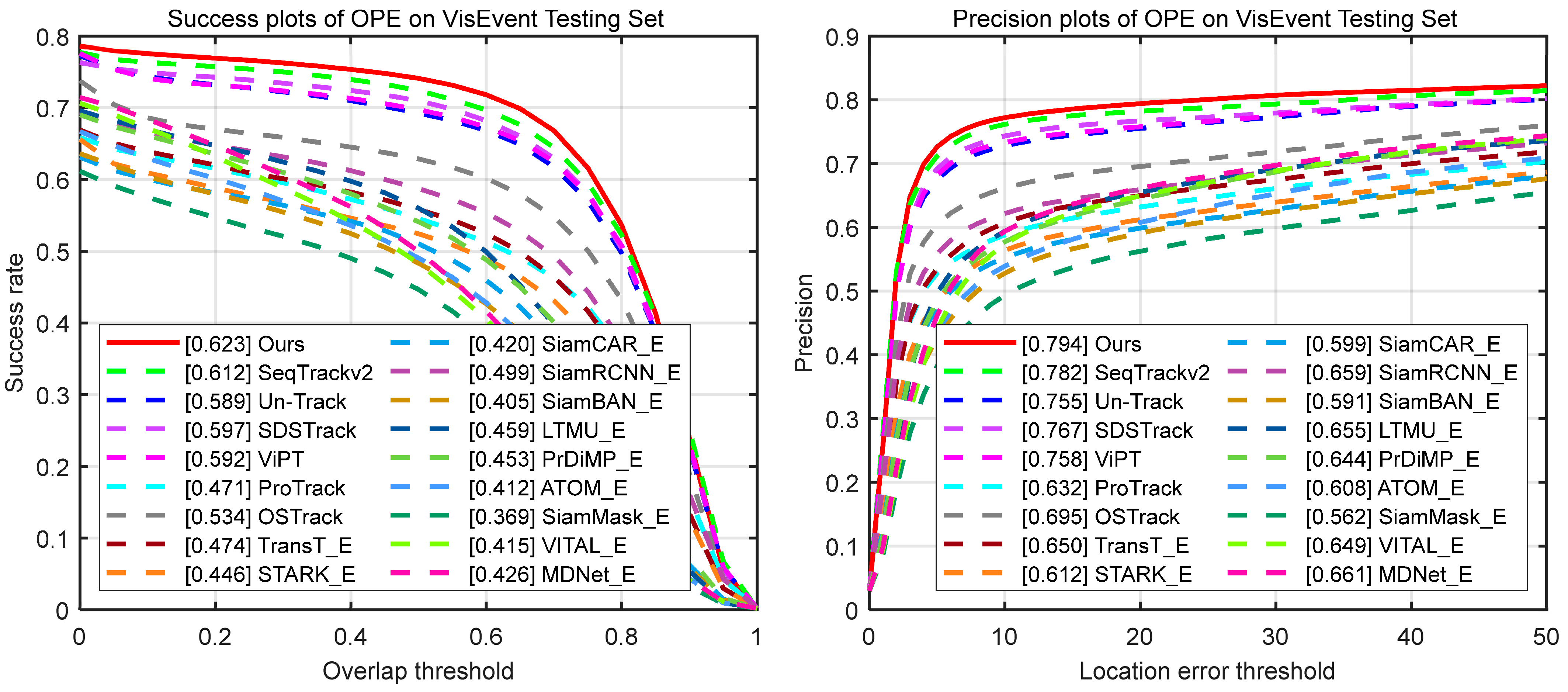

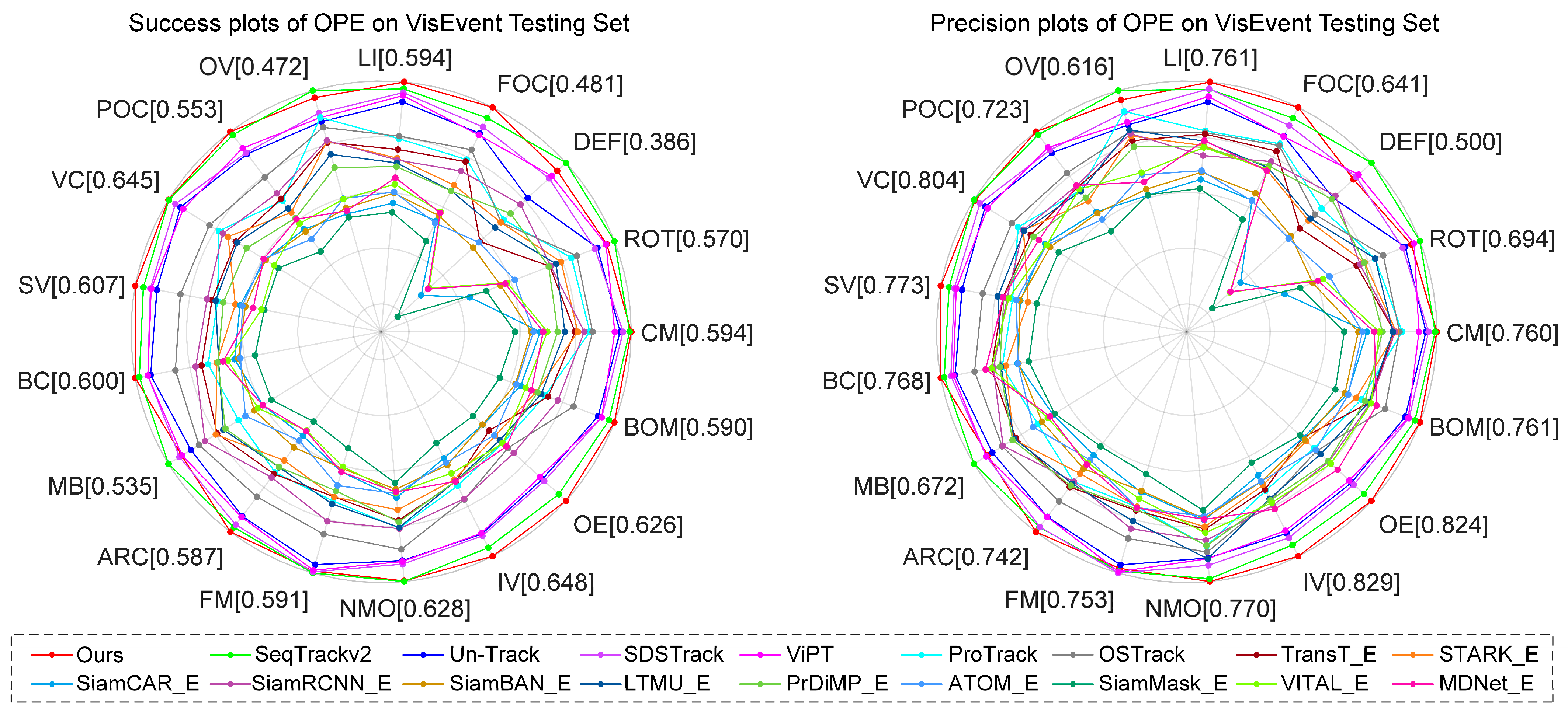

4.2.3. Comparison on RGB-E Datasets

4.3. Ablation Study

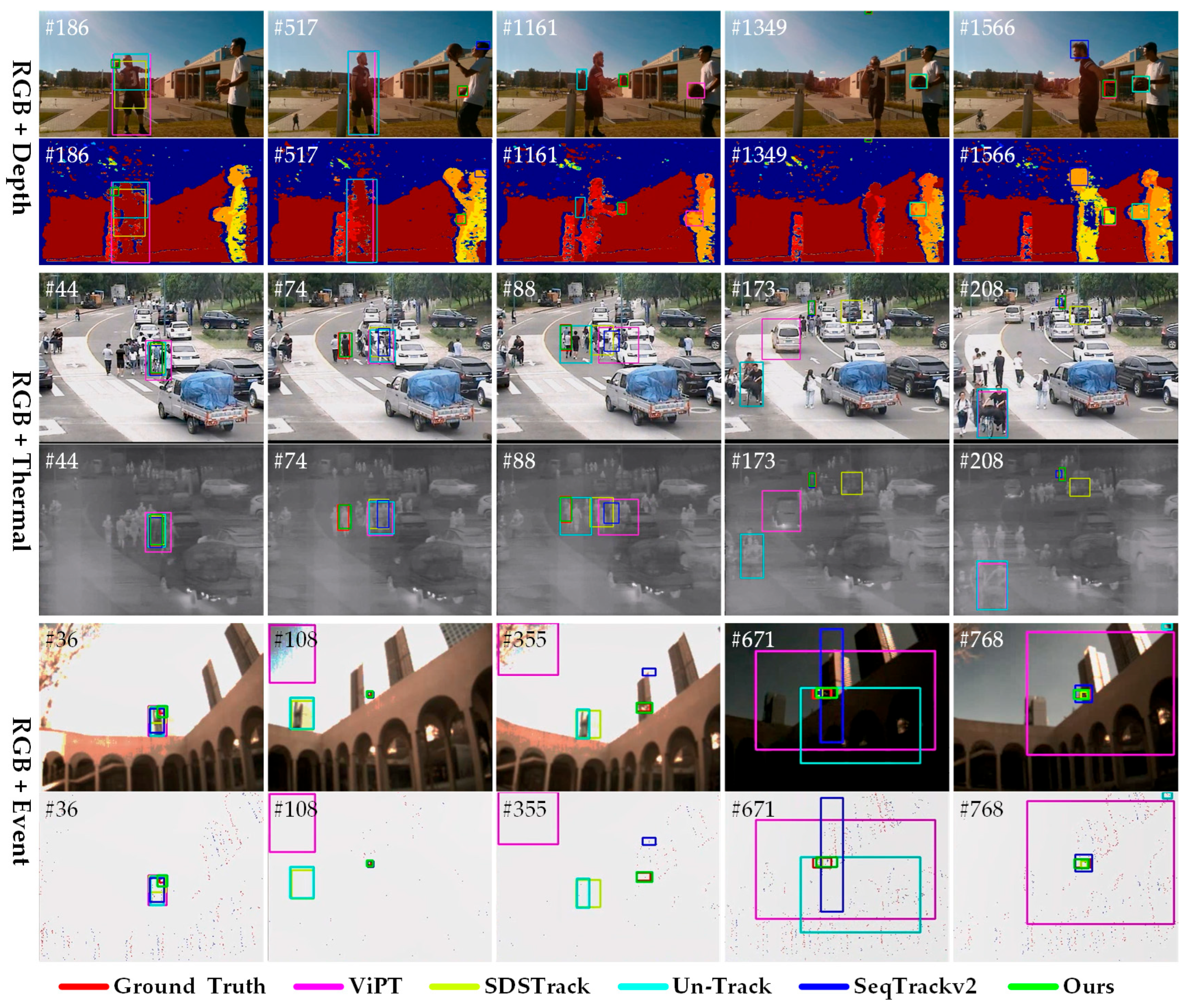

4.4. Visualization Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.

- Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 341–357.

- Wei, X.; Bai, Y.; Zheng, Y.; Shi, D.; Gong, Y. Autoregressive visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9697–9706.

- Wang, J.; Wu, Z.; Chen, D.; Luo, C.; Dai, X.; Yuan, L.; Jiang, Y.G. OmniTracker: Unifying Visual Object Tracking by Tracking-with-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 3159–3174.

- Zhou, J.; Dong, Y.; Du, B. SiamTITP: Incorporating Temporal Information and Trajectory Prediction Siamese Network for Satellite Video Object Tracking. IEEE Trans. Image Process. 2025, 34, 4120–4133.

- Lin, J.; Chen, J.; Peng, K.; He, X.; Li, Z.; Stiefelhagen, R.; Yang, K. EchoTrack: Auditory Referring Multi-Object Tracking for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2024, 25, 18964–18977.

- Cho, M.; Kim, E. 3D LiDAR Multi-Object Tracking with Short-Term and Long-Term Multi-Level Associations. Remote Sens. 2023, 15, 5486.

- Zhang, Q.; Wang, L.; Patel, V.M.; Xie, X.; Lai, J. View-decoupled transformer for person re-identification under aerial-ground camera network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 22000–22009.

- Meng, W.; Duan, S.; Ma, S.; Hu, B. Motion-Perception Multi-Object Tracking (MPMOT): Enhancing Multi-Object Tracking Performance via Motion-Aware Data Association and Trajectory Connection. J. Imaging 2025, 11, 144.

- Zhang, Y.; Wang, T.; Liu, K.; Zhang, B.; Chen, L. Recent advances of single-object tracking methods: A brief survey. Neurocomputing 2021, 455, 1–11.

- Black, D.; Salcudean, S. Robust object pose tracking for augmented reality guidance and teleoperation. IEEE Trans. Instrum. Meas. 2024, 73, 9509815.

- Tang, Z.; Xu, T.; Li, H.; Wu, X.-J.; Zhu, X.; Kittler, J. Exploring fusion strategies for accurate RGBT visual object tracking. Inf. Fusion 2023, 99, 101881.

- Gao, S.; Yang, J.; Li, Z.; Zheng, F.; Leonardis, A.; Song, J. Learning dual-fused modality-aware representations for RGBD tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 478–494.

- Zhu, X.-F.; Xu, T.; Tang, Z.; Wu, Z.; Liu, H.; Yang, X.; Wu, X.-J.; Kittler, J. RGBD1K: A large-scale dataset and benchmark for RGB-D object tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 3870–3878.

- Sun, C.; Zhang, J.; Wang, Y.; Ge, H.; Xia, Q.; Yin, B.; Yang, X. Exploring Historical Information for RGBE Visual Tracking with Mamba. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–17 June 2025; pp. 6500–6509.

- Gao, L.; Ke, Y.; Zhao, W.; Zhang, Y.; Jiang, Y.; He, G.; Li, Y. RGB-D visual object tracking with transformer-based multi-modal feature fusion. Knowl.-Based Syst. 2025, 322, 113531.

- Zhang, T.; He, X.; Jiao, Q.; Zhang, Q.; Han, J. AMNet: Learning to Align Multi-Modality for RGB-T Tracking. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7386–7400.

- Zhu, Z.; Hou, J.; Wu, D.O. Cross-modal orthogonal high-rank augmentation for rgb-event transformer-trackers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 22045–22055.

- Yuan, D.; Zhang, H.; Shu, X.; Liu, Q.; Chang, X.; He, Z.; Shi, G. Thermal Infrared Target Tracking: A Comprehensive Review. IEEE Trans. Instrum. Meas. 2024, 73, 5000419.

- Xue, Y.; Zhang, J.; Lin, Z.; Li, C.; Huo, B.; Zhang, Y. SiamCAF: Complementary Attention Fusion-Based Siamese Network for RGBT Tracking. Remote Sens. 2023, 15, 3252.

- Yang, J.; Gao, S.; Li, Z.; Zheng, F.; Leonardis, A. Resource-efficient rgbd aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13374–13383.

- Hu, X.; Zhong, B.; Liang, Q.; Zhang, S.; Li, N.; Li, X. Toward Modalities Correlation for RGB-T Tracking. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9102–9111.

- Wang, X.; Li, J.; Zhu, L.; Zhang, Z.; Chen, Z.; Li, X.; Wang, Y.; Tian, Y.; Wu, F. Visevent: Reliable object tracking via collaboration of frame and event flows. IEEE Trans. Cybern. 2023, 54, 1997–2010.

- Cao, B.; Guo, J.; Zhu, P.; Hu, Q. Bi-directional adapter for multimodal tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 927–935.

- Zhu, J.; Lai, S.; Chen, X.; Wang, D.; Lu, H. Visual prompt multi-modal tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9516–9526.

- Hou, X.; Xing, J.; Qian, Y.; Guo, Y.; Xin, S.; Chen, J.; Tang, K.; Wang, M.; Jiang, Z.; Liu, L. Sdstrack: Self-distillation symmetric adapter learning for multi-modal visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 26551–26561.

- Ye, P.; Xiao, G.; Liu, J. AMATrack: A Unified Network with Asymmetric Multimodal Mixed Attention for RGBD Tracking. IEEE Trans. Instrum. Meas. 2024, 73, 2526011.

- Tang, Z.; Xu, T.; Wu, X.; Zhu, X.-F.; Kittler, J. Generative-based fusion mechanism for multi-modal tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 5189–5197.

- Wu, Z.; Zheng, J.; Ren, X.; Vasluianu, F.-A.; Ma, C.; Paudel, D.P.; Van Gool, L.; Timofte, R. Single-model and any-modality for video object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19156–19166.

- Chen, X.; Peng, H.; Wang, D.; Lu, H.; Hu, H. Seqtrack: Sequence to sequence learning for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14572–14581.

- Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10448–10457.

- Zhang, M.; Zhang, Q.; Song, W.; Huang, D.; He, Q. PromptVT: Prompting for Efficient and Accurate Visual Tracking. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7373–7385.

- Ying, G.; Zhang, D.; Ou, Z.; Wang, X.; Zheng, Z. Temporal adaptive bidirectional bridging for RGB-D tracking. Pattern Recognit. 2025, 158, 111053.

- Xie, J.; Zhong, B.; Mo, Z.; Zhang, S.; Shi, L.; Song, S.; Ji, R. Autoregressive Queries for Adaptive Tracking with Spatio-Temporal Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19300–19309.

- Cao, Z.; Huang, Z.; Pan, L.; Zhang, S.; Liu, Z.; Fu, C. Towards real-world visual tracking with temporal contexts. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 15834–15849.

- Cao, Z.; Huang, Z.; Pan, L.; Zhang, S.; Liu, Z.; Fu, C. TCTrack: Temporal contexts for aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14798–14808.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

- Yan, S.; Yang, J.; Käpylä, J.; Zheng, F.; Leonardis, A.; Kämäräinen, J.-K. Depthtrack: Unveiling the power of rgbd tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10725–10733.

- Li, C.; Xue, W.; Jia, Y.; Qu, Z.; Luo, B.; Tang, J.; Sun, D. LasHeR: A large-scale high-diversity benchmark for RGBT tracking. IEEE Trans. Image Process. 2021, 31, 392–404.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Hong, L.; Yan, S.; Zhang, R.; Li, W.; Zhou, X.; Guo, P.; Jiang, K.; Chen, Y.; Li, J.; Chen, Z. Onetracker: Unifying visual object tracking with foundation models and efficient tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19079–19091.

- Yang, J.; Li, Z.; Zheng, F.; Leonardis, A.; Song, J. Prompting for multi-modal tracking. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 3492–3500.

- Xie, F.; Wang, C.; Wang, G.; Cao, Y.; Yang, W.; Zeng, W. Correlation-aware deep tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8751–8760.

- Mayer, C.; Danelljan, M.; Paudel, D.P.; Van Gool, L. Learning target candidate association to keep track of what not to track. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13444–13454.

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kämäräinen, J.-K.; Chang, H.J.; Danelljan, M.; Cehovin, L.; Lukežič, A. The ninth visual object tracking vot2021 challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2711–2738.

- Qian, Y.; Yan, S.; Lukežič, A.; Kristan, M.; Kämäräinen, J.-K.; Matas, J. DAL: A deep depth-aware long-term tracker. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7825–7832.

- Zhao, P.; Liu, Q.; Wang, W.; Guo, Q. Tsdm: Tracking by siamrpn++ with a depth-refiner and a mask-generator. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 670–676.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Kämäräinen, J.-K.; Danelljan, M.; Zajc, L.Č.; Lukežič, A.; Drbohlav, O. The eighth visual object tracking VOT2020 challenge results. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Proceedings, Part V. pp. 547–601.

- Dai, K.; Zhang, Y.; Wang, D.; Li, J.; Lu, H.; Yang, X. High-performance long-term tracking with meta-updater. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6298–6307.

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kämäräinen, J.-K.; Čehovin Zajc, L.; Drbohlav, O.; Lukežič, A.; Berg, A. The seventh visual object tracking VOT2019 challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2206–2241.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. Atom: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4660–4669.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Kämäräinen, J.-K.; Chang, H.J.; Danelljan, M.; Zajc, L.Č.; Lukežič, A. The tenth visual object tracking vot2022 challenge results. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 431–460.

- Wang, H.; Liu, X.; Li, Y.; Sun, M.; Yuan, D.; Liu, J. Temporal adaptive rgbt tracking with modality prompt. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 5436–5444.

- Hui, T.; Xun, Z.; Peng, F.; Huang, J.; Wei, X.; Wei, X.; Dai, J.; Han, J.; Liu, S. Bridging search region interaction with template for rgb-t tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13630–13639.

- Xiao, Y.; Yang, M.; Li, C.; Liu, L.; Tang, J. Attribute-based progressive fusion network for rgbt tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 22 February–1 March 2022; pp. 2831–2838.

- Zhang, P.; Zhao, J.; Wang, D.; Lu, H.; Ruan, X. Visible-thermal UAV tracking: A large-scale benchmark and new baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8886–8895.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

- Wang, C.; Xu, C.; Cui, Z.; Zhou, L.; Zhang, T.; Zhang, X.; Yang, J. Cross-modal pattern-propagation for RGB-T tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7064–7073.

- Li, C.; Liu, L.; Lu, A.; Ji, Q.; Tang, J. Challenge-aware RGBT tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 222–237.

- Zhu, Y.; Li, C.; Tang, J.; Luo, B. Quality-aware feature aggregation network for robust RGBT tracking. IEEE Trans. Intell. Veh. 2020, 6, 121–130.

- Zhang, L.; Danelljan, M.; Gonzalez-Garcia, A.; Van De Weijer, J.; Shahbaz Khan, F. Multi-modal fusion for end-to-end RGB-T tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2252–2261.

- Zhu, Y.; Li, C.; Luo, B.; Tang, J.; Wang, X. Dense feature aggregation and pruning for RGBT tracking. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 465–472.

- Li, C.; Zhao, N.; Lu, Y.; Zhu, C.; Tang, J. Weighted sparse representation regularized graph learning for RGB-T object tracking. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1856–1864.

- Li, C.; Liang, X.; Lu, Y.; Zhao, N.; Tang, J. RGB-T Object Tracking: Benchmark and Baseline. Pattern Recognit. 2019, 96, 106977.

- Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277.

- Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam r-cnn: Visual tracking by re-detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6578–6588.

- Danelljan, M.; Gool, L.V.; Timofte, R. Probabilistic regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7183–7192.

- Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677.

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

- Song, Y.; Ma, C.; Wu, X.; Gong, L.; Bao, L.; Zuo, W.; Shen, C.; Lau, R.W.; Yang, M.-H. Vital: Visual tracking via adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8990–8999.

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

| Category | Related Works | Data Types | Descriptions |
|---|---|---|---|
| Task-specific | AMATrack [27] | RGB-D | Designed for a specific RGB-X task, they may excel at one type of task but fail to consider potential modality relationships across tasks. |
| | AMNet [17] | RGB-T | |
| | GMMT [28] | RGB-T | |
| | RT-MDNet [23] | RGB-E | |
| Task-general | ViPT [25] | RGB-D, RGB-T, RGB-E | Only a few prompts are needed for learning, but modality synergy and generalization capabilities still need improvement. |
| | SDSTrack [26] | | |
| | Un-Track [29] | | |

| Category | Related Works | Descriptions |
|---|---|---|
| Updating appearance representations | SeqTrack [30] | Online template update strategies are typically employed to capture real-time appearance changes, yet sparse template updates struggle to establish stable spatial–temporal correlations. |
| | STARK [31] | |
| | PromptVT [32] | |
| | TABBTrack [33] | |
| Modeling temporal dependencies | ARTrack [3] | Learning a global temporal representation across video sequences enables effective perception of trajectory evolution processes. |
| | AQATrack [34] | |
| | TCTrack [36] | |

| Method | Source | VOT-RGBD22 EAO | VOT-RGBD22 Acc. | VOT-RGBD22 Rob. | DepthTrack F-Score | DepthTrack Re | DepthTrack Pr |
|---|---|---|---|---|---|---|---|
| SMUTrack | Ours | 76.9 | 82.0 | 93.0 | 63.9 | 63.8 | 64.0 |
| SeqTrackv2 [30] | CVPR’24 | 74.4 | 81.5 | 91.0 | 63.2 | 63.4 | 62.9 |
| OneTracker [42] | CVPR’24 | 72.7 | 81.9 | 87.2 | 60.9 | 60.4 | 60.7 |
| Un-Track [29] | CVPR’24 | 71.8 | 82.0 | 86.4 | 61.0 | 61.0 | 61.0 |
| SDSTrack [26] | CVPR’24 | 72.8 | 81.2 | 88.3 | 61.4 | 60.9 | 61.9 |
| SPT [14] | AAAI’23 | 65.1 | 79.8 | 85.1 | 53.8 | 54.9 | 52.7 |
| ViPT [25] | CVPR’23 | 72.1 | 81.5 | 87.1 | 59.4 | 59.6 | 59.2 |
| ProTrack [43] | ACMMM’22 | 65.1 | 80.1 | 80.2 | 57.8 | 57.3 | 58.3 |
| OSTrack [2] | ECCV’22 | 67.6 | 80.3 | 83.3 | 52.9 | 52.2 | 53.6 |
| SBT-RGBD [44] | CVPR’22 | 70.8 | 80.9 | 86.4 | - | - | - |
| DMTracker [13] | ECCV’22 | 65.8 | 75.8 | 85.1 | - | - | - |
| DeT [39] | ICCV’21 | 65.7 | 76.0 | 84.5 | 53.2 | 50.6 | 56.0 |
| STARK-RGBD [31] | ICCV’21 | 64.7 | 80.3 | 79.8 | - | - | - |
| KeepTrack [45] | ICCV’21 | 60.6 | 75.3 | 79.7 | - | - | - |
| DRefine [46] | ICCV’21 | 59.2 | 77.5 | 76.0 | - | - | - |
| DAL [47] | ICPR’21 | - | - | - | 42.9 | 36.9 | 51.2 |
| TSDM [48] | ICPR’21 | - | - | - | 38.4 | 37.6 | 39.3 |
| DDiMP [49] | ECCV’20 | - | - | - | 48.5 | 46.9 | 50.3 |
| ATCAIS [49] | ECCV’20 | 55.9 | 76.1 | 73.9 | 47.6 | 45.5 | 50.0 |
| LTMU-B [50] | CVPR’20 | - | - | - | 46.0 | 41.7 | 51.2 |
| GLGS-D [49] | ECCV’20 | - | - | - | 45.3 | 36.9 | 58.4 |
| Siam-LTD [49] | ECCV’20 | - | - | - | 37.6 | 34.2 | 41.8 |
| LTDSEd [51] | ICCVW’19 | - | - | - | 40.5 | 38.2 | 43.0 |
| SiamM-Ds [51] | ICCVW’19 | - | - | - | 33.6 | 26.4 | 46.3 |
| DiMP [1] | ICCV’19 | 54.3 | 70.3 | 73.1 | - | - | - |
| ATOM [52] | CVPR’19 | 50.5 | 69.8 | 68.8 | - | - | - |
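
For the DepthTrack columns, the F-Score is conventionally the harmonic mean of tracking precision (Pr) and recall (Re). The sketch below is a minimal illustration of that relationship, not the benchmark's evaluation toolkit; the function name `f_score` is hypothetical. Reported values are rounded, and those of other trackers are copied from their own papers, so not every row necessarily reproduces exactly.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# SMUTrack row of the DepthTrack columns: Pr = 64.0, Re = 63.8
print(round(f_score(64.0, 63.8), 1))  # 63.9
```

Applied to the SMUTrack row, the harmonic mean of 64.0 and 63.8 rounds to the reported F-Score of 63.9.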

| Method | Source | LasHeR SR | LasHeR PR | LasHeR NPR | RGBT234 MSR | RGBT234 MPR |
|---|---|---|---|---|---|---|
| SMUTrack | Ours | 59.7 | 74.8 | 71.3 | 66.7 | 91.0 |
| GMMT [28] | AAAI’24 | 56.6 | 70.7 | 67.0 | 65.0 | 88.3 |
| BAT [24] | AAAI’24 | 56.3 | 70.2 | 66.4 | 64.1 | 86.8 |
| TATrack [54] | AAAI’24 | 56.1 | 70.2 | 66.7 | 64.4 | 87.2 |
| SeqTrackv2 [30] | CVPR’24 | 55.8 | 70.4 | 67.2 | 64.7 | 88.0 |
| OneTracker [42] | CVPR’24 | 53.8 | 67.2 | - | 64.2 | 85.7 |
| Un-Track [29] | CVPR’24 | 51.3 | 63.7 | 60.1 | 62.5 | 84.2 |
| SDSTrack [26] | CVPR’24 | 53.1 | 66.5 | 62.7 | 62.5 | 84.8 |
| TBSI [55] | CVPR’23 | 55.6 | 69.2 | 65.7 | 63.7 | 87.1 |
| ViPT [25] | CVPR’23 | 52.5 | 65.1 | 61.7 | 61.7 | 83.5 |
| ProTrack [43] | ACMMM’22 | 42.0 | 53.8 | 49.8 | 59.9 | 79.5 |
| OSTrack [2] | ECCV’22 | 41.2 | 51.5 | 48.2 | 54.9 | 72.9 |
| APFNet [56] | AAAI’22 | 36.2 | 50.0 | 43.9 | 57.9 | 82.7 |
| HMFT [57] | CVPR’22 | 31.3 | 43.6 | 38.1 | - | - |
| TransT [58] | CVPR’21 | 39.4 | 52.4 | 48.0 | - | - |
| CMPP [59] | CVPR’20 | - | - | - | 57.5 | 82.3 |
| CAT [60] | ECCV’20 | 31.4 | 45.0 | 39.5 | 56.1 | 80.4 |
| FANet [61] | TIV’20 | 30.9 | 44.1 | 38.4 | 55.3 | 78.7 |
| mfDiMP [62] | ICCVW’19 | 34.3 | 44.7 | 39.5 | 42.8 | 64.6 |
| DAPNet [63] | ACMMM’19 | 31.4 | 43.1 | 38.3 | - | - |
| SGT [64] | ACMMM’17 | 25.1 | 36.5 | 30.6 | 47.2 | 72.0 |

| Method | Source | VisEvent SR | VisEvent PR | VisEvent NPR |
|---|---|---|---|---|
| SMUTrack | Ours | 62.3 | 79.4 | 75.4 |
| SeqTrackv2 [30] | CVPR’24 | 61.2 | 78.2 | 73.9 |
| OneTracker [42] | CVPR’24 | 60.8 | 76.7 | - |
| Un-Track [29] | CVPR’24 | 58.9 | 75.5 | 71.0 |
| SDSTrack [26] | CVPR’24 | 59.7 | 76.7 | 72.3 |
| ViPT [25] | CVPR’23 | 59.2 | 75.8 | 71.5 |
| ProTrack [43] | ACMMM’22 | 47.1 | 63.2 | 56.0 |
| OSTrack [2] | ECCV’22 | 53.4 | 69.5 | 64.6 |
| TransT_E [58] | CVPR’21 | 47.4 | 65.0 | 58.3 |
| STARK_E [31] | ICCV’21 | 44.6 | 61.2 | 53.7 |
| SiamCAR_E [66] | CVPR’20 | 42.0 | 59.9 | 52.8 |
| SiamRCNN_E [67] | CVPR’20 | 49.9 | 65.9 | 60.6 |
| LTMU_E [50] | CVPR’20 | 45.9 | 65.5 | 57.0 |
| PrDiMP_E [68] | CVPR’20 | 45.3 | 64.4 | 55.5 |
| SiamBAN_E [69] | CVPR’20 | 40.5 | 59.1 | 50.7 |
| ATOM_E [52] | CVPR’19 | 41.2 | 60.8 | 50.8 |
| SiamMask_E [70] | CVPR’19 | 36.9 | 56.2 | 47.1 |
| VITAL_E [71] | CVPR’18 | 41.5 | 64.9 | 52.9 |
| MDNet_E [72] | CVPR’16 | 42.6 | 66.1 | 55.7 |

| Baseline | SIP | HMSR | GFCA | DepthTrack F-Score | DepthTrack Re | DepthTrack Pr | LasHeR SR | LasHeR PR | LasHeR NPR | VisEvent SR | VisEvent PR | VisEvent NPR | Δ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| √ | | | | 58.3 | 58.7 | 58.0 | 53.8 | 67.0 | 63.5 | 61.1 | 77.9 | 74.1 | - |
| √ | √ | | | 63.2 | 63.4 | 63.0 | 57.5 | 72.4 | 68.8 | 61.2 | 78.1 | 74.3 | 3.3 |
| √ | √ | √ | | 64.3 | 64.3 | 64.4 | 59.5 | 74.6 | 71.0 | 61.9 | 78.3 | 74.9 | 1.3 |
| √ | √ | √ | √ | 63.9 | 63.8 | 64.0 | 59.7 | 74.8 | 71.3 | 62.3 | 79.4 | 75.4 | 0.2 |

| Number | GFU | CAU | DepthTrack F-Score | DepthTrack Re | DepthTrack Pr | LasHeR SR | LasHeR PR | LasHeR NPR | VisEvent SR | VisEvent PR | VisEvent NPR | Δ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | × | × | 64.3 | 64.3 | 64.4 | 59.5 | 74.6 | 71.0 | 61.9 | 78.3 | 74.9 | - |
| 2 | √ | × | 61.9 | 62.0 | 61.9 | 59.5 | 74.8 | 71.2 | 62.2 | 79.4 | 75.3 | −0.6 |
| 3 | × | √ | 62.6 | 62.2 | 62.9 | 59.2 | 74.5 | 70.6 | 61.2 | 78.0 | 74.2 | −0.9 |
| 4 | √ | √ | 63.9 | 63.8 | 64.0 | 59.7 | 74.8 | 71.3 | 62.3 | 79.4 | 75.4 | 0.2 |
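
The Δ column of the two ablation tables is not defined in the surviving text. The values are, however, consistent with the mean change over the nine reported metrics (three each for DepthTrack, LasHeR, and VisEvent). In the component table the change appears to be measured against the preceding row, and in the GFU/CAU table against configuration 1. The sketch below checks that reading. It is an inferred interpretation, not the authors' stated definition, and the helper name `mean_delta` and the row labels are hypothetical.

```python
from statistics import mean

# Metric order in every row: DepthTrack (F-Score, Re, Pr),
# LasHeR (SR, PR, NPR), VisEvent (SR, PR, NPR).
component_rows = [
    ("Baseline", [58.3, 58.7, 58.0, 53.8, 67.0, 63.5, 61.1, 77.9, 74.1]),
    ("+SIP", [63.2, 63.4, 63.0, 57.5, 72.4, 68.8, 61.2, 78.1, 74.3]),
    ("+HMSR", [64.3, 64.3, 64.4, 59.5, 74.6, 71.0, 61.9, 78.3, 74.9]),
    ("+GFCA", [63.9, 63.8, 64.0, 59.7, 74.8, 71.3, 62.3, 79.4, 75.4]),
]

gfca_rows = [
    ("1: no GFU, no CAU", [64.3, 64.3, 64.4, 59.5, 74.6, 71.0, 61.9, 78.3, 74.9]),
    ("2: GFU only", [61.9, 62.0, 61.9, 59.5, 74.8, 71.2, 62.2, 79.4, 75.3]),
    ("3: CAU only", [62.6, 62.2, 62.9, 59.2, 74.5, 70.6, 61.2, 78.0, 74.2]),
    ("4: GFU + CAU", [63.9, 63.8, 64.0, 59.7, 74.8, 71.3, 62.3, 79.4, 75.4]),
]


def mean_delta(row, reference):
    """Mean per-metric change of `row` relative to `reference`, to one decimal."""
    return round(mean(a - b for a, b in zip(row, reference)), 1)


# Component ablation: each row compared with the row directly above it.
for (_, prev), (name, cur) in zip(component_rows, component_rows[1:]):
    print(name, mean_delta(cur, prev))

# GFU/CAU ablation: each row compared with configuration 1.
reference = gfca_rows[0][1]
for name, cur in gfca_rows[1:]:
    print(name, mean_delta(cur, reference))
```

Under this reading the script reproduces the tabulated Δ values (3.3, 1.3, 0.2 and −0.6, −0.9, 0.2). It also makes visible that the final gain from GFCA comes mainly from LasHeR and VisEvent, while the DepthTrack metrics drop slightly.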

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, J.; Zuo, H.; Wei, Y.; Li, M.; Zhang, J. Unified Multi-Modal Object Tracking Through Spatial–Temporal Propagation and Modality Synergy. J. Imaging 2025, 11, 421. https://doi.org/10.3390/jimaging11120421
