Abstract

In resource-constrained edge agricultural environments, accurate recognition of toxic weeds poses two challenges: keeping the model lightweight and generalizing from few samples. To address these challenges, a multi-strategy recognition framework is proposed, which integrates a lightweight backbone network, a pseudo-labeling guidance mechanism, and a contrastive boundary enhancement module. This approach is designed to improve deployment efficiency on low-power devices while ensuring high accuracy in identifying rare toxic weed categories. The proposed model achieves a real-time inference speed of 18.9 FPS on the Jetson Nano platform, with a compact model size of 18.6 MB and power consumption maintained below 5.1 W, demonstrating its efficiency for edge deployment. In standard classification tasks, the model attains 89.64%, 87.91%, 88.76%, and 88.43% in terms of precision, recall, F1-score, and accuracy, respectively, outperforming existing mainstream lightweight models such as ResNet18, MobileNetV2, and MobileViT across all evaluation metrics. In few-shot classification tasks targeting rare toxic weed species, the complete model achieves an accuracy of 80.32%, marking an average improvement of over 13 percentage points compared to ablation variants that exclude pseudo-labeling and self-supervised modules or adopt a CNN-only architecture. The experimental results indicate that the proposed model not only delivers strong overall classification performance but also exhibits superior adaptability for deployment and robustness in low-data regimes, offering an effective solution for the precise identification and ecological control of toxic weeds within intelligent agricultural perception systems.

1. Introduction

With the advancement of precision agriculture, the automatic identification and precise elimination of farmland weeds have become critical components in ensuring crop yields, reducing pesticide usage, and enabling intelligent agricultural management [1]. In particular, toxic or highly invasive weeds—collectively referred to as “toxic weeds”—pose severe threats due to their rapid reproduction, aggressive competition with crops, and potential toxicity to humans and animals [2]. Therefore, accurate and efficient recognition of toxic weeds holds significant importance in agricultural production. Especially in complex field environments, achieving precise detection of toxic weeds is vital to provide reliable decision-making support for agricultural robots and UAV-based spraying systems [3].

Conventional toxic weed identification methods primarily rely on manual visual inspection and handcrafted feature extraction techniques [4]. These approaches often utilize low-level image features such as texture, color, edges, or shape, combined with classifiers like support vector machines (SVMs), k-nearest neighbors (KNNs), or decision trees [5]. While these methods may yield acceptable performance in controlled environments, their application in real-world agricultural fields faces two major challenges: First, variations in lighting, weed occlusion, background interference, and camera angles significantly degrade image quality, hindering the stable extraction of traditional features [6]. Second, manually defined features lack high-level semantic representation, making it difficult to handle the complexity introduced by diverse toxic weed species, morphological similarity, and imbalanced class distributions [7]. As a result, traditional approaches generally suffer from low recognition accuracy and poor generalization, failing to meet the requirements of modern automatic agricultural recognition systems.

In recent years, deep learning-based approaches have emerged as mainstream solutions for farmland image recognition, driven by rapid advancements in computer vision technologies [8]. In particular, convolutional neural networks (CNNs) have demonstrated superior performance in tasks such as plant disease identification, crop classification, and agricultural object detection due to their powerful feature extraction capabilities [9]. In the context of toxic weed recognition, popular CNN architectures such as the YOLO family, ResNet, and DenseNet have been employed for in-field image detection and classification, achieving improvements in accuracy and robustness to some extent [10]. However, these deep network-based models typically incur high computational costs, significant memory usage, and extended inference time, rendering them unsuitable for deployment on edge agricultural devices such as UAVs, robots, or handheld terminals [11]. Moreover, CNNs are prone to overfitting when trained on imbalanced datasets or scarce toxic weed images. Their performance often deteriorates for minority classes and when inter-class feature overlap is high, leading to misclassifications [12].

In order to address the challenge of deployment efficiency, recent research has focused on applying lightweight neural architectures to agricultural vision tasks. Among them, the MobileNet series stands out as one of the most prominent lightweight CNN designs, introducing depth-wise separable convolutions that decompose standard convolutions into channel-wise and point-wise operations, thereby significantly reducing computational cost [13,14]. GoogLeNet and ShuffleNet further optimized channel shuffling and residual compression to improve efficiency [15]. More recently, MobileViT has been proposed, which integrates lightweight CNNs with Transformer modules. This hybrid design preserves local perception while incorporating long-range dependency modeling, offering a promising direction for handling the complex textures and semantic variations in agricultural scenarios [16].

Although lightweight architectures enhance computational efficiency, their performance in identifying toxic weed species with limited samples remains suboptimal. This limitation arises primarily because conventional supervised learning heavily relies on large-scale annotated datasets, thereby constraining the extraction of latent feature distributions from unlabeled images [17]. To overcome this issue, self-supervised learning and pseudo-labeling strategies have emerged as effective solutions for improving recognition under limited supervision [18,19]. Self-supervised learning leverages pretext tasks such as contrastive prediction and image reconstruction to learn semantic representations without annotations [20]. In particular, contrastive learning (CL) methods (e.g., SimCLR and MoCo) enhance robustness by enforcing similarity constraints among feature representations [21]. Building upon this, pseudo-labeling, as a widely adopted semi-supervised strategy, has been applied to semantic segmentation and few-shot classification tasks [22,23]. The core idea is to use a trained model to generate soft labels for unlabeled data, incorporating high-confidence predictions into the training set to enhance the generalization ability [24]. Along this line, Guo et al. [25] proposed a semi-supervised semantic segmentation method for maize field weeds, which combines pseudo-label generation with consistency regularization. Remarkably, the method achieves near or even superior performance to full supervision while requiring only 20% of annotated data. Similarly, Li et al. [26] introduced a semi-supervised weed detection framework that integrates diffusion generative models with attention mechanisms, achieving an accuracy of approximately 0.94, a recall of 0.90, and an F1-score of 0.92. In another study, Li et al. [27] developed an improved pseudo-labeling student–teacher detection framework. 
With only 10% labeled data, the framework attained about 76% of full-supervised performance on CottonWeedDet3 and 96% on CottonWeedDet12. Furthermore, Huang and Bais [28] proposed an unsupervised domain adaptation framework that integrates greedy pseudo-label selection with softIoU-based co-training, significantly boosting the mean intersection over union (mIoU) in target domains. Their method also demonstrates robust adaptability across devices and growing seasons.

Although these approaches demonstrate encouraging progress, they remain limited in several respects. Many rely on complex model architectures or generative components, which restrict their applicability to low-power edge devices. Moreover, in most existing methods, pseudo-labels are generated in a static, one-off manner, making them highly sensitive to early prediction errors. This issue is particularly severe for visually similar weed species, where inaccurate pseudo-labels blur inter-class boundaries and weaken discriminative learning. Consequently, there remain critical challenges in noxious weed recognition, including (i) high inter-class similarity among species, (ii) severe data imbalance and scarcity in few-shot scenarios, and (iii) the demand for lightweight yet accurate models suitable for real-time edge deployment. These challenges motivate the development of a unified framework that balances accuracy, compactness, and generalization.

To address the above challenges, a toxic weed recognition method is proposed by integrating a lightweight architectural design with pseudo-label-guided contrastive learning, aiming to achieve high accuracy, low latency, and robust few-shot recognition suitable for resource-constrained edge platforms. The method introduces multiple innovations in both network structure and learning mechanisms, with the main contributions summarized as follows:

(i) A lightweight Transformer–CNN hybrid backbone is designed to substantially reduce model size and computational cost while maintaining a strong representational capacity. Local texture details are extracted by CNN modules, and long-range dependencies are captured by Transformer modules with window-based attention. Through structural fusion and channel attention integration, stable real-time performance and high energy efficiency are achieved on edge platforms such as Jetson Nano.

(ii) To address the scarcity of toxic weed samples, a pseudo-label generation and dynamic refinement mechanism is introduced. Soft labels are predicted for unlabeled images after initial training, refined through a class-wise mean optimization strategy, and subsequently incorporated into further training so that robustness for minority classes is enhanced and class imbalance is alleviated.

(iii) To improve discrimination among visually similar weed categories, a contrastive and boundary enhancement module is developed. Image pairs are optimized with a contrastive loss to bring intra-class features closer and push inter-class features farther apart, and a boundary alignment loss is employed to strengthen sensitivity to class boundaries, thereby improving precision for confusing categories such as Digitaria sanguinalis and Setaria viridis.

(iv) Extensive experiments are conducted across multiple toxic weed datasets and edge hardware platforms (Jetson Nano, Jetson Xavier NX, and Raspberry Pi 4), with comparisons against ResNet18, MobileViT, ShuffleNet, and other state-of-the-art models. Ablation studies and Grad-CAM visualizations further validate the contributions of each module. Competitive recognition accuracy is achieved with a reduced model size and inference time, along with notable improvements for few-sample categories, demonstrating strong potential for practical deployment.

In summary, the proposed framework addresses the twin challenges of edge deployment and few-shot recognition by achieving a trade-off between accuracy, efficiency, and robustness, ultimately aiming to support reliable toxic weed monitoring in practical agricultural settings.

2. Materials and Methods

2.1. Data Collection

To construct a diverse and representative toxic weed recognition dataset, a comprehensive data acquisition campaign was conducted across several typical agricultural zones in China. These included the North China Plain (Handan in Hebei Province and Weifang in Shandong Province), the southwestern hilly region (Zizhong in Sichuan Province and Tongnan in Chongqing Municipality), and the arid farming zones of Northwest China (Qingyang in Gansu Province and Zhongwei in Ningxia Hui Autonomous Region), as well as additional curated online images obtained from publicly available sources. Data collection spanned the entire agricultural production cycle, from spring sowing to autumn harvest, with a particular focus on the critical growth period between April and October. This time frame was selected to ensure the inclusion of toxic weeds at different phenological stages and under varying field conditions.

Image acquisition was carried out using a combination of handheld devices and UAV platforms. The handheld setup consisted of Android-based devices equipped with high-definition camera modules (12 megapixels), used for capturing close-range images of leaf textures and weed distributions. For aerial views, a DJI Phantom 4 RTK drone was deployed, outfitted with both a multispectral imaging module and a standard RGB sensor, enabling high-resolution top-down imagery of entire field plots. Each data collection site was sampled at three different times of day—morning, noon, and evening—to incorporate variations in lighting conditions and view angles, thereby enhancing the model’s robustness to real-world deployment scenarios.

To ensure broad taxonomic coverage and annotation accuracy, ten toxic weed species were selected as primary targets based on their high prevalence, agricultural threat, and morphological similarity. These included Datura stramonium, Xanthium sibiricum, Solanum nigrum, Alopecurus aequalis, Stellera chamaejasme, Echinochloa crus-galli, Portulaca oleracea, Setaria viridis, Sonchus oleraceus, and Eleusine indica, as shown in Table 1 and Figure 1. All field images were captured by a team with agronomic and plant protection expertise. In parallel, physical weed specimens were collected on site as references. Image labels were independently verified by at least two certified plant protection specialists to ensure labeling accuracy and consistency.

Table 1. Toxic weed image dataset: source and category-level distribution (total ∼7000 images).

Figure 1. Example samples of collected weed images from various categories.

The collected imagery was categorized into three types: The first category consisted of high-resolution close-up images, focusing on leaf textures and morphological details suitable for local texture modeling and fine-grained structural recognition. The second category comprised wide-angle field images, which emphasized background complexity and low object saliency, offering a challenging setup for robust detection algorithms. The third category included multi-scale aerial images with common field artifacts, such as occlusion, weed–crop overlap, and glare, used to evaluate model performance under non-ideal deployment conditions.

2.2. Data Augmentation

In toxic weed image recognition tasks, data preprocessing and augmentation serve as critical steps to enhance model performance, mitigate overfitting, and improve robustness for few-shot categories. Especially under real-world conditions characterized by data imbalance and extreme scarcity of toxic weed samples, the implementation of effective augmentation strategies plays a vital role in improving the generalization capability. This section introduces key techniques including basic image augmentation, few-shot enhancement, and the construction of positive and negative pairs in self-supervised CL. The theoretical principles are elaborated and supported with corresponding mathematical formulations.

First, standard image augmentation operations represent a common approach to improving model robustness, particularly under challenging field conditions such as variable illumination, severe occlusion, and complex backgrounds. In this study, techniques such as random cropping, color perturbation, and blur simulation were adopted to enhance the model’s adaptability to noise and partial occlusion. For example, given an original image $I$ of size $H \times W$, a cropped image $I_{\mathrm{crop}}$ is generated by selecting a random position $(x_0, y_0)$ and crop size $h \times w$, as defined by

$$I_{\mathrm{crop}}(x, y) = I(x_0 + x,\; y_0 + y), \quad 0 \le x < w,\; 0 \le y < h.$$

Color perturbation includes changes in brightness, contrast, saturation, and hue. A common approach introduces a perturbation factor $\alpha_c$ to each RGB channel $c \in \{R, G, B\}$, resulting in an augmented image $I'$:

$$I'_c(x, y) = \alpha_c \, I_c(x, y),$$

where $\alpha_c$ is sampled from a uniform distribution. Blur simulation (e.g., Gaussian blur) is applied by convolving the image with a spatial filter to smooth local texture details, improving model resilience to image blurring or low resolution. Gaussian blur is expressed as

$$I_{\mathrm{blur}}(x, y) = \sum_{i=-k}^{k} \sum_{j=-k}^{k} G(i, j)\, I(x + i,\; y + j),$$

where $G$ is the 2D Gaussian kernel, and $k$ is the kernel radius. The blurring operation smooths features via weighted aggregation of neighboring pixel values.
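The three basic operations described above can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' pipeline: the function names, the jitter range `delta = 0.2`, the kernel radius, and `sigma` are all assumptions chosen for the example, and images are assumed to be float arrays in [0, 1] with shape (H, W, 3).

```python
import numpy as np

def random_crop(img, h, w, rng):
    """Return an h-by-w window of img taken at a random top-left corner."""
    H, W = img.shape[:2]
    y0 = rng.integers(0, H - h + 1)
    x0 = rng.integers(0, W - w + 1)
    return img[y0:y0 + h, x0:x0 + w]

def color_jitter(img, delta, rng):
    """Scale each RGB channel by its own factor drawn from U(1 - delta, 1 + delta)."""
    alpha = rng.uniform(1.0 - delta, 1.0 + delta, size=3)
    return np.clip(img * alpha, 0.0, 1.0)

def gaussian_kernel(k, sigma):
    """(2k+1) x (2k+1) Gaussian kernel, normalised so its entries sum to 1."""
    ax = np.arange(-k, k + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def gaussian_blur(img, k, sigma):
    """Weighted sum of neighbouring pixels; reflect padding keeps the input shape."""
    G = gaussian_kernel(k, sigma)
    H, W = img.shape[:2]
    padded = np.pad(img, ((k, k), (k, k), (0, 0)), mode="reflect")
    out = np.zeros_like(img, dtype=float)
    for i in range(2 * k + 1):
        for j in range(2 * k + 1):
            out += G[i, j] * padded[i:i + H, j:j + W]
    return out

rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))            # stand-in for a field photograph
crop = random_crop(image, 32, 32, rng)
jittered = color_jitter(crop, 0.2, rng)    # delta = 0.2 is an assumed range
blurred = gaussian_blur(jittered, 2, 1.0)  # radius 2, sigma 1.0 are assumed values
```

Because the kernel is normalised, blurring preserves the mean intensity of a uniform region, which is a quick sanity check when tuning the radius.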

Beyond basic augmentation, two strategies, MixUp and CutMix, were employed to enhance the sample diversity and quality of minority toxic weed classes. MixUp is a linear interpolation-based technique. Given two samples $(x_i, y_i)$ and $(x_j, y_j)$, the augmented pair $(\tilde{x}, \tilde{y})$ is generated as follows:

$$\tilde{x} = \lambda x_i + (1 - \lambda)\, x_j, \qquad \tilde{y} = \lambda y_i + (1 - \lambda)\, y_j,$$

where $\lambda$ is drawn from a Beta distribution $\mathrm{Beta}(\alpha, \alpha)$. The resulting samples reside in a continuous space formed by weighted blending of images and labels, which encourages the model to learn linearly separable representations and alleviates decision boundary instability for few-shot classes.

In contrast, CutMix performs region-level augmentation by pasting a rectangular patch from image $x_j$ onto $x_i$. The augmented image and label are computed as

$$\tilde{x} = M \odot x_j + (1 - M) \odot x_i, \qquad \tilde{y} = \lambda y_j + (1 - \lambda)\, y_i,$$

where $M$ is a binary mask with $M = 1$ indicating the pasted region, ⊙ denotes element-wise multiplication, and $\lambda$ represents the patch proportion. CutMix is particularly effective under partial occlusion and background clutter, which are common in toxic weed images.
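A minimal NumPy sketch of both mixing strategies is given below. It is an illustration under stated assumptions, not the authors' implementation: the function names and `alpha = 0.4` are hypothetical, labels are assumed to be one-hot vectors, and the CutMix patch is sampled directly as a random rectangle (the original CutMix formulation instead derives the box size from a Beta-distributed ratio).

```python
import numpy as np

def mixup(x_i, y_i, x_j, y_j, alpha, rng):
    """Blend two images and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.beta(alpha, alpha)
    x = lam * x_i + (1.0 - lam) * x_j
    y = lam * y_i + (1.0 - lam) * y_j
    return x, y, lam

def cutmix(x_i, y_i, x_j, y_j, rng):
    """Paste a random rectangle of x_j onto x_i; mix labels by the pasted area share."""
    H, W = x_i.shape[:2]
    ph = rng.integers(1, H + 1)            # patch height
    pw = rng.integers(1, W + 1)            # patch width
    top = rng.integers(0, H - ph + 1)
    left = rng.integers(0, W - pw + 1)
    M = np.zeros((H, W, 1))                # binary mask, 1 inside the pasted region
    M[top:top + ph, left:left + pw] = 1.0
    lam = float(M.mean())                  # patch proportion
    x = M * x_j + (1.0 - M) * x_i
    y = lam * y_j + (1.0 - lam) * y_i
    return x, y, lam

rng = np.random.default_rng(0)
x_a, x_b = np.zeros((32, 32, 3)), np.ones((32, 32, 3))
y_a, y_b = np.array([1.0, 0.0]), np.array([0.0, 1.0])
x_mix, y_mix, lam_mix = mixup(x_a, y_a, x_b, y_b, alpha=0.4, rng=rng)
x_cut, y_cut, lam_cut = cutmix(x_a, y_a, x_b, y_b, rng=rng)
```

Using an all-zero and an all-one image makes the behaviour easy to inspect: the MixUp output is a uniform grey level of `1 - lam_mix`, while the CutMix output is a white rectangle whose area fraction equals the weight given to the second label.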

Building upon these augmentation strategies, a self-supervised CL mechanism was integrated to extract structural information from unlabeled images and enhance representation learning and generalization. Inspired by methods such as SimCLR, positive and negative pairs were constructed from toxic weed images for contrastive training. Let $x$ denote an input image. Two augmented views, $\tilde{x}_i$ and $\tilde{x}_j$, are generated via random transformations $t$ and $t'$, respectively. After projection through an encoder $f(\cdot)$, the feature vectors are obtained as $z_i = f(\tilde{x}_i)$ and $z_j = f(\tilde{x}_j)$. The contrastive loss is defined as

$$\mathcal{L}_{\mathrm{CL}} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(z_i, z_k)/\tau\right)},$$

where $\mathrm{sim}(\cdot, \cdot)$ denotes cosine similarity between normalized vectors, $\tau$ is the temperature parameter, and $N$ is the number of samples in the batch. Positive pairs are created from different augmentations of the same image, while negative pairs are drawn from other images in the batch. Minimizing this loss encourages the model to pull together features from the same class and push apart features from different classes, even in the absence of label information.

In few-shot toxic weed scenarios, CL is especially beneficial, as it enables the model to capture discriminative attributes such as texture, edge, and morphology without supervision. In practice, a predefined set of augmentation combinations (e.g., random rotation with color perturbation and cropping with blurring) is used to construct stable positive and negative pairs. These transformations ensure semantic consistency while introducing sufficient variation to increase contrastive difficulty, thereby promoting the learning of robust and generalizable representations.

2.3. Proposed Method

2.3.1. Overall

This study proposes a toxic weed recognition framework tailored for edge deployment and few-shot learning scenarios. The framework consists of three core modules: a lightweight hybrid feature extraction backbone, a pseudo-label generation and dynamic update module, and a contrastive enhancement with boundary-aware optimization module. The preprocessed images are initially fed into a Transformer–CNN hybrid backbone, which extracts multi-scale semantic features through alternately stacked convolutional blocks and window-based attention blocks. The convolutional components are designed to capture local texture and edge structures, making them suitable for modeling fine-grained morphological variations of weed leaves, while the Transformer layers model global contextual dependencies, enhancing robustness to background interference and long-range correlations. The extracted features are then propagated through two parallel training branches: a pseudo-label-guided branch and a CL branch.

In the pseudo-label branch, a portion of the unlabeled images is passed through the backbone to generate soft label predictions. High-confidence pseudo-labels are selected via confidence thresholding and category-wise prototype matching. These labels are then combined with the ground-truth-labeled data to form an augmented training set for subsequent iterations. This pseudo-labeling strategy effectively expands the training set, significantly improving the recognition performance of rare weed categories. Meanwhile, all training samples, including those with pseudo-labels, are utilized to construct positive and negative pairs for the contrastive enhancement module. By constraining the similarity relationships among feature representations, the model is encouraged to pull together intra-class instances and push apart inter-class ones, thereby optimizing the discriminability of the feature space. For samples near category boundaries, an additional boundary-enhancing mechanism is introduced to improve decision consistency in ambiguous regions. Ultimately, these three modules are jointly optimized within a unified training framework, where supervised classification loss, self-supervised contrastive loss, and boundary-aware loss function in tandem to drive the model toward learning discriminative, generalizable representations under data-scarce conditions while maintaining deployment efficiency on edge platforms.

2.3.2. Lightweight Hybrid Backbone

In this work, the lightweight hybrid feature extraction module serves as the core component of the recognition architecture, combining the local modeling advantages of CNNs with the global context modeling capabilities of visual Transformers. The entire structure is designed to follow a progressive encoding path, transitioning from low-resolution inputs to high-level semantic features and from local perception to global understanding, as shown in Figure 2. The input image with resolution is first processed through a standard convolution, increasing the channel dimension from 3 to 16. This is followed by a patch embedding operation that maps the input into a compact latent representation suitable for the main backbone network.

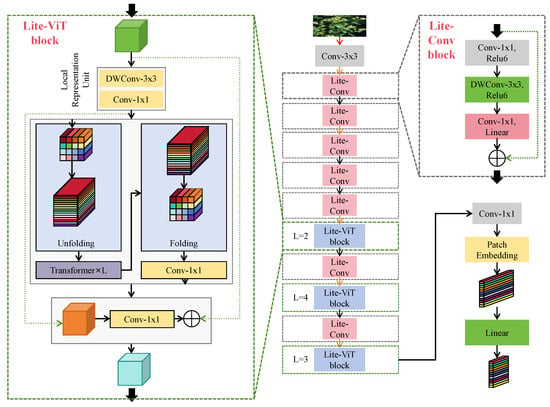

Figure 2. The proposed lightweight hybrid backbone architecture. This network alternately stacks lightweight convolutional modules (Lite-Conv) and lightweight Transformer modules (Lite-ViT block) across multiple resolution stages (112 × 112, 56 × 56, 28 × 28, 14 × 14) for hierarchical feature extraction.

The backbone is composed of multiple interleaved Lite-Conv and Lite-ViT blocks. In the initial two stages, four Lite-Conv blocks are applied to extract low-level texture features, with spatial resolutions progressively reduced to and and channel dimensions increased to 32, 64, and 128. Each Lite-Conv block consists of a depth-wise separable convolution (DWConv-), followed by two point-wise convolutions. ReLU6 activation is used to enhance nonlinearity [29], and residual connections are added to improve gradient flow and stability. Subsequently, several Lite-ViT blocks are introduced, maintaining the same resolution and channel dimensions while extending the receptive field. Each Lite-ViT block implements an unfold–fold operation to flatten local features into token sequences, which are then fed into a Transformer sub-layer comprising multi-head self-attention and a feedforward network, normalized by LayerNorm. To meet resource constraints on edge devices, the depth of the Transformer sub-layers is set to , , and at successive stages, and the total token count is limited to no more than 196, significantly reducing the computational burden compared to conventional ViT structures.
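The unfold–fold step can be illustrated with a small NumPy sketch. It assumes the MobileViT-style rearrangement, in which a feature map is split into non-overlapping p × p patches and attention is applied across patches for each in-patch pixel position; whether the Lite-ViT block follows exactly this layout is an assumption, as are the function names, the patch size `p = 2`, and the channel count of 8.

```python
import numpy as np

def unfold(feat, p):
    """(H, W, C) -> (p*p, N, C): axis 0 is the position inside a patch, axis 1 the patch index."""
    H, W, C = feat.shape
    x = feat.reshape(H // p, p, W // p, p, C)      # (nh, p, nw, p, C)
    x = x.transpose(1, 3, 0, 2, 4)                 # (p, p, nh, nw, C)
    return x.reshape(p * p, (H // p) * (W // p), C)

def fold(tokens, H, W, p):
    """Inverse of unfold: (p*p, N, C) -> (H, W, C)."""
    C = tokens.shape[-1]
    x = tokens.reshape(p, p, H // p, W // p, C)
    x = x.transpose(2, 0, 3, 1, 4)                 # (nh, p, nw, p, C)
    return x.reshape(H, W, C)

feat = np.arange(14 * 14 * 8, dtype=float).reshape(14, 14, 8)
tokens = unfold(feat, p=2)      # 4 in-patch positions x 49 patches = 196 tokens
restored = fold(tokens, 14, 14, p=2)
```

For a 14 × 14 map the total token count is 196, matching the upper bound stated above, and folding restores the original spatial layout so that subsequent convolutional stages can consume the output.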

This architectural design achieves a desirable trade-off between parameter efficiency and representational power. From a complexity standpoint, a conventional convolutional layer involves a parameter count on the order of $O(K^2 C_{\mathrm{in}} C_{\mathrm{out}})$, where $K$ is the kernel size and $C_{\mathrm{in}}$ and $C_{\mathrm{out}}$ are the numbers of input and output channels. In contrast, the DWConv used in the Lite-Conv blocks operates on $K^2 C_{\mathrm{in}}$ parameters, while the subsequent $1 \times 1$ convolutions handle channel interactions. For a depth-wise convolution followed by a single point-wise convolution, the parameter ratio relative to a standard convolution is $1/C_{\mathrm{out}} + 1/K^2$, so this design yields a complexity reduction close to a factor of $K^2$ when $C_{\mathrm{out}} \gg K^2$, significantly reducing floating-point operations (FLOPs). For Transformer sub-layers, the main computational cost resides in the attention mechanism, with complexity $O(N^2 d)$, where $N$ is the number of tokens, and $d$ is the embedding dimension. By restricting $N$ to a local window, the attention computation becomes tractable for real-time inference.
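A short worked example makes these orders of magnitude concrete. The sketch below counts weights only (no biases) and models a depth-wise convolution followed by a single point-wise convolution, which is a simplification of the Lite-Conv block; the channel sizes, the token counts, and the function names are illustrative assumptions rather than values reported for the model.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution."""
    return k * k * c_in * c_out

def separable_conv_params(k, c_in, c_out):
    """Weights in a depth-wise k x k convolution plus one 1 x 1 point-wise convolution."""
    return k * k * c_in + c_in * c_out

def attention_cost(n_tokens, dim):
    """Approximate multiply-adds of self-attention: Q K^T plus the weighted sum over V."""
    return 2 * n_tokens ** 2 * dim

std = standard_conv_params(3, 64, 128)        # 73728
sep = separable_conv_params(3, 64, 128)       # 8768
reduction = std / sep                         # about 8.4, approaching K^2 = 9

small = attention_cost(196, 128)              # 14 x 14 tokens
large = attention_cost(3136, 128)             # 56 x 56 tokens
attention_ratio = large // small              # 256
```

With a 3 × 3 kernel the reduction is already about 8.4× at 128 output channels, and the quadratic term in attention means that 16× more tokens cost 256× more, which is why capping the token count matters on edge hardware.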

Functionally, the CNN modules are responsible for capturing low-level features such as edges, textures, and colors, while the Transformer modules enhance the global semantic structure and long-range dependencies. Their fusion produces robust representations that jointly preserve local detail and global context, leading to improved performance in complex agricultural imagery where weeds often exhibit fine-grained morphological similarities under cluttered backgrounds. Overall, the lightweight hybrid backbone not only ensures structural efficiency for edge deployment but also provides high-quality feature representations for downstream pseudo-label generation and CL modules.

2.3.3. Pseudo-Label Generator

In this study, the pseudo-label generation and dynamic update module serves as a core component of the semi-supervised optimization strategy, aiming to alleviate the limitations caused by insufficient labeled data and poor generalization performance on rare noxious weed categories. This module shares the feature extractor with the lightweight backbone network, and its output is simultaneously directed into two symmetric branches: one for labeled samples and the other for unlabeled samples. Through a prototype queue and similarity projection mechanism, pseudo-labels for unlabeled images are generated and dynamically refined. This prediction–correction–update cycle is iteratively conducted during training and jointly optimized with contrastive and semantic supervision in the feature space, thereby enhancing the model’s discriminative capability under label-scarce conditions.

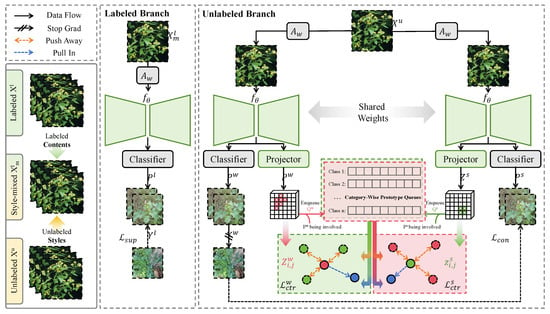

As illustrated in Figure 3, the pseudo-label branch receives high-dimensional feature maps from the lightweight backbone with an initial size of . A projection layer compresses the channel dimension to , with its weights shared between the labeled and unlabeled paths. The projected features are then fed into an asymmetric classification head comprising a convolution with 64 output channels, followed by ReLU activation and BatchNorm, and a projection convolution producing output channels. A softmax activation is applied to obtain the pseudo-label probability distribution.

Figure 3. Architecture of the pseudo-label generator and dynamic update module. Features extracted from the shared backbone are processed through classifier and projector layers into both labeled and unlabeled branches. By leveraging category-wise prototype queues and similarity-based filtering, pseudo-labels for unlabeled samples are predicted and dynamically selected.

For an unlabeled sample $x_u$, the projected feature is denoted as $z_u$, and its pseudo-label distribution is given by $p_u = \mathrm{softmax}(W z_u)$, where $W$ represents the classifier weights. If the maximum confidence $\max_k p_u^{(k)}$ exceeds a threshold $\tau_{\mathrm{conf}}$, a pseudo-label $\hat{y}_u = \arg\max_k p_u^{(k)}$ is generated. To avoid label noise, a class-wise prototype queue is constructed, where each prototype $c_k$ is computed as

$$c_k = \frac{1}{|Q_k|} \sum_{z \in Q_k} z,$$

where $Q_k$ is the feature queue for class $k$. Each queue maintains a fixed length of feature vectors. At the beginning of training, the queues are initialized using the mean embeddings of the available labeled samples in each class. The similarity $s_k = \mathrm{sim}(z_u, c_k)$ is then computed. A pseudo-label is accepted only if the class with the highest similarity matches $\hat{y}_u$; otherwise, the sample is placed into a delayed update queue for future evaluation. When a queue is full, the oldest entry is removed in a first-in–first-out manner. Additionally, the prototype queue is updated after each training iteration via the exponential moving average:

$$c_k \leftarrow (1 - \mu)\, c_k + \mu\, \bar{z}_k,$$

where $\bar{z}_k$ is the mean of the class-$k$ features accepted in the current iteration and $\mu$ is the update rate, empirically set to 0.2 to balance stability and adaptiveness. This exponential moving average update treats each prototype as a weighted accumulation of past features with exponentially decaying coefficients, ensuring that prototypes evolve smoothly rather than being dominated by noisy individual samples. Since the recursion defines a contraction mapping, the prototypes converge in expectation toward stable representations that approximate class means once the underlying feature distribution becomes approximately stationary.
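The two-stage acceptance rule and the moving-average update can be sketched as follows. This is a simplified illustration, not the authors' code: the confidence threshold of 0.9, the function names, and the toy 3-class data are assumptions, and the delayed update queue and first-in–first-out bookkeeping are omitted.

```python
import numpy as np

def softmax(logits):
    e = np.exp(logits - logits.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def cosine(a, b):
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def select_pseudo_labels(z_u, W, prototypes, threshold):
    """Accept a pseudo-label only if it is confident AND agrees with the nearest prototype."""
    probs = softmax(z_u @ W.T)
    y_hat = probs.argmax(axis=1)
    confident = probs.max(axis=1) > threshold
    nearest = cosine(z_u, prototypes).argmax(axis=1)
    return y_hat, confident & (nearest == y_hat)

def ema_update(prototypes, z_u, y_hat, accepted, rate=0.2):
    """Move each prototype toward the mean of the features newly accepted for its class."""
    new = prototypes.copy()
    for k in np.unique(y_hat[accepted]):
        batch_mean = z_u[accepted & (y_hat == k)].mean(axis=0)
        new[k] = (1.0 - rate) * prototypes[k] + rate * batch_mean
    return new

W = 10.0 * np.eye(3)                                   # toy classifier weights
# Prototypes of classes 1 and 2 are deliberately swapped to trigger a mismatch.
prototypes = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
z_u = np.array([[2.0, 0.0, 0.0],                       # confident, prototype agrees
                [0.5, 0.5, 0.45],                      # low confidence
                [0.0, 1.0, 0.0]])                      # confident, prototype disagrees
y_hat, accepted = select_pseudo_labels(z_u, W, prototypes, threshold=0.9)
updated = ema_update(prototypes, z_u, y_hat, accepted, rate=0.2)
```

Only the first sample passes both checks, so only the class-0 prototype moves; the third sample shows how prototype matching can veto a confident but inconsistent prediction.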

This module is used jointly with the contrastive enhancement module to optimize the inter-class discriminability in the feature space. For each pseudo-labeled pair $(z_u, \hat{y}_u)$ and ground-truth-labeled pair $(z_l, y_l)$, if $\hat{y}_u = y_l$, the pair is treated as a positive match; otherwise, it is treated as a negative match. The corresponding joint contrastive loss is defined as

$$\mathcal{L}_{\mathrm{joint}} = -\log \frac{\sum_{l:\, y_l = \hat{y}_u} \exp\left(\mathrm{sim}(z_u, z_l)/\tau\right)}{\sum_{l} \exp\left(\mathrm{sim}(z_u, z_l)/\tau\right)},$$

where $\mathrm{sim}(\cdot, \cdot)$ denotes cosine similarity and $\tau$ is a temperature parameter. This loss maximizes the co-occurrence likelihood between pseudo- and real features of the same class while minimizing the similarity to features of other classes, reinforcing boundary discrimination. Specifically, prototype regularization alleviates label drift, and dynamic update ensures continuous improvement in pseudo-label quality during training.

2.3.4. Contrastive and Boundary Enhancement

The contrastive and boundary enhancement module is designed to address issues such as morphological similarity between weed categories, blurred boundaries, and class imbalance, thereby improving the model’s ability to distinguish confusing categories and enhancing the clarity of the feature space. This module includes two parts: a contrastive branch to stretch the feature space and a boundary alignment branch to optimize inter-class decision boundaries. These modules operate in parallel with the lightweight backbone and pseudo-label generator to form a discriminative enhancement pathway.

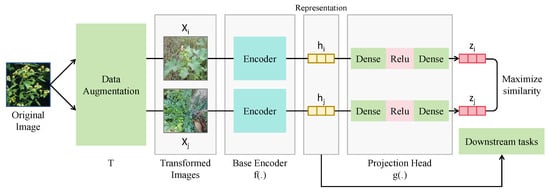

Based on the SimCLR framework, as illustrated in Figure 4, two augmented versions $\tilde{x}_i$ and $\tilde{x}_j$ of image $x$ are generated using transformations $t$ and $t'$, respectively. They are passed through a shared encoder $f(\cdot)$ to obtain intermediate features $h_i$ and $h_j$. A projection head $g(\cdot)$, composed of two fully connected layers (with intermediate dimension 64 and final dimension 32), transforms them into projection vectors $z_i = g(h_i)$ and $z_j = g(h_j)$. The NT-Xent loss is used as the contrastive objective:

$$\mathcal{L}_{\mathrm{CL}} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(z_i, z_k)/\tau\right)},$$

where $\mathrm{sim}(\cdot, \cdot)$ denotes cosine similarity, $\tau$ is a temperature parameter, and $N$ is the batch size. This objective encourages intra-class compactness and inter-class separation, particularly improving performance on weeds with minor shape variations.

Figure 4. Illustration of the overall architecture of the “contrastive and boundary enhancement” module. Based on the SimCLR framework, the module applies two distinct data augmentations ($t$ and $t'$) to the input image, followed by feature extraction using a shared-weight base encoder $f(\cdot)$, producing intermediate representations $h_i$ and $h_j$. These features are then passed through a projection head $g(\cdot)$, consisting of two fully connected layers, to generate contrastive embeddings $z_i$ and $z_j$.

However, contrastive loss alone cannot optimize decision boundaries. Therefore, a boundary alignment loss is introduced, which penalizes uncertain predictions based on entropy. Given the prediction probability $p_i$ of a sample $x_i$ over $K$ classes, its entropy is computed as

$$H(p_i) = -\sum_{k=1}^{K} p_i^{(k)} \log p_i^{(k)}.$$

Samples with high entropy (close to $\log K$) are considered boundary samples and are included in the loss:

$$\mathcal{L}_{\mathrm{BA}} = \frac{1}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} H(p_i),$$

where $\mathcal{B}$ is the set of high-entropy samples. This encourages the model to produce more confident predictions near class boundaries.
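An entropy-based boundary term of this kind can be sketched in a few lines of NumPy. The sketch is illustrative only: the rule for selecting boundary samples (entropy above `ratio * log(K)` with `ratio = 0.5`) and the function names are assumptions, since the selection threshold is not specified here.

```python
import numpy as np

def entropy(p, eps=1e-12):
    """Shannon entropy of each row of a (batch, K) probability matrix."""
    return -(p * np.log(p + eps)).sum(axis=1)

def boundary_alignment_loss(p, ratio=0.5):
    """Mean entropy over samples whose entropy exceeds ratio * log(K); 0 if none qualify."""
    K = p.shape[1]
    h = entropy(p)
    mask = h > ratio * np.log(K)           # assumed rule for picking boundary samples
    return float(h[mask].mean()) if mask.any() else 0.0

probs = np.array([[1 / 3, 1 / 3, 1 / 3],   # maximally uncertain: entropy = log 3
                  [0.98, 0.01, 0.01]])     # confident: entropy well below the cut-off
loss_mixed = boundary_alignment_loss(probs)
loss_confident = boundary_alignment_loss(probs[1:])
```

Only the uniform prediction is treated as a boundary sample, so the loss equals log 3 for the mixed batch and vanishes once every prediction is confident.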

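Together, these modules form a joint regularization mechanism. The contrastive loss enhances consistency in the embedding space, while the boundary loss improves stability around ambiguous regions. Combined with prototype constraints, the total objective becomes

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{CE}} + \lambda_1 \mathcal{L}_{\text{contrast}} + \lambda_2 \mathcal{L}_{\text{boundary}},$$

where $\mathcal{L}_{\text{CE}}$ is the standard cross-entropy loss, $\mathcal{L}_{\text{contrast}}$ and $\mathcal{L}_{\text{boundary}}$ are the contrastive and boundary alignment losses, and $\lambda_1$ and $\lambda_2$ are hyperparameters set to 0.3 and 0.1, respectively. This objective minimizes the expected risk under cross-modal perturbation, class ambiguity, and pseudo-label drift, enhancing model robustness and accuracy under few-shot conditions.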
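To make the weighting concrete, the snippet below combines three already-computed loss values into one scalar. It assumes that the weight 0.3 applies to the contrastive term and 0.1 to the boundary term, in the order the hyperparameters are listed; the function and argument names are illustrative only.

```python
def total_loss(ce, contrastive, boundary, lambda_contrast=0.3, lambda_boundary=0.1):
    """Weighted sum of cross-entropy, contrastive, and boundary losses.

    The assignment of 0.3 and 0.1 to the two auxiliary terms is an assumption.
    """
    return ce + lambda_contrast * contrastive + lambda_boundary * boundary
```

For example, with a cross-entropy of 0.9, a contrastive loss of 2.0, and a boundary loss of 1.0, the auxiliary terms add 0.6 and 0.1, giving a total of about 1.6. The supervised term therefore still dominates the gradient signal under these weights.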

Notably, the module is only activated during training and introduces no inference time overhead. The projection head is discarded at test time. Experimental results confirm that integrating contrastive and boundary alignment strategies yields substantial improvements on confusing weed pairs while maintaining real-time deployment efficiency, rendering it a critical component for practical applications.

3. Results

3.1. Experimental Setup

3.1.1. Experimental Platform and Hyperparameter Settings

To evaluate the practical deployment capacity and performance of the proposed lightweight noxious weed recognition framework across various edge environments, experiments were conducted on multiple hardware platforms, including NVIDIA Jetson Nano (NVIDIA Corporation, Santa Clara, CA, USA), Jetson Xavier NX (NVIDIA Corporation, Santa Clara, CA, USA), Raspberry Pi 4 Model B (Raspberry Pi Foundation, Cambridge, UK), and the desktop-grade NVIDIA RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA). The Jetson Nano integrates a 128-core Maxwell GPU, a quad-core ARM Cortex-A57 CPU, and 4 GB LPDDR4 memory, making it suitable for lightweight edge deployment. The Jetson Xavier NX features a 384-core Volta GPU with 48 Tensor Cores, a 6-core Carmel ARMv8.2 CPU, and 8 GB LPDDR4x memory, providing enhanced computational power for real-time edge inference. The Raspberry Pi 4 Model B employs a Broadcom BCM2711 quad-core Cortex-A72 processor and supports up to 8 GB LPDDR4 memory, optimized for low-power, cost-sensitive scenarios. The RTX 3090, with 10496 CUDA cores and 24 GB GDDR6X video memory, served as the primary platform for large-scale model pretraining and comparative analysis, while the Jetson Nano, Xavier NX, and Raspberry Pi 4 were used exclusively for deployment evaluation and inference benchmarking.

The software environments were aligned as closely as possible across platforms. On the desktop GPU server, Ubuntu 20.04 LTS was used with CUDA 11.x and cuDNN 8.x. For Jetson devices, the official NVIDIA JetPack 4.6 distribution (based on Ubuntu 18.04, CUDA 10.2, and cuDNN 8.2) was adopted to enable GPU acceleration. All models were implemented in PyTorch 1.11 under Python 3.8, with supplementary libraries such as OpenCV and Albumentations used for image preprocessing and data augmentation. For deployment evaluation, models were exported to ONNX format and accelerated with TensorRT to maximize computational efficiency on edge platforms. Inference latency was measured using the system clock, and power consumption was monitored with tegrastats on Jetson devices and an external USB power meter on Raspberry Pi to ensure a fair comparison.
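A latency measurement based on the system clock might look like the following sketch. The `infer` callable, the warm-up count, and the number of timed runs are placeholders rather than the authors' settings; the point is only to show warm-up iterations being excluded and latency and FPS being derived from the same elapsed time.

```python
import time


def benchmark(infer, sample, warmup=10, runs=100):
    """Return (mean latency in ms, frames per second) for a callable `infer`."""
    for _ in range(warmup):  # warm-up calls are not timed
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    elapsed = time.perf_counter() - start
    mean_latency_ms = 1000.0 * elapsed / runs
    fps = 1000.0 / mean_latency_ms
    return mean_latency_ms, fps
```

When the callable launches GPU work asynchronously, the device has to be synchronized before the clock is read (in PyTorch, for instance, via `torch.cuda.synchronize()`); otherwise the timer captures only the launch overhead.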

The dataset was split into training, validation, and testing subsets at a ratio of 6:2:2, with class distributions balanced across all subsets. Within the training set, a five-fold cross-validation strategy was applied to enhance robustness under low-shot conditions. Specifically, in each iteration, one fold was used for validation and the remaining four for training, and the results were averaged over five runs to reduce sample bias. Random seeds were fixed across PyTorch, NumPy, and CUDA to ensure reproducibility.
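The splitting protocol can be sketched with the standard library alone. The example below performs a per-class 6:2:2 split and then generates five folds over the training indices. The seed value and function names are hypothetical, and the folds here are formed by simple shuffling rather than by any stratification the authors may have used.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=42):
    """Return (train, val, test) index lists with per-class 6:2:2 proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test


def k_fold(indices, k=5, seed=42):
    """Yield (fit, held_out) index lists for k-fold cross-validation."""
    shuffled = indices[:]
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    for i in range(k):
        fit = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield fit, folds[i]
```

With ten classes of 50 images each, this yields 300 training, 100 validation, and 100 test indices, and each cross-validation iteration fits on 240 training images while holding out 60.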

All training experiments were conducted on the RTX 3090 GPU. The AdamW optimizer was employed with an initial learning rate of , adjusted by a cosine annealing schedule. The batch size was set to 32, and training was performed for 200 epochs. In the contrastive learning branch, the temperature parameter was fixed at 0.07, and a confidence threshold of 0.8 was used for pseudo-label selection. To mitigate early-stage label drift, class-wise mean initialization was applied. In addition, weight decay was set to for regularization, and dropout with a rate of 0.1 was applied within Transformer layers to reduce overfitting. All input images were resized to . The lightweight hybrid backbone consisted of four Lite-Conv blocks, followed by three Lite-ViT stages, with channel dimensions progressively expanded from 32 to 128. Each Lite-ViT block used a token embedding size of 64 and a window size of . The projection head in the contrastive branch was implemented as two fully connected layers with dimensions [64, 32]. This configuration ensured stable convergence, effective generalization, and compatibility with the computational constraints of lightweight architectures.
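Two of the settings above lend themselves to a short sketch: the cosine annealing schedule over 200 epochs and the 0.8 confidence threshold for pseudo-label selection. The code below is a plain-Python illustration under stated assumptions: the base learning rate is passed in by the caller because its value is not given here, the minimum learning rate of zero is an assumption, and the function names are hypothetical.

```python
import math


def cosine_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Closed-form cosine annealing from base_lr (epoch 0) to min_lr (last epoch)."""
    cosine = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return min_lr + (base_lr - min_lr) * cosine


def select_pseudo_labels(batch_probs, threshold=0.8):
    """Keep (sample_index, class_index) pairs whose top probability meets the threshold."""
    selected = []
    for i, probs in enumerate(batch_probs):
        confidence = max(probs)
        if confidence >= threshold:
            selected.append((i, probs.index(confidence)))
    return selected
```

Under this schedule the learning rate is halved at epoch 100 of 200, and an unlabeled sample predicted as `[0.5, 0.3, 0.2]` is discarded while one predicted as `[0.1, 0.85, 0.05]` receives pseudo-label 1.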

3.1.2. Baseline

In the baseline comparison, several widely adopted lightweight and moderately complex models were selected for reference, including ResNet18 [30], MobileNetV2 [31], GoogLeNet [32], MobileViT [33], ShuffleNet [34], SparseSwin [35], and CSWin-MBConv [36]. All models were trained and evaluated under a unified dataset and experimental protocol.

ResNet18, a representative residual network with a moderate model size, has been recognized for its stable training dynamics and robust feature representation capability. MobileNetV2, employing an inverted residual structure and depth-wise separable convolutions, significantly reduces the number of model parameters while maintaining competitive accuracy, making it suitable for mobile deployment. GoogLeNet, built on the Inception architecture, emphasizes computational efficiency and achieves a good trade-off between accuracy and speed, though its classification performance is weaker than that of more recent architectures. MobileViT integrates the local inductive biases of CNNs with the global context modeling power of transformers, enabling effective cross-scale feature extraction. ShuffleNet achieves efficient computation through channel shuffling and grouped convolutions, demonstrating high computational efficiency on low-power devices. SparseSwin employs sparse latent tokens within the Swin Transformer framework, reducing parameters while enhancing high-level feature extraction, thus achieving a strong trade-off between efficiency and accuracy. CSWin-MBConv parallelizes CNN-based local feature extraction and Transformer-based global modeling, with an efficient fusion module that boosts identification accuracy while keeping the model compact.

3.1.3. Evaluation Metrics

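To comprehensively evaluate the classification performance of the proposed method, four commonly used metrics were adopted: precision, recall, F1-score, and accuracy. The mathematical definitions are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}.$$

In the above formulas, $TP$ denotes the number of true positives, $FP$ the number of false positives, $FN$ the number of false negatives, and $TN$ the number of true negatives. Together, these metrics provide a comprehensive assessment of the model’s ability to correctly identify toxic weeds while minimizing false detections and missed cases.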
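The four metrics follow directly from the confusion counts, as the short sketch below shows. It treats a single class in a one-vs-rest fashion; how per-class values are averaged across weed categories is not specified here, so no averaging is included, and the function name is illustrative.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return (precision, recall, f1, accuracy) from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1, accuracy
```

As a worked case, 80 true positives, 20 false positives, 10 false negatives, and 90 true negatives give a precision of 0.80, a recall of about 0.889, an F1-score of about 0.842, and an accuracy of 0.85.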

On this basis, multiple ablation studies were conducted to assess the independent contributions of the proposed components. These included (1) removing the pseudo-labeling module, (2) removing the CL module, and (3) replacing the hybrid backbone with a pure CNN-based architecture. Each variant was evaluated to verify the effectiveness of pseudo-label-guided supervision, self-supervised contrastive enhancement, and hybrid structural design, respectively. All baseline and ablation methods were deployed and tested on actual edge platforms such as Jetson Nano and Raspberry Pi. Evaluation metrics included inference speed (FPS), average per-frame latency, and power consumption (W), thereby providing a comprehensive assessment of the deployment performance and energy efficiency advantages of the proposed method in practical environments.

3.2. Classification Performance Comparison of Different Models

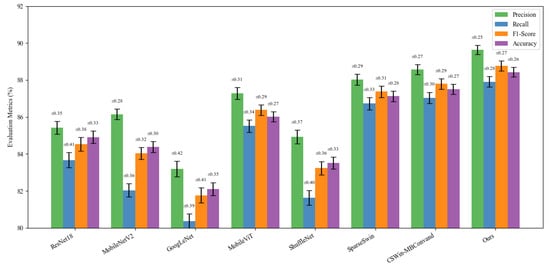

This experiment was conducted to evaluate the overall performance of the proposed method in the task of toxic weed image classification by comparing it against current mainstream lightweight and moderately complex models. The focus was placed on the differences across four key evaluation metrics: precision, recall, F1-score, and accuracy. The baseline models included ResNet18, MobileNetV2, GoogLeNet, MobileViT, ShuffleNet, SparseSwin, and CSWin-MBConv, which represent a spectrum of architectures ranging from classical CNN structures to models integrating Transformer mechanisms. All models were trained and tested on the same dataset and under identical experimental protocols to ensure the fairness and reproducibility of the results, as summarized in Table 2 and Figure 5 and further illustrated through heatmap visualization in Figure 6.

Table 2.

Classification performance comparison of different models (mean ± std over 5 runs).

Figure 5.

Classification performance comparison across different lightweight models. The bar chart presents the precision, recall, F1-score, and accuracy (%) of eight models (ResNet18, MobileNetV2, GoogLeNet, MobileViT, ShuffleNet, SparseSwin, CSWin-MBConv, and the proposed model).

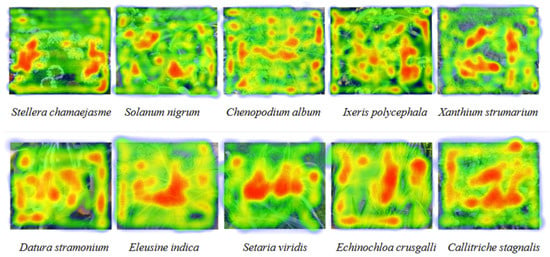

Figure 6.

Heatmap visualization showing the discriminative regions used by the lightweight CNN–Transformer hybrid network to identify ten noxious weeds. Red areas indicate the features to which the model pays the most attention, while green and blue areas indicate less attention.

As shown in the results, the proposed model achieved the highest performance across all four evaluation metrics, with an accuracy of 88.43% and an F1-score of 88.76%, representing an improvement of approximately 2% over MobileViT. MobileViT, a lightweight model integrating both CNN and Transformer modules, demonstrated strong performance owing to its global modeling capability, which is particularly effective for capturing fine-grained structures in plant leaf textures. By contrast, classical CNN-based architectures such as ResNet18 and MobileNetV2 showed limitations in recall because they lack mechanisms for modeling long-range dependencies. GoogLeNet showed limited fine-grained feature representation capability, and its classification accuracy lagged behind that of the other models. ShuffleNet, designed for computational efficiency via channel shuffling and group convolutions, showed a weaker modeling capacity for complex textures. SparseSwin leveraged sparse attention to capture hierarchical features and reached competitive accuracy but showed limited gains on highly similar categories. CSWin-MBConv improved inter-class separability through CNN–Transformer fusion yet exhibited fluctuations in recall for rare and confusing species. The proposed method, enhanced by pseudo-labeling and contrastive boundary learning mechanisms built upon a compact hybrid backbone, retained structural efficiency while improving feature discriminability and boundary sensitivity.

3.3. Model Efficiency Comparison on Jetson Nano

This experiment was designed to validate the runtime efficiency of the proposed model when deployed on a real-world edge device, Jetson Nano, thereby assessing its applicability in resource-constrained settings. The model was compared against mainstream lightweight networks based on five metrics: model size, FLOPs, FPS, latency, and power consumption. The baseline models—ResNet18, MobileNetV2, GoogLeNet, MobileViT, ShuffleNet, SparseSwin, and CSWin-MBConv—were selected for their widespread use and favorable trade-offs in embedded AI applications.

As shown in Table 3, the proposed model achieved either the best or near-best performance across all five metrics. Notably, it reached 18.9 FPS and 52.9 ms latency, demonstrating excellent real-time capabilities. With a compact size of 18.6 MB and a low computational cost of 0.41 GFLOPs, the model was highly efficient without compromising structural integrity. ResNet18 exhibited high computational demand due to deep residual stacking, resulting in increased latency and energy consumption. MobileNetV2 and ShuffleNet significantly reduced operations via depth-wise separable convolutions and grouped channel transformations, although they traded off some feature expressiveness. GoogLeNet, leveraging its Inception modules, provided favorable speed–accuracy trade-offs, but the structural redundancy introduced moderate computational cost relative to newer lightweight designs. MobileViT benefited from global modeling via Transformers but incurred latency overhead due to attention computation. SparseSwin, equipped with sparse attention for hierarchical representation, achieved reasonable runtime efficiency but required additional operations that slightly increased latency compared to its CNN-based counterparts. CSWin-MBConv, by integrating CNN-style local extraction with Transformer-based global modeling, delivered balanced efficiency across metrics, though its hybrid structure introduced non-negligible computational overhead. By contrast, the proposed model adopted hierarchical feature fusion and dynamic pseudo-label filtering, effectively suppressing redundant pathways while aligning features and enhancing boundary representations.
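The reported throughput and latency are two views of the same quantity, and together with the power figure they give a rough energy cost per frame. The arithmetic below uses the 18.9 FPS reported above and the 5.1 W power bound stated for the Jetson Nano elsewhere in the paper; treating that bound as the average draw is an assumption, so the energy value should be read as an upper estimate.

```python
def latency_ms(fps):
    """Average per-frame latency implied by a throughput in frames per second."""
    return 1000.0 / fps


def energy_per_frame_joules(power_w, fps):
    """Energy per processed frame, assuming constant power draw."""
    return power_w / fps
```

At 18.9 FPS the implied latency is 1000 / 18.9 ≈ 52.9 ms, which matches the reported value, and at no more than 5.1 W each frame costs at most about 0.27 J.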

Table 3.

Model efficiency comparison on Jetson Nano.

3.4. Performance on Rare Weed Categories Under Few-Shot Setting

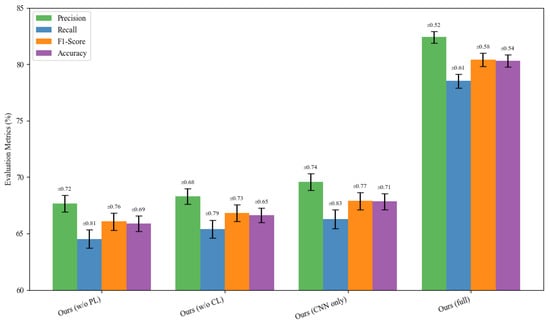

This experiment aimed to evaluate the capability of the proposed model in recognizing rare toxic weed species under few-shot learning scenarios, reflecting its generalization capacity in low-sample settings. Three ablation configurations were tested: without pseudo-labeling (w/o PL), without CL (w/o CL), and using a CNN-only backbone. These were compared against the full model. Performance was assessed via precision, recall, F1-score, and accuracy to examine the contributions of each module to few-shot learning performance.

As shown in Table 4 and Figure 7, the full model significantly outperformed all ablation baselines across all metrics. The F1-score and accuracy reached 80.41% and 80.32%, respectively, representing an improvement of over 12% compared to any variant with a removed component. The pseudo-labeling module enhanced sample representation by introducing confidence-based supervision, thus mitigating overfitting risks in low-resource settings. The CL module imposed semantic constraints by pulling together intra-class features while pushing apart inter-class features in the representation space, effectively improving boundary discrimination. The hybrid CNN–Transformer backbone provided the dual advantage of local texture modeling and global context aggregation.

Table 4.

Performance on rare weed categories under few-shot setting (mean ± std over 5 runs).

Figure 7.

Ablation study on rare weed classification under few-shot settings. The bar chart compares the impact of different components—pseudo-labeling (PL), CL, and CNN backbone—on classification performance. The full model achieves the highest scores across all metrics (precision, recall, F1-score, accuracy), validating the effectiveness of the proposed modules.

4. Discussion

4.1. Practical Deployment Analysis

The proposed lightweight poisonous weed recognition model, which integrates pseudo-label guidance and contrastive enhancement strategies, achieves a favorable balance between recognition accuracy and deployment efficiency on edge devices. Its practical value lies not only in the theoretical performance metrics but also in its potential for real-world application within actual agricultural production environments.

In alpine meadow ecosystems of northern regions or arid and semi-arid zones in western China, early detection of poisonous weeds plays a vital role in supporting forage cultivation and livestock development. In particular, the alpine meadows of the Qinghai–Tibet Plateau and its northeastern margin (e.g., Tianzhu Tibetan Autonomous County and Gannan Tibetan Autonomous Prefecture in Gansu Province) represent some of the most typical grassland ecosystems, with critical functions in water conservation, climate regulation, and livestock production. However, due to climate warming, increased grazing intensity, and cumulative human disturbances, these regions are experiencing severe grassland degradation, accompanied by a rapid expansion of poisonous weed populations—now considered one of the major ecological threats to grassland productivity and livestock health.

The poisonous weed species in the alpine meadows of Tianzhu and Gannan are characterized by strong toxicity, perennial root systems, high reproductive capacity, and rejection by livestock. Representative species include the following:

- Stellera chamaejasme (wolf poison): One of the most destructive alpine toxic weeds with massive root systems and high aboveground biomass. It contains potent diterpenoid toxins and exhibits aggressive expansion, competing fiercely with high-quality forage.

- Aconitum gymnandrum (aconite): Contains highly toxic aconitine alkaloids that can cause livestock death even upon minimal ingestion.

- Pedicularis kansuensis: A semi-parasitic species that thrives in degraded meadows; it is non-lethal but has extremely poor palatability and strong competitive ability.

- Astragalus adsurgens: A leguminous poisonous weed that contains cyanogenic glycosides, inducing chronic poisoning if overgrazed.

- Cicuta virosa (water hemlock): Distributed in meadow wetland margins; its tuber contains cicutoxin, a powerful neurotoxin that causes respiratory paralysis.

These weeds not only pose direct toxic threats to livestock but also suppress the renewal of desirable forage species through ecological niche competition, leading to biodiversity loss and a decline in pasture productivity. In the context of grassland ecosystem conservation and intelligent management, achieving accurate, rapid, and low-cost recognition of such weeds has become a core technical requirement for smart animal husbandry and precision grassland monitoring. However, current image-based recognition studies face two major challenges: (1) Field image acquisition conditions are complex (e.g., variable illumination, occlusion, and background noise), while many poisonous weeds exhibit interspecies similarity and strong phenotypic variation, resulting in high recognition difficulty. (2) Some toxic species are rare and difficult to annotate, limiting the generalization of deep models. Therefore, it is necessary to develop models that balance lightweight deployment with few-shot adaptability, targeting edge platforms such as drones and robots.

The proposed model demonstrates stable performance on lightweight terminals such as Jetson Nano and Raspberry Pi, making it suitable for deployment in low-cost unmanned vehicles or portable imaging devices to support rapid in-field detection by farmers and grassland stewards. Its strong improvement in few-shot category recognition is particularly valuable for ecological restoration and invasive species monitoring. In fragile ecosystems, newly emerging poisonous weeds often belong to rare categories, for which conventional recognition systems provide unreliable identification. By integrating contrastive enhancement with dynamic pseudo-label refinement, the proposed framework achieves robust generalization under severely limited annotation. For example, in the management of the Tengger Desert margins, rapid identification of invasive species such as Allium mongolicum and Ricinus communis can facilitate grassland stabilization and biodiversity conservation. Beyond ecological monitoring, the framework also has important implications for agriculture and horticulture. Accurate recognition of toxic and invasive weeds reduces dependence on broad-spectrum herbicides, thereby lowering chemical inputs, minimizing soil and water contamination, and supporting sustainable production practices. In horticultural systems, where crop diversity is high and chemical overuse may compromise both yield quality and market value, early and precise detection of rare weeds can help reduce manual labor costs and economic losses. Thus, the proposed approach not only advances algorithmic design but also contributes practical environmental and economic benefits, reinforcing its relevance for intelligent agricultural management and sustainable horticultural development.

4.2. Limitations and Future Work

Despite the favorable performance of the proposed lightweight toxic weed recognition model in terms of classification accuracy, edge deployment efficiency, and few-shot generalization, several limitations remain to be addressed in future work. First, the model currently relies on pre-acquired still images and demonstrates limited robustness under dynamic field conditions where image blurring or distortion may result from lighting variability, occlusion, or wind-induced vegetation movement. Future extensions may incorporate temporal information or multi-angle image fusion to enhance adaptability to complex spatial environments. Second, although pseudo-labeling and contrastive augmentation mechanisms effectively improve performance in few-shot scenarios, their consistency across different weed species remains variable. More stable confidence regulation strategies or the integration of external prior knowledge, such as expert-guided knowledge graphs, may further strengthen semantic representation and boundary discrimination, particularly for low-sample categories. Finally, cross-regional generalization has not yet been explored. Since the model was trained and evaluated under specific geographic and ecological conditions, its applicability to broader environments remains uncertain. Future research may employ federated learning or domain adaptation techniques to enable collaborative training across decentralized data sources while preserving data privacy, thereby supporting scalable deployment across diverse ecological systems.

5. Conclusions

With the accelerated advancement of automation in grassland management, the efficient recognition and precise control of toxic weeds have become critical to enhancing forage yield and ensuring ecological safety. However, conventional deep learning approaches face significant limitations when deployed on low-power devices or applied to the recognition of few-shot weed categories. To address these challenges, a toxic weed recognition method is proposed that integrates pseudo-label generation, contrastive boundary enhancement mechanisms, and a lightweight hybrid backbone architecture. This approach aims to maintain high classification accuracy while improving deployment efficiency and generalization capability under data-scarce conditions. Experimental results demonstrate that the proposed method achieves high runtime efficiency on edge devices such as the Jetson Nano, with a compact model size of only 18.6 MB, an inference speed of 18.9 FPS, and power consumption maintained within 5.1 W, indicating strong potential for real-world deployment. In standard classification tasks, the model achieves 89.64% precision, 87.91% recall, 88.76% F1-score, and 88.43% accuracy, outperforming all mainstream lightweight baselines. Furthermore, in few-shot scenarios, the proposed method attains 80.32% accuracy on rare toxic weed categories, an average improvement of approximately 13 percentage points over variants without pseudo-labeling, without contrastive enhancement, or with a CNN-only backbone, thereby validating the effectiveness of the multi-module integration strategy.

Author Contributions

Conceptualization, R.L., B.Y., B.Z. and S.Y.; Methodology, R.L., B.Y. and B.Z.; Software, R.L., B.Y. and B.Z.; Validation, H.M., Y.Q. and X.L.; Formal analysis, X.L.; Investigation, Y.Q. and X.L.; Resources, H.M.; Data curation, H.M.; Writing – original draft, R.L., B.Y., B.Z., H.M., Y.Q., X.L. and S.Y.; Visualization, Y.Q.; Supervision, S.Y.; Project administration, S.Y.; Funding acquisition, S.Y., R.L. and B.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (2024YFC2607600), the National Natural Science Foundation of China (32372631), the Pinduoduo-China Agricultural University Research Fund (PC2023B02018), and the 2115 Talent Development Program of China Agricultural University.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Jin, X.; Che, J.; Chen, Y. Weed identification using deep learning and image processing in vegetable plantation. IEEE Access 2021, 9, 10940–10950.

2. Wang, S.; Cheng, W.; Tan, H.; Guo, B.; Han, X.; Wu, C.; Yang, D. Study on Diversity of Poisonous Weeds in Grassland of the Ili Region in Xinjiang. Agronomy 2024, 14, 330.

3. Mishra, A.M.; Gautam, V. Weed Species Identification in Different Crops Using Precision Weed Management: A Review. In Proceedings of the ISIC, New Delhi, India, 25–27 February 2021; pp. 180–194.

4. Coleman, G.R.; Bender, A.; Hu, K.; Sharpe, S.M.; Schumann, A.W.; Wang, Z.; Bagavathiannan, M.V.; Boyd, N.S.; Walsh, M.J. Weed detection to weed recognition: Reviewing 50 years of research to identify constraints and opportunities for large-scale cropping systems. Weed Technol. 2022, 36, 741–757.

5. Sinlae, A.A.J.; Alamsyah, D.; Suhery, L.; Fatmayati, F. Classification of Broadleaf Weeds Using a Combination of K-Nearest Neighbor (KNN) and Principal Component Analysis (PCA). Sink. J. Dan Penelit. Tek. Inform. 2021, 6, 93–100.

6. Chen, S.; Memon, M.S.; Shen, B.; Guo, J.; Du, Z.; Tang, Z.; Guo, X.; Memon, H. Identification of weeds in cotton fields at various growth stages using color feature techniques. Ital. J. Agron. 2024, 19, 100021.

7. Janneh, L.L.; Zhang, Y.; Cui, Z.; Yang, Y. Multi-level feature re-weighted fusion for the semantic segmentation of crops and weeds. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 101545.

8. Zhang, Y.; Wa, S.; Liu, Y.; Zhou, X.; Sun, P.; Ma, Q. High-accuracy detection of maize leaf diseases CNN based on multi-pathway activation function module. Remote Sens. 2021, 13, 4218.

9. Zhou, X.; Chen, S.; Ren, Y.; Zhang, Y.; Fu, J.; Fan, D.; Lin, J.; Wang, Q. Atrous Pyramid GAN Segmentation Network for Fish Images with High Performance. Electronics 2022, 11, 911.

10. Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Vali, E.; Fountas, S. Combining generative adversarial networks and agricultural transfer learning for weeds identification. Biosyst. Eng. 2021, 204, 79–89.

11. Shao, Y.; Guan, X.; Xuan, G.; Gao, F.; Feng, W.; Gao, G.; Wang, Q.; Huang, X.; Li, J. GTCBS-YOLOv5s: A lightweight model for weed species identification in paddy fields. Comput. Electron. Agric. 2023, 215, 108461.

12. Islam, M.D.; Liu, W.; Izere, P.; Singh, P.; Yu, C.; Riggan, B.; Zhang, K.; Jhala, A.J.; Knezevic, S.; Ge, Y.; et al. Towards real-time weed detection and segmentation with lightweight CNN models on edge devices. Comput. Electron. Agric. 2025, 237, 110600.

13. Hu, R.; Su, W.H.; Li, J.L.; Peng, Y. Real-time lettuce-weed localization and weed severity classification based on lightweight YOLO convolutional neural networks for intelligent intra-row weed control. Comput. Electron. Agric. 2024, 226, 109404.

14. Muthulakshmi, M.; Sharvani, S.; Varshini, S.; MS, S. Weed Crop Identification and Classification Using Transfer Learning with Variants of MobileNet and DenseNet. In Proceedings of the 2025 International Conference in Advances in Power, Signal, and Information Technology (APSIT), Bhubaneswar, India, 23–25 May 2025; pp. 1–6.

15. Liu, Z.; Jung, C. Deep sparse depth completion using multi-scale residuals and channel shuffle. IEEE Access 2024, 12, 18189–18197.

16. Liu, X.; Sui, Q.; Chen, Z. Real time weed identification with enhanced mobilevit model for mobile devices. Sci. Rep. 2025, 15, 27323.

17. Chen, D.; Lu, Y.; Li, Z.; Young, S. Performance evaluation of deep transfer learning on multi-class identification of common weed species in cotton production systems. Comput. Electron. Agric. 2022, 198, 107091.

18. Fang, B.; Li, X.; Han, G.; He, J. Rethinking pseudo-labeling for semi-supervised facial expression recognition with contrastive self-supervised learning. IEEE Access 2023, 11, 45547–45558.

19. Mishra, A.M.; Kaur, P.; Singh, M.P.; Singh, S.P. A self-supervised overlapped multiple weed and crop leaf segmentation approach under complex light condition. Multimed. Tools Appl. 2024, 83, 68993–69018.

20. Cascante-Bonilla, P.; Tan, F.; Qi, Y.; Ordonez, V. Curriculum labeling: Revisiting pseudo-labeling for semi-supervised learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 6912–6920.

21. Güldenring, R.; Nalpantidis, L. Self-supervised contrastive learning on agricultural images. Comput. Electron. Agric. 2021, 191, 106510.

22. Ran, L.; Li, Y.; Liang, G.; Zhang, Y. Pseudo labeling methods for semi-supervised semantic segmentation: A review and future perspectives. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 3054–3080.

23. Lin, X.; Wa, S.; Zhang, Y.; Ma, Q. A dilated segmentation network with the morphological correction method in farming area image Series. Remote Sens. 2022, 14, 1771.

24. Kage, P.; Rothenberger, J.C.; Andreadis, P.; Diochnos, D.I. A review of pseudo-labeling for computer vision. arXiv 2024, arXiv:2408.07221.

25. Guo, Z.; Xue, Y.; Wang, C.; Geng, Y.; Lu, R.; Li, H.; Sun, D.; Lou, Z.; Chen, T.; Shi, J.; et al. Efficient weed segmentation in maize fields: A semi-supervised approach for precision weed management with reduced annotation overhead. Comput. Electron. Agric. 2025, 229, 109707.

26. Li, R.; Wang, X.; Cui, Y.; Xu, Y.; Zhou, Y.; Tang, X.; Jiang, C.; Song, Y.; Dong, H.; Yan, S. A Semi-Supervised Diffusion-Based Framework for Weed Detection in Precision Agricultural Scenarios Using a Generative Attention Mechanism. Agriculture 2025, 15, 434.

27. Li, J.; Chen, D.; Yin, X.; Li, Z. Performance evaluation of semi-supervised learning frameworks for multi-class weed detection. Front. Plant Sci. 2024, 15, 1396568.

28. Huang, Y.; Bais, A. Unsupervised domain adaptation for weed segmentation using greedy pseudo-labelling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2484–2494.

29. Zhang, L.; Zhang, Y.; Ma, X. A new strategy for tuning ReLUs: Self-adaptive linear units (SALUs). In Proceedings of the ICMLCA 2021: 2nd International Conference on Machine Learning and Computer Application, Shenyang, China, 17–19 December 2021; pp. 1–8.

30. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

31. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

32. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

33. Mehta, S.; Rastegari, M. Mobilevit: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

34. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

35. Pinasthika, K.; Laksono, B.S.P.; Irsal, R.B.P.; Shabiyya, S.; Yudistira, N. SparseSwin: Swin transformer with sparse transformer block. Neurocomputing 2024, 580, 127433.

36. Cui, J.; Zhang, Y.; Chen, H.; Zhang, Y.; Cai, H.; Jiang, Y.; Ma, R.; Qi, L. CSWin-MBConv: A dual-network fusing CNN and Transformer for weed recognition. Eur. J. Agron. 2025, 164, 127528.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).