Abstract

The transformative potential of big data across various industries has been demonstrated. However, the data held by different stakeholders often lack interoperability, resulting in isolated data silos that limit their overall value. Collaborative data efforts can make the whole worth more than the sum of its parts. Thus, big data sharing is crucial for transitioning from isolated data silos to integrated data ecosystems, thereby maximizing the value of big data. Despite its potential, big data sharing faces numerous challenges, including data heterogeneity, the absence of pricing models, and concerns about data security. A substantial body of research has been dedicated to addressing these issues. This paper offers the first comprehensive survey that formally defines and delves into the technical details of big data sharing. First, we formally define big data sharing as the act by which data sharers share big data so that sharees can find, access, and use it in agreed ways, and we differentiate it from related concepts such as open data, data exchange, and big data trading. We clarify the general procedures, benefits, requirements, and applications associated with big data sharing. Subsequently, we examine existing big data-sharing platforms, categorizing them into data-hosting centers, data aggregation centers, and decentralized solutions. We then identify the challenges in developing big data-sharing solutions and explain the existing approaches to these challenges. Finally, the survey concludes with a discussion of future research directions. This survey presents the latest developments and research in the field of big data sharing and aims to inspire further scholarly inquiry.

1. Introduction

The proliferation of the Internet of Things (IoT) [1,2], social media platforms [3], and related technologies has led to an unprecedented surge in data generation, collection, and processing. This high-volume, high-velocity, and high-variety data, commonly referred to as big data, represents a paradigm shift in information technology [4]. Beyond its scale, big data delivers measurable value across domains: it reduces operational costs, enhances efficiency, and enables data-driven decision making in industries [5], commerce [6], and public services [7]. For instance, Google’s advertising engine, powered by big data, accounted for more than 75% of the enterprise’s total revenue in 2024.

Recently, big data sharing, i.e., the act by which data sharers share big data so that sharees can find, access, and use it in agreed ways, has received extensive attention from industry and academia because the total value of shared data is considerably greater than the sum of its individual parts [8]. Moreover, certain tasks cannot be completed without big data from different stakeholders [9]. For example, accurate disease diagnosis requires large numbers of hospitalized cases from around the world, whereas results from a single hospital are far from sufficient [10].

Despite the recognized benefits of large-scale data sharing, data controllers, including enterprises, healthcare institutions, and other organizations, frequently withhold their datasets. This reluctance stems from a range of technical, legal, and strategic concerns [11]. First, enterprises have conflicting interests and are unwilling to let others capture the considerable value of their big data [12]. Second, many types of data, e.g., electronic health records and personal bank statements, are sensitive, and sharing them raises severe privacy concerns [13]. Furthermore, cross-border big data sharing can even be illegal under national and regional regulations on digital data and copyright [14].

These overarching barriers give rise to a set of formidable technical challenges that hinder the maturation of big data sharing [15,16,17]. To overcome the reluctance to share, a systematic framework must address these core problems. For instance, data from different sources often exist in heterogeneous formats that must be standardized for interoperability [15]. As a tradable commodity, big data requires a uniform value assessment mechanism and rational pricing models [16]. Most critically, robust security and privacy-preserving techniques are non-negotiable prerequisites to protect data before, during, and after the sharing process [17]. Effectively enabling big data sharing, therefore, hinges on systematically resolving these challenges.

Although the literature offers a variety of partial remedies, a holistic survey dedicated to big data sharing is still missing. Existing survey papers either treat big data and big data sharing in the abstract [4,18,19] or restrict their scope to a single facet, e.g., security and privacy [20,21], incentives [22], spatio-temporal data [23,24], machine-learning workflows [25], health informatics [26], Internet-of-Things streams [27], multimedia content [28], digital forensics investigations [29], or social media analytics [30]. Likewise, domain-specific studies concentrate on high-value silos such as scholarly repositories [31] or electronic health records [32], while cryptographic surveys focus on isolated mechanisms, e.g., proxy re-encryption [33]. Consequently, current knowledge remains fragmented; a comprehensive map of the technical landscape and application demands for general big data sharing is yet to be drawn.

To this end, we conduct a comprehensive survey of big data sharing from the perspectives of definition, applications, platforms, challenges, solutions, and future directions. We answer the following questions of broad concern to academia and industry. First, what is big data sharing and why is it so important? Second, what are the requirements and possible system architectures for developing big data-sharing platforms? Third, what are the technical challenges and feasible solutions to deliver a big data-sharing solution? Finally, how can emerging technologies help big data sharing? The unique contributions of this paper are as follows:

- To the best of our knowledge, this paper is the first comprehensive survey that formally defines and delves into the technical details of big data sharing.

- We present the readers with the state-of-the-art development and research of big data sharing by articulating the definition, general workflow, and requirements and summarizing the existing popular platforms, challenging issues, and solutions.

- Promising future directions, i.e., blockchain-based big data sharing and edge computing as big data-sharing infrastructure, are identified to inspire future research.

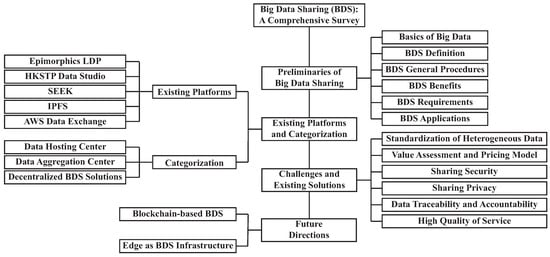

The survey structure is depicted in Figure 1. Section 2 clarifies the concept of big data sharing: we define the term, outline a generic sharing workflow, itemize its benefits and requirements, catalogue application domains, and compare it with related notions to expose subtle but important differences. Section 3 reviews representative platforms, classifying them by architecture into data-hosting centers, data aggregation centers, and fully decentralized solutions. Section 4 distills the key technical impediments to sharing and surveys the countermeasures proposed to date. Finally, Section 5 charts open research avenues.

Figure 1.

The structure of this survey, covering the concept, platforms, challenges, and solutions of big data sharing.

2. Preliminaries of Big Data Sharing

2.1. Basics of Big Data

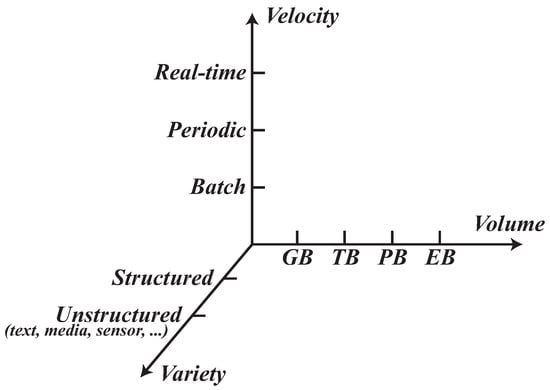

A foundational understanding of big data is essential before examining its sharing paradigms. The term, in circulation since the late 1990s, gained significant traction after it was featured in Communications of the ACM in 2009 [34]. The canonical definition of big data is encapsulated by the “3Vs” model depicted in Figure 2, a framework widely adopted by industry leaders such as Gartner, IBM, and Microsoft. The first dimension, volume, describes datasets of a scale so vast that they overwhelm the capacity of conventional software to capture, manage, and process them within a reasonable timeframe [18]. The second, velocity, signifies the rapid rate at which these data are generated and must be processed. Finally, variety refers to the heterogeneous nature of the data, which encompasses diverse formats and modalities ranging from structured tables to unstructured text and media.

Figure 2.

Primary characteristics of big data.

In addition to volume, variety, and velocity, another dimension, value, has garnered significant attention from both industry and academia in recent years. A paradigmatic illustration of big data’s economic and epidemiological value is Google’s now-classic 2009 influenza study [35]. By mining billions of anonymized search queries, the company produced real-time estimates of influenza activity that were markedly more timely than those disseminated by traditional public health surveillance centers. During influenza seasons, users’ query profiles deviated systematically from baseline patterns; the volume and geographic distribution of influenza-related keywords correlated strongly with the influenza-like-illness rates subsequently reported by surveillance systems. Google distilled 45 search queries whose temporal dynamics tracked ongoing outbreaks, embedded these signals in a linear regression model, and generated weekly estimates of influenza prevalence at the U.S. state level. The results, reported in Nature [36], demonstrated that passively collected digital traces can function as an early-warning system, underscoring the transformative potential of large-scale behavioral data for population health. Beyond social impact, big data holds the potential to generate revenue and reduce costs for enterprises, such as enhancing recommendation systems for e-commerce platforms and optimizing pricing strategies for airlines.
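The regression step of the influenza study can be illustrated with a minimal sketch. We assume the univariate log-odds form described for the Nature study, logit(P) = b0 + b1 · logit(Q), where P is the weekly fraction of influenza-like-illness (ILI) physician visits and Q is the aggregated fraction of ILI-related search queries. The function names and the noise-free synthetic data below are hypothetical and serve only to show how a fitted model turns a query fraction into a prevalence estimate.

```python
import math
from typing import List, Tuple


def logit(p: float) -> float:
    """Log-odds of a proportion strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def inv_logit(z: float) -> float:
    """Inverse of logit: maps log-odds back to a proportion."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_ols(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


def fit_flu_model(query_fracs: List[float], visit_fracs: List[float]) -> Tuple[float, float]:
    """Fit logit(P) = b0 + b1 * logit(Q) on weekly (Q, P) observations."""
    return fit_ols([logit(q) for q in query_fracs], [logit(p) for p in visit_fracs])


def nowcast(b0: float, b1: float, query_frac: float) -> float:
    """Estimate the current ILI visit fraction from the current query fraction."""
    return inv_logit(b0 + b1 * logit(query_frac))


if __name__ == "__main__":
    # Hypothetical, noise-free weekly data generated with b0 = -1.0, b1 = 0.9.
    qs = [0.001, 0.002, 0.004, 0.008, 0.012]
    ps = [inv_logit(-1.0 + 0.9 * logit(q)) for q in qs]
    b0, b1 = fit_flu_model(qs, ps)
    print(round(b0, 3), round(b1, 3))  # recovers the generating coefficients
    print(round(nowcast(b0, b1, 0.006), 4))
```

Working on the log-odds scale keeps every estimate inside (0, 1), which a plain linear fit on raw proportions would not guarantee.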

2.2. Definition of Big Data Sharing

While big data holds the potential to generate significant value for both society and enterprises, several challenges impede its development, including inefficient data representation, suboptimal analytical mechanisms, and a lack of data cooperation. This paper addresses the issue of data cooperation through the lens of big data sharing.

Formally, big data sharing refers to the act by which data sharers share big data so that sharees can find, access, and use it in agreed ways. Typically, the volume of shared data is at least on the gigabyte (GB) scale. Several related concepts, such as open data [37], data exchange [38,39], and big data trading [40], bear similarities to big data sharing but also exhibit distinct differences. The following discussion elucidates these concepts and their distinctions from big data sharing.

In its conventional usage, open data denotes the provision of research-generated and governmental datasets to the wider academic community and even the general public. The scope of these datasets is typically restricted to scholarly outputs or citizen statistics, and the ethos is one of maximal openness. By contrast, “big data sharing” embraces data assets whose volume, heterogeneity, and economic value far exceed those of traditional research and governmental repositories. The sheer scale and sensitivity of such resources render openness untenable; instead, they mandate high-performance infrastructures, uniform semantic representations, and security architectures capable of guaranteeing confidentiality, integrity, and controlled access. Consequently, while open data prioritizes transparency, big data sharing is defined by the imperative of secure, yet still efficient, dissemination.

Data exchange is construed in two principal senses. The first, predominant in the database literature, denotes the algorithmic transformation of an instance valid under a source schema into an instance conforming to a target schema while preserving semantic fidelity. Because this interpretation concerns schema mapping rather than dissemination, it lies outside the scope of the present study. The second construal, adopted here, characterizes data exchange as the bilateral transfer of usage rights over a dataset between autonomous parties. Under this reading, the term “exchange” presupposes symmetry: each participant simultaneously cedes and acquires identical legal–technical entitlements, namely, the prerogative to access and exploit the data in question.

Big data sharing, by contrast, is not predicated on reciprocity; its objective is the scalable discovery, secure access, and value-generating utilization of massive, heterogeneous datasets. Consequently, the two paradigms diverge in purpose: data exchange centers on the equitable reallocation of rights, whereas big data sharing emphasizes the facilitation of downstream use.

Big data trading involves the buying and selling of large datasets [41]. This topic has gained prominence in recent years as enterprises and commercial organizations recognize the potential to monetize valuable collected data. Concurrently, other entities, such as universities and companies, require data for purposes like research and product quality improvement. Big data trading can be viewed as a subset of big data sharing, as it is confined to commercial use, whereas big data sharing is not restricted by commercial considerations.

As summarized in Table 1, the nuanced differences between big data sharing, open data, data exchange, and big data trading can be understood across the dimensions of data types, incentives, and commerciality. Big data sharing serves as the most encompassing paradigm, which is characterized by its flexibility across all three dimensions. In contrast, open data is a significantly narrower term, which is typically confined to non-commercial, non-remunerative exchanges of scholarly and governmental data. Data exchange is distinguished by its transactional logic, which is predicated on a reciprocal transfer of usage rights rather than the unrestricted forms of reward possible in big data sharing. Finally, big data trading is conceptualized as a specialized subset of big data sharing, defined explicitly by its commercial imperative, whereas the parent concept remains agnostic to commerciality.

Table 1.

Relationship and differences between big data sharing, open data, data exchange, and big data trading.

2.3. General Procedures of Big Data Sharing

The process of big data sharing can be conceptualized as a three-stage workflow: data publishing, data search, and the final act of data sharing. The process commences with data publishing, wherein data owners prepare and announce their datasets. The objective of this initial phase is not necessarily to release the raw data but rather to populate the sharing platform’s catalog with descriptive metadata, thereby informing potential users of the data’s existence and content while allowing owners to retain control and implement access policies. Subsequently, prospective users engage in data searches, querying the platform to discover datasets that meet their specific requirements [42]. This discovery phase is critical, as the platform’s utility is largely determined by its ability to efficiently connect its user base with valuable data. The workflow culminates in the terminal phase of data sharing, which is a transactional step initiated by an access request from a user. The data owner retains unilateral authority to approve or deny this request. Upon approval, the transaction is recorded, formally establishing the owner as the sharer and the user as the sharee.
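The three stages can be sketched as a small in-memory model. This is a minimal illustration under stated assumptions, not a reference design: the class and method names (`SharingPlatform`, `publish`, `search`, `request_access`, `decide`) are hypothetical, the catalog stores only descriptive metadata, and an append-only list stands in for whatever tamper-resistant record a real platform would use.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Set, Tuple


class RequestStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DENIED = "denied"


@dataclass
class CatalogEntry:
    """Descriptive metadata only; the raw data stay on the owner's side."""
    dataset_id: str
    owner: str
    title: str
    keywords: Set[str]


@dataclass
class AccessRequest:
    dataset_id: str
    requester: str
    status: RequestStatus = RequestStatus.PENDING


@dataclass
class SharingPlatform:
    catalog: Dict[str, CatalogEntry] = field(default_factory=dict)
    requests: List[AccessRequest] = field(default_factory=list)
    # Append-only record of completed shares: (sharer, sharee, dataset_id).
    ledger: List[Tuple[str, str, str]] = field(default_factory=list)

    def publish(self, entry: CatalogEntry) -> None:
        """Stage 1: announce a dataset by adding its metadata to the catalog."""
        self.catalog[entry.dataset_id] = entry

    def search(self, keyword: str) -> List[CatalogEntry]:
        """Stage 2: find entries whose title contains or keywords equal the term."""
        kw = keyword.lower()
        return [
            e for e in self.catalog.values()
            if kw in e.title.lower() or kw in {k.lower() for k in e.keywords}
        ]

    def request_access(self, dataset_id: str, requester: str) -> AccessRequest:
        """Stage 3a: a prospective sharee asks for access."""
        if dataset_id not in self.catalog:
            raise KeyError(f"unknown dataset {dataset_id!r}")
        req = AccessRequest(dataset_id, requester)
        self.requests.append(req)
        return req

    def decide(self, req: AccessRequest, decided_by: str, approve: bool) -> None:
        """Stage 3b: only the owner may approve or deny; approvals are recorded."""
        owner = self.catalog[req.dataset_id].owner
        if decided_by != owner:
            raise PermissionError("only the data owner can decide on a request")
        if req.status is not RequestStatus.PENDING:
            raise ValueError("request has already been decided")
        req.status = RequestStatus.APPROVED if approve else RequestStatus.DENIED
        if approve:
            # Recording the approval fixes the roles: owner = sharer, requester = sharee.
            self.ledger.append((owner, req.requester, req.dataset_id))


if __name__ == "__main__":
    platform = SharingPlatform()
    platform.publish(CatalogEntry("ds1", "hospital_a", "ICU vital signs 2023", {"icu", "ehr"}))
    hits = platform.search("ehr")
    request = platform.request_access(hits[0].dataset_id, "lab_b")
    platform.decide(request, decided_by="hospital_a", approve=True)
    print(platform.ledger)  # [('hospital_a', 'lab_b', 'ds1')]
```

Keeping the owner check inside `decide` mirrors the requirement that the data owner alone holds authority over each request.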

2.4. Benefits of Big Data Sharing

Big data sharing generates multi-level value. Data sharees obtain access to high-volume, heterogeneous datasets that can be repurposed for research, innovation, or operational optimization. Data sharers, in turn, accrue reputational capital, expanded market visibility, and direct monetary remuneration where licensing permits. At the societal level, aggregated data assets fuel scientific discovery, evidence-based policy, and infrastructural efficiencies, thereby advancing the public good. Benefits accruing to individual sharees are deliberately omitted here, as they are contingent upon domain-specific use cases and cannot be meaningfully generalized.

The benefits for data sharers can be summarized as follows:

- For researchers, sharing scholarly data increases the visibility of their work and can strengthen their academic reputations. Shared materials typically comprise full texts, source code, experimental tools, and evaluation datasets. Open data encourage replication and comparative studies: open-access articles record 89% more full-text downloads and 42% more PDF downloads than paywalled equivalents [43], while publicly available medical datasets attract 69% more citations after controlling for journal impact, publication date, and institutional affiliation [44].

- For enterprises and public sectors, big data sharing can enhance recognition and foster ongoing collaboration. Over the past decade, national open-government portals, exemplified by data.gov.uk, data.gov, and data.gov.sg, have proliferated, furnishing citizens, firms, and researchers with standardized access to public-sector datasets. Empirical studies indicate that such transparency measures enhance institutional trust and stimulate civic engagement [45]. Parallel developments are evident in the private sector, where enterprises leverage big data sharing as a strategic marketing and innovation instrument. A prominent modality is the datathon: firms release curated datasets to the public and sponsor predictive-modelling contests. Kaggle, the largest online community of data scientists and machine-learning practitioners, currently hosts several thousand public datasets together with reproducible code notebooks, thereby lowering transaction costs and fostering collaborative analytics between industry and academia.

- Monetary rewards are a clear incentive for sharers engaging in big data sharing, particularly from a commercial perspective. The daily deluge of high-value data produced by billions of low-cost devices and users constitutes a major commercial asset. Facebook, for instance, with over two billion monthly active accounts, generates approximately four petabytes of new data each day. Legal prohibitions on direct sale, imposed by privacy statutes and platform terms of service, do not diminish this asset’s worth; instead, the expected future monetization of these data underpins the market capitalization of Facebook’s parent company, Meta Platforms, which surpassed USD 1.45 trillion in October 2024. The immense volume and value of data create unprecedented opportunities for monetization and new business models. In particular, dedicated trading venues such as Japan Data Exchange Inc. and Shanghai Data Exchange Corp. have emerged to facilitate the market-mediated exchange of big data rights while respecting regulatory constraints.

The benefits of big data sharing for the public good can be summarized as follows:

- Promotion of academic integrity: Big data sharing fosters academic integrity, which is the ethical standard that mandates the avoidance of plagiarism and cheating in academic endeavors. By making scholarly data accessible, research findings become more reproducible, as others can replicate specific experiments. This transparency encourages researchers to exercise greater caution when publishing their findings, thereby creating a virtuous cycle that enhances academic integrity. More broadly, sharing big data ensures that the evidence underpinning scientific results is preserved, which is crucial for the advancement of science.

- Incentivization for data quality management: Sharing high-velocity data (e.g., from IoT networks) facilitates real-time decision making in domains like smart cities and supply chain management. However, high-velocity data are often of low quality, and making these data publicly available creates reputational incentives for researchers to implement rigorous data management workflows and to enforce stringent quality-control procedures. Large-scale repositories frequently contain redundant records that inflate storage costs and degrade query performance. Sharers can exploit big-data reduction techniques, e.g., deduplication, stratified sampling, or lossless compression, to eliminate superfluous information while preserving analytical utility. The resulting high-quality datasets not only attract a broader user base but also lower the indirect costs (bandwidth, replication, and curation) imposed on the hosting infrastructure without eroding the intrinsic scientific value of the resource.

- Facilitation of collaboration and innovation: Big data sharing encourages increased collaboration and connectivity among researchers, potentially leading to significant new discoveries within a field. Data serve as the bedrock of scientific progress and are typically acquired through substantial effort and publicly funded projects. However, their utility is often confined to generating scientific publications, leaving much data underutilized. Big data sharing offers a more efficient approach by enabling researchers to share resources. Furthermore, big data sharing enables societal-level insights that are impossible with smaller datasets. Examples include the Google Flu study [35] and large-scale climate modelling [46].
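Two of the reduction techniques named under data quality management, deduplication and lossless compression, can be combined in a few lines. The sketch below is illustrative and rests on assumptions: records are JSON-serializable dictionaries, only exact duplicates are removed (near-duplicate detection is out of scope), and the helper names are hypothetical. Stratified sampling is omitted because, unlike the other two, it discards information.

```python
import hashlib
import json
import zlib
from typing import Dict, Iterable, List


def record_fingerprint(record: Dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def deduplicate(records: Iterable[Dict]) -> List[Dict]:
    """Keep the first occurrence of each exact-duplicate record."""
    seen = set()
    unique = []
    for rec in records:
        fp = record_fingerprint(rec)
        if fp not in seen:
            seen.add(fp)
            unique.append(rec)
    return unique


def compress_records(records: List[Dict]) -> bytes:
    """Losslessly compress records serialized as JSON Lines."""
    payload = "\n".join(json.dumps(r, sort_keys=True) for r in records)
    return zlib.compress(payload.encode("utf-8"), level=9)


def decompress_records(blob: bytes) -> List[Dict]:
    """Invert compress_records exactly."""
    text = zlib.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.split("\n") if line]
```

Fingerprinting a canonical encoding rather than the raw bytes treats `{"id": 1, "v": 2.5}` and `{"v": 2.5, "id": 1}` as the same record, which is usually the intended notion of redundancy.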

While the benefits of big data sharing are evident from the perspectives of data providers and the public good, it is important to acknowledge that there are potential drawbacks. For instance, sharing sensitive data can raise privacy concerns, and determining data ownership can become complex during the sharing process. However, these challenges are not the focus of this paper. Readers interested in exploring the potential drawbacks of big data sharing are encouraged to consult [47,48] for further information.

2.5. Requirements of Big Data-Sharing Solutions

While big data sharing offers numerous benefits, designing an effective solution is complex due to several critical requirements. This section identifies and elaborates on the fundamental requirements for big data-sharing solutions: security and privacy, flexible access control, reliability, and high performance.

First, security and privacy are paramount in big data sharing: without rigorous safeguards, prospective participants will withhold both their data and their patronage. These requirements decompose into three interrelated dimensions: data security (protection against unauthorized access or alteration), user privacy (preservation of the identity and behavior of data consumers), and data privacy (assurance that the content itself does not reveal sensitive information):

- Data security. Big data sharing is inherently dyadic: a trustworthy solution must guarantee that no entity other than the two designated parties can read or modify the dataset. Access and alteration rights must be strictly predicated on explicit, fine-grained authorizations issued by the data sharer. Moreover, the architecture has to provide verifiable recovery mechanisms that can reconstruct both the data and the immutable sharing log in the event of corruption or malicious destruction.

- User privacy. Within big data-sharing ecosystems, the identities of both the data sharer and sharee must be shielded from external observers; ideally, they should also remain mutually concealed. The transaction should foreground the dataset itself while rendering the participating parties provably anonymous.

- Data privacy. Datasets such as electronic health records combine high analytic value with extreme sensitivity. To preserve privacy while enabling big data sharing, custodians must apply protective transformations, e.g., masking, generalization, and cryptographic obfuscation, before any external release.
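The protective transformations listed under data privacy can be illustrated on a single EHR-like record. This is a minimal sketch, assuming hypothetical field names (`patient_id`, `age`, `zip`, `diagnosis`) and using keyed pseudonymization (HMAC-SHA256) as the masking step. It gives no formal guarantee such as k-anonymity or differential privacy; a real release would also need to check the generalized attributes against re-identification risk.

```python
import hashlib
import hmac
from typing import Dict


def mask_identifier(value: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed pseudonym (truncated HMAC-SHA256).

    Without the key, pseudonyms cannot be recomputed from guessed identifiers,
    which blocks simple dictionary attacks; the same identifier always maps to
    the same pseudonym, so one patient's records stay linkable within a release.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize_age(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a band such as '40-49'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Keep only the leading digits of a postal code, e.g. '02139' -> '021**'."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)


def protect_record(record: Dict, secret_key: bytes) -> Dict:
    """Apply masking and generalization before a record leaves the custodian."""
    return {
        "patient": mask_identifier(record["patient_id"], secret_key),
        "age_band": generalize_age(record["age"]),
        "zip": generalize_zip(record["zip"]),
        "diagnosis": record["diagnosis"],  # analytic payload released unchanged
    }
```

The secret key stays with the custodian; rotating it between releases prevents pseudonyms from being linked across datasets, at the cost of cross-release linkage.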

Second, a big data-sharing platform must implement fine-grained, policy-based, flexible access control that explicitly answers three questions: (i) what is being shared (the data object); (ii) with whom it is shared (the recipient set); and (iii) how it is shared (the technical modality). Candidate modalities include the following:

- Big data preview. Under a preview regime, the sharee receives only a down-sampled or fragmentary surrogate, e.g., a textual excerpt, a low-resolution video frame, or an audio snippet, rendered through a functionality-restricted viewer. This partial disclosure permits value assessment while withholding the native dataset.

- Search over big data. The sharer exposes a controlled query interface that accepts only pre-approved query types, e.g., keyword, range, ranked, or similarity search, while restricting the sharee to search operations. The sharer retains full authority over permissible query grammars and returned data formats, thereby prohibiting bulk retrieval or direct inspection of the underlying corpus.

- Nearline computation. The sharee can perform operations using a combination of predefined interfaces, extending beyond the search to include actions like addition, deletion, and updates. “Nearline” indicates that computation is nearly online and quickly accessible without human intervention.

- Big data transfer. The sharer directly transfers the data to the sharee, allowing for a wide range of operations. Post-transfer operations depend on the contractual agreement between the parties. For instance, if data ownership is not transferred, the sharee is legally prohibited from further disseminating the data.
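A policy that answers the three questions (what, with whom, how) can be encoded as a small immutable record and checked on every request. The sketch below is hypothetical: the names are our own, and it assumes, as a simplification not stated in the text, that the four modalities form a hierarchy in which a grant at one level also admits every lower level. Search requests are additionally restricted to the query types the sharer has pre-approved.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import FrozenSet, Optional


class Modality(IntEnum):
    """Sharing modalities, ordered (by assumption) from least to most disclosure."""
    PREVIEW = 1
    SEARCH = 2
    NEARLINE = 3
    TRANSFER = 4


@dataclass(frozen=True)
class SharingPolicy:
    data_object: str                    # (i) what is shared
    recipients: FrozenSet[str]          # (ii) with whom it is shared
    modality: Modality                  # (iii) how it is shared (highest level granted)
    allowed_queries: FrozenSet[str] = frozenset()  # pre-approved query types


def is_permitted(policy: SharingPolicy, requester: str, data_object: str,
                 requested: Modality, query_type: Optional[str] = None) -> bool:
    """Return True only if every policy dimension admits the request."""
    if data_object != policy.data_object:
        return False
    if requester not in policy.recipients:
        return False
    # Assumed hierarchy: a grant at one level also admits all lower levels.
    if requested > policy.modality:
        return False
    # Search requests must use a query type the sharer has whitelisted.
    if requested == Modality.SEARCH:
        return query_type in policy.allowed_queries
    return True
```

For example, a policy granting `Modality.SEARCH` with `allowed_queries=frozenset({"keyword", "range"})` admits a preview or a range query from a listed recipient, but rejects similarity search, nearline computation, and bulk transfer.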

Third, a big data-sharing architecture must guarantee demonstrably high reliability: any protracted outage or data loss immediately erodes trust and undermines the economic and scientific incentives for sharing. The platform should exhibit minimal risk of malfunction, as system failures could result in significant loss of data value. Avoiding a single point of failure is key, and decentralization is critical to mitigating this risk. Reliability must encompass all platform functions: data publishing, data search, and data sharing. Once data are published, they should remain searchable by prospective sharees and shareable by the data owner. Search results must be accurate and comprehensive, and predefined rules must be upheld after sharing.
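The benefit of removing a single point of failure can be made concrete with a simple availability estimate. As a simplifying assumption not made in the text, suppose a dataset is replicated on n nodes that fail independently, each available with probability p; the data remain reachable as long as at least one replica is up:

```latex
A_n = 1 - (1 - p)^n, \qquad
\text{e.g. } p = 0.99:\quad A_1 = 0.99, \quad A_3 = 1 - (0.01)^3 = 0.999999 .
```

Correlated failures, such as a shared power supply, a common software bug, or a single administrator, erode this gain, which is one motivation for spreading replicas across independent administrative domains.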

Finally, a big data-sharing platform must deliver sustained high performance because suboptimal throughput or latency quickly negates the utility of massive datasets and discourages prospective users. The platform must efficiently handle numerous users and transactional records, including publishing, searching, and sharing activities. Users span enterprises, organizations, government sectors, and individuals, while the data involved are of high volume. The platform should facilitate efficient data publication and sharing with high-performance data searches enabling sharees to promptly discover needed datasets.
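One standard way to keep catalog search fast as the number of published datasets grows is an inverted index over dataset metadata, so that a query touches only the datasets containing its terms instead of scanning every catalog entry. The sketch below assumes plain-text metadata and conjunctive (AND) keyword queries; the class name `MetadataIndex` is hypothetical, and ranking, stemming, and persistence are deliberately left out.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

TOKEN = re.compile(r"[a-z0-9]+")


def tokenize(text: str) -> List[str]:
    """Lower-case alphanumeric tokens; punctuation is treated as a separator."""
    return TOKEN.findall(text.lower())


class MetadataIndex:
    """Inverted index: term -> set of dataset IDs whose metadata contain it."""

    def __init__(self) -> None:
        self._postings: Dict[str, Set[str]] = defaultdict(set)

    def add(self, dataset_id: str, metadata_text: str) -> None:
        for term in tokenize(metadata_text):
            self._postings[term].add(dataset_id)

    def search(self, query: str) -> Set[str]:
        """Return datasets whose metadata contain every query term."""
        terms = tokenize(query)
        if not terms:
            return set()
        # Intersect the smallest posting lists first to shrink work early.
        lists = sorted((self._postings.get(t, set()) for t in terms), key=len)
        result = set(lists[0])
        for plist in lists[1:]:
            result &= plist
            if not result:
                break
        return result
```

Usage: after `idx.add("ds1", "ICU vital signs, hospital EHR 2023")`, the query `idx.search("EHR 2023")` returns `{"ds1"}` without examining unrelated entries.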

2.6. Big Data-Sharing Applications

Big data sharing finds applications across various domains, including healthcare [49], supply chain management [50], open government [45], and clean energy [51]. This discussion focuses on two prominent applications that have garnered significant industrial and academic interest in recent years: healthcare and data trading.

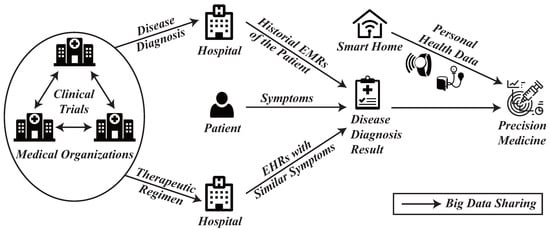

Healthcare is an essential societal domain: morbidity, trauma, and medical emergencies occur continuously, generating an unremitting demand for accurate diagnosis, effective intervention, and long-term condition management. These activities produce vast, heterogeneous data assets, e.g., electronic health records (EHRs) generated by hospitals, trial datasets curated by research organizations, and real-time wellness measurements collected in smart-home environments. Interoperability among these disparate sources, achieved through secure big data sharing, promises to accelerate biomedical discovery, enhance clinical decision making, and optimize population-level health outcomes. In 2020, the U.S. National Institutes of Health, the world’s largest funder of biomedical research, finalized a policy supporting healthcare data sharing [52].

First, big data sharing can enhance the understanding of individual hospital cases [53]. When a hospital admits a new patient, particularly one with atypical symptoms, physicians must reference past cases with similar characteristics. Additionally, the patient’s historical EHRs are invaluable for accurate disease diagnosis. In the medical field, big data sharing, known as health information exchange (HIE), has become a prevalent means of exchanging EHRs between hospitals. This exchange enables doctors to gain a deeper understanding of various cases and diseases.

Moreover, big data sharing aids in the discovery of scientific insights, such as disease prediction and therapeutic regimen development, through the aggregation and analysis of clinical trials [54]. In recent years, machine learning-based medical data analysis has attracted considerable attention from governments, industry, and academia. Machine learning approaches require extensive datasets as input, which a single medical organization may struggle to provide. Consequently, the aggregation of clinical trials is crucial, and big data sharing serves as a means to achieve this.

Finally, sharing data from smart homes significantly contributes to precision medicine [49]. With advancements in Internet of Things technology, smart healthcare devices have become commonplace in daily life, such as smartwatches for heart rate monitoring and smart sphygmomanometers for blood pressure measurement. The vast array of personal health data collected is invaluable for precision medicine, allowing doctors to consider individual variability in environment and lifestyle when devising treatment plans or offering preventive advice.

Figure 3 illustrates a prototypical big data-sharing ecosystem in healthcare. Clinical trial datasets are pooled among research institutes to expedite investigations of existing diseases and to evaluate novel therapeutic regimens. Hospitals integrate patients’ longitudinal EHRs with real-time symptomatology and with EHR cohorts exhibiting comparable phenotypes to refine diagnoses. Concurrently, personal health streams generated in smart home environments are channeled into precision-medicine pipelines, enabling individualized therapeutic strategies.

Figure 3.

Big data sharing for healthcare.

Big data trading has crystallized into a distinct business paradigm driven by the concomitant surge in data volume and commercial demand [55]. Both industry consortia and academic communities are actively articulating architectural and economic frameworks tailored to this emergent commodity, whose defining characteristics, including non-rivalry, experience-good properties, and economies of scale, differentiate it sharply from conventional assets.

Industrial uptake. QUODD (https://www.quodd.com/, accessed on 1 September 2025) curates a vertically integrated marketplace specializing in anonymized feeds from global financial institutions and FinTech operators. Amazon’s AWS Data Exchange federates hundreds of licensed data providers with downstream analytics consumers through a fully managed, API-driven brokerage. In China, state-level industrial policy has incubated quasi-public institutes: the Guiyang Global Big Data Exchange (2014) (https://www.gzdex.com.cn/, accessed on 1 September 2025) and the Shanghai Data Exchange (2016) (https://www.chinadep.com/, accessed on 1 September 2025) operate under regulatory sandboxes that recognize data as a factor of production equivalent to land, labor, and capital.

Academic advances. Complementing these commercial deployments, a growing corpus of scholarship interrogates the micro-economic and algorithmic underpinnings of data markets. Zheng et al. introduce Arete, the first decentralized architecture for mobile crowd-sensed data, which dynamically aligns online pricing with contributor remuneration through a Nash-bargaining reward-splitting mechanism [56]. Oh et al. formulate a multi-stage, non-cooperative game in which heterogeneous providers optimize the trade-off between privacy-preserving noise injection and revenue-maximizing valuation [55]. Zhao et al. quantify how increased data variety alters the strategic interaction between content providers and internet-service providers under sponsored-data tariffs, revealing a non-monotonic relationship between variety and market surplus [57].

3. Existing Platforms and Categorization

Big data sharing has attracted extensive attention from academia, industries, and governments, and various big data-sharing platforms have been developed. This section first surveys representative big data-sharing platforms currently operational worldwide; subsequently, we propose a systematic taxonomy of these systems and delineate their underlying mechanisms.

3.1. Existing Platforms

There are many big data-sharing platforms worldwide. In the following, we introduce five representative big data-sharing platforms from different regions, for different purposes (academic/commercial), and with different system architectures (centralized/decentralized).

3.1.1. Epimorphics Linked Data Platform

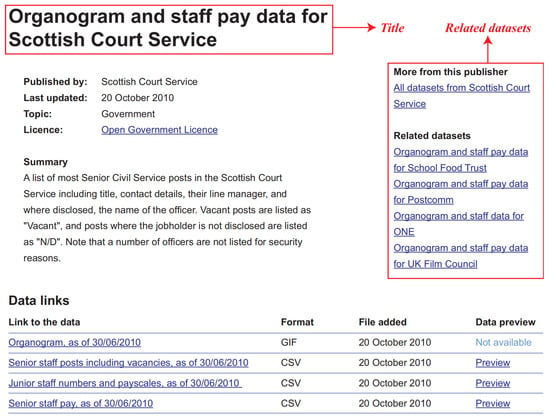

The Linked Data Platform (LDP) (https://www.epimorphics.com/, accessed on 1 September 2025) is an advanced solution for big data sharing developed by Epimorphics Ltd., Bristol, UK. The LDP serves dual purposes. First, it functions as a software solution that can be deployed as local infrastructure to facilitate big data sharing. For instance, a university might implement the LDP to enable data sharing among its faculties, departments, and administrative offices. Second, the LDP acts as a platform utilized by the U.K. government to host data accessible to a broad spectrum of public and private sectors. This paper focuses exclusively on the latter application as it pertains to existing platforms for big data sharing.

Users of the LDP can publish datasets and display their descriptions and links. As illustrated in Figure 4, these descriptions encompass various elements, including the title, publisher, publication date, latest update, topic, license, summary, data links, and contact information. The data links section may contain multiple datasets, each containing a URL to the database, data format, publication date, and a preview option if enabled by the data provider. Essentially, data providers retain their data on their own servers while publishing descriptions of their datasets on the LDP.

Figure 4.

An example dataset provided by Epimorphics LDP.

In addition to data publishing, the LDP offers robust data search capabilities. Users can search for big data using general keywords; datasets with descriptions containing these keywords will be displayed. Moreover, users can refine their searches by filtering datasets based on criteria such as publisher, topic, license, and data format. When a dataset’s description is displayed, related datasets are also suggested to the user. Regarding data sharing, the LDP supports only the download of raw data, as the original datasets are maintained by the data owners themselves. It is important to note that the LDP does not track the usage of the data once it has been downloaded from the data owners.
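The publish-and-search behavior described above can be sketched as a small in-memory catalogue. This is a minimal illustration, not the LDP's actual data model or API: the field names loosely mirror the description elements shown in Figure 4, while the `Distribution` and `DatasetDescription` classes, the `search` and `related` functions, and the same-topic heuristic for related datasets are all hypothetical. Only metadata is stored; each distribution merely links to the owner's server.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Distribution:
    """One item in the 'data links' section; the data stay on the owner's server."""
    url: str
    media_format: str  # e.g. "CSV", "JSON"


@dataclass
class DatasetDescription:
    """Catalogue entry: metadata only, no raw data (hypothetical schema)."""
    title: str
    publisher: str
    topic: str
    license: str
    summary: str
    distributions: list = field(default_factory=list)


def search(catalogue, keywords, fmt=None, **facets):
    """Keyword match on title and summary, then exact-match facet filters."""
    words = keywords.lower().split()
    hits = []
    for d in catalogue:
        text = f"{d.title} {d.summary}".lower()
        if not all(w in text for w in words):
            continue
        if any(getattr(d, name) != value for name, value in facets.items()):
            continue
        if fmt and fmt not in {x.media_format for x in d.distributions}:
            continue
        hits.append(d)
    return hits


def related(catalogue, entry):
    """Suggest other entries on the same topic (a simple stand-in heuristic)."""
    return [d for d in catalogue if d is not entry and d.topic == entry.topic]


# Invented example entries; URLs use the reserved example.org domain.
catalogue = [
    DatasetDescription("River flow", "Agency A", "environment", "CC-BY-4.0",
                       "Daily river flow readings",
                       [Distribution("https://data.example.org/flow.csv", "CSV")]),
    DatasetDescription("River quality", "Agency A", "environment", "CC-BY-4.0",
                       "Monthly river water quality samples",
                       [Distribution("https://data.example.org/quality.json", "JSON")]),
    DatasetDescription("House prices", "Agency B", "housing", "CC-BY-4.0",
                       "Monthly house price index",
                       [Distribution("https://data.example.org/prices.csv", "CSV")]),
]
```

A production catalogue would typically use an inverted index rather than a linear scan, but the division of labor is the same: the platform answers queries over descriptions, and retrieval of the data itself goes to the owner's URL.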

The LDP’s primary strength lies in managing high semantic variety, allowing data owners to publish rich, standardized metadata about their datasets without hosting the data itself. However, because data providers retain their data on their own servers, the LDP does not directly address the challenges of high volume or high velocity; these responsibilities are delegated to the original data owners.

3.1.2. HKSTP Data Studio

The Hong Kong Science and Technology Parks Corporation (HKSTP) established a pivotal platform, originally launched as Data Studio (https://sp.hkstp.org/, accessed on 1 September 2025) in 2007, to advance the government’s smart city initiative. This platform has since evolved into the Digital Service Hub, broadening its mission to foster a comprehensive ecosystem for digital innovation centered on AI models, big data, and high-performance computing. Its primary objective is to serve as a nexus where public and private entities can collaborate, leveraging a rich repository of data to develop cutting-edge solutions. The Hub hosts foundational government data spanning areas like city management and employment while also providing exclusive access to high-value, specialized datasets from strategic partners such as the Hospital Authority (HA) and Radio Television Hong Kong (RTHK).

The methodology for data interaction on Data Studio has matured significantly beyond its initial offerings. Initially, Data Studio enabled static data sharing via shareable links and real-time sharing of high-velocity data through Application Programming Interfaces (APIs). The platform later introduced far more sophisticated and secure data collaboration models. A key innovation is the establishment of controlled environments such as the Data Collaboration Lab, launched in partnership with the Hospital Authority. This so-called “data clean room” model allows users to analyze sensitive, anonymized clinical data without direct access or extraction, thereby ensuring privacy, security, and full auditability. It is further complemented by integrated services such as “HPC as a Service”, which provides the computational power needed to process these large-scale datasets directly within the Hub’s secure infrastructure, representing a shift toward holistic, value-added analytical environments. The “data clean room” model is a direct architectural response to the data privacy and fine-grained access control requirements described in Section 2.5.

This evolution addresses critical limitations inherent in earlier data-sharing platforms. The previous challenge of being unable to track how shared data are utilized is fundamentally resolved by the data clean room model, where all queries and analytical activities are logged, providing data owners with robust governance and oversight. This controlled environment inherently mitigates copyright and usage concerns far more effectively than a simple user registry for APIs. However, the Digital Service Hub remains a proprietary, service-oriented platform focused on the Hong Kong ecosystem. Its source code is not publicly available, and its operational model is to function as a central innovation hub rather than to offer a replicable, open-source data-sharing solution for other enterprises to deploy independently.
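The clean-room pattern can be illustrated with a toy query gateway. This is a sketch under stated assumptions, not the Digital Service Hub's implementation, whose internals are not public: analysts may request only aggregate statistics, results computed over fewer than `min_group_size` records are suppressed (a common small-cell rule, assumed here), and every request, including refused ones, is appended to an audit log. `CleanRoom`, `AGGREGATES`, and their parameters are hypothetical.

```python
import statistics
from datetime import datetime, timezone

AGGREGATES = {"count": len, "mean": statistics.mean, "median": statistics.median}


class CleanRoom:
    """Toy gateway: raw rows stay inside; only audited aggregates come out."""

    def __init__(self, rows, min_group_size=5):
        self._rows = rows                    # sensitive records, never returned
        self.min_group_size = min_group_size
        self.audit_log = []                  # one entry per request, allowed or not

    def aggregate(self, analyst, column, fn, where=lambda row: True):
        if fn not in AGGREGATES:
            raise ValueError(f"unsupported aggregate: {fn}")
        values = [row[column] for row in self._rows if where(row)]
        allowed = len(values) >= self.min_group_size
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "analyst": analyst,
            "column": column,
            "aggregate": fn,
            "rows_matched": len(values),
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError("result suppressed: too few matching records")
        return AGGREGATES[fn](values)
```

Because the log records who asked what and how many records matched, data owners can review usage after the fact, which is precisely what link- or API-based sharing cannot offer once the data have left the platform.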

HKSTP Data Studio is designed to handle data characterized by high variety and velocity. It aggregates diverse datasets from public and private sectors and supports real-time data sharing via APIs, making it suitable for dynamic smart city applications. To tackle the computational demands of high-volume datasets, the platform integrates “HPC as a Service,” providing the necessary power for large-scale analysis within its secure environment, thus offering a comprehensive solution across all three dimensions of big data.

3.1.3. SEEK

SEEK (https://seek4science.org/, accessed on 1 September 2025) is a big data-sharing platform specifically developed by a consortium of scientists to facilitate the sharing of datasets and models among researchers in the field of systems biology [58]. Systems biology, characterized by the computational and mathematical modelling of intricate biological networks, demands the integration of heterogeneous datasets and corresponding analytical models. SEEK was conceived to satisfy this requirement by federating otherwise isolated experimental and modelling resources across institutional boundaries; the platform is further released as open-source software, enabling community-driven extension and transparent reuse.

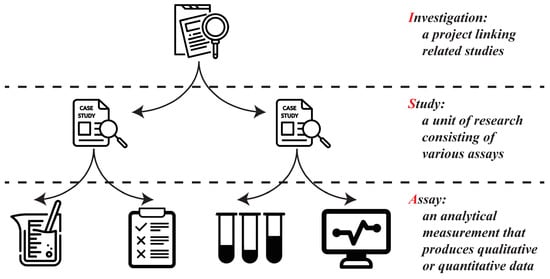

Researchers can publish their high-variety data within SEEK in the form of projects, which may include raw datasets, standard operating procedures (SOPs), models, publications, and presentations. SEEK supports version control and allows researchers to update shared data as needed. Models can be simulated within SEEK if they adhere to the Systems Biology Markup Language (SBML). Upon publication, the metadata of each project are extracted and represented in the Resource Description Framework (RDF), enabling semantic, query-based searches. A distinctive feature of systems biology is the inherent interconnection of datasets and models. To facilitate the understanding and exploration of these links, SEEK employs the ISA (Investigation, Study, and Assay) data model [59], as depicted in Figure 5.

Figure 5.

ISA model in SEEK.
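How RDF metadata make the ISA hierarchy queryable can be sketched with plain subject-predicate-object tuples. This is an assumption-laden toy, not SEEK's actual vocabulary or query engine: the identifiers and the `isa:partOf`, `seek:producedBy`, and `seek:validatedBy` predicates are hypothetical, and `match` mimics a single SPARQL-style triple pattern, with `None` playing the role of a variable.

```python
# Hypothetical triples linking an investigation, a study, an assay, and its assets.
TRIPLES = {
    ("inv:glycolysis", "rdf:type", "isa:Investigation"),
    ("study:yeast", "rdf:type", "isa:Study"),
    ("study:yeast", "isa:partOf", "inv:glycolysis"),
    ("assay:kinetics", "rdf:type", "isa:Assay"),
    ("assay:kinetics", "isa:partOf", "study:yeast"),
    ("data:rates.csv", "seek:producedBy", "assay:kinetics"),
    ("model:glycolysis.xml", "seek:validatedBy", "assay:kinetics"),
}


def match(pattern, store=TRIPLES):
    """Triples matching (s, p, o); None acts as a wildcard, as in a triple pattern."""
    return [t for t in store
            if all(want is None or want == got for want, got in zip(pattern, t))]


def assets_of(investigation, store=TRIPLES):
    """Walk Investigation -> Study -> Assay, then collect assets linked to each assay."""
    studies = {s for s, _, _ in match((None, "isa:partOf", investigation), store)}
    assays = {a for st in studies for a, _, _ in match((None, "isa:partOf", st), store)}
    return {s for a in assays for s, p, _ in match((None, None, a), store)
            if p != "isa:partOf"}
```

Calling `assets_of("inv:glycolysis")` returns both the dataset and the model attached to the kinetics assay, illustrating how explicit links let users navigate from a research question down to the concrete files.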

However, SEEK has certain limitations. It is not designed for the sharing of general big data, as it is tailored specifically for systems biology research. Furthermore, similar to LDP and Data Studio, once data are shared on SEEK and downloaded by users, there is no mechanism to track how the data are subsequently utilized.

The SEEK platform’s primary strength lies in its sophisticated management of high variety within the domain of systems biology. It uses the ISA data model to federate complex and interconnected project assets. While it is not designed to handle the extreme volume or velocity seen in commercial platforms, the semantic richness and intricate relationships between its heterogeneous data assets firmly place it within the big data paradigm, prioritizing complexity over sheer scale.

3.1.4. InterPlanetary File System

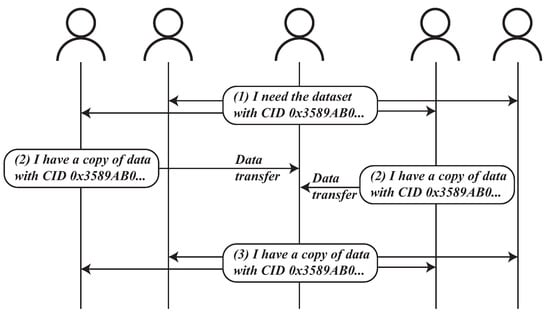

The InterPlanetary File System (IPFS) (https://ipfs.tech/, accessed on 1 September 2025), initiated by Protocol Labs, is a distributed peer-to-peer (P2P) network designed for high-reliability data storage and sharing. To publish an artifact, a user serializes the content, computes its cryptographic hash, and derives a self-describing content identifier (CID). This CID functions as a location-independent address; any peer possessing the CID can retrieve the corresponding artifact from the IPFS network. Content availability is advertised through a distributed hash table (DHT) that maps each CID to the set of peers currently storing the blocks. Each participant curates its local repository by declaring which CIDs it seeds, which it wishes to cache, and which it explicitly discards.
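The content-addressing step can be made concrete for the simplest case. The sketch below builds a version-1 CID for a single raw block hashed with SHA-256 (multicodec `0x55` for raw, multihash code `0x12` with length `0x20` for a 32-byte SHA2-256 digest, and multibase prefix `b` for lowercase base32), which is why such identifiers begin with `bafkrei`. It deliberately omits how IPFS chunks large files into a Merkle DAG, so the identifier of a multi-block file will differ. `ToyDHT` is a hypothetical dictionary stand-in for the real Kademlia-based DHT.

```python
import base64
import hashlib


def cid_v1_raw(content: bytes) -> str:
    """CIDv1 for one raw block: <version><codec><multihash>, base32 multibase."""
    digest = hashlib.sha256(content).digest()
    # 0x01 = CID version 1, 0x55 = raw codec, 0x12 = sha2-256, 0x20 = 32-byte digest
    binary = bytes([0x01, 0x55, 0x12, 0x20]) + digest
    # "b" prefix = RFC 4648 base32, lowercase, no padding
    return "b" + base64.b32encode(binary).decode("ascii").lower().rstrip("=")


class ToyDHT:
    """Maps each CID to the peers advertising it (not the real Kademlia DHT)."""

    def __init__(self):
        self.providers = {}

    def provide(self, cid: str, peer: str) -> None:
        self.providers.setdefault(cid, set()).add(peer)

    def drop(self, cid: str, peer: str) -> None:
        self.providers.get(cid, set()).discard(peer)

    def find_providers(self, cid: str) -> set:
        return set(self.providers.get(cid, set()))
```

Identical bytes always yield the same CID regardless of who publishes them, so location-independent retrieval and deduplication fall out of the addressing scheme; changing a single byte yields an unrelated identifier.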

Within the IPFS P2P network, all users are considered equal. As illustrated in Figure 6, a user seeking a particular piece of data can retrieve it from other users, thereby becoming a data provider themselves. Despite its innovative approach, the IPFS encounters several challenges in the realm of big data sharing. A significant issue is the lack of motivation among users to share large datasets, as doing so demands substantial storage and network resources. In contrast, public cloud storage offers a more user-friendly alternative that requires no maintenance effort from users. This lack of incentive results in a scarcity of nodes within the IPFS network, undermining its reliability as a distributed system.

Figure 6.

A running example of IPFS.

In response to these challenges, Protocol Labs introduced Filecoin, a platform that leverages blockchain technology to incentivize participation in IPFS by offering monetary rewards for providing data storage resources [60]. However, users of the platform must incur significant costs for data storage. Consequently, Filecoin faces the formidable task of competing with established cloud storage providers.

IPFS offers a decentralized architectural solution for storing and sharing large-volume data by distributing it across a peer-to-peer network. Its content-addressing model is inherently well suited to managing variety, as any type of static file can be uniformly addressed and retrieved. However, the platform is not designed for high-velocity streaming data, functioning as a file retrieval system rather than a real-time processing engine, and its performance can be inconsistent depending on network participation.

3.1.5. Amazon Web Services Data Exchange

In November 2019, Amazon, a leading cloud service provider, introduced a new service known as Amazon Web Services (AWS) Data Exchange (https://aws.amazon.com/data-exchange/, accessed on 1 September 2025). This service is designed to offer customers a secure means of discovering, subscribing to, and utilizing third-party data. At its launch, AWS Data Exchange featured contributions from over 100 data providers, offering more than 1000 datasets, as announced by Amazon.

AWS Data Exchange is structured around two principal actor types: data providers and data subscribers. Providers publish products, either gratis or fee-based, together with machine-readable license clauses that encode pricing schedules and permissible use. An integrated versioning facility allows in situ updates without invalidating extant subscriptions. Subscribers acquire access through monthly or annual entitlements; during the active term, they may download the corpus for local processing and automatically receive notifications of any revision. The service’s native integration with the wider AWS ecosystem constitutes a key advantage: datasets can be exported directly to Amazon S3 via the console, representational state transfer (RESTful) APIs, or the command-line interface (CLI), while update events are pushed to subscribers through Amazon EventBridge (formerly CloudWatch Events) in near real time.
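The provider and subscriber roles can be modeled in a few lines to show how versioned revisions and time-bounded entitlements interact. This is a hypothetical simulation, not the AWS Data Exchange API: the `Product` class, its methods, and the 30-day month are assumptions made for illustration. New revisions are appended without invalidating existing subscriptions, and only subscribers whose entitlement is still active are notified.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Product:
    name: str
    monthly_price: float
    revisions: list = field(default_factory=list)
    entitlements: dict = field(default_factory=dict)  # subscriber -> last valid day
    outbox: list = field(default_factory=list)        # (subscriber, message) notifications

    def subscribe(self, subscriber: str, start: date, months: int) -> float:
        """Grant access for `months` (approximated as 30 days each); return the fee."""
        self.entitlements[subscriber] = start + timedelta(days=30 * months)
        return self.monthly_price * months

    def is_active(self, subscriber: str, today: date) -> bool:
        end = self.entitlements.get(subscriber)
        return end is not None and today <= end

    def publish_revision(self, revision_id: str, today: date) -> None:
        """Append a revision in place; existing subscriptions remain valid."""
        self.revisions.append(revision_id)
        for subscriber in self.entitlements:
            if self.is_active(subscriber, today):
                self.outbox.append((subscriber, f"{self.name}: revision {revision_id}"))
```

For example, a 12-month subscriber is notified of a revision published in March, whereas a 1-month subscriber whose entitlement lapsed in January is not, although both subscribed on the same day.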

The platform has been widely adopted for disseminating large-scale reference corpora, including around 200 COVID-19-related datasets catalogued in Table 2. It handles high-volume data through integration with the high-performance Amazon S3 storage service. Nevertheless, several limitations persist. First, providers bear the full cost of Amazon S3 storage; for voluminous big data assets, this expenditure can outweigh the marginal licensing revenue. Second, subscription mechanics require subscribers to disclose personally identifying information to each publisher, exposing subscribers to privacy leakage. Third, although governed by comprehensive contractual clauses, datasets ultimately reside on AWS-controlled infrastructure, leaving a residual risk of insider misuse and associated data privacy concerns.

Table 2.

COVID-19 datasets on AWS Data Exchange.

AWS Data Exchange excels at handling massive volumes through its native integration with Amazon S3, enabling the transfer of petabyte-scale datasets. It is also highly effective at managing variety, as its marketplace is data-agnostic and supports virtually any file-based data product. The platform is, however, less focused on real-time velocity: its subscription-based model is oriented around batch updates and discrete dataset revisions, positioning it as a marketplace for curated data products rather than live data streams.

3.2. Categorization of Existing Platforms

This section categorizes the platforms described in Section 3.1 into data-hosting centers, data aggregation centers, and decentralized data-sharing solutions. Furthermore, we identify their unique features and challenges.

3.2.1. Data-Hosting Center

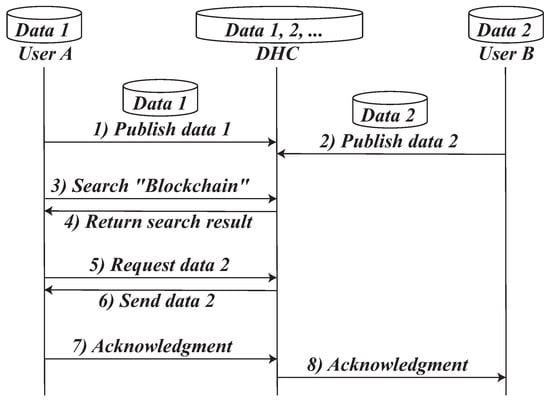

The functions of a data-hosting center (DHC) bear similarities to those of a portfolio manager in the financial sector. A portfolio manager raises capital from investors and allocates it to stocks or bonds to generate financial returns. Analogously, a DHC aggregates original big data from data owners and identifies potential data users with whom these data can be shared in exchange for rewards. Both portfolio managers and DHCs exercise complete control over the funds from investors and the big data from data owners, respectively. However, DHCs differ from portfolio managers due to the replicable nature of big data. Unlike money, big data can be easily duplicated, which often leads DHCs to make data publicly accessible. SEEK and Amazon Web Services Data Exchange are typical data-hosting centers.

Figure 7 depicts the canonical workflow of a DHC. In the initial phase, data owners (e.g., Users A and B) deposit their datasets (denoted data 1 and 2) with the DHC. User A subsequently issues a keyword query (“blockchain”) against the center’s consolidated catalogue; the DHC returns the corresponding metadata descriptions. After reviewing the results, User A requests dataset 2, whereupon the DHC mediates the transfer: it delivers data 2 to User A, receives an integrity acknowledgment, and relays that acknowledgment to User B to complete the transaction audit trail.

Figure 7.

A running example of a data hosting center.
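The Figure 7 workflow can be traced with a toy DHC. The class and method names are hypothetical, and the integrity acknowledgment is modeled, as an assumption, as a SHA-256 digest of what the requester received, which the owner can compare against its own copy. Note that the center holds the full payloads, which is exactly what gives it the control discussed in this section.

```python
import hashlib


def digest(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()


class DataHostingCenter:
    """Toy DHC: stores full copies of deposited data and mediates every transfer."""

    def __init__(self):
        self.store = {}   # data_id -> (owner, description, payload)
        self.inbox = {}   # owner -> relayed acknowledgments (audit trail)

    def deposit(self, owner: str, data_id: str, description: str, payload: bytes):
        self.store[data_id] = (owner, description, payload)

    def search(self, keyword: str) -> dict:
        """Return metadata descriptions only, never payloads."""
        kw = keyword.lower()
        return {i: desc for i, (_, desc, _) in self.store.items() if kw in desc.lower()}

    def deliver(self, data_id: str) -> bytes:
        return self.store[data_id][2]

    def acknowledge(self, requester: str, data_id: str, received_digest: str):
        """Relay the requester's acknowledgment to the data owner."""
        owner = self.store[data_id][0]
        self.inbox.setdefault(owner, []).append((requester, data_id, received_digest))


# The Figure 7 sequence: A and B deposit, A searches, A fetches data 2 owned by B.
dhc = DataHostingCenter()
dhc.deposit("A", "data1", "Urban traffic sensor logs", b"traffic...")
dhc.deposit("B", "data2", "Blockchain transaction graph", b"tx-graph...")
hits = dhc.search("blockchain")
payload = dhc.deliver("data2")
dhc.acknowledge("A", "data2", digest(payload))
```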

A defining characteristic of a DHC is its dual mandate: (i) to aggregate voluminous user-generated corpora and (ii) to act as an authorized redistribution hub. SEEK exemplifies this model: investigators upload project-derived datasets to the SEEK repository, which, having obtained explicit dissemination consent, renders the content universally accessible under open-access licensing terms.

One of the primary advantages of DHCs is their high efficiency. As centralized repositories for shared big data, DHCs benefit from the general optimization methods applicable to data storage systems. For instance, frequently accessed big data can be pre-replicated to enhance data transfer speeds, and caching techniques can be employed to expedite big data queries. Another advantage of DHCs is their ability to ensure the authenticity of big data. Without DHCs, data owners might falsely claim possession of data or refuse to share it despite prior commitments. DHCs act as intermediaries between data owners and users, ensuring reliable data exchange.
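Query caching of the kind mentioned above is often implemented with a least-recently-used (LRU) policy. The sketch below assumes that policy and a hypothetical `backend` function standing in for the slow catalogue or storage lookup; it is one common choice among several (LFU or time-based expiry are alternatives), not a claim about any specific DHC.

```python
from collections import OrderedDict


class QueryCache:
    """Least-recently-used cache in front of a slow query backend."""

    def __init__(self, capacity: int, backend):
        self.capacity = capacity
        self.backend = backend          # function: query -> result (the slow path)
        self.entries = OrderedDict()    # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def get(self, query):
        if query in self.entries:
            self.entries.move_to_end(query)      # mark as most recently used
            self.hits += 1
            return self.entries[query]
        self.misses += 1
        result = self.backend(query)
        self.entries[query] = result
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)     # evict the least recently used entry
        return result
```

With capacity 2 and the query sequence a, b, a, c, b, only the second "a" is served from cache; "c" evicts "b", so the final "b" goes to the backend again.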

However, DHCs also have disadvantages, chief among them data privacy. Specifically, DHCs may replicate big data without the consent of data owners, and there is no guarantee that the data will be shared in accordance with the policies set by the data owners.

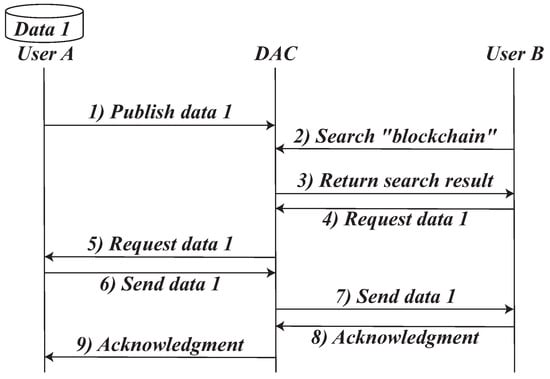

3.2.2. Data Aggregation Center

The function of a data aggregation center (DAC) in the realm of big data parallels the role of a real estate agency in the property market. A real estate agency compiles information about properties from owners and advertises them to attract potential buyers, thereby facilitating transactions between property owners and buyers. Similarly, as depicted in Figure 8, a DAC gathers data descriptions from data owners, offers a platform for potential users to search for big data, and facilitates the sharing of big data between data owners and users. While both real estate agencies and DACs possess general information about properties and big data, respectively, they do not have control over their disposition. However, DACs differ from real estate agencies in that the authenticity of big data is not as easily verifiable as that of real estate. Epimorphics Linked Data Platform and HKSTP Data Studio are typical data aggregation centers.

Figure 8.

A running example of a data aggregation center.

In the DAC model, the center connects data services among agencies through APIs. Data agencies are not required to report or upload data to the DAC in advance; instead, they retain ownership and management of their data. When an agency needs to search for data, it interacts with the DAC in real time to send a data request. The DAC then relays and broadcasts this request to other agencies. Agencies possessing the requested data respond, and the DAC collects and forwards the data back to the requester. However, the DAC clearly has both the capability and the opportunity to retain data. Over time, as the DAC accumulates data during the sharing process, it may gradually evolve into a DHC.
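The relay protocol can be sketched as follows. The names are hypothetical, and agency endpoints are modeled as plain functions that return data or `None`. The `retain` flag makes the concern at the end of the paragraph explicit: nothing in the protocol itself stops the center from keeping what it forwards.

```python
class DataAggregationCenter:
    """Toy DAC: relays requests in real time; agencies keep their own data."""

    def __init__(self):
        self.agencies = {}   # agency name -> handler(query) returning data or None
        self.retained = []   # anything the center chooses to keep while relaying

    def register(self, name, handler):
        self.agencies[name] = handler

    def request(self, requester, query, retain=False):
        """Broadcast `query` to every other agency and forward the answers."""
        responses = {}
        for name, handler in self.agencies.items():
            if name == requester:
                continue
            data = handler(query)
            if data is not None:
                responses[name] = data
        if retain:
            # Nothing in the protocol prevents this; repeated use drifts toward a DHC.
            self.retained.extend(responses.values())
        return responses
```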

3.2.3. Decentralized Big Data Sharing Solutions

DHCs and DACs represent centralized solutions that require central authorities to manage big data-sharing platforms. In contrast, decentralized solutions operate without the need for a central authority [61]. In these decentralized systems, data providers and recipients form a peer-to-peer (P2P) network, maintaining a list of available data. When a data owner wishes to publish data, they broadcast a message regarding data ownership across the entire network. Similarly, data search and transfer requests are handled within this network.
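One simple way to realize the network-wide announcement is flooding: each peer records the announcement once and forwards it to all of its neighbors. The sketch assumes an undirected overlay given as an adjacency list, and the `broadcast` function is hypothetical; deployed systems typically rely on gossip protocols or a DHT rather than pure flooding to limit traffic. The returned message count hints at the maintenance burden discussed below, since every reached peer forwards to every neighbor.

```python
from collections import deque


def broadcast(neighbors, origin, announcement, catalogues):
    """Flood `announcement` from `origin`; return the number of messages sent."""
    seen = {origin}
    queue = deque([origin])
    messages = 0
    while queue:
        peer = queue.popleft()
        # Each reached peer adds the announcement to its local list of available data.
        catalogues.setdefault(peer, set()).add(announcement)
        for nxt in neighbors[peer]:
            messages += 1                 # sent even if the neighbor already has it
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return messages
```

On a three-peer chain a-b-c plus an isolated peer d, an announcement from a reaches a, b, and c with four messages, while d never learns of the data, a small-scale version of the connectivity problem behind "cold" data.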

Decentralized solutions offer several advantages, the most significant being their immunity to single points of failure or malicious central authorities due to the absence of central control. Additionally, data transfer speeds can be rapid if the data are “hot,” meaning a large number of peers in the network possess copies of the desired data. The InterPlanetary File System is a typical decentralized big data sharing solution.

However, decentralized solutions also present certain drawbacks. First, maintaining the list of available data imposes additional burdens on both data providers and recipients, potentially overwhelming lightweight devices that may lack the capacity for such maintenance. Second, the incentive mechanisms for data providers and recipients to participate in the P2P network are less clear compared to centralized solutions, where participants benefit from structured services. Lastly, the performance of decentralized solutions can be inconsistent. If the P2P network has few participants or if the requested data are “cold” (i.e., only a small number of peers possess copies of the data), the system’s performance may be significantly compromised.
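The gap between hot and cold data can be quantified with a simple model. Assuming, for illustration, that each of the k peers holding a copy is online independently with probability p, the data are retrievable with probability 1 - (1 - p)^k. With p = 0.3, two holders give an availability of about 0.51, whereas fifty holders make retrieval practically certain. The independence assumption is optimistic, since peer churn is often correlated, and both function names are hypothetical.

```python
def availability(k: int, p: float) -> float:
    """P(at least one of k independent holders is online) = 1 - (1 - p)**k."""
    if k < 0 or not 0.0 <= p <= 1.0:
        raise ValueError("k must be >= 0 and p must lie in [0, 1]")
    return 1.0 - (1.0 - p) ** k


def holders_needed(target: float, p: float) -> int:
    """Smallest k with availability(k, p) >= target (requires 0 < p and target < 1)."""
    k = 0
    while availability(k, p) < target:
        k += 1
    return k
```

Under this model, reaching 99% availability with peers that are online 30% of the time requires 13 replicas, which shows why cold data suffer in small or sparsely populated networks.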

As shown in Table 3, the three models, DHCs, DACs, and decentralized solutions, represent distinct architectural philosophies for sharing big data. Each approach offers a unique balance of control, efficiency, privacy, and resilience. A DHC acts as a centralized custodian of data, a DAC serves as a centralized broker of metadata, and a decentralized solution eliminates the central entity altogether in favor of a peer-to-peer network.

Table 3.

Comparison of data-hosting center (DHC), data aggregation center (DAC), and decentralized big data-sharing solutions.

The choice between these three models involves a fundamental trade-off between centralization and decentralization.

- DHCs prioritize efficiency and data authenticity at the cost of data owner control and privacy. They are suitable for scenarios where performance and reliability are paramount, and data owners are willing to entrust their data to a central authority.

- DACs offer a middle ground, preserving data owner control while providing a centralized mechanism for data discovery. However, this model introduces concerns about the center’s potential to overstep its role and the difficulty in verifying data authenticity.

- Decentralized solutions champion resilience, censorship resistance, and the elimination of a central authority, which removes single points of failure and control. This comes at the price of performance consistency, a higher maintenance burden on participants, and less defined incentive structures. This model is ideal for environments where trust in a central entity is low or non-existent.

4. Challenges and Existing Solutions

Unlike traditional commodities, data are non-exclusive, exponentially growing, and costless to replicate; their ultimate value is unknowable ex ante, ownership is difficult to establish, and circulation channels are inherently hard to police. These idiosyncrasies frustrate the design of trading mechanisms that are simultaneously efficient, credible, fair, and secure. Meanwhile, meeting the requirements of big data sharing articulated in Section 2.5 calls for dedicated technical solutions. This section delineates the principal challenges confronting big data sharing and surveys the state-of-the-art proposals advanced to address each one.

4.1. Standardization of Heterogeneous Data

Standardization is crucial for facilitating efficient and meaningful analysis of shared data [62]. First, a thorough data audit is necessary to ensure that the data are stored correctly, as required by the data owner. Next, normalizing the structure of the dataset produces an efficient and well-organized dataset. Finally, a data summary enables data users to better comprehend the characteristics of the data. Data-hosting centers face severe challenges in standardizing heterogeneous data so as to provide reliable data storage services to data owners and trustworthy data retrieval services to data users.

Data owners increasingly delegate their corpora to cloud repositories that may behave maliciously or simply fail to meet service-level agreements. A rational yet unscrupulous provider can silently discard cold data to reclaim storage and bandwidth, thereby violating integrity guarantees. Concretely, the owner needs an efficient mechanism that both (i) continuously certifies that the outsourced dataset is intact and fully retrievable and (ii) cryptographically binds that guarantee to the owner’s private key. The problem is variously termed proof of retrievability, proof of storage, or provable data possession [63,64].
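A minimal sketch of the spot-checking idea behind provable data possession, under simplifying assumptions: the owner keeps a secret HMAC key, tags each block together with its index, and later challenges randomly chosen indices. If the server has lost a fraction f of the blocks, c challenges catch it with probability at least 1 - (1 - f)^c; for f = 0.01 and c = 460, this is roughly 0.99. Unlike real PDP or PoR schemes, which use homomorphic tags to keep proofs compact, this naive version returns whole blocks, and it is neither DeyPoS nor any other scheme cited here; all function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def tag(key: bytes, index: int, block: bytes) -> bytes:
    """MAC binding a block to its position, computed with the owner's secret key."""
    return hmac.new(key, index.to_bytes(8, "big") + block, hashlib.sha256).digest()


def outsource(key: bytes, blocks: list) -> dict:
    """Owner tags every block, then hands blocks and tags to the untrusted server."""
    return {i: (block, tag(key, i, block)) for i, block in enumerate(blocks)}


def audit(key: bytes, server_store: dict, n_blocks: int, challenges: int) -> bool:
    """Spot-check `challenges` random indices; any missing or forged block fails."""
    rng = secrets.SystemRandom()
    for i in rng.sample(range(n_blocks), challenges):
        if i not in server_store:
            return False
        block, t = server_store[i]
        if not hmac.compare_digest(t, tag(key, i, block)):
            return False
    return True


def detection_probability(fraction_lost: float, challenges: int) -> float:
    """Lower bound on catching a server that dropped a fraction of the blocks."""
    return 1.0 - (1.0 - fraction_lost) ** challenges
```

Binding the index into each tag prevents the server from answering a challenge for block i with some other block it still holds, and the secret key ties the guarantee to the owner as required above.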

The recent literature has produced practically oriented solutions. He et al. present DeyPoS, a dynamic proof-of-storage framework that couples authenticated skip-list indexing with secure cross-user deduplication, allowing outsourced files to be updated and audited in logarithmic time while eliminating redundant ciphertext [65]. Yu et al. address the realistic threat of the ephemeral client–key compromise; they construct a leakage-resilient cloud-audit scheme that refreshes secret keys after each verification epoch, thereby containing the damage caused by exposure [66].
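DeyPoS relies on an authenticated skip list. As a plainly swapped-in illustration of the same underlying idea (logarithmic-size proofs that a block belongs to an authenticated structure whose root the verifier trusts), the sketch below uses a Merkle hash tree instead. Leaf and internal-node hashes are domain-separated by a prefix byte, and an odd node is paired with a copy of itself; both are simplifying choices, and the sketch supports neither dynamic updates nor deduplication. All names are hypothetical.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(blocks):
    """All tree levels, leaves first (blocks must be non-empty); odd nodes are duplicated."""
    level = [_h(b"\x00" + b) for b in blocks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Sibling hashes from leaf to root: O(log n) entries."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (hash, sibling_is_left)
        index //= 2
    return proof


def verify(root, block, proof):
    """Recompute the path to the root using only the block and the proof."""
    node = _h(b"\x00" + block)
    for sibling, sibling_is_left in proof:
        pair = sibling + node if sibling_is_left else node + sibling
        node = _h(b"\x01" + pair)
    return node == root
```

The verifier needs to keep only the 32-byte root; for five blocks, each proof carries three sibling hashes, and the count grows logarithmically with the number of blocks.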

The analytical utility of shared datasets is fundamentally constrained by their inherent heterogeneity across dimensions such as type, structure, semantics, granularity, and accessibility [62]. The propagation of incorrect or inconsistent data artifacts not only diminishes the value of the source but also invalidates subsequent analyses. Accordingly, a systematic preprocessing regimen, encompassing data cleaning, standardization, calibration, fusion, and desensitization, is indispensable prior to dissemination [67]. For voluminous sources like sensor networks, this curation process may involve significant data reduction, yet it introduces non-trivial challenges in designing information-preserving filters and automating metadata generation. The objectives of this phase are twofold: to establish an efficient data representation that reflects its structural and semantic properties and to facilitate information extraction from underlying resources into a structured, analyzable format [68].
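Part of this preprocessing regimen can be sketched for a simple temperature-sensor feed. The record layout (`sensor_id`, `temp_f`), the plausibility range, and the use of salted SHA-256 pseudonyms are illustrative assumptions. Fusion across sources is omitted, and salted hashing is pseudonymization rather than full anonymization, so in practice it would be combined with other desensitization measures.

```python
import hashlib
import statistics


def preprocess(records, salt: bytes, valid_range=(-60.0, 140.0)):
    """Clean, calibrate, standardize, and pseudonymize raw sensor readings."""
    lo, hi = valid_range
    # Cleaning: drop missing readings and values outside the plausible range.
    kept = [r for r in records
            if r.get("temp_f") is not None and lo <= r["temp_f"] <= hi]
    # Calibration / unit harmonization: Fahrenheit to Celsius.
    celsius = [(r["temp_f"] - 32) * 5 / 9 for r in kept]
    # Standardization: z-scores against this batch's mean and population std. dev.
    mu = statistics.mean(celsius) if celsius else 0.0
    sigma = statistics.pstdev(celsius) if celsius else 0.0
    out = []
    for r, c in zip(kept, celsius):
        out.append({
            # Desensitization: a salted hash replaces the raw sensor identifier.
            "sensor": hashlib.sha256(salt + r["sensor_id"].encode()).hexdigest()[:16],
            "temp_c": round(c, 2),
            "temp_z": (c - mu) / sigma if sigma else 0.0,
        })
    return out
```

Readings from the same sensor still share a pseudonym, so longitudinal analysis remains possible after the raw identifier is removed.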

Subsequently, the presentation of these curated datasets on sharing platforms necessitates a strategic balance between informational transparency and asset protection. While detailed descriptions are required to attract prospective users, full disclosure invites unauthorized replication and compromises data value. Data summarization is the principal technique used to resolve this tension, offering a condensed representation of the dataset [69]. Although existing platforms often utilize manual metadata and summary generation [70], automation is imperative for achieving scalable efficiency and accuracy. The synthesis of a meaningful summary is a task of greater complexity than metadata generation, as it presupposes a semantic interpretation of the data [71]. Advanced methodologies, including machine learning models [72], are being developed to address this, but their viability hinges on achieving computational efficiency, ensuring data security, and accommodating demands for personalized abstracts.
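A data summary can be as simple as a column-level profile that tells prospective users what a table contains without revealing its rows. The function below is a hypothetical, deliberately basic profiler. The statistics it reports (missing counts, distinct counts, and min/max/mean for numeric columns) are assumptions about what is useful, and exact extremes can themselves leak information, so a real platform might coarsen or perturb them.

```python
import statistics


def summarize(rows):
    """Column-level profile that describes a table without exposing its rows."""
    summary = {"row_count": len(rows), "columns": {}}
    for col in sorted({k for r in rows for k in r}):
        values = [r.get(col) for r in rows]
        present = [v for v in values if v is not None]
        info = {"missing": len(values) - len(present), "distinct": len(set(present))}
        numeric = [v for v in present
                   if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric and len(numeric) == len(present):
            info.update(min=min(numeric), max=max(numeric),
                        mean=statistics.mean(numeric))
        summary["columns"][col] = info
    return summary
```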

4.2. Value Assessment and Pricing Model

When data are commoditized, their quality and prospective economic value must be estimated rigorously and communicated transparently [41]. Accurate valuation and pricing safeguard the interests of all market participants, underwrite the emergence of standardized and trustworthy exchanges, and foster a sustainable data-sharing ecosystem. Quality appraisal concentrates on intrinsic content attributes: completeness, accuracy, consistency, timeliness, and provenance integrity [73]. Value assessment extends this analysis by incorporating (i) the historical cost of data generation, curation, and storage, and (ii) the expected marginal utility that the dataset yields across heterogeneous downstream applications [74].

The rigorous evaluation of data quality and value is a critical prerequisite for any data transaction, enabling consumers to make informed purchasing decisions and providers to establish fair market prices. This evaluative function is also incumbent upon the sharing platform, which must actively monitor data to maintain market health. Key platform responsibilities include filtering substandard data, preventing malicious pricing from disrupting the market, and recommending cost-effective datasets to users. Ultimately, these governance measures are vital for boosting user satisfaction and safeguarding the platform’s long-term credibility. Value assessment is even more challenging for data aggregation centers because they do not have access to the original big data.

State-of-the-art quality and value assessment frameworks are hindered by four interlocking challenges: (1) the absence of reproducible, quantitative metrics for contextual or semantic features; (2) prohibitive computational overhead when sampling large-scale, high-velocity corpora; (3) opaque cost structures that obscure the true economic expense of data generation and curation; and (4) epistemic uncertainty in forecasting downstream utility. These difficulties are compounded by the non-stationarity of value (a dataset’s worth can appreciate or depreciate within hours), the trivial ease of perfect replication, and the fragility of ex post access control. Consequently, ex ante certification of quality and value demands assessment protocols that are simultaneously accurate, inexpensive, and dynamically updateable.

Research has identified five critical dimensions of data quality: intrinsic quality, presentation quality, contextual quality, accessibility, and reliability. These dimensions are essential for evaluating data quality and ensuring that it meets the requirements of data users.

- Intrinsic quality: the conformance of a dataset to elementary syntactic and semantic criteria: volume, accuracy, completeness, timeliness, uniqueness, internal consistency, security posture, and provenance reliability [75].

- Presentation quality: the clarity of structure and semantics conveyed to the consumer, encompassing conciseness, interpretability, syntactic uniformity, and cognitive ease of comprehension.

- Contextual quality: the degree to which data content aligns with the specific decision-making context and is fit for the intended analytical or operational purpose.

- Accessibility: the ease and economy with which the buyer can locate, negotiate, and physically retrieve the dataset, including communication latency and any associated transactional overheads.

- Reliability: the cumulative reputation and verifiable trustworthiness of both the data originator and the vendor, evaluated through historical performance and third-party attestations.
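Several of the intrinsic-quality criteria listed above can be operationalized as simple ratios. The sketch below assumes record-level definitions chosen for illustration: completeness is the share of records with all required fields present, uniqueness the share of distinct keys, and timeliness the share of records younger than `max_age`. Field names and thresholds are hypothetical, and criteria such as accuracy or provenance reliability need external reference data that a sketch cannot supply.

```python
from datetime import datetime, timedelta


def intrinsic_scores(records, required, key, ts_field, now, max_age):
    """Ratios in [0, 1] for three intrinsic-quality criteria."""
    n = len(records)
    if n == 0:
        raise ValueError("no records to assess")
    complete = sum(all(r.get(f) is not None for f in required) for r in records)
    distinct = len({r[key] for r in records})
    fresh = sum(now - r[ts_field] <= max_age for r in records)
    return {"completeness": complete / n,
            "uniqueness": distinct / n,
            "timeliness": fresh / n}


# Invented sample: one missing name, one duplicate key, two stale records.
now = datetime(2025, 9, 1)
sample = [
    {"id": 1, "name": "a", "ts": now - timedelta(days=1)},
    {"id": 2, "name": None, "ts": now - timedelta(days=10)},
    {"id": 2, "name": "c", "ts": now - timedelta(days=35)},
    {"id": 3, "name": "d", "ts": now - timedelta(days=40)},
]
scores = intrinsic_scores(sample, ["id", "name"], "id", "ts", now, timedelta(days=30))
```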

The economic value of a dataset is contingent upon its quality, but it is also mediated by production cost and prevailing market conditions. Conventional intangible-asset valuation techniques, including cost, market, and income approaches, can be adapted to data, yet each yields distinctive limitations.

- Cost approach: Value is anchored to the historic expenditure incurred during collection, cleansing, storage, and maintenance. Owing to joint-production effects and indivisible overheads, marginal cost is rarely observable, so the method often understates the option value and fails to capture future rent-generating potential.

- Market approach: Value is inferred from recent transaction prices of allegedly comparable datasets. The paucity of transparent exchanges and the heterogeneity of data attributes (schema granularity, provenance, timeliness) render the identification of true comparables problematic, producing wide confidence intervals.

- Income (revenue) approach: Value equates to the discounted stream of incremental cash flows attributable to the dataset across its economic life. Because forecast benefits are application-specific and buyer-specific, the approach is inherently subjective; valuations can diverge by orders of magnitude across prospective licensees.
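The three approaches can be contrasted numerically. The functions below are minimal sketches under stated assumptions: the cost approach simply sums recorded expenditures, the market approach scales the median unit price of hypothetical comparables, and the income approach discounts forecast cash flows as V = sum over t = 1..T of CF_t / (1 + r)^t. All inputs are invented for illustration; the point is that the three estimates need not agree.

```python
import statistics


def cost_value(expenditures: dict) -> float:
    """Cost approach: historic spend on collection, cleansing, storage, maintenance."""
    return sum(expenditures.values())


def market_value(size: float, comparables: list) -> float:
    """Market approach: median price per unit of (price, size) comparables, scaled."""
    unit_prices = [price / units for price, units in comparables]
    return statistics.median(unit_prices) * size


def income_value(cash_flows: list, rate: float) -> float:
    """Income approach: sum of CF_t / (1 + rate)**t for t = 1..T."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


estimates = {
    "cost": cost_value({"collection": 60.0, "cleansing": 25.0,
                        "storage": 10.0, "maintenance": 5.0}),
    "market": market_value(20.0, [(100.0, 10.0), (300.0, 20.0)]),
    "income": income_value([100.0, 100.0], rate=0.10),
}
```

With these inputs, the cost approach yields 100, the market approach 250, and the income approach about 173.6, echoing the wide divergence described above.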

By understanding the dimensions of data quality and the methods for evaluating data value, data-sharing platforms can provide accurate and reliable assessments of data quality and value, enabling data users to make informed decisions and fostering a healthy data-sharing environment.

Despite the growing importance of big data sharing, developing models and methods for accurate assessment of the full value of data remains a significant challenge. The vast volume and diverse types of data in the market pose several obstacles to data quality and value evaluation, including the following:

- Multi-dimensional quantitative evaluation of quality. Although the literature proposes extensive taxonomies of data-quality dimensions, most remain conceptual schemata supported by qualitative heuristics; operational, quantitative models are conspicuously absent. This deficit is exacerbated when repositories contain massive unstructured corpora, such as text, imagery, and sensor streams, whose semantic content resists automatic, scalable, and reproducible metrology.

- Data collection quality assessment. Most current approaches assess data quality at the level of individual data units (e.g., a single text or image). However, data sharing and trading platforms typically involve large datasets (e.g., 10,000 texts or 100,000 images). Evaluating the overall quality of these datasets by aggregating the quality statistics of individual data units ignores the relationships between data units and their impact on the overall quality of the dataset.

- Dynamic evaluation of data value. Quantifying the value of a dataset is an inherently complex task, requiring an assessment of factors such as its rarity, acquisition difficulty, and intrinsic quality. A significant limitation of existing evaluation frameworks, however, is their tendency to focus on static measures of quality while neglecting the dynamic nature of data’s true value. The value of data is not fixed; it evolves in response to technological advancements in collection and storage, the optimization of data-mining models, and shifts in application scenarios and consumer needs. This temporal dynamism introduces a profound layer of complexity, rendering simplistic, static assessments inadequate and making robust value estimation a persistent challenge.
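The gap between item-level and dataset-level quality can be seen in a small Python sketch. It assumes each item already carries a quality score and captures only one inter-item relationship, exact duplication. The scoring rule (mean quality of distinct items multiplied by the fraction of items that are distinct) is a hypothetical illustration, not an established metric.

```python
def mean_item_quality(items):
    """Naive aggregate: average of per-item scores, ignoring relationships."""
    return sum(q for _, q in items) / len(items)


def dataset_quality(items):
    """Hypothetical dataset-level score that penalizes exact duplicates.

    items: list of (content, quality) pairs; duplicates are detected on content.
    """
    distinct = {}
    for content, quality in items:
        distinct.setdefault(content, quality)  # keep the first copy's score
    uniqueness = len(distinct) / len(items)
    return sum(distinct.values()) / len(distinct) * uniqueness


varied = [("img_a", 0.8), ("img_b", 0.8), ("img_c", 0.8), ("img_d", 0.8)]
redundant = [("img_a", 0.8)] * 4

print(round(mean_item_quality(varied), 2), round(mean_item_quality(redundant), 2))  # 0.8 0.8
print(round(dataset_quality(varied), 2), round(dataset_quality(redundant), 2))      # 0.8 0.2
```

Both collections score 0.8 under naive averaging, yet the redundant one contains a single distinct item. Realistic collections would also need near-duplicate detection and measures such as coverage or class balance.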

Developing more sophisticated models and methods for data quality and value evaluation is essential to overcoming these challenges. This involves the following directions:

- Developing quantitative models and methods for evaluating data quality. Researchers should focus on creating specific quantitative models and methods for evaluating data quality, particularly for unstructured data.

- Assessing data collection quality. New approaches should be developed to assess the overall quality of large datasets, taking into account the relationships between data units and their impact on the overall quality of the dataset.

- Evaluating data value dynamically. Researchers should develop methods that can reasonably evaluate the dynamic characteristics of data value, including rarity, difficulty in obtaining, and changes in data collection, storage, and application scenarios.
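A minimal Python sketch of the dynamic view follows. Purely for illustration, it assumes that a dataset's value decays exponentially with a chosen half-life as the data age, and that events such as an improved mining model or a shift in application scenario rescale the value by a multiplier. Both the decay form and the names are hypothetical.

```python
def dynamic_value(base_value, age_days, half_life_days, scenario_multipliers=()):
    """Hypothetical time-varying value: exponential ageing times scenario effects."""
    if half_life_days <= 0:
        raise ValueError("half_life_days must be positive")
    value = base_value * 0.5 ** (age_days / half_life_days)
    for multiplier in scenario_multipliers:
        value *= multiplier  # e.g. 1.5 after a better model, 0.5 when demand shifts away
    return value


# A static assessment would report 1000 indefinitely.
print(dynamic_value(1000, 0, 30))            # 1000.0
print(dynamic_value(1000, 30, 30))           # 500.0
print(dynamic_value(1000, 60, 30, (1.5,)))   # 375.0
```

Estimating the half-life and the multipliers from real market evidence is the difficult part and remains open.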

Addressing these challenges would make it possible to develop more accurate and reliable models for evaluating data quality and value, ultimately facilitating more efficient and effective big data sharing.

4.3. Sharing Security

Data security is a multifaceted concept encompassing three primary attributes: confidentiality, integrity, and availability, collectively known as the CIA triad. These attributes are crucial for protecting sensitive data from unauthorized access, modification, or disruption, regardless of the architecture of the big data-sharing solution. Confidentiality is paramount in big data, ensuring that all inputs, outputs, and intermediate computation states remain secret from potentially adversarial or untrusted entities.

Confidentiality is achieved through the protection of data from unauthorized access [76]. Access control and encryption technologies are effective means of ensuring data confidentiality [77]. Access control technology, in particular, plays a vital role in protecting data from unauthorized access and managing authorized users. In scenarios involving big data sharing, fine-grained [78] and flexible [79] access control is often required to meet the following requirements:

- Time-limited authorization: determining whether user authorization has time constraints.

- Authority division: distinguishing between data ownership and usage rights.

- Re-sharing permissions: deciding whether users can re-share data.

- Flexible revocation: allowing for the complete revocation of user permissions.
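The four requirements can be illustrated with a small in-memory Python sketch. The class and method names are hypothetical. The rules that a re-shared grant cannot exceed or outlive its parent grant, and that re-sharing is limited to one level, are assumptions. A real system would enforce these checks cryptographically or in a trusted policy engine rather than in application code.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    """A usage right on a dataset; ownership always stays with the owner."""
    dataset: str
    grantee: str
    granted_by: str
    actions: frozenset
    expires_at: datetime | None = None  # time-limited authorization
    may_reshare: bool = False           # re-sharing permission
    revoked: bool = False               # set by revocation


class AccessRegistry:
    def __init__(self) -> None:
        self.owners: dict[str, str] = {}  # dataset -> owner (authority division)
        self.grants: list[Grant] = []

    def _active(self, user: str, dataset: str, now: datetime) -> list[Grant]:
        return [g for g in self.grants
                if g.grantee == user and g.dataset == dataset and not g.revoked
                and (g.expires_at is None or now < g.expires_at)]

    def is_allowed(self, user: str, dataset: str, action: str, now: datetime) -> bool:
        if self.owners.get(dataset) == user:
            return True
        return any(action in g.actions for g in self._active(user, dataset, now))

    def share(self, sharer, dataset, grantee, actions, now,
              expires_at=None, may_reshare=False) -> Grant:
        actions = frozenset(actions)
        if self.owners.get(dataset) != sharer:
            # A non-owner may only pass on a subset of a re-shareable grant.
            parents = [g for g in self._active(sharer, dataset, now)
                       if g.may_reshare and actions <= g.actions]
            if not parents:
                raise PermissionError(f"{sharer} may not re-share {dataset}")
            limit = parents[0].expires_at
            if limit is not None:  # the copy cannot outlive its parent
                expires_at = limit if expires_at is None else min(expires_at, limit)
            may_reshare = False  # assumption: only one level of re-sharing
        grant = Grant(dataset, grantee, sharer, actions, expires_at, may_reshare)
        self.grants.append(grant)
        return grant

    def revoke(self, grantee: str, dataset: str) -> None:
        """Revoke a user's grants and, transitively, everything they re-shared."""
        pending = [grantee]
        while pending:
            user = pending.pop()
            for g in self.grants:
                if g.grantee == user and g.dataset == dataset:
                    g.revoked = True
            pending.extend(g.grantee for g in self.grants
                           if g.granted_by == user and g.dataset == dataset
                           and not g.revoked)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
registry = AccessRegistry()
registry.owners["sales"] = "alice"
registry.share("alice", "sales", "bob", {"read", "analyze"}, now,
               expires_at=now + timedelta(days=30), may_reshare=True)
registry.share("bob", "sales", "carol", {"read"}, now)
print(registry.is_allowed("carol", "sales", "read", now))                       # True
print(registry.is_allowed("carol", "sales", "read", now + timedelta(days=31)))  # False
registry.revoke("bob", "sales")
print(registry.is_allowed("carol", "sales", "read", now))                       # False
```

Revoking Bob also revokes the grant he passed to Carol, which is one way to read "complete revocation"; the owner's rights are never affected.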

This survey examines the requirements for access control from the following perspectives:

- Consolidation and integration of access control policies. In many cases, data users require access to multiple heterogeneous data sources, so the access control policies of these sources must be integrated; automated or semi-automated policy-integration systems are needed to resolve conflicts between them [80]. Allowing each data provider to define its own access policies complicates data sharing, and automatically integrating and merging these policies remains challenging.

- Authorization management. Fine-grained access control requires efficient authorization management, which can be resource-intensive for large datasets. Automatic authorization techniques are therefore needed that assign permissions based on the user's digital identity, profile, and context, as well as on data content and metadata. While initial steps have been taken toward machine learning-based permission assignment [81], more advanced methods are needed to handle dynamically changing contexts and situations.

- Implementation of access control on big data platforms. The rise of big data platforms has introduced new challenges in implementing fine-grained access control for diverse users. Although initial work has focused on injecting access control policies into submitted jobs, further research is needed on effectively enforcing such policies in big data storage, particularly through fine-grained encryption.
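Conflicts between providers' policies are often resolved with combining algorithms; the XACML standard, for example, defines deny-overrides and permit-overrides. The Python sketch below is a simplified version of these two rules (it omits XACML's Indeterminate result and obligations), applied to two hypothetical provider policies. The attribute names and the policies themselves are invented for illustration.

```python
from enum import Enum
from typing import Callable, Dict, Iterable, List


class Decision(Enum):
    PERMIT = "permit"
    DENY = "deny"
    NOT_APPLICABLE = "not_applicable"


Request = Dict[str, str]
Policy = Callable[[Request], Decision]


def deny_overrides(decisions: Iterable[Decision]) -> Decision:
    decisions = list(decisions)
    if Decision.DENY in decisions:
        return Decision.DENY
    if Decision.PERMIT in decisions:
        return Decision.PERMIT
    return Decision.NOT_APPLICABLE


def permit_overrides(decisions: Iterable[Decision]) -> Decision:
    decisions = list(decisions)
    if Decision.PERMIT in decisions:
        return Decision.PERMIT
    if Decision.DENY in decisions:
        return Decision.DENY
    return Decision.NOT_APPLICABLE


def is_permitted(policies: List[Policy], request: Request, combine=deny_overrides) -> bool:
    # Default deny: access is granted only on an explicit combined PERMIT.
    return combine(p(request) for p in policies) is Decision.PERMIT


# Hypothetical policy of a hospital contributing patient records.
def hospital_policy(req: Request) -> Decision:
    if req["dataset"] != "patients":
        return Decision.NOT_APPLICABLE
    if req["field"] == "name" and req["role"] != "clinician":
        return Decision.DENY
    return Decision.PERMIT


# Hypothetical policy of a research consortium hosting the shared data.
def consortium_policy(req: Request) -> Decision:
    if req["role"] == "researcher" and req["action"] == "read":
        return Decision.PERMIT
    return Decision.NOT_APPLICABLE


policies = [hospital_policy, consortium_policy]
request = {"dataset": "patients", "field": "name", "role": "researcher", "action": "read"}
print(is_permitted(policies, request))                            # False
print(is_permitted(policies, request, combine=permit_overrides))  # True
```

The choice of combining rule is itself a policy decision: deny-overrides protects the stricter provider, whereas permit-overrides silently weakens it.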

In the realm of cloud computing security, data encryption plays a pivotal role in ensuring confidentiality. Various encryption methodologies, such as functional encryption [82], identity-based encryption [83], and attribute-based encryption [84], are instrumental in safeguarding data. Notably, attribute-based encryption is further categorized into key-policy attribute-based encryption [85] and ciphertext-policy attribute-based encryption [86]. Despite the protective capabilities of data encryption, its efficacy is often constrained by the proliferation of keys and the complexities inherent in key management. Additionally, the computational demands of existing encryption schemes present significant challenges. Consequently, there remains a pressing need for the development of more lightweight and adaptable encryption algorithms to facilitate the secure sharing of large datasets.
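In ciphertext-policy ABE, the data owner attaches an access policy, typically a tree of threshold gates over attributes, to the ciphertext. A user's key encodes that user's attributes and can decrypt only if those attributes satisfy the tree; key-policy ABE swaps the two roles. The Python sketch below shows only this policy-matching logic, not the cryptography: in a real scheme the check is enforced mathematically by decryption rather than by a conditional in code. The helper names and attributes are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple, Union


@dataclass(frozen=True)
class Gate:
    """A k-of-n threshold gate: AND is n-of-n, OR is 1-of-n."""
    threshold: int
    children: Tuple["Node", ...]


Node = Union[str, Gate]  # a leaf is an attribute name


def all_of(*children: Node) -> Gate:
    return Gate(len(children), children)


def any_of(*children: Node) -> Gate:
    return Gate(1, children)


def satisfies(node: Node, attributes: FrozenSet[str]) -> bool:
    """True if the attribute set satisfies the access tree rooted at node."""
    if isinstance(node, str):
        return node in attributes
    met = sum(1 for child in node.children if satisfies(child, attributes))
    return met >= node.threshold


# Policy: cardiology staff who are doctors or researchers,
# or anyone holding at least two of three audit credentials.
policy = any_of(
    all_of("dept:cardiology", any_of("role:doctor", "role:researcher")),
    Gate(2, ("cert:auditor", "cert:gdpr", "org:regulator")),
)
print(satisfies(policy, frozenset({"dept:cardiology", "role:researcher"})))  # True
print(satisfies(policy, frozenset({"role:researcher"})))                     # False
print(satisfies(policy, frozenset({"cert:auditor", "org:regulator"})))       # True
```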

A particularly noteworthy encryption technique for ensuring data confidentiality is homomorphic encryption (HE) [87,88], which allows computations to be performed directly on encrypted data. The first fully homomorphic encryption scheme was introduced by Gentry in 2009 [89]. Although HE represents a groundbreaking advance in cryptography, its practical application is hindered by inefficiency. Subsequent research has focused on improving the scheme's efficiency, notably by reducing computation time [90]. Nevertheless, the performance of fully homomorphic encryption remains inadequate for most practical applications. Beyond inefficiency, homomorphic encryption has additional limitations. For instance, it requires all data contributors, such as sensors, and the final recipient to share a common key for encryption and decryption, which poses logistical challenges when these entities belong to different organizations. Furthermore, homomorphic encryption does not support computations on data encrypted under different keys without incurring substantial overhead, thereby restricting differential access to contributed data. Alternative methods, such as attribute-based and functional encryption, address some of these limitations. Finally, homomorphic encryption guarantees only data confidentiality, not integrity; it must be combined with mechanisms that ensure correct computation to provide comprehensive security assurances.
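Computation on encrypted data can be illustrated with the Paillier cryptosystem. Paillier is only additively (partially) homomorphic, not fully homomorphic like the scheme discussed above, but it shows the same principle: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. The Python sketch below is a toy. It uses two fixed, well-known Mersenne primes that are far too small and predictable for real use, with no hardening. It also reflects two limitations noted above: every contributor must encrypt under the same public key, and anyone can combine ciphertexts, so confidentiality comes without integrity.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Paillier key generation with g = n + 1 (toy: the caller supplies p and q)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, mu is simply lam^-1 mod n
    return n, (n, lam, mu)  # (public key, private key)


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n - 1] coprime to n
        if math.gcd(r, n) == 1:
            break
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(private_key, c: int) -> int:
    n, lam, mu = private_key
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Enc(m1) * Enc(m2) mod n^2 decrypts to m1 + m2 mod n."""
    return c1 * c2 % (n * n)


def scale_encrypted(n: int, c: int, k: int) -> int:
    """Enc(m)^k mod n^2 decrypts to k * m mod n."""
    return pow(c, k, n * n)


public, private = keygen(2**31 - 1, 2**61 - 1)  # toy primes, insecure
total = add_encrypted(public, encrypt(public, 1200), encrypt(public, 34))
print(decrypt(private, total))                                           # 1234
print(decrypt(private, scale_encrypted(public, encrypt(public, 7), 6)))  # 42
```

The party that adds the ciphertexts never sees 1200, 34, or their sum; only the holder of the private key can decrypt the result.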

The integrity of data is a critical attribute, ensuring that any unauthorized modifications are detectable [91]. Moreover, it guarantees that the outputs of computations on sensitive data are accurate and consistent with the input data. In essence, integrity signifies that data remain unaltered by unauthorized entities. With the proliferation of internet usage, the demand for data has surged, leading to an expanded concept of data integrity that now encompasses data trustworthiness. This broader notion ensures that data are not only unmodified by unauthorized parties but also error-free, current, and sourced from reputable origins. Addressing data trustworthiness is a complex challenge that often depends on the specific application domain. Solutions typically combine various technologies, including cryptographic techniques for digital signatures [92], access control to restrict data modifications to authorized parties [93], data quality techniques for automatic error detection and correction, source verification technologies [94], and reputation systems to assess data source credibility [95].

Availability, another crucial attribute, ensures that data are accessible to authorized users and that users can retrieve data as needed. The triad of confidentiality, integrity, and availability remains paramount in contemporary data security. As data collection and sharing activities intensify, data attacks have grown more complex and the attack surface has expanded, making these security requirements increasingly difficult to fulfill.
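Detecting unauthorized modification, the core of integrity, can be sketched with Python's standard `hmac` module. An HMAC is a message authentication code rather than a digital signature: it relies on a key shared by sender and verifier, so it detects tampering by outsiders but cannot prove to a third party who produced the data, which public-key digital signatures can. The record format and key below are hypothetical.

```python
import hashlib
import hmac
import json


def canonical_bytes(record: dict) -> bytes:
    # Sorted keys and fixed separators so equal records always serialize identically.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def make_tag(key: bytes, record: dict) -> str:
    return hmac.new(key, canonical_bytes(record), hashlib.sha256).hexdigest()


def verify(key: bytes, record: dict, tag: str) -> bool:
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(make_tag(key, record), tag)


key = b"hypothetical-shared-key"
record = {"sensor": "s-17", "temperature_c": 21.5}
tag = make_tag(key, record)
print(verify(key, record, tag))                             # True
print(verify(key, {**record, "temperature_c": 35.0}, tag))  # False
```

A valid tag shows only that the record is unchanged since it was tagged; it says nothing about whether the value was correct in the first place, which is the broader trustworthiness problem.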

4.4. Sharing Privacy

In recent years, the growing demand for data and the evolution of data sharing have elevated privacy to a critical requirement alongside confidentiality, integrity, and availability. This paper addresses privacy concerns by categorizing them into data privacy and user privacy.

Numerous definitions of data privacy have been proposed over time, reflecting the evolving methods of acquiring personal information. A widely accepted definition is provided by Alan Westin, who describes data privacy as the claim of individuals, groups, or institutions to determine for themselves when, how, and to what extent information about them is communicated to others [96].

While data privacy is often equated with data confidentiality, there are distinct differences between these two concepts. Data privacy inherently requires the protection of data confidentiality, as unauthorized access undermines privacy. However, privacy encompasses additional considerations, including compliance with legal requirements, privacy regulations, and individual privacy preferences [76]. For instance, data sharing poses significant privacy challenges, as individuals may have differing views on sharing their data for research purposes. Consequently, systems managing privacy-sensitive data must accommodate and record the privacy preferences of individuals to whom the data pertains. Furthermore, these preferences may evolve over time. Therefore, addressing privacy issues necessitates not only the implementation of organizational access control policies but also adherence to the legal and regulatory frameworks governing data subjects’ preferences.
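Recording evolving preferences can be sketched in Python as an append-only ledger of consent events. Keeping the full history, rather than overwriting a flag, lets a platform answer both whether a use is permitted now and whether a past use was permitted at the time it happened. The class, the purpose labels, and the default of "no record means no consent" are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ConsentEvent:
    subject: str
    purpose: str
    granted: bool
    at: datetime


class ConsentLedger:
    """Append-only history of each data subject's preferences per purpose."""

    def __init__(self) -> None:
        self._events: list[ConsentEvent] = []

    def record(self, subject: str, purpose: str, granted: bool, at: datetime) -> None:
        self._events.append(ConsentEvent(subject, purpose, granted, at))

    def permits(self, subject: str, purpose: str, at: datetime) -> bool:
        """Latest preference recorded at or before `at`; no record means no consent."""
        relevant = [e for e in self._events
                    if e.subject == subject and e.purpose == purpose and e.at <= at]
        if not relevant:
            return False
        return max(relevant, key=lambda e: e.at).granted


ledger = ConsentLedger()
ledger.record("patient-42", "research", True, datetime(2024, 1, 1))
ledger.record("patient-42", "research", False, datetime(2024, 3, 1))  # consent withdrawn
print(ledger.permits("patient-42", "research", datetime(2024, 2, 1)))   # True
print(ledger.permits("patient-42", "research", datetime(2024, 4, 1)))   # False
print(ledger.permits("patient-42", "marketing", datetime(2024, 2, 1)))  # False
```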

Beyond robust access control mechanisms, data encryption technologies are indispensable in safeguarding privacy. Privacy management in the context of big data often relies on cloud platforms, where key concerns include secure storage, computation on encrypted data, and secure communication [97]. Data encryption technologies address these challenges. Applications on cloud platforms typically depend on secure data storage, indexing, retrieval, and the trustworthiness of the cloud provider. Homomorphic encryption and functional encryption, previously discussed in the context of data confidentiality, are prevalent methods for protecting individual data privacy. Hu et al. introduced key-value privacy storage methods and multi-level index processing technologies utilizing homomorphic encryption to ensure that neither the data owner nor the cloud platform can be identified during the node retrieval process of user queries [98].

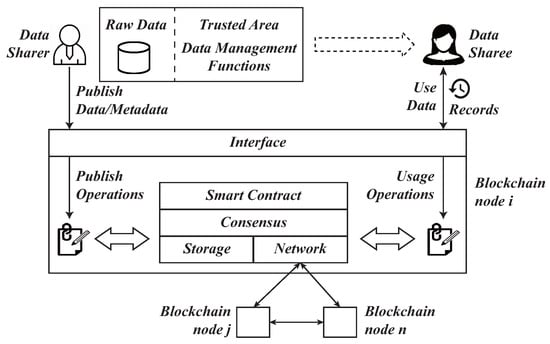

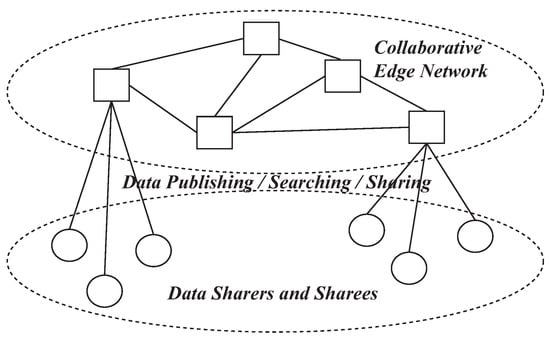

Encryption and access control serve as heuristic protection mechanisms against existing external threats. However, novel attacks require these protective strategies to be reformulated. Moreover, these methods are not universally applicable in the big data environment because they lack a rigorous mathematical framework for defining data privacy and quantifying privacy loss. Differential privacy was developed to address this deficiency [99]. It is a robust privacy-protection model grounded in mathematical theory. According to its formal definition, a randomized computation is ε-differentially private if inserting or deleting any single record in the dataset changes the probability of every possible outcome by at most a factor of e^ε; the privacy parameter ε thus regulates the degree of protection and the extent of privacy loss, with smaller values giving stronger protection. Furthermore, this guarantee holds regardless of the attacker's background knowledge: even if an attacker knows every record except one, the outcome reveals no more about that remaining record than ε permits. This property makes differential privacy robust and broadly applicable, and it has consequently become a focal point of contemporary privacy protection research. Many researchers argue that differential privacy is inherently suited to big data: because big data is vast and diverse, adding or removing a single record has minimal impact on aggregate results, so the noise needed to mask any individual record is small relative to those results. This aligns with the fundamental principles of differential privacy.
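The standard way to achieve ε-differential privacy for a numeric query is the Laplace mechanism: add noise drawn from a Laplace distribution with scale Δf/ε, where the sensitivity Δf is the largest change in the query's answer when one record is added or removed. For a counting query, Δf = 1. The Python sketch below applies the mechanism to a count. The function names and sample data are hypothetical, and the sketch is not hardened against the known floating-point attacks on naive Laplace sampling, so it should not be used as-is in production.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP count: a count has sensitivity 1, so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(7)
ages = [rng.randint(18, 90) for _ in range(100_000)]
true = sum(1 for a in ages if a >= 65)
noisy = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(true, round(noisy))
```

With ε = 0.5 the noise has scale 2 regardless of how many records there are, so for a count in the tens of thousands the relative error is tiny, which is the intuition behind the claim that differential privacy suits big data.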