Abstract

The Kalman filter (KF) and its variants and extensions are widely used for hydrologic prediction in environmental science and engineering. In many of these data assimilation applications, accurate estimation of extreme states is of great importance. When the observations used are uncertain, however, KF suffers from conditional bias (CB), which results in consistent under- and overestimation of extremes in the right and left tails, respectively. Recently, CB-penalized KF, or CBPKF, has been developed to address CB. In this paper, we present an alternative formulation based on variance-inflated KF to reduce computation and algorithmic complexity, and describe an adaptive implementation to improve unconditional performance. For theoretical basis and context, we also provide a complete self-contained description of CB-penalized Fisher-like estimation and CBPKF. The results from one-dimensional synthetic experiments for a linear system with varying degrees of nonstationarity show that adaptive CBPKF reduces the root-mean-square error at the extreme tail ends by 20 to 30% over KF while performing comparably to KF in the unconditional sense. The alternative formulation is found to approximate the original formulation very closely while reducing computing time to 1.5 to 3.5 times that of KF, depending on the dimensionality of the problem. Hence, adaptive CBPKF offers a significant addition to the dynamic filtering methods for general application in data assimilation when the accurate estimation of extremes is of importance.

1. Introduction

Streamflow prediction is subject to uncertainties from multiple sources. These include uncertainties in the input (e.g., mean areal precipitation (MAP) and mean areal potential evapotranspiration (MAPE)), hydrologic model uncertainties, and uncertainties in the initial conditions. With climate change and rapid urbanization, the input and parametric uncertainties are expected to increase, and reducing them has become increasingly challenging. In recent years, data assimilation has been widely used to reduce the uncertainty in the initial conditions [1,2].

In hydrologic prediction, as in a wide range of other applications, the Kalman filter (KF) and its variants and extensions are widely used to fuse observations with model predictions [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23]. In geophysics and environmental science and engineering, the main objective of data assimilation is often to improve the estimation and prediction of states in their extremes rather than in normal ranges. In hydrologic forecasting, for example, the accurate prediction of floods and droughts is far more important than that of streamflow and soil moisture in normal conditions. Because KF minimizes unconditional error variance, its solution tends to improve the estimation near the median, where the state of the dynamic system resides most of the time, while often leaving significant biases in the extremes. Such conditional biases (CB) [24] generally result in consistent under- and overestimation of the true states in the upper and lower tails of the distribution, respectively. To address CB, CB-penalized Fisher-like estimation and CB-penalized KF (CBPKF) [25] have recently been developed, which jointly minimize the error variance and the expectation of the Type-II CB squared for improved estimation and prediction of extremes. The Type-II CB, defined as $E[\hat{X} \mid X = x] - x$, is associated with failure to detect the event, where $X$, $\hat{X}$, and $x$ denote the unknown truth, the estimate, and the realization of $X$, respectively [26]. The original formulation of CBPKF, however, is computationally extremely expensive for high-dimensional problems. Additionally, whereas CBPKF improves performance in the tails, it deteriorates performance in the normal ranges.
In this work, we approximate CBPKF with forecast error covariance-inflated KF, referred to hereafter as the variance-inflated KF (VIKF) formulation, as a computationally less expensive and algorithmically simpler alternative, and implement adaptive CBPKF to improve unconditional performance.
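To make the notion of conditional bias concrete, the following is a minimal sketch under assumed toy settings that are not taken from the experiments in this paper: a zero-mean, unit-variance scalar truth is observed with additive noise and estimated with the minimum-error-variance linear weight. The sample sizes, thresholds, and variable names are illustrative assumptions. The estimate is unbiased on average but regresses toward the mean when the truth lies in either tail.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy setup (not from the paper): truth X ~ N(0, 1) observed as
# Z = X + V with V ~ N(0, sigma_v^2), independent of X.
n_samples = 200_000
sigma_v = 1.0
x = rng.normal(0.0, 1.0, n_samples)
z = x + rng.normal(0.0, sigma_v, n_samples)

# Minimum-error-variance linear estimate for a zero prior mean and unit prior variance.
gain = 1.0 / (1.0 + sigma_v**2)
x_hat = gain * z


def mean_error(mask):
    """Sample mean of (estimate - truth) over the truths selected by mask."""
    return float(np.mean(x_hat[mask] - x[mask]))


cb_upper = mean_error(x > 2.0)    # truth in the upper tail: underestimation expected
cb_lower = mean_error(x < -2.0)   # truth in the lower tail: overestimation expected
cb_all = mean_error(np.ones(n_samples, dtype=bool))  # unconditional bias, near zero

print(f"upper tail: {cb_upper:+.3f}, lower tail: {cb_lower:+.3f}, overall: {cb_all:+.3f}")
```

Here the bias is averaged over all truths beyond a threshold rather than evaluated at a single value of x, which is a convenience for sampling rather than the formal definition of Type-II CB.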

Elements of CB-penalized Fisher-like estimation have been described in the forms of CB-penalized indicator cokriging for fusion of predicted streamflow from multiple models and observed streamflow [27], CB-penalized kriging for spatial estimation [28] and rainfall estimation [29], and CB-penalized cokriging for fusion of radar rainfall and rain gauge data [30]. The original formulation of CBPKF has been described in [25]. Its ensemble extension, CB-penalized ensemble KF, or CEnKF, is described in [31] in the context of ensemble data assimilation for flood forecasting. In this paper, we provide, in the context of data assimilation, a complete self-contained description of CBPKF as theoretical background for the alternative formulation and adaptive implementation. Whereas CBPKF was initially motivated by environmental and geophysical state estimation and prediction, it is broadly applicable to a wide range of applications for which improved performance in the extremes is desired. This paper is organized as follows. Section 2 and Section 3 describe the CB-penalized Fisher-like solution and CBPKF, respectively. Section 4 describes the approximation of CBPKF. Section 5 describes the evaluation experiments and results. Section 6 describes adaptive CBPKF. Section 7 provides the conclusions.

2. Conditional Bias-Penalized Fisher-like Solution

As in Fisher estimation [32], the estimator sought for CB-penalized Fisher-like estimation is where denotes the (m × 1) vector of the estimated states, W denotes the (m × (n + m)) weight matrix, and Z denotes the ((n + m) × 1) augmented observation vector. In the above, n denotes the number of observations, m denotes the number of state variables, and (n + m) represents the dimensionality of the augmented vector of the observations and the model-predicted states to be fused for the estimation of the true state . The purpose of augmentation is to relate directly to CBPKF in Section 3 without introducing additional notations. Throughout this paper, we use regular and bold letters to differentiate the non-augmented and augmented variables, respectively. The linear observation equation is given by:

where X denotes the (m × 1) vector of the true state with E[X] = MX and Cov[X,XT] = ΨXX, H denotes the ((n + m) × m) augmented linear observation equation matrix, and V denotes the ((n + m) × 1) augmented zero-mean observation error vector with . Assuming independence between X and V, we write the Bayesian estimator [30] for , or , as:

The error covariance matrix for , , is given by:

With Equation (2), we may write Type-II CB as:

The observation equation for Z is obtained by inverting Equation (1):

The (m × n) matrix, GT, in Equation (5) is given by:

where UT is some (m × (n + m)) nonzero matrix. Using Equation (5) and the identity, , we may write the Bayesian estimate for in Equation (4) as:

where

Equations (7) and (8) state that the Bayesian estimate of Z given X is given by HX if the a priori state error covariance is noninformative or there are no observation errors, but by the average of the a priori mean and the observed true state X if the a priori is perfectly informative or observations are information-less.

With Equation (4), we may write the quadratic penalty due to Type-II CB as:

where I denotes the (m × m) identity matrix. Combining in Equation (3) and in Equation (9), we have the apparent error covariance, , which reflects both the error covariance and Type-II CB:

where α denotes the scalar weight given to the CB penalty term. Minimizing Equation (10) with respect to W, or by direct analogy with the Bayesian solution [32], we have:

The modified structure matrix and observation error covariance matrix in Equation (11) are given by:

Using Equation (11) and the matrix inversion lemma [33], we have for and in Equations (10) and (2), respectively:

where . To render the above Bayesian solution to a Fisher-like solution, we assume no a priori information in X and let in the brackets in Equations (14) and (15) vanish:

where the scaling matrix B is given by . To obtain the estimator of the form, , we impose the unbiasedness condition, , or equivalently, . The above condition is satisfied by replacing with and dropping ∆ in Equation (17):

Finally, we obtain from Equation (3) the error covariance, , associated with in Equation (19):

Note that, if α = 0, we have and , and hence the CB-penalized Fisher-like solution, Equations (19) and (20), is reduced to the Fisher solution [32].
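As a reference point for the α = 0 limit, the following is a minimal sketch of the Fisher (generalized least squares) solution only; it does not implement the CB-penalized terms. The function name and the symbol `R` for the observation error covariance are assumptions made for illustration.

```python
import numpy as np


def fisher_estimate(H, R, z):
    """Fisher (generalized least squares) estimate for z = H x + v, Cov[v] = R.

    Returns the estimate, its error covariance, and the weight matrix W.
    R is assumed symmetric positive definite.
    """
    Rinv_H = np.linalg.solve(R, H)   # R^{-1} H without forming R^{-1} explicitly
    info = H.T @ Rinv_H              # information matrix H^T R^{-1} H
    cov = np.linalg.inv(info)        # error covariance of the estimate
    W = cov @ Rinv_H.T               # weights; W @ H equals the identity (unbiasedness)
    return W @ z, cov, W


# Illustrative values: three direct measurements of one state with unequal error variances.
H = np.ones((3, 1))
R = np.diag([1.0, 4.0, 0.25])
z = np.array([1.0, 2.0, 0.5])

x_fisher, cov_fisher, W_fisher = fisher_estimate(H, R, z)
print(x_fisher, cov_fisher)
```

In this example the weights are inversely proportional to the error variances, and the product of the weight matrix and H is the identity, which is the unbiasedness condition imposed in the derivation above.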

3. Conditional Bias-Penalized Kalman Filter

CBPKF results directly from decomposing the augmented matrices and vectors in Equations (19) and (20) as KF does from the Fisher solution [32]. The CBPKF solution, however, is not very simple because the modified observation error covariance matrix, Λ, is no longer diagonal. An important consideration in casting the CB-penalized Fisher-like solution into CBPKF is to recognize that CB arises from the error-in-variable effects associated with uncertain observations [34], and that the a priori state, represented by the dynamical model forecast, is not subject to CB. We therefore apply the CB penalty to the observations only and reduce C in Equation (8) to . Separating the observation and dynamical model components in and via the matrix inversion lemma, we have:

where

In the above, denotes the (n × m) observation matrix, and denotes the (n × n) observation error covariance matrix. To evaluate the (m × n) matrix, , it is necessary to specify in Equation (6). We use which ensures invertibility of , but other choices are also possible. We then have for :

where

Expanding W in Equation (11) with , we have:

In Equation (32), the (m × n) and (m × m) weight matrices for the observation and model prediction, ω1,k and ω2,k, respectively, are given by:

where

The apparent CBPKF error covariance, which reflects both and , is given by Equation (18) as:

The CBPKF error covariance, which reflects only, is given by Equation (20) as:

Because CBPKF minimizes rather than , it is not guaranteed that Equation (39) satisfies a priori. If the above condition is not met, it is necessary to reduce α and repeat the calculations. If α is reduced all the way to zero, CBPKF collapses to KF. The CBPKF estimate may be rewritten into a more familiar form:

In Equation (40), Zk denotes the (n × 1) observation vector, and the (m × n) CB-penalized Kalman gain, , is given by:

To operate the above as a sequential filter, it is necessary to prescribe and α. An obvious choice for , i.e., the a priori error covariance of the state, is . Specifying α requires some care. In general, a larger α improves accuracy over the tails but at the expense of increasing unconditional error. Too small an α may not effect a large enough CB penalty, in which case the CBPKF and KF solutions would differ little. Too large an α, on the other hand, may severely violate the condition in which case the filter may have to be iterated at an additional computational expense with successively reduced α. A reasonable strategy for reducing α is $\alpha_i = c\,\alpha_{i-1}$ with 0 < c < 1, where $\alpha_i$ denotes the value of α at the i-th iteration [24,29]. For high-dimensional problems, CBPKF can be computationally very expensive. Whereas KF requires solving an (m × n) linear system only once per updating or fusion cycle, CBPKF additionally requires solving two (m × m) linear systems (for and ), and an (n × n) system (for ), assuming that the structure of the observation equation does not change in time (in which case in Equation (29) may be evaluated only once). To reduce computation, below we approximate CBPKF with KF by inflating the forecast error covariance.
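The iterative reduction of α described above can be sketched as a short loop. This is an illustrative outline only: `is_admissible` is a hypothetical user-supplied check standing in for the condition on the CBPKF error covariance, and `max_iter` and the fallback to α = 0 (at which CBPKF collapses to KF) are assumptions.

```python
from typing import Callable


def reduce_alpha(alpha0: float, c: float,
                 is_admissible: Callable[[float], bool],
                 max_iter: int = 50) -> float:
    """Shrink alpha geometrically (alpha_i = c * alpha_{i-1}) until it is admissible.

    is_admissible is a hypothetical callback that reruns the filter update for a
    trial alpha and reports whether the required condition is satisfied.
    """
    if not 0.0 < c < 1.0:
        raise ValueError("c must lie strictly between 0 and 1")
    alpha = alpha0
    for _ in range(max_iter):
        if is_admissible(alpha):
            return alpha
        alpha *= c
    return 0.0  # no admissible value found: fall back to KF


# Illustrative use with a stand-in condition that accepts alpha <= 0.3.
alpha_final = reduce_alpha(1.0, 0.5, lambda a: a <= 0.3)
print(alpha_final)
```

Each call to the check implies a repeated filter update, which is the additional computational expense noted above.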

4. VIKF Approximation of CBPKF

The main idea behind this simplification is that, if the gain for the CB penalty, C, in Equation (10) can be linearly approximated with H, the apparent error covariance becomes identical to in Equation (3) but with inflated by a factor of 1 + α:

where . The KF solution for Equation (42) is identical to the standard KF solution but with replaced by :

With WH=I in Equation (43) for the VIKF solution, we have for the apparent filtered error variance of in Equation (42). The error covariance of , , is given by Equation (3) as:

In Equation (44), the inflated filtered error covariance, , where denotes the multiplicative inflation factor, is given by:

Computationally, the evaluation of Equations (43) and (44) requires solving two (m × n) linear systems and one (m × m) linear system. As in the original formulation of CBPKF, iterative reduction of α is necessary to ensure .
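The idea of the VIKF approximation can be sketched as a standard KF update in which the forecast error covariance is multiplied by 1 + α before the gain is computed. The code below is a minimal illustration under assumptions: the function and variable names are hypothetical, the "apparent" covariance is taken as the usual KF filtered covariance evaluated with the inflated forecast covariance, and the error covariance of the estimate is evaluated with the general (Joseph-form) expression for an arbitrary linear gain using the uninflated forecast covariance. The exact expressions in Equations (43) through (45) may be arranged differently.

```python
import numpy as np


def vikf_update(x_f, P_f, z, H, R, alpha=0.0):
    """One variance-inflated KF update (illustrative sketch).

    x_f: (m,) forecast state, P_f: (m, m) forecast error covariance,
    z: (n,) observations, H: (n, m) observation matrix, R: (n, n) observation
    error covariance, alpha: CB penalty weight (alpha = 0 gives the standard KF).
    """
    P_infl = (1.0 + alpha) * P_f                 # inflated forecast error covariance
    S = H @ P_infl @ H.T + R                     # innovation covariance
    K = np.linalg.solve(S, H @ P_infl).T         # gain K = P_infl H^T S^{-1}
    x_a = x_f + K @ (z - H @ x_f)                # updated state
    I_KH = np.eye(P_f.shape[0]) - K @ H
    P_apparent = I_KH @ P_infl                   # filtered covariance under inflation
    P_actual = I_KH @ P_f @ I_KH.T + K @ R @ K.T  # error covariance for this gain
    return x_a, K, P_apparent, P_actual


# 1D illustration with unit forecast and observation error variances.
x_f, P_f = np.array([0.0]), np.array([[1.0]])
H, R, z = np.array([[1.0]]), np.array([[1.0]]), np.array([2.0])

_, K_kf, _, P_kf = vikf_update(x_f, P_f, z, H, R, alpha=0.0)
_, K_vi, _, P_vi = vikf_update(x_f, P_f, z, H, R, alpha=1.0)
print(K_kf, K_vi, P_kf, P_vi)
```

In this 1D illustration, inflation raises the gain above the KF gain and the resulting error variance is larger than the KF error variance, which is the price paid in the unconditional sense for the larger weight given to the observations.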

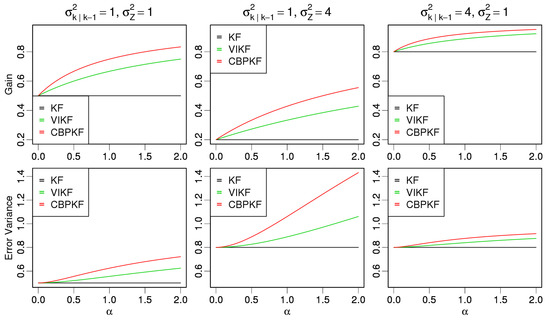

The above approximation assumes that the CB penalty, , is proportional to the error covariance, . To help ascertain how KF, CBPKF and the VIKF approximation may differ, we compare in Table 1 their analytical solutions for gain , and filtered error variance for the 1D case of m = n = 1. The table shows that the VIKF approximation and CBPKF are identical for the 1D problem except that the CB penalty for CBPKF is twice as large as that for the VIKF approximation. To visualize the differences, Figure 1 shows and for KF, the VIKF approximation and CBPKF for the three cases of and (left), and (middle), and and (right). For all cases, we set h to unity and varied from 0 to 1. The figure indicates that, compared to KF, the VIKF approximation and CBPKF prescribe appreciably larger gains, that the increase in gain is larger for larger α, and that the CBPKF gain is larger than the gain in the VIKF approximation for the same value of α. The figure also indicates that, compared to KF error variance, CBPKF error variance is larger, and that the increase in error variance is larger for larger α. Note that the differences between the KF and CBPKF solutions are the smallest for , a reflection of the diminished impact of CB owing to the comparatively smaller uncertainty in the observations. The above development suggests that one may be able to approximate CBPKF very closely with the VIKF-based formulation by adjusting α in the latter. Below, we evaluate the performance of CBPKF relative to KF and the VIKF-based approximation of CBPKF.

Table 1.

Comparison of gain and filtered error variance among KF, the VIKF approximation, and CBPKF.

Figure 1.

Comparison of and for KF, the VIKF approximation and CBPKF for three different cases: and (left), and (middle), and (right).

5. Evaluation and Results

For comparative evaluation, we carried out the synthetic experiments of [25]. We assume the following linear dynamical and observation models with perfectly known statistical parameters:

where Xk and Xk−1 denote the state vectors at time steps k and k − 1, respectively, Φk−1 denotes the state transition matrix at time step k − 1 assumed as , denotes the white noise vector, , j = 1,…,m, with , and Vk denotes the observation error vector, , i = 1, …, n. The number of observations, n, is assumed to be time-invariant. The observation errors are assumed to be independent among themselves and of the true state. To assess comparative performance under widely varying conditions, we randomly perturbed φk−1, σw,k−1 and σv,k above according to Equations (48) through (50) below, and used only those deviates that satisfy the bounds:

In the above, the superscript p signifies that the variable is a perturbation, and denote the normally distributed white noise for the respective variables, and and denote the standard deviations of the white noise added to , and , respectively. The parameter settings (see Table 2) are chosen to encompass less predictable (small φk−1) to more predictable (large φk−1) processes, certain (small σw,k−1) to uncertain (large σw,k−1) model dynamics, and more informative (small σv,k) to less informative (large σv,k) observations. The bounds for in Equation (48) are based on the range of lag-1 serial correlation representing moderate to high predictability where CBPKF and KF are likely to differ the most. The bounding of the perturbed values and in Equations (49) and (50), respectively, is necessary to avoid the observational or model prediction uncertainty becoming unrealistically small. Very small and render the information content of the model prediction, , and the observation, Zk, respectively, very large, and hence keep the filters operating in unrealistically favorable conditions for extended periods of time. We then apply KF, CBPKF, and the VIKF approximation to obtain and , and verify them against the assumed truth. To evaluate the performance of CBPKF relative to KF, we calculate the percent reduction in root-mean-square error (RMSE) by CBPKF over KF conditional on the true state exceeding some threshold between 0 and the largest truth.
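The verification measure described above can be sketched as follows. This is a minimal illustration: the function names are hypothetical, and the conditioning is taken here as a one-sided exceedance of the threshold by the truth, which is an assumption about how the threshold is applied.

```python
import numpy as np


def conditional_rmse(truth, estimate, threshold):
    """RMSE of the estimate over the time steps at which the truth exceeds threshold."""
    truth = np.asarray(truth, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mask = truth > threshold
    if not mask.any():
        return float("nan")  # no verifying truths above the threshold
    return float(np.sqrt(np.mean((estimate[mask] - truth[mask]) ** 2)))


def percent_reduction(truth, est_ref, est_new, threshold):
    """Percent reduction in conditional RMSE by est_new over est_ref."""
    rmse_ref = conditional_rmse(truth, est_ref, threshold)
    rmse_new = conditional_rmse(truth, est_new, threshold)
    return 100.0 * (rmse_ref - rmse_new) / rmse_ref


# Made-up numbers for illustration only (not results from the experiments).
truth = [0.0, 1.0, 2.0, 4.0]
est_ref = [0.0, 0.5, 1.0, 2.0]   # reference filter: strong underestimation of large truths
est_new = [0.2, 0.8, 1.6, 3.2]   # alternative filter: milder underestimation

pr_tail = percent_reduction(truth, est_ref, est_new, threshold=1.5)
print(pr_tail)
```

Sweeping the threshold from 0 to the largest truth and plotting the resulting percent reduction gives curves of the kind discussed in the remainder of this section.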

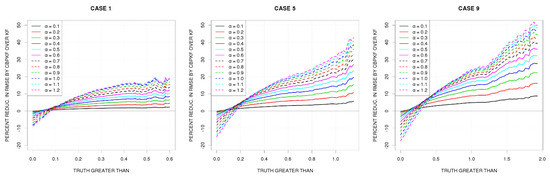

Figure 2 shows the percent reduction in RMSE by CBPKF over KF for Cases 1 (left), 5 (middle) and 9 (right) representing Groups 1, 2 and 3 in Table 2, respectively. The three groups differ most significantly in the variability of the dynamical model error, , and may be characterized as nearly stationary (Group 1), nonstationary (Group 2), and highly nonstationary (Group 3). The range of α values used is [0.1, 1.2] with an increment of 0.1. The numbers of state variables, observations, and updating cycles used in Figure 2 are 1, 10, and 100,000, respectively, for all cases. The dotted line at 10% reduction in the figure serves as a reference for significant improvement. The figure shows that, at the extreme end of the tail, CBPKF with α of 0.7, 0.6, and 0.5 reduces RMSE by about 15, 25, and 30% for Cases 1, 5 and 9, respectively, but at the expense of increasing unconditional RMSE by about 5%. The general pattern of reduction in RMSE for other cases in Table 2 is similar within each group and is not shown. We only note here that larger variability in observational uncertainty (i.e., larger ) reduces the relative performance of CBPKF somewhat, and that the magnitude of variability in predictability (i.e., ) has a relatively small impact on the relative performance.

Figure 2.

Percent reduction in RMSE by CBPKF over KF for a range of values of α for Cases 1 (left), 5 (middle), and 9 (right).

It was seen in Table 1 that the VIKF approximation is identical to CBPKF for m = n = 1 but for the multiplicative scalar weight for the CB penalty. Numerical experiments indicate that, whereas the above relationship does not hold for other m or n, one may very closely approximate CBPKF with the VIKF-based formulation by adjusting α. For example, the VIKF approximation with α increased by a factor of 1.25 to 1.90 differs from CBPKF by only 1% or less for all 12 cases in Table 2 with m = 1 and n = 10. The above findings indicate that the VIKF approximation may be used as a computationally less expensive alternative for CBPKF. Table 3 compares the CPU time among KF, CBPKF, and the VIKF approximation for six different combinations of m and n using an Intel(R) Xeon(R) Gold 6152 CPU @ 2.10 GHz. The computing time is reported in multiples of that of KF. Note that the original formulation of CBPKF quickly becomes extremely expensive as the dimensionality of the problem increases, whereas the CPU time of the VIKF approximation stays under 3.5 times that of KF for the size of the problems considered.

Table 2.

Parameter settings for the 12 cases considered.

Table 3.

Comparison of computing time among KF, CBPKF, and VIKF approximation.

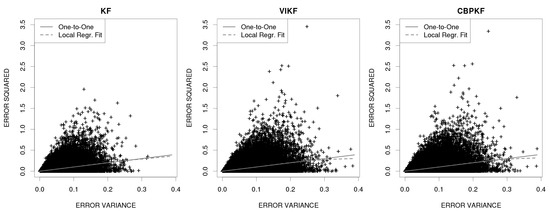

If the filtered error variance is unbiased, one would expect the mean of the actual error squared associated with the variance to be approximately the same as the variance itself. To verify this, we show in Figure 3 the filtered error variance vs. the actual error squared for KF (left), the VIKF approximation (middle), and CBPKF (right) for all ranges of filtered error variance. For reference, we plot the one-to-one line representing the unbiased error variance conditional on the magnitude of the filtered error variance and overlay the local regression fit through the actual data points using the R package locfit [35]. The figure shows that all three provide conditionally unbiased estimates of filtered error variance as theoretically expected, and that the VIKF approximation and CBPKF results are extremely similar to each other.
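The check described above can be sketched for the standard KF with a simple simulation. The settings below are assumptions chosen for illustration (a scalar first-order autoregressive state, one observation per cycle, and an observation error standard deviation drawn uniformly between 0.5 and 2.0); they are not the settings of Table 2. Quantile binning is used here as a simple stand-in for the local regression fit.

```python
import numpy as np

rng = np.random.default_rng(1)
n_steps, phi, sigma_w = 20_000, 0.8, 1.0       # assumed illustrative settings

var_stationary = sigma_w**2 / (1.0 - phi**2)
x = rng.normal(0.0, np.sqrt(var_stationary))   # truth drawn from the stationary distribution
x_a, P_a = 0.0, var_stationary                 # initial estimate and its error variance

P_hist = np.empty(n_steps)
err2_hist = np.empty(n_steps)

for k in range(n_steps):
    sigma_v = rng.uniform(0.5, 2.0)            # time-varying observation error std
    x = phi * x + rng.normal(0.0, sigma_w)     # true state transition
    z = x + rng.normal(0.0, sigma_v)           # noisy observation of the truth
    x_f, P_f = phi * x_a, phi**2 * P_a + sigma_w**2   # forecast step
    K = P_f / (P_f + sigma_v**2)               # Kalman gain
    x_a, P_a = x_f + K * (z - x_f), (1.0 - K) * P_f   # update step
    P_hist[k], err2_hist[k] = P_a, (x_a - x) ** 2

# Group the cycles into five quantile bins of filtered error variance and compare
# the mean actual squared error with the mean filtered error variance in each bin.
edges = np.quantile(P_hist, np.linspace(0.0, 1.0, 6))
bin_idx = np.digitize(P_hist, edges[1:-1])     # bin labels 0..4
ratios = np.array([err2_hist[bin_idx == b].mean() / P_hist[bin_idx == b].mean()
                   for b in range(5)])
print(ratios)
```

Ratios close to one in every bin indicate that the filtered error variance is conditionally unbiased in this toy setting; the same binning can be applied to the output of any of the three filters.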

Figure 3.

Filtered error variance vs. error squared for KF (left), the VIKF approximation (middle), and CBPKF (right). The one-to-one line is shown in black and the local regression fit is shown in green.

6. Adaptive CBPKF

Whereas CBPKF or the VIKF approximation significantly improves the accuracy of the estimates over the tails, it deteriorates performance near the median. Figure 2 suggests that, if α can be prescribed adaptively such that a small/large CB penalty is effected when the system is in the normal/extreme state, the unconditional performance of CBPKF would improve. Because the true state of the system is not known, adaptively specifying α is necessarily an uncertain proposition. There are, however, certain applications in which the normal vs. extreme state of the system may be ascertained with higher accuracy than in others. For example, the soil moisture state of a catchment may be estimated by assimilating precipitation and streamflow data into hydrologic models [36,37,38,39,40,41]. If α is prescribed adaptively based on the best available estimate of the state of the catchment, one may expect improved performance in hydrologic forecasting. In this section, we apply adaptive CBPKF in the synthetic experiment and assess its performance. An obvious strategy for adaptive filtering is to parameterize α in terms of the KF estimate (i.e., the CBPKF estimate with α = 0) as the best guess for the true state. The premise of this strategy is that, though it may be conditionally biased, the KF estimate fuses the information available from both the observations and the dynamical model, and hence best captures the relationship between α and the departure of the state of the system from the median. A similar approach has been used in fusing radar rainfall data and rain gauge observations for multisensor precipitation estimation, in which an ordinary cokriging estimate was used to prescribe α in CB-penalized cokriging [30].

Necessarily, the effectiveness of the above strategy depends on the skill of the KF estimate; if the skill is very low, one may not expect significant improvement. Figure 2 suggests that, qualitatively, α should increase as the state becomes more extreme. To that end, we employed the following model for time-varying α:

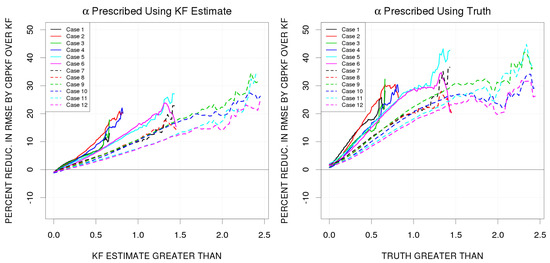

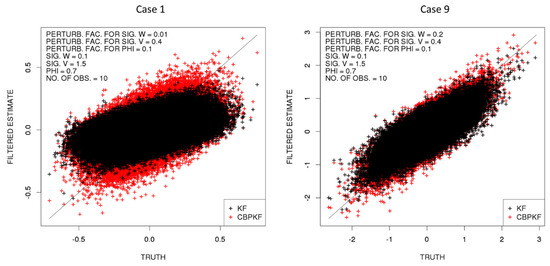

where $\alpha_k$ denotes the multiplicative CB penalty factor for CBPKF at time step k, denotes some norm of the KF estimate at time step k, and denotes the proportionality constant. Figure 4(left) shows the RMSE reduction by adaptive CBPKF over KF with for the 12 cases in Table 2 with m = 1 and n = 10. The values used were 3.0, 1.0, and 0.5 for Groups 1, 2, and 3 in Table 2, respectively. The figure shows that adaptive CBPKF performs comparably to KF in the unconditional sense while substantially improving performance in the tails. The rate of reduction in RMSE with respect to the increasing conditioning truth, however, is now slower than that seen in Figure 2 due to the occurrences of incorrectly specified α. To assess the uppermost bound of the feasible performance of adaptive CBPKF, we also specified α with perfect accuracy under Equation (51) via where denotes the true state. The results are shown in Figure 4(right) for which the values used were 3.0, 1.5, and 1.0 for Groups 1, 2, and 3 in Table 2, respectively. The figure indicates that adaptive CBPKF with perfectly prescribed α greatly improves performance, even outperforming KF in the unconditional sense. Figure 4 suggests that, if α can be prescribed more accurately with additional sources of information, the performance of adaptive CBPKF may be improved beyond the level seen in Figure 4(left). Finally, we show in Figure 5 the example scatter plots of the KF (black) and adaptive CBPKF (red) estimates vs. truth. They are for Cases 1 and 9 in Table 2 representing Groups 1 and 3, respectively. It is readily seen that adaptive CBPKF significantly reduces CB in the tails while keeping its estimates close to the KF estimates in normal ranges.
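A scalar sketch of the adaptive strategy, combined with the variance-inflated update, is given below. It is an illustration under several assumptions rather than the implementation used for Figure 4: the name `gamma` for the proportionality constant is hypothetical, the norm is taken as the absolute value of the KF estimate (appropriate for a zero-median scalar state), the KF estimate is obtained from the current forecast with α = 0, and the error variance carried to the next cycle is the general expression for the gain actually applied.

```python
import numpy as np


def adaptive_vikf_1d(z, sigma_v, phi, sigma_w, gamma, x0=0.0, P0=1.0):
    """Scalar adaptive variance-inflated KF (illustrative sketch).

    At each cycle alpha_k = gamma * |KF estimate| and the forecast error
    variance is inflated by (1 + alpha_k) before the gain is computed.
    gamma = 0 reproduces the standard KF.
    """
    z = np.asarray(z, dtype=float)
    R = sigma_v**2
    x_a, P_a = x0, P0
    estimates = np.empty(z.size)
    alphas = np.empty(z.size)
    for k in range(z.size):
        x_f = phi * x_a                          # forecast state
        P_f = phi**2 * P_a + sigma_w**2          # forecast error variance
        K_kf = P_f / (P_f + R)                   # standard KF gain (alpha = 0)
        x_kf = x_f + K_kf * (z[k] - x_f)         # KF estimate used as best guess
        alpha = gamma * abs(x_kf)                # larger penalty for more extreme states
        P_infl = (1.0 + alpha) * P_f             # inflated forecast error variance
        K = P_infl / (P_infl + R)                # variance-inflated gain
        x_a = x_f + K * (z[k] - x_f)             # adaptive estimate
        P_a = (1.0 - K)**2 * P_f + K**2 * R      # error variance for the gain applied
        estimates[k], alphas[k] = x_a, alpha
    return estimates, alphas


# Illustrative run with assumed parameters (not the cases of Table 2).
rng = np.random.default_rng(2)
phi, sigma_w, sigma_v, n_steps = 0.9, 1.0, 2.0, 5000
truth = np.empty(n_steps)
x = 0.0
for k in range(n_steps):
    x = phi * x + rng.normal(0.0, sigma_w)
    truth[k] = x
obs = truth + rng.normal(0.0, sigma_v, n_steps)

est_kf, _ = adaptive_vikf_1d(obs, sigma_v, phi, sigma_w, gamma=0.0)
est_ad, alpha_series = adaptive_vikf_1d(obs, sigma_v, phi, sigma_w, gamma=0.5)

tail = np.abs(truth) > 2.0 * truth.std()
for name, est in (("KF", est_kf), ("adaptive", est_ad)):
    print(name,
          "RMSE all:", np.sqrt(np.mean((est - truth) ** 2)),
          "RMSE tail:", np.sqrt(np.mean((est[tail] - truth[tail]) ** 2)))
```

Replacing the KF estimate in the computation of α with the truth gives the perfectly prescribed case discussed above, which is possible only in a synthetic experiment.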

Figure 4.

Percent reduction in RMSE by adaptive CBPKF over KF in which α is prescribed using the KF estimate (left) and the truth (right).

Figure 5.

Example scatter plots of KF (black) and adaptive CBPKF (red) estimates vs. truth for Cases 1 (left) and 9 (right) in Table 2.

7. Conclusions

Conditional bias-penalized Kalman filter (CBPKF) has recently been developed to improve the estimation and prediction of extremes. The original formulation, however, is computationally very expensive, and deteriorates performance in the normal ranges relative to KF. In this work, we present a computationally less expensive alternative based on the variance-inflated KF (VIKF) approximation, and improve unconditional performance by adaptively prescribing the weight for the CB penalty. For evaluation, we carried out synthetic experiments using linear systems with varying degrees of dynamical model uncertainty, observational uncertainty, and predictability. The results indicate that the VIKF-based approximation of CBPKF provides a computationally much less expensive alternative to the original formulation, and that adaptive CBPKF performs comparably to KF in the unconditional sense while improving the estimation of extremes by about 20 to 30% over KF. It is also shown that additional improvement may be possible by improving adaptive prescription of the weight to the CB penalty using additional sources of information. The findings indicate that adaptive CBPKF offers a significant addition to the dynamic filtering methods for general application in data assimilation and, in particular, when or where the estimation of extremes is of importance. The findings in this work are based on idealized synthetic experiments that satisfy linearity and normality. Additional research is needed to assess performance for non-normal problems and for nonlinear problems using the ensemble extension [31], and to prescribe the weight for the CB penalty more skillfully.

Author Contributions

Conceptualization, D.-J.S.; methodology, D.-J.S., H.S., H.L.; software, H.S.; validation, H.S.; writing—original draft preparation, H.S., D.-J.S.; writing—review and editing, D.-J.S., H.S., H.L.; visualization, H.S.; supervision, D.-J.S.; project administration, D.-J.S.; funding acquisition, D.-J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science Foundation [CyberSEES-1442735], and the National Oceanic and Atmospheric Administration [NA16OAR4590232, NA17OAR4590174, NA17OAR4590184].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wood, A.W.; Lettenmaier, D.P. An ensemble approach for attribution of hydrologic prediction uncertainty. Geophys. Res. Lett. 2008, 35, L14401. [Google Scholar] [CrossRef] [Green Version]

- Gupta, H.V.; Clark, M.P.; Vrugt, J.A.; Abramowitz, G.; Ye, M. Towards a comprehensive assessment of model structural adequacy. Water Resour. Res. 2012, 48, W08301. [Google Scholar] [CrossRef]

- Antoniou, C.; Ben-Akiva, M.; Koutsopoulos, H.N. Nonlinear Kalman filtering algorithms for on-Line calibration of dynamic traffic assignment models. IEEE Trans. Intell. Transp. Syst. 2007, 8, 661–670. [Google Scholar] [CrossRef]

- Bhotto, M.Z.A.; Bajić, I.V. Constant modulus blind adaptive beamforming based on unscented Kalman filtering. IEEE Signal Process. Lett. 2015, 22, 474–478. [Google Scholar] [CrossRef]

- Bocher, M.; Fournier, A.; Coltice, N. Ensemble Kalman filter for the reconstruction of the Earth’s mantle circulation. Nonlinear Process. Geophys. 2018, 25, 99–123. [Google Scholar] [CrossRef] [Green Version]

- Chen, W.; Shen, H.; Huang, C.; Li, X. Improving soil moisture estimation with a dual ensemble Kalman smoother by jointly assimilating AMSR-E brightness temperature and MODIS LST. Remote Sens. 2017, 9, 273. [Google Scholar] [CrossRef] [Green Version]

- Gao, Z.; Shen, W.; Zhang, H.; Ge, M.; Niu, X. Application of helmert variance component based adaptive Kalman filter in multi-GNSS PPP/INS tightly coupled integration. Remote Sens. 2016, 8, 553. [Google Scholar] [CrossRef]

- Houtekamer, P.L.; Zhang, F. Review of the ensemble Kalman filter for atmospheric data assimilation. Mon. Weather Rev. 2016, 144, 4489–4532. [Google Scholar] [CrossRef]

- Jain, A.; Krishnamurthy, P.K. Phase noise tracking and compensation in coherent optical systems using Kalman filter. IEEE Commun. Lett. 2016, 20, 1072–1075. [Google Scholar] [CrossRef]

- Jiang, Y.; Liao, M.; Zhou, Z.; Shi, X.; Zhang, L.; Balz, T. Landslide deformation analysis by coupling deformation time series from SAR data with hydrological factors through data assimilation. Remote Sens. 2016, 8, 179. [Google Scholar] [CrossRef] [Green Version]

- Kurtz, W.; Franssen, H.-J.H.; Vereecken, H. Identification of time-variant river bed properties with the ensemble Kalman filter. Water Resour. Res. 2012, 48, W10534. [Google Scholar] [CrossRef] [Green Version]

- Lu, X.; Wang, L.; Wang, H.; Wang, X. Kalman filtering for delayed singular systems with multiplicative noise. IEEE/CAA J. Autom. Sin. 2016, 3, 51–58. [Google Scholar] [CrossRef]

- Lv, H.; Qi, F.; Zhang, Y.; Jiao, T.; Liang, F.; Li, Z.; Wang, J. Improved detection of human respiration using data fusion based on a multi-static UWB radar. Remote Sens. 2016, 8, 773. [Google Scholar] [CrossRef] [Green Version]

- Ma, R.; Zhang, L.; Tian, X.; Zhang, J.; Yuan, W.; Zheng, Y.; Zhao, X.; Kato, T. Assimilation of remotely-sensed leaf area index into a dynamic vegetation model for gross primary productivity estimation. Remote Sens. 2017, 9, 188. [Google Scholar] [CrossRef] [Green Version]

- Muñoz-Sabater, J. Incorporation of passive microwave brightness temperatures in the ECMWF soil moisture analysis. Remote Sens. 2015, 7, 5758–5784. [Google Scholar] [CrossRef] [Green Version]

- Nair, A.; Indu, J. Enhancing Noah land surface model prediction skill over Indian subcontinent by assimilating SMOPS blended soil moisture. Remote Sens. 2016, 8, 976. [Google Scholar] [CrossRef] [Green Version]

- Reichle, R.H.; McLaughlin, D.B.; Entekhabi, D. Hydrologic data assimilation with the ensemble Kalman filter. Mon. Weather Rev. 2002, 130, 103–114. [Google Scholar] [CrossRef] [Green Version]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef] [Green Version]

- de Wit, A.J.W.; van Diepen, C.A. Crop model data assimilation with the ensemble Kalman filter for improving regional crop yield forecasts. Agric. For. Meteorol. 2007, 146, 38–56. [Google Scholar] [CrossRef]

- Yan, M.; Tian, X.; Li, Z.; Chen, E.; Wang, X.; Han, Z.; Sun, H. Simulation of forest carbon fluxes using model incorporation and data assimilation. Remote Sens. 2016, 8, 567. [Google Scholar] [CrossRef] [Green Version]

- Yu, K.K.C.; Watson, N.R.; Arrillaga, J. An adaptive Kalman filter for dynamic harmonic state estimation and harmonic injection tracking. IEEE Trans. Power Del. 2005, 20, 1577–1584. [Google Scholar] [CrossRef]

- Dong, Z.; You, Z. Finite-horizon robust Kalman filtering for uncertain discrete time-varying systems with uncertain-covariance white noises. IEEE Signal Process. Lett. 2006, 13, 493–496. [Google Scholar] [CrossRef]

- Zhou, H.; Huang, H.; Zhao, H.; Zhao, X.; Yin, X. Adaptive unscented Kalman filter for target tracking in the presence of nonlinear systems involving model mismatches. Remote Sens. 2017, 9, 657. [Google Scholar] [CrossRef] [Green Version]

- Ciach, G.J.; Morrissey, M.L.; Krajewski, W.F. Conditional bias in radar rainfall estimation. J. Appl. Meteorol. 2000, 39, 1941–1946.

- Seo, D.-J.; Saifuddin, M.M.; Lee, H. Conditional bias-penalized Kalman filter for improved estimation and prediction of extremes. Stochastic Environ. Res. Risk Assess. 2018, 32, 183–201; Erratum in Stochastic Environ. Res. Risk Assess. 2018, 32, 3561–3562.

- Jolliffe, I.T.; Stephenson, D.B. Forecast Verification: A Practitioner’s Guide in Atmospheric Science; John Wiley & Sons: Hoboken, NJ, USA, 2003.

- Brown, J.D.; Seo, D.-J. A nonparametric postprocessor for bias correction of hydrometeorological and hydrologic ensemble forecasts. J. Hydrometeorol. 2010, 11, 642–665.

- Seo, D.-J. Conditional bias-penalized kriging (CBPK). Stochastic Environ. Res. Risk Assess. 2013, 27, 43–58.

- Seo, D.-J.; Siddique, R.; Zhang, Y.; Kim, D. Improving real-time estimation of heavy-to-extreme precipitation using rain gauge data via conditional bias-penalized optimal estimation. J. Hydrol. 2014, 519, 1824–1835.

- Kim, B.; Seo, D.-J.; Noh, S.J.; Prat, O.P.; Nelson, B.R. Improving multi-sensor estimation of heavy-to-extreme precipitation via conditional bias-penalized optimal estimation. J. Hydrol. 2018, 556, 1096–1109.

- Lee, H.; Noh, S.J.; Kim, S.; Shen, H.; Seo, D.-J.; Zhang, Y. Improving flood forecasting using conditional bias-penalized ensemble Kalman filter. J. Hydrol. 2019, 575, 596–611.

- Schweppe, F.C. Uncertain Dynamic Systems; Prentice-Hall: Englewood Cliffs, NJ, USA, 1973. Available online: https://openlibrary.org/books/OL5291577M/Uncertain_dynamic_systems (accessed on 12 January 2022).

- Woodbury, M.A. Inverting Modified Matrices; Princeton University: Princeton, NJ, USA, 1950.

- Hausman, J. Mismeasured variables in econometric analysis: Problems from the right and problems from the left. J. Econ. Perspect. 2001, 15, 57–67.

- Loader, C. Locfit: Local Regression, Likelihood and Density Estimation. 2013. Available online: https://CRAN.R-project.org/package=locfit (accessed on 12 January 2022).

- Lee, H.; Seo, D.-J. Assimilation of hydrologic and hydrometeorological data into distributed hydrologic model: Effect of adjusting mean field bias in radar-based precipitation estimates. Adv. Water Resour. 2014, 74, 196–211.

- Lee, H.; Seo, D.-J.; Koren, V. Assimilation of streamflow and in situ soil moisture data into operational distributed hydrologic models: Effects of uncertainties in the data and initial model soil moisture states. Adv. Water Resour. 2011, 34, 1597–1615.

- Lee, H.; Seo, D.-J.; Liu, Y.; Koren, V.; McKee, P.; Corby, R. Variational assimilation of streamflow into operational distributed hydrologic models: Effect of spatiotemporal scale of adjustment. Hydrol. Earth Syst. Sci. 2012, 16, 2233–2251.

- Lee, H.; Zhang, Y.; Seo, D.-J.; Xie, P. Utilizing satellite precipitation estimates for streamflow forecasting via adjustment of mean field bias in precipitation data and assimilation of streamflow observations. J. Hydrol. 2015, 529, 779–794.

- Rafieeinasab, A.; Seo, D.-J.; Lee, H.; Kim, S. Comparative evaluation of maximum likelihood ensemble filter and ensemble Kalman filter for real-time assimilation of streamflow data into operational hydrologic models. J. Hydrol. 2014, 519, 2663–2675.

- Seo, D.-J.; Koren, V.; Cajina, N. Real-time variational assimilation of hydrologic and hydrometeorological data into operational hydrologic forecasting. J. Hydrometeorol. 2003, 4, 627–641.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).