High-Resolution Hogel Image Generation Using GPU Acceleration

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

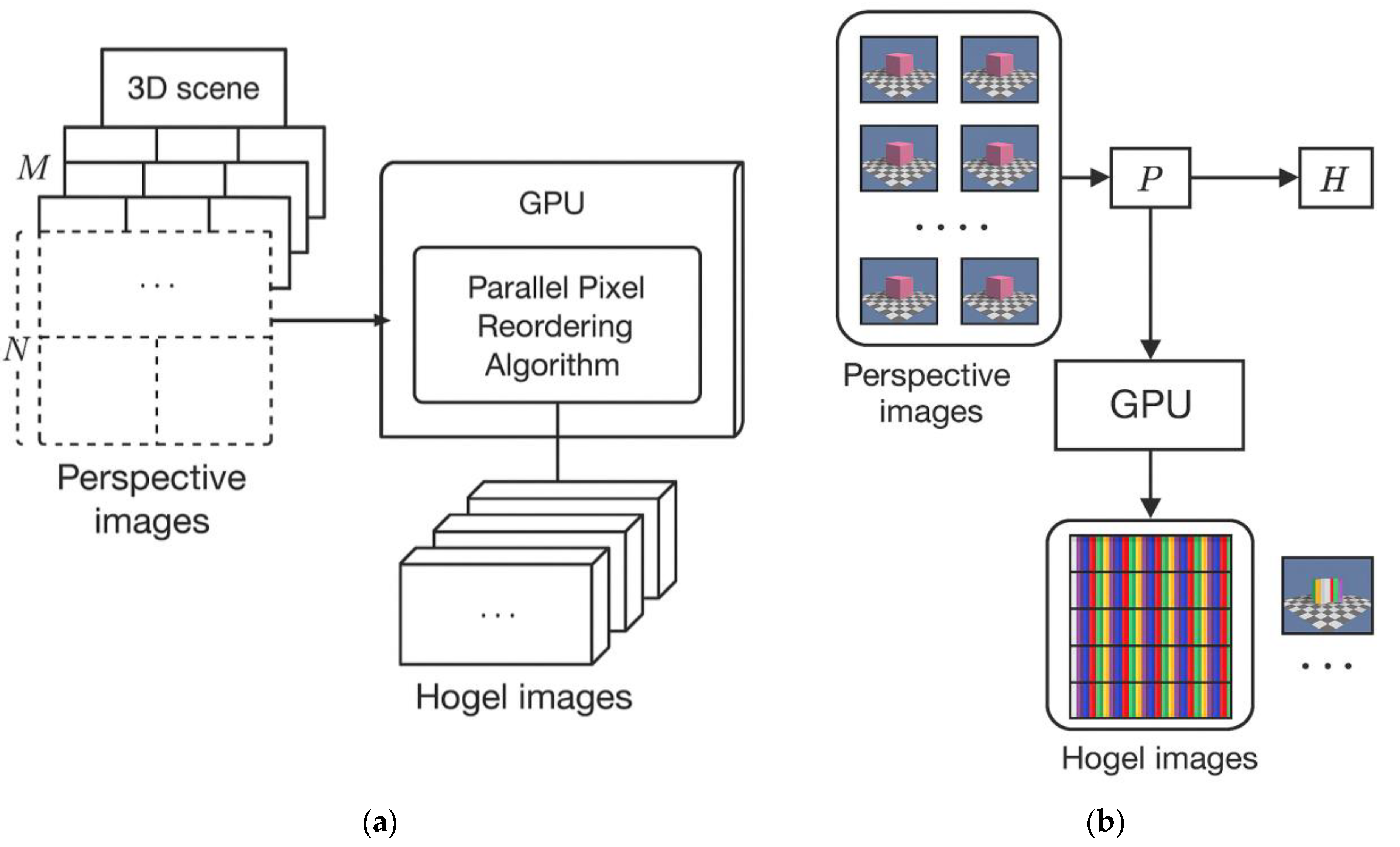

3.1. Overview of GPU Parallelization of the Hogel Generation Process

3.2. GPU Implementation Optimizations

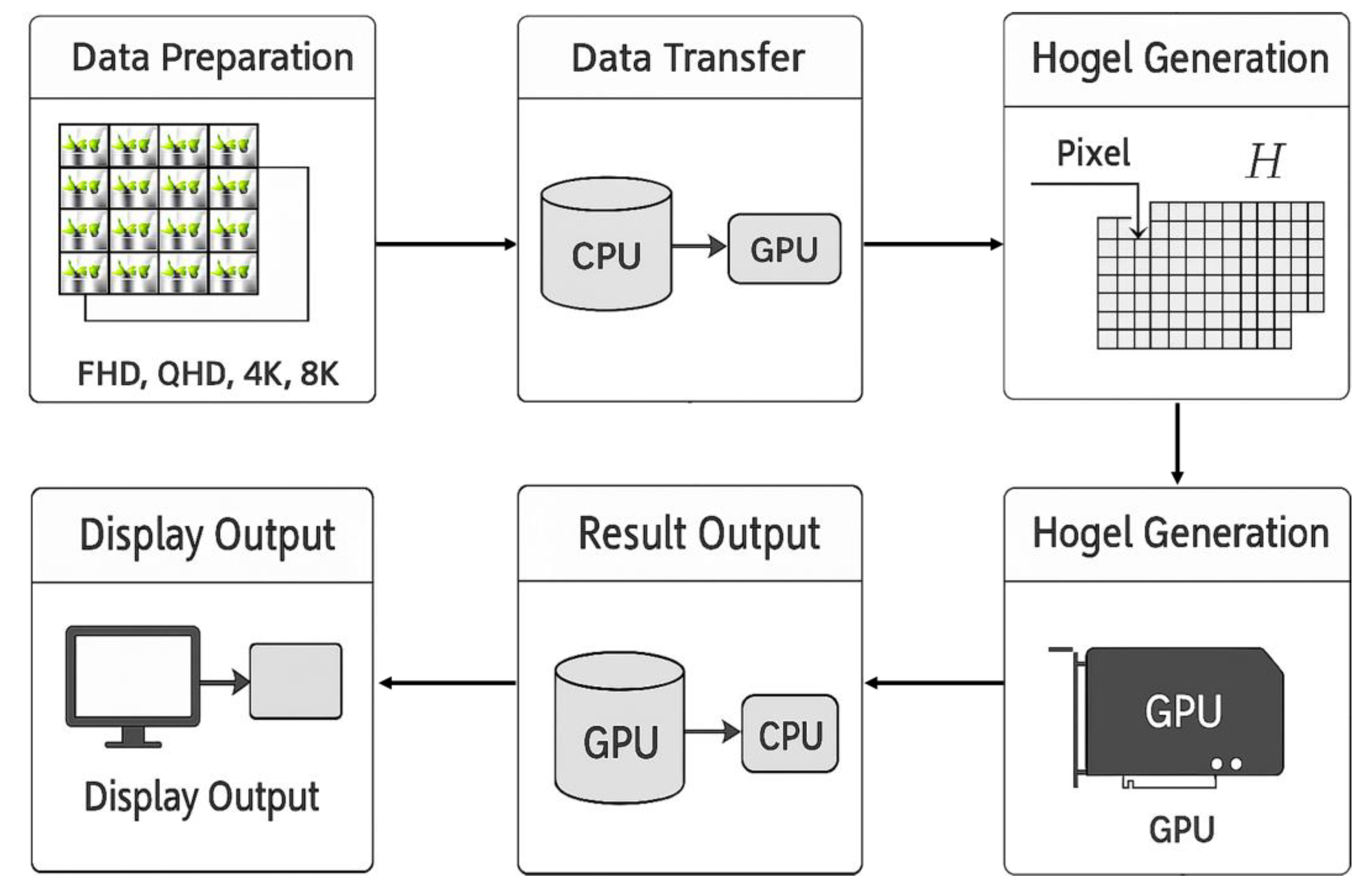

3.3. System Architecture and Workflow

- Data Preparation: Prepare multiple perspective images on the CPU, either captured with a camera array or rendered. In our experiments, we used 16 perspective images at FHD, QHD, 4K, and 8K resolutions.

- Data Transfer: Copy the set of perspective images into GPU memory, using the optimized transfer techniques described above.

- Parallel Hogel Generation: Launch the CUDA kernel on the GPU to perform the pixel rearrangement. Millions to billions of individual pixel-copy operations run in parallel to produce the hogel image array.

- Output: Either display the generated hogel images directly from the GPU or, if needed, transfer them back to CPU memory.
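The pixel rearrangement in the workflow above can be sketched as a gather kernel that assigns one thread to each output pixel. This is a minimal sketch under stated assumptions, not the authors' implementation: the memory layout (views stored contiguously as `views[v][u][y][x]`, one 8-bit channel), the view-to-hogel mapping without any optical flip, the 16 × 16 thread block, and the names `srcIndex`, `hogelKernel`, `hogelCpu`, and `hogelGpu` are all hypothetical. Error handling is reduced to aborting on any CUDA error.

```cuda
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// ASSUMED layout (hypothetical, for illustration only):
//   input : nu x nv perspective views, each W x H, one 8-bit channel,
//           stored contiguously as views[v][u][y][x]
//   output: a W x H grid of hogels, each nu x nv pixels, stored as a single
//           (H * nv) x (W * nu) row-major image
// Hogel (x, y), pixel (u, v) takes pixel (x, y) of view (u, v); no flip.
__host__ __device__ inline size_t srcIndex(int X, int Y, int W, int H,
                                           int nu, int nv) {
    int x = X / nu, u = X % nu;  // hogel column, view column
    int y = Y / nv, v = Y % nv;  // hogel row, view row
    return ((static_cast<size_t>(v) * nu + u) * H + y) * W + x;
}

// One thread per output pixel: a pure gather with no inter-thread dependencies.
__global__ void hogelKernel(const uint8_t* views, uint8_t* hogels,
                            int W, int H, int nu, int nv) {
    int X = blockIdx.x * blockDim.x + threadIdx.x;
    int Y = blockIdx.y * blockDim.y + threadIdx.y;
    int outW = W * nu, outH = H * nv;
    if (X >= outW || Y >= outH) return;  // guard partial blocks at the edges
    hogels[static_cast<size_t>(Y) * outW + X] = views[srcIndex(X, Y, W, H, nu, nv)];
}

// Sequential CPU version of the same mapping (baseline and reference).
void hogelCpu(const uint8_t* views, uint8_t* hogels,
              int W, int H, int nu, int nv) {
    int outW = W * nu, outH = H * nv;
    for (int Y = 0; Y < outH; ++Y)
        for (int X = 0; X < outW; ++X)
            hogels[static_cast<size_t>(Y) * outW + X] =
                views[srcIndex(X, Y, W, H, nu, nv)];
}

// Abort on any CUDA error (kept minimal for the sketch).
static void check(cudaError_t e) {
    if (e != cudaSuccess) std::abort();
}

// Data transfer -> parallel hogel generation -> copy the result back to the host.
std::vector<uint8_t> hogelGpu(const std::vector<uint8_t>& views,
                              int W, int H, int nu, int nv) {
    const size_t n = static_cast<size_t>(W) * H * nu * nv;  // pixels in == pixels out
    assert(views.size() == n);
    uint8_t* dViews = nullptr;
    uint8_t* dHogels = nullptr;
    check(cudaMalloc(&dViews, n));
    check(cudaMalloc(&dHogels, n));
    check(cudaMemcpy(dViews, views.data(), n, cudaMemcpyHostToDevice));

    dim3 block(16, 16);
    dim3 grid((W * nu + block.x - 1) / block.x, (H * nv + block.y - 1) / block.y);
    hogelKernel<<<grid, block>>>(dViews, dHogels, W, H, nu, nv);
    check(cudaGetLastError());

    std::vector<uint8_t> hogels(n);
    check(cudaMemcpy(hogels.data(), dHogels, n, cudaMemcpyDeviceToHost));
    check(cudaFree(dViews));
    check(cudaFree(dHogels));
    return hogels;
}
```

Because every output pixel reads exactly one input pixel, the kernel needs no synchronization between threads; `hogelCpu` is the kind of nested loop a CPU baseline would run, and it doubles as a reference for checking `hogelGpu`. A real hogel layout may also reverse the view indices, depending on the printer or display optics.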

4. Results and Discussion

4.1. Experimental Setup and Configuration

4.2. Speed Comparison and Analysis

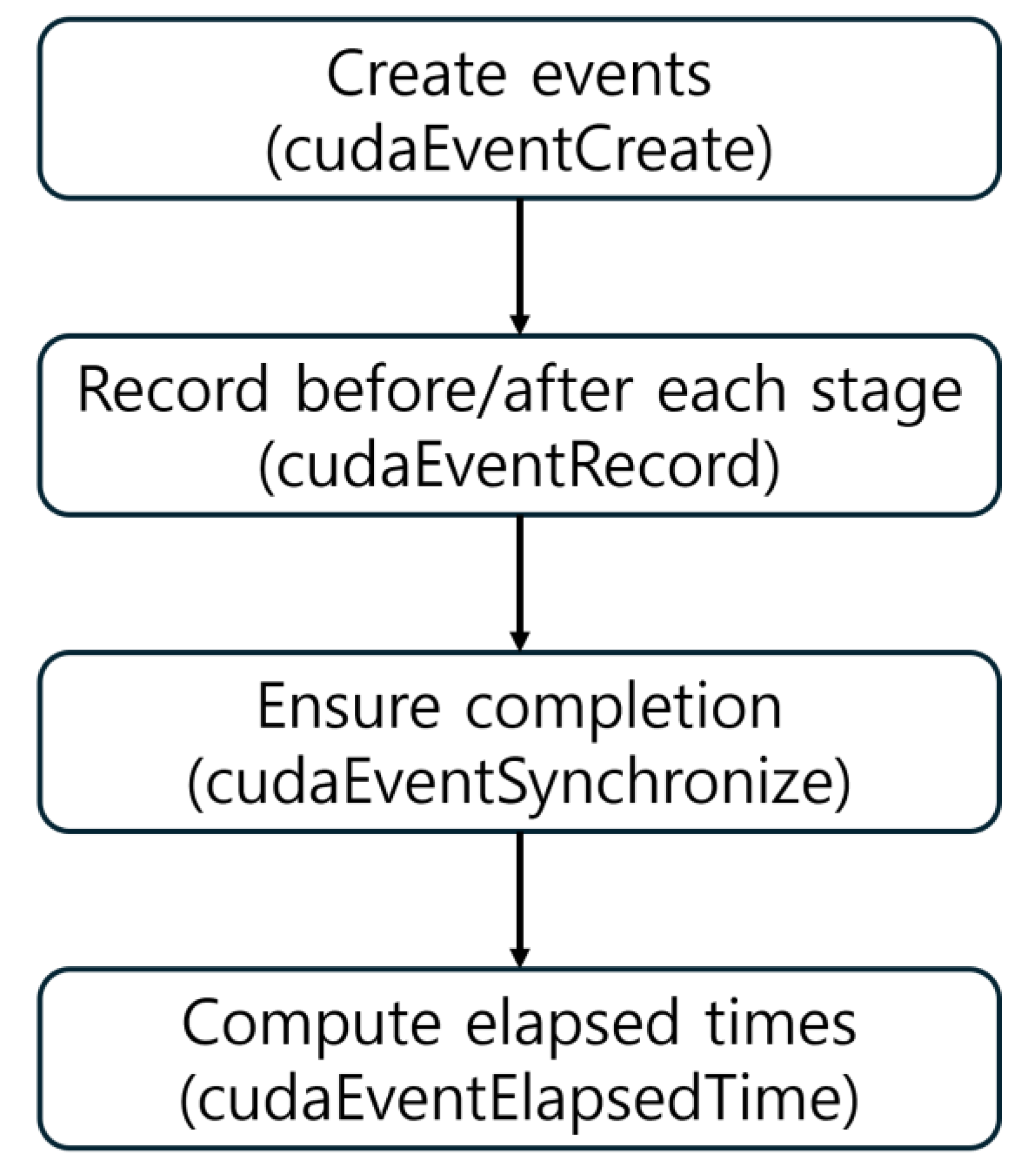

Stage timings were measured with CUDA events:

- Create events (cudaEventCreate);

- Record before/after each stage (cudaEventRecord);

- Ensure completion (cudaEventSynchronize); and

- Compute elapsed times (cudaEventElapsedTime).
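The four timing steps listed above map directly onto the CUDA runtime event API. The sketch below, a minimal illustration rather than the paper's benchmark code, times a host-to-device copy, a kernel, and a device-to-host copy on the default stream. `placeholderKernel`, `timeStages`, and `StageTimes` are hypothetical names, and the kernel is only a stand-in for the hogel kernel.

```cuda
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort on any CUDA error (kept minimal for the sketch).
static void check(cudaError_t e) {
    if (e != cudaSuccess) std::abort();
}

// HYPOTHETICAL stand-in for the hogel kernel: inverts each byte.
__global__ void placeholderKernel(uint8_t* data, size_t n) {
    size_t i = static_cast<size_t>(blockIdx.x) * blockDim.x + threadIdx.x;
    if (i < n) data[i] = static_cast<uint8_t>(255 - data[i]);
}

struct StageTimes {
    float h2dMs = 0.0f, kernelMs = 0.0f, d2hMs = 0.0f;
};

// Times H2D copy, kernel, and D2H copy with CUDA events on the default stream.
StageTimes timeStages(uint8_t* host, size_t n) {
    assert(host != nullptr && n > 0);
    uint8_t* dev = nullptr;
    check(cudaMalloc(&dev, n));

    cudaEvent_t ev[4];
    for (cudaEvent_t& e : ev) check(cudaEventCreate(&e));   // 1. create events

    check(cudaEventRecord(ev[0]));                           // 2. record around each stage
    check(cudaMemcpy(dev, host, n, cudaMemcpyHostToDevice));
    check(cudaEventRecord(ev[1]));
    placeholderKernel<<<static_cast<unsigned>((n + 255) / 256), 256>>>(dev, n);
    check(cudaGetLastError());
    check(cudaEventRecord(ev[2]));
    check(cudaMemcpy(host, dev, n, cudaMemcpyDeviceToHost));
    check(cudaEventRecord(ev[3]));
    check(cudaEventSynchronize(ev[3]));                      // 3. wait for the last event

    StageTimes t;                                            // 4. elapsed times in ms
    check(cudaEventElapsedTime(&t.h2dMs, ev[0], ev[1]));
    check(cudaEventElapsedTime(&t.kernelMs, ev[1], ev[2]));
    check(cudaEventElapsedTime(&t.d2hMs, ev[2], ev[3]));

    for (cudaEvent_t e : ev) check(cudaEventDestroy(e));
    check(cudaFree(dev));
    return t;
}
```

Since all four events are recorded on the same stream, waiting on the last one guarantees the earlier ones have completed; `cudaEventElapsedTime` then returns milliseconds between any recorded pair, so consecutive pairs give per-stage times and the pair (`ev[0]`, `ev[3]`) would give the end-to-end time.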

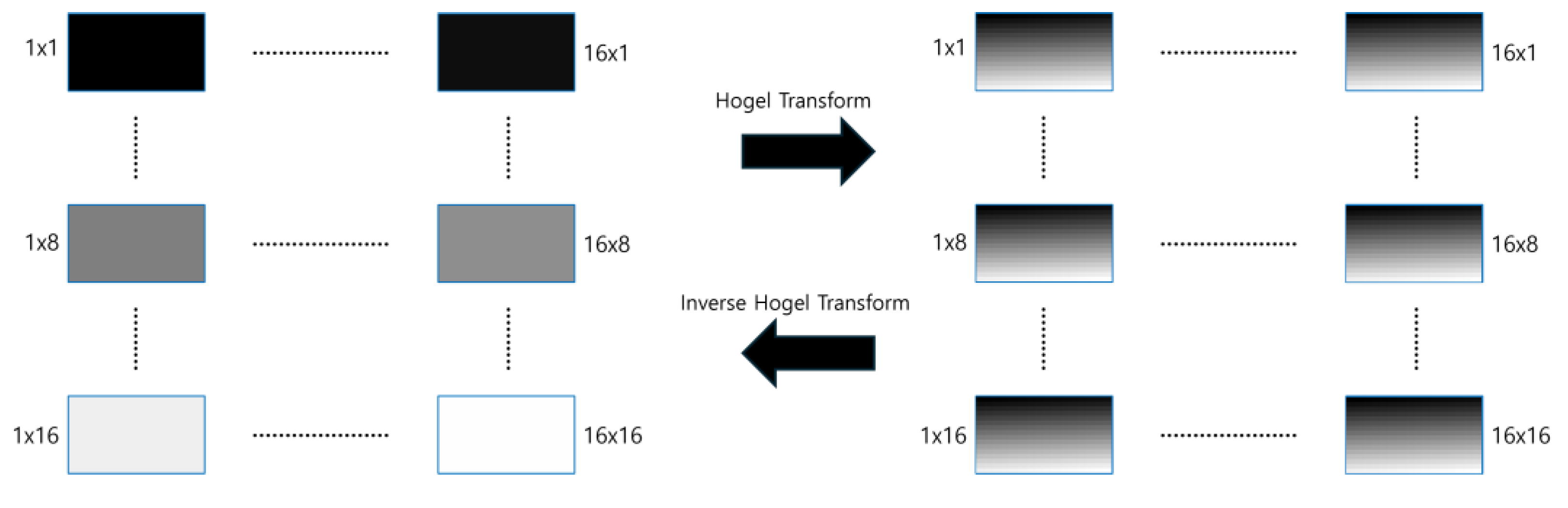

4.3. Visualization and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

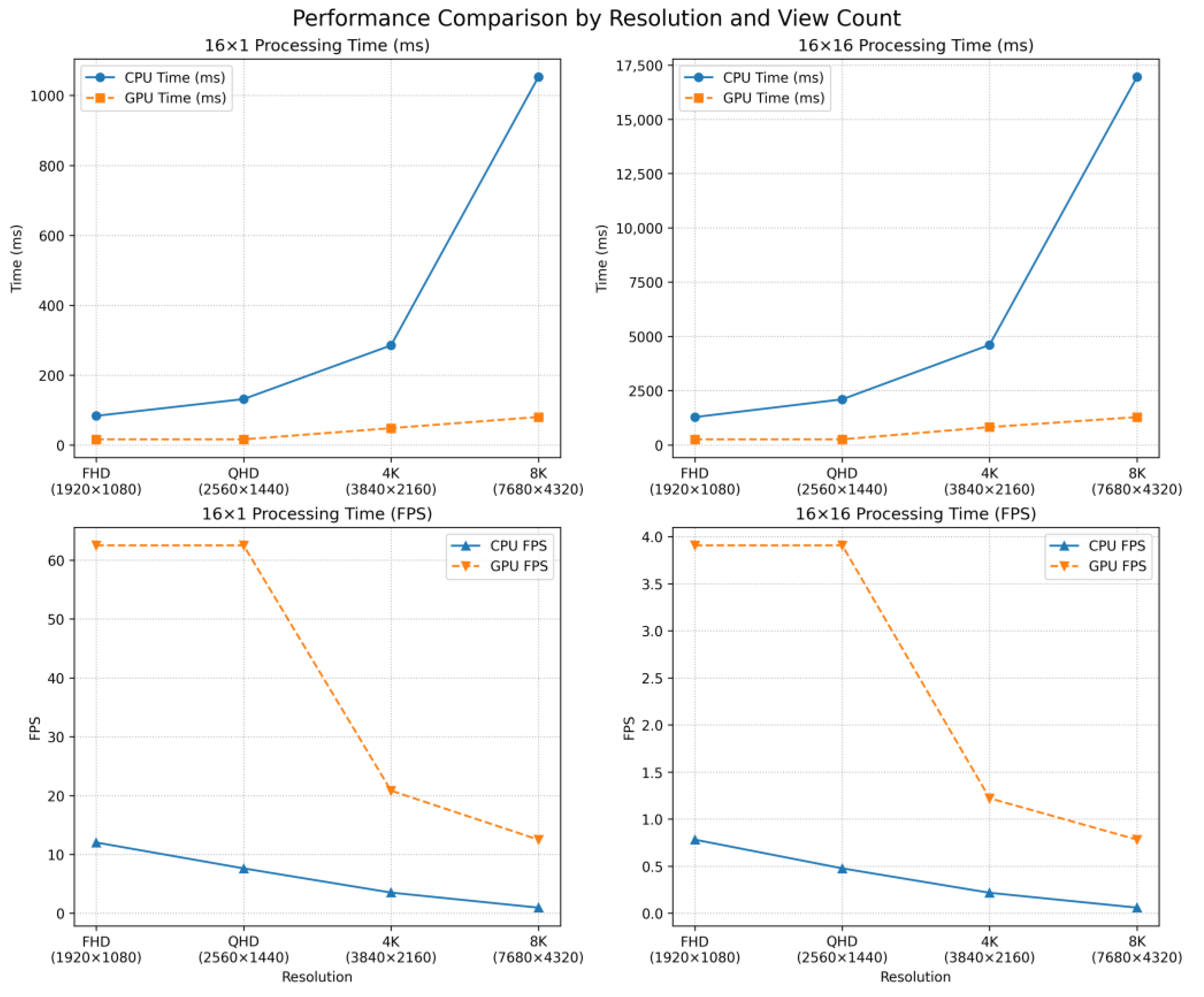

| Resolution | Views | CPU Time (ms) | GPU Time (ms) | Speedup (×) |
|---|---|---|---|---|
| FHD (1920 × 1080) | 1 × 1 | 83.2 (12 FPS) | 16 (62.5 FPS) | 5.2 |
| QHD (2560 × 1440) | 16 × 1 | 131.2 (7.6 FPS) | 16 (62.5 FPS) | 8.2 |
| 4K (3840 × 2160) | 16 × 1 | 248.8 (3.5 FPS) | 48 (20.8 FPS) | 5.1 |
| 8K (7680 × 4320) | 16 × 1 | 1052.8 (0.9 FPS) | 80 (12.5 FPS) | 13.1 |
| FHD (1920 × 1080) | 16 × 16 | 1280 (0.7 FPS) | 256 (3.9 FPS) | 5.0 |
| QHD (2560 × 1440) | 16 × 16 | 2099.2 (0.4 FPS) | 256 (3.9 FPS) | 8.2 |
| 4K (3840 × 2160) | 16 × 16 | 4608 (0.2 FPS) | 819.2 (1.2 FPS) | 5.6 |
| 8K (7680 × 4320) | 16 × 16 | 16,947.2 (0.05 FPS) | 1280 (0.7 FPS) | 13.2 |
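Assuming the tabulated times are milliseconds per frame, which matches pairs such as 16 ms and 62.5 FPS, the frame-rate and speedup columns follow from the two relations below, checked here against the first and last rows:

$$
\mathrm{FPS} = \frac{1000}{t\ [\mathrm{ms}]}, \qquad S = \frac{t_{\mathrm{CPU}}}{t_{\mathrm{GPU}}}
$$

$$
\text{FHD},\ 1 \times 1:\quad \frac{1000}{83.2} \approx 12.0\ \mathrm{FPS}, \quad \frac{1000}{16} = 62.5\ \mathrm{FPS}, \quad S = \frac{83.2}{16} = 5.2
$$

$$
\text{8K},\ 16 \times 16:\quad S = \frac{16{,}947.2}{1280} \approx 13.2
$$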

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, H.; Kim, B.; Seo, Y. High-Resolution Hogel Image Generation Using GPU Acceleration. Photonics 2025, 12, 882. https://doi.org/10.3390/photonics12090882

Kang H, Kim B, Seo Y. High-Resolution Hogel Image Generation Using GPU Acceleration. Photonics. 2025; 12(9):882. https://doi.org/10.3390/photonics12090882

Chicago/Turabian Style: Kang, Hyunmin, Byungjoon Kim, and Yongduek Seo. 2025. "High-Resolution Hogel Image Generation Using GPU Acceleration" Photonics 12, no. 9: 882. https://doi.org/10.3390/photonics12090882

APA Style: Kang, H., Kim, B., & Seo, Y. (2025). High-Resolution Hogel Image Generation Using GPU Acceleration. Photonics, 12(9), 882. https://doi.org/10.3390/photonics12090882