2.1. FMCW LiDAR Non-Linearity

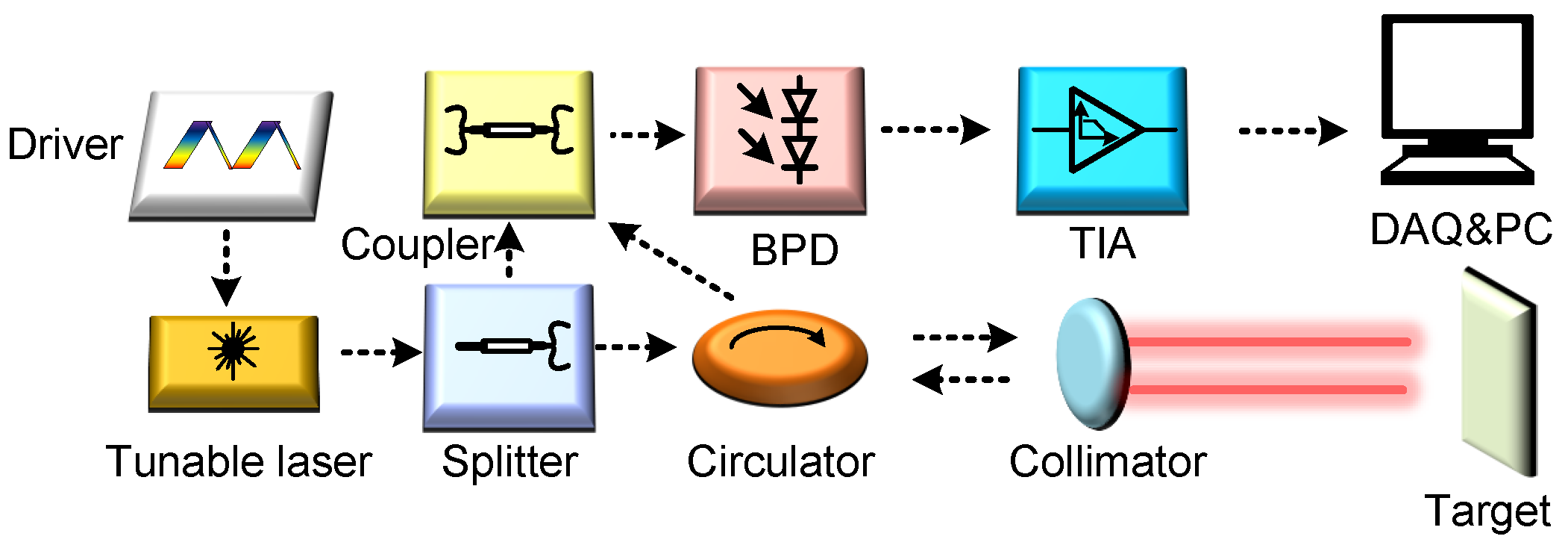

The fundamental architecture of the FMCW LiDAR system is illustrated in Figure 1, which presents a block diagram of a direct detection-based laser ranging configuration. In this system, a triangular waveform generated by a driver modulates a tunable laser source, producing a continuous-wave optical signal with a periodically swept frequency. The emitted light is split into two paths: the measurement beam, directed toward the target via a circulator, and the reference (local) beam, sent to an optical coupler. The measurement beam is collimated, transmitted to the target, and the reflected signal is re-collimated and guided back through the circulator to the coupler. There, it interferes with the local beam, and the resulting optical interference is detected via a balanced photodetector (BPD), which converts it into an electrical beat frequency signal. This signal is then amplified via a trans-impedance amplifier (TIA). The target distance is determined by analyzing the frequency of the beat signal.

External-cavity semiconductor frequency-modulated lasers, which achieve modulation by driving cavity mirrors to vary the optical path length, are widely adopted due to their superior FM linearity, wavelength stability, narrow linewidth, low noise, high side-mode suppression ratio, elevated output power, and compact integration. Despite these advantages, practical implementation is challenged by nonlinearities arising from piezoelectric actuator (PZT) hysteresis, mechanical vibrations, and circuit noise. These effects distort the frequency sweep, introducing errors in the beat frequency and thereby degrading ranging resolution and accuracy. As depicted in Figure 2, the dashed line illustrates the ideal linear frequency modulation trajectory, while the solid line represents the actual, non-ideal modulation behavior encountered in real-world scenarios.

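Within one laser modulation period, $t \in [0, T]$, the instantaneous optical frequencies of the local light, $f_L(t)$, and the measurement light, $f_M(t)$, can be written as follows:

$$f_L(t) = f_0 + kt, \qquad f_M(t) = f_0 + k(t - \tau) \tag{1}$$

Let $f_0$ denote the initial optical frequency and $k$ the modulation rate, defined as $k = B/T$, where $B$ represents the laser’s frequency sweep bandwidth and $T$ the modulation period. The parameter $\tau$ corresponds to the round-trip propagation delay associated with a target located at distance $R$, and it is given as follows:

$$\tau = \frac{2R}{c} \tag{2}$$

where $c$ is the speed of light. When considering the nonlinear term $e(t)$ of the FMCW LiDAR system, Equation (1) can be written as follows:

$$f_L(t) = f_0 + kt + e(t), \qquad f_M(t) = f_0 + k(t - \tau) + e(t - \tau) \tag{3}$$

To calculate the beat frequency, we first need to obtain the phases of the local light and the measurement light:

$$\varphi_L(t) = 2\pi \int_0^t f_L(u)\,du = 2\pi \left[ f_0 t + \tfrac{1}{2} k t^2 + \int_0^t e(u)\,du \right], \qquad \varphi_M(t) = \varphi_L(t - \tau) \tag{4}$$

Then, the phase difference between the measurement light signal and the local light signal from Equation (4) can be expressed as follows:

$$\Delta\varphi(t) = \varphi_L(t) - \varphi_M(t) = 2\pi \left[ f_0 \tau + k \tau t - \tfrac{1}{2} k \tau^2 + \int_{t-\tau}^{t} e(u)\,du \right] \tag{5}$$

Since practical ranging scenarios typically occur within 300 m, the round-trip time $\tau$ is at most $2\,\mu\text{s}$. Given that the modulation period $T$ of FMCW LiDAR is generally around $100\,\mu\text{s}$, we have $\tau \ll T$. Therefore, we neglect the term $\tfrac{1}{2} k \tau^2$; $e(t)$ is considered invariant over $[t - \tau, t]$, and we obtain $\int_{t-\tau}^{t} e(u)\,du \approx e(t)\,\tau$.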
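The delay and beat-frequency relations above can be checked numerically. The following is a minimal sketch, assuming the ideal (linear) sweep with $k = B/T$; the function names and the 1 GHz bandwidth in the usage note are illustrative assumptions, not values taken from this work.

```python
C = 299_792_458.0  # speed of light in m/s


def round_trip_delay(distance_m: float) -> float:
    """Round-trip propagation delay tau = 2R / c, in seconds."""
    return 2.0 * distance_m / C


def ideal_beat_frequency(distance_m: float, bandwidth_hz: float, period_s: float) -> float:
    """Beat frequency of an ideal linear sweep, f_b = k * tau with k = B / T (assumed)."""
    slope = bandwidth_hz / period_s
    return slope * round_trip_delay(distance_m)


def range_from_beat(beat_hz: float, bandwidth_hz: float, period_s: float) -> float:
    """Invert f_b = k * tau to recover the distance R = c * f_b / (2k)."""
    slope = bandwidth_hz / period_s
    return C * beat_hz / (2.0 * slope)


# Delay for the 300 m upper bound quoted in the text, and its ratio to T = 100 us.
tau_max = round_trip_delay(300.0)
delay_to_period_ratio = tau_max / 100e-6
```

With an assumed 1 GHz sweep over 100 µs, `ideal_beat_frequency(150.0, 1e9, 100e-6)` followed by `range_from_beat(...)` returns the original 150 m, and `delay_to_period_ratio` is about 0.02, which is the sense in which $\tau \ll T$ is used above.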

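Thus, according to Equation (5), the beat signal in the presence of nonlinearity can be expressed as follows:

$$s_b(t) = A \cos\left( 2\pi \left[ f_0 \tau + k \tau t + e(t)\,\tau \right] \right) \tag{6}$$

where $A$ is the amplitude of the beat signal.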

And the distance

R of the target can be rewritten as follows:

The accuracy of the beat signal frequency directly determines the precision and resolution of distance measurements in FMCW LiDAR. The ranging resolution is defined as $\Delta R = c/(2B)$. When a window function is applied to the beat signal spectrum via Fourier transform [23], the theoretical spatial resolution (TSR) corresponds to the full width at half maximum (FWHM) of the spectral peak, given by

. To evaluate the deviation in beat signal frequency $\Delta f_b$ introduced by modulation nonlinearity, the bandwidth is estimated using Carson’s rule [24]:

$$\Delta f_b = 2(\beta + 1) f_m \tag{8}$$
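As a small numerical illustration of Carson’s rule, the sketch below computes the estimated bandwidth from an RMS frequency error and a modulation repetition frequency, and inverts the relation. It assumes that the frequency deviation in Carson’s rule is taken to be the RMS nonlinear error, as the surrounding text suggests; the function names and the example numbers are hypothetical.

```python
def carson_bandwidth(rms_error_hz: float, repetition_hz: float) -> float:
    """Carson's rule: bandwidth = 2 * (beta + 1) * f_m, with beta = deviation / f_m."""
    beta = rms_error_hz / repetition_hz  # modulation index
    return 2.0 * (beta + 1.0) * repetition_hz


def rms_error_from_bandwidth(bandwidth_hz: float, repetition_hz: float) -> float:
    """Invert Carson's rule to estimate the RMS nonlinear error from a measured bandwidth."""
    return bandwidth_hz / 2.0 - repetition_hz


# Illustrative values only: 40 kHz RMS error, 10 kHz modulation repetition frequency.
example_bandwidth = carson_bandwidth(40e3, 10e3)
```

Used in the inverse direction, `rms_error_from_bandwidth` turns a bandwidth read off the beat spectrum into an estimate of the sweep nonlinearity, which is the quantitative evaluation the text describes.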

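In Equation (8), $f_m$ is the repetition frequency of the FMCW LiDAR modulation signal and $\beta$ is the modulation index, with $\beta = e_{\mathrm{rms}} / f_m$, where $e_{\mathrm{rms}}$ is the root mean square (RMS) value of the nonlinear error. Thus, Equation (8) can be rewritten as $\Delta f_b = 2(e_{\mathrm{rms}} + f_m)$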

. In this way, by analyzing the spectrum of the beat signal, we are able to quantitatively evaluate the sweep nonlinearity, and the

can be rewritten as [

19]:

Since the magnitude of the modulation slope $k$ can be controlled to achieve compensation, the beat frequency after compensation

can be expressed as follows:

The compensated distance

can be expressed as follows:

To establish the reinforcement learning environment, we adopt the methodology presented in [19]. The environment is constructed using experimentally obtained data and the underlying principles of FMCW LiDAR measurement. A nonlinear setting is simulated by incorporating Gaussian noise, effectively emulating the real-world nonlinear behavior of FMCW systems. This approach enables efficient policy training without direct interaction with the physical hardware, thereby mitigating the risk of potential damage to laser components. The first step involves formulating the physical relationship between the beat frequency and the modulation slope:

The

denotes the Hilbert transform, and

can also be expressed as follows:

, with

denoting the nonlinear characteristic. The noise term

is then introduced to model the stochastic properties of the system:

Based on the given modulation slope, the modulated beat frequency is calculated, and the resulting model serves as the training environment for the RL algorithm.
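To make the idea of a simulated training environment concrete, the following is a minimal sketch and not the environment used in this work. It assumes the simplest possible relation, beat frequency = slope × delay plus additive Gaussian noise; the class name, the reward (negative absolute deviation from the nominal beat frequency), and all default values are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


class BeatFrequencyEnv:
    """Toy stand-in for a simulated FMCW environment (all defaults are assumptions)."""

    def __init__(self, distance_m=10.0, nominal_slope=1e13, noise_std_hz=1e3, seed=0):
        self.tau = 2.0 * distance_m / C        # round-trip delay in seconds
        self.nominal_slope = nominal_slope     # ideal modulation slope in Hz/s
        self.noise_std_hz = noise_std_hz       # std of the Gaussian noise term
        self.rng = np.random.default_rng(seed)
        self.slope = nominal_slope

    def _observe(self):
        # Beat frequency for the current slope, perturbed by Gaussian noise.
        noise = self.rng.normal(0.0, self.noise_std_hz)
        return self.slope * self.tau + noise

    def reset(self):
        self.slope = self.nominal_slope
        return self._observe()

    def step(self, slope_delta):
        # The action adjusts the modulation slope; the reward penalizes the
        # deviation of the observed beat frequency from its nominal value.
        self.slope += slope_delta
        beat = self._observe()
        reward = -abs(beat - self.nominal_slope * self.tau)
        return beat, reward
```

An agent would call `reset()` once per episode and `step(action)` at each time step; because everything runs in software, a poorly trained policy cannot damage the laser, which is the motivation given above.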

2.2. Soft Actor–Critic with Hybrid Prioritized Experience Replay

The Soft Actor–Critic (SAC) algorithm represents a state-of-the-art reinforcement learning approach founded on the principle of maximum entropy policy optimization. Unlike conventional methods that primarily enhance policy performance through Q-value estimation of a given policy $\pi$, SAC employs a dual-stage optimization process involving both the value and policy functions. This design eliminates the reliance on manually tuned exploration noise parameters, which are often associated with inefficient and unstable policy learning. A distinguishing feature of SAC is its incorporation of an adaptive temperature coefficient, $\alpha$, which is dynamically optimized as part of the objective function. This mechanism obviates the need for manual hyperparameter adjustment, thereby enhancing the algorithm’s ability to adapt to varying environmental complexities and different phases of the training process. Additionally, entropy regularization encourages broader exploration across the state–action space while suppressing redundant interactions with less informative regions. This exploratory efficiency renders SAC particularly well-suited to environments that demand a balance between exploration and learning stability.

Despite these advantages, SAC exhibits limitations due to its uniform sampling strategy, which treats all experience samples with equal priority. This can lead to slower convergence and instability during training. To address these issues, prioritized experience replay is introduced into the SAC framework. By emphasizing samples with higher value function estimation errors and suboptimal policy outcomes, the network is guided to focus on experiences with greater learning potential. This modification enhances both the stability and convergence rate of the learning process.

The SAC algorithm can be formally described as a policy search within the framework of a Markov decision process (MDP). By extending the standard reward maximization objective with a maximum entropy component, the algorithm aims to jointly maximize the expected return and the entropy of the policy. The resulting policy objective, $J(\pi)$, is given as follows:

$$J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi} \left[ r(s_t, a_t) + \alpha\, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]$$

In this context, $r(s_t, a_t)$ represents the immediate reward received from the environment when the agent is in state $s_t$ and executes action $a_t$

and can be expressed as

in this paper. The term $\mathcal{H}\big(\pi(\cdot \mid s_t)\big)$ denotes the entropy of the action distribution under policy $\pi$, given state $s_t$, which quantifies the level of randomness in the agent’s action selection at that state. A higher entropy indicates a more exploratory policy. The coefficient $\alpha$ regulates the contribution of the entropy term. The SAC algorithm consists of two primary components: policy evaluation and policy improvement. In the policy evaluation phase, the soft Q-value function, denoted as $Q(s_t, a_t)$, is defined as follows:

$$Q(s_t, a_t) = r(s_t, a_t) + \gamma\, \mathbb{E}_{s_{t+1} \sim p} \left[ V(s_{t+1}) \right]$$

where $\gamma$ is the discount factor, and the soft state value function $V(s_t)$ is defined as follows:

$$V(s_t) = \mathbb{E}_{a_t \sim \pi} \left[ Q(s_t, a_t) - \alpha \log \pi(a_t \mid s_t) \right]$$

The term $\mathbb{E}_{a_t \sim \pi}\left[ Q(s_t, a_t) \right]$ represents the expected soft Q-value obtained by selecting an action according to the current policy in state $s_t$, capturing the anticipated return following the agent’s decision. The entropy regularization component, $-\alpha \log \pi(a_t \mid s_t)$, promotes stochasticity in the policy by penalizing certainty, thereby encouraging exploration. The temperature parameter $\alpha$ modulates this term, balancing exploration and exploitation by scaling the entropy contribution. Importantly, $\alpha$ is updated adaptively to optimize the trade-off between reward maximization and entropy. This adjustment follows the gradient update rule:

$$\alpha' = \alpha - \eta_\alpha \nabla_\alpha J(\alpha)$$

where $\alpha$ and $\alpha'$ denote the current and updated temperature coefficients, respectively, $\eta_\alpha$ is the learning rate, and $\nabla_\alpha J(\alpha)$ is the gradient of the entropy-related objective with respect to $\alpha$.
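The temperature update can be illustrated with a short NumPy sketch. It assumes the temperature objective commonly used with SAC, $J(\alpha) = \mathbb{E}\left[-\alpha(\log \pi(a \mid s) + h)\right]$, estimated as a batch mean; the function names and the lower clamp on $\alpha$ are illustrative assumptions rather than details of the method described here.

```python
import numpy as np


def temperature_objective(alpha, log_probs, target_entropy):
    """Batch estimate of J(alpha) = mean(-alpha * (log pi + h)) (assumed form)."""
    return float(np.mean(-alpha * (log_probs + target_entropy)))


def temperature_gradient(log_probs, target_entropy):
    """Gradient of the batch objective with respect to alpha."""
    return float(np.mean(-(log_probs + target_entropy)))


def update_temperature(alpha, log_probs, target_entropy, lr):
    """One gradient-descent step on alpha, clamped to stay positive (assumption)."""
    grad = temperature_gradient(log_probs, target_entropy)
    return max(alpha - lr * grad, 1e-8)
```

When the policy is more random than the target (the batch mean of $-\log \pi$ exceeds $h$), the gradient is positive and $\alpha$ decreases, reducing the entropy bonus; when the policy is too deterministic, $\alpha$ increases.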

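The temperature objective is estimated over a mini-batch as follows:

$$J(\alpha) = \frac{1}{N} \sum_{i=1}^{N} \left[ -\alpha \left( \log \pi(a_i \mid s_i) + h \right) \right]$$

where $N$ denotes the size of the batch sampled from the experience replay buffer and $h$ is the target entropy. In the policy improvement stage, we optimize the policy using the Kullback–Leibler divergence [25]:

$$\pi_{\mathrm{new}} = \arg\min_{\pi' \in \Pi} D_{\mathrm{KL}} \left( \pi'(\cdot \mid s_t) \,\Big\|\, \frac{\exp\left( \frac{1}{\alpha} Q^{\pi_{\mathrm{old}}}(s_t, \cdot) \right)}{Z^{\pi_{\mathrm{old}}}(s_t)} \right)$$

where $Z^{\pi_{\mathrm{old}}}(s_t)$ is the normalizing partition function, and the output of the policy $\pi$ is a probability distribution. The parameters $\theta$ of the soft Q-function $Q_\theta$ can be trained to minimize the soft Bellman residual:

$$J_Q(\theta) = \mathbb{E}_{(s_t, a_t) \sim \mathcal{D}} \left[ \tfrac{1}{2} \left( Q_\theta(s_t, a_t) - \hat{Q}(s_t, a_t) \right)^2 \right]$$

Here, $\hat{Q}(s_t, a_t) = r(s_t, a_t) + \gamma\, \mathbb{E}_{s_{t+1} \sim p} \left[ V_{\bar{\theta}}(s_{t+1}) \right]$ is the target value, and $Q_{\bar{\theta}}$, used to evaluate $V_{\bar{\theta}}$, denotes the target Q-value network, whose parameters $\bar{\theta}$ are obtained as an exponential moving average of the parameters $\theta$ of the Q-value function. The soft actor–critic algorithm uses the reparameterization trick to redefine the policy $\pi_\phi$ as follows:

$$a_t = f_\phi(\epsilon_t; s_t)$$

where $\epsilon_t$ is the random noise variable of the reparameterization trick. According to the above definition, the update gradient of the soft Q-function is

$$\hat{\nabla}_\theta J_Q(\theta) = \nabla_\theta Q_\theta(s_t, a_t) \Big( Q_\theta(s_t, a_t) - r(s_t, a_t) - \gamma \big[ Q_{\bar{\theta}}(s_{t+1}, a_{t+1}) - \alpha \log \pi_\phi(a_{t+1} \mid s_{t+1}) \big] \Big)$$

where $\theta$ and $\bar{\theta}$ are the parameters of the soft Q-function and its target network, respectively, and the update gradient of the policy network $\pi_\phi$ is

$$\hat{\nabla}_\phi J_\pi(\phi) = \nabla_\phi\, \alpha \log \pi_\phi(a_t \mid s_t) + \Big( \nabla_{a_t} \alpha \log \pi_\phi(a_t \mid s_t) - \nabla_{a_t} Q_\theta(s_t, a_t) \Big) \nabla_\phi f_\phi(\epsilon_t; s_t)$$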

During SAC training, experience batches are uniformly sampled from the replay buffer, assigning equal selection probability to all transitions. However, this uniform approach may be suboptimal, as experiences vary in their contribution to learning. Prioritized experience replay (PER) addresses this issue by assigning higher sampling probabilities to transitions with greater learning potential, thereby focusing updates on more informative experiences. PER quantifies the importance of each transition using the temporal-difference (TD) error $\delta$, which measures the discrepancy between predicted and actual returns. Larger TD errors indicate transitions with greater potential to improve the policy, and thus, they are prioritized during sampling. The sampling probability for each experience is derived from its TD error, ensuring that data with higher learning relevance are revisited more frequently, thereby improving training efficiency and accelerating convergence. The sampling probability is calculated using the following formulation:

$$P(i) = \frac{p_i^{\zeta}}{\sum_{j} p_j^{\zeta}}$$

where $p_i$ refers to the priority of transition $i$, which is a positive number, such as $p_i = |\delta_i| + \varepsilon$ with a small constant $\varepsilon > 0$.
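The proportional prioritization above can be sketched in a few lines of NumPy. This is a minimal illustration of standard TD-error-based PER only, not of the hybrid priority introduced later; the function names, the default exponent of 0.6, and the small constant are assumptions.

```python
import numpy as np


def per_probabilities(td_errors, exponent=0.6, eps=1e-6):
    """P(i) = p_i**exponent / sum_j p_j**exponent, with p_i = |delta_i| + eps."""
    priorities = np.abs(np.asarray(td_errors, dtype=float)) + eps
    scaled = priorities ** exponent
    return scaled / scaled.sum()


def sample_indices(td_errors, batch_size, exponent=0.6, seed=0):
    """Draw transition indices according to the prioritized probabilities."""
    rng = np.random.default_rng(seed)
    probs = per_probabilities(td_errors, exponent)
    return rng.choice(len(probs), size=batch_size, p=probs)
```

Setting `exponent=0.0` makes every transition equally likely, while larger exponents concentrate sampling on the transitions with the largest TD errors.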

The exponent $\zeta$ is a hyperparameter that sets how strongly the priorities influence sampling and can be regarded as a trade-off factor between uniform and greedy sampling. When $\zeta = 0$, uniform sampling is performed, and when $\zeta = 1$, greedy (maximum value selection) sampling is performed. In FMCW LiDAR, however, the TD error is a global estimate of policy quality and cannot directly reflect the nonlinear correction result at the level of the laser device physics. To ensure accurate correction at the hardware level, we introduce the modulation frequency (MF) difference between the beat frequency signals of adjacent beat frequency units as a feature that directly reflects instantaneous nonlinearity. Integrating the MF error and the TD error into a hybrid priority mechanism reconciles the agent’s instantaneous response with its long-term optimization and yields a more robust training strategy. The definition of the MF error is

where

denotes the difference between the beat frequencies of two adjacent segments of the modulation signal, and the sampling probability

of the new hybrid priority mechanism is

Here,

denotes the mixing coefficient. By setting

, the weight ratio between

and

can be dynamically adjusted. And

denotes the TD error, which can be expressed as follows:

Among them,

and

are the two value networks of SAC. To further eliminate priority bias, we introduce a dynamic mixing coefficient

to control the weights of

and

in the priority, and the importance sampling weight

can be expressed as follows:

When

,

, indicating that

dominates the weighting. When

,

, indicating that

dominates the weighting. The calculation method for

is as follows:

where $\sigma(\cdot)$ is the sigmoid function, whose output lies in the interval $(0, 1)$, and

is calculated through the following equation:

In FMCW LiDAR systems, the modulation nonlinearity of the laser is influenced by environmental factors like temperature fluctuations and laser degradation, as well as hardware characteristics like driver circuit latency, leading to time-varying behavior. Conventional experience replay (ER) strategies, which typically rely on fixed-delay or immediate data injection, are insufficient for capturing these dynamic nonlinearities. This can result in an imbalance between data recency and diversity, thereby hindering model performance. To address this limitation, we introduce a Time-Varying Delay Experience (TDE) infusion mechanism, which dynamically adjusts the injection delay of training samples based on system dynamics. Combined with buffer segmentation management, this approach ensures a more balanced temporal distribution of experiences. The delay function

is designed to reflect the rate of change in system nonlinearity, modeled using the first-order derivative of the modulation frequency error

.

where

is the base delay step to ensure minimum data diversity.

is the sensitivity coefficient, which controls the strength of the response of the delay to the rate of nonlinear change. The value range of

is

, so the actual range of

is

, and

is calculated by sliding the modulation frequency error window:

where

k denotes the current training step, and

$W$ represents the window width used to smooth instantaneous noise, typically determined based on the total number of steps in a single training session. When the windowed rate of change of the modulation frequency error is positive, the nonlinearity of the modulation signal is intensifying and the modulation frequency error is increasing rapidly (e.g., a sudden rise in laser temperature causing frequency modulation distortion). In this case, the bounded rate term in the delay function approaches 1 and the delay increases, because the system requires more time to accumulate new data in order to fully capture the evolving trend of the nonlinear dynamics. When the rate of change is close to zero, the nonlinearity of the modulation signal is stable, and the base delay is maintained to preserve exploration diversity. When the rate of change is negative, the nonlinearity of the modulation signal is weakening and the modulation frequency error is decreasing; the bounded rate term approaches $-1$, the delay is minimized, and the system needs to quickly inject new data to promptly reflect the nonlinear compensation effect and avoid training lag. In addition, we divide the ER buffer

into two sub-buffers, namely the fast zone

and the slow zone

, where the fast zone is used to store experiences in the high dynamic change phase

, accounting for

of the total capacity, and the slow zone is used to store experiences in the steady state phase

, with a capacity ratio of

, as shown in the following equation:

Finally, during sampling, we mix the two types of data proportionally in each training batch to balance exploration and exploitation:

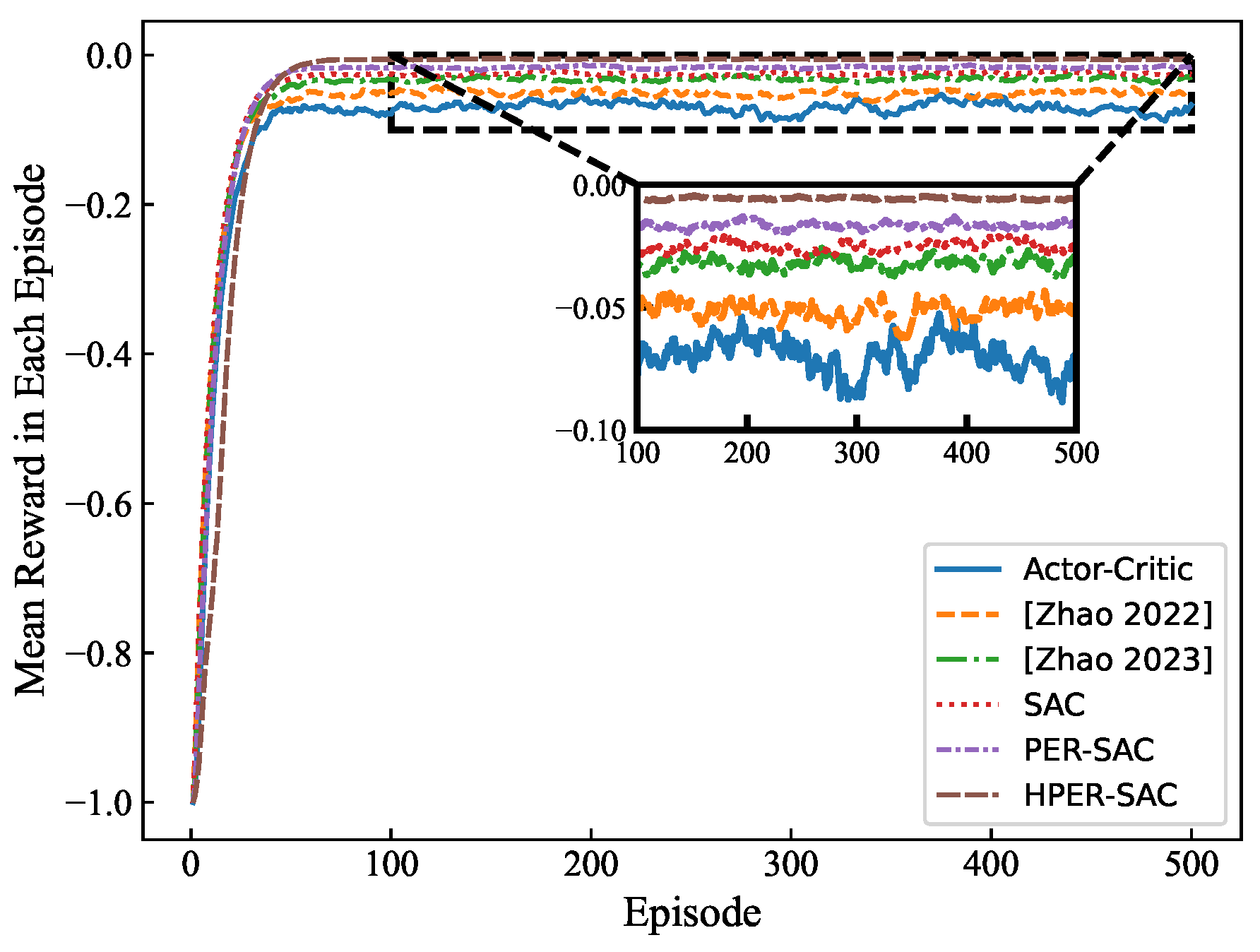

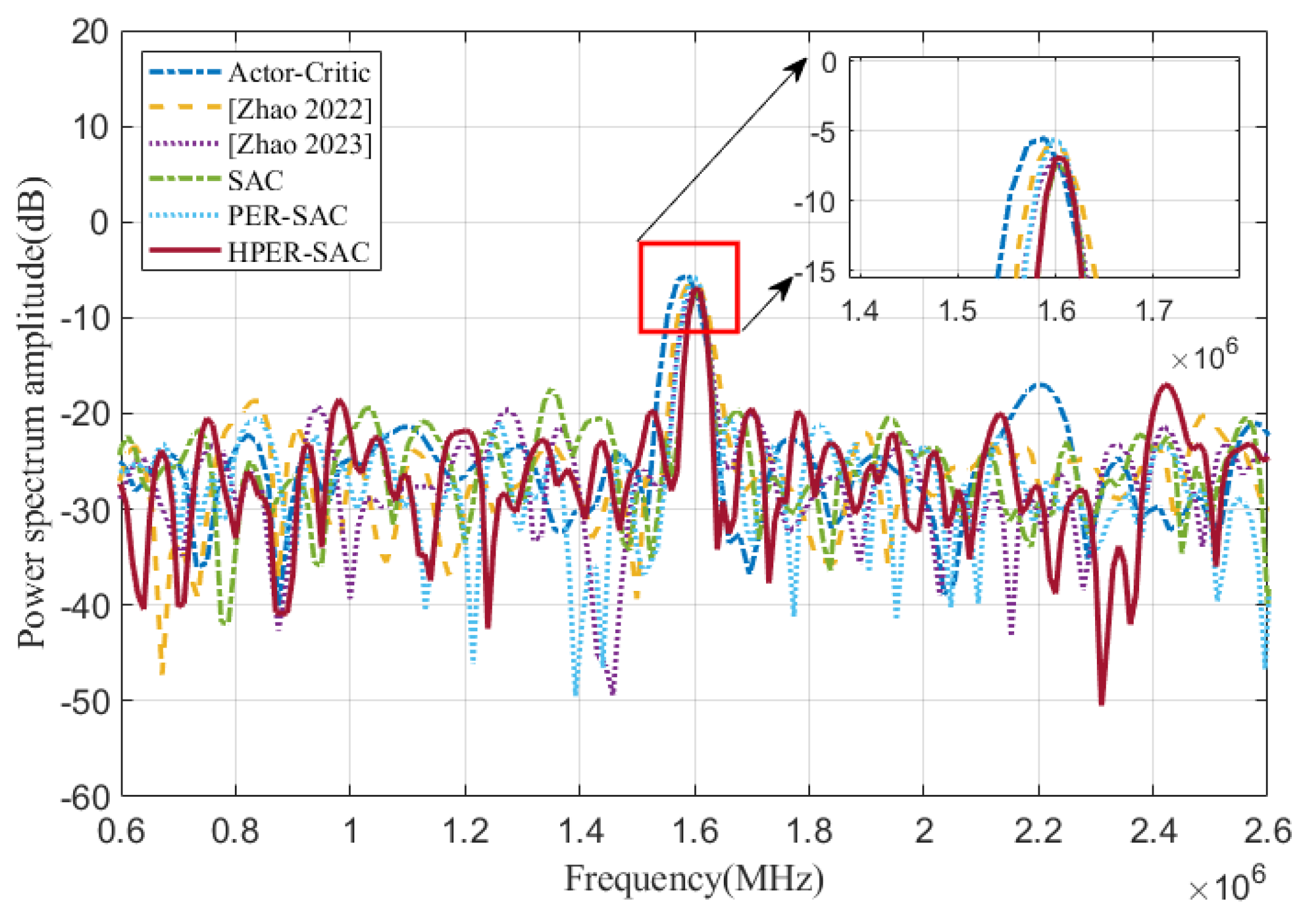

The structure of HPER-SAC is shown in Figure 3. In the FMCW LiDAR experimental system, a field-programmable gate array (FPGA) generates the original modulated signal, which is then converted into a drive signal via a digital-to-analog converter (DAC) to drive a tunable laser. The laser light is split by a coupler into 10% local light and 90% measurement light. The measurement light passes through a time-delay fiber before entering a second coupler together with the local light, where the two are mixed and converted into a beat signal by a BPD. An analog-to-digital converter (ADC) then digitizes the beat frequency signal and passes it to the FPGA. The FPGA transmits the digitized beat frequency data and the original modulation signal to the laptop via a network protocol and initializes the system environment required for RL training. The HPER-SAC reinforcement learning algorithm is then started. First, the actor interacts with the environment and stores the samples in the experience replay buffer. Then, the MF error of the beat frequency between adjacent segments within the sweep period and the TD error are calculated to construct a hybrid-priority experience pool and automatically adjust the proportion of replayed experiences. After training the agent, probabilistic sampling is performed from the experience replay buffer, and the parameters of the critic network are updated. Simultaneously, the target network undergoes a soft update to guide the actor network in updating the signal modulation actions. The specific HPER-SAC algorithm steps are shown in Algorithm 1.

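| Algorithm 1 HPER-SAC RL. |
| --- |
| **Input:** hyperparameters: exponential moving average coefficient, discount factor, target entropy $h$, batch size, critic NN learning rate, actor NN learning rate, entropy coefficient learning rate. |
| **Initialize:** the two critic NNs, the actor NN, the target NNs, and the two replay buffers. |
| **for** each episode, 1 to $N$, **do** |
| &emsp;Get the initial state and initialize the modulation slope. |
| &emsp;**for** $t$ = 1 to $T$ **do** |
| &emsp;&emsp;Sample from the policy to obtain the action and calculate the resulting quantities. |
| &emsp;&emsp;Update the modulation slope. |
| &emsp;&emsp;Store the transition tuple in the replay buffer and calculate the quantities used for prioritization and delayed injection. |
| &emsp;&emsp;**if** $t$ mod (injection delay) == 0 **then** |
| &emsp;&emsp;&emsp;Store the tuple in the fast zone or the slow zone, according to the zone condition. |
| &emsp;&emsp;**end if** |
| &emsp;&emsp;Calculate the mixing coefficient, sample a batch of transitions from each of the two zones, and combine them into the training batch. |
| &emsp;&emsp;**for** each transition in the training batch **do** |
| &emsp;&emsp;&emsp;Calculate its priority-related quantities. |
| &emsp;&emsp;**end for** |
| &emsp;&emsp;Update the critic NN loss function. |
| &emsp;&emsp;Update the actor NN loss function. |
| &emsp;**end for** |
| &emsp;Update the critic networks. |
| &emsp;Update the actor network. |
| &emsp;Update the temperature coefficient. |
| &emsp;Update the target networks. |
| **end for** |
| **Output:** control policy. |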
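Two of the mechanical steps in Algorithm 1, the split of the replay buffer into fast and slow zones with proportional batch mixing, and the soft update of the target network, can be sketched as follows. This is an illustrative sketch only: the class and function names, the 30% fast-zone capacity, the zone threshold, the 50/50 batch share, and the averaging rate are all hypothetical placeholders, since the corresponding values are not recoverable from the text.

```python
import random
from collections import deque


class TwoZoneReplayBuffer:
    """Replay buffer split into a fast zone and a slow zone (ratios are assumptions)."""

    def __init__(self, capacity=1000, fast_fraction=0.3, seed=0):
        fast_capacity = max(1, int(capacity * fast_fraction))
        self.fast = deque(maxlen=fast_capacity)
        self.slow = deque(maxlen=max(1, capacity - fast_capacity))
        self.rng = random.Random(seed)

    def store(self, transition, error_rate, threshold=0.1):
        # Rapidly changing nonlinearity goes to the fast zone, steady state to the slow zone.
        zone = self.fast if abs(error_rate) > threshold else self.slow
        zone.append(transition)

    def sample(self, batch_size, fast_share=0.5):
        # Mix the two zones proportionally, limited by what each zone currently holds.
        n_fast = min(len(self.fast), int(round(batch_size * fast_share)))
        n_slow = min(len(self.slow), batch_size - n_fast)
        batch = self.rng.sample(list(self.fast), n_fast)
        batch += self.rng.sample(list(self.slow), n_slow)
        return batch


def soft_update(target_params, online_params, ema_rate=0.005):
    """Exponential-moving-average update of target parameters toward the online ones."""
    return [(1.0 - ema_rate) * t + ema_rate * o
            for t, o in zip(target_params, online_params)]
```

Because each zone is a bounded `deque`, old experiences are discarded automatically once a zone is full, so a burst of high-dynamics samples cannot crowd out the steady-state data held in the slow zone.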

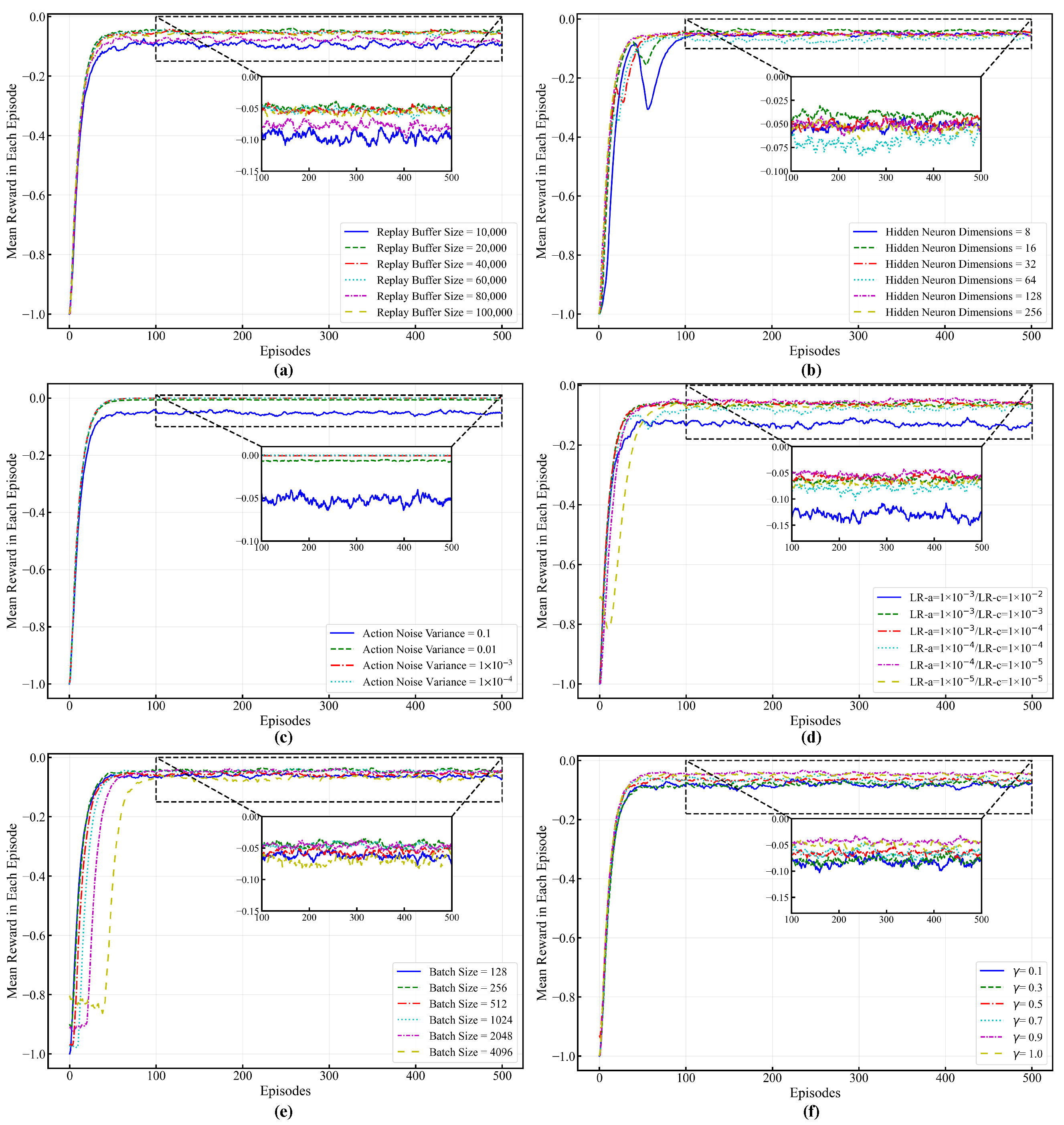

The training parameters are listed in Table 1.