Abstract

In biological neural networks, neurons transmit chemical signals through synapses, and multiple ion channels are involved in the transmission. Moreover, synapses are divided into inhibitory synapses and excitatory synapses. The firing mechanism of previous spiking neural P (SNP) systems and their variants basically corresponds to excitatory synapses, whereas the function of inhibitory synapses is rarely reflected in these systems. In order to simulate more fully how neurons communicate through synapses, this paper proposes dynamic threshold neural P systems with inhibitory rules and multiple channels (DTNP-MCIR systems). DTNP-MCIR systems are a distributed parallel computing model. We prove that DTNP-MCIR systems are Turing universal as number generating/accepting devices. In addition, we design a small universal DTNP-MCIR system with 73 neurons as a function computing device.

1. Introduction

Membrane computing (MC) is a distributed parallel computing paradigm whose models are usually called P systems or membrane systems. MC is abstracted from the structure and functioning of biological cells as well as from the communication and cooperation of cells in tissues, organs, and biological neural networks [1,2]. P systems are mainly divided into three categories, namely cell-like P systems, tissue-like P systems, and neural-like P systems. In the past two decades, many P-system variants have been studied and applied to real-world problems, and most of them have been proven to be universal as number generating/accepting devices and function computing devices [3,4].

1.1. Related Work

Spiking neural P (SNP) systems are abstracted from the biological fact that neurons transmit spikes to each other through synapses. An SNP system can be regarded as a directed graph, where neurons are the nodes and the synaptic connections between neurons are the arcs [5,6]. SNP systems are the main form of neural-like P systems [7]. An SNP system consists of two components: data and firing rules. Data usually describe the states of neurons and can also indicate the number of spikes contained in every neuron. Firing rules comprise spiking rules and/or forgetting rules, and a rule can fire only when its firing condition is satisfied. A firing rule has the form $E/a^c \to a^p$, where $E$ is a regular expression. When $a^n \in L(E)$ and $n \ge c$, the rule is applied and the neuron fires. Here $L(E)$ is the language associated with the regular expression $E$, and $a^n$ indicates that the neuron where the rule is located contains $n$ spikes. Once the rule is enabled, the neuron removes $c$ spikes and transmits the generated $p$ spikes to succeeding neurons. When $p = 0$, the rule is written $E/a^c \to \lambda$ and is called a forgetting rule; $\lambda$ represents the empty string, which indicates that forgetting rules do not generate new spikes. In summary, the firing condition of a firing rule is $a^n \in L(E)$ and $n \ge c$. This means that the firing of a rule depends only on the state of the neuron itself and not on the state of other neurons. Moreover, neurons work in parallel, which makes SNP systems models of distributed parallel computing, and most SNP systems are equipped with a global clock to mark time. SNP systems are also nondeterministic: if more than one rule is enabled in a neuron at a certain time, only one of them is selected non-deterministically.
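The firing condition described above can be illustrated with a minimal Python sketch. It assumes the standard SNP rule form, in which a rule carries a regular expression E over the single symbol a, a consumption count c, and a production count p; the class and function names are hypothetical and are not taken from any SNP library.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class FiringRule:
    """A rule with regular expression E, consumption c and production p.

    p == 0 makes it a forgetting rule. All names here are illustrative.
    """
    regex: str    # regular expression E over the single symbol "a"
    consume: int  # c, spikes removed when the rule fires
    produce: int  # p, spikes sent to succeeding neurons


def is_enabled(rule: FiringRule, spikes: int) -> bool:
    """Firing condition: the n spikes match E as a whole, and n >= c."""
    matches = re.fullmatch(rule.regex, "a" * spikes) is not None
    return matches and spikes >= rule.consume


def apply_rule(rule: FiringRule, spikes: int) -> tuple:
    """Return (spikes left in the neuron, spikes emitted)."""
    if not is_enabled(rule, spikes):
        raise ValueError("rule is not enabled")
    return spikes - rule.consume, rule.produce
```

For instance, a rule with `regex="a(aa)*"` is enabled only when the neuron holds an odd number of spikes, regardless of what any other neuron contains.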

Neural-like P systems have received extensive attention in recent years. SNP systems were proposed by Ionescu et al. [7]. Usually, SNP systems have spiking and forgetting rules, but Song et al. [8] proposed that neurons can also have request rules. Request rules enable neurons to perceive “stimuli” from the environment by receiving a certain number of spikes. Zeng et al. [9] proposed SNP systems with weights, in which a neuron fires when its potential is equal to a given value (called a threshold). Zhao et al. [10] introduced a new mechanism called neuron dissolution, which can eliminate redundant neurons generated during the computation. In order to enable SNP systems to represent and process fuzzy and uncertain knowledge, weighted fuzzy spiking neural P systems were proposed by Wang et al. [11]. Sequential SNP systems, in which only one neuron fires in each step, have been investigated in [12,13]. Although most SNP systems are synchronous, asynchronous SNP systems have been investigated in [14,15,16]. Song et al. [15] also studied the computing power of asynchronous SNP systems with partial synchronization. Moreover, most SNP systems have been proven to be Turing universal as number generating/accepting devices [17,18], language generators [19], and function computing devices [20,21]. Furthermore, SNP systems have been applied to real-world problems such as fault diagnosis [22,23,24], clustering [25], and optimization [26].

1.2. Motivation

Yang et al. [27] studied SNP systems with multiple channels (SNP-MC systems), motivated by the fact that multiple ion channels take part in chemical signal transmission; accordingly, spiking rules with channel labels were introduced into neural P systems. Since SNP-MC systems were proposed, many neural P systems combined with multiple channels have been investigated, and these studies show that multiple channels can improve the computing power of neural P systems.

In addition, there are the following facts in the biological nervous system:

- (1) The conduction of nerve impulses between neurons is unidirectional; that is, nerve impulses can only be transmitted from the axon of one neuron to the cell body or dendrites of another neuron, but not in the opposite direction.

- (2) Synapses are divided into excitatory synapses and inhibitory synapses. Depending on the signal from the presynaptic cell, if the excitability of the postsynaptic cell is increased or the cell is excited, the connection is an excitatory synapse. If the excitability of the postsynaptic cell is decreased or excitation is not easily generated, it is an inhibitory synapse.

Li et al. [28] were inspired by the above two biological facts and proposed SNP systems with inhibitory rules (SNP-IR systems). The firing condition of an inhibitory rule is related not only to the state of the neuron where the rule is located but also to the state of other neurons (presynaptic neurons). This is because of the unidirectionality of nerve impulse transmission. SNP-IR systems have stronger control capabilities than other SNP systems.

Peng et al. [29] first proposed dynamic threshold neural P systems (DTNP systems), which were abstracted from the intersecting cortical model (ICM) of cortical neurons. The firing mechanism of DTNP systems is different from that of SNP systems. DTNP systems adopt a dynamic-threshold-based firing mechanism, and they have two data units (a feeding input unit and a dynamic threshold unit) as well as a maximum spike consumption strategy. In order to improve the computational efficiency of DTNP systems, we introduce multiple channels and inhibitory rules.

The main motivation of this paper is to introduce inhibitory rules into DTNP systems, which better reflects the working mechanism of inhibitory synapses and simulates more closely how neurons actually communicate through synapses. The introduction of multiple channels improves the control capability of DTNP systems so that they can better solve real-world problems.

Compared with DTNP systems, DTNP-MCIR systems have the following innovations:

- (1) DTNP-MCIR systems introduce firing rules with channel labels.

- (2) Inspired by SNP-IR systems, we introduce inhibitory rules to DTNP systems, but the form and firing conditions of inhibitory rules have been re-defined.

- (3) The firing rules of neuron (corresponding to register r) is in DTNP systems, is a default firing condition usually not displayed, because can be reflected in the rules. However, can also be a regular expression in DTNP-MCIR systems, for example , where , represents an odd number of spikes. The rule is only enabled when neuron contains an odd number of spikes and satisfies the default firing condition.

If a rule in a neuron is enabled in DTNP-MCIR systems, then the number of spikes consumed by the neuron is . When two rules in a neuron can be applied simultaneously at time t, we can choose one of the rules according to the neuron’s maximum spike consumption strategy.

Based on the above, we built a variant of DTNP systems called dynamic threshold neural P systems with multiple channels and inhibitory rules (DTNP-MCIR systems). We will prove the Turing universality of DTNP-MCIR systems as number generating/accepting devices and function computing devices. The rest of this paper is arranged as follows. Section 2 defines DTNP-MCIR systems and gives an illustrative example. Section 3 proves the Turing universality of DTNP-MCIR systems as number generating/accepting devices. Section 4 discusses DTNP-MCIR systems as function computing devices. Section 5 concludes the paper.

2. DTNP-MCIR Systems

In this section, we define a DTNP-MCIR system, describe some of its related details, and give an illustrative example. For convenience, DTNP-MCIR systems use the same notations and terms as SNP systems.

2.1. Definition

A DTNP-MCIR system of degree $m \ge 1$ is shown below:

where:

- (1) $O = \{a\}$ is a singleton alphabet (the object a is called the spike);

- (2) is the set of channel labels;

- (3) are m neurons of the form , where:

  - (a) is the number of spikes contained in the feeding input unit of neuron ;

  - (b) is the number of spikes as a (dynamic) threshold in neuron ;

  - (c) is a finite set of channel labels used by neuron ;

  - (d) is a finite set of rules of two types in :

    - (i) the form of firing rules is , where is a firing condition, , and ; when , the rule is known as a spiking rule, and when , , the rule is known as a forgetting rule, which can be written as ;

    - (ii) the form of inhibitory rules is , where the subscript represents an inhibitory arc between neuron and , , , , , and ;

- (4) with (synapse connections);

- (5) in indicates the input neurons;

- (6) out indicates the output neurons.

From the perspective of topological structure, a DTNP-MCIR system can be regarded as a directed graph with inhibitory arcs, where the m neurons are the m nodes and the synaptic connections between neurons are the arcs of the directed graph. An inhibitory arc is drawn as an arc ending in a solid circle, whereas a directed arc with an arrow indicates excitatory conduction. A synaptic connection is expressed as a tuple $(i, j, l)$, which means that neuron $\sigma_i$ is connected to neuron $\sigma_j$ through the l-th channel. What lies outside the system is called the environment. Only input neurons and output neurons can communicate with the environment. When the system works in generating mode, input neurons are not considered; by contrast, output neurons are not considered in accepting mode.
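The graph view can be made concrete with a small Python sketch. It assumes that each synaptic connection is stored as a triple of source neuron, target neuron, and channel label; the toy synapse set and the function names are hypothetical and serve only to show how a channel label restricts which neighbours receive the emitted spikes.

```python
from collections import defaultdict

# Hypothetical toy system: each synapse is a triple (source, target, channel).
SYN = {(1, 2, 1), (1, 3, 2), (2, 3, 1)}


def targets_by_channel(syn):
    """Index the synapse set as (source, channel) -> set of target neurons."""
    table = defaultdict(set)
    for source, target, channel in syn:
        table[(source, channel)].add(target)
    return table


def deliver(syn, source, channel, p):
    """Return {target: spikes received} when `source` emits p spikes on `channel`."""
    return {target: p for target in targets_by_channel(syn)[(source, channel)]}
```

With this representation, a rule labelled with channel 1 in neuron 1 reaches only neuron 2, while the same neuron reaches neuron 3 through channel 2.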

Data and rules are the two components of a DTNP-MCIR system. There are two data units in each neuron : the feeding input unit and the dynamic threshold unit . If the calculation of system proceeds to time t, then and respectively represent the number of spikes in the two data units of the neuron [29]. Data are directly manifested as the number of spikes contained in each neuron, and this number can also indicate the state of the neuron. The state of the entire system at time t is characterized by the configuration , which reflects the number of spikes in the feeding input unit and dynamic threshold unit of the m neurons. The initial configuration can be denoted as .

The rules in DTNP-MCIR systems can be divided into two types: firing rules and inhibitory rules. The form of firing rules is , and the firing condition is , which is equivalent to and . If the firing condition in neuron is fulfilled at time t, then a rule in neuron will be applied to the configuration . When rule is enabled, neuron fires and consumes u spikes from the feeding input unit (retaining ( ) spikes) and spikes from the dynamic threshold unit (retaining ( ) spikes). When , the p spikes generated by neuron are transmitted to neuron through the l-th synaptic channel. When p = 0, the rule is called a forgetting rule. If a forgetting rule in neuron is enabled, then spikes in the feeding input unit and dynamic threshold unit will be removed and no new spikes are generated.

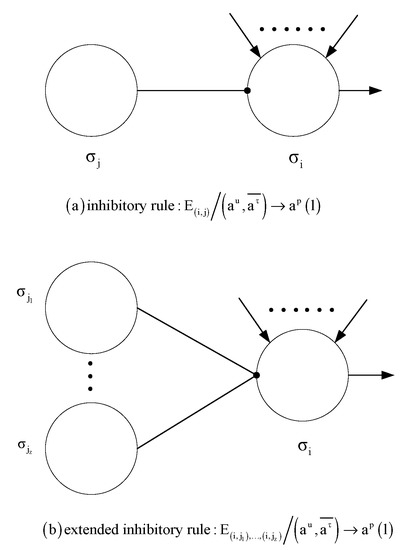

The form of inhibitory rules is , as shown in Figure 1a, and their firing mechanism is special. Usually the firing of a neuron depends only on its current state, and other neurons have no influence on it. However, the firing condition of an inhibitory rule depends not only on the state of the current neuron but also on the state of the preceding neuron (called an inhibitory neuron). Suppose an inhibitory rule is located in neuron and the inhibitory neuron of is . We assume that when there is an inhibitory arc between neurons and , the usual arc cannot exist. In addition, the inhibitory neuron only controls the firing of the neuron , and the neuron has no effect on the inhibitory neuron . The firing condition of the inhibitory rule is defined as , which is equivalent to , and , where is an additional constraint compared to the firing condition of the firing rule. represents the number of spikes at time t in the feeding input unit of inhibitory neuron . represents the number of spikes at time t in the dynamic threshold unit of neuron . Therefore, the firing conditions of inhibitory rules correspond to the function of inhibitory synapses: they are related not only to the state of neuron but also to the state of the inhibitory neuron . Although the firing conditions of inhibitory rules and firing rules are different, the spike consumption strategy is the same when they are applied. If neuron applies rules to configuration , we can get:

where n spikes come from other neurons, and p spikes are generated by the neuron .

Figure 1. (a) Inhibitory rule and (b) extended inhibitory rule.

A neuron may have multiple inhibitory neurons. As shown in Figure 1b, neuron has z inhibitory neurons . At this time, the form of inhibitory rules is , which is called an extended inhibitory rule. The firing condition of the extended inhibitory rule is , and .

If neuron meets the firing conditions during the calculation, then one of the rules in must be used. If two rules and in neuron can be applied to configuration at the same time, only one of them can be applied. According to the maximum spike consumption strategy of DTNP-MCIR systems, when , the rule is applied; otherwise, the rule is applied; when , one of them is selected non-deterministically. Note that this strategy also holds for forgetting rules. For example, suppose two rules and both satisfy their firing conditions at time t. Since the forgetting rule consumes three spikes whereas the spiking rule consumes only one spike, the forgetting rule will be chosen and applied.
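The selection strategy can be sketched in a few lines of Python. The sketch assumes that each enabled rule is summarized by a single number giving the total spikes it consumes, as in the forgetting-rule example of the paragraph; the `Rule` record and `select_rule` function are hypothetical names introduced only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Illustrative record of an enabled rule (field names are assumptions)."""
    name: str
    consumed: int  # total spikes removed when the rule is applied
    produced: int  # 0 for a forgetting rule


def select_rule(enabled, rng=random):
    """Pick the rule that consumes the most spikes; break ties at random."""
    if not enabled:
        return None
    most = max(rule.consumed for rule in enabled)
    candidates = [rule for rule in enabled if rule.consumed == most]
    return rng.choice(candidates)
```

With a forgetting rule that consumes three spikes and a spiking rule that consumes one, `select_rule` returns the forgetting rule; the random choice only matters when both rules consume the same number of spikes.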

A transition step leads from one configuration to another. A sequence of transitions starting from the initial configuration is defined as a calculation. For a configuration , if no rules in the system can be applied, the calculation halts. Any calculation corresponds to a binary sequence: write 1 when the output neuron emits a spike to the environment, and write 0 otherwise. The calculation result is defined as the time interval between the first two spikes emitted by the output neuron . represents the set of numbers generated by system . denotes the family of all sets generated by DTNP-MCIR systems having at most m neurons and at most n rules in each neuron. When m or n is not restricted, the symbol “*” is used instead. System can also be used as an accepting device; in this case, the input neuron receives spikes from the environment, and the output neuron is removed from the system. The system imports a spike train from the environment and stores the number n in the form of 2n spikes. When the calculation halts, n is the number accepted by the system. represents the set of numbers accepted by the system, and denotes the family of all sets accepted by DTNP-MCIR systems having at most m neurons and at most n rules in each neuron.
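Reading a result off the binary sequence can be sketched as follows. The sketch assumes that the sequence is given as a string of 0s and 1s indexed from time 1 and that the interval is the difference between the time steps of the first two spikes; `generated_number` is a hypothetical helper name.

```python
def generated_number(spike_train: str):
    """Interval between the first two 1s, or None if fewer than two spikes occur."""
    # Time steps are counted from 1, matching the step-by-step descriptions.
    times = [t for t, bit in enumerate(spike_train, start=1) if bit == "1"]
    if len(times) < 2:
        return None
    return times[1] - times[0]
```

A train with fewer than two spikes yields no result under this reading, which is why the helper returns `None` in that case.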

2.2. Illustrative Example

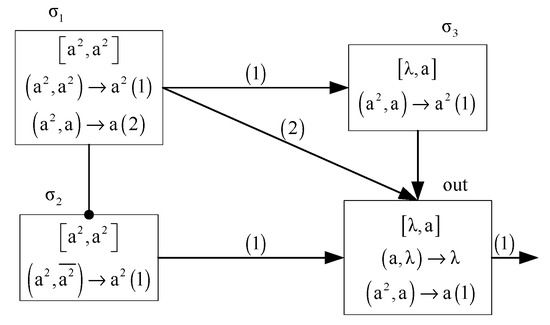

In order to clearly understand the working mechanism of DTNP-MCIR systems, we give an example that can generate a finite spike train, as shown in Figure 2.

Figure 2. An illustrative example.

We assume that a DTNP-MCIR system consists of four neurons . Initially, the feeding input units of neuron and neuron each have two spikes, but there are no spikes in neuron and out, that is . The number of spikes in the initial dynamic threshold unit of the four neurons is respectively. Therefore, the initial configuration .

At time 1, since , rules and can be applied in neuron , and since the number of spikes consumption for both rules is , one of them will be selected non-deterministically. Therefore, there are the following two cases:

- (1) Case 1: if rule is applied in neuron at time 1, then neuron consumes two spikes in the feeding input unit and sends two spikes to the neuron through channel (1). Neuron is the inhibitory neuron of neuron . Since and , rule reaches the firing condition in neuron , so neuron consumes two spikes in the feeding input unit and sends two spikes to the neuron out via channel (1) at time 1. Therefore, . At time 2, since , both rule and rule in neuron out can be applied. However, according to the maximum spike consumption strategy, rule is applied and sends one spike to the environment through channel (1). Further, since , rule is enabled in neuron , which consumes two spikes in the feeding input unit and sends two spikes to neuron out. Thus . At time 3, since , rule fires again and sends one spike to the environment. The system halts. Therefore, the spike train generated by this system is “011”.

- (2) Case 2: if rule is applied in neuron at time 1, then neuron consumes two spikes in the feeding input unit and sends one spike to the neuron out through channel (2). Because the state of the system is the same as that in case 1 at time 1, neuron consumes two spikes in the feeding input unit and sends two spikes to the neuron out through channel (1). So . At time 2, since , again according to the maximum spike consumption strategy, neuron out fires by the rule and sends one spike to the environment through channel (1). The configuration of the system at this time is . At time 3, since , rule is applied and removes one spike in the feeding input unit, and the system halts. Therefore, the spike train generated is “010”.

3. Turing Universality of DTNP-MCIR Systems as Number-Generating/Accepting Devices

In this section, we explain the working mechanism of DTNP-MCIR systems in the number generating and number accepting modes. The computational completeness of DTNP-MCIR systems is proved by simulating register machines. More specifically, DTNP-MCIR systems can generate/accept all recursively enumerable sets of numbers (their family is denoted by NRE).

A register machine can be defined as $M = (m, H, l_0, l_h, I)$, where m is the number of registers, H is the set of instruction labels, $l_0$ is the start label, $l_h$ is the halt label, and I is the set of instructions. Each instruction in I corresponds to a label in H, and the instructions in I have the following three forms:

- (1) $l_i: (\mathrm{ADD}(r), l_j, l_k)$ (add 1 to register r and then move non-deterministically to one of the instructions with labels $l_j$, $l_k$).

- (2) $l_i: (\mathrm{SUB}(r), l_j, l_k)$ (if register r is non-zero, then subtract 1 from it and go to the instruction with label $l_j$; otherwise go to the instruction with label $l_k$).

- (3) $l_h: \mathrm{HALT}$ (halting instruction).
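The three instruction forms can be exercised with a minimal Python interpreter. This is a sketch under stated assumptions: instructions are stored as tuples in a dictionary keyed by label, the non-deterministic branch of ADD is delegated to a `choose` callable (defaulting to `random.choice`), and a step limit guards against non-halting programs. The function name `run` and its parameters are hypothetical.

```python
import random


def run(program, start, halt, registers, choose=random.choice, max_steps=10_000):
    """Execute a register machine and return the final register contents.

    program maps a label to ("ADD", r, lj, lk) or ("SUB", r, lj, lk).
    """
    regs = dict(registers)
    label = start
    for _ in range(max_steps):
        if label == halt:
            return regs
        op, r, lj, lk = program[label]
        if op == "ADD":
            # Add 1 to register r, then jump non-deterministically to lj or lk.
            regs[r] = regs.get(r, 0) + 1
            label = choose([lj, lk])
        elif op == "SUB":
            # Subtract 1 and go to lj if register r is non-zero, else go to lk.
            if regs.get(r, 0) > 0:
                regs[r] -= 1
                label = lj
            else:
                label = lk
        else:
            raise ValueError(f"unknown operation: {op}")
    raise RuntimeError("step limit reached before the halt label")
```

As a usage example, the two-instruction program `{"l0": ("SUB", 2, "l1", "lh"), "l1": ("ADD", 1, "l0", "l0")}` moves the content of register 2 into register 1 and then halts.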

3.1. DTNP-MCIR Systems as Number Generating Devices

Initially, every register in the register machine M is empty. In generating mode, the register machine computes a number n as follows. It starts with instruction $l_0$ and then applies a series of instructions until it reaches the halting instruction $l_h$. The number stored in the first register at that point is the result computed by the register machine. It is known that register machines characterize the family NRE.

Theorem 1.

.

Proof.

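As is well known, the family of sets of numbers generated by DTNP-MCIR systems is included in NRE, so we only need to prove the converse inclusion. To this end, we consider a register machine working in generating mode, assuming that all registers except register 1 are empty and that register 1 never decrements during the calculation. □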

We designed a DTNP-MCIR system to simulate the register machine working in generating mode. The system includes three modules: an ADD module, a SUB module, and a FIN module. The ADD module and the SUB module are used to simulate the ADD instruction and the SUB instruction, respectively, and the FIN module deals with the calculation results of the system .

We stipulate that each register r of register machine M corresponds to a neuron . Note that there are no rules in neuron ; numbers are encoded in neuron as follows: if the number stored in register r is n, then neuron contains 2n spikes. Each instruction l corresponds to a neuron , and some auxiliary neurons are introduced into the modules. Assume that there are no spikes in the feeding input unit of any auxiliary neuron, but neuron receives two spikes at the beginning. Each neuron has an initial threshold in its dynamic threshold unit: (i) the initial threshold of each instruction neuron is ; (ii) each register neuron has an initial threshold of a; (iii) the initial threshold of the other neurons is not fixed. Because neuron gets two spikes, the system begins to simulate the instruction (OP represents one of the ADD or SUB operations). Starting from the activated neuron , the simulation deals with neuron according to OP, and then two spikes are introduced into one of the neurons and . The simulation continues until the neuron fires, indicating that the simulation is completed [30]. In the calculation process, spikes are sent to the environment twice by neuron , at times and respectively; the calculation result is defined as the interval of , which is also the number contained in register 1.

In order to verify that the system can indeed simulate the register machine M correctly, we will explain how the ADD or SUB module simulates the ADD or SUB instruction, and how the FIN module outputs the calculation results.

- (1)

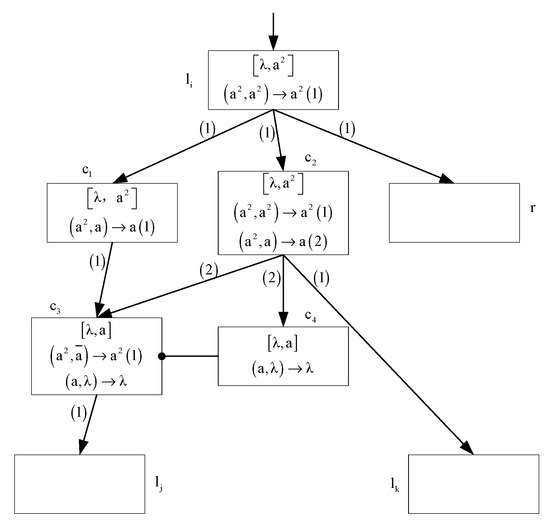

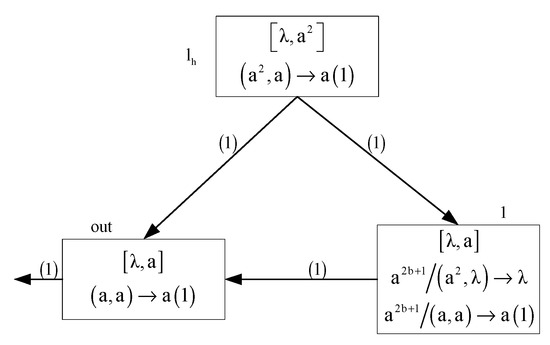

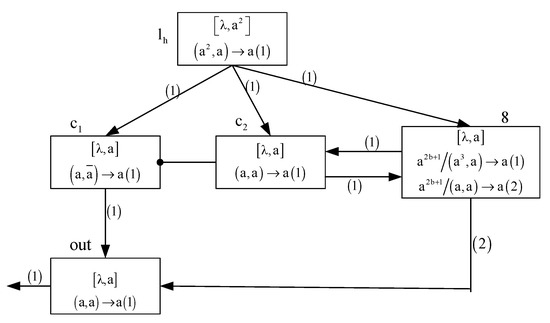

- ADD module (shown in Figure 3)—simulating an ADD instruction

Figure 3. Module ADD simulating the ADD instruction .

The system starts from ADD instruction . Suppose an ADD instruction is simulated at time t. At this time, neuron contains two spikes and the configuration of module ADD is , which involves seven neurons respectively. Thus, . Since , rule is applied in neuron , and then two spikes are sent to neurons via channel (1). Because neuron receives two spikes, register r increases by one. Thus, .

At time t + 1, both the feeding input unit and the dynamic threshold unit of neuron and neuron contain two spikes, so they fire. Rule in neuron is applied and sends one spike to neuron . For neuron , rules and both satisfy the firing condition, and both consume an equal number of spikes, so one of them is selected non-deterministically. We consider the following two cases:

- (i) At time t + 1, if rule is applied, neuron sends two spikes to neuron via channel (1). Then neuron receives two spikes, and the system starts to simulate instruction . Therefore . At time t + 3, since , the rule of neuron is enabled and removes the only spike in the feeding input unit.

- (ii) At time t + 1, if rule is applied, neuron sends one spike to neurons and via channel (2). Thus, . Note that neuron is the inhibitory neuron of neuron . Since , the inhibitory rule is enabled, and neuron sends two spikes to neuron . This means that the system starts to simulate instruction . The configuration of at this time is .

Therefore, ADD instructions can be correctly simulated by the ADD module. Since neuron has two spikes, two spikes are added to neuron , and then one of the neurons and is selected non-deterministically.

- (2)

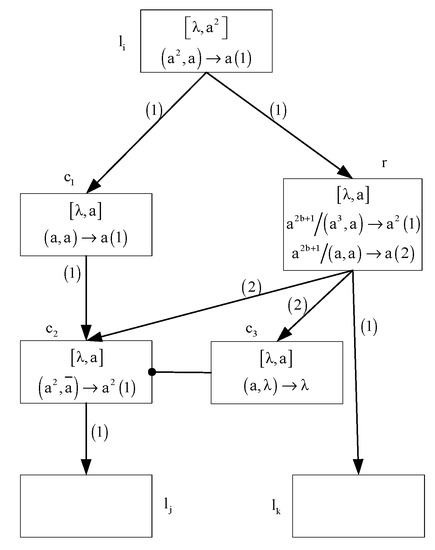

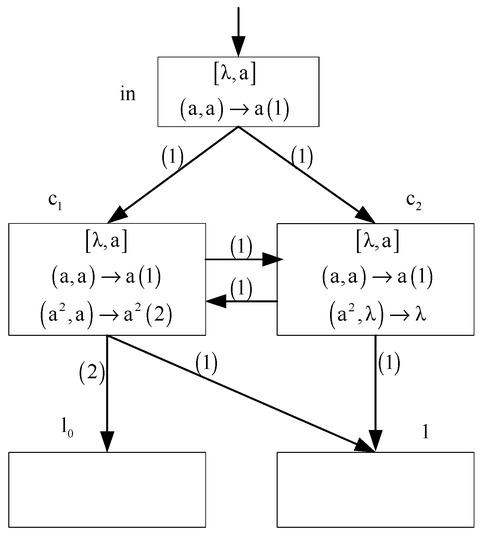

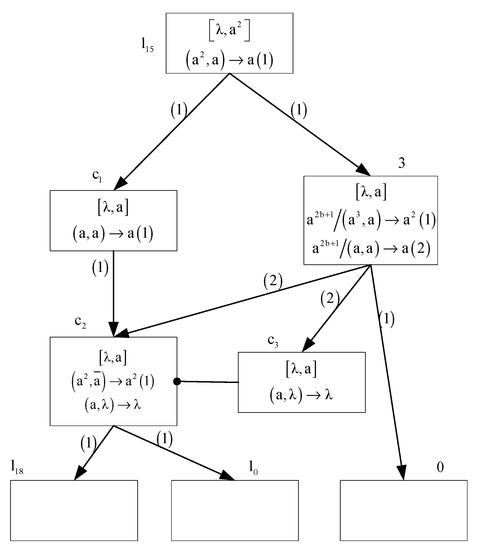

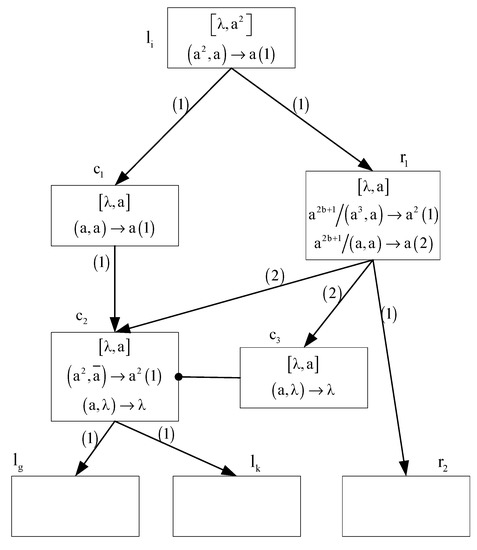

- SUB module (shown in Figure 4)—simulating a SUB instruction .

Figure 4. Module SUB simulating a SUB instruction .

Suppose that the system starts to simulate the SUB instruction at time t, and neuron contains two spikes at the same time. The configuration of module SUB is , which involves 7 neurons respectively. Thus, . Since , neuron fires and sends a spike to neuron and via channel (1). Therefore . Based on the number of spikes in neuron , there are two cases:

- (i) If the feeding input unit of neuron contains spikes at time t, then at time t + 1, neuron contains spikes. At time t + 2, rule will be enabled and neuron will send two spikes to neurons via channel (1). This indicates that the system starts to simulate the instruction of register machine M. In addition, rule satisfies its firing condition, so neuron sends a spike to neuron . Thus . At time t + 3, the forgetting rule of neuron is enabled and removes the only spike in the feeding input unit. Therefore, .

- (ii) If the feeding input unit of neuron does not contain spikes at time t, then at time t + 1, neuron contains only one spike. At time t + 2, rule will be applied and neuron will transmit one spike to neurons and via channel (2). At the same time, neuron also transmits a spike to neuron . So . Note that neuron is the inhibitory neuron of neuron . At time t + 3, the forgetting rule of neuron is enabled and removes the only spike in the feeding input unit. Moreover, since , rule is applied, and neuron sends two spikes to neuron . This means that the system starts to simulate instruction . Thus, .

It can be seen from the above description that the SUB instruction can be correctly simulated by the SUB module. The simulation starts when neuron contains two spikes, and whether neuron or receives two spikes is then determined by whether neuron contains spikes.

- (3)

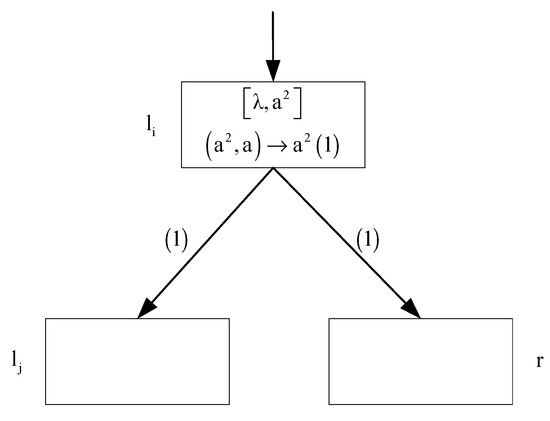

- Module FIN (shown in Figure 5)—outputting the result of computation

Figure 5. FIN module.

We assume that neuron contains 2n spikes. When neuron has two spikes at time t, register machine M has halted; the rule is enabled and sends a spike to neurons and . Therefore, the number of spikes in neuron becomes odd. At time t + 1, rule in neuron is enabled and sends the first spike to the environment. Moreover, both rule and rule can be applied in neuron . However, according to the neuron’s maximum spike consumption strategy, the forgetting rule is applied first and two spikes are eliminated. This process repeats until only one spike is left in neuron . At time , neuron contains only one spike. Thus, the rule is applied and transmits a spike to neuron . At time , rule in neuron is enabled and sends the second spike to the environment. The time interval between the two spikes transmitted to the environment is , which is the number in register when the register machine halts.
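The repeated application of the forgetting rule can be checked with a short Python sketch. It models only the spike count of the register neuron, assuming it starts with 2n stored spikes plus the one spike received when the halt instruction is reached, and that the forgetting rule removes two spikes per step as described. The exact time stamps of the two output spikes are not modelled, and `fin_forgetting_steps` is a hypothetical name.

```python
def fin_forgetting_steps(n: int) -> int:
    """Count the forgetting steps needed to reduce 2n + 1 spikes to one spike."""
    spikes = 2 * n + 1  # 2n stored spikes plus one spike from the halt neuron
    steps = 0
    while spikes > 1:
        spikes -= 2     # the forgetting rule wins under maximum consumption
        steps += 1
    return steps
```

The count returned is n, so the delay introduced by the forgetting steps grows linearly with the number stored in the register.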

According to the above description, the system can correctly simulate the register machine M working in generating mode. Therefore, Theorem 1 holds.

3.2. Turing Universality of Systems Working in the Accepting Mode

In the following, we prove the universality of DTNP-MCIR systems as number accepting devices.

Theorem 2.

.

Proof.

A DTNP-MCIR system is constructed to simulate a register machine working in the accepting mode. The initial thresholds are set as in the proof of Theorem 1, and initially no auxiliary neuron contains spikes. The proof is based on a modification of the proof of Theorem 1. System contains a deterministic ADD module, a SUB module, and an INPUT module. □

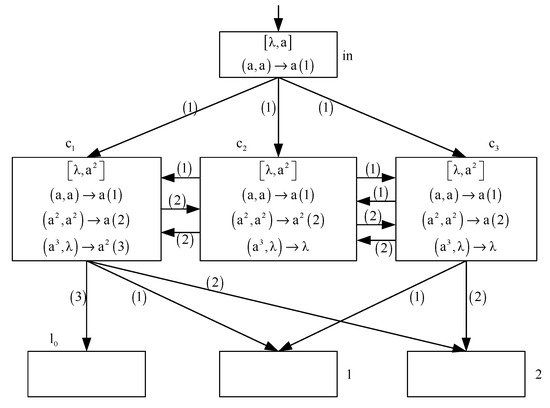

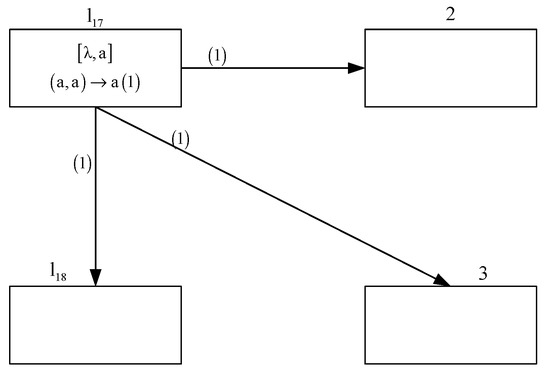

Figure 6 shows the input module where neuron is used to read the spike train from the environment. The number accepted by system is the interval ( ) between two spikes in the spike train.

Figure 6. The INPUT module of .

Suppose that neuron imports the first spike from the environment at time t. Therefore, the initial configuration of the system is , which involves five neurons respectively. Then rule in neuron is enabled and sends a spike to neurons and via channel (1). Thus . At time , the rule in neurons and is applied. From time t + 2 until the second spike is imported, neurons and both send a spike to neuron and exchange a spike with each other via channel (1). Thus neuron receives a total of 2n spikes from time t + 2 to time t + n + 1, which indicates that the number contained in register 1 is n. At time t + n + 1, neuron receives the second spike. The configuration of the system at this time is . At the next moment, the rule is enabled again and neuron sends a spike to neurons and . Thus, . Since neurons and each contain two spikes, the forgetting rule in neuron is enabled, so the two spikes in its feeding input unit are removed. The rule in neuron is also applied, and two spikes are transmitted to neuron via channel (2). Since neuron contains two spikes, the system starts to simulate instruction .
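The spike count accumulated by the register neuron can be verified with a small Python sketch. It assumes, as stated in the paragraph, that the register neuron receives two spikes at every step from t + 2 to t + n + 1; the function names are hypothetical and the auxiliary neurons themselves are not modelled.

```python
def spikes_stored(t: int, n: int) -> int:
    """Spikes received by the register neuron during steps t+2, ..., t+n+1."""
    stored = 0
    for _step in range(t + 2, t + n + 2):
        stored += 2  # one spike from each of the two auxiliary neurons
    return stored


def decode(stored: int) -> int:
    """Recover the encoded number from 2n stored spikes."""
    return stored // 2
```

The loop runs over exactly n steps, so the register neuron ends with 2n spikes whatever the starting time t is.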

In the accepting mode, we use the deterministic ADD module shown in Figure 7 to simulate the instruction in the register machine M. When neuron receives two spikes, rule is applied and two spikes are sent to neurons and . This means that the system starts to simulate instruction and register r increases by 1. The SUB module shown in Figure 4 is used to simulate the instruction .

Figure 7. Deterministic ADD module, simulating .

Module FIN is omitted, but the instruction remains in system . When neuron receives two spikes, it indicates that the halt instruction is reached, that is the register machine M halts.

From the above description, system correctly simulates the register machine M working in the accepting mode, and each neuron of system contains at most two rules. Therefore, Theorem 2 holds.

4. DTNP-MCIR Systems as Function Computing Devices

In this part, we discuss the ability of a small universal DTNP-MCIR system to compute functions. A register machine is used to compute the function . Initially, all registers are assumed to be empty, and k arguments are introduced into k registers; in general, only the first two registers are used. Then the register machine starts from the instruction and continues to calculate until it reaches the halt instruction . Finally, the function value is stored in a special register . is defined as a fixed admissible enumeration of the unary partial recursive functions. A register machine is said to be universal when there is a recursive function g such that for all natural numbers x, y.

Korec [31] proposed a small universal register machine . contains 8 registers (labeled from 0 to 7) and 23 instructions. By introducing two numbers g(x) and y into registers 1 and 2, respectively, can be computed by . When stops, the function value is stored in register 0. In order to simplify the simulation of the register machine , we introduce a new register 8 and replace the halt instruction with three new instructions: ; ; . We define the modified register machine as , as shown in Table 1. We will design a small universal DTNP-MCIR system to simulate the register machine .

Table 1. Universal register machine .

Theorem 3.

There is a small universal DTNP-MCIR system having 73 neurons for computing functions.

Proof.

We design a DTNP-MCIR system to simulate the register machine . System contains an INPUT module, an OUTPUT module, and some ADD and SUB modules to simulate the ADD and SUB instructions of , respectively. The INPUT module is used to read the spike train from the environment, and the OUTPUT module deals with the calculation result, which is placed in register 8. Each register r and each instruction of corresponds to a neuron and respectively. If the feeding input unit of neuron contains 2n spikes, the register r contains the number n. When neuron receives two spikes, the simulation of instruction is started. Initially, all neurons in system are assumed to be empty. □

The INPUT module is shown in Figure 8. It reads the spike train from the environment, and 2g(x) spikes and 2y spikes are stored in neurons and , respectively. We do not use inhibitory rules in this module, but make full use of multiple channels. Multiple channels play a major role in saving neurons and improving the operating efficiency of the system.

Figure 8. Module INPUT.

Suppose that neuron receives the first spike from the environment at time . At time , rule is applied, and neuron sends a spike to neurons , and . Since neurons , and all contain a spike, the rule in the three neurons is enabled at time . That is, neuron sends a spike to neuron , neuron and neuron exchange a spike with each other, and neuron and neuron each send a spike to neuron via channel (1). This process repeats until the second spike reaches neuron . Therefore, neuron receives two spikes at each moment from time to time and imports a total of spikes (that is, the number stored in neuron is ).

Assume that the second spike reaches neuron at time ; in fact, . Similarly, rule in neuron is applied to send a spike to neurons , and at time . Then neurons , and each contain two spikes, so rule in neurons and is enabled and rule is applied in neurons at time . Then neurons and each send a spike to neuron via channel (2) and exchange spikes with neuron at each step from this moment on.

Neuron receives a total of 2y spikes from time to time (that is, the number stored in neuron is y). When the third spike is imported by neuron , at the next moment neurons , , and will each contain three spikes. Three spikes are consumed by the forgetting rule in neurons and . Rule in neuron is applied to transmit two spikes to neuron via channel (3); this indicates that the system starts to simulate the initial instruction .
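The loading behaviour of the INPUT module can be summarised with a short Python sketch. It abstracts away the individual neurons and rules and keeps only the property stated above: a register neuron receives two spikes per step while its input interval is open. The function name `load_registers` and the assumption that the two intervals have lengths g(x) and y are ours and are made for illustration only.

```python
def load_registers(spike_times):
    """Return the spikes accumulated by the two register neurons.

    spike_times holds the arrival times of the three input spikes.
    Assumption of this sketch: the first register neuron gains two spikes
    per step between the first and second input spike, and the second
    register neuron gains two spikes per step between the second and third.
    """
    t1, t2, t3 = spike_times
    if not (t1 < t2 < t3):
        raise ValueError("input spikes must arrive at strictly increasing times")

    reg1_spikes = 0
    reg2_spikes = 0
    for step in range(t1, t3):
        if step < t2:
            reg1_spikes += 2  # first interval: load the first register
        else:
            reg2_spikes += 2  # second interval: load the second register
    return reg1_spikes, reg2_spikes


# Example: intervals of length 3 and 5 encode the numbers 3 and 5.
reg1, reg2 = load_registers((1, 4, 9))
print(reg1 // 2, reg2 // 2)
```

Halving the spike counts recovers the stored numbers, in line with the convention that 2n spikes represent the number n.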

By observing Table 1, we can see that all ADD instructions have the form ; therefore, we use the deterministic ADD module shown in Figure 7 to simulate the ADD instructions. Its working mechanism has been clarified in the proof of Theorem 2.

In addition, the SUB instruction can be simulated by the SUB module in Figure 4. The working mechanism of the SUB module has been discussed during the proof of Theorem 1.

When neuron receives two spikes at time t, the computation of the system halts. The OUTPUT module shown in Figure 9 is used to handle the computation result. We assume that neuron contains 2n spikes. The configuration of the OUTPUT module at time t is , which involves five neurons respectively. Thus, . Rule is enabled, and neuron sends one spike to neurons and via channel (1) at time t. Therefore, . Since neuron is the inhibitory neuron of neuron and , rule is applied, and neuron sends a spike to neuron . Rule in neuron and rule in neuron are also enabled. Although the rule consumes three spikes of neuron , neuron and neuron exchange one spike with each other via channel (1), so neuron loses only two spikes at each moment. Thus, . Rule is applied in neuron , and the first spike is sent to the environment at time t + 2. Rule is executed repeatedly until neuron contains only one spike. At time t + n + 1, neuron contains only one spike, rule is applied, and neuron transmits a spike to neuron via channel (2). At time t + n + 2, rule is applied, and neuron sends the second spike to the environment. According to the definition in Section 2, the result of the system is , which is also the number contained in register 8.

Figure 9. OUTPUT module.
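The timing of the OUTPUT module can be checked with a small Python sketch. It does not model the individual rules or channels; it only tracks the number of spikes in the neuron of register 8, using the time points given in the description above. The function name `output_times` and the restriction to n ≥ 1 are assumptions of this sketch.

```python
def output_times(n, t=0):
    """Return (first_spike_time, second_spike_time, result) for register value n.

    Assumptions of this sketch, taken from the textual description:
      * the register neuron holds 2n spikes and gains one spike from the
        halt neuron, so it holds 2n + 1 spikes at time t + 1;
      * it then loses a net two spikes per step until one spike remains;
      * the first output spike leaves at time t + 2, and the second one
        leaves one step after the register neuron is down to one spike.
    """
    if n < 1:
        raise ValueError("this sketch only covers n >= 1")

    spikes = 2 * n + 1
    now = t + 1
    first_out = t + 2

    while spikes > 1:
        spikes -= 2  # net loss per step: three consumed, one received back
        now += 1

    # Here now == t + n + 1 and one spike is left in the register neuron.
    second_out = now + 1
    return first_out, second_out, second_out - first_out


first, second, value = output_times(n=3, t=10)
print(first, second, value)
```

Under these assumptions, the interval between the two output spikes equals n, which matches the statement that the result is the number contained in register 8.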

From the above description, it can be seen that the system correctly simulates the register machine for computing functions. System contains a total of 81 neurons: (1) the INPUT module has 3 auxiliary neurons; (2) the OUTPUT module has 2 auxiliary neurons; (3) each SUB module has 3 auxiliary neurons, for a total of 42 neurons; (4) 9 neurons correspond to the 9 registers; (5) 25 neurons correspond to the 25 instructions.

We can further reduce the number of neurons in system by combining instructions. Here are three cases:

Case 1. For the sequence of two consecutive ADD instructions and , we can use the model ADD-ADD in Figure 10 to simulate so that neuron corresponding to instruction can be saved.

Figure 10. The model simulating consecutive ADD-ADD instructions and .

Case 2. We can also combine instructions and to form one instruction so that will be saved. The SUB-ADD-1 model shown in Figure 11 can be used to simulate newly formed instructions.

Figure 11. The model simulating consecutive SUB-ADD-1 instructions and .

Case 3. The following six pairs of ADD and SUB instructions can be combined.

By observing the above six pairs of instructions, it can be seen that the latter ADD instruction is at the position of the first exit of the preceding SUB instruction. Therefore, the combined instructions can be expressed as . In this way, we can save six neurons. The recombined SUB and ADD instructions can be simulated by the SUB-ADD-2 module in Figure 12.

Figure 12. The model simulating consecutive SUB-ADD-2 instructions .

In summary, we can save eight neurons in total by combining some ADD/SUB instructions. The recombined instructions can be simulated with the ADD-ADD, SUB-ADD-1 and SUB-ADD-2 modules, respectively. Therefore, the number of neurons in system drops from 81 to 73. This completes the proof of Theorem 3.
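The neuron count in the proof can be verified with a few lines of Python. All figures are taken from the text; only the dictionary keys and variable names are ours.

```python
# Neurons before optimisation, as itemised in the proof.
neurons = {
    "INPUT auxiliary": 3,
    "OUTPUT auxiliary": 2,
    "SUB auxiliary": 42,   # 3 auxiliary neurons per SUB module
    "registers": 9,
    "instructions": 25,
}
sub_modules = neurons["SUB auxiliary"] // 3  # number of SUB modules implied

total_before = sum(neurons.values())

# Neurons saved by combining instructions (Cases 1 to 3).
saved = {"ADD-ADD": 1, "SUB-ADD-1": 1, "SUB-ADD-2": 6}
total_after = total_before - sum(saved.values())

print(sub_modules, total_before, total_after)
```

The figure of 42 auxiliary neurons implies 14 SUB modules, and the totals agree with the reduction from 81 to 73 neurons stated in the proof.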

In order to further illustrate the computing power of DTNP-MCIR systems, we compare them with some other computing models in terms of the number of computing units. From Table 2, we can see that DTNP systems, SNP systems, SNP-IR systems and recurrent neural networks need 109, 67, 100 and 886 neurons, respectively, to achieve Turing universality for computing functions. Among these, DTNP systems, SNP-IR systems and recurrent neural networks need more neurons than the 73 required by DTNP-MCIR systems. SNP-MC systems require only 38 neurons to compute functions, which is far fewer than the number of computing units required by DTNP-MCIR systems, but the two models have different working modes: SNP-MC systems work in asynchronous mode, whereas DTNP-MCIR systems work in synchronous mode. Therefore, the comparison indicates that DTNP-MCIR systems need fewer neurons than most of these models. In addition, Table 3 gives the full names of the abbreviations of the compared models.

Table 2. Comparison of different computing models in terms of the number of computing units.

Table 3. Abbreviations and corresponding full names of some systems cited in the paper.

5. Conclusions and Further Work

Inspired by SNP systems with inhibitory rules (SNP-IR systems) and SNP systems with multiple channels (SNP-MC systems), this paper proposes a dynamic threshold neural P system with inhibitory rules and multiple channels (DTNP-MCIR systems). Dynamic threshold neural P systems (DTNP systems) have already been studied and proved to be Turing universal as number generating/accepting devices. Our original intention in constructing DTNP-MCIR systems is to simulate more fully the actual situation of neurons communicating through synapses, and also to show the use of inhibitory rules and multiple channels in DTNP systems. In addition, we have optimized DTNP systems. The form of firing rules is , where , and . However, and are always equal in DTNP systems; we consider that this lacks generality and have relaxed it. For the rule of neuron in the SUB module, if neuron contains 2n spikes at time t and , then neuron can be fired at time t, which is unreasonable for the entire system. Therefore, we improve the form of firing rules to , where is a regular expression we introduce, which means that neuron can be fired only when the number of spikes is odd.
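The effect of such a regular-expression guard can be illustrated in Python. The concrete expression used in the improved rule is not reproduced here, so the pattern `a(aa)*`, a standard expression for an odd number of occurrences of the spike symbol a, is an assumption of this sketch, as is the helper name `can_fire`.

```python
import re

# Assumed guard: an odd number of spikes, written over the one-letter alphabet {a}.
ODD_SPIKES = re.compile(r"a(aa)*")


def can_fire(spike_count: int) -> bool:
    """Return True if a neuron holding spike_count spikes satisfies the guard."""
    return ODD_SPIKES.fullmatch("a" * spike_count) is not None


# A register neuron holding 2n spikes (an even number) is blocked, whereas
# 2n + 1 spikes, for example after receiving one trigger spike, pass the guard.
print([k for k in range(8) if can_fire(k)])
```

With this guard, a neuron that holds exactly 2n spikes cannot fire before the triggering spike arrives, which is the situation the improved rule form is meant to exclude.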

In the future, we want to investigate whether DTNP-MCIR systems can be combined with certain algorithms. We think that the two data units of DTNP-MCIR systems can be used as two parameter inputs, and that the use of inhibitory rules and multiple channels gives the systems stronger control capabilities. Moreover, because DTNP-MCIR systems are a distributed parallel computing model, they could improve the computational efficiency of algorithms. Future work will focus on using DTNP-MCIR systems to solve real-world problems, such as image processing and data clustering.

Author Contributions

Conceptualization, X.Y. and X.L.; methodology, X.Y.; formal analysis, X.Y.; writing—original draft preparation, X.Y.; writing—review and editing, X.Y.; visualization, X.L.; funding acquisition, X.Y. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research project is supported by the National Natural Science Foundation of China (61876101, 61802234, 61806114), the Social Science Fund Project of Shandong Province, China (16BGLJ06, 11CGLJ22), the Natural Science Fund Project of Shandong Province, China (ZR2019QF007), the Postdoctoral Project, China (2017M612339, 2018M642695), the Humanities and Social Sciences Youth Fund of the Ministry of Education, China (19YJCZH244), and the Postdoctoral Special Funding Project, China (2019T120607).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Păun, G. Computing with membranes. J. Comput. Syst. Sci. 2000, 61, 108–143.

2. Song, T.; Gong, F.; Liu, X. Spiking neural P systems with white hole neurons. IEEE Trans. NanoBiosci. 2016, 15, 666–673.

3. Freund, R.; Păun, G.; Pérez-Jiménez, M.J. Tissue-like P systems with channel-states. Theor. Comput. Sci. 2005, 330, 101–116.

4. Song, T.; Pan, L.; Wu, T. Spiking neural P systems with learning functions. IEEE Trans. NanoBiosci. 2019, 18, 176–190.

5. Cabarle, F.G.C.; Adorna, H.N.; Jiang, M.; Zeng, X. Spiking neural P systems with scheduled synapses. IEEE Trans. NanoBiosci. 2017, 16, 792–801.

6. Song, T.; Pan, L. Spiking neural P systems with rules on synapses working in maximum spiking strategy. IEEE Trans. NanoBiosci. 2015, 14, 465–477.

7. Ionescu, M.; Păun, G.; Yokomori, T. Spiking neural P systems. Fund. Inform. 2006, 71, 279–308.

8. Song, T.; Pan, L. Spiking neural P systems with request rules. Neurocomputing 2016, 193, 193–200.

9. Zeng, X.; Zhang, X. Spiking neural P systems with thresholds. Neural Comput. 2014, 26, 1340–1361.

10. Zhao, Y.; Liu, X. Spiking neural P systems with neuron division and dissolution. PLoS ONE 2016, 11, e0162882.

11. Wang, J.; Shi, P.; Peng, H.; Pérez-Jiménez, M.J.; Wang, T. Weighted fuzzy spiking neural P systems. IEEE Trans. Fuzzy Syst. 2012, 21, 209–220.

12. Jiang, K.; Song, T.; Pan, L. Universality of sequential spiking neural P systems based on minimum spike number. Theor. Comput. Sci. 2013, 499, 88–97.

13. Zhang, X.; Luo, B. Sequential spiking neural P systems with exhaustive use of rules. Biosystems 2012, 108, 52–62.

14. Cavaliere, M.; Ibarra, O.H.; Păun, G.; Egecioglu, O.; Ionescu, M.; Woodworth, S. Asynchronous spiking neural P systems. Theor. Comput. Sci. 2009, 410, 2352–2364.

15. Song, T.; Pan, L.; Păun, G. Asynchronous spiking neural P systems with local synchronization. Inf. Sci. 2013, 219, 197–207.

16. Song, T.; Zou, Q.; Liu, X.; Zeng, X. Asynchronous spiking neural P systems with rules on synapses. Neurocomputing 2015, 151, 1439–1445.

17. Păun, G. Spiking neural P systems with astrocyte-like control. J. Univers. Comput. Sci. 2007, 13, 1707–1721.

18. Wu, T.; Păun, A.; Zhang, Z.; Pan, L. Spiking neural P systems with polarizations. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 3349–3360.

19. Păun, A.; Păun, G. Small universal spiking neural P systems. Biosystems 2007, 90, 48–60.

20. Cabarle, F.; Adorna, H.; Pérez-Jiménez, M.; Song, T. Spiking neural P systems with structural plasticity. Neural Comput. Appl. 2015, 26, 1905–1917.

21. Peng, H.; Chen, R.; Wang, J.; Song, X.; Wang, T.; Yang, F.; Sun, Z. Competitive spiking neural P systems with rules on synapses. IEEE Trans. NanoBiosci. 2017, 16, 888–895.

22. Xiong, G.; Shi, D.; Zhu, L.; Duan, X. A new approach to fault diagnosis of power systems using fuzzy reasoning spiking neural P systems. Math. Probl. Eng. 2013, 2013, 1–13.

23. Wang, T.; Zhang, G.X.; Zhao, J.B.; He, Z.Y.; Wang, J.; Pérez-Jiménez, M.J. Fault diagnosis of electric power systems based on fuzzy reasoning spiking neural P systems. IEEE Trans. Power Syst. 2015, 30, 1182–1194.

24. Peng, H.; Wang, J.; Ming, J.; Shi, P.; Pérez-Jiménez, M.J.; Yu, W.; Tao, C. Fault diagnosis of power systems using intuitionistic fuzzy spiking neural P systems. IEEE Trans. Smart Grid 2018, 9, 4777–4784.

25. Peng, H.; Wang, J.; Shi, P.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. An extended membrane system with active membrane to solve automatic fuzzy clustering problems. Int. J. Neural Syst. 2016, 26, 1–17.

26. Zhang, G.; Rong, H.; Neri, F.; Pérez-Jiménez, M. An optimization spiking neural P system for approximately solving combinatorial optimization problems. Int. J. Neural Syst. 2014, 24, 1440006.

27. Peng, H.; Yang, J.; Wang, J.; Wang, T.; Sun, Z.; Song, X.; Lou, X.; Huang, X. Spiking neural P systems with multiple channels. Neural Netw. 2017, 95, 66–71.

28. Peng, H.; Li, B.; Wang, J. Spiking neural P systems with inhibitory rules. Knowl.-Based Syst. 2020, 188, 105064.

29. Peng, H.; Wang, J. Dynamic threshold neural P systems. Knowl.-Based Syst. 2019, 163, 875–884.

30. Peng, H.; Wang, J. Coupled neural P systems. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 1672–1682.

31. Korec, I. Small universal register machines. Theor. Comput. Sci. 1996, 168, 267–301.

32. Zhang, X.; Zeng, X.; Pan, L. Smaller universal spiking neural P systems. Fund. Inform. 2007, 87, 117–136.

33. Siegelmann, H.T.; Sontag, E.D. On the computational power of neural nets. J. Comput. Syst. Sci. 1995, 50, 132–150.

34. Song, X.; Peng, H. Small universal asynchronous spiking neural P systems with multiple channels. Neurocomputing 2020, 378, 1–8.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).