A Lightweight Infrared and Visible Light Multimodal Fusion Method for Object Detection in Power Inspection

Abstract

1. Introduction

2. Research Status and Analysis

3. Model Construction

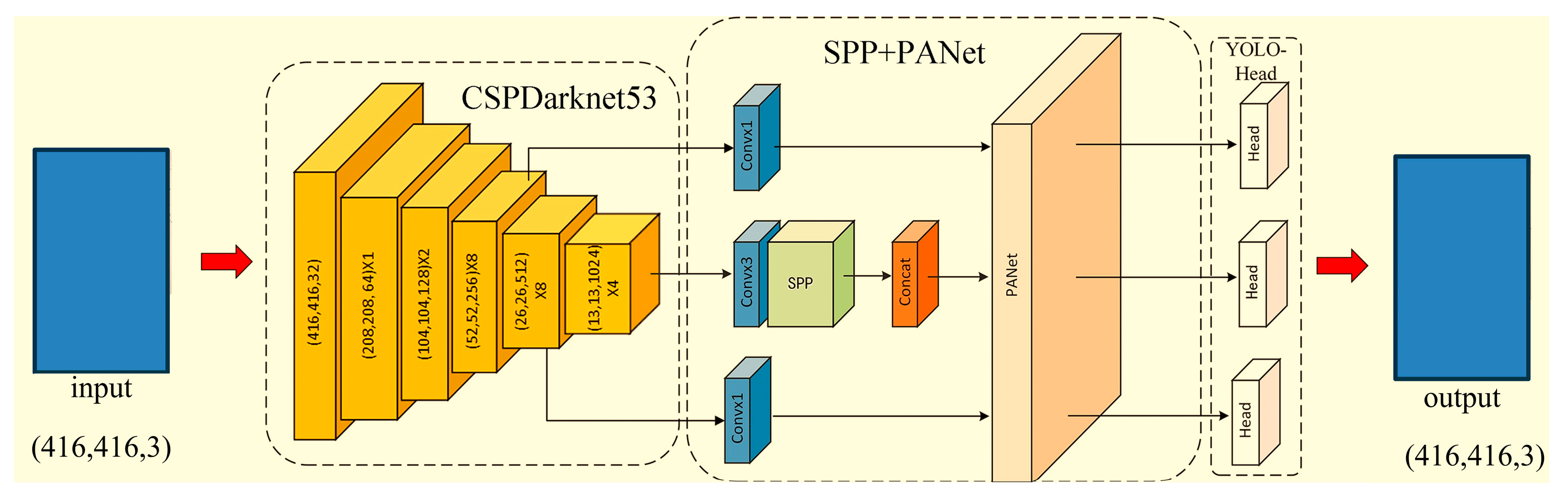

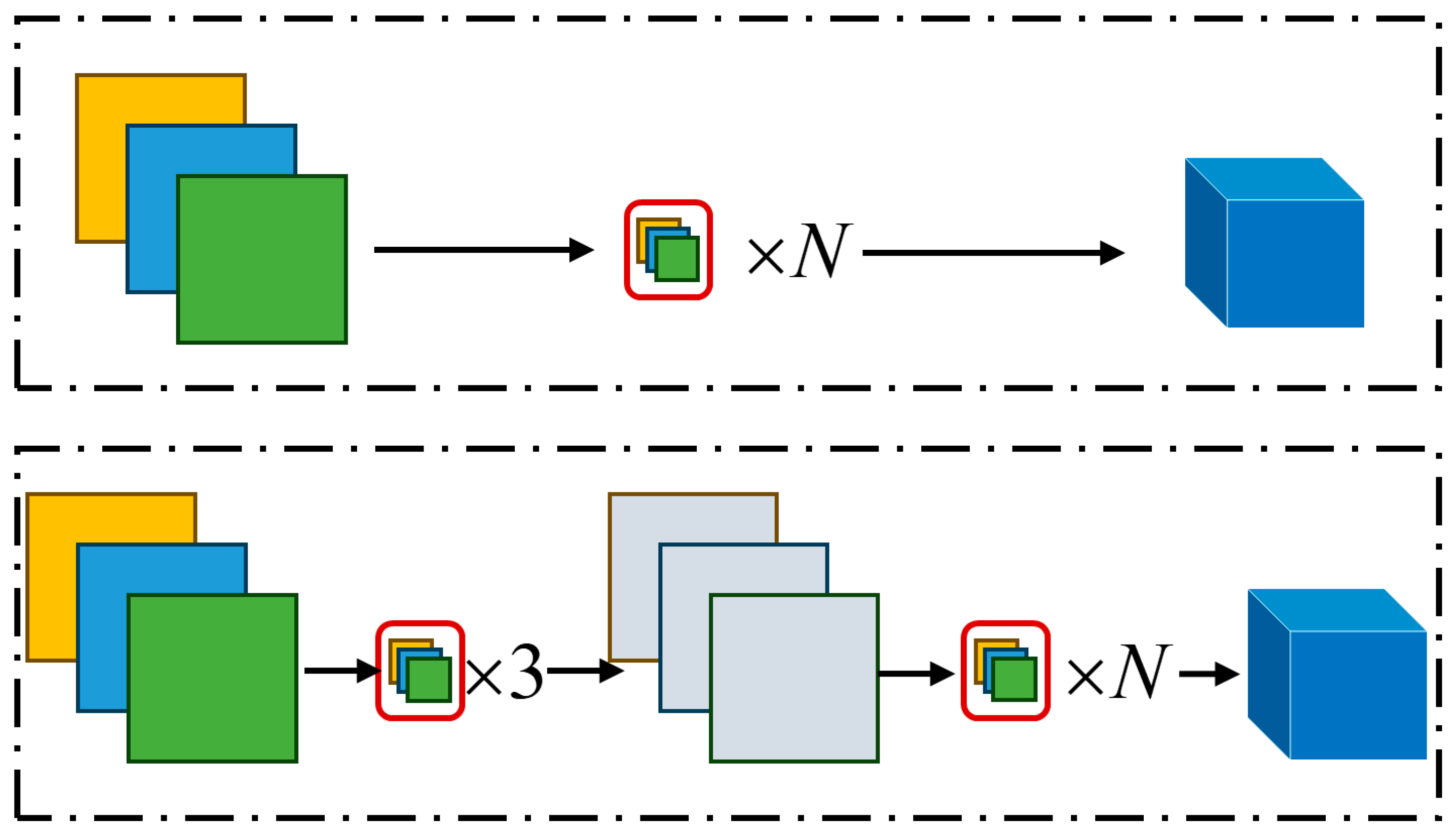

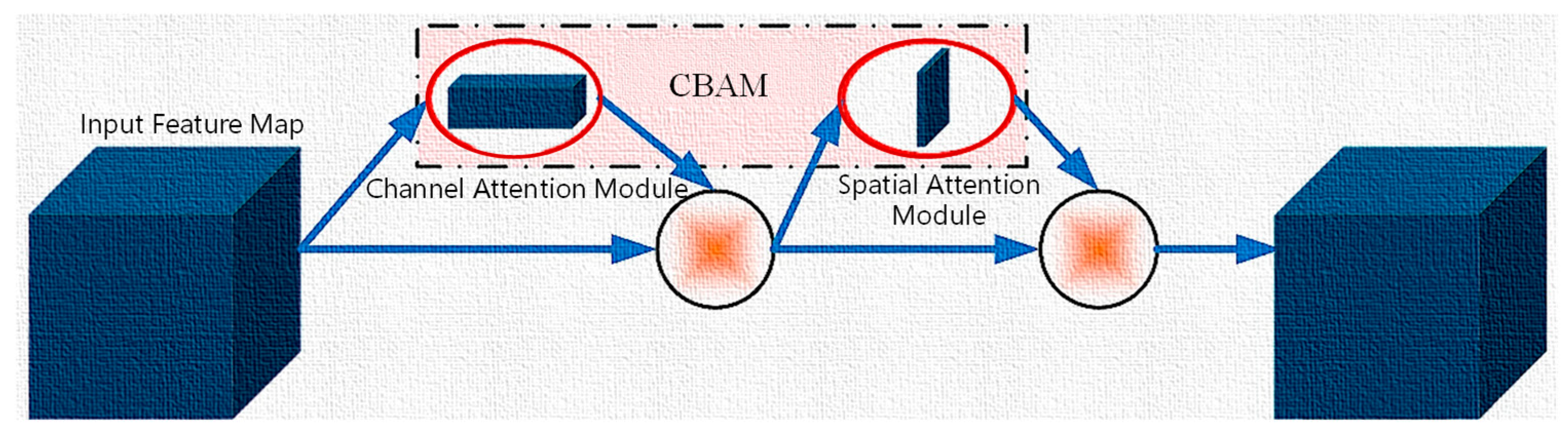

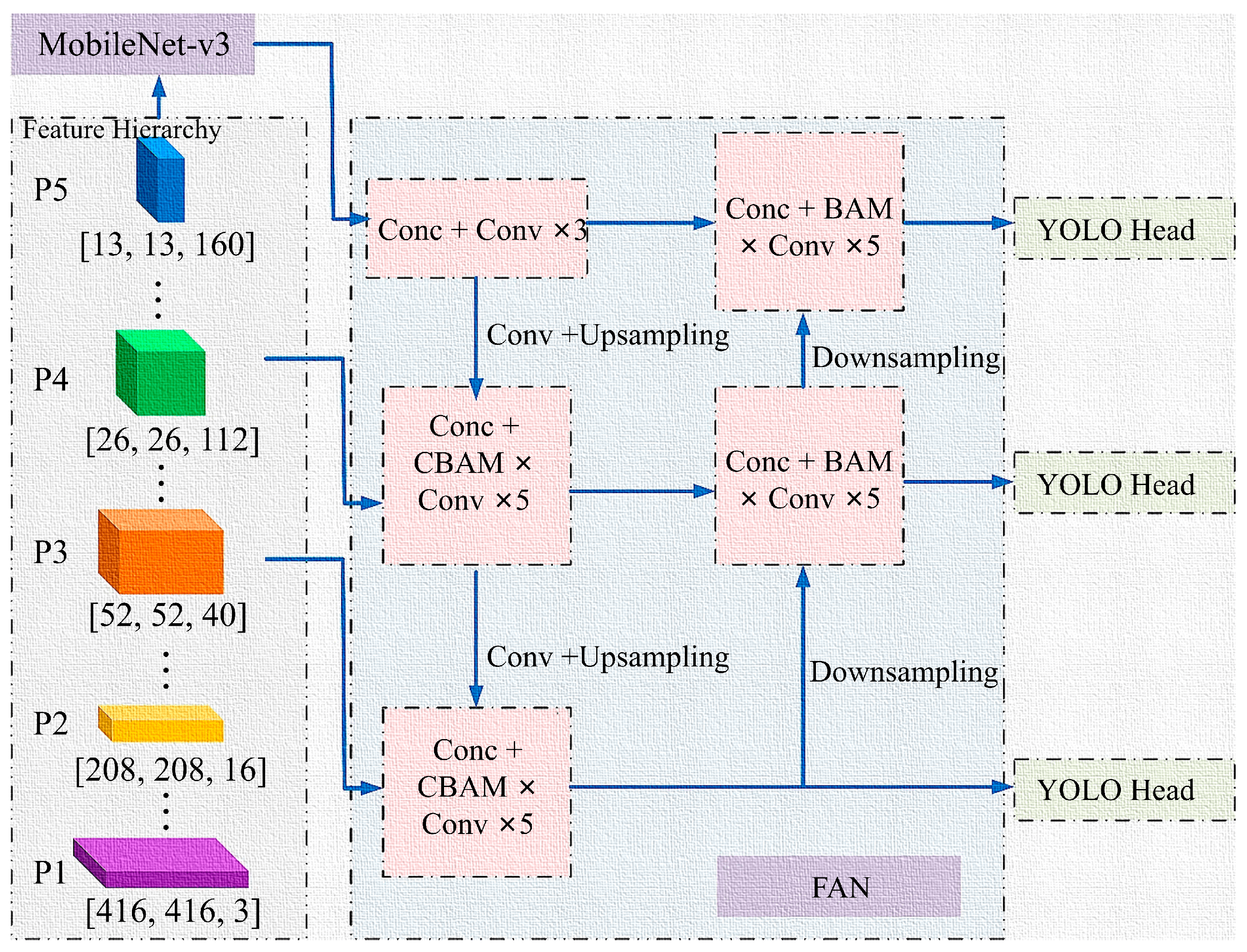

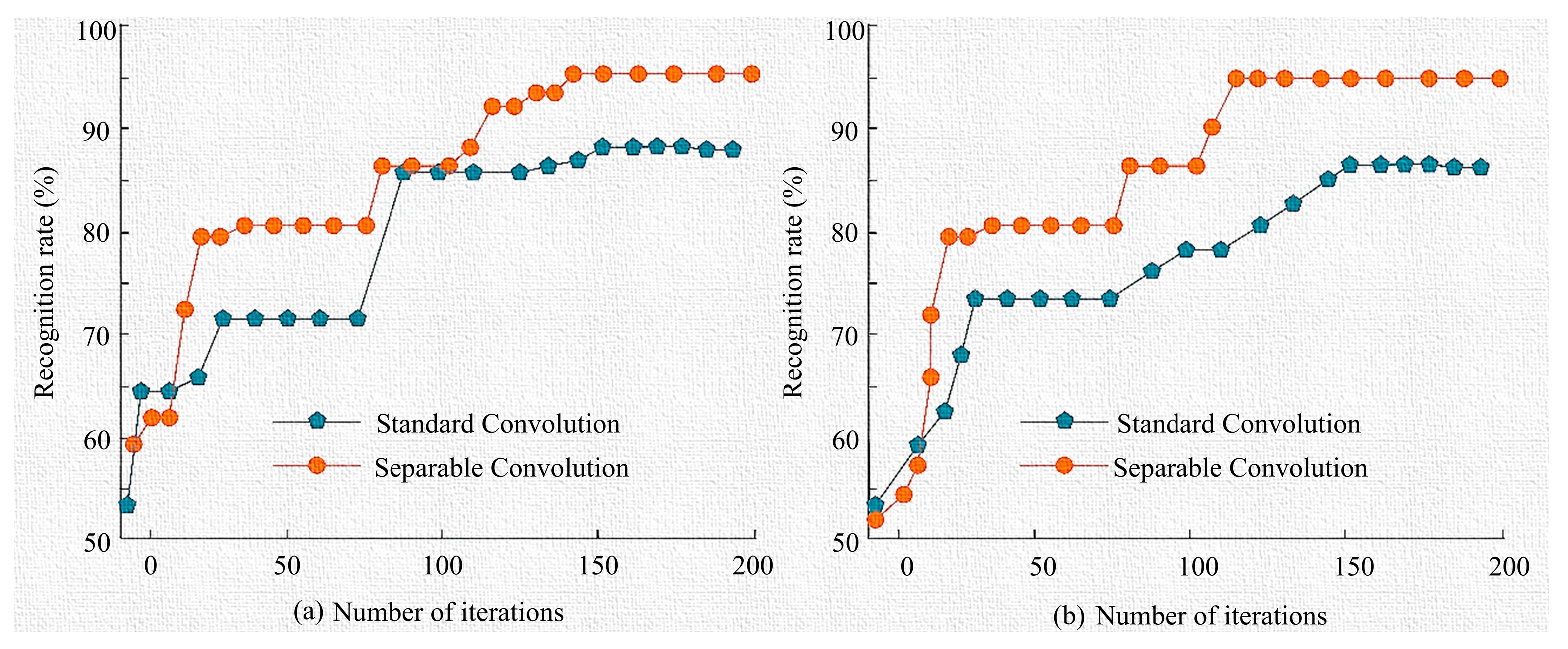

3.1. Construction of a Lightweight Multimodal Optical Image Processing Model Based on CBAM-YOLOv4

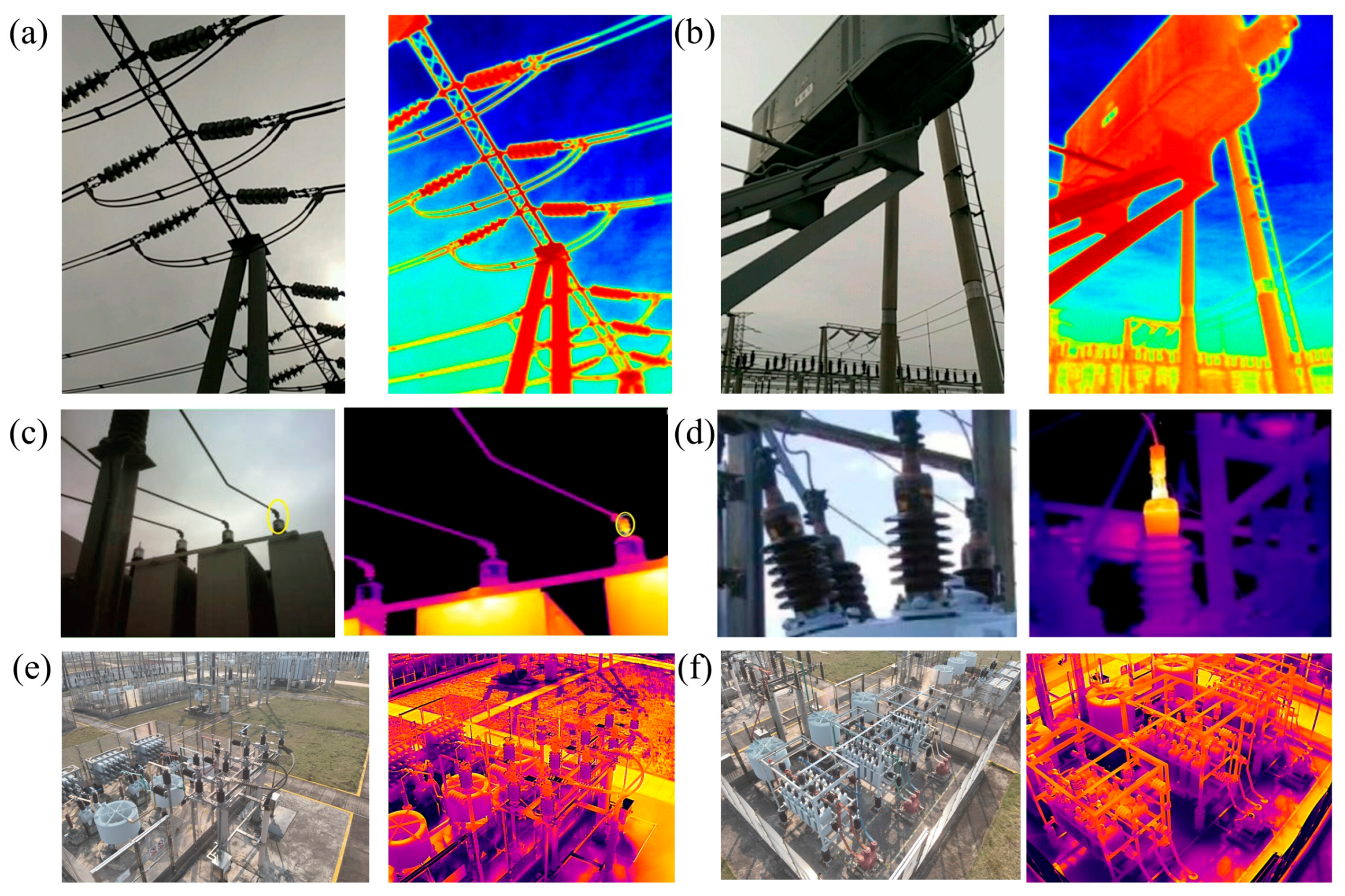

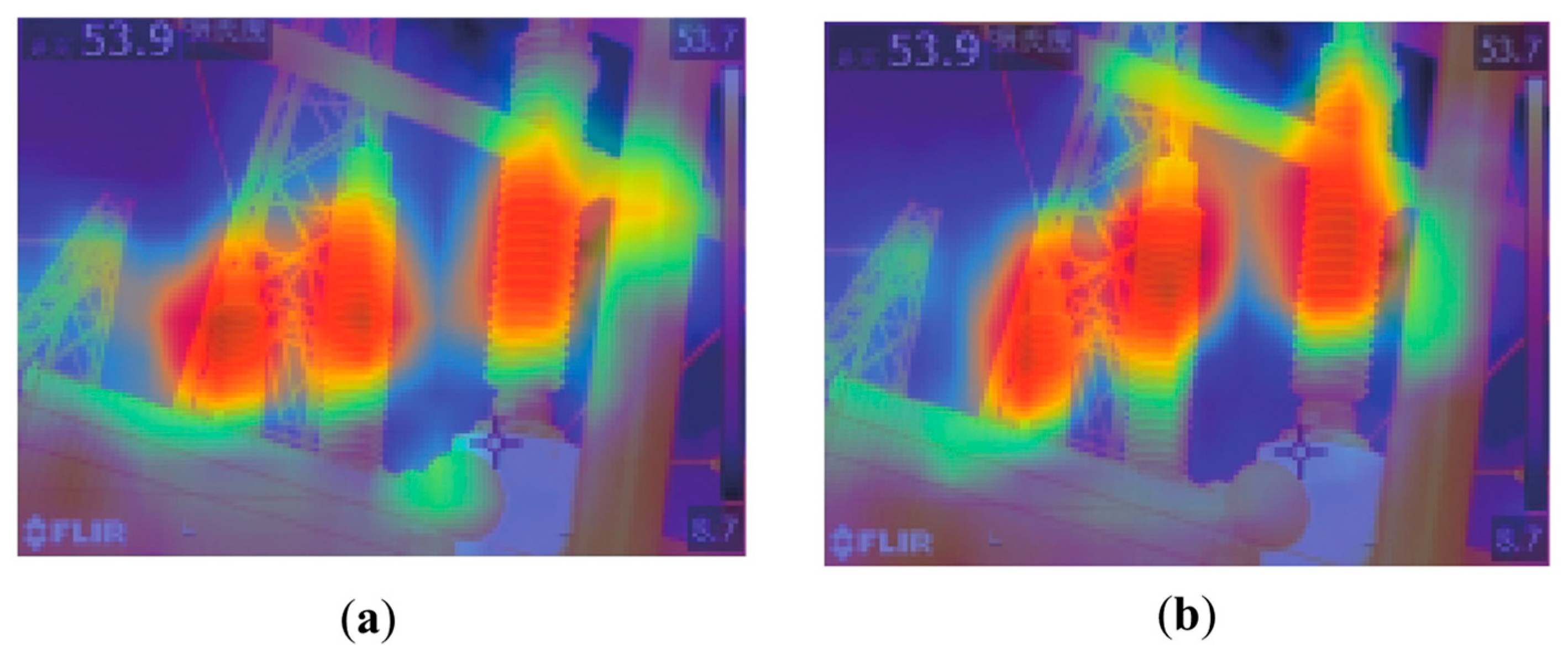

3.2. Multimodal Information Fusion-Driven CBAM-YOLOv4 Application for Substation Inspection

4. Results and Analysis

4.1. Experimental Setup and Dataset

4.2. Model Performance Evaluation

4.3. Model Characteristics Analysis

4.4. Edge Device Performance Validation

4.5. Ablation Study on Attention Mechanism

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Feature | Description/Value |
|---|---|
| Dataset Name | VISED |
| Data Modality | Visible Light and Infrared Thermography |
| Total Number of Image Pairs | 500 pairs |
| Data Source | Multiple real-world industrial sites and laboratory environments |
| Equipment Types | Transformers, switches, insulators, capacitors, connectors, etc. |
| Covered Scenarios | Various typical substation equipment, including normal conditions and common infrared thermal anomaly patterns |
| Image Registration | Synchronized collection of visible light and infrared images, spatial registration |
| Data Division | Training Set: 250 pairs; Test Set: 250 pairs |
| Basic Preprocessing | Image normalization, noise filtering, etc. |

| Component | Specifications |
|---|---|
| Device | NVIDIA Jetson Nano Developer Kit |
| CPU | Quad-core ARM® Cortex®-A57 MPCore processor |
| GPU | 128-core NVIDIA Maxwell™ architecture GPU |
| Memory | 4 GB 64-bit LPDDR4 |
| Software | NVIDIA JetPack SDK with TensorRT |

| Model | Weight File Size (MB) | mIoU (%) | mAP (%) | Frames Per Second (FPS) |
|---|---|---|---|---|
| YOLOv4 | 27 | 81.19 | 80.67 | 13.48 |
| YOLOv3 | 30 | 74.78 | 74.77 | 19.78 |
| Lightweight YOLOv4 | 19 | 64.19 | 59.59 | 25.29 |
| MASK-RCNN | 28 | 73.77 | 71.18 | 9.49 |
| CBAM-YOLOv4 | 25 | 85.12 | 82.28 | 31.53 |

| Value of α | mAP (%) |
|---|---|
| 0.3 | 81.6 |
| 0.4 | 82 |
| 0.5 (Optimal) | 82.28 |
| 0.6 | 82.1 |
| 0.7 | 81.2 |
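
The sweep above can be read programmatically, as in the minimal sketch below. The table reports only α and mAP; this excerpt does not define α. The `weighted_fusion` helper therefore rests on an assumption that α is a convex weight on the infrared input, with 1 − α on the visible input. The function names and the nested-list image representation are hypothetical and are not taken from the paper.

```python
# Sweep results copied from the table above: alpha -> mAP (%).
ALPHA_SWEEP = {0.3: 81.6, 0.4: 82.0, 0.5: 82.28, 0.6: 82.1, 0.7: 81.2}


def best_alpha(sweep):
    """Return the (alpha, mAP) pair with the highest mAP."""
    return max(sweep.items(), key=lambda item: item[1])


def weighted_fusion(infrared, visible, alpha):
    """Blend two registered, same-sized images given as nested lists.

    ASSUMPTION (not confirmed by the source): alpha weights the infrared
    input and (1 - alpha) weights the visible input.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(infrared) != len(visible):
        raise ValueError("inputs must have the same number of rows")
    fused = []
    for ir_row, vis_row in zip(infrared, visible):
        if len(ir_row) != len(vis_row):
            raise ValueError("rows must have the same length")
        fused.append(
            [alpha * ir + (1.0 - alpha) * vis for ir, vis in zip(ir_row, vis_row)]
        )
    return fused


if __name__ == "__main__":
    alpha, map_percent = best_alpha(ALPHA_SWEEP)
    print(f"best alpha = {alpha}, mAP = {map_percent}%")
    print(weighted_fusion([[0.0, 1.0]], [[1.0, 0.0]], alpha))
```

In the table, mAP varies by about one percentage point across the tested range (81.2 to 82.28), so the reported optimum at α = 0.5 is a shallow one.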

| Model | mAP (%) | Inference Speed (FPS) on Jetson Nano |
|---|---|---|
| YOLOv4 | 80.67 | 6.8 |
| YOLOv3 | 74.77 | 9.5 |
| Lightweight YOLOv4 | 59.59 | 18.2 |
| MASK-RCNN | 71.18 | 1.3 (practically unusable) |
| CBAM-YOLOv4 | 82.28 | 21.7 |

| Precision Level | mAP (%) | Latency (ms) | FPS | Avg. Power (W) | Peak RAM (MB) |
|---|---|---|---|---|---|
| FP32 (Baseline) | 82.28 | 46.1 | 21.7 | 7.8 | 1150 |
| FP16 | 82.15 | 31.3 | 32 | 6.5 | 780 |
| INT8 | 80.91 | 24.5 | 40.8 | 5.2 | 620 |
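
The latency and FPS columns above are related by FPS ≈ 1000 / latency (ms), which the short sketch below checks against the reported values. It also derives two quantities the table does not report: speedup over FP32 and an approximate energy per frame. The energy figure assumes that the average power holds throughout a single-frame inference, so it should be read as a rough estimate rather than a measurement. All function and variable names are illustrative.

```python
# Values copied from the precision-level table above.
PRECISION_RESULTS = {
    "FP32": {"map": 82.28, "latency_ms": 46.1, "fps": 21.7, "power_w": 7.8},
    "FP16": {"map": 82.15, "latency_ms": 31.3, "fps": 32.0, "power_w": 6.5},
    "INT8": {"map": 80.91, "latency_ms": 24.5, "fps": 40.8, "power_w": 5.2},
}


def fps_from_latency(latency_ms):
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


def energy_per_frame_j(power_w, latency_ms):
    """Approximate joules per frame.

    ASSUMPTION: the reported average power applies for the whole
    duration of one inference.
    """
    return power_w * latency_ms / 1000.0


def summarize(results, baseline="FP32"):
    """Derive per-precision metrics relative to the baseline row."""
    base = results[baseline]
    summary = {}
    for name, row in results.items():
        summary[name] = {
            "fps_implied": fps_from_latency(row["latency_ms"]),
            "speedup": base["latency_ms"] / row["latency_ms"],
            "map_drop": base["map"] - row["map"],
            "energy_j": energy_per_frame_j(row["power_w"], row["latency_ms"]),
        }
    return summary


if __name__ == "__main__":
    for name, row in summarize(PRECISION_RESULTS).items():
        print(
            f"{name}: implied FPS {row['fps_implied']:.1f}, "
            f"speedup {row['speedup']:.2f}x, "
            f"mAP drop {row['map_drop']:.2f}, "
            f"energy {row['energy_j']:.3f} J/frame"
        )
```

Under these assumptions, INT8 runs roughly 1.9 times faster than FP32 at a cost of 1.37 mAP points, while FP16 gives about a 1.5 times speedup for a 0.13 point drop.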

| Model Configuration | mAP (%) | Latency (ms) | Parameters (M) |
|---|---|---|---|
| Lightweight YOLOv4 (Baseline) | 80.12 | 44.5 | 24.8 |
| Lightweight YOLOv4 + SE | 81.05 | 45.2 | 24.9 |
| Lightweight YOLOv4 + CBAM (Ours) | 82.28 | 46.1 | 25.0 |
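
To make the trade-off in the ablation table explicit, the sketch below computes each attention variant's mAP gain, added latency, and parameter overhead relative to the baseline row. It uses only the numbers in the table; the dictionary keys and function name are illustrative and do not come from the paper.

```python
# Values copied from the ablation table above.
ABLATION = {
    "baseline": {"map": 80.12, "latency_ms": 44.5, "params_m": 24.8},
    "se": {"map": 81.05, "latency_ms": 45.2, "params_m": 24.9},
    "cbam": {"map": 82.28, "latency_ms": 46.1, "params_m": 25.0},
}


def deltas_vs_baseline(results, baseline="baseline"):
    """Return accuracy gain and cost of each variant relative to the baseline."""
    base = results[baseline]
    out = {}
    for name, row in results.items():
        if name == baseline:
            continue
        out[name] = {
            "map_gain": row["map"] - base["map"],
            "latency_cost_ms": row["latency_ms"] - base["latency_ms"],
            "param_overhead_pct": 100.0
            * (row["params_m"] - base["params_m"])
            / base["params_m"],
        }
    return out


if __name__ == "__main__":
    for name, d in deltas_vs_baseline(ABLATION).items():
        print(
            f"{name}: +{d['map_gain']:.2f} mAP, "
            f"+{d['latency_cost_ms']:.1f} ms, "
            f"+{d['param_overhead_pct']:.2f}% parameters"
        )
```

By these figures, CBAM adds 2.16 mAP points for 1.6 ms of extra latency and under 1% more parameters, compared with 0.93 points and 0.7 ms for SE.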

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, L.; Kuang, J.; Teng, Y.; Xiang, S.; Li, L.; Zhou, Y. A Lightweight Infrared and Visible Light Multimodal Fusion Method for Object Detection in Power Inspection. Processes 2025, 13, 2720. https://doi.org/10.3390/pr13092720
