Abstract

This article proposes a novel neural network (NN)-based parallel model predictive control (PMPC) method for the tracking problem of quadrotor unmanned aerial vehicles (Q-UAVs). The dynamics of a Q-UAV can change while the system operates in certain environments. In this case, traditional NN-based MPC methods are not applicable, because their model networks are pre-trained and kept constant throughout the process. To solve this problem, we propose the PMPC algorithm, which introduces a parallel control structure and experience replay into the MPC method. In this algorithm, an NN-based artificial system runs in parallel with the UAV system to reconstruct its dynamics model. Experience replay is used to maintain the accuracy of the reconstructed model and thereby ensure the effectiveness of the model prediction. A convergence proof of the artificial system is also given. Finally, numerical results and analysis demonstrate the effectiveness of the PMPC algorithm.

1. Introduction

In the past decade, with the development of sensor and network technologies, Q-UAVs have attracted increasing attention from researchers. Owing to their efficient deployment and flexible mobility, Q-UAVs are widely used in nuclear power plant inspection [1,2], forest fire fighting [3,4], and land surveying [5,6]. However, designing an effective controller for a Q-UAV system is challenging because the system is underactuated, strongly non-linear, and coupled. Numerous algorithms have been developed under the framework of traditional control, such as feedback linearization control [7,8], fuzzy logic-based control [9,10], and sliding mode control (SMC) [11,12]. In [13], a non-linear PID controller is designed for the tracking control problem of the Q-UAV system, where the system energy is considered. In [14], a fuzzy-based backstepping SMC algorithm is developed for the tracking problem of UAVs with parameter uncertainties and external disturbances. In [15], a second-order SMC algorithm is introduced to design the Q-UAV controller. However, optimality, an essential aspect of control design, is not considered in the traditional control algorithms mentioned above.

MPC is an effective optimal control method that is widely used in many fields, such as robot control [16,17], autonomous driving [18,19], and energy management [20,21]. Under the MPC scheme, the future states and behaviors of the system are predicted by a model of the plant, and the control law is optimized based on the analysis and evaluation of the predicted data. Because the optimal control is obtained by minimizing a performance cost function subject to constraints, MPC also performs well in robust control. Many researchers have introduced MPC into the field of Q-UAV control. In [22], a novel non-linear MPC is proposed for the navigation problem where obstacle avoidance is considered. In [23], MPC is applied to find paths through the atmosphere that maximize the UAV's energy. In [24], a novel adaptive MPC scheme is established for the angular rate and thrust control of a Q-UAV, where the thrust bound and the disturbances are considered. As the MPC scheme shows, however, the implementation of MPC relies on the accuracy of the prediction model, and it is difficult to model the dynamics of Q-UAVs accurately because of the high complexity of the system.

Neural networks are widely used to approximate unknown functions because of their universal approximation property [25,26,27,28]. Under the MPC scheme, NNs are introduced to approximate the dynamics model of Q-UAVs, and many researchers have contributed to this field. In [29], a novel reinforcement learning-based MPC algorithm is developed for the tracking control problem with thrust vectoring capabilities. In [30], an offline learning approach is applied to learn the dynamics model of the hybrid Q-UAV. In [31], a Q-UAV controller is designed based on a learning-based MPC algorithm, which provides levels of guarantees about safety, robustness, and convergence. Saviolo et al. developed an active learning algorithm for the control problem in which the model of the Q-UAV is uncertainty-aware [32]. Several novel meta-learning approaches have been developed to approximate the distribution over different “tasks” [33,34]. In the research mentioned above, the NN model is pre-trained before the system runs, and the weight matrices are kept constant during system operation. However, in some environments, such as nuclear radiation and forest fire environments, the dynamics model of the Q-UAV changes as the system operates, and the algorithms mentioned above are unreliable in this case.

Motivated by the above-mentioned problems, we propose a novel NN-based PMPC algorithm. The main contributions are summarized as follows:

- (1) Different from the existing results in [29], the model NN is used to approximate not only the position model but the whole dynamics model of the Q-UAV. Under our PMPC algorithm, the dynamics model of the Q-UAV is fully unknown, which makes the optimal control problem more difficult to solve.

- (2) Different from the existing results in [30], the model NN is executed in parallel with the system and is continuously updated as the system runs. Compared with the fixed NNs in [30,33,34], our algorithm can be applied when the dynamics model changes during system operation.

- (3) Experience replay is introduced into the PMPC algorithm to maintain the accuracy of the reconstructed model and thereby ensure the effectiveness of the model prediction.

The remainder of this article is organized as follows. The problem is formulated in Section 2. In Section 3, the parallel structure and the details of the PMPC algorithm are introduced. Numerical results and analysis are given to demonstrate the effectiveness of the PMPC algorithm in Section 4. Finally, the conclusion is presented in Section 5.

2. Problem Formulation

2.1. Dynamic Models of Q-UAVs

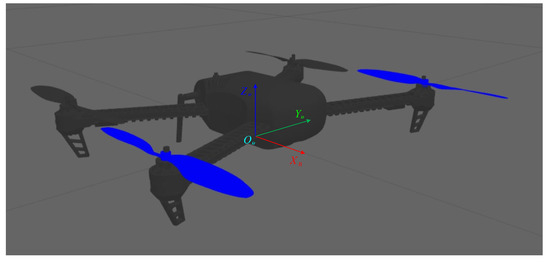

The dynamics of Q-UAVs are studied as a system with six degrees of freedom. Under the low-speed assumption, a simple rigid-body model of the Q-UAV is adopted from [35].

The model is written in terms of the state vector and the control input of the system. As shown in Figure 1, the position and the Euler angles are expressed in the inertial frame, together with the velocities along the three axes and the angular velocities of the three Euler angles. The model parameters are the moments of inertia of the Q-UAV around its three axes, the mass m of the Q-UAV, the length L from the rotors to the center of mass, and the moment of inertia and angular velocity of the propeller blades. The forces in the model are those generated by the four propellers.
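For reference, a widely used low-speed rigid-body quadrotor model that matches the quantities listed above can be sketched as follows. The symbol names are assumptions of this sketch and not necessarily the notation of [35]: $\phi$, $\theta$, $\psi$ for the roll, pitch, and yaw angles; $I_x$, $I_y$, $I_z$ for the body moments of inertia; $J_r$ and $\Omega_r$ for the propeller inertia and residual angular velocity; and $U_1,\dots,U_4$ for the lumped thrust and torque inputs built from the four propeller forces. Sign conventions differ between references.

```latex
\begin{aligned}
\ddot{x} &= (\cos\phi \sin\theta \cos\psi + \sin\phi \sin\psi)\,\frac{U_1}{m}, \\
\ddot{y} &= (\cos\phi \sin\theta \sin\psi - \sin\phi \cos\psi)\,\frac{U_1}{m}, \\
\ddot{z} &= \cos\phi \cos\theta\,\frac{U_1}{m} - g, \\
\ddot{\phi} &= \dot{\theta}\dot{\psi}\,\frac{I_y - I_z}{I_x} - \frac{J_r}{I_x}\,\dot{\theta}\,\Omega_r + \frac{L}{I_x}\,U_2, \\
\ddot{\theta} &= \dot{\phi}\dot{\psi}\,\frac{I_z - I_x}{I_y} + \frac{J_r}{I_y}\,\dot{\phi}\,\Omega_r + \frac{L}{I_y}\,U_3, \\
\ddot{\psi} &= \dot{\phi}\dot{\theta}\,\frac{I_x - I_y}{I_z} + \frac{1}{I_z}\,U_4.
\end{aligned}
```

In this form the system is underactuated: four inputs drive six degrees of freedom, and the horizontal motion is reached only through the roll and pitch angles.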

Figure 1. Sketch of the Q-UAV with its body frame.

2.2. Model Predictive Control

Consider a non-linear discrete-time system defined as

$$x_{k+1} = f(x_k, u_k), \tag{2}$$

where $x_k$ and $u_k$ denote the state and the control input of the system at time step $k$, respectively.

With $N$ selected as the finite prediction horizon, MPC minimizes the performance cost over the next $N$ steps at each time step $k$. Solving this optimization problem yields the optimized control sequence. Only the first element of the sequence is applied to the process; the remaining elements are used when solving the optimal control problem at the next time step $k+1$, and so on.
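The receding-horizon idea described above can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration: the scalar plant in `step`, the quadratic stage cost, the small set of candidate controls, and the exhaustive search that stands in for a real optimizer.

```python
from itertools import product


def step(x, u, a=0.9, b=0.5):
    """Hypothetical scalar plant x_{k+1} = a*x_k + b*u_k (illustration only)."""
    return a * x + b * u


def horizon_cost(x, controls, target, q=1.0, r=0.1):
    """Sum of quadratic stage costs along the predicted trajectory."""
    cost = 0.0
    for u in controls:
        x = step(x, u)
        cost += q * (x - target) ** 2 + r * u ** 2
    return cost


def mpc_control(x, target, horizon=3, candidates=(-1.0, -0.5, 0.0, 0.5, 1.0)):
    """Enumerate every control sequence over the horizon and return the best one."""
    return min(product(candidates, repeat=horizon),
               key=lambda seq: horizon_cost(x, seq, target))


def run_mpc(x0, target, steps=20):
    """Re-optimize at every time step and apply only the first control."""
    x, history = x0, [x0]
    for _ in range(steps):
        best_sequence = mpc_control(x, target)
        x = step(x, best_sequence[0])  # the rest of the sequence is not applied
        history.append(x)
    return history


trajectory = run_mpc(x0=0.0, target=1.0)
```

Exhaustive enumeration is only practical for tiny problems; it is used here because it makes the "optimize, apply the first element, repeat" loop easy to follow.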

2.3. Problem Statement

Under the traditional MPC scheme, the performance of the MPC algorithm is highly dependent on the accuracy of the model. However, the model above neglects several other effects, such as air drag, the gyroscopic effect, and the hub force. To overcome the difficulty of the inaccurate model, a novel NN-based parallel control method is proposed in this article.

3. Parallel MPC Method

In this section, the PMPC algorithm is introduced to obtain the optimal controller for the tracking control problem of Q-UAVs with a time-varying dynamics model.

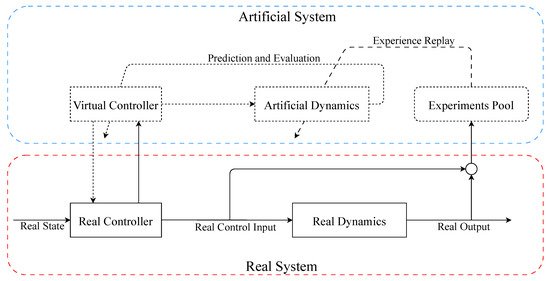

3.1. Algorithm Structure

In the PMPC algorithm, an artificial system that runs in parallel with the real system is introduced to expand the real problem. The artificial space between the artificial and real systems is introduced to solve the expanded problem. The structure of the parallel MPC method is shown in Figure 2.

Figure 2. The structure of the parallel MPC method.

As Figure 2 shows, the parallel MPC method can be divided into three steps. First, an artificial system is introduced to rebuild the dynamic model of the real system by learning from the data observed on the actual system. The artificial system maintains the accuracy of the model by periodically learning from the data in the experience pool, which ensures the accuracy of the predictive experiments throughout the run of the actual system. Second, based on the artificial system, predictive experiments are performed to analyze the behavior of the Q-UAV system and evaluate the performance of the control laws, and the optimal control law is updated based on the evaluated results. Third, the appropriate control is applied to the real system through interactive execution between the artificial and real systems.

3.2. Artificial System

Because it is difficult to obtain accurate dynamic models of Q-UAVs, an artificial system based on neural networks is introduced to rebuild the dynamic model of the real system.

The discrete-time dynamic model of the Q-UAVs can be generally written as [36]

$$x_{k+1} = F(x_k, u_k), \tag{3}$$

where $x_k$ and $u_k$ are the system state and the control input at time step $k$.

According to the universal approximation property of the neural network, the ideal neural network representation of the system (3) can be written as

where , and are the neural network input, the ideal weight matrix, and the reconstruction error, respectively. Furthermore, and are the activation functions.

The artificial system can be defined as:

which also can be written in the form of a neural network

where is the approximation weight matrix of . The training process of the artificial system is expressed as Algorithm 1.

| Algorithm 1 Neural Networks-Based Artificial System |

| Initialization: |

| 1: Collect the data set as in (7). |

| 2: Create a neural network as in (6). |

| 3: Select the modeling accuracy. |

| Training: |

| 4: Calculate the training error. |

| 5: Adjust the weights to minimize the training error. |

| 6: Update the weight matrix. |

| 7: Repeat steps 4–6 until the selected modeling accuracy is reached. |
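The training loop of Algorithm 1 can be sketched as follows. This is a minimal illustration under assumptions that the article does not state: a single hidden layer whose input weights `V` are fixed at random while only the output weights `W` are trained, batch gradient descent on half the mean squared error, and a hypothetical scalar `plant` used only to generate data. The names `train`, `features`, and `plant` are illustrative.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def plant(x, u):
    """Hypothetical scalar plant, used only to generate training data."""
    return 0.8 * x + 0.2 * math.tanh(u)


def features(z, V):
    """Hidden-layer outputs sigmoid(V z), followed by a constant bias feature."""
    return [sigmoid(sum(w * zi for w, zi in zip(row, z))) for row in V] + [1.0]


def train(data, hidden=8, lr=0.2, eps=1e-4, max_epochs=1000, seed=0):
    """Update the output weights until the mean squared error is at most eps."""
    rng = random.Random(seed)
    V = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden)]
    W = [0.0] * (hidden + 1)
    errors = []
    for _ in range(max_epochs):
        grad = [0.0] * len(W)
        mse = 0.0
        for z, target in data:
            h = features(z, V)
            e = sum(w * hi for w, hi in zip(W, h)) - target  # training error
            mse += e * e / len(data)
            for i, hi in enumerate(h):
                grad[i] += e * hi / len(data)  # gradient of 0.5 * mse
        errors.append(mse)
        if mse <= eps:  # selected modeling accuracy reached
            break
        W = [w - lr * g for w, g in zip(W, grad)]  # weight update
    return V, W, errors


# Data set of (input, next state) pairs on a grid of states and controls.
grid = [i / 4.0 - 1.0 for i in range(9)]
data = [((x, u), plant(x, u)) for x in grid for u in grid]
V, W, errors = train(data)
```

The list `errors` records the mean squared error per epoch, so its first and last entries show how far training has progressed before the accuracy test or the epoch limit stops the loop.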

Next, we will prove the convergence of the artificial system. Before proceeding, the following assumptions are necessary.

Assumption 1.

The reconstruction error satisfies the following condition:

Assumption 2.

The activation function and the reconstruction error are each bounded by a positive constant.

Theorem 1.

Proof.

Consider the artificial system (6), and select the Lyapunov function as

The difference of (13) can be written as

According to the Cauchy–Schwarz inequality, we have

Based on Assumption 2, we can obtain

Select to satisfy the condition as

Then, we have , which means the system identification error is asymptotically stable and the error matrices and both converge to zero, as . □

3.3. Predictive Experiments

After the actual system is reconstructed, predictive experiments are executed to obtain the optimal control of the artificial system (5). In the predictive experiments, the neural networks predict the state of the artificial system over the next N steps. Thus, the system (5) can be rewritten as

where denote the predictive state of the system (5).

Let the control sequence be the predictive control over the next N steps. Furthermore, the utility function is defined as

Here, the utility is evaluated against the target state at time step k, and Q and R are positive semi-definite matrices with suitable dimensions. Thus, the cost function can be defined as

Then, the optimal control sequence can be obtained as

However, to maintain the safety and stability of the flight process, the following constraints should be applied in the optimization.
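The utility and cost described above can be evaluated numerically as in the following minimal sketch. It assumes the common quadratic form, with the tracking error weighted by Q and the control effort weighted by R, and a plain sum over the horizon; the exact expressions of the article may differ, and the function names are illustrative.

```python
def quad_form(v, M):
    """Quadratic form v^T M v for a vector v and a square matrix M given as lists."""
    n = len(v)
    return sum(v[i] * M[i][j] * v[j] for i in range(n) for j in range(n))


def utility(state, target, control, Q, R):
    """Stage cost: weighted tracking error plus weighted control effort."""
    error = [s - t for s, t in zip(state, target)]
    return quad_form(error, Q) + quad_form(control, R)


def horizon_cost(states, targets, controls, Q, R):
    """Cost of one predicted trajectory: sum of the stage costs over the horizon."""
    return sum(utility(s, t, u, Q, R)
               for s, t, u in zip(states, targets, controls))


# Two predicted steps of a hypothetical system with two states and one input.
Q = [[1.0, 0.0], [0.0, 1.0]]
R = [[0.1]]
predicted_states = [[1.0, 0.0], [0.5, 0.0]]
target_states = [[0.0, 0.0], [0.0, 0.0]]
control_sequence = [[1.0], [0.0]]
total = horizon_cost(predicted_states, target_states, control_sequence, Q, R)
```

The optimal control sequence is then the candidate sequence with the smallest `horizon_cost` among those that satisfy the constraints.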

3.3.1. Input Constraint

In the parallel control scheme, the limits of the Q-UAV input are considered for the safety of the flight process. The constraint for the Q-UAV can be defined as

where the bounds denote the minimum and maximum of the input vector at time step k.

3.3.2. Velocity Constraint

In the algorithm proposed in this article, the velocity of the Q-UAV is limited, which can be defined as

where the bounds denote the minimum and maximum velocity of the Q-UAV along each axis.

3.3.3. Angular Velocity Constraint

In this article, the limits of the angular velocities are also considered in the proposed algorithm, which can be defined as follows.

Similarly to (24), the lower bounds denote the minimum angular velocities of the three Euler angles, and the upper bounds denote the corresponding maximum angular velocities.
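The three box constraints above can be checked for a candidate prediction as in the following minimal sketch. The 1 m/s velocity limit is the value used in the simulation section; the input and angular velocity limits, the dictionary layout, and the function names are hypothetical placeholders chosen for illustration.

```python
def within(values, low, high):
    """True if every component lies inside its [low, high] interval."""
    return all(lo <= v <= hi for v, lo, hi in zip(values, low, high))


def clip(values, low, high):
    """Project each component onto its [low, high] interval."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(values, low, high)]


# (lower bounds, upper bounds) per constrained quantity.
LIMITS = {
    "input": ([0.0] * 4, [10.0] * 4),            # hypothetical input limits
    "velocity": ([-1.0] * 3, [1.0] * 3),         # 1 m/s along each axis
    "angular_velocity": ([-0.5] * 3, [0.5] * 3)  # hypothetical rate limits
}


def feasible(control, velocity, angular_velocity, limits=LIMITS):
    """Check the input, velocity, and angular velocity constraints together."""
    return (within(control, *limits["input"])
            and within(velocity, *limits["velocity"])
            and within(angular_velocity, *limits["angular_velocity"]))


ok = feasible([2.0, 2.0, 2.0, 2.0], [0.3, 0.0, -0.9], [0.1, 0.0, 0.0])
too_fast = feasible([2.0, 2.0, 2.0, 2.0], [1.2, 0.0, 0.0], [0.1, 0.0, 0.0])
```

A predicted sequence that violates any bound can either be rejected, as `feasible` does, or projected back with `clip` when only the input limits are at stake.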

Then, the parallel predictive experiment method under the constraints can be expressed as Algorithm 2.

| Algorithm 2 Parallel Experiment Algorithm |

| Initialization: |

| 1: Select the positive semi-definite matrices Q and R to construct the cost function as in (21). |

| 2: Select the positive constant N as the control horizon. |

| Rolling Prediction: |

| 3: Collect data with the artificial system (6) based on current control law. |

| 4: Calculate the cost function by (21). |

| 5: Obtain the optimized control sequence according to the function (22) with the constraint (23). |

| 6: Implement the first element of the control sequence into the artificial system (6). |

3.4. Parallel Execution

In the above section, the predictive experiments are executed to obtain the optimal control of the artificial system (5), which aims to control the discrete-time dynamic model (3) of the Q-UAV. For the real Q-UAV system, however, it is difficult to rebuild the system dynamics over the whole time horizon with a single artificial system, because the system function is complex and unknown. Thus, to maintain the accuracy of the model, the dataset in (7) is updated over time, so that it always stores the latest data.

Combining the above sections, the Parallel MPC method can be expressed as Algorithm 3.

| Algorithm 3 Parallel MPC algorithm |

| Initialization: |

| 1: Select a positive integer as the maximum length of the experience pool. |

| 2: Select the positive semi-definite matrices Q and R. |

| 3: Select the positive integer N as the control horizon. |

| 4: Select the computation precision. |

| 5: Select the initial instant. |

| 6: Select the positive integer l as the length of the experience pool. |

| Parallel Predictive Control: |

| 7: if l is less than the maximum length of the experience pool then |

| 8: Store the last state, the control input, and the resulting system state, as a data pair, into the experience pool. |

| 9: else |

| 10: Remove the first data pair from the experience pool. |

| 11: Store the newest data pair in the experience pool. |

| 12: end if |

| 13: Train the artificial system with the experience pool until the computation precision is satisfied. |

| 14: Predict the system state over the control horizon N based on the artificial system. |

| 15: Calculate the cost value based on the function (21) for every predictive instant. |

| 16: Calculate the optimal control based on the cost value. |

| 17: Apply the first optimal control to the actual system. |
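The interplay of the experience pool, model update, and predictive control in Algorithm 3 can be sketched as follows. To keep the example short, a ridge least-squares linear model stands in for the neural network, which is a deliberate simplification and not the method of the article. The scalar `plant`, whose input gain drops at step 30, the candidate controls, and all function names are likewise assumptions made for illustration.

```python
from collections import deque
from itertools import product


def plant(x, u, k):
    """Hypothetical real system whose input gain changes at k = 30."""
    b = 0.5 if k < 30 else 0.25
    return 0.9 * x + b * u


def fit_linear(pool, ridge=1e-6):
    """Ridge least squares for x_next ~ a*x + b*u (stand-in for NN training)."""
    sxx = sxu = suu = sxy = suy = 0.0
    for x, u, y in pool:
        sxx += x * x
        sxu += x * u
        suu += u * u
        sxy += x * y
        suy += u * y
    sxx += ridge
    suu += ridge
    det = sxx * suu - sxu * sxu
    return (sxy * suu - suy * sxu) / det, (suy * sxx - sxy * sxu) / det


def best_control(x, target, a, b, horizon=3,
                 candidates=(-1.0, -0.5, 0.0, 0.5, 1.0)):
    """Predict with the current model and return the first optimal control."""
    def cost(seq):
        xp, c = x, 0.0
        for u in seq:
            xp = a * xp + b * u
            c += (xp - target) ** 2 + 0.01 * u ** 2
        return c
    return min(product(candidates, repeat=horizon), key=cost)[0]


def run_pmpc(steps=60, max_length=10, target=1.0):
    pool = deque(maxlen=max_length)  # the oldest pair is dropped when full
    x, a, b = 0.0, 1.0, 1.0          # arbitrary initial model guess
    gains = []
    for k in range(steps):
        u = best_control(x, target, a, b)  # predictive experiment
        x_next = plant(x, u, k)            # apply to the real system
        pool.append((x, u, x_next))        # store the newest data pair
        a, b = fit_linear(pool)            # retrain on the experience pool
        gains.append(b)
        x = x_next
    return gains


gains = run_pmpc()
```

Because the pool holds only the latest pairs, the identified gain in `gains` follows the plant from its value before the change to its value after it, which is the behavior that a model trained once and then frozen cannot reproduce.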

4. Simulation Results

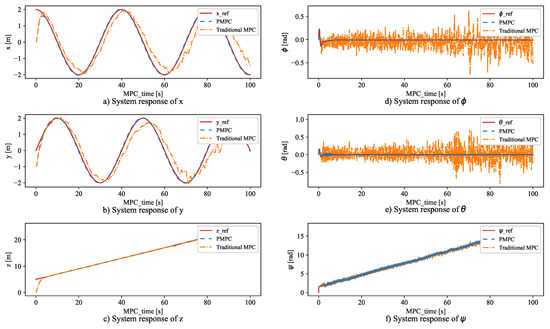

In this section, to demonstrate the effectiveness of the PMPC algorithm for UAVs, a comparison with traditional MPC is performed. The initial mass of the Q-UAV is chosen as kg; the moments of inertia of the Q-UAV around the three axes are chosen as kg·m², respectively; the moment of inertia of the propeller blades is chosen as kg·m²; the length from the rotors to the center of mass is chosen as m; and the acceleration of gravity is chosen as 9.8 m/s².

The parameters in this simulation are chosen as

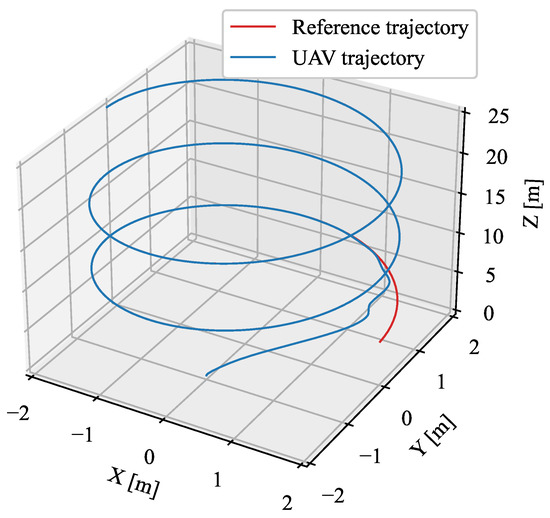

and the learning rate of the model NN is chosen as , and the activation functions are defined as sigmoid functions. To reflect the internal dynamic uncertainties of the UAV dynamic model, 5% parameter inaccuracies are assumed during the model prediction process. Some constraints in this simulation are shown in Figure 3.

Figure 3. The tracking histories of the UAV.

The target trajectory is chosen as

It is worth mentioning that the target Euler angles are defined to keep track of the target trajectory in this simulation. Furthermore, the constraints of the position velocity in this simulation are chosen as 1 m/s and the angular velocity is chosen as rad/s. The rotating speed of rotors is bounded in the range of r/s.

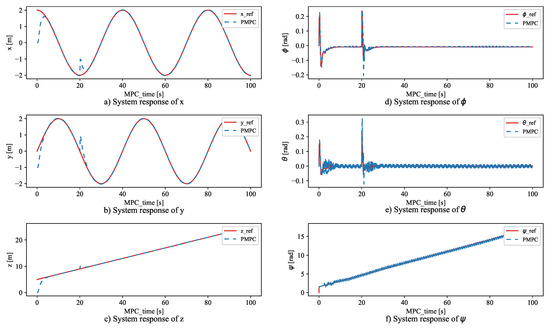

As shown in Figure 4, under the PMPC algorithm, the UAV tracks the target trajectory within 15 s. Under traditional MPC, however, it cannot follow the target trajectory when the dynamics model changes. This means that the PMPC algorithm performs better on the tracking control problem with a time-varying dynamics model. To demonstrate the robustness of the PMPC algorithm, external disturbances are added at 20 s, and the results are shown in Figure 5. Figure 5 shows that, under the PMPC algorithm, the system tracks the target trajectory again within several seconds.

Figure 4. System response of x, y, z, and the three Euler angles.

Figure 5. Tracking error of x, y, z, and the three Euler angles.

5. Conclusions

In this article, a novel NN-based PMPC optimal tracking control algorithm is proposed for UAVs with a time-varying dynamics model. Because a changing dynamics model is difficult for traditional MPC algorithms to handle, a neural network with experience replay is applied to approximate the dynamic model as the system runs. A parallel structure is then formed by the NN-based artificial system and the real system. Under this parallel structure, the MPC algorithm predicts the future states of the artificial system and obtains the optimal control for the tracking control problem. Finally, a simulation demonstrates the effectiveness of the PMPC algorithm.

Author Contributions

Software, J.Q.; Investigation, J.C.; Writing—original draft, C.H.; Writing—review & editing, Z.X.; Funding acquisition, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Sichuan Province (2023NSFSC0872).

Data Availability Statement

Not applicable.

Acknowledgments

This work was supported by the Natural Science Foundation of Sichuan Province (2023NSFSC0872).

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

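All symbols and their meanings are shown in the following table.

| Symbols | Meanings of the symbols |
| --- | --- |
| x | Position of the Q-UAV along the x axis of the inertial frame |
| y | Position of the Q-UAV along the y axis of the inertial frame |
| z | Position of the Q-UAV along the z axis of the inertial frame |
| g | Acceleration of gravity |
| L | Length from the rotors to the center of mass |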

References

1. Han, J.; Xu, Y.; Di, L.; Chen, Y. Low-cost multi-UAV technologies for contour mapping of nuclear radiation field. J. Intell. Robot. Syst. 2013, 70, 401–410.

2. Jiang, G.; Voyles, R.M.; Choi, J.J. Precision fully-actuated uav for visual and physical inspection of structures for nuclear decommissioning and search and rescue. In Proceedings of the 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 6–8 August 2018; pp. 1–7.

3. Casbeer, D.W.; Beard, R.W.; McLain, T.W.; Li, S.M.; Mehra, R.K. Forest fire monitoring with multiple small UAVs. In Proceedings of the American Control Conference, Portland, OR, USA, 8–10 June 2005; pp. 3530–3535.

4. Ollero, A.; Merino, L. Unmanned aerial vehicles as tools for forest-fire fighting. For. Ecol. Manag. 2006, 234, S263.

5. Jiménez-Jiménez, S.I.; Ojeda-Bustamante, W.; Marcial-Pablo, M.d.J.; Enciso, J. Digital terrain models generated with low-cost UAV photogrammetry: Methodology and accuracy. ISPRS Int. J. Geo-Inf. 2021, 10, 285.

6. Tariq, A.; Osama, S.M.; Gillani, A. Development of a low cost and light weight uav for photogrammetry and precision land mapping using aerial imagery. In Proceedings of the 2016 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 19–21 December 2016; pp. 360–364.

7. Fang, Z.; Zhi, Z.; Jun, L.; Jian, W. Feedback linearization and continuous sliding mode control for a quadrotor UAV. In Proceedings of the 2008 27th Chinese Control Conference, Kunming, China, 16–18 July 2008; pp. 349–353.

8. Choi, I.; Bang, H. Quadrotor-tracking controller design using adaptive dynamic feedback-linearization method. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2014, 228, 2329–2342.

9. Gao, H.; Liu, C.; Guo, D.; Liu, J. Fuzzy adaptive PD control for quadrotor helicopter. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; pp. 281–286.

10. Yacef, F.; Bouhali, O.; Hamerlain, M. Adaptive fuzzy backstepping control for trajectory tracking of unmanned aerial quadrotor. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 920–927.

11. Xiong, J.J.; Zhang, G. Discrete-time sliding mode control for a quadrotor UAV. Optik 2016, 127, 3718–3722.

12. Sun, L.; Zheng, Z. Finite-time sliding mode trajectory tracking control of uncertain mechanical systems. Asian J. Control 2017, 19, 399–404.

13. Salih, A.L.; Moghavvemi, M.; Mohamed, H.A.; Gaeid, K.S. Flight PID controller design for a UAV quadrotor. Sci. Res. Essays 2010, 5, 3660–3667.

14. Khebbache, H.; Tadjine, M. Robust fuzzy backstepping sliding mode controller for a quadrotor unmanned aerial vehicle. J. Control. Eng. Appl. Inform. 2013, 15, 3–11.

15. Zheng, E.H.; Xiong, J.J.; Luo, J.L. Second order sliding mode control for a quadrotor UAV. ISA Trans. 2014, 53, 1350–1356.

16. Incremona, G.P.; Ferrara, A.; Magni, L. MPC for robot manipulators with integral sliding modes generation. IEEE/ASME Trans. Mechatronics 2017, 22, 1299–1307.

17. Sun, Z.; Xia, Y.; Dai, L.; Liu, K.; Ma, D. Disturbance rejection MPC for tracking of wheeled mobile robot. IEEE/ASME Trans. Mechatronics 2017, 22, 2576–2587.

18. Kong, J.; Pfeiffer, M.; Schildbach, G.; Borrelli, F. Kinematic and dynamic vehicle models for autonomous driving control design. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 28 June–1 July 2015; pp. 1094–1099.

19. Qian, X.; Navarro, I.; de La Fortelle, A.; Moutarde, F. Motion planning for urban autonomous driving using Bézier curves and MPC. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 826–833.

20. Borhan, H.; Vahidi, A.; Phillips, A.M.; Kuang, M.L.; Kolmanovsky, I.V.; Di Cairano, S. MPC-based energy management of a power-split hybrid electric vehicle. IEEE Trans. Control. Syst. Technol. 2011, 20, 593–603.

21. Di Cairano, S.; Bernardini, D.; Bemporad, A.; Kolmanovsky, I.V. Stochastic MPC with learning for driver-predictive vehicle control and its application to HEV energy management. IEEE Trans. Control. Syst. Technol. 2013, 22, 1018–1031.

22. Lindqvist, B.; Mansouri, S.S.; Agha-mohammadi, A.a.; Nikolakopoulos, G. Nonlinear MPC for collision avoidance and control of UAVs with dynamic obstacles. IEEE Robot. Autom. Lett. 2020, 5, 6001–6008.

23. Liu, Y.; Van Schijndel, J.; Longo, S.; Kerrigan, E.C. UAV energy extraction with incomplete atmospheric data using MPC. IEEE Trans. Aerosp. Electron. Syst. 2015, 51, 1203–1215.

24. Fresk, E.; Nikolakopoulos, G. A generalized Frame Adaptive MPC for the low-level control of UAVs. In Proceedings of the 2018 European Control Conference (ECC), Limassol, Cyprus, 12–15 June 2018; pp. 1815–1820.

25. Song, S.; Zhu, M.; Dai, X.; Gong, D. Model-Free Optimal Tracking Control of Nonlinear Input-Affine Discrete-Time Systems via an Iterative Deterministic Q-Learning Algorithm. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.

26. Bai, W.; Zhou, Q.; Li, T.; Li, H. Adaptive reinforcement learning neural network control for uncertain nonlinear system with input saturation. IEEE Trans. Cybern. 2019, 50, 3433–3443.

27. Liu, Y.J.; Zeng, Q.; Tong, S.; Chen, C.P.; Liu, L. Adaptive neural network control for active suspension systems with time-varying vertical displacement and speed constraints. IEEE Trans. Ind. Electron. 2019, 66, 9458–9466.

28. Ni, J.; Shi, P. Global predefined time and accuracy adaptive neural network control for uncertain strict-feedback systems with output constraint and dead zone. IEEE Trans. Syst. Man Cybern. Syst. 2020, 51, 7903–7918.

29. Jiang, B.; Li, B.; Zhou, W.; Lo, L.Y.; Chen, C.K.; Wen, C.Y. Neural Network Based Model Predictive Control for a Quadrotor UAV. Aerospace 2022, 9, 460.

30. Bauersfeld, L.; Kaufmann, E.; Foehn, P.; Sun, S.; Scaramuzza, D. Neurobem: Hybrid aerodynamic quadrotor model. arXiv 2021, arXiv:2106.08015.

31. Bouffard, P.; Aswani, A.; Tomlin, C. Learning-based model predictive control on a quadrotor: Onboard implementation and experimental results. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 279–284.

32. Saviolo, A.; Frey, J.; Rathod, A.; Diehl, M.; Loianno, G. Active Learning of Discrete-Time Dynamics for Uncertainty-Aware Model Predictive Control. arXiv 2022, arXiv:2210.12583.

33. Belkhale, S.; Li, R.; Kahn, G.; McAllister, R.; Calandra, R.; Levine, S. Model-Based Meta-Reinforcement Learning for Flight With Suspended Payloads. IEEE Robot. Autom. Lett. 2021, 6, 1471–1478.

34. O’Connell, M.; Shi, G.; Shi, X.; Azizzadenesheli, K.; Anandkumar, A.; Yue, Y.; Chung, S.J. Neural-fly enables rapid learning for agile flight in strong winds. Sci. Robot. 2022, 7, eabm6597.

35. Zhou, Y.; Tian, Z.; Lin, H. UAV based adaptive trajectory tracking control with input saturation and unknown time-varying disturbances. IET Intell. Transp. Syst. 2023, 17, 780–793.

36. Lu, J.; Han, L.; Wei, Q.; Wang, X.; Dai, X.; Wang, F.Y. Event-Triggered Deep Reinforcement Learning Using Parallel Control: A Case Study in Autonomous Driving. IEEE Trans. Intell. Veh. 2023, 8, 2821–2831.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).