Abstract

The Aquila Optimizer (AO) algorithm is a meta-heuristic algorithm with excellent performance, although it may become insufficient or tend to fall into local optima as the complexity of real-world optimization problems increases. To overcome the shortcomings of AO, we propose an improved Aquila Optimizer algorithm (IAO) that improves the original AO algorithm via three strategies. First, to improve the optimization process, we introduce a search control factor (SCF) whose absolute value decreases as the iterations progress, improving the hunting strategies of AO. Second, the random opposition-based learning (ROBL) strategy is added to enhance the algorithm's exploitation ability. Third, the Gaussian mutation (GM) strategy is applied to improve the exploration phase. To evaluate its optimization performance, IAO was tested on 23 benchmark functions and the CEC2019 test functions. In addition, four real-world engineering problems were used. The experimental results, in comparison with AO and other well-known algorithms, validate the superiority of the proposed IAO.

1. Introduction

The optimization process aims to find the optimal decision variables for a system from all possible values by minimizing its cost function []. Optimization problems are essential in many scientific fields, such as image processing [], industrial manufacturing [], wireless sensor networks [], scheduling problems [], path planning [], feature selection [], and data clustering []. Real-world engineering problems are typically nonlinear, computationally expensive, and have wide solution spaces. Because they rely on gradient information, traditional optimization methods suffer from poor flexibility, high computational complexity, and entrapment in local optima. To overcome these shortcomings, many new optimization methods have been proposed, especially meta-heuristic (MH) algorithms. MH algorithms perform well on optimization problems, offering high flexibility, no need for gradient information, and a strong ability to escape local optima [].

In general, MH algorithms are classified into four main categories: swarm intelligence (SI) algorithms, evolutionary algorithms (EA), physics-based (PB) algorithms, and human-based (HB) algorithms [], as shown in Table 1. Despite their different sources of inspiration, all four categories share two important search phases, namely, exploration (diversification) and exploitation (intensification) [,]. The exploration phase searches the space widely and efficiently and reflects the ability to escape local optima, while the exploitation phase reflects the ability to improve solution quality locally by searching around the solutions already obtained. A good MH algorithm must therefore balance these two phases to achieve good performance.

Table 1.

Categories of MH algorithms.

Generally, the optimization process of MH algorithms begins with a set of randomly generated candidate solutions. These solutions are updated according to an inherent set of optimization rules and evaluated iteratively by a specific fitness function; this is the essence of an optimization algorithm. The Aquila Optimizer (AO) [] was proposed in 2021 as an SI algorithm. It simulates four Aquila hunting strategies: high soar with a vertical stoop, contour flight with a short glide attack, low flight with a slow descent attack, and walk and grab prey. The first two strategies reflect the exploration ability, while the latter two reflect the exploitation ability. AO has the advantages of fast convergence, high search efficiency, and a simple structure. However, it may become insufficient as the complexity of real-world engineering problems increases. The performance of AO depends on the diversity of the population and on its update rules. Moreover, the algorithm has inadequate exploitation and exploration capabilities, a weak ability to jump out of local optima, and poor convergence accuracy.
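The generic loop described above can be sketched in Python as follows. This is a minimal illustration of a population-based meta-heuristic, not AO or IAO themselves: the `update` callback stands in for an algorithm's update rules, `toy_update` is a hypothetical rule used only for the demonstration, and the greedy replacement (an agent keeps its new position only if it improves) and clipping to the bounds are assumptions made for the sketch.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]
# update(x_i, best, t, T, rng) -> proposed new position for agent i
UpdateRule = Callable[[Vector, Vector, int, int, random.Random], Vector]


def population_search(fitness: Callable[[Vector], float], update: UpdateRule,
                      lb: float, ub: float, dim: int, n_agents: int = 30,
                      max_iter: int = 500, seed: int = 0) -> Tuple[Vector, float]:
    """Minimal population-based meta-heuristic skeleton (minimization)."""
    rng = random.Random(seed)
    # Randomly generated initial candidate solutions inside [lb, ub]^dim.
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    fit = [fitness(x) for x in pop]
    i_best = min(range(n_agents), key=fit.__getitem__)
    best, best_fit = pop[i_best][:], fit[i_best]
    for t in range(1, max_iter + 1):
        for i in range(n_agents):
            # Apply the algorithm-specific update rule, then clip to the bounds.
            cand = [min(max(v, lb), ub) for v in update(pop[i], best, t, max_iter, rng)]
            f = fitness(cand)
            # Greedy selection: keep the candidate only if it is better.
            if f < fit[i]:
                pop[i], fit[i] = cand, f
                if f < best_fit:
                    best, best_fit = cand[:], f
    return best, best_fit


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


def toy_update(x: Vector, best: Vector, t: int, T: int, rng: random.Random) -> Vector:
    # Hypothetical rule: random step around the best position, shrinking over time.
    return [b + rng.gauss(0.0, 1.0) * (1 - t / T) * (xi - b) for xi, b in zip(x, best)]


best, best_fit = population_search(sphere, toy_update, -10.0, 10.0, dim=5, max_iter=100)
```

Replacing `toy_update` with AO's hunting strategies, and adding IAO's ROBL and GM steps, gives the structure described in Sections 2 and 3.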

In [], Ewees, A. et al. improved the AO algorithm by using the search strategy of the WOA to boost the search process of AO. They mainly used the WOA operator to replace the exploitation phase of AO. The proposed method addressed the limitations of the exploitation phase and increased the diversity of solutions in the search stage. Their work aimed to avoid the weakness of relying on a single search strategy; comparative trials showed that the proposed approach achieved promising results compared with other methods.

Zhang, Y. et al. [] proposed a new hybridization algorithm of AOA with AO, named AOAAO. This new algorithm mainly utilizes the operators of AOA to replace the exploitation strategies of AO. In addition, inspired by the HHO algorithm, an energy parameter, E, was applied to balance the exploration and exploitation procedures of AOAAO, with a piecewise linear map used to decrease the randomness of the energy parameter. AOAAO is more efficient than AO in optimization, with faster convergence speed and higher convergence accuracy. Experimental results have confirmed that the suggested strategy outperforms the alternative algorithms.

Wang, S. et al. [] proposed the Improved Hybrid Aquila Optimizer and Harris Hawks Algorithm (IHAOHHO) in 2021. This algorithm combines the exploration strategies of AO with the exploitation strategies of HHO. In addition, a nonlinear escaping energy parameter is introduced to balance the exploration and exploitation phases. Finally, the random opposition-based learning (ROBL) strategy is used to enhance performance. IHAOHHO has the advantages of high search accuracy, a strong ability to escape local optima, and good stability.

The hybrid AO variants mentioned above all improve on the performance of the original AO. However, they remain insufficient for certain complex optimization problems, especially multimodal and high-dimensional ones. To enhance the optimization capability in both low and high dimensions, we propose an improved AO algorithm, IAO, which uses an SCF and mutation strategies. AO has two main drawbacks:

- The optimization rules of AO limit its search capability. The second strategy of AO limits the exploration capability, because its Levy flight function tends to lead the search into local optima, while the third strategy limits the exploitation capability through its fixed parameter , resulting in low convergence accuracy.

- The population diversity of AO decreases as the number of iterations increases []. As the candidate solutions are updated according to the specific optimization rules of AO, their randomness decreases during the search process, resulting in poor population diversity.

Therefore, to improve the optimization rules of AO, we introduce a search control factor (SCF) that improves these two search methods and allows the Aquila to search widely in the search space and around the best result. The random opposition-based learning (ROBL) and Gaussian mutation (GM) strategies are applied to increase the population diversity of the algorithm: ROBL further enhances the exploitation capability, while GM further improves the exploration phase. The 23 standard benchmark functions were used to rigorously evaluate the robustness and effectiveness of IAO, which is compared with AO, IHAOHHO, and several well-known MH algorithms, including PSO, SCA, WOA, GWO, HBA, SMA, and SNS. Finally, the CEC2019 test functions and four real-world engineering design problems were used to further evaluate the performance of IAO. The experimental results show that the proposed IAO achieves the best overall performance among these algorithms.

The main contribution of this paper is to improve on the performance of the AO algorithm. To improve the exploration and exploitation phases, we define an SCF to change the second and third predation strategies of the Aquila in order to fully search around the solution space and the optimal Aquila, respectively. We apply the ROBL strategy to further enhance the ability to exploit the search space and utilize the GM strategy to further enhance the ability to escape from local optimal traps. The remainder of this paper is organized as follows: Section 2 introduces the AO algorithm; Section 3 illustrates the details of the proposed algorithm; and comparative trials on benchmark functions and real-world engineering experiments are provided in Section 4. Finally, Section 5 concludes the study.

2. Aquila Optimizer (AO)

The AO algorithm is a typical SI algorithm proposed by Abualigah, L. et al. [] in 2021. It models four predator–prey behaviors of the Aquila through four strategies: selecting the search space by high soar with a vertical stoop; exploring within a divergent search space by contour flight with a short glide attack; exploiting within a convergent search space via low flight with a slow descent attack; and swooping, walking, and grabbing prey. These strategies are briefly described below.
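Before the individual strategies, it helps to see how AO decides which one to apply. In the original AO description, an agent uses Strategy 1 or 2 while t ≤ (2/3)·T and Strategy 3 or 4 afterwards, choosing within each pair with probability 0.5. The sketch below encodes that rule; the function name is ours, and the 2/3 threshold and 0.5 probability come from the original AO paper rather than from this section.

```python
import random


def select_strategy(t: int, max_iter: int, rng: random.Random) -> int:
    """Return the AO hunting strategy (1-4) used to update one agent at iteration t."""
    coin = rng.random() <= 0.5
    if t <= (2.0 / 3.0) * max_iter:
        # Exploration stage: Strategy 1 (expanded) or Strategy 2 (narrowed).
        return 1 if coin else 2
    # Exploitation stage: Strategy 3 (expanded) or Strategy 4 (narrowed).
    return 3 if coin else 4


rng = random.Random(42)
early = [select_strategy(50, 500, rng) for _ in range(10)]
late = [select_strategy(450, 500, rng) for _ in range(10)]
```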

- Strategy 1: Expanded exploration ()

In this strategy, the Aquila searches the solution space through high soar and uses a vertical stoop to determine the hunting area; the mathematical model is defined as

where represents the best position in the current iteration, represents the mean position of the current population, given by Equation (2), is a random number between 0 and 1, Dim is the dimension of the solution space, N is the population size, and t and T are the current iteration and the maximum number of iterations, respectively.
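Equations (1) and (2) do not survive in this text. The sketch below assumes the form given in the original AO paper, X1(t+1) = Xbest(t)·(1 − t/T) + (XM(t) − Xbest(t)·rand), with XM(t) the per-dimension mean of the N positions. Function names are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def mean_position(pop: List[Vector]) -> Vector:
    """X_M(t): average of the N Aquila positions, computed per dimension."""
    n = len(pop)
    return [sum(column) / n for column in zip(*pop)]


def expanded_exploration(best: Vector, pop: List[Vector], t: int, max_iter: int,
                         rng: random.Random) -> Vector:
    """Strategy 1 (high soar with vertical stoop), assumed original-AO form."""
    xm = mean_position(pop)
    r = rng.random()  # a single rand in [0, 1) for this update
    return [b * (1 - t / max_iter) + (m - b * r) for b, m in zip(best, xm)]
```

The factor (1 − t/T) shrinks the weight of the best position as the iterations progress, so this move widens the search early in the run.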

- Strategy 2: Narrowed exploration ()

In the second strategy, the Aquila uses spiral flight above the prey and then attacks through a short glide. The model formula is described as

where is a random number within (0, 1), is a random solution selected from the Aquila population, D is the dimension number, and is the Levy flight function, which is defined as

where s is a constant equal to 0.01, is another constant equal to 1.5, u and are random numbers within (0, 1), and y and x describe the spiral flight trajectory in the search, which are calculated as follows:

where is an integer from 1 to the dimension length (D), and is an integer between 1 and 20 indicating the number of search cycles.
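A sketch of Strategy 2, assuming the original AO form X2(t+1) = Xbest(t)·Levy(D) + XR(t) + (y − x)·rand, since Equations (3) to (5) are not reproduced in this text. Further assumptions: u and v in the Levy step are drawn from a standard normal distribution, as in Mantegna's algorithm and common AO implementations (the text above describes them only as random numbers); the spiral uses r = r1 + U·D1, θ = −ω·D1 + θ1, x = r·sin θ, y = r·cos θ with U = 0.00565, ω = 0.005 and θ1 = 3π/2 from the original AO paper; and r1 = 10 is an arbitrary choice within [1, 20].

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def levy(dim: int, rng: random.Random, beta: float = 1.5, s: float = 0.01) -> Vector:
    """Levy flight step s * u * sigma / |v|^(1/beta), one value per dimension."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    step = []
    for _ in range(dim):
        u, v = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)  # assumed N(0, 1) draws
        step.append(s * u * sigma / max(abs(v), 1e-12) ** (1 / beta))
    return step


def spiral(dim: int, r1: float = 10.0, U: float = 0.00565,
           omega: float = 0.005) -> Tuple[Vector, Vector]:
    """Spiral coordinates (x, y) for D1 = 1..dim."""
    theta1 = 3 * math.pi / 2
    xs, ys = [], []
    for d1 in range(1, dim + 1):
        r = r1 + U * d1
        theta = -omega * d1 + theta1
        xs.append(r * math.sin(theta))
        ys.append(r * math.cos(theta))
    return xs, ys


def narrowed_exploration(best: Vector, x_rand: Vector, rng: random.Random) -> Vector:
    """Strategy 2: Levy-scaled best position plus a spiral move around a random agent."""
    dim = len(best)
    step = levy(dim, rng)
    xs, ys = spiral(dim)
    r = rng.random()
    return [b * lv + xr + (y - x) * r
            for b, lv, xr, x, y in zip(best, step, x_rand, xs, ys)]
```

The heavy-tailed Levy step occasionally produces very large moves, which is the behavior Section 3 blames for the insufficient search of this strategy.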

- Strategy 3: Expanded exploitation ()

In this strategy, once the hunting area has been selected, the Aquila descends vertically, searches the solution space through low flight, and then attacks the prey. The mathematical expression is

where and are constants equal to 0.1 that are used to adjust exploitation, and are random numbers between 0 and 1, and and are the upper and lower bounds of the solution space, respectively.
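Strategy 3 can be sketched in the same way, assuming the original AO form X3(t+1) = (Xbest(t) − XM(t))·α − rand + ((UB − LB)·rand + LB)·δ with α = δ = 0.1 and two independent random numbers. The fixed α and δ are the constant parameters that the drawbacks listed in Section 1 single out as limiting exploitation.

```python
import random
from typing import List

Vector = List[float]


def expanded_exploitation(best: Vector, xm: Vector, lb: Vector, ub: Vector,
                          rng: random.Random, alpha: float = 0.1,
                          delta: float = 0.1) -> Vector:
    """Strategy 3 (low flight with slow descent attack), assumed original-AO form."""
    r1, r2 = rng.random(), rng.random()  # two independent rand values in [0, 1)
    return [(b - m) * alpha - r1 + ((u - l) * r2 + l) * delta
            for b, m, l, u in zip(best, xm, lb, ub)]
```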

- Strategy 4: Narrowed exploitation ()

In the final strategy, the Aquila chases the prey along its stochastic escape route and attacks it on the ground. The mathematical expression of this behavior is

where is a quality function parameter used to tune the search strategies, rand is a random value within (0, 1), is a random number between −1 and 1 describing the Aquila's motion while tracking the escaping prey, and represents the flight slope when hunting prey, which decreases from 2 to 0.
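For Strategy 4, a sketch assuming the original AO form X4(t+1) = QF·Xbest(t) − (G1·X(t)·rand) − G2·Levy(D) + rand·G1, with QF(t) = t^((2·rand − 1)/(1 − T)²), G1 = 2·rand − 1 and G2 = 2·(1 − t/T). To keep the block self-contained, the Levy step is passed in as a vector, and drawing fresh random numbers per dimension is our choice. The iteration counter must start at t = 1 (QF is undefined at t = 0 for negative exponents), and T must exceed 1.

```python
import random
from typing import List

Vector = List[float]


def narrowed_exploitation(x: Vector, best: Vector, levy_step: Vector, t: int,
                          max_iter: int, rng: random.Random) -> Vector:
    """Strategy 4 (walk and grab prey), assumed original-AO form; needs t >= 1, T > 1."""
    qf = t ** ((2 * rng.random() - 1) / (1 - max_iter) ** 2)  # quality function
    g1 = 2 * rng.random() - 1          # prey-tracking motion, in [-1, 1)
    g2 = 2 * (1 - t / max_iter)        # flight slope, decreasing from 2 to 0
    return [qf * b - g1 * xi * rng.random() - g2 * lv + rng.random() * g1
            for xi, b, lv in zip(x, best, levy_step)]
```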

3. The Proposed Improved Aquila Optimizer (IAO) Algorithm

Among the four strategies of the original AO algorithm, the Levy flight function in the second strategy makes the Aquila's search of the solution space insufficient and prone to local optima. Meanwhile, the third strategy has a weak local exploitation ability because the parameter is constant. To reduce the Aquila's search step as the iterations proceed, we define an SCF whose absolute value decreases with the number of iterations and use it to improve the second and third strategies of the original AO. Furthermore, the ROBL and GM strategies are added to further improve the exploitation and exploration phases, respectively. IAO is described in more detail below.

3.1. Search Control Factor (SCF)

The search control factor controls the basic search step size and direction of the Aquila. As the iterations progress, the Aquila's movement gradually decreases to increase search accuracy. Therefore, the absolute value of SCF decreases with the iteration number t, as described by

where is a constant >0 (default = 2). The term is used to control the Aquila’s flight speed through the number of iterations, r is a random number between 0 and 1, and is the direction control factor described by Equation (9), which is used to control the Aquila’s flight direction.
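The SCF definition (Equations (8) and (9)) does not survive in this text, so the following is only a hypothetical illustration of the behavior described above: a factor whose magnitude is bounded by a schedule that shrinks with t, scaled by a random r and a ±1 direction. The linear schedule c·(1 − t/T) and the fair-coin direction rule are placeholders chosen for the sketch, not the paper's definitions; only the default c = 2 comes from the text.

```python
import random


def scf_sketch(t: int, max_iter: int, rng: random.Random, c: float = 2.0) -> float:
    """Hypothetical search control factor: c * (1 - t/T) * r * direction.

    The decay term and the direction rule are placeholders, NOT Equations (8)-(9).
    """
    r = rng.random()                                 # r in [0, 1)
    direction = 1.0 if rng.random() < 0.5 else -1.0  # placeholder direction factor
    return c * (1 - t / max_iter) * r * direction


rng = random.Random(7)
values = [scf_sketch(t, 500, rng) for t in range(1, 501)]
```

Any schedule with the same two properties (a magnitude envelope that shrinks toward zero and a sign that can flip) would illustrate the idea equally well; the paper's own choice is the one given by Equations (8) and (9).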

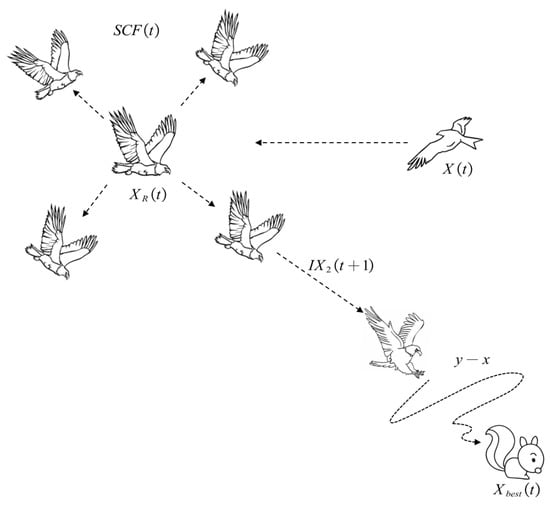

3.1.1. Improved Narrowed Exploration (): Full Search with Short Glide Attack

In the second improved strategy, the Aquila thoroughly searches the target solution space in different directions and at different speeds, and then attacks the prey. This improved strategy, called full search with short glide attack, is shown in Figure 1 and expressed mathematically in Equation (10):

where is the search control factor introduced above, is the solution at iteration t + 1, is a randomly selected solution from the Aquila population, is a random number within (0, 1), is the best solution obtained up to iteration t, and y and x are the same as in the original AO algorithm, describing the spiral flight in the search as calculated in Equation (5).

Figure 1.

Full search with short glide attack.

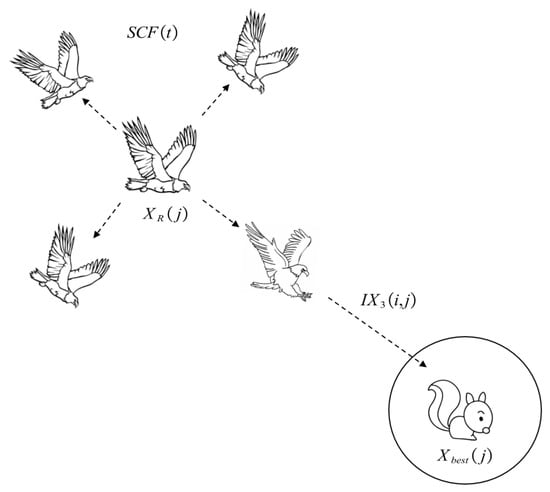

3.1.2. Improved Expanded Exploitation (): Search around Prey and Attack

In the third improved method, the Aquila searches thoroughly around the prey and then attacks it. Figure 2 shows this strategy, called Aquila search around prey and attack. This method aims to improve the exploitation capability, and is mathematically expressed by Equation (11):

where is the search control factor, and rand are random numbers between 0 and 1, is the solution at iteration t + 1, represents the jth dimension of the best position in the current iteration, j is a random integer between 1 and the dimension length, is the jth dimension of a randomly selected Aquila from the population, and and represent the jth dimension of the upper and lower bounds of the solution space, respectively.

Figure 2.

Aquila search around prey and attack.

3.2. Random Opposition-Based Learning (ROBL)

On the basis of opposition-based learning (OBL) [], Long, W. [] developed a powerful optimization tool called random opposition-based learning (ROBL). The main idea of ROBL is to evaluate a solution and its corresponding random opposite solution at the same time and keep the better of the two as the candidate solution. ROBL helps improve the exploitation ability, and is defined by

where is a random number between 0 and 1, is the opposite solution, and and are the upper and lower bounds of the solution space, respectively.
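Equation (12) is not reproduced in this text. The sketch below assumes the form commonly given for ROBL, x̃j = lbj + ubj − rand·xj, draws rand independently per dimension (our choice), clips the opposite point to the bounds, and keeps whichever of the two solutions has the lower fitness, which is how the "keep the better one" selection described above is implemented here.

```python
import random
from typing import Callable, List

Vector = List[float]


def random_opposite(x: Vector, lb: Vector, ub: Vector, rng: random.Random) -> Vector:
    """Random opposite point lb_j + ub_j - rand * x_j, clipped to [lb_j, ub_j]."""
    return [min(max(l + u - rng.random() * xj, l), u) for xj, l, u in zip(x, lb, ub)]


def robl_step(x: Vector, fitness: Callable[[Vector], float], lb: Vector, ub: Vector,
              rng: random.Random) -> Vector:
    """Evaluate x and its random opposite; return the better one (minimization)."""
    x_opp = random_opposite(x, lb, ub, rng)
    return x_opp if fitness(x_opp) < fitness(x) else x


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)
```

Clipping matters for asymmetric bounds: with lb = 2, ub = 5 and x = 2, the unclipped opposite point can reach 7.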

3.3. Gaussian Mutation (GM)

Gaussian mutation (GM) is another commonly used optimization tool. Here, GM is used to help IAO avoid falling into local optima, thereby strengthening the exploration phase; it is expressed mathematically as

where and are the current and mutated positions of a search agent, respectively, and denotes a random number following a Gaussian distribution with a mean of 0 and a standard deviation of 1, whose probability density function is
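Equations (13) and (14) are not reproduced in this text. The sketch below assumes the multiplicative form often used for Gaussian mutation in meta-heuristics, x' = x·(1 + N(0, 1)), applied per dimension; clipping to the bounds and greedy acceptance (keep x' only if it is better) are also assumptions. The standard normal density f(z) = exp(−z²/2)/√(2π) is included for reference.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def gaussian_pdf(z: float) -> float:
    """Density of the standard normal distribution (mean 0, standard deviation 1)."""
    return math.exp(-z * z / 2) / math.sqrt(2 * math.pi)


def gaussian_mutation(x: Vector, fitness: Callable[[Vector], float], lb: Vector,
                      ub: Vector, rng: random.Random) -> Vector:
    """Mutate x as x_j * (1 + N(0, 1)), clip to bounds, keep it only if it improves."""
    mutated = [min(max(xj * (1 + rng.gauss(0.0, 1.0)), l), u)
               for xj, l, u in zip(x, lb, ub)]
    return mutated if fitness(mutated) < fitness(x) else x
```

With the multiplicative form, a coordinate equal to zero never moves; an additive variant x' = x + σ·N(0, 1) avoids this and is another common choice.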

3.4. Computational Complexity of IAO

In this section, we discuss the computational complexity of the proposed IAO algorithm, which comprises three phases: initialization, fitness evaluation, and updating of the Aquila positions. The computational complexity of the initialization phase is O(N), where N is the population size of Aquila. With T as the total number of iterations, the complexity of the fitness evaluation phase is O(T × N). With Dim as the dimension of the problem, the complexity of updating the Aquila positions is O(T × N × Dim + T × N × 2). Consequently, the total computational complexity of IAO is O(N + T × N + T × N × Dim + 2 × T × N) = O(N × (T × (Dim + 3) + 1)).
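As a worked check of these orders: once T, N, Dim ≥ 1, every term is bounded by a constant multiple of T × N × Dim, so the overall cost is dominated by the position updates:

$$
O(N) + O(TN) + O(TN\,\mathrm{Dim} + 2TN) = O(TN\,\mathrm{Dim}).
$$

With the settings used in Section 4 (N = 30 agents, T = 500 iterations) and Dim = 10, the dominant term corresponds to 30 × 500 × 10 = 150,000 coordinate updates per run.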

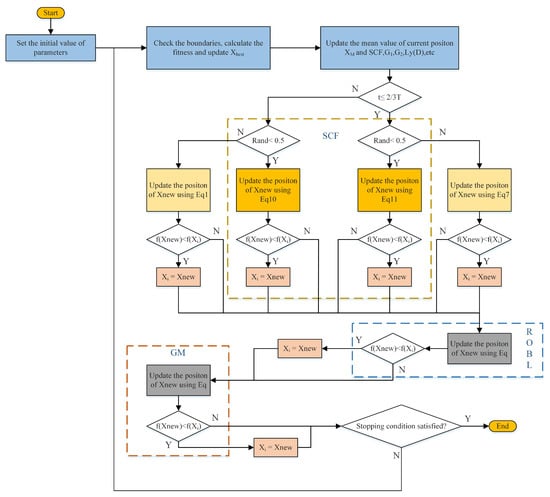

3.5. Pseudo-Code of IAO

In summary, IAO uses the SCF to improve the second and third hunting methods of the original AO. In addition, ROBL is applied to further enhance the exploitation capability, while GM is used to further improve the exploration phase. Together, these strategies aim to increase the solution diversity of the algorithm. The pseudocode and a flowchart of IAO are shown in Algorithm 1 and Figure 3, respectively.

Algorithm 1 Improved Aquila Optimizer

Figure 3.

Flowchart of IAO algorithm.

4. Experimental Results and Discussion

In this section, we describe the 23 benchmark test functions, the CEC2019 benchmark functions, and the four real-world engineering design problems used to evaluate the performance of IAO. To ensure a fair comparison, all algorithms were run with the same number of iterations (500) and search agents (30). All experiments were conducted in MATLAB 2019 on a PC with an Intel (R) Core (TM) i7-9750H CPU @ 2.60 GHz and 16 GB of RAM.

4.1. Results of Twenty-Three Benchmark Test Functions

In this section, we describe the 23 benchmark functions used to analyze IAO's ability to explore the solution space and exploit the global solutions. Three types of benchmark functions were used: unimodal (F1–F7, Table 2), multimodal (F8–F13, Table 3), and fixed-dimension multimodal functions (F14–F23, Table 4). The results on the unimodal and multimodal functions, which evaluate the exploitation ability and exploration tendency of IAO, respectively, are shown in Table 5 for ten dimensions. These two sets were also run in 50 and 100 dimensions; the results are shown in Table 6 and Table 7. Finally, the results on the fixed-dimension multimodal functions, which indicate the exploration ability of IAO in low dimensions, are shown in Table 8. The results are compared with nine well-known algorithms: AO, PSO, SCA, WOA, GWO, HBA, SMA, IHAOHHO, and SNS. The main control parameters of the compared algorithms are shown in Table 9. All algorithms were evaluated using the mean and standard deviation (STD) over 30 independent runs and Friedman's mean rank test. Three rows are reported at the bottom of each results table to summarize the statistical analysis. The first row gives (W|L|T), the number of functions on which IAO performed better than (win), worse than (loss), or indistinguishably from (tie) the compared algorithm. The second row shows the Friedman mean rank values, and the third row gives the final rank of each algorithm.
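The summary rows can be computed along the lines sketched below. This is an illustrative implementation under stated assumptions: Friedman mean ranks are obtained by ranking the algorithms on each function (1 = lowest mean error, tied values share the average of their ranks) and averaging over functions; for W|L|T the sketch simply compares mean values within a tolerance `tol`, whereas the exact criterion the paper uses to declare a tie is not stated. The `results` matrix is hypothetical.

```python
from typing import List, Tuple


def average_ranks(values: List[float]) -> List[float]:
    """Rank values in ascending order (1 = best); tied values share their mean rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        i = j + 1
    return ranks


def friedman_mean_ranks(results: List[List[float]]) -> List[float]:
    """results[f][a] = mean error of algorithm a on function f (lower is better)."""
    per_function = [average_ranks(row) for row in results]
    return [sum(r[a] for r in per_function) / len(results) for a in range(len(results[0]))]


def win_loss_tie(results: List[List[float]], ref: int, other: int,
                 tol: float = 0.0) -> Tuple[int, int, int]:
    """Count functions on which algorithm `ref` beats, loses to, or ties with `other`."""
    wins = losses = ties = 0
    for row in results:
        diff = row[ref] - row[other]
        if abs(diff) <= tol:
            ties += 1
        elif diff < 0:
            wins += 1
        else:
            losses += 1
    return wins, losses, ties


# Hypothetical mean errors: 3 functions (rows) x 3 algorithms (columns).
results = [[0.0, 1.0, 2.0], [0.0, 0.0, 5.0], [3.0, 1.0, 2.0]]
```

For k algorithms the mean ranks always sum to k(k + 1)/2 (6 in this example), which is a quick sanity check on a results table.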

Table 2.

Unimodal benchmark functions.

Table 3.

Multimodal benchmark functions.

Table 4.

Fixed-dimension multimodal benchmark functions.

Table 5.

Results of the comparative methods on classical test functions (F1–F13); the dimension is fixed to 10.

Table 6.

Results of the comparative methods on classical test functions (F1–F13); the dimension is fixed to 50.

Table 7.

Results of the comparative methods on classical test functions (F1–F13); the dimension is fixed to 100.

Table 8.

Results of the comparative methods on classical test functions (F14–F23).

Table 9.

Parameters for the compared algorithms.

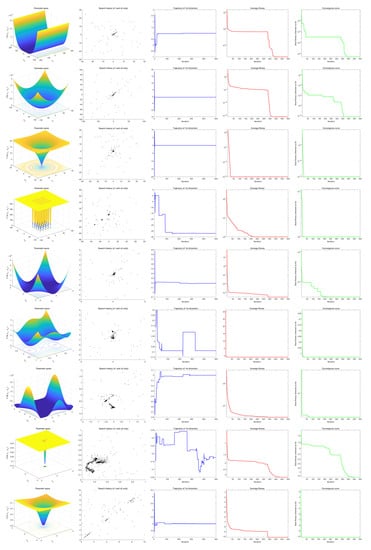

4.1.1. Qualitative Analysis for Convergence of IAO

In this section, we present a series of experiments that qualitatively analyze the convergence of IAO. Figure 4 shows, from the first to the fifth column, the parameter space of the function, the search history of the Aquila positions, the trajectory, the average fitness, and the convergence curve. The search history shows how IAO explores and exploits the solution space, reflecting the hunting behavior between the Aquila and its prey. The last two columns show that the average fitness and the convergence curve are large at first and then gradually decrease as the iterations progress. The third column, which presents the trajectory of the solution, shows that the solution takes large values at the beginning of the iterations and gradually stabilizes as the iterations progress. These observations indicate that IAO has strong exploitation and exploration abilities during the iterative process.

Figure 4.

Qualitative results of IAO.

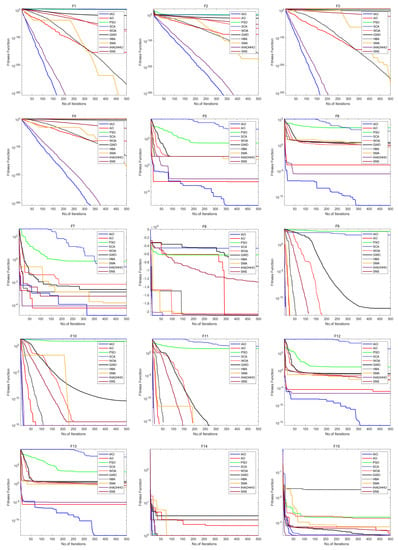

In addition, Figure 5 shows the convergence curves of IAO and the other compared algorithms on the 23 benchmark functions. For the unimodal functions (F1–F7), IAO converges faster than the other algorithms and reaches a better final accuracy, which confirms that its exploitation capability is strong and reliable. On the multimodal functions, IAO also shows very good search performance and a good balance between the exploration and exploitation phases. For F8–F15, F17, F21, F22, and F23, IAO approaches the neighborhood of the optimal solutions very quickly and then exploits these positions efficiently to reach high-precision final solutions. For F16, F18, F19, and F20, IAO gradually approaches the optimal solutions, updating the Aquila positions over the iterations to refine the final solution. Three further features can be observed in Figure 5. First, IAO converges quickly, as seen on F1–F4 and F9–F11. Second, on F5–F7, F12, F13, and F15, IAO achieves the best approximation of the global optimum among its peers. Third, IAO has a strong ability to escape local optima: on F5–F7, F12, F13, and F20, it escapes after multiple stagnations.

Figure 5.

Convergence curves of IAO and the compared algorithms on the 23 benchmark functions.

4.1.2. Exploitation Capability of IAO

Because each unimodal function (F1–F7) has only one global optimum, this set was used to test IAO's exploitation capability, as shown in Table 5. On F1 to F4, IAO finds the optimal solution. On F5 and F7, IAO achieves the smallest average and STD values. On F6, IAO has the second smallest average and STD, while HBA has the best. Overall, compared with the other algorithms, IAO shows the best stability and accuracy, indicating excellent exploitation capability.

4.1.3. Exploration Capability of IAO

As multimodal functions have several local optima, they were used to evaluate IAO's exploration capability. The multimodal experiments were divided into two categories, multidimensional (F8–F13) and fixed-dimensional (F14–F23), to test the exploration ability of IAO in higher and lower dimensions, respectively. Table 5 shows that IAO attains the smallest average and STD values on all multidimensional functions except F12, on which SNS performs best and IAO ranks second. In the fixed-dimensional experiments (Table 8), IAO achieves the best performance on more than half of the functions. These comparisons show that IAO's exploration capability is superior to that of its competitors.

4.1.4. Stability of IAO

To verify the stability and quality of IAO on high-dimensional problems, two more experiments were conducted with dimensions of 50 and 100. All algorithms (IAO, AO, PSO, SCA, WOA, GWO, HBA, SMA, IHAOHHO, and SNS) were run independently 30 times with 30 search agents and 500 iterations. The results are shown in Table 6 and Table 7. IAO obtains the best results in both 50 and 100 dimensions, except on F5, where it ranks second and AO obtains the minimum average and STD. Therefore, we conclude that IAO remains stable and accurate on high-dimensional problems.

4.1.5. Time-Consumption Test of IAO

In this section, the running time of each algorithm is measured to evaluate its efficiency. Each algorithm was run on F1–F23, with the variable-dimension functions set to ten dimensions, and the elapsed time was recorded. Table 10 shows that IAO requires more time than the original AO, because the GM and ROBL steps add computation. Nevertheless, the elapsed time of IAO on the majority of the benchmark functions is less than that of SMA and IHAOHHO. Given the performance of IAO and the growing demands of real-world optimization problems, this additional time is acceptable.

Table 10.

Computation time of algorithms on benchmark functions.

4.2. Results of CEC2019 Test Functions

This section analyzes IAO on the CEC2019 test functions, whose definitions are listed in Table 11. IAO was run with 30 agents and 500 iterations for 30 independent runs and compared with AO, AOA, IHAOHHO, PSO, HBA, WOA, and SSA. As reported in Table 12, the comparison uses the average and STD values of each algorithm on each function. In addition, the Friedman mean rank values and final ranks are shown at the bottom of Table 12. The results confirm that IAO handles these challenging test functions well: it performs best on more than half of them.

Table 11.

CEC2019 benchmark functions.

Table 12.

Results of the comparative methods on CEC2019 test functions.

4.3. Real-World Application

To further verify its performance, IAO was tested on four well-known real-world engineering design problems: the speed reducer, pressure vessel, tension/compression spring, and three-bar truss design problems. The results of IAO were compared with those of classical algorithms reported in previous studies.

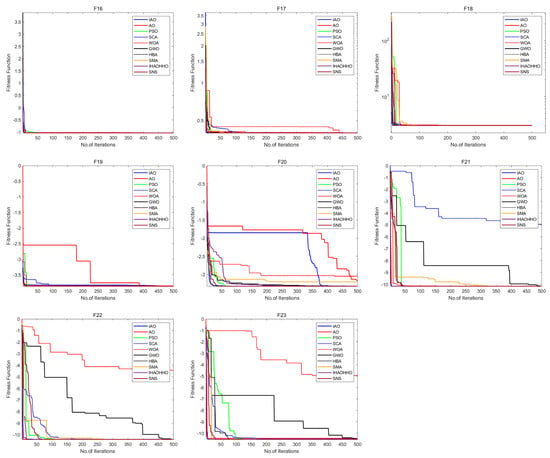

4.3.1. Speed Reducer Design Problem

The goal of this problem is to minimize the weight of the speed reducer. The design problem includes seven design variables and eleven constraints. Figure 6 shows a schematic of this design, and Equation (15) provides its mathematical formulation. IAO is compared with IHAOHHO, AO, AOA, PSO, SCA, GA, MDA, MFO, FA, HA, and PAO-DE. Table 13 shows that IAO outperforms all of the other algorithms and ranks first.
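Equation (15) is not reproduced in this text. As a hedged sketch, the block below encodes the speed reducer formulation widely used in the benchmark literature (seven variables, eleven inequality constraints written as g ≤ 0) and turns it into an unconstrained fitness with a static quadratic penalty. The penalty method and its weight `rho` are assumptions, since the constraint handling used with IAO is not described here, and the coefficients should be checked against Equation (15).

```python
import math
from typing import List


def speed_reducer_cost(x: List[float]) -> float:
    """Weight of the speed reducer; x = [x1, ..., x7]."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
            + 7.4777 * (x6 ** 3 + x7 ** 3)
            + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))


def speed_reducer_constraints(x: List[float]) -> List[float]:
    """Eleven constraints written as g_i(x) <= 0."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27 / (x1 * x2 ** 2 * x3) - 1,
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1,
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1,
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1,
        math.sqrt((745 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110 * x6 ** 3) - 1,
        math.sqrt((745 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85 * x7 ** 3) - 1,
        x2 * x3 / 40 - 1,
        5 * x2 / x1 - 1,
        x1 / (12 * x2) - 1,
        (1.5 * x6 + 1.9) / x4 - 1,
        (1.1 * x7 + 1.9) / x5 - 1,
    ]


def penalized(x: List[float], rho: float = 1e6) -> float:
    """Static quadratic penalty: cost + rho * sum(max(0, g)^2)."""
    violation = sum(max(0.0, g) ** 2 for g in speed_reducer_constraints(x))
    return speed_reducer_cost(x) + rho * violation
```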

Figure 6.

Speed reducer design problem.

Table 13.

Results of the compared algorithms for solving the speed reducer design problem.

Minimize

Subject to

where

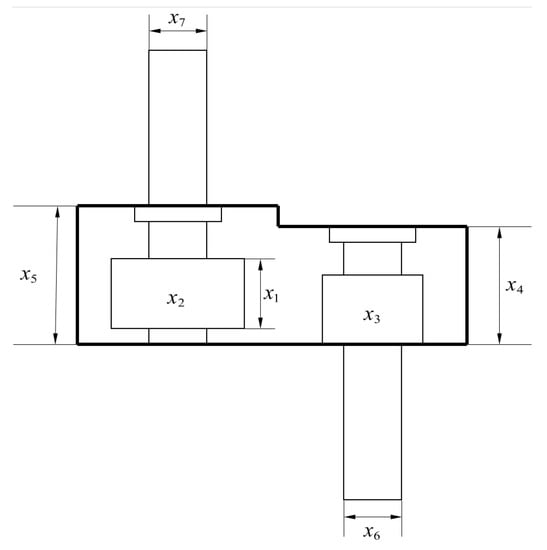

4.3.2. Pressure Vessel Design Problem

The goal of this design problem is to minimize the manufacturing cost of a pressure vessel. As shown in Figure 7, the problem has four design variables: the thickness of the shell (Ts), the thickness of the head (Th), the length of the cylindrical section (L), and the inner radius (R). There are also four constraints, as shown below. Table 14 shows that IAO obtains the best results among its competitors.
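A similar sketch for the pressure vessel problem, using the formulation commonly found in the literature with variables ordered as x = [Ts, Th, R, L]. As before, the static quadratic penalty is an assumption rather than the constraint handling used in the paper, and the coefficients should be checked against the equations of this section.

```python
import math
from typing import List


def vessel_cost(ts: float, th: float, r: float, length: float) -> float:
    """Total material, forming and welding cost of the pressure vessel."""
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def vessel_constraints(ts: float, th: float, r: float, length: float) -> List[float]:
    """Four constraints written as g_i <= 0."""
    return [
        -ts + 0.0193 * r,                                                   # shell thickness
        -th + 0.00954 * r,                                                  # head thickness
        -math.pi * r ** 2 * length - (4 / 3) * math.pi * r ** 3 + 1296000,  # minimum volume
        length - 240,                                                       # maximum length
    ]


def vessel_penalized(x: List[float], rho: float = 1e6) -> float:
    """x = [Ts, Th, R, L]; static quadratic penalty on constraint violations."""
    ts, th, r, length = x
    violation = sum(max(0.0, g) ** 2 for g in vessel_constraints(ts, th, r, length))
    return vessel_cost(ts, th, r, length) + rho * violation
```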

Figure 7.

Pressure vessel design problem.

Table 14.

Results of the compared algorithms for solving the pressure vessel design problem.

Consider

Minimize

Subject to

where

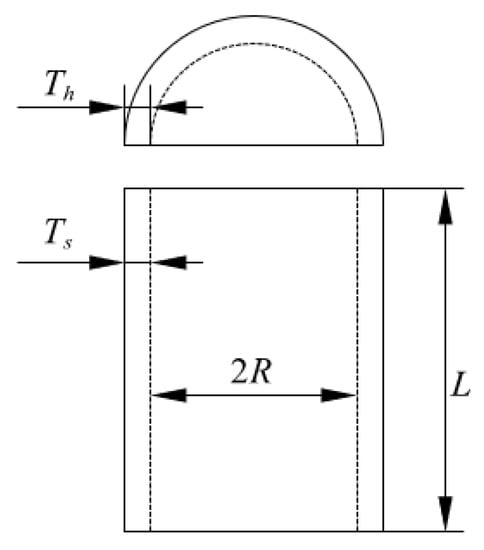

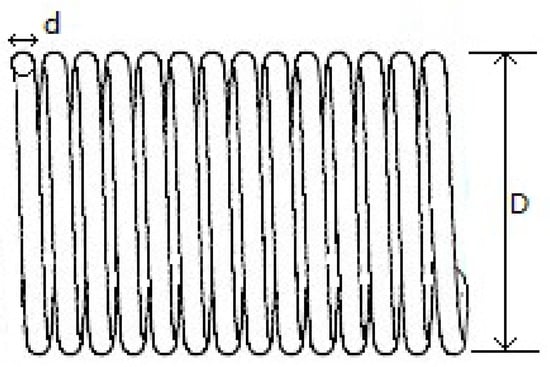

4.3.3. Tension/Compression Spring Design Problem

This design problem seeks to minimize the weight of the tension/compression spring by adjusting three design variables, shown in Figure 8: the wire diameter (d), the mean coil diameter (D), and the number of active coils (N). Equation (19) provides the mathematical formulation of this problem. The results in Table 15 show that IAO is competitive with AO, IHAOHHO, WOA, HS, MVO, PSO, ES, and CSCA.
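The spring problem can be sketched the same way, assuming the standard literature formulation (weight (N + 2)·D·d² and four constraints on deflection, shear stress, surge frequency and outer diameter) and the same assumed static penalty; Equation (19) should be treated as the authoritative statement.

```python
from typing import List


def spring_weight(d: float, D: float, n: float) -> float:
    """Spring weight (N + 2) * D * d^2 for wire diameter d, coil diameter D, N coils."""
    return (n + 2) * D * d ** 2


def spring_constraints(d: float, D: float, n: float) -> List[float]:
    """Deflection, shear stress, surge frequency and outer-diameter limits (g <= 0)."""
    return [
        1 - D ** 3 * n / (71785 * d ** 4),
        (4 * D ** 2 - d * D) / (12566 * (D * d ** 3 - d ** 4)) + 1 / (5108 * d ** 2) - 1,
        1 - 140.45 * d / (D ** 2 * n),
        (D + d) / 1.5 - 1,
    ]


def spring_penalized(x: List[float], rho: float = 1e6) -> float:
    """x = [d, D, N]; static quadratic penalty on constraint violations."""
    d, D, n = x
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(d, D, n))
    return spring_weight(d, D, n) + rho * violation
```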

Figure 8.

Tension/compression spring design problem.

Table 15.

Results of the compared algorithms for solving the tension/compression spring design problem.

Consider

Minimize

Subject to

where .

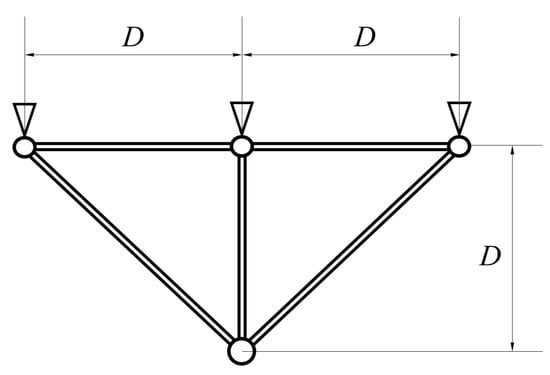

4.3.4. Three-Bar Truss Design Problem

This design problem seeks to minimize the weight of the truss by adjusting the two parameters shown in Figure 9. Equation (21) provides the mathematical formulation of the problem. The results in Table 16 show that IAO obtains a lower three-bar truss weight than AO, IHAOHHO, AOA, SSA, MBA, PSO-DE, CS, GOA, and MFO.
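Finally, a sketch of the three-bar truss problem, assuming the formulation common in the literature: objective (2√2·x1 + x2)·l with l = 100, three stress constraints with load P = 2 and allowable stress σ = 2, and the same assumed static penalty. These constants are taken from that common formulation, not from Equation (21), which is not reproduced here.

```python
import math
from typing import List

# Constants commonly used for this benchmark: bar length l, load P, allowable stress sigma.
L_BAR, P_LOAD, SIGMA = 100.0, 2.0, 2.0


def truss_weight(x1: float, x2: float) -> float:
    """Objective (2*sqrt(2)*x1 + x2) * l for bar cross-sectional areas x1 and x2."""
    return (2 * math.sqrt(2) * x1 + x2) * L_BAR


def truss_constraints(x1: float, x2: float) -> List[float]:
    """Stress constraints written as g_i <= 0."""
    denom = math.sqrt(2) * x1 ** 2 + 2 * x1 * x2
    return [
        (math.sqrt(2) * x1 + x2) / denom * P_LOAD - SIGMA,
        x2 / denom * P_LOAD - SIGMA,
        1 / (math.sqrt(2) * x2 + x1) * P_LOAD - SIGMA,
    ]


def truss_penalized(x: List[float], rho: float = 1e6) -> float:
    """x = [x1, x2]; static quadratic penalty on constraint violations."""
    violation = sum(max(0.0, g) ** 2 for g in truss_constraints(*x))
    return truss_weight(*x) + rho * violation
```

For a feasible design such as x1 = x2 = 1, all three constraints are negative and the penalized value equals the plain weight.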

Figure 9.

Three-bar truss design problem.

Table 16.

Results of the compared algorithms for solving three-bar truss design problem.

Minimize

Subject to

where

5. Conclusions

This paper proposes an Improved Aquila Optimizer (IAO) algorithm. A search control factor (SCF) is proposed to improve the second and third search strategies of the original Aquila Optimizer (AO) algorithm. GM and ROBL are then integrated to further improve the exploration and exploitation abilities of the original AO, respectively. To evaluate the performance of IAO, we tested the algorithm on 23 benchmark functions and the CEC2019 test functions. The results show that IAO outperforms other advanced MH algorithms. In addition, experimental results on four real-world engineering design problems validate the practicality of IAO for solving practical problems.

In future studies, we will consider various methods to balance the exploration and exploitation phases. Different mutation strategies can be taken into account to increase the solution diversity. In addition, the proposed algorithm could be applied to more fields, including deep learning, material scheduling problems, parameter estimation, wireless sensor networks, path planning, signal denoising, image segmentation, model optimization, and more.

Author Contributions

Conceptualization, B.G. and F.X.; methodology, B.G.; software, B.G.; validation, B.G.; formal analysis, B.G.; investigation, B.G. and Y.S.; resources, B.G.; data curation, B.G.; writing—original draft preparation, B.G.; writing—review and editing, F.X. and Y.S.; visualization, B.G. and Y.S.; supervision, F.X. and X.X.; project administration, F.X.; funding acquisition, F.X. and X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 51975422 and 52175543.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hajipour, V.; Kheirkhah, A.; Tavana, M.; Absi, N. Novel Pareto-based meta-heuristics for solving multi-objective multi-item capacitated lot-sizing problems. Int. J. Adv. Manuf. Technol. 2015, 80, 31–45. [Google Scholar] [CrossRef]

- Ameur, M.; Habba, M.; Jabrane, Y. A comparative study of nature inspired optimization algorithms on multilevel thresholding image segmentation. Multimed. Tools Appl. 2019, 78, 34353–34372. [Google Scholar] [CrossRef]

- Yildiz, A.R.; Mirjalili, S.; Sait, S.M.; Li, X. The Harris hawks, grasshopper and multi-verse optimization algorithms for the selection of optimal machining parameters in manufacturing operations. Mater. Test. 2019, 8, 1–15. [Google Scholar]

- Wang, S.; Yang, X.; Wang, X.; Qian, Z. A virtual force algorithm-Lévy-embedded grey wolf optimization algorithm for wireless sensor network coverage optimization. Sensors 2019, 19, 2735.

- Zhang, W.; Wen, J.B.; Zhu, Y.C.; Hu, Y. Multi-objective scheduling simulation of flexible job-shop based on multi-population genetic algorithm. Int. J. Simul. Model. 2017, 16, 313–321.

- Liu, A.; Jiang, J. Solving path planning problem based on logistic beetle algorithm search–pigeon-inspired optimisation algorithm. Electron. Lett. 2020, 56, 1105–1108.

- Chen, B.; Chen, H.; Li, M. Improvement and optimization of feature selection algorithm in swarm intelligence algorithm based on complexity. Complexity 2021, 2021, 9985185.

- Abualigah, L.; Diabat, A.; Geem, Z.W. A comprehensive survey of the harmony search algorithm in clustering applications. Appl. Sci. 2020, 10, 3827.

- Omar, M.B.; Bingi, K.; Prusty, B.R.; Ibrahim, R. Recent advances and applications of spiral dynamics optimization algorithm: A review. Fractal Fract. 2022, 6, 27.

- Molina, D.; Poyatos, J.; Ser, J.D.; García, S.; Herrera, F. Comprehensive taxonomies of nature- and bio-inspired optimization: Inspiration versus algorithmic behavior, critical analysis recommendations. Cogn. Comput. 2020, 12, 897–939.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Holland, J.H. Genetic Algorithms. Sci. Am. 1992, 267, 66–73.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2009, 12, 702–713.

- Fogel, L.J.; Owens, A.J.; Walsh, M.J. Artificial Intelligence Through Simulated Evolution; Wiley-IEEE Press: New York, NY, USA, 1966.

- Hansen, N.; Müller, S.D.; Koumoutsakos, P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 2003, 11, 1–18.

- Kirkpatrick, S.; Gelatt, C.; Vecchi, M. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Azizi, M. Atomic orbital search: A novel metaheuristic algorithm. Appl. Math. Model. 2021, 93, 657–683.

- Bouchekara, H.R.E.H. Electrostatic discharge algorithm: A novel nature-inspired optimisation algorithm and its application to worst-case tolerance analysis of an EMC filter. IET Sci. Meas. Technol. 2019, 13, 491–499.

- Talatahari, S.; Bayzidi, H.; Saraee, M. Social network search for global optimization. IEEE Access 2021, 9, 92815–92863.

- Farshchin, M.; Maniat, M.; Camp, C.V.; Pezeshk, S. School based optimization algorithm for design of steel frames. Eng. Struct. 2018, 171, 326–335.

- Moosavian, N.; Roodsari, B.K. Soccer league competition algorithm: A novel meta-heuristic algorithm for optimal design of water distribution networks. Swarm Evol. Comput. 2014, 17, 14–24.

- Eberhart, R.C.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the 6th International Symposium on Micro Machine and Human Science (MHS’95), Nagoya, Japan, 4–6 October 1995.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey badger algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. (MATCOM) 2022, 192, 84–110.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Peraza-Vazquez, H.; Pena-Delgado, A.F.; Echavarria-Castillo, G.; Beatriz Morales-Cepeda, A.; Velasco-Alvarez, J.; Ruiz-Perez, F. A bio-inspired method for engineering design optimization inspired by dingoes hunting strategies. Math. Probl. Eng. 2021, 2021, 9107547.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control. Eng. 2020, 8, 22–34.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Elaziz, M.A.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Yazdani, M.; Jolai, F. Lion Optimization Algorithm (LOA): A Nature-Inspired Metaheuristic Algorithm. J. Comput. Des. Eng. 2016, 3, 24–36.

- Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M.; Tavakkoli-Moghaddam, R. Red deer algorithm (RDA): A new nature-inspired meta-heuristic. Soft Comput. 2020, 24, 14637–14665.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Ewees, A.A.; Algamal, Z.Y.; Abualigah, L.; Al-qaness, M.A.A.; Yousri, D.; Ghoniem, R.M.; Abd Elaziz, M. A Cox Proportional-Hazards Model Based on an Improved Aquila Optimizer with Whale Optimization Algorithm Operators. Mathematics 2022, 10, 1273.

- Zhang, Y.J.; Yan, Y.X.; Zhao, J.; Gao, Z.M. AOAAO: The Hybrid Algorithm of Arithmetic Optimization Algorithm With Aquila Optimizer. IEEE Access 2022, 10, 10907–10933.

- Wang, S.; Jia, H.; Abualigah, L.; Liu, Q.; Zheng, R. An improved hybrid aquila optimizer and harris hawks algorithm for solving industrial engineering optimization problems. Processes 2021, 9, 1551.

- Tizhoosh, H.R. Opposition-based learning: A new scheme for machine intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; pp. 695–701.

- Long, W.; Jiao, J.; Liang, X.; Cai, S.; Xu, M. A random opposition-based learning grey wolf optimizer. IEEE Access 2019, 7, 113810–113825.

- Lu, S.; Kim, H.M. A regularized inexact penalty decomposition algorithm for multidisciplinary design optimization problems with complementarity constraints. J. Mech. Des. 2010, 132, 041005.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Baykasoglu, A.; Ozsoydan, F.B. Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl. Soft Comput. 2015, 36, 152–164.

- Geem, Z.; Kim, J.; Loganathan, G. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

- Liu, H.; Cai, Z.; Wang, Y. Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl. Soft Comput. 2010, 10, 629–640.

- He, Q.; Wang, L. A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl. Math. Comput. 2007, 186, 1407–1422.

- Mezura-Montes, E.; Coello Coello, C.A. An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int. J. Gen. Syst. 2008, 37, 443–473.

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 2007, 20, 89–99.

- Huang, F.Z.; Wang, L.; He, Q. An effective co-evolutionary differential evolution for constrained optimization. Appl. Math. Comput. 2007, 186, 340–356.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

- Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).