1. Introduction

The fourth industrial revolution brought to light new concepts that utilize increasing amounts of industrial data. The important role of data is motivated by the potential knowledge hidden within it. Knowledge integration plays an important role in smart factories on a human, organizational, and technical level, where data is the central resource for extracting knowledge with the help of, e.g., data analytics or machine learning methods [1]. For this reason, modern decision-making and information-retrieval systems provide new methods for extracting this knowledge from data. This is intended to enable experts to use this knowledge for analysis, planning, and well-informed actions [2]. In this context, the role of the expert in the decision-making process is essential, since experts are the ones who understand the implications of the extracted knowledge and can evaluate and integrate it further. However, the expert’s ability to use the extracted knowledge is influenced by their understanding of the knowledge-extraction methods [3]. Understanding the knowledge-extraction algorithm makes the expert aware of its performance, accuracy, and limitations, which in turn shapes their trust in the automatically extracted knowledge. The development of intelligent information retrieval (IR) systems mainly focuses on increasing retrieval accuracy. Here, we adopt the definition of an IR system by Frakes and Baeza-Yates (1992) [4]: a system that is able to match a user query to data objects stored in a database. Those data objects are usually documents with semi-structured or unstructured textual content. In this article, we consider the information retrieval task as the method to respond directly to a user query or to retrieve information that other algorithms then use to respond to a user query. The former case takes the form of a search engine, while the latter can, for example, retrieve documents from a database for a recommender system, which then generates the recommendation for the user. Machine learning (ML), and especially deep learning (DL), models have been able to solve prediction tasks in IR systems with high accuracy, provided that sufficiently large datasets are available. However, intelligent information retrieval algorithms are usually considered black-box models, whose reasoning behind their predictions is not transparent. This influences the users’ trust in and acceptance of the retrieved information [5]. It is a particular limitation in critical domains, where experts require a clear understanding of the reasoning behind a prediction in order to adopt it when making a final decision [6]. This is one of the main drivers for developing so-called open-box algorithms, which can explain their reasoning to the user. The idea is that the reasoning of the intelligent model can inform the reasoning of the human expert and therefore support the expert’s decision. The importance of explaining the predictions of intelligent information retrieval algorithms has grown rapidly in recent years, as part of the research on explainable artificial intelligence (XAI) [2,5,7]. Our proposed framework, therefore, aims to generate high-level explanations for intelligent IR systems. The explanations are meant to reflect the reasoning of the intelligent algorithms for the users, allowing them to better evaluate and utilize the retrieved information in their decisions.

Explaining intelligent IR algorithms depends on the domain of application, since the explanations themselves are meant to clarify the algorithm’s logic to the domain experts. This means that explainability functions must be tailored to their industrial applications, which are domain specific, since they have strict requirements for the decision-making process. In practice, tailoring IR and explainability solutions implies additional implementation costs for handling domain-specific requirements. These costs include: (1) labor costs associated with the developers, who manage the integration of domain-specific requirements into the IR and explainability algorithms, (2) labor costs associated with the domain experts, who provide insights into the domain requirements, e.g., through interviews, and (3) costs associated with the complexity of the technical solution and of the methods it uses, e.g., the need for advanced processing power. The technical complexity is therefore a result of the complexity of the domain requirements. In order to limit those costs, our proposed framework is designed to provide a transferrable structure for explainable intelligent IR solutions. It minimizes the need for tailoring the IR and explainability algorithms to each domain of application by adopting a semantic representation of the domain’s knowledge and requirements. Since the domain’s requirements are integrated into the knowledge representation, the same IR and explainability algorithms can be used in multiple domains. The cost of developing explainable IR systems therefore decreases considerably when the same IR and explainability algorithms are implemented in multiple domains. Striking a balance between a cost-effective solution and a domain-specific one is required for IR solutions and remains a challenge for the current state of the art.

To address the explainability of intelligent IR systems and their transferability between multiple domains, we investigated the role of knowledge graphs in making black-box IR algorithms more interpretable, while providing the option to model domain requirements in a transferrable manner. We considered two research questions in this investigation:

How can domain-specific explanations be generated for intelligent IR tasks?

How can the same explainable IR algorithm be transferred to other domains without compromising its performance?

We address these challenges by developing a framework that links the IR and explainability algorithms to the domain requirements through a knowledge graph as a unified knowledge representation structure. We aim to integrate those requirements into the knowledge representation to: (1) allow the intelligent algorithms to utilize the domain’s requirements inherently, since they are already integrated into the knowledge structure, and (2) provide a domain-agnostic semantic knowledge structure that both the IR and explainability algorithms can use. The key concept in our approach that answers these questions is treating a semantic knowledge graph (KG) representation as a single source of truth (SSoT). This way, multiple information sources are fused in the graph and queried by the intelligent IR and explainability algorithms to provide the user with understandable search results. Our proposed solution serves as a foundation for developing explainable, domain-flexible IR systems. We use the term “domain flexible” to reflect the framework’s ability to be domain-agnostic, i.e., transferrable between multiple domains, while still representing each domain’s requirements in the explainable IR algorithm.

Among multiple knowledge representation structures, we choose knowledge graphs in our framework for the following reasons:

The ability of a knowledge graph to contextualize the entities within it. An entity is contextualized through its semantic relations to other entities in the same graph. This makes the IR algorithm more reliable and the generated explanations more meaningful.

Unlike relational databases, the knowledge graph functions as a graph database. This means that graph theory methods can be applied directly to the entities and relations in the knowledge graph. Graph databases enable efficient querying of the elements as a graph and the computation of basic statistics, such as the degree of elements. Furthermore, more complex statistics, known in the literature as “centrality measures”, can be calculated efficiently in a future algorithmic extension of the presented approach. This enables, for example, considering more complex measures of connectivity to evaluate the retrieved information in the context of other information. Utilizing these graph theory methods improves the relatedness of the retrieved results to a given user query.

Knowledge graphs provide paths between pairs of connected entities. Those paths consist of the intermediate nodes and relations that connect the two entities. Using the concept of graph walks, i.e., navigating those paths following a predefined set of rules, the IR algorithm can identify results that are more relevant to the user query. Moreover, a graph path represents the reasoning for connecting two entities and thus explains why one entity is retrieved based on another.
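The path-based reasoning in the last point can be sketched as follows. This is a minimal illustration, assuming a small in-memory triple list: plain breadth-first search stands in for the framework’s rule-based graph walks, which are not specified here, and the entity names, relation labels, and helper functions (`build_adjacency`, `find_path`, `explain_path`) are hypothetical.

```python
from collections import deque

# Hypothetical toy KG as (head, relation, tail) triples; not taken from the use cases.
TRIPLES = [
    ("query:low-power", "mentions", "concept:clock-gating"),
    ("concept:clock-gating", "described_in", "doc:DS-17"),
    ("doc:DS-17", "authored_by", "team:analog"),
]


def build_adjacency(triples):
    """Index triples in both directions so a walk can follow or reverse a relation."""
    adj = {}
    for h, r, t in triples:
        adj.setdefault(h, []).append((r, t))
        adj.setdefault(t, []).append((f"inverse of {r}", h))
    return adj


def find_path(adj, start, goal):
    """Breadth-first search; returns the (head, relation, tail) hops or None."""
    queue = deque([start])
    parent = {start: None}
    while queue:
        node = queue.popleft()
        if node == goal:
            hops = []
            while parent[node] is not None:
                prev, rel = parent[node]
                hops.append((prev, rel, node))
                node = prev
            return list(reversed(hops))
        for rel, nxt in adj.get(node, []):
            if nxt not in parent:
                parent[nxt] = (node, rel)
                queue.append(nxt)
    return None


def explain_path(hops):
    """Verbalize a path as a simple textual explanation."""
    return "; ".join(f"{h} {r} {t}" for h, r, t in hops)


adj = build_adjacency(TRIPLES)
print(explain_path(find_path(adj, "query:low-power", "doc:DS-17")))
```

The path returned for the query node and the document is exactly the chain of relations that links them, which is why the same walk can serve both retrieval and explanation.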

The proposed approach provides the methods to implement domain and expert requirements in the explainable IR algorithm. This in turn makes the system applicable in different domains by integrating requirements-engineering concepts explicitly into the process of model creation. The knowledge graph links domain requirements, explanations, and domain data together. During the construction of the KG, information from domain requirements, expert knowledge, and textual data sources is taken into consideration and modelled through graph nodes and relations. The result is then used to generate explanations of how the intelligent IR algorithm retrieves search results from the KG. The knowledge embedded in the KG relations allows one to overcome the challenge of explaining a black-box IR algorithm [2,8,9]. It provides sufficient information to generate an understandable explanation that reflects the reasoning behind the IR model’s prediction. Explanations from the KG can be presented visually or verbally, making them more understandable for the end user.

In order to generate a transferrable solution, the construction of the knowledge graph is designed to capture high-level domain requirements from the domain experts and from the data sources themselves. Once those requirements are defined, they are embedded into the KG uniformly. They are then retrieved by the same intelligent IR algorithm, since the IR algorithm queries the common KG structure rather than the raw data sources. This aspect of our framework provides a novel approach to the challenge of creating an IR solution that is transferrable and domain specific at the same time.

Our contributions in this article are summarized as follows:

The development of a comprehensive framework, with specialized components that are capable of integrating domain-specific requirements in the chosen semantic knowledge representation.

Developing a novel system structure that enables intelligent IR and explainability algorithms to consider domain requirements inherently, using the KG-based SSoT.

The development of a novel transferability approach that minimizes implementation costs by enabling the use of the same IR and explainability algorithms in multiple domains, without compromising their domain-specific requirements.

We tested the transferability of the proposed method within two different application domains: (1) semiconductor industry and (2) job recommendation. Our results show high user acceptance and IR accuracy in both domains, with minimal-to-zero changes in the KG construction process, the intelligent IR algorithm, and the generation of visual and textual explanations.

In the remainder of the article, we review the related literature in Section 2. Section 3 elaborates on the proposed transferrable, KG-based retrieval and explainability framework. The evaluation use cases and metrics are presented in Section 4, and the article is concluded in Section 5.

2. Related Work

Although the topic of explaining intelligent algorithms is not new [3,10], it has gained new perspectives since deep learning algorithms have become widespread [11]. This is due to the black-box nature of such algorithms, which makes it difficult to track the algorithms’ predictions and understand their reasoning. Research on explaining AI algorithms has followed several directions. Li et al. (2020) [2] classify these directions into two main categories: data-driven approaches, such as [12,13], and knowledge-aware ones [14,15]. Data-driven methods use the information from the data and the intelligent model itself to generate a comprehensible interpretation of the model’s behavior. Knowledge-aware methods use the explicit or implicit knowledge that can be extracted from the domain. This knowledge is used either to generate or to enhance the explanations of the intelligent IR or recommendation algorithms.

For generating explanations, knowledge modelling methods have been investigated in recent years to infer the reasoning behind the IR algorithm retrieving certain results or recommending an item to a certain user [16,17]. Both data-driven and knowledge-aware methods, implemented in single domains of application, have supported explaining intelligent prediction models. However, these approaches were also dependent on the models used for the information retrieval or recommendation tasks. Therefore, recent literature shows a strong focus on developing model-agnostic algorithms, not only for the intelligent IR or recommendation tasks but also for generating explanations of their predictions. A model-agnostic explanation algorithm generates explanations independently of how the intelligent model works: it uses the input and the output of the intelligent model without relying on the model’s internal behavior. An example of such model-agnostic, explainable recommendation algorithms is the work of Chen and Miyazaki (2020) [17]. They developed a task-specialized knowledge graph that serves as a general common knowledge source to generate model-agnostic explanations of intelligent recommendations. Their knowledge-aware approach uses the task-specialized graph to overcome the challenge of not having sufficient information in the databases to generate high-quality personalized explanations.

Knowledge graphs are knowledge modelling methods that support the generation of knowledge-aware explanations [2]. A KG is a network of interconnected entities, where each entity is represented by a graph node and the relevance between nodes is represented by relations [18]. Through its nodes and relations, the KG is described as a set of triplets (head, relation, tail), as shown in Equation (1).

$$\mathcal{G} = \{(h, r, t) \mid h, t \in \mathcal{E},\; r \in \mathcal{R}\} \qquad (1)$$

where $h$ is the head entity, $t$ is the tail entity, and $r$ is the relation between them. $\mathcal{E}$ represents the entity group, and $\mathcal{R}$ is the relations group.
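As a minimal sketch of Equation (1), assuming a plain in-memory representation rather than an actual graph database, a KG can be held as a set of (head, relation, tail) triples from which the entity group and the relation group are derived. The `Triple` type, the `degree` helper, and the example entities are hypothetical; the degree function illustrates the kind of basic graph statistic mentioned earlier for graph databases.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One KG fact (h, r, t) as in Equation (1)."""
    head: str
    relation: str
    tail: str


def entities_and_relations(kg: set[Triple]) -> tuple[set[str], set[str]]:
    """Derive the entity group E and the relation group R from the triples."""
    entities = {t.head for t in kg} | {t.tail for t in kg}
    relations = {t.relation for t in kg}
    return entities, relations


def degree(kg: set[Triple], entity: str) -> int:
    """Number of triples in which the entity appears as head or tail."""
    return sum((t.head == entity) + (t.tail == entity) for t in kg)


# Hypothetical example triples (not taken from the use cases).
kg = {
    Triple("doc:DS-17", "has_topic", "concept:clock-gating"),
    Triple("doc:DS-42", "has_topic", "concept:clock-gating"),
    Triple("concept:clock-gating", "related_to", "concept:power"),
}
E, R = entities_and_relations(kg)
print(sorted(R))                           # ['has_topic', 'related_to']
print(degree(kg, "concept:clock-gating"))  # 3
```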

In the context of domain-specific textual documents, graph nodes are defined to represent the content of those documents, i.e., the words, sentences, or paragraphs [19]. Graph relations are then defined based on certain criteria, which should also reflect the domain’s requirements. Alzhoubi [20] proposes the use of association rules mining (RAM) for enhancing graph construction from textual databases. The proposed approach extracts frequent subgraphs from the overall KG. Those subgraphs are then processed to produce feature vectors that represent the relations between nodes. A similar approach is used in [21], where vectors of the textual documents are considered as graph nodes, while the relations amongst them are calculated using the cosine similarity scores between document pairs.
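The similarity-based relation construction described for [21] can be sketched as below. This is an illustration under stated assumptions, not the cited method: bag-of-words term counts stand in for whatever document vectors are actually used, and the `similar_to` relation label, the 0.5 threshold, and the example documents are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def bow_vector(text):
    """Bag-of-words term counts; a stand-in for the real document embedding."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def similarity_edges(docs, threshold=0.5):
    """Link every document pair whose cosine similarity reaches the threshold."""
    vecs = {doc_id: bow_vector(text) for doc_id, text in docs.items()}
    edges = []
    for (i, vi), (j, vj) in combinations(vecs.items(), 2):
        score = cosine(vi, vj)
        if score >= threshold:
            edges.append((i, "similar_to", j, round(score, 3)))
    return edges


# Hypothetical documents.
docs = {
    "d1": "clock gating reduces dynamic power",
    "d2": "dynamic power reduction with clock gating",
    "d3": "job posting for a data engineer",
}
print(similarity_edges(docs))
```

Only the two power-related documents are linked here; the threshold controls how dense the resulting graph becomes.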

The importance of model-agnostic solutions comes from their ability to apply the same explanation concept to different intelligent models [11,17,22]. However, despite their independence from the intelligent models, explainability algorithms are still influenced by the domain of application [8]. Different domains require different explanation content and forms. Domain specifications also influence the intelligent IR or recommendation algorithms. An example of such domain-specific algorithms is found in the work of Naiseh (2020) [23], who proposes explainable design patterns for clinical decision support systems. Another example, in the biomedical domain, is the work of Yang (2020) [6], who uses knowledge graphs to support the information retrieval task while considering the domain of application and its implications for the generated explanations. Their work builds on the flexibility of graph nodes and relations for embedding domain-related information while representing the data. Knowledge graph structures support the explainability of the system and serve as a foundation for the information retrieval itself, since they represent queryable graphical knowledge bases [6].

The specific nature of a domain can be captured from multiple sources, including databases and the knowledge of domain experts. Model-agnostic solutions, such as [17], solve the challenge of explaining different black-box algorithms with the same explainability approach, but they do not handle components of the system that reflect its specific requirements, such as the role of domain experts in generating the knowledge graph that then underlies the explainable recommendation algorithm. Domain-specific approaches such as [6,14,23] address the challenge of considering domain requirements. Compliance with domain requirements and specifications has its own cost, however: the more the explainable solution is tailored towards a specific domain, the less applicable it becomes in other domains of application [8]. For example, Ehsan et al. (2018) [14] describe a highly domain-specific task for explainable robotic behavior. They take into consideration the expert’s role in annotating the corpus and link it to the robot’s navigation states. However, their explainable approach is still dependent on the intelligent model, meaning that other domains of application, with other requirements and databases, cannot use the same model. Their approach is applicable in other domains only once new corpora are generated and the model is retrained on the new data. This process implies the costs we elaborated on in Section 1.

Moon et al. (2019) approach a solution to both challenges by developing a domain-agnostic intelligent reasoning system over graph walks for an entity retrieval task [11]. Their system is built on a conversational dataset (OpenDialKG) and tested across multiple domains. Their solution highlights the importance of bridging the gap between developing domain-specific solutions and transferring them across domains, and it shows high accuracy when adopting the same intelligent model in different domains. However, that adaptation is based solely on the large corpus they use to train the intelligent model. In a domain where only limited data is available, the model may not be able to cover that domain. Moreover, the dependency on the data does not take into consideration the role of domain experts in modeling domain requirements.

Therefore, our proposed framework is designed to enable domain-specific, explainable algorithms that are transferable to other domains with minimal changes. We put a strong emphasis on domain modeling by including the role of domain experts and explicit domain requirements alongside the role of domain databases in the framework. Our approach allows generating high-level explanations that users can read in natural language or visualize through the knowledge graph. Our solution builds on the work of Chen and Miyazaki (2020) [17] and Moon et al. (2019) [11] and bridges the gap between developing domain-agnostic and domain-specific explainable systems, utilizing knowledge graphs as a base structure that is comprehensible and adaptable to different domains.

We propose a complete framework that considers the role of databases, domain experts, and domain requirements in constructing the knowledge graph and then generating the IR explanations. Our framework uses the knowledge graph as the single source of truth, which provides the intelligent IR and recommendation algorithms with information. The explainability algorithm relies on the KG to generate high-level textual and visual explanations. In contrast to the approaches in [15,24,25], where the IR and recommendation algorithms are model-intrinsic, the use of the KG as an SSoT in our framework allows the explainable IR algorithm to be model-agnostic. This removes the restriction to a particular model for the IR and explainability algorithms alike. Moreover, compared with the work of Moon et al. [11], our framework extends the sources of domain requirements. While their approach captures the requirements solely from databases, our framework enables capturing those requirements from databases, expert knowledge, and other requirement sources that can be explicitly defined, such as those found in the exploratory data analysis (EDA). Our proposed framework serves as a foundation for building domain-specific systems, since the knowledge graph is constructed with domain requirements in mind. Moreover, it addresses the transferability of the intelligent IR and explainability algorithms by adopting the knowledge graph as the main source of information for both algorithms. In comparison to the similar transferrable approach of Chen and Miyazaki [17], our framework does not compromise the domain-specific nature of the IR solution when transferring it to other domains. We explicitly embed those requirements in the system design to enable their effective integration into the intelligent, explainable IR. The same intelligent models for information retrieval or recommendation can therefore be used in different domains, since they are trained to query the graph and not the data sources directly.

In Table 1, we summarize part of the reviewed literature that informed our approach. The table compares approaches for developing graph-based, explainable intelligent information retrieval and recommendation systems, including our proposed framework. We separate the features of the similar solutions from their final outcomes to focus the comparison on our two research questions, namely addressing the domain-specific requirements in the IR solution and enabling the transferability of the solution to other domains with respect to their own sets of requirements.

4. Experiment Design and Framework’s Evaluation

In order to evaluate our framework, we designed an experimental setup to test the performance of each part of the framework. We focus on two main evaluation criteria, which correspond to our research questions:

The extent to which the framework builds a domain-specific solution that integrates the domain requirements and expert knowledge into the explainable, intelligent IR algorithm.

The transferability of the framework to different domains, without compromising the performance of the KG construction, the intelligent IR, and the explainability algorithms.

For the evaluation, we use a set of key performance indicators (KPIs). We use the KPIs to evaluate the performance of the framework in a given domain, i.e., the first criterion. To evaluate the second criterion, we implement the framework in two different domains that depend heavily on the domain requirements and the knowledge of domain experts. The domains belong to different environments to ensure that their requirements do not overlap. The first evaluation domain is supporting the semiconductor chip-design process. Within this domain, a use case of a semantic search for design documents has been implemented using the framework. The second evaluation domain is in the field of CV-job matching and job-posting recommendation. The second use case takes the form of an intelligent recommender system for personalized job-posting suggestions.

In each of the two use cases, we tested (1) the performance of the intelligent information retrieval algorithm, (2) how the KG represents the domain of application, and (3) the explainability of the overall solution.

We tested the performance of the IR algorithm quantitatively through two KPIs: (1) the relevance of the semantic search results and (2) the precision and accuracy of the intelligent document clustering and matching. Moreover, we evaluate the algorithm qualitatively through expert-user feedback, which was collected through surveys and focus groups.

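The Relevance measure, shown in Equation (2), is defined as the number of results relevant to a user’s query, as a percentage of the total number of retrieved results.

$$\text{Relevance} = \frac{N_{\text{relevant}}}{N_{\text{retrieved}}} \times 100\% \qquad (2)$$

where $N_{\text{relevant}}$ is the number of retrieved results that are relevant to the query and $N_{\text{retrieved}}$ is the total number of retrieved results.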
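A minimal sketch of Equation (2), assuming relevance judgments are available as a set of result identifiers (for example, from expert feedback); the function name and the example identifiers are hypothetical. Returning 0 for an empty result list is a design choice of this sketch, since the ratio is undefined in that case.

```python
def relevance(retrieved, relevant):
    """Equation (2): share of retrieved results judged relevant, in percent."""
    if not retrieved:
        return 0.0  # undefined for an empty result list; treated as 0 here
    hits = sum(1 for item in retrieved if item in relevant)
    return 100.0 * hits / len(retrieved)


# Hypothetical query: four retrieved documents, two of them judged relevant.
print(relevance(["d1", "d2", "d3", "d4"], {"d1", "d3", "d9"}))  # 50.0
```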

The precision and accuracy are measured for the intelligent models that are implemented for the IR and recommendation tasks. We measure them using the standard Precision and F1 measures, see Equations (3) and (4).

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (3)$$

$$F_1 = \frac{2\,TP}{2\,TP + FP + FN} \qquad (4)$$

where TP is the number of documents correctly classified as belonging to a certain class, FP is the number of documents that are incorrectly classified as belonging to that class, and FN is the number of documents that are incorrectly classified as not belonging to that class.
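Equations (3) and (4) can be computed per class from true and predicted labels as in the following sketch. The label values are hypothetical, and F1 is written in its TP/FP/FN form, which is algebraically equal to the harmonic mean of precision and recall. Returning 0 when a denominator is zero is an assumption of this sketch.

```python
def precision_f1(y_true, y_pred, label):
    """Equations (3) and (4) for one class label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == label and t == label)
    fp = sum(1 for t, p in pairs if p == label and t != label)
    fn = sum(1 for t, p in pairs if p != label and t == label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return precision, f1


# Hypothetical document-class labels.
y_true = ["A", "A", "B", "B", "A"]
y_pred = ["A", "B", "B", "A", "A"]
print(precision_f1(y_true, y_pred, "A"))  # TP=2, FP=1, FN=1
```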

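To test the knowledge graph’s ability to represent the domain, we used an ontological structural-evaluation metric, namely the class richness [31,32], which is defined in Equation (5).

$$CR = \frac{|C'|}{|C|} \qquad (5)$$

where $C$ is the set of classes in the ontology and $C' \subseteq C$ is the subset of classes that have at least one instance.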

The Class Richness measure reflects how well the graph covers the instances of the use case. The underlying assumption for using this measure is that the elements of the framework, except for the algorithms, can represent classes of an ontology. If a class is capable of representing a domain, it will have instances defined from that domain. The more classes that have instances, the more representative the proposed framework is of the domain of interest.
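Class richness as in Equation (5) reduces to counting populated classes. The sketch below assumes that instances are given as a mapping from instance identifier to class name; the class and instance names are hypothetical stand-ins for the framework elements.

```python
def class_richness(classes, instance_types):
    """Equation (5): fraction of ontology classes with at least one instance."""
    if not classes:
        return 0.0
    populated = set(instance_types.values()) & set(classes)
    return len(populated) / len(classes)


# Hypothetical classes and instances.
classes = ["Document", "Concept", "ExpertRule", "Requirement"]
instances = {"DS-17": "Document", "clock-gating": "Concept", "R1": "ExpertRule"}
print(class_richness(classes, instances))  # 0.75
```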

We test the explainability algorithm with four different metrics. First, we measure the availability of information for generating an explanation based on the knowledge graph.

Information availability is used in this context to measure the quality of the explanation. It represents the amount of information that our framework provides about the results retrieved from the KG. The more information collected about the algorithm’s reasoning, the more comprehensive the explanation, and thus the higher its quality. To quantify this measure, we rely on the explanation templates that are used for generating the textual explanations. We consider an explanation to be of high quality if all the slots of the explanation template can be filled with information from the KG; otherwise, we consider it to be of low quality. We then define the information availability as the ratio of high-quality explanations to the total number of explanations generated by the explainability algorithm, as in Equation (6).

$$IA = \frac{N_{\text{high}}}{N_{\text{total}}} \qquad (6)$$

where $N_{\text{high}}$ is the number of high-quality explanations and $N_{\text{total}}$ is the total number of generated explanations.
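The slot-filling criterion behind Equation (6) can be sketched with a format-string template, whose named fields play the role of the template slots. The template wording, slot names, and example KG information are hypothetical; the actual templates of the framework are not given here.

```python
from string import Formatter

# Hypothetical explanation template; each {field} is one slot.
TEMPLATE = ("'{result}' was retrieved because it {relation} '{entity}', "
            "which matches your query term '{query_term}'.")


def template_slots(template):
    """Collect the named slots of a format-string template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def is_high_quality(template, kg_info):
    """High quality if every slot can be filled with non-empty KG information."""
    return all(kg_info.get(slot) for slot in template_slots(template))


def information_availability(template, explanations_info):
    """Equation (6): ratio of high-quality explanations to all explanations."""
    if not explanations_info:
        return 0.0
    high = sum(1 for info in explanations_info if is_high_quality(template, info))
    return high / len(explanations_info)


infos = [
    {"result": "DS-17", "relation": "describes", "entity": "clock gating", "query_term": "low power"},
    {"result": "DS-42", "relation": "describes", "entity": "clock gating"},  # query_term missing
]
print(information_availability(TEMPLATE, infos))  # 0.5
```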

In addition to the information availability, we calculate the mean explainability precision (MEP) [17,33] of the explanations associated with the IR output, as defined in Equation (7). MEP measures the average proportion of explainable results of an IR algorithm, or of recommendations of a recommender system.

$$\mathrm{MEP} = \frac{1}{|U|} \sum_{u \in U} \frac{N^{u}_{\text{exp}}}{N^{u}} \qquad (7)$$

Here, $|U|$ is the number of users, $N^{u}_{\text{exp}}$ is the number of explainable results for user $u$, and $N^{u}$ is the total number of results for user $u$.
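A minimal sketch of the MEP computation, assuming each user’s result list is known and that a result counts as explainable when an explanation (for example, a KG path) exists for that user and result. The data layout and the identifiers are hypothetical; a user with an empty result list contributes 0 but still counts towards $|U|$ in this sketch.

```python
def mean_explainability_precision(results_per_user, explainable):
    """Average over users of the share of their results that are explainable."""
    if not results_per_user:
        return 0.0
    total = 0.0
    for user, results in results_per_user.items():
        if results:  # empty result lists contribute 0
            n_exp = sum(1 for r in results if (user, r) in explainable)
            total += n_exp / len(results)
    return total / len(results_per_user)


# Hypothetical users, results, and explainable (user, result) pairs.
results = {"u1": ["a", "b", "c", "d"], "u2": ["a", "e"]}
explainable = {("u1", "a"), ("u1", "b"), ("u1", "c"), ("u2", "e")}
print(mean_explainability_precision(results, explainable))  # (0.75 + 0.5) / 2
```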

The third and fourth metrics focus on the quality of the explanation itself. We use bilingual evaluation understudy (BLEU) [34] and Rouge-L [35] scores to calculate how well a generated explanation represents the explained result. The BLEU score measures the closeness between a machine-generated text and the original human-defined one. We use this score to measure how well a generated textual explanation represents the original information that the KG paths have provided to the explainability algorithm. The Rouge-L score measures the ability of a machine-generated text to summarize the original information. In our case, this score represents the ability of the explanation to summarize the information that led to retrieving a certain result from the KG structure.
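Rouge-L rests on the longest common subsequence (LCS) between the generated text and the reference. The sketch below computes the LCS-based F-measure with plain whitespace tokenization and no stemming or lowercasing, which are simplifying assumptions; the example sentences are hypothetical. BLEU is not sketched because its n-gram clipping and brevity penalty make a faithful version considerably longer.

```python
def lcs_length(a, b):
    """Dynamic-programming length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference, beta=1.0):
    """LCS-based F-measure: (1 + b^2) * P * R / (R + b^2 * P)."""
    c, r = candidate.split(), reference.split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


# Hypothetical explanation (candidate) versus the KG-path information (reference).
reference = "DS-17 describes clock gating which matches low power"
candidate = "DS-17 describes clock gating for low power"
print(rouge_l(candidate, reference))  # LCS = 6 tokens, P = 6/7, R = 6/8
```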

While measure, , score, and BLEU and Rouge-L scores are all well-defined measures that are used in the literature, we develop the and metrics to correspond to the nature of our domain-oriented framework and to demonstrate its ability to perform accurate information retrieval and generate comprehensive explanations for the different domain-specific environments it is used for.

In the following, we elaborate on the implementation of our framework within two evaluation use cases. We focus on the strategy for implementing the framework’s components in each domain, the results of evaluating the framework’s domain coverage, and its transferability.

4.2. Use Case 2: CV-Job Matching and Recommendation

In the second use case, we choose a different domain to test the framework. We build an information retrieval algorithm that supports a job recommendation system. The field of matching job postings to job seekers features a different set of requirements, which results from the varying structures and content of job postings and job seekers’ CVs [36].

CV documents include information that summarizes the user profile. Recommending a certain job to the CV holder requires finding the similarities between their skills and qualifications and those required by the job posting. This, in turn, requires the information retrieval algorithm to focus on the parts of the CV that contain the user’s qualifications and skills more than on other sections, such as hobbies and interests. The same applies to the job postings themselves, which contain several parts about the company portfolio that are repeated in every job posting and thus do not contribute to an effective match with the specific job seeker.

To accomplish this matching effectively, we build a customized named entity recognition (NER) classifier that supports the information retrieval, and later the recommendation and explanation systems, in separating the different textual parts of the job posting and the user’s CV to better model them in the knowledge graph.

5. Discussion

The implementation of our framework in the presented use cases shows its adaptability to multiple domains while generating explanations for the retrieved search results and recommendations. In the evaluation experiments, we focused on the main contributions of our approach, namely the ability of the framework to embed domain-specific requirements and the transferability of the framework to multiple domains with minimum to no changes in the intelligent algorithms.

Embedding domain requirements in the two use cases was a direct result of adopting the domain-specific components in the proposed framework. Those requirements are captured from multiple parts of the system, including the rules that domain experts define, the results of the EDA process, and the content of databases. To the best of our knowledge, no other solution in the literature includes domain requirements from all of those sources. Here, we especially refer to the domain-specific solutions in the comparison in Table 1, which only consider databases for extracting domain-related features. The importance of including other sources of domain requirements can be seen, for example, in the ability of expert-defined rules to represent differences between documents that cannot be captured in the database itself, which existing solutions do not exploit. This prevents other solutions from reaching the level of domain adaptability that the presented framework achieves.

Results from our experiments show that adapting the IR and explainability algorithms to the requirements of a certain domain did not limit their ability to be transferred to other domains. The domains in which we implemented our framework revealed different features and requirements. Despite that, we were able to use the same IR and explainability algorithms without compromising their accuracy. Numerical evaluation showed that the IR algorithm maintained an accuracy level higher than 95% in both use cases. The comparable literature in Table 1 focuses on the accuracy of the IR or recommendation algorithms, namely [11,15,24,25]. Within this context, we show in Table 2 and Table 3 that our framework was able to achieve a high performance of the IR algorithm, ranging between 87% and 99% in both use cases, depending on the length of the user query and the database size. This result is not only comparable to the 88.1% accuracy achieved by Wang et al. [15] using their Knowledge-aware Path Recurrent Network (KPRN), but it also adds the ability to consider domain requirements in the intelligent IR algorithm. Unlike the domain-intrinsic KPRN, our framework provides the same or higher accuracy in domain-restricted environments, such as the ones demonstrated in the two use cases.

The explainability algorithm reached an MEP score of 85.6% in Use Case 2 and between 82.5% and 99.6% in Use Case 1, depending on the length of the search query. In both cases, the MEP score is comparable to or surpasses the result of Chen and Miyazaki [17], who achieve an 87.25% MEP score with a similar explainability algorithm implemented on a Slack-Item-KG of 100k items. It should also be noted in this comparison that our main contribution is not achieving the highest accuracy levels of the IR and explainability algorithms, but rather achieving accurate results in a domain-specific environment, with a higher level of restrictions and data complexity than in the general-purpose datasets used in [15,17,24,25]. The ability of our solution to consider those requirements while achieving high IR and explainability scores is the added value that our framework offers in comparison to the current state of the art. In Table 4, we summarize the comparative MEP scores of our proposed framework and other similar solutions.
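To make the compared measure concrete, the following is a minimal sketch of Mean Explainability Precision (MEP), assuming the common formulation of Abdollahi and Nasraoui [33]: for each user, the share of items in the top-n list that are explainable, averaged over all users. It further assumes, for illustration only, that an item counts as "explainable" when the knowledge graph yields at least one path linking it to the user or query. The function name and toy data are hypothetical, and the exact definition used in this work or by Chen and Miyazaki may differ in detail.

```python
from typing import Dict, List, Set


def mean_explainability_precision(
    top_n: Dict[str, List[str]],
    explainable: Dict[str, Set[str]],
    n: int,
) -> float:
    """MEP@n: per-user share of explainable items in the top-n list, averaged over users."""
    if not top_n or n <= 0:
        return 0.0
    total = 0.0
    for user, ranked in top_n.items():
        # Count the items in this user's top-n list that have an explanation
        # (assumed here: items for which the KG yields at least one linking path).
        hits = sum(1 for item in ranked[:n] if item in explainable.get(user, set()))
        total += hits / n
    return total / len(top_n)


# Toy data (hypothetical): two users with top-4 result lists.
top_n = {
    "user_a": ["doc_1", "doc_2", "doc_3", "doc_4"],
    "user_b": ["doc_5", "doc_6", "doc_7", "doc_8"],
}
explainable = {
    "user_a": {"doc_1", "doc_2", "doc_4"},           # 3 of 4 explainable
    "user_b": {"doc_5", "doc_6", "doc_7", "doc_8"},  # 4 of 4 explainable
}
mep = mean_explainability_precision(top_n, explainable, n=4)
print(f"MEP@4 = {mep:.3f}")
```

Under this formulation, a larger graph or a longer query raises MEP simply by making more of the top-n items reachable by some path, which is consistent with the trend discussed for Table 4.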

Table 4 shows that the MEP score increases with data size. This increase can be in the dataset itself, as in the case of using Slack-Item-KG 1M instead of 100k, or in the search query, as in the difference between long and short queries in our first use case. The enhanced potential for generating explanations from larger amounts of data is due to the increased potential to find relations and paths in the KG that correspond to the user query: with more information in the search query, or larger knowledge graphs, more paths can be extracted and used for constructing the result explanation. Here, we mainly compared the explainability algorithm in our approach to the work of Chen and Miyazaki, since the authors explicitly use the MEP measure to evaluate their work. We then extended our evaluation to include BLEU and Rouge-L scores, as illustrated in Figure 6, Figure 7 and Figure 12.

The evaluation of the proposed framework was implemented on two use cases with different domain requirements to demonstrate the transferability of the solution. In general, the proposed framework is designed generically so that it adapts to any domain of interest. The implementation of our framework in other domains, such as medical [23], energy [38], or educational [39] domains, can be accomplished similarly, as long as the domain requirements are defined through the experts and data sources. The IR and explainability algorithms are then transferable among those domains, because they depend on the KG structure. This supports the sustainability of the proposed framework when it is used in one domain and then transferred to another. Another sustainability aspect of our framework comes from the potential to develop and extend the knowledge graph itself during the lifetime, and hence the ongoing extension, of the industrial process that the framework and its underlying data support. Since the proposed framework includes the elements necessary to construct the knowledge graph, it inherently enables the graph's expansion and development. This allows for long-term use of the framework, taking into account new data, new domain requirements, and new user queries.

Our framework was developed to handle textual data sources. This influenced the nature of the domain-specific and domain-agnostic components we used in the framework. That said, we also acknowledge a limitation of our framework with respect to image data sources, since it lacks image-processing components on its input side. Although the current framework is well suited to pure textual data sources and well-annotated image data, it can still be extended with specific elements that handle pure visual data sources and embed them in the knowledge graph construction process. Once the image data is embedded in the KG, the IR and explainability algorithms will be able to query these data types directly, in response to the same user queries.

Author Contributions

Conceptualization, H.A.-R., C.W. and J.Z.; methodology, H.A.-R., C.W., J.Z., M.D. and M.F.; software, H.A.-R., C.W. and J.Z.; validation, H.A.-R., C.W., J.Z., M.D. and M.F.; formal analysis, H.A.-R., C.W., J.Z., M.D. and M.F.; investigation, H.A.-R., C.W. and J.Z.; resources, H.A.-R., C.W., J.Z. and M.D.; data curation, H.A.-R., C.W. and J.Z.; writing—original draft preparation, H.A.-R., C.W., J.Z. and M.D.; writing—review and editing, H.A.-R., C.W., J.Z., M.D. and M.F.; visualization, H.A.-R., C.W., J.Z. and M.D.; supervision, H.A.-R., C.W., M.D. and M.F.; project administration, H.A.-R., C.W. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the EU project iDev40. iDev40 received funding from the ECSEL Joint Undertaking (JU) under grant agreement No 783163. The JU receives support from the European Union’s Horizon 2020 research and innovation program and Austria, Germany, Belgium, Italy, Spain, Romania. Information and results set out in this publication are those of the authors and do not necessarily reflect the opinion of the ECSEL Joint Undertaking.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data used in the first use case includes confidential information. Therefore, it is not publicly available. Data used in the second use case is publicly available, as elaborated in Section 4.2.1. This data can be found at https://www.monsterindia.com (accessed on 4 August 2020).

Acknowledgments

The authors acknowledge and thank Chirayu Upadhyay for his support with the recommender system in Use Case 2.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zenkert, J.; Weber, C.; Dornhöfer, M.; Abu-Rasheed, H.; Fathi, M. Knowledge Integration in Smart Factories. Encyclopedia 2021, 1, 792–811.

- Li, X.-H.; Cao, C.C.; Shi, Y.; Bai, W.; Gao, H.; Qiu, L.; Wang, C.; Gao, Y.; Zhang, S.; Xue, X.; et al. A Survey of Data-driven and Knowledge-aware eXplainable AI. IEEE Trans. Knowl. Data Eng. 2020, 34, 29–49.

- Samek, W.; Montavon, G.; Vedaldi, A.; Hansen, L.K.; Müller, K.-R. (Eds.) Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11700, ISBN 978-3-030-28953-9.

- William, B.F.; Baeza-Yates, R. Information Retrieval: Data Structures and Algorithms; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1992.

- Polley, S.; Koparde, R.R.; Gowri, A.B.; Perera, M.; Nuernberger, A. Towards Trustworthiness in the Context of Explainable Search. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 11–15 July 2021; pp. 2580–2584.

- Yang, Z. Biomedical Information Retrieval incorporating Knowledge Graph for Explainable Precision Medicine. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 25–29 July 2020; p. 2486.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Abu-Rasheed, H.; Weber, C.; Zenkert, J.; Krumm, R.; Fathi, M. Explainable Graph-Based Search for Lessons-Learned Documents in the Semiconductor Industry. In Intelligent Computing; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer International Publishing: Cham, Switzerland, 2022; Volume 283, pp. 1097–1106. ISBN 978-3-030-80118-2.

- Tiddi, I.; Lécué, F.; Hitzler, P. (Eds.) Knowledge Graphs for Explainable Artificial Intelligence: Foundations, Applications and Challenges; Studies on the Semantic Web; IOS Press: Amsterdam, The Netherlands, 2020; ISBN 978-1-64368-081-1.

- Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. In Natural Language Processing and Chinese Computing; Tang, J., Kan, M.-Y., Zhao, D., Li, S., Zan, H., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11839, pp. 563–574. ISBN 978-3-030-32235-9.

- Moon, S.; Shah, P.; Kumar, A.; Subba, R. OpenDialKG: Explainable Conversational Reasoning with Attention-based Walks over Knowledge Graphs. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 845–854.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Ehsan, U.; Harrison, B.; Chan, L.; Riedl, M.O. Rationalization: A Neural Machine Translation Approach to Generating Natural Language Explanations. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018; pp. 81–87.

- Wang, X.; Wang, D.; Xu, C.; He, X.; Cao, Y.; Chua, T.-S. Explainable Reasoning over Knowledge Graphs for Recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5329–5336.

- Xian, Y.; Fu, Z.; Muthukrishnan, S.; de Melo, G.; Zhang, Y. Reinforcement Knowledge Graph Reasoning for Explainable Recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 285–294.

- Chen, Y.; Miyazaki, J. A Model-Agnostic Recommendation Explanation System Based on Knowledge Graph. In Database and Expert Systems Applications; Hartmann, S., Küng, J., Kotsis, G., Tjoa, A.M., Khalil, I., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12392, pp. 149–163. ISBN 978-3-030-59050-5.

- Fensel, D.; Şimşek, U.; Angele, K.; Huaman, E.; Kärle, E.; Panasiuk, O.; Toma, I.; Umbrich, J.; Wahler, A. Introduction: What Is a Knowledge Graph? In Knowledge Graphs; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–10. ISBN 978-3-030-37438-9.

- Alzoubi, W.A. Dynamic Graph based Method for Mining Text Data. WSEAS Trans. Syst. Control 2020, 15, 453–458.

- Alzoubi, W.A. An Improved Graph based Rules Mining Technique from Text. Eng. World 2020, 2, 76–81.

- Abu-Rasheed, H.; Weber, C.; Zenkert, J.; Czerner, P.; Krumm, R.; Fathi, M. A Text Extraction-Based Smart Knowledge Graph Composition for Integrating Lessons Learned During the Microchip Design. In Intelligent Systems and Applications; Arai, K., Kapoor, S., Bhatia, R., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2021; Volume 1251, pp. 594–610. ISBN 978-3-030-55186-5.

- Seeliger, A.; Pfaff, M.; Krcmar, H. Semantic Web Technologies for Explainable Machine Learning Models: A Literature Review. In Joint Proceedings of the 6th International Workshop on Dataset PROFIling and Search and the 1st Workshop on Semantic Explainability co-located with the 18th International Semantic Web Conference (ISWC 2019), Auckland, New Zealand, 27 October 2019; Volume 2465, pp. 30–45.

- Naiseh, M. Explainability Design Patterns in Clinical Decision Support Systems. In Research Challenges in Information Science; Dalpiaz, F., Zdravkovic, J., Loucopoulos, P., Eds.; Lecture Notes in Business Information Processing; Springer International Publishing: Cham, Switzerland, 2020; Volume 385, pp. 613–620. ISBN 978-3-030-50315-4.

- Song, W.; Duan, Z.; Yang, Z.; Zhu, H.; Zhang, M.; Tang, J. Explainable Knowledge Graph-Based Recommendation via Deep Reinforcement Learning. arXiv 2019, arXiv:1906.09506. Available online: http://arxiv.org/abs/1906.09506 (accessed on 17 November 2021).

- Xie, L.; Hu, Z.; Cai, X.; Zhang, W.; Chen, J. Explainable recommendation based on knowledge graph and multi-objective optimization. Complex Intell. Syst. 2021, 7, 1241–1252.

- Shalaby, W.; AlAila, B.; Korayem, M.; Pournajaf, L.; AlJadda, K.; Quinn, S.; Zadrozny, W. Help me find a job: A graph-based approach for job recommendation at scale. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 1544–1553.

- Zhang, J.; Li, J. Enhanced Knowledge Graph Embedding by Jointly Learning Soft Rules and Facts. Algorithms 2019, 12, 265.

- Cheng, K.; Yang, Z.; Zhang, M.; Sun, Y. UniKER: A Unified Framework for Combining Embedding and Definite Horn Rule Reasoning for Knowledge Graph Inference. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing; Association for Computational Linguistics, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 9753–9771. Available online: https://aclanthology.org/2021.emnlp-main.769 (accessed on 29 November 2021).

- Yu, J.; McCluskey, K.; Mukherjee, S. Tax Knowledge Graph for a Smarter and More Personalized TurboTax. arXiv 2020, arXiv:2009.06103. Available online: http://arxiv.org/abs/2009.06103 (accessed on 29 November 2021).

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959, 1, 269–271.

- Tartir, S.; Arpinar, I.B. Ontology Evaluation and Ranking using OntoQA. In Proceedings of the International Conference on Semantic Computing (ICSC 2007), Irvine, CA, USA, 17–19 September 2007; pp. 185–192.

- García, J.; García-Peñalvo, F.J.; Therón, R. A Survey on Ontology Metrics. In Knowledge Management, Information Systems, E-Learning, and Sustainability Research; Lytras, M.D., Ordonez De Pablos, P., Ziderman, A., Roulstone, A., Maurer, H., Imber, J.B., Eds.; Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 111, pp. 22–27. ISBN 978-3-642-16317-3.

- Abdollahi, B.; Nasraoui, O. Explainable Matrix Factorization for Collaborative Filtering. In Proceedings of the 25th International Conference Companion on World Wide Web–WWW ’16 Companion, Montréal, QC, Canada, 11–15 April 2016; pp. 5–6.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics–ACL ’02, Philadelphia, PA, USA, 7–12 July 2002; p. 311.

- Lin, C.-Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

- Upadhyay, C.; Abu-Rasheed, H.; Weber, C.; Fathi, M. Explainable Job-Posting Recommendations Using Knowledge Graphs and Named Entity Recognition. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 3291–3296.

- Gambhir, M.; Gupta, V. Recent automatic text summarization techniques: A survey. Artif. Intell. Rev. 2017, 47, 1–66.

- Li, H.; Deng, J.; Yuan, S.; Feng, P.; Arachchige, D.D.K. Monitoring and Identifying Wind Turbine Generator Bearing Faults Using Deep Belief Network and EWMA Control Charts. Front. Energy Res. 2021, 9, 799039.

- Abu-Rasheed, H.; Weber, C.; Harrison, S.; Zenkert, J.; Fathi, M. What to Learn Next: Incorporating Student, Teacher and Domain Preferences for a Comparative Educational Recommender System. In EDULEARN19 Proceedings, Palma, Spain, 1–3 July 2019; pp. 6790–6800.

- Zenkert, J.; Klahold, A.; Fathi, M. Knowledge discovery in multidimensional knowledge representation framework: An integrative approach for the visualization of text analytics results. Iran J. Comput. Sci. 2018, 1, 199–216.

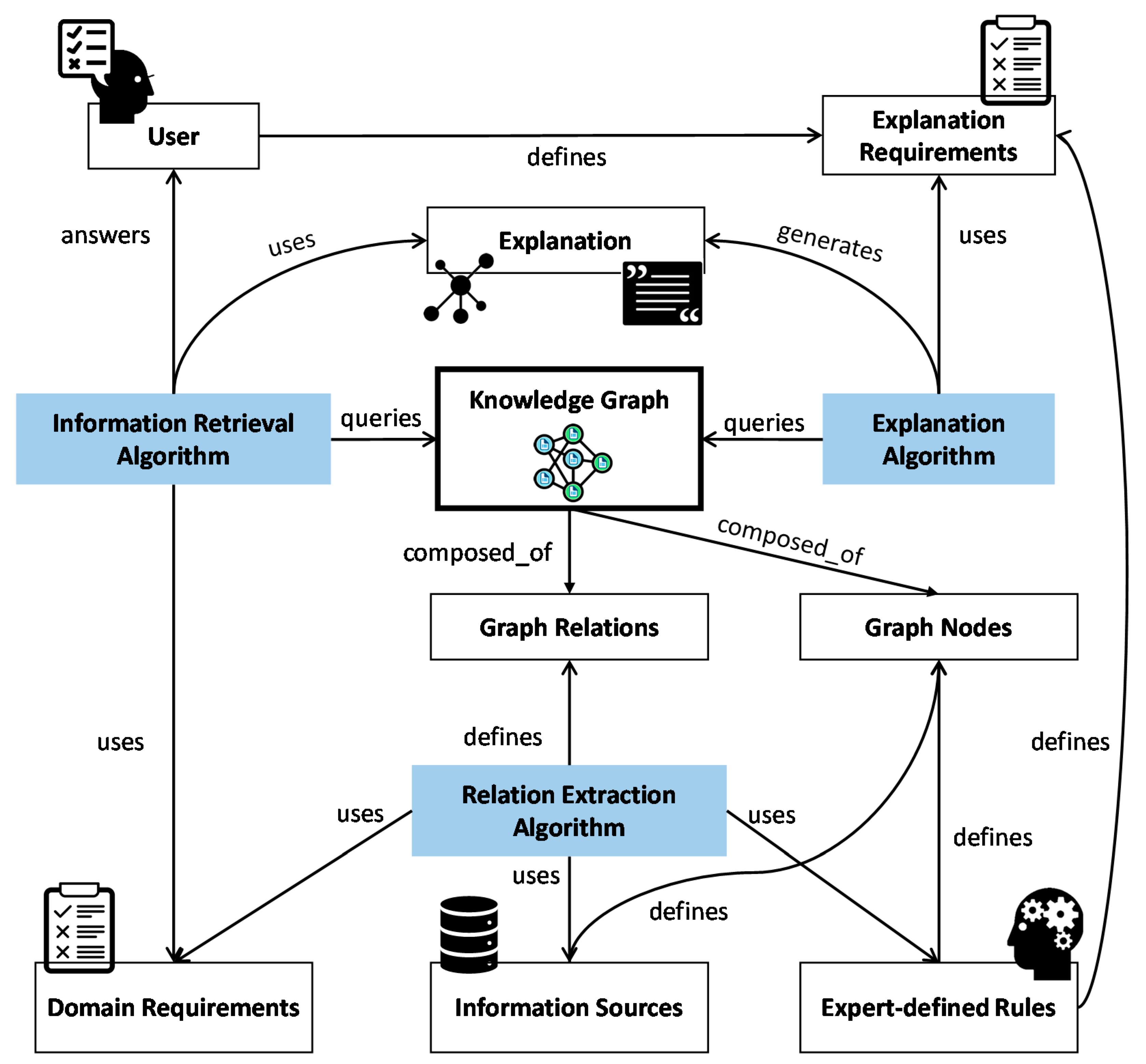

Figure 1.

Proposed framework for transferrable, domain-oriented, explainable IR based on knowledge graphs. Domain-specific elements are shown in white, while domain-agnostic algorithms are shown in blue.

Figure 2.

Example of integrating expert-defined rules and domain requirements in the creation of graph nodes and relations. In the proposed framework, expert knowledge is used to define the nodes and relations of the graph. Domain requirements are presented here as a list, which the relation extraction algorithm uses to define graph relations.
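The idea in Figure 2, a requirements list steering relation extraction, can be sketched as follows. The figure does not specify the matching procedure, so this sketch assumes simple token matching of concept names; the requirements list, its relation labels, and the example documents are all hypothetical. Documents are linked to the required concepts they mention, and documents that mention the same required concept are linked to each other.

```python
import re
from itertools import combinations
from typing import Dict, List, Tuple

Edge = Tuple[str, str, str]  # (source node, relation label, target node)


def extract_relations(documents: Dict[str, str], requirements: Dict[str, str]) -> List[Edge]:
    """Link documents to required concepts, then link documents sharing a concept."""
    edges: List[Edge] = []
    docs_per_concept: Dict[str, List[str]] = {concept: [] for concept in requirements}
    for doc_id, text in documents.items():
        # Naive tokenization (an assumption): lowercase alphanumeric runs.
        tokens = set(re.findall(r"[a-z0-9_]+", text.lower()))
        for concept, relation in requirements.items():
            if concept in tokens:
                edges.append((doc_id, relation, concept))
                docs_per_concept[concept].append(doc_id)
    # Document-document relations for documents that mention the same required concept.
    for concept, doc_ids in docs_per_concept.items():
        for a, b in combinations(doc_ids, 2):
            edges.append((a, "SHARES_" + concept.upper(), b))
    return edges


# Hypothetical requirements list: concept -> relation label, as an expert might define it.
requirements = {"bonding": "CONCERNS_PROCESS", "layout": "CONCERNS_DESIGN_STEP"}
documents = {
    "doc_1": "Wire bonding failure observed during final test.",
    "doc_2": "Timing issue traced back to the layout.",
    "doc_3": "Bonding parameters adjusted after the failure.",
}
for edge in extract_relations(documents, requirements):
    print(edge)
```

Because the requirements are data rather than code, swapping in another domain's list changes the graph relations without touching the extraction function, which mirrors the separation of domain-specific and domain-agnostic parts shown in Figure 1.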

Figure 3.

Visual and textual explanations based on the knowledge graph. Results that are retrieved by the IR algorithm are extracted from the graph and shown visually as a sub-graph, which shows the interlinks between different results. Textual explanations are generated from the reasoning behind linking two or more nodes in the graph to each other.
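One way to obtain the sub-graph described in Figure 3 is sketched below. Dijkstra's shortest-path algorithm [30] appears in the reference list, but the caption does not state how retrieved results are linked; the sketch therefore assumes, for illustration only, that the explanation sub-graph is the union of the shortest paths between every pair of retrieved nodes. Node names and edge weights are hypothetical.

```python
import heapq
from itertools import combinations
from typing import Dict, List, Optional, Set, Tuple

Graph = Dict[str, Dict[str, float]]  # undirected weighted adjacency map


def add_edge(graph: Graph, u: str, v: str, weight: float = 1.0) -> None:
    graph.setdefault(u, {})[v] = weight
    graph.setdefault(v, {})[u] = weight


def shortest_path(graph: Graph, source: str, target: str) -> Optional[List[str]]:
    """Dijkstra's algorithm; returns the node sequence from source to target, or None."""
    dist = {source: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, source)]
    visited: Set[str] = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == target:
            break
        for nbr, w in graph.get(node, {}).items():
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if target not in visited:
        return None
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


def explanation_subgraph(graph: Graph, results: List[str]) -> Set[Tuple[str, str]]:
    """Union of the shortest paths between every pair of retrieved results."""
    edges: Set[Tuple[str, str]] = set()
    for a, b in combinations(results, 2):
        path = shortest_path(graph, a, b)
        if path is not None:
            for u, v in zip(path, path[1:]):
                edges.add((min(u, v), max(u, v)))  # undirected edge, canonical order
    return edges


g: Graph = {}
add_edge(g, "doc_A", "issue_X", 1.0)
add_edge(g, "issue_X", "doc_C", 1.0)
add_edge(g, "doc_A", "doc_C", 5.0)
add_edge(g, "doc_C", "doc_D", 1.0)
print(explanation_subgraph(g, ["doc_A", "doc_C"]))
print(" -> ".join(shortest_path(g, "doc_A", "doc_D") or []))
```

The node sequence of each path is also what a textual explanation can be generated from, since it records the reasoning chain that links two results.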

Figure 4.

The construction of the multidimensional knowledge graph from chip-design documents. Four types of nodes are defined, along with their relations. Relations exist amongst nodes of the same type and among those of different types.

Figure 5.

Verbal explanation template used in the implementation of Use Case 1. The template is designed based on expert requirements for explaining the semantic search results.

Figure 6.

BLEU and Rouge-L scores for the search results. The figure represents the scores for the short search queries. The average BLEU score for the short search queries (on the horizontal axis) reached 37.2%, and the average Rouge-L score was 25.1%.

Figure 7.

BLEU and Rouge-L scores for the search results. The figure shows the scores of the long search queries. The average BLEU score for the long search queries (on the horizontal axis) reached 38.3%, and the average Rouge-L score was 27.4%.

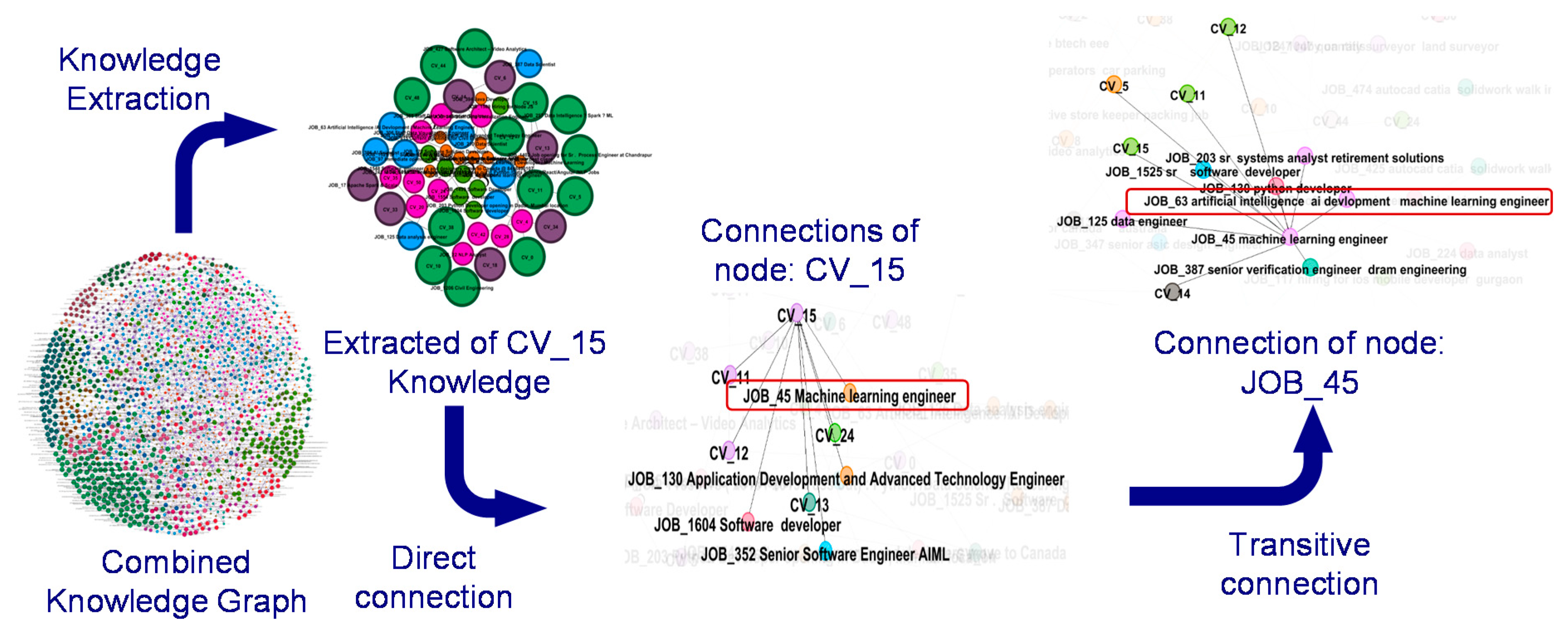

Figure 8.

Direct relations between user profiles and relevant job postings. Each relation is defined based on the similarity score between the two nodes.

Figure 9.

The use of direct and transitive relations in the knowledge graph. Here, jobPosting_63 is recommended to userProfile_15, since it is relevant to the directly connected jobPosting_45.
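The direct and transitive relations of Figure 9 can be illustrated with a short sketch. It assumes that both user-job and job-job relations exist when a similarity score exceeds one shared threshold; the threshold value and the similarity scores below are hypothetical and serve only to reproduce the userProfile_15 → jobPosting_45 → jobPosting_63 chain from the caption.

```python
from typing import Dict, Optional, Tuple

Pair = Tuple[str, str]


def recommend(
    user: str,
    user_job_sim: Dict[Pair, float],
    job_job_sim: Dict[Pair, float],
    threshold: float = 0.5,
) -> Dict[str, Optional[str]]:
    """Map each recommended job to its intermediate job (None for direct recommendations)."""
    # Direct relations: the user-job similarity exceeds the threshold.
    direct = {job for (u, job), s in user_job_sim.items() if u == user and s > threshold}
    recs: Dict[str, Optional[str]] = {job: None for job in sorted(direct)}
    # Transitive relations: a job strongly related to a directly connected job.
    for (j1, j2), s in job_job_sim.items():
        if s <= threshold:
            continue
        for src, dst in ((j1, j2), (j2, j1)):
            if src in direct and dst not in recs:
                recs[dst] = src
    return recs


# Hypothetical similarity scores.
user_job_sim = {
    ("userProfile_15", "jobPosting_45"): 0.72,
    ("userProfile_15", "jobPosting_63"): 0.31,
}
job_job_sim = {("jobPosting_45", "jobPosting_63"): 0.64}
print(recommend("userProfile_15", user_job_sim, job_job_sim))
```

Keeping the intermediate posting alongside each transitive recommendation is what allows the second explanation template in Figure 11 to name the link.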

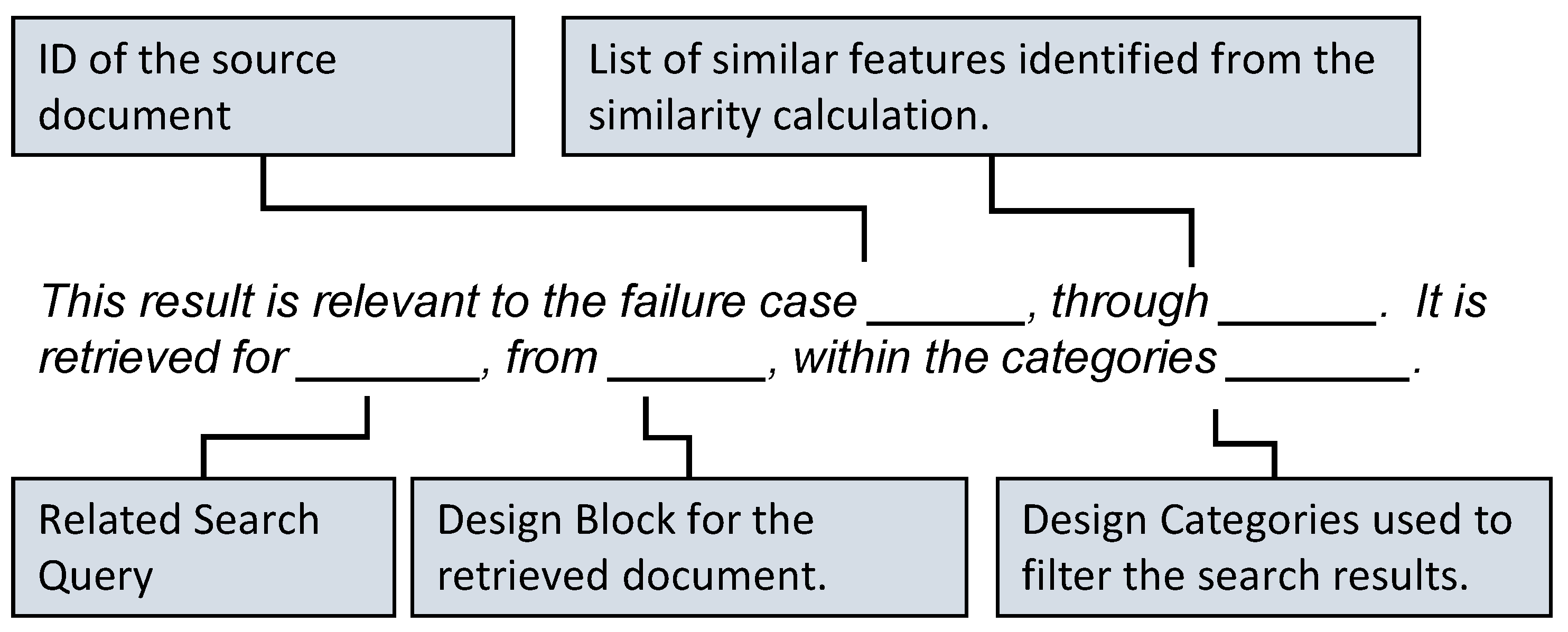

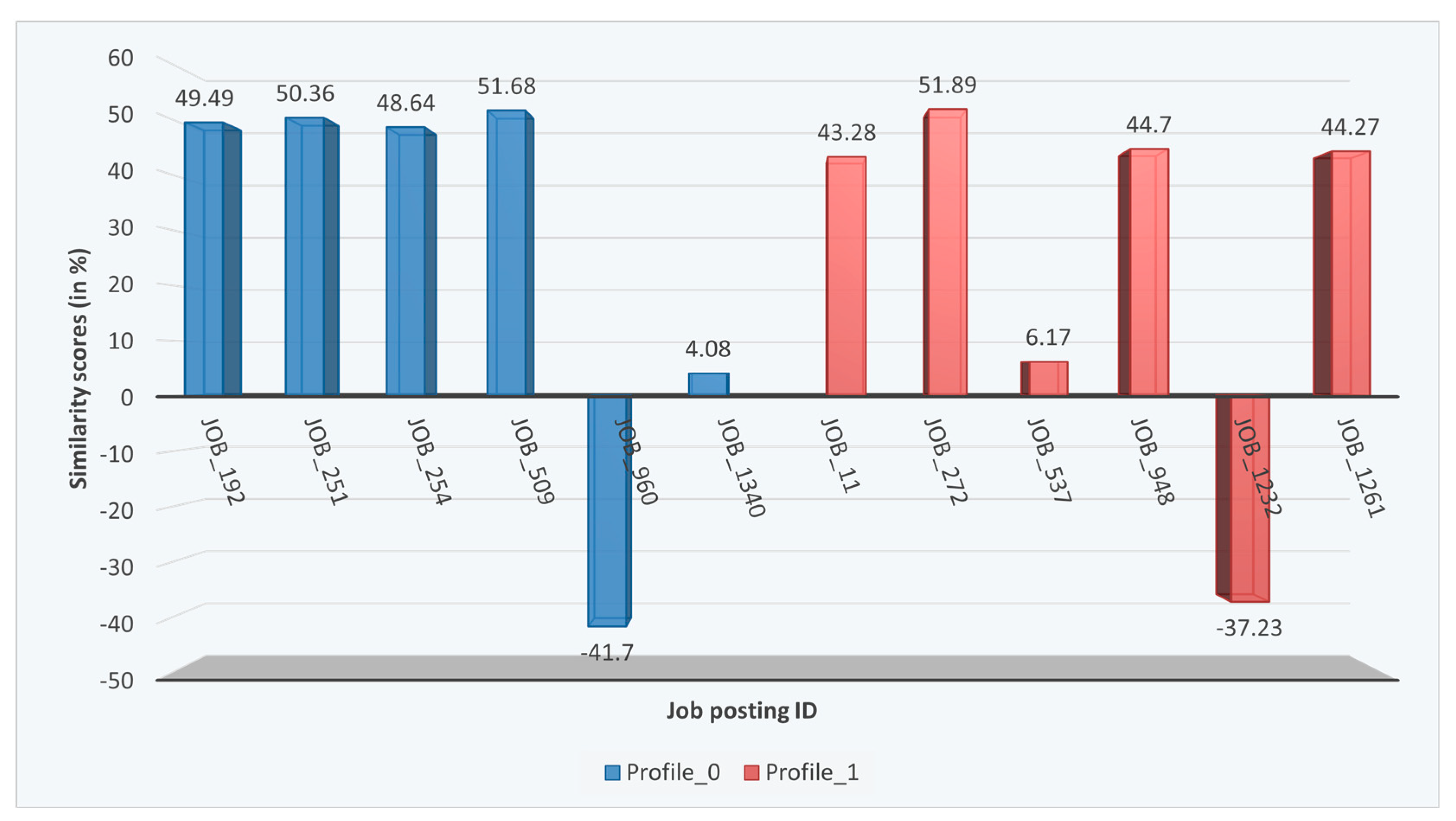

Figure 10.

A sample of the overall calculated similarity scores between user profiles and job postings. Similarities above a predefined threshold are used to create corresponding relations in the knowledge graph. Negative similarity scores occur when the angle between two document vectors exceeds 90°, which yields a negative cosine value in the cosine similarity calculation.
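The similarity-and-threshold step of Figure 10 can be sketched as follows. Note that a negative cosine is only possible when document vectors have signed components (for example, dense embeddings); purely non-negative vectors such as raw term counts always give scores of zero or more. The vectorization actually used is not stated in the caption, so the two-dimensional vectors, identifiers, and threshold below are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """cos(theta) = u.v / (|u||v|); negative when the angle exceeds 90 degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # undefined for zero vectors; treated as "no similarity" here
    return dot / (norm_u * norm_v)


def build_relations(
    profiles: Dict[str, List[float]],
    postings: Dict[str, List[float]],
    threshold: float,
) -> List[Tuple[str, str, float]]:
    """Create a profile-posting relation for every score above the threshold."""
    relations = []
    for p_id, p_vec in profiles.items():
        for j_id, j_vec in postings.items():
            score = cosine_similarity(p_vec, j_vec)
            if score > threshold:
                relations.append((p_id, j_id, score))
    return relations


profiles = {"userProfile_1": [1.0, 0.5]}
postings = {"jobPosting_1": [1.0, 0.4], "jobPosting_2": [-1.0, 0.2]}
for p_id, j_id, score in build_relations(profiles, postings, threshold=0.5):
    print(p_id, j_id, round(score, 3))
print(round(cosine_similarity(profiles["userProfile_1"], postings["jobPosting_2"]), 3))
```

Here jobPosting_2 points largely in the opposite direction of the profile vector, so its score is negative and no relation is created for it.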

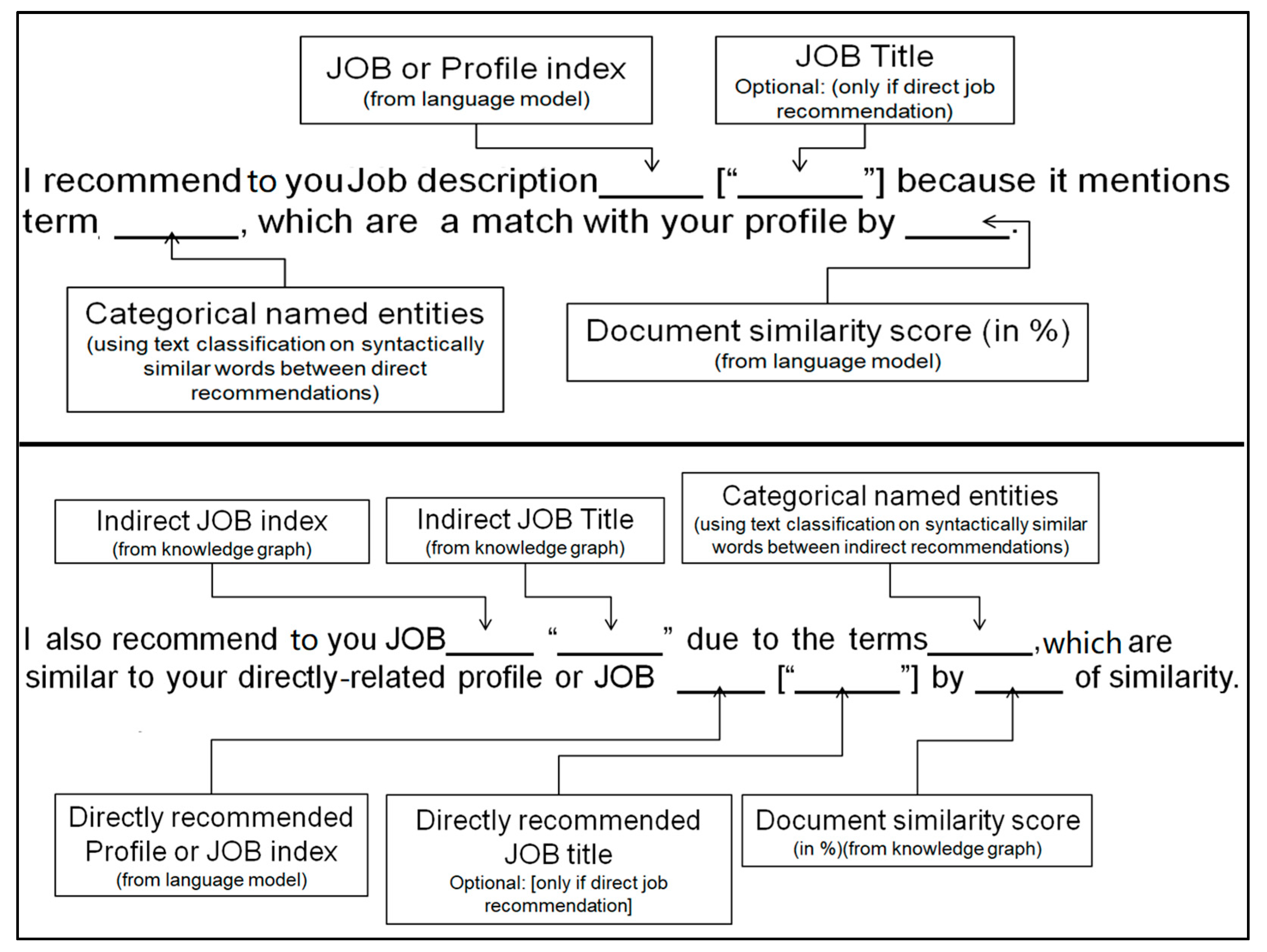

Figure 11.

Two templates are used to generate the textual explanations. The first template is designed for direct recommendations, where only the link between the user profile and the job posting is explained. The second template includes information about the intermediate link between the user profile and the job posting, within the transitive relation.
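A minimal sketch of the two-template scheme in Figure 11 is shown below. The template wording is hypothetical (the framework's actual templates are shown only in the figure), and the shared entities are assumed to come from an upstream step such as the customized NER whose classes are listed in Table 3.

```python
from typing import List, Optional

# Hypothetical template wording; the framework's actual templates (Figure 11) may differ.
DIRECT_TEMPLATE = "{job} is recommended because it matches your profile in: {entities}."
TRANSITIVE_TEMPLATE = (
    "{job} is recommended because it is similar to {via}, "
    "which matches your profile in: {entities}."
)


def explain(job: str, entities: List[str], via: Optional[str] = None) -> str:
    """Fill the direct template, or the transitive one when an intermediate posting is given."""
    joined = ", ".join(entities)
    if via is None:
        return DIRECT_TEMPLATE.format(job=job, entities=joined)
    return TRANSITIVE_TEMPLATE.format(job=job, via=via, entities=joined)


print(explain("jobPosting_45", ["Python", "data analysis"]))
print(explain("jobPosting_63", ["Python"], via="jobPosting_45"))
```

Fixed templates keep the generated explanations predictable for domain experts, at the cost of lower lexical overlap with free-form reference explanations, which is one plausible reason for the moderate BLEU and Rouge-L scores reported in Figure 12.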

Figure 12.

BLEU and Rouge-L scores for a sample of recommended job postings to two user profiles.

Table 1.

Comparison between different explainability approaches.

| Reference | Year | Model-Agnostic/Intrinsic | Recommendation Approach | Explainability Approach | Retrieval Task | Domain Requirements Based on | Transferable with Respect to Domain Requirements |
|---|---|---|---|---|---|---|---|
| Chen and Miyazaki [17] | 2020 | Model-agnostic | Conventional and intelligent recommendation systems | Textual explanation generated from a translator after ranking graph paths | Graph path retrieval | Not domain-specific | Yes |
| Moon et al. [11] | 2019 | Model-agnostic | Entity recommendations based on graph walker | Explanations are based on the generated paths from the graph walker | Graph path retrieval | The database | Yes |
| Song et al. [24] | 2019 | Model-intrinsic | Based on a Markov decision process | Usage of user-to-item paths to generate explanations | Graph path retrieval in user-item-entity graph | Not domain-specific | No |
| Wang et al. [15] | 2019 | Model-intrinsic | Intelligent model to learn path semantics and generate recommendations | Pooling algorithm to detect the path strength and its role in the prediction | Graph path retrieval | Not domain-specific | No |
| Xie et al. [25] | 2021 | Model-intrinsic | Recommendation based on a user-item KG with item ratings | Explanation based on KG and multi-objective optimization | Graph path retrieval | Not domain-specific | No |
| Our framework | 2021 | Model-agnostic | Graph path recommendation | Graph-based | Node retrieval, graph path retrieval | Database, expert rules, explicit requirements | Yes |

Table 2.

Evaluation results of the proposed framework on Use Case 1 (graph, IR, and explainability evaluation measures).

| Search query | Class Richness | Avg. Relevance | Information Availability | MEP | Avg. BLEU | Avg. Rouge-L |
|---|---|---|---|---|---|---|
| Short | 88% | 87.3% | 78.7% | 82.5% | 37.2% | 25.1% |
| Long | | 99% | 91.8% | 99.6% | 38.3% | 27.4% |

Table 3.

Precision, Recall, and F1 scores of the customized NER, with regard to the predefined classes.

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| programming_framework | 0.84 | 0.85 | 0.84 | 241 |
| skill | 0.794 | 0.88 | 0.83 | 2610 |
| software | 0.79 | 0.90 | 0.85 | 902 |
| study | 0.87 | 0.86 | 0.86 | 4481 |
| job_title | 0.89 | 0.99 | 0.94 | 480 |
| location | 0.99 | 0.96 | 0.98 | 485 |
| other_word | 0.93 | 0.88 | 0.90 | 9190 |
| programming_language | 0.92 | 0.98 | 0.895 | 642 |
| Accuracy | | | 0.88 | 19,031 |
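As a reading aid for Table 3, the F1-score is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The sketch below recomputes it from the reported per-class values and sums the support column. Because the reported precision and recall are rounded, recomputed F1 values can deviate from the table in the last digit; the dictionary name is hypothetical.

```python
# Per-class precision, recall, and support as reported in Table 3.
TABLE_3 = {
    "programming_framework": (0.84, 0.85, 241),
    "skill": (0.794, 0.88, 2610),
    "software": (0.79, 0.90, 902),
    "study": (0.87, 0.86, 4481),
    "job_title": (0.89, 0.99, 480),
    "location": (0.99, 0.96, 485),
    "other_word": (0.93, 0.88, 9190),
    "programming_language": (0.92, 0.98, 642),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for label, (p, r, support) in TABLE_3.items():
    print(f"{label:22s} F1 = {f1_score(p, r):.2f} (support {support})")

total_support = sum(s for _, _, s in TABLE_3.values())
print("total support:", total_support)
```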

Table 4.

Explainability comparison based on the MEP scores.

| Approach | MEP Score (%) |
|---|---|
| Chen and Miyazaki—Slack-Item-KG 100k | 87.25 |
| Chen and Miyazaki—Slack-Item-KG 1M | 91.67 |
| Our framework—Use Case 1 (short query) | 82.5 |
| Our framework—Use Case 1 (long query) | 99.6 |
| Our framework—Use Case 2 | 85.6 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).