Explainable Artificial Intelligence for Workplace Mental Health Prediction

Abstract

1. Introduction

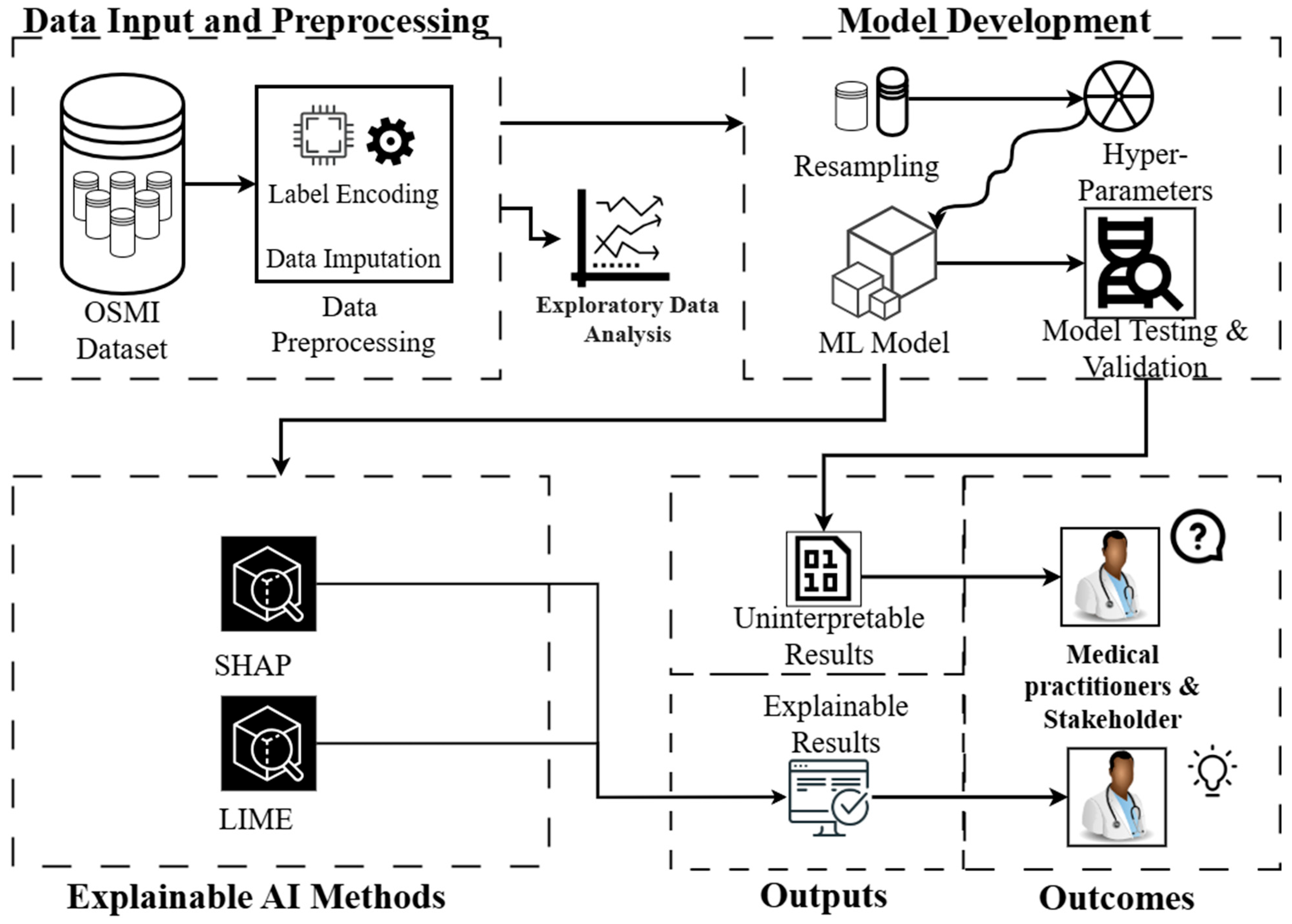

- Developing and evaluating machine learning (ML) models on the secondary Open Sourcing Mental Illness (OSMI) dataset to predict workplace mental health outcomes.

- Applying Explainable AI (XAI) techniques (SHAP and LIME) to enhance model transparency and interpretability.

2. Related Work

3. Methods

3.1. Dataset Overview and Data Preprocessing

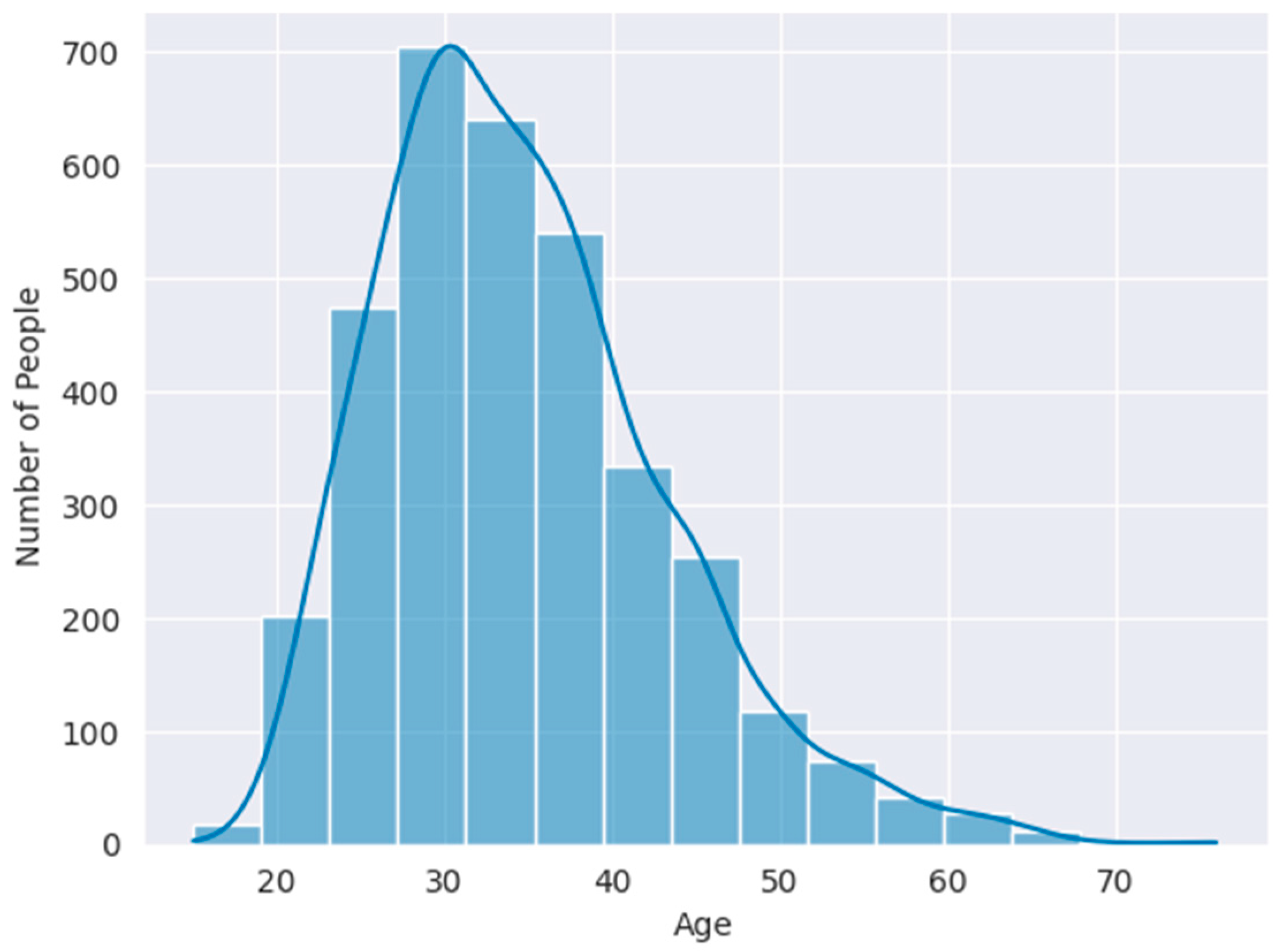

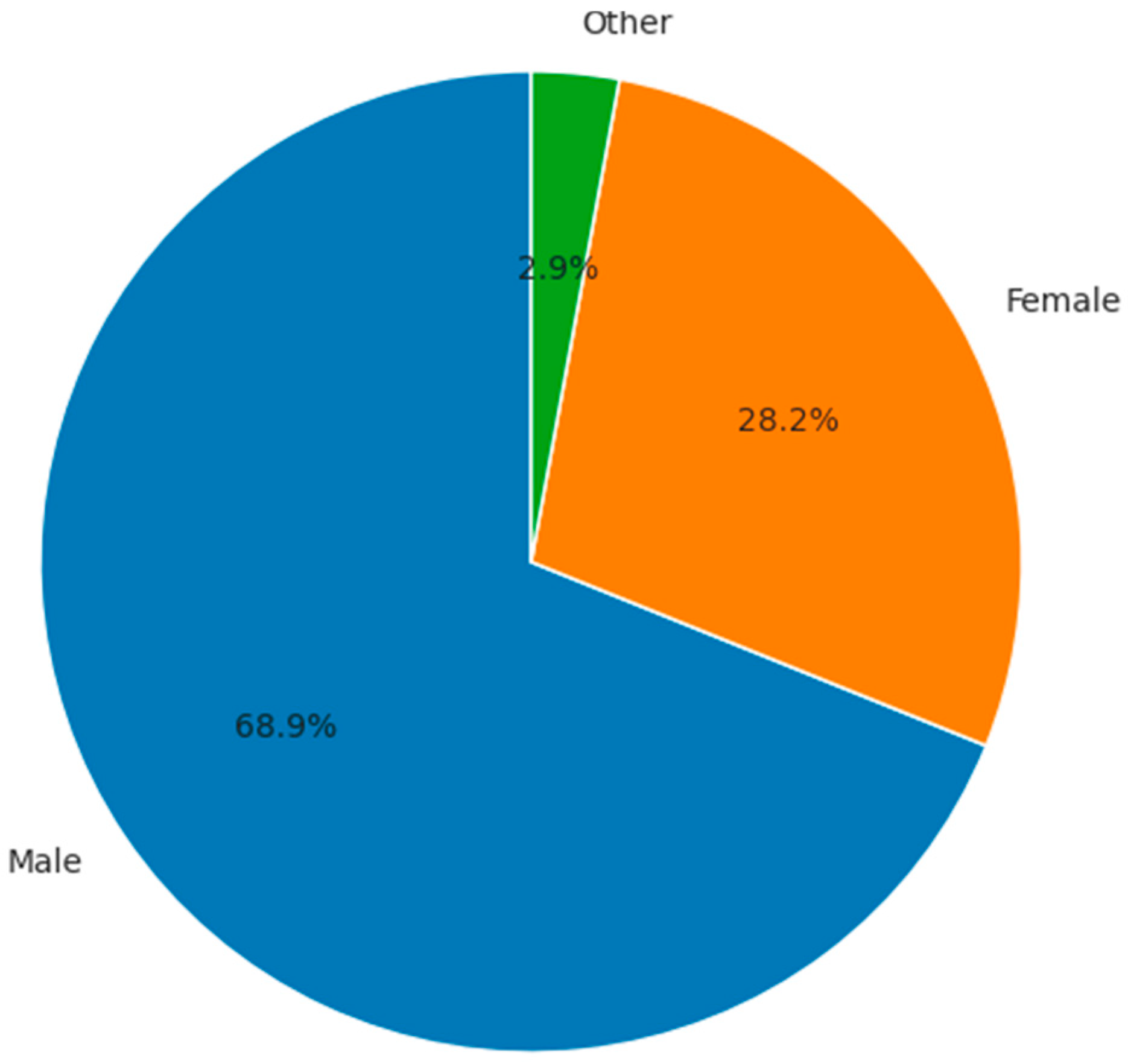

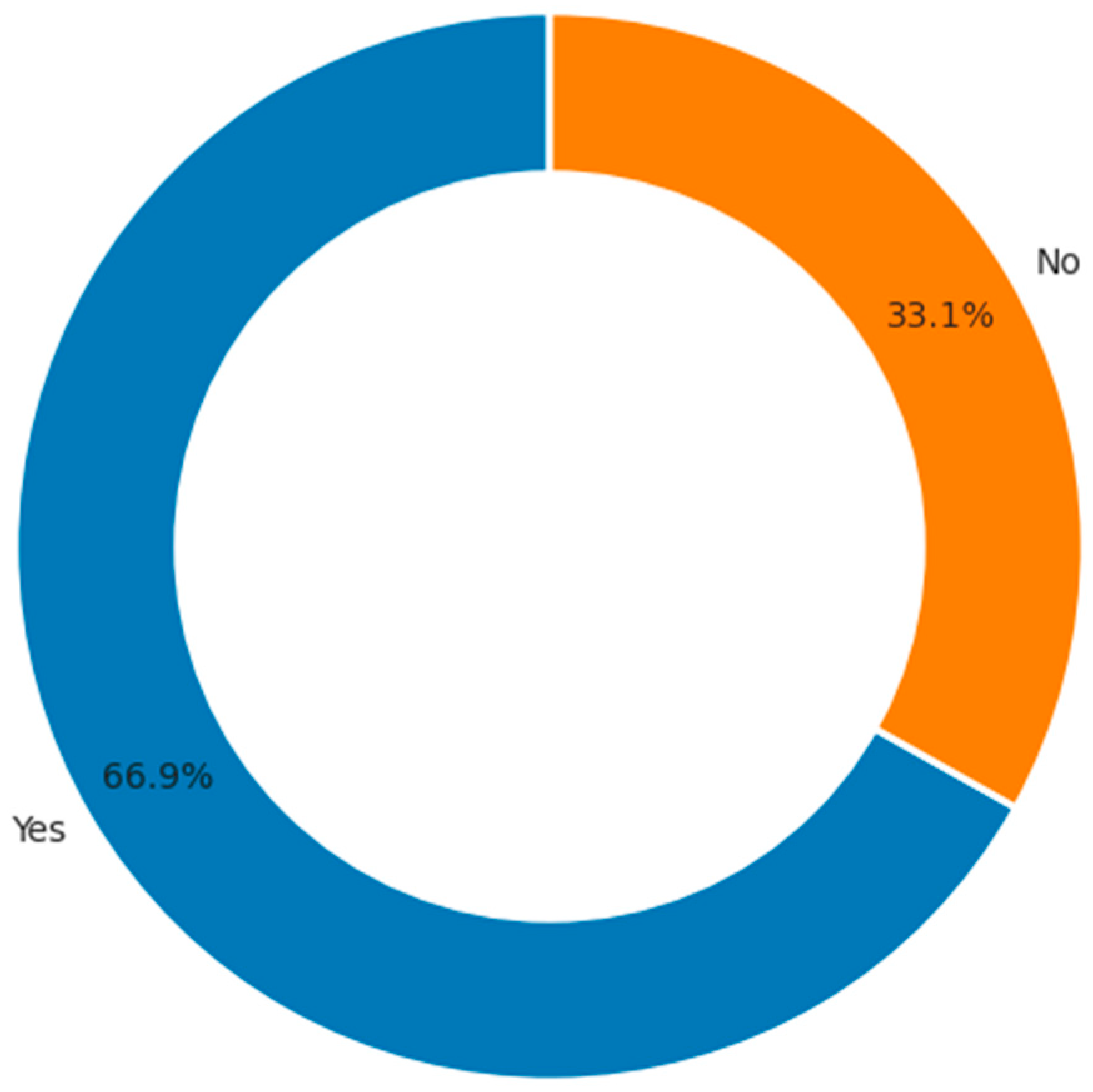

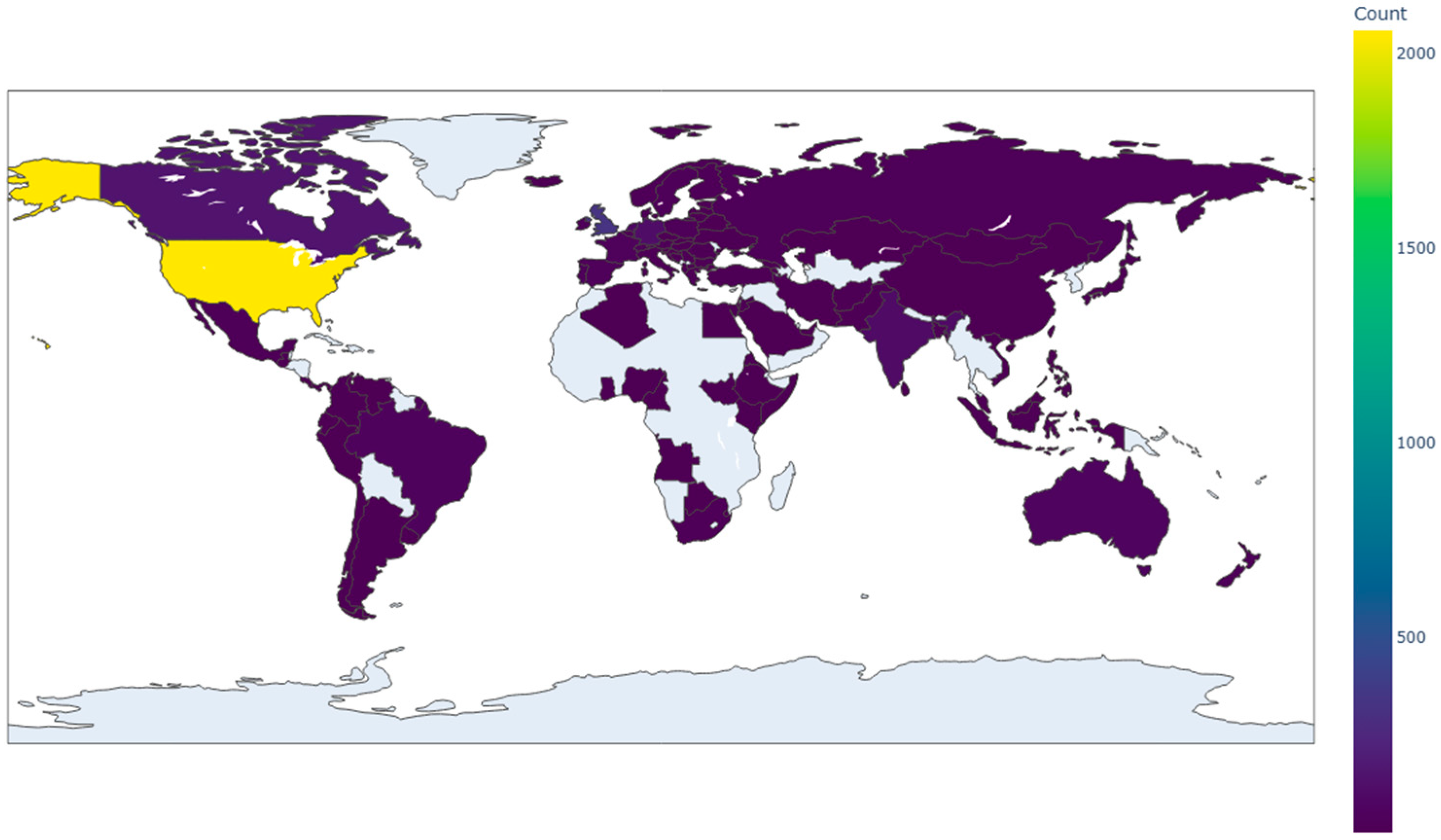

3.2. Exploratory Data Analysis

3.3. Class Imbalance
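
The Abbreviations list SMOTE, so the class-imbalance step plausibly relies on it. The core of the technique can be sketched in pure Python: each synthetic sample is a random point on the segment between a minority-class sample and one of its k nearest minority-class neighbours. This is an illustrative sketch, not the authors' implementation. It assumes numeric, already-encoded features, and the names `smote`, `k` and `seed` are hypothetical.

```python
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Illustrative SMOTE sketch: return n_synthetic new minority samples.

    minority: list of numeric feature vectors (lists of floats) from the
    minority class only. Each synthetic vector lies on the segment between
    a randomly chosen minority sample and one of its k nearest minority
    neighbours (squared Euclidean distance).
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Brute-force k nearest minority neighbours of every minority sample.
    neighbours = []
    for i, p in enumerate(minority):
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: dist2(p, minority[j]))
        neighbours.append(others[:k])

    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        j = rng.choice(neighbours[i])
        gap = rng.random()  # interpolation factor in [0, 1)
        base, nb = minority[i], minority[j]
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nb)])
    return synthetic


# Toy example: 3 minority vs 6 majority samples, so add 3 synthetic ones.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
new_points = smote(minority, n_synthetic=3, k=2, seed=42)
print(len(minority) + len(new_points))  # 6, matching the majority count
```

Oversampling is normally fitted on the training split only, so synthetic points never reach the test set. For mixed categorical and numeric survey data, variants such as SMOTE-NC exist.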

3.4. Model Development

3.4.1. ML Classifiers

3.4.2. Performance Metrics

3.4.3. Explainable AI Used
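
SHAP attributes a prediction to features using Shapley values from cooperative game theory. For a handful of features, these values can be computed exactly by enumerating every coalition, which makes the definition concrete. The sketch below is illustrative only. It treats "absent" features as taking a fixed baseline value, which is one common convention among several. Its cost also grows exponentially with the number of features. For that reason, libraries such as shap rely on sampling approximations or on model-specific algorithms, for example TreeSHAP for tree ensembles. The toy model and the baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x), replacing 'absent' features
    with the corresponding entry of baseline. Needs O(n * 2^(n-1))
    model calls, so it is only practical for a few features."""
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their observed value; others use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model: additive effect of feature 0 plus an interaction of 1 and 2.
def toy_model(z):
    return 2.0 * z[0] + 3.0 * z[1] * z[2]


phi = shapley_values(toy_model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 6) for v in phi])  # [2.0, 1.5, 1.5]
```

The interaction term is split equally between features 1 and 2. The attributions also sum to `toy_model(x) - toy_model(baseline)` = 5, which is the additivity property that lets SHAP explanations add up to the prediction.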

3.4.4. Experimental Setup

4. Results

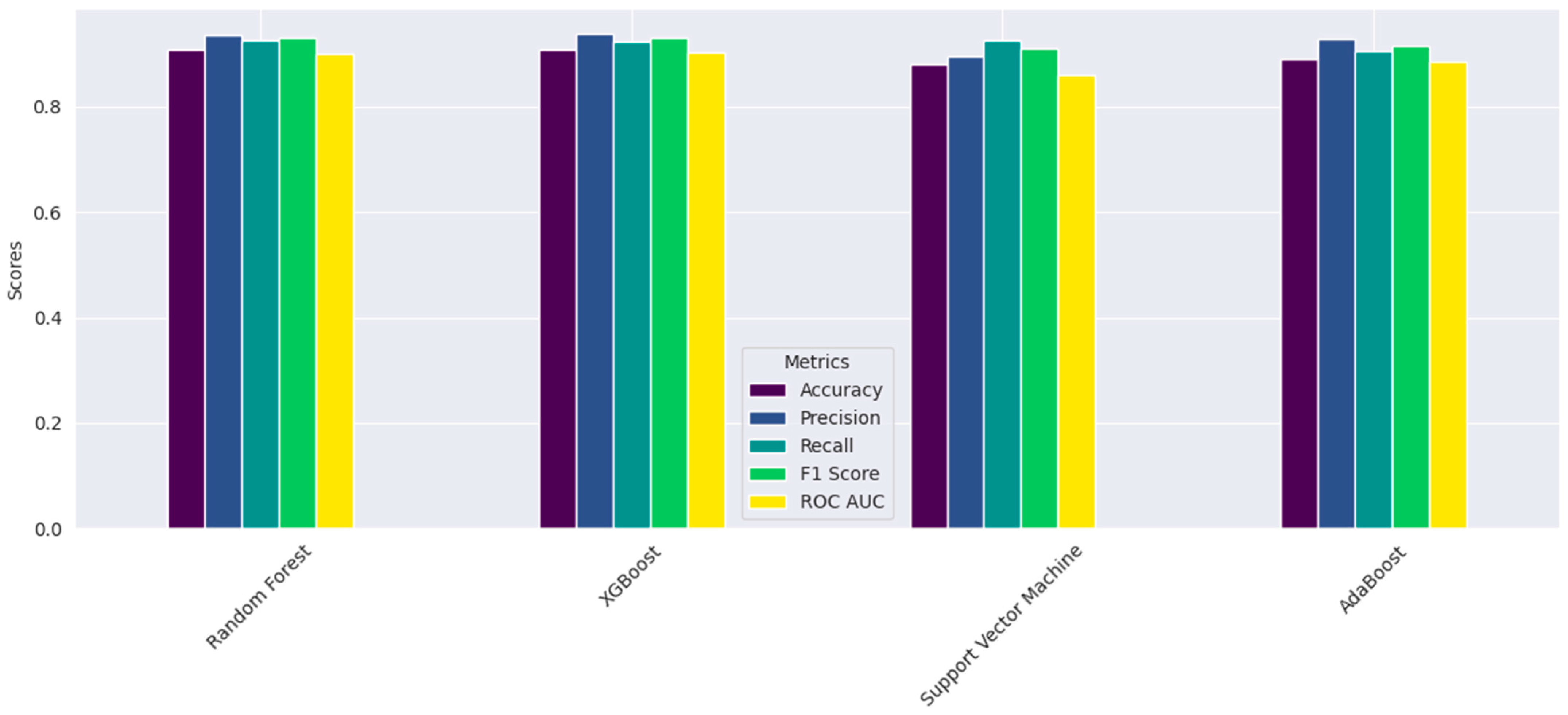

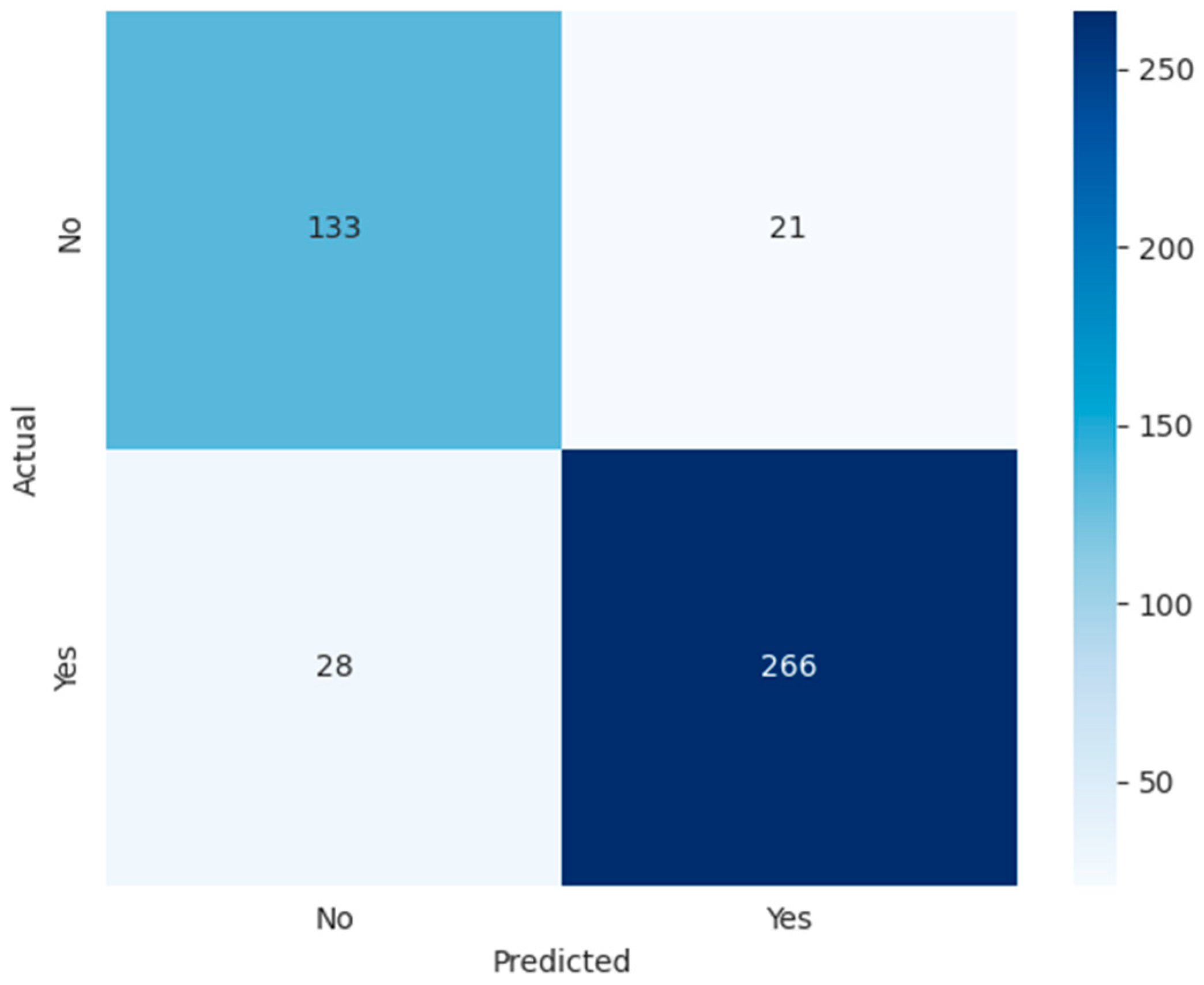

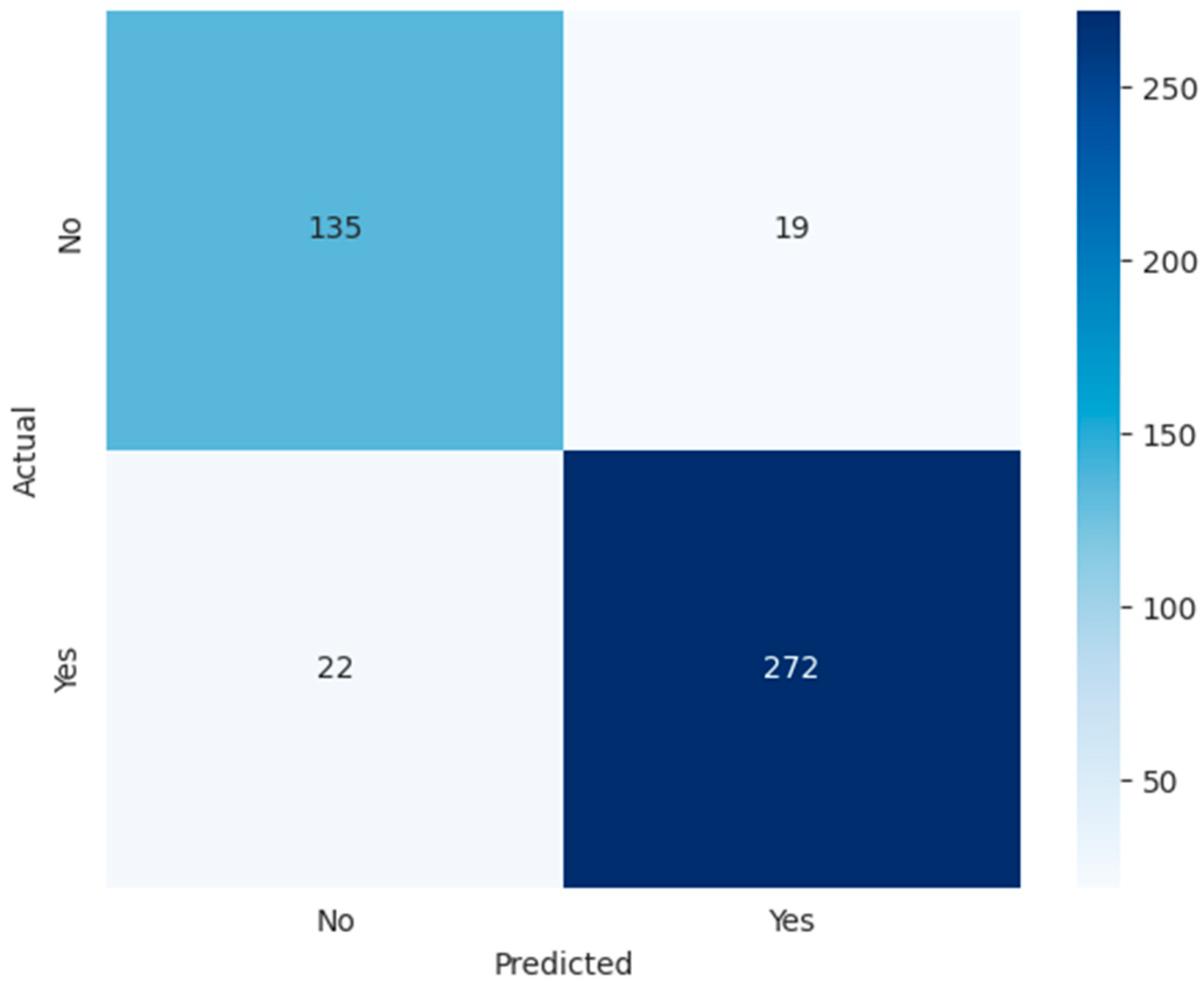

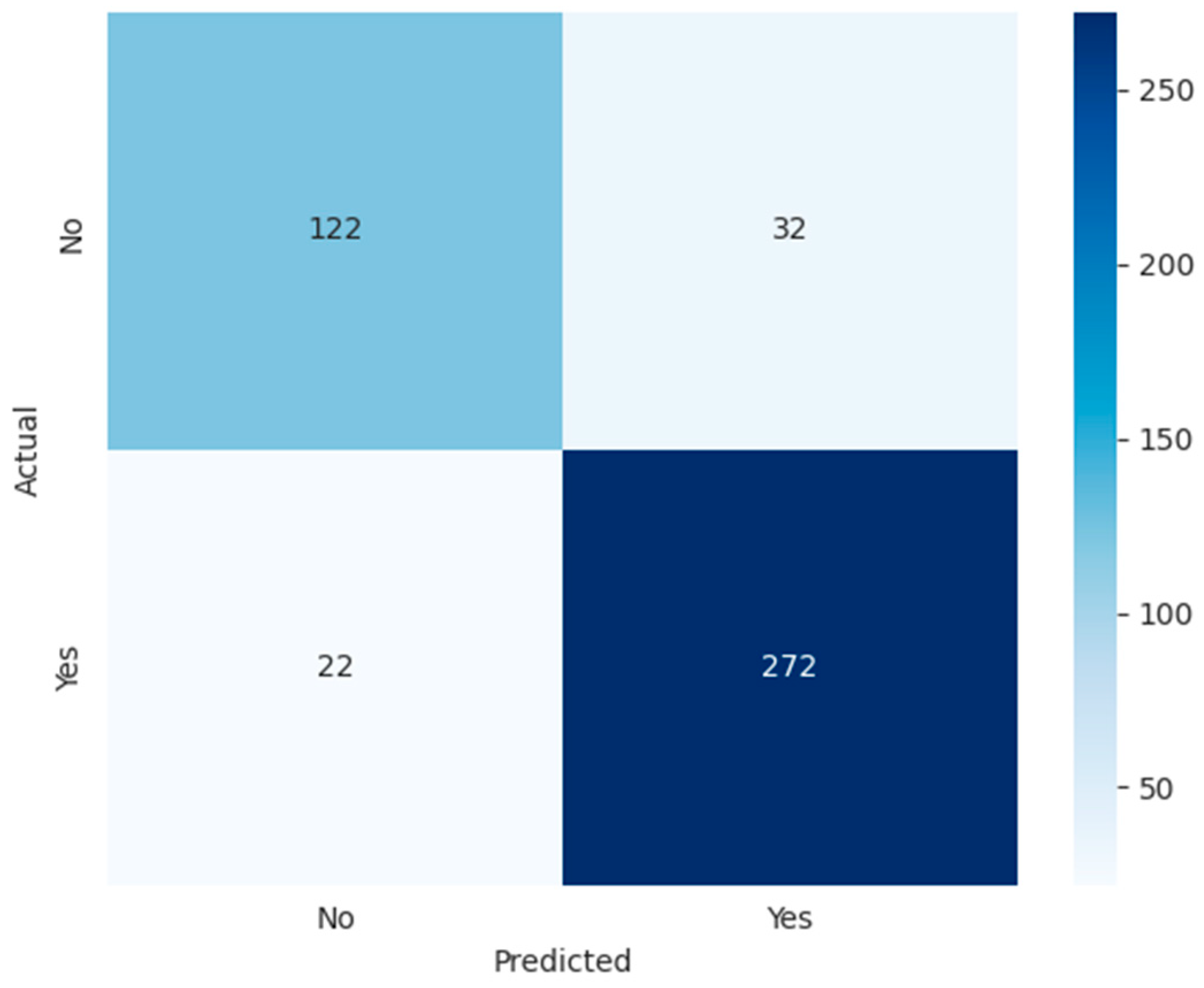

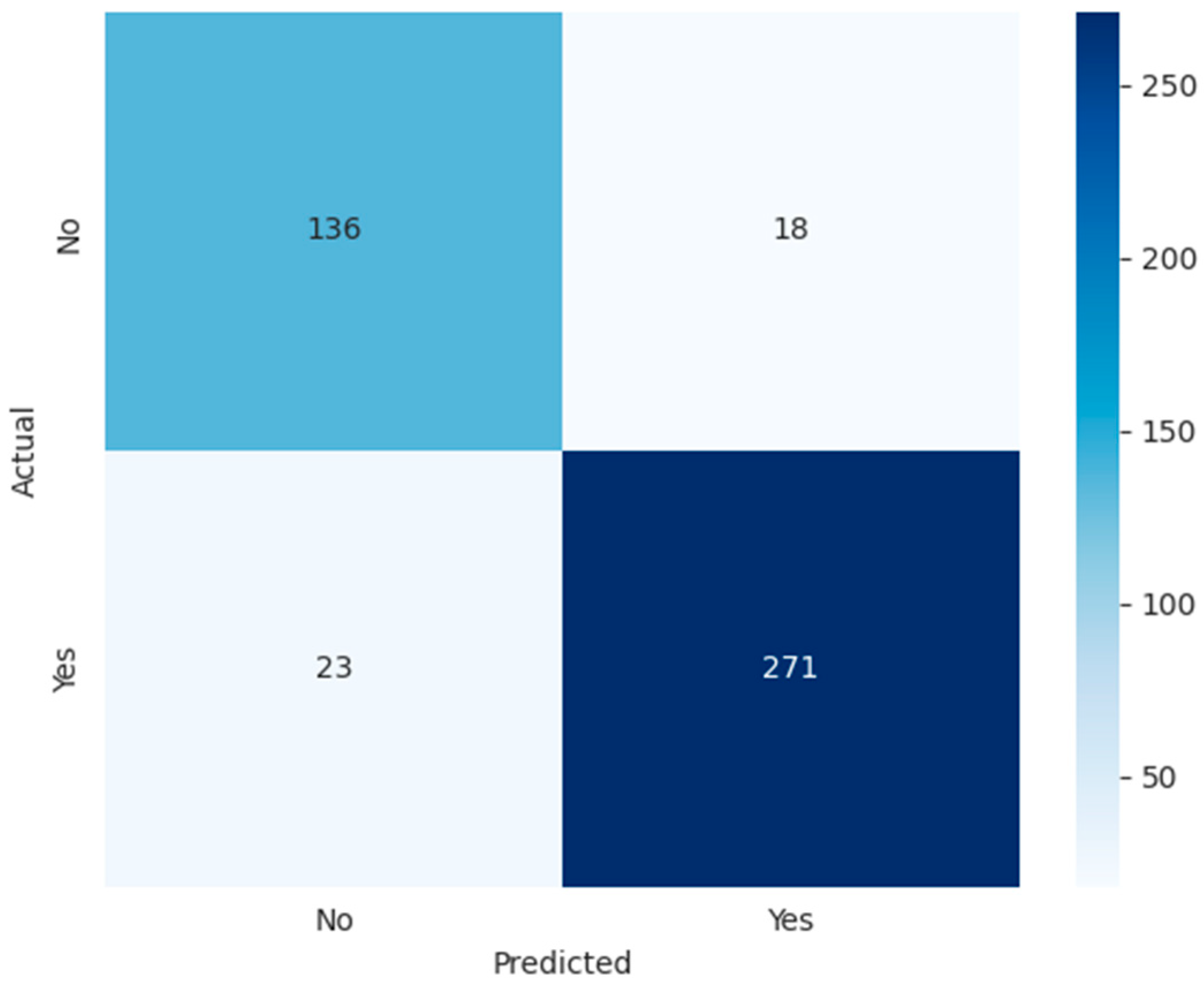

4.1. Performance Results of the ML Models

4.2. Explainable AI

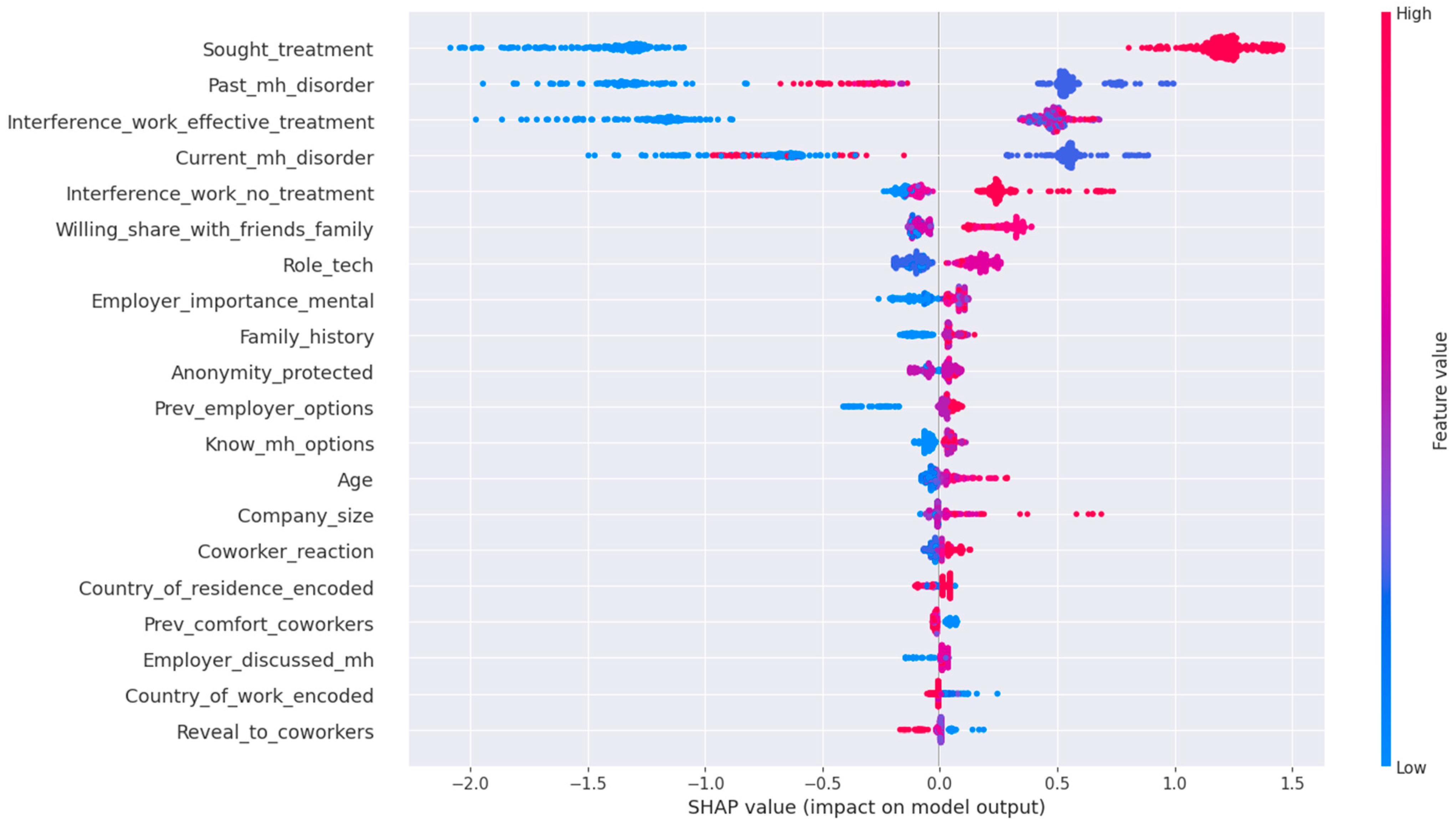

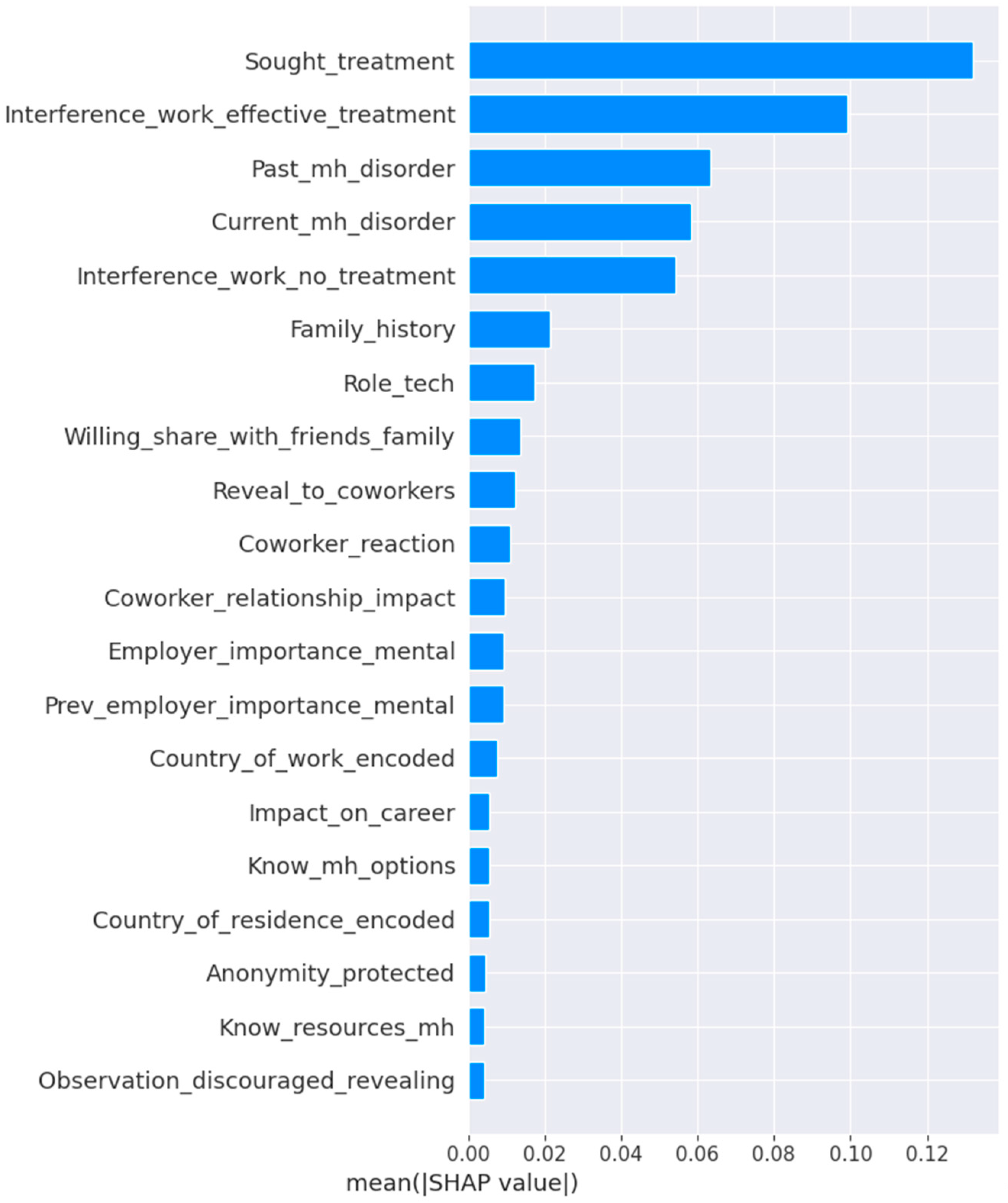

4.2.1. SHAP Analysis

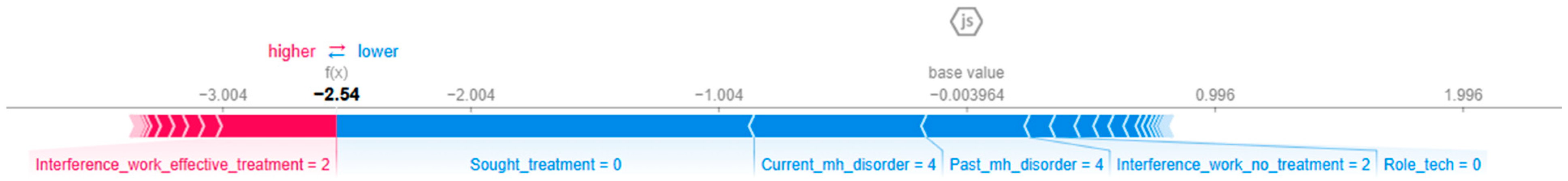

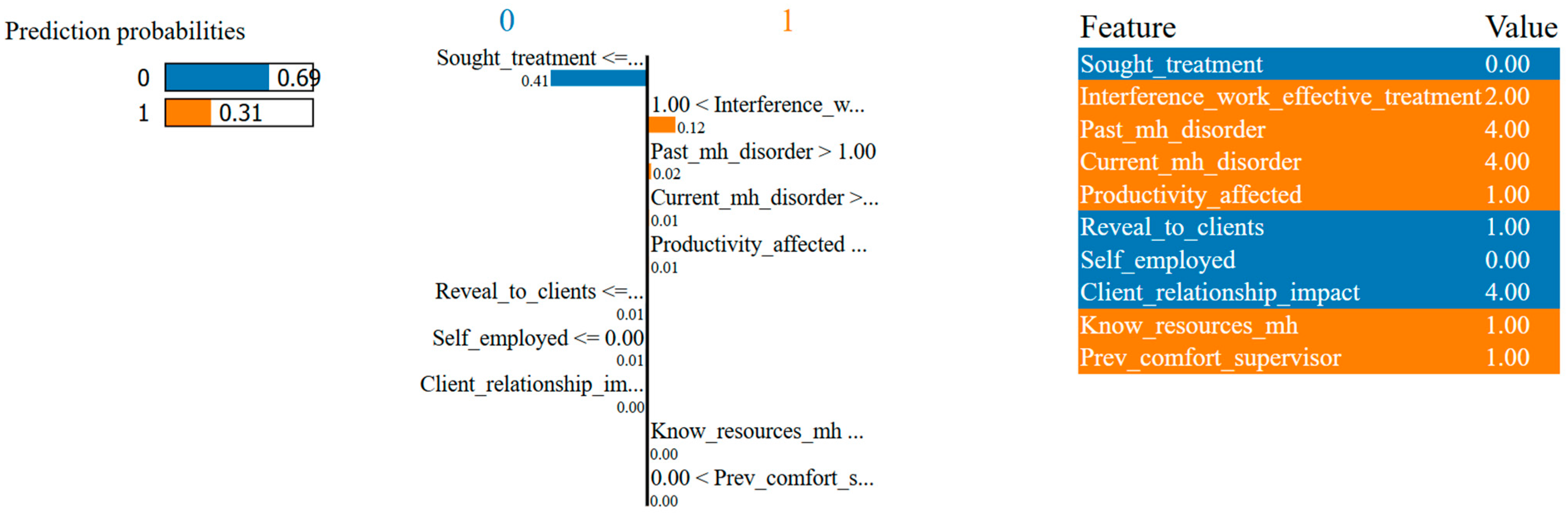

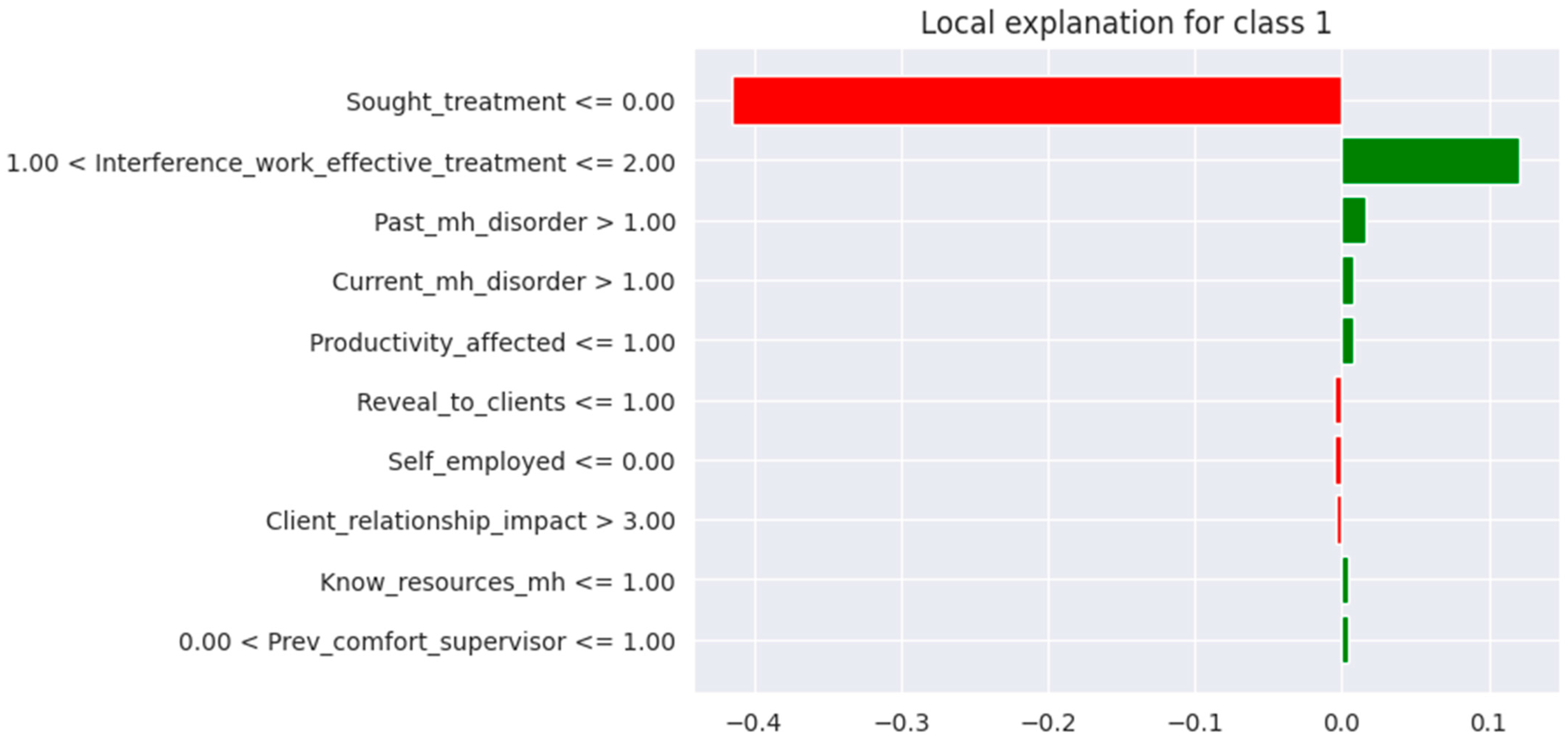

4.2.2. LIME Analysis
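
LIME explains a single prediction in three steps. It samples perturbed copies of the instance, weights them by proximity, and fits a weighted linear surrogate whose coefficients serve as local feature effects. The sketch below follows that recipe in pure Python, using Gaussian perturbations and an exponential kernel. It is a simplified variant for illustration only: the lime package's tabular explainer also scales features and, by default, discretizes continuous ones. The names `lime_explain`, `scale` and `kernel_width`, their defaults, and the black-box model are assumptions.

```python
import math
import random


def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model, x, n_samples=1000, scale=1.0, kernel_width=None, seed=0):
    """Fit a proximity-weighted linear surrogate around x.

    Returns (intercept, coefficients); each coefficient approximates the
    local effect of one feature on the model output near x."""
    rng = random.Random(seed)
    d = len(x)
    if kernel_width is None:
        kernel_width = 0.75 * math.sqrt(d)  # illustrative choice
    # Accumulate the weighted normal equations (Z^T W Z) beta = Z^T W y.
    ztwz = [[0.0] * (d + 1) for _ in range(d + 1)]
    ztwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)
        row = [1.0] + z  # leading 1.0 for the intercept
        y = model(z)
        for i in range(d + 1):
            ztwy[i] += weight * row[i] * y
            for j in range(d + 1):
                ztwz[i][j] += weight * row[i] * row[j]
    beta = solve(ztwz, ztwy)
    return beta[0], beta[1:]


# Hypothetical black box: a logistic score of two features.
def black_box(z):
    return 1.0 / (1.0 + math.exp(-(2.0 * z[0] - 3.0 * z[1])))


intercept, coefs = lime_explain(black_box, [0.2, 0.1], scale=0.3)
print(coefs)  # local slopes: positive for feature 0, negative for feature 1
```

For a classifier, `model` would typically return the predicted probability of the positive class. The sign and size of each coefficient are then read as that feature's local push towards or away from the positive prediction.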

5. Discussion

5.1. Contributions and Implications of the Study

5.1.1. Theoretical Contributions

5.1.2. Theoretical Implications

5.1.3. Practical Implications

5.2. Limitations of the Study

5.3. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SHAP | SHapley Additive exPlanations |
| LIME | Local Interpretable Model-agnostic Explanations |
| SMOTE | Synthetic Minority Oversampling Technique |
| XAI | Explainable Artificial Intelligence |

References

1. Dybdahl, R.; Lien, L. Mental health is an integral part of the sustainable development goals. Prev. Med. Community Health 2018, 1, 1–3.
2. LaMontagne, A.D.; Martin, A.; Page, K.M.; Reavley, N.J.; Noblet, A.J.; Milner, A.J.; Keegel, T.; Smith, P.M. Workplace mental health: Developing an integrated intervention approach. BMC Psychiatry 2014, 14, 131.
3. World Health Organization. WHO Guidelines on Mental Health at Work; World Health Organization: Geneva, Switzerland, 2022.
4. Bryan, M.L.; Bryce, A.M.; Roberts, J. The effect of mental and physical health problems on sickness absence. Eur. J. Health Econ. 2021, 22, 1519–1533.
5. Mokheleli, T. A Comparison of Machine Learning Techniques for Predicting Mental Health Disorders. 2023. Available online: https://hdl.handle.net/10210/511142 (accessed on 29 October 2024).
6. Cuijpers, P. The Dodo Bird and the need for scalable interventions in global mental health—A commentary on the 25th anniversary of Wampold et al. (1997). Psychother. Res. 2023, 33, 524–526.
7. Peykar, P. The Relationship Between Servant Leadership, Supportive Work Environment, and Tech Employees’ Mental Well-Being. 2024. Available online: https://digitalcommons.liberty.edu/doctoral/6027 (accessed on 9 December 2024).
8. Bhavya, C. Impact of Mental Health Initiatives on Employee Productivity. Int. J. Sci. Res. Eng. Manag. 2025. Available online: https://doi.org/10.55041/IJSREM46519 (accessed on 5 July 2025).
9. Celestin, P.; Vanitha, N. Mental Health Matters: How Companies Are Failing Their Employees. SSRN Electron. J. 2024, 8, 44–52.
10. Özer, G.; Escartín, J. Imbalance between Employees and the Organisational Context: A Catalyst for Workplace Bullying Behaviours in Both Targets and Perpetrators. Behav. Sci. 2024, 14, 751.
11. Memish, K.; Martin, A.; Bartlett, L.; Dawkins, S.; Sanderson, K. Workplace mental health: An international review of guidelines. Prev. Med. 2017, 101, 213–222.
12. Balch-Samora, J.; Van Heest, A.; Weber, K.; Ross, W.; Huff, T.; Carter, C. Harassment, Discrimination, and Bullying in Orthopaedics: A Work Environment and Culture Survey. J. Am. Acad. Orthop. Surg. 2020, 28, e1097–e1104.
13. Lohia, A.; Ranjith, A.; Sachdeva, A.; Bhat, A. An Ensemble Technique to Analyse Mental Health of Workforce in the Corporate Sector. In Proceedings of the 2023 7th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 17–19 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1245–1251.
14. Graham, S.; Depp, C.; Lee, E.E.; Nebeker, C.; Tu, X.; Kim, H.-C.; Jeste, D.V. Artificial Intelligence for Mental Health and Mental Illnesses: An Overview. Curr. Psychiatry Rep. 2019, 21, 116.
15. Iyortsuun, N.K.; Kim, S.-H.; Jhon, M.; Yang, H.-J.; Pant, S. A Review of Machine Learning and Deep Learning Approaches on Mental Health Diagnosis. Healthcare 2023, 11, 285.
16. Mokheleli, T.; Bokaba, T.; Museba, T. An In-Depth Comparative Analysis of Machine Learning Techniques for Addressing Class Imbalance in Mental Health Prediction. 2023. Available online: https://aisel.aisnet.org/acis2023/15 (accessed on 12 July 2025).
17. Almaleh, A. Machine Learning-Based Forecasting of Mental Health Issues Among Employees in the Workplace. In Proceedings of the 2023 IEEE International Conference on Industry 4.0, Artificial Intelligence, and Communications Technology (IAICT), Bali, Indonesia, 13–15 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 118–124.
18. Chauhan, T.; Renjith, P. Utilizing Machine Learning to foster employee mental health in modern workplace environment. In Proceedings of the 2024 IEEE International Students’ Conference on Electrical, Electronics and Computer Science (SCEECS), Bhopal, India, 24–25 February 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.
19. Frasca, M.; La Torre, D.; Pravettoni, G.; Cutica, I. Explainable and interpretable artificial intelligence in medicine: A systematic bibliometric review. Discov. Artif. Intell. 2024, 4, 15.
20. Nanath, K.; Balasubramanian, S.; Shukla, V.; Islam, N.; Kaitheri, S. Developing a mental health index using a machine learning approach: Assessing the impact of mobility and lockdown during the COVID-19 pandemic. Technol. Forecast. Soc. Change 2022, 178, 121560.
21. Joyce, D.W.; Kormilitzin, A.; Smith, K.A.; Cipriani, A. Explainable artificial intelligence for mental health through transparency and interpretability for understandability. npj Digit. Med. 2023, 6, 6.
22. Rautaray, S.S.; Nayak, S.; Pandey, M. A Machine Learning Based Employee Mental Health Analysis Model. In Proceedings of the 2023 International Conference on Sustainable Communication Networks and Application (ICSCNA), Theni, India, 15–17 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1055–1059.
23. Srividya, M.; Mohanavalli, S.; Bhalaji, N. Behavioral Modeling for Mental Health using Machine Learning Algorithms. J. Med. Syst. 2018, 42, 88.
24. Mallick, S.; Panda, M. Predictive Modeling of Mental Illness in Technical Workplace: A Feature Selection and Classification Approach. In Proceedings of the 2024 OPJU International Technology Conference (OTCON) on Smart Computing for Innovation and Advancement in Industry 4.0, Raigarh, India, 5–7 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.
25. Sujal, B.; Neelima, K.; Deepanjali, C.; Bhuvanashree, P.; Duraipandian, K.; Rajan, S.; Sathiyanarayanan, M. Mental Health Analysis of Employees using Machine Learning Techniques. In Proceedings of the 2022 14th International Conference on COMmunication Systems & NETworkS (COMSNETS), Bangalore, India, 4–8 January 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.
26. Kapoor, A.; Goel, S. Predicting Stress at Workplace using Machine Learning Techniques. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.
27. Chung, J.; Teo, J. Mental Health Prediction Using Machine Learning: Taxonomy, Applications, and Challenges. Appl. Comput. Intell. Soft Comput. 2022, 2022, 1–19.
28. Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.
29. Zilker, S.; Weinzierl, S.; Zschech, P.; Kraus, M.; Matzner, M. Best of Both Worlds: Combining Predictive Power with Interpretable and Explainable Results for Patient Pathway Prediction. In Proceedings of the ECIS 2023, Kristiansand, Norway, 11–16 June 2023; Available online: https://aisel.aisnet.org/ecis2023_rp/266 (accessed on 9 December 2024).
30. Dwivedi, K.; Rajpal, A.; Rajpal, S.; Kumar, V.; Agarwal, M.; Kumar, N. Enlightening the path to NSCLC biomarkers: Utilizing the power of XAI-guided deep learning. Comput. Methods Programs Biomed. 2024, 243, 107864.
31. Letoffe, O.; Huang, X.; Asher, N.; Marques-Silva, J. From SHAP Scores to Feature Importance Scores. arXiv 2024, arXiv:2405.11766.
32. Mienye, I.D.; Obaido, G.; Jere, N.; Mienye, E.; Aruleba, K.; Emmanuel, I.D.; Ogbuokiri, B. A survey of explainable artificial intelligence in healthcare: Concepts, applications, and challenges. Inform. Med. Unlocked 2024, 51, 101587.
33. Noor, A.A.; Manzoor, A.; Mazhar Qureshi, M.D.; Qureshi, M.A.; Rashwan, W. Unveiling Explainable AI in Healthcare: Current Trends, Challenges, and Future Directions. WIREs Data Min. Knowl. Discov. 2025, 15, e70018.
34. Atlam, E.S.; Rokaya, M.; Masud, M.; Meshref, H.; Alotaibi, R.; Almars, A.M.; Assiri, M.; Gad, I. Explainable artificial intelligence systems for predicting mental health problems in autistics. Alex. Eng. J. 2025, 117, 376–390.
35. Ahmed, I.; Brahmacharimayum, A.; Ali, R.H.; Khan, T.A.; Ahmad, M.O. Explainable AI for Depression Detection and Severity Classification From Activity Data: Development and Evaluation Study of an Interpretable Framework. JMIR Ment. Health 2025, 12, e72038.
36. OSMI. About OSMI. Available online: https://osmihelp.org/about/about-osmi.html (accessed on 1 December 2024).
37. Kelloway, E.K.; Dimoff, J.K.; Gilbert, S. Mental Health in the Workplace. Annu. Rev. Organ. Psychol. Organ. Behav. 2023, 10, 363–387.
38. Alruhaymi, A.Z.; Kim, C.J. Why Can Multiple Imputations and How (MICE) Algorithm Work? Open J. Stat. 2021, 11, 759–777.
39. International Labour Organization. ILO Convention No. 138 at a Glance; International Labour Organization: Geneva, Switzerland, 2018; Available online: https://www.ilo.org/publications/ilo-convention-no-138-glance (accessed on 10 December 2024).
40. Bichri, H.; Chergui, A.; Hain, M. Investigating the Impact of Train/Test Split Ratio on the Performance of Pre-Trained Models with Custom Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 331–339.
41. Komorowski, M.; Marshall, D.C.; Salciccioli, J.D.; Crutain, Y. Exploratory Data Analysis. In Secondary Analysis of Electronic Health Records; Springer International Publishing: Cham, Switzerland, 2016; pp. 185–203.
42. Alghamdi, M.; Al-Mallah, M.; Keteyian, S.; Brawner, C.; Ehrman, J.; Sakr, S. Predicting diabetes mellitus using SMOTE and ensemble machine learning approach: The Henry Ford Exercise Testing (FIT) project. PLoS ONE 2017, 12, e0179805.
43. Sowjanya, A.M.; Mrudula, O. Effective treatment of imbalanced datasets in health care using modified SMOTE coupled with stacked deep learning algorithms. Appl. Nanosci. 2023, 13, 1829–1840.
44. Mohamed, E.S.; Naqishbandi, T.A.; Bukhari, S.A.C.; Rauf, I.; Sawrikar, V.; Hussain, A. A hybrid mental health prediction model using Support Vector Machine, Multilayer Perceptron, and Random Forest algorithms. Healthc. Anal. 2023, 3, 100185.
45. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. arXiv 2016, arXiv:1603.02754v3.
46. Liu, H.; Yan, G.; Duan, Z.; Chen, C. Intelligent modeling strategies for forecasting air quality time series: A review. Appl. Soft Comput. 2021, 102, 106957.
47. Thölke, P.; Mantilla-Ramos, Y.-J.; Abdelhedi, H.; Maschke, C.; Dehgan, A.; Harel, Y.; Kemtur, A.; Mekki Berrada, L.; Sahraoui, M.; Young, T.; et al. Class imbalance should not throw you off balance: Choosing the right classifiers and performance metrics for brain decoding with imbalanced data. Neuroimage 2023, 277, 120253.
48. Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 237–242.
49. Aurelio, Y.S.; de Almeida, G.M.; de Castro, C.L.; Braga, A.P. Cost-Sensitive Learning based on Performance Metric for Imbalanced Data. Neural Process Lett. 2022, 54, 3097–3114.
50. Ma, X.; Hou, M.; Zhan, J.; Liu, Z. Interpretable Predictive Modeling of Tight Gas Well Productivity with SHAP and LIME Techniques. Energies 2023, 16, 3653.
51. Wong, T.-T.; Yeh, P.-Y. Reliable Accuracy Estimates from k-Fold Cross Validation. IEEE Trans. Knowl. Data Eng. 2020, 32, 1586–1594.
52. Marcilio, W.E.; Eler, D.M. From explanations to feature selection: Assessing SHAP values as feature selection mechanism. In Proceedings of the 2020 33rd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Recife/Porto de Galinhas, Brazil, 7–10 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 340–347.
53. Ponce-Bobadilla, A.V.; Schmitt, V.; Maier, C.S.; Mensing, S.; Stodtmann, S. Practical guide to SHAP analysis: Explaining supervised machine learning model predictions in drug development. Clin. Transl. Sci. 2024, 17, e70056.

| Study | AI/ML Techniques | Performance Metrics | Data/Data Description | Focus Area | XAI Applied |
|---|---|---|---|---|---|
| Rautaray et al. [22] | LR, KNN, DT, RF | Accuracy, Classification Error, False Positive Rate, Precision, Confusion Matrix | Not specified; comprises employee behaviour, health, and work–life impact data | Employee mental health and well-being | Not applied |
| Lohia et al. [13] | KNN, LR, DT, RF, Bagging, Boosting | Precision, Recall, Classification Accuracy, F1 Score, AUC | OSMI Mental Health in Tech Survey | Workplace mental health and operational efficiency | Not applied |
| Graham et al. [14] | Supervised and unsupervised ML, Deep Learning, Natural Language Processing | AUC, Classification Accuracy, Sensitivity, Specificity, Precision, F1 Score | EHRs, mood scales, brain imaging, social media; 28 research articles reviewed | General mental health predictions | - |
| Srividya et al. [23] | Clustering (K-means), SVM, DT, NB, KNN, LR | Confusion Matrix, Classification Accuracy, Precision, Recall, F-beta Score | 656 samples, 20 features, 3 class labels | Identifying mentally distressed individuals | Used LIME for trust computation |
| Nanath et al. [20] | Lasso, RFE, RFECV, Naïve Bayes, SGD, KNN, DT, RF, SVM, NN, XGB | Accuracy, Recall, Precision | Twitter data, Google mobility data, lockdown index data | COVID-19 mental health impacts | Not applied |
| Almaleh [17] | RF, SVM, LR, AdaBoost, GB | Accuracy, Precision, Recall, Confusion Matrix, AUC, ROC Curve | OSMI dataset | Mental health treatment prediction | Not applied |
| Chung et al. [27] | - | Accuracy, F-measure, AUC | 30 research articles reviewed | Mental health prediction techniques | Not applied |
| Sujal et al. [25] | LR, KNN, DT, RF, NB, SVM, AdaBoost, GB, LightGBM | Precision, Recall, F1 Score, Accuracy | OSMI Mental Health Survey | Workplace stress and mental health | Not applied |
| Kapoor et al. [26] | LR, KNN, DT, RF, GB, AdaBoost, XGB, SVM | Classification Accuracy, False Positive Rate, Precision, AUC, ROC Curve | OSMI Mental Health Survey (2021 and 2014) | Stress management in tech professionals | Not applied |
| Mallick et al. [24] | LR, KNN, DT, RF, NN, XGB | Accuracy, Recall, Precision | Tech Survey dataset | Mental health feature selection | Not applied |
| Chauhan et al. [18] | CNN, KNN | Accuracy, Precision, Recall | Stress detection system data | Real-time stress detection and management | Not applied |

| Year | Number of Instances | Number of Columns |
|---|---|---|
| 2016 | 1433 | 63 |
| 2017 | 756 | 123 |
| 2018 | 417 | 123 |
| 2019 | 352 | 82 |
| 2020 | 180 | 120 |
| 2021 | 131 | 124 |
| 2022 | 164 | 126 |
| 2023 | 6 | 126 |
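
As a quick check on the table above, pooling all survey years gives the raw number of responses available before preprocessing. The snippet only re-adds the published per-year counts. It implies nothing about the size of the final modelling dataset after cleaning.

```python
# Instances per OSMI survey year, as listed in the table above.
instances_per_year = {
    2016: 1433, 2017: 756, 2018: 417, 2019: 352,
    2020: 180, 2021: 131, 2022: 164, 2023: 6,
}

total = sum(instances_per_year.values())
print(total)  # 3439

# Share of the pooled responses contributed by each year.
for year, count in instances_per_year.items():
    print(year, f"{count / total:.1%}")
```

The 2016 survey alone accounts for roughly 42% of the pooled responses, and the 2023 survey for under 0.2%. Because the column count also varies from 63 to 126 across years, any pooled table needs its columns aligned first.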

| Survey Question(s) | Feature Name |
|---|---|
| Are you self-employed? | Self_employed |
| How many employees does your company or organization have? | Company_size |
| Is your employer primarily a tech company/organization? | Employer_tech |
| Is your primary role within your company related to tech/IT? | Role_tech |
| Does your employer provide mental health benefits as part of healthcare coverage? | Mh_benefits |
| Do you know the options for mental health care available under your employer-provided health coverage? / Do you know the options for mental health care available under your employer-provided coverage? | Know_mh_options |
| Has your employer ever formally discussed mental health (for example, as part of a wellness campaign or other official communication)? | Employer_discussed_mh |
| Does your employer offer resources to learn more about mental health disorders and options for seeking help? / Does your employer offer resources to learn more about mental health concerns and options for seeking help? | Mh_resources |
| Is your anonymity protected if you choose to take advantage of mental health or substance abuse treatment resources provided by your employer? | Anonymity_protected |
| If a mental health issue prompted you to request a medical leave from work, how easy or difficult would it be to ask for that leave? / If a mental health issue prompted you to request a medical leave from work, asking for that leave would be: | Ease_medical_leave |
| Would you feel comfortable discussing a mental health issue with your direct supervisor(s)? | Comfort_supervisor |
| Would you feel comfortable discussing a mental health issue with your coworkers? | Comfort_coworkers |
| Overall, how much importance does your employer place on mental health? / Do you feel that your employer takes mental health as seriously as physical health? | Employer_importance_mental |
| Do you know local or online resources to seek help for a mental health issue? / Do you know local or online resources to seek help for a mental health disorder? | Know_resources_mh |
| If you have been diagnosed or treated for a mental health disorder, do you ever reveal this to clients or business contacts? | Reveal_to_clients |
| If you have revealed a mental health disorder to a client or business contact, how has this affected you or the relationship? / If you have revealed a mental health issue to a client or business contact, do you believe this has impacted you negatively? | Client_relationship_impact |
| If you have been diagnosed or treated for a mental health disorder, do you ever reveal this to coworkers or employees? | Reveal_to_coworkers |
| If you have revealed a mental health disorder to a coworker or employee, how has this impacted you or the relationship? / If you have revealed a mental health issue to a coworker or employee, do you believe this has impacted you negatively? | Coworker_relationship_impact |
| Do you believe your productivity is ever affected by a mental health issue? | Productivity_affected |
| If yes, what percentage of your work time (time performing primary or secondary job functions) is affected by a mental health issue? | Productivity_percentage |
| Do you have previous employers? | Prev_employers |
| Have your previous employers provided mental health benefits? | Prev_employer_benefits |
| Were you aware of the options for mental health care provided by your previous employers? | Prev_employer_options |
| Did your previous employers ever formally discuss mental health (as part of a wellness campaign or other official communication)? | Prev_employer_discussed_mh |
| Did your previous employers provide resources to learn more about mental health disorders and how to seek help? | Prev_employer_resources |
| Was your anonymity protected if you chose to take advantage of mental health or substance abuse treatment resources with previous employers? | Prev_anonymity_protected |
| Would you have been willing to discuss your mental health with your direct supervisor(s)? / Would you have been willing to discuss a mental health issue with your direct supervisor(s)? | Prev_comfort_supervisor |
| Would you have been willing to discuss your mental health with your coworkers at previous employers? / Would you have been willing to discuss a mental health issue with your previous co-workers? | Prev_comfort_coworkers |
| Overall, how much importance did your previous employer place on mental health? / Did you feel that your previous employers took mental health as seriously as physical health? | Prev_employer_importance_mental |
| Do you currently have a mental health disorder? | Current_mh_disorder |
| Have you ever been diagnosed with a mental health disorder? / Have you been diagnosed with a mental health condition by a medical professional? | Diagnosed_mh * |
| Have you had a mental health disorder in the past? | Past_mh_disorder |
| Have you ever sought treatment for a mental health disorder from a mental health professional? / Have you ever sought treatment for a mental health issue from a mental health professional? | Sought_treatment |
| Do you have a family history of mental illness? | Family_history |
| If you have a mental health disorder, how often do you feel that it interferes with your work when being treated effectively? / If you have a mental health issue, do you feel that it interferes with your work when being treated effectively? | Interference_work_effective_treatment |
| If you have a mental health disorder, how often do you feel that it interferes with your work when NOT being treated effectively (i.e., when you are experiencing symptoms)? / If you have a mental health issue, do you feel that it interferes with your work when NOT being treated effectively? | Interference_work_no_treatment |
| Have your observations of how another individual who discussed a mental health issue made you less likely to reveal a mental health issue yourself in your current workplace? / Have your observations of how another individual who discussed a mental health disorder made you less likely to reveal a mental health issue yourself in your current workplace? | Observation_discouraged_revealing |
| How willing would you be to share with friends and family that you have a mental illness? | Willing_share_with_friends_family |
| Would you be willing to bring up a physical health issue with a potential employer in an interview? | Willing_physical_issue_interview |
| Would you bring up your mental health with a potential employer in an interview? / Would you bring up a mental health issue with a potential employer in an interview? | Willing_mh_interview |
| Has being identified as a person with a mental health issue affected your career? / Do you feel that being identified as a person with a mental health issue would hurt your career? | Impact_on_career |
| If they knew you suffered from a mental health disorder, how do you think that your team members/co-workers would react? / Do you think that team members/co-workers would view you more negatively if they knew you suffered from a mental health issue? | Coworker_reaction |
| Have you observed or experienced an unsupportive or badly handled response to a mental health issue in your current or previous workplace? | Observed_unsupportive_response |
| What is your age? | Age |
| What is your gender? | Gender |
| What country do you live in? | Country_of_residence |
| What country do you work in? | Country_of_work |
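
The wording of several questions changed between survey years, so the table above maps each wording to a single feature name. Below is a minimal sketch of that renaming step. It assumes each raw response is a dict keyed by the exact question text and includes only a few mappings from the table. The function name `harmonise` and the choice to drop unmapped columns are assumptions, not the authors' documented procedure.

```python
# A few question-to-feature mappings copied from the table above;
# several wordings can map to the same feature name.
QUESTION_TO_FEATURE = {
    "Are you self-employed?": "Self_employed",
    "Do you know the options for mental health care available under your "
    "employer-provided health coverage?": "Know_mh_options",
    "Do you know the options for mental health care available under your "
    "employer-provided coverage?": "Know_mh_options",
    "Have you ever been diagnosed with a mental health disorder?": "Diagnosed_mh",
    "Have you been diagnosed with a mental health condition by a medical "
    "professional?": "Diagnosed_mh",
    "What is your age?": "Age",
}


def harmonise(records):
    """Rename raw survey columns to canonical feature names.

    Columns whose question text is not in QUESTION_TO_FEATURE are dropped
    (an assumption made for this sketch)."""
    harmonised = []
    for record in records:
        row = {}
        for question, answer in record.items():
            feature = QUESTION_TO_FEATURE.get(question)
            if feature is not None:
                row[feature] = answer
        harmonised.append(row)
    return harmonised


# Two hypothetical responses from different survey years.
older = {"Have you ever been diagnosed with a mental health disorder?": "Yes",
         "What is your age?": 34}
newer = {"Have you been diagnosed with a mental health condition by a medical "
         "professional?": "No",
         "What is your age?": 29,
         "Some unmapped question?": "x"}
print(harmonise([older, newer]))
# [{'Diagnosed_mh': 'Yes', 'Age': 34}, {'Diagnosed_mh': 'No', 'Age': 29}]
```

Exact string matching is brittle, so in practice the keys must match the raw column headers of each yearly file character for character.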

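| Metric | Formula |
|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Recall | $\frac{TP}{TP + FN}$ |
| Precision | $\frac{TP}{TP + FP}$ |
| F1 Score | $2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$ |
| AUC | $\int_0^1 \text{TPR} \, d(\text{FPR})$ |
| Geometric mean | $\sqrt{\text{Recall} \times \text{Specificity}}$, where $\text{Specificity} = \frac{TN}{TN + FP}$ |
| Balanced Accuracy | $\frac{\text{Recall} + \text{Specificity}}{2}$ |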

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | TP | FP |
| Predicted Negative | FN | TN |
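
Every threshold-based metric listed above can be derived from the four confusion-matrix counts; AUC additionally needs the predicted scores. The sketch below uses the standard textbook definitions and assumes binary labels coded 1 (positive) and 0 (negative). It computes AUC through its equivalence with the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting one half. The counts and scores in the example are hypothetical.

```python
import math


def confusion_metrics(tp, fp, fn, tn):
    """Threshold-based metrics from confusion-matrix counts."""
    recall = tp / (tp + fn)        # sensitivity, true-positive rate
    specificity = tn / (tn + fp)   # true-negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "recall": recall,
        "precision": precision,
        "f1": 2 * precision * recall / (precision + recall),
        "g_mean": math.sqrt(recall * specificity),
        "balanced_accuracy": (recall + specificity) / 2,
    }


def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts and scores, for illustration only.
m = confusion_metrics(tp=40, fp=10, fn=5, tn=45)
print({k: round(v, 3) for k, v in m.items()})
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

Balanced accuracy and the geometric mean both drop sharply when a classifier ignores the minority class. That is why they complement plain accuracy on imbalanced data.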

| Model | Best Parameters |
|---|---|
| RF | n_estimators: 200; max_depth: 20; min_samples_split: 5; min_samples_leaf: 2; bootstrap: True |
| XGBoost | max_depth: 3; learning_rate: 0.01; n_estimators: 500; subsample: 1.0; colsample_bytree: 1.0 |
| SVM | C: 0.1; kernel: linear; gamma: scale |
| AdaBoost | n_estimators: 100; learning_rate: 0.01 |
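
The table reports only the winning values, not the search space or the search strategy. Assuming an exhaustive grid search, the selection logic reduces to the sketch below. The candidate grid and the `toy_score` function are hypothetical stand-ins; in practice the score would be, for example, the mean cross-validated accuracy of the classifier trained with `params`. Only the AdaBoost values n_estimators = 100 and learning_rate = 0.01 come from the table.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid; return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:  # keeps the first combination reaching the maximum
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid that happens to contain the reported AdaBoost values.
grid = {"n_estimators": [50, 100, 200], "learning_rate": [0.01, 0.1, 1.0]}


def toy_score(params):
    # Stand-in for a cross-validated score; peaks at the reported AdaBoost setting.
    return (1.0
            - 0.0001 * abs(params["n_estimators"] - 100)
            - 0.01 * abs(params["learning_rate"] - 0.01))


best, score = grid_search(grid, toy_score)
print(best)  # {'n_estimators': 100, 'learning_rate': 0.01}
```

scikit-learn's `GridSearchCV` and `RandomizedSearchCV` wrap this loop together with cross-validation. The table does not state which strategy, if either, was used.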

| Classifier | Mean CV Accuracy | Standard Deviation |
|---|---|---|
| Random Forest | 94% | 0.01 |
| XGBoost | 94% | 0.01 |
| SVM | 91% | 0.02 |
| AdaBoost | 91% | 0.01 |
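
The table summarises each classifier by the mean and standard deviation of its accuracy across cross-validation folds. The sketch below shows how such a summary is produced. It assumes plain (unstratified) k-fold splitting and the sample standard deviation. The table does not state the number of folds or the standard-deviation convention, and the fold scores in the example are hypothetical.

```python
import random
import statistics


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each index lands in exactly one test fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def summarise(fold_scores):
    """Mean and sample standard deviation of per-fold scores."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)


splits = list(k_fold_indices(n=12, k=3))
print([len(test) for _, test in splits])  # [4, 4, 4]

# Hypothetical per-fold accuracies for one classifier.
mean_acc, sd_acc = summarise([0.93, 0.95, 0.94, 0.94, 0.94])
print(f"{mean_acc:.0%} +/- {sd_acc:.2f}")  # 94% +/- 0.01
```

With imbalanced labels, stratified splitting (as in scikit-learn's `StratifiedKFold`) keeps the class ratio similar in every fold, which makes the per-fold accuracies more comparable.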

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mokheleli, T.; Bokaba, T.; Mbunge, E. Explainable Artificial Intelligence for Workplace Mental Health Prediction. Informatics 2025, 12, 130. https://doi.org/10.3390/informatics12040130

Mokheleli T, Bokaba T, Mbunge E. Explainable Artificial Intelligence for Workplace Mental Health Prediction. Informatics. 2025; 12(4):130. https://doi.org/10.3390/informatics12040130

Chicago/Turabian Style: Mokheleli, Tsholofelo, Tebogo Bokaba, and Elliot Mbunge. 2025. "Explainable Artificial Intelligence for Workplace Mental Health Prediction" Informatics 12, no. 4: 130. https://doi.org/10.3390/informatics12040130

APA Style: Mokheleli, T., Bokaba, T., & Mbunge, E. (2025). Explainable Artificial Intelligence for Workplace Mental Health Prediction. Informatics, 12(4), 130. https://doi.org/10.3390/informatics12040130