ETICD-Net: A Multimodal Fake News Detection Network via Emotion-Topic Injection and Consistency Modeling

Abstract

1. Introduction

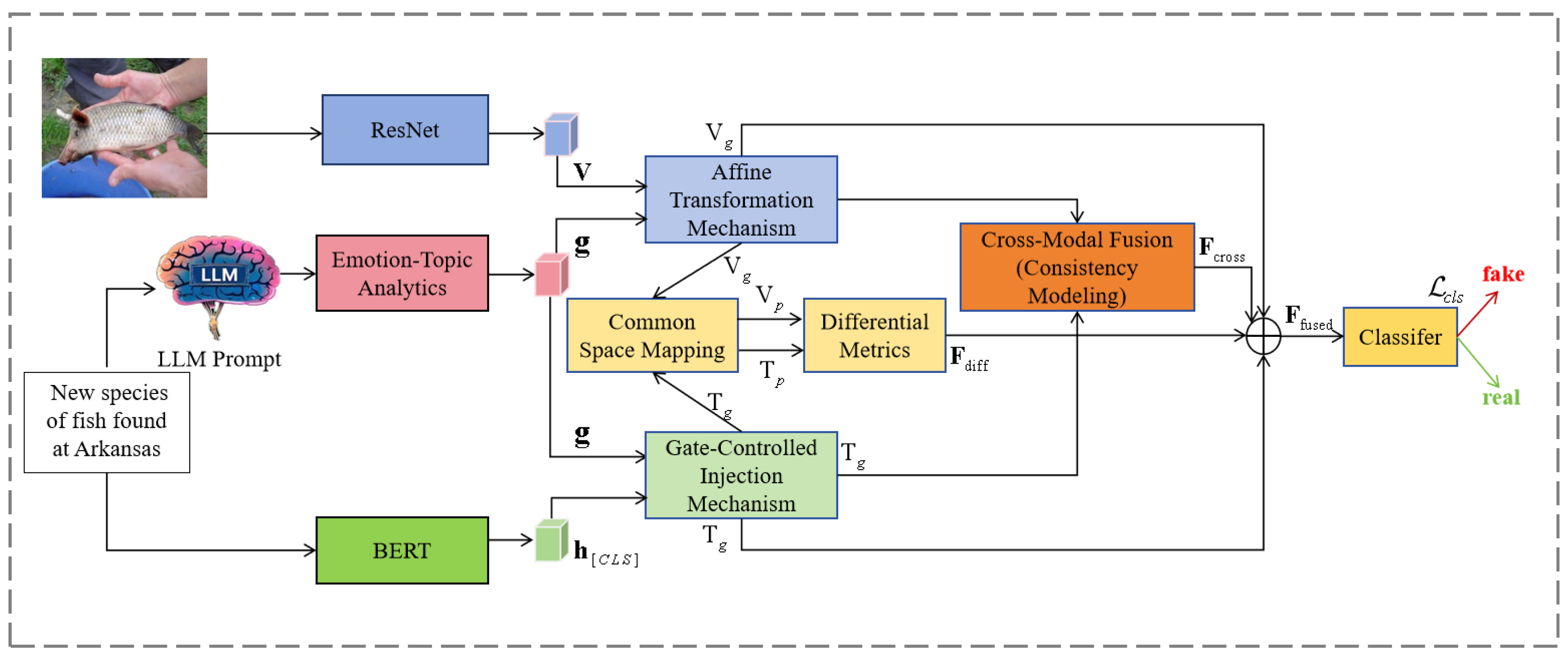

- Proposing a novel multimodal fake news detection framework (ETICD-Net): The framework combines large language models, which act as high-level semantic guides, with conventional visual and linguistic encoders, offering a new direction for the field.

- Introducing an emotion-topic injection method: We design an injection scheme based on gating mechanisms and conditional instance normalization that deeply fuses discrete emotion-topic semantic information into continuous modal features, improving feature discriminability.

- Designing a hierarchical consistency fusion module: This module combines cross-modal attention with explicit divergence metrics to evaluate image-text consistency in a structured way, improving both detection performance and model interpretability.
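The second contribution can be made concrete with a minimal NumPy sketch of gated conditional instance normalization (CIN). It assumes that the LLM output has already been encoded into a fixed-size emotion-topic vector, that CIN predicts a per-channel scale and shift from that vector, and that a sigmoid gate blends the modulated features with the originals. The function and weight names (`gated_cin_inject`, `W_gamma`, `W_beta`, `W_gate`) are hypothetical, and ETICD-Net's actual formulation may differ.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_cin_inject(features, guide, W_gamma, W_beta, W_gate, eps=1e-5):
    """Inject an emotion-topic vector into one sample's modal features.

    Hypothetical formulation for illustration only.
    features: (n, d) text-token or image-region features
    guide:    (g,)   emotion-topic embedding derived from the LLM output
    W_gamma, W_beta: (g, d) projections predicting per-channel scale and shift
    W_gate:   (2d, d) projection producing a per-position, per-channel gate
    """
    # Instance normalization: standardize each channel across the n positions.
    mean = features.mean(axis=0, keepdims=True)
    std = np.sqrt(features.var(axis=0, keepdims=True) + eps)
    normalized = (features - mean) / std

    # Conditional affine parameters predicted from the guidance vector.
    gamma = 1.0 + guide @ W_gamma  # offset by 1 so a zero guide gives unit scale
    beta = guide @ W_beta
    modulated = gamma * normalized + beta  # (d,) broadcasts over the n positions

    # The gate blends guided and original features channel by channel.
    gate = sigmoid(np.concatenate([features, modulated], axis=-1) @ W_gate)
    return gate * modulated + (1.0 - gate) * features


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, g = 6, 8, 4
    text_feats = rng.normal(size=(n, d))
    guide = rng.normal(size=g)
    W_gamma = rng.normal(scale=0.1, size=(g, d))
    W_beta = rng.normal(scale=0.1, size=(g, d))
    W_gate = rng.normal(scale=0.1, size=(2 * d, d))
    print(gated_cin_inject(text_feats, guide, W_gamma, W_beta, W_gate).shape)  # (6, 8)
```

Because the function accepts any (n, d) feature matrix, the same routine could be applied to BERT token features and ResNet region features; a trained model would learn the projection matrices instead of sampling them at random.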

2. Related Work

2.1. Application of Sentiment Analysis in Fake News Detection

2.2. Multimodal Fake News Detection

3. Method

3.1. Overall Architecture

3.2. Feature Extraction and Emotion-Topic Injection

3.2.1. Emotion-Topic Guidance Generation

3.2.2. Emotion-Topic Injection of Textual Features

3.2.3. Emotion-Topic Injection of Image Features

3.3. Hierarchical Consistency Fusion Module

3.3.1. Cross-Modal Attention Consistency Modeling

3.3.2. Common Space Mapping and Difference Metrics

3.3.3. Integration and Classification

3.4. Interpretability Enhancement

4. Experiments and Results Analysis

4.1. Experimental Setup

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Implementation Details

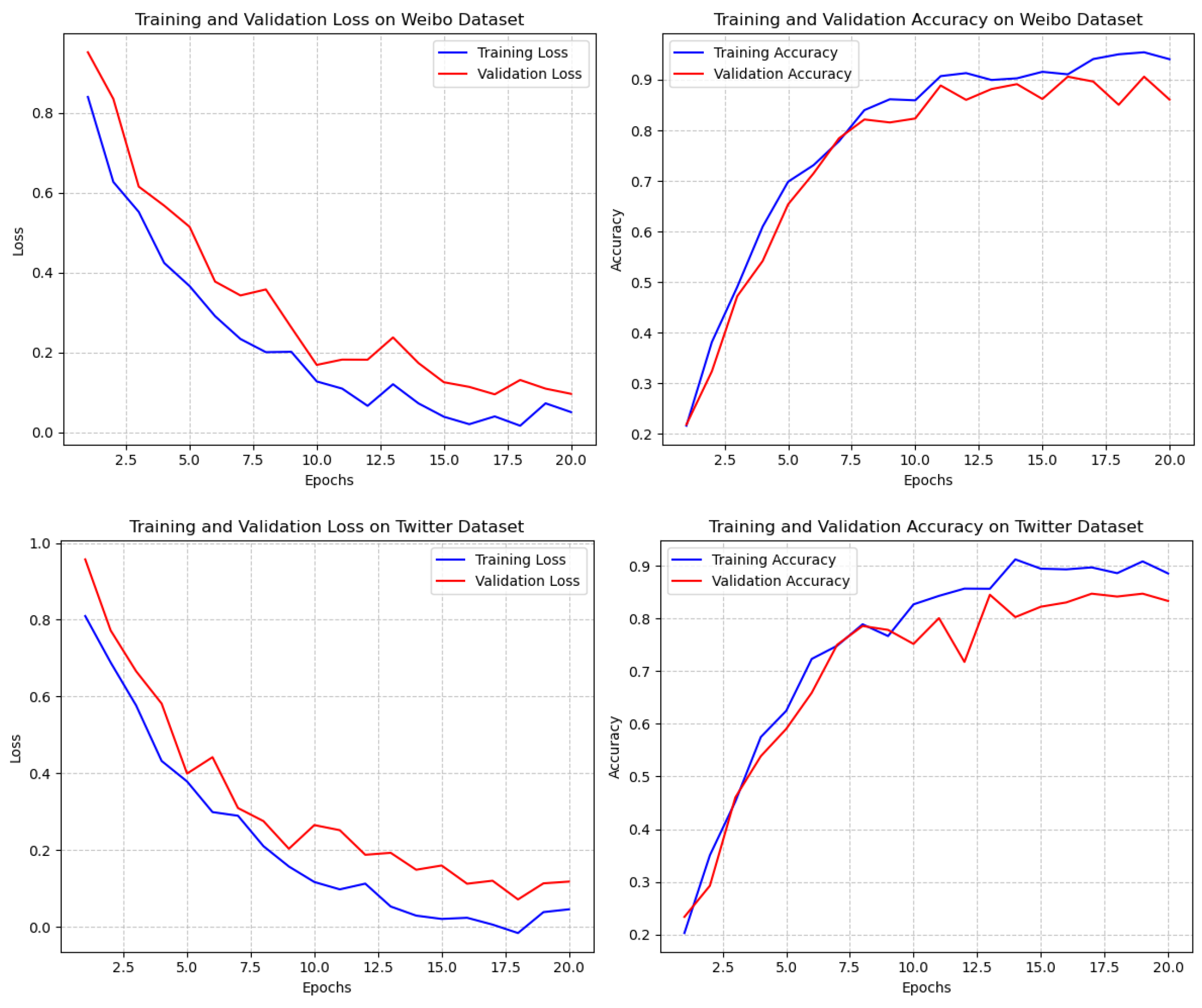

4.2. Comparative Experiments

4.2.1. Baseline Models

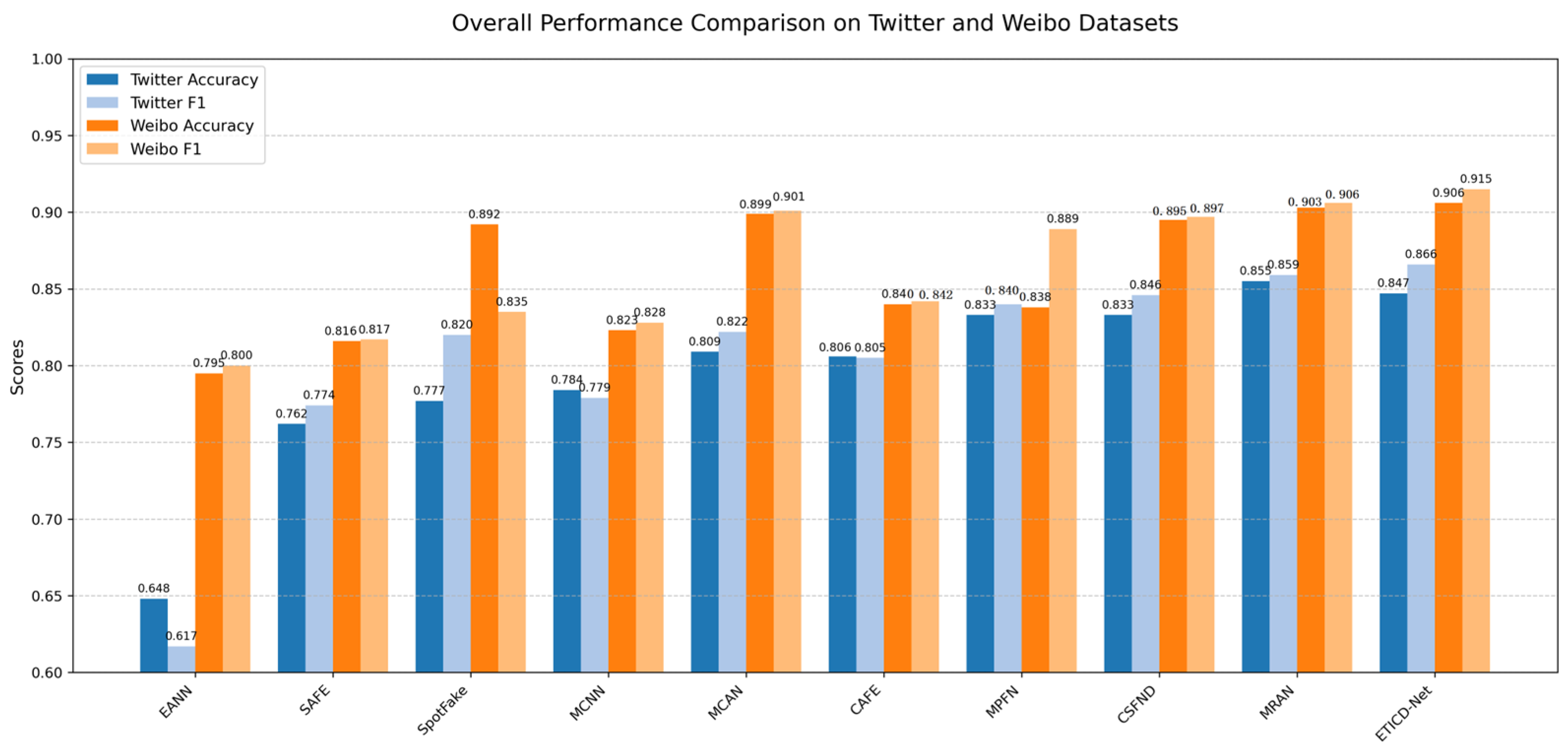

4.2.2. Model Performance Comparison Experiment

- Compared with EANN, which relies on multi-task learning with an event-adversarial objective, ETICD-Net achieves substantially higher accuracy (0.847 vs. 0.648) and F1 score (0.866 vs. 0.617) on the Twitter dataset and also outperforms it on the Weibo dataset (accuracy: 0.906 vs. 0.795; F1: 0.915 vs. 0.800). This suggests that actively guiding semantic interpretation with high-level emotion-topic signals generated by large language models improves overall discriminative capability more effectively than learning event-invariant features alone.

- Compared with the simple similarity metric used by SAFE, ETICD-Net performs better on both the Twitter dataset (accuracy: 0.847 vs. 0.762; F1: 0.866 vs. 0.774) and the Weibo dataset (accuracy: 0.906 vs. 0.816; F1: 0.915 vs. 0.817). This suggests that high-level semantic guidance is more effective than low-level feature similarity for identifying complex patterns of semantic inconsistency.

- Compared with SpotFake's simple feature concatenation strategy, ETICD-Net is better on every metric on the Weibo dataset: accuracy (0.906 vs. 0.892), precision (0.911 vs. 0.888), recall (0.907 vs. 0.810), and F1 score (0.915 vs. 0.835). On the Twitter dataset, SpotFake retains a higher recall (0.900 vs. 0.859), while ETICD-Net has higher accuracy, precision, and F1. This indicates that hierarchical consistency fusion avoids the bottleneck of simple fusion strategies and enables stronger feature interaction.

- Among the state-of-the-art baselines, ETICD-Net achieves a higher F1 score on the Twitter dataset (0.866) than MCAN (0.822), CAFE (0.805), MPFN (0.840), and CSFND (0.846), and it attains the best accuracy (0.906) and F1 score (0.915) on the Weibo dataset. The comparison with MRAN is mixed. On the Twitter dataset, our accuracy (0.847) is slightly lower than MRAN's (0.855), while our F1 score is slightly higher (0.866 vs. 0.859); on the Weibo dataset, ETICD-Net outperforms MRAN in both accuracy (0.906 vs. 0.903) and F1 score (0.915 vs. 0.906). One plausible reading is that the two models have different design priorities: MRAN may handle certain sample types particularly well, whereas ETICD-Net aims for more balanced overall performance through high-level semantic guidance.
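The comparisons above rest on accuracy, precision, recall, and F1. For reference, the sketch below computes these metrics for a binary task from confusion-matrix counts, assuming fake news is the positive class and F1 is the harmonic mean of precision and recall. The paper's exact averaging protocol (positive class only, macro, or weighted) may differ, and `binary_metrics` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> BinaryMetrics:
    """Compute the four reported metrics with 'fake' as the positive class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall, written in count form:
    # 2PR / (P + R) == 2TP / (2TP + FP + FN) whenever TP > 0.
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return BinaryMetrics(accuracy, precision, recall, f1)


if __name__ == "__main__":
    print(binary_metrics(tp=6, fp=2, fn=3, tn=9))
```

Because F1 is the harmonic mean of precision and recall, for a single class it always lies between the two.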

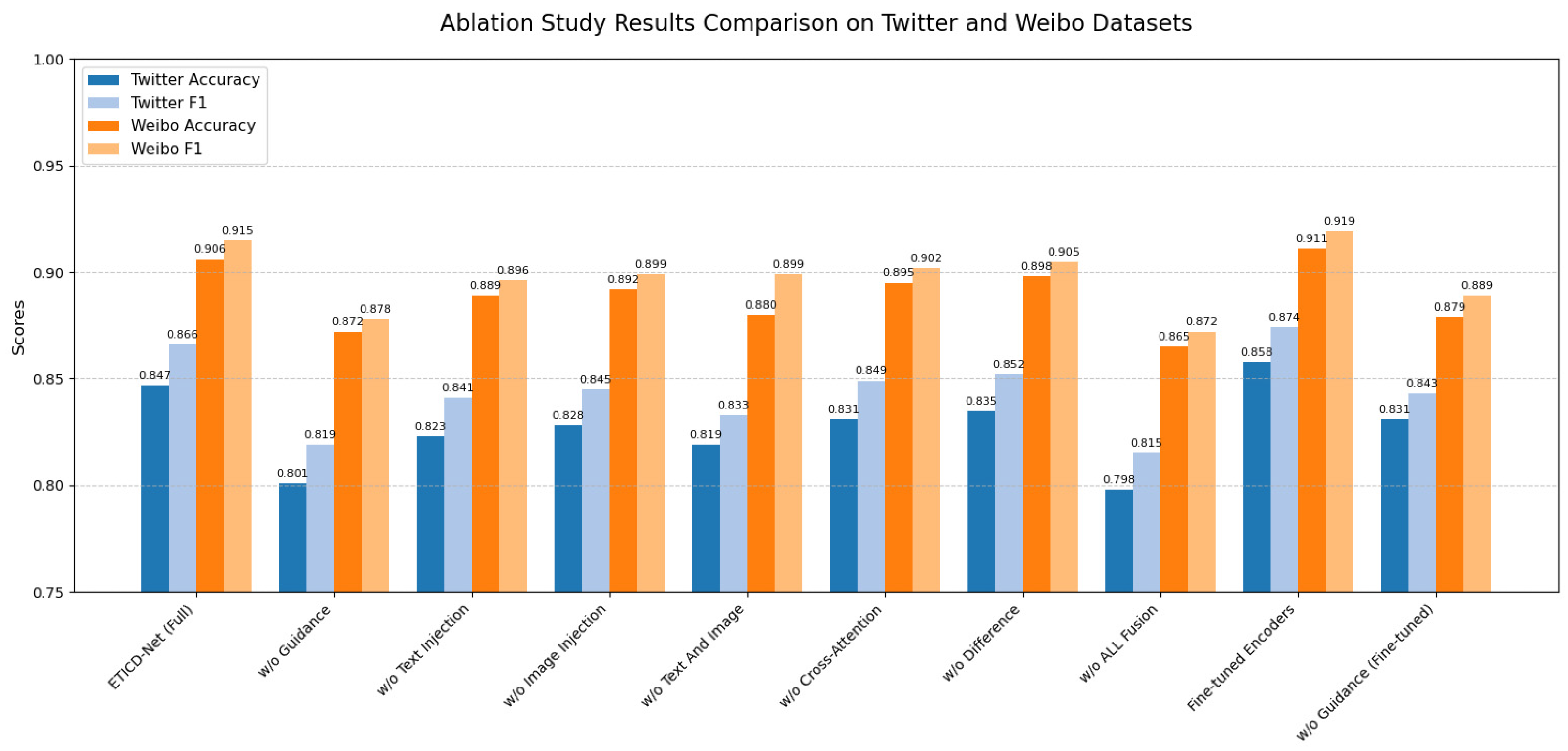

4.3. Ablation Experiments

- Emotion-topic guidance is crucial: Removing the guidance module (w/o Guidance) causes the largest decline of any single-component ablation, with F1 dropping by 4.7 and 3.7 percentage points on the Twitter and Weibo datasets, respectively; only removing the entire fusion module (w/o ALL Fusion) leads to a larger drop. This indicates that the high-level semantic signals provided by large language models are central to the model's effectiveness, particularly for understanding the semantic context of news.

- Dual-modality feature injection is effective: Removing either text or image feature injection alone degrades performance, indicating that injecting guidance signals into both modalities is beneficial. The drop from removing image injection (w/o Image Injection) is slightly smaller than that from removing text injection (w/o Text Injection), with F1 scores of 0.845 vs. 0.841 on Twitter and 0.899 vs. 0.896 on Weibo. This may be because image features are inherently more abstract, whereas text features benefit more directly from the injected emotion-topic information.

- Importance of the enhanced original features: Removing the injected text and image features from the fused representation (w/o Text And Image) degrades performance. This shows that the original features, once enhanced by emotion-topic injection, still carry substantial discriminative information that complements the cross-modal interaction feature and the difference feature. Relying solely on the interaction and difference features while discarding the enhanced original features results in information loss.

- Every component of the hierarchical fusion module contributes: Removing either cross-modal attention (w/o Cross-Attention) or the difference metric (w/o Difference) consistently degrades performance on both datasets, supporting the proposed hierarchical consistency fusion strategy. Cross-modal attention captures fine-grained correlations between modalities, while the difference metric explicitly quantifies the degree of inconsistency; the two are complementary, and inconsistency is captured best when both are present.

- Necessity of the holistic design: The complete model achieves the best performance, and removing any component degrades it. This indicates that emotion-topic guidance, feature injection, and hierarchical fusion work as a tightly coupled whole. Notably, the large margin of the complete model over simple feature concatenation (w/o ALL Fusion; Twitter F1: 0.866 vs. 0.815) supports the proposed multi-level, structured fusion strategy.

- Guidance complements fine-tuning: A variant that fine-tunes the last layers of BERT and ResNet (Fine-tuned Encoders) improves performance further. Crucially, even in this setting, removing the guidance signal (w/o Guidance (Fine-tuned)) lowers performance (Twitter F1: 0.874 vs. 0.843; Weibo F1: 0.919 vs. 0.889). This suggests that emotion-topic guidance provides complementary semantic information that is not captured merely by adapting the encoder parameters to the task, so its role goes beyond that of a simple feature adaptation mechanism.
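The ablations above distinguish three ingredients of the fused representation: the injected original features, a cross-modal interaction feature, and a difference feature. The following NumPy sketch shows one plausible way to assemble them. It assumes single-head scaled dot-product cross-attention from text tokens to image regions, mean pooling, separate linear projections into a common space, and element-wise absolute difference plus cosine similarity as the explicit difference metrics. All names are hypothetical, and the layers and divergence measures used in ETICD-Net may differ.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention: text tokens attend to image regions."""
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    weights = softmax(scores, axis=-1)  # (n_text, n_regions), each row sums to 1
    return weights @ values, weights


def consistency_fusion(text, image, W_common_t, W_common_v):
    """Build [text, image, interaction, difference] features (hypothetical layout).

    text:  (n_text, d) injected text-token features
    image: (n_regions, d) injected image-region features
    W_common_t, W_common_v: (d, k) projections into a shared common space
    """
    # Level 1: fine-grained cross-modal interaction.
    attended, weights = cross_attention(text, image, image)
    interaction = attended.mean(axis=0)  # pooled, text-conditioned image summary

    # Level 2: explicit difference metrics in a common space.
    t_pool, v_pool = text.mean(axis=0), image.mean(axis=0)
    t_common, v_common = t_pool @ W_common_t, v_pool @ W_common_v
    abs_diff = np.abs(t_common - v_common)
    cosine = t_common @ v_common / (
        np.linalg.norm(t_common) * np.linalg.norm(v_common) + 1e-8
    )
    difference = np.concatenate([abs_diff, [cosine]])

    # Level 3: keep the enhanced original features alongside both consistency signals.
    fused = np.concatenate([t_pool, v_pool, interaction, difference])
    return fused, weights


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    d, k = 8, 4
    text = rng.normal(size=(5, d))   # 5 injected text-token features
    image = rng.normal(size=(3, d))  # 3 injected image-region features
    fused, attn = consistency_fusion(
        text, image, rng.normal(size=(d, k)), rng.normal(size=(d, k))
    )
    print(fused.shape, attn.shape)  # (29,) (5, 3)
```

The returned attention weights are the kind of signal that can be inspected for interpretability, and dropping `interaction` or `difference` from the final concatenation loosely mirrors the w/o Cross-Attention and w/o Difference variants.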

4.4. Case Study

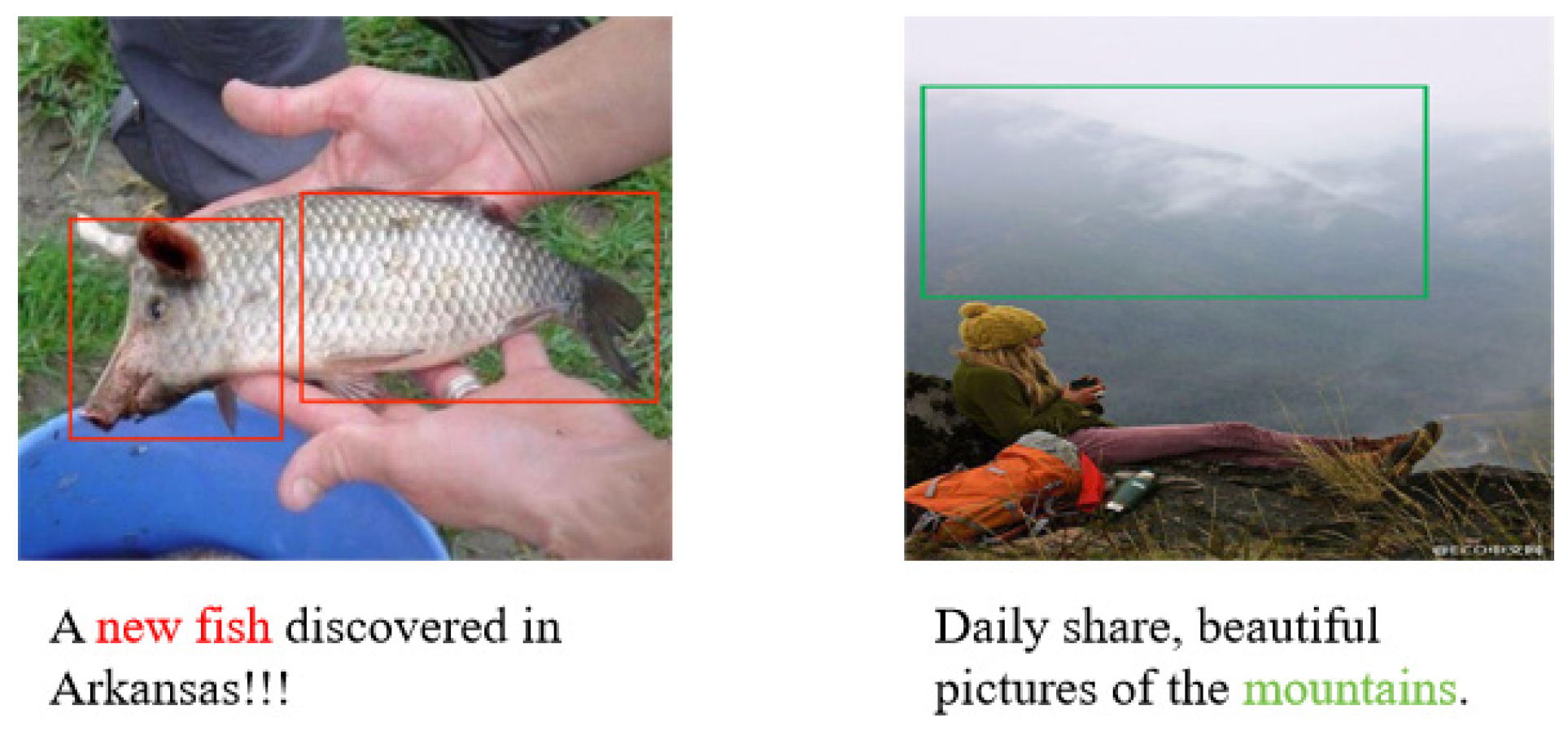

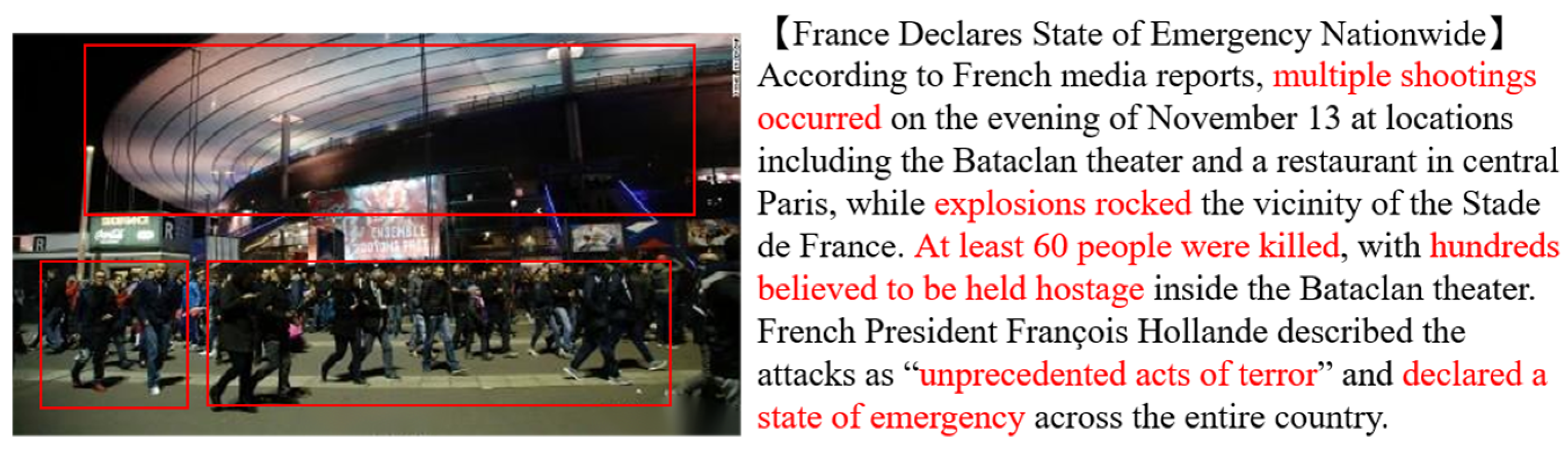

4.4.1. Classic Inconsistency Case: Validating Model Effectiveness

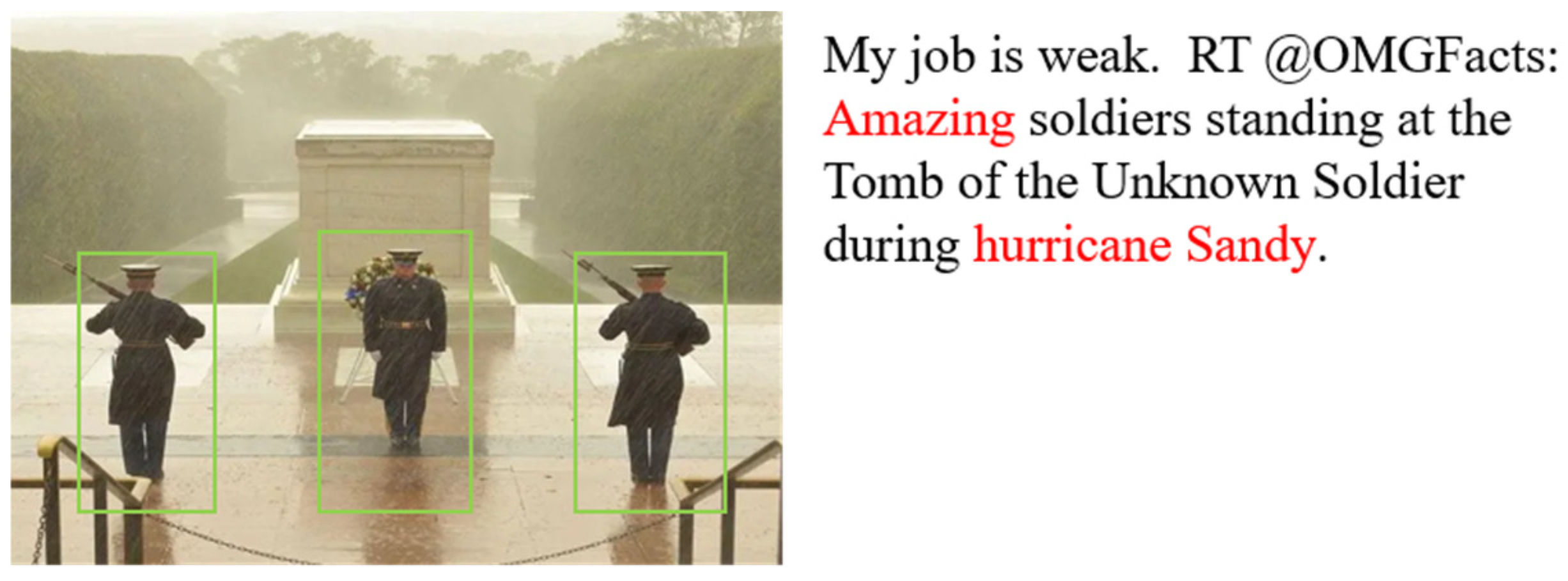

4.4.2. Edge Cases in the Real World

4.4.3. Case Summary

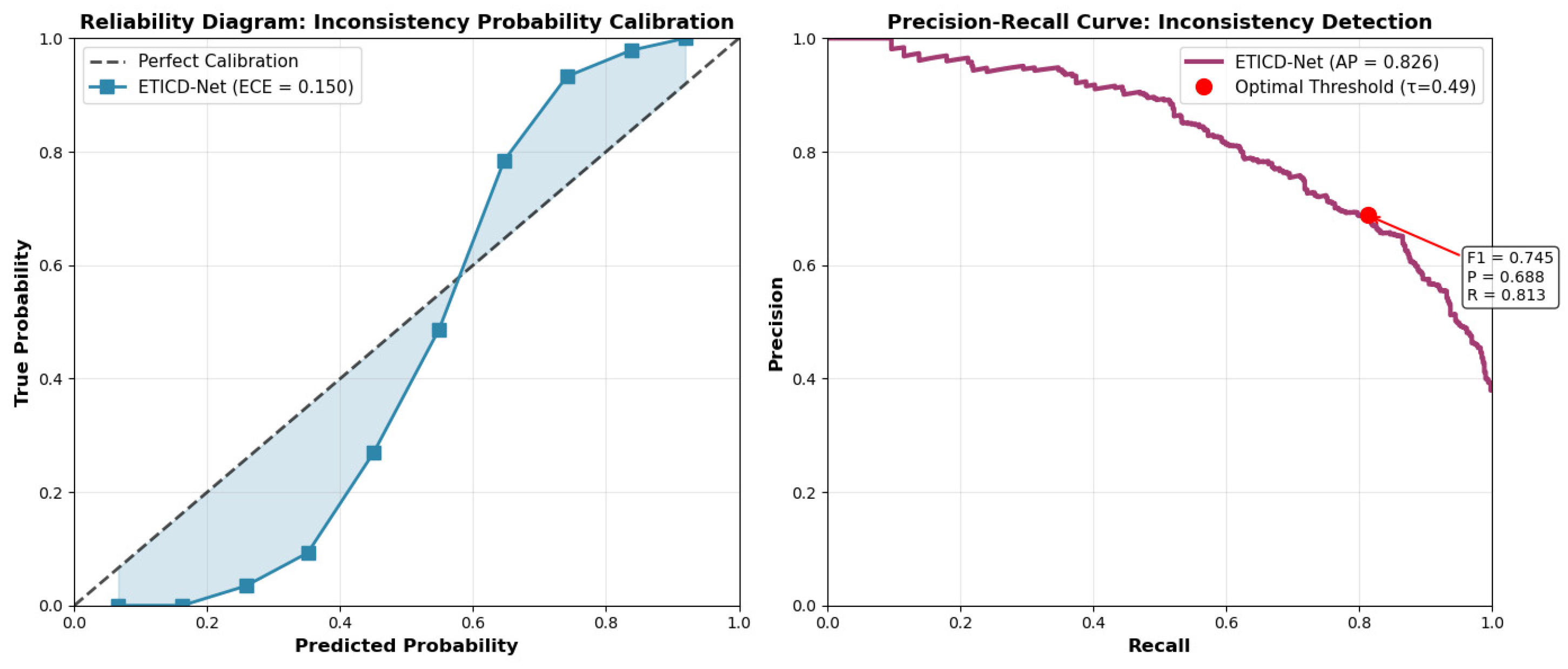

4.5. Interpretability Calibration and Inconsistency Detection

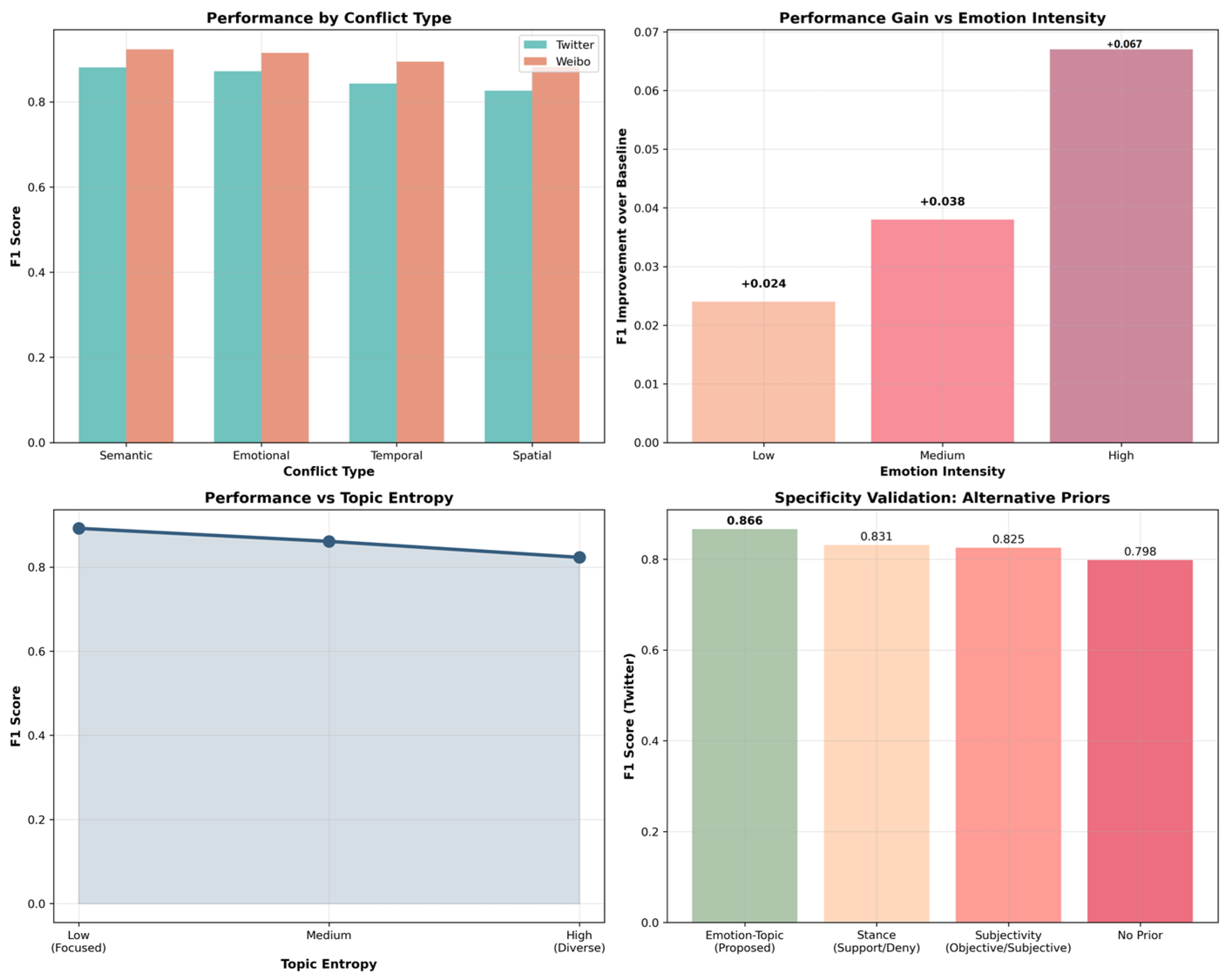

4.6. Emotion-Topic Prior Validation Through Targeted Analysis

5. Conclusions

6. Discussion

6.1. Model Generalizability and Limitations

6.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Plikynas, D.; Rizgeliene, I.; Korvel, G. Systematic Review of Fake News, Propaganda, and Disinformation: Examining Authors, Content, and Social Impact Through Machine Learning. IEEE Access 2025, 13, 17583–17629.

- Wan, M.; Zhong, Y.; Gao, X.; Lee, S.Y.M.; Huang, C.-R. Fake News, Real Emotions: Emotion Analysis of COVID-19 Infodemic in Weibo. IEEE Trans. Affect. Comput. 2024, 15, 815–827.

- Tufchi, S.; Yadav, A.; Ahmed, T. A comprehensive survey of multimodal fake news detection techniques: Advances, challenges, and opportunities. Int. J. Multim. Inf. Retr. 2023, 12, 28.

- Li, X.; Qiao, J.; Yin, S.; Wu, L.; Gao, C.; Wang, Z.; Li, X. A Survey of Multimodal Fake News Detection: A Cross-Modal Interaction Perspective. IEEE Trans. Emerg. Top. Comput. Intell. 2025, 9, 2658–2675.

- Wang, Y.; Ma, F.; Jin, Z.; Yuan, Y.; Xun, G.; Jha, K.; Su, L.; Gao, J. EANN: Event Adversarial Neural Networks for Multi-Modal Fake News Detection. In Proceedings of the KDD 2018: The 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 849–857.

- Khattar, D.; Goud, J.S.; Gupta, M.; Varma, V. MVAE: Multimodal Variational Autoencoder for Fake News Detection. In Proceedings of the WWW 2019: The Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2915–2921.

- Singhal, S.; Shah, R.R.; Chakraborty, T.; Kumaraguru, P.; Satoh, S. SpotFake: A Multi-modal Framework for Fake News Detection. In Proceedings of the 2019 IEEE International Conference on Multimedia Big Data (BigMM), Singapore, 11–13 September 2019; pp. 39–47.

- Hu, L.; Zhao, Z.; Qi, W.; Song, X.; Nie, L. Multimodal matching-aware co-attention networks with mutual knowledge distillation for fake news detection. Inf. Sci. 2024, 664, 120310.

- Du, P.; Gao, Y.; Li, L.; Li, X. SGAMF: Sparse Gated Attention-Based Multimodal Fusion Method for Fake News Detection. IEEE Trans. Big Data 2025, 11, 540–552.

- Qu, Z.; Zhou, F.; Song, X.; Ding, R.; Yuan, L.; Wu, Q. Temporal Enhanced Multimodal Graph Neural Networks for Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2024, 11, 7286–7298.

- Huang, Z.; Lu, D.; Sha, Y. Multi-Hop Attention Diffusion Graph Neural Networks for Multimodal Fake News Detection. In Proceedings of the ICASSP 2025: IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 13–18 April 2025; pp. 1–5.

- Chen, H.; Wang, H.; Liu, Z.; Li, Y.; Hu, Y.; Zhang, Y.; Shu, K.; Li, R.; Yu, P.S. Multi-modal Robustness Fake News Detection with Cross-Modal and Propagation Network Contrastive Learning. Knowl. Based Syst. 2025, 309, 112800.

- Cao, B.; Wu, Q.; Cao, J.; Liu, B.; Gui, J. External Reliable Information-enhanced Multimodal Contrastive Learning for Fake News Detection. In Proceedings of the AAAI 2025: The 39th Annual AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 22–26 February 2025; pp. 31–39.

- Wu, Y.; Zhan, P.; Zhang, Y.; Wang, L.; Xu, Z. Multimodal Fusion with Co-Attention Networks for Fake News Detection. In Proceedings of the ACL/IJCNLP 2021: Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online, 1–6 August 2021; pp. 2560–2569.

- Eyu, J.M.; Yau, K.-L.A.; Liu, L.; Chong, Y.-W. Reinforcement learning in sentiment analysis: A review and future directions. Artif. Intell. Rev. 2025, 58, 6.

- Koukaras, P.; Rousidis, D.; Tjortjis, C. Unraveling Microblog Sentiment Dynamics: A Twitter Public Attitudes Analysis towards COVID-19 Cases and Deaths. Informatics 2023, 10, 88.

- Wang, R.; Yang, Q.; Tian, S.; Yu, L.; He, X.; Wang, B. Transformer-based correlation mining network with self-supervised label generation for multimodal sentiment analysis. Neurocomputing 2025, 618, 129163.

- Wu, H.; Kong, D.; Wang, L.; Li, D.; Zhang, J.; Han, Y. Multimodal sentiment analysis method based on image-text quantum transformer. Neurocomputing 2025, 637, 130107.

- Sethurajan, M.R.; Natarajan, K. Performance analysis of semantic veracity enhance (SVE) classifier for fake news detection and demystifying the online user behaviour in social media using sentiment analysis. Soc. Netw. Anal. Min. 2024, 14, 36.

- Bounaama, R.; Abderrahim, M.E.A. Classifying COVID-19 Related Tweets for Fake News Detection and Sentiment Analysis with BERT-based Models. arXiv 2023, arXiv:2304.00636.

- Kula, S.; Choras, M.; Kozik, R.; Ksieniewicz, P.; Wozniak, M. Sentiment Analysis for Fake News Detection by Means of Neural Networks. In Proceedings of the ICCS 2020: International Conference on Computational Science, Amsterdam, The Netherlands, 3–5 June 2020; pp. 653–666.

- Zhang, H.; Li, Z.; Liu, S.; Huang, T.; Ni, Z.; Zhang, J.; Lv, Z. Do Sentence-Level Sentiment Interactions Matter? Sentiment Mixed Heterogeneous Network for Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2024, 11, 5090–5100.

- Kumari, R.; Ashok, N.; Ghosal, T.; Ekbal, A. A Multitask Learning Approach for Fake News Detection: Novelty, Emotion, and Sentiment Lend a Helping Hand. In Proceedings of the IJCNN 2021: International Joint Conference on Neural Networks, Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Jiang, S.; Guo, Z.; Ouyang, J. What makes sentiment signals work? Sentiment and stance multi-task learning for fake news detection. Knowl. Based Syst. 2024, 303, 112395.

- Zhou, X.; Wu, J.; Zafarani, R. SAFE: Similarity-Aware Multi-modal Fake News Detection. In Proceedings of the PAKDD 2020: Pacific-Asia Conference on Knowledge Discovery and Data Mining, Singapore, 11–14 May 2020; pp. 354–367.

- Wang, Z.; Huang, D.; Cui, J.; Zhang, X.; Ho, S.-B.; Cambria, E. A review of Chinese sentiment analysis: Subjects, methods, and trends. Artif. Intell. Rev. 2025, 58, 75.

- Sun, Q.H.; Gao, B. Emotion-enhanced Cross-modal Contrastive Learning for Fake News Detection. In Proceedings of the 2025 2nd International Conference on Generative Artificial Intelligence and Information Security, Hangzhou, China, 21–23 February 2025; pp. 184–189.

- Toughrai, Y.; Langlois, D.; Smaïli, K. Fake News Detection via Intermediate-Layer Emotional Representations. In Proceedings of the WWW '25: The ACM Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; pp. 2680–2684.

- Tan, Z.H.; Zhang, T. Emotion-semantic interaction network for fake news detection: Perspectives on question and non-question comment semantics. Inf. Process. Manag. 2026, 63, 104391.

- Chen, Y.; Li, D.; Zhang, P.; Sui, J.; Lv, Q.; Lu, T.; Shang, L. Cross-modal Ambiguity Learning for Multimodal Fake News Detection. In Proceedings of the WWW 2022: The Web Conference, Lyon, France, 25–29 April 2022; pp. 2897–2905.

- Sun, M.; Zhang, X.; Ma, J.; Xie, S.; Liu, Y.; Yu, P.S. Inconsistent Matters: A Knowledge-Guided Dual-Consistency Network for Multi-Modal Rumor Detection. IEEE Trans. Knowl. Data Eng. 2023, 35, 12736–12749.

- Yu, H.; Wu, H.; Fang, X.; Li, M.; Zhang, H. SR-CIBN: Semantic relationship-based consistency and inconsistency balancing network for multimodal fake news detection. Neurocomputing 2025, 635, 129997.

- Wang, L.; Zhang, C.; Xu, H.; Xu, Y.; Xu, X.; Wang, S. Cross-modal Contrastive Learning for Multimodal Fake News Detection. In Proceedings of the ACM Multimedia 2023: 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5696–5704.

- Jiang, Y.; Wang, T.; Xu, X.; Wang, Y.; Song, X.; Maynard, D. Cross-modal augmentation for few-shot multimodal fake news detection. Eng. Appl. Artif. Intell. 2025, 142, 109931.

- Ying, Q.; Hu, X.; Zhou, Y.; Qian, Z.; Zeng, D.; Ge, S. Bootstrapping Multi-View Representations for Fake News Detection. In Proceedings of the AAAI 2023: 37th AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 5384–5392.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019: Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the CVPR 2016: IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Jin, Z.; Cao, J.; Zhang, Y.; Zhou, J.; Tian, Q. Novel Visual and Statistical Image Features for Microblogs News Verification. IEEE Trans. Multim. 2017, 19, 598–608.

- Boididou, C.; Papadopoulos, S.; Zampoglou, M.; Apostolidis, L.; Papadopoulou, O.; Kompatsiaris, Y. Detection and visualization of misleading content on Twitter. Int. J. Multim. Inf. Retr. 2018, 7, 71–86.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the ICLR 2015: International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Xue, J.; Wang, Y.; Tian, Y.; Li, Y.; Shi, L.; Wei, L. Detecting fake news by exploring the consistency of multimodal data. Inf. Process. Manag. 2021, 58, 102610.

- Jing, J.; Wu, H.; Sun, J.; Fang, X.; Zhang, H. Multimodal fake news detection via progressive fusion networks. Inf. Process. Manag. 2023, 60.

- Peng, L.; Jian, S.; Kan, Z.; Qiao, L.; Li, D.S. Not all fake news is semantically similar: Contextual semantic representation learning for multimodal fake news detection. Inf. Process. Manag. 2024, 61, 103564.

- Yang, H.; Zhang, J.; Zhang, L.; Cheng, X.; Hu, Z. MRAN: Multimodal relationship-aware attention network for fake news detection. Comput. Stand. Interfaces 2024, 89, 103822.

| Method | Twitter Accuracy | Twitter Precision | Twitter Recall | Twitter F1 | Weibo Accuracy | Weibo Precision | Weibo Recall | Weibo F1 |
|---|---|---|---|---|---|---|---|---|
| EANN | 0.648 | 0.810 | 0.498 | 0.617 | 0.795 | 0.806 | 0.795 | 0.800 |
| SAFE | 0.762 | 0.831 | 0.724 | 0.774 | 0.816 | 0.818 | 0.815 | 0.817 |
| SpotFake | 0.777 | 0.751 | 0.900 | 0.820 | 0.892 | 0.888 | 0.810 | 0.835 |
| MCNN | 0.784 | 0.778 | 0.781 | 0.779 | 0.823 | 0.858 | 0.801 | 0.828 |
| MCAN | 0.809 | 0.889 | 0.765 | 0.822 | 0.899 | 0.913 | 0.889 | 0.901 |
| CAFE | 0.806 | 0.807 | 0.799 | 0.805 | 0.840 | 0.855 | 0.830 | 0.842 |
| MPFN | 0.833 | 0.846 | 0.921 | 0.840 | 0.838 | 0.857 | 0.894 | 0.889 |
| CSFND | 0.833 | 0.899 | 0.799 | 0.846 | 0.895 | 0.899 | 0.895 | 0.897 |
| MRAN | 0.855 | 0.861 | 0.857 | 0.859 | 0.903 | 0.904 | 0.908 | 0.906 |
| ETICD-Net | 0.847 | 0.841 | 0.859 | 0.866 | 0.906 | 0.911 | 0.907 | 0.915 |

| Model Variant | Twitter Accuracy | Twitter F1 | Weibo Accuracy | Weibo F1 | Description |
|---|---|---|---|---|---|
| ETICD-Net (Full) | 0.847 | 0.866 | 0.906 | 0.915 | Complete model |
| w/o Guidance | 0.801 | 0.819 | 0.872 | 0.878 | Remove emotion-topic guidance |
| w/o Text Injection | 0.823 | 0.841 | 0.889 | 0.896 | Remove text feature injection |
| w/o Image Injection | 0.828 | 0.845 | 0.892 | 0.899 | Remove image feature injection |
| w/o Text And Image | 0.819 | 0.833 | 0.880 | 0.899 | Remove injected text and image features from fusion |
| w/o Cross-Attention | 0.831 | 0.849 | 0.895 | 0.902 | Replace cross-modal attention |
| w/o Difference | 0.835 | 0.852 | 0.898 | 0.905 | Remove difference metric |
| w/o ALL Fusion | 0.798 | 0.815 | 0.865 | 0.872 | Simple feature concatenation |
| Fine-tuned Encoders | 0.858 | 0.874 | 0.911 | 0.919 | Fine-tune last layer of BERT/ResNet |
| w/o Guidance (Fine-tuned) | 0.831 | 0.843 | 0.879 | 0.889 | Remove guidance from fine-tuned model |
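The F1 drops quoted in the ablation discussion are absolute differences in percentage points. The short script below recomputes them from the ablation table above; the scores are copied from the table, and `f1_drops` is a hypothetical helper written only for this check.

```python
# F1 scores copied from the ablation table: (Twitter F1, Weibo F1).
ABLATION_F1 = {
    "ETICD-Net (Full)": (0.866, 0.915),
    "w/o Guidance": (0.819, 0.878),
    "w/o Text Injection": (0.841, 0.896),
    "w/o Image Injection": (0.845, 0.899),
    "w/o Text And Image": (0.833, 0.899),
    "w/o Cross-Attention": (0.849, 0.902),
    "w/o Difference": (0.852, 0.905),
    "w/o ALL Fusion": (0.815, 0.872),
}


def f1_drops(table, reference="ETICD-Net (Full)"):
    """Return {variant: (Twitter drop, Weibo drop)} in percentage points."""
    ref_tw, ref_wb = table[reference]
    return {
        name: (round((ref_tw - tw) * 100, 1), round((ref_wb - wb) * 100, 1))
        for name, (tw, wb) in table.items()
        if name != reference
    }


if __name__ == "__main__":
    # Print variants ordered by their Twitter F1 drop, largest first.
    drops = f1_drops(ABLATION_F1)
    for name, (tw, wb) in sorted(drops.items(), key=lambda kv: -kv[1][0]):
        print(f"{name:22s} Twitter -{tw:.1f} pp  Weibo -{wb:.1f} pp")
```

On the table values, removing guidance costs 4.7 and 3.7 points on Twitter and Weibo, the largest single-component drop, while replacing the whole fusion module with simple concatenation (w/o ALL Fusion) costs 5.1 and 4.3 points.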

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shang, W.; Yang, J.; Zhang, L.; Yi, T.; Liu, P. ETICD-Net: A Multimodal Fake News Detection Network via Emotion-Topic Injection and Consistency Modeling. Informatics 2025, 12, 129. https://doi.org/10.3390/informatics12040129

Shang W, Yang J, Zhang L, Yi T, Liu P. ETICD-Net: A Multimodal Fake News Detection Network via Emotion-Topic Injection and Consistency Modeling. Informatics. 2025; 12(4):129. https://doi.org/10.3390/informatics12040129

Chicago/Turabian Style: Shang, Wenqian, Jinru Yang, Linlin Zhang, Tong Yi, and Peng Liu. 2025. "ETICD-Net: A Multimodal Fake News Detection Network via Emotion-Topic Injection and Consistency Modeling" Informatics 12, no. 4: 129. https://doi.org/10.3390/informatics12040129

APA Style: Shang, W., Yang, J., Zhang, L., Yi, T., & Liu, P. (2025). ETICD-Net: A Multimodal Fake News Detection Network via Emotion-Topic Injection and Consistency Modeling. Informatics, 12(4), 129. https://doi.org/10.3390/informatics12040129