The Impact of Short, Structured ENT Teaching Interventions on Junior Doctors’ Confidence and On-Call Preparedness: A Systematic Review

Highlights

- Short, structured ENT teaching (bootcamps, simulation and workshops) consistently improved junior doctors’ immediate confidence and knowledge; two studies also showed gains in blinded, objectively assessed performance.

- The evidence is of moderate quality (mean MERSQI 10.0) and comes largely from single-group pre–post studies with limited follow-up, which constrains causal inference and claims about retention.

- Services can implement a three-arm programme of e-learning (core knowledge), case-based discussions (decision-making), and simulation (hands-on skills), incorporating observed assessment and a defined core outcome set.

- Future studies should use comparative designs with blinded scoring and 2–3-month follow-up to evaluate behavioural and clinical impact (Kirkpatrick levels 3–4), and should report instrument validity.

Abstract

1. Introduction

- What types of short, structured ENT teaching interventions have been implemented for junior doctors since 2015?

- How effective are these interventions in improving confidence, knowledge, and preparedness for ENT on-call responsibilities?

- What insights from current interventions can inform the development of a scalable, standardised teaching framework for postgraduate ENT training?

1.1. Aim

1.2. Objectives

- To systematically identify and describe short, structured ENT induction/teaching interventions for junior doctors implemented since 2015.

- To synthesise evidence on confidence, knowledge, and observed performance (Miller’s ‘shows how’/‘does’ levels), including how studies reported follow-up and instrument validity.

- To propose a scalable three-arm framework (e-learning, interactive cases, simulation) and priority evaluation methods (comparative designs, blinded assessment, 2–3-month follow-up, core outcomes).
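The priority evaluation methods named in the last objective can be read as a checklist for any future course evaluation. The Python sketch below encodes that checklist; the `EvaluationPlan` class, its field names and the `unmet_priorities` helper are hypothetical illustrations rather than instruments used in the review, and the follow-up rule assumes that anything shorter than two months falls short of the recommended 2–3 months.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical description of how a short ENT course is evaluated.
# Field names are illustrative assumptions, not taken from the review.
@dataclass
class EvaluationPlan:
    design: str                  # "single-group pre-post", "two-group" or "RCT"
    blinded_assessment: bool     # performance scored by raters blind to group/timepoint
    follow_up_months: float      # 0 if outcomes are measured only immediately after
    reports_core_outcomes: bool  # a defined core outcome set is reported
    reports_validity: bool       # validity evidence is reported for each instrument


COMPARATIVE_DESIGNS = {"two-group", "RCT"}


def unmet_priorities(plan: EvaluationPlan) -> list[str]:
    """List the priority evaluation methods that the plan does not yet meet."""
    gaps: list[str] = []
    if plan.design not in COMPARATIVE_DESIGNS:
        gaps.append("comparative design")
    if not plan.blinded_assessment:
        gaps.append("blinded assessment")
    # Assumption: any follow-up shorter than 2 months counts as a gap.
    if plan.follow_up_months < 2:
        gaps.append("follow-up of at least 2 months")
    if not plan.reports_core_outcomes:
        gaps.append("core outcome set")
    if not plan.reports_validity:
        gaps.append("instrument validity")
    return gaps


if __name__ == "__main__":
    # A typical single-group pre-post course with an immediate post-test only.
    typical = EvaluationPlan("single-group pre-post", False, 0, False, False)
    print(unmet_priorities(typical))  # all five priorities are flagged
```

A plan with a comparative design, blinded scoring, 2.5-month follow-up, a core outcome set and validity reporting returns an empty list.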

2. Materials and Methods

2.1. Study Design

2.2. Research Question and Framework

2.3. Search Strategy

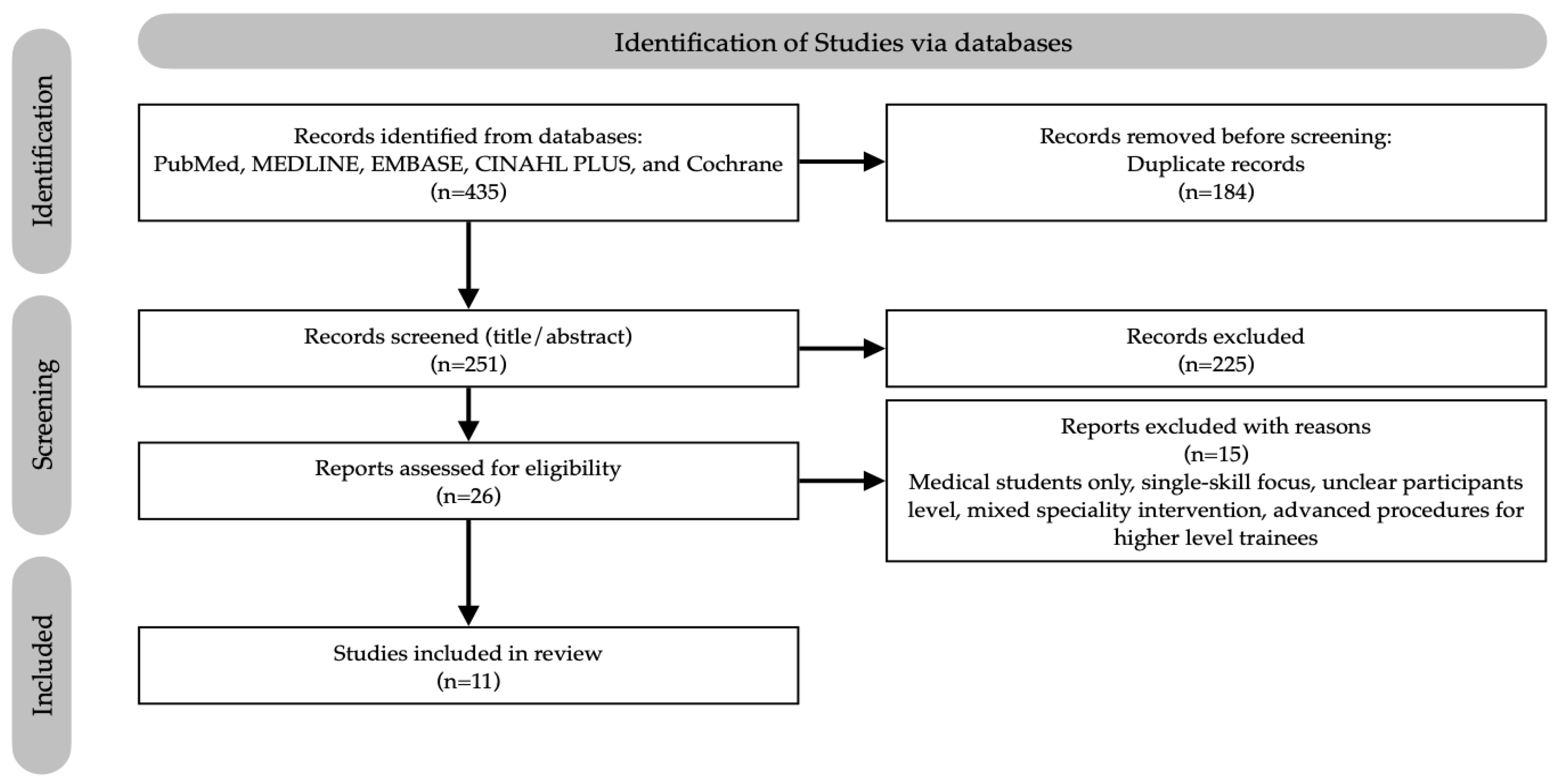

2.4. Study Selection

- Inclusion criteria: ENT-specific short courses for junior doctors, reporting outcomes on confidence, preparedness, knowledge, or competence, published between 2015 and 2025.

- Exclusion criteria: Studies focused solely on undergraduate medical students, interventions where ENT was a minor or embedded component of a broader programme, editorials, opinion pieces, and studies lacking defined methodologies or measurable outcome data.
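The eligibility criteria above act as a sequential screening rule. A minimal Python sketch, assuming a simplified and hypothetical record format (the `Record` fields and the `screen` function are illustrative and were not part of the review's screening process), shows how each criterion maps to an include/exclude decision with a stated reason:

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical, simplified screening record; field names are illustrative.
@dataclass
class Record:
    year: int
    undergraduate_only: bool         # population is solely undergraduate medical students
    ent_specific_short_course: bool  # False if ENT is a minor part of a broader programme
    publication_type: str            # e.g. "primary study", "editorial", "opinion"
    defined_methods: bool            # methodology is described
    outcomes: set[str] = field(default_factory=set)  # outcomes reported with data


ELIGIBLE_OUTCOMES = {"confidence", "preparedness", "knowledge", "competence"}


def screen(r: Record) -> tuple[bool, str]:
    """Apply the inclusion/exclusion criteria in order; return (include?, reason)."""
    if not 2015 <= r.year <= 2025:
        return False, "published outside 2015-2025"
    if r.undergraduate_only:
        return False, "undergraduate-only population"
    if not r.ent_specific_short_course:
        return False, "ENT is a minor or embedded component"
    if r.publication_type in {"editorial", "opinion"}:
        return False, "editorial or opinion piece"
    if not r.defined_methods or not (r.outcomes & ELIGIBLE_OUTCOMES):
        return False, "no defined methods or measurable outcome data"
    return True, "meets inclusion criteria"


if __name__ == "__main__":
    # An ENT-specific workshop for interns reporting MCQ knowledge scores.
    print(screen(Record(2024, False, True, "primary study", True, {"knowledge"})))
```

Ordering the checks this way returns the first failed criterion, which mirrors how exclusion reasons are typically logged for a PRISMA flow diagram.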

2.5. Critical and Ethical Appraisal

2.6. Risk of Bias Appraisal

2.7. Data Extraction and Synthesis

2.8. Ethical Approval and Registration

3. Results

3.1. Study Selection

3.2. Study Characteristics

3.3. Methodological Quality and Key Findings

3.4. Synthesis of Results

3.4.1. Self-Reported Confidence and Competence

3.4.2. Knowledge Acquisition

3.4.3. Clinical Performance

3.4.4. Learner Satisfaction and Perception

4. Discussion

4.1. Summary of Major Findings

4.2. Interpretation in the Context of Existing Literature

4.3. Strengths and Limitations of the Review

4.4. Implications for Practice and Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DREEM | Dundee Ready Education Environment Measure |
| ENT | Ear, Nose and Throat |
| GP/GPST | General Practitioner/General Practice Specialty Trainee |
| MCQ/MCQs | Multiple-Choice Question(s) |
| MERSQI | Medical Education Research Study Quality Instrument |
| OSF | Open Science Framework |
| PICOS | Population, Intervention, Comparison, Outcomes, Study Design framework |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RCT | Randomised Controlled Trial |
| SHO | Senior House Officer |
| SSES | Satisfaction with Simulation Experience Scale |
| SWiM | Synthesis Without Meta-analysis |

References

1. Dean, K.M.; DeMason, C.E.; Choi, S.S.; Malloy, K.M.; Malekzadeh, S. Otolaryngology boot camps: Current landscape and future directions. Laryngoscope 2019, 129, 2707–2712.

2. Hayois, L.; Dunsmore, A. Common and serious ENT presentations in primary care. InnovAiT 2023, 16, 79–86.

3. Gundle, L.; Guest, O.; Hyland, L.D.; Khan, A.; Grimes, C.; Nunney, I.; Tailor, B.V.; RecENT SHO Collaborators. RecENT SHO (Rotating onto ear, nose and throat surgery): How well are new Senior House Officers prepared and supported? A UK-wide multi-centre survey. Clin. Otolaryngol. 2023, 48, 785–789.

4. Rai, A.; Shukla, S.; Mehtani, N.; Acharya, V.; Tolley, N. Does a junior doctor focused ‘Bootcamp’ improve the confidence and preparedness of newly appointed ENT registrars to perform their job roles? BMC Med. Educ. 2024, 24, 702.

5. Morris, S.; Owens, D.; Cserzo, D. Learning needs of junior doctors in otolaryngology: A qualitative study. J. Laryngol. Otol. 2024, 138, 592–600.

6. Ferguson, G.R.; Bacila, I.A.; Swamy, M. Does current provision of undergraduate education prepare UK medical students in ENT? A systematic literature review. BMJ Open 2016, 6, e010054.

7. Mayer, A.W.; Smith, K.A.; Carrie, S. A survey of ENT undergraduate teaching in the UK. J. Laryngol. Otol. 2020, 134, 553–557.

8. Smith, M.E.; Navaratnam, A.; Jablenska, L.; Dimitriadis, P.A.; Sharma, R. A randomized controlled trial of simulation-based training for ear, nose, and throat emergencies. Laryngoscope 2015, 125, 1816–1821.

9. Swords, C.; Smith, M.E.; Wasson, J.D.; Qayyum, A.; Tysome, J.R. Validation of a new ENT emergencies course for first-on-call doctors. J. Laryngol. Otol. 2017, 131, 106–112.

10. Bhalla, S.; Beegun, I.; Awad, Z.; Tolley, N. Simulation-based ENT induction: Validation of a novel mannequin training model. J. Laryngol. Otol. 2020, 134, 74–80.

11. Chin, C.J.; Chin, C.A.; Roth, K.; Rotenberg, B.W.; Fung, K. Simulation-based otolaryngology–head and neck surgery boot camp: ‘how I do it’. J. Laryngol. Otol. 2016, 130, 284–290.

12. Giri, S.; Khan, S.A.; Parajuli, S.B.; Rauniyar, Z.; Rimal, A. Evaluating a specialized workshop on otorhinolaryngology emergencies for junior doctors: Empowering the next generation of healers. Medicine 2024, 103, e40771.

13. Cervenka, B.P.; Hsieh, T.; Lin, S.; Bewley, A. Multi-institutional regional otolaryngology bootcamp. Ann. Otol. Rhinol. Laryngol. 2020, 129, 605–610.

14. Association for Simulated Practice in Healthcare (ASPiH). ASPiH Standards 2023: Simulation-Based Practice in Health and Care; ASPiH: Bournemouth, UK, 2023; Available online: https://aspih.org.uk/wp-content/uploads/2023/11/ASPiH-Standards-2023-CDN-Final.pdf (accessed on 5 October 2025).

15. Health Education England. A National Framework for Simulation-Based Education (SBE); Health Education England: Leeds, UK, 2018; Available online: https://www.hee.nhs.uk/sites/default/files/documents/National%20framework%20for%20simulation%20based%20education.pdf (accessed on 5 October 2025).

16. Kirkpatrick, D.; Kirkpatrick, J. Evaluating Training Programs: The Four Levels; Berrett-Koehler: San Francisco, CA, USA, 2006.

17. Shadish, W.R.; Cook, T.D.; Campbell, D.T. Experimental and Quasi-Experimental Designs for Generalized Causal Inference; Houghton Mifflin: Boston, MA, USA, 2002.

18. Kruger, J.; Dunning, D. Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J. Pers. Soc. Psychol. 1999, 77, 1121.

19. Miller, G.E. The assessment of clinical skills/competence/performance. Acad. Med. 1990, 65, S63–S67.

20. Popay, J.; Roberts, H.; Sowden, A.; Petticrew, M.; Arai, L.; Rodgers, M.; Britten, N.; Roen, K.; Duffy, S. Guidance on the Conduct of Narrative Synthesis in Systematic Reviews: A Product from the ESRC Methods Programme; Institute of Health Research, University of Lancaster: Lancaster, UK, 2006; Available online: https://www.academia.edu/download/39246301/02e7e5231e8f3a6183000000.pdf (accessed on 5 October 2025).

21. Richardson, W.S.; Wilson, M.C.; Nishikawa, J.; Hayward, R.S. The well-built clinical question: A key to evidence-based decisions. ACP J. Club 1995, 123, 12.

22. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

23. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210.

24. Reed, D.A.; Beckman, T.J.; Wright, S.M.; Levine, R.B.; Kern, D.E.; Cook, D.A. Predictive validity evidence for Medical Education Research Study Quality Instrument scores: Quality of submissions to JGIM’s Medical Education Special Issue. J. Gen. Intern. Med. 2008, 23, 903–907.

25. Reed, D.A.; Cook, D.A.; Beckman, T.J.; Levine, R.B.; Kern, D.E.; Wright, S.M. Association between funding and quality of published medical education research. JAMA 2007, 298, 1002–1009.

26. Sterne, J.A.; Hernán, M.A.; Reeves, B.C.; Savović, J.; Berkman, N.D.; Viswanathan, M.; Henry, D.; Altman, D.G.; Ansari, M.T.; Boutron, I.; et al. ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ 2016, 355, i4919.

27. Sterne, J.A.C.; Savović, J.; Page, M.J.; Elbers, R.G.; Blencowe, N.S.; Boutron, I.; Cates, C.J.; Cheng, H.Y.; Corbett, M.S.; Eldridge, S.M.; et al. RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ 2019, 366, l4898.

28. Campbell, M.; McKenzie, J.E.; Sowden, A.; Katikireddi, S.V.; Brennan, S.E.; Ellis, S.; Hartmann-Boyce, J.; Ryan, R.; Shepperd, S.; Thomas, J.; et al. Synthesis without meta-analysis (SWiM) in systematic reviews: Reporting guideline. BMJ 2020, 368, l6890.

29. Jegatheeswaran, L.; Naing, T.K.P.; Choi, B.; Collins, R.; Luke, L.; Gokani, S.; Kulkarni, S. Simulation-based teaching: An effective modality for providing UK foundation doctors with core ENT skills training. J. Laryngol. Otol. 2023, 137, 622–628.

30. Morris, S.; Burton, L.; Owens, D. The all wales ENT SHO bootcamp: A national induction initiative. J. Laryngol. Otol. 2025, 139, 288–291.

31. La Monte, O.A.; Lee, J.H.; Soliman, S.I.; Saddawi-Konefka, R.; Harris, J.P.; Coffey, C.S.; Orosco, R.K.; Watson, D.; Holliday, M.A.; Faraji, F.; et al. Simulation-based workshop for emergency preparedness in otolaryngology. Laryngoscope Investig. Otolaryngol. 2023, 8, 1159–1168.

32. Dell’Era, V.; Garzaro, M.; Carenzo, L.; Ingrassia, P.L.; Valletti, P.A. An innovative and safe way to train novice ear, nose and throat residents through simulation: The SimORL experience. Acta Otorhinolaryngol. Ital. 2020, 40, 19–25.

33. Alabi, O.; Hill, R.; Walsh, M.; Carroll, C. Introduction of an ENT emergency-safe boot camp into postgraduate surgical training in the Republic of Ireland. Ir. J. Med. Sci. 2022, 191, 475–477.

34. McGaghie, W.C.; Issenberg, S.B.; Cohen, E.R.; Barsuk, J.H.; Wayne, D.B. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad. Med. 2011, 86, 706–711.

35. Cook, D.A.; Hatala, R.; Brydges, R.; Zendejas, B.; Szostek, J.H.; Wang, A.T.; Erwin, P.J.; Hamstra, S.J. Technology-enhanced simulation for health professions education: A systematic review and meta-analysis. JAMA 2011, 306, 978–988.

36. Mayer, R.E. Multimedia Learning, 3rd ed.; Cambridge University Press: Cambridge, UK, 2020.

37. Cook, D.A.; Levinson, A.J.; Garside, S.; Dupras, D.M.; Erwin, P.J.; Montori, V.M. Internet-based learning in the health professions: A meta-analysis. JAMA 2008, 300, 1181–1196.

38. Liu, Q.; Peng, W.; Zhang, F.; Hu, R.; Li, Y.; Yan, W. The effectiveness of blended learning in health professions: Systematic review and meta-analysis. J. Med. Internet Res. 2016, 18, e2.

39. Ericsson, K.A.; Krampe, R.T.; Tesch-Römer, C. The role of deliberate practice in the acquisition of expert performance. Psychol. Rev. 1993, 100, 363–406.

40. Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive architecture and instructional design: 20 years later. Educ. Psychol. Rev. 2019, 31, 261–292.

41. Sweller, J.; van Merriënboer, J.J.G.; Paas, F.G.W.C. Cognitive architecture and instructional design. Educ. Psychol. Rev. 1998, 10, 251–296.

42. Kolb, D.A. Experiential Learning: Experience As the Source of Learning and Development; FT Press: Upper Saddle River, NJ, USA, 2014.

43. Roediger, H.L., III; Karpicke, J.D. Test-enhanced learning: Taking memory tests improves long-term retention. Psychol. Sci. 2006, 17, 249–255.

44. Chynoweth, J.; Jones, B.G.; Stevens, K. Epistaxis 2016: National audit of management. J. Laryngol. Otol. 2017, 131, 1131–1141.

45. McGrath, B. (Ed.) Comprehensive Tracheostomy Care: The National Tracheostomy Safety Project Manual; John Wiley & Sons: Chichester, UK, 2014.

46. Kane, M.T. Validating the interpretations and uses of test scores. J. Educ. Meas. 2013, 50, 1–73.

47. Messick, S. Validity of psychological assessment: Validation of inferences from persons’ responses and performances as scientific inquiry into score meaning. Am. Psychol. 1995, 50, 741–749.

| Author, Year | Region | Study Design | Participants | Intervention | Comparison | Key Outcomes Measured |
|---|---|---|---|---|---|---|
| Jegatheeswaran et al. (2023) [29] | UK | Pre–Post | 41 FY Doctors | Online videos + five-station practical session (e.g., epistaxis, PTA drainage) | Pre/Post | Confidence (seven skills), preparedness (DREEM), satisfaction |
| Morris et al. (2025) [30] | Wales, UK | Pre–Post | 152 Junior Doctors and Allied Professionals | National 1-day bootcamp (six stations, e.g., Airway, Rhinology) | Pre/Post | Confidence, knowledge (MCQ), satisfaction |
| Bhalla et al. (2020) [10] | UK and Australia | Two-Group Comparative | 51 Junior Doctors (Sim: 38, Lecture: 13) | Sim-based induction (homemade models) vs. lecture-based induction | Sim vs. Lecture | Confidence, anxiety, knowledge (MCQ retention) |
| Chin et al. (2016) [11] | Canada and USA | Pre–Post | 22 ENT Residents (PGY1–2) | 1-day bootcamp (cadaveric models, sim scenarios) | Pre/Post | Confidence (nine procedures), satisfaction |
| Swords et al. (2017) [9] | UK | Pre–Post, Single-Blinded | 37 Junior Doctors | 1-day bootcamp (skills + simulated scenarios) | Pre/Post, 2-week Follow-up | Confidence, blinded performance assessment, behaviour change |
| La Monte et al. (2023) [31] | USA | Pre–Post | 47 ENT Residents (PGY1–2) | 1-day workshop (three sim stations, one lecture station) | Pre/Post, 2-month Follow-up | Confidence, anxiety (by scenario), satisfaction |
| Dell’Era et al. (2020) [32] | Italy | Pre–Post | 23 ENT Residents (PGY1–4) | 2-day sim event (ten diverse stations) | Pre/Post | Confidence (six skills), satisfaction (SSES) |
| Giri et al. (2024) [12] | Nepal | Pre–Post | 41 Medical Interns | 1-day didactic workshop (no simulation) | Pre/Post | Knowledge (MCQ only) |
| Cervenka et al. (2020) [13] | USA | Pre–Post | 45 ENT Residents (PGY1–2) | 1-day bootcamp (cadaveric task trainers + scenarios) | Pre/Post | Confidence, station efficacy ratings |
| Alabi et al. (2022) [33] | Ireland | Pre–Post | 54 Surgical Trainees | 4 h bootcamp (three critical scenarios) | Pre/Post | Self-assessed competence, perceived knowledge/confidence |
| Smith et al. (2015) [8] | UK | RCT | 38 Interns | Lecture + sim scenarios vs. lecture only | Between Groups | Blinded viva exam score, perception of learning |
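The design profile described in the Highlights (largely single-group pre–post) can be checked against the study-characteristics table above. This Python sketch transcribes the design and participant columns; the total is a simple sum of the sample sizes listed in the table (it includes the allied professionals in Morris et al. and ignores differing response rates), not a pooled analytic sample reported by the review.

```python
from collections import Counter

# (study, design, participants) transcribed from the study-characteristics table.
# Swords et al. is counted as pre-post; its blinding applies to the performance assessment.
STUDIES = [
    ("Jegatheeswaran 2023", "pre-post", 41),
    ("Morris 2025", "pre-post", 152),
    ("Bhalla 2020", "two-group comparative", 51),
    ("Chin 2016", "pre-post", 22),
    ("Swords 2017", "pre-post", 37),
    ("La Monte 2023", "pre-post", 47),
    ("Dell'Era 2020", "pre-post", 23),
    ("Giri 2024", "pre-post", 41),
    ("Cervenka 2020", "pre-post", 45),
    ("Alabi 2022", "pre-post", 54),
    ("Smith 2015", "RCT", 38),
]

total = sum(n for _, _, n in STUDIES)
designs = Counter(design for _, design, _ in STUDIES)

print(f"{len(STUDIES)} studies, {total} participants")  # 11 studies, 551 participants
print(dict(designs))  # {'pre-post': 9, 'two-group comparative': 1, 'RCT': 1}
```

Nine of the eleven designs are single-group pre–post, which is the pattern the Highlights describe.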

| Author, Year | Key Findings (Primary) | Key Findings (Secondary) | MERSQI | Strengths and Limitations |
|---|---|---|---|---|
| Jegatheeswaran et al. (2023) [29] | Sig. ↑ confidence (7 skills, p < 0.001) | DREEM median 48; 100% satisfied and would recommend | 9.5 | S: 100% response; validated tool (DREEM). L: Pre–post; no control; self-report. |
| Morris et al. (2025) [30] | Sig. ↑ confidence (p < 0.01) and knowledge (68.5%→96.5%, p < 0.01) | 100% felt more confident; high satisfaction. | 11 | S: Large n; objective MCQ alongside confidence. L: Single-arm design; no follow-up. |
| Bhalla et al. (2020) [10] | Sim: Sig. ↑ confidence, ↓ anxiety. Lecture: no Δ in confidence. | Sim: superior knowledge retention (17/20 vs. 12.3/20); positive qualitative themes. | 12 | S: Comparative design; mixed methods. L: Single institution; no instrument validity evidence. |
| Chin et al. (2016) [11] | Sig. ↑ confidence for 6/9 procedures (p < 0.05) | 93% recommend; greater gain in procedural confidence. | 7.5 | S: Broad trainee cohort; cadaver + scenario. L: Low response (45%); self-report only. |
| Swords et al. (2017) [9] | Sig. ↑ confidence (p < 0.0001) and blinded performance (p = 0.0093) | Applied skills in practice (Kirkpatrick L3); high satisfaction. | 11.5 | S: Blinded assessment; Kirkpatrick L3. L: No control; analysis limitations noted. |
| La Monte et al. (2023) [31] | Sig. ↓ anxiety, ↑ confidence for simulation stations (p < 0.01). | 92% satisfaction; epistaxis showed ↑ anxiety/↓ confidence. | 8.5 | S: Internal control; longitudinal. L: Self-report; low follow-up response. |
| Dell’Era et al. (2020) [32] | Sig. ↑ confidence in all skills (p < 0.05) | High satisfaction (SSES: 4.5/5); cadaver station highest rated. | 9.5 | S: Diverse simulation stations; validated scale (SSES). L: Pre–post; small n; self-report. |
| Giri et al. (2024) [12] | Sig. ↑ knowledge scores (p < 0.0001) | N/A | 11 | S: Objective knowledge focus. L: No sim; no skills/behaviour; single site. |
| Cervenka et al. (2020) [13] | Sig. ↑ confidence at all stations (p < 0.05) | All stations rated highly effective; PGY-2 lacked confidence. | 8.5 | S: Regional cohort; multi-year bootcamp. L: Self-report only; no retention data. |
| Alabi et al. (2022) [33] | Sig. ↑ self-rated competence (e.g., 2/5→4/5) | 92% reported added knowledge; 85% more confident. | 7.5 | S: Addresses training gap. L: Self-report only; no objective measure. |
| Smith et al. (2015) [8] | Sim group scored higher on blinded viva (p < 0.05) | Sim group: higher satisfaction (DREEM, p < 0.001). | 13.5 | S: RCT with blinded assessment (viva). L: Single centre; no retention follow-up. |
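The mean MERSQI of 10.0 quoted in the Highlights can be reproduced from the per-study scores in the key-findings table above. The Python sketch below transcribes that column; the median and range are simple descriptive additions computed from the same scores, not figures stated by the review.

```python
from statistics import mean, median

# MERSQI scores transcribed from the key-findings table (the instrument's maximum is 18).
MERSQI = {
    "Jegatheeswaran 2023": 9.5,
    "Morris 2025": 11.0,
    "Bhalla 2020": 12.0,
    "Chin 2016": 7.5,
    "Swords 2017": 11.5,
    "La Monte 2023": 8.5,
    "Dell'Era 2020": 9.5,
    "Giri 2024": 11.0,
    "Cervenka 2020": 8.5,
    "Alabi 2022": 7.5,
    "Smith 2015": 13.5,
}

scores = list(MERSQI.values())
print(f"mean {mean(scores):.1f}, median {median(scores)}, "
      f"range {min(scores)}-{max(scores)}")
# mean 10.0, median 9.5, range 7.5-13.5
```

The only RCT (Smith et al.) has the highest score, while the two lowest-scoring studies rely solely on self-report, consistent with MERSQI rewarding design and objective outcome measurement.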

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Khafaji, M.H.; Alabdalhussein, A.; Al-Dabbagh, S.; Altalaa, A.; Alhumairi, G.; Abdulwahid, Z.; Al-Hasani, A.; Baban, J.; Al-Ogaidi, M.; Hamid, E.; et al. The Impact of Short, Structured ENT Teaching Interventions on Junior Doctors’ Confidence and On-Call Preparedness: A Systematic Review. Healthcare 2025, 13, 2886. https://doi.org/10.3390/healthcare13222886

Al-Khafaji MH, Alabdalhussein A, Al-Dabbagh S, Altalaa A, Alhumairi G, Abdulwahid Z, Al-Hasani A, Baban J, Al-Ogaidi M, Hamid E, et al. The Impact of Short, Structured ENT Teaching Interventions on Junior Doctors’ Confidence and On-Call Preparedness: A Systematic Review. Healthcare. 2025; 13(22):2886. https://doi.org/10.3390/healthcare13222886

Chicago/Turabian Style: Al-Khafaji, Mohammed Hasan, Ali Alabdalhussein, Shahad Al-Dabbagh, Abdulmohaimen Altalaa, Ghaith Alhumairi, Zeinab Abdulwahid, Anwer Al-Hasani, Juman Baban, Mohammed Al-Ogaidi, Eshtar Hamid, et al. 2025. "The Impact of Short, Structured ENT Teaching Interventions on Junior Doctors’ Confidence and On-Call Preparedness: A Systematic Review" Healthcare 13, no. 22: 2886. https://doi.org/10.3390/healthcare13222886

APA Style: Al-Khafaji, M. H., Alabdalhussein, A., Al-Dabbagh, S., Altalaa, A., Alhumairi, G., Abdulwahid, Z., Al-Hasani, A., Baban, J., Al-Ogaidi, M., Hamid, E., & Mair, M. (2025). The Impact of Short, Structured ENT Teaching Interventions on Junior Doctors’ Confidence and On-Call Preparedness: A Systematic Review. Healthcare, 13(22), 2886. https://doi.org/10.3390/healthcare13222886