Abstract

Probabilistic graphical models allow us to encode a large probability distribution as a composition of smaller ones. It is often the case that we want to incorporate into the model the idea that some of these smaller distributions are likely to be similar to one another. In this paper we provide an information geometric approach to incorporating this information and show that it allows us to reinterpret some existing models. Our proposal relies on a formal definition of what it means for two distributions to be close. We provide an example of how this definition can be applied to multinomial distributions, and we use the results on multinomial distributions to reinterpret two existing hierarchical models in terms of closeness distributions.

1. Introduction

Bayesian modeling [1] builds on our ability to describe a given process in probabilistic terms, which is known as probabilistic modeling. As stated in [2]: “Statistical methods and models commonly involve multiple parameters that can be regarded as related or connected in such a way that the problem implies dependence of the joint probability model for these parameters”. Hierarchical modeling [3] is widely used for that purpose in areas such as epidemiological modeling [4] or the modeling of oil and gas production [5]. The motivation for this paper comes from realizing that many hierarchical models can be understood, from a high-level perspective, as defining a distribution over the multiple parameters under which distributions that are closer to each other are more likely. Thus, our main goal is to start providing the mathematical tools that allow a probabilistic modeler to build hierarchical (and non-hierarchical) models starting from these geometric concepts.

We start by introducing a simple example to illustrate the kind of problems we are interested in solving. Consider the problem of estimating a parameter using data from a small experiment and a prior distribution constructed from similar previous experiments. The specific problem description is borrowed from [2]:

In the evaluation of drugs for possible clinical application, studies are routinely performed on rodents. For a particular study drawn from the statistical literature, suppose the immediate aim is to estimate θ, the probability of a tumor in a population of female laboratory rats of type ‘F344’ that receive a zero dose of the drug (a control group). The data show that 4 out of 14 rats developed endometrial stromal polyps (a kind of tumor). (...) Typically, the mean and standard deviation of underlying tumor risks are not available. Rather, historical data are available on previous experiments on similar groups of rats. In the rat tumor example, the historical data were in fact a set of observations of tumor incidence in 70 groups of rats (Table 1). In the ith historical experiment, let the number of rats with tumors be $y_i$ and the total number of rats be $n_i$. We model the $y_i$’s as independent binomial data, given sample sizes $n_i$ and study-specific means $\theta_i$.

Table 1. Tumor incidence in 70 historical groups of rats and in the current group of rats (from [6]). The table displays the values of: (number of rats with tumors)/(total number of rats).

Example. Estimating the risk of tumor in a group of rats.

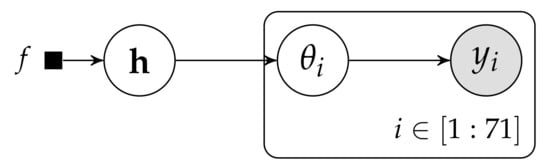

We can depict our graphical model (for more information on the interpretation of the graphical models in this paper the reader can consult [7,8]) as shown in Figure 1, where the current and historical experiments are a random sample from a common population with hyperparameters $\phi$, which follow $f$ as prior distribution. Equationally, our model can be described as:

$$\phi \sim f, \qquad \theta_i \mid \phi \sim g(\phi), \qquad y_i \mid \theta_i \sim \mathrm{Bin}(n_i, \theta_i), \qquad i = 1, \ldots, 71.$$

Figure 1.

General probabilistic graphical model for the rodents example.

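The model used for this problem in [2] is the Beta-Binomial model, where $g$ is taken to be the Beta distribution, hence $\theta_i \mid \alpha, \beta \sim \mathrm{Beta}(\alpha, \beta)$ (see Figure 2). Furthermore, in [2] the prior $f$ over $(\alpha, \beta)$ is taken to be proportional to $(\alpha + \beta)^{-5/2}$, giving the model

$$p(\alpha, \beta) \propto (\alpha + \beta)^{-5/2}, \qquad \theta_i \mid \alpha, \beta \sim \mathrm{Beta}(\alpha, \beta), \qquad y_i \mid \theta_i \sim \mathrm{Bin}(n_i, \theta_i), \qquad i = 1, \ldots, 71.$$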

Figure 2.

PGM for the rodents example proposed in [2].
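A minimal sketch of how the Beta-Binomial model above can be evaluated, assuming NumPy and SciPy are available (the function name is ours, not from [2]). Because the Beta distribution is conjugate to the binomial likelihood, each $\theta_i$ can be integrated out analytically, leaving an unnormalized log posterior for $(\alpha, \beta)$ that could then be explored on a grid or with a sampler.

```python
import numpy as np
from scipy.special import betaln


def log_post_alpha_beta(alpha, beta, y, n):
    """Unnormalized log p(alpha, beta | y) for the Beta-Binomial model.

    Prior: p(alpha, beta) proportional to (alpha + beta)^(-5/2).
    Each theta_i ~ Beta(alpha, beta) is integrated out, so that
    p(y_i | alpha, beta) is proportional to
    B(alpha + y_i, beta + n_i - y_i) / B(alpha, beta).
    Binomial coefficients are dropped: they do not depend on (alpha, beta).
    """
    y = np.asarray(y, dtype=float)
    n = np.asarray(n, dtype=float)
    log_prior = -2.5 * np.log(alpha + beta)
    log_lik = np.sum(betaln(alpha + y, beta + n - y) - betaln(alpha, beta))
    return log_prior + log_lik


# Current group only (4 tumors out of 14 rats); the 70 historical
# counts of Table 1 would be appended to y and n.
print(log_post_alpha_beta(2.0, 10.0, y=[4], n=[14]))
```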

The presentation of the model in [2] simply introduces the assumption that “the Beta prior distribution with parameters $(\alpha, \beta)$ is a good description of the population distribution of the $\theta_i$’s in the historical experiments” without further justification. In this paper we would like to show that a large part of this model can be obtained from the intuitive idea that the probability distributions of tumors in the different groups of rats are similar. To do that, we develop a framework for encoding, as a probability distribution, the assumption that two probability distributions are close to each other, and we rely on information geometric concepts to model the idea of closeness.

We start by introducing the general concept of closeness distribution in Section 2. Then, in Section 3, we analyze the particular case in which we measure remoteness between distributions in the family of multinomial distributions by means of the Kullback-Leibler divergence. The results from Section 3 are used in Section 4 to reinterpret the Beta-Binomial model proposed in [2] for the rodents example, and in Section 5 to reinterpret the Hierarchical Dirichlet Multinomial model proposed by Azzimonti et al. in [9,10,11]. We are convinced that closeness distributions could play a relevant role in probabilistic modeling, allowing for more explicitly geometrically inspired probabilistic models. This paper is just a first step towards a proper definition and understanding of closeness distributions.

2. Closeness Distributions

We start by introducing the formal framework required to discuss the probability distributions. Then, we formalize what we mean by remoteness through a remoteness function, and we introduce closeness distributions as those that implement a remoteness function.

2.1. Probabilities over Probabilities

Information geometry [12] has shown us that most families of probability distributions can be understood as Riemannian manifolds. Thus, we can work with probabilities over probabilities by defining random variables which take values in a Riemannian manifold. Here, we only introduce some fundamental definitions. For a more detailed overview of measures and probability see [13], and of Riemannian manifolds see [14]. Finally, Pennec provides a good overview of probability on Riemannian manifolds in [15].

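We start by noting that each manifold $\mathcal{M}$ has an associated $\sigma$-algebra, $\mathcal{L}_{\mathcal{M}}$, the Lebesgue $\sigma$-algebra of $\mathcal{M}$ (see Section 1, Chapter XII in [16]). Furthermore, the existence of a metric $g$ induces a measure $d\mathcal{M}$ (see Section 1.3 in [15]). The volume of $\mathcal{M}$ is defined as

$$\mathrm{Vol}(\mathcal{M}) = \int_{\mathcal{M}} d\mathcal{M}.$$

Definition 1.

Let $(\Omega, \mathcal{B}(\Omega), \Pr)$ be a probability space and $(\mathcal{M}, g)$ be a Riemannian manifold. A random variable $\mathbf{x}$ (referred to as a random primitive in [15]) taking values in $\mathcal{M}$ is a measurable function from $\Omega$ to $\mathcal{M}$. Furthermore, we say that $\mathbf{x}$ has a probability density function (p.d.f.) $p_{\mathbf{x}}$ (a real, positive, and integrable function) if

$$\Pr(\mathbf{x} \in \mathcal{X}) = \int_{\mathcal{X}} p_{\mathbf{x}}(y)\, d\mathcal{M}(y) \quad \text{for all } \mathcal{X} \in \mathcal{L}_{\mathcal{M}}, \qquad \text{and} \qquad \int_{\mathcal{M}} p_{\mathbf{x}}(y)\, d\mathcal{M}(y) = 1.$$

We would like to highlight that the density function $p_{\mathbf{x}}$ is intrinsic to the manifold. If $\psi: \mathcal{M} \to \mathbb{R}^n$ is a chart of the manifold defined almost everywhere, we obtain a random vector $\boldsymbol{\theta} = \psi(\mathbf{x})$. The expression of $p_{\mathbf{x}}$ in this parametrization is

$$\rho_{\psi}(\theta) = p_{\mathbf{x}}(\psi^{-1}(\theta)).$$

Let $f$ be a real function on $\mathcal{M}$. We define the expectation of $f$ under $\mathbf{x}$ as

$$\mathbb{E}_{\mathbf{x}}[f] = \int_{\mathcal{M}} f(y)\, p_{\mathbf{x}}(y)\, d\mathcal{M}(y).$$

We have to be careful to compute $\mathbb{E}_{\mathbf{x}}[f]$ independently of the parametrization. To do so, we use the fact that

$$d\mathcal{M}(\psi^{-1}(\theta)) = \sqrt{|G(\theta)|}\, d\theta,$$

where $G(\theta)$ is the Fisher matrix at $\theta$ in the parametrization $\psi$. Hence,

$$\mathbb{E}_{\mathbf{x}}[f] = \int_{\psi(\mathcal{M})} f(\psi^{-1}(\theta))\, \rho_{\psi}(\theta) \sqrt{|G(\theta)|}\, d\theta = \int_{\psi(\mathcal{M})} f(\psi^{-1}(\theta))\, \rho^{*}_{\psi}(\theta)\, d\theta,$$

where $\rho^{*}_{\psi}(\theta) = \rho_{\psi}(\theta)\sqrt{|G(\theta)|}$ is the expression of $p_{\mathbf{x}}$ in the parametrization $\psi$ for integration purposes, that is, its expression with respect to the Lebesgue measure $d\theta$ instead of $d\mathcal{M}$.

We note that $\rho^{*}_{\psi}$ depends on the chart used whereas $p_{\mathbf{x}}$ is intrinsic to the manifold.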
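As a concrete instance of these formulas, consider the Bernoulli family (two atoms) in the expectation chart $\theta \in (0, 1)$, where $|G(\theta)| = 1/(\theta(1-\theta))$. The sketch below assumes SciPy and uses the algebraic weight option of `scipy.integrate.quad`, which multiplies the integrand by exactly $\theta^{-1/2}(1-\theta)^{-1/2} = \sqrt{|G(\theta)|}$; the function names are ours. It recovers $\mathrm{Vol} = \pi$ and computes expectations under the intrinsic uniform density $1/\mathrm{Vol}$, whose expression for integration purposes, $\theta^{-1/2}(1-\theta)^{-1/2}/\pi$, is the Beta(1/2, 1/2) density.

```python
import math
from scipy.integrate import quad

# Bernoulli manifold in the expectation chart theta in (0, 1):
# sqrt|G(theta)| = theta^(-1/2) * (1 - theta)^(-1/2).
# quad's "alg" weight multiplies the integrand by exactly that factor.
FISHER_WEIGHT = dict(weight="alg", wvar=(-0.5, -0.5))


def volume():
    """Vol(M) = integral of sqrt|G(theta)| d theta over the chart domain."""
    value, _ = quad(lambda t: 1.0, 0.0, 1.0, **FISHER_WEIGHT)
    return value


def expectation(f, rho):
    """E[f] = integral of f(theta) * rho(theta) * sqrt|G(theta)| d theta.

    rho is the chart expression of an intrinsic density (w.r.t. dM).
    """
    value, _ = quad(lambda t: f(t) * rho(t), 0.0, 1.0, **FISHER_WEIGHT)
    return value


vol = volume()  # should be close to pi


def uniform(theta):
    """Chart expression of the intrinsic uniform density 1 / Vol(M)."""
    return 1.0 / vol


print(vol, expectation(lambda t: t, uniform))
```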

2.2. Formalizing Remoteness and Closeness

Intuitively, the objective of this section is to create a probability distribution over pairs of probability distributions that assigns higher probability to those pairs of probability distributions which are “close”.

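We assume that we measure how distant two points in $\mathcal{M}$ are by means of a remoteness function $r: \mathcal{M} \times \mathcal{M} \to \mathbb{R}$, such that $r(x, y) \geq 0$ for each $x, y \in \mathcal{M}$. Note that $r$ does not need to be transitive, symmetric, or reflexive.

As can be seen in Appendix A, $r$ induces a total order $\leq_r$ in $\mathcal{M} \times \mathcal{M}$. We say that two remoteness functions $r$ and $s$ are order-equivalent if $\leq_r = \leq_s$.

Proposition 1.

Let $\gamma \in \mathbb{R}^{+}$. Then, $\gamma r$ is order-equivalent to $r$.

Proof.

$(x_1, y_1) \leq_{\gamma r} (x_2, y_2)$ iff $(\gamma r)(x_1, y_1) \leq (\gamma r)(x_2, y_2)$ iff $\gamma\, r(x_1, y_1) \leq \gamma\, r(x_2, y_2)$ iff $r(x_1, y_1) \leq r(x_2, y_2)$ iff $(x_1, y_1) \leq_r (x_2, y_2)$, where the third equivalence uses $\gamma > 0$. □

We say that a probability density function $p$ on $\mathcal{M} \times \mathcal{M}$ implements a remoteness function $r$ if $\leq_{-p} = \leq_r$. This is equivalent to stating that for each $x_1, y_1, x_2, y_2 \in \mathcal{M}$ we have that $r(x_1, y_1) \leq r(x_2, y_2)$ iff $p(x_1, y_1) \geq p(x_2, y_2)$. That is, a density function implements a remoteness function $r$ if it assigns higher probability density to those pairs of points which are closer according to $r$.

Having clarified what it means for a probability distribution to implement a remoteness function, we introduce a specific way of constructing probability distributions that do so.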

Definition 2.

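Let $r$ be a remoteness function on $\mathcal{M}$. If $Z_r = \int_{\mathcal{M}} \int_{\mathcal{M}} e^{-r(x, y)}\, d\mathcal{M}(x)\, d\mathcal{M}(y)$ is finite, we define the density function

$$p_r(x, y) = \frac{e^{-r(x, y)}}{Z_r}.$$

We refer to the corresponding probability distribution as a closeness distribution.

Note that $p_r$ is defined intrinsically. Following the explanation in the previous section, let $\psi$ be a chart of $\mathcal{M}$ defined almost everywhere, and write $\bar{r}(\theta, \eta) = r(\psi^{-1}(\theta), \psi^{-1}(\eta))$. The representation of this pdf in the parametrization $\psi$ is simply

$$\rho_r(\theta, \eta) = \frac{e^{-\bar{r}(\theta, \eta)}}{Z_r},$$

and its representation for integration purposes is

$$\rho^{*}_r(\theta, \eta) = \frac{e^{-\bar{r}(\theta, \eta)}}{Z_r} \sqrt{|G(\theta)|}\, \sqrt{|G(\eta)|}.$$

Proposition 2.

If $p_r$ exists, it implements $r$.

Proof.

The exponential is a monotonically increasing function, and the minus sign in the exponent reverses the order. □

Proposition 3.

If $r$ is measurable and $\mathcal{M}$ has finite volume, then $Z_r$ is finite, and hence $p_r$ implements $r$.

Proof.

Note that since $r(x, y) \geq 0$, we have that $0 < e^{-r(x, y)} \leq 1$, and hence $e^{-r}$ is bounded. Furthermore, $e^{-r}$ is measurable since it is a composition of measurable functions. Now, $\mathcal{M} \times \mathcal{M}$ has finite volume $\mathrm{Vol}(\mathcal{M})^2$, and any bounded measurable function on a finite-volume space is integrable, so $Z_r$ is finite. □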
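Proposition 3 can also be checked numerically on the Bernoulli manifold. In the sketch below (assuming NumPy and SciPy; the helper names and the chart are our choices), points are written as $x = \sin^2 s$ with $s \in (0, \pi/2)$, a chart in which the Fisher volume element is the constant $2\,ds$, so that $d\mathcal{M}(x)\, d\mathcal{M}(y) = 4\, ds\, dt$. For $r \equiv 0$ the normalizer $Z_r$ must equal $\mathrm{Vol}(\mathcal{M})^2 = \pi^2$, and since $e^{-r} \leq 1$, any other nonnegative remoteness function gives a value no larger than that.

```python
import numpy as np
from scipy.integrate import dblquad

HALF_PI = np.pi / 2


def closeness_normalizer(r):
    """Z_r = double integral of exp(-r(x, y)) dM(x) dM(y) on the Bernoulli manifold.

    Chart: x = sin(s)^2, y = sin(t)^2 with s, t in (0, pi/2), where the
    Fisher volume element is dM = 2 ds, hence the constant factor 4.
    """
    def integrand(t, s):  # dblquad passes the inner variable first
        return 4.0 * np.exp(-r(np.sin(s) ** 2, np.sin(t) ** 2))

    z, _ = dblquad(integrand, 0.0, HALF_PI, lambda s: 0.0, lambda s: HALF_PI)
    return z


def squared_difference(x, y):
    """An arbitrary nonnegative, measurable remoteness function (illustration only)."""
    return (x - y) ** 2


print(closeness_normalizer(lambda x, y: 0.0))  # Vol(M)^2 = pi^2
print(closeness_normalizer(squared_difference))
```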

Obviously, once we have established a closeness distribution we can define its marginal and conditional distributions in the usual way. We denote by $p_r(x)$ (resp. $p_r(y)$) the marginal over $x$ (resp. $y$), and by $p_r(x \mid y)$ (resp. $p_r(y \mid x)$) the conditional density of $x$ given $y$ (resp. $y$ given $x$).

3. KL-Closeness Distributions for Multinomials

In this section we study closeness distributions on (the family of multinomial distributions of dimension n, or the family of finite discrete distributions over atoms). To do that, first we need to establish the remoteness function. It is well known that there is an isometry between and the positive orthant of the n dimensional sphere () of radius 2 (see Section 7.4.2. in [17]). This isometry allows us to compute the volume of the manifold as the area of the sphere of radius 2 on the positive orthant.

Proposition 4.

The volume of is

Proof.

The area of a sphere of radius r is Taking , Now, there are orthants, so the positive orthant amounts for of that area, as stated. □

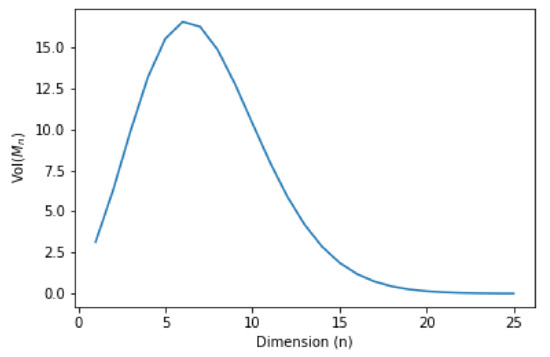

Figure 3 shows that the volume of the space of multinomial distributions over atoms reaches its maximum at . The main takeover of Proposition 4 is that the volume of is finite, because this allows us to prove the following result:

Figure 3.

Volume of the family of multinomial distributions as dimension increases.
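The closed form of Proposition 4 and the location of the maximum in Figure 3 can be reproduced with the Python standard library alone. The sketch below (function name ours) evaluates the formula for $n = 1, \ldots, 20$, a range chosen arbitrarily. As sanity checks, $n = 1$ gives $\pi$, the length of the Bernoulli manifold, and $n = 2$ gives $2\pi$, one eighth of the area $16\pi$ of a sphere of radius 2.

```python
import math


def multinomial_volume(n):
    """Vol(M_n) = pi^((n+1)/2) / Gamma((n+1)/2), following Proposition 4.

    M_n is the family of discrete distributions over n + 1 atoms.
    """
    m = n + 1
    return math.pi ** (m / 2) / math.gamma(m / 2)


volumes = {n: multinomial_volume(n) for n in range(1, 21)}
n_max = max(volumes, key=volumes.get)
print(n_max, volumes[n_max])
```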

Proposition 5.

For any measurable remoteness function $r$ on $\mathcal{M}_n$ there is a closeness distribution implementing it.

Proof.

Directly from Proposition 3 and the fact that $\mathcal{M}_n$ has finite volume (Proposition 4). □

A reasonable choice of remoteness function for a statistical manifold is the Kullback-Leibler (KL) divergence. The next subsection analyzes the closeness distributions that implement KL in $\mathcal{M}_n$.

3.1. Closeness Distributions for KL as Remoteness Function

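Let $\theta \in \mathcal{M}_n$. Thus, $\theta$ is a discrete distribution over $n+1$ atoms. We write $\theta_i$ to represent $p(X = i \mid \theta)$. Note that each $\theta_i$ is independent of the parametrization and thus it is an intrinsic quantity of the distribution.

Let $\theta, \eta \in \mathcal{M}_n$. The KL divergence between $\eta$ and $\theta$, which we use as the remoteness function $r(\theta, \eta) = D(\eta \,\|\, \theta)$, is

$$D(\eta \,\|\, \theta) = \sum_{i=1}^{n+1} \eta_i \log \frac{\eta_i}{\theta_i}.$$

We want to study the closeness distributions that implement KL in $\mathcal{M}_n$. The detailed derivation of these results can be found in Appendix B. The closeness pdf according to Definition 2 is

$$p(\theta, \eta) = \frac{e^{-D(\eta \,\|\, \theta)}}{Z}, \qquad Z = \int_{\mathcal{M}_n} \int_{\mathcal{M}_n} e^{-D(\eta \,\|\, \theta)}\, d\mathcal{M}_n(\theta)\, d\mathcal{M}_n(\eta).$$

The marginal for $\eta$ is

$$p(\eta) = \frac{1}{Z}\, B\left(\eta + \tfrac{1}{2}\right) \prod_{i=1}^{n+1} \eta_i^{-\eta_i},$$

where $B(\alpha) = \prod_i \Gamma(\alpha_i) / \Gamma\left(\sum_i \alpha_i\right)$ is the multivariate Beta function and $\eta + \frac{1}{2}$ denotes the vector with coordinates $\eta_i + \frac{1}{2}$.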
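The closed form for the marginal can be checked numerically in the simplest case $n = 1$, where $\theta$ and $\eta$ are identified with the probability of the first atom. The sketch below (assuming NumPy and SciPy; function names are ours) uses the chart $\theta = \sin^2 t$, in which $d\mathcal{M}(\theta) = 2\,dt$, and compares the numerical integral of $e^{-D(\eta \| \theta)}$ over $\theta$ with $Z\,p(\eta) = B(\eta + \frac{1}{2}) \prod_i \eta_i^{-\eta_i}$; the normalizing constant $Z$ cancels in the comparison.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import betaln


def marginal_unnormalized_numeric(eta):
    """Integral of exp(-D(eta || theta)) dM(theta) for n = 1 (two atoms).

    Chart theta = sin(t)^2, t in (0, pi/2), where dM(theta) = 2 dt. The
    exponent is written in terms of t to avoid evaluating log(0).
    """
    entropy = -eta * np.log(eta) - (1 - eta) * np.log(1 - eta)

    def integrand(t):
        # -D(eta || theta) = eta log theta + (1 - eta) log(1 - theta) + H(eta)
        log_kernel = 2 * eta * np.log(np.sin(t)) + 2 * (1 - eta) * np.log(np.cos(t))
        return 2.0 * np.exp(log_kernel + entropy)

    value, _ = quad(integrand, 0.0, np.pi / 2)
    return value


def marginal_unnormalized_closed(eta):
    """B(eta + 1/2) * prod_i eta_i^(-eta_i) for n = 1."""
    return np.exp(betaln(eta + 0.5, 1.5 - eta) - eta * np.log(eta) - (1 - eta) * np.log(1 - eta))


for eta in (0.1, 0.3, 0.5, 0.8):
    print(eta, marginal_unnormalized_numeric(eta), marginal_unnormalized_closed(eta))
```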

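The conditional for $\theta$ given $\eta$ is

$$p(\theta \mid \eta) = \frac{1}{B\left(\eta + \frac{1}{2}\right)} \prod_{i=1}^{n+1} \theta_i^{\eta_i}. \tag{10}$$

Equation (10) is very similar to the expression of a Dirichlet distribution. In fact, since $\sqrt{|G(\theta)|} = \prod_{i=1}^{n+1} \theta_i^{-1/2}$ in the expectation parameterization, the expression of $p(\theta \mid \eta)$ for integration purposes in that parameterization, namely $\rho^{*}(\theta \mid \eta) = p(\theta \mid \eta)\sqrt{|G(\theta)|}$, is that of a Dirichlet distribution:

$$\rho^{*}(\theta \mid \eta) = \frac{1}{B\left(\eta + \frac{1}{2}\right)} \prod_{i=1}^{n+1} \theta_i^{\eta_i - \frac{1}{2}} = \mathrm{Dir}\left(\theta;\, \eta + \tfrac{1}{2}\right). \tag{11}$$

Equation (11) deserves some attention. We have defined the joint density so that pairs of distributions that are close in terms of KL divergence are assigned a higher probability than pairs of distributions that are further apart in terms of KL. Hence, the conditional assigns a larger probability to those distributions $\theta$ which are close in terms of KL to $\eta$. This means that whenever we have a probabilistic model which encodes two multinomial distributions $\theta$ and $\eta$, and we want to express that $\theta$ should be close to $\eta$, we can introduce the assumption that

$$\theta \mid \eta \sim \mathrm{Dir}\left(\eta + \tfrac{1}{2}\right).$$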
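The passage from the intrinsic conditional to the Dirichlet form only requires multiplying by $\sqrt{|G(\theta)|} = \prod_i \theta_i^{-1/2}$, the Fisher volume factor in the expectation parameterization. A minimal numerical check, assuming NumPy and SciPy (the helper names are ours, and the values of $\theta$ and $\eta$ are arbitrary), compares this product with SciPy's Dirichlet density with parameters $\eta + \frac{1}{2}$.

```python
import numpy as np
from scipy.special import gammaln
from scipy.stats import dirichlet


def log_multivariate_beta(a):
    """log B(a) = sum_i log Gamma(a_i) - log Gamma(sum_i a_i)."""
    return np.sum(gammaln(a)) - gammaln(np.sum(a))


def conditional_intrinsic(theta, eta):
    """p(theta | eta) = prod_i theta_i^eta_i / B(eta + 1/2), w.r.t. dM(theta)."""
    return np.exp(np.sum(eta * np.log(theta)) - log_multivariate_beta(eta + 0.5))


def conditional_for_integration(theta, eta):
    """Multiply by sqrt|G(theta)| = prod_i theta_i^(-1/2) (expectation chart)."""
    return conditional_intrinsic(theta, eta) * np.prod(theta) ** -0.5


eta = np.array([0.2, 0.5, 0.3])    # hypothetical centre distribution (3 atoms)
theta = np.array([0.1, 0.6, 0.3])  # hypothetical point on the simplex
print(conditional_for_integration(theta, eta), dirichlet.pdf(theta, eta + 0.5))
```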

Interesting as it is for modeling purposes, Equation (11) does not allow the modeler to convey information regarding the strength of the link. That is, $\theta$’s in the KL-surroundings of $\eta$ will be more probable, but there is no way to establish how much more probable. We know by Proposition 1 that for any remoteness function $r$ we can select $\gamma \in \mathbb{R}^{+}$, and $\gamma r$ is order-equivalent to $r$. We can take advantage of that fact and use $\gamma$ to encode the strength of the probabilistic link between $\theta$ and $\eta$. If, instead of using the KL divergence $D$ as the remoteness function, we opt for $\gamma D$, following a development parallel to the one above we find that

$$p_{\gamma}(\theta \mid \eta) = \frac{1}{B\left(\gamma\eta + \frac{1}{2}\right)} \prod_{i=1}^{n+1} \theta_i^{\gamma\eta_i}, \qquad \text{that is,} \qquad \theta \mid \eta \sim \mathrm{Dir}\left(\gamma\eta + \tfrac{1}{2}\right). \tag{12}$$

Now, Equation (12) allows the modeler to fix a large value of $\gamma$ to encode that it is extremely unlikely that $\theta$ separates from $\eta$, or a value of $\gamma$ close to 0 to encode that the link between $\theta$ and $\eta$ is very loose. Furthermore, it is important to realize that Equation (12) allows us to interpret any existing model which incorporates Dirichlet (or Beta) distributions, with the only requirement that each of its concentration parameters is larger than $\frac{1}{2}$. Say we have a model in which $\theta \sim \mathrm{Dir}(\alpha)$. Then, defining $\gamma = \sum_{i=1}^{n+1} \left(\alpha_i - \frac{1}{2}\right)$ and $\eta$ by coordinates as $\eta_i = \left(\alpha_i - \frac{1}{2}\right)/\gamma$, we can interpret the model as imposing $\theta$ to be close to $\eta$ with intensity $\gamma$. Note that, pushing this interpretation to the extreme, since the strength of the link vanishes as $\gamma \to 0$, a “free” Dirichlet will have all of its weights set to $\frac{1}{2}$. This coincides with the classical prior suggested by Jeffreys [18,19] for this very same problem. This is reasonable since Jeffreys’ prior was constructed to be independent of the parametrization, that is, to be intrinsic to the manifold, similarly to what we are doing.
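The reinterpretation of a Dirichlet prior as a closeness assumption is a simple change of variables that inverts $\alpha_i = \gamma\eta_i + \frac{1}{2}$. In the sketch below (Python with NumPy; function names are ours), a Beta(2, 5) prior reads as $\theta$ being close to $\eta = (0.25, 0.75)$ with intensity $\gamma = 6$, and a uniform Dir(1, 1, 1) reads as closeness to the uniform distribution with intensity 1.5. Jeffreys’ Dir(1/2, ..., 1/2) corresponds to the limit $\gamma \to 0$, where $\eta$ is undefined, hence the strict inequality check.

```python
import numpy as np


def dirichlet_to_closeness(alpha):
    """Read Dir(alpha) as 'theta close to eta with intensity gamma'.

    Inverts alpha_i = gamma * eta_i + 1/2; needs every alpha_i > 1/2.
    """
    alpha = np.asarray(alpha, dtype=float)
    if np.any(alpha <= 0.5):
        raise ValueError("all concentration parameters must exceed 1/2")
    excess = alpha - 0.5
    gamma = excess.sum()
    return gamma, excess / gamma


def closeness_to_dirichlet(gamma, eta):
    """Concentration parameters gamma * eta + 1/2 of Equation (12)."""
    return gamma * np.asarray(eta, dtype=float) + 0.5


gamma, eta = dirichlet_to_closeness([2.0, 5.0])  # a Beta(2, 5) prior
print(gamma, eta)                                # 6.0 [0.25 0.75]
```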

3.2. Visualizing the Distributions

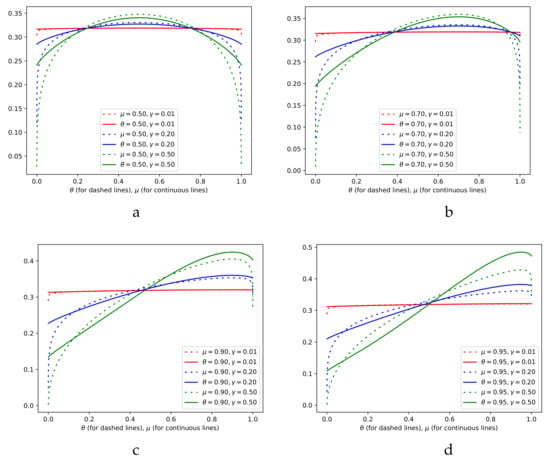

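In the previous section we have seen an expression for $p_{\gamma}(\theta \mid \eta)$. Since the KL divergence is not symmetric, $p_{\gamma}(\eta \mid \theta)$ is different from $p_{\gamma}(\theta \mid \eta)$. Unfortunately, we have not been able to provide a closed-form expression for $p_{\gamma}(\eta \mid \theta)$. However, it is possible to compute it numerically in order to compare both conditionals.

Figure 4 shows a comparison of $p_{\gamma}(\theta \mid \eta)$ and $p_{\gamma}(\eta \mid \theta)$ for $n = 1$. Following the suggestion in [20], for a proper interpretation of the densities we show their density functions, which are intrinsic to the manifold, instead of their expressions in a parametrization, as is commonly done. Note that, from Equation (12), the value of $p_{\gamma}(\theta \mid \eta)$ is 0 at $\theta = 0$ and $\theta = 1$. In Figure 4, we can see that this is not the case for $p_{\gamma}(\eta \mid \theta)$, neither at $\eta = 0$ nor at $\eta = 1$. In fact, we see that $p_{\gamma}(\theta \mid \eta)$ always starts below $p_{\gamma}(\eta \mid \theta)$ at 0 (resp. 1). Then, as the free variable moves away from 0 (resp. 1), $p_{\gamma}(\theta \mid \eta)$ always rises above $p_{\gamma}(\eta \mid \theta)$, and it ends up decreasing again below it as the variable approaches 1 (resp. 0).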

Figure 4.

Comparison of $p_{\gamma}(\theta \mid \eta)$ and $p_{\gamma}(\eta \mid \theta)$ for four parameter settings, shown in panels (a) to (d).
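The reverse conditional $p_{\gamma}(\eta \mid \theta)$ can be evaluated numerically along the lines described above. A minimal sketch for $n = 1$ (NumPy and SciPy assumed; helper names ours), where each distribution is identified with the probability of its first atom: $p_{\gamma}(\theta \mid \eta)$ comes from Equation (12), while the normalizer of $e^{-\gamma D(\eta \| \theta)}$ over $\eta$ is obtained by quadrature in the chart $\eta = \sin^2 t$, where $d\mathcal{M}(\eta) = 2\,dt$. The conditioning value and $\gamma$ in the usage lines are arbitrary and are not the settings used for Figure 4; the printed values at $x = 0$ and $x = 1$ illustrate that the first conditional vanishes at the endpoints while the second does not.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import betaln


def kl_two_atoms(eta, theta):
    """D(eta || theta) for two-atom distributions; uses 0 log 0 = 0, theta in (0, 1)."""
    total = 0.0
    for p, q in ((eta, theta), (1.0 - eta, 1.0 - theta)):
        if p > 0.0:
            total += p * np.log(p / q)
    return total


def forward_conditional(theta, eta, gamma):
    """p_gamma(theta | eta) of Equation (12) for n = 1, w.r.t. dM(theta); assumes 0 < eta < 1."""
    if theta <= 0.0 or theta >= 1.0:
        return 0.0  # theta^(gamma*eta) * (1-theta)^(gamma*(1-eta)) vanishes here
    log_kernel = gamma * eta * np.log(theta) + gamma * (1.0 - eta) * np.log(1.0 - theta)
    return np.exp(log_kernel - betaln(gamma * eta + 0.5, gamma * (1.0 - eta) + 0.5))


def reverse_conditional(theta, gamma):
    """Return eta -> p_gamma(eta | theta), w.r.t. dM(eta), normalized by quadrature.

    The normalizer is computed in the chart eta = sin(t)^2, where dM(eta) = 2 dt.
    """
    def unnormalized(eta):
        return np.exp(-gamma * kl_two_atoms(eta, theta))

    z, _ = quad(lambda t: 2.0 * unnormalized(np.sin(t) ** 2), 0.0, np.pi / 2)
    return lambda eta: unnormalized(eta) / z


c, gamma = 0.3, 5.0  # arbitrary conditioning value and intensity
reverse = reverse_conditional(c, gamma)
for x in (0.0, 0.1, 0.3, 0.6, 1.0):
    print(x, forward_conditional(x, c, gamma), reverse(x))
```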

4. Reinterpreting the Beta-Binomial Model

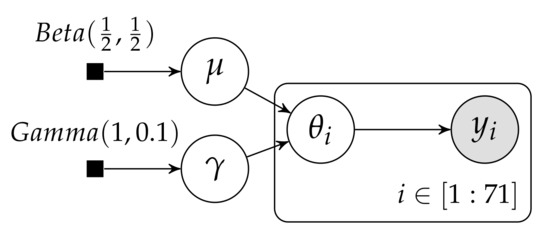

We are now ready to go back to the rodents example provided in the introduction. The main idea we would like this hierarchical model to capture is that the $\theta_i$’s are somewhat similar. We do this by introducing a new random variable $\eta$ to which we would like each $\theta_i$ to be close (see Figure 5). Furthermore, we introduce another variable $\gamma$ that controls how tightly coupled the $\theta_i$’s are to $\eta$. Now, $\eta$ represents a proportion, and priors for proportions have been well studied, including the “Bayes-Laplace rule” [21], which recommends $\eta \sim \mathrm{Beta}(1, 1)$, the Haldane prior [22], which is the improper prior $\eta \sim \mathrm{Beta}(0, 0)$, and the Jeffreys’ prior [18,19], $\eta \sim \mathrm{Beta}\left(\frac{1}{2}, \frac{1}{2}\right)$. Following the arguments in the previous section, here we stick with the Jeffreys’ prior. A more difficult problem is the selection of the prior for $\gamma$, for which we still do not have a well-founded choice. Note that, looking at Equation (12), $\gamma$ acts similarly (although not exactly) to an equivalent sample size. Thus, the prior over $\gamma$ can be thought of as a prior over the equivalent sample size with which $\eta$ will be incorporated as prior into the determination of each of the $\theta_i$’s. If the size of each sample ($n_i$) is large, there will not be much difference between a hierarchical model and modeling each of the 71 experiments as independent experiments. So, it makes sense for the prior over $\gamma$ to concentrate on relatively small equivalent sample sizes. Following this line of thought we propose to follow a

Figure 5.

Reinterpreted hierarchical graphical model for the rodents example.

To summarize, the hierarchical model we obtain based on closeness probability distributions is:

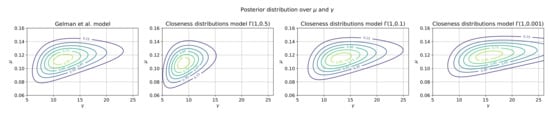
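A minimal sketch of the unnormalized log posterior of this closeness model, assuming NumPy and SciPy (function names ours). As in the Beta-Binomial case, each $\theta_i$ can be integrated out because $\theta_i \mid \eta, \gamma \sim \mathrm{Beta}(\gamma\eta + \frac{1}{2}, \gamma(1-\eta) + \frac{1}{2})$ is conjugate to the binomial likelihood. The prior for $\gamma$ is passed in as a user-supplied function; the flat placeholder in the usage line is purely hypothetical and is not the prior used for Figure 6.

```python
import numpy as np
from scipy.special import betaln


def log_post_closeness(eta, gamma, y, n, log_prior_gamma):
    """Unnormalized log p(eta, gamma | y) for the closeness model.

    eta ~ Beta(1/2, 1/2) (Jeffreys), gamma ~ user-supplied prior,
    theta_i | eta, gamma ~ Beta(gamma * eta + 1/2, gamma * (1 - eta) + 1/2),
    y_i | theta_i ~ Bin(n_i, theta_i), with every theta_i integrated out.
    Binomial coefficients are omitted (they do not depend on eta, gamma).
    """
    y = np.asarray(y, dtype=float)
    n = np.asarray(n, dtype=float)
    a = gamma * eta + 0.5
    b = gamma * (1.0 - eta) + 0.5
    log_prior_eta = -0.5 * np.log(eta) - 0.5 * np.log(1.0 - eta) - betaln(0.5, 0.5)
    log_lik = np.sum(betaln(a + y, b + n - y) - betaln(a, b))
    return log_prior_eta + log_prior_gamma(gamma) + log_lik


def flat_log_prior(gamma):
    """Hypothetical placeholder prior for gamma (illustration only)."""
    return 0.0


# Current group only (4/14); the historical counts of Table 1 would be appended.
print(log_post_closeness(0.12, 10.0, [4], [14], flat_log_prior))
```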

Figure 6 shows that the posteriors generated by both models are similar, and put the parameter $\eta$ (the pooled average) between 0.08 and 0.15, and the parameter $\gamma$ (the intensity of the link between $\eta$ and each of the $\theta_i$’s) between 5 and 25. Furthermore, the model is relatively insensitive to the parameters of the prior for $\gamma$ as long as they create a sparse prior. Thus, we see that selecting the prior as creates a prior that is too concentrated on the low values of $\gamma$ (that is, it imposes a relatively mild closeness link between $\eta$ and each of the $\theta_i$’s). This changes the estimation a lot. However, creates a posterior similar to that of , despite being more spread.

Figure 6.

Comparison of posteriors between a closeness distribution model and that proposed by Gelman et al. in [2].

5. Hierarchical Dirichlet Multinomial Model

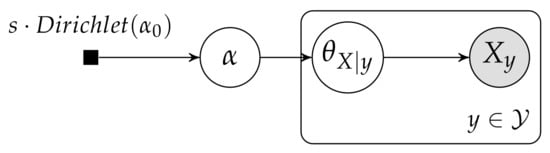

Recently [9,10,11], Azzimonti et al. have proposed a hierarchical Dirichlet multinomial model to estimate conditional probability tables (CPTs) in Bayesian networks. Given two discrete finite random variables $X$ (over domain $\mathcal{X}$) and $Y$ (over domain $\mathcal{Y}$) which are part of a Bayesian network, and such that $Y$ is the only parent of $X$ in the network, the CPT for $X$ is responsible for storing $p(X \mid Y)$. The usual CPT model (the so-called Multinomial-Dirichlet) adheres to parameter independence and stores $|\mathcal{Y}|$ different independent Dirichlet distributions, one over each of the $p(X \mid Y = y)$. Instead, Azzimonti et al. propose the hierarchical Multinomial-Dirichlet model, where “the parameters of different conditional distributions belonging to the same CPT are drawn from a common higher-level distribution”. Their model can be summarized equationally as

and graphically as shown in Figure 7.

Figure 7.

PGM for the hierarchical Dirichlet Multinomial model proposed in [10].

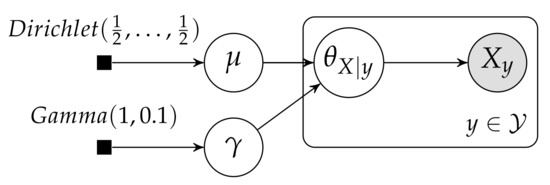

The fact that the Dirichlet distribution is the conditional of a closeness distribution allows us to think about this model as a generalization of the model presented for the rat example. Thus, the Hierarchical Dirichlet Multinomial model can be understood as introducing the assumption that there is a probability distribution with parameter $\eta$ that is close, in terms of its KL divergence, to each of the different conditional distributions $p(X \mid Y = y)$, each of them parameterized by $\theta_{X \mid y}$. Thus, in equational terms, the model can be rewritten as

and depicted as shown in Figure 8. Note that in our reinterpreted model $\gamma$ plays a role quite similar to the one that $s$ played in Azzimonti’s model. To maintain the parallel with the model developed for the rodents example, here we have also assumed a as prior over $\gamma$, instead of the point-mass distribution assumed in [10], but we could easily mimic their approach and specify a single value for $\gamma$.

Figure 8.

Reinterpreted PGM for the hierarchical Dirichlet Multinomial model.
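In the reinterpreted model every conditional $\theta_{X \mid y}$ shares the same Dirichlet parameters $\gamma\eta + \frac{1}{2}$, so, given $\eta$ and $\gamma$, the counts in each row of a CPT follow a Dirichlet-multinomial distribution. The sketch below (NumPy and SciPy assumed; the function name and the count-table layout `counts[y, x]` are our assumptions) computes the resulting log evidence with every $\theta_{X \mid y}$ integrated out, treating the records as independent categorical draws. It does not include the priors over $\eta$ and $\gamma$.

```python
import numpy as np
from scipy.special import gammaln


def log_multivariate_beta(a):
    """log B(a) along the last axis."""
    return np.sum(gammaln(a), axis=-1) - gammaln(np.sum(a, axis=-1))


def log_cpt_evidence(counts, eta, gamma):
    """log p(records | eta, gamma) for the reinterpreted hierarchical CPT model.

    counts[y, x] is the number of records with Y = y and X = x. Each row
    theta_{X|y} ~ Dir(gamma * eta + 1/2) is integrated out, giving one
    Dirichlet-multinomial term per parent configuration y.
    """
    counts = np.asarray(counts, dtype=float)
    alpha = gamma * np.asarray(eta, dtype=float) + 0.5
    per_row = log_multivariate_beta(counts + alpha) - log_multivariate_beta(alpha)
    return float(np.sum(per_row))


# Hypothetical CPT counts: |Y| = 2 parent values, |X| = 3 child values.
counts = [[3, 0, 7], [1, 4, 5]]
print(log_cpt_evidence(counts, eta=[0.2, 0.3, 0.5], gamma=8.0))
```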

Note that we are not claiming to improve the Hierarchical Dirichlet Multinomial model; we are just reinterpreting it in a way that is easier to understand conceptually.

6. Conclusions and Future Work

We have introduced the idea and the formalization of remoteness functions and closeness distributions in Section 2. We have proven that any measurable remoteness function induces a closeness distribution, provided that the volume of the space of distributions is finite. We have particularized closeness distributions for multinomials in Section 3, taking the KL divergence as the remoteness function. By analyzing two examples, we have shown that closeness distributions can be a useful tool for the probabilistic model builder. We have seen that they can provide additional rationale and geometric intuitions for some commonly used hierarchical models.

In the Crowd4SDG and Humane-AI-net projects, these mathematical tools could prove useful in the understanding and development of consensus models for citizen science, improving the ones presented in [23]. Our plan is to study this in future work.

In this paper we have concentrated on discrete closeness distributions. The study of continuous closeness distributions remains for future work.

Funding

This work was partially supported by the projects Crowd4SDG and Humane-AI-net, which have received funding from the European Union’s Horizon 2020 research and innovation program under grant agreements No 872944 and No 952026, respectively. This work was also partially supported by Grant PID2019-104156GB-I00 funded by MCIN/AEI/10.13039/501100011033.

Acknowledgments

Thanks to Borja Sánchez López, Jerónimo Hernández-González and Mehmet Oğuz Mülâyim for discussions on preliminary versions.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Total Order Induced by a Function

Definition A1.

Let $Z$ be a set and $f: Z \to \mathbb{R}$ a function. The binary relation $\leq_f$ (a subset of $Z \times Z$) is defined as

$$z_1 \leq_f z_2 \iff f(z_1) \leq f(z_2).$$

Proposition A1.

$\leq_f$ is a total (or linear) order in $Z$.

Proof.

Reflexivity, transitivity, antisymmetry, and totality are inherited from the fact that $\leq$ is a total order in $\mathbb{R}$. □

Appendix B. Detailed Derivation of the KL Based Closeness Distributions for Multinomials

Closeness Distributions for KL as Remoteness Function

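Let $\theta \in \mathcal{M}_n$. Thus, $\theta$ is a discrete distribution over $n+1$ atoms. We write $\theta_i$ to represent $p(X = i \mid \theta)$. Note that each $\theta_i$ is independent of the parametrization and thus it is an intrinsic quantity of the distribution.

Let $\theta, \eta \in \mathcal{M}_n$. The KL divergence between $\eta$ and $\theta$, which we use as the remoteness function $r(\theta, \eta) = D(\eta \,\|\, \theta)$, is

$$D(\eta \,\|\, \theta) = \sum_{i=1}^{n+1} \eta_i \log \frac{\eta_i}{\theta_i}.$$

The closeness pdf according to Definition 2 is

$$p(\theta, \eta) = \frac{e^{-D(\eta \,\|\, \theta)}}{Z} = \frac{1}{Z} \prod_{i=1}^{n+1} \left(\frac{\theta_i}{\eta_i}\right)^{\eta_i}.$$

Now, it is possible to assess the marginal for $\eta$:

$$p(\eta) = \int_{\mathcal{M}_n} p(\theta, \eta)\, d\mathcal{M}_n(\theta) = \frac{1}{Z} \prod_{i=1}^{n+1} \eta_i^{-\eta_i} \int_{\mathcal{M}_n} \prod_{i=1}^{n+1} \theta_i^{\eta_i}\, d\mathcal{M}_n(\theta), \tag{A2}$$

where we recall that $d\mathcal{M}_n$ is the measure induced by the Fisher metric and it is not connected to . To continue, we need to compute $\int_{\mathcal{M}_n} \prod_{i} \theta_i^{\eta_i}\, d\mathcal{M}_n(\theta)$ as an intrinsic quantity of the manifold, that is, invariant to changes in parametrization. We are integrating $\prod_{i} \theta_i^{\eta_i}$. We can parameterize the manifold using $\theta_1, \ldots, \theta_n$ themselves (the expectation parameters, with $\theta_{n+1} = 1 - \sum_{i=1}^{n} \theta_i$), in which $\sqrt{|G(\theta)|} = \prod_{i=1}^{n+1} \theta_i^{-1/2}$. In this parameterization, the integral can be written as

$$\int_{\mathcal{M}_n} \prod_{i=1}^{n+1} \theta_i^{\eta_i}\, d\mathcal{M}_n(\theta) = \int_{\Delta_n} \prod_{i=1}^{n+1} \theta_i^{\eta_i - \frac{1}{2}}\, d\theta_1 \cdots d\theta_n = B\left(\eta + \tfrac{1}{2}\right), \tag{A3}$$

where $\Delta_n = \{(\theta_1, \ldots, \theta_n) : \theta_i \geq 0,\ \sum_{i=1}^{n} \theta_i \leq 1\}$, the last equality comes from identifying it as a Dirichlet integral of type 1 (see 15-08 in [24]), and $B(\alpha) = \prod_i \Gamma(\alpha_i) / \Gamma\left(\sum_i \alpha_i\right)$ is the multivariate Beta function. Combining Equation (A2) with Equation (A3) we get

$$p(\eta) = \frac{1}{Z}\, B\left(\eta + \tfrac{1}{2}\right) \prod_{i=1}^{n+1} \eta_i^{-\eta_i}. \tag{A4}$$

From here, we can compute the conditional for $\theta$ given $\eta$:

$$p(\theta \mid \eta) = \frac{p(\theta, \eta)}{p(\eta)} = \frac{1}{B\left(\eta + \frac{1}{2}\right)} \prod_{i=1}^{n+1} \theta_i^{\eta_i}. \tag{A5}$$

Equation (A5) is very similar to the expression of a Dirichlet distribution. In fact, the expression of $p(\theta \mid \eta)$ for integration purposes in the expectation parameterization is that of a Dirichlet distribution:

$$p(\theta \mid \eta) \prod_{i=1}^{n+1} \theta_i^{-\frac{1}{2}} = \frac{1}{B\left(\eta + \frac{1}{2}\right)} \prod_{i=1}^{n+1} \theta_i^{\eta_i - \frac{1}{2}} = \mathrm{Dir}\left(\theta;\, \eta + \tfrac{1}{2}\right). \tag{A6}$$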

References

- Van de Schoot, R.; Depaoli, S.; King, R.; Kramer, B.; Märtens, K.; Tadesse, M.G.; Vannucci, M.; Gelman, A.; Veen, D.; Willemsen, J.; et al. Bayesian statistics and modelling. Nat. Rev. Methods Prim. 2021, 1, 1.

- Gelman, A.; Carlin, J.B.; Stern, H.S.; Rubin, D.B. Bayesian Data Analysis; Chapman and Hall/CRC: London, UK, 2013.

- Allenby, G.M.; Rossi, P.E.; McCulloch, R. Hierarchical Bayes Models: A Practitioners Guide. SSRN Electron. J. 2005.

- Lee, S.Y.; Lei, B.; Mallick, B. Estimation of COVID-19 spread curves integrating global data and borrowing information. PLoS ONE 2020, 15, e0236860.

- Lee, S.Y.; Mallick, B.K. Bayesian Hierarchical Modeling: Application Towards Production Results in the Eagle Ford Shale of South Texas. Sankhya B 2021.

- Tarone, R.E. The Use of Historical Control Information in Testing for a Trend in Proportions. Biometrics 1982, 38, 215–220.

- Koller, D.; Friedman, N. Probabilistic Graphical Models: Principles and Techniques; MIT Press: Cambridge, MA, USA, 2009.

- Obermeyer, F.; Bingham, E.; Jankowiak, M.; Pradhan, N.; Chiu, J.; Rush, A.; Goodman, N. Tensor variable elimination for plated factor graphs. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 4871–4880.

- Azzimonti, L.; Corani, G.; Zaffalon, M. Hierarchical Multinomial-Dirichlet Model for the Estimation of Conditional Probability Tables. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; pp. 739–744.

- Azzimonti, L.; Corani, G.; Zaffalon, M. Hierarchical estimation of parameters in Bayesian networks. Comput. Stat. Data Anal. 2019, 137, 67–91.

- Azzimonti, L.; Corani, G.; Scutari, M. Structure Learning from Related Data Sets with a Hierarchical Bayesian Score. In Proceedings of the International Conference on Probabilistic Graphical Models, PMLR, Aalborg, Denmark, 23–25 September 2020; pp. 5–16.

- Amari, S.I. Information Geometry and Its Applications; Springer: Berlin/Heidelberg, Germany, 2016; Volume 194.

- Dudley, R.M. Real Analysis and Probability, 2nd ed.; Cambridge Studies in Advanced Mathematics; Cambridge University Press: Cambridge, UK, 2002.

- Jost, J. Riemannian Geometry and Geometric Analysis; Springer: Berlin/Heidelberg, Germany, 2011.

- Pennec, X. Probabilities and Statistics on Riemannian Manifolds: A Geometric Approach; Technical Report RR-5093; INRIA: Rocquencourt, France, 2004.

- Amann, H.; Escher, J. Analysis III; Birkhäuser: Basel, Switzerland, 2009.

- Kass, R.E.; Vos, P.W. Geometrical Foundations of Asymptotic Inference; Wiley-Interscience: Hoboken, NJ, USA, 1997.

- Jeffreys, H. An invariant form for the prior probability in estimation problems. Proc. R. Soc. Lond. Ser. A Math. Phys. Sci. 1946, 186, 453–461.

- Jeffreys, H. The Theory of Probability; Oxford University Press: Oxford, UK, 1998.

- Cerquides, J. Parametrization invariant interpretation of priors and posteriors. arXiv 2021, arXiv:2105.08304.

- Laplace, P.S.m.d. Essai Philosophique sur les Probabilités; Courcier: Le Mesnil-Saint-Denis, France, 1814.

- Haldane, J.B.S. A note on inverse probability. Math. Proc. Camb. Philos. Soc. 1932, 28, 55–61.

- Cerquides, J.; Mülâyim, M.O.; Hernández-González, J.; Ravi Shankar, A.; Fernandez-Marquez, J.L. A Conceptual Probabilistic Framework for Annotation Aggregation of Citizen Science Data. Mathematics 2021, 9, 875.

- Jeffreys, H.; Swirles Jeffreys, B. Methods of Mathematical Physics; Cambridge University Press: Cambridge, UK, 1950.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).