Abstract

The ridge regression estimator is a commonly used procedure for dealing with multicollinear data. This paper proposes an alternative estimation procedure for high-dimensional multicollinear data. The procedure yields a continuous estimate that includes the ridge estimator as a particular case. We study its asymptotic performance for growing dimension, i.e., $p \to \infty$ when n is fixed. Under some mild regularity conditions, we prove the proposed estimator’s consistency and derive its asymptotic properties. Monte Carlo simulation experiments are carried out to assess its performance, and the method is applied to a high-dimensional genetic dataset.

1. Introduction

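Consider the multiple regression model given by

$$y = X\beta + \epsilon, \qquad (1)$$

where $y$ is a vector of n responses, $X = (x_1, \dots, x_p)$ is an $n \times p$ design matrix with the ith predictor $x_i$, $\beta$ is the coefficients vector, and $\epsilon$ is an n-vector of unobserved errors. Further, we shall assume $E(\epsilon) = 0$, $E(\epsilon\epsilon^\top) = \sigma^2 I_n$, $\sigma^2 > 0$.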

When $p < n$, the ordinary least squares (LS) estimator of $\beta$ is given by

$$\hat\beta^{LS} = (X^\top X)^{-1} X^\top y. \qquad (2)$$

However, in the high-dimensional (HD) case, $p > n$, the LS estimator cannot be obtained because $X^\top X$ is rank deficient. The ridge regression (RR) estimator of [1], which follows the regularization of [2], nevertheless exists. The rationale is to add a positive value $k$ to the eigenvalues of $X^\top X$ and to estimate the parameters via $\hat\beta^{RR}(k) = (X^\top X + k I_p)^{-1} X^\top y$. Refer to Saleh et al. [3] for the theory and application of the RR approach. Projecting $\beta$ onto the row space of $X$ is a well-described remedy. Wang et al. [4] used this technique and proposed a high-dimensional LS estimator as a limiting case of the RR, while Bühlmann [5] also used the projection method and developed a bias correction to propose a bias-corrected RR estimator for the high-dimensional setting. Shao and Deng [6] used the method and proposed thresholding the RR estimator when the projection vector is sparse, in the sense that many of its components are small, and demonstrated consistency. Dicker [7] studied the minimax property of the RR estimator and derived its asymptotic risk for growing dimension, i.e., $p \to \infty$. Although the RR estimator has been studied in high-dimensional problems, there exists a counterpart that has not been considered in high dimension.
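The rank-deficiency argument can be illustrated numerically. The following is a minimal sketch on simulated data, assuming the standard ridge form $(X^\top X + k I_p)^{-1} X^\top y$; the dimensions, seed, and value of k are illustrative choices and are not taken from this paper.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 20, 50                      # illustrative high-dimensional setting, p > n
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)

gram = X.T @ X                     # p x p matrix whose rank cannot exceed n
rank = np.linalg.matrix_rank(gram)

k = 1.0                            # ridge parameter (assumed value)
ridge_matrix = gram + k * np.eye(p)
beta_rr = np.linalg.solve(ridge_matrix, X.T @ y)

# Adding k shifts every eigenvalue of X'X upward by k, so the smallest
# eigenvalue of the regularized matrix is (numerically) at least k.
min_eig = np.linalg.eigvalsh(ridge_matrix).min()

print(f"rank(X'X) = {rank} < p = {p}; smallest ridge eigenvalue = {min_eig:.3f}")
```

Because the regularized matrix is positive definite for any k > 0, the ridge solution is unique even though the LS normal equations have infinitely many solutions here.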

An Existing Two-Parameter Biased Estimator

It is well known that the RR estimator is an efficient approach for multicollinear situations, and many authors have since developed ridge-type estimators to overcome the issue of multicollinearity. One drawback of the RR estimator is that it is a non-linear function of the tuning parameter. Hence, Liu [8] developed a similar estimator that is linear in the tuning parameter, via the following optimization problem for the case $p < n$:

$$\min_{\beta}\; (y - X\beta)^\top (y - X\beta) + (d\hat\beta^{LS} - \beta)^\top (d\hat\beta^{LS} - \beta). \qquad (3)$$

The solution to the optimization problem (3) has the form

$$\hat\beta^{L}(d) = (X^\top X + I_p)^{-1} (X^\top y + d\hat\beta^{LS}), \qquad (4)$$

where $\hat\beta^{LS}$ is the LS estimator and $0 < d < 1$ is termed the biasing parameter.
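The contrast between the two tuning parameters can be checked directly. The sketch below assumes the standard forms of the Liu estimator, $(X^\top X + I_p)^{-1}(X^\top y + d\hat\beta^{LS})$, and of the ridge estimator, $(X^\top X + k I_p)^{-1} X^\top y$, on simulated data with p < n; all numerical settings are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 60, 5                        # classical setting, p < n
X = rng.standard_normal((n, p))
y = X @ np.ones(p) + rng.standard_normal(n)

gram = X.T @ X
xty = X.T @ y
beta_ls = np.linalg.solve(gram, xty)

def liu(d):
    """Liu estimator (X'X + I)^{-1} (X'y + d * beta_LS)."""
    return np.linalg.solve(gram + np.eye(p), xty + d * beta_ls)

def ridge(k):
    """Ridge estimator (X'X + k I)^{-1} X'y."""
    return np.linalg.solve(gram + k * np.eye(p), xty)

# Linear (affine) in d: the estimate at the midpoint of [0, 1] equals the
# average of the estimates at the two endpoints.
liu_gap = np.max(np.abs(liu(0.5) - 0.5 * (liu(0.0) + liu(1.0))))

# The same midpoint check fails for ridge, because k enters through a
# matrix inverse.
ridge_gap = np.max(np.abs(ridge(50.0) - 0.5 * (ridge(0.0) + ridge(100.0))))

print(f"Liu midpoint gap:   {liu_gap:.2e}")
print(f"ridge midpoint gap: {ridge_gap:.2e}")
```

The check also shows that d = 1 returns the LS estimator, which is why d is usually restricted to the unit interval.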

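Combining the advantages of the RR and Liu estimators, Ozkale and Kaciranlar [9] proposed a two-parameter estimator by solving the following optimization problem:

$$\min_{\beta}\; (y - X\beta)^\top (y - X\beta) + k\left[(\beta - d\hat\beta^{LS})^\top (\beta - d\hat\beta^{LS}) - c\right], \qquad (5)$$

where $\hat\beta^{LS}$ is the LS estimator, c is a constant, and k is the Lagrangian multiplier. The resulting two-parameter ridge estimator has the form

$$\hat\beta(k, d) = (X^\top X + k I_p)^{-1} (X^\top y + k d\, \hat\beta^{LS}). \qquad (6)$$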

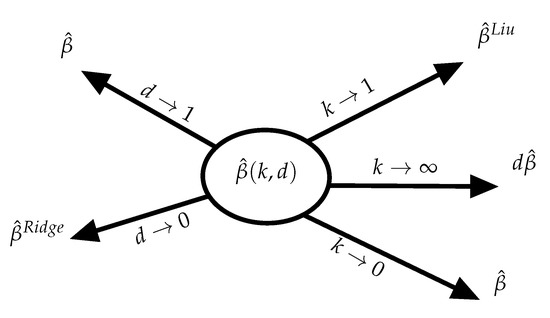

The above estimator has several advantages and reduces to the LS, RR, and Liu estimators as limiting cases (see Figure 1). It can be argued that this estimator can also be interpreted as a restricted estimator under stochastic prior information about $\beta$.

Figure 1. Special limiting cases.
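The limiting cases can be verified numerically. The sketch below assumes the standard two-parameter form from [9], $(X^\top X + k I_p)^{-1}(X^\top y + k d\,\hat\beta^{LS})$, for p < n; the cases checked are those implied by that form, and the data and parameter values are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 80, 6
X = rng.standard_normal((n, p))
y = X @ np.linspace(1.0, 2.0, p) + rng.standard_normal(n)

gram, xty = X.T @ X, X.T @ y
eye = np.eye(p)
beta_ls = np.linalg.solve(gram, xty)

def two_parameter(k, d):
    """Two-parameter estimator (X'X + k I)^{-1} (X'y + k d beta_LS)."""
    return np.linalg.solve(gram + k * eye, xty + k * d * beta_ls)

k, d = 3.0, 0.4                     # arbitrary illustrative values
beta_rr = np.linalg.solve(gram + k * eye, xty)
beta_liu = np.linalg.solve(gram + eye, xty + d * beta_ls)

checks = {
    "d = 0 gives ridge": np.allclose(two_parameter(k, 0.0), beta_rr),
    "k = 1 gives Liu": np.allclose(two_parameter(1.0, d), beta_liu),
    "d = 1 gives LS": np.allclose(two_parameter(k, 1.0), beta_ls),
    "k = 0 gives LS": np.allclose(two_parameter(0.0, d), beta_ls),
}
for name, ok in checks.items():
    print(f"{name}: {ok}")
```

Every case relies on $\hat\beta^{LS}$, which is why the estimator in this form is unavailable once p exceeds n.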

With growing dimension p, $p > n$, the LS estimator (2) cannot be obtained, so the two-parameter ridge estimator in Equation (6) cannot be used. Hence, developing a high-dimensional version of this estimator and studying its asymptotic performance is worthwhile. Therefore, in this paper, we propose a high-dimensional version of Ozkale and Kaciranlar’s estimator and give its asymptotic properties. The paper is organized as follows: In Section 2, a high-dimensional two-parameter estimator is proposed, and its asymptotic characteristics are discussed. Section 3 presents the generalized cross validation for choosing the parameters. In Section 4, some simulation experiments are presented to assess the novel estimator’s statistical and computational performance, and an application to the AML data is illustrated. The conclusion is presented in the last section.

2. The Proposed Estimator

In this section, we develop an HD estimator and establish its asymptotic properties. To show that a quantity depends on p, we shall use the subscript p, and we particularly consider the scenarios in which $p \to \infty$ and n is fixed. This is termed large p, fixed n, which is more general than scenarios with , a common assumption in high-dimensional settings.

Consider a diverging number of variables case, in which p is allowed to tend to infinity. This case fulfills the high-dimensional case . Under this setting, the inverse of does not exist; however, the RR estimator is still valid and applicable. Further, the Liu estimator cannot be obtained. As a remedy, one can use the Moore–Penrose inverse of , a particular case of the generalized inverse. Wang and Leng [10] showed that can be seen as the Moore-Penrose inverse of for , and that is the Moore–Penrose inverse of when . This gives, for any ,

where s is an arbitrary nonnegative constant.
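The Moore–Penrose statements can be checked on simulated data. In the sketch below, the first check confirms that $X^\top (X X^\top)^{-1}$ coincides with the Moore–Penrose inverse of $X$ when p > n and $X$ has full row rank. The second check uses the standard "push-through" identity $(X^\top X + s I_p)^{-1} X^\top = X^\top (X X^\top + s I_n)^{-1}$ with s > 0; treating it as the counterpart of (7) is an assumption, since that display is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p = 10, 40                       # p > n; illustrative sizes
X = rng.standard_normal((n, p))     # full row rank with probability one
y = rng.standard_normal(n)

# Right inverse X'(XX')^{-1} versus NumPy's Moore-Penrose inverse.
right_inverse = X.T @ np.linalg.inv(X @ X.T)
pinv_matches = np.allclose(right_inverse, np.linalg.pinv(X))

# Push-through identity for a strictly positive s (assumed value).
s = 0.5
lhs = np.linalg.solve(X.T @ X + s * np.eye(p), X.T)
rhs = X.T @ np.linalg.inv(X @ X.T + s * np.eye(n))
identity_holds = np.allclose(lhs, rhs)

# The resulting minimum-norm solution reproduces the responses exactly.
beta_min_norm = right_inverse @ y
interpolates = np.allclose(X @ beta_min_norm, y)

print(pinv_matches, identity_holds, interpolates)
```

The right-hand side only requires inverting an n x n matrix, which is the practical benefit of this representation when p is much larger than n.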

Multiplying both sides of (7) by reveals that the LS estimator can be represented as

We impose the following regularity conditions for studying the asymptotic performance of the estimator given by (9).

- (A1) . There exists a constant , such that a component of is .

- (A2) . There exists a constant , such that a component of is .

- (A3) For sufficiently large p, there is a vector , such that , and there exists a constant , such that each component of the vector is , and , with . (An example of such choice is and ).

- (A4) For sufficiently large p, there exists a constant , such that each component of is and . Further, .

Assumption (A3) is adopted from Luo [11]. Let .

Theorem 1.

Assume (A1) and (A2). Then, for all .

Proof.

For the proof, refer to Appendix A. □

Theorem 2.

Assume (A1)–(A3). Further, suppose , where is the ith eigenvalue of . Then, for all .

Proof.

For the proof, refer to Appendix A. □

Using Theorems 1 and 2, it can be verified that the HD estimator is a consistent estimator of $\beta$ as $p \to \infty$.

The following result gives the asymptotic distribution of this estimator as $p \to \infty$.

Theorem 3.

Assume , and for sufficiently large p, there exists a constant , such that each component of β is . Let , . Furthermore, suppose that , . Then,

Proof.

For the proof, refer to Appendix A. □

3. Generalized Cross Validation

As noted, the estimator depends on both the ridge parameter k and the Liu parameter d, which must be chosen in practice. To do this, we use the generalized cross-validation (GCV) criterion. The GCV chooses the ridge and Liu parameters by minimizing an estimate of the unobservable risk function

where

with , termed as the hat matrix of .

It is straightforward to show, as in [12], that

where and .

The GCV function is then defined as

where .
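As an illustration of how a GCV-type criterion is minimized in practice, the following sketch uses the classical GCV score for a linear smoother, $n^{-1}\|(I_n - H)y\|^2 / \{n^{-1}\,\mathrm{tr}(I_n - H)\}^2$, with the plain ridge hat matrix. This is a stand-in and an assumption: the hat matrix of the proposed two-parameter estimator is not reproduced here, and for that estimator the search would run over a grid of (k, d) pairs instead of k alone. The data, grid, and function names are hypothetical.

```python
import numpy as np

def ridge_hat_matrix(X, k):
    """Ridge hat matrix X (X'X + k I_p)^{-1} X', computed as XX'(XX' + k I_n)^{-1}."""
    n = X.shape[0]
    K = X @ X.T
    return K @ np.linalg.inv(K + k * np.eye(n))

def gcv_score(X, y, k):
    """Classical GCV score of the ridge smoother at tuning value k."""
    n = X.shape[0]
    H = ridge_hat_matrix(X, k)
    residual = y - H @ y
    return (residual @ residual / n) / (np.trace(np.eye(n) - H) / n) ** 2

rng = np.random.default_rng(4)
n, p = 30, 100                      # illustrative p > n setting
X = rng.standard_normal((n, p))
beta = np.zeros(p)
beta[:5] = 2.0                      # sparse coefficient vector (assumed)
y = X @ beta + rng.standard_normal(n)

k_grid = np.logspace(-2, 3, 30)     # candidate ridge parameters
scores = np.array([gcv_score(X, y, k) for k in k_grid])
k_best = k_grid[np.argmin(scores)]

print(f"GCV-selected k = {k_best:.3g}")
```

Working with the n x n matrix $X X^\top$ keeps the cost of each grid point independent of p once that product has been formed.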

The following theorem extends the GCV theorem proposed by Akdeniz and Roozbeh [13].

Theorem 4.

According to the definition of GCV, we have

where , and consequently,

whenever .

Proof.

For the proof, refer to Appendix A. □

4. Numerical Investigations

In this section, to assess the performance of the proposed HD estimator, we conduct a simulation study along with an analysis of real data.

4.1. Simulation

Here, we consider the multiple regression model with varying squared multiple correlation coefficient and error distribution, given by the following relation:

where , is the active set, and its dimension is . The absolute values of a normal distribution with mean 0 and standard deviation 5 is considered . The remaining components are zero.

In this example, motivated by McDonald and Galarneau [14], the explanatory variables are computed by

$$x_j = (1 - \gamma^2)^{1/2} z_j + \gamma z_{p+1}, \qquad j = 1, \dots, p,$$

where the $z_j$s are independent standard normal pseudo-random vectors, and $\gamma$ is specified such that the correlation between any two explanatory variables is given by $\gamma^2$. Similarly to Zhu et al. [15], the variance is set to , and two different kinds of error distribution are taken for $\epsilon$: (1) the standard normal, $N(0, 1)$, and (2) the standard t with 5 degrees of freedom, $t_5$. The constant c is also varied to control the signal-to-noise ratio, and it is set to , 1, and 2 with the corresponding . $R^2$ represents the proportion of the variance of the dependent variable that is explained by the independent variable or variables in a regression model.
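The design-generation step can be sketched as follows, assuming the standard McDonald and Galarneau construction $x_j = (1 - \gamma^2)^{1/2} z_j + \gamma z_{p+1}$, in which all columns share one common normal component. The function name, sample size, and value of $\gamma$ are illustrative assumptions; the large n is used only so that the empirical correlations are close to $\gamma^2$.

```python
import numpy as np

def mcdonald_galarneau_design(n, p, gamma, rng):
    """Generate an n x p design whose columns have pairwise correlation gamma**2."""
    Z = rng.standard_normal((n, p + 1))
    # Column j mixes its own noise z_j with the shared component z_{p+1}.
    return np.sqrt(1.0 - gamma**2) * Z[:, :p] + gamma * Z[:, [p]]

rng = np.random.default_rng(5)
gamma = 0.9
X = mcdonald_galarneau_design(20000, 4, gamma, rng)

corr = np.corrcoef(X, rowvar=False)
off_diagonal = corr[~np.eye(4, dtype=bool)]

print("target correlation:", gamma**2)
print("empirical range:", off_diagonal.min(), off_diagonal.max())
```

Each column has unit variance by construction, so the shared component alone determines the degree of multicollinearity.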

We consider ; the sample size and the number of covariates are set to , , respectively. Following regularity conditions ()–(), we set . For , we take , which guarantees (). We then simulate and 100 times using Equation (9) and .

For comparison purposes, the quadratic bias (QB) and mean squared error (MSE) are computed according to

respectively, where is one of or .
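A Monte Carlo evaluation of this kind can be sketched as below. The definitions used are the usual ones and are an assumption here, since the displayed formulas are not reproduced: the quadratic bias is taken as $\|\bar{\hat\beta} - \beta\|^2$ and the MSE as the average of $\|\hat\beta^{(r)} - \beta\|^2$ over replications. Two ridge fits with different k serve as stand-ins for the two estimators being compared; all names and settings are hypothetical.

```python
import numpy as np

def quadratic_bias(estimates, beta):
    """Squared norm of (average estimate - true beta); rows are replications."""
    return float(np.sum((estimates.mean(axis=0) - beta) ** 2))

def mean_squared_error(estimates, beta):
    """Average squared estimation error over replications."""
    return float(np.mean(np.sum((estimates - beta) ** 2, axis=1)))

rng = np.random.default_rng(6)
n, p, reps = 20, 50, 100
X = rng.standard_normal((n, p))     # design held fixed across replications
beta = np.zeros(p)
beta[:5] = 1.0                      # assumed sparse true coefficients

def ridge(y, k):
    """Ridge estimate in its n x n form X'(XX' + k I_n)^{-1} y."""
    return X.T @ np.linalg.solve(X @ X.T + k * np.eye(n), y)

est_small_k = np.empty((reps, p))
est_large_k = np.empty((reps, p))
for r in range(reps):
    y = X @ beta + rng.standard_normal(n)
    est_small_k[r] = ridge(y, 0.1)
    est_large_k[r] = ridge(y, 10.0)

qb = quadratic_bias(est_large_k, beta)
mse = mean_squared_error(est_large_k, beta)
relative_mse = mean_squared_error(est_small_k, beta) / mse

print(f"QB = {qb:.3f}, MSE = {mse:.3f}, relative MSE = {relative_mse:.3f}")
```

With these definitions the MSE splits exactly into the quadratic bias plus the total empirical variance, which gives a convenient sanity check on any simulation output.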

4.2. Review of Results

In Theorem 2, the condition under which the proposed estimator is unbiased is investigated based on the eigenvalues of . Here, we numerically analyze the bias of this estimator by comparing it with the ridge estimator with respect to the parameters of the model. For this purpose, the difference in QB is reported in Table 1 by evaluating

$$\mathrm{diff} = \mathrm{QB}(\text{proposed HD estimator}) - \mathrm{QB}(\text{ridge estimator}).$$

Table 1. The difference between quadratic biases of the high dimensional and ridge estimators.

If diff is positive, then the quadratic bias of the proposed estimator is larger than that of the ridge estimator.

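To compare the MSEs, we use the relative mean square error (RMSE) given by

$$\mathrm{RMSE} = \frac{\mathrm{MSE}(\text{ridge estimator})}{\mathrm{MSE}(\text{proposed HD estimator})}.$$

The results are reported in Table 2. If $\mathrm{RMSE} > 1$, then the proposed estimator has a smaller MSE compared to the ridge.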

Table 2. The relative MSE of the high dimensional and ridge estimators.

- (1) The performance of the estimators is affected by the number of observations (n), the number of variables (p), the signal-to-noise ratio (c), and the degree of multicollinearity ($\gamma$).

- (2) As the degree of multicollinearity, $\gamma$, increases, for both error distributions the QB of the proposed estimator increases for and 1, but its MSE decreases dramatically, since the RMSE increases.

- (3) The signal-to-noise ratio shows the effect of in the model. Lower values (less than 1) are a sign of model sparsity, since, when c is small, the proposed estimator performs better than the ridge. This is evidence that our estimator is a better alternative in sparse models in the MSE sense. However, the QB increases for large c values, which forces the model to overestimate the parameters.

- (4) As p increases, although the proposed estimator is superior to the ridge in sparse models (small c values), the efficiency decreases. This is more evident when the ratio $p/n$ becomes larger. This may be seen as poor performance of the proposed estimator, but our estimator is still preferred in high dimensions for sparse models.

- (5) As n increases, so does the RMSE; however, the QB becomes very large, which is due to the complicated form of the proposed estimator. It must be noted that this does not contradict the results of Theorem 2, since the simulation scheme does not obey the regularity condition.

- (6) There is evidence of robustness to the distribution tail for sparse models, i.e., the QB and RMSE are the same for both normal and t distributions. However, as c increases, the QB of the proposed estimator explodes for the heavier-tailed distribution. This may be seen as a disadvantage of the proposed estimator, but even for large values of c, the RMSE stays the same, which is evidence of relatively small variance for the heavier-tailed distribution.

4.3. AML Data Analysis

This section assesses the performance of the proposed estimators using the mean prediction error (MPE) and MSE criteria on a data set adopted from Metzeler et al. [16], in which information for 79 patients was collected. The data can be accessed from the Gene Expression Omnibus (GEO) data repository (http://www.ncbi.nlm.nih.gov/geo/ (accessed on 1 January 2021)) of the National Center for Biotechnology Information (NCBI), where the data are available under GEO accession number GSE12417. We only use the data set that was used as a test set. It contains gene expression data for 79 adult patients with cytogenetically normal acute myeloid leukemia (CN-AML), showing heterogeneous treatment outcomes. Following Sill et al. [17], we reduce the total number of 54,675 gene expression features that have been measured with the Affymetrix HG-U133 Plus 2.0 microarray technology to the top features with the largest variance across all 79 samples. We consider overall survival time in months as the response variable. The condition number of the design matrix for the AML data set is approximately , evidence of severe multicollinearity among the columns of the design matrix ([18], see p. 298). To find the optimum values of k and d, denoted by and for practical purposes, we use the GCV given by Equation (12). Hence, we use the following formulas:

To compute the MPE and MSE, we divide the whole data set into two train () and validation () sets, comprising and , respectively. Then, the measures are evaluated using

where stands for the number of bootstrapped samples, is one of the proposed and ridge estimators, and is the assumed true parameter obtained by Equation (9) from the whole data set.
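The workflow of this section (variance-based feature filtering followed by repeated train/validation evaluation) can be sketched on synthetic data. Several points are assumptions: the MPE is taken as the average squared prediction error on the validation set, the repetitions are random splits without replacement, a plain ridge fit stands in for the estimators compared in the paper, and all sizes other than the 79 samples are illustrative. The function names are hypothetical, and no AML data are used.

```python
import numpy as np

def top_variance_columns(X, m):
    """Indices (sorted) of the m columns with the largest sample variance."""
    variances = X.var(axis=0, ddof=1)
    return np.sort(np.argsort(variances)[::-1][:m])

def ridge_fit(X, y, k):
    """Ridge estimate in its n x n form, usable when p > n."""
    n = X.shape[0]
    return X.T @ np.linalg.solve(X @ X.T + k * np.eye(n), y)

def mean_prediction_error(X, y, k, n_train, n_splits, rng):
    """Average validation error over repeated random train/validation splits."""
    n = X.shape[0]
    errors = np.empty(n_splits)
    for b in range(n_splits):
        perm = rng.permutation(n)
        train, valid = perm[:n_train], perm[n_train:]
        beta_hat = ridge_fit(X[train], y[train], k)
        errors[b] = np.mean((y[valid] - X[valid] @ beta_hat) ** 2)
    return float(errors.mean())

rng = np.random.default_rng(7)
n, p_all, m = 79, 500, 100          # synthetic stand-in for the expression matrix
scales = np.ones(p_all)
scales[:m] = 5.0                    # first m columns are made high-variance
X_all = rng.standard_normal((n, p_all)) * scales

keep = top_variance_columns(X_all, m)
X = X_all[:, keep]
y = X[:, :3].sum(axis=1) + rng.standard_normal(n)

mpe = mean_prediction_error(X, y, k=1.0, n_train=60, n_splits=20, rng=rng)
print(f"kept {keep.size} features, MPE = {mpe:.3f}")
```

Fitting only on the training rows and scoring on the held-out rows keeps the prediction error honest; the tuning parameters would be chosen by GCV on the training part in the same way.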

The results are tabulated in Table 3 for 200 bootstrapped samples. The following conclusions are obtained from Table 3:

Table 3. RMPE and RMSE values for 200 bootstrapped samples in the analysis of AML data.

- (1) Using the GCV, the proposed estimator is shown to be consistently superior to the ridge estimator, relative to RMSE and RMPE criteria.

- (2) Similarly to the results of simulations, with growing p, the MSE of the proposed estimator increases compared to the ridge estimator. However, as p gets larger, the mean prediction error becomes smaller, which shows the superiority for prediction purposes.

Further, Figure 2 depicts the MSE and MPE values for both HD and ridge estimators, for the case . It is obvious that the high-dimensional estimator performs better compared to the ridge. For the case , we obtained similar results.

Figure 2. Box-plot of the MSE and MPE values for in the AML data.

5. Conclusions

In this note, we propose a high-dimensional two-parameter ridge estimator as an alternative to the conventional ridge and Liu estimators, and discuss its asymptotic properties. The simulation and real-data experiments indicate that this estimator is efficient in high-dimensional problems and can potentially overcome multicollinearity. Additionally, the proposed high-dimensional ridge estimator yields superior performance in the mean squared error sense.

Author Contributions

Conceptualization, M.A. and N.M.K.; methodology, M.A. and N.M.K.; validation, M.A., M.N., M.R. and N.M.K.; formal analysis, M.A., M.N., M.R. and N.M.K.; investigation, M.A., M.N., M.R. and N.M.K.; resources, M.N.; writing—original draft preparation, M.A.; writing—review and editing, M.A., M.N., M.R. and N.M.K.; visualization, M.A., M.N. and M.R.; supervision, M.A. and N.M.K.; project administration, M.A., M.N., M.R. and N.M.K.; funding acquisition, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was based upon research supported, in part, by the visiting professor program, University of Pretoria, and the National Research Foundation (NRF) of South Africa, SARChI Research Chair UID: 71199; Reference: IFR170227223754 grant No. 109214. The work of M. Norouzirad and M. Roozbeh is based on the research supported in part by the Iran National Science Foundation (INSF) (grant number 97018318). The opinions expressed and conclusions arrived at are those of the authors and are not necessarily attributed to the NRF.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this article may be simulated in R, using the stated seed value and parameter values. The real data set is available at http://www.ncbi.nlm.nih.gov/geo/ (accessed on 1 January 2021).

Acknowledgments

We would like to sincerely thank two anonymous reviewers for their constructive comments, which led us to put many details in the paper and improved the presentation.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Main Results

Proof of Theorem 1.

By definition, we have

By (A1), and . By (A2), . Hence, as , and the proof is complete. □

Proof of Theorem 2.

By definition

Under (A2), . The proof is complete using Theorem 2 of Luo [11]. □

Proof of Theorem 3.

We have

By (A1), , by (A2), , and by (A4), . Hence,

The proof is complete. □

Proof of Theorem 4.

It is straightforward to verify that

Hence

which leads to the required result. □

References

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for non-orthogonal problems. Technometrics 1970, 12, 55–67.

- Tikhonov, A.N. Solution of incorrectly formulated problems and the regularization method. Sov. Math. Dokl. 1963, 4, 1035–1038.

- Saleh, A.K.M.E.; Arashi, M.; Kibria, B.M.G. Theory of Ridge Regression Estimation with Applications; John Wiley: Hoboken, NJ, USA, 2019.

- Wang, X.; Dunson, D.; Leng, C. No penalty no tears: Least squares in high-dimensional models. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1814–1822.

- Bühlmann, P. Statistical significance in high-dimensional linear models. Bernoulli 2013, 19, 1212–1242.

- Shao, J.; Deng, X. Estimation in high-dimensional linear models with deterministic design matrices. Ann. Stat. 2012, 40, 812–831.

- Dicker, L.H. Ridge regression and asymptotic minimax estimation over spheres of growing dimension. Bernoulli 2016, 22, 1–37.

- Liu, K. A new class of biased estimate in linear regression. Commun. Stat. Theory Methods 1993, 22, 393–402.

- Ozkale, M.R.; Kaciranlar, S. The restricted and unrestricted two-parameter estimators. Commun. Stat. Theory Methods 2007, 36, 2707–2725.

- Wang, X.; Leng, C. High dimensional ordinary least squares projection for screening variables. J. R. Stat. Soc. Ser. B 2015.

- Luo, J. The discovery of mean square error consistency of ridge estimator. Stat. Probab. Lett. 2010, 80, 343–347.

- Amini, M.; Roozbeh, M. Optimal partial ridge estimation in restricted semiparametric regression models. J. Multivar. Anal. 2015, 136, 26–40.

- Akdeniz, F.; Roozbeh, M. Generalized difference-based weighted mixed almost unbiased ridge estimator in partially linear models. Stat. Pap. 2019, 60, 1717–1739.

- McDonald, G.C.; Galarneau, D.I. A Monte Carlo Evaluation of Some Ridge-Type Estimators. J. Am. Stat. Assoc. 1975, 70, 407–416.

- Zhu, L.P.; Li, L.; Li, R.; Zhu, L.X. Model-free feature screening for ultrahigh dimensional data. J. Am. Stat. Assoc. 2011, 106, 1464–1475.

- Metzeler, K.H.; Hummel, M.; Bloomfield, C.D.; Spiekermann, K.; Braess, J.; Sauerland, M.C.; Heinecke, A.; Radmacher, M.; Marcucci, G.; Whitman, S.P.; et al. An 86 Probe Set Gene Expression Signature Predicts Survival in Cytogenetically Normal Acute Myeloid Leukemia. Blood 2008, 112, 4193–4201.

- Sill, M.; Hielscher, T.; Becker, N.; Zucknick, M. c060: Extended Inference for Lasso and Elastic-Net Regularized Cox and Generalized Linear Models; R Package Version 0.2-4; 2014. Available online: http://CRAN.R-project.org/package=c060 (accessed on 1 January 2021).

- Montgomery, D.C.; Peck, E.A.; Vining, G.G. Introduction to Linear Regression Analysis, 5th ed.; Wiley: Hoboken, NJ, USA, 2012.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).