Abstract

The benefits and properties of iterative splitting methods based on serial versions have been studied in recent years. In this work, we extend the iterative splitting methods to novel classes of parallel versions to solve nonlinear fractional convection-diffusion equations. For such partial differential equations with higher-dimensional, fractional, and nonlinear terms, we apply parallel iterative splitting methods, which accelerate the solver methods and reduce the computational time. Here, we exploit the higher accuracy of the iterative splitting methods. We present a novel parallel iterative splitting method based on multi-splitting methods. The flexibility of multisplitting methods allows for decomposing large-scale operator equations. In combination with iterative splitting methods, which use characteristics of waveform-relaxation (WR) methods, we can embed the relaxation behavior and deal better with the nonlinearities of the operators. We derive convergence results for the parallel iterative splitting methods by reformulating the underlying methods as a summation of the individual convergence results of the WR methods. We discuss the numerical convergence of the serial and parallel iterative splitting methods with respect to synchronous and asynchronous treatments. Furthermore, we present different numerical applications to fluid and phase-field problems in order to validate the benefit of the parallel versions.

Keywords:

multisplitting method; iterative splitting method; numerical analysis; operator-splitting method; initial value problem; iterative solver method; waveform relaxation method; convection-diffusion equation; viscous Burgers’ equation; fractional diffusion equations

MSC:

35K45; 35K90; 47D60; 65M06; 65M55

1. Introduction

Nowadays, iterative splitting methods are important solvers for large systems of ordinary, partial, or stochastic differential equations, see [1,2,3,4,5,6]. Iterative splitting methods are based on two solver ideas. In the first part, we separate the full operator into different sub-operators, which reduces the computational time of each sub-computation. An additional benefit is the iterative technique, which allows for solving a relaxation problem, as in the waveform-relaxation method or Picard’s iterative method, see [7,8,9,10]. Both parts reduce the computational time and the complexity compared with solving all parts together (full operator and direct method), see [11]. Such iterative splitting methods can be used to compute, with less computational burden, an approximate solution of ordinary differential equations (ODEs) or semi-discretized partial differential equations (PDEs), see [10,11]. Moreover, we consider parallel splitting methods, which are nowadays important for solving large problems, see [3]. Such parallel splitting methods are applied to the split subproblems, which are computed independently by the different processors. Therefore, in a second part, we consider parallelization techniques based on the multi-splitting approach, see [5]. Such approaches allow for embedding the splitting techniques of the first part into a parallelized version, see [12]. Based on the parallel versions of our methods, we can treat large computational problems.

For the computational problems, we consider higher-dimensional nonlinear PDEs, such as nonlinear and fractional convection-diffusion equations, see [13,14]. Nonlinear PDEs arise in nonlinear flow problems, for example, in the simulation of traffic flow, see [15], and in flow problems related to the Navier–Stokes equations, see [16], which can be modeled with the Burgers’ equation.

Furthermore, we take into account fractional PDEs, e.g., fractional convection-diffusion equations. Nowadays, fractional calculus is applied in several fields of science and engineering, for example, in visco-elastic and thermal diffusion in fractal domains, see [17,18], in phase-field models with mixtures of fluids [19,20], or in biological models with fractional multi-models [21]. It is also of theoretical interest, e.g., for physical and geometrical interpretations [22] and fractional Dirac operators [23].

The treatment of such delicate fractional and nonlinear PDEs requires additional higher-order spatial and nonlinear approximations, see [24]. We apply flexible iterative splitting methods, see [13,25], which can be extended to parallel algorithms.

The underlying modeling equations are given as nonlinear fractional convection-diffusion equations:

where is the spatial domain with d as the dimension and . We denote by a scalar parameter of the convection part and by the viscosity of the diffusion part, while f is a right hand side function.

We have different cases:

- : viscous Burgers’ equation, see [2,26],

- and : Diffusion equation, see [2,26], and

- : fractional diffusion equation, see [14,27].

Further interesting examples related to fractional and nonlinear PDEs can be found in [24,28].

Equations (1)–(3) can be derived in a more general setting from the principle of least action, see [29,30]. Here, we are not restricted to a specific Lagrangian or Hamiltonian formulation, so that we can treat a much larger class of fractional differential equations.

Here, the fractional Laplace operator replaces the standard Laplacian operator, see [14], and it is denoted as a sum of one-dimensional spatial operators, :

where we have defined the Riemann fractional derivative of order , as:

In the next step, we apply a semi-discretisation with higher-order finite-difference methods for , see [31], or with higher-order Grünwald formulas for the fractional operators with , see [27,32]. Thus, we can reduce the computational cost of the time-splitting approaches, which are given as iterative splitting approaches, see [33]. With such an approximation, i.e., a semi-discretization with higher-order schemes, we obtain the nonlinear differential equation system in Cauchy form:

where , while , where d is the dimension and m is the number of grid-points in each spatial direction. Furthermore, is a nonlinear matrix, which includes the spatial discretization of the nonlinear convection in Equation (1) with the boundary conditions and with higher order spatial finite difference schemes, see [31]. The operator is a linear matrix, which includes the spatial discretization of the fractional diffusion term with the boundary conditions and with the underlying fractional discretization methods, see [24,32]. The function : is the discretized right-hand side. We assume to be an appropriate Banach space with a vector and induced matrix norm , see [10,34,35].
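To make the fractional discretization concrete, the following is a minimal sketch, assuming the simplest first-order shifted Grünwald formula (not the higher-order variants of [27,32]) for the left-sided fractional derivative of order 1 < α ≤ 2 on a uniform one-dimensional grid with homogeneous Dirichlet data. The function names `grunwald_weights` and `shifted_grunwald_matrix` are hypothetical. For α = 2 the matrix reduces to the standard second-order difference matrix, which serves as a sanity check.

```python
import numpy as np


def grunwald_weights(alpha: float, n: int) -> np.ndarray:
    """Return g_0, ..., g_{n-1} with g_k = (-1)^k * binom(alpha, k).

    Uses the standard recursion g_k = g_{k-1} * (1 - (alpha + 1) / k).
    """
    g = np.empty(n)
    g[0] = 1.0
    for k in range(1, n):
        g[k] = g[k - 1] * (1.0 - (alpha + 1.0) / k)
    return g


def shifted_grunwald_matrix(alpha: float, m: int, h: float) -> np.ndarray:
    """First-order shifted Gruenwald approximation of the left-sided
    fractional derivative of order alpha on m interior nodes (spacing h),
    assuming zero Dirichlet values outside the interior nodes:

        D^alpha u_i ~ h^(-alpha) * sum_{k=0}^{i+1} g_k u_{i-k+1}
    """
    g = grunwald_weights(alpha, m + 1)
    D = np.zeros((m, m))
    for i in range(m):
        for k in range(i + 2):
            j = i - k + 1          # shifted index (one node to the right)
            if 0 <= j < m:         # boundary nodes carry zero data
                D[i, j] = g[k]
    return D / h**alpha
```

The resulting matrix is lower Hessenberg and dense below the diagonal, reflecting the non-local character of the fractional operator.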

For the nonlinear term, we apply a linearisation based on Picard’s iteration, which is also a special iterative splitting approach, see [7,36]. We have:

where with some (number of the iterations for the nonlinear part) and starting condition . Furthermore, we assume a sufficiently large J, such that , where is an error bound. For simplicity, we assume , while is a right-hand side, which can be approximated by an integral equation, see [35].
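The Picard linearisation can be illustrated on a one-dimensional viscous Burgers’ equation u_t = -u u_x + ν u_xx. The sketch below is a minimal illustration, assuming central differences, homogeneous Dirichlet data, and an exact matrix exponential for each linearised step. Freezing the nonlinear matrix at the average of the old value and the previous Picard iterate is an assumption of this sketch, not necessarily the linearisation used above; `burgers_matrices` and `picard_step` are hypothetical names.

```python
import numpy as np
from scipy.linalg import expm


def burgers_matrices(m: int, nu: float):
    """Central-difference matrices on m interior nodes of (0, 1) with
    zero Dirichlet data: D1 ~ d/dx and B = nu * d^2/dx^2."""
    h = 1.0 / (m + 1)
    D1 = (np.eye(m, k=1) - np.eye(m, k=-1)) / (2.0 * h)
    D2 = (np.eye(m, k=1) - 2.0 * np.eye(m) + np.eye(m, k=-1)) / h**2
    return D1, nu * D2


def picard_step(u_n, dt, D1, B, tol=1e-10, max_iter=50):
    """One time step of u' = A(u) u + B u with A(u) = -diag(u) D1.

    Picard iteration: the nonlinear matrix is frozen using the previous
    iterate, and the resulting linear problem is solved exactly with expm.
    Returns the new value and the number of Picard iterations used.
    """
    u_prev = u_n.copy()
    for j in range(1, max_iter + 1):
        a = 0.5 * (u_n + u_prev)               # frozen convection velocity
        A_frozen = -np.diag(a) @ D1
        u_new = expm(dt * (A_frozen + B)) @ u_n
        if np.max(np.abs(u_new - u_prev)) < tol:
            return u_new, j
        u_prev = u_new
    return u_prev, max_iter
```

For small time steps, the iteration count plays the role of the number J of nonlinear iterations.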

Therefore, we obtain a linearised differential equation system, which is solved by the outer iterations with . In the following, we concentrate on solving such linearised evolution equations by decomposing their underlying matrix operator into sub-matrix operators, so that we obtain the following differential equation:

where is the full operator, and are the sub-operators. Further, is the solution and is the initial condition.

The bottleneck of the iterative methods is the large size of the iteration matrices, see [4,5]; therefore, we consider parallel versions of the iterative splitting method, see [3,12]. By decomposing the large-scale differential equation with operator A into smaller sub-differential equations with operators , where , and L is the number of processors, we distribute the computational work over many processors and reduce the overall computational time, see [5]. Furthermore, we modify the synchronous parallel splitting method with chaotic (asynchronous) ideas, such that the computation and communication of the various processors can be done independently, see [37].

In addition, we consider different examples from the literature that also discuss optimized computational cost and error bounds, see [28]. Here, we deal with models of the viscous Burgers’ equation, which are applied in flow problems, and fractional convection-diffusion equations, which are applied in diffuse-interface phase-field problems, see [20].

The outline of this paper is as follows. Section 2 explains the serial iterative splitting method. Section 3 introduces the parallel iterative splitting method. We discuss the theoretical results in Section 4. The numerical examples are presented in Section 5. In Section 6, we discuss the theoretical and practical results.

2. Serial Iterative Splitting Method

We consider a two-level iterative splitting method, which is discussed for two operators in [2] and for L operators in [38].

In this work, we also apply the recent theoretical results of our work in [26]. While that work took the adaptivity of the splitting approaches into account, here we consider multi-operator splitting approaches and multi-splitting methods.

Based on the differential Equation (10), we have the following decomposition of the operator A:

- , while are the sub-operators for the iterative part (solver part), and

- and are the sub-operators for the relaxation part (right hand side part).

where we have .

The serial iterative splitting method is given in the following algorithm. Here, we consider a time step-size , which can also be chosen adaptively, see [26]. We assume a time interval with , where is the initial time and is the end time. We consecutively solve the following sub-problems for :

where we can assume for the first initialisation , or a different initial function, see [2]. Here, is the known split approximation, which is computed in the previous iterative procedure. The current split approximation at time is defined as , where is the maximal number of iterative steps. The stopping criterion is , and then we have the solution .

The solutions are given as:

where .

Remark 1.

We approximate the integral operator with a higher-order integration scheme, e.g., matrix-exponential computations, see [39,40], exponential Runge–Kutta methods, see [41], Padé or Magnus expansions, see [42,43], or higher-order numerical integration methods, such as the trapezoidal or Simpson’s rule, see [44].
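The serial scheme and the integral approximation of Remark 1 can be sketched for two constant matrices A and B. We assume the common two-operator form of the iterative splitting: odd iterations treat A exactly and take B from the previous iterate, even iterations swap the roles, and the starting function is c_0(t) = c_n. Each iterate is stored on a sub-grid of the time step, and the coupling integral uses the trapezoidal rule. The function name and the sub-grid size are illustrative choices, not part of the method description above.

```python
import numpy as np
from scipy.linalg import expm


def iterative_splitting_step(A, B, c_n, h, n_iter=8, n_sub=40):
    """One time step [t_n, t_n + h] of the two-operator iterative splitting:

        odd i : c_i' = A c_i     + B c_{i-1},  c_i(t_n) = c_n
        even i: c_i' = A c_{i-1} + B c_i,      c_i(t_n) = c_n

    with c_0(t) = c_n. Each iterate lives on n_sub + 1 sub-points; the
    operator treated exactly is integrated with its matrix exponential and
    the coupling integral with the trapezoidal rule (cf. Remark 1).
    """
    dt = h / n_sub
    eA, eB = expm(dt * A), expm(dt * B)
    prev = np.tile(np.asarray(c_n, dtype=float), (n_sub + 1, 1))
    for i in range(1, n_iter + 1):
        # odd: A exact, B taken from prev; even: B exact, A taken from prev
        E, K = (eA, B) if i % 2 == 1 else (eB, A)
        cur = np.empty_like(prev)
        cur[0] = prev[0]
        for k in range(n_sub):
            # exponential trapezoidal rule for c' = X c + K prev(t)
            cur[k + 1] = E @ (cur[k] + 0.5 * dt * (K @ prev[k])) + 0.5 * dt * (K @ prev[k + 1])
        prev = cur
    return prev[-1]
```

With more iterations, the end-of-step value approaches exp(h(A + B)) c_n up to the trapezoidal error of the sub-grid, which mirrors the role of the iteration count in Theorem 1.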

We define the error-function as with , the maximum-norm , and the maximum operator norm .

Theorem 1.

Proof.

The result for the 2-level method is given in [38]. We apply a recursive argument to the iterative scheme with L operators and obtain:

where is given with , see also [38]. □

Remark 2.

The operators are based on the spatial discretization with higher-order methods, e.g., [27,31]. Further, we assume that all of the operators, including the fractional discretized operators, are bounded, see [24]. Based on this assumption, the results can be generalized to fractional operators.

3. Parallel Iterative Splitting Method

In the following, we parallelise the serial iterative splitting approach.

We present the following approaches:

- multi-splitting iterative approach, and

- two operator iterative splitting approach.

3.1. Multi-Splitting Iterative Approach

The problem is given as .

The idea is a multiple decomposition of

where is a non-singular matrix and is the remainder matrix.

Further, we have the decomposition of the parallel computable vectors:

where is the i-th iterative solution and are the parallel computable solutions in the i-th iterative step. E is the identity matrix and are diagonal matrices with positive entries.

The multisplitting iterative approach is given as:

where the initialisation is , and are the iterative steps. The stopping criterion is and then we have the solution .

The splitting error of the iterative splitting is of order, i.e. , with

where and are the weights () and , while .

Benefit:

- Parallel implementation (the method is designed for parallel contributions), and

- Good error balance between the different operators

Drawback:

- Balance in the decomposition of is important to damp large errors
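The weighting with the diagonal matrices can be illustrated with the classical stationary multisplitting iteration for a linear system A x = b, x^{i+1} = Σ_l E_l M_l^{-1}(N_l x^i + b). This is a minimal sketch, assuming that the linear-system case is representative of the evolution setting above; the choice of M_l (the l-th diagonal block of A plus the diagonal of A elsewhere) and the function name are hypothetical. Each term of the sum is independent and could be assigned to its own processor.

```python
import numpy as np


def multisplitting_solve(A, b, M_list, E_list, tol=1e-10, max_iter=500):
    """Multisplitting iteration for A x = b:

        x^{i+1} = sum_l E_l M_l^{-1} (N_l x^i + b),  N_l = M_l - A,

    where the weights E_l are diagonal (stored as vectors) with sum_l E_l = I.
    The L solves in one sweep are independent (one per processor).
    """
    if not np.allclose(sum(E_list), 1.0):
        raise ValueError("weights must sum to the identity")
    x = np.zeros_like(b, dtype=float)
    for i in range(1, max_iter + 1):
        x_new = np.zeros_like(x)
        for M, e in zip(M_list, E_list):
            N = M - A
            x_new += e * np.linalg.solve(M, N @ x + b)
        if np.max(np.abs(x_new - x)) < tol:
            return x_new, i
        x = x_new
    return x, max_iter
```

With 0/1 weights and block-diagonal M_l, the sketch reduces to a block-Jacobi iteration; overlapping weights (entries strictly between 0 and 1) average the processors’ contributions.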

3.2. Parallel Splitting: Classical Version (Synchronous Version)

Here, we consider the classical version of the parallel splitting algorithm, which is applied with synchronisation, see also [45]. We assume L processors, and are the iterative steps, while I is the maximum number of iterative steps.

We deal with the parallel splitting in the synchronous version as:

where we assume for the initialisation of the first step . E is the identity matrix and are diagonal matrices with positive entries. and are given in Equations (20) and (21). Furthermore, is the known split approximation at the time level , which is computed in the previous iterative process. The split approximation at the time level is defined as , which is computed in the current iterative process.

The solutions are given as:

where .

The stopping criterion is given as:

The integrals can be solved by higher order integration-rules, see Remark 1.

In the classical algorithm, we have a synchronisation point, so that the next iterative step can only start after all processors have submitted their results, see Equation (34). Such a hard synchronisation point allows for a simpler stopping criterion, which is given in Equation (35).
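A minimal sketch of the synchronous (classical) version on one time step, assuming that processor l solves c_l' = A_l c_l + (A - A_l) c^{i-1}(t) and that the results are combined as c^i = Σ_l E_l c_l. The thread pool only illustrates the structure, not performance: `pool.map` returns after all sub-problems have finished, which plays the role of the synchronisation point. The names, the sub-grid, and the trapezoidal coupling are assumptions of this sketch.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor
from scipy.linalg import expm


def solve_sub(A_l, R_l, prev, dt):
    """Processor-local waveform solve of c' = A_l c + R_l prev(t) on the
    sub-grid, starting from prev[0] (exponential trapezoidal rule)."""
    E = expm(dt * A_l)
    cur = np.empty_like(prev)
    cur[0] = prev[0]
    for k in range(len(prev) - 1):
        cur[k + 1] = E @ (cur[k] + 0.5 * dt * (R_l @ prev[k])) + 0.5 * dt * (R_l @ prev[k + 1])
    return cur


def parallel_splitting_sync(A, A_parts, weights, c_n, h, tol=1e-10, max_iter=30, n_sub=40):
    """Synchronous parallel splitting on [t_n, t_n + h]; weights are the
    diagonals of E_l and must sum to one componentwise."""
    dt = h / n_sub
    prev = np.tile(np.asarray(c_n, dtype=float), (n_sub + 1, 1))
    with ThreadPoolExecutor(max_workers=len(A_parts)) as pool:
        for i in range(1, max_iter + 1):
            # every processor uses the same previous iterate c^{i-1}
            results = list(pool.map(lambda A_l: solve_sub(A_l, A - A_l, prev, dt), A_parts))
            # combining all results is the synchronisation point
            cur = sum(w * r for w, r in zip(weights, results))
            if np.max(np.abs(cur - prev)) < tol:
                return cur[-1], i
            prev = cur
    return prev[-1], max_iter
```

The stopping test compares whole waveforms of two consecutive iterates, which is only possible because all processors deliver their results at the same iteration.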

The bottleneck of the synchronous algorithm is that processors that have already finished cannot continue with the next iterative step, so their computational time is wasted. Therefore, we explain the ideas of asynchronous algorithms, which are used to apply the parallel splitting method in an asynchronous version.

3.3. Asynchronous Algorithm

The idea of an asynchronous algorithm is that each processor works independently and has access to a common memory. If one processor needs an updated solution from another processor, it reads that solution from the common memory. The processors are independent, and convergence is established in a weighted norm, see [3].

An asynchronous algorithm is defined as follows:

Definition 1.

For , let be the subset indicating which components are computed at the i-th iteration. Additionally, we have an iteration count (prior to i), which indicates the iteration when the l-th component was computed in processor l.

For , we have , where L is the number of processors and , such that:

Subsequently, we define the asynchronous algorithm:

where are the initialisations and is the solver function of the l-th component, see [3].

In the following, the convergence of the asynchronous algorithm is presented with respect to the weighted norm, see [3].

Definition 2.

We assume that each component space is a normed linear space . We can define an appropriate norm for the parallel methods, namely the weighted maximum norm:

where the vector is positive in each component for all .

Theorem 2.

We assume that we have a fixed point with for all i. Further, we assume that there exists and a positive vector , such that:

Based on the assumptions, we conclude that the asynchronous iterates converge to , which is the unique fixed point of .

Proof.

The proof is given in [3]. □
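The asynchronous iteration of Definition 1 and the weighted maximum norm of Definition 2 can be simulated deterministically on one machine. The sketch below is a hypothetical illustration: it applies chaotic relaxation to the Jacobi fixed-point map of a strictly diagonally dominant matrix, which is a contraction in the maximum norm with unit weights, so the assumptions of Theorem 2 hold. At step i only a random subset S(i) is updated, and each updated component reads a retarded iterate with a bounded delay.

```python
import numpy as np


def weighted_max_norm(x, w):
    """Weighted maximum norm ||x||_w = max_l |x_l| / w_l (Definition 2,
    with scalar components and positive weights w)."""
    return np.max(np.abs(x) / w)


def async_jacobi(A, b, n_steps=600, max_delay=3, p_update=0.5, seed=0):
    """Simulated asynchronous Jacobi iteration for A x = b.

    At step i only the components in a random set S(i) are recomputed;
    component l reads the retarded iterate x^{s_l(i)} with
    i - max_delay <= s_l(i) <= i - 1 (Definition 1). All other components
    keep their previous value.
    """
    rng = np.random.default_rng(seed)
    n = len(b)
    d = np.diag(A)
    history = [np.zeros(n)]                      # x^0, x^1, ...
    for i in range(1, n_steps + 1):
        x = history[-1].copy()
        S = np.flatnonzero(rng.random(n) < p_update)
        for l in S:
            lag = int(rng.integers(1, min(max_delay, i) + 1))
            stale = history[-lag]                # x^{i - lag}
            x[l] = (b[l] - A[l] @ stale + d[l] * stale[l]) / d[l]
        history.append(x)
    return history[-1]
```

The random subsets and delays stand in for processors that run at different speeds; convergence does not depend on the particular seed as long as every component keeps being updated and the delays stay bounded.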

3.4. Parallel Splitting: Modern Version (Asynchronous Version)

We apply the asynchronous algorithm given in Definitions 1 and 2, with the convergence results of Theorem 2. We assume L processors, and are the iterative steps, while I is the maximum number of iterative steps.

We deal with the parallel splitting in the asynchronous version as:

where we assume for the initialisation of the first step . E is the identity matrix and are diagonal matrices with positive entries. and are given in Equations (20) and (21). Further, is the known split approximation at time , which is computed in the previous iterative process. The split approximation at time is given as , which is computed in the current iterative process.

The solutions are given as:

where .

The stopping criterion is given as:

where and we have the maximum-norm with for all .

The integrals can be solved by higher order integration-rules, see Remark 1.

In the modern algorithm, we do not have a synchronisation point, so that each processor can work independently. For the stopping criterion, we apply a weighted norm, which means that the result of every processor has to be below the given error bound, see the stopping criterion in Equation (54).

4. Theoretical Results

In the following, we deal with the m-dimensional initial value problem in the non-homogeneous form, also see the homogeneous form in Equation (10):

where A is conveniently decomposed into two operators , and f is the right-hand side.

In the following, we use proof ideas related to waveform-relaxation methods, see [37,45].

The initial value problem (55) is solved with the multisplitting Waveform-relaxation method, which is given as:

where A is given in Equation (10). Further, is the starting condition.

For the multisplitting approach, we have the following Definition:

Definition 3.

Let be the number of splittings, and real-valued matrices. We say that for is a multisplitting triple if:

- and with ,

- The matrices are non-negative diagonal matrices and satisfy: , where I is the identity matrix, and

- indicates the iteration, where the l-th component is computed prior to .

- The multisplitting approach that is based on the Waveform-relaxation in the classical version is given by:

- The multisplitting approach based on the Waveform-relaxation in the modern version is given by:

4.1. Stability Analysis

We deal with the following system:

where we have

where for , and we apply the multisplitting notation (58), with:

where and we obtain the standard Waveform-relaxation method as:

We rewrite this in recursive notation and, without loss of generality, assume ; then we obtain:

Given a well-conditioned system of eigenvectors, we can consider the eigenvalues of and of instead of the operators themselves, for . For the matrices we have the eigenvalues with and .

We can rewrite into the eigenvalue-notation and obtain:

We assume that all of the initial values with are as where I is the order, following the ideas in the iterative splitting approach [46].

Further, we also assume that the pairs for ; otherwise, we do not consider the iterative splitting approach, since the time scales are equal, see [34].

In the following, we apply the -stability.

-Stability

We define and with , .

We have the following Proposition 1:

Proposition 1.

Starting with and a time-step , we obtain:

where is the stability function of the scheme. The are given as:

and

with i-iterations.

Proof.

We apply complete induction.

We start with and obtain:

Let us consider the -stability that is given by the following eigenvalues in a wedge:

For the A-stability we have whenever . This means that we have the stiff operator, where we assume that is the non-stiff operator.

The stability of the two iterations is given in the following theorem with respect to A and -stability.

Theorem 3.

We have the following stability for the iterative operator splitting scheme (71):

For the stability function , where i is the iterative step, we have the following A-stability

with , the initialization is given as and the initial conditions are .

Proof.

We consider the , while is bounded as a nonstiff operator.

We apply complete induction.

We start with :

with the assumption of the nonstiff operators with .

Further we also have :

Subsequently, we apply the induction step for :

where we applied .

Afterwards, we obtain our results, also see the ideas of the stability proofs in [47]. □
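For the scalar test equation c' = λ₁c + λ₂c with z_k = hλ_k, the first two stability functions can be written in closed form. The following sketch assumes that scheme (71) is the usual two-operator iterative splitting with exact integration and starting function c_0 = c^n; under that assumption, direct integration gives S_1(z_1, z_2) = e^{z_1} + (z_2/z_1)(e^{z_1} - 1) and S_2(z_1, z_2) = 1 + (z_1 + z_2)/(z_1 - z_2) (e^{z_1} - e^{z_2}). For z_1 → -∞ with a fixed real z_2 ≤ 0 (stiff λ₁, non-stiff λ₂), S_2 tends to 1 - e^{z_2}, which lies in [0, 1).

```python
import numpy as np


def S1(z1, z2):
    """Stability function after one iteration: c_1' = l1 c_1 + l2 c^n
    integrated exactly over one step (z_k = h * l_k, z1 != 0)."""
    return np.exp(z1) + z2 / z1 * (np.exp(z1) - 1.0)


def S2(z1, z2):
    """Stability function after two iterations: c_2' = l1 c_1(t) + l2 c_2
    integrated exactly over one step (requires z1 != z2)."""
    return 1.0 + (z1 + z2) / (z1 - z2) * (np.exp(z1) - np.exp(z2))


# Stiff limit: z1 -> -infinity with a fixed non-stiff z2 <= 0
for z1 in (-1e1, -1e2, -1e3):
    print(z1, S1(z1, -0.1), S2(z1, -0.1))
```

For z_2 = 0 both functions reduce to e^{z_1}, and for small arguments S_2 agrees with e^{z_1 + z_2} up to third-order terms.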

4.2. Convergence Analysis

In order to apply the multisplitting Waveform-relaxation method (57) and (58), we write the solutions of the individual Equation (57) as:

where we have

where for .

Further, we apply the multisplitting notation (58) and obtain the summations:

where and we obtain the standard Waveform-relaxation method as:

We assume that Lemma 1 is fulfilled, see also [45].

Lemma 1.

The following items are equivalent:

- is a solution of the initial value problem (55).

- is a solution of each multisplitted equation .

- is the solution of the fixed point equation .

We define as maximum norm and we also denote by the matrix norm induced by the vector norm .

Based on these assumptions, in the following we derive the errors and convergence results.

In Theorem 4, we derive the error of the i-th approximation, see also [45].

Theorem 4.

Proof.

We have given

We apply the Lemma 1 and follow with the iterative approach:

where is the i-times convolution.

Further, we have , where is an appropriate Banach-Norm.

There exists:

and we have

We apply the estimation of the Waveform-relaxation, see [8], and obtain:

where we have .

The error estimate is then given as

We apply

Subsequently, we obtain the estimation

□
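The superlinear factor (KT)^i/i! typical of waveform-relaxation estimates [8] can be checked numerically. The sketch below is a hypothetical scalar example, assuming the splitting c_i' = m c_i + n c_{i-1} with m ≤ 0, starting function c_0(t) = c(0), and K = |n|. From e_i(t) = ∫_0^t e^{m(t-s)} n e_{i-1}(s) ds and |e^{m(t-s)}| ≤ 1, induction gives |e_i(t)| ≤ (K t)^i / i! · max|e_0|.

```python
import math
import numpy as np


def wr_iterates(m, n, T=1.0, n_sub=2000, n_iter=6):
    """Waveform relaxation for c' = (m + n) c, c(0) = 1, with splitting
    c_i' = m c_i + n c_{i-1}, c_i(0) = 1, and c_0(t) = 1 (scalar, m <= 0).
    Returns the time grid and the list of iterates c_0, ..., c_{n_iter}."""
    t = np.linspace(0.0, T, n_sub + 1)
    dt = t[1] - t[0]
    E = math.exp(m * dt)
    iterates = [np.ones_like(t)]
    for _ in range(n_iter):
        prev, cur = iterates[-1], np.empty_like(t)
        cur[0] = 1.0
        for k in range(n_sub):
            # exponential trapezoidal rule for the coupling term n * prev(t)
            cur[k + 1] = E * (cur[k] + 0.5 * dt * n * prev[k]) + 0.5 * dt * n * prev[k + 1]
        iterates.append(cur)
    return t, iterates
```

Comparing each iterate with the exact solution e^{(m+n)t} shows the factorial decay, so a few iterations suffice on short windows.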

The following new convergence result, Theorem 5, extends the classical convergence result of Theorem 4.

Theorem 5.

There exists a constant , which bounds the kernel k of the multisplitting waveform-relaxation operator, such that . Then, the convergence of the modern multisplitting WR method (59) and (60) is given by

where , where are the retarded iterations of the l-th processor.

Proof.

We start with the estimation of the i-th iteration:

Afterwards, we have the recursion:

where we apply , based on the idea in [8], and we obtain:

where . □

Remark 3.

For the parallel error, we obtain order if we assume that all processors have at least m iterative cycles, while, for the serial error, we have order . Thus, in the serial version, we have to apply iterative steps in total to obtain the result, while in the parallel version we only apply m iterative steps, since L processors share the computation of the L sub-equations. Furthermore, we can assume that the sub-equations are faster to solve, because the sub-operators are much smaller and simpler to handle. Consequently, we have , where is the time to solve a sub-problem and the time to solve the full problem. Therefore, the parallel distribution is beneficial and reaches the higher order faster than the serial version.

Remark 4.

While the classical version of the parallel splitting method has order , see Theorem 4, the modern version of the parallel splitting method can partially improve the order. We obtain with , when we split into slow and fast convergent processors and assume that the results of the fast convergent processors are sufficient for the update. We can define a new with , where the set contains the fast convergent processors, see also the ideas of [37,48]. Therefore, we circumvent the slow processors and later redistribute the decomposition of the matrices to obtain a more balanced load among the processors.

5. Numerical Examples

In what follows, we deal with different numerical examples, which are motivated by real-life applications in fluid-flow and phase-field problems. We verify and test our theoretical results for the novel parallel iterative splitting approaches.

We deal with:

- Only time-dependent linear problem: we apply an ordinary differential equation to verify the theoretical results.

- Time- and space-dependent linear problem: we apply a diffusion equation with different spatially dependent operators and test the application to partial differential equations.

- Time- and space-dependent nonlinear problem: we apply a mixed convection-diffusion problem given by Burgers’ equation to test and verify the application to nonlinear problems.

- Time- and space-dependent fractional problem: we apply a fractional diffusion equation with different spatially dependent operators and test the application to fractional differential equations.

5.1. First Example: Matrix Problem

In the first test example, we consider the following matrix equation,

whose exact solution is

We split the matrix as:

- Two operator approach

- Multiple operator approach, where the and are given as:

Table 1 and Table 2 show the results of the classical and modern versions of the multi-splitting iterative approach with the above partitions, using different discretizations in with step h, a maximum of 10 iterations, and a tolerance of . The tables list the relative and absolute errors for each component of the solution and the average number of iterations performed to reach the tolerance.

Table 1.

Multisplitting classic version.

Table 2.

Multisplitting Modern version.

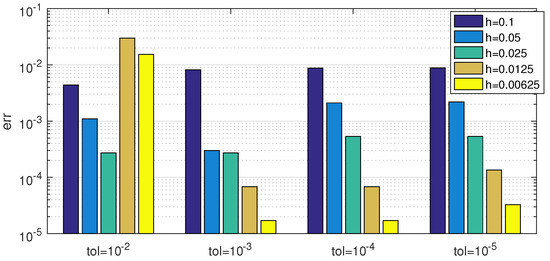

Figure 1 shows the influence of the tolerance value on the error of the modern version of the algorithm. Each group of bars represents the error for the different step sizes indicated in the legend. The error decreases with the step h if the tolerance is small enough. This holds except for , where higher errors appear for smaller steps. Comparing the bars for the same step and different tolerances, the error for a given time step h reaches a minimum as the tolerance decreases; beyond this point, the errors stabilize or even increase slightly for smaller tolerances.

Figure 1.

Matrix problem. Precision of the modern version of the multi-splitting iterative approach in terms of the time step h and the tolerance.

Remark 5.

We applied the multisplitting method with the classical (synchronous) and modern (chaotic) approaches and obtained the same accuracy, i.e., both methods are equally accurate. The modern method is more beneficial for large time steps, where the solution of one sub-problem is obtained faster and can already be used for the solution of the second sub-problem. For such small computational imbalances, the modern approach is more efficient.

5.2. Second Example: Diffusion Problem

We deal with the following diffusion problem:

where we have the analytical solution , with and .

In operator notation, we write:

where and we assume that the zero-boundary conditions (Dirichlet boundary conditions) are fulfilled.

The problem is discretized by using a four-dimensional (4-D) mesh in . Denote by the approximated value of the solution at node for a given t. For the time-integration, we apply the integral formulation, see Equations (15)–(18).

For the spatial discretization, we test a second order scheme:

and a fourth order scheme:

where we have the analogous operators for the y and z derivatives.
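The two stencils can be checked on a smooth test function. A minimal sketch, assuming the standard five-point fourth-order formula (-u_{i-2} + 16u_{i-1} - 30u_i + 16u_{i+1} - u_{i+2})/(12Δx²) and evaluating only at nodes away from the boundary, so that no boundary closure is needed; the function names are hypothetical. On u = sin(πx), the observed orders should be close to 2 and 4.

```python
import numpy as np


def d2_second(u, h):
    """Second-order central difference for u_xx at nodes 1..n-2."""
    return (u[:-2] - 2.0 * u[1:-1] + u[2:]) / h**2


def d2_fourth(u, h):
    """Fourth-order central difference for u_xx at nodes 2..n-3."""
    return (-u[:-4] + 16.0 * u[1:-3] - 30.0 * u[2:-2] + 16.0 * u[3:-1] - u[4:]) / (12.0 * h**2)


def observed_order(stencil, offset, m):
    """Max-norm errors for u = sin(pi x) on m and 2m intervals and the
    observed convergence order log2(err_m / err_2m)."""
    errs = []
    for n_int in (m, 2 * m):
        x = np.linspace(0.0, 1.0, n_int + 1)
        h = x[1] - x[0]
        approx = stencil(np.sin(np.pi * x), h)
        exact = -np.pi**2 * np.sin(np.pi * x[offset:len(x) - offset])
        errs.append(np.max(np.abs(approx - exact)))
    return np.log2(errs[0] / errs[1])


print(observed_order(d2_second, 1, 20), observed_order(d2_fourth, 2, 20))
```

The same one-dimensional stencils are applied along each of the x, y, and z directions.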

We compute the solution using spatial and temporal steps and , respectively, to establish the convergence of the algorithms. We use different measures to estimate the convergence. On the one hand, we compare the outcome of the method with the exact solution at every mesh point, which shows the convergence of the method. On the other hand, we compare with the result obtained by halving the time step, h/2, at the points shared by the corresponding meshes. This allows for analyzing how the results depend on these steps.

Denote by the difference between the results at a mesh point at the final time T, , obtained using time steps h and h/2, and by the difference with the analytical solution at the same point. In the tables, we denote the maximum errors by

and

and the mean errors by

and

where N is the number of spatial nodes at time T.
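The four measures can be computed directly from the final-time values. A minimal sketch with a hypothetical helper `error_measures`, assuming that the mean errors are mean absolute values over the N shared nodes and that the arrays are already restricted to those nodes:

```python
import numpy as np


def error_measures(u_h, u_h2, u_exact):
    """Hypothetical helper. u_h: values at time T computed with step h;
    u_h2: values computed with step h/2 at the same (shared) nodes;
    u_exact: analytical solution at those nodes."""
    e = np.abs(u_h - u_h2)        # difference between the h and h/2 runs
    E = np.abs(u_h - u_exact)     # difference with the analytical solution
    N = u_h.size                  # number of spatial nodes at time T
    return {"e_max": e.max(), "E_max": E.max(),
            "e_mean": e.sum() / N, "E_mean": E.sum() / N}
```

The h/2 comparison estimates the temporal error without the exact solution, while the comparison with the analytical solution also contains the spatial discretization error.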

In the following, we discuss different decompositions of the multi-operator splitting approach:

- Directional decomposition: We decompose the operator into the different spatial directions.

Here, we have the benefit of decomposing the different directions. The drawback is related to the unbalanced decomposition, where the matrices have different sparsity patterns. Therefore, the exponential matrices of the operators differ in their sparsity, and the error cannot be optimally reduced. We can reduce the unbalance if we use , see [38]. Subsequently, we obtain at least a second-order scheme (related to the spatial discretization).

We compare our sequential and parallel iterative splitting methods with standard ones, such as the sequential operator splitting [49] and the Strang–Marchuk splitting [50]. We apply the splitting algorithms with directional decomposition in . The splitting is iterated until a tolerance of or a maximum of 10 iterations is reached. The values shown in the tables correspond to maximum or mean values at . Table 3 and Table 4 present the results obtained using different numbers of temporal steps and the second- and fourth-order schemes for the spatial discretization, respectively.
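Whether the standard splittings depend on the number of time steps can be checked on a small case. For constant-coefficient diffusion on a tensor-product grid, the directional matrices are Kronecker products that commute, so exp(τA_x)exp(τA_y) = exp(τ(A_x + A_y)), and neither the sequential nor the Strang–Marchuk splitting introduces a splitting error. The sketch assumes a 2D grid with second-order stencils for brevity; the 3D case with an additional A_z is analogous.

```python
import numpy as np
from scipy.linalg import expm


def laplacian_1d(m):
    """Second-order 1D Dirichlet Laplacian on m interior nodes of (0, 1)."""
    h = 1.0 / (m + 1)
    return (np.eye(m, k=1) - 2.0 * np.eye(m) + np.eye(m, k=-1)) / h**2


m = 6
D, I = laplacian_1d(m), np.eye(m)
Ax = np.kron(D, I)   # second derivative in x on the tensor-product grid
Ay = np.kron(I, D)   # second derivative in y
u0 = np.random.default_rng(1).random(m * m)
tau = 1e-2

exact = expm(tau * (Ax + Ay)) @ u0
lie = expm(tau * Ay) @ (expm(tau * Ax) @ u0)       # sequential splitting
strang = expm(0.5 * tau * Ax) @ (expm(tau * Ay) @ (expm(0.5 * tau * Ax) @ u0))
commutator = np.max(np.abs(Ax @ Ay - Ay @ Ax))
print(commutator, np.max(np.abs(lie - exact)), np.max(np.abs(strang - exact)))
```

Both splitting results coincide with the unsplit exponential up to rounding, which is consistent with a remaining error dominated by the spatial discretization.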

Table 3. Diffusion problem. Results of the directional decomposition using the second order scheme for the spatial discretization, with 10 spatial subintervals and different number of temporal steps.

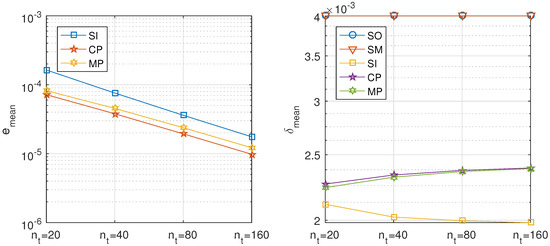

Table 4. Diffusion problem. Results of the directional decomposition using the fourth order scheme for the spatial discretization, with 10 subintervals in each dimension and different number of temporal steps.

The standard methods, sequential operator splitting and Strang–Marchuk splitting, give almost the same results independently of the number of time steps, because of the linearity of the equation (the directional operators commute, so these splittings introduce no splitting error). The e error estimates are negligible, whereas the error estimates are independent of the time step, reflecting the spatial discretization error.

Table 3 and Table 4 show that the estimated errors and of the iterative splitting methods are proportional to h for the second-order scheme of discretization of the directional derivatives, whereas they are proportional to for the fourth-order scheme. The mean differences with the analytical solution, , of the iterative splitting methods tend to those of the non-iterative ones as h decreases. The error estimates are more than one order of magnitude better for the fourth-order scheme than for the second-order scheme. Figure 2 and Figure 3 depict these results.

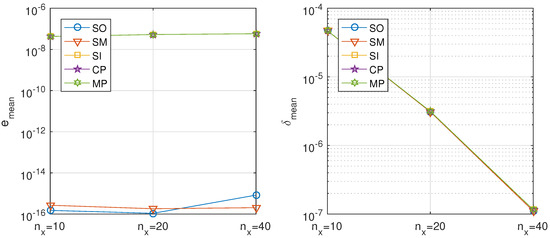

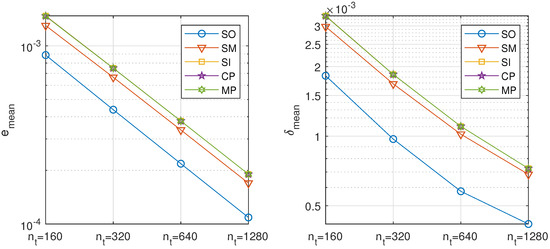

Figure 2. Diffusion problem, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, as compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with second order approximations for the spatial derivatives for different number of time steps.

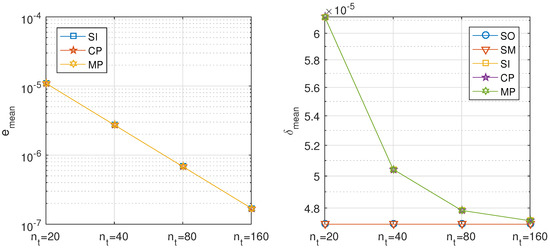

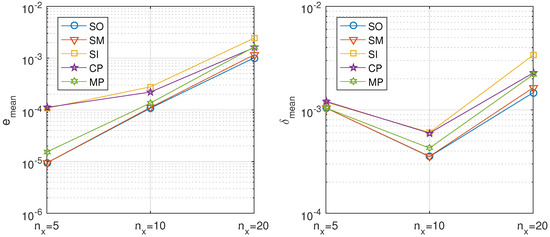

Figure 3. Diffusion problem, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with fourth order approximations for the spatial derivatives for different number of time steps.

Now, we analyze the influence of the number of spatial intervals on the convergence properties of the iterative splitting algorithms. The spatial step is reduced simultaneously in all three dimensions to keep the increments equal, because the method performs better in this case. The time step is small enough to ensure convergence for the smallest spatial subinterval, and it is the same in all cases, so that the comparison depends only on the spatial step.

For the second order scheme (see Table 5), the e error estimates are proportional to the number of spatial subintervals in each dimension, whereas the errors with respect to the analytical solution decrease as the spatial step decreases. The parallel methods approximate the analytical solution slightly less accurately and require more iterations than the serial iterative method. The modern parallel method needs more iterations per step to converge than the classical parallel method.

Table 5. Diffusion problem. Results of the directional decomposition using the second order scheme for the spatial discretization, with 320 temporal steps and different number of spatial subintervals.

For the fourth order scheme (see Table 6), the e error estimates are unaffected by the number of spatial subintervals, so convergence is maintained. The errors with respect to the analytical solution are proportional to a power of the spatial step of degree between 3 and 5, which greatly improves on the approximation of the second order scheme.

Table 6. Diffusion problem, directional decomposition. Results using the fourth order scheme for the spatial discretization, with 320 temporal steps and different number of subintervals in each dimension.

Figure 4 and Figure 5 present the results obtained by the analyzed splitting methods with different numbers of spatial steps, using the second and the fourth order schemes for the spatial discretization, respectively.

Figure 4. Diffusion problem, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with second order approximations for the spatial derivatives with 320 time steps and different number of spatial steps.

Figure 5. Diffusion problem, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with fourth order approximations for the spatial derivatives for different number of spatial steps.

- Balanced decomposition:

We decompose into:

Here, the matrices are equally load balanced, so that the exp-matrices have the same sparse structure. The results of the splitting algorithms with balanced decomposition are shown in Table 7 and Table 8 for the second and fourth order schemes for the spatial discretization, and in Figure 6 and Figure 7, respectively. The numerical behaviour is similar to that of the directional decomposition.

Table 7. Diffusion problem. Results of the balanced decomposition using the second order scheme for the spatial discretization, with 10 spatial subintervals and different number of temporal steps.

Table 8. Diffusion problem. Results of the balanced decomposition using the fourth order scheme for the spatial discretization, with 10 spatial subintervals and different number of temporal steps.

Figure 6. Diffusion problem, balanced decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with second order approximations for the spatial derivatives for different number of time steps.

Figure 7. Diffusion problem, balanced decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, with fourth order approximations for the spatial derivatives for different number of time steps.

- Mixed decomposition:

We decompose into:

where . For , we have the directional decomposition, while, for , we have the balanced decomposition.

Remark 6.

Based on the balanced decomposition with , the splitting approaches cause no difficulties and we obtain optimal results. For the pure unbalanced decomposition, which means , we decompose into different directions. Here, we have to restrict ourselves to the exact second order approach, which is .

Remark 7.

The classical and modern parallel iterative splitting methods are most beneficial when larger time steps are combined with more iterative steps. In this optimal configuration, they are much faster than the serial version and their results are also more accurate. For very fine time steps, we see no improvement in accuracy, but the parallel versions are still faster.

5.3. Third Example: Mixed Convection-Diffusion and Burgers’ Equation

We consider a partial differential equation that is a two-dimensional (2D) example of a mixed convection-diffusion and Burgers’ equation. Such mixed PDEs are used to model fluid flow problems in traffic or population dynamics, see [15,16,51]. To test the numerical methods, we consider a Burgers’ equation for which an analytical solution is known. The model problem is:

where , , and is the viscosity.

The analytical solution is given by

where we compute accordingly.

As in the previous example, denote, by , the numerical solution obtained using spatial subintervals of amplitude , time steps h and a tolerance of , allowing a maximum of 40 iterations. On the one hand, we compare the numerical solution with the exact one at every point of the mesh, which shows the convergence of the method. On the other hand, we compare it with the result obtained by halving the time step, h/2, at the points that are shared by the corresponding meshes. Denote by the difference between the results obtained using two different time steps, h and h/2, at a common mesh point , and by the difference with the analytical solution at the same point. In the tables, we use the error estimates given by

and

and the mean errors by

and

where N is the total number of nodes .
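The error measures above can be illustrated with a minimal sketch. It assumes that the solutions for the time steps h and h/2 and the analytical solution are already sampled at the N common mesh points, and that the mean errors are arithmetic means of the absolute differences. The function `error_measures` and its dictionary keys are hypothetical names, not taken from the authors' code.

```python
import numpy as np


def error_measures(u_h, u_h2, u_exact):
    """Error estimates on the N common mesh points.

    u_h     -- numerical solution computed with time step h
    u_h2    -- numerical solution computed with time step h/2, same points
    u_exact -- analytical solution at the same points
    """
    step_diff = np.abs(np.asarray(u_h) - np.asarray(u_h2))      # h versus h/2
    exact_diff = np.abs(np.asarray(u_h) - np.asarray(u_exact))  # versus analytical
    n_nodes = step_diff.size                                    # N
    return {
        "max_step": step_diff.max(),
        "max_exact": exact_diff.max(),
        "mean_step": step_diff.sum() / n_nodes,    # assumed arithmetic mean
        "mean_exact": exact_diff.sum() / n_nodes,
    }


# Hypothetical values on three common nodes
m = error_measures([1.0, 2.0, 3.0], [1.1, 2.0, 2.8], [1.0, 2.5, 3.0])
print(m)
```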

We measure the time consumed by processor l in each iteration m, in , in order to obtain the temporal cost of the parallel schemes. In the classical parallel scheme, the processors synchronize at each iteration, so the cost for this time interval is , whereas in the modern parallel scheme the processors iterate independently, each performing iterations until convergence, so the cost is . The final cost is obtained by adding the results of all time subintervals.
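One plausible reading of this cost model, sketched below under stated assumptions, is that the classical scheme pays, at every synchronized iteration, the time of the slowest processor, whereas the modern scheme pays only the largest accumulated time of a single processor. The functions and the data layout `times[l][m]` (processor l, iteration m) are hypothetical.

```python
import numpy as np


def classical_cost(times):
    """Synchronous scheme: times[l][m] for processor l and iteration m.

    All processors wait for the slowest one after every iteration, so the
    interval cost is the sum over iterations of the per-iteration maximum."""
    t = np.asarray(times, dtype=float)   # shape (processors, iterations)
    return float(t.max(axis=0).sum())


def modern_cost(times):
    """Asynchronous scheme: each processor iterates on its own until it
    converges (lists may have different lengths); the interval ends when the
    slowest processor has finished all of its iterations."""
    return float(max(sum(t_l) for t_l in times))


def total_cost(interval_costs):
    """Final cost: sum of the costs of all time subintervals."""
    return float(sum(interval_costs))


sync = classical_cost([[1.0, 2.0], [3.0, 1.0]])     # 3 + 2 = 5
asyn = modern_cost([[1.0, 2.0], [3.0, 1.0]])        # max(3, 4) = 4
print(sync, asyn, total_cost([sync, sync]))
```

When every processor performs the same iterations, the maximum of the sums never exceeds the sum of the maxima, so the asynchronous cost is at most the synchronous one; in practice the modern scheme may need more iterations, which can offset this gain.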

In the following, we discuss different decompositions of the multi-operator splitting approach:

- Directional decomposition

We decompose into the different directions (x and y direction):

Let us first analyze the influence of the viscosity parameter on the convergence of the algorithms.

Table 9 and Table 10 show the error estimates of the considered algorithms for different values of the viscosity, using the second and the fourth order discretization schemes, respectively, with 10 subintervals in each spatial dimension and 640 time steps. The results for the different algorithms are similar. As expected, higher viscosity values give lower error estimates.

Table 9.

Mixed convection-diffusion and Burgers’ equation. Results of the directional decomposition using the second order scheme for the spatial discretization, with 10 spatial subintervals, 640 temporal intervals and different values of the viscosity.

Table 10.

Mixed convection-diffusion and Burgers’ equation. Results of the directional decomposition using the fourth order scheme for the spatial discretization, with 10 spatial subintervals, 640 temporal intervals and different values of the viscosity.

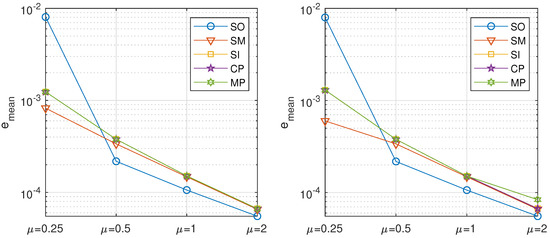

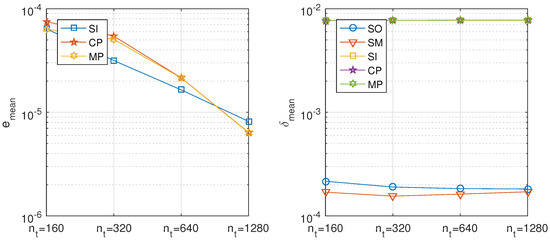

Figure 8 shows how the error estimates of the different algorithms depend on the viscosity, using second and fourth order approximations for the spatial derivatives. In contrast with the former example, higher order approximations for the spatial derivatives produce only a slight improvement in the error estimates, which are of the same order with respect to the time step in both cases.

Figure 8.

Mixed convection-diffusion and Burgers’ equation, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different viscosities. Left: order 2 approximation; right: order 4 approximation for the discretization of the spatial derivatives.

As we can observe, the mean error is approximately proportional to the inverse of the viscosity and is not much affected by the order of approximation of the spatial derivatives. The number of iterations and the execution time are not affected by the viscosity changes, except for one viscosity value in the modern parallel algorithm, where the maximum number of iterations is reached in each step, which also increases the execution time. In what follows, we fix the viscosity parameter and use second order approximations for the spatial derivatives.

We will now analyze the influence of the number of time steps on the convergence of the algorithms.

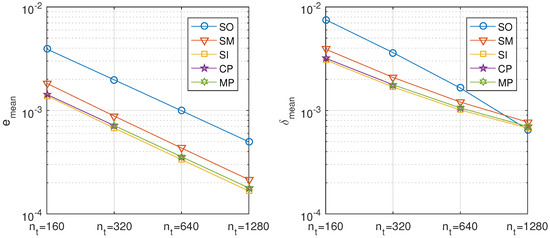

Table 11 shows that the estimated error is roughly proportional to the time step, although, for the smallest time steps, the differences with the analytical solution decrease more slowly because of the discretization error. All of the considered methods have errors of the same order. The sequential operator splitting method presents higher estimates than the iterative schemes for the maximum error, but lower estimates for the mean error, as seen in Figure 9.

Table 11.

Mixed convection-diffusion and Burgers’ equation. Results of the directional decomposition with 10 spatial subintervals and different number of temporal steps.

Figure 9.

Mixed convection-diffusion and Burgers’ equation, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of time steps.

Now, we analyze the dependence on the number of spatial subintervals. Table 12 displays the errors obtained by varying the number of spatial subintervals, with 1280 time steps, the fixed viscosity and tolerance, and a maximum of 40 iterations.

Table 12.

Mixed convection-diffusion and Burgers’ equation. Results of the directional decomposition using the second order scheme for the spatial discretization, with 1280 time subintervals and different number of spatial steps.

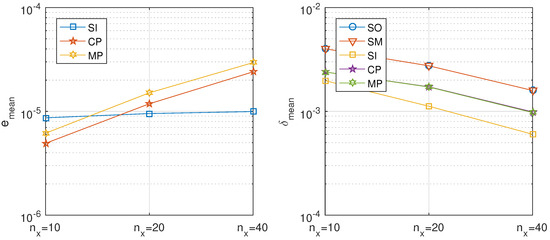

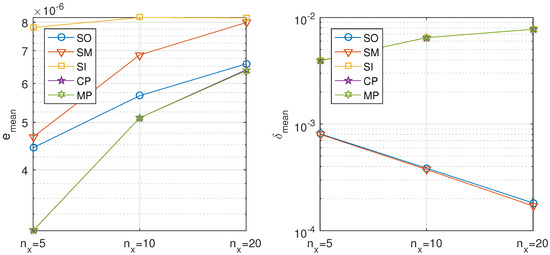

Figure 10 shows, on the left, that the convergence properties degrade as the number of spatial steps increases for a fixed time step and, on the right, that the best approximations are obtained with 10 subintervals in each spatial dimension. The error increase for more spatial intervals may be due to the condition number of the exponential matrix used to solve the differential system, which grows quickly with the number of spatial intervals and makes the solution less reliable.
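This explanation can be checked on a simple stand-in problem. The sketch assumes a 1D diffusion matrix with unit viscosity and homogeneous Dirichlet conditions, not the 2D convection-diffusion and Burgers’ operator of this example, and an assumed time step h = 1/1280 (1280 steps on a unit time interval). For a symmetric matrix A, exp(hA) is obtained from the eigendecomposition of A, and its condition number equals exp(h(λ_max − λ_min)), which grows roughly like exp(4h/Δx²) as the grid is refined.

```python
import numpy as np


def diffusion_matrix(n, nu=1.0):
    """Stand-in operator: nu * second difference on n interior nodes of (0, 1)."""
    dx = 1.0 / (n + 1)
    off = np.ones(n - 1)
    return nu * (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / dx**2


def expm_symmetric(a, h):
    """exp(h*A) for symmetric A from the eigendecomposition A = V diag(lam) V^T."""
    lam, v = np.linalg.eigh(a)
    return (v * np.exp(h * lam)) @ v.T


h = 1.0 / 1280                      # assumed: 1280 steps on a unit time interval
conds = []
for n in (10, 20, 40, 80):
    a = diffusion_matrix(n)
    conds.append(np.linalg.cond(expm_symmetric(a, h)))
    lam = np.linalg.eigvalsh(a)
    # For symmetric A: cond(exp(hA)) = exp(h * (lam_max - lam_min))
    print(n, conds[-1], np.exp(h * (lam.max() - lam.min())))
```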

Figure 10.

Mixed convection-diffusion and Burgers’ equation, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of spatial steps in the directional decomposition.

- Convection and diffusion decomposition

Here, we decompose the operator into an explicit part, the convection, and an implicit part, the diffusion.
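To illustrate this decomposition, the sketch below performs one time step of a waveform-relaxation type iterative splitting in which the diffusion operator D is treated implicitly and the convection operator C is taken from the previous iterate. It is a simplified stand-in, not the authors' scheme: the operators are linear 1D finite-difference matrices (upwind convection with speed a = 1, viscosity 0.1), each subproblem is solved with a single implicit Euler step instead of matrix exponentials, and all names are hypothetical. The cap of 40 iterations mirrors the setting used in this example.

```python
import numpy as np


def stand_in_operators(n, nu=0.1, a=1.0):
    """Hypothetical 1D operators on n interior nodes of (0, 1), zero Dirichlet data:
    D = nu * u_xx (central differences), C = -a * u_x (upwind, a > 0)."""
    dx = 1.0 / (n + 1)
    off = np.ones(n - 1)
    lap = (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / dx**2
    upwind = (np.eye(n) - np.diag(off, -1)) / dx
    return nu * lap, -a * upwind


def iterative_splitting_step(c_n, D, C, h, tol=1e-10, max_iter=40):
    """One time step of c_i = (I - h D)^{-1} (c_n + h C c_{i-1}), c_0 = c_n.

    Diffusion D is implicit; convection C uses the previous iterate (explicit).
    Each subproblem is discretized with one implicit Euler step (assumption)."""
    M = np.eye(len(c_n)) - h * D
    c_prev = c_n.copy()
    for i in range(1, max_iter + 1):
        c_new = np.linalg.solve(M, c_n + h * (C @ c_prev))
        converged = np.max(np.abs(c_new - c_prev)) < tol
        c_prev = c_new
        if converged:
            break
    return c_prev, i


n, h = 20, 0.01
D, C = stand_in_operators(n)
x = np.linspace(0.0, 1.0, n + 2)[1:-1]
c0 = np.sin(np.pi * x)
c1, iters = iterative_splitting_step(c0, D, C, h)
# The fixed point is one implicit Euler step of the full operator D + C.
ref = np.linalg.solve(np.eye(n) - h * (D + C), c0)
print(iters, np.max(np.abs(c1 - ref)))
```

When the iteration converges, its fixed point satisfies (I − h(D + C)) c = c_n, so it coincides with one implicit Euler step of the unsplit operator, which the last lines check.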

Table 13 analyzes the convergence of the different splitting methods for the convection-diffusion decomposition, varying the time step. The errors are proportional to the time step, as shown in the log-log diagrams in Figure 11. The iterative methods are slightly more accurate than the reference methods, in particular than the sequential operator splitting method. The modern parallel version does not converge for 160 time steps.
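The proportionality between error and time step read from the log-log diagrams can be quantified by the observed order of convergence. A minimal sketch, assuming errors recorded for successively halved time steps; the data below are synthetic and serve only to exercise the functions, whose names are hypothetical.

```python
import numpy as np


def observed_orders(errors):
    """Observed orders p_k = log2(e_k / e_{k+1}) for errors obtained with
    successively halved time steps h, h/2, h/4, ..."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])


def loglog_slope(steps, errors):
    """Least-squares slope of log(error) versus log(step), as in a log-log plot."""
    return np.polyfit(np.log(steps), np.log(errors), 1)[0]


# Synthetic first-order data e = 3 h (illustration only, not values from the tables)
steps = np.array([1 / 160, 1 / 320, 1 / 640, 1 / 1280])
errors = 3.0 * steps
print(observed_orders(errors), loglog_slope(steps, errors))
```

An order close to 1 corresponds to errors proportional to the time step.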

Table 13.

Mixed convection-diffusion and Burgers’ equation. Results for the convection-diffusion decomposition using the second order scheme for the spatial discretization with 10 spatial subintervals and different number of temporal steps.

Figure 11.

Mixed convection-diffusion and Burgers’ equation, convection-diffusion decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of time steps.

Table 14 analyzes the dependence on the spatial step. The behavior is similar to that of the directional decomposition: the estimated error increases with the number of spatial subintervals for a fixed time step. For the second order scheme, the -errors decrease with the space step. The temporal cost is relatively high for 20 subintervals because of the computational overhead of dealing with large matrices.

Table 14.

Mixed convection-diffusion and Burgers’ equation. Results for the convection-diffusion decomposition using the second order scheme for the spatial discretization with 10 temporal steps and different number of spatial subintervals.

Figure 12 compares the estimated mean errors of the different methods with the convection-diffusion decomposition and second order approximation of the spatial derivatives for different numbers of spatial steps. The sequential operator splitting has poorer convergence properties than the other methods, and its result deviates more from the analytical solution as the number of spatial nodes increases.

Figure 12.

Mixed convection-diffusion and Burgers’ equation, convection-diffusion decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of spatial steps.

- Balanced decomposition

We decompose into:

where is an arbitrary parameter that can be tuned in order to achieve the maximum efficiency.

We first examine the influence of the parameter on the convergence of the different splitting schemes (see Table 15). The method behaves in the same way for parameter values symmetric with respect to 0.5. The results are quite uniform for in the range , whereas, for other parameter values, the method may diverge. The classical parallel algorithm yields results for , whereas the serial and the modern parallel iterative methods fail for .

Table 15.

Mixed convection-diffusion and Burgers’ equation, balanced decomposition. Results for the balanced decomposition using the second order scheme for the spatial discretization with 640 temporal subintervals and different values of parameter .

The dependence of the algorithms with the balanced decomposition on the time and space steps is similar to that of the previously analyzed decompositions. Table 16 compares the behavior of the considered methods with different decompositions.

Table 16.

Mixed convection-diffusion and Burgers’ equation with 10 spatial intervals and 640 temporal steps. Results of the different splitting methods with directional decomposition, D, convection-diffusion decomposition, CD, and -balanced decomposition, B.

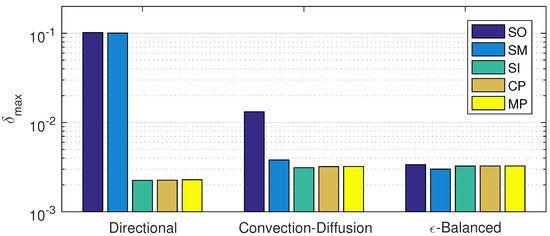

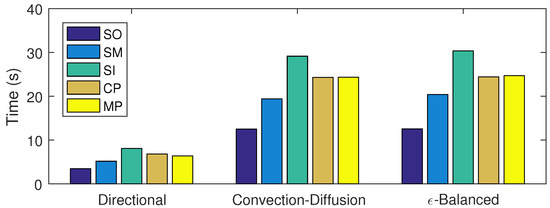

Figure 13 shows that the non-iterative splitting methods give better results with the -balanced decomposition, whereas the iterative methods give similar results with all decompositions, with slightly better results for the directional decomposition.

Figure 13.

Mixed convection-diffusion and Burgers’ equation with 10 spatial intervals and 1280 temporal steps. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different decomposition methods.

Figure 14 compares the temporal costs shown in Table 16. The results indicate that the directional decomposition is less costly than the convection-diffusion decomposition and the -balanced decomposition for all the considered splitting algorithms.

Figure 14.

Mixed convection-diffusion and Burgers’ equation with 10 spatial intervals and 640 time steps. Temporal cost in seconds of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different decomposition methods.

5.4. Fourth Example: Fractional Diffusion Problem

We deal with the following fractional diffusion problem, see also [27]:

where we have the analytical solution , with and and with . and , .

In operator notation, we write:

where and we assume that the Dirichlet boundary conditions are embedded into the operators.

We use the normalized Grünwald weights:

for the right-shifted Grünwald formula, see [32].
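The normalized Grünwald weights g_k = (−1)^k binom(α, k) satisfy a simple recursion, and the shifted Grünwald formula assembles them into a lower Hessenberg matrix. The sketch below assumes the standard shifted Grünwald formula for a left-sided derivative of order α with zero Dirichlet values, which we take to correspond to the right-shifted formula cited above; the function names are hypothetical. For α = 2 the weights are 1, −2, 1, 0, ..., so the matrix reduces to the standard second difference, which is a convenient check.

```python
import numpy as np


def grunwald_weights(alpha, m):
    """g_k = (-1)^k * binom(alpha, k), k = 0..m-1, from the stable recursion
    g_0 = 1, g_k = (1 - (alpha + 1) / k) * g_{k-1}."""
    g = np.empty(m)
    g[0] = 1.0
    for k in range(1, m):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    return g


def shifted_grunwald_matrix(alpha, n, dx):
    """Shifted Grünwald approximation on interior nodes x_1..x_n,
    d^alpha u/dx^alpha (x_p) ~ dx^(-alpha) * sum_{k=0}^{p+1} g_k u_{p-k+1},
    with zero Dirichlet values u_0 = u_{n+1} = 0 (0-based row i = p - 1)."""
    g = grunwald_weights(alpha, n + 1)
    A = np.zeros((n, n))
    for i in range(n):
        for j in range(min(i + 2, n)):   # columns up to the right neighbour
            A[i, j] = g[i - j + 1]
    return A / dx**alpha


print(grunwald_weights(1.5, 4))          # weights for alpha = 1.5
```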

We apply:

In order to establish the convergence of the algorithms, we compute the solution using spatial and temporal steps and , respectively. We use different measures to estimate the convergence. On the one hand, we compare the outcome of the method with the exact solution at every point of the mesh, which shows the convergence of the method. On the other hand, we can compare it with the result obtained by halving the time or space steps, , at the final time . Denote, by , the difference between the results at a mesh point , obtained using two different time steps, h and h/2, and by the difference with the analytical solution at the same point. In the tables, we denote the maximum errors by

and

and the mean errors by

and

where N is the number of spatial nodes at time T.

In the following, we discuss different decompositions of the multi-operator splitting approach:

- Directional decomposition:

We decompose into the different directions:

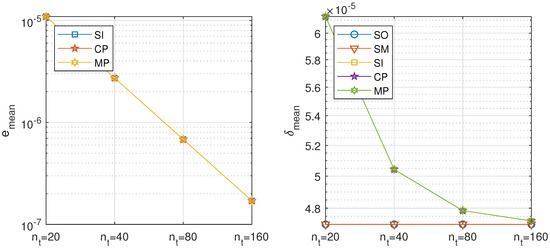

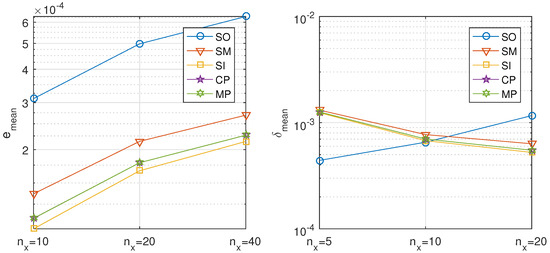

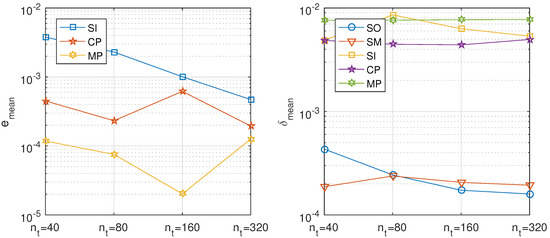

The directional decomposition allows us to obtain the solution by solving linear systems of size , where is the number of spatial subintervals. Table 17 shows the results of the considered splitting algorithms for 20 spatial subintervals and different time steps, allowing a maximum of three iterations and using tolerance . Figure 15 shows, on the left, that the parallel iterative methods perform slightly better than the serial iterative method and, on the right, the approximation to the analytical solution for different numbers of time steps.
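The reason the directional decomposition leads to small linear systems can be seen from the Kronecker structure of the split operators. This is a sketch under stated assumptions: a 2D grid with n interior nodes per direction, unknowns ordered with x fastest, and a 1D second difference standing in for the fractional (Grünwald) matrix; all names are hypothetical. An implicit step with the x-part, I ⊗ T, is block diagonal, so it decouples into n independent systems of size n, one per grid line; the same holds for the y-part, T ⊗ I.

```python
import numpy as np


def second_difference(n, dx):
    """Stand-in 1D operator T on n interior nodes (a Grünwald matrix could be used)."""
    off = np.ones(n - 1)
    return (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / dx**2


n, h = 8, 0.01
dx = 1.0 / (n + 1)
T = second_difference(n, dx)
I = np.eye(n)
A_x = np.kron(I, T)      # x-part; unknown (x, y) stored at index y * n + x
A_y = np.kron(T, I)      # y-part

rng = np.random.default_rng(0)
rhs = rng.standard_normal(n * n)
R = rhs.reshape(n, n)    # R[y, x]

# Implicit x-step: full n^2 x n^2 system versus n systems of size n (one per line y)
u_full = np.linalg.solve(np.eye(n * n) - h * A_x, rhs)
u_lines = np.linalg.solve(I - h * T, R.T).T.reshape(-1)

# Implicit y-step: the same decoupling along the lines x = const
u_full_y = np.linalg.solve(np.eye(n * n) - h * A_y, rhs)
u_lines_y = np.linalg.solve(I - h * T, R).reshape(-1)

print(np.max(np.abs(u_full - u_lines)), np.max(np.abs(u_full_y - u_lines_y)))
```

Solving n small systems instead of one large system is what keeps the cost of each directional subproblem low.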

Table 17.

Fractional diffusion equation. Results for the directional decomposition with 20 spatial subintervals and different number of temporal steps.

Figure 15.

Fractional diffusion equation, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of time steps.

Table 18 shows the results of the considered splitting algorithms for 320 time steps and different numbers of spatial subintervals, allowing a maximum of three iterations and using tolerance . Figure 16 presents the results graphically.

Table 18.

Fractional diffusion equation. Results for the directional decomposition with 320 time steps and different number of spatial subintervals.

Figure 16.

Fractional diffusion equation, directional decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for 320 temporal steps and different number of spatial subintervals.

- Balanced decomposition:

We decompose into:

Here, the matrices are equally load balanced, so that the exp-matrices have the same sparse structure.

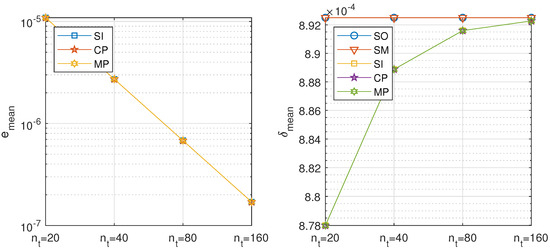

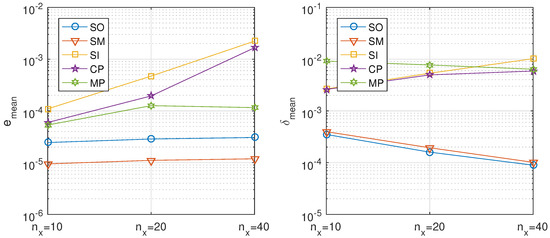

Table 19 shows the results of the considered splitting algorithms for 20 spatial subintervals and different time steps, allowing a maximum of three iterations and using tolerance . Figure 17 shows, on the left, that the parallel iterative methods perform slightly better than the serial iterative method and, on the right, the approximation to the analytical solution for different number of time steps.

Table 19.

Fractional diffusion equation. Results for the balanced decomposition with 20 spatial subintervals and different number of temporal steps.

Figure 17.

Fractional diffusion equation, balanced decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for different number of time steps.

Table 20 shows the results of the considered splitting algorithms for 320 time steps and different number of spatial subintervals, allowing a maximum of three iterations and using tolerance . Figure 18 graphically presents the results.

Table 20.

Fractional diffusion equation. Results for the balanced decomposition with 320 time steps and different number of spatial subintervals.

Figure 18.

Fractional diffusion equation, balanced decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for 320 temporal steps and different number of spatial subintervals.

- Mixed decomposition

We decompose into the different directions:

where . For , we have the directional decomposition, while, for , we have the balanced decomposition.

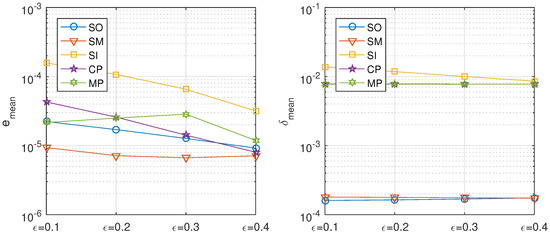

Table 21 shows the influence of on the convergence of the different splitting methods with mixed decomposition for the fractional diffusion problem using 20 subintervals in each spatial direction and 320 time steps. The same information can be seen in Figure 19.

Table 21.

Fractional diffusion equation. Results for the mixed decomposition with 20 subintervals in each spatial direction, 320 time steps, and different values of .

Figure 19.

Fractional diffusion equation, mixed decomposition. Precision of the proposed methods: sequential iterative SI, classical parallel CP, and modern parallel MP, compared with the classical ones: sequential operator SO and Strang–Marchuk SM, for 20 subintervals in each spatial direction, 320 temporal steps, and different values of .

Remark 8.

For the fractional diffusion model, we obtain results similar to those of the previous diffusion and Burgers’ equation examples. We can stabilize the schemes with respect to the decomposition parameter and obtain a good load balance of the matrices. Since we have developed a stable novel parallel solver scheme, it could be applied to real-life problems involving fractional differential equations.

6. Conclusions

In this paper, we have discussed extensions of the iterative splitting approaches to parallel solver methods. These novel methods reduce the computational time while achieving the same accuracy as the serial version. The improvements are obtained with larger time steps and additional iterative steps, whose computational time is reduced by the parallel methods. The benefit, of course, is the distribution of the work over multiple processors with additional memory. Furthermore, these resources can be used to improve the accuracy of the approximations with additional iterative steps. We circumvent the memory problem of the algorithm (see [38]) that arises if we only apply a serial method: with the parallel distribution, each processor performs additional iterative steps, and this memory is distributed over all processors. For large scale numerical experiments, we could show the benefit of the parallel resources.

With the proposed iterative methods, we gain accuracy by applying more iterative cycles, which requires additional computational time. Owing to the parallel approach, we can reduce the computational cost more than with serial iterative methods. On the other hand, it is important to optimize the parallel workload with additional adaptive and distributed ideas that improve the efficiency of the proposed parallel methods.

In summary, parallel iterative splitting methods allow us to reduce the computational time and to improve the accuracy of the results. In the future, we will consider more real-life problems and stochastic processes. Furthermore, we will extend the parallel iterative methods to more efficient adaptive schemes.

Author Contributions

The theory, the formal analysis and the methodology presented in this paper were developed by J.G. The software development and the numerical validation of the methods were done by J.L.H. and E.M. The paper was written by J.G., J.L.H. and E.M. and was corrected and edited by J.G., J.L.H. and E.M. The writing–review was done by J.G., J.L.H. and E.M. The supervision and project administration were done by E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by Ministerio de Economía y Competitividad, Spain, under grant PGC2018-095896-B-C21-C22 and German Academic Exchange Service grant number 91588469.

Acknowledgments

We acknowledge support by the DFG Open Access Publication Funds of the Ruhr-Universität of Bochum, Germany.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Farago, I.; Geiser, J. Iterative Operator-Splitting methods for Linear Problems. Int. J. Comput. Sci. Eng. 2007, 3, 255263. [Google Scholar] [CrossRef]

- Geiser, J. Iterative Splitting Methods for Differential Equations; Numerical Analysis and Scientific Computing Series; Taylor & Francis Group: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2011. [Google Scholar]

- Frommer, A.; Szyld, D.B. On asynchronous iterations. J. Comput. Appl. Math. 2000, 123, 201–216. [Google Scholar] [CrossRef]

- O’Leary, D.P.; White, R.E. Multi-splittings of matrices and parallel solution of linear systems. Siam Algebr. Discret. Methods 1985, 6, 630–640. [Google Scholar] [CrossRef]

- White, R.E. Parallel algorithms for nonlinear problems. Siam Algebr. Discret. Methods 1986, 7, 137–149. [Google Scholar] [CrossRef]

- Geiser, J. Iterative Splitting Methods for Coulomb Collisions in Plasma Simulations. arXiv 2017, arXiv:1706.06744. [Google Scholar]

- Geiser, J. Picard’s iterative method for nonlinear multicomponent transport equations. Cogent Math. 2016, 3, 1158510. [Google Scholar] [CrossRef]

- Miekkala, U.; Nevanlinna, O. Convergence of dynamic iteration methods for initial value problems. SIAM J. Sci. Stat. Comput. 1987, 8, 459–482. [Google Scholar] [CrossRef]

- Miekkala, U.; Nevanlinna, O. Iterative solution of systems of linear differential equations. Acta Numer. 1996, 5, 259–307.

- Vandewalle, S. Parallel Multigrid Waveform Relaxation for Parabolic Problems; Teubner Skripten zur Numerik, B.G. Teubner Stuttgart; Springer: Berlin/Heidelberg, Germany, 1993.

- Geiser, J. Multicomponent and Multiscale Systems: Theory, Methods, and Applications in Engineering; Springer: Berlin/Heidelberg, Germany, 2016.

- Geiser, J. Multi-stage waveform Relaxation and Multisplitting Methods for Differential Algebraic Systems. arXiv 2016, arXiv:1601.00495.

- Geiser, J. Iterative operator-splitting methods for nonlinear differential equations and applications. Numer. Methods Partial. Differ. Equ. 2011, 27, 1026–1054.

- He, D.; Pan, K.; Hu, H. A spatial fourth-order maximum principle preserving operator splitting scheme for the multi-dimensional fractional Allen-Cahn equation. Appl. Numer. Math. 2020, 151, 44–63.

- Haberman, R. Mathematical Models: Mechanical Vibrations, Population Dynamics, and Traffic Flow; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1998.

- Orlandi, P. (Ed.) The Burgers equation. In Fluid Flow Phenomena: A Numerical Toolkit; Springer: Dordrecht, The Netherlands, 2000; pp. 40–50.

- Giona, M.; Cerbelli, S.; Roman, H.E. Fractional diffusion equation and relaxation in complex viscoelastic materials. Physica A 1992, 191, 449–453.

- Nigmatullin, R.R. The realization of the generalized transfer equation in a medium with fractal geometry. Phys. Stat. Sol. B 1986, 133, 425–430.

- Allen, S.M.; Cahn, J.W. A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metall. 1979, 27, 1085–1095.

- Yue, P.; Feng, J.; Liu, C.; Shen, J. Diffuse-interface simulations of drop coalescence and retraction in viscoelastic fluids. J. Non-Newton. Fluid Mech. 2005, 129, 163–176.

- Sommacal, L.; Melchior, P.; Oustaloup, A.; Cabelguen, J.-M.; Ijspeert, A.J. Fractional Multi-models of the Frog Gastrocnemius Muscle. J. Vib. Control. 2008, 14, 1415–1430.

- Moshrefi-Torbati, M.; Hammond, J.K. Physical and geometrical interpretation of fractional operators. J. Frankl. Inst. 1998, 335, 1077–1086.

- El-Nabulsi, R.A. Fractional Dirac operators and deformed field theory on Clifford algebra. Chaos Solitons Fractals 2009, 42, 2614–2622.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations. In North-Holland Mathematics Studies, 1st ed.; Elsevier: Amsterdam, The Netherlands, 2006; Volume 204.

- Kanney, J.; Miller, C.; Kelley, C.T. Convergence of iterative split-operator approaches for approximating nonlinear reactive transport problems. Adv. Water Resour. 2003, 26, 247–261.

- Geiser, J.; Hueso, J.L.; Martinez, E. Adaptive Iterative Splitting Methods for convection-diffusion-reaction equations. Mathematics 2020, 8, 302.

- Meerschaert, M.M.; Scheffler, H.P.; Tadjeran, C. Finite difference methods for two-dimensional fractional dispersion equation. J. Comput. Phys. 2006, 211, 249–261.

- Argyros, I.K.; Regmi, S. Undergraduate Research at Cameron University on Iterative Procedures in Banach and Other Spaces; Nova Science Publisher: New York, NY, USA, 2019.

- Cresson, J.; Inizan, P. Irreversibility, Least Action Principle and Causality. Preprint, HAL, 2008. Available online: https://hal.archives-ouvertes.fr/hal-00348123v1 (accessed on 11 April 2020).

- Cresson, J. Fractional embedding of differential operators and Lagrangian systems. J. Math. Phys. 2007, 48, 033504.

- Gustafsson, B. High Order Difference Methods for Time Dependent PDE; Springer Series in Computational Mathematics; Springer: Berlin/Heidelberg, Germany, 2007; Volume 38.

- Meerschaert, M.M.; Tadjeran, C. Finite difference approximations for fractional advection–dispersion flow equations. J. Comput. Appl. Math. 2003, 172, 65–77.

- Geiser, J. Computing Exponential for Iterative Splitting Methods: Algorithms and Applications. J. Appl. Math. 2011, 2011, 193781.

- Geiser, J. Iterative Operator-Splitting Methods with Higher Order Time-Integration Methods and Applications for Parabolic Partial Differential Equations. J. Comput. Appl. Math. 2008, 217, 227–242.

- Ladics, T. Error analysis of waveform relaxation method for semi-linear partial differential equations. J. Comput. Appl. Math. 2015, 285, 15–31.

- Kelley, C.T. Iterative Methods for Linear and Nonlinear Equations; SIAM Frontiers in Applied Mathematics, no. 16; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1995.

- Yuan, D.; Burrage, K. Convergence of the parallel chaotic waveform relaxation method for stiff systems. J. Comput. Appl. Math. 2003, 151, 201–213.

- Ladics, T.; Farago, I. Generalizations and error analysis of the iterative operator splitting method. Cent. Eur. J. Math. 2013, 11, 1416–1428.

- Moler, C.B.; Van Loan, C.F. Nineteen dubious ways to compute the exponential of a matrix, twenty-five years later. SIAM Rev. 2003, 45, 3–49.

- Najfeld, I.; Havel, T.F. Derivatives of the matrix exponential and their computation. Adv. Appl. Math. 1995, 16, 321–375.

- Hochbruck, M.; Ostermann, A. Exponential integrators. Acta Numer. 2010, 19, 209–286.

- Casas, F.; Iserles, A. Explicit Magnus expansions for nonlinear equations. J. Phys. A Math. Gen. 2006, 39, 5445–5461.

- Magnus, W. On the exponential solution of differential equations for a linear operator. Commun. Pure Appl. Math. 1954, 7, 649–673.

- Stoer, J.; Bulirsch, R. Introduction to Numerical Analysis; Texts in Applied Mathematics No.12; Springer: New York, NY, USA, 2002.

- Jeltsch, R.; Pohl, B. Waveform Relaxation with Overlapping Splittings. SIAM J. Sci. Comput. 1995, 16, 40–49.

- Farago, I. A modified iterated operator-splitting method. Appl. Math. Model. 2008, 32, 1542–1551.

- Hairer, E.; Lubich, C.; Wanner, G. Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations; Springer: Berlin-Heidelberg, Germany; New York, NY, USA, 2002.

- Li, J.; Jiang, Y.-L.; Miao, Z. A parareal approach of semi-linear parabolic equations based on general waveform relaxation. Numer. Methods Partial. Differ. Equ. 2019, 35, 2017–2034.

- Trotter, H.F. On the product of semi-groups of operators. Proc. Am. Math. Soc. 1959, 10, 545–551.

- Strang, G. On the construction and comparison of difference schemes. SIAM J. Numer. Anal. 1968, 5, 506–517.

- Geiser, J. Operator-Splitting Methods in Respect of Eigenvalue Problems for Nonlinear Equations and Applications to Burgers Equations. J. Comput. Appl. Math. 2009, 231, 815–827.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).