Abstract

This research paper proposes a derivative-free method for solving systems of nonlinear equations with closed and convex constraints, where the functions under consideration are continuous and monotone. Given an initial iterate, the process first generates a specific direction and then employs a line search strategy along the direction to calculate a new iterate. If the new iterate solves the problem, the process stops. Otherwise, the projection of the new iterate onto the closed convex set (constraint set) determines the next iterate. In addition, the direction satisfies the sufficient descent condition, and the global convergence of the method is established under suitable assumptions. Finally, some numerical experiments are presented to show the performance of the proposed method in solving nonlinear equations and its application in image recovery problems.

Keywords: nonlinear monotone equations; conjugate gradient method; projection method; signal processing

MSC: 65K05; 90C52; 90C56; 92C55

1. Introduction

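In this paper, we consider the following constrained nonlinear equation

$$F(x) = 0, \quad x \in \Psi, \tag{1}$$

where $F:\mathbb{R}^n \to \mathbb{R}^n$ is continuous and monotone. The constraint set $\Psi \subseteq \mathbb{R}^n$ is nonempty, closed and convex.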

Monotone equations appear in many applications [1,2,3], for example, the subproblems in the generalized proximal algorithms with Bregman distance [4], the reformulation of some $\ell_1$-norm regularized problems arising in compressive sensing [5], and variational inequality problems that are converted into nonlinear monotone equations via fixed point maps or normal maps [6] (see References [7,8,9] for more examples). Among the earliest methods for the unconstrained case $\Psi = \mathbb{R}^n$ is the hyperplane projection Newton method proposed by Solodov and Svaiter in Reference [10]. Subsequently, many methods were proposed by different authors. Among the popular ones are spectral gradient methods [11,12], quasi-Newton methods [13,14,15] and conjugate gradient (CG) methods [16,17].

To solve the constrained case (1), the work of Solodov and Svaiter was extended by Wang et al. [18]. Their method also involves solving a linear system in each iteration, but it was later shown by several authors that solving the linear system is not necessary. For example, Xiao and Zhu [19] presented a CG method that combines the well-known CG-DESCENT method in Reference [20] with the projection strategy of Solodov and Svaiter. Liu et al. [21] presented two CG methods with sufficient descent directions. In Reference [22], Liu and Li presented a modified version of the method in Reference [19]; the modification improves its numerical performance. Another extension, combining the Dai and Kou (DK) CG method with the projection method to solve (1), was proposed by Ding et al. in Reference [23]. More recently, to popularize the Dai-Yuan (DY) CG method, Liu and Feng [24] modified the DY method such that the direction satisfies the sufficient descent condition. A new hybrid spectral gradient projection method for solving convex constrained nonlinear monotone equations was proposed by Awwal et al. in Reference [25]. The method uses a convex combination of two different positive spectral parameters together with the projection strategy. In addition, Abubakar et al. extended the method in Reference [17] to solve (1) and also to solve some sparse signal recovery problems.

Inspired by some of the above methods, we propose a descent conjugate gradient method to solve problem (1). Under appropriate assumptions, the global convergence is established. Preliminary numerical experiments are given to compare the proposed method with existing methods for solving nonlinear monotone equations and some signal and image reconstruction problems arising from compressive sensing.

The remaining part of this paper is organized as follows. In Section 2, we state the proposed algorithm as well as its convergence analysis. Finally, Section 3 reports some numerical results to show the performance of the proposed method in solving Equation (1), signal recovery problems and image restoration problems.

2. Algorithm: Motivation and Convergence Result

This section starts by defining the projection map together with some of its properties.

Definition 1.

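Let $\Psi \subset \mathbb{R}^n$ be a nonempty closed convex set. Then for any $x \in \mathbb{R}^n$, its projection onto Ψ, denoted by $P_\Psi(x)$, is defined by

$$P_\Psi(x) = \arg\min\{\|x - y\| : y \in \Psi\}.$$

Moreover, $P_\Psi$ is nonexpansive, that is,

$$\|P_\Psi(x) - P_\Psi(y)\| \le \|x - y\|, \quad \forall\, x, y \in \mathbb{R}^n. \tag{2}$$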
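To make the projection map concrete, the following minimal sketch assumes the constraint set is a box (a hypothetical choice; the definition covers any nonempty closed convex set), for which the Euclidean projection reduces to componentwise clipping. The helper names `project_box`, `norm` and `diff` are illustrative only. The last lines check the nonexpansive property on one pair of points.

```python
import math

def project_box(x, lower, upper):
    """Euclidean projection of x onto the box {y : lower <= y_i <= upper}."""
    return [min(max(xi, lower), upper) for xi in x]

def norm(v):
    """Euclidean norm of a vector stored as a list."""
    return math.sqrt(sum(vi * vi for vi in v))

def diff(a, b):
    """Componentwise difference a - b."""
    return [ai - bi for ai, bi in zip(a, b)]

x = [-1.5, 0.3, 2.0]
y = [0.5, -0.2, 0.9]
px = project_box(x, 0.0, 1.0)  # clips each component into [0, 1]
py = project_box(y, 0.0, 1.0)

# Nonexpansiveness: the projected points are no farther apart than x and y.
lhs = norm(diff(px, py))
rhs = norm(diff(x, y))
print(lhs <= rhs)
```

For sets without a closed-form projection, the same role would be played by whatever routine solves the minimum-distance problem in the definition.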

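Throughout this article, we assume the following:

- () The mapping F is monotone, that is,

$$(F(x) - F(y))^T (x - y) \ge 0, \quad \forall\, x, y \in \mathbb{R}^n.$$

- () The mapping F is Lipschitz continuous, that is, there exists a positive constant L such that

$$\|F(x) - F(y)\| \le L \|x - y\|, \quad \forall\, x, y \in \mathbb{R}^n.$$

- () The solution set of (1), denoted by , is nonempty.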

An important property that methods for solving Equation (1) must possess is that the direction $d_k$ satisfies

$$F(x_k)^T d_k \le -c \|F(x_k)\|^2, \tag{3}$$

where $c > 0$ is a constant. The inequality (3) is called the sufficient descent property if $F(x)$ is the gradient vector of a real-valued function $f:\mathbb{R}^n \to \mathbb{R}$.
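As a quick sanity check of what a condition of this type asks for, assume inequality (3) has the usual form $F(x_k)^T d_k \le -c\|F(x_k)\|^2$ (the symbols $x_k$, $d_k$ and $c$ are assumed notation here). The simplest direction that satisfies it is the negative residual. This is only an illustration and is not the direction proposed in this paper:

```latex
d_k = -F(x_k)
\;\Longrightarrow\;
F(x_k)^T d_k = -\|F(x_k)\|^2 \le -c\,\|F(x_k)\|^2
\quad \text{for any } 0 < c \le 1 .
```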

In this paper, we propose the following search direction

where

and is determined such that Equation (3) is satisfied. It is easy to see that for , the equation holds with . Now for ,

Taking we have

Thus, the direction defined by (4) satisfies condition (3) where .

To prove the global convergence of Algorithm 1, the following lemmas are needed.

Algorithm 1: (DCG)

Step 0. Given an arbitrary initial point , parameters , , and set .

Step 1. If , stop, otherwise go to Step 2.

Step 2. Compute using Equation (4).

Step 3. Compute the step size such that

Step 4. Set . If and , stop. Else compute where

Step 5. Let and go to Step 1.
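The overall flow of Algorithm 1 (direction, line search, hyperplane projection step, projection onto the constraint set) can be sketched as follows. This is a hedged illustration, not the authors' DCG implementation: the direction is the plain negative residual rather than Equation (4), the backtracking rule and the parameter names `sigma`, `rho` and `tol` are assumptions, and the test mapping (componentwise `exp(x) - 1` on the nonnegative orthant) is a hypothetical example.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(v):
    return math.sqrt(dot(v, v))

def projection_method(F, project, x0, sigma=1e-4, rho=0.5, tol=1e-8, max_iter=1000):
    """Derivative-free hyperplane projection iteration for F(x) = 0, x in Psi."""
    x = list(x0)
    for k in range(max_iter):
        Fx = F(x)
        if norm(Fx) <= tol:                 # Step 1: stopping test
            return x, k
        d = [-fi for fi in Fx]              # Step 2 (ASSUMPTION: d = -F(x), not Equation (4))
        alpha = 1.0                         # Step 3 (ASSUMPTION: backtracking rule below)
        while True:
            z = [xi + alpha * di for xi, di in zip(x, d)]
            Fz = F(z)
            if -dot(Fz, d) >= sigma * alpha * dot(d, d) or alpha < 1e-12:
                break
            alpha *= rho
        if norm(Fz) <= tol:                 # Step 4: trial point (nearly) solves F = 0
            z = project(z)
            if norm(F(z)) <= tol:
                return z, k + 1
            x = z
            continue
        # Step 4/5: step toward the separating hyperplane, then project onto Psi
        zeta = dot(Fz, [xi - zi for xi, zi in zip(x, z)]) / dot(Fz, Fz)
        x = project([xi - zeta * fi for xi, fi in zip(x, Fz)])
    return x, max_iter

# Hypothetical monotone test mapping on the nonnegative orthant.
F = lambda x: [math.exp(xi) - 1.0 for xi in x]
project = lambda x: [max(xi, 0.0) for xi in x]
x_star, iters = projection_method(F, project, [1.0, 0.5, 2.0])
print(iters, norm(F(x_star)))
```

Only evaluations of F and projections are used, which is what makes a scheme of this kind derivative-free; swapping in a different direction changes Step 2 alone.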

Lemma 1.

Lemma 2.

Suppose that assumptions ()–() hold, then the sequences and generated by Algorithm 1 (DCG) are bounded. Moreover, we have

and

Proof.

We will start by showing that the sequences and are bounded. Suppose , then by monotonicity of F, we get

Also by definition of and the line search (8), we have

So, we have

Thus the sequence is nonincreasing and convergent, and hence is bounded. Furthermore, from Equation (13), we have

and we can deduce recursively that

Then from Assumption (), we obtain

If we let , then the sequence is bounded, that is,

The boundedness of the sequence together with Equations (15) and (16), implies the sequence is bounded.

Since is bounded, then for any , the sequence is also bounded, that is, there exists a positive constant such that

This together with Assumption () yields

Equation (17) implies

However, using Equation (2), the definition of and the Cauchy-Schwarz inequality, we have

which yields

☐

Equation (9) and the definition of imply that

Lemma 3.

Suppose is generated by Algorithm 1 (DCG), then there exists such that

Proof.

By definition of and Equation (15)

Letting , we have the desired result. ☐

Theorem 1.

Suppose that assumptions ()–() hold and let the sequence be generated by Algorithm 1, then

Proof.

To prove the theorem, we consider two cases.

Case 1

Suppose we have Then, by the continuity of F, the sequence has some accumulation point such that Because converges and is an accumulation point of , it follows that converges to .

Case 2

3. Numerical Examples

This section compares the performance of the proposed method with existing methods such as PCG and PDY, proposed in References [22,24], respectively, for solving monotone nonlinear equations using 9 benchmark test problems. Furthermore, Algorithm 1 is applied to restore a blurred image. All codes were written in MATLAB R2018b and run on a PC with an Intel Core i5 processor, 4 GB of RAM and a 2.3 GHz CPU. All runs were stopped whenever

The parameters chosen for the existing algorithm are as follows:

PCG method: All parameters are chosen as in Reference [22].

PDY method: All parameters are chosen as in Reference [24].

Algorithm 1: We have tested several values of and found that gives the best result. In addition, as in the implementation of most optimization algorithms, the parameter is chosen as a very small number. Therefore, we chose and for the implementation of the proposed algorithm.

We test 9 different problems with dimensions ranging from and 6 initial points: , , , , , . In Tables 1–9, the number of iterations (ITER), number of function evaluations (FVAL), CPU time in seconds (TIME) and the norm at the approximate solution (NORM) are reported. The symbol ‘−’ is used when the number of iterations exceeds 1000 and/or the number of function evaluations exceeds 2000.

Tables 1–9.

Numerical results for Algorithm 1 (DCG), PCG and PDY for Problems 1–9, respectively, with given initial points and dimensions.

The test problems are listed below, where the function F is taken as .

Problem 1

([26]). Exponential Function.

Problem 2

([26]). Modified Logarithmic Function.

Problem 3

([13]). Nonsmooth Function.

It is clear that Problem 3 is nonsmooth at .

Problem 4

([26]). Strictly Convex Function I.

Problem 5

([26]). Strictly Convex Function II.

Problem 6

([27]). Tridiagonal Exponential Function.

Problem 7

([28]). Nonsmooth Function.

Problem 8

([23]). Penalty 1.

Problem 9

([29]). Semismooth Function.

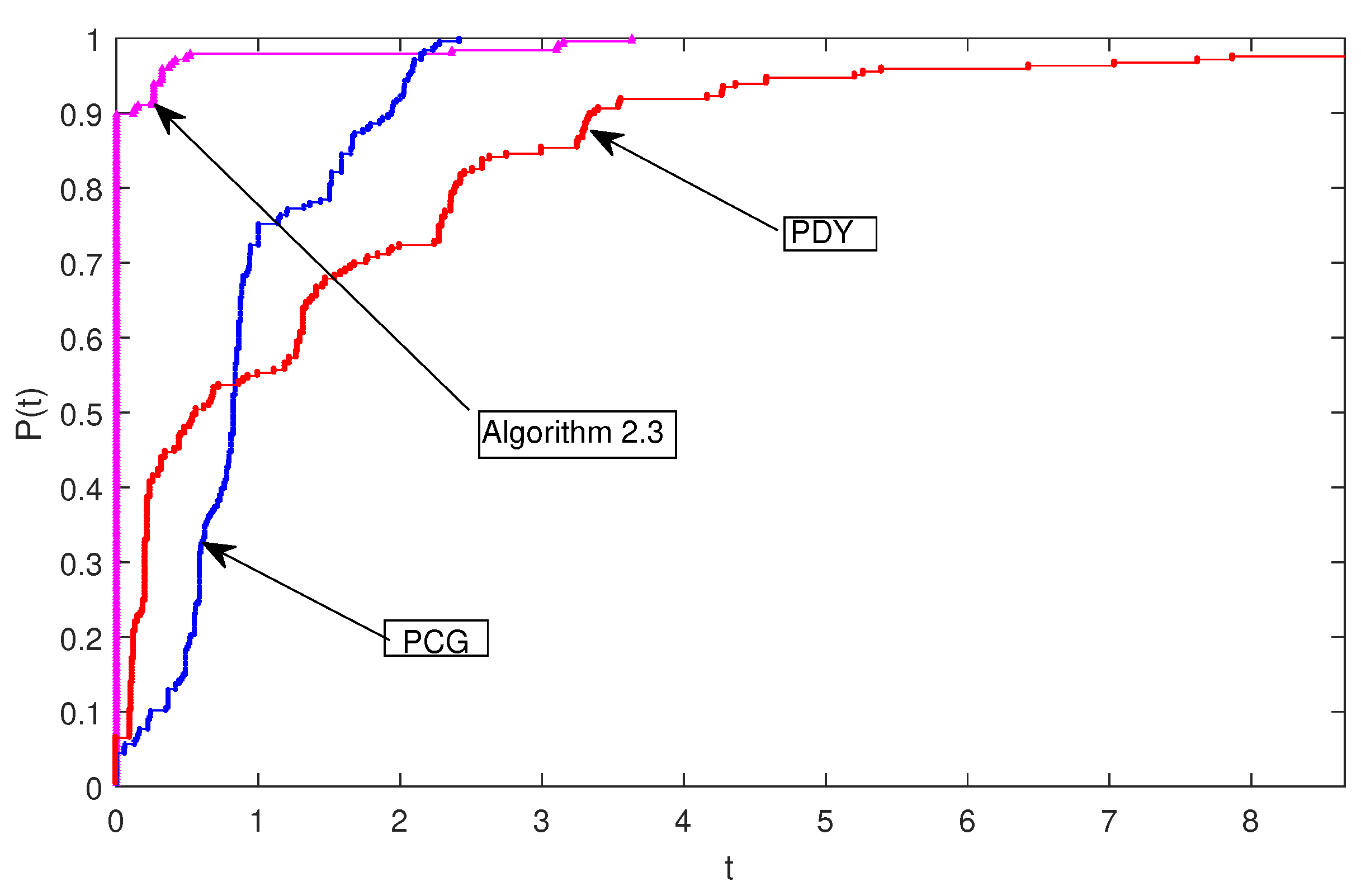

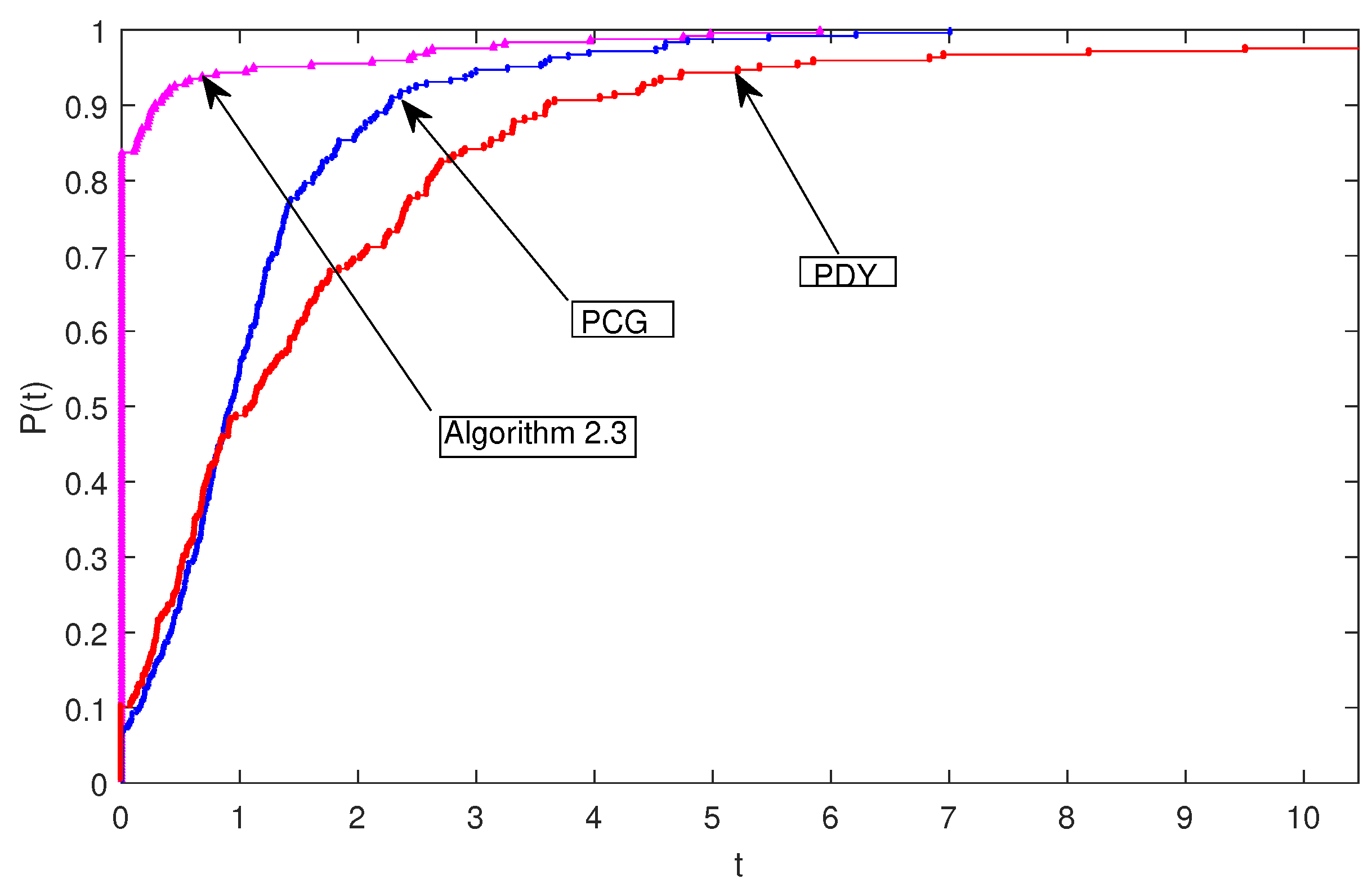

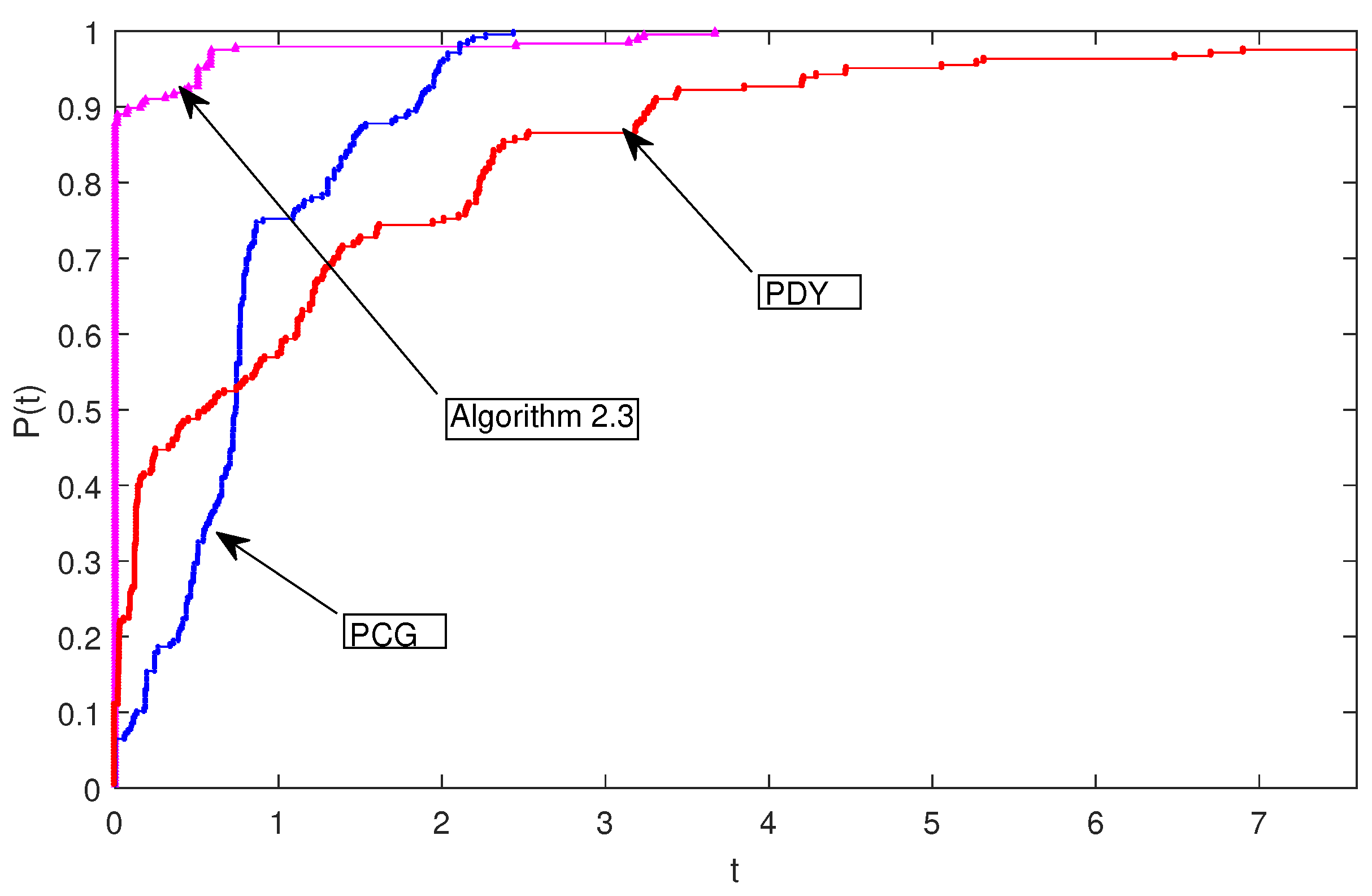

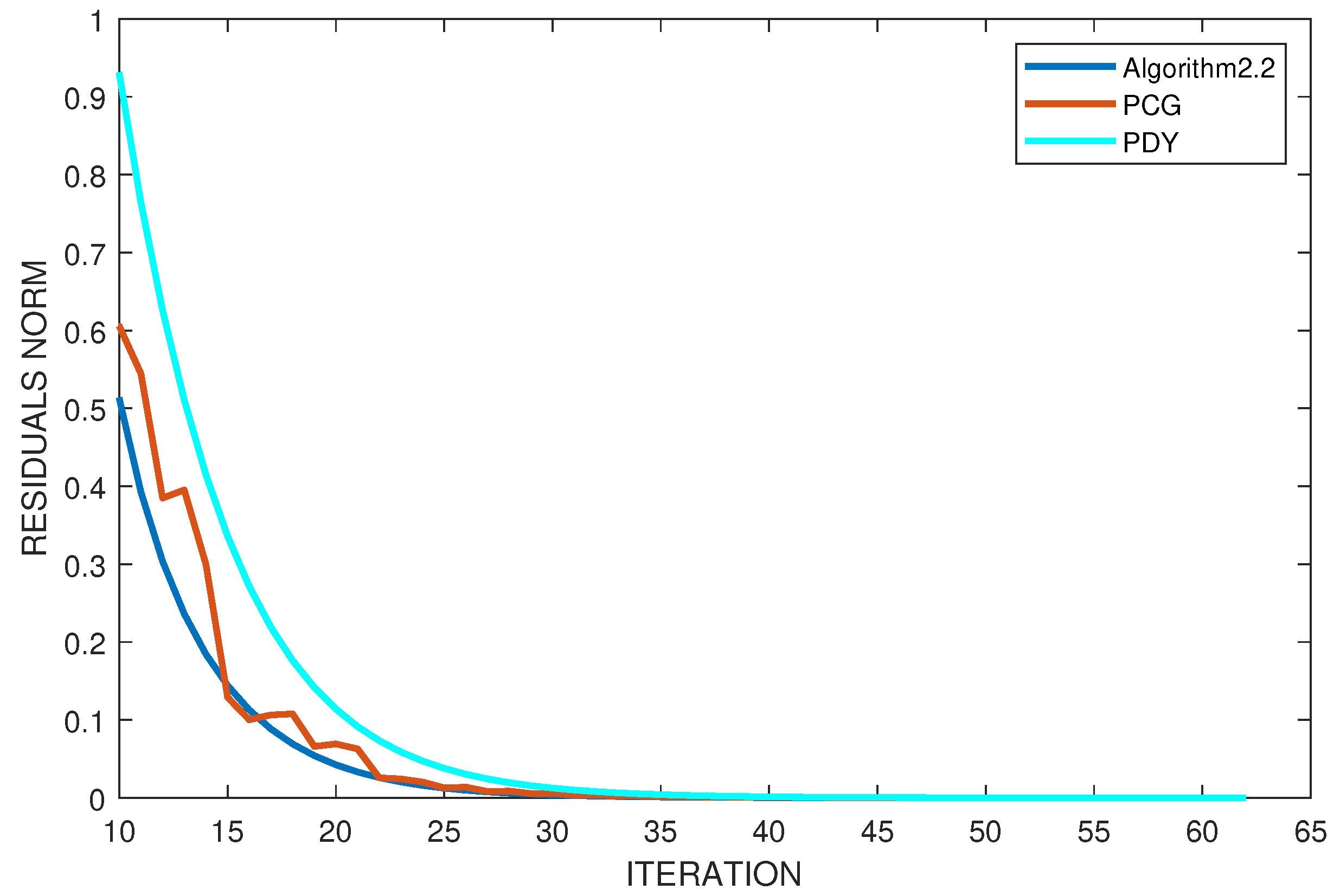

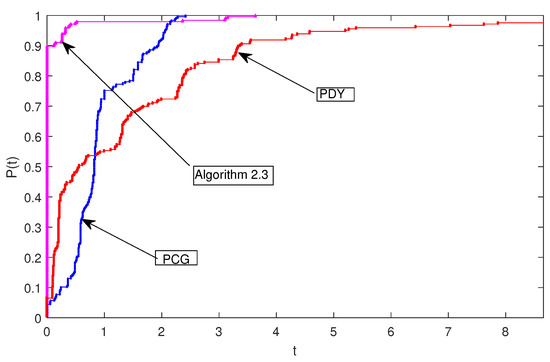

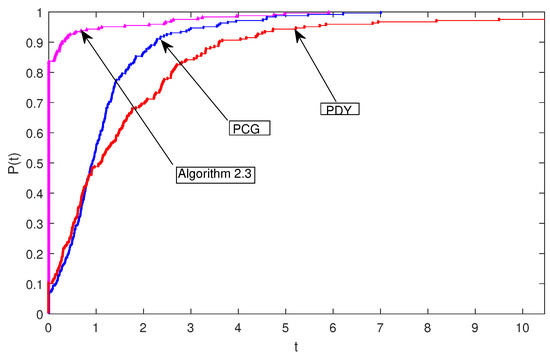

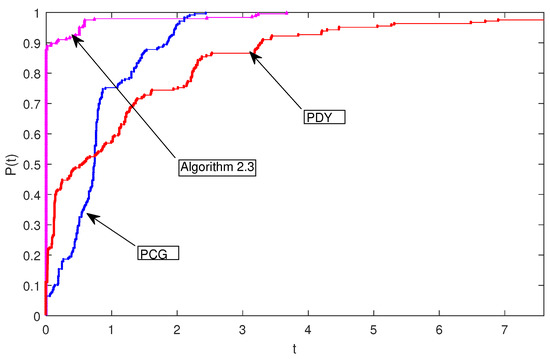

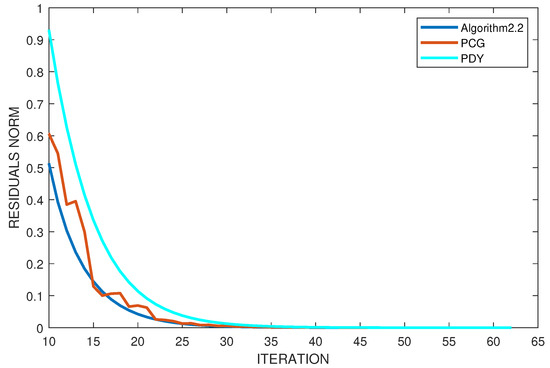

In addition, we employ the performance profile developed in Reference [30] to obtain Figures 1–3, which is a helpful way of standardizing the comparison of methods. The performance measures considered are the number of iterations, the CPU time (in seconds) and the number of function evaluations. Figure 1 reveals that Algorithm 1 performs best in terms of the number of iterations, as it solves and wins 90 percent of the problems with fewer iterations, while PCG and PDY each solve and win less than 10 percent. In Figure 2, Algorithm 1 performs slightly less well, solving and winning over 80 percent of the problems with less CPU time, against PCG and PDY, which show similar performance of less than 10 percent of the problems considered. The interpretation of Figure 3 is identical to that of Figure 1. Figure 4 plots the decrease in residual norm against the number of iterations on Problem 9 with as initial point. It shows the speed of convergence of each algorithm using the convergence tolerance ; it can be observed that Algorithm 1 converges faster than PCG and PDY.
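For readers unfamiliar with performance profiles, the computation behind curves of this kind can be sketched as below: for each problem, a solver's cost is divided by the best cost achieved on that problem, and the profile at a factor tau is the fraction of problems whose ratio does not exceed tau. The solver names "A" and "B" and all cost values are hypothetical toy data, not results from this paper; failures are encoded as `math.inf`.

```python
import math

def performance_profile(costs, taus):
    """costs: dict solver -> list of per-problem costs (math.inf marks a failure).
    Returns dict solver -> list of fractions, one per tau."""
    solvers = list(costs)
    n_prob = len(costs[solvers[0]])
    # Best (smallest) cost achieved on each problem by any solver.
    best = [min(costs[s][p] for s in solvers) for p in range(n_prob)]
    profile = {}
    for s in solvers:
        ratios = [
            costs[s][p] / best[p] if math.isfinite(best[p]) else math.inf
            for p in range(n_prob)
        ]
        profile[s] = [sum(r <= tau for r in ratios) / n_prob for tau in taus]
    return profile

# Toy data: iteration counts of two hypothetical solvers on four problems.
toy_costs = {"A": [10, 20, math.inf, 8], "B": [12, 15, 30, 16]}
profile = performance_profile(toy_costs, taus=[1.0, 1.5, 2.0])
print(profile)
```

The value at tau = 1 is the fraction of problems on which a solver is (jointly) the best, which is the quantity referred to above as "wins"; the value for large tau approaches the fraction of problems solved.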

Figure 1.

Performance profiles for the number of iterations.

Figure 2.

Performance profiles for the CPU time (in seconds).

Figure 3.

Performance profiles for the number of function evaluations.

Figure 4.

Convergence histories of Algorithm 1, PCG and PDY on Problem 9.

Applications in Compressive Sensing

There are many problems in signal processing and statistical inference that involve finding sparse solutions to ill-conditioned linear systems of equations. A popular approach is to minimize an objective function that contains a quadratic ($\ell_2$) error term and a sparse $\ell_1$-regularization term, that is,

$$\min_{x} \ \frac{1}{2}\|y - Ax\|_2^2 + \tau \|x\|_1, \tag{24}$$

where $x \in \mathbb{R}^n$, $y \in \mathbb{R}^k$ is an observation, $A \in \mathbb{R}^{k \times n}$ ($k \ll n$) is a linear operator, $\tau$ is a non-negative parameter, $\|x\|_2$ denotes the Euclidean norm of x and $\|x\|_1$ is the $\ell_1$-norm of x. It is easy to see that problem (24) is a convex unconstrained minimization problem. If the original signal is sparse or approximately sparse in some orthogonal basis, an exact restoration can be produced by solving (24), which is why problem (24) frequently appears in compressive sensing.

Iterative methods for solving (24) have been presented in many papers (see References [5,31,32,33,34,35]). The most popular among these are the gradient-based methods, and the earliest gradient projection method for sparse reconstruction (GPSR) was proposed by Figueiredo et al. [5]. The first step of the GPSR method is to express (24) as a quadratic problem using the following process. Let $x \in \mathbb{R}^n$ and split it into its positive and negative parts. Then x can be formulated as

$$x = u - v, \quad u \ge 0, \quad v \ge 0,$$

where $u_i = (x_i)_+$, $v_i = (-x_i)_+$ for all $i = 1, 2, \dots, n$ and $(\cdot)_+ = \max\{0, \cdot\}$. By definition of the $\ell_1$-norm, we have $\|x\|_1 = e_n^T u + e_n^T v$, where $e_n = (1, 1, \dots, 1)^T \in \mathbb{R}^n$. Now (24) can be written as

$$\min_{u, v} \ \frac{1}{2}\|y - A(u - v)\|_2^2 + \tau e_n^T u + \tau e_n^T v, \quad \text{such that } u \ge 0, \ v \ge 0, \tag{25}$$

which is a bound-constrained quadratic program. However, from Reference [5], Equation (25) can be written in standard form as

$$\min_{z} \ \frac{1}{2} z^T D z + c^T z, \quad \text{such that } z \ge 0, \tag{26}$$

where $z = \begin{pmatrix} u \\ v \end{pmatrix}$, $c = \tau e_{2n} + \begin{pmatrix} -b \\ b \end{pmatrix}$, $b = A^T y$, $D = \begin{pmatrix} A^T A & -A^T A \\ -A^T A & A^T A \end{pmatrix}$.

Clearly, D is a positive semi-definite matrix, which implies that Equation (26) is a convex quadratic problem.

Xiao et al. [19] translated (26) into a linear variational inequality problem, which is equivalent to a linear complementarity problem. Furthermore, it was noted that z is a solution of the linear complementarity problem if and only if it is a solution of the nonlinear equation:

$$F(z) = \min\{z, Dz + c\} = 0.$$

The function F is a vector-valued function and the “min” is interpreted as the component-wise minimum. It was proved in References [36,37] that $F$ is continuous and monotone. Therefore problem (24) can be translated into problem (1) and thus Algorithm 1 (DCG) can be applied to solve it.
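The component-wise minimum map can be evaluated without ever forming the 2n-by-2n matrix D. The sketch below assumes the standard GPSR splitting z = (u, v) with x = u - v, D built from blocks of A^T A, and c = tau * e + (-A^T y, A^T y); under that assumption Dz + c equals (tau + g, tau - g) with g = A^T(Ax - y). The helper names and the tiny identity-matrix example are hypothetical.

```python
def matvec(A, x):
    """Compute A x for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def rmatvec(A, y):
    """Compute A^T y."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]

def residual_map(z, A, y, tau):
    """Evaluate F(z) = min{z, Dz + c} component-wise, with z = (u, v)."""
    n = len(A[0])
    u, v = z[:n], z[n:]
    x = [ui - vi for ui, vi in zip(u, v)]
    # g = A^T (A x - y); then Dz + c = (tau + g, tau - g).
    g = rmatvec(A, [ri - yi for ri, yi in zip(matvec(A, x), y)])
    Dz_plus_c = [tau + gi for gi in g] + [tau - gi for gi in g]
    return [min(zi, wi) for zi, wi in zip(z, Dz_plus_c)]

# Toy check with A = I: the l1-regularized solution is the soft-thresholding
# of y, here x = (2, 0), i.e. u = (2, 0), v = (0, 0).
A = [[1.0, 0.0], [0.0, 1.0]]
y = [3.0, 0.0]
at_solution = residual_map([2.0, 0.0, 0.0, 0.0], A, y, tau=1.0)
at_origin = residual_map([0.0, 0.0, 0.0, 0.0], A, y, tau=1.0)
print(at_solution, at_origin)
```

Because each evaluation costs only one product with A and one with its transpose, a derivative-free method that needs nothing beyond F(z) and a projection onto z >= 0 fits this reformulation well.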

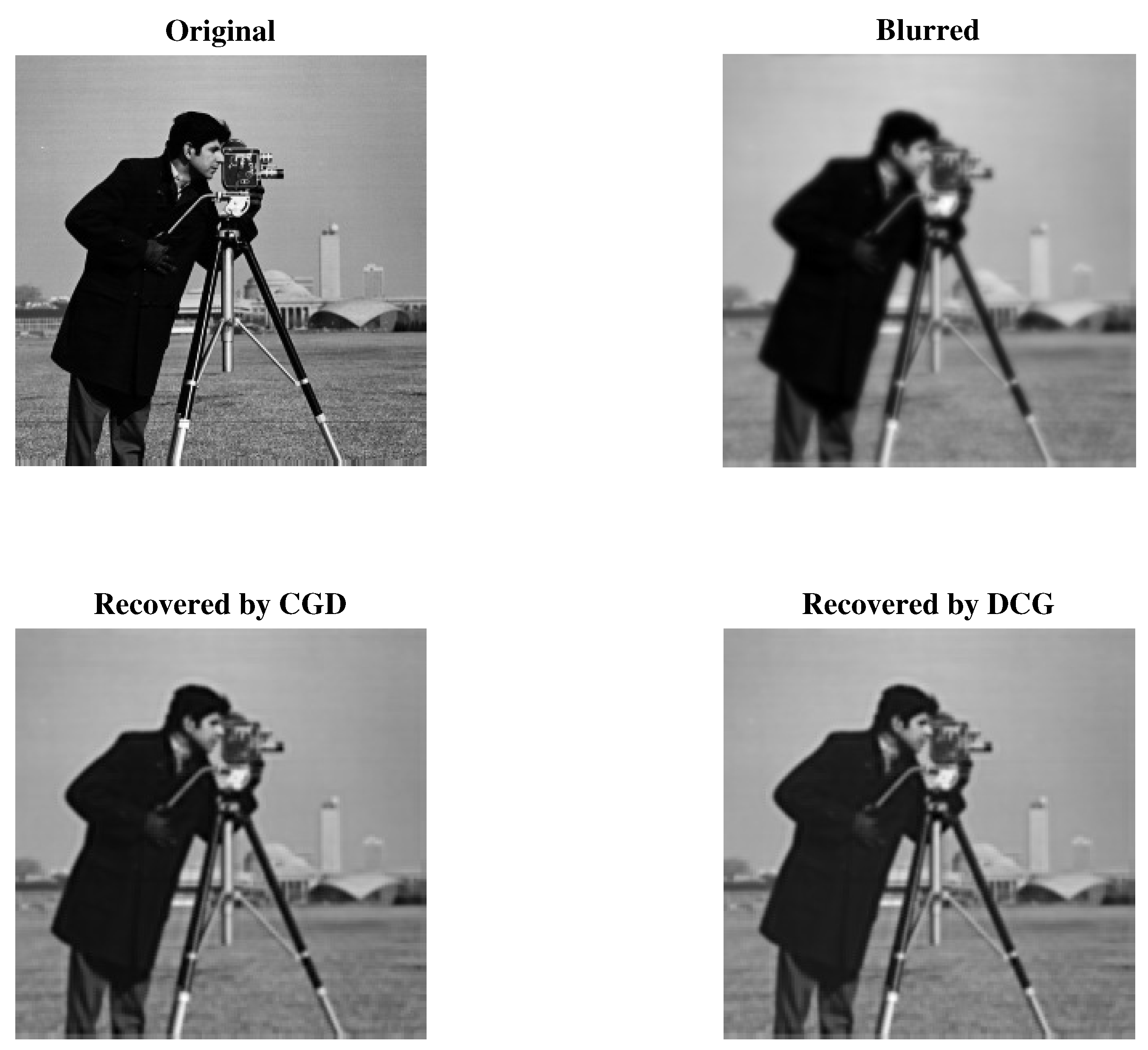

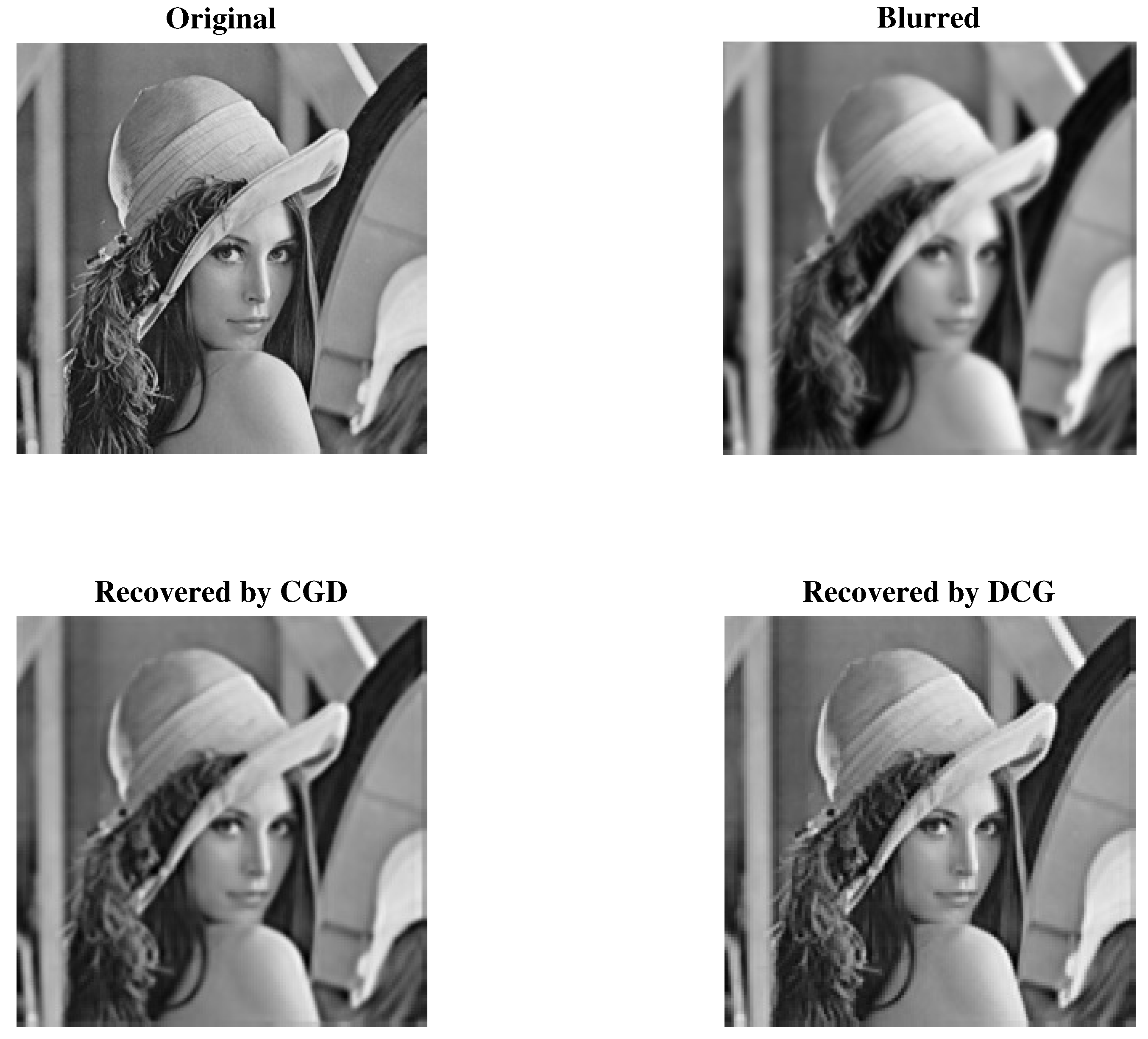

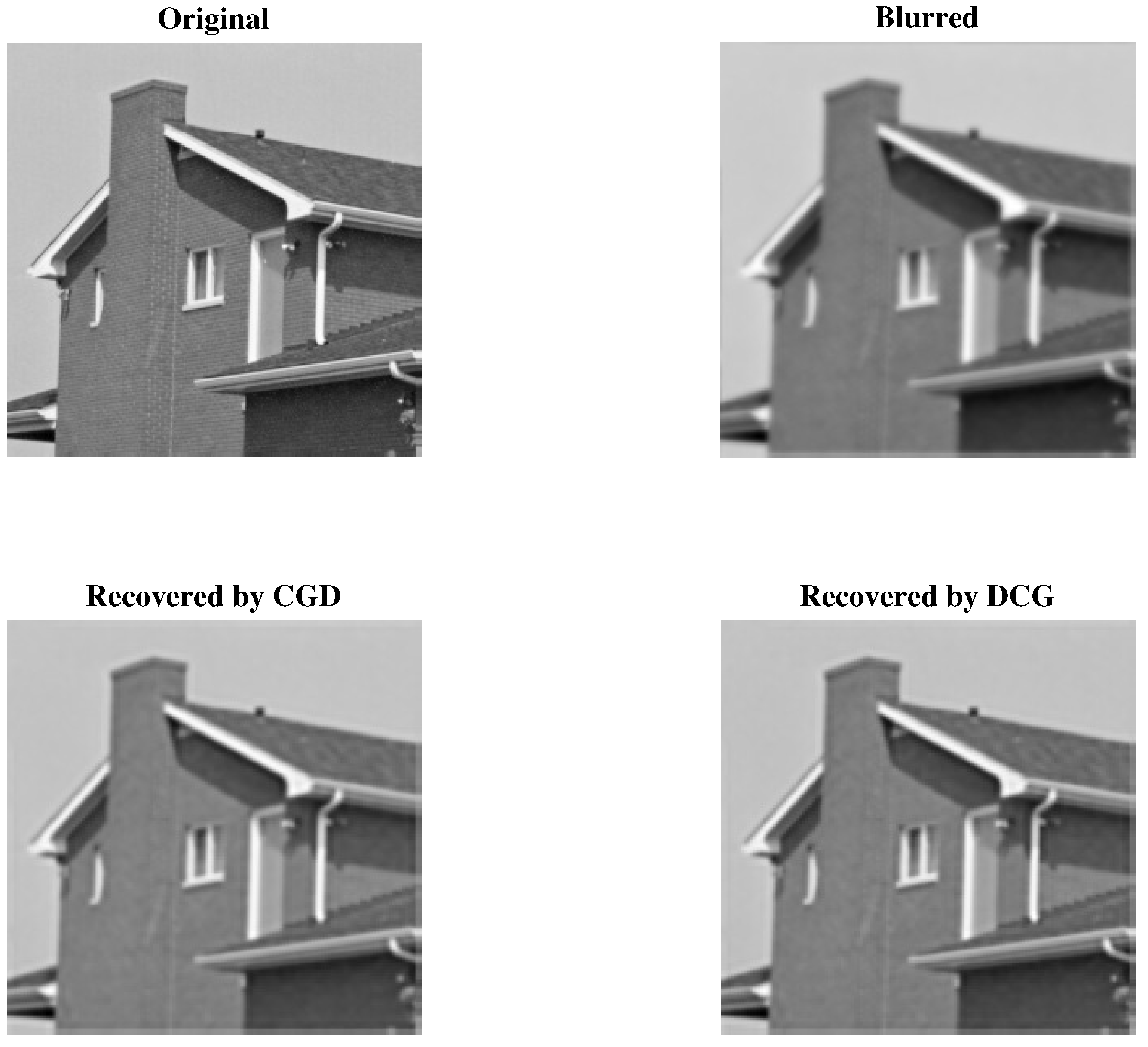

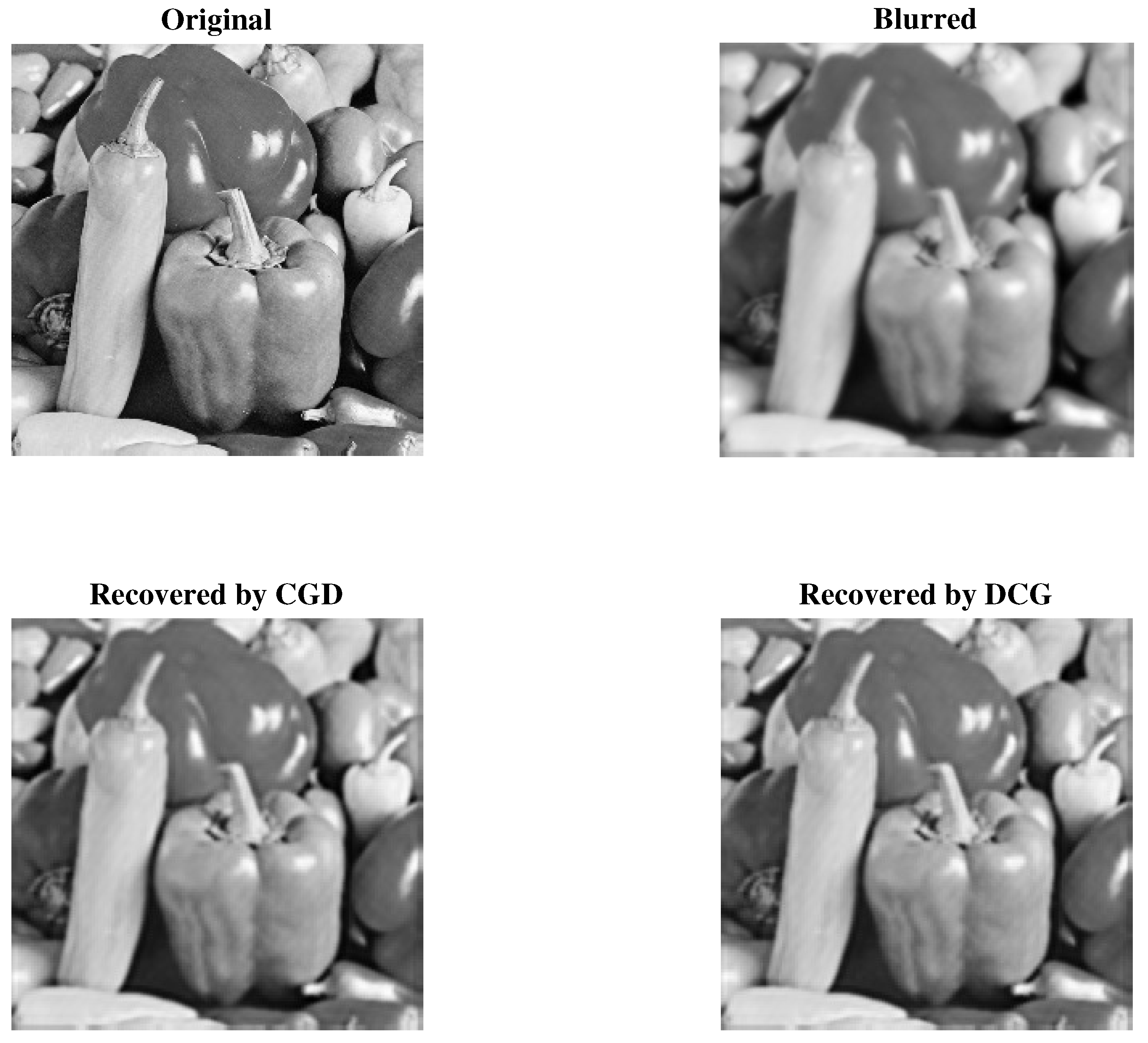

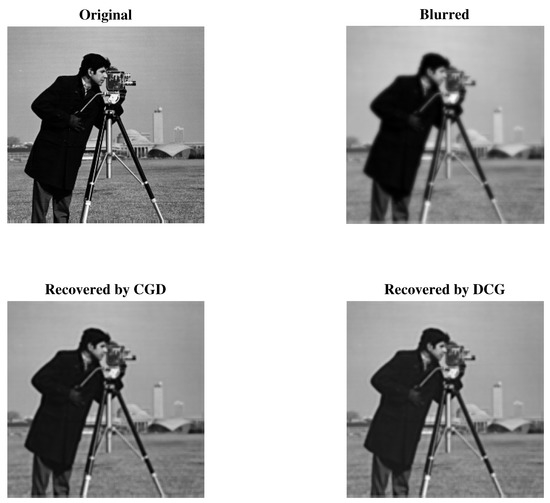

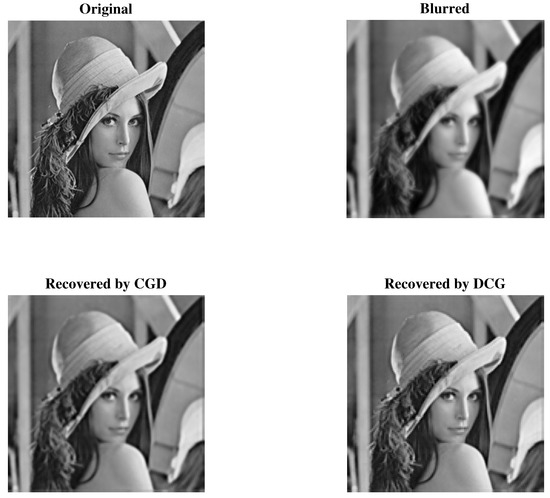

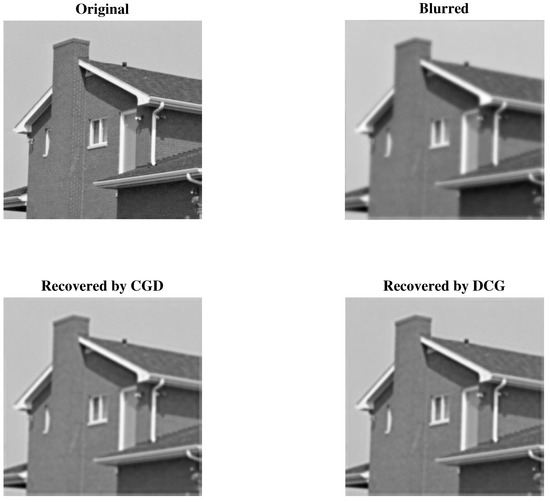

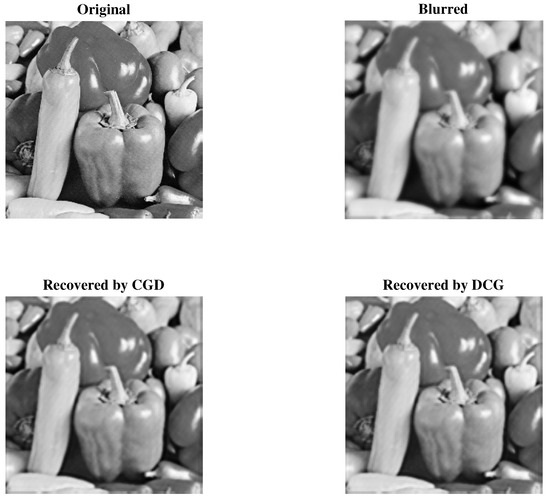

In this experiment, we consider a simple compressive sensing scenario, where our goal is to restore a blurred image. We use the following well-known gray test images: (P1) Cameraman, (P2) Lena, (P3) House and (P4) Peppers. We use 4 different Gaussian blur kernels with standard deviation to compare the robustness of the DCG method with the CGD method proposed in Reference [19]. The CGD method is an extension of the well-known conjugate gradient method for unconstrained optimization, CG-DESCENT [20], to solve $\ell_1$-norm regularized problems.

To assess the performance of each algorithm tested with respect to metrics that indicate a better quality of restoration, in Table 10 we report the number of iterations, the objective function (ObjFun) value at the approximate solution, the mean squared error (MSE) with respect to the original image ,

where is the reconstructed image and the signal-to-noise-ratio (SNR) which is defined as

We also report the structural similarity (SSIM) index, which measures the similarity between the original image and the restored image [38]. The MATLAB implementation of the SSIM index can be obtained at http://www.cns.nyu.edu/~lcv/ssim/.
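The MSE and SNR metrics can be computed as in the following sketch. The exact formulas used in this paper are not reproduced here, so the definitions below are assumptions based on common usage: MSE as the mean of squared pixel differences, and SNR as 20 log10(||original|| / ||restored - original||). Images are treated as flat lists of pixel values, and the two-pixel example is hypothetical.

```python
import math

def mse(original, restored):
    """Mean squared error between two equally sized signals (assumed definition)."""
    n = len(original)
    return sum((o - r) ** 2 for o, r in zip(original, restored)) / n

def snr(original, restored):
    """Signal-to-noise ratio in dB (assumed definition):
    20 * log10(||original|| / ||restored - original||)."""
    signal = math.sqrt(sum(o * o for o in original))
    noise = math.sqrt(sum((r - o) ** 2 for o, r in zip(original, restored)))
    return 20.0 * math.log10(signal / noise)

# Toy example with two "pixels".
original = [3.0, 4.0]
restored = [3.0, 3.5]
print(mse(original, restored), snr(original, restored))
```

A lower MSE and a higher SNR both indicate a restoration closer to the original, which is how the corresponding columns of Table 10 should be read.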

Table 10.

Efficiency comparison based on the value of the number of iterations (Iter), objective function (ObjFun) value, mean-square-error (MSE) and signal-to-noise-ratio (SNR) under different Pi ().

The original, blurred and restored images for each algorithm are given in Figures 5–8. The figures demonstrate that both tested algorithms can restore the blurred images. It can be observed from Table 10 and Figures 5–8 that Algorithm 1 (DCG) competes with the CGD algorithm; therefore, it can be used as an alternative to CGD for restoring blurred images.

Figure 5.

The original image (top left), the blurred image (top right), the restored image by CGD (bottom left) with SNR = 20.05, SSIM = 0.83 and by DCG (bottom right) with SNR = 20.12, SSIM = 0.83.

Figure 6.

The original image (top left), the blurred image (top right), the restored image by CGD (bottom left) with SNR = 22.93, SSIM = 0.87 and by DCG (bottom right) with SNR = 24.36, SSIM = 0.90.

Figure 7.

The original image (top left), the blurred image (top right), the restored image by CGD (bottom left) with SNR = 25.65, SSIM = 0.86 and by DCG (bottom right) with SNR = 26.37, SSIM = 0.87.

Figure 8.

The original image (top left), the blurred image (top right), the restored image by CGD (bottom left) with SNR = 21.50, SSIM = 0.84 and by DCG (bottom right) with SNR = 21.81, SSIM = 0.85.

4. Conclusions

In this research article, we present a CG method which possesses the sufficient descent property for solving constrained nonlinear monotone equations. The proposed method has the ability to solve non-smooth equations, as it does not require matrix storage or Jacobian information of the nonlinear equation under consideration. The sequence of iterates generated converges to the solution under appropriate assumptions. Finally, we give some numerical examples to display the efficiency of the proposed method in terms of number of iterations, CPU time and number of function evaluations compared with some related methods for solving convex constrained nonlinear monotone equations, and its application in image restoration problems.

Author Contributions

Conceptualization, A.B.A.; methodology, A.B.A.; software, H.M.; validation, P.K. and A.M.A.; formal analysis, P.K. and H.M.; investigation, P.K. and A.M.A.; resources, P.K.; data curation, A.B.A. and H.M.; writing—original draft preparation, A.B.A.; writing—review and editing, H.M.; visualization, A.M.A.; supervision, P.K.; project administration, P.K.; funding acquisition, P.K.

Funding

Petchra Pra Jom Klao Doctoral Scholarship for Ph.D. program of King Mongkut’s University of Technology Thonburi (KMUTT) and Theoretical and Computational Science (TaCS) Center. Moreover, this project was partially supported by the Thailand Research Fund (TRF) and the King Mongkut’s University of Technology Thonburi (KMUTT) under the TRF Research Scholar Award (Grant No. RSA6080047).

Acknowledgments

We thank Associate Professor Jin Kiu Liu for providing us with the access of the CGD-CS MATLAB codes. The authors acknowledge the financial support provided by King Mongkut’s University of Technology Thonburi through the “KMUTT 55th Anniversary Commemorative Fund”. This project is supported by the theoretical and computational science (TaCS) center under computational and applied science for smart research innovation (CLASSIC), Faculty of Science, KMUTT. The first author was supported by the “Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi”.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gu, B.; Sheng, V.S.; Tay, K.Y.; Romano, W.; Li, S. Incremental support vector learning for ordinal regression. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1403–1416.

- Li, J.; Li, X.; Yang, B.; Sun, X. Segmentation-based image copy-move forgery detection scheme. IEEE Trans. Inf. Forensics Secur. 2015, 10, 507–518.

- Wen, X.; Shao, L.; Xue, Y.; Fang, W. A rapid learning algorithm for vehicle classification. Inf. Sci. 2015, 295, 395–406.

- Michael, S.V.; Alfredo, I.N. Newton-type methods with generalized distances for constrained optimization. Optimization 1997, 41, 257–278.

- Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

- Magnanti, T.L.; Perakis, G. Solving variational inequality and fixed point problems by line searches and potential optimization. Math. Program. 2004, 101, 435–461.

- Pan, Z.; Zhang, Y.; Kwong, S. Efficient motion and disparity estimation optimization for low complexity multiview video coding. IEEE Trans. Broadcast. 2015, 61, 166–176.

- Xia, Z.; Wang, X.; Sun, X.; Wang, Q. A secure and dynamic multi-keyword ranked search scheme over encrypted cloud data. IEEE Trans. Parallel Distrib. Syst. 2016, 27, 340–352.

- Zheng, Y.; Jeon, B.; Xu, D.; Wu, Q.M.; Zhang, H. Image segmentation by generalized hierarchical fuzzy c-means algorithm. J. Intell. Fuzzy Syst. 2015, 28, 961–973.

- Solodov, M.V.; Svaiter, B.F. A globally convergent inexact Newton method for systems of monotone equations. In Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods; Springer: Dordrecht, The Netherlands, 1998; pp. 355–369.

- Mohammad, H.; Abubakar, A.B. A positive spectral gradient-like method for nonlinear monotone equations. Bull. Comput. Appl. Math. 2017, 5, 99–115.

- Zhang, L.; Zhou, W. Spectral gradient projection method for solving nonlinear monotone equations. J. Comput. Appl. Math. 2006, 196, 478–484.

- Zhou, W.J.; Li, D.H. A globally convergent BFGS method for nonlinear monotone equations without any merit functions. Math. Comput. 2008, 77, 2231–2240.

- Zhou, W.; Li, D. Limited memory BFGS method for nonlinear monotone equations. J. Comput. Math. 2007, 25, 89–96.

- Abubakar, A.B.; Waziria, M.Y. A matrix-free approach for solving systems of nonlinear equations. J. Mod. Methods Numer. Math. 2016, 7, 1–9.

- Abubakar, A.B.; Kumam, P. An improved three-term derivative-free method for solving nonlinear equations. Comput. Appl. Math. 2018, 37, 6760–6773.

- Abubakar, A.B.; Kumam, P.; Awwal, A.M. A descent Dai-Liao projection method for convex constrained nonlinear monotone equations with applications. Thai J. Math. 2018, 17, 128–152.

- Wang, C.; Wang, Y.; Xu, C. A projection method for a system of nonlinear monotone equations with convex constraints. Math. Methods Oper. Res. 2007, 66, 33–46.

- Xiao, Y.; Zhu, H. A conjugate gradient method to solve convex constrained monotone equations with applications in compressive sensing. J. Math. Anal. Appl. 2013, 405, 310–319.

- Hager, W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192.

- Liu, S.-Y.; Huang, Y.-Y.; Jiao, H.-W. Sufficient descent conjugate gradient methods for solving convex constrained nonlinear monotone equations. Abstr. Appl. Anal. 2014, 2014, 305643.

- Liu, J.K.; Li, S.J. A projection method for convex constrained monotone nonlinear equations with applications. Comput. Math. Appl. 2015, 70, 2442–2453.

- Ding, Y.; Xiao, Y.; Li, J. A class of conjugate gradient methods for convex constrained monotone equations. Optimization 2017, 66, 2309–2328.

- Liu, J.; Feng, Y. A derivative-free iterative method for nonlinear monotone equations with convex constraints. Numer. Algorithms 2018, 1–18.

- Muhammed, A.A.; Kumam, P.; Abubakar, A.B.; Wakili, A.; Pakkaranang, N. A new hybrid spectral gradient projection method for monotone system of nonlinear equations with convex constraints. Thai J. Math. 2018, 16, 125–147.

- La Cruz, W.; Martínez, J.; Raydan, M. Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 2006, 75, 1429–1448.

- Bing, Y.; Lin, G. An efficient implementation of Merrill’s method for sparse or partially separable systems of nonlinear equations. SIAM J. Optim. 1991, 1, 206–221.

- Yu, Z.; Lin, J.; Sun, J.; Xiao, Y.H.; Liu, L.Y.; Li, Z.H. Spectral gradient projection method for monotone nonlinear equations with convex constraints. Appl. Numer. Math. 2009, 59, 2416–2423.

- Yamashita, N.; Fukushima, M. Modified Newton methods for solving a semismooth reformulation of monotone complementarity problems. Math. Program. 1997, 76, 469–491.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

- Figueiredo, M.A.T.; Nowak, R.D. An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 2003, 12, 906–916.

- Hale, E.T.; Yin, W.; Zhang, Y. A Fixed-Point Continuation Method for ℓ1-Regularized Minimization with Applications to Compressed Sensing; CAAM TR07-07; Rice University: Houston, TX, USA, 2007; pp. 43–44.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Van Den Berg, E.; Friedlander, M.P. Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 2008, 31, 890–912.

- Birgin, E.G.; Martínez, J.M.; Raydan, M. Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 2000, 10, 1196–1211.

- Xiao, Y.; Wang, Q.; Hu, Q. Non-smooth equations based method for ℓ1-norm problems with applications to compressed sensing. Nonlinear Anal. Theory Methods Appl. 2011, 74, 3570–3577.

- Pang, J.-S. Inexact Newton methods for the nonlinear complementarity problem. Math. Program. 1986, 36, 54–71.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).