Abstract

In multi-scale information systems, information is often characterized at multiple scales and levels. To ease computation in such systems, this study uses a matrix method to represent multi-scale information systems and to select the optimal scale combination of multi-scale decision information systems. To this end, we first describe some important concepts and properties of information systems using relational matrices. The relational matrix is then introduced into multi-scale information systems and used to describe their main concepts, including the lower and upper approximation sets and the consistency of systems. Furthermore, from the viewpoint of the relation matrix, the scale significance is defined to describe the global optimal scale and the local optimal scale of multi-scale information systems. Finally, the relational matrix is used to compute the scale significance and to construct optimal scale selection algorithms. The efficiency of these algorithms is examined through several practical examples and experiments.

1. Introduction

Granular computing [1,2], which originated from fuzzy information granulation, is a mathematical method for knowledge representation and data mining. Its purpose is to solve complex problems by dividing massive information into relatively simple blocks according to their respective characteristics and performance. Since the concept of granular computing was put forward, it has become an active research topic and has been widely used in practical applications [3,4,5,6,7,8,9,10,11,12,13].

Rough set theory plays an important role in the promotion and development of granular computing [14,15,16,17,18,19,20]. Pawlak [14] used an information system to study granular computing. When a decision is required, the information system is usually equipped with decision attributes.

People can process a lot of information at different levels and scales. Based on this viewpoint, multi-scale information systems have been developed and widely studied [21,22,23,24,25,26,27]. Ferone et al. used feature granulation to study feature selection [12,13]. Wu and Leung [21,22] proposed a multi-scale decision information system model in which the objects are granulated from fine to coarse, and they also investigated the optimal scale selection of multi-scale decision tables. Gu and Wu [23] presented a formal method of knowledge acquisition measured at different granularities. Wu et al. [24] measured the uncertainty in incomplete multi-scale information tables with the Dempster-Shafer evidence theory [28]. She et al. [25] employed a local method to induce decision rules in multi-scale decision tables. Li et al. [26] introduced a stepwise method for the optimal scale selection of multi-scale decision tables.

Because multi-scale information systems contain massive information, computing the concepts defined on them is time consuming. This article therefore employs the Boolean matrix and matrix computation to facilitate knowledge description and optimal scale selection in multi-scale decision tables. Indeed, the matrix method has shown a computational advantage in many settings [29,30,31,32,33,34,35,36,37], such as classical rough sets and information systems [29,30,31], static and dynamic information systems [32,35,36], and covering information systems [33,34]. For multi-scale information systems, it is worthwhile to use the relational matrix to obtain the optimal scale selection and to develop matrix methods that ease the computational process.

The paper is structured as follows. Section 2 reviews basic concepts of rough sets and information systems and characterizes decision information systems with Boolean relation matrices. Section 3 introduces multi-scale information systems and their relation matrix representation. Section 4 uses the relation matrix to define the scale significance and investigates optimal scale selection for consistent multi-scale decision information systems. Section 5 studies local optimal scale selection for inconsistent generalized decision information systems. Section 6 concludes the paper with a summary, and Section 7 illustrates the effectiveness of the matrix method by experiments.

2. Preliminary Background

2.1. Rough Set and Information Systems

In this section we introduce some basic concepts of rough set and information systems.

Definition 1

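([1]). The Pawlak approximation space is defined as $(U, R)$, where $U$ is a non-empty finite set called the universe and $R$ is an equivalence relation on $U$. The lower and upper approximation sets of $X \subseteq U$ are defined as $\underline{R}(X)$ and $\overline{R}(X)$, respectively:

$$\underline{R}(X) = \{x \in U : [x]_R \subseteq X\}, \qquad \overline{R}(X) = \{x \in U : [x]_R \cap X \neq \emptyset\},$$

where $[x]_R$ is the equivalence class containing $x$.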
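As a small illustration of Definition 1, the following sketch computes the lower and upper approximations of a set from the partition induced by an equivalence relation. The function name `lower_upper` and the toy partition are illustrative assumptions, not taken from the paper.

```python
def lower_upper(partition, target):
    """Return (lower, upper) approximations of `target` w.r.t. a partition of U."""
    target = set(target)
    lower, upper = set(), set()
    for block in partition:
        block = set(block)
        if block <= target:   # the whole equivalence class lies inside X
            lower |= block
        if block & target:    # the equivalence class meets X
            upper |= block
    return lower, upper


# Hypothetical universe {1, ..., 5} with three equivalence classes.
partition = [{1, 2}, {3}, {4, 5}]
lower, upper = lower_upper(partition, {1, 2, 4})
print(lower, upper)
```

Here the class {4, 5} only partly overlaps the target set, so it contributes to the upper approximation but not to the lower one.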

Definition 2

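([14]). Let $S = (U, A)$ be an information system, where $U$ is a non-empty finite set called the universe and $A$ is a non-empty finite set of attributes. For any $a \in A$, there is a surjective map $a : U \to V_a$, i.e., $a(x) \in V_a$ for every $x \in U$, where $V_a$ is called the domain of attribute $a$. For any $B \subseteq A$, the equivalence relation on $U$ is determined as

$$R_B = \{(x, y) \in U \times U : a(x) = a(y),\ \forall a \in B\}.$$

The equivalence class of $x \in U$ in terms of $R_B$ is denoted as

$$[x]_B = \{y \in U : (x, y) \in R_B\}.$$

The lower and upper approximation sets of $X \subseteq U$ corresponding to the attribute subset $B$ are represented as $\underline{R_B}(X)$ and $\overline{R_B}(X)$, respectively:

$$\underline{R_B}(X) = \{x \in U : [x]_B \subseteq X\}, \qquad \overline{R_B}(X) = \{x \in U : [x]_B \cap X \neq \emptyset\}.$$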

Definition 3

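([14]). Let $S = (U, A \cup \{d\})$ be a decision information system, where $(U, A)$ is an information system, $d \notin A$ is called the decision attribute, and $d : U \to V_d$ is a surjective map, where $V_d$ is called the domain of $d$.

Similarly, the equivalence relation on $U$ induced by $d$ is given by

$$R_d = \{(x, y) \in U \times U : d(x) = d(y)\}.$$

The equivalence class of $x \in U$ is denoted as

$$[x]_d = \{y \in U : (x, y) \in R_d\}.$$

Let $S = (U, A \cup \{d\})$ be a decision information system. We distinguish the following two kinds of consistency:

(1) If $R_A \subseteq R_d$, then S is globally consistent;

(2) For $x \in U$, if $[x]_A \subseteq [x]_d$, then S is locally consistent for x.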

2.2. Boolean Matrix Characterization of Decision Information Systems

Definition 4.

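A matrix $A = (a_{ij})$ is called a Boolean matrix if $a_{ij} \in \{0, 1\}$. Let $A = (a_{ij})$ and $B = (b_{ij})$ be Boolean matrices of compatible sizes, and define the following operations:

(1) Order relation: $A \le B \iff a_{ij} \le b_{ij}$ for all $i, j$;

(2) Meet: $A \wedge B = (a_{ij} \wedge b_{ij})$;

(3) Boolean product: $A \cdot B = (c_{ij})$, where $c_{ij} = \bigvee_{k} (a_{ik} \wedge b_{kj})$;

(4) Complement: $\sim A = (1 - a_{ij})$,

where $a \wedge b = \min\{a, b\}$ and $a \vee b = \max\{a, b\}$.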

It can be seen that and
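The four operations of Definition 4 can be sketched with plain nested lists. This is a minimal illustration; the function names and the two sample matrices are assumptions introduced here, and matrices are assumed to have compatible sizes.

```python
def leq(a, b):
    """Order relation: every entry of a is <= the matching entry of b."""
    return all(x <= y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def meet(a, b):
    """Entry-wise minimum (logical AND) of two Boolean matrices."""
    return [[x & y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def bool_product(a, b):
    """Boolean product: OR over k of (a[i][k] AND b[k][j])."""
    cols = list(zip(*b))
    return [[int(any(x & y for x, y in zip(row, col))) for col in cols]
            for row in a]


def complement(a):
    """Swap 0 and 1 in every entry."""
    return [[1 - x for x in row] for row in a]


A = [[1, 0], [1, 1]]
B = [[0, 1], [1, 0]]
print(meet(A, B), bool_product(A, B), complement(A))
```

The meet of two matrices is always below each of them in the order relation, which is the property the later consistency tests rely on.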

Definition 5

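([33]). Let $U = \{x_1, x_2, \dots, x_n\}$ and $X \subseteq U$. The characteristic function of $X$ is defined as the Boolean vector $\lambda_X = (\lambda_1, \lambda_2, \dots, \lambda_n)^{T}$, where $\lambda_i = 1$ if $x_i \in X$ and $\lambda_i = 0$ otherwise.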

It can be seen that

Definition 6

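([33]). Let $(U, R)$ be an approximation space with $U = \{x_1, x_2, \dots, x_n\}$. The relation matrix of $R$ is defined as $M_R = (m_{ij})_{n \times n}$, where $m_{ij} = 1$ if $(x_i, x_j) \in R$ and $m_{ij} = 0$ otherwise.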

Obviously, by Definitions 5 and 6, we have
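To make Definitions 5 and 6 concrete, the sketch below builds the characteristic vector of a subset and the relation matrix of the equivalence relation "has the same attribute value". The helper names and the four-object toy universe are hypothetical.

```python
def characteristic_vector(universe, subset):
    """Entry i is 1 exactly when the i-th object belongs to the subset."""
    return [int(x in subset) for x in universe]


def relation_matrix(values):
    """values[i] describes object i; entry (i, j) is 1 when the descriptions agree."""
    n = len(values)
    return [[int(values[i] == values[j]) for j in range(n)] for i in range(n)]


universe = ["x1", "x2", "x3", "x4"]
values = ["a", "a", "b", "a"]          # one attribute value per object
M = relation_matrix(values)
lam = characteristic_vector(universe, {"x1", "x3"})
print(M, lam)
```

Because the relation is an equivalence relation, the resulting matrix is symmetric with ones on the diagonal.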

Theorem 1

([30]). Let be an approximate space, , and , then

Proof.

Let , , .

According to the symmetry of matrix and Definition 6, we have and . Therefore . Similarly, . Therefore

By , we have □

Example 1.

Let

Therefore, .
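The matrix form of the approximations can be sketched as follows. Since the formulas of Theorem 1 are not legible in this text, the sketch assumes the standard Boolean-matrix characterization: the upper approximation is the Boolean product of the relation matrix with the characteristic vector, and the lower approximation is the complement of the product with the complemented vector. The names `bool_matvec`, `upper_vec` and `lower_vec` and the data are illustrative.

```python
def bool_matvec(m, v):
    """Boolean product of a matrix with a column vector."""
    return [int(any(a & b for a, b in zip(row, v))) for row in m]


def upper_vec(m, x):
    """Characteristic vector of the upper approximation (assumed form M * x)."""
    return bool_matvec(m, x)


def lower_vec(m, x):
    """Characteristic vector of the lower approximation (assumed form ~(M * ~x))."""
    not_x = [1 - b for b in x]
    return [1 - b for b in bool_matvec(m, not_x)]


# Hypothetical classes {x1, x2}, {x3}, {x4} and the target set X = {x1, x3}.
M = [[1, 1, 0, 0],
     [1, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1]]
X = [1, 0, 1, 0]
print(lower_vec(M, X), upper_vec(M, X))
```

The result agrees with the set-based definition: only the class {x3} lies inside X, while the classes {x1, x2} and {x3} both meet X.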

Theorem 2

([29]). Let U be a universe, are equivalence relations on U, then the following conclusions hold:

(1) ;

(2) If , then

Let be a decision information system, . The relation matrix of the equivalence relation is denoted as , and the relation matrix corresponding to is denoted as .

Theorem 3.

Let be an decision information system, , then , where

and , where

Proof.

By Definition 2, , by Definition 6, we have . Similarly □

Theorem 4.

Let be a decision information system, then the following conclusions are obtained:

(1) S is locally consistent for if and only if holds;

(2) S is globally consistent if and only if holds.

Proof.

By Definition 5, S is locally consistent for ;

By Theorem 2, we have S is globally consistent . □

Example 2.

Determine whether the decision system in Table 1 is globally consistent.

Table 1.

A decision system.

is the relational matrix corresponding to A, is the relational matrix corresponding to d. According to Theorem 3, by calculation

Obviously , so by Theorem 4 the system is globally consistent.
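The consistency tests of Theorem 4 can be sketched as matrix comparisons. The sketch assumes that global consistency means the condition relation matrix is entry-wise below the decision relation matrix, and that local consistency for an object means the same comparison restricted to that object's row. The entries of Table 1 are not available in this text, so the data below is a hypothetical table.

```python
def relation_matrix(rows):
    """Entry (i, j) is 1 when objects i and j have identical descriptions."""
    n = len(rows)
    return [[int(rows[i] == rows[j]) for j in range(n)] for i in range(n)]


def globally_consistent(m_a, m_d):
    """Assumed test: every entry of m_a is <= the matching entry of m_d."""
    return all(a <= d for ra, rd in zip(m_a, m_d) for a, d in zip(ra, rd))


def locally_consistent(m_a, m_d, i):
    """Assumed test: row i of m_a is <= row i of m_d."""
    return all(a <= d for a, d in zip(m_a[i], m_d[i]))


# Hypothetical condition attribute values for four objects.
cond = [("1", "0"), ("1", "0"), ("0", "1"), ("0", "0")]
m_a = relation_matrix(cond)
m_d_ok = relation_matrix(["Y", "Y", "N", "N"])
m_d_bad = relation_matrix(["Y", "N", "N", "N"])
print(globally_consistent(m_a, m_d_ok), globally_consistent(m_a, m_d_bad))
```

With the second decision column, the first two objects share a description but not a decision, so the system is globally inconsistent while remaining locally consistent for the third object.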

3. Relational Matrix in Generalized Multi-Scale Information Systems

The theoretical model of multi-scale decision information systems was first proposed by Wu and Leung [21] and has since been studied by many scholars [21,22,23,24,25,26,27,38,39,40,41]; Li and Hu generalized the model in [27]. Among these studies, optimal scale selection is an important subject. Wu, Li et al. [29,38,40] studied the optimal scale selection of multi-scale decision information systems. Gu et al. [39] studied the optimal scale selection of incomplete multi-scale decision information systems. Li and Hu [27] studied the optimal scale selection for generalized multi-scale decision information systems. Li et al. [26] gave an attribute significance for generalized multi-scale decision information systems to search for the optimal scale. Decision information system models involve a large number of set operations, and the matrix is a powerful computational tool for them. Using matrices to study multi-scale information systems helps to improve algorithm efficiency and to further develop the theory of multi-scale information tables. In this section, we introduce the relation matrix into multi-scale information systems to prepare for optimal scale selection.

Definition 7

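([21,27]). A multi-scale information system is a tuple $S = (U, A)$, where $U = \{x_1, x_2, \dots, x_n\}$ is a nonempty and finite set of objects called the universe, $A = \{a_1, a_2, \dots, a_m\}$ is a nonempty and finite set of attributes, and each $a_j \in A$ has $I_j$ scales. Then a multi-scale information system can be represented as

$$S = (U, \{a_j^k \mid k = 1, 2, \dots, I_j,\ j = 1, 2, \dots, m\}),$$

where $a_j^k : U \to V_j^k$ is a surjective function and $V_j^k$ is the domain of $a_j$ corresponding to the $k$th scale. Furthermore, for any $1 \le k \le I_j - 1$, there exists a surjective function $g_j^{k,k+1} : V_j^k \to V_j^{k+1}$ such that $a_j^{k+1} = g_j^{k,k+1} \circ a_j^k$, i.e., $a_j^{k+1}(x) = g_j^{k,k+1}(a_j^k(x))$ for $x \in U$; $g_j^{k,k+1}$ is called the information granularity transformation function.

When $I_1 = I_2 = \dots = I_m$, the information system defined above is the multi-scale information system proposed by Wu and Leung in [21].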

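The equivalence relation determined by attribute $a_j^k$ is

$$R_{a_j^k} = \{(x, y) \in U \times U : a_j^k(x) = a_j^k(y)\}.$$

By the existence of the surjective function $g_j^{k,k+1}$, for any $1 \le k \le I_j - 1$ we have

$$R_{a_j^k} \subseteq R_{a_j^{k+1}}.$$

For a multi-scale information system $S$, if each attribute $a_j$ is restricted to its $k_j$th scale, we call $K = (k_1, k_2, \dots, k_m)$ a scale combination of the system $S$. The set of all scale combinations of $S$ is denoted as ∑, and ∑ forms a partially ordered lattice [27].

The information system corresponding to the scale combination $K$ is denoted as

$$S^K = (U, A^K) = (U, \{a_1^{k_1}, a_2^{k_2}, \dots, a_m^{k_m}\}).$$

The equivalence relation induced by $A^K$ is denoted as $R_{A^K}$, while the relation matrix of $R_{A^K}$ is denoted as $M_{A^K}$.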

Definition 8

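([27]). Let $S$ be a multi-scale information system and $K = (k_1, k_2, \dots, k_m)$, $L = (l_1, l_2, \dots, l_m) \in$ ∑. If $k_j \le l_j$ for all $j$, we say that the scale combination K is finer than L, denoted as $K \preceq L$. If, in addition, there is at least one $j$ such that $k_j < l_j$, we say that K is strictly finer than L, denoted as $K \prec L$.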

Example 3.

The following example gives a comprehensive evaluation of three courses for eight students, and scores are divided into four criteria, as shown in Table 2.

Table 2.

Evaluation criteria.

We use attribute to evaluate the results of the first course, which is divided into four levels, attribute for the second course, which is divided into three levels, and attribute for the third course, which is divided into two levels. Attribute d is called the decision attribute, where 1 means qualified and 0 means unqualified. According to the scores of each student, the multi-scale decision information system shown in Table 3 is obtained. To simplify the notation below, "E" denotes excellent, "G" denotes good, "F" denotes fair, "P" denotes pass, "B" denotes bad, "S" denotes super, "M" denotes middle, "L" denotes low, "Y" denotes yes, and "N" denotes no.

Table 3.

A generalized multi-scale information system.

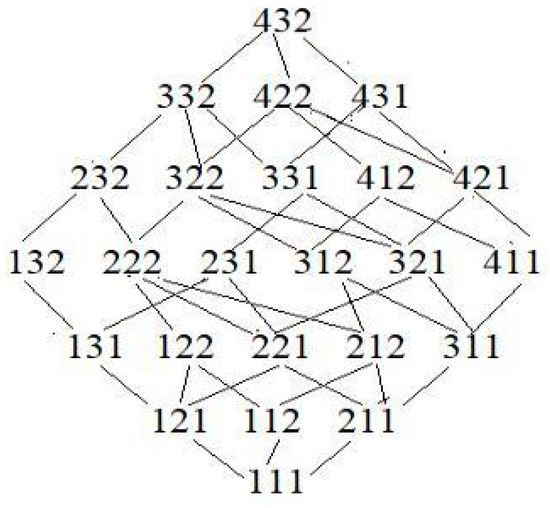

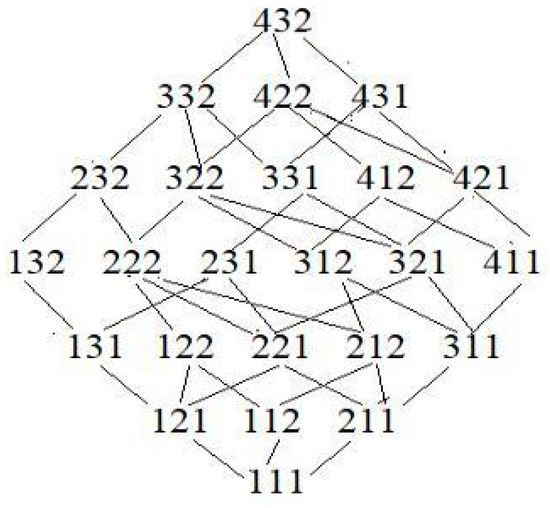

There are 24 different scale combinations in the multi-scale information system in Table 3. According to the scale relation in Definition 8, we obtain a partially ordered lattice [27], as shown in Figure 1.

Figure 1.

Partially ordered lattice of scale combinations.
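The count of 24 scale combinations and the ordering between them can be checked with a short sketch. It assumes, in line with the examples in this paper, that scale 1 is the finest level of each attribute and that "finer" means component-wise less than or equal. The function names are illustrative.

```python
from itertools import product


def scale_combinations(levels):
    """All scale combinations for attributes with the given numbers of scales."""
    return list(product(*(range(1, n + 1) for n in levels)))


def finer(k, l):
    """True when K is finer than (or equal to) L, component by component."""
    return all(a <= b for a, b in zip(k, l))


def direct_finer(k):
    """Scale combinations obtained by refining exactly one attribute by one level."""
    return [k[:j] + (k[j] - 1,) + k[j + 1:] for j in range(len(k)) if k[j] > 1]


combos = scale_combinations((4, 3, 2))   # three attributes with 4, 3 and 2 scales
print(len(combos), direct_finer((4, 3, 2)))
```

The function `direct_finer` generates the next layer of the lattice below a given node, which is the step a top-down search through Figure 1 repeats.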

We now discuss some properties of multi-scale information systems using the relation matrix.

Let be a multi-scale information system and ∑ the set of all scale combinations for the system. The decision information system corresponding to the scale combination K is , and the relation matrix of is denoted as .

Theorem 5.

Let be a multi-scale information system, then , where

Proof.

This conclusion can be obtained from Theorem 3. □

Theorem 6.

Let be a multi-scale information system and ∑ is the set of all scale combinations for the system, . Then the following conclusions hold:

(1) For any attribute ;

(2) ;

(3) ;

(4) ;

(5) ;

(6) ;

(7) .

Proof.

(1) By Definition 7, as a result of , according to Theorem 2, we have ;

(2) ;

(3) Suppose , then , , by Theorem 2, we have ;

(4), (5) These can be directly obtained from Theorem 1;

(6) ;

(7) . □

4. Optimal Scale Selection for Consistent Multi-Scale Decision Information Systems

Definition 9

([21,27]). Let be a multi-scale decision information system, where is a multi-scale information system, ∑ is the collection of all scale combinations, is a decision attribute and is a surjective map, where is called the domain of d.

4.1. Global Optimal Scale

The decision information system corresponding to the scale combination is denoted as

Obviously, is globally consistent if and only if

The relation matrix corresponding to the equivalent relation is , the relation matrix corresponding to the equivalent relation is .

By Theorem 2, is globally consistent if and only if .

Definition 10

([21]). Let be a multi-scale decision information system and ∑ the collection of all scale combinations. For the finest scale combination , if , then we call S consistent. If there is a such that , but for any , , does not hold, we call K a global optimal scale combination.

Theorem 7.

Let be a multi-scale decision information system, and ∑ the collection of all scale combinations. Then the following conclusions hold:

(1) S is globally consistent if and only if ;

(2) S is globally consistent if and only if ;

(3) A scale combination is the global optimal scale combination if and only if holds, and for any with , does not hold;

(4) Suppose let , then .

Proof.

(1) S is globally consistent ;

(2) The result is obvious;

(3) This follows directly from the definition of the global optimal scale combination;

(4) As a result of , we have , Therefore, □

Definition 11.

Let be a multi-scale decision information system and ∑ is the collection of all scale combinations, . Define the significance of scale combination K as

where represents the number of 1s in the matrix A.

Let be a multi-scale decision information system, and ∑ is the collection of all scale combinations. By Definition 7, the decision information system corresponding to scale combination K is represented as . Obviously, is globally consistent if .

For the scale combination , if the decision information system is globally inconsistent, then is not true, i.e., . The direct successor scale combinations of K are denoted as . The global consistency of the corresponding decision information systems is compared, i.e., the following significance is calculated as

It can be seen that the smaller the significance value is, the more significant the scale combination becomes. Such a scale combination is selected first; in other words, the information system corresponding to it is closer to being consistent. Based on this idea, a global optimal scale combination selection algorithm for a multi-scale decision system can be described as Algorithm 1.

As Algorithm 1 shows, the algorithm initializes the scale combination variable with the coarsest scale combination and the significance variable with the maximum value. The scale combination is then put into a queue and an iteration starts. In each iteration, the current scale combination K is taken from the queue and its significance is calculated. If the current significance is smaller than the stored one, the stored scale combination and significance are replaced by the current ones. If the significance becomes zero during the iteration, the iteration terminates and the optimal scale combination is returned. When the queue is empty and the significance is still nonzero, the directly finer scale combinations are constructed from the stored scale combination and put into the queue, and another iteration starts.

| Algorithm 1: Selecting the global optimal scale combination of a multi-scale decision system |

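Input: A multi-scale decision system.
Output: The global optimal scale combination.

Calculate the relation matrix of the decision attribute
Let Queue = NULL
Let the best scale combination = the coarsest scale combination   // set the coarsest scale combination initially
Let the best significance = Integer.MAX_VALUE   // set the maximum value initially
Queue.put(the best scale combination)
While (true)
    While (not Queue.empty)
        Let K = Queue.get()   // pick a scale combination K from Queue
        Let the current significance = the significance of K   // calculate the significance
        If (the current significance < the best significance)   // choose the minimum and its K
            Let the best significance = the current significance
            Let the best scale combination = K
        EndIf
        If (the best significance == 0)   // if the optimal scale combination is found, return
            return the best scale combination
        EndIf
    EndWhile   // loop until Queue.empty
    Queue.clear()   // empty the Queue, and construct the next finer scale combinations
    Let K' = the best scale combination
    For each attribute   // set a finer scale to each attribute, respectively
        If (the scale of the attribute in K' is not the finest)
            Let K = K' with the scale of this attribute refined by one level
            Queue.put(K)
        EndIf
    EndFor   // next, search the finer scale combinations
EndWhile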

Example 4.

For the system of Example 3, we seek the global optimal scale combination of the system corresponding to Table 3.

By Definition 11, the sufficient condition for the decision information system to be globally consistent is , where .

We use the scale combination lattice in Figure 1 to illustrate the calculation steps of optimal scale selection for Table 3. We first judge whether the system is consistent and then, according to the definition of scale significance, look for the optimal scale combination in the lattice of Figure 1 from top to bottom.

(1) For the finest scale combination , according to Theorems 3 and 5, by calculation

By Definition 11, . So the decision information system is globally consistent and, by Definition 10, is globally consistent;

(2) For the coarsest scale combination , similarly, by calculation, ;

(3) For each scale combination belonging to (4,3,2) in the second layer, similarly, by calculation, ; therefore, the scale combination is the most significant. We continue to search for the optimal scale along this branch;

(4) For each scale combination belonging to (4,2,2) in the third layer, by calculation, ; therefore the decision information system is globally consistent and the scale combination is the global optimal scale combination.

This means that the decisions for the eight students in Table 3 can be fully determined, and (4, 2, 2) is an optimal scale combination that preserves these decisions.

4.2. Local Optimal Scale

Definition 12.

Let be a consistent multi-scale decision system and ∑ the collection of all scale combinations. For and the scale combination , if , but for any , does not hold, we call K a local optimal scale combination of S for x.

Theorem 8.

Let be a consistent multi-scale decision system, and ∑ the collection of all scale combinations. Given a scale combination , the following conclusion holds:

Definition 13.

Let be a multi-scale decision information system, and ∑ is the collection of all scale combinations, . then the significance of scale combination K for is defined as

where represents the number of 1s in the Boolean vector .

If , then , and the decision information system is locally consistent for . Based on this idea, a local optimal scale combination selection algorithm for a multi-scale decision system can be described as Algorithm 2.

The computation process of Algorithm 2 is similar to that of Algorithm 1, except that the significance is computed relative to the given object x.

Example 5.

Find the local optimal scale combination for in Table 3.

We use the scale combination lattice in Figure 1 to illustrate the calculation steps of local optimal scale selection for . By Definition 13,

(1) For the finest scale combination, by calculation, , so the system is locally consistent for ;

(2) For the coarsest scale combination, by calculation, . Hence, is locally inconsistent for ;

(3) For each scale combination belonging to (4,3,2) in the second layer, by calculation, . Hence the scale combination is the local optimal scale combination for .

| Algorithm 2: Selecting the local optimal scale combination of a multi-scale decision system |

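Input: A consistent multi-scale decision system and an object x.
Output: A local optimal scale combination for x.

Calculate the relation matrix of the decision attribute
Let Queue = NULL
Let the best scale combination = the coarsest scale combination   // set the coarsest scale combination initially
Let the best significance = Integer.MAX_VALUE   // set the maximum value initially
Queue.put(the best scale combination)
While (true)
    While (not Queue.empty)
        Let K = Queue.get()   // pick a scale combination K from Queue
        Let the current significance = the significance of K for x   // calculate the significance
        If (the current significance < the best significance)   // choose the minimum and its K
            Let the best significance = the current significance
            Let the best scale combination = K
        EndIf
        If (the best significance == 0)   // if the optimal scale combination is found, return
            return the best scale combination
        EndIf
    EndWhile   // loop until Queue.empty
    Queue.clear()   // empty the Queue, and construct the next finer scale combinations
    Let K' = the best scale combination
    For each attribute   // set a finer scale to each attribute, respectively
        If (the scale of the attribute in K' is not the finest)
            Let K = K' with the scale of this attribute refined by one level
            Queue.put(K)
        EndIf
    EndFor   // next, search the finer scale combinations
EndWhile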

5. Local Optimal Scale Selection for Inconsistent Generalized Decision Information Systems

Let be a decision information system as defined in Definition 3, where is the universe, is the set of conditional attributes, and is the decision attribute. If the decision information system S is locally consistent for , then is unique. If the decision information system is globally consistent, is unique for any . For an inconsistent decision information system, there exists such that is not unique. For this reason, Wu and Leung put forward the definition of the generalized decision in [21].

Definition 14

([21]). Let $S = (U, A \cup \{d\})$ be a decision information system. For $x \in U$, the generalized decision of object $x$ is defined as

$$\partial_A(x) = \{d(y) : y \in [x]_A\}.$$

Definition 15.

let , are matrices. Define

The following theorem is easily obtained from Definitions 14 and 15.

Theorem 9.

Let be a decision information system, Let , , represents the set of all component elements in vector , for convenience, we denote . If , then

It should be pointed out that if there exists a and , the above theorem is not applicable. In this case, we may assign another value to the attribute d for x, which does not affect the classification and decision making.

Example 6.

Find the generalized decision of each object in the inconsistent decision information system in Table 4.

Table 4.

An inconsistent decision system.

By calculation , . According to Theorem 9, we have , therefore . can be obtained by the same method.
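The generalized decision can also be computed directly from the table, which is a convenient cross-check for the matrix calculation. The sketch assumes the usual definition, in which the generalized decision of an object is the set of decision values occurring in its condition equivalence class. The entries of Table 4 are not available in this text, so the data is hypothetical.

```python
def generalized_decisions(cond_rows, decision):
    """For each object, collect the decision values of all objects
    that share its condition attribute values."""
    by_class = {}
    for row, d in zip(cond_rows, decision):
        by_class.setdefault(row, set()).add(d)
    return [by_class[row] for row in cond_rows]


# Hypothetical inconsistent table: objects 0 and 1 agree on the
# condition attributes but carry different decisions.
cond = [("1", "0"), ("1", "0"), ("0", "1")]
dec = [1, 2, 1]
result = generalized_decisions(cond, dec)
print(result)
```

An object is locally consistent exactly when its generalized decision contains a single value, as for the third object here.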

Definition 16.

Let be an inconsistent multi-scale decision information system and ∑ the collection of all scale combinations. The decision information system corresponding to the scale combination K is . If holds, we call locally generalized consistent for x. If is locally generalized consistent for x, but for any with , is locally generalized inconsistent for x, we call K the optimal local generalized consistent scale combination for x.

Theorem 10.

is a local generalized consistence for if and only if holds.

Proof.

By Theorem 9, is local consistent for

Theorem 11.

Let be an inconsistent multi-scale decision system, and ∑ is the collection of all scale combinations, . Then K is the optimal scale combination for if and only if holds. Additionally, for any

Definition 17.

Let be an inconsistent multi-scale decision system, and ∑ is the collection of all scale combinations, . Then the significance of scale combination K for is defined as

where represents the cardinality of the set .

If , then . Therefore, the decision information system is locally generalized consistent for . The computation process of Algorithm 3 is similar to Algorithm 2.

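| Algorithm 3: Selecting the local generalized optimal scale combination of an inconsistent multi-scale decision system |

Input: An inconsistent multi-scale decision system and an object x.
Output: A local generalized optimal scale combination for x.

Let the reference scale combination = the finest scale combination   // set the finest scale combination
Calculate the generalized decision of x under the reference scale combination   // corresponds to Theorem 9
Let Queue = NULL
Let the best scale combination = the coarsest scale combination   // set the coarsest scale combination initially
Let the best significance = Integer.MAX_VALUE   // set the maximum value initially
Queue.put(the best scale combination)
While (true)
    While (not Queue.empty)
        Let K = Queue.get()   // pick a scale combination K from Queue
        Calculate the generalized decision of x under K   // corresponds to Theorem 9
        Let the current significance = the significance of K for x   // calculate the significance
        If (the current significance < the best significance)   // choose the minimum and its K
            Let the best significance = the current significance
            Let the best scale combination = K
        EndIf
        If (the best significance == 0)   // if the optimal scale combination is found, return
            return the best scale combination
        EndIf
    EndWhile   // loop until Queue.empty
    Queue.clear()   // empty the Queue, and construct the next finer scale combinations
    Let K' = the best scale combination
    For each attribute   // set a finer scale to each attribute, respectively
        If (the scale of the attribute in K' is not the finest)
            Let K = K' with the scale of this attribute refined by one level
            Queue.put(K)
        EndIf
    EndFor   // next, search the finer scale combinations
EndWhile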

Example 7.

Find the generalized local optimal scale combination of the system in Table 5 for

Table 5.

A generalized inconsistent multi-scale information system.

(1) , for scale combination ,

(2) For the coarsest scale and therefore, is not the generalized local optimal scale for

(3) For each scale combination belonging to (3,3,2) in the second layer, by calculation, and hence is the generalized local optimal scale combination for .

6. Conclusions

This paper introduces relational matrices and matrix calculations into multi-scale decision information systems. Some properties of multi-scale decision information systems are discussed based on matrix methods. Using the relation matrix to introduce the significance of scale combination, the method of optimal scale combination selection is studied, and the related algorithm is designed. The effectiveness of the method is illustrated by experiments. In the future, we will use the matrix method to study the classification and decision-making methods of multi-scale information systems.

7. Experiments and Analysis

To verify whether Algorithms 1 and 2 can be applied in practice to choose the optimal scale combination in multi-scale decision information systems, several University of California Irvine (UCI) datasets are used in the experiments. Table 6 describes these datasets.

Table 6.

The UCI dataset description.

The datasets in Table 6 are single-scale decision datasets. To extend them from single-scale to multi-scale, some methods from [27] are adopted, as described below.

Firstly, at the finest level of scale of each attribute a, every object takes the value it has at attribute a in the original dataset.

Secondly, for each attribute a, the standard deviation and minimum are denoted as and , then the second level of scale of at the attribute a in multi-scale dataset is denoted:

where $\lfloor t \rfloor$ means the largest integer $v$ satisfying $v \le t$.
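The construction of the second level of scale can be sketched as below. The exact formula is not legible in this text, so the sketch assumes that each value is shifted by the attribute minimum, divided by the attribute standard deviation, and rounded down. Whether the population or the sample standard deviation is intended is also not stated; the population form (`statistics.pstdev`) is used here as an assumption, and the function name `second_scale` is illustrative.

```python
import math
import statistics


def second_scale(values):
    """Assumed second-level value: floor((v - min) / standard deviation)."""
    lo = min(values)
    sigma = statistics.pstdev(values)    # population standard deviation (assumption)
    if sigma == 0:
        return [0 for _ in values]       # constant attribute: a single class
    return [math.floor((v - lo) / sigma) for v in values]


raw = [0, 2, 4, 6]                       # hypothetical finest-scale values
coarse = second_scale(raw)
print(coarse)
```

Values that lie within one standard deviation of each other tend to fall into the same class, so the second level is coarser than the raw data.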

Thirdly, based on the previous level of scale, the next scale of attribute a can be made by merging some equivalence classes of the previous level. For example, suppose the equivalence classes of the previous level of scale are denoted , then in the next level of scale, the class and class are merged into , which is denoted by . That is, if belongs to or , then is , and the resulting equivalence classes are .

Table 7 shows the results of experiments on the datasets refined to the optimal scale combination by Algorithm 1. It presents the Support Vector Machine (SVM) classification accuracy on both the raw datasets and the refined datasets, and it indicates that Algorithm 1 works well.

Table 7.

Experimental results of Algorithm 1.

Table 7 also presents the rate of optimal, that is, the percentage of attributes with level of scale ≥2 in the given refined dataset. For example, there are seven attributes in the raw Auto_MPG dataset, and the optimal scale combination of the refined dataset obtained in the experiment is , which indicates that three of the seven attributes are optimal, so the rate of optimal is . Similarly, the average level of scale (LoS) in the refined Auto_MPG dataset is , whereas the LoS of the raw Auto_MPG dataset is 1.

To compare the local method of Algorithm 2 with the global method of Algorithm 1, at most 5% of the instances of each dataset are randomly selected, and the final optimal scale combination is the intersection of the optimal scale combinations obtained for the individual instances. For example, two instances are randomly selected in Auto_MPG, and their optimal scale combinations are [1,3,4,5,5,7,4] and [3,2,4,5,5,6,1], respectively; thus their final intersected scale combination is [1,3,4,5,5,7,4]∧[3,2,4,5,5,6,1]=[1,2,4,5,5,6,1].
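The intersection used in this example is a component-wise minimum, which can be sketched in a few lines. The function name `intersect_scales` is illustrative.

```python
def intersect_scales(combinations):
    """Component-wise minimum of several scale combinations."""
    return [min(levels) for levels in zip(*combinations)]


merged = intersect_scales([[1, 3, 4, 5, 5, 7, 4],
                           [3, 2, 4, 5, 5, 6, 1]])
print(merged)
```

Taking the minimum keeps, for every attribute, the finer of the selected levels, so the merged combination is at least as fine as the optimal combination of each sampled instance.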

Table 8 shows the SVM accuracy, rate of optimal, average LoS and time cost of Algorithm 2 compared with Algorithm 1. It demonstrates that the local algorithm can achieve the same performance as the global algorithm by choosing a small number of instances.

Table 8.

Experimental results of Algorithm 2 compared with Algorithm 1.

Author Contributions

All authors contributed equally to this work.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 11701258 and 11871259, the Program for Innovative Research Team in Science and Technology in Fujian Province University, and the Quanzhou High-Level Talents Support Plan under Grant 2017ZT012.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zadeh, L.A. Fuzzy sets and information granularity. Adv. Fuzzy Set Theory Appl. 1979, 11, 3–18. [Google Scholar]

- Pedrycz, W. Granular Computing: An Introduction; Physica-Verlag: Heidelberg, Germany, 2000; pp. 309–328. [Google Scholar] [CrossRef]

- Yao, Y.Y.; Miao, D.Q.; Xu, F.F. Granular Structures and Approximations in Rough Sets and Knowledge Spaces. In Rough Set Theory: A True Landmark in Data Analysis; Springer: Berlin/Heidelberg, Germany, 2009; pp. 71–84. [Google Scholar] [CrossRef]

- Li, J.; Ren, Y.; Mei, C.; Qian, Y.; Yang, X. A comparative study of multigranulation rough sets and concept lattices via rule acquisition. Knowl.-Based Syst. 2016, 91, 152–164. [Google Scholar] [CrossRef]

- Pal, S.K.; Ray, S.S.; Ganivada, A. Introduction to Granular Computing, Pattern Recognition and Data Mining. In Granular Neural Networks, Pattern Recognition and Bioinformatics; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–37. [Google Scholar] [CrossRef]

- Han, S.E. Roughness measures of locally finite covering rough sets. Int. J. Approx. Reason. 2019, 105, 368–385. [Google Scholar] [CrossRef]

- Liu, H.; Cocea, M. Granular computing-based approach for classification towards reduction of bias in ensemble learning. Granul. Comput. 2017, 2, 131–139. [Google Scholar] [CrossRef]

- Wang, G.; Yang, J.; Xu, J. Granular computing: From granularity optimization to multi-granularity joint problem solving. Granul. Comput. 2017, 2, 105–120. [Google Scholar] [CrossRef]

- Sun, B.Z.; Ma, W.M.; Qian, Y.H. Multigranulation fuzzy rough set over two universes and its application to decision making. Knowl.-Based Syst. 2017, 123, 61–74. [Google Scholar] [CrossRef]

- Yao, Y. Three-way decision and granular computing. Int. J. Approx. Reason. 2018, 103, 107–123. [Google Scholar] [CrossRef]

- Mo, J.; Huang, H.L. (T, S)-Based Single-Valued Neutrosophic Number Equivalence Matrix and Clustering Method. Mathematics 2019, 7, 36. [Google Scholar] [CrossRef]

- Petrosino, A.; Ferone, A. Feature Discovery through Hierarchies of Rough Fuzzy Sets. In Granular Computing and Intelligent Systems: Design with Information Granules of Higher Order and Higher Type; Pedrycz, W., Chen, S.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 57–73. [Google Scholar] [CrossRef]

- Ferone, A. Feature selection based on composition of rough sets induced by feature granulation. Int. J. Approx. Reason. 2018, 101, 276–292. [Google Scholar] [CrossRef]

- Pawlak, Z. Imprecise Categories, Approximations and Rough Sets. In Rough Sets: Theoretical Aspects of Reasoning about Data; Springer: Dordrecht, The Netherlands, 1991; pp. 9–32. [Google Scholar] [CrossRef]

- Zhu, P.F.; Hu, Q.H.; Zuo, W.M.; Yang, M. Multi-granularity distance metric learning via neighborhood granule margin maximization. Inf. Sci. 2014, 282, 321–331. [Google Scholar] [CrossRef]

- Zhu, P.F.; Hu, Q.H. Adaptive neighborhood granularity selection and combination based on margin distribution optimization. Inf. Sci. 2013, 249, 1–12. [Google Scholar] [CrossRef]

- Tan, A.H.; Wu, W.Z.; Li, J.J.; Lin, G.P. Evidence-theory-based numerical characterization of multigranulation rough sets in incomplete information systems. Fuzzy Sets Syst. 2016, 294, 18–35. [Google Scholar] [CrossRef]

- Yao, Y.; She, Y. Rough set models in multigranulation spaces. Inf. Sci. 2016, 327, 40–56.

- Lin, G.P.; Liang, J.Y.; Qian, Y.H. An information fusion approach by combining multigranulation rough sets and evidence theory. Inf. Sci. 2015, 314, 184–199.

- Yang, X.B.; Song, X.N.; Chen, Z.H.; Yang, J.Y. On multigranulation rough sets in incomplete information system. Int. J. Mach. Learn. Cybern. 2012, 3, 223–232.

- Wu, W.Z.; Leung, Y. Theory and applications of granular labelled partitions in multi-scale decision tables. Inf. Sci. 2011, 181, 3878–3897.

- Wu, W.Z.; Leung, Y. Optimal scale selection for multi-scale decision tables. Int. J. Approx. Reason. 2013, 54, 1107–1129.

- Gu, S.M.; Wu, W.Z. On knowledge acquisition in multi-scale decision systems. Int. J. Mach. Learn. Cybern. 2013, 4, 477–486.

- Wu, W.Z.; Qian, Y.; Li, T.J.; Gu, S.M. On rule acquisition in incomplete multi-scale decision tables. Inf. Sci. 2017, 378, 282–302.

- She, Y.H.; Li, J.H.; Yang, H.L. A local approach to rule induction in multi-scale decision tables. Knowl.-Based Syst. 2015, 89, 398–410.

- Li, F.; Hu, B.Q.; Wang, J. Stepwise optimal scale selection for multi-scale decision tables via attribute significance. Knowl.-Based Syst. 2017, 129, 4–16.

- Li, F.; Hu, B.Q. A new approach of optimal scale selection to multi-scale decision tables. Inf. Sci. 2017, 381, 193–208.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976.

- Guan, J.W.; Bell, D.A.; Guan, Z. Matrix computation for information systems. Inf. Sci. 2001, 131, 129–156.

- Liu, G.L. Rough Sets over the Boolean Algebras. In Rough Sets, Fuzzy Sets, Data Mining, and Granular Computing; Ślęzak, D., Wang, G., Szczuka, M., Düntsch, I., Yao, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 124–131.

- Wang, L.; Li, T.R. Matrix-Based Computational Method for Upper and Lower Approximations of Rough Sets. Pattern Recognit. Artif. Intell. 2011, 24, 756–762.

- Huang, Y.Y.; Li, T.R.; Luo, C.; Horng, S.J. Matrix-Based Rough Set Approach for Dynamic Probabilistic Set-Valued Information Systems. In Rough Sets; Springer International Publishing: Cham, Switzerland, 2016; pp. 197–206.

- Tan, A.H.; Li, J.J.; Lin, Y.J.; Lin, G.P. Matrix-based set approximations and reductions in covering decision information systems. Int. J. Approx. Reason. 2015, 59, 68–80.

- Tan, A.H.; Li, J.J.; Lin, G.P.; Lin, Y.J. Fast approach to knowledge acquisition in covering information systems using matrix operations. Knowl.-Based Syst. 2015, 79, 90–98.

- Luo, C.; Li, T.R.; Yi, Z.; Fujita, H. Matrix approach to decision-theoretic rough sets for evolving data. Knowl.-Based Syst. 2016, 99, 123–134.

- Hu, C.X.; Liu, S.X.; Liu, G.X. Matrix-based approaches for dynamic updating approximations in multigranulation rough sets. Knowl.-Based Syst. 2017, 122, 51–63.

- Karczmarek, P.; Kiersztyn, A.; Pedrycz, W. An Application of Graphic Tools and Analytic Hierarchy Process to the Description of Biometric Features. In Artificial Intelligence and Soft Computing; Rutkowski, L., Scherer, R., Korytkowski, M., Pedrycz, W., Tadeusiewicz, R., Zurada, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 137–147.

- Xu, Y.H.; Wu, W.Z.; Tan, A.H. Optimal Scale Selections in Consistent Generalized Multi-scale Decision Tables. Rough Sets 2017, 10313, 185–198.

- Gu, S.; Gu, J.; Wu, W.; Li, T.; Chen, C. Local optimal granularity selections in incomplete multi-granular decision systems. J. Comput. Res. Dev. 2017, 54, 1500–1509.

- Wu, W.Z.; Chen, C.J.; Li, T.J.; Xu, Y.H. Comparative Study on Optimal Granularities in Inconsistent Multi-granular Labeled Decision Systems. Pattern Recognit. Artif. Intell. 2016, 29, 1095–1103.

- Wu, W.Z.; Yang, L.; Tan, A.H.; Xu, Y.H. Granularity Selections in Generalized Incomplete Multi-Granular Labeled Decision Systems. J. Comput. Res. Dev. 2018, 55, 1263–1272.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).