Abstract

This study presents Galerkin and collocation algorithms based on telephone polynomials (TelPs) for solving high-order linear and non-linear ordinary differential equations (ODEs) and systems of ODEs, subject to homogeneous or nonhomogeneous initial conditions (ICs). The approach also handles partial differential equations (PDEs), with emphasis on first-order hyperbolic PDEs. The primary contribution is the use of suitable combinations of TelPs as basis functions, which significantly streamlines the numerical implementation. The convergence of the telephone expansions is studied in detail. Several illustrative examples demonstrate the applicability and efficiency of the proposed algorithms and show that they are more accurate than existing spectral approaches.

Keywords:

telephone numbers; telephone polynomials; spectral methods; partial differential equations; convergence analysis

MSC:

65-XX; 65L60; 65L05

1. Introduction

Polynomials and special functions are crucial in various fields because of their many uses in the applied sciences; see, for instance, [1,2,3]. Many theoretical publications have been devoted to different polynomial sequences. The authors of [4] surveyed several polynomial sequences and offered some practical applications of their findings. In [5], the authors investigated odd and even Lidstone-type polynomial sequences. In [6], degenerated Hermite polynomials of the Apostol type were introduced. The authors of [7] examined Sheffer polynomial sequences. Several articles have also examined certain generalized polynomials; for example, the authors of [8] introduced new classes of degenerated generalized Apostol–Bernoulli, Apostol–Euler, and Apostol–Genocchi polynomials. The use of special polynomial sequences is a significant subject in numerical analysis and in the solution of various differential equations (DEs), and a great deal of effort has been devoted to this area. For example, the authors of [9,10] used generalizations of the first- and second-kind Chebyshev polynomials to solve some DEs. Generalized Bessel polynomials and generalized Jacobi polynomials were used to treat multi-order fractional DEs in [11,12]. The authors of [13] employed a set of generalized Chebyshev polynomials to solve telegraph equations.

High-order DEs are central to numerous applications in science and engineering, including structural mechanics, fluid dynamics, and electromagnetic theory. Over the past few decades, significant advancements have been made in developing efficient numerical methods to address these complex problems. Among the most notable techniques are spectral methods, which have demonstrated superior convergence properties compared to traditional finite difference and finite element methods, especially for problems with smooth solutions [14,15,16].

Recent advances in machine learning have shown promising applications to ODEs and PDEs. Qiu et al. [17] explored novel machine learning frameworks for ODEs, Zhang et al. [18] demonstrated effective methodologies for PDEs, and Cuomo et al. [19] highlighted the evolving role of machine learning in improving computational efficiency and accuracy in this domain. Together, these contributions illustrate the rapid development of machine learning approaches to differential equations.

Besides the emerging machine learning techniques, traditional numerical methods that have been widely employed to approximate differential equations deserve mention. For instance, Runge–Kutta methods provide a robust framework for solving ODEs, with an order of accuracy that depends on the chosen scheme. Similarly, differential transformation methods have attracted attention for their efficiency and ease of implementation. These classical approaches have been explored in the literature (see, e.g., [19,20]), and the differential transformation method is among the methods used for comparison in Section 8.

Heat transfer, wave propagation, fluid dynamics, and many other areas of physics and engineering make extensive use of PDEs. Biology uses them to model population dynamics and disease transmission, and economics uses them to price options. PDEs are also vital in medical imaging. The geosciences use them for weather prediction and groundwater flow simulation, while the materials sciences employ them to study chemical reactions and diffusion. For some applications of different PDEs, see [21,22]. Several numerical techniques exist for solving PDEs. For example, the authors of [23] used a variational quantum algorithm to handle PDEs, and an inverse source problem for a linear parabolic equation was studied in [24].

Spectral methods use global basis functions, such as orthogonal polynomials or trigonometric functions, to approximate the solutions of DEs. These methods can be classified into various types (see, for example, [25,26,27,28]); the main ones are the collocation, tau, and Galerkin methods. The collocation method chooses suitable nodes in the domain and then enforces the equation at these nodes. Many authors use it because it is easy to implement and can handle various DEs. For example, the authors of [29] applied a wavelet collocation approach to the Benjamin–Bona–Mahony equation. A collocation approach based on third-kind Chebyshev polynomials was used in [30] to treat the nonlinear fractional Duffing equation. In [31], the authors applied a collocation procedure to certain high-order DEs, and the collocation method was applied to inverse heat problems in [32]. Further contributions on collocation methods can be found in [33,34,35]. The Galerkin method and its variants are also among the essential spectral methods. Its main principle is to choose basis functions that satisfy the underlying conditions. For example, the authors of [36] applied a Jacobi Galerkin algorithm to two-dimensional time-dependent PDEs. Further contributions on the Galerkin method can be found in [37,38,39,40]. The tau method, in contrast, imposes no such restriction on the choice of basis functions. In [41], the authors applied a tau approach to certain Maxwell's equations. Another tau approach was used in [42] to solve two-dimensional ocean acoustic propagation problems in an undulating seabed environment. A tau–Gegenbauer spectral approach was used in [43] to treat certain systems of fractional integro-differential equations. Further contributions can be found in [44,45,46].

Table 1 summarizes the key publications related to Galerkin’s methods for solving DEs, including the types of equations addressed, the specific Galerkin approach used, and notable findings.

Table 1.

Summary of Key Literature on Galerkin’s Approaches to Solving Differential Equations.

In 1800, Heinrich Rothe introduced the telephone numbers (TelNs) while counting the involutions of a set of n elements. The TelNs can also be interpreted as the number of ways of connecting n subscribers in a telephone system in which each subscriber may be connected to at most one other subscriber. In graph theory, the TelNs count the matchings in the complete graph with n vertices. In this paper, we generalize the TelNs to a sequence of polynomials, the TelPs, and employ these polynomials to solve some ODEs and PDEs.

In this study, we propose spectral Galerkin and collocation approaches for solving several models of DEs, using TelPs as basis functions. Suitable combinations of TelPs are constructed as basis functions for each model, and the convergence of the resulting expansions is analyzed.

The structure of this paper is organized as follows: Section 2 gives an overview of the mathematical properties of TelPs and develops an inversion formula, which is essential for the error analysis of the expansions used. Section 3 describes the Galerkin formulation for high-order linear IVPs, while Section 4 proposes a collocation algorithm for non-linear IVPs. Section 5 extends the method to systems of DEs, and Section 6 discusses its application to hyperbolic PDEs. Section 7 presents a comprehensive investigation of convergence and error analysis. Numerical results in Section 8 validate the efficiency and accuracy of the presented methods, and Section 9 gives concluding remarks and future research directions.

2. An Overview of TelNs and Their Corresponding Polynomials

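In mathematics, the involution numbers, also called TelNs, form a sequence of integers that counts the possible ways of connecting n individuals through direct, pairwise telephone calls. These numbers can be generated using the following recursive formula [53]:

$$T_n = T_{n-1} + (n-1)\,T_{n-2}, \qquad n \ge 2, \qquad T_0 = T_1 = 1.$$

These numbers can be represented explicitly as

$$T_n = \sum_{k=0}^{\lfloor n/2 \rfloor} \frac{n!}{2^k\, k!\, (n-2k)!}.$$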

Now, we define a sequence of polynomials that generalizes the telephone numbers. We define the TelP of degree n as follows:

From Formula (2), it can be verified that the recurrence relation given by

is satisfied by the TelPs defined in (2). The following theorem gives the inversion formula of Formula (2).
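The TelNs and a polynomial family that generalizes them can be generated in a few lines. The following Python sketch computes the TelNs both from the standard recurrence T_n = T_{n-1} + (n-1)T_{n-2}, T_0 = T_1 = 1, and from the closed form T_n = Σ_k n!/(2^k k!(n-2k)!). For the polynomials, it assumes the monic normalization T_0(z) = 1, T_1(z) = z, T_n(z) = z T_{n-1}(z) + (n-1) T_{n-2}(z), chosen here so that T_n(1) reproduces the TelNs; this normalization is an assumption and may differ from (2). All function names are hypothetical.

```python
from math import factorial

import numpy as np
from numpy.polynomial import polynomial as P


def telephone_numbers(n_max):
    """TelNs T_0..T_{n_max} from T_n = T_{n-1} + (n-1) T_{n-2}, T_0 = T_1 = 1."""
    t = [1, 1]
    for n in range(2, n_max + 1):
        t.append(t[n - 1] + (n - 1) * t[n - 2])
    return t[: n_max + 1]


def telephone_explicit(n):
    """Closed form T_n = sum_k n! / (2^k k! (n-2k)!); every term is an integer."""
    return sum(factorial(n) // (2**k * factorial(k) * factorial(n - 2 * k))
               for k in range(n // 2 + 1))


def telephone_polys(N):
    """Ascending power-basis coefficients of T_0(z), ..., T_N(z).

    ASSUMED normalization (illustrative only): T_0 = 1, T_1 = z and
    T_n(z) = z T_{n-1}(z) + (n - 1) T_{n-2}(z), so that T_n(1) is the n-th TelN.
    """
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


print(telephone_numbers(9))   # [1, 1, 2, 4, 10, 26, 76, 232, 764, 2620]
T = telephone_polys(5)
print(T[4])                   # coefficients of T_4(z) = 3 + 6 z^2 + z^4, lowest degree first
print([float(P.polyval(1.0, t)) for t in T])   # [1.0, 1.0, 2.0, 4.0, 10.0, 26.0]
```

Keeping the coefficients in the ascending power basis lets the later sketches differentiate and evaluate the polynomials with `numpy.polynomial.polynomial` routines.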

Theorem 1.

For every non-negative integer m, the following formula holds:

where $(a)_k$ is the Pochhammer function, defined as $(a)_k = \Gamma(a+k)/\Gamma(a)$.

Proof.

We proceed by induction on m. The formula is readily verified for the initial values of m. Assume that Formula (4) holds; we must prove the validity of the following identity:

Now, making use of the inductive hypothesis, we can write

Making use of the recurrence relation (3) in the following form:

the following formula can be obtained:

Some algebraic computations lead to Formula (5). □
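An inversion formula of this type can be checked mechanically. The following Python sketch assumes the monic normalization T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n-1) T_{n-2} (an assumption, not confirmed by the source). For that normalization one has z^m = Σ_{r=0}^{⌊m/2⌋} (-1)^r (-m)_{2r} / (2^r r!) T_{m-2r}(z), where (-m)_{2r} = m!/(m-2r)!. The code rebuilds z^m from the polynomials with exact integer arithmetic, so it serves as a consistency check for the assumed definition rather than a restatement of (4). Function names are hypothetical.

```python
from math import factorial


def telephone_polys_int(N):
    """Integer coefficient lists (lowest degree first) of T_0..T_N, ASSUMING
    T_0 = 1, T_1 = z and T_n = z T_{n-1} + (n - 1) T_{n-2}."""
    polys = [[1], [0, 1]]
    for n in range(2, N + 1):
        shifted = [0] + polys[n - 1]                               # z * T_{n-1}
        prev = polys[n - 2] + [0] * (n + 1 - len(polys[n - 2]))   # pad T_{n-2}
        polys.append([a + (n - 1) * b for a, b in zip(shifted, prev)])
    return polys[: N + 1]


def pochhammer(a, k):
    """Rising factorial (a)_k = a (a + 1) ... (a + k - 1)."""
    out = 1
    for i in range(k):
        out *= a + i
    return out


def monomial_from_telephone(m, T):
    """Coefficients of sum_r (-1)^r (-m)_{2r} / (2^r r!) T_{m-2r}(z), which should equal z^m."""
    acc = [0] * (m + 1)
    for r in range(m // 2 + 1):
        num = (-1) ** r * pochhammer(-m, 2 * r)
        den = 2**r * factorial(r)
        assert num % den == 0          # the weights are integers
        weight = num // den
        for i, c in enumerate(T[m - 2 * r]):
            acc[i] += weight * c
    return acc


T = telephone_polys_int(12)
print(monomial_from_telephone(4, T))   # [0, 0, 0, 0, 1], i.e. z^4
```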

Remark 1.

3. Treatment of High-Order Initial Value Problems

In this section, we focus on applying the Telephone Galerkin method (TGM) to solve linear higher-order ODEs, both with homogeneous and non-homogeneous ICs.

3.1. Homogeneous ICs

We are interested in handling the following linear higher-order ODEs:

controlled by the following homogeneous ICs:

where are constants. If we define the following spaces:

then, the TGM approximation to Equations (11) and (12) is to find such that

where the scalar inner product in the space is . To meet the homogeneous ICs, we choose suitable basis functions of the form

Now, if we consider the following approximate solution for (11) and (12):

then, the Galerkin formulation for (11) and (12) is given by

Let us denote

Then, (17) can be written equivalently in the following form:

in which the vector of unknowns is . The following theorem presents the nonzero elements of the matrices and .

Theorem 2.

Consider the basis functions in (15). Setting and . The nonzero elements of the two matrices and can be written, respectively, in the following explicit forms:

Proof.

The basis functions are chosen such that the ICs (12) are satisfied. Now, we prove (21). Using (15), we have

By differentiating both sides of (22) l times with respect to z, we obtain

and making use of the scalar inner product enables one to obtain

which leads to the following formula:

which proves (21).
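To make the Galerkin steps concrete, the following Python sketch applies them to a hypothetical test problem, u'' + u = -z on [0, 1] with u(0) = u'(0) = 0, whose exact solution is u = sin z - z. It assumes the monic TelP normalization T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n-1) T_{n-2}, uses the hypothetical basis φ_i(z) = z² T_i(z) (which satisfies both homogeneous ICs by construction), the L² inner product on [0, 1], and Gauss–Legendre quadrature for the integrals. It is a sketch of the technique, not the basis (15) or the closed-form entries of Theorem 2.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def telephone_polys(N):
    # ASSUMED normalization: T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


def galerkin_ivp(N, q=40):
    """Galerkin solution of the HYPOTHETICAL test problem
    u'' + u = -z on [0, 1], u(0) = u'(0) = 0 (exact: u = sin z - z)."""
    T = telephone_polys(N)
    # Hypothetical basis phi_i(z) = z^2 T_i(z): phi_i(0) = phi_i'(0) = 0
    phi = [P.polymul([0.0, 0.0, 1.0], t) for t in T]
    x, w = np.polynomial.legendre.leggauss(q)
    z, w = (x + 1) / 2, w / 2                       # Gauss-Legendre rule mapped to [0, 1]
    V = np.array([P.polyval(z, p) for p in phi])    # V[i, n] = phi_i(z_n)
    LV = np.array([P.polyval(z, P.polyadd(P.polyder(p, 2), p)) for p in phi])
    A = (V * w) @ LV.T      # A[k, i] = (phi_i'' + phi_i, phi_k)
    b = (V * w) @ (-z)      # b[k] = (f, phi_k) with f(z) = -z
    c = np.linalg.solve(A, b)
    return lambda s: sum(ci * P.polyval(s, p) for ci, p in zip(c, phi))


u = galerkin_ivp(6)
zs = np.linspace(0.0, 1.0, 101)
print(np.max(np.abs(u(zs) - (np.sin(zs) - zs))))   # maximum absolute error on a grid
```

Building the ICs into the basis is what removes any separate boundary rows from the linear system; only the weak form of the equation remains.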

3.2. Treatment of Nonhomogeneous ICs

In this section, we show how to deal with Equation (11) governed by the following non-homogeneous ICs:

where are real constants. In such a case, we make use of the transformation

It follows that Equation (11), governed by the above non-homogeneous ICs, turns into the following equation:

governed by the following homogeneous conditions:

where
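A common way to realize such a transformation, assumed here for illustration, is to subtract the Taylor polynomial p(z) = Σ_{k=0}^{n-1} α_k z^k / k! built from the initial data α_k = u^{(k)}(0). Then v = u - p has homogeneous ICs and satisfies L v = f - L p. The Python sketch below does this for a constant-coefficient operator L u = Σ_j γ_j u^{(j)}; the operator form and all names are illustrative assumptions.

```python
from functools import reduce
from math import factorial

import numpy as np
from numpy.polynomial import polynomial as P


def ic_lift(alphas):
    """p(z) = sum_k alpha_k z^k / k!, so that p^{(k)}(0) = alpha_k."""
    return np.array([a / factorial(k) for k, a in enumerate(alphas)], dtype=float)


def lifted_problem(f, gammas, alphas):
    """For L u = sum_j gammas[j] u^{(j)} = f with u^{(k)}(0) = alphas[k],
    write u = v + p; return p and the modified right-hand side g = f - L p,
    so that L v = g with homogeneous ICs for v."""
    p = ic_lift(alphas)
    Lp = reduce(P.polyadd, [gamma * P.polyder(p, j) for j, gamma in enumerate(gammas)])
    return p, (lambda z: f(z) - P.polyval(z, Lp))


# u'' + u = 0, u(0) = 1, u'(0) = 2  ->  p(z) = 1 + 2z and g(z) = -(1 + 2z)
p, g = lifted_problem(lambda z: 0.0 * z, gammas=[1.0, 0.0, 1.0], alphas=[1.0, 2.0])
print(p, g(0.5))
```

For this example the exact solution is u = cos z + 2 sin z, so v = cos z + 2 sin z - 1 - 2z indeed satisfies v'' + v = -(1 + 2z) with v(0) = v'(0) = 0.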

4. Treatment of the High-Order Non-Linear IVPs

This section is devoted to the numerical treatment of high-order non-linear IVPs, using the standard collocation method together with the operational matrix of derivatives of the TelPs.

4.1. The Operational Matrix of Derivatives of the TelPs

Here, we establish the operational matrix of derivatives of the TelPs that will be used to solve the non-linear IVPs. The following theorem will be the key to developing the operational matrix.

Theorem 3.

The first derivative of is given by

Proof.

Based on the power form representation in (2), we can write

If we insert the inversion Formula (4) into (31), then the following formula can be obtained:

which can be written alternatively as

Using the following identity:

Formula (33) reduces to the following simplified formula:

This ends the proof. □

If we consider the following vector:

then it is easy to see that

where S is the operational matrix of derivatives with the following entries:

For example, the matrix S for is
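The matrix S can also be computed numerically from power-basis coefficients: if C holds the coefficients of T_0, ..., T_N row by row and D those of their derivatives, then D = S C, so S = D C^{-1}. The Python sketch below assumes the monic normalization T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n-1) T_{n-2} (an assumption). For that normalization, the generating function e^{zt + t²/2} gives T_n' = n T_{n-1}, so S has the entries 1, 2, ..., N on its subdiagonal and zeros elsewhere. If the definition in (2) differs, the same routine applies but S changes. Function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def telephone_polys(N):
    # ASSUMED normalization: T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


def derivative_matrix(N):
    """S with d/dz [T_0, ..., T_N]^T = S [T_0, ..., T_N]^T, computed numerically."""
    T = telephone_polys(N)
    C = np.zeros((N + 1, N + 1))      # row n: power coefficients of T_n
    D = np.zeros((N + 1, N + 1))      # row n: power coefficients of T_n'
    for n, t in enumerate(T):
        C[n, : len(t)] = t
        dt = P.polyder(t)
        D[n, : len(dt)] = dt
    # D = S C  =>  S = D C^{-1}; C is lower triangular with a unit diagonal
    return np.linalg.solve(C.T, D.T).T


print(np.round(derivative_matrix(4), 12))
```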

4.2. Treatment of the High-Order Non-Linear IVPs

We aim to solve the following nonlinear IVPs numerically:

governed by the following ICs:

Now, assume a polynomial approximation in terms of the TelPs as

which can be expressed as

where

and is defined in (34).

Now, using the operational matrix S, the ℓth derivative of can be expressed in the following form:

and accordingly, the residual of Equation (36) has the following form:

Now, to apply the collocation method to Equation (36), we require the residual to vanish at suitably selected points . We choose them to be the distinct zeros of the shifted Legendre polynomial on . In this case, we have

In addition, the ICs in (37) lead to the following n equations:

Merging the equations in (43) and (44) yields a system of non-linear algebraic equations in the unknown expansion coefficients, which can be solved using a suitable numerical algorithm such as Newton's iterative method; the approximate solution then follows.
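The collocation steps can be sketched in Python for a hypothetical first-order test problem, u' + u² = 0 on [0, 1] with u(0) = 1, whose exact solution is u = 1/(1 + z). With N + 1 unknown coefficients, the residual is enforced at the N zeros of the shifted Legendre polynomial of degree N, the IC supplies the remaining equation, and Newton's method with an analytic Jacobian solves the resulting non-linear system. The TelP normalization T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n-1) T_{n-2} is an assumption, and the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def telephone_polys(N):
    # ASSUMED normalization: T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


def collocation_ivp(N, tol=1e-13, max_iter=50):
    """Collocation + Newton for the HYPOTHETICAL test problem
    u' + u^2 = 0 on [0, 1], u(0) = 1 (exact: u = 1 / (1 + z))."""
    T = telephone_polys(N)
    zk = (np.polynomial.legendre.leggauss(N)[0] + 1) / 2    # zeros of the shifted Legendre poly
    V = np.array([P.polyval(zk, t) for t in T]).T            # V[k, j] = T_j(z_k)
    dV = np.array([P.polyval(zk, P.polyder(t)) for t in T]).T
    T0 = np.array([P.polyval(0.0, t) for t in T])            # T_j(0), used by the IC row
    c = np.zeros(N + 1)
    c[0] = 1.0                                               # initial guess u = T_0 = 1
    for _ in range(max_iter):
        u = V @ c
        R = np.append(dV @ c + u**2, T0 @ c - 1.0)           # N residuals + 1 IC
        J = np.vstack([dV + 2.0 * u[:, None] * V, T0])       # Jacobian dR/dc
        dc = np.linalg.solve(J, -R)
        c += dc
        if np.linalg.norm(dc) < tol:
            break
    return lambda s: sum(cj * P.polyval(s, t) for cj, t in zip(c, T))


u = collocation_ivp(12)
zs = np.linspace(0.0, 1.0, 101)
print(np.max(np.abs(u(zs) - 1.0 / (1.0 + zs))))
```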

5. Numerical Treatment of a System of Initial Value Problems

In this section, we present another application of the TGM to solve a system of higher-order DEs with homogeneous ICs. Consider the following system of higher-order DEs:

subject to the following homogeneous ICs:

As in Section 3.1, we have to find , such that

Now, if we approximate , as

where is defined as in (15). Now the application of the Galerkin method leads to

One can use any appropriate numerical solver to solve the algebraic system in the variables , where .
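For a coupled system, the Galerkin matrices assemble into blocks. The Python sketch below treats a hypothetical first-order system, u' - v = 0 and v' + u = 1 on [0, 1] with u(0) = v(0) = 0, whose exact solution is u = 1 - cos z, v = sin z. It uses the hypothetical basis φ_i(z) = z T_i(z) for both unknowns, the assumed normalization T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n-1) T_{n-2}, and Gauss–Legendre quadrature; all names are illustrative.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def telephone_polys(N):
    # ASSUMED normalization: T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


def solve_system(N, q=40):
    """Galerkin solution of the HYPOTHETICAL system u' - v = 0, v' + u = 1,
    u(0) = v(0) = 0 on [0, 1] (exact: u = 1 - cos z, v = sin z)."""
    T = telephone_polys(N)
    phi = [P.polymulx(t) for t in T]                 # hypothetical basis: phi_i(0) = 0
    x, w = np.polynomial.legendre.leggauss(q)
    z, w = (x + 1) / 2, w / 2                        # Gauss-Legendre rule on [0, 1]
    V = np.array([P.polyval(z, p) for p in phi])                 # phi_i at the nodes
    dV = np.array([P.polyval(z, P.polyder(p)) for p in phi])     # phi_i' at the nodes
    M = (V * w) @ V.T       # M[k, i] = (phi_i, phi_k)
    D = (V * w) @ dV.T      # D[k, i] = (phi_i', phi_k)
    A = np.block([[D, -M], [M, D]])                  # unknowns: [a (for u), b (for v)]
    rhs = np.concatenate([np.zeros(N + 1), (V * w) @ np.ones_like(z)])
    coef = np.linalg.solve(A, rhs)
    a, b = coef[: N + 1], coef[N + 1 :]
    u = lambda s: sum(ai * P.polyval(s, p) for ai, p in zip(a, phi))
    v = lambda s: sum(bi * P.polyval(s, p) for bi, p in zip(b, phi))
    return u, v


u, v = solve_system(8)
zs = np.linspace(0.0, 1.0, 101)
print(np.max(np.abs(u(zs) - (1 - np.cos(zs)))), np.max(np.abs(v(zs) - np.sin(zs))))
```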

6. Numerical Treatment of First-Order Hyperbolic PDEs

In this section, we focus on applying the TGM to solve one-dimensional first-order hyperbolic PDEs governed by homogeneous and non-homogeneous ICs.

6.1. The Problem Governed by Homogeneous ICs

Consider the following one-dimensional hyperbolic PDE [54]:

subject to the following conditions:

where and are constants, and is the source function. We choose appropriate basis functions to apply the Galerkin method to (51)–(53). The TGM for (51) is to find such that

where

Now, we choose combinations of TelPs to act as basis functions. More precisely, we choose the two following sets of basis functions:

These choices allow us to convert (54) into

Let us denote

and

The proposed approximation can be written in the form

and is represented as

where

In tensor product notation, Equation (59) can be represented as

where can be represented in the form

and and are matrices,

their elements and are obtained, respectively, from

where the nonzero elements of the matrices and are given explicitly by

Remark 2.

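The Kronecker product of the $m \times n$ matrix $A = (a_{ij})$ and the $p \times q$ matrix $B$

is the $mp \times nq$ matrix having the following block form:

$$A \otimes B = \begin{pmatrix} a_{11}B & a_{12}B & \cdots & a_{1n}B \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1}B & a_{m2}B & \cdots & a_{mn}B \end{pmatrix}.$$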

Finally, the differential equation together with its underlying conditions is converted into a linear system of algebraic equations in the unknown expansion coefficients, which can be solved efficiently by Gaussian elimination.
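The tensor-product assembly can be sketched for a hypothetical model problem u_t + u_x = f on [0, 1]² with u(x, 0) = u(0, t) = 0, a special case chosen for illustration and not necessarily the exact form of (51). With u = Σ C_ij φ_i(x) φ_j(t), testing against φ_k(x) φ_l(t) gives the matrix equation M C Dᵀ + D C Mᵀ = F, and the column-major identity vec(A X Bᵀ) = (B ⊗ A) vec(X) turns it into a single linear system with matrix D ⊗ M + M ⊗ D, solved here with `numpy.linalg.solve`. The basis φ_i(s) = s T_i(s), the assumed normalization of T_i, and the test solution u = sin x sin t (for which f = sin(x + t)) are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def telephone_polys(N):
    # ASSUMED normalization: T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}
    polys = [np.array([1.0]), np.array([0.0, 1.0])]
    for n in range(2, N + 1):
        polys.append(P.polyadd(P.polymulx(polys[n - 1]), (n - 1) * polys[n - 2]))
    return polys[: N + 1]


def solve_transport(N, f, q=30):
    """Galerkin solution of the HYPOTHETICAL model u_t + u_x = f on [0, 1]^2
    with u(x, 0) = u(0, t) = 0, using phi_i(s) = s T_i(s) in both x and t."""
    phi = [P.polymulx(t) for t in telephone_polys(N)]
    s, w = np.polynomial.legendre.leggauss(q)
    s, w = (s + 1) / 2, w / 2                          # Gauss-Legendre rule on [0, 1]
    V = np.array([P.polyval(s, p) for p in phi])       # phi_i at the nodes
    dV = np.array([P.polyval(s, P.polyder(p)) for p in phi])
    M = (V * w) @ V.T                                  # M[k, i] = (phi_i, phi_k)
    D = (V * w) @ dV.T                                 # D[k, i] = (phi_i', phi_k)
    # Weak form: M C D^T + D C M^T = F; column-major vec(A X B^T) = (B kron A) vec(X)
    K = np.kron(D, M) + np.kron(M, D)
    F = (V * w) @ f(s[:, None], s[None, :]) @ (V * w).T   # F[k, l] = (f, phi_k phi_l)
    C = np.linalg.solve(K, F.flatten(order="F")).reshape(N + 1, N + 1, order="F")

    def u(x, t):
        Vx = np.array([P.polyval(x, p) for p in phi])
        Vt = np.array([P.polyval(t, p) for p in phi])
        return Vx.T @ C @ Vt                           # grid values u(x_a, t_b)

    return u


u = solve_transport(6, lambda x, t: np.sin(x + t))
xs = np.linspace(0.0, 1.0, 21)
print(np.max(np.abs(u(xs, xs) - np.outer(np.sin(xs), np.sin(xs)))))
```

Forming the Kronecker matrix explicitly is only practical for small N; for larger N one would typically exploit the structure instead of building K.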

6.2. The Problem Governed by the Nonhomogeneous Conditions

In this subsection, we examine the first-order one-dimensional hyperbolic PDEs (51) with non-homogeneous initial and boundary conditions:

where and are given functions.

We make use of the following transformation:

where is the new unknown function, which satisfies the following homogeneous conditions:

This means that (51), subject to (64) and (65), is equivalent to the following modified equation:

with

under the homogeneous conditions (66) and (67).

Here, the shift function in the transformation is an arbitrary function that satisfies the original non-homogeneous initial and boundary conditions.
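As a hypothetical illustration, suppose the conditions have the form u(x, 0) = g_1(x) and u(0, t) = g_2(t) with compatible data g_1(0) = g_2(0), and take the model operator u_t + a u_x (both are assumptions). Then G(x, t) = g_1(x) + g_2(t) - g_1(0) satisfies both conditions, and v = u - G solves v_t + a v_x = f - (G_t + a G_x) with homogeneous conditions. The Python sketch below builds G and the modified source; the names are hypothetical.

```python
import numpy as np


def make_lift(g1, g2):
    """G(x, t) = g1(x) + g2(t) - g1(0); G(x, 0) = g1(x) and G(0, t) = g2(t)
    whenever the data are compatible, g1(0) = g2(0)."""
    return lambda x, t: g1(x) + g2(t) - g1(0.0)


def modified_source(f, a, dg1, dg2):
    """Source of v = u - G in v_t + a v_x = f - (G_t + a G_x), with G_t = g2', G_x = g1'."""
    return lambda x, t: f(x, t) - (dg2(t) + a * dg1(x))


# Check with u = exp(x + t), a = 1, f = 2 exp(x + t), g1 = g2 = exp:
G = make_lift(np.exp, np.exp)
g = modified_source(lambda x, t: 2 * np.exp(x + t), 1.0, np.exp, np.exp)
v = lambda x, t: np.exp(x + t) - G(x, t)    # v = (e^x - 1)(e^t - 1)
print(v(0.0, 0.7), v(0.3, 0.0), g(0.3, 0.7))
```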

7. Investigation of the Convergence and Error Analysis

This section analyzes the convergence of the expansions used for the numerical treatment of the models discussed in Sections 3–6. Through a rigorous analysis, we establish error bounds and convergence conditions which ensure that the numerical solutions converge to the exact solutions as the number of retained terms increases, confirming the efficiency of the methods for the models under consideration. The following lemma is required for the analysis.

Lemma 1.

The polynomials satisfy the following inequality:

Proof.

In view of (2), we have

For , Formula (70) takes the form:

Using the simple identity: , we can write

In addition, it is not difficult to show that , and accordingly, (72) takes the form

where

Using computer algebra, specifically Zeilberger's algorithm (see [55]), one can show that the sums defined above satisfy the following first-order recurrence relation:

The exact solution to this recurrence relation has the form

Then, we obtain

Similarly, for , we can prove that

The following corollary is a direct consequence of Lemma 1.

Corollary 1.

The polynomials satisfy the following inequality

7.1. The Model of Initial Value Problem

If the error obtained by solving (11) and (12) numerically is defined by

then the maximum absolute error (MAE) of the proposed technique is estimated as

while the differences between the function and its estimated value for the system (45) and (46) are defined by

and consequently, the MAEs of the suggested method are estimated by the following:

In order to evaluate the precision of the suggested method, we define the following error:

Theorem 4.

Assume that , where is an infinitely differentiable function at the origin with . Then, it has the following expansion:

where

These expansion coefficients satisfy the following inequality

and the series in (84) converges absolutely.

Proof.

First, we expand as

Inserting the inversion Formula (9) into (87) enables one to write

Expanding the right-hand side of (88) and rearranging similar terms, the following expansion is obtained:

where

In view of (9), we can obtain (85). The inequality (86) is a direct consequence of (85). Now, direct use of (86) together with the application of Lemma 1 yields

we have

then

so, the series converges absolutely. □

Theorem 5.

If satisfies the hypothesis of Theorem 4, and if

then, the following estimate is satisfied

So

where ≲ means that a generic constant d exists such that .

Proof.

The truncation error may be written as follows:

moreover, we have

then,

which completes the proof of the theorem. □
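Theorems 4 and 5 can be illustrated numerically. Substituting an inversion of the form z^m = Σ_r (-1)^r m! / (2^r r! (m-2r)!) T_{m-2r}(z) into the Taylor series f(z) = Σ a_m z^m gives c_k = Σ_r (-1)^r a_{k+2r} (k+2r)! / (2^r r! k!). Under the assumed monic normalization (generating function e^{zt + t²/2}, an assumption), f(z) = e^z has c_k = e^{-1/2}/k!. The Python sketch below computes the coefficients from truncated Taylor data and shows how quickly the maximum truncation error on [0, 1] decreases with N. It illustrates the conclusions of the theorems for the plain TelP expansion and is not a proof; the names are hypothetical.

```python
from math import exp, factorial

import numpy as np


def telephone_values(N, z):
    """T_0(z), ..., T_N(z) at the points z via the ASSUMED recurrence
    T_0 = 1, T_1 = z, T_n = z T_{n-1} + (n - 1) T_{n-2}."""
    z = np.asarray(z, dtype=float)
    vals = [np.ones_like(z), z.copy()]
    for n in range(2, N + 1):
        vals.append(z * vals[n - 1] + (n - 1) * vals[n - 2])
    return np.array(vals[: N + 1])


def telephone_coeffs(taylor, N):
    """c_k = sum_r (-1)^r a_{k+2r} (k+2r)! / (2^r r! k!) from Taylor coefficients a_m."""
    M = len(taylor) - 1
    c = np.zeros(N + 1)
    for k in range(N + 1):
        for r in range((M - k) // 2 + 1):
            m = k + 2 * r
            c[k] += (-1) ** r * taylor[m] * factorial(m) / (2**r * factorial(r) * factorial(k))
    return c


taylor = [1.0 / factorial(m) for m in range(61)]     # Taylor coefficients of e^z
c = telephone_coeffs(taylor, 30)
zs = np.linspace(0.0, 1.0, 201)
Tv = telephone_values(30, zs)
for N in (10, 20, 30):
    err = np.max(np.abs(np.exp(zs) - c[: N + 1] @ Tv[: N + 1]))
    print(N, err)                                    # truncation error shrinks rapidly with N
```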

Corollary 2.

Suppose that has the form (16) and represents the best possible approximation for out of Ω. Then, the following estimates for the error are valid:

Proof.

Remark 3.

Theorems 4 and 5 can be extended to the error analysis of the proposed technique for the model defined by the system (45) and (46). The main steps are as follows:

- (i) To derive the bound expression of the coefficients , the corresponding assumptions of Theorem 4 must hold.

- (ii) The error bound formulas for are derived as in Theorem 5; these expressions are identical to the ones for .

- (iii)

7.2. Treatment of First-Order Hyperbolic PDEs

The error of the numerical scheme is assessed by the following estimate:

To proceed in our analysis, the following two theorems are needed in this subsection:

Theorem 6.

Let be an infinitely differentiable function at the origin with . Then, it has the following expansion

where

These expansion coefficients satisfy the following inequality

and the series in (105) converges absolutely.

Proof.

First, we expand as

This expansion can be written in the form:

where

Inserting the inversion Formula (9) into (109) enables one to write

Expanding the right-hand side of (111) and rearranging similar terms, the following expansion is obtained:

Now,

Inserting the inversion Formula (9) into (113) and following the same procedures enables one to write

Substituting (114) into (112) immediately gives

where

Theorem 7.

If satisfies the hypothesis of Theorem 6, and if

then the following estimate is satisfied

Proof.

The truncation error may be written as follows:

we have

also,

then

which completes the proof of the theorem. □

Corollary 3.

Suppose that has the form (60) and represents the best possible approximation for out of . Then, the following estimates for the error are valid:

Proof.

The following theorem addresses the stability of the error, that is, an estimate of how the error propagates between successive approximations.

Theorem 8.

For any two successive approximations of , we obtain the following:

8. Numerical Results

Several test examples are presented in this section to demonstrate the effectiveness of the proposed algorithms. Comparisons with results obtained by other methods show that the proposed methods are accurate and convenient to apply. The following examples are considered.

Example 1.

Consider the linear eighth-order problem [56]

subject to the conditions

and the analytical solution is .

Table 2 lists the absolute errors of the proposed method for Example 1 for N = 16, 20, and 24. The absolute errors decrease significantly as N increases, which confirms that the accuracy of the method improves as more terms are retained.

Table 2.

The absolute errors for Example 1 at different values of z and N.

- At smaller values of z (e.g., ), the errors are generally lower.

- At larger values of z (e.g., ), the errors increase, indicating potential challenges in achieving high accuracy in these regions.

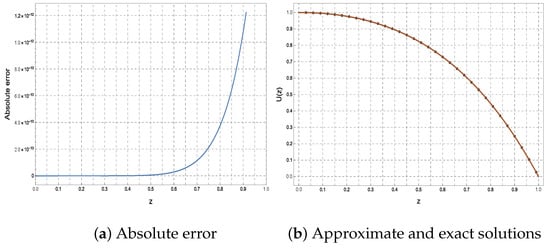

Figure 1 illustrates the corresponding absolute error function (left) and the comparison between the approximate and exact solutions (right) for Example 1 at . The plot on the left demonstrates that the absolute error remains very small and increases gradually at , confirming the method’s reliability and precision in solving the given problem. The plot on the right shows an excellent agreement between the approximate and exact solutions, indicating the high accuracy of the method.

Figure 1.

Graphs of absolute error, approximate and exact solutions at for Example 1.

Example 2.

Consider the linear second-order problem (see [57])

with two different sets of initial conditions and corresponding exact solutions:

- First case: Initial conditions: Exact solution:

- Second case: Initial conditions: Exact solution:

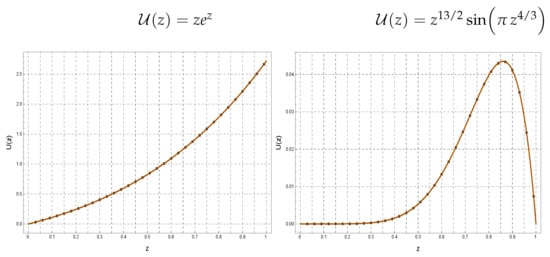

In each case, the source term is chosen so that the given exact solution satisfies the differential equation. Table 3 compares the proposed TGM with the Romanovski–Jacobi tau method (R-JTM) [57] for Example 2, listing the absolute errors and CPU times (in seconds) of both methods for several values of N. TGM is markedly more accurate than R-JTM [57]: for instance, at , TGM gives an absolute error of $8.03 \times 10^{-17}$, whereas R-JTM at gives $2.96 \times 10^{-13}$. TGM is also more efficient, requiring only seconds at , compared with seconds for R-JTM at . Although the CPU time of both methods grows with N, it grows considerably more slowly for TGM. Overall, TGM offers a better balance between accuracy and efficiency for problems with solutions of the form .

Figure 2 compares the exact and approximate solutions of the second-order differential equation in Example 2. The left plot shows the solution for , which first increases smoothly and then decreases, with close agreement between the numerical and analytical solutions. The right plot shows the solution for , which is markedly oscillatory. Thus, the first solution exhibits exponential behavior, while the second is strongly oscillatory, illustrating that the same differential operator can produce very different solutions depending on the initial conditions and the source term.

Table 3.

The absolute errors and CPU time (s) for Example 2 at different values of N.

Figure 2.

Graphs of exact and approximate solutions for Example 2.

Remark 4.

TGM outperforms R-JTM [57] in both accuracy and computational efficiency: it requires considerably less CPU time and yields much smaller absolute errors for this problem.

Example 3.

The isothermal gas spheres equation [57] is given by

with the following initial conditions:

An approximate solution to this equation, as obtained by Wazwaz [58], Liao [59], and Singh et al. [60], is given by the following formula:

Table 4 presents the approximate values of for the standard Lane–Emden equation with , obtained using TGM. These results are compared with those derived from the Jacobi Rational Pseudospectral Method (JRPM) [61], the variable weight fuzzy marginal linearization (VWFML) method [62], and the Adomian Decomposition Method (ADM) solution [58].

Table 4.

Approximate solutions for Lane–Emden Equation (131) for Example 3.

Example 4.

We consider the following linear ODE system [63]:

with the ICs

The exact solutions of this problem are and .

Table 5 and Table 6 provide a comparison of the absolute errors and for the functions and , solved using different methods. Here are some observations on the tables:

Table 5.

Comparison of the absolute errors for of Equation (132).

Table 6.

Comparison of the absolute errors for of Equation (132).

- They show a clear difference in solution accuracy between the three methods used (Differential transformation method (DTM) in [64], Bessel collocation method (BCM) in [63], and the present method). The present method achieves significantly higher accuracy, with much smaller error values at the same points .

- In Table 5, it is evident that the errors produced by the present method are much smaller compared to those of the DTM and BCM methods, especially as N increases from 6 to 10.

- In Table 6, the present method again demonstrates notably higher precision than the DTM and BCM methods across all points.

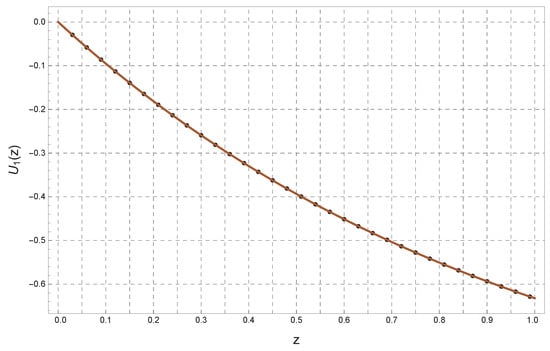

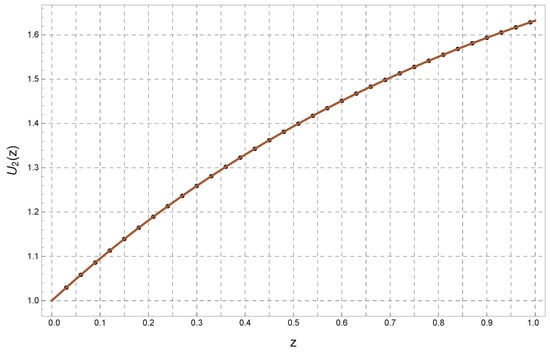

Figures 3 and 4 compare the exact and approximate solutions at for Example 4.

Figure 3.

Graph of exact solution and approximate solution at for Example 4.

Figure 4.

Graph of exact solution and approximate solution at for Example 4.

Example 5.

We consider the following first-order hyperbolic equation [33]:

subject to the initial and boundary conditions

where

The exact solution is given by

Table 7 compares the absolute errors at various points in the domain for Example 5, with . The errors are shown for two previous methods (Legendre wavelets (LW) and Chebyshev wavelets (CW) from Singh et al. [33]) and the present method.

Table 7.

Comparison of the absolute errors for Example 5 at .

- It is notable that the errors in the present method are significantly lower compared to the LW and CW methods. For instance, at point , the error in the present method is , while the errors in the other methods are and , respectively.

- This result highlights the efficiency of the present method in substantially reducing the error compared to the other methods, reflecting higher accuracy.

Table 8 compares the errors for Example 5 at different values of N.

Table 8.

Comparison of the errors for Example 5 at different values of N.

- The errors of the present method decrease markedly as N increases. For instance, at , the error of the present method is , whereas the errors of the LW and CW methods are and , respectively.

- The present method demonstrates excellent performance as N increases, achieving very high accuracy, such as at , indicating the method’s strength in convergence and accuracy.

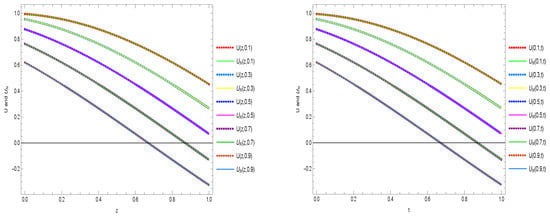

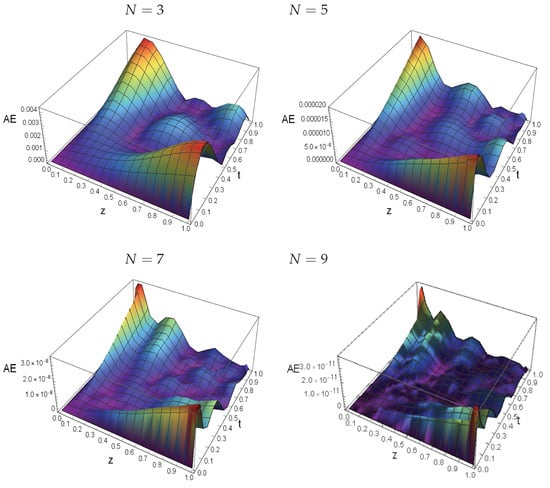

Also, Figure 5 compares the curves of analytical and approximation solutions for Example 5, where , at and . Finally, Figure 6 illustrates the space-time graphs of the absolute error functions for Example 5 at various choices of N, specifically , , , and . The graphs depict the distribution of errors over time and space, reflecting the accuracy of the proposed method in solving the problem. It is evident that increasing the values of N results in a significant reduction in errors, confirming the method’s effectiveness in terms of accuracy and convergence.

Figure 5.

Comparison of the curves of exact and numerical at t = 0.1, 0.3, 0.5, 0.7, 0.9 (left) and (right) for Example 5 where .

Figure 6.

The space-time graphs of the absolute error functions for Example 5 at various choices of N.

9. Conclusions

This paper introduces and utilizes a new set of polynomials, the TelPs, which generalize the TelNs. We showed that spectral methods based on TelPs, in particular the Galerkin and collocation methods, are efficient for solving different types of DEs, which we treated numerically using TelPs as basis functions. We also conducted a thorough convergence analysis of the expansions used. To the best of our knowledge, these polynomials have not previously been used to solve DEs. In future work, we plan to apply them to other types of DEs. The proposed methodologies may also prove useful in real-world applications; for instance, they could be applied to heat transfer problems in engineering systems. In addition, spectral solvers of this kind could complement the growing body of machine learning-based PDE solvers in disciplines such as fluid dynamics, materials science, and environmental modeling. By bridging theoretical advances and practical implementations, we hope to encourage further exploration and application of these techniques in diverse fields.

Author Contributions

Conceptualization, R.M.H. and W.M.A.-E.; Methodology, R.M.H. and W.M.A.-E.; Software, R.M.H.; Validation, R.M.H., H.M.A., O.M.A., A.K.A. and W.M.A.-E.; Formal analysis, R.M.H. and H.M.A.; Investigation, H.M.A., O.M.A. and W.M.A.-E.; Writing—original draft, R.M.H., H.M.A. and W.M.A.-E.; Visualization, R.M.H. and W.M.A.-E.; Supervision, W.M.A.-E.; Funding acquisition, A.K.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was funded by Umm Al-Qura University, Saudi Arabia, under grant number: 25UQU4331287GSSR01.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors extend their appreciation to Umm Al-Qura University, Saudi Arabia, for funding this research work through grant number: 25UQU4331287GSSR01.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mason, J.C.; Handscomb, D.C. Chebyshev Polynomials, 1st ed.; Chapman and Hall/CRC: New York, NY, USA, 2002.

- Marcellán, F. Orthogonal Polynomials and Special Functions: Computation and Applications, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2006.

- Lebedev, N.N. Special Functions & Their Applications; Dover Publ.: New York, NY, USA, 1972.

- Costabile, F.A.; Gualtieri, M.I.; Napoli, A. Polynomial sequences: Elementary basic methods and application hints. A survey. Rev. R. Acad. Cienc. Exactas Fis. Nat. Ser. A Math. 2019, 113, 3829–3862.

- Costabile, F.A.; Gualtieri, M.I.; Napoli, A.; Altomare, M. Odd and even Lidstone-type polynomial sequences. Part 1: Basic topics. Adv. Differ. Equ. 2018, 2018, 299.

- Cesarano, C.; Ramírez, W.; Díaz, S.; Shamaoon, A.; Khan, W.A. On Apostol-type Hermite degenerated polynomials. Mathematics 2023, 11, 1914.

- Costabile, F.A.; Gualtieri, M.I.; Napoli, A. Towards the centenary of Sheffer polynomial sequences: Old and recent results. Mathematics 2022, 10, 4435.

- Ramírez, W.; Cesarano, C. Some new classes of degenerated generalized Apostol-Bernoulli, Apostol-Euler and Apostol-Genocchi polynomials. Carpath. Math. Publ. 2022, 14, 354–363.

- Abd-Elhameed, W.M.; Ahmed, H.M.; Zaky, M.A.; Hafez, R.M. A new shifted generalized Chebyshev approach for multi-dimensional sinh-Gordon equation. Phys. Scr. 2024, 99, 095269.

- Ahmed, H.M.; Hafez, R.M.; Abd-Elhameed, W.M. A computational strategy for nonlinear time-fractional generalized Kawahara equation using new eighth-kind Chebyshev operational matrices. Phys. Scr. 2024, 99, 045250.

- Izadi, M.; Cattani, C. Generalized Bessel polynomial for multi-order fractional differential equations. Symmetry 2020, 12, 1260.

- El-Sayed, A.M.A.; El-Mesiry, A.E.M.; El-Saka, H.A.A. Numerical solution for multi-term fractional (arbitrary) orders differential equations. Comput. Appl. Math. 2004, 23, 33–54.

- Abd-Elhameed, W.M.; Hafez, R.M.; Napoli, A.; Atta, A.G. A new generalized Chebyshev matrix algorithm for solving second-order and telegraph partial differential equations. Algorithms 2024, 18, 2.

- Boyd, J.P. Chebyshev and Fourier Spectral Methods; Dover Publications: Mineola, NY, USA, 2001.

- Canuto, C.; Hussaini, M.Y.; Quarteroni, A.; Zang, T.A. Spectral Methods: Evolution to Complex Geometries and Applications to Fluid Dynamics; Springer: Berlin/Heidelberg, Germany, 2006.

- Shen, J.; Tang, T.; Wang, L.L. Spectral Methods: Algorithms, Analysis and Applications, 1st ed.; Springer: New York, NY, USA, 2011.

- Qiu, L.; Wang, Y.; Gu, Y.; Qin, Q.H.; Wang, F. Adaptive physics-informed neural networks for dynamic coupled thermo-mechanical problems in large-size-ratio functionally graded materials. Appl. Math. Model. 2025, 140, 115906.

- Zhang, B.; Wu, G.; Gu, Y.; Wang, X.; Wang, F. Multi-domain physics-informed neural network for solving forward and inverse problems of steady-state heat conduction in multilayer media. Phys. Fluids 2022, 34, 2442–2461.

- Cuomo, S.; Di Cola, V.; Giampaolo, F.; Rozza, G.; Raissi, M.; Piccialli, F. Scientific machine learning through physics–informed neural networks: Where we are and what’s next. J. Sci. Comput. 2022, 92, 88.

- Dangal, T.; Chen, C.S.; Lin, J. Polynomial particular solutions for solving elliptic partial differential equations. Comput. Math. Appl. 2017, 73, 60–70.

- Shearer, M.; Levy, R. Partial Differential Equations: An Introduction to Theory and Applications; Princeton University Press: Princeton, NJ, USA, 2015.

- Selvadurai, A.P.S. Partial Differential Equations in Mechanics 2: The Biharmonic Equation, Poisson’s Equation; Springer: Berlin/Heidelberg, Germany, 2013.

- Sarma, A.; Watts, T.W.; Moosa, M.; Liu, Y.; McMahon, P.L. Quantum variational solving of nonlinear and multidimensional partial differential equations. Phys. Rev. A 2024, 109, 062616.

- Lin, G.; Zhang, Z.; Zhang, Z. Theoretical and numerical studies of inverse source problem for the linear parabolic equation with sparse boundary measurements. Inverse Probl. 2022, 38, 125007.

- Trefethen, L.N. Spectral Methods in MATLAB; SIAM: Philadelphia, PA, USA, 2000.

- Hesthaven, J.; Gottlieb, S.; Gottlieb, D. Spectral Methods for Time-Dependent Problems; Cambridge University Press: Cambridge, UK, 2007; Volume 21.

- Karniadakis, G.E.; Sherwin, S.J. Spectral/hp Element Methods for Computational Fluid Dynamics, 2nd ed.; Numerical Mathematics and Scientific Computation; Oxford University Press: Oxford, UK, 2005.

- Gottlieb, D.; Orszag, S.A. Numerical Analysis of Spectral Methods: Theory and Applications; SIAM: Philadelphia, PA, USA, 1977.

- Mulimani, M.; Srinivasa, K. A novel approach for Benjamin-Bona-Mahony equation via ultraspherical wavelets collocation method. Int. J. Math. Comput. Eng. 2024, 2, 39–52.

- Youssri, Y.H.; Atta, A.G.; Moustafa, M.O.; Abu Waar, Z.Y. Explicit collocation algorithm for the nonlinear fractional Duffing equation via third-kind Chebyshev polynomials. Iran. J. Numer. Anal. Optim. 2025; in press.

- Costabile, F.; Napoli, A. Collocation for High-Order Differential Equations with Lidstone Boundary Conditions. J. Appl. Math. 2012, 2012, 120792.

- Abdelkawy, M.A.; Amin, A.Z.M.; Babatin, M.M.; Alnahdi, A.S.; Zaky, M.A.; Hafez, R.M. Jacobi spectral collocation technique for time-fractional inverse heat equations. Fractal Fract. 2021, 5, 115.

- Singh, S.; Patel, V.K.; Singh, V.K. Application of Wavelet Collocation Method for Hyperbolic Partial Differential Equations via Matrices. Appl. Math. Comput. 2018, 320, 407–424.

- Jiang, W.; Gao, X. Review of Collocation Methods and Applications in Solving Science and Engineering Problems. CMES-Comput. Model. Eng. Sci. 2024, 140, 41–76.

- Aourir, E.; Izem, N.; Dastjerdi, H.L. A computational approach for solving third kind VIEs by collocation method based on radial basis functions. J. Comput. Appl. Math. 2024, 440, 115636.

- Hafez, R.M.; Youssri, Y.H. Fully Jacobi–Galerkin algorithm for two-dimensional time-dependent PDEs arising in physics. Int. J. Mod. Phys. C 2024, 35, 2450034.

- Youssri, Y.H.; Atta, A.G. Modal spectral Tchebyshev Petrov–Galerkin stratagem for the time-fractional nonlinear Burgers’ equation. Iran. J. Numer. Anal. Optim. 2024, 14, 172–199.

- Abd-Elhameed, W.M.; Alsuyuti, M.M. New spectral algorithm for fractional delay pantograph equation using certain orthogonal generalized Chebyshev polynomials. Commun. Nonlinear Sci. Numer. Simul. 2024, 141, 108479.

- Alsuyuti, M.M.; Doha, E.H.; Ezz-Eldien, S.S.; Youssef, I.K. Spectral Galerkin schemes for a class of multi-order fractional pantograph equations. J. Comput. Appl. Math. 2021, 384, 113157.

- Ahmed, H.M. New Generalized Jacobi Galerkin operational matrices of derivatives: An algorithm for solving multi-term variable-order time-fractional diffusion-wave equations. Fractal Fract. 2024, 8, 68.

- Niu, C.; Ma, H. An operator splitting Legendre-tau spectral method for Maxwell’s equations with nonlinear conductivity in two dimensions. J. Comput. Appl. Math. 2024, 437, 115499.

- Ma, X.; Wang, Y.; Zhou, X.; Xu, G.; Gao, D. A Chebyshev tau matrix method to directly solve two-dimensional ocean acoustic propagation in undulating seabed environment. Phys. Fluids 2024, 36, 096601.

- Sadri, K.; Amilo, D.; Hosseini, K.; Hinçal, E.; Seadawy, A.R. A tau-Gegenbauer spectral approach for systems of fractional integrodifferential equations with the error analysis. AIMS Math. 2024, 9, 3850–3880.

- Pour-Mahmoud, J.; Rahimi-Ardabili, M.Y.; Shahmorad, S. Numerical solution of the system of Fredholm integro-differential equations by the Tau method. Appl. Math. Comput. 2005, 168, 465–478.

- Ahmed, H.F.; Hashem, W.A. A fully spectral tau method for a class of linear and nonlinear variable-order time-fractional partial differential equations in multi-dimensions. Math. Comput. Simul. 2023, 214, 388–408.

- Yang, X.; Jiang, X.; Zhang, H. A time–space spectral tau method for the time fractional cable equation and its inverse problem. Appl. Numer. Math. 2018, 130, 95–111.

- Galerkin, B. Series occurring in various questions concerning the elastic equilibrium of rods and plates. Eng. Bull. (Vestn. Inzhenerov) 1915, 19, 897–908.

- Strang, G.; Fix, G.J. An Analysis of the Finite Element Method; Prentice-Hall, Inc.: Englewood Cliffs, NJ, USA, 1973; 318 p.

- Ciarlet, P.G.; Oden, J. The finite element method for elliptic problems. J. Appl. Mech. 1978, 45, 968.

- Reddy, J.N. An Introduction to the Finite Element Method, 3rd ed.; McGraw-Hill Education: New York, NY, USA, 2005.

- Hughes, T.J.R. The Finite Element Method: Linear Static and Dynamic Finite Element Analysis; Dover Publications: Mineola, NY, USA, 2000.

- Quarteroni, A.; Valli, A. Domain Decomposition Methods for Partial Differential Equations; Oxford University Press: Oxford, UK, 1999.

- Catarino, P.; Morais, E.; Campos, H. A Note on k-Telephone and Incomplete k-Telephone Numbers. In Proceedings of the International Conference on Mathematics and Its Applications in Science and Engineering, Madrid, Spain, 12–14 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 225–238.

- Doha, E.H.; Hafez, R.M.; Youssri, Y.H. Shifted Jacobi Spectral-Galerkin Method for Solving Hyperbolic Partial Differential Equations. Comput. Math. Appl. 2019, 78, 889–904.

- Koepf, W. Hypergeometric Summation, 2nd ed.; Universitext Series; Springer: Berlin/Heidelberg, Germany, 2014.

- Haq, S.; Idrees, M.; Islam, S. Application of Optimal Homotopy Asymptotic Method to Eighth Order Initial and Boundary Value Problems. Int. J. Appl. Math. Comput. Sci. 2010, 2, 73–80.

- Youssri, Y.H.; Zaky, M.A.; Hafez, R.M. Romanovski-Jacobi spectral schemes for high-order differential equations. Appl. Numer. Math. 2024, 198, 148–159.

- Wazwaz, A.M. A new algorithm for solving differential equations of Lane–Emden type. Appl. Math. Comput. 2001, 118, 287–310.

- Liao, S. A new analytic algorithm of Lane–Emden type equations. Appl. Math. Comput. 2003, 142, 1–16.

- Singh, O.P.; Pandey, R.K.; Singh, V.K. An analytic algorithm of Lane–Emden type equations arising in astrophysics using modified homotopy analysis method. Comput. Phys. Commun. 2009, 180, 1116–1124.

- Doha, E.H.; Bhrawy, A.H.; Hafez, R.M.; Van Gorder, R.A. A Jacobi rational pseudospectral method for Lane–Emden initial value problems arising in astrophysics on a semi-infinite interval. Comput. Appl. Math. 2014, 33, 607–619.

- Wang, D.G.; Song, W.Y.; Shi, P.; Karimi, H.R. Approximate analytic and numerical solutions to Lane-Emden equation via fuzzy modeling method. Math. Probl. Eng. 2012, 2012, 259494.

- Yüzbaşı, S. An efficient algorithm for solving multi-pantograph equation systems. Comput. Math. Appl. 2012, 64, 589–603.

- Abdel-Halim Hassan, I.H. Application to differential transformation method for solving systems of differential equations. Appl. Math. Model. 2008, 32, 2552–2559.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).