Abstract

This study proposes a predictive framework for the compressive strength (CS) of manufactured-sand concrete (MSC), integrating six machine learning (ML) models, namely artificial neural network (ANN), random forest (RF), extreme learning machine (ELM), kernel-ELM (KELM), support vector regression (SVR), and extreme gradient boosting (XGBoost), with the newly developed Dream optimization algorithm (DOA) for hyperparameter tuning. A database of 306 samples with eight features is used to train and test the models. Results demonstrate that all models achieved satisfactory predictive accuracy, with the DOA-RF model exhibiting the best performance on the testing dataset (R2 = 0.9755, RMSE = 2.7836, MAE = 2.1716, WI = 0.9933). The DOA-XGBoost model also yielded competitive results, whereas DOA-ELM showed relatively weaker performance. Compared with existing optimization-based approaches, the proposed DOA-RF model significantly reduced RMSE and MAE, validating the effectiveness of the DOA. SHAP analysis further revealed that the water-to-binder ratio (W/B) and curing age (CA) are the most influential factors in predicting MSC strength. Overall, this work not only establishes an accurate and interpretable predictive tool but also underscores the potential of novel optimization algorithms to advance data-driven concrete design and sustainable construction practices.

MSC:

68-04

1. Introduction

In recent years, manufactured sand has attracted increasing attention as a sustainable alternative to natural river sand in concrete, driven by the depletion of natural sand resources and growing ecological constraints []. Manufactured-sand concrete (MSC) has been explored in a variety of structural and non-structural applications, including building slabs, precast members, pavements, and marine structures, owing to its controllable gradation, angular particle shape, and availability in many production regions. However, a central metric determining the viability of MSC in load-bearing applications is its compressive strength (CS), which not only governs structural design limits but also correlates with durability, crack resistance, and long-term service performance [,,]. Therefore, accurate assessment of the CS of MSC is essential both for mix design optimization and for ensuring compliance with safety standards.

Over the past decade, researchers have approached the study of MSC’s strength from multiple avenues, notably experimental investigations and numerical modeling. In the experimental domain, many authors have varied mixture proportions (Mp), sand gradation (Sg), stone powder content (SPC), curing ages (CAs), and admixture dosages (Ad) to explore their influence on the CS of MSC materials. For instance, Sun et al. [] tested 12 groups of high-performance MSC mixes with varying SPC. The results showed that the best balance between workability and CS was obtained when the SPC was set near 9%, and the 28-day compressive strength reached 60 MPa. Ma et al. [] replaced a portion of fine aggregate with marble powder and used response surface methodology to optimize the contents of superplasticizer (SP), fly ash (FA), and silica fume (SF) in an MSC material. The test results showed that the MSC material achieved a high 28-day strength of 54.5 MPa with 20% marble powder replacement. Other experimental studies on the strength of MSC materials can be found in the literature [,,,,]. Although experimental studies are essential for validating the mechanical behavior of MSC materials, they are often costly, time-consuming, and limited in exploring complex parameter interactions []. These limitations motivate the use of numerical simulations, which enable controlled, repeatable, and scalable analysis across a broader design space.

Numerical modeling of MSC is relatively less common but offers a path to link microstructure and macroscopic strength. Some studies embed manufactured-sand particle geometry, interfacial transition zone (ITZ) behavior, and damage models in finite element (FE) or meso-scale simulations [,]. However, such mechanistic models are not without limitations. First, their computational cost can be prohibitive, especially for large-scale or meso-scale simulations requiring fine meshes to resolve complex microstructures []. Second, model calibration is laborious and data-intensive, often relying on inverse fitting to experimental data, which may not generalize well across different mix designs or curing conditions []. Third, simplifying assumptions (e.g., isotropy, linear elasticity in aggregates, uniform ITZ thickness) may compromise accuracy, especially when applied to real-world MSC with diverse particle shapes and packing densities [].

Given these constraints, data-driven approaches such as machine learning (ML) have emerged as promising alternatives or complements. Various ML-based prediction models have been used to predict the CS of concrete, such as artificial neural network (ANN), extreme gradient boosting (XGBoost), support vector regression (SVR), and random forest (RF) [,,,,,]. In these models, the input features typically include cement content (Cc), water-to-binder ratio (W/B), fine aggregate proportion, sand ratio (SR), filler or admixture content (e.g., stone powder, fly ash, silica fume), CA, etc. For instance, Dao et al. [] developed two ML models, an ANN and an adaptive neuro-fuzzy inference system (ANFIS), to predict the CS of geopolymer concrete materials. The results indicated that the prediction accuracy of ANFIS was higher than that of the ANN model, with a coefficient of determination (R2) of 0.879. Chou and Pham [] established several ensemble models to predict the CS of high-performance concrete (HPC) materials. Five datasets, with features including FA, water, coarse and fine aggregate, CA, sand, and cement, were used to train these models. The results illustrated that the ANN model exhibited superior performance across the majority of datasets. Gregori et al. [] used SVR and Gaussian process regression (GPR) models to predict the CS of rubberized concrete materials. Compared to the SVR model, the GPR model obtained a higher prediction accuracy. They also found that W/B had the dominant influence on the CS prediction.

For MSC materials, Ly et al. [] developed an ANFIS model to predict the CS using 289 data samples with features such as compressive strength of cement (CSC), tensile strength of cement (TSC), CA, stone powder content (SPC), W/B, and SR. The results showed that the proposed model obtained a satisfactory predictive performance, with a root mean squared error (RMSE) of 4.93. In addition, they found that CA was the most important feature in the CS prediction for MSC materials. Liu et al. [] introduced a paradigm application for predicting the CS of MSC materials using interpretable ML models. All models were trained and tested on 3382 data samples, among which the XGBoost model achieved the highest prediction accuracy (R2 = 0.934). In addition, the combination of Shapley additive explanations (SHAP) and the XGBoost model provided insights into the contributions of 12 input features to the CS prediction. However, the performance of basic ML models depends on the proper selection of hyperparameters. Some optimization methods, such as metaheuristic algorithms, provide effective solutions for hyperparameter optimization [,,]. For example, Zhao et al. [] applied two nature-inspired metaheuristic algorithms to optimize ANN models for predicting the CS of MSC materials. The results indicated that the optimized ANN model achieved a higher prediction accuracy than the original ANN model, with a decrease in RMSE of 8.84%. However, the application of newly developed optimization algorithms to the CS prediction of MSC is still limited, and no study has systematically explored the development of diverse ML algorithms (e.g., extreme learning machine (ELM) and kernel-ELM (KELM)) for this task.

Thus, this paper aims to use six classical and high-performance ML models (ANN, RF, ELM, KELM, SVR, and XGBoost) to predict the CS of MSC materials, while employing a novel algorithm called Dream optimization algorithm (DOA) proposed by Lang and Gao [] to optimize the models’ hyperparameters in order to enhance prediction accuracy. The best model for CS prediction is determined through a systematic comparison of the performance among all models, thereby further strengthening the foundation of AI research in this field. The content of the other sections is organized as follows: Section 2 introduces ML models and optimization algorithm used for predicting CS; Section 3 describes the data used and the feature selection process; Section 4 outlines the development procedure of the prediction models, including data processing, model optimization, and model evaluation; Section 5 presents the main prediction results and analyzes the performance differences among all models, as well as feature sensitivity; and Section 6 summarizes the main findings of this work.

2. Methods

2.1. ML Models

2.1.1. ANN Model

ANN models have emerged as a versatile tool for modeling complex, nonlinear relationships in materials science. A typical ANN consists of an input layer, multiple hidden layers, and an output layer, where each hidden layer is composed of neurons interconnected through weighted links. The principal hyperparameters include the number of hidden layers (Nh) and the number of neurons per layer (Nn), which strongly influence the model’s capacity to capture intricate correlations between input variables and target properties. Excessive neurons may lead to overfitting, while insufficient complexity reduces predictive accuracy []. Thus, careful tuning is essential for robust regression performance. For regression tasks in concrete materials research, ANNs offer distinct advantages over traditional statistical approaches by flexibly handling high-dimensional and noisy data []. Their ability to approximate nonlinear constitutive relationships enables accurate prediction of properties such as CS, durability indices, or thermal performance. The general prediction function of an ANN with one hidden layer is as follows:

$$ y = \sum_{h=1}^{N_n} w_{ho}\, f\!\left( \sum_{i=1}^{N_x} w_{ih}\, x_i + b_h \right) + b_o \tag{1} $$

where $y$ and $x_i$ represent the output and the $i$-th input feature, respectively. $N_x$ and $N_n$ represent the number of features and the number of neurons in the hidden layer, respectively. $w_{ho}$ is the weight between the hidden layer and the output layer, and $w_{ih}$ is the weight between the input layer and the hidden layer. $f$ is the hidden-layer activation function. $b_h$ and $b_o$ are the bias terms of the hidden layer and the output layer, respectively.
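To make the forward pass of a one-hidden-layer network concrete, the following minimal sketch evaluates such a network in plain Python. The function name `ann_predict`, the choice of `tanh` as the hidden activation, and all numeric weights are illustrative assumptions; they are not taken from the trained models in this study.

```python
import math

def ann_predict(x, w_ih, b_h, w_ho, b_o, activation=math.tanh):
    """Forward pass of a one-hidden-layer ANN with a linear output node.

    x    : list of input features
    w_ih : one row of input-to-hidden weights per hidden neuron
    b_h  : hidden-layer biases (one per neuron)
    w_ho : hidden-to-output weights (one per neuron)
    b_o  : output bias
    """
    hidden = [
        activation(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(w_ih, b_h)
    ]
    return sum(w * h for w, h in zip(w_ho, hidden)) + b_o

# Toy numbers for illustration only (not fitted to the MSC database).
x = [0.5, -0.2]
w_ih = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # three hidden neurons
b_h = [0.0, 0.0, 0.1]
w_ho = [2.0, -1.0, 0.5]
b_o = 0.3
y = ann_predict(x, w_ih, b_h, w_ho, b_o)
```

Adding neurons simply adds rows to `w_ih` and entries to `b_h` and `w_ho`, which is why Nn directly controls model capacity.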

2.1.2. RF Model

RF is a robust ensemble learning method widely applied in regression and classification tasks due to its ability to manage nonlinear relationships and high-dimensional datasets []. The model constructs multiple decision trees (DTs), each trained on a bootstrap sample of the data, and aggregates their predictions to reduce variance and improve generalization. This prediction mechanism can be described mathematically by Equation (2). Its main hyperparameters include the number of trees (Nt) and maximum tree depth (Md), all of which determine the balance between accuracy and computational efficiency. In regression problems, increasing the Nt typically enhances stability, while controlling depth prevents overfitting to noise. For applications in concrete materials prediction, RF provides superior interpretability compared with many black-box models, as variable importance measures can highlight influential microstructural or compositional features []. Moreover, its tolerance to multicollinearity and capacity to capture nonlinear feature–property linkages make RF a valuable predictive tool for estimating strength, durability, and service-life parameters in civil engineering materials.

$$ \hat{y}(x) = \frac{1}{N} \sum_{n=1}^{N} T_n(x) \tag{2} $$

where $N$ represents the number of DTs (i.e., Nt). $T_n(x)$ denotes the prediction made by the $n$-th DT for sample $x$.
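The bagging-and-averaging mechanism can be illustrated with a deliberately simplified sketch. Here each "tree" is a depth-1 stump that splits one feature at the median of its bootstrap sample, which is a stand-in for a full decision tree; the helper names and the toy data are assumptions made for illustration.

```python
import random

def bootstrap_sample(X, y, rng):
    """Draw len(X) rows with replacement (the bagging step)."""
    idx = [rng.randrange(len(X)) for _ in X]
    return [X[i] for i in idx], [y[i] for i in idx]

def fit_stump(X, y):
    """Depth-1 regression tree on feature 0: median split, leaf means."""
    xs = sorted(row[0] for row in X)
    split = xs[len(xs) // 2]
    left = [t for row, t in zip(X, y) if row[0] < split]
    right = [t for row, t in zip(X, y) if row[0] >= split]
    mean_all = sum(y) / len(y)
    left_mean = sum(left) / len(left) if left else mean_all
    right_mean = sum(right) / len(right) if right else mean_all
    return lambda row: left_mean if row[0] < split else right_mean

def forest_predict(trees, row):
    """Average the per-tree predictions for one sample."""
    return sum(tree(row) for tree in trees) / len(trees)

rng = random.Random(0)
X = [[0.30], [0.35], [0.40], [0.45], [0.50], [0.55]]  # toy W/B-like feature
y = [70.0, 64.0, 58.0, 50.0, 44.0, 39.0]              # toy strengths (MPa)
trees = [fit_stump(*bootstrap_sample(X, y, rng)) for _ in range(25)]
pred = forest_predict(trees, [0.42])
```

Because every stump predicts a mean of observed targets, the averaged prediction always stays within the range of the training targets, which is one reason RF extrapolates conservatively.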

2.1.3. ELM Model

ELM is a single-hidden-layer feedforward neural network distinguished by its unique training strategy. Unlike conventional backpropagation-based networks, the input weights and hidden biases in ELM are randomly assigned and remain fixed, while the output weights are analytically determined through a least-squares solution. This design eliminates iterative optimization and substantially reduces computational cost, allowing the model to achieve rapid convergence even with large datasets []. Key hyperparameters primarily include the Nn, which governs the balance between approximation capability and generalization. In regression-oriented applications, ELM demonstrates a strong capability in approximating nonlinear mappings with minimal training time, making it attractive for engineering domains requiring real-time prediction. Within concrete material research, ELM provides advantages by efficiently capturing multivariate interactions among composition, curing conditions, and mechanical performance, thereby enabling accurate forecasting of strength development and service-related properties in a computationally efficient manner [,]. The general prediction function of the ELM model with a single hidden layer is:

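$$ \hat{Y} = H\beta, \qquad h_j(x_k) = g\left( w_j \cdot x_k + b_j \right) \tag{3} $$

where $H$ is the output matrix of the hidden layer, whose entries are $h_j(x_k)$. $\beta$ is the weight matrix of the output layer. $h_j(x_k)$ denotes the mapped value of the $k$-th sample at the $j$-th hidden node, with $g$ the hidden-layer activation function. $w_j$ and $b_j$ represent the randomly initialized input weights and randomly initialized biases, respectively.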
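The ELM training strategy (random, fixed hidden layer followed by an analytic least-squares solve for the output weights) can be sketched in a few lines. This is a minimal illustration under stated assumptions: `tanh` activation, weights drawn uniformly from [-1, 1], and a tiny ridge term added to the normal equations purely for numerical stability. None of these choices is confirmed by the source.

```python
import math
import random

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z

def hidden(x, W, b):
    """Map one sample through the fixed random hidden layer."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]

def elm_fit(X, y, n_hidden, rng, ridge=1e-6):
    """Random hidden layer, then output weights from (H^T H + ridge*I) beta = H^T y."""
    W = [[rng.uniform(-1, 1) for _ in X[0]] for _ in range(n_hidden)]
    b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    H = [hidden(x, W, b) for x in X]
    HtH = [[sum(h[i] * h[j] for h in H) + (ridge if i == j else 0.0)
            for j in range(n_hidden)] for i in range(n_hidden)]
    Hty = [sum(h[i] * t for h, t in zip(H, y)) for i in range(n_hidden)]
    return W, b, solve(HtH, Hty)

def elm_predict(x, model):
    W, b, beta = model
    return sum(bj * hj for bj, hj in zip(beta, hidden(x, W, b)))

rng = random.Random(0)
X = [[-1 + 2 * i / 19] for i in range(20)]   # toy 1-D inputs in [-1, 1]
y = [2 * row[0] + 1 for row in X]            # toy target
model = elm_fit(X, y, n_hidden=4, rng=rng)
sse = sum((elm_predict(x, model) - t) ** 2 for x, t in zip(X, y))
```

No gradient descent appears anywhere: the only "training" is one linear solve, which is the source of the speed advantage described above.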

2.1.4. KELM Model

KELM is a refined variant of the ELM model, designed to combine the efficiency of random hidden-layer mapping with the flexibility of kernel-based learning []. In KELM, the hidden layer does not require explicit tuning of neuron weights, while nonlinear transformations are realized through a kernel matrix. The general prediction function of the KELM model is expressed by Equation (4). The first primary hyperparameter is the regularization factor (Rf), which balances model complexity against overfitting. The second hyperparameter is the kernel parameter (kp), which governs the mapping ability of the chosen kernel function. Compared with conventional neural networks, KELM avoids laborious iterative optimization, allowing rapid model construction even with high-dimensional or noisy datasets [].

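$$ f(x) = K(x, X) \left( K(X, X) + \frac{I}{C} \right)^{-1} y_a \tag{4} $$

where $y_a$ represents the actual values of the samples. $K(X, X)$ and $I$ are the kernel and identity matrices. $X$ and $C$ represent the training sample matrix and the regularization coefficient (i.e., Rf), respectively.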
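A compact sketch of kernel-based ELM prediction is given below. It assumes an RBF kernel with width parameter `gamma` (standing in for kp) and a regularization coefficient `C` (standing in for Rf); the source does not state which kernel was used, so both the kernel choice and the toy data are assumptions.

```python
import math

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z

def rbf(a, b, gamma):
    """RBF kernel exp(-gamma * ||a - b||^2) (assumed kernel choice)."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def kelm_fit(X, y, C, gamma):
    """Solve (K + I/C) alpha = y for the dual coefficients alpha."""
    n = len(X)
    A = [[rbf(X[i], X[j], gamma) + (1.0 / C if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(A, y)

def kelm_predict(x, X, alpha, gamma):
    """Prediction is the kernel row K(x, X) times alpha."""
    return sum(rbf(x, xi, gamma) * a for xi, a in zip(X, alpha))

X = [[0.0], [1.0], [2.0], [3.0]]     # toy inputs
y = [30.0, 42.0, 47.0, 55.0]         # toy strengths (MPa)
alpha = kelm_fit(X, y, C=1e6, gamma=1.0)
fitted = [kelm_predict(x, X, alpha, 1.0) for x in X]
```

With a very large `C` the model nearly interpolates the training targets, while a small `C` smooths the fit; this is the complexity trade-off that the regularization factor controls.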

2.1.5. SVR Model

SVR represents a kernel-based learning framework capable of addressing nonlinear regression problems with high generalization ability. The model seeks to construct an optimal regression function within an allowable error margin while simultaneously maximizing prediction robustness. Its performance is also largely governed by two critical hyperparameters: Rf and kp. For instance, an excessively high Rf may induce overfitting, whereas a poorly tuned kp can limit the ability to capture nonlinear interactions []. Within the domain of concrete materials, SVR offers notable advantages, particularly in predicting strength development and durability indicators when experimental data are limited or highly variable [,]. The general prediction function of the SVR model is expressed by Equation (5).

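$$ f(x) = \sum_{k=1}^{K} \left( \alpha_k - \alpha_k^{*} \right) \kappa(x_k, x) + b \tag{5} $$

where $K$ represents the total number of samples in the training set. $\alpha_k$ and $\alpha_k^{*}$ are Lagrange multipliers. $x_k$ and $b$ are the $k$-th training sample and the bias, respectively, and $\kappa$ is the kernel function.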

2.1.6. XGBoost Model

XGBoost is a tree-based ensemble algorithm designed to achieve high predictive accuracy and computational efficiency. It constructs an additive sequence of DTs, where each new tree corrects the residual errors of the previous ensemble. The major hyperparameters determining the model’s performance include the number of weak learners (Nw), Md, and learning rate (Lr). Increasing Nw typically improves model expressiveness but may cause overfitting if not regularized. A larger Md enables the capture of complex feature interactions, yet excessive depth risks reduced generalization. The Lr parameter governs the step size of updates: smaller values stabilize training and improve accuracy but require more iterations, while larger values accelerate convergence at the expense of precision. For regression tasks in concrete materials research, XGBoost offers remarkable advantages, such as robustness against noisy inputs, ability to manage heterogeneous features, and interpretability through feature importance ranking [,]. These characteristics make it a reliable predictive framework for property evaluation and optimization in advanced concrete systems. The general prediction function of the XGBoost model is expressed by Equations (6) and (7).

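$$ \hat{y}^{(k)} = \hat{y}^{(k-1)} + f_k(x) \tag{6} $$

$$ Obj^{(k)} \approx \sum_{i} \left[ G_k\, f_k(x_i) + \frac{1}{2} S_k\, f_k^{2}(x_i) \right] + \Omega(f_k) \tag{7} $$

where $\hat{y}^{(k-1)}$ represents the prediction obtained in the iteration prior to the final update. $f_k(x)$ is the output of the $k$-th tree in the model. $Obj^{(k)}$ is the optimization target. $G_k$ and $S_k$ are the first- and second-order derivatives of the loss function, respectively, evaluated at $\hat{y}^{(k-1)}$ for each sample. $\Omega(f_k)$ represents the regularization term of the XGBoost model.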
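The role of the first- and second-order derivatives can be seen in a small second-order boosting sketch. It assumes a squared-error loss (so the gradient is the residual and the Hessian is 1), depth-1 trees on a single feature, and an L2 penalty `lam` on leaf weights. It illustrates the additive update only and is not the XGBoost library itself.

```python
def leaf_weight(G, S, lam):
    """Optimal leaf value for summed gradient G and summed Hessian S."""
    return -G / (S + lam)

def fit_stump(x, grad, hess, lam):
    """Pick the threshold with the best second-order split score."""
    best = None
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        thr = (lo + hi) / 2
        GL = sum(g for xi, g in zip(x, grad) if xi < thr)
        SL = sum(h for xi, h in zip(x, hess) if xi < thr)
        GR, SR = sum(grad) - GL, sum(hess) - SL
        # The parent term and the factor 1/2 are constant across thresholds.
        score = GL ** 2 / (SL + lam) + GR ** 2 / (SR + lam)
        if best is None or score > best[0]:
            best = (score, thr, leaf_weight(GL, SL, lam), leaf_weight(GR, SR, lam))
    _, thr, wl, wr = best
    return lambda xi: wl if xi < thr else wr

def boost(x, y, n_trees, lr, lam=1.0):
    """Additive training: each stump fits the current gradients."""
    pred = [0.0] * len(y)
    trees = []
    for _ in range(n_trees):
        grad = [p - t for p, t in zip(pred, y)]   # d/dp of 0.5 * (p - t)^2
        hess = [1.0] * len(y)                     # second derivative
        tree = fit_stump(x, grad, hess, lam)
        trees.append(tree)
        pred = [p + lr * tree(xi) for p, xi in zip(pred, x)]
    return trees, pred

x = [0.30, 0.35, 0.40, 0.45, 0.50, 0.55]   # toy W/B-like feature
y = [70.0, 64.0, 58.0, 50.0, 44.0, 39.0]   # toy strengths (MPa)
trees, pred = boost(x, y, n_trees=20, lr=0.3)
sse_before = sum(t ** 2 for t in y)
sse_after = sum((p - t) ** 2 for p, t in zip(pred, y))
```

Here `n_trees`, the stump depth, and `lr` play the roles of Nw, Md, and Lr: a smaller `lr` shrinks each correction, so more trees are needed to reach the same training error.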

2.2. Dream Optimization Algorithm (DOA)

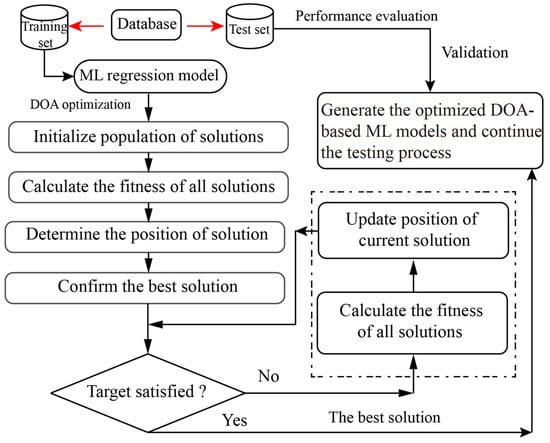

DOA has its theoretical basis in cognitive neuroscience, particularly in the neural processes related to human dreaming during rapid eye movement (REM) sleep. Dream states exhibit features such as incomplete memory preservation, intentional omission of information, and spontaneous reorganization, which together serve as an apt analogy for the balance between exploration and exploitation in metaheuristic search []. Building on this perspective, DOA portrays a population of agents undergoing iterative ‘dreaming’ cycles, where members are categorized according to different memory capacities. During each cycle, individuals recall previously discovered optimal solutions (resembling remembered experiences), discard certain dimensions (mimicking memory fading), and regenerate these missing elements either through internal reorganization or information exchange with peers (similar to dream-sharing). In regression modeling contexts, DOA explores complex parameter spaces through structured variation at the initial stages, while later iterations emphasize focused exploitation by narrowing the search scope. As for optimization problems (see Figure 1), the operational structure of DOA can be organized into the following components:

Figure 1. The framework for using DOA to solve the optimization problem.

(1) Initialization phase

The algorithm commences by dispersing candidate solutions randomly throughout the domain, as described by the following equation:

$$ x_i^{0} = x_l + rand \times \left( x_u - x_l \right) \tag{8} $$

where $x_i^{0}$ represents the initial position of the $i$-th candidate solution. $x_l$ and $x_u$ represent the lower and upper boundaries of the searching space, respectively. $rand$ is a random number within the range of [0, 1].
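The random dispersal of candidates between the lower and upper bounds can be sketched as below. Only the initialization step is shown, because the exploration and exploitation update rules are not recoverable from this text. The function name is hypothetical, and the example bounds borrow the RF hyperparameter ranges stated in Section 4 (Nt from 1 to 100, Md from 1 to 10) with a population of 25.

```python
import random

def init_population(pop_size, lower, upper, rng):
    """Scatter candidates uniformly: x = lower + rand * (upper - lower)."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        for _ in range(pop_size)
    ]

rng = random.Random(42)
lower, upper = [1, 1], [100, 10]      # [Nt, Md] bounds for the RF model
population = init_population(25, lower, upper, rng)

# Integer hyperparameters would need rounding before model training;
# rounding to the nearest integer is an assumption, not the paper's rule.
candidates = [[round(v) for v in agent] for agent in population]
```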

(2) Exploration phase

At the onset of the dreaming stage, individuals experience partial forgetting of earlier solutions and explore fresh combinations under guided random perturbations. Grouped by memory ability, they adjust merely a subset of variables:

where represents the position of the i-th candidate solution at the t + 1 iteration. represents the best position of the candidate solution at the t iteration. t, Tmax, and Td are the current iteration time, the maximum number of iterations, and the maximum number of iterations in the current phase, respectively.

(3) Exploitation phase

During later iterations, every agent emphasizes refinement near the globally retained optimum. This transition curtails random exploration and enhances the stability of the process:

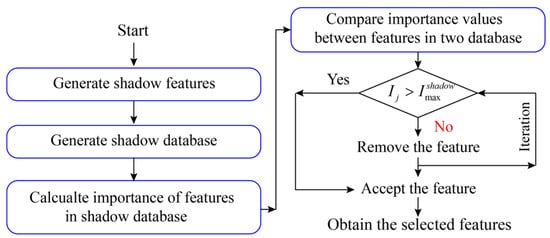

3. Materials

In this paper, a database containing 306 samples was utilized to train and test six ML models for predicting the CS of manufactured-sand concrete materials. The database was compiled by Zhao et al. [] from published studies. It includes eight features: CSC, TSC, CA, maximum size of the crushed stone (Smax), SPC, fineness modulus (FM), W/B, and SR. Detailed information on the features in the database is given in Table 1. Several statistical metrics, including the minimum, maximum, mean, and standard deviation, were used to describe the distribution of the data. However, using every available feature is not always desirable, because uninformative features add computational cost without improving predictions. Therefore, feature selection using the Boruta algorithm was adopted to determine the feature importance in the prediction of CS. As shown in Figure 2, the selection process can be organized into the following steps:

Table 1. The statistical information of features related to the CS prediction.

Figure 2. The flowchart of using the Boruta algorithm to select the optimal input features.

(1) Shadow feature construction

The shadow database () was generated using original samples to enhance the complexity of the database. The new database can be described using Equation (11).

where is the randomly shuffled feature. F is the total number of features in the database.

(2) Feature importance computation

Then, the database was used to build an RF model for predicting CS and calculate the importance scores of all features. The importance value for each feature can be expressed as follows:

where and are the importance values of the j-th feature in the original and shadow databases, respectively.

(3) Feature selection rule

In this step, the maximum importance score of features in the shadow database () was considered as a threshold to further select the useful features. Then, the importance scores of all features in the original database were recalculated to compare with the threshold. As expressed in Equation (13), the features with values of importance scores lower than the threshold should be removed from the database. The calculation results of importance scores for each feature are summarized in Table 2. It can be observed that the minimum values of the importance score for all features are greater than the threshold based on the same database. In other words, all features can be considered as input features to generate ML models for forecasting the CS of manufactured-sand concrete materials in this work.
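The three steps above can be sketched as a single Boruta-style round. To keep the example dependency-free, the importance score is the absolute Pearson correlation with the target, which is a stand-in for the RF importance used in the study; the function names and the toy data are likewise assumptions. A full Boruta run repeats such rounds and applies a statistical test, which is omitted here.

```python
import random

def abs_pearson(col, y):
    """|Pearson r| between a feature column and the target (stand-in score)."""
    n = len(y)
    mx, my = sum(col) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(col, y))
    sxx = sum((a - mx) ** 2 for a in col)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy) / (sxx * syy) ** 0.5

def boruta_round(columns, y, rng, importance=abs_pearson):
    """Shuffle each feature into a shadow copy, then keep the real
    features that score above the best shadow feature."""
    shadows = []
    for col in columns:
        shuffled = list(col)
        rng.shuffle(shuffled)          # breaks any link with the target
        shadows.append(shuffled)
    real_scores = [importance(c, y) for c in columns]
    threshold = max(importance(s, y) for s in shadows)
    kept = [j for j, s in enumerate(real_scores) if s > threshold]
    return kept, real_scores, threshold

rng = random.Random(1)
x0 = [i / 29 for i in range(30)]            # informative toy feature
x1 = [rng.random() for _ in range(30)]      # pure-noise toy feature
y = [80.0 - 40.0 * v for v in x0]           # target depends on x0 only
kept, scores, threshold = boruta_round([x0, x1], y, rng)
```

The shadow features provide a data-driven noise floor: a real feature is retained only if it is more useful than the best feature known to carry no information.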

Table 2. The results of importance scores for all features using the Boruta algorithm.

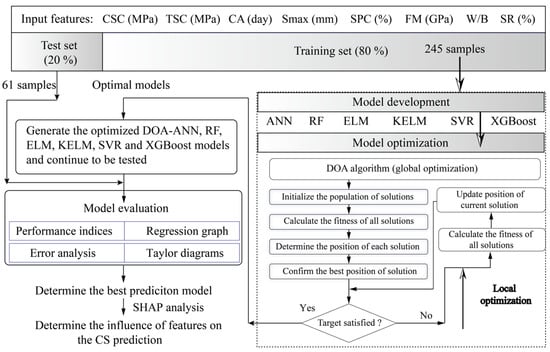

4. Prediction Models

In this paper, six common ML models were developed to predict the CS of manufactured-sand concrete materials. DOA was utilized to select the optimal combinations of hyperparameters for each model to improve predictive performance. After that, several evaluation tools were adopted to evaluate the model performance and then to determine the best model for exploring the influence of all features on the CS prediction. As shown in Figure 3, the framework can be divided into the following steps:

Figure 3. The framework of using DOA and ML models to predict CS of manufactured-sand concrete materials.

(1) Data processing: A total of 306 samples were used to predict CS values. Because prediction accuracy generally benefits from a larger training set [], 80% of the data samples were assigned to the training set so that the model could learn the internal relationships between input features and prediction targets. The remaining 20% were allocated to the test set to evaluate the performance of the trained model. In addition, all features were normalized to the range of −1 to 1 to prevent a decline in predictive performance caused by differences in their scales.
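The split-and-scale step can be sketched as follows. Two details are assumptions rather than statements from the source: the training-set size is obtained by rounding 80% of 306, and the scaling bounds are fitted on the training rows only and then reused for the test rows. The toy feature columns are generated randomly for illustration.

```python
import random

def train_test_split(rows, train_frac, rng):
    """Shuffle row indices and cut them into training and test parts."""
    idx = list(range(len(rows)))
    rng.shuffle(idx)
    n_train = round(train_frac * len(rows))
    return [rows[i] for i in idx[:n_train]], [rows[i] for i in idx[n_train:]]

def fit_minmax(rows):
    """Column-wise minimum and maximum of the given rows."""
    cols = list(zip(*rows))
    return [min(c) for c in cols], [max(c) for c in cols]

def to_unit_range(row, mins, maxs):
    """Map each value linearly so that min -> -1 and max -> 1."""
    return [
        2 * (v - lo) / (hi - lo) - 1 if hi > lo else 0.0
        for v, lo, hi in zip(row, mins, maxs)
    ]

rng = random.Random(0)
# Toy stand-ins for two of the eight features (W/B and CA in days).
rows = [[rng.uniform(0.3, 0.6), rng.uniform(1, 365)] for _ in range(306)]
train, test = train_test_split(rows, 0.8, rng)
mins, maxs = fit_minmax(train)
train_scaled = [to_unit_range(r, mins, maxs) for r in train]
test_scaled = [to_unit_range(r, mins, maxs) for r in test]
```

Test rows scaled with training bounds can fall slightly outside [−1, 1]; this is expected and avoids leaking test-set information into preprocessing.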

(2) Model optimization: Before employing DOA to search for the best hyperparameter combinations for the models, it is necessary to determine the variation range of each model’s hyperparameters. For example, the candidate values of the parameter Nh in the ANN model were set to 1 and 2 in this study, while the parameter Nn varied within the range of 1 to 10. In the RF model, the range of Nt was set from 1 to 100, and the lower and upper limits of Md were set to 1 and 10, respectively. The hyperparameter ranges for the other models are presented in Table 3. Furthermore, two parameters, the population size and the number of iterations, control the optimization performance of DOA. To that end, four population sizes (25, 50, 100, and 200) were selected to search for the optimal candidates during 300 iterations. Moreover, a fitness function based on RMSE and five-fold cross-validation was established to avoid potential overfitting during DOA-based hyperparameter optimization. This function was defined as follows:

Table 3. The setting of hyperparameters for all ML models.
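A cross-validated RMSE fitness of the kind described in step (2) can be sketched as below. The exact formula is not recoverable from this text, so the sketch assumes the fitness is the plain average of the five validation-fold RMSE values; the contiguous, unshuffled folds and the mean-predictor stand-in model are also assumptions chosen to keep the example short.

```python
import math

def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def rmse(actual, predicted):
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def cv_fitness(X, y, fit, predict, k=5):
    """Average validation RMSE over k folds (lower is better)."""
    scores = []
    for val in k_fold_indices(len(y), k):
        held_out = set(val)
        tr = [i for i in range(len(y)) if i not in held_out]
        model = fit([X[i] for i in tr], [y[i] for i in tr])
        preds = [predict(model, X[i]) for i in val]
        scores.append(rmse([y[i] for i in val], preds))
    return sum(scores) / k

# Stand-in model: always predict the training mean.
def fit_mean(X, y):
    return sum(y) / len(y)

def predict_mean(model, x):
    return model

X = [[float(i)] for i in range(10)]
y = [float(i) for i in range(10)]
fitness = cv_fitness(X, y, fit_mean, predict_mean)
```

In a hyperparameter search, `fit` would build the model from one candidate produced by the optimizer, and the returned value would be the quantity the optimizer minimizes.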

(3) Model evaluation: To determine the optimal prediction model, statistical indices commonly used to evaluate the prediction accuracy of models were adopted [,,,]. The R2, RMSE, Willmott’s index (WI), and mean absolute error (MAE) were used to compare model performance in this paper. In regression tasks, the value of R2 for an ideal model is 1.0, indicating a perfect match between predicted and measured strengths. In contrast, the ideal values for RMSE and MAE are 0, since lower error magnitudes reflect better predictive performance. For WI, the ideal value is 1.0, representing a perfect agreement between model predictions and experimental results. Therefore, better-performing models are characterized by higher R2 and WI, together with lower RMSE and MAE. The definitions of these indices are expressed in Equations (15)–(18). In addition, regression diagrams and prediction curves were also utilized to determine the optimal models for predicting CS values.

$$ R^2 = 1 - \frac{\sum_{i=1}^{N} (a_i - p_i)^2}{\sum_{i=1}^{N} (a_i - \bar{a})^2} \tag{15} $$

$$ \mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} (a_i - p_i)^2} \tag{16} $$

$$ \mathrm{WI} = 1 - \frac{\sum_{i=1}^{N} (a_i - p_i)^2}{\sum_{i=1}^{N} \left( |p_i - \bar{a}| + |a_i - \bar{a}| \right)^2} \tag{17} $$

$$ \mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} |a_i - p_i| \tag{18} $$

where $a_i$ and $p_i$ are the actual and predicted CS values of the $i$-th sample, respectively. $\bar{a}$ represents the average of the actual values for all samples. $N$ represents the number of samples used in model evaluation.
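The four indices are straightforward to compute from paired lists of measured and predicted strengths. The sketch below uses the standard textbook definitions of R2, RMSE, MAE, and Willmott's index of agreement; the function names and the three-sample toy data are illustrative.

```python
import math

def r2(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

def rmse(actual, predicted):
    """Root mean squared error, in the units of the target."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def mae(actual, predicted):
    """Mean absolute error, in the units of the target."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def willmott_index(actual, predicted):
    """Willmott's index of agreement (1.0 means perfect agreement)."""
    mean_a = sum(actual) / len(actual)
    num = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    den = sum((abs(p - mean_a) + abs(a - mean_a)) ** 2
              for a, p in zip(actual, predicted))
    return 1 - num / den

actual = [10.0, 20.0, 30.0]        # toy measured strengths (MPa)
predicted = [12.0, 18.0, 33.0]     # toy predictions (MPa)
scores = {
    "R2": r2(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "MAE": mae(actual, predicted),
    "WI": willmott_index(actual, predicted),
}
```

For these toy values the squared residuals sum to 17 and the total sum of squares is 200, so R2 = 1 − 17/200 = 0.915, while RMSE = √(17/3) ≈ 2.38 MPa.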

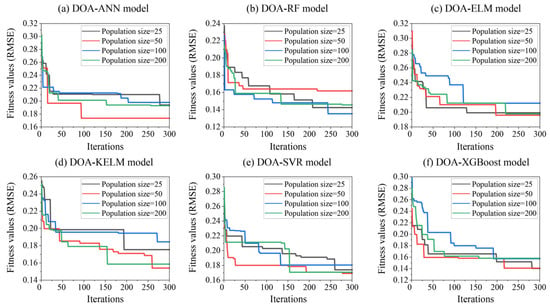

5. Results and Discussions

In this paper, six ML models were developed using the training set and optimized using DOA with various population sizes. Figure 4 shows the iteration curves of all hybrid models during the optimization process. It can be observed that the optimal solutions with the lowest fitness values were obtained before reaching the maximum number of iterations. For example, the optimized ANN model obtained the lowest fitness value when the population size was equal to 50, and the optimal hybrid RF model was obtained using a population size of 100. The ELM, KELM, and SVR models also achieved their best performance when optimized by DOA with a population size of 50. Notably, the fitness values of the DOA-XGBoost models with population sizes of 50 and 100 are close, indicating similar predictive performance after the iterations. The fitness values of all hybrid models are recorded in Table 4, together with the optimal hyperparameter combinations selected according to the minimum-fitness principle. It should also be noted that the fitness curves of all hybrid models rapidly decrease in the early stages and approach a plateau after approximately 100–150 iterations, with only minor fluctuations afterwards. This behavior suggests that near-optimal hyperparameter combinations have already been found, and additional iterations would yield only marginal reductions in RMSE while considerably increasing the computational cost. Therefore, 300 iterations were adopted as a practical upper limit in the optimization process.

Figure 4. Iteration curves of all hybrid ML models optimized using DOA.

Table 4. The fitness and hyperparameters for all models in the training and testing phases.

After determining the optimal hyperparameters of all ML models, several tools and methods were utilized to evaluate the model performance. To comprehensively assess the performance differences before and after optimization, all six models were first configured using their default hyperparameter settings (ANN model: Nh = 1 and Nn = 10; RF model: Nt = 100 and Md = None; ELM model: Nn = 80; KELM model: Rf = 1 and kp = 1; SVR model: Rf = 1 and kp = 0.1; XGBoost model: Nw = 100, Md = 6, and Lr = 0.3). The same training and testing datasets were then used to develop and evaluate the baseline models for comparison. Table 5 shows the values of the statistical indices for all models calculated using the training and test sets. In the training phase, the DOA-RF and DOA-XGBoost models obtained higher prediction accuracy than the other models, whereas the DOA-ELM model yielded the least favorable values of the evaluation indices (R2 of 0.9141, RMSE of 4.9448, WI of 0.9771, and MAE of 3.7408). The trained models then need to be validated using the test set to check for overfitting. As illustrated in this table, the DOA-RF model also obtained the most satisfactory predictive performance on the test set, with the highest values of R2 and WI (0.9755 and 0.9933) and the lowest values of RMSE and MAE (2.7836 and 2.1716). After this model, the ranking of the other models by performance is as follows: the DOA-XGBoost, DOA-ANN, DOA-SVR, DOA-KELM, and DOA-ELM models. Moreover, it can be seen that all non-optimized models exhibited noticeably lower prediction accuracy. For instance, during the training phase, the original RF model achieved R2 = 0.9536, RMSE = 2.7450, WI = 0.9807, and MAE = 1.9182, compared with R2 = 0.9836, RMSE = 2.1606, WI = 0.9957, and MAE = 1.5104 after DOA optimization. These results clearly indicate that DOA substantially enhances model accuracy and generalization performance.
In addition, although the R2 values of the proposed models are close to 1, the RMSE values are not close to 0, because RMSE is expressed in the units of the target variable and therefore depends on its scale. Given that the compressive strength values in the dataset range from 4.23 to 96.30 MPa, even a small percentage prediction error can produce RMSE values of about 2–4 MPa. By contrast, R2 quantifies the proportion of explained variance and is independent of scale. Therefore, a high R2 together with a non-zero RMSE is consistent and expected. The obtained RMSE values represent small relative errors, confirming that the ML models achieved satisfactory predictive performance.
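The scale argument can be checked numerically. In the sketch below, rescaling both measured and predicted values by a factor of 10 leaves R2 unchanged while RMSE grows tenfold. The last lines relate the reported DOA-RF test RMSE (2.7836 MPa) to the reported strength range (4.23 to 96.30 MPa); expressing RMSE as a fraction of the range is an illustrative choice made here, not a metric used in the study, and the four-sample data are toy values.

```python
import math

def r2(actual, predicted):
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

def rmse(actual, predicted):
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

actual = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 39.0]

# Same data expressed in units ten times smaller.
actual_x10 = [10 * v for v in actual]
predicted_x10 = [10 * v for v in predicted]

r2_same = abs(r2(actual, predicted) - r2(actual_x10, predicted_x10)) < 1e-12
rmse_ratio = rmse(actual_x10, predicted_x10) / rmse(actual, predicted)

# Reported test RMSE of DOA-RF relative to the reported strength range.
relative_rmse = 2.7836 / (96.30 - 4.23)
```

The ratio evaluates to roughly 0.03, i.e., the test RMSE is about 3% of the span of strengths in the database.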

Table 5. The results of statistical indices for all models in the training and testing phases.

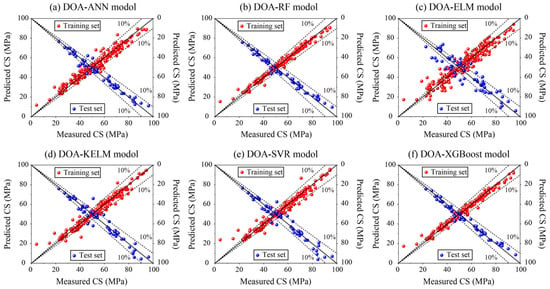

Furthermore, regression graphs were adopted to compare the predictive performance of the models developed in this paper. In such a graph, the position of each data sample is determined by its measured and predicted values. If the predicted value equals the measured value, the corresponding data sample lies on the diagonal line. In other words, the greater the deviation between the predicted and measured values, the farther the data point is from the diagonal. As demonstrated in Figure 5, many data points of the DOA-ELM model are located far from the diagonal line. To allow for a certain level of prediction error, 10% error tolerance lines were introduced to further evaluate model performance: the greater the number of data points outside the tolerance lines, the poorer the model performance. Overall, only the DOA-RF and DOA-XGBoost models have data points that lie mostly within the tolerance lines and close to the diagonal. This indicates that the predictive performance of these two models is superior to that of the others, which is consistent with the performance ranking inferred from the evaluation indices.
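Counting the points inside a 10% tolerance band is a simple check that complements the visual reading of a regression graph. The sketch below assumes the band is defined relative to the measured value (i.e., between 0.9 and 1.1 times the measurement), which is the usual convention but is not spelled out in the text; the function names and toy values are illustrative.

```python
def within_tolerance(actual, predicted, tol=0.10):
    """Flag samples whose prediction lies within +/- tol of the measurement."""
    return [abs(p - a) <= tol * abs(a) for a, p in zip(actual, predicted)]

def fraction_within(actual, predicted, tol=0.10):
    """Share of samples that fall inside the tolerance band."""
    flags = within_tolerance(actual, predicted, tol)
    return sum(flags) / len(flags)

actual = [50.0, 50.0, 50.0]        # toy measured strengths (MPa)
predicted = [54.0, 56.0, 45.5]     # toy predictions (MPa)
flags = within_tolerance(actual, predicted)
share = fraction_within(actual, predicted)
```

Here the first and third predictions deviate by 4.0 and 4.5 MPa, inside the 5 MPa band, while the second deviates by 6 MPa and falls outside it.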

Figure 5. Regression graphs of all hybrid ML models optimized using DOA.

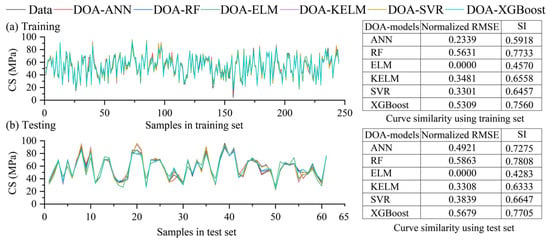

In addition, prediction curves were generated to further evaluate the predictive performance of the models. If the prediction curve coincides with the measured curve, the model achieves 100% prediction accuracy. Therefore, this study proposes a similarity index (SI) based on the R2 and RMSE values to assess the degree of overlap between the predicted and measured curves. R2 evaluates the overall goodness of fit between the two curves, while RMSE reflects the magnitude of the prediction errors. To integrate these two metrics, RMSE was normalized to the range of 0–1 and then combined with R2; the SI values can be calculated using Equation (19). By combining the two metrics in this way, SI provides a more balanced assessment of how closely the predicted curve follows the measured trend. A higher SI value therefore indicates that the model not only fits the data well but also reproduces the variation pattern of the experimental curve more faithfully. As shown in Figure 6, during both the training and testing phases, the ANN model achieved the highest SI values (0.7733 and 0.7808), representing the greatest degree of overlap between the predicted and measured curves under this index.

In Equation (19), the RMSE term is the normalized RMSE value of each prediction model.

Figure 6.

Prediction curves and similarity calculation results of all hybrid ML models.

Although the DOA-RF model was identified as the best-performing of the proposed models, its advantages or disadvantages relative to previously published models remained unknown. Therefore, this paper further compares its prediction accuracy with that of existing models developed on the same dataset. As shown in Table 6, the proposed DOA-RF model outperforms the ANN models optimized by biogeography-based optimization (BBO) and the multi-tracker optimization algorithm (MTOA), i.e., BBONN and MTOANN, provided by Zhao et al. [] on both the training and testing sets, with lower RMSE and MAE values (training: 2.1606 and 1.5104; testing: 2.7836 and 2.1716). This result further indicates that the choice of optimization algorithm has a significant influence on the predictive performance of the model.

Table 6.

Comparison of predictive performance between the proposed model and existing models.

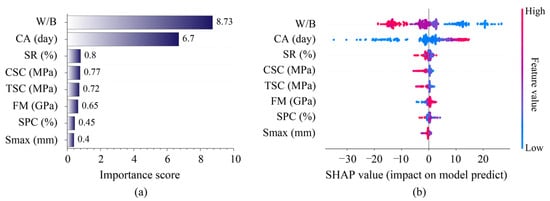
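For readers who wish to reproduce this kind of comparison on their own predictions, the evaluation indices can be computed as in the minimal Python sketch below. It assumes the standard definitions of R2 (coefficient of determination), RMSE, and MAE, and it assumes that WI denotes Willmott's index of agreement; the function name and the sample values are hypothetical.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, MAE and WI for measured and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mean_true = y_true.mean()

    ss_res = float(np.sum(err ** 2))                    # residual sum of squares
    ss_tot = float(np.sum((y_true - mean_true) ** 2))   # total sum of squares

    rmse = float(np.sqrt(np.mean(err ** 2)))
    mae = float(np.mean(np.abs(err)))
    r2 = 1.0 - ss_res / ss_tot

    # Willmott's index of agreement (assumed meaning of WI).
    denom = float(np.sum((np.abs(y_pred - mean_true) + np.abs(y_true - mean_true)) ** 2))
    wi = 1.0 - ss_res / denom

    return {"R2": r2, "RMSE": rmse, "MAE": mae, "WI": wi}

# Hypothetical values (MPa), for illustration only.
m = regression_metrics([10.0, 20.0, 30.0], [12.0, 18.0, 33.0])
print({k: round(v, 4) for k, v in m.items()})
```

Lower RMSE and MAE and higher R2 and WI indicate better agreement, which is the basis on which the models in Table 6 are ranked.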

In addition, the influence of each feature on the CS prediction was assessed using the optimal model (i.e., the DOA-RF model). In this paper, SHAP analysis was conducted as a sensitivity analysis of the features used to predict the CS of MSC materials. SHAP is a model-agnostic interpretability method derived from cooperative game theory, in which each feature is treated as a ‘player’ that contributes to the final prediction. The SHAP value assigned to each feature reflects its marginal contribution, averaged across all possible feature combinations [,,]. For a trained prediction model, the SHAP value of the j-th feature for the i-th sample is defined as the marginal contribution of that feature to the model output. Mathematically, the SHAP value is expressed as follows:

$$\phi_j^{(i)} = \sum_{S \subseteq F \setminus \{j\}} \frac{|S|!\,\left(|F| - |S| - 1\right)!}{|F|!}\left[ f_{S \cup \{j\}}\!\left(x^{(i)}\right) - f_{S}\!\left(x^{(i)}\right) \right]$$

where $\phi_j^{(i)}$ represents the Shapley value (marginal contribution) of feature j for sample i. $F$ is the full set of features, and $S$ is a subset of $F$ that does not contain feature j. $x^{(i)}$ is the input feature vector of the i-th sample. $f_S\left(x^{(i)}\right)$ is the model prediction using only feature subset S. $|S|$ and $|F|$ are the cardinalities (number of elements) of sets S and F, respectively. SHAP provides two key outputs: (1) feature importance, ranking the variables according to their overall impact on the prediction, and (2) feature effects, showing whether a feature increases or decreases the predicted strength and how this effect varies with its magnitude. To derive the global importance of all features, the absolute SHAP values were averaged across all samples:

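$$I_j = \frac{1}{N} \sum_{i=1}^{N} \left| \phi_j^{(i)} \right|$$

where $N$ represents the total number of samples used in the SHAP evaluation, $\phi_j^{(i)}$ is the SHAP value of feature j for sample i, and $I_j$ is the global importance score of feature j.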
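The two quantities defined above can be illustrated with a small, self-contained Python sketch that enumerates all feature subsets directly. It is not the procedure used in the paper: it assumes that "prediction using only feature subset S" is approximated by replacing the excluded features with their background mean, and it uses a hypothetical three-feature linear model rather than the DOA-RF model. Exact enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

import numpy as np

def shapley_values(predict, x, background):
    """Exact Shapley values for one sample x by enumerating feature subsets.

    Assumption: features outside subset S are set to the background mean.
    """
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(background, dtype=float).mean(axis=0)
    n = x.size

    def value(subset):
        z = baseline.copy()
        idx = np.array(subset, dtype=int)
        z[idx] = x[idx]                      # keep only the features in the subset
        return float(predict(z[None, :])[0])

    phi = np.zeros(n)
    for j in range(n):
        others = [k for k in range(n) if k != j]
        for size in range(n):
            # Shapley weight |S|! (|F| - |S| - 1)! / |F|!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for S in combinations(others, size):
                phi[j] += weight * (value(S + (j,)) - value(S))
    return phi

def global_importance(predict, X, background):
    """Mean absolute Shapley value of each feature across the samples in X."""
    phis = np.array([shapley_values(predict, x, background) for x in X])
    return np.abs(phis).mean(axis=0)

# Hypothetical toy model and data, for illustration only.
weights = np.array([-8.0, 3.0, 0.5])
predict = lambda Z: Z @ weights + 40.0
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(20, 3))

phi = shapley_values(predict, X[0], X)
importance = global_importance(predict, X, X)
print("Shapley values for sample 0:", phi)
print("Global importance:", importance)
```

For a linear model, each Shapley value reduces to the feature weight multiplied by the deviation of the feature from its background mean, and the values sum to the difference between the prediction for the sample and the prediction at the background mean. Both properties provide a quick sanity check of the implementation.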

Figure 7a shows the importance scores of all features in predicting the CS of manufactured-sand concrete. The W/B and CA features had higher importance scores than the other features, especially W/B (importance score of 8.73). The contribution of each feature to the CS prediction was also analyzed through the distribution of its SHAP values. The color map represents the original feature values, where red indicates higher feature values and blue indicates lower values. The SHAP value on the vertical axis shows whether the feature increases or decreases the predicted compressive strength. Thus, the combination of color (feature magnitude) and SHAP value (impact direction) indicates how different levels of each feature influence the model prediction. It can be seen from Figure 7b that W/B is negatively correlated with CS: higher W/B values correspond to lower predicted CS values. For CA, lower values make a negative contribution to the predicted CS, while higher values make a positive contribution. These findings align with the fundamental material mechanisms. W/B governs the balance between hydration and porosity: lower W/B ratios lead to a denser cement matrix with fewer capillary pores, while higher W/B ratios produce more voids as excess water evaporates, significantly reducing strength. CA represents the progression of hydration and microstructural refinement over time. Longer curing promotes the continued formation of gel and densification of the ITZ, resulting in measurable increases in strength. In MSC materials, the angular geometry of manufactured sand makes the ITZ more sensitive to hydration progress, reinforcing the importance of CA. In short, the SHAP analysis indicates that W/B and CA are the two most influential variables in predicting the compressive strength of MSC materials.

Figure 7.

SHAP analysis of CS prediction based on the optimal DOA-RF model: (a) global feature importance ranking; (b) SHAP value distribution and feature impact analysis.

6. Conclusions

This study systematically developed and compared six DOA-optimized machine learning models for predicting the CS of manufactured-sand concrete. By integrating a newly proposed metaheuristic optimization algorithm with multiple ML models, the research provides a comprehensive benchmark for model performance and identifies the governing parameters affecting concrete strength. The major conclusions can be summarized as follows:

- (1) Model performance: Among all the models, DOA-RF achieved the highest predictive accuracy, with testing R2 = 0.9755, RMSE = 2.7836, and MAE = 2.1716.

- (2) The introduction of DOA significantly improved predictive performance compared with previously reported optimization techniques such as BBO and MTOA. This demonstrated that algorithmic selection is critical for enhancing the accuracy of ML-based predictions in materials engineering.

- (3) SHAP analysis confirmed that the W/B and CA are the dominant predictors of compressive strength. W/B showed a negative correlation with strength, whereas CA contributed positively to long-term strength development.

Despite the encouraging outcomes, this study is subject to certain limitations. The database consists of only 306 samples, which constrains the generalization of the models across broader ranges of mix designs and environmental conditions. The reliance on a single optimization algorithm (i.e., DOA) also raises questions regarding its robustness under different datasets, suggesting that future work should explore hybrid or comparative optimization strategies. Furthermore, the current validation is limited to laboratory data, and large-scale field applications are required to confirm the reliability and practicality of the proposed models in real engineering environments.

Author Contributions

Conceptualization, P.H. and X.M.; methodology, H.S. and S.D.; software, K.L.; validation, P.H. and K.L.; formal analysis, P.H.; investigation, P.H. and S.D.; writing—original draft preparation, P.H.; writing—review and editing, Z.C.; visualization, H.S. and K.L.; supervision, X.M. and Z.C.; funding acquisition, X.M. and Z.C. All authors have read and agreed to the published version of the manuscript.

Funding

Supported by National Key R&D Programs for Young Scientists (Grant No. 2023YFB2390400), the National Natural Science Foundation of China (Grant No. 52379112 and 52509156), Hubei Provincial Natural Science Foundation of China (Grant No. 2024AFB041), and Shenzhen undertakes major national science and technology projects (Grant No. CJGJZD20230724094000002).

Data Availability Statement

The data used in this article are confidential. The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Peng Huang and Shengjie Di were employed by Northwest Engineering Corporation Limited, Power China. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Khan, K.; Salami, B.A.; Jamal, A.; Amin, M.N.; Usman, M.; Al-Faiad, M.A.; Abu-Arab, A.M.; Iqbal, M. Prediction models for estimating compressive strength of concrete made of manufactured sand using gene expression programming model. Materials 2022, 15, 5823.

- Zhu, Y.; Cui, H.; Tang, W. Experimental investigation of the effect of manufactured sand and lightweight sand on the properties of fresh and hardened self-compacting lightweight concretes. Materials 2016, 9, 735.

- Gao, K.; Sun, Z.; Ma, H.; Ma, G. Research on compressive strength of manufactured sand concrete based on response surface methodology. Materials 2023, 17, 195.

- Ding, X.; Li, C.; Xu, Y.; Li, F.; Zhao, S. Experimental study on long-term compressive strength of concrete with manufactured sand. Constr. Build. Mater. 2016, 108, 67–73.

- Sun, Y.; Song, S.; Yu, H.; Ma, H.; Xu, Y.; Zu, G.; Ruan, Y. Experimental Study on the Strength and Durability of Manufactured Sand HPC in the Dalian Bay Undersea Immersed Tube Tunnel and Its Engineering Application. Materials 2024, 17, 5003.

- Ma, H.; Sun, Z.; Ma, G. Research on compressive strength of manufactured sand concrete based on response surface methodology (RSM). Appl. Sci. 2022, 12, 3506.

- Zhao, S.; Ding, X.; Zhao, M.; Li, C.; Pei, S. Experimental study on tensile strength development of concrete with manufactured sand. Constr. Build. Mater. 2017, 138, 247–253.

- Mane, K.M.; Nadgouda, P.A.; Joshi, A.M. An experimental study on properties of concrete produced with M-sand and E-sand. Mater. Today Proc. 2021, 38, 2590–2595.

- Kavya, A.; Rao, A.V. Experimental investigation on mechanical properties of concrete with M-sand. Mater. Today Proc. 2020, 33, 663–667.

- Li, F.; Yao, T.; Luo, J.; Song, Q.; Yang, T.; Zhang, R.; Cao, X.; Li, M. Experimental investigation on the performance of ultra-high performance concrete (UHPC) prepared by manufactured sand: Mechanical strength and micro structure. Constr. Build. Mater. 2024, 452, 139001.

- Zheng, S.; Liang, J.; Hu, Y.; Wei, D.; Lan, Y.; Du, H.; Rong, H. An experimental study on compressive properties of concrete with manufactured sand using different stone powder content. Ferroelectrics 2021, 579, 189–198.

- Dai, L.; Zhao, X.; Pan, Y.; Luo, H.; Gao, Y.; Wang, A.; Ding, L.; Li, P. Microseismic criterion for dynamic risk assessment and warning of roadway rockburst induced by coal mine seismicity. Eng. Geol. 2025, 357, 108324.

- Zhang, S.; Zhang, C.; Liao, L.; Wang, C. Numerical study of the effect of ITZ on the failure behaviour of concrete by using particle element modelling. Constr. Build. Mater. 2018, 170, 776–789.

- Pan, G.; Song, T.; Li, P.; Jia, W.; Deng, Y. Review on finite element analysis of meso-structure model of concrete. J. Mater. Sci. 2025, 60, 32–62.

- Xu, Y.; Chen, S. A method for modeling the damage behavior of concrete with a three-phase mesostructure. Constr. Build. Mater. 2016, 102, 26–38.

- Cusatis, G.; Pelessone, D.; Mencarelli, A. Lattice discrete particle model (LDPM) for failure behavior of concrete. I Theory. Cem. Concr. Compos. 2011, 33, 881–890.

- Grassl, P.; Jirásek, M. Damage-plastic model for concrete failure. Int. J. Solids Struct. 2006, 43, 7166–7196.

- Eskandari-Naddaf, H.; Kazemi, R. ANN prediction of cement mortar compressive strength, influence of cement strength class. Constr. Build. Mater. 2017, 138, 1–11.

- Duan, J.; Asteris, P.G.; Nguyen, H.; Bui, X.N.; Moayedi, H. A novel artificial intelligence technique to predict compressive strength of recycled aggregate concrete using ICA-XGBoost model. Eng. Comput. 2021, 37, 3329–3346.

- Fissha, Y.; Ragam, P.; Ikeda, H.; Kumar, N.K.; Adachi, T.; Paul, P.S.; Kawamura, Y. Data-driven machine learning approaches for simultaneous prediction of peak particle velocity and frequency induced by rock blasting in mining. Rock Mech. Bull. 2025, 4, 100166.

- Hosseini, S.; Lawal, A.I.; Mulenga, F. Prediction of blast-induced ground vibration in dolomitic marble quarry using Z-number information and fuzzy cognitive map based neural network models. Rock Mech. Bull. 2025, 4, 100217.

- Shariati, M.; Mafipour, M.S.; Ghahremani, B.; Azarhomayun, F.; Ahmadi, M.; Trung, N.T.; Shariati, A. A novel hybrid extreme learning machine–grey wolf optimizer (ELM-GWO) model to predict compressive strength of concrete with partial replacements for cement. Eng. Comput. 2022, 38, 757–779.

- Li, C.; Zhou, J.; Dias, D.; Gui, Y. A kernel extreme learning machine-grey wolf optimizer (KELM-GWO) model to predict uniaxial compressive strength of rock. Appl. Sci. 2022, 12, 8468.

- Dao, D.V.; Ly, H.B.; Trinh, S.H.; Le, T.T.; Pham, B.T. Artificial intelligence approaches for prediction of compressive strength of geopolymer concrete. Materials 2019, 12, 983.

- Chou, J.S.; Pham, A.D. Enhanced artificial intelligence for ensemble approach to predicting high performance concrete compressive strength. Constr. Build. Mater. 2013, 49, 554–563.

- Gregori, A.; Castoro, C.; Venkiteela, G. Predicting the compressive strength of rubberized concrete using artificial intelligence methods. Sustainability 2021, 13, 7729.

- Ly, H.B.; Pham, B.T.; Dao, D.V.; Le, V.M.; Le, L.M.; Le, T.T. Improvement of ANFIS model for prediction of compressive strength of manufactured sand concrete. Appl. Sci. 2019, 9, 3841.

- Liu, X.; Mei, S.; Wang, X.; Li, X. Estimation of compressive strength of concrete with manufactured sand and natural sand using interpretable artificial intelligence. Case Stud. Constr. Mater. 2024, 21, e03840.

- Li, C.; Zhou, J.; Du, K.; Armaghani, D.J.; Huang, S. Prediction of flyrock distance in surface mining using a novel hybrid model of harris hawks optimization with multi-strategies-based support vector regression. Nat. Resour. Res. 2023, 32, 2995–3023.

- Li, C.; Mei, X.; Dias, D.; Cui, Z.; Zhou, J. Compressive strength prediction of rice husk ash concrete using a hybrid artificial neural network model. Materials 2023, 16, 3135.

- Mei, X.; Li, J.; Zhang, J.; Cui, Z.; Zhou, J.; Li, C. Predicting energy absorption characteristic of rubber concrete materials. Constr. Build. Mater. 2025, 465, 140248.

- Zhao, Y.; Hu, H.; Song, C.; Wang, Z. Predicting compressive strength of manufactured-sand concrete using conventional and metaheuristic-tuned artificial neural network. Measurement 2022, 194, 110993.

- Lang, Y.; Gao, Y. Dream Optimization Algorithm (DOA): A novel metaheuristic optimization algorithm inspired by human dreams and its applications to real-world engineering problems. Comput. Methods Appl. Mech. Eng. 2025, 436, 117718.

- Bejani, M.M.; Ghatee, M. A systematic review on overfitting control in shallow and deep neural networks. Artif. Intell. Rev. 2021, 54, 6391–6438.

- Al-Shamasneh, A.A.R.; Mahmoodzadeh, A.; Karim, F.K.; Saidani, T.; Alghamdi, A.; Alnahas, J.; Sulaiman, M. Application of machine learning techniques to predict the compressive strength of steel fiber reinforced concrete. Sci. Rep. 2025, 15, 30674.

- Zhou, J.; Liu, Z.; Li, C.; Du, K.; Yang, H. Cutting-edge approaches to specific energy prediction in TBM disc cutters: Integrating COSSA-RF model with three interpretative techniques. Undergr. Space 2025, 22, 241–262.

- Mei, X.C.; Ding, C.D.; Zhang, J.M.; Li, C.Q.; Cui, Z.; Sheng, Q.; Chen, J. Application of optimized random forest regressors in predicting maximum principal stress of aseismic tunnel lining. J. Cent. S. Univ. 2024, 31, 3900–3913.

- Ding, S.; Xu, X.; Nie, R. Extreme learning machine and its applications. Neural Comput. Appl. 2014, 25, 549–556.

- Zhang, J.; Xu, J.; Liu, C.; Zheng, J. Prediction of rubber fiber concrete strength using extreme learning machine. Front. Mater. 2021, 7, 582635.

- Nayak, S.C.; Nayak, S.K.; Panda, S.K. Assessing compressive strength of concrete with extreme learning machine. J. Soft Comput. Civ. Eng. 2021, 5, 68–85.

- Afzal, A.L.; Nair, N.K.; Asharaf, S. Deep kernel learning in extreme learning machines. Pattern Anal. Appl. 2021, 24, 11–19.

- Samadi-Koucheksaraee, A.; Chu, X. Development of a novel modeling framework based on weighted kernel extreme learning machine and ridge regression for streamflow forecasting. Sci. Rep. 2024, 14, 30910.

- Zhang, F.; O’Donnell, L.J. Support vector regression. In Machine Learning; Academic Press: Cambridge, MA, USA, 2020; pp. 123–140.

- Abd, A.M.; Abd, S.M. Modelling the strength of lightweight foamed concrete using support vector machine (SVM). Case Stud. Constr. Mater. 2017, 6, 8–15.

- Park, J.Y.; Yoon, Y.G.; Oh, T.K. Prediction of concrete strength with P-, S-, R-wave velocities by support vector machine (SVM) and artificial neural network (ANN). Appl. Sci. 2019, 9, 4053.

- Hu, X.; Li, B.; Mo, Y.; Alselwi, O. Progress in artificial intelligence-based prediction of concrete performance. J. Adv. Concr. Technol. 2021, 19, 924–936.

- Khan, M.I.; Abbas, Y.M.; Fares, G.; Alqahtani, F.K. Strength prediction and optimization for ultrahigh-performance concrete with low-carbon cementitious materials–XG boost model and experimental validation. Constr. Build. Mater. 2023, 387, 131606.

- Magar, R.; Farimani, A.B. Learning from mistakes: Sampling strategies to efficiently train machine learning models for material property prediction. Comput. Mater. Sci. 2023, 224, 112167.

- Zhang, J.; Zhang, T.; Li, C. Migration time prediction and assessment of toxic fumes under forced ventilation in underground mines. Undergr. Space 2024, 18, 273–294.

- Li, C.; Zhou, J. Use of a novel description method in characterization of traces on hard rock pillar surfaces. Rock Mech. Rock Eng. 2025, 58, 3331–3352.

- Li, C.; Zhou, J. Prediction and optimization of adverse responses for a highway tunnel after blasting excavation using a novel hybrid multi-objective intelligent model. Transp. Geotech. 2024, 45, 101228.

- Zhang, J.; Dias, D.; An, L.; Li, C. Applying a novel slime mould algorithm-based artificial neural network to predict the settlement of a single footing on a soft soil reinforced by rigid inclusions. Mech. Adv. Mater. Struct. 2024, 31, 422–437.

- Rao, A.R.; Wang, H.; Gupta, C. Predictive analysis for optimizing port operations. Appl. Sci. 2025, 15, 2877.

- Ekanayake, I.U.; Meddage, D.P.P.; Rathnayake, U. A novel approach to explain the black-box nature of machine learning in compressive strength predictions of concrete using Shapley additive explanations (SHAP). Case Stud. Constr. Mater. 2022, 16, e01059.

- Anjum, M.; Khan, K.; Ahmad, W.; Ahmad, A.; Amin, M.N.; Nafees, A. New SHapley Additive ExPlanations (SHAP) approach to evaluate the raw materials interactions of steel-fiber-reinforced concrete. Materials 2022, 15, 6261.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).