Abstract

Additive Bayesian networks (ABNs) provide a flexible framework for modeling complex multivariate dependencies among variables of different distributions, including Gaussian, Poisson, binomial, and multinomial. This versatility makes ABNs particularly attractive in clinical research, where heterogeneous data are frequently collected across distinct groups. However, standard applications either pool all data together, ignoring group-specific variability, or estimate separate models for each group, which may suffer from limited sample sizes. In this work, we extend ABNs to a mixed-effect framework that accounts for group structure through partial pooling, and we evaluate its performance in a large-scale simulation study. We compare three strategies (partial pooling, complete pooling, and no pooling) across a wide range of network sizes, sparsity levels, group configurations, and sample sizes. Performance is assessed in terms of structural accuracy, parameter estimation accuracy, and predictive performance. Our results demonstrate that, for grouped data, partial pooling consistently yields superior structural and parametric accuracy while maintaining robust predictive performance across all evaluated settings. These findings highlight the potential of mixed-effect ABNs as a versatile approach for learning probabilistic graphical models from grouped data with diverse distributions in real-world applications.

Keywords:

hierarchical modeling; generalized linear mixed models; network structure learning; simulation study; multicenter data; directed acyclic graphs

MSC:

62-08

1. Introduction

Modeling complex multivariate dependencies is a central challenge in biomedical research and many other scientific fields. In practice, clinical and experimental datasets often contain variables of different types, including continuous, count, binary, and categorical, and are collected across multiple centers, hospitals, or experimental batches [1]. Such grouped data structures introduce additional complexity: observations within a group are typically more similar to each other than to observations from other groups, violating the independence assumptions of traditional statistical models [2,3]. Ignoring these dependencies can lead to biased parameter estimates, spurious relationships, and suboptimal predictive performance. On the other hand, analyzing each group separately can sacrifice statistical power and may produce unstable estimates, particularly when sample sizes are limited [4]. These challenges necessitate flexible modeling approaches that can accommodate mixed variable types while appropriately accounting for group-specific effects.

To this end, Additive Bayesian Networks (ABNs) have emerged as a powerful class of probabilistic graphical models [5,6,7,8,9,10]. ABNs extend traditional Bayesian Networks (BNs) by representing each variable’s dependency on its parents using a Generalized Linear Model (GLM) [11]. This structure provides a natural framework for handling mixed-type data, enabling the simultaneous modeling of continuous outcomes, count data, and categorical variables without resorting to information-losing transformations such as discretization [12]. The network’s structure is typically learned by searching for a model that optimizes a goodness-of-fit score, which can be derived from either a Bayesian or an information-theoretic (frequentist) perspective [11]. This flexibility, along with the ability to produce interpretable graphs of conditional independencies, makes ABNs particularly attractive. They are especially well-suited for settings where both statistical rigor and clarity are critical, such as in clinical datasets that include physiological measurements, laboratory results, and categorical patient characteristics.

Despite these advantages, standard ABN approaches are limited in their ability to handle grouped data. Typically, applications either pool all observations together, assuming a single homogeneous population, or fit separate networks for each group [4]. Complete pooling ignores potential heterogeneity between groups, possibly leading to biased estimates if group-specific effects exist. Conversely, no pooling may suffer from small sample sizes, resulting in unstable network structures and parameter estimates. Mixed-effects models offer a principled compromise through partial pooling, allowing model parameters to vary across groups while sharing information between them to improve estimation [3,13]. Integrating mixed-effects modeling within the ABN framework thus offers the potential to retain flexibility for mixed-type variables while accounting for group-specific deviations, a combination not available in standard ABN implementations.

Building on our previous work, which introduced mixed-effect ABNs for a clinical application [5,14], we now provide a comprehensive simulation study designed to formally quantify their performance. In our previous application [14], we demonstrated how mixed-effect ABNs can accommodate mixed-type variables and explicitly account for center-specific effects in real-world multicenter clinical data, yielding a more parsimonious network structure and improving predictive accuracy for outcomes with high inter-center variability compared to a standard pooled analysis. However, because that analysis was conducted on observational data, the true underlying network structure and parameter values were unknown. Consequently, performance assessment was limited to indirect metrics such as predictive accuracy and clinical plausibility. The present study builds on that initial application by providing a formal methodological validation: we design a simulation study in which the ground-truth network structures, parameter values, and group-specific effects are prespecified and fully known. This framework enables us to rigorously quantify the ability of mixed-effect ABNs to recover true network structures, estimate parameters accurately, and make reliable predictions across a range of conditions, including varying sample sizes, network sparsity, and group configurations. By systematically exploring these scenarios, we aim to characterize the strengths and limitations of partial pooling in the ABN context and to provide practical guidance for applying mixed-effect ABNs in multicenter clinical studies.

2. Materials and Methods

2.1. Mixed-Effect Additive Bayesian Networks

BNs are structured probabilistic models that use directed acyclic graphs (DAGs) to encode dependencies between random variables. Each node represents a variable, while the edges describe direct probabilistic relationships among them. This structure facilitates the decomposition of complex joint distributions into simpler, local conditional distributions.
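As a worked form of this decomposition, writing $\Pi_{X_i}$ for the set of parents of node $X_i$ in the DAG (notation used here for illustration), the joint distribution of $p$ variables factorizes into one local conditional distribution per node:

$$P(X_1, \dots, X_p) = \prod_{i=1}^{p} P\left(X_i \mid \Pi_{X_i}\right)$$

For example, for the three-node chain $X_1 \rightarrow X_2 \rightarrow X_3$, this reduces to $P(X_1)\,P(X_2 \mid X_1)\,P(X_3 \mid X_2)$, so each factor can be modeled and estimated separately.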

To accommodate complex multivariate data with a mixture of variable types (such as Gaussian, binomial, multinomial, and Poisson), the standard BN framework can be extended to ABNs. ABNs model the local distribution of each node using an appropriate GLM tailored to its distributional characteristics [11]. Within this framework, the conditional distribution of each node, given its parents, is specified through a link function that connects its expected value to a linear predictor composed of its parents. This allows for a unified modeling approach for heterogeneous data types within a single graphical model.

2.1.1. Modeling Grouped Data with Mixed-Effect Additive Bayesian Networks

In studies involving multiple data sources, such as multi-center clinical data, observations often exhibit inherent grouping. Ignoring this grouping, for instance, by treating all data as coming from a single population (complete pooling), can lead to biased parameter estimates and misleading graphical structures. Conversely, modeling each group independently (no pooling) prevents information sharing, which is particularly detrimental in settings with sparse or imbalanced samples [4].

To address this trade-off between complete and no pooling, we extend the ABN framework to incorporate mixed effects. This is achieved by replacing the GLMs with Generalized Linear Mixed-effects Models (GLMMs), thereby integrating hierarchical modeling directly into the BN structure. This approach enables partial pooling of information by letting model parameters (such as intercepts and slopes) in the local conditional models vary by group, capturing both fixed effects that are consistent across all groups and random effects that reflect group-specific deviations [4,14,15]. Formally, for a Gaussian node $i$, corresponding to the variable $X_i$, with parent set $\Pi_{X_i}$, the local conditional distribution can be written as:

$$X_i = \mu_i + \Pi_{X_i}\beta_i + Z_i b_i + \varepsilon_i, \qquad b_i \sim \mathcal{N}\left(0, \Sigma_i\right), \quad \varepsilon_i \sim \mathcal{N}\left(0, \sigma_i^2 I_n\right), \tag{1}$$

where $\mu_i$ is the intercept term, $\beta_i$ is a vector of fixed-effect coefficients, $\Pi_{X_i}$ is the associated design matrix, and $\varepsilon_i$ is the residual error. Grouping is represented by an additional categorical variable $F$, with each of the $|F|$ related data sets assumed to be independent. The matrix $Z_i$ is the random-effects design matrix of size $n \times l|F|$, with $l = |\Pi_{X_i}| + 1$, and the vector $b_i$ represents the group-specific random effects and is independent of $\varepsilon_i$.

2.1.2. Additive Bayesian Networks for Mixed Distribution Data

In this work, we restrict ourselves to random-intercept models, in which only the intercept varies across groups. This choice balances modeling flexibility with computational tractability and interpretability [14]. While more complex random-effect structures, such as random slopes or interaction terms, can capture additional heterogeneity, they substantially increase model complexity and may lead to identifiability issues, particularly when working with high-dimensional networks or mixed discrete-continuous data [4]. The random-intercept formulation still enables partial pooling and captures essential group-level variation in a more parsimonious form, making it a practical and robust choice.

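For a given node $i$ and an observation $j$ coming from group $k$, conditional on its parent set $\Pi_{X_i}$, the local model with a varying intercept can be written as:

$$g\left(\mathbb{E}\left[X_{ij} \mid \Pi_{X_i}, b_{ik}\right]\right) = \mu_i + \Pi_{X_i,j}\,\beta_i + b_{ik}, \qquad b_{ik} \sim \mathcal{N}\left(0, \sigma_{b_i}^2\right), \tag{2}$$

where $\Pi_{X_i,j}$ is the $j$th row of the associated design matrix, $b_{ik}$ is the random intercept for group $k$, and $g$ is the canonical link function for variable $X_i$, which depends on its distribution: identity link for Gaussian, logit link for binomial, multinomial logit for multinomial, and log link for Poisson.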
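To make the random-intercept local model concrete, the following NumPy sketch draws one Gaussian node (identity link) and one binomial child (logit link) with a group-specific intercept added to the linear predictor. It is an independent illustration, not the rjags code used in the study; the function name `simulate_node`, the residual standard deviation `sigma_eps`, and all numeric values are hypothetical, and multinomial nodes are omitted for brevity.

```python
import numpy as np


def simulate_node(parents, group, mu, beta, sigma_b, family, rng, sigma_eps=1.0):
    """Draw one node from a random-intercept GLMM of the form of Equation (2).

    parents   : (n, q) array holding the parent values (q may be 0)
    group     : (n,) integer array of group labels 0..K-1
    mu, beta  : overall intercept and fixed-effect coefficients (length q)
    sigma_b   : standard deviation of the random intercepts b_{ik}
    sigma_eps : residual standard deviation (Gaussian nodes only; assumed value)
    """
    n_groups = int(group.max()) + 1
    b = rng.normal(0.0, sigma_b, size=n_groups)   # one random intercept per group
    eta = mu + parents @ beta + b[group]          # linear predictor
    if family == "gaussian":                      # identity link plus residual noise
        return eta + rng.normal(0.0, sigma_eps, size=eta.shape)
    if family == "binomial":                      # logit link
        prob = 1.0 / (1.0 + np.exp(-eta))
        return rng.binomial(1, prob)
    raise ValueError(f"unsupported family: {family}")


rng = np.random.default_rng(1)
n, K = 200, 4
group = np.repeat(np.arange(K), n // K)           # Even design: 50 observations per group
# Root node (no parents), then a binomial child with x1 as its only parent.
x1 = simulate_node(np.empty((n, 0)), group, 0.5, np.empty(0), 1.0, "gaussian", rng)
x2 = simulate_node(x1[:, None], group, -0.2, np.array([0.8]), 0.5, "binomial", rng)
```

Simulating nodes in a topological order, as here, guarantees that every parent exists before its children are drawn.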

2.1.3. Model Complexity

Building on the GLMM framework, we define a metric that quantifies the complexity of the network structure as the total number of parameters to be estimated. For the random-intercept model in Equation (2), the complexity of node $i$ is the sum of its fixed-effect, random-effect variance, and residual variance parameters:

$$\mathcal{C}_i = \underbrace{\left(1 + |\Pi_{X_i}|\right)}_{\text{fixed effects}} + \underbrace{1}_{\text{random-intercept variance}} + \underbrace{\mathbb{1}\{X_i \text{ is Gaussian}\}}_{\text{residual variance}}. \tag{3}$$

The fixed effects include one parameter for the overall intercept ($\mu_i$) and one for each parent in $\Pi_{X_i}$. The random-effect component consists of a single variance parameter, $\sigma^2_{b_i}$, for the random intercept. An additional parameter for the residual variance is included only for Gaussian nodes. Note that this formulation is a simplification of the more general model in Scutari et al. [4], which also includes random slopes and their full covariance matrix. Summing over all nodes in the graph, we obtain the total model complexity:

$$\mathcal{C} = \sum_{i=1}^{p} \mathcal{C}_i = 2p + \sum_{i=1}^{p} |\Pi_{X_i}| + p_G, \tag{4}$$

where $p_G$ is the number of Gaussian nodes in the DAG.
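The parameter count can be checked with a few lines of Python. This sketch follows the counting rule stated above (one coefficient per parent, one random-intercept variance, and a residual variance for Gaussian nodes only); the helper names and the 4-node example are hypothetical, and, like the formula, the sketch does not count the extra coefficients a multinomial node or parent would require.

```python
def node_complexity(n_parents: int, is_gaussian: bool) -> int:
    """Number of parameters of one random-intercept local model, as in Equation (3)."""
    fixed = 1 + n_parents                 # overall intercept mu_i + one coefficient per parent
    random_var = 1                        # variance of the random intercept
    residual = 1 if is_gaussian else 0    # residual variance, Gaussian nodes only
    return fixed + random_var + residual


def total_complexity(n_parents, is_gaussian) -> int:
    """Sum of node complexities over the DAG, as in Equation (4)."""
    return sum(node_complexity(q, g) for q, g in zip(n_parents, is_gaussian))


# Hypothetical 4-node DAG: parent counts and node types are illustrative only.
n_parents = [0, 1, 2, 1]
is_gaussian = [True, False, True, False]
total = total_complexity(n_parents, is_gaussian)
closed_form = 2 * len(n_parents) + sum(n_parents) + sum(is_gaussian)
print(total, closed_form)  # 14 14
```

The agreement of `total` and `closed_form` reflects the identity $\sum_i \mathcal{C}_i = 2p + \sum_i |\Pi_{X_i}| + p_G$.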

2.1.4. Structure Learning and Parameter Estimation in Mixed-Effect ABNs

Learning the structure of a BN with mixed effects involves identifying the optimal set of directed edges that best represent conditional dependencies among variables while accounting for hierarchical grouping. The inclusion of random effects increases the number of parameters and the complexity of the model space, as covariance parameters for random effects must be estimated alongside fixed-effect coefficients.

To manage this complexity, we use score-based structure learning that optimizes a penalized likelihood criterion, namely the Bayesian Information Criterion (BIC) adapted for GLMMs. Specifically, we use an exact search algorithm [16] to identify the network structure that best balances goodness-of-fit and parsimony. For this methodological evaluation, exact search is preferable because it is guaranteed to find the highest-scoring structure, so that differences in performance cannot be attributed to search error; this provides the most reliable baseline for assessing model performance. While exact search does not scale to high-dimensional data [16], the mixed-effect ABN framework is fully compatible with approximate, heuristic search algorithms (e.g., hill-climbing or genetic algorithms) that are suitable for larger networks. The parameters of each candidate local model are estimated by maximum likelihood, with random intercepts modeling group-level variation.
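For reference, a BIC score of the textbook form decomposes over the nodes of the DAG, which is what allows the search to score each local model separately and reuse those scores. In the expression below, $\hat{L}_i$ denotes the maximized likelihood of the local GLMM of node $X_i$ given its parents, and $d_i$ its number of free parameters (for instance, $\mathcal{C}_i$ from Section 2.1.3); lower values are preferred under this convention, and software such as abn may report the score on a different scale or with the opposite sign:

$$\mathrm{BIC}(G) = \sum_{i=1}^{p} \left[ -2 \log \hat{L}_i\left(X_i \mid \Pi_{X_i}\right) + d_i \log n \right]$$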

To evaluate the effects of accounting for grouped data structures, we compare our mixed-effect model, referred to as partial pooling, with two common alternative approaches. The first, complete pooling, ignores the grouping variable and learns a single model from the entire dataset under the assumption of homogeneity. The second, no pooling, treats the data from different groups as completely independent. Although this could be achieved by learning a separate network for each group, a more integrated solution is to include the grouping variable as a fixed-effect covariate within a single model. We adopt this latter approach by designating the grouping variable as a parent of every other node in the network. This stratified model conditions the parameters of each node on group identity, which prevents information sharing across groups while keeping a single model structure that is directly comparable with the other two strategies. Finally, we validate the network structure obtained with each approach by bootstrapping to assess the stability and reliability of the inferred connections.
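The difference between the three strategies is easiest to see in the simplest case of a single node with no parents, $y_j = \mu + b_k + \varepsilon_j$. The NumPy sketch below computes the group-level intercepts under each strategy: one shared value (complete pooling), the raw group means (no pooling, equivalent to a group fixed effect), and group means shrunk toward the overall mean by the weight $\lambda_k = \sigma_b^2 / (\sigma_b^2 + \sigma^2 / n_k)$ (partial pooling). This is an illustration under stated simplifications: the variance components are treated as known, whereas a fitted GLMM estimates them, and the overall sample mean is used as the anchor instead of the generalized least-squares estimate of $\mu$. The function name and all numbers are hypothetical.

```python
import numpy as np


def pooled_group_intercepts(y, group, sigma_b2, sigma2):
    """Group-level intercepts under the three strategies for y_j = mu + b_k + e_j.

    Variance components are treated as known here for illustration; in practice
    they are estimated (e.g., by maximum likelihood) when the GLMM is fitted.
    """
    K = int(group.max()) + 1
    n_k = np.bincount(group, minlength=K)
    ybar_k = np.bincount(group, weights=y, minlength=K) / n_k
    grand = y.mean()
    complete = np.full(K, grand)                 # complete pooling: one shared intercept
    no_pool = ybar_k                             # no pooling: group as a fixed effect
    lam = sigma_b2 / (sigma_b2 + sigma2 / n_k)   # shrinkage weight, grows with n_k
    partial = grand + lam * (ybar_k - grand)     # partial pooling: shrink toward the mean
    return complete, no_pool, partial, lam


rng = np.random.default_rng(0)
sizes = np.array([5, 20, 50, 100, 200])          # deliberately unbalanced groups
group = np.repeat(np.arange(len(sizes)), sizes)
b = rng.normal(0.0, 1.0, size=len(sizes))        # true random intercepts
y = 1.0 + b[group] + rng.normal(0.0, 2.0, size=group.size)
cp, nopool, pp, lam = pooled_group_intercepts(y, group, sigma_b2=1.0, sigma2=4.0)
```

Small groups receive a small weight $\lambda_k$ and are pulled strongly toward the overall mean, while large groups keep estimates close to their own mean; this is the information sharing that no pooling forgoes and complete pooling overdoes.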

All analyses were performed using the R package abn [17], which is designed for structure learning and parameter estimation in ABNs.

2.2. Simulation Study

To evaluate the performance of the mixed-effect ABN algorithm under various settings, we conducted a comprehensive simulation study with varying DAG structures, variable types, sample sizes, and grouping scenarios.

2.2.1. DAG Generation

We first generated multiple DAGs with the following characteristics:

- Number of nodes $p$;

- Sparsity level $s$, which defines the proportion of possible edges, yielding approximately $s \cdot p(p-1)/2$ edges.

For each combination of $(p, s)$, five distinct DAGs were created by randomly removing edges from a fully connected DAG until the desired sparsity level was reached.
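The edge-removal procedure can be sketched as follows. A fully connected DAG is built from a random topological order, and edges are removed uniformly at random until the target count remains, which is equivalent to keeping a uniformly chosen subset of that size; any subset of a DAG's edges is still acyclic. The function name, the use of Python's `round` to obtain an integer edge count, and the example values of $p$ and $s$ are assumptions, since the exact rounding used in the study is not stated.

```python
import numpy as np


def random_dag(p, s, rng):
    """Random DAG with about s * p(p-1)/2 edges, obtained by thinning a complete DAG."""
    order = rng.permutation(p)                   # random topological order of the nodes
    # Complete DAG: every node points to every node that comes later in the order.
    edges = [(order[a], order[b]) for a in range(p) for b in range(a + 1, p)]
    target = int(round(s * len(edges)))          # number of edges implied by sparsity s
    # Removing edges one by one uniformly at random until `target` remain is
    # equivalent to keeping a uniformly chosen subset of `target` edges.
    keep = rng.choice(len(edges), size=target, replace=False)
    adj = np.zeros((p, p), dtype=int)
    for idx in keep:
        i, j = edges[idx]
        adj[i, j] = 1                            # edge i -> j
    return adj


rng = np.random.default_rng(42)
dags = [random_dag(6, 0.4, rng) for _ in range(5)]   # five DAGs per (p, s), as in the text
```

Acyclicity can be verified by checking that the $p$-th power of the adjacency matrix is zero, i.e., that no directed path of length $p$ exists.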

For a consistent measure of network complexity across the simulation settings, a single complexity value was defined for each explored setting by applying Equation (4) with the actual number of parents $|\Pi_{X_i}|$, which varies across the generated graphs, replaced by the average number of parents per node, denoted $\bar{\pi}$. This average was calculated as the number of edges $|E|$ divided by the number of nodes $p$:

$$\bar{\pi} = \frac{|E|}{p}. \tag{5}$$

2.2.2. Data Simulation

For each of the generated DAGs, we simulated datasets with a total of $n$ observations and $p$ variables, denoted $X_1, \dots, X_p$, corresponding to the $p$ nodes in the graph. The observations were split into $k$ groups according to four distinct group-size configurations (the resulting group sizes are reported in Table 1):

- Even: Equal group sizes of $n/k$;

- 1S: One small group and $k-1$ large groups, with the remaining observations distributed equally among the large groups;

- 1L: One large group and $k-1$ small groups, with the remaining observations distributed equally among the small groups;

- HSHL: Half of the groups are small and the other half are large, with the remaining observations distributed equally among the large groups.
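The four configurations can be expressed as a small allocation function, combined with the 25-observations-per-group exclusion rule of Section 2.2.4. In this sketch, `special_size` is a hypothetical parameter standing for the fixed size of the small group(s) in 1S and HSHL, or of the large group in 1L; the actual sizes used in the study are those listed in Table 1. Leftover observations are spread one by one when the split is not exact, whereas Table 1 reports rounded sizes, so this rounding choice is also an assumption.

```python
def split_equally(total, m):
    """Split `total` observations into m groups whose sizes differ by at most one."""
    base, extra = divmod(total, m)
    return [base + 1] * extra + [base] * (m - extra)


def group_sizes(n, k, config, special_size=None):
    """Group sizes for the Even, 1S, 1L, and HSHL configurations.

    `special_size` is hypothetical: the size of the small group(s) for 1S and
    HSHL, or of the large group for 1L.
    """
    if config == "Even":
        return split_equally(n, k)
    if config in ("1S", "1L"):
        return [special_size] + split_equally(n - special_size, k - 1)
    if config == "HSHL":
        n_small = k // 2
        return [special_size] * n_small + split_equally(n - n_small * special_size, k - n_small)
    raise ValueError(f"unknown configuration: {config}")


def is_excluded(sizes, min_size=25):
    """A setting is excluded when any group has fewer than `min_size` observations."""
    return min(sizes) < min_size


print(group_sizes(500, 4, "Even"))      # [125, 125, 125, 125]
print(group_sizes(500, 4, "1S", 50))    # [50, 150, 150, 150]
print(group_sizes(500, 4, "1L", 200))   # [200, 100, 100, 100]
print(group_sizes(500, 4, "HSHL", 50))  # [50, 50, 200, 200]
print(is_excluded(group_sizes(100, 5, "Even")))  # True (groups of 20)
```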

Each dataset was simulated according to the mixed-effect ABN framework described in Section 2.1.2. For each node, values were drawn from a conditional distribution defined by a GLMM (see Equation (2)) with the appropriate canonical link function. We considered four compositions of node distributions:

- Balanced: Variables are equally distributed among Gaussian, binomial, and multinomial types;

- Gaussian: One binomial, one multinomial, with all others being Gaussian;

- Binomial: One Gaussian, one multinomial, with all others being binomial;

- Multinomial: One Gaussian, one binomial, with all others being multinomial.

Although ABNs can accommodate count data via Poisson-family GLMMs, we excluded Poisson variables from this simulation study because of practical challenges in both data generation and model fitting. First, simulating realistic count data requires addressing overdispersion, a common departure from the equidispersion assumption of the Poisson model, which substantially complicates the data-generating process [18]. Second, the likelihood of a Poisson GLMM has no closed form and must be approximated numerically (e.g., with the Laplace approximation) [3]. These approximations can exhibit numerical instability and convergence failures, particularly with sparse data or low counts. To ensure robust and consistent model fitting across the thousands of scenarios in our study, we prioritized stability and deferred the inclusion of Poisson variables to future work.

Multinomial variables were generated with three to five discrete levels, randomly selected for each simulation.

Both intercept terms and regression coefficients for parent nodes were independently sampled from a uniform distribution. To incorporate hierarchical group-level structure, random effects were added as specified in Section 2.1. For Gaussian and binomial nodes, the random-effect standard deviations were independently drawn from a uniform distribution. For multinomial nodes, the random effects for the $C-1$ non-reference categories (with $C$ the total number of categories for that node) were drawn from a multivariate normal distribution with zero mean and a covariance matrix constructed to introduce correlations among category-level random effects. This covariance matrix was obtained as the sample covariance of 1000 draws from a multivariate normal distribution with zero mean and identity covariance of dimension $(C-1) \times (C-1)$.
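The covariance construction for multinomial random effects can be sketched with NumPy: the sample covariance of 1000 standard-normal draws is close to the identity but carries small, random off-diagonal correlations, and it is then used to draw one random-effect vector per group. The sketch assumes a reference-category parameterization with $C-1$ linear predictors; the function name, seed, and number of groups are hypothetical.

```python
import numpy as np


def multinomial_random_effects(C, n_groups, rng, n_draws=1000):
    """Correlated group-level random effects for a multinomial node with C categories.

    Assumes a reference-category parameterization, i.e. C - 1 linear predictors.
    """
    d = C - 1
    # Covariance matrix: sample covariance of `n_draws` draws from N(0, I_d). It is
    # close to the identity but has small, random off-diagonal correlations.
    draws = rng.multivariate_normal(np.zeros(d), np.eye(d), size=n_draws)
    sigma = np.atleast_2d(np.cov(draws, rowvar=False))
    # One (C - 1)-dimensional random-effect vector per group.
    b = rng.multivariate_normal(np.zeros(d), sigma, size=n_groups)
    return sigma, b


rng = np.random.default_rng(7)
C = int(rng.integers(3, 6))                      # three to five levels, as in the text
sigma, b = multinomial_random_effects(C, n_groups=5, rng=rng)
```

Row $k$ of `b` is then added to the $C-1$ linear predictors of every observation in group $k$.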

To save computational resources, redundant simulations were avoided. Specifically, for settings with $k = 2$, the 1S, 1L, and HSHL group-size configurations are equivalent. Likewise, for $p = 3$ nodes, the Gaussian, Binomial, and Multinomial node compositions are identical to the Balanced setup. Results were therefore reused for these equivalent cases rather than re-running identical experiments.

All data were simulated with rjags, using custom model specifications for each variable type and network structure [19,20].

2.2.3. Parameters of the Algorithm

For the structure learning phase, we employed the exact search algorithm implemented in the R package abn to identify the highest-scoring network structure. The search was constrained by a maximum number of parents per node (max.parents), ranging from 2 to 4, to balance computational feasibility with the need to explore sufficient structural possibilities. For each unique combination of number of nodes ($p$) and sparsity ($s$), this value was obtained by rounding the average number of parents per node, as defined in Section 2.2.1, up to the nearest integer:

$$\texttt{max.parents} = \left\lceil \bar{\pi} \right\rceil. \tag{6}$$

To ensure the search was not overly restrictive, the minimum effective max.parents was set to 3, provided this value did not exceed the number of possible parents ($p-1$).
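The rule for choosing max.parents can be written as a short function. It reads the text as: take the ceiling of the average number of parents, raise it to at least 3, and cap it at the $p-1$ possible parents; this interpretation reproduces the stated range of 2 to 4 but is an assumption, as are the function names and the example edge counts.

```python
import math


def average_parents(n_edges: int, p: int) -> float:
    """Average number of parents per node: edges divided by nodes (Equation (5))."""
    return n_edges / p


def max_parents(n_edges: int, p: int, floor: int = 3) -> int:
    """Ceiling of the average (Equation (6)), raised to at least `floor`
    but never above the p - 1 possible parents of a node."""
    value = math.ceil(average_parents(n_edges, p))
    return min(max(value, floor), p - 1)


print(max_parents(2, 3))    # 2: the floor of 3 exceeds p - 1 = 2
print(max_parents(6, 6))    # 3: ceil(1.0) = 1 is raised to the floor
print(max_parents(36, 10))  # 4: ceil(3.6) = 4
```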

The BIC, adapted for generalized linear mixed-effects models, served as the score function guiding the search by balancing model fit against complexity. These settings were held constant across all pooling strategies (partial pooling, complete pooling, and no pooling) to ensure a fair comparison. For details, we refer readers to the GitHub code repository at github.com/furrer-lab/mabnsimstud (accessed on 29 September 2025).

2.2.4. Summary of Simulation Settings

Table 1 and Table 2 summarize the performed simulations. For statistical stability and reliable inference, simulations in which at least one group had fewer than 25 observations were excluded. This threshold is informed by established guidelines for multilevel modeling, which indicate that while parameter estimates may remain unbiased with smaller group sizes, standard error estimation and random-effect variance components require adequate within-group sample sizes for stability [18,21,22]. Given the complexity of our mixed-distribution framework and the use of exact search algorithms for structure learning, we adopt a conservative threshold of 25 observations per group to ensure robust estimation of both fixed effects and random-effect variance components across all variable types. This threshold ensures that each group provides sufficient information for stable maximum likelihood estimation while maintaining computational feasibility for exact structure learning. Additionally, we did not consider situations where the chosen sparsity level s was too low to ensure a single connected DAG.

Table 1.

Number of samples per group for each simulation. Gray numbers correspond to simulations that were excluded from the analysis. Numbers are rounded to the nearest integer. For the unbalanced configurations 1S, 1L, and HSHL, the minimum and maximum group sizes are presented in brackets.

Table 2.

Average number of edges (rounded to the nearest integer) and maximum number of parents (max.parents) for each simulation setting. Gray numbers indicate simulations excluded from the analysis because a connected DAG could not be guaranteed.

2.3. Model Evaluation

We evaluated the performance of the algorithm using four distinct criteria:

- Structural accuracy, measured by the Structural Hamming Distance (SHD) between the learned DAG and the true data-generating DAG.

- Parametric accuracy, assessed using the Kullback–Leibler (KL) divergence between the learned and true conditional probability distributions for each node.

- Prediction error, evaluated using a 5-fold cross-validation procedure. To isolate the accuracy of parameter estimation, a single consensus DAG was first learned from the full dataset. The model parameters were then re-estimated on each training set, and the prediction error was calculated on the corresponding held-out test set with a distribution-specific score for each node, computed over the $n_{\text{test}}$ test observations:

  - For Gaussian nodes $X_i$, we use the Mean Squared Error (MSE):

    $$\mathrm{MSE}_i = \frac{1}{n_{\text{test}}} \sum_{j=1}^{n_{\text{test}}} \left(x_{ij} - \hat{x}_{ij}\right)^2$$

  - For binomial nodes $X_i$, we use the Brier score, which measures the mean squared difference between the predicted probability $\hat{p}_{ij}$ and the observed binary outcome:

    $$\mathrm{BS}_i = \frac{1}{n_{\text{test}}} \sum_{j=1}^{n_{\text{test}}} \left(\hat{p}_{ij} - x_{ij}\right)^2$$

  - For multinomial nodes $X_i$, we use the multi-category Brier score:

    $$\mathrm{BS}_i = \frac{1}{n_{\text{test}}} \sum_{j=1}^{n_{\text{test}}} \sum_{c=1}^{C} \left(\hat{p}_{ijc} - o_{ijc}\right)^2,$$

    where $o_{ijc} = \mathbb{1}\{x_{ij} = c\}$ and $C$ is the number of categories.

  The final prediction error is the average of these scores across all nodes and all five test folds, yielding a single predictive accuracy score for each simulation.

- Computational efficiency, measured by the total runtime (in minutes) of the algorithm from structure learning to parameter estimation.
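The structural and predictive metrics above can be sketched in NumPy as follows. The SHD function uses the common convention in which a missing, extra, or reversed edge each adds 1 (some definitions count a reversal as 2), and the scores implement the MSE, binary Brier, and multi-category Brier formulas for one node on one test fold. This is an illustration only, not the evaluation code of the study or of the abn package; all function names and example values are hypothetical.

```python
import numpy as np


def shd(true_adj, est_adj):
    """Structural Hamming Distance between two DAG adjacency matrices.

    Counts node pairs whose edge status differs: a missing edge, an extra edge,
    or a reversed edge each add 1.
    """
    t, e = np.asarray(true_adj), np.asarray(est_adj)
    p = t.shape[0]
    return sum(
        (t[i, j], t[j, i]) != (e[i, j], e[j, i])
        for i in range(p) for j in range(i + 1, p)
    )


def mse(x, x_hat):
    """Mean squared error for a Gaussian node."""
    x, x_hat = np.asarray(x, float), np.asarray(x_hat, float)
    return float(np.mean((x - x_hat) ** 2))


def brier_binary(x, p_hat):
    """Brier score for a binomial node: x in {0, 1}, p_hat = predicted P(x = 1)."""
    x, p_hat = np.asarray(x, float), np.asarray(p_hat, float)
    return float(np.mean((p_hat - x) ** 2))


def brier_multi(x, p_hat):
    """Multi-category Brier score: x holds labels 0..C-1, p_hat is (n, C)."""
    p_hat = np.asarray(p_hat, float)
    onehot = np.eye(p_hat.shape[1])[np.asarray(x)]   # o_{jc} = 1{x_j = c}
    return float(np.mean(np.sum((p_hat - onehot) ** 2, axis=1)))


true_adj = np.array([[0, 1, 0], [0, 0, 1], [0, 0, 0]])   # 0 -> 1 -> 2
est_adj = np.array([[0, 0, 1], [1, 0, 1], [0, 0, 0]])    # 1 -> 0 (reversed), 1 -> 2, 0 -> 2 (extra)
print(shd(true_adj, est_adj))  # 2

# Per-node scores on one hypothetical fold, averaged into a single prediction error.
node_scores = [
    mse([1, 2], [1, 4]),
    brier_binary([1, 0], [0.8, 0.4]),
    brier_multi([0, 2], [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]),
]
prediction_error = float(np.mean(node_scores))
```

In the full procedure, this average would also be taken over the five cross-validation folds.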

3. Results

3.1. Mixed-Effect Additive Bayesian Networks and Their Parameter Behavior

In this section, we evaluate the performance of the mixed-effect ABN algorithm under various conditions. Our analysis is structured in two parts: first, we investigate the effects of the number of observations (n), the number of groups (k), and the distribution of the groups; second, we analyze the impact of structural properties by varying the number of nodes (p), the sparsity levels (s), and the distribution of the nodes.

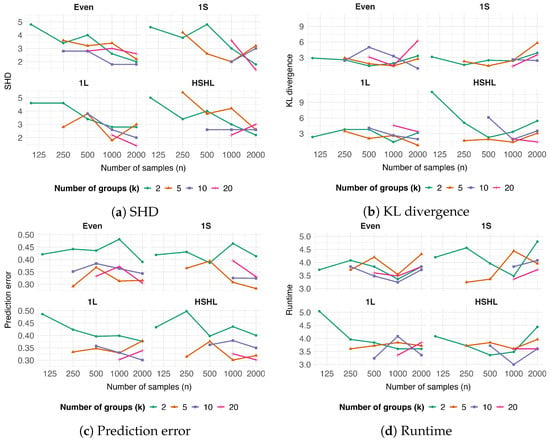

For the first analysis, we fix the number of nodes at , the sparsity at , and the node distribution to the Balanced configuration. These parameters were selected to provide a moderate and computationally feasible baseline for our analysis, allowing us to isolate and evaluate the effects of sample size and group structure. Performance results for SHD, KL divergence, prediction error, and runtime are shown in Figure 1.

Figure 1.

Performance of the mixed-effect ABN algorithm as a function of sample size (n) and number of groups (k). Four performance metrics are shown: (a) Structural Hamming Distance (SHD), (b) Kullback–Leibler (KL) divergence, (c) prediction error, and (d) runtime in minutes. In each sub-figure, the x-axis represents the total number of samples (n), and line colors denote the number of groups (k). The four sub-panels correspond to different group-size configurations, which describe how the total number of samples is allocated among the groups: Even (all groups have the same number of samples), 1S (one group is small), 1L (one group is large), and HSHL (half the groups are small and half are large). Each measurement is the average of five repetitions conducted on different DAG structures.

The SHD consistently decreased with increasing sample size (Figure 1a). This trend was observed across all tested group-size configurations (Even, 1S, 1L, and HSHL). In contrast to structural recovery, KL divergence, which measures parametric fit, was insensitive to changes in sample size or group structure (Figure 1b). The prediction error was not affected by the number of samples but decreased as the number of groups $k$ increased, with a notable drop in performance at $k = 2$ (Figure 1c). Finally, the runtime of the mixed-effect ABN algorithm showed no discernible dependence on the number of samples, the number of groups, or the group-size configuration (Figure 1d).

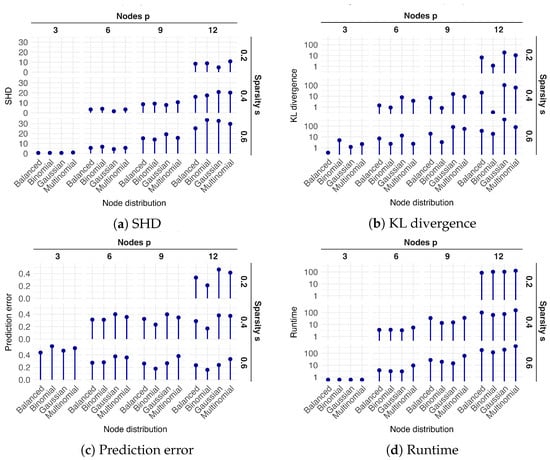

Next, we investigate the effects of the graph’s structural properties on model performance. For this analysis, we fix the number of samples at , the number of groups at , and the group distribution to the configuration. These values were chosen to represent a typical dataset size and a balanced group structure, ensuring that the results reflect the impact of varying the number of nodes, sparsity, and nodes’ distribution. The results for SHD, KL divergence, prediction error, and runtime are presented in Figure 2.

Figure 2.

Performance of the mixed-effect ABN algorithm under varying graph structures, with each measurement averaged over five repetitions on distinct DAGs. The figure comprises four main panels, showing: (a) the Structural Hamming Distance (SHD), (b) the Kullback–Leibler (KL) divergence, (c) the prediction error, and (d) the runtime in minutes. Each main panel is organized as a grid, with columns representing the number of nodes (p) and rows representing the sparsity level (s). Within each cell of this grid, the results for four node compositions are plotted along the x-axis: Balanced, with an even distribution of node types; and three specific configurations, Binomial, Gaussian, and Multinomial, each containing one node of each of the other two types, with all remaining nodes of the primary type.

The results for both SHD and KL divergence showed a clear trend of increasing error as the number of nodes ($p$) and the sparsity level ($s$) increased (Figure 2a,b). While performance varied across the node distribution configurations (Balanced, Binomial, Gaussian, and Multinomial), no consistent ranking of these configurations was observed. Conversely, the prediction error decreased as $p$ and $s$ increased (Figure 2c). Finally, runtime increased substantially with the number of nodes ($p$), an effect that became more pronounced at higher sparsity levels ($s$) (Figure 2d).

3.2. Comparison with Pooling Strategies

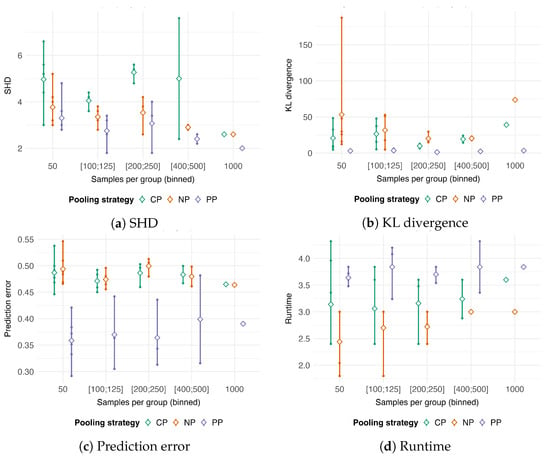

In this section, we compare the performance of three distinct strategies for handling grouped data: complete pooling, which assumes no group-level effects; no pooling, which treats each group independently; and partial pooling, which uses random effects to share information across groups. The analysis has two parts: first, we examine the impact of the number of samples per group; second, we evaluate how each strategy performs as graph complexity increases. This comparison aims to quantify the trade-offs between these modeling choices in terms of accuracy and computational cost.

For the first analysis, we fix the number of nodes at , the sparsity at , the node distribution to , and the group-size configuration to . The configuration was specifically selected to compare the impact of the number of samples per group across simulations, while the other parameters were set to ensure a good balance between computational feasibility and complexity. To simplify, we group the simulation settings into 5 categories based on the number of samples per group (see Table 1):

- 50, corresponding to the , , and settings.

- , corresponding to the , , and settings.

- , corresponding to the , and settings.

- , corresponding to the and .

- 1000, corresponding to the setting.

We then measure the SHD, KL divergence, prediction error, and runtime as presented in Figure 3.

Figure 3.

Performance comparison of complete pooling, no pooling, and partial pooling strategies as a function of the number of samples per group. To isolate the effect of group size, results from multiple simulation settings (combinations of total sample size n and number of groups k) are aggregated into discrete bins based on the approximate number of samples per group, displayed on the x-axis. The pooling strategies represent distinct approaches to handling grouped data: (1) complete pooling assumes homogeneity, with no group-specific effects; (2) no pooling treats group membership as a fixed effect, estimating parameters separately for each group; and (3) partial pooling uses random effects to enable partial information sharing across groups. The panels show boxplots for four performance metrics: (a) Structural Hamming Distance (SHD), (b) Kullback–Leibler (KL) divergence, (c) prediction error, and (d) runtime (in minutes). Each pooling strategy is shown in a distinct color.

In terms of SHD, structural recovery improved across all strategies with increasing sample numbers per group (Figure 3a). Overall, consistently yielded the lowest SHD, while produced the highest. Note that, for the 1000-samples-per-group setting, only one dataset was available for this configuration ( and ). In terms of KL divergence, resulted in the highest error, while was the most accurate (Figure 3b). Prediction error for was consistently lower than for and (Figure 3c). However, the variance of the error for was substantially higher, influenced by the case with only two groups (). Runtime was unaffected by the number of samples per group for all three methods (Figure 3d). had the lowest median runtime, and was the most computationally intensive.

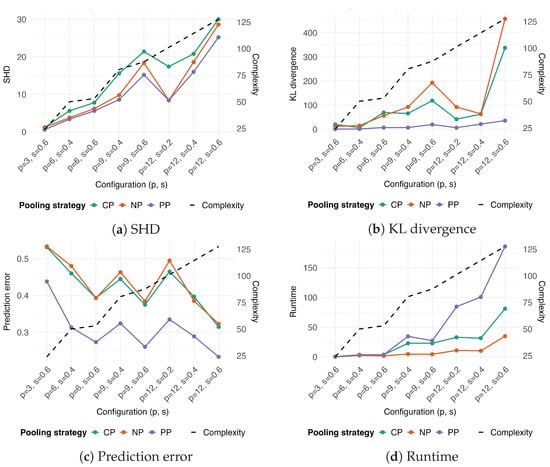

Next, we evaluate the performance of the three pooling strategies in relation to the graph’s complexity. For this analysis, we fix the number of samples to , the number of groups to , the group-size configuration to , and the node distribution to the configuration, to provide a typical and controlled data environment. Performance results, in terms of SHD, KL divergence, prediction error, and runtime, are presented in Figure 4.

Figure 4.

Performance comparison of pooling strategies across varying levels of graph complexity. The strategies compared are complete pooling, which ignores group structure; no pooling, which treats groups as independent fixed effects; and partial pooling, which uses random effects to share information across groups. Graph complexity (dashed line, right y-axis), defined in Equation (4), increases with the number of nodes (p) and the sparsity level (s) of the network. The four panels show performance (left y-axis) based on: (a) Structural Hamming Distance (SHD), (b) Kullback–Leibler (KL) divergence, (c) prediction error, and (d) runtime in minutes. Each pooling strategy is shown in a distinct color.

In terms of SHD, all methods were negatively affected by the graph’s complexity, though consistently showed slightly better performance than and (Figure 4a). The KL divergence of was minimally affected by complexity, whereas it increased substantially for and with increasing sparsity (Figure 4b). As previously observed, prediction error decreased with increasing graph complexity for all three strategies (Figure 4c), with consistently achieving the lowest prediction error. Finally, the runtime of all methods increased with complexity; this effect was most pronounced for , while remained the fastest method (Figure 4d).

4. Discussion

In this study, we conducted a comprehensive simulation study to evaluate the performance of a mixed-effect ABN model and to compare strategies for handling grouped data. Our findings provide strong empirical support for partial pooling (PP) via random effects as a robust and accurate approach to both structure learning and prediction for data with a two-level hierarchical structure.

4.1. Comparative Performance of Pooling Strategies

Our results demonstrate that the PP strategy consistently outperforms complete pooling (CP) and no pooling (NP) across nearly all evaluation metrics. This is consistent with the theoretical advantages of mixed-effects models, which balance the bias–variance trade-off by sharing information partially across groups. Partial pooling has proven effective for both continuous and discrete data: Scutari et al. [4] used mixed-effects models for Gaussian networks, and Azzimonti et al. [15] developed a hierarchical score that improves structure learning in discrete networks. The CP model, which assumes homogeneous data, produced biased structural estimates, consistent with the known pitfalls of ignoring data heterogeneity [4]. In contrast, the NP model treated groups independently, resulting in higher variance and reduced efficiency in learning shared patterns, a limitation that hierarchical models are designed to overcome [4,15]. For practitioners in fields where data are frequently clustered by hospital, laboratory, or region, these findings underscore the importance of choosing a pooling strategy that properly accounts for the underlying group structure to avoid erroneous conclusions.
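The bias–variance argument can be made concrete in the simplest normal–normal setting, where the within-group variance sigma2 and the between-group variance tau2 are assumed known. This is a textbook illustration, not the GLMM fitted by the mixed-effect ABN, which estimates these variances from the data; the function name is hypothetical and the grand mean is a simple size-weighted plug-in.

```python
def pooled_group_means(group_means, group_sizes, sigma2, tau2):
    """Return (complete, no_pool, partial) estimates of the group means.

    sigma2: within-group variance (assumed known)
    tau2:   variance of the group-level random intercepts (assumed known)
    """
    total = sum(group_sizes)
    # Complete pooling: a single overall mean, weighted by group size
    grand = sum(m * n for m, n in zip(group_means, group_sizes)) / total
    complete = [grand] * len(group_means)
    # No pooling: each group keeps its own sample mean
    no_pool = list(group_means)
    # Partial pooling: precision-weighted compromise between the two
    partial = []
    for m, n in zip(group_means, group_sizes):
        w = (n / sigma2) / (n / sigma2 + 1 / tau2)  # weight on the group's own mean
        partial.append(w * m + (1 - w) * grand)
    return complete, no_pool, partial


complete, no_pool, partial = pooled_group_means(
    group_means=[2.0, 0.0], group_sizes=[5, 45], sigma2=1.0, tau2=0.1)
print(partial)  # the small group (n = 5) is pulled strongly toward the overall mean
```

The small group's estimate moves from 2.0 (no pooling) to 0.8, while the large group barely moves: groups with little data borrow strength from the others, which is the mechanism behind the lower variance of PP relative to NP without the bias of CP.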

The prediction error results further highlight the advantages of the PP approach. While CP and NP perform similarly, PP consistently yields the lowest error (Figure 3c), which is critical in decision-support applications where predictive accuracy is paramount. However, PP also exhibited substantially higher variance in its prediction error. This can be attributed to simulations with only two groups (k = 2): a very small number of groups can destabilize the random-effect estimates and thus increase prediction error, as shown in Figure 1c. For the CP and NP strategies, prediction error decreases as the number of samples per group increases. This trend is less apparent for PP, which maintains a consistently low error regardless of the number of samples, further underscoring its efficiency in learning from grouped data.
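Why two groups are problematic can be seen from the variance of the estimated random-effects variance. In the optimistic case where the k group intercepts are drawn from N(0, tau2) and observed without noise, the unbiased sample variance has variance 2 tau2^2 / (k - 1), which is 2 for k = 2 but only about 0.11 for k = 20 when tau2 = 1. The minimal simulation below, with a hypothetical function name and settings, checks this numerically; real GLMM fits add within-group noise and are therefore less stable still.

```python
import random
import statistics


def spread_of_tau2_estimates(k, tau2=1.0, reps=2000, seed=1):
    """Variance, across replicates, of the sample variance of k group effects.

    Assumes the k random intercepts are drawn from N(0, tau2) and observed
    exactly, which is a simplification of a real mixed-model fit.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        effects = [rng.gauss(0.0, tau2 ** 0.5) for _ in range(k)]
        estimates.append(statistics.variance(effects))  # unbiased, k - 1 df
    return statistics.variance(estimates)


for k in (2, 5, 20):
    # Theory: 2 * tau2**2 / (k - 1), i.e. about 2.0, 0.5 and 0.105
    print(k, round(spread_of_tau2_estimates(k), 3))
```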

A key finding of this study is the behavior of the mixed-effect ABN algorithm as graph complexity increases. The accuracy of the PP strategy remained superior as networks grew larger and denser. This robustness is crucial for real-world applications, where the underlying networks comprise many variables and associations. The observed decrease in average prediction error with increasing network size can be attributed to a dilution effect: large errors at individual nodes are masked when averaged across the entire network. Consequently, for decision-making it is advisable to assess prediction accuracy at the specific nodes of substantive interest rather than relying solely on a global average. Our findings also confirmed that prediction errors in mixed-effect models decrease as the number of groups increases, consistent with the established principle that more groups provide more information for estimating the random-effects distribution [22].
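The dilution effect can be illustrated with the fold-averaged node errors from Table A3. The sketch assumes the global metric is an unweighted mean over nodes, which may differ from the aggregation used for the main figures, and it inherits the caveat that MSE and Brier scores are not on a common scale.

```python
# Fold-averaged prediction errors per node, copied from Table A3
node_error = {
    "b1": 0.092, "b2": 0.143, "b3": 0.161, "b4": 0.196,
    "c1": 0.120, "c2": 0.147, "c3": 0.108, "c4": 0.132,
    "n1": 0.116, "n2": 0.079, "n3": 0.091, "n4": 0.079,
}

# Assumed aggregation: unweighted mean over all nodes
global_error = sum(node_error.values()) / len(node_error)
worst_node = max(node_error, key=node_error.get)

print(f"global average: {global_error:.3f}")                 # global average: 0.122
print(f"worst node {worst_node}: {node_error[worst_node]:.3f}")  # worst node b4: 0.196
```

The error at node b4 is about 60% higher than the 12-node average, yet it is invisible in the single global number; adding more well-predicted nodes would lower the average further without improving b4.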

The analysis also reveals a clear, practical trade-off between accuracy and computational cost. The superior performance of the PP strategy comes at the price of longer computation times, particularly for more complex networks. In contrast, the model was consistently the fastest. For analyses where accuracy is paramount, PP is the preferred choice for grouped data. For applications requiring real-time inference or the analysis of very large networks, however, the faster CP or NP approaches may serve as pragmatic alternatives, provided that their potential loss of accuracy is acknowledged.

4.2. Limitations

Our study has several limitations that present opportunities for future research. Firstly, our simulations were performed under idealized conditions, where the data-generating process strictly adhered to the model assumptions. Future investigations should assess the robustness of these methods when assumptions are violated, for example, by incorporating non-Gaussian or skewed distributions for the random effects. Secondly, evaluating prediction performance across heterogeneous data types is challenging due to the lack of a unified metric. The prediction errors reported for Gaussian, binomial, and multinomial nodes are therefore not directly comparable, underscoring the need for a standardized evaluation framework (see also Appendix C). Thirdly, while we examined a broad range of parameters, the effect of the number of groups (k) deserves closer scrutiny, particularly at the lower threshold where estimating the variance of the random effects becomes unstable. Our findings showed a decline in performance at , which is consistent with the existing literature [22], but a more detailed analysis of this threshold would be valuable. Furthermore, this simulation study focused solely on networks with Gaussian, binomial, and multinomial nodes. Although Poisson models are compatible with the mixed-effect ABN framework under maximum likelihood estimation, integrating them into the Bayesian data simulation approach proved challenging because stable estimates were difficult to obtain (see Appendix B). Future work should incorporate Poisson-distributed nodes to broaden the applicability of mixed-effect ABNs to count data. Finally, extending this framework to dynamic Bayesian networks could offer a powerful method for analyzing longitudinal clustered data.

5. Conclusions

This study provides a rigorous quantitative validation of the mixed-effect ABN framework. By demonstrating its superior accuracy in structural recovery and prediction for grouped data, we establish it as a powerful and reliable tool for researchers and decision-makers dealing with complex, hierarchical systems. The primary contribution of this work is the empirical confirmation that accounting for group-level heterogeneity is a critical component for robust inference in Bayesian networks with mixed variable distributions. The clear elucidation of the accuracy-computation trade-off further provides practical guidance for its implementation, ensuring that the appropriate pooling strategy can be selected to meet the specific needs of a given scientific or industrial application.

Author Contributions

Conceptualization, M.C. and M.D.; Methodology, M.C., M.D. and R.F.; Software, M.D.; Validation, M.C. and M.D.; Formal analysis, M.C. and M.D.; Investigation, M.C. and M.D.; Resources, M.C. and M.D.; Data curation, M.C.; Writing—original draft preparation, M.C.; Writing—review and editing, M.C., M.D. and R.F.; Visualization, M.C. and M.D.; Supervision, Not applicable; Project administration, Not applicable; Funding acquisition, R.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Digitalization Initiative of the Zurich Higher Education Institutions (grant number DIZH_Stroke DynamiX_PC22-47_Furrer) and by GEMINI (funded by the EU Horizon Europe R&I program, No. 101136438).

Data Availability Statement

All data used in this simulation study were synthetically generated. The R code used for data simulation, analysis, and figure generation is publicly available in the following GitHub repository: github.com/furrer-lab/mabnsimstud (accessed on 29 September 2025). This repository contains all necessary scripts to fully reproduce the results presented in this paper.

Acknowledgments

The authors declare the use of Generative AI technologies during the preparation of this manuscript. These tools were utilized exclusively for the purpose of language refinement, which included improving grammatical accuracy, syntax, and clarity. The AI systems provided assistance in editing the prose but did not contribute to the core intellectual work of the manuscript in any way. All research ideas, the methodological framework, data analysis, interpretation of results, and the final conclusions are the original work of the human authors. The authors have critically reviewed and edited all text and assume full and sole responsibility for the originality, accuracy, and integrity of the entire manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ABN | Additive Bayesian Network |
| GLM | Generalized Linear Model |
| BN | Bayesian Network |
| DAG | Directed Acyclic Graph |
| GLMM | Generalized Linear Mixed Model |
| BIC | Bayesian Information Criterion |
| SHD | Structural Hamming Distance |
| KL | Kullback–Leibler |
| MSE | Mean Squared Error |
| PP | Partial Pooling |
| NP | No Pooling |
| CP | Complete Pooling |

Appendix A. Empirical Validation of Search Constraint

Empirical results support imposing a strict constraint on the maximum number of parents (max.parents) in the exact structure learning algorithm detailed in Section 2.2.3. The constraint substantially reduces computational cost while keeping structural, parametric, and predictive performance stable.

The trade-off between model accuracy and computational complexity was evaluated under varying max.parents constraints. Table A1 presents key performance metrics for simulated networks with and nodes. The experimental configuration consists of samples, evenly distributed groups, a graph sparsity of , and a balanced node distribution. We test max.parents values of 3, 4, 5, and the maximum possible value, .

The results in Table A1 reveal a clear trade-off between computational expense and model accuracy, which becomes more pronounced as network size increases. For the smaller network (), performance metrics and runtime are stable across all tested max.parents values, suggesting that a stricter constraint can be applied without sacrificing accuracy. For the larger network, increasing the max.parents limit from 3 to 5 improves the model fit by reducing both SHD and KL divergence at the cost of an increased runtime from 38.0 to 44.2 min. Notably, further relaxing this limit to 8 yields no additional improvement in model accuracy while increasing the runtime to 51.1 min. This result demonstrates a point of diminishing returns, where loosening the constraint beyond a certain threshold increases the computational burden without a corresponding gain in performance. These findings empirically validate our use of a restrictive max.parents constraint as an effective strategy for managing computational complexity while preserving model quality.
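The effect of max.parents on the search space can be quantified by counting candidate parent sets: exact structure learning must score every admissible parent set of each node, and a node with n candidate parents has sum over j = 0..m of C(n, j) sets of size at most m. The sketch below assumes a node with 8 candidate parents (the unrestricted limit used for the larger network); the function name is hypothetical.

```python
from math import comb


def n_parent_sets(n_candidates, max_parents):
    """Number of parent sets with at most max_parents members (including the empty set)."""
    return sum(comb(n_candidates, j) for j in range(max_parents + 1))


for m in (3, 4, 5, 8):
    print(m, n_parent_sets(8, m))
# 3 93
# 4 163
# 5 219
# 8 256
```

The measured runtimes in Table A1 grow more slowly than these counts, so the count indicates how the search space expands rather than serving as a runtime model.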

Table A1.

Performance metrics (SHD, KL divergence, prediction error, and runtime in minutes) under varying max.parents constraint.

| p | max.parents | SHD | KL | Prediction Error | Runtime (min) |
|---|---|---|---|---|---|
|  | 3 | 3.4 | 1.41 | 0.313 | 3.45 |
|  | 4 | 3.4 | 1.41 | 0.314 | 3.24 |
|  | 5 | 3.4 | 1.41 | 0.312 | 3.48 |
|  | 3 | 7.6 | 6.62 | 0.327 | 38.0 |
|  | 4 | 7.4 | 7.12 | 0.328 | 35.2 |
|  | 5 | 6.8 | 5.72 | 0.329 | 44.2 |
|  | 8 | 6.8 | 5.73 | 0.328 | 51.1 |

Appendix B. Numerical Stability in Poisson Modeling

This appendix provides empirical evidence supporting our decision to exclude Poisson-distributed variables from the main simulation study (see Section 2.2.2) due to their inherent numerical instability.

Table A2 below illustrates the high proportion of non-convergence encountered when fitting Poisson models. The test was conducted on a reduced data set (with fixed number of samples , number of groups , and distribution of groups), allowing the number of nodes (p), the graph sparsity (s), and the distribution of nodes to vary. Each configuration was then replicated 100 times to demonstrate the baseline instability of the Poisson family fitting.

The high failure proportion in Table A2 for data sets that include Poisson-distributed variables indicates that running the complete simulation with such variables would yield inconsistent and statistically invalid results, which supports the practical necessity of limiting the simulation scope.

Table A2.

Proportion of valid simulations (successful convergence) across different graph configurations (number of nodes, graph sparsity, and node distribution). Each configuration was replicated 100 times.

| Nodes Distribution | Sparsity (s) | Number of Nodes (p) |  |  |  |
|---|---|---|---|---|---|
|  | 0.2 | - | - | 0.02 | 0.04 |
|  | 0.4 | - | 0.02 | 0.04 | 0 |
|  | 0.6 | 0.01 | 0.05 | 0.01 | 0 |
|  | 0.2 | - | - | 0.02 | 0.02 |
|  | 0.4 | - | 0.01 | 0.02 | 0.01 |
|  | 0.6 | 0.01 | 0.02 | 0.03 | 0.02 |
|  | 0.2 | - | - | 0.02 | 0.02 |
|  | 0.4 | - | 0.03 | 0.04 | 0.04 |
|  | 0.6 | 0.01 | 0.03 | 0.04 | 0.05 |
|  | 0.2 | - | - | 0.04 | 0 |
|  | 0.4 | - | 0.04 | 0 | 0 |
|  | 0.6 | 0.01 | 0.02 | 0 | 0 |

Appendix C. Disaggregated Prediction Error Results

To provide a more granular view of the model’s predictive performance, Table A3 presents the unaggregated prediction errors from a representative simulation setting (, with balanced group sizes and node distributions). The table details the error for each of the 12 nodes across five cross-validation folds, as well as the averaged error per node and by node type. The node prefixes ‘b’, ‘c’, and ‘n’ denote binomial, categorical (multinomial), and Gaussian variables, respectively. For Gaussian nodes, errors are quantified as Mean Squared Error (MSE), while for binomial and categorical nodes, they are measured using the (multi-category) Brier score, as detailed in Section 2.3.
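The two error measures can be sketched as follows. The Brier function uses the original multi-category form (squared distance between the predicted probability vector and the one-hot outcome, averaged over observations); for a binary node this form equals twice the common binary Brier score, and the normalization detailed in Section 2.3 may differ. Function names and the dictionary-based input format are hypothetical.

```python
def mse(y_true, y_pred):
    """Mean squared error for a Gaussian node."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def brier_multiclass(y_true, prob_pred, categories):
    """Multi-category Brier score.

    y_true:     observed category labels
    prob_pred:  one dict per observation, mapping each category to its predicted probability
    categories: all possible categories of the node
    """
    total = 0.0
    for label, probs in zip(y_true, prob_pred):
        # Squared distance between predicted probabilities and the one-hot outcome
        total += sum((probs[c] - (1.0 if c == label else 0.0)) ** 2 for c in categories)
    return total / len(y_true)


print(mse([1.0, 2.0], [1.0, 4.0]))  # 2.0
print(brier_multiclass(["a", "b"],
                       [{"a": 1.0, "b": 0.0}, {"a": 0.5, "b": 0.5}],
                       ["a", "b"]))  # 0.25
```

Because MSE depends on the scale of the Gaussian variable while the Brier score is bounded, the two cannot be averaged meaningfully without further standardization.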

Table A3.

Unaggregated prediction errors by node type. Errors are shown for each of the 5 cross-validation folds, averaged over the folds and averaged per node distribution (in italics).

| Node | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Averaged |
|---|---|---|---|---|---|---|
| b1 | 0.111 | 0.097 | 0.075 | 0.087 | 0.088 | 0.092 |
| b2 | 0.132 | 0.148 | 0.143 | 0.146 | 0.146 | 0.143 |
| b3 | 0.179 | 0.146 | 0.156 | 0.157 | 0.166 | 0.161 |
| b4 | 0.207 | 0.205 | 0.182 | 0.177 | 0.210 | 0.196 |
| *averaged_b* | - | - | - | - | - | *0.148* |
| c1 | 0.106 | 0.142 | 0.125 | 0.115 | 0.111 | 0.120 |
| c2 | 0.147 | 0.136 | 0.134 | 0.152 | 0.160 | 0.147 |
| c3 | 0.102 | 0.112 | 0.087 | 0.108 | 0.129 | 0.108 |
| c4 | 0.121 | 0.142 | 0.147 | 0.112 | 0.138 | 0.132 |
| *averaged_c* | - | - | - | - | - | *0.126* |
| n1 | 0.116 | 0.100 | 0.142 | 0.123 | 0.102 | 0.116 |
| n2 | 0.096 | 0.072 | 0.086 | 0.081 | 0.065 | 0.079 |
| n3 | 0.086 | 0.096 | 0.089 | 0.101 | 0.080 | 0.091 |
| n4 | 0.068 | 0.084 | 0.091 | 0.084 | 0.071 | 0.079 |
| *averaged_n* | - | - | - | - | - | *0.086* |

This disaggregated presentation is necessary for transparency because the global prediction error metric used in the main text aggregates distinct error types. As noted in Section 4.2, a single, unified performance metric applicable across Gaussian, binomial, and multinomial distributions is, to our knowledge, unavailable. This breakdown provides more direct insight into the model's predictive accuracy at the level of individual nodes, complementing the aggregated results presented in the main text.

References

1. Spiegelhalter, D.; Abrams, K.; Myles, J. Bayesian Approaches to Clinical Trials and Health-Care Evaluation; Wiley: Hoboken, NJ, USA, 2004.

2. Gelman, A.; Hill, J. Data Analysis Using Regression and Multilevel/Hierarchical Models; Analytical Methods for Social Research; Cambridge University Press: Cambridge, UK, 2006.

3. McCulloch, C.; Searle, S.R.; Neuhaus, J. Generalized, Linear and Mixed Models, 2nd ed.; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 2008.

4. Scutari, M.; Marquis, C.; Azzimonti, L. Using Mixed-Effects Models to Learn Bayesian Networks from Related Data Sets. In Proceedings of the 11th International Conference on Probabilistic Graphical Models, Almeria, Spain, 5–7 October 2022; pp. 73–84.

5. Delucchi, M.; Spinner, G.R.; Scutari, M.; Bijlenga, P.; Morel, S.; Friedrich, C.M.; Furrer, R.; Hirsch, S. Bayesian network analysis reveals the interplay of intracranial aneurysm rupture risk factors. Comput. Biol. Med. 2022, 147, 105740.

6. Hartnack, S.; Springer, S.; Pittavino, M.; Grimm, H. Attitudes of Austrian Veterinarians towards Euthanasia in Small Animal Practice: Impacts of Age and Gender on Views on Euthanasia. BMC Vet. Res. 2016, 12, 26.

7. McCormick, B.J.J.; Sanchez-Vazquez, M.J.; Lewis, F.I. Using Bayesian Networks to Explore the Role of Weather as a Potential Determinant of Disease in Pigs. Prev. Vet. Med. 2013, 110, 54–63.

8. Guinat, C.; Comin, A.; Kratzer, G.; Durand, B.; Delesalle, L.; Delpont, M.; Guérin, J.L.; Paul, M.C. Biosecurity risk factors for highly pathogenic avian influenza (H5N8) virus infection in duck farms, France. Transbound. Emerg. Dis. 2020, 67, 2961–2970.

9. Aggrey, S.; Egeru, A.; Kalule, J.B.; Lukwa, A.T.; Mutai, N.; Hartnack, S. Household Satisfaction with Health Services and Response Strategies to Malaria in Mountain Communities of Uganda. Trans. R. Soc. Trop. Med. Hyg. 2025, 119, 85–96.

10. Kowalska, M.E.; Shukla, A.K.; Arteaga, K.; Crasta, M.; Dixon, C.; Famose, F.; Hartnack, S.; Pot, S.A. Evaluation of Risk Factors for Treatment Failure in Canine Patients Undergoing Photoactivated Chromophore for Keratitis—Corneal Cross-Linking (PACK-CXL): A Retrospective Study Using Additive Bayesian Network Analysis. BMC Vet. Res. 2023, 19, 227.

11. Kratzer, G.; Lewis, F.; Comin, A.; Pittavino, M.; Furrer, R. Additive Bayesian Network Modeling with the R Package abn. J. Stat. Softw. 2023, 105, 1–41.

12. Scutari, M. Learning Bayesian Networks with the bnlearn R Package. J. Stat. Softw. 2010, 35, 1–22.

13. Gelman, A.; Carlin, J.; Stern, H.; Dunson, D.; Vehtari, A.; Rubin, D. Bayesian Data Analysis, 3rd ed.; Chapman and Hall/CRC: Boca Raton, FL, USA, 2013.

14. Delucchi, M.; Bijlenga, P.; Morel, S.; Furrer, R.; Hostettler, I.; Bakker, M.; Bourcier, R.; Lindgren, A.; Maschke, S.; Bozinov, O.; et al. Mixed-effects Additive Bayesian Networks for the Assessment of Ruptured Intracranial Aneurysms: Insights from Multicenter Data. Comput. Biol. Med. 2025, submitted.

15. Azzimonti, L.; Corani, G.; Scutari, M. A Bayesian hierarchical score for structure learning from related data sets. Int. J. Approx. Reason. 2022, 142, 248–265.

16. Koivisto, M.; Sood, K. Exact Bayesian Structure Discovery in Bayesian Networks. J. Mach. Learn. Res. 2004, 5, 549–573.

17. Delucchi, M.; Liechti, J.; Spinner, G.; Furrer, R. Additive Bayesian Networks. J. Open Source Softw. 2024, 9, 6822.

18. Bolker, B.M.; Brooks, M.E.; Clark, C.J.; Geange, S.W.; Poulsen, J.R.; Stevens, M.H.H.; White, J.S.S. Generalized Linear Mixed Models: A Practical Guide for Ecology and Evolution. Trends Ecol. Evol. 2009, 24, 127–135.

19. Lunn, D.; Spiegelhalter, D.; Thomas, A.; Best, N. The BUGS project: Evolution, critique and future directions. Stat. Med. 2009, 28, 3049–3067.

20. Plummer, M.; Best, N.; Cowles, K.; Vines, K. CODA: Convergence Diagnosis and Output Analysis for MCMC. R News 2006, 6, 7–11.

21. Sagan, A. Sample Size in Multilevel Structural Equation Modeling—The Monte Carlo Approach. Econometrics 2019, 23, 63–79.

22. Maas, C.; Hox, J. Sufficient sample sizes for multilevel modeling. Methodol. Eur. J. Res. Methods Behav. Soc. Sci. 2005, 1, 86–92.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).