1. Introduction

In an increasingly innovation-driven global economy, governments worldwide have enacted a vast number of policies to foster entrepreneurial ecosystems, aiming to promote economic growth and employment [1,2,3,4,5]. These policies, ranging from macro-level regulations to specific financial subsidies and tax incentives, have generated a massive volume of complex and fragmented policy texts. This information explosion presents significant challenges for policymakers, researchers, and entrepreneurs, including information overload, difficulties in policy evaluation and monitoring, and a lack of transparency [6,7]. To address these challenges, the application of Artificial Intelligence (AI) and Natural Language Processing (NLP) for entrepreneurship policy text classification has emerged [8,9]. This technology automates the process of categorizing immense volumes of unstructured texts, such as policy documents, evaluation reports, press releases, and public feedback, into predefined categories, for instance, by policy instrument, target group, or policy objective. This greatly enhances the efficiency and accuracy of policy analysis. It not only provides data-driven support for evidence-based policy evaluation and optimization but also increases policy transparency. By enabling entrepreneurs and research institutions to more easily access and understand relevant information, it offers crucial technical support for building a healthy and efficient entrepreneurial ecosystem [10,11].

Current data-driven text classification methods are primarily divided into two main categories [12,13,14,15]. The first is based on traditional machine learning, which represents a classic paradigm in the field [16]. Its core process involves transforming unstructured text into machine-readable numerical vectors through handcrafted feature engineering [17]. This approach typically represents text using either the Bag-of-Words model, which treats a document as an unordered collection of word frequencies often weighted by the TF-IDF algorithm, or the N-gram model, which preserves local word order by capturing sequences of consecutive words. Once the text is vectorized, these feature vectors are fed into supervised learning classifiers such as Naive Bayes, Support Vector Machine, or Logistic Regression for training, enabling the model to learn the mapping between text and its category [18,19]. The effectiveness of this class of methods is heavily dependent on the quality of the feature engineering [20].

The second category is composed of methods based on deep learning, which represent the state of the art in text classification technology [21,22]. Their primary advantage lies in the ability to automatically extract high-level semantic features from text through an end-to-end learning process, thus circumventing the need for cumbersome and experience-dependent handcrafted feature engineering [23,24]. These methods begin by mapping words into low-dimensional, dense vectors using word embedding techniques. Subsequently, different neural network architectures are employed to capture textual features: convolutional neural networks efficiently extract key local phrase information through convolution and pooling operations [25]; long short-term memory networks, a variant of recurrent neural networks, excel at processing text sequences to capture long-range contextual dependencies via their gating mechanisms [26]; and architectures represented by the Transformer model leverage a core self-attention mechanism to process text in parallel, directly calculating the relational strength between words to build exceptionally powerful contextual representations, achieving breakthrough results in virtually all text classification tasks [27,28,29,30].

Despite the superior performance of text classification methods, two critical issues remain, limiting their practical application in the entrepreneurship policy domain. First, the intricate and multi-faceted nature of policy texts often leads to insufficient information extraction. Existing models frequently struggle to synergistically leverage the diverse types of information embedded in the text—such as statistical regularities, linguistic structures, and connections to external factual knowledge—resulting in a superficial semantic understanding and failing to overcome the semantic sparsity prevalent in this field. Second, the performance of state-of-the-art deep learning models is heavily reliant on large-scale annotated datasets for training. In specialized domains like entrepreneurship policy, acquiring such labeled data is a major bottleneck, as it is both labor-intensive and requires domain expertise. This dependency on limited labeled data renders models highly susceptible to overfitting, which severely impairs their ability to generalize to new and unseen policy documents in real-world applications.

To this end, a Multi-granularity Invariant Structure Learning (MISL) model is proposed for text classification in entrepreneurship policy. Specifically, our approach comprises three core components designed to systematically address the aforementioned challenges. First, we introduce a multi-view feature engineering module to tackle the issue of insufficient information extraction. This module constructs three distinct graphs to capture semantics from different perspectives: a statistical graph to model word co-occurrence patterns, a linguistic graph to capture syntactic roles and resolve ambiguity, and a knowledge graph to integrate external factual information. By fusing these heterogeneous views, the model generates a comprehensive and rich representation of the policy text, effectively alleviating semantic sparsity. Second, to mitigate the problem of overfitting on limited labeled data, we employ a sample-invariant representation learning strategy. This is achieved through a data augmentation process where we generate varied instances of the original text. The model is then trained to maximize the mutual information between the representations of the original text and its augmented versions. This forces the model to learn the essential and stable semantic core of the text, thereby enhancing its robustness and generalization capabilities. Finally, the model further refines these representations through neighborhood-invariant semantic learning. By constructing a nearest-neighbor graph and applying a contrastive objective, this component encourages intra-class compactness and inter-class separability in the feature space. It pulls semantically similar policy texts closer together while pushing dissimilar ones apart, which significantly improves the discriminative power of the final representations for more accurate classification.

The main contributions are threefold:

We propose a novel multi-view feature engineering module that integrates statistical, linguistic, and knowledge-based representations through distinct graph structures. This approach effectively alleviates semantic sparsity in policy texts by capturing comprehensive contextual information from multiple perspectives.

We introduce a sample-invariant representation learning strategy that leverages data augmentation and mutual information maximization. This method enhances the model’s robustness and generalization capabilities by focusing on the essential and stable semantic core of the text, even with limited labeled data.

We develop a neighborhood-invariant semantic learning component that constructs a nearest-neighbor graph and applies a contrastive objective. This technique improves the discriminative power of the final representations by promoting intra-class compactness and inter-class separability, leading to more accurate text classification.

2. The Proposed Method

Given a text document

D composed of a sequence of

L tokens, where the document belongs to one of

C predefined categories, the goal of the text classification task aims to learn a mapping function

to predict the corresponding category label

, i.e.,

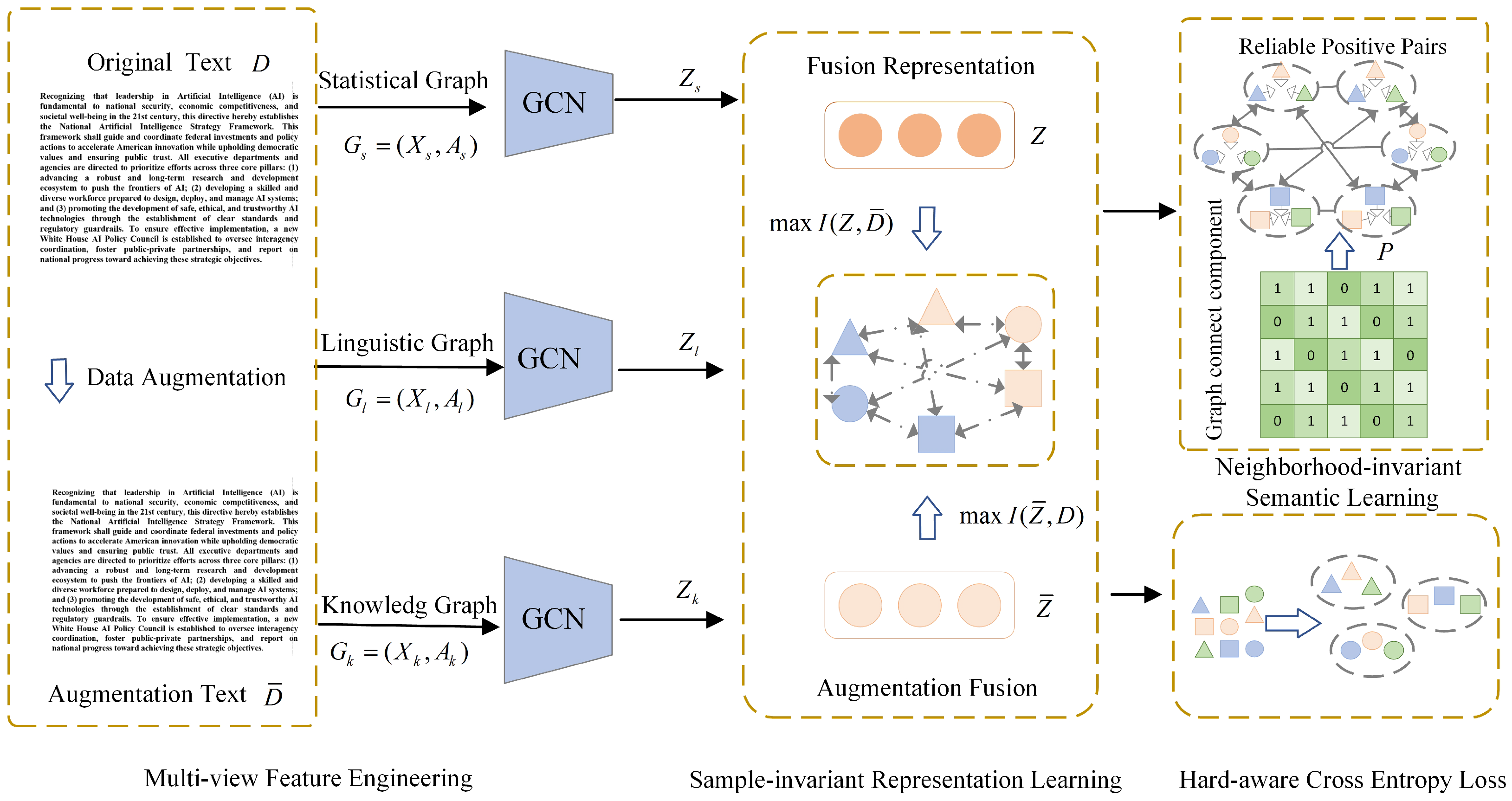

. To achieve the above objective, this paper proposes a multi-granularity invariant structure learning method (MISL) for mining short text patterns, which contains the multi-view feature engineering, the sample-invariant representation learning, and the neighborhood-invariant semantic learning, as shown in

Figure 1.

2.1. Multi-View Feature Engineering

Multi-view feature engineering aims to synergistically leverage the intrinsic statistical regularities and linguistic structures of the text as well as the factual knowledge provided by external knowledge bases, to alleviate semantic sparsity prevalent in texts. To achieve this objective, we design and construct three distinct graph structures to capture and model each of these information types, thereby generating a richer contextual representation for short texts.

Statistical representation. The statistical regularities of a text reflect the distributional properties and co-occurrence patterns of its vocabulary. To model this type of information explicitly, we construct a statistical graph whose nodes are words. The connection strength between nodes is given by an adjacency matrix whose weights are calculated using Pointwise Mutual Information (PMI), which quantifies how strongly two words co-occur. Node features are initialized with GloVe word vectors. The statistical graph is then fed into a two-layer graph convolutional network (GCN) to learn the statistical representations.
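As an illustration of the PMI weighting, the sketch below builds a word-word adjacency from sliding windows, using the standard definition PMI(i, j) = log(p(i, j) / (p(i) p(j))). The window size, the clipping of negative PMI values to zero, and the function name `pmi_adjacency` are assumptions made for this example and are not taken from the method description.

```python
import math
from collections import Counter
from itertools import combinations


def pmi_adjacency(docs, window=3):
    """Return {(word_u, word_v): weight} with positive PMI weights.

    docs: list of token lists. window: sliding-window size (assumed value).
    """
    window_count = 0
    word_freq = Counter()  # number of windows containing each word
    pair_freq = Counter()  # number of windows containing each word pair
    for tokens in docs:
        if len(tokens) <= window:
            spans = [tokens]
        else:
            spans = [tokens[i:i + window] for i in range(len(tokens) - window + 1)]
        for span in spans:
            window_count += 1
            unique = sorted(set(span))
            word_freq.update(unique)
            pair_freq.update(combinations(unique, 2))

    adjacency = {}
    for (u, v), n_uv in pair_freq.items():
        # p(u, v) / (p(u) p(v)) with probabilities estimated from window counts.
        pmi = math.log(n_uv * window_count / (word_freq[u] * word_freq[v]))
        if pmi > 0:  # assumption: keep only positively associated pairs
            adjacency[(u, v)] = adjacency[(v, u)] = pmi
    return adjacency
```

Word pairs that co-occur less often than chance receive no edge under this choice, which keeps the graph sparse.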

Linguistic representation. Linguistic structure, particularly syntactic information, is crucial for accurately interpreting the meaning of a text. We focus on utilizing Part-of-Speech (POS) tagging to parse the syntactic roles of words (e.g., distinguishing adjectives from adverbs), thereby resolving lexical ambiguity. To this end, we construct a linguistic graph whose nodes are all unique POS tags. Its adjacency matrix is also computed with PMI to model the co-occurrence patterns of different POS tags. The initial feature of each POS node is set as a one-hot vector. As with the statistical representation, we apply a two-layer graph convolutional network to generate the linguistic representation.
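For reference, a two-layer graph convolutional network is commonly written in the form below. This is the standard formulation of Kipf and Welling and is given here as an assumption, since the layer equation is not reproduced in this text. The symbols A (adjacency matrix of a view), X (initial node features), W^{(0)} and W^{(1)} (learnable weights), and H (output node representations) are illustrative names.

```latex
\hat{A} = \tilde{D}^{-1/2}\,(A + I)\,\tilde{D}^{-1/2}, \qquad
\tilde{D}_{ii} = \sum_{j} (A + I)_{ij}, \qquad
H = \hat{A}\,\mathrm{ReLU}\!\left(\hat{A}\, X\, W^{(0)}\right) W^{(1)}
```

Under this form, each node aggregates features from its two-hop neighborhood, with the PMI or similarity weights in A controlling how much each neighbor contributes.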

Knowledge representation. External factual knowledge can supplement short texts with necessary background information, significantly enhancing the performance of classification models. To incorporate this knowledge, we first identify entities in the text and link them to an external knowledge base, and then construct a knowledge graph over the linked entities. In the implementation, we use the TAGME toolkit to perform entity linking against the NELL knowledge base, and the resulting entities form the node set. The initial entity embeddings are generated using the TransE model, which is adept at learning entity relationships in a knowledge graph. The adjacency matrix is derived from the cosine similarity between pairs of entity embeddings to capture semantic proximity. Likewise, we apply a two-layer graph convolutional network to generate the knowledge representation.
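The similarity-based adjacency of the knowledge view can be sketched as follows. The embeddings are passed in as plain lists (in the method they would come from TransE), and clipping negative similarities to a threshold of zero is an assumption of this example, as is the function name `entity_adjacency`.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def entity_adjacency(embeddings, threshold=0.0):
    """Return (entity_names, matrix) where matrix[i][j] is the cosine similarity
    of entities i and j, or 0.0 when it does not exceed `threshold` (assumed rule)."""
    names = list(embeddings)
    matrix = [[0.0] * len(names) for _ in names]
    for i, a in enumerate(names):
        for j, b in enumerate(names):
            sim = cosine(embeddings[a], embeddings[b])
            if sim > threshold:
                matrix[i][j] = sim
    return names, matrix
```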

Based on the above three representations, a multi-view fusion function is designed to aggregate complementary information, producing the fusion representation Z of the text. In the experiments, the fusion function is implemented as the concatenation of the three representations.

2.2. Sample-Invariant Representation Learning

Sample-invariant representation learning aims to exploit the invariant structure hidden in each text to boost the discriminative ability of the representations. To accomplish this, we introduce a data augmentation strategy to generate augmented text and then maximize the mutual information between the augmented text and the original text, endowing the representations with sample-invariant structure.

Specifically, we define a text data augmentation strategy that generates augmented text from three augmentation methods, i.e., back translation, random deletion, and synonym replacement, controlled by the back translation rate, random deletion rate, and synonym replacement rate, respectively. Applying this strategy to the text D yields its augmented text. Then, through the multi-view feature engineering, the augmentation fusion representation is obtained.
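A minimal sketch of such an augmentation pipeline is given below. How the three methods are composed is not specified in this text, so applying them sequentially is an assumption. The `back_translate` argument is a hypothetical callable standing in for a translation model, and the synonym dictionary is supplied by the caller.

```python
import random


def random_deletion(tokens, rate, rng):
    """Drop each token independently with probability `rate`."""
    kept = [tok for tok in tokens if rng.random() >= rate]
    # Keep at least one token so a non-empty text never becomes empty.
    return kept if kept or not tokens else [rng.choice(tokens)]


def synonym_replacement(tokens, rate, synonyms, rng):
    """Swap a token for one of its listed synonyms with probability `rate`."""
    return [
        rng.choice(synonyms[tok]) if tok in synonyms and rng.random() < rate else tok
        for tok in tokens
    ]


def augment(tokens, delete_rate, synonym_rate, synonyms,
            back_translate=None, back_translation_rate=0.0, seed=0):
    """Apply back translation, random deletion, then synonym replacement
    (sequential order is an assumption of this sketch)."""
    rng = random.Random(seed)
    out = list(tokens)
    if back_translate is not None and rng.random() < back_translation_rate:
        out = back_translate(out)  # hypothetical callable: tokens -> tokens
    out = random_deletion(out, delete_rate, rng)
    return synonym_replacement(out, synonym_rate, synonyms, rng)
```

Fixing the seed makes an augmented view reproducible, which is convenient when comparing runs.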

In general, data augmentation should not alter the semantic information of the text. That is, the original text and the augmented text should have consistent semantics. Thus, the mutual information between the original fusion representations and the augmented text is maximized to achieve this objective. By the definition of mutual information, this quantity can be expressed as a Kullback–Leibler (KL) divergence involving the marginal distribution of the augmented text, the marginal distribution of the representations, and the conditional distribution of Z given the augmented text.
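The relation between mutual information and the KL divergence described here is a standard identity and can be written as below. The notation is an assumption, since the original symbols are not preserved in this text: \tilde{D} stands for the augmented text, Z for the fusion representation, and I(\cdot\,;\cdot) for the mutual information function.

```latex
I(\tilde{D}; Z)
  = D_{\mathrm{KL}}\!\left( p(\tilde{D}, Z) \,\middle\|\, p(\tilde{D})\, p(Z) \right)
  = \mathbb{E}_{p(\tilde{D})}\!\left[ D_{\mathrm{KL}}\!\left( p(Z \mid \tilde{D}) \,\middle\|\, p(Z) \right) \right]
```

The second form uses exactly the three distributions named in the text: the marginal of the augmented text, the marginal of the representations, and the conditional of Z given the augmented text.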

However, the direct optimization of a KL divergence-based objective presents a significant challenge in the context of deep learning. The unbounded nature of the KL divergence can lead to unstable training dynamics, where the loss can grow indefinitely, causing exploding gradients. To mitigate this, we seek a more robust and well-behaved alternative. The Jensen–Shannon (JS) divergence is an ideal candidate because it is both symmetric and, crucially, bounded (within [0, log 2] when the natural logarithm is used). By substituting JS divergence for KL divergence, we reformulate our objective accordingly.

While theoretically appealing, the direct computation of JS divergence is often intractable, as it requires knowledge of the underlying distribution, which is typically unknown. To overcome this, we employ a variational estimation approach. The variational lower bound of the JS divergence between two distributions is expressed through a function, typically a neural network, that acts as a discriminator, together with the sigmoid function and expectations over the two distributions. This framework effectively reframes the problem of density ratio estimation as a more tractable binary classification task. Instantiating the two distributions as the joint distribution of augmented texts and representations and the product of their marginals then yields the objective for mutual information maximization.
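A standard form of this variational bound, which follows from the optimal-discriminator analysis of the binary classification objective, is shown below. The symbols are assumptions made for this illustration: P and Q for the two distributions, T for the discriminator, and \sigma for the sigmoid function. The exact scaling and constants used by the authors may differ.

```latex
\mathrm{JS}(P \,\|\, Q) \;\ge\; \log 2
  + \tfrac{1}{2}\Big( \mathbb{E}_{x \sim P}\big[\log \sigma(T(x))\big]
  + \mathbb{E}_{x \sim Q}\big[\log\big(1 - \sigma(T(x))\big)\big] \Big)
```

Taking P = p(\tilde{D}, Z) and Q = p(\tilde{D})\,p(Z) gives a bound that can be maximized jointly over the discriminator and the representation model. The additive constant and the factor 1/2 do not affect the maximizer and are often dropped in practice.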

In practice, the expectation over the product of marginals is approximated using negative sampling. We treat an augmented text and the fusion representation Z of its corresponding original text D as a positive pair, and the same augmented text and the fusion representation Z of another original text as a negative pair. By training the discriminator to distinguish these pairs, the representation learning model is implicitly forced to maximize the mutual information.

Finally, the loss of sample-invariant representation learning is defined over these positive and negative pairs.
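One common way to turn the JS-based bound into a trainable loss is sketched below, with constants dropped. It assumes the discriminator has already produced raw scores for the positive pairs and the negative pairs; the function names and the equal weighting of the two terms are assumptions of this example.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sample_invariant_loss(pos_scores, neg_scores):
    """Binary-classification form of the negated JS bound.

    pos_scores: discriminator outputs for (augmented text, own representation).
    neg_scores: discriminator outputs for (augmented text, other representation).
    """
    # softplus(-s) equals -log(sigmoid(s)); softplus(s) equals -log(1 - sigmoid(s)).
    pos_term = sum(softplus(-s) for s in pos_scores) / len(pos_scores)
    neg_term = sum(softplus(s) for s in neg_scores) / len(neg_scores)
    return pos_term + neg_term
```

Minimizing this quantity pushes positive scores up and negative scores down, which tightens the bound on the mutual information.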

2.3. Neighborhood-Invariant Semantic Learning

Neighborhood-invariant semantic learning aims to pull high-confidence similar samples closer together and push high-confidence dissimilar samples apart, so that the learned text patterns exhibit intra-class compactness and inter-class separability.

Specifically, given the fusion representations, we build a nearest-neighbor graph by using k-nearest neighbors to select the top k most similar neighbors of each text sample. Each element of this graph indicates whether one text sample belongs to the nearest-neighbor set of another.
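The construction of the nearest-neighbor graph can be sketched as follows. Cosine similarity is assumed as the similarity measure, and the convention that entry (i, j) is 1 when sample j is among the k nearest neighbors of sample i is a choice made for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_graph(reps, k):
    """Return a 0/1 matrix where graph[i][j] = 1 if j is a top-k neighbor of i."""
    n = len(reps)
    graph = [[0] * n for _ in range(n)]
    for i in range(n):
        others = [j for j in range(n) if j != i]
        # Rank the other samples by similarity to sample i, most similar first.
        others.sort(key=lambda j: cosine(reps[i], reps[j]), reverse=True)
        for j in others[:k]:
            graph[i][j] = 1
    return graph
```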

Following the initial identification of nearest neighbors for every sample, a naive method might consider this entire neighbor set as the basis for forming positive pairs. Such a strategy, however, risks contaminating the learning signal with semantically inconsistent pairings, as some neighbors may be incidental rather than truly related. To address this potential for noise, we implement a robust filtering mechanism that consults the pre-computed, stable class assignments. Our model mandates that for a pair of samples to be considered positive, they must simultaneously fulfill two requirements: a local relationship (being nearest neighbors) and a global one (residing in the same class). In contrast, pairs are defined as negative when they exhibit neither a local nor a global semantic connection. By imposing this strict, two-tiered validation, we effectively purify the set of positive and negative pairs, ensuring a more accurate and stable optimization process.

Based on the above analysis, we identify the disjoint subgraphs of the nearest-neighbor graph as different classes using the connected component labeling algorithm. This algorithm efficiently partitions the set of nodes into components, where any two nodes within a component are connected by a path. We treat each component as a pseudo-label, assuming that all texts within it are semantically similar. From the component ID of each text, we derive a binary pseudo-label matrix over the N text samples, in which an entry of 1 signifies that the two corresponding texts belong to the same semantic component. Based on neighborhood structure information and class semantic information, we construct the reliable positive pair set and negative pair set: a pair is positive when the two texts are nearest neighbors and share a component, and negative when they are neither neighbors nor in the same component.
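The pair selection rule can be illustrated with a small union-find implementation of connected component labeling. Treating the nearest-neighbor graph as undirected when forming components and when testing the neighbor relation is an assumption of this sketch, as are the function names.

```python
def connected_components(graph):
    """Label each node of a 0/1 adjacency matrix with a component ID (union-find)."""
    n = len(graph)
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i in range(n):
        for j in range(n):
            if graph[i][j]:
                parent[find(i)] = find(j)
    return [find(i) for i in range(n)]


def build_pairs(graph):
    """Return (positives, negatives) as sets of ordered index pairs."""
    component = connected_components(graph)
    n = len(graph)
    positives, negatives = set(), set()
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            neighbors = bool(graph[i][j] or graph[j][i])
            same_component = component[i] == component[j]
            if neighbors and same_component:
                positives.add((i, j))   # local and global agreement
            elif not neighbors and not same_component:
                negatives.add((i, j))   # neither local nor global connection
    return positives, negatives
```

Pairs that share a component without being direct neighbors fall into neither set, so they contribute no signal to the contrastive objective.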

Then, the loss of the neighborhood-invariant semantic learning is defined over these pair sets using the exponential function exp(·) and the cosine similarity between representations.
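Since the loss formula itself is not reproduced in this text, the following sketch assumes an InfoNCE-style contrastive form built from exp(·) and cosine similarity, averaged over anchors that have at least one positive pair. The temperature parameter and the function name are hypothetical additions for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def neighborhood_loss(reps, positives, negatives, temperature=1.0):
    """Assumed contrastive form: -log(pos_sum / (pos_sum + neg_sum)) per anchor."""
    def score(i, j):
        return math.exp(cosine(reps[i], reps[j]) / temperature)

    losses = []
    for i in range(len(reps)):
        pos_sum = sum(score(i, j) for (a, j) in positives if a == i)
        neg_sum = sum(score(i, j) for (a, j) in negatives if a == i)
        if pos_sum > 0:  # skip anchors without any positive pair
            losses.append(-math.log(pos_sum / (pos_sum + neg_sum)))
    return sum(losses) / len(losses) if losses else 0.0
```

The loss falls when positive pairs are more similar than negative pairs, which is the behavior the text describes as intra-class compactness and inter-class separability.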

2.4. The Overall Loss Function

The model is trained end-to-end by optimizing a composite objective that linearly combines the hard-aware cross-entropy loss with the neighborhood-invariant semantic learning loss and the sample-invariant representation learning loss, where the latter two are each scaled by a trade-off parameter.

The hard-aware cross-entropy loss is computed from the predicted probability for the true class of the i-th text sample. The core idea is to dynamically scale the cross-entropy loss with a weighting factor that diminishes the contribution of easy samples and, in turn, magnifies the focus on hard-to-classify samples. The predicted probability is obtained by feeding the fusion representation into a linear classifier. The detailed training process is shown in Algorithm 1.
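The exact weighting factor is not preserved in this text. The sketch below assumes a focal-style factor (1 - p)^gamma, which matches the described behavior of down-weighting easy samples, and also shows the linear combination of the three losses. The exponent `gamma`, the weight names, and both function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hard_aware_cross_entropy(logits_batch, labels, gamma=2.0):
    """Mean of -(1 - p_true)^gamma * log(p_true); gamma = 0 gives plain cross-entropy."""
    total = 0.0
    for logits, label in zip(logits_batch, labels):
        p_true = softmax(logits)[label]
        total += -((1.0 - p_true) ** gamma) * math.log(p_true)
    return total / len(labels)


def total_loss(ce_loss, neighborhood, sample_invariant, w_neighborhood, w_sample):
    """Linear combination of the three objectives with two trade-off weights."""
    return ce_loss + w_neighborhood * neighborhood + w_sample * sample_invariant
```

With this factor, a sample already predicted with probability close to 1 contributes almost nothing, while a misclassified sample keeps nearly its full cross-entropy weight.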

Algorithm 1. Multi-granularity Invariant Structure Learning (MISL)

1. /* Training Phase */
2. Input: the training dataset; the category number C; the loss weights.
3. Initialize: initialize the parameters of MISL.
4. while not converged do
5. Sample a batch of text documents from the training dataset.
6. for each document in the batch do
7. Construct the statistical graph, the linguistic graph, and the knowledge graph.
8. Obtain the view-specific representations.
9. Fuse the representations to get the final fusion representation.
10. Generate an augmented view.
11. Obtain the augmentation representation using the same process as lines 7–9.
12. end for
13. Calculate the hard-aware cross-entropy loss.
14. Calculate the sample-invariant representation learning loss.
15. Calculate the neighborhood-invariant semantic learning loss.
16. Compute the total loss.
17. Update the model parameters using gradient descent.
18. end while
19. Output: the model parameters.
20. /* Inference Phase */
21. Input: a test document.
22. Apply the trained model with the learned parameters to the test document to obtain the corresponding label predictions.
23. Output: the label predictions of the test document.

3. Experimental Evaluation

3.1. Setup

Datasets. Three text datasets are used to evaluate MISL: Ohsumed, TagMyNews, and Snippets. Following prior work, a small pool of 40 labeled documents is randomly selected for each class and evenly partitioned, yielding 20 documents per class for the training set and another 20 for the validation set. The majority of the remaining data is designated as the test set. Specifically, the Ohsumed dataset includes 7400 documents, with 460 for training (6.22%), 11,764 words, 4507 entities, 38 tags, and 23 classes. The TagMyNews dataset is the largest, with 32,549 documents, 140 for training (0.43%), 38,629 words, 14,734 entities, 42 tags, and 7 classes. The Snippets dataset contains 12,340 documents, with 160 for training (1.30%), 29,040 words, 9737 entities, 34 tags, and 8 classes.

Evaluation metrics. Following [31,32,33], Accuracy (ACC), Recall (Rec), Precision (Pre), and F1 are used to evaluate the performance of MISL. For all four metrics, larger values indicate better performance. The reported results are averaged over five runs.

Comparison methods. Eleven text classification methods are selected as baselines, including QSIM [7], EMGAN [10], GTC [13], PTE [17], LSTMNN [19], NFS [20], Hy-TC [22], IEG-GAT [24], IGCL [30], BERT-avg [34] and BERT-cls [34].

Implementation details. In the experiments, the Adam optimizer is used to optimize the overall network with a learning rate of 0.0003 and a maximum of 500 epochs. The two trade-off parameters are determined by grid search on the three datasets. An early stopping mechanism is employed to prevent overfitting: training is terminated if the loss on the validation set does not decrease for 10 consecutive epochs, and the model weights from the best-performing epoch are restored for the final evaluation. For Ohsumed, TagMyNews, and Snippets, the first trade-off parameter is set to 1, 1, and 0.1, respectively, and the second is set to 1, 1, and 1, respectively.

3.2. Comparison Evaluation

The results of the comparison evaluation, as detailed in Table 1, Table 2 and Table 3, demonstrate the superior performance of the proposed MISL model across all three datasets: Ohsumed, TagMyNews, and Snippets. In all evaluated metrics, our model consistently outperforms the eleven baseline methods, often by a significant margin. These consistent and substantial gains across diverse datasets underscore the robustness and effectiveness of the proposed approach.

The remarkable performance of our proposed model can be attributed to several key methodological innovations designed to address the specific challenges of text classification in entrepreneurship policy. Firstly, the novel multi-view feature engineering module effectively mitigates semantic sparsity by integrating statistical, linguistic, and knowledge-based representations. This holistic approach captures a more comprehensive contextual understanding of the policy texts. Secondly, the sample-invariant representation learning strategy, which leverages data augmentation and mutual information maximization, enhances the model’s robustness and generalization capabilities, particularly in scenarios with limited labeled data. By forcing the model to learn the essential semantic core of the text, it becomes less susceptible to superficial variations. Lastly, the neighborhood-invariant semantic learning component improves the discriminative power of the final representations by promoting intra-class compactness and inter-class separability, leading to more accurate classification.

Meanwhile, to validate the statistical significance of our results, we performed paired t-tests between our proposed MISL model and each baseline method for every evaluation metric across all datasets. The tests were conducted using the results from five independent runs with different random seeds. Significance levels are indicated in the tables: ** denotes p < 0.01 when comparing baseline methods against our proposed model, confirming that all observed improvements are statistically meaningful.
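For readers who wish to reproduce this kind of check, the paired t statistic over five runs can be computed as sketched below. The function name is an assumption of this example. The constant is the two-sided critical value of Student's t distribution for p = 0.01 with four degrees of freedom.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """t statistic of a paired t-test on two equally long lists of run scores."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two score lists of equal length >= 2")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    spread = statistics.stdev(diffs)  # sample standard deviation of the differences
    if spread == 0:
        raise ValueError("all paired differences are identical")
    return statistics.mean(diffs) / (spread / math.sqrt(len(diffs)))


# Two-sided critical value for p = 0.01 with 5 - 1 = 4 degrees of freedom.
T_CRITICAL_DF4_P01 = 4.604
```

An improvement is significant at p < 0.01 when the absolute value of the statistic exceeds the critical value. With only five runs the test has few degrees of freedom, so the per-run differences must be large relative to their spread.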

3.3. Ablation Analysis

An ablation study is conducted to validate the contribution of each representation within our multi-view feature engineering module: statistical, linguistic, and knowledge-based. The results in Table 4 consistently demonstrate the overall effectiveness of the multi-view approach, as the complete model incorporating all three representations achieved the best performance across all datasets, highlighting a synergistic effect where each view provides complementary information. Notably, the removal of the knowledge-based representation resulted in the most substantial decline in performance, underscoring its critical role in resolving semantic sparsity by enriching the text with background and contextual information from external knowledge bases. Eliminating the statistical representation also led to a significant performance degradation, confirming that information derived from word co-occurrence patterns serves as the fundamental building block for capturing the core topics of the text. Finally, while the removal of the linguistic representation had a comparatively lesser impact, it still caused a noticeable decrease in performance, suggesting its value as an auxiliary source of fine-grained semantic clues. In summary, the analysis reveals a clear hierarchy of importance for the representations and confirms that their effective integration is key to the model’s superior performance.

Meanwhile, to assess the contribution of each component within our composite objective, we performed an ablation study on the loss functions, with results presented in Table 5. The results establish the hard-aware cross-entropy loss as the foundational component; its removal led to a collapse in performance, as it provides the primary supervised signal essential for the classification task. Furthermore, both the neighborhood-invariant and sample-invariant learning losses proved to be important. Removing the neighborhood-invariant loss significantly weakened the discriminative power of the feature space, while removing the sample-invariant loss diminished the model’s robustness and its ability to generalize by learning the core semantics of the text. The superior performance of the complete model over any of its ablated variants demonstrates a strong synergy, confirming that the integration of a core classification objective with these two distinct representation learning objectives is essential for achieving a highly accurate and robust final model.

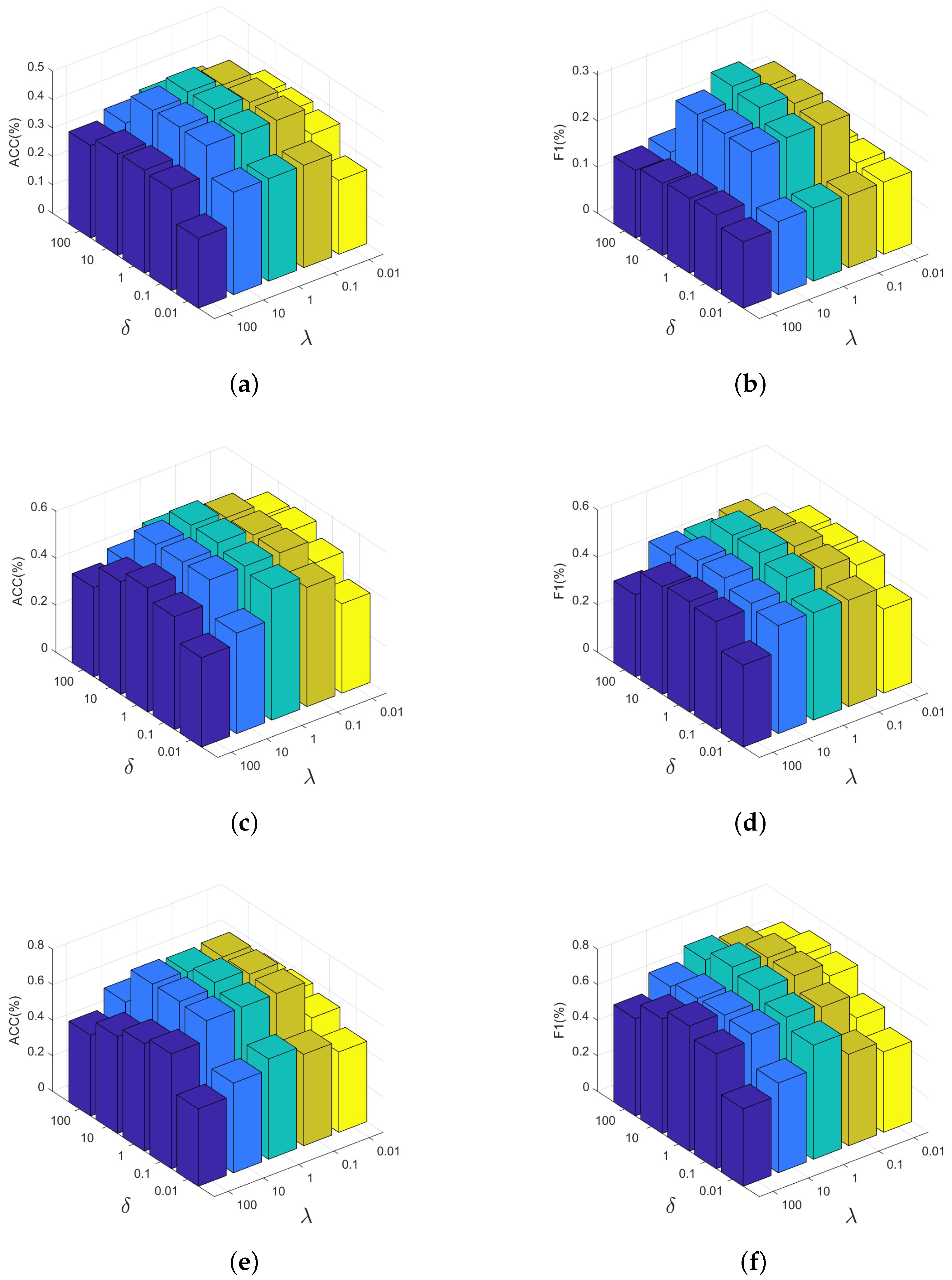

3.4. Parameter Analysis

The two trade-off parameters act as weighting factors, controlling the influence of the neighborhood-invariant and sample-invariant losses, respectively. To determine the optimal values for these hyperparameters, a grid search is conducted over a set of candidate values for both parameters. This process allows for a thorough evaluation of how different balances between the loss components affect the model’s ability to classify text accurately. The results in Figure 2 show a consistent pattern across all three datasets (Ohsumed, TagMyNews, and Snippets): the model’s performance, measured by ACC and F1, consistently peaks at intermediate values of both weights. This indicates that the MISL model is most effective when the contributions of the neighborhood-invariant and sample-invariant learning components are carefully balanced with the primary cross-entropy loss. When the weights are too high (e.g., 10 or 100), these specialized loss components may dominate the learning process, potentially overshadowing the fundamental classification task. Conversely, when the weights are too low (e.g., 0.01), their regularizing and structure-enforcing benefits are diminished, leading to suboptimal performance.

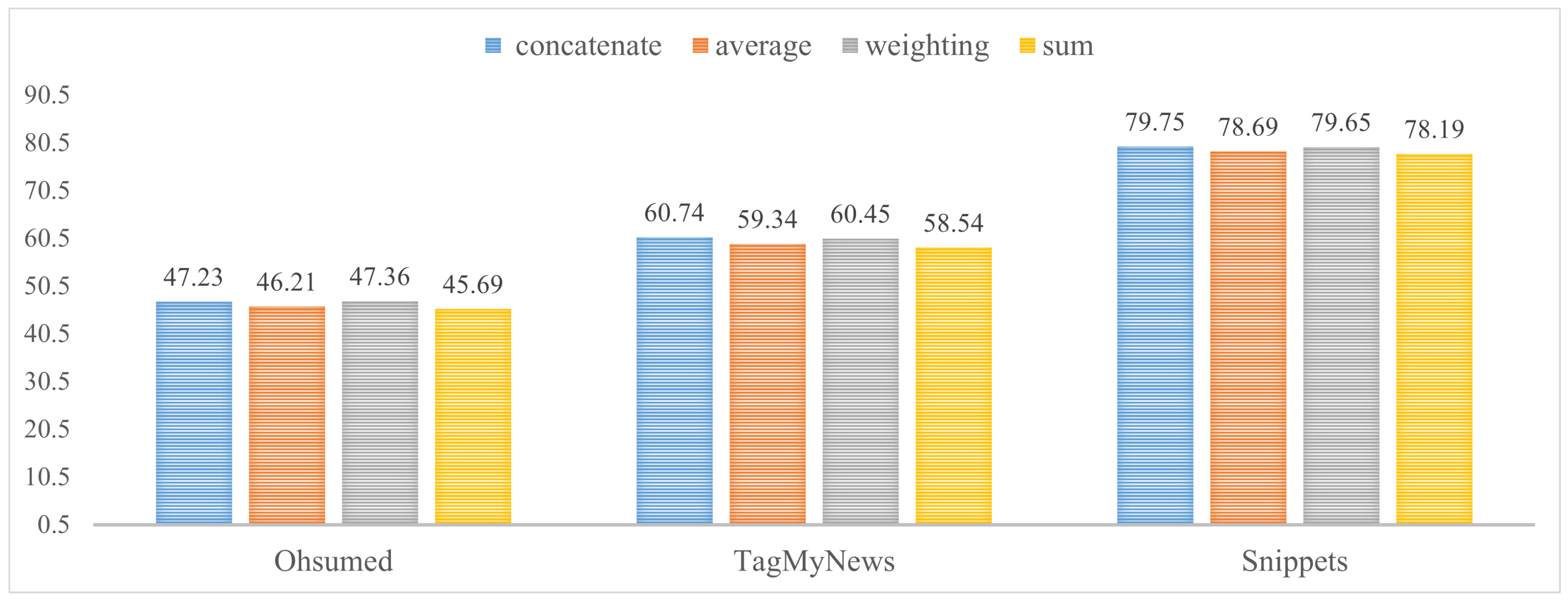

3.5. Fusion Analysis

To determine the most effective method for integrating the multi-view representations, we evaluate four distinct fusion operations: concatenation, average, weighting, and sum. The ACC of each method is tested on the Ohsumed, TagMyNews, and Snippets datasets. The results in Figure 3 consistently show that the concatenation and weighting operations are highly competitive, both significantly outperforming the average and sum methods across all three datasets. While the weighting mechanism yields strong results, it introduces additional learnable parameters and computational overhead. Given that the concatenation operation achieves comparable or superior performance without this extra burden, it is selected as the fusion strategy for our model due to its balance of effectiveness and efficiency.

3.6. Model Analysis

Based on the comprehensive model analysis in Table 6, the proposed MISL framework demonstrates significant advantages in computational efficiency and practical deployment potential. The model achieves exceptional parameter efficiency, requiring substantially fewer parameters while maintaining competitive performance. The results show that our method successfully navigates the trade-off between model complexity and operational efficiency, offering a practical and effective approach for text classification tasks.

4. Conclusions

This paper addresses two core challenges in the text classification of entrepreneurship policy: the issue of semantic sparsity arising from the intrinsic complexity of policy texts, and the problems of model overfitting and poor generalization caused by the scarcity of labeled data in this specialized domain. To overcome these challenges, we propose an innovative multi-granularity invariant structure learning model. To combat semantic sparsity, the model first introduces a multi-view feature engineering module. This module constructs and fuses three distinct graph structures—statistical, linguistic, and knowledge-based—to capture textual information from multiple dimensions, thereby generating a comprehensive and rich semantic representation. To resolve the issue of model robustness in the context of data scarcity, we introduce a dual invariant structure learning framework. This framework operates on two levels: first, a sample-invariant representation learning that uses data augmentation and mutual information maximization to help the model learn the essential and stable semantic core of a text, making it insensitive to superficial perturbations. Second, a neighborhood-invariant semantic learning that applies a contrastive objective on a nearest-neighbor graph to enhance intra-class compactness and inter-class separability in the feature space. In summary, through its meticulously designed multi-view feature fusion and dual invariant learning mechanisms, the MISL model systematically solves the key difficulties in entrepreneurship policy text classification. It provides a powerful solution for achieving efficient, robust, and accurate classification of complex texts, even with limited data. While MISL demonstrates strong performance, we acknowledge several limitations that present opportunities for future work. First, the model’s knowledge representation module relies on external knowledge bases. 
Consequently, its effectiveness can be constrained by the coverage and quality of these bases, potentially leading to information loss for entities or concepts not well-represented in them. Second, the construction of multiple graph views and the subsequent graph convolutional operations introduce higher computational complexity compared to simpler text classification models, which may limit its application in scenarios requiring strict real-time processing.