Abstract

Metaheuristic algorithms are widely utilized as effective tools for solving complex optimization problems. Among them, the Harmony Search (HS) algorithm has garnered significant attention for its simple structure and excellent performance. The efficacy of the HS algorithm is heavily dependent on the configuration of its internal parameters, with the Harmony Memory Considering Rate (HMCR) and Pitch Adjusting Rate (PAR) playing pivotal roles. These parameters determine the probabilities of using the Random Generation (RG), Harmony Memory Consideration (HMC), and Pitch Adjustment (PA) operators, thereby controlling the balance between exploration and exploitation. However, a systematic empirical analysis of the interaction between these parameters and the characteristics of the problem at hand remains insufficient. Thus, this study conducts a comprehensive empirical analysis of the performance sensitivity of the HS algorithm to variations in HMCR and PAR values. The analysis is performed on a suite of 23 benchmark functions, encompassing diverse characteristics such as unimodality/multimodality and separability/non-separability, along with 5 real-world optimization problems. Through extensive experimentation, the performance for each parameter combination was evaluated on a rank-based system and visualized using heatmaps. The results experimentally demonstrate that the algorithm’s performance is most sensitive to the HMCR value across all function types, establishing that setting a high HMCR value (≥0.9) is a prerequisite for securing stable performance. Conversely, the optimal PAR value showed a direct correlation with the topographical features of the problem landscape. For unimodal problems, a low PAR value between 0.1 and 0.3 was more effective, whereas for complex multimodal problems with numerous local optima, a relatively higher PAR value between 0.3 and 0.5 proved more efficient in searching for the global optimum. 
This research provides guidelines for the parameter settings of the HS algorithm and contributes to enhancing its practical applicability by proposing a systematic parameter tuning strategy based on problem characteristics.

Keywords: harmony search algorithm; metaheuristics; parameter tuning; empirical sensitivity analysis; optimization

MSC: 68T20

1. Introduction

The challenges encountered in numerous contemporary fields such as engineering, science, and management often manifest as complex and precise optimization problems that cannot be linearized. These problems may feature vast solution spaces, non-linearity, and numerous constraints, making it exceedingly difficult or impossible to find the optimal solution using traditional mathematical methodologies [,,]. Consequently, metaheuristic algorithms, which draw inspiration from natural phenomena or mimic the behavioral patterns and strategies of animals or humans, have emerged as a potent alternative []. Various metaheuristic techniques have been proposed, including the Genetic Algorithm, Particle Swarm Optimization, and Simulated Annealing. Among them, the Harmony Search (HS) algorithm, introduced by Z. W. Geem et al. in 2001, has been extensively studied due to its concise concept, ease of implementation, and robust global search capabilities [,]. (Based on major research databases accessed on 25 September 2025, Web of Science lists 4093 papers for the query Topic = “harmony search”, and Scopus lists 5020 papers for the query TITLE-ABS-KEY (“harmony search”).)

Recent studies on the Harmony Search algorithm demonstrate its wide-ranging applications. These include optimizing distillation processes [], proposing a hybrid algorithm combining HS with War Strategy Optimization and verifying its performance on various benchmark functions [], solving the Electric Vehicle (EV) routing problem with battery constraints using an improved HS algorithm [], proposing a hybrid algorithm combining HS with CDDO and validating it on 33 benchmark functions [], developing HS operators for the NP-complete combinatorial optimization problem of Nonogram puzzles [], proposing a hybrid technique combining Q-learning reinforcement learning with the HS metaheuristic to solve the unmanned surface vehicle scheduling problem [], proposing a hybrid algorithm combining the Global-best Harmony Search algorithm with Baldwinian learning to solve the multi-dimensional 0–1 Knapsack problem and verifying its performance on various benchmark functions [], proposing a hybrid HS algorithm that uses Particle Swarm Optimization to reinitialize the harmony memory and validating it on 15 continuous functions [], combining the HS algorithm with dynamic Gaussian fine-tuning and verifying it with CEC2017 benchmark functions [], enhancing performance by integrating an Equilibrium Optimizer-based leadership strategy with the HS algorithm and validating it on CEC and real-world problems [], developing a hybrid HS for optimizing distributed permutation flowshop scheduling [], and developing a hybrid BAS–HS–GA metaheuristic for optimal tuning of a fuzzy PID controller, achieving robust and delay-resilient heading control of USVs under marine disturbances [].

While numerous studies have successfully applied the Harmony Search algorithm, the majority of them have adopted specific values for key parameters such as the Harmony Memory Considering Rate (HMCR) and Pitch Adjusting Rate (PAR) by following the convention of preceding research. This approach does not establish why those values are appropriate for the given problem, and it may therefore fail to unlock the full potential of the algorithm.

For instance, while many HS studies have employed high HMCR values in the range of 0.8–0.99, some works, such as [,], have adopted relatively low HMCR values. This lack of a clear guideline regarding appropriate HMCR settings may hinder accurate performance comparisons among different algorithms. As in [,], there have been studies that attempted to determine appropriate parameter values by directly examining their influence through experiments. However, these works focused on identifying the most optimized parameters for the specific problems under consideration, rather than providing a general guideline. In contrast, the present study aims to analyze the influence of parameters across a wide variety of function types that were not addressed in the aforementioned studies.

The HS algorithm was inspired by the process of jazz improvisation, where musicians adjust their instrument’s pitch to find a better harmony. The algorithm’s search process consists of three main operations: (1) finding a completely new random solution (Random Generation; RG), (2) replicating a good solution stored in memory (Harmony Memory Consideration; HMC), and (3) slightly modifying the replicated solution (Pitch Adjustment; PA). The core parameters, HMCR and PAR, regulate the proportion and intensity of these three operations. The algorithm’s performance is highly dependent on the balance between exploration and exploitation determined by these two parameters. An overemphasis on exploitation (high HMCR) increases the risk of premature convergence to a local optimum, while an overemphasis on exploration (low HMCR) leads to excessively slow convergence and reduced efficiency. A high PAR signifies a more extensive search in the vicinity of a good solution, whereas a low PAR implies a more conservative local search with minor modifications. Despite the critical role of these parameters, many application-oriented studies have either fixed them to certain preferred values based on the researcher’s past experience or adjusted them only within a limited range. A systematic and comprehensive empirical analysis of the relationship between the fundamental characteristics of a problem (e.g., complexity of the solution space) and the optimal parameter values is still lacking.

Therefore, this study aims to: First, quantitatively measure the performance of the HS algorithm for all combinations of HMCR and PAR values across standard benchmark functions with diverse topographical characteristics. Second, based on the experimental results, analyze the performance sensitivity to parameter changes and identify the correlation between problem characteristics (unimodal/multimodal, separable/non-separable) and optimal parameter combinations. Third, synthesize the empirical analysis results to propose a practical and systematic parameter setting guideline for applying the HS algorithm to real-world problems.

2. Harmony Search Algorithm

To better understand the role of each parameter in the HS algorithm, this section describes the definitions of key terms, the operating mechanism of HS, and also provides its pseudocode representation. In the HS algorithm, a candidate solution is referred to as a harmony, and a set of good harmonies discovered during the search process is stored in the Harmony Memory (HM). The number of harmonies stored in the HM is determined by the parameter Harmony Memory Size (HMS).

The basic procedure of the algorithm begins with initializing the HM with randomly generated solutions. Then, at each iteration, a new harmony is generated by probabilistically applying three algorithmic operators described later in this section. The newly generated harmony is evaluated using the objective function and compared with the existing harmonies stored in the HM. If the new harmony exhibits a better evaluation than one of the harmonies in the HM, the inferior harmony is replaced with the new one. This process is referred to as the HM update.

The iteration continues until the user-defined maximum number of iterations is reached. As the search progresses, the HM becomes increasingly populated with higher-quality harmonies. Consequently, in subsequent iterations, new solutions are generated by referring to these improved harmonies, which enhances the overall performance of the algorithm.

The HS algorithm generates new solutions by utilizing three algorithmic operators. The first is the Random Generation (RG) operator, which, as its name implies, assigns variable values randomly within their predefined domains (variable range). Equation (1) expresses the RG operator in equation form.

Here, the random coefficient in Equation (1) denotes a random number between 0 and 1.

The second is the HMC operator. This operator selects one harmony at random from those stored in the HM and directly copies its variable values into the new solution. The probability of applying this operator is controlled by the parameter HMCR. For example, if HMCR is set to 0.7, the algorithm employs the HMC operator with a 70% probability when generating a new solution, while the remaining 30% of the time it uses the RG operator. Equation (2) expresses the HMC operator in equation form.

The final operator is the PA operator. This operator can be applied only when the HMC operator has been executed. It slightly perturbs the variable values copied from the HM in order to enhance local exploration. The probability of applying this operator is determined by the parameter PAR. For instance, if PAR is set to 0.1, the PA operator is applied with a 10% probability after the execution of the HMC operator, while in the remaining 90% of cases, only the HMC operator is applied without PA. Equation (3) expresses the PA operator in equation form.

Here, the random coefficient in Equation (3) denotes a random number between 0 and 1.
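For reference, the three operators are commonly written as shown below. This is a sketch in assumed notation rather than a reproduction of Equations (1)–(3): the symbols $x_i^{\text{new}}$, $L_i$, $U_i$, $r$, $k$, and $bw$ (the maximum PA step) are introduced here for illustration only and may differ from those used in the original equations.

```latex
\begin{align*}
\text{RG:}\quad  & x_i^{\text{new}} = L_i + r\,(U_i - L_i), \qquad r \sim U(0,1) \\
\text{HMC:}\quad & x_i^{\text{new}} = x_i^{k}, \qquad k \in \{1, \dots, \mathrm{HMS}\} \text{ chosen at random} \\
\text{PA:}\quad  & x_i^{\text{new}} \leftarrow x_i^{\text{new}} \pm r \cdot bw, \qquad r \sim U(0,1)
\end{align*}

% Probability that each operator determines a given variable:
\begin{align*}
P(\text{RG}) &= 1 - \mathrm{HMCR} \\
P(\text{HMC without PA}) &= \mathrm{HMCR}\,(1 - \mathrm{PAR}) \\
P(\text{HMC followed by PA}) &= \mathrm{HMCR} \cdot \mathrm{PAR}
\end{align*}
```

As a worked example, HMCR = 0.9 and PAR = 0.3 give probabilities of 0.10, 0.63, and 0.27, respectively, which sum to 1.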

The working procedure of the HS algorithm can be found in the pseudocode of Algorithm 1.

Algorithm 1. Harmony Search Algorithm

    begin HS
        for each harmony in HM (size = HMS)
            Initialize the harmony in the HM
        end for
        for each iteration until the maximum number of iterations is reached
            for each variable
                if HMC operator is selected according to probability HMCR then
                    Copy the value of a randomly selected harmony from HM
                    if PA operator is selected according to probability PAR then
                        Perturb the copied value by adding or subtracting a small amount
                    end if
                else
                    Generate a random value within the variable range
                end if
            end for
            if the evaluation of the new harmony is better than the worst harmony in HM then
                Replace the worst harmony in HM with the new harmony
            end if
        end for
    end HS
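The pseudocode of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' Excel VBA implementation: the function name `harmony_search`, the parameter `max_step_fraction` (the maximum PA step expressed as a fraction of each variable's range), its default value, and the clamping of adjusted values to the variable range are all assumptions made for this example.

```python
import random


def harmony_search(objective, bounds, hmcr=0.9, par=0.3, hms=30,
                   n_improvisations=30000, max_step_fraction=0.01, seed=None):
    """Minimize `objective` over the box `bounds`, following Algorithm 1.

    `max_step_fraction` is an assumed setting, not a value from the paper.
    """
    rng = random.Random(seed)
    # Initialize the harmony memory (HM) with random solutions.
    memory = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(hms)]
    scores = [objective(h) for h in memory]

    for _ in range(n_improvisations):
        new = []
        for i, (lo, hi) in enumerate(bounds):
            if rng.random() < hmcr:
                # HMC: copy the i-th value of a randomly selected harmony.
                value = memory[rng.randrange(hms)][i]
                if rng.random() < par:
                    # PA: add or subtract up to the maximum step.
                    step = max_step_fraction * (hi - lo)
                    value += rng.uniform(-1.0, 1.0) * step
                    value = min(max(value, lo), hi)  # assumed: clamp to range
            else:
                # RG: draw a new random value within the variable range.
                value = rng.uniform(lo, hi)
            new.append(value)

        # HM update: replace the worst harmony if the new one is better.
        new_score = objective(new)
        worst = max(range(hms), key=scores.__getitem__)
        if new_score < scores[worst]:
            memory[worst], scores[worst] = new, new_score

    best = min(range(hms), key=scores.__getitem__)
    return memory[best], scores[best]


def sphere(x):
    """Simple unimodal test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)
```

For example, `harmony_search(sphere, [(-100.0, 100.0)] * 5, n_improvisations=5000, seed=1)` returns the best harmony found and its objective value. Passing a fixed `seed` makes a run repeatable, which is convenient when comparing parameter combinations.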

3. Methodology

3.1. Benchmark Functions

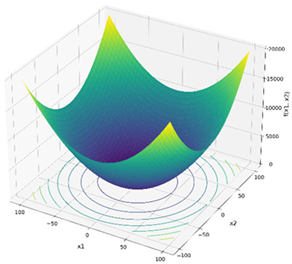

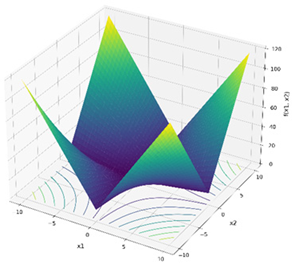

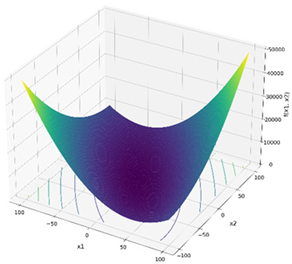

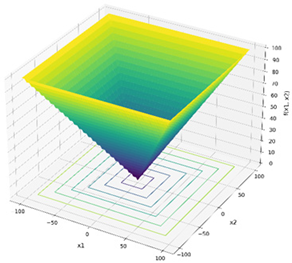

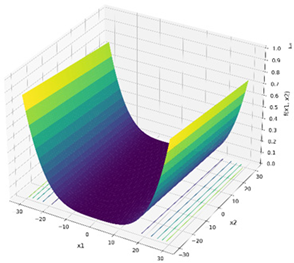

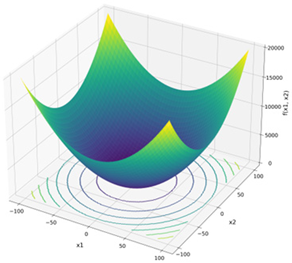

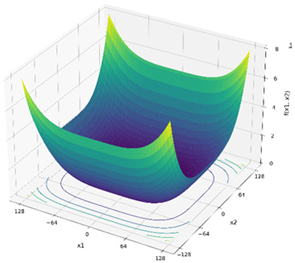

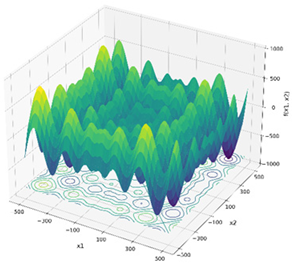

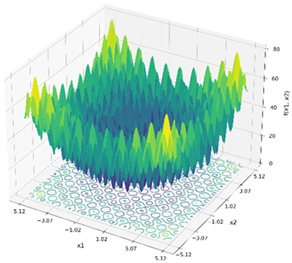

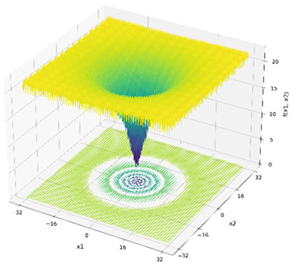

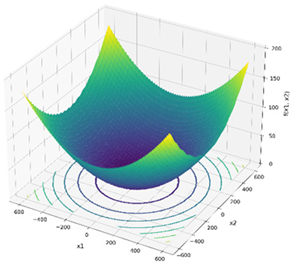

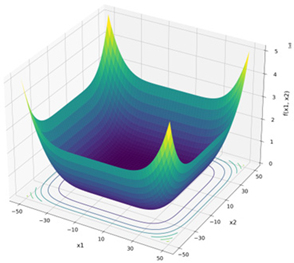

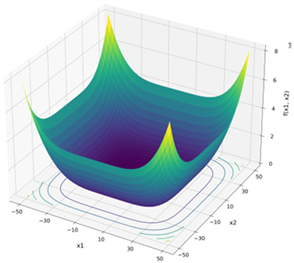

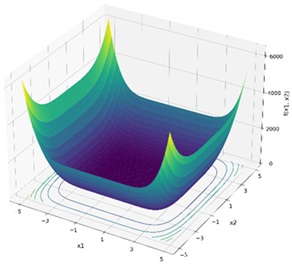

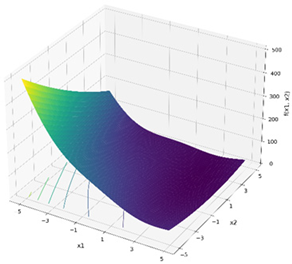

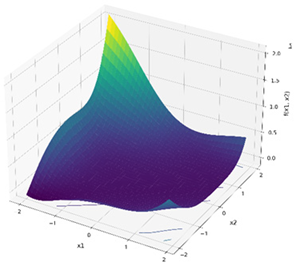

To conduct a multifaceted evaluation of the HS algorithm’s parameter sensitivity, this study utilized 23 benchmark functions widely used for assessing optimization algorithm performance, including functions from the CEC benchmark suite, Goldstein-Price, Schwefel, Shekel, and Rastrigin functions [,,]. These functions, detailed in Table 1 below, are broadly classified into two categories based on the characteristics of their solution space:

- Unimodal: Functions with a single global optimum, suitable for evaluating the algorithm’s convergence speed and accuracy, i.e., its ‘exploitation’ capability (F1–F7).

- Multimodal: Functions containing multiple local optima, crucial for assessing the algorithm’s ability to escape local optima and find the global optimum, i.e., its ‘exploration’ capability (F8–F23).

Table 1. Benchmark function list.

In Table 1, the Description column includes the functional expression and, as an example, the corresponding graph of the function. The Dimension column specifies the number of variables actually used in the experiments, the Range column indicates the domain of the variables applied in the experiments, and the final column presents the exact global optimum value.

Additionally, five real-world engineering design optimization problems, which feature constraints and complex interdependencies between variables, were used for testing [,]. These problems were classified separately, as the presence of multiple constraints makes them considerably more challenging optimization tasks compared to the general unimodal or multimodal problems listed above.

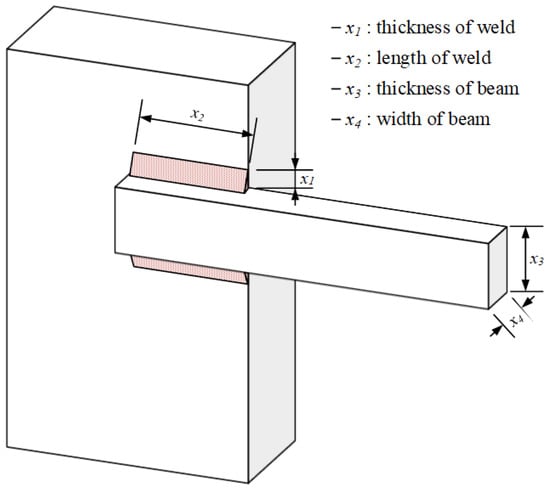

- F24—Welded beam design optimization problem

Figure 1 illustrates the overall structure of the welded beam design optimization problem and shows the meaning of each variable.

Figure 1. Welded beam design optimization problem.

Minimize:

Subject to:

With:

where

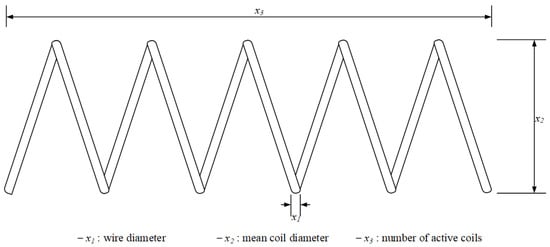

- F25—Tension/compression spring design optimization problem

Figure 2 illustrates the overall structure of the tension/compression spring design optimization problem and shows the meaning of each variable.

Figure 2. Tension/compression spring design optimization problem.

Minimize:

Subject to:

where .

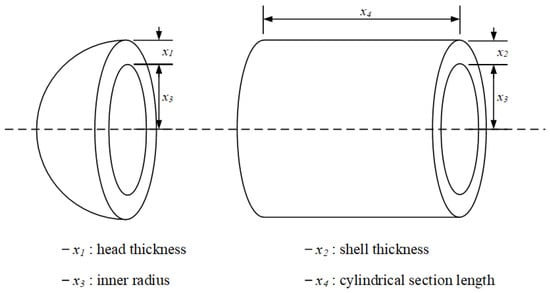

- F26—Pressure vessel design optimization problem

Figure 3 illustrates the overall structure of the pressure vessel design optimization problem and shows the meaning of each variable.

Figure 3. Pressure vessel design optimization problem.

Minimize:

Subject to:

where

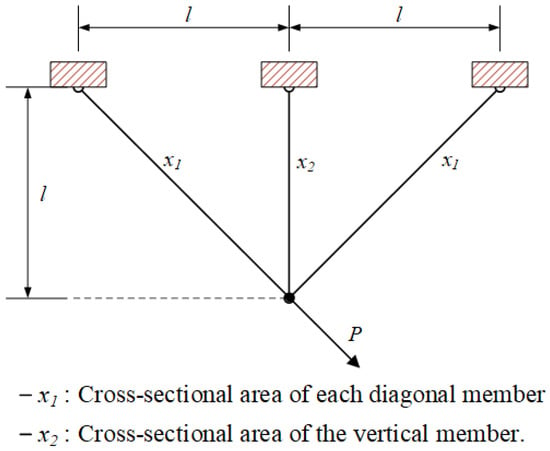

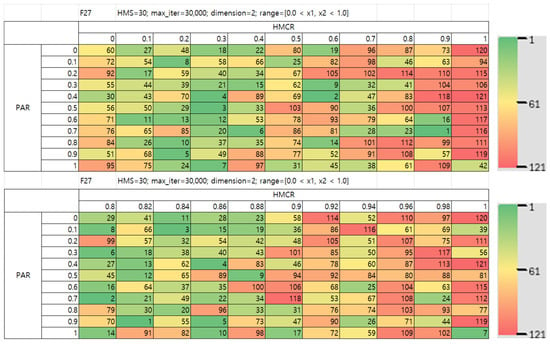

- F27—3bar truss design optimization problem

Figure 4 illustrates the overall structure of the 3bar truss design optimization problem and shows the meaning of each variable.

Figure 4. The 3bar truss design optimization problem.

Minimize:

Subject to:

With:

where

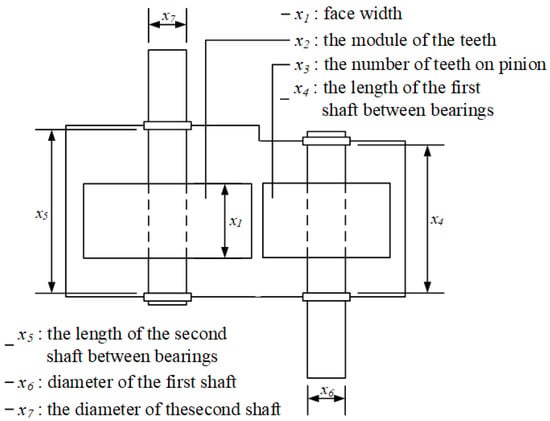

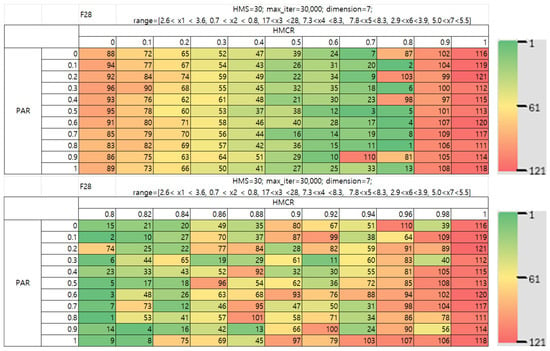

- F28—Speed reducer design optimization problem

Figure 5 illustrates the overall structure of the speed reducer design optimization problem and shows the meaning of each variable.

Figure 5. Speed reducer design optimization problem.

Minimize:

Subject to:

where .

3.2. Experimental Design and Performance Evaluation

In this study, to primarily investigate the influence of the HMCR and PAR parameters, which govern the core operators of the algorithm, the remaining parameters such as HMS and NI were fixed to values commonly adopted in previous studies.

All experiments were performed in Microsoft Excel using VBA, and comprehensive details of the experimental environment, including hardware specifications, are available in the Supplementary Materials.

For F24–F28, which involve constraints, the constraints were handled by adding a penalty term to the objective function, as expressed in Equation (46).

The terms of Equation (46) are the original objective function, the penalty coefficient, the constraints, and a constant term. In this study, for F24, the penalty coefficient was set to 100 and the constant term was set to 1000. These values were chosen so that the magnitude of the penalty term would be considerably larger than that of the original objective function, thereby making it more likely that solutions satisfying all constraints would remain in the HM.
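The penalty approach can be illustrated with a short Python sketch. The exact form of Equation (46) is not reproduced here, so the code assumes one common static-penalty form: each violated constraint, written as g(x) ≤ 0, adds the penalty coefficient times the amount of violation plus the constant term. The function name `penalized` and the toy problem are hypothetical; only the values 100 and 1000 come from the text above.

```python
def penalized(objective, constraints, coefficient=100.0, constant=1000.0):
    """Wrap `objective` with a static penalty for constraints g(x) <= 0.

    Assumed form: each violated constraint adds
    coefficient * violation + constant to the objective value.
    """
    def wrapped(x):
        total = objective(x)
        for g in constraints:
            violation = g(x)
            if violation > 0.0:
                total += coefficient * violation + constant
        return total
    return wrapped


# Hypothetical toy problem: minimize x0 + x1 subject to x0 * x1 >= 1.
def toy_objective(x):
    return x[0] + x[1]


def toy_constraint(x):
    return 1.0 - x[0] * x[1]  # rewritten in the form g(x) <= 0


toy_penalized = penalized(toy_objective, [toy_constraint])
```

With this wrapper, a feasible point such as `[1.0, 1.0]` keeps its original objective value, while an infeasible point such as `[0.5, 1.0]` is pushed far above any feasible value, so feasible harmonies tend to survive the HM update.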

The experiment was designed as follows to precisely measure the algorithm’s parameter sensitivity.

- Variable Parameters:

HMCR: 0.0, 0.1, 0.2, …, 1.0 (11 levels)

PAR: 0.0, 0.1, 0.2, …, 1.0 (11 levels)

Experiments were conducted for a total of 11 × 11 = 121 parameter combinations.

- Fixed Parameters:

HMS: 30

Number of Improvisations (NI): 30,000

Maximum PA Step():

- Performance Evaluation:

For each benchmark function and each of the 121 parameter combinations, the HS algorithm was executed independently 30 times. To ensure statistical reliability, the average of the objective function values from the 30 runs was considered the final performance for that combination. Subsequently, the performance values of the 121 combinations were ranked. A rank closer to 1 indicates a better-performing parameter combination.

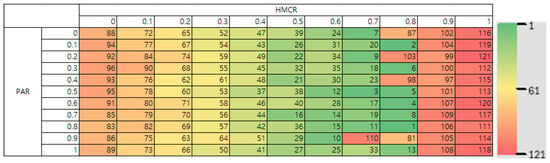

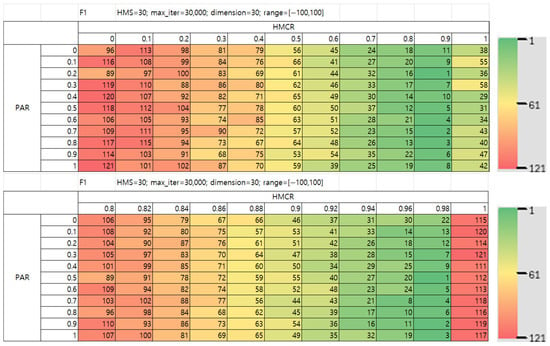

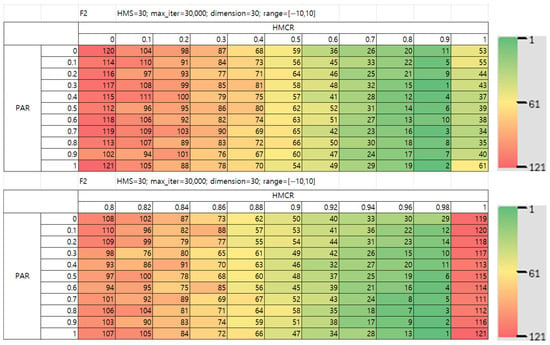

- Visualization:

To facilitate an intuitive assessment of parameter sensitivity, the performance ranks of the 121 parameter combinations for each function were visualized as an 11 × 11 heatmap. In these heatmaps, the axes correspond to the PAR and HMCR values, while the color of each cell represents the performance rank. A green color scale signifies a high rank (superior performance), whereas a red scale indicates a low rank (inferior performance). This visualization scheme allows for the direct visual identification of parameter sensitivity, as illustrated in the example in Figure 6.

Figure 6. Example of a heatmap of the results data.
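The evaluation and visualization steps described above can be sketched as follows. This is an illustrative Python outline, not the authors' VBA code: the function names `rank_combinations` and `to_grid` are hypothetical, and the tie-handling rule (tied means share the better rank) is an assumption, since the text does not state how ties were treated.

```python
from statistics import mean


def rank_combinations(results):
    """Map each (hmcr, par) key to its rank, where 1 is the lowest mean.

    `results` maps (hmcr, par) to a list of final objective values,
    one per independent run. Tied means share a rank (assumed convention).
    """
    means = {key: mean(values) for key, values in results.items()}
    ordered = sorted(means, key=means.get)
    ranks = {}
    for position, key in enumerate(ordered, start=1):
        previous = ordered[position - 2] if position > 1 else None
        if previous is not None and means[previous] == means[key]:
            ranks[key] = ranks[previous]
        else:
            ranks[key] = position
    return ranks


def to_grid(ranks, hmcr_levels, par_levels):
    """Arrange ranks with one row per PAR level and one column per HMCR level."""
    return [[ranks[(h, p)] for h in hmcr_levels] for p in par_levels]
```

The resulting grid has HMCR along the horizontal axis and PAR along the vertical axis, and it can be passed to any plotting tool that colors cells by value to produce a rank heatmap.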

A preliminary empirical analysis of the results for several benchmark functions revealed that the algorithm’s performance is highly sensitive to the HMCR parameter. Specifically, a strong trend was observed wherein optimal performance was generally achieved with an HMCR value of 0.9. Therefore, to conduct a more granular investigation of the performance characteristics in the vicinity of HMCR = 0.9, a subsequent experiment was conducted as follows.

- Variable Parameters:

HMCR: 0.82, 0.84, 0.86, …, 0.98 (9 levels)

PAR: 0.0, 0.1, 0.2, …, 1.0 (11 levels)

Other conditions were the same as before, and additional experiments were performed for a total of 9 × 11 = 99 parameter combinations.
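The two parameter grids can be enumerated with a few lines of Python, shown here as a sketch with hypothetical variable names. The levels and the 30 runs per combination are taken from the text; rounding the refined HMCR levels to two decimals is a choice made here to avoid floating-point drift.

```python
from itertools import product

# First experiment: 11 levels each for HMCR and PAR (0.0, 0.1, ..., 1.0).
coarse_levels = [i / 10 for i in range(11)]
coarse_grid = list(product(coarse_levels, coarse_levels))  # (hmcr, par) pairs

# Follow-up experiment: HMCR refined around 0.9 (0.82, 0.84, ..., 0.98).
fine_hmcr = [round(0.82 + 0.02 * i, 2) for i in range(9)]
fine_grid = list(product(fine_hmcr, coarse_levels))

# Independent runs needed per benchmark function, at 30 runs per combination.
RUNS_PER_COMBINATION = 30
coarse_runs = len(coarse_grid) * RUNS_PER_COMBINATION
fine_runs = len(fine_grid) * RUNS_PER_COMBINATION
```

Under these settings, each benchmark function requires 3630 runs for the first experiment and 2970 runs for the follow-up experiment.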

4. Results and Analysis

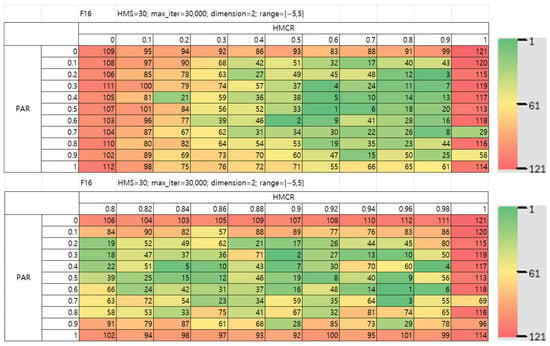

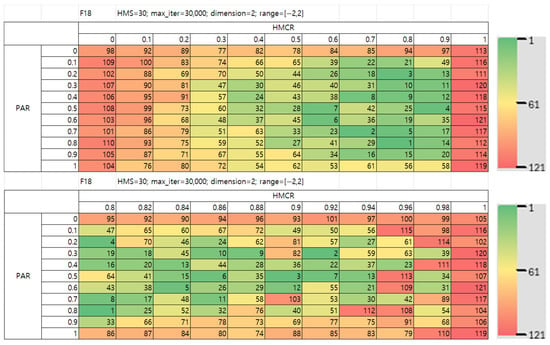

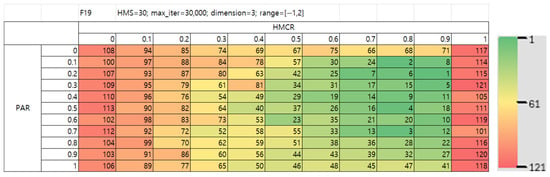

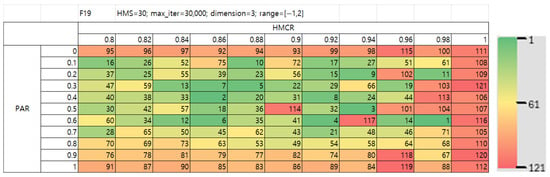

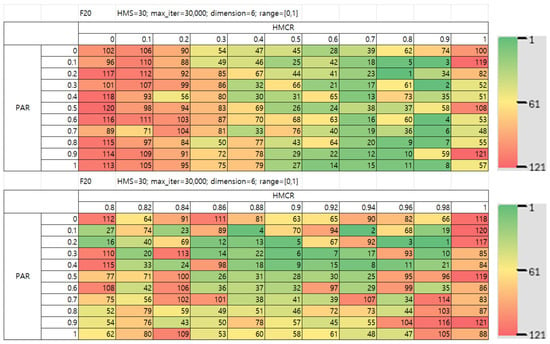

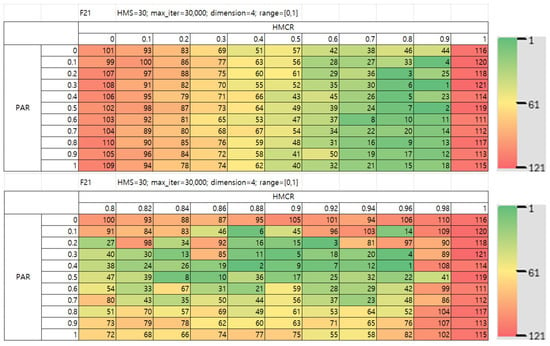

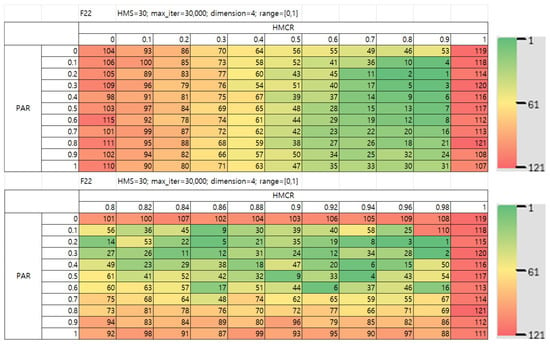

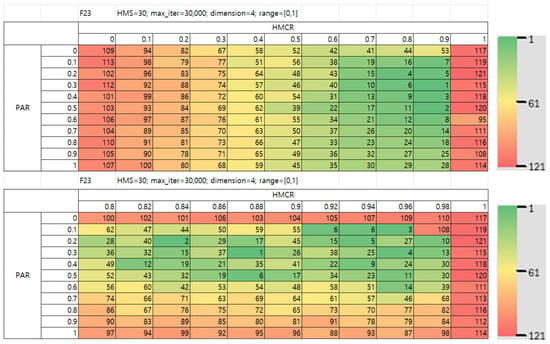

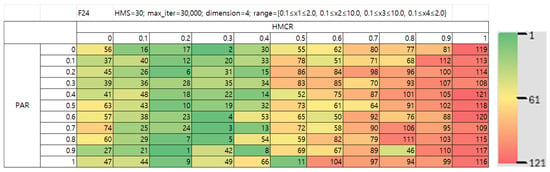

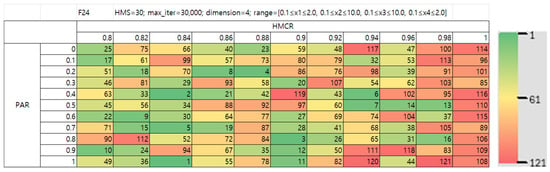

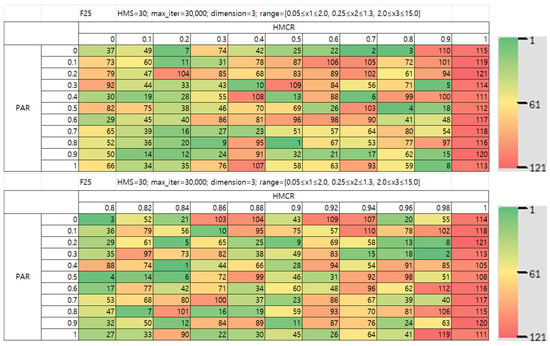

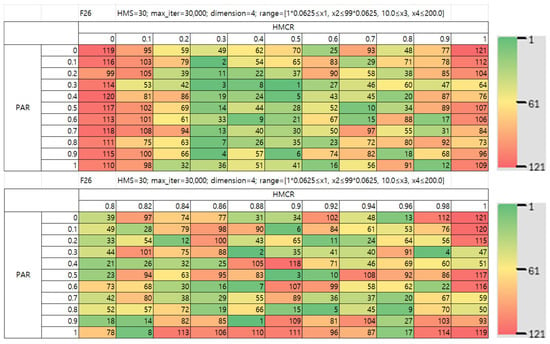

The sensitivity analysis results for the two hyper-parameters (HMCR and PAR) on the unconstrained unimodal functions (F1–F7), multimodal functions (F8–F23), and real-world design problems (F24–F28) are displayed as heatmaps in Figures 7–34.

Figure 7.

Heatmap of parameter sensitivity experiments for the benchmark F1.

Figure 8.

Heatmap of parameter sensitivity experiments for the benchmark F2.

Figure 9.

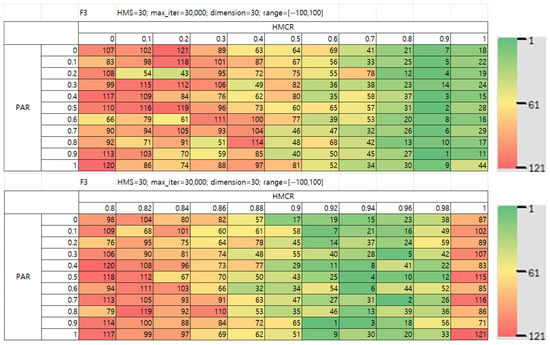

Heatmap of parameter sensitivity experiments for the benchmark F3.

Figure 10.

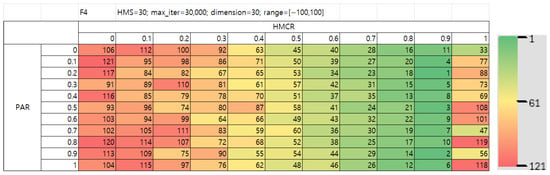

Heatmap of parameter sensitivity experiments for the benchmark F4.

Figure 11.

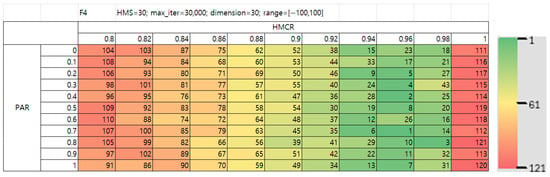

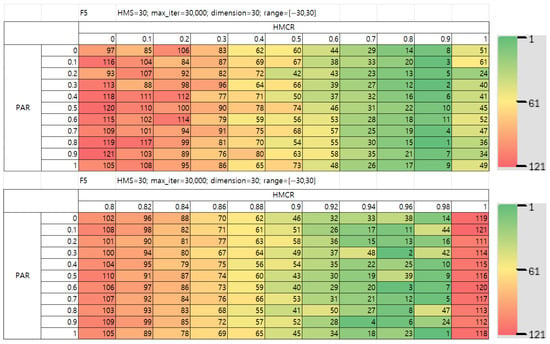

Heatmap of parameter sensitivity experiments for the benchmark F5.

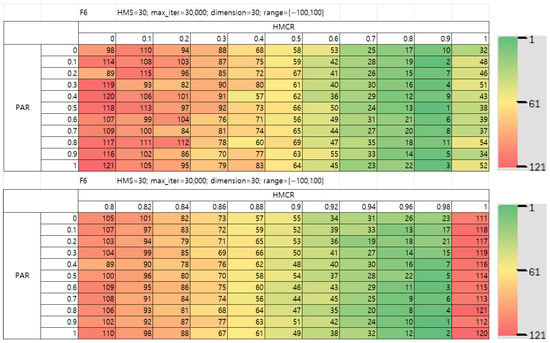

Figure 12.

Heatmap of parameter sensitivity experiments for the benchmark F6.

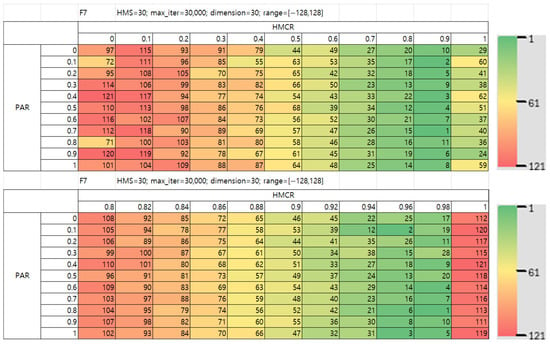

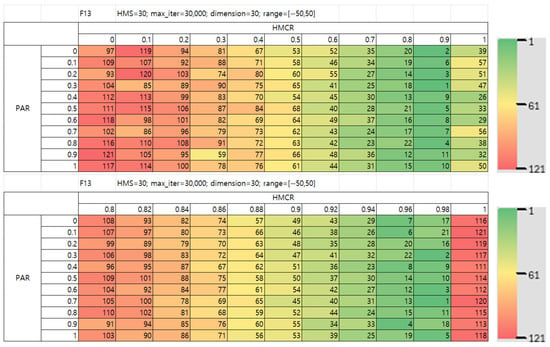

Figure 13.

Heatmap of parameter sensitivity experiments for the benchmark F7.

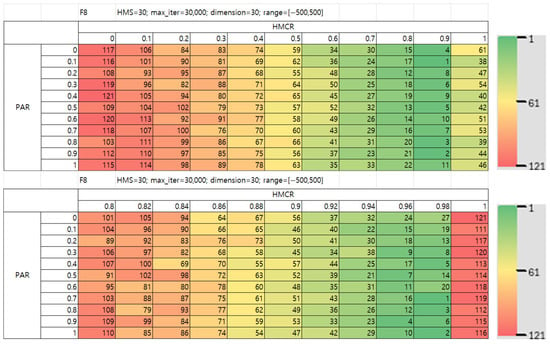

Figure 14.

Heatmap of parameter sensitivity experiments for the benchmark F8.

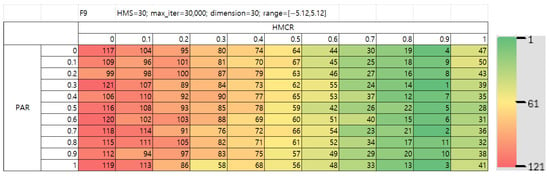

Figure 15.

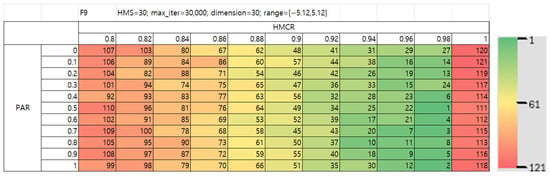

Heatmap of parameter sensitivity experiments for the benchmark F9.

Figure 16.

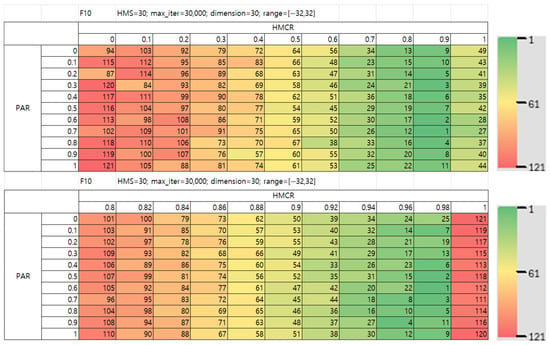

Heatmap of parameter sensitivity experiments for the benchmark F10.

Figure 17.

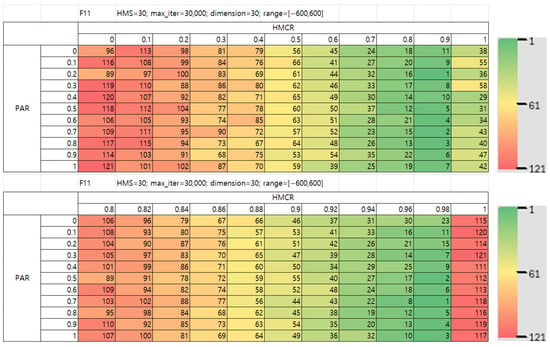

Heatmap of parameter sensitivity experiments for the benchmark F11.

Figure 18.

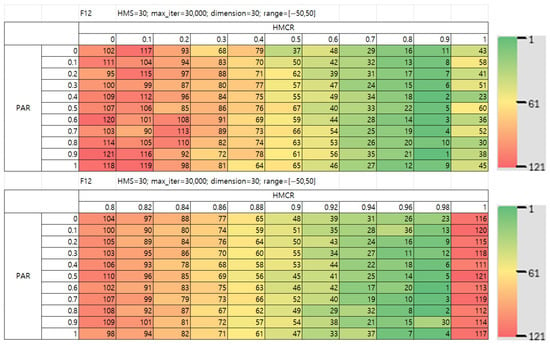

Heatmap of parameter sensitivity experiments for the benchmark F12.

Figure 19.

Heatmap of parameter sensitivity experiments for the benchmark F13.

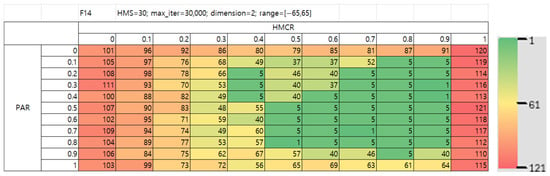

Figure 20.

Heatmap of parameter sensitivity experiments for the benchmark F14.

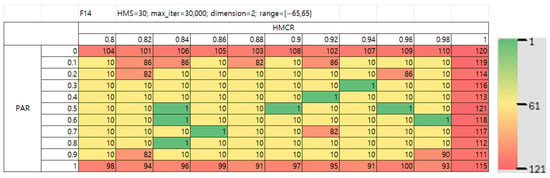

Figure 21.

Heatmap of parameter sensitivity experiments for the benchmark F15.

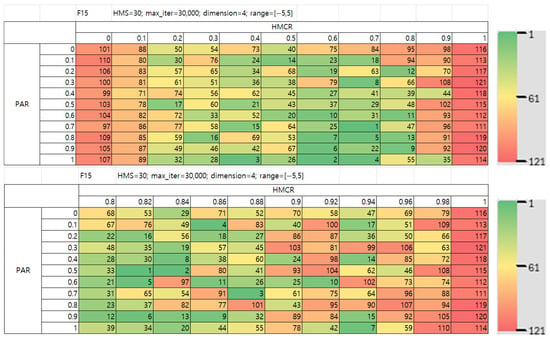

Figure 22.

Heatmap of parameter sensitivity experiments for the benchmark F16.

Figure 23.

Heatmap of parameter sensitivity experiments for the benchmark F17.

Figure 24.

Heatmap of parameter sensitivity experiments for the benchmark F18.

Figure 25.

Heatmap of parameter sensitivity experiments for the benchmark F19.

Figure 26.

Heatmap of parameter sensitivity experiments for the benchmark F20.

Figure 27.

Heatmap of parameter sensitivity experiments for the benchmark F21.

Figure 28.

Heatmap of parameter sensitivity experiments for the benchmark F22.

Figure 29.

Heatmap of parameter sensitivity experiments for the benchmark F23.

Figure 30.

Heatmap of parameter sensitivity experiments for real-world design problem F24.

Figure 31.

Heatmap of parameter sensitivity experiments for real-world design problem F25.

Figure 32.

Heatmap of parameter sensitivity experiments for real-world design problem F26.

Figure 33.

Heatmap of parameter sensitivity experiments for real-world design problem F27.

Figure 34.

Heatmap of parameter sensitivity experiments for real-world design problem F28.

4.1. Overall Trends in Parameter Sensitivity

Figures 7–34 show the performance heatmaps for the unconstrained unimodal (F1–F7) and multimodal (F8–F23) functions, as well as for the real-world design problems (F24–F28). Across all benchmark functions, several consistent and compelling trends emerge:

- Dominant Influence of HMCR: A common observation is that the color gradient across the heatmaps changes far more drastically along the horizontal axis (HMCR) than along the vertical axis (PAR). This indicates that the performance of the HS algorithm is substantially more sensitive to the HMCR value than to the PAR value. In the region where HMCR is less than 0.5 (the left area of the figures), performance was recorded in the lowest tier (indicated by red) for nearly all functions, regardless of the PAR setting. This strongly suggests that if the algorithm fails to sufficiently exploit existing good solutions, achieving efficient convergence is nearly impossible.

- The Optimal Performance Region (The “Golden Zone”): Conversely, the green areas, representing the best performance, were without exception concentrated in the region where HMCR ≥ 0.9. The best results were frequently observed specifically at HMCR = 0.9. This demonstrates that the HS algorithm is inherently based on a strong exploitation mechanism.

- The Pitfall of HMCR = 1.0: An intriguing phenomenon was the observation of performance degradation when HMCR was set to the extreme value of 1.0. This is attributed to the complete elimination of the possibility of random generation. By doing so, the algorithm loses the exploratory impetus required to escape from local optima discovered in the early stages of the search.

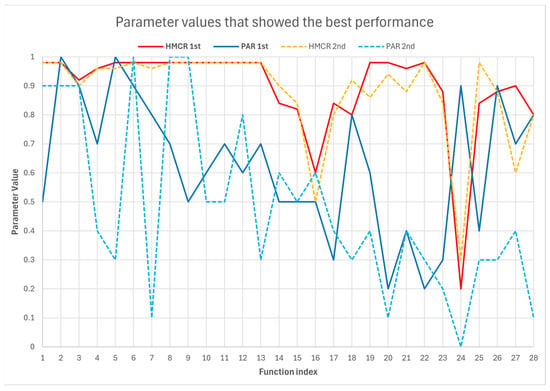

4.2. In-Depth Analysis of Parameter Preference Based on Function Characteristics

Figure 35 presents the HMCR and PAR values yielding the best and second-best performance across 28 benchmark functions. The horizontal axis denotes the benchmark function indices, while the vertical axis represents the corresponding HMCR and PAR parameter values. The solid line connects the values that achieved the best performance for each benchmark function, whereas the dashed line connects those associated with the second-best performance.

Figure 35. Best performing HMCR/PAR values for each benchmark function.

While the general trends provide a broad overview, the topographical characteristics of a specific problem play a crucial role in determining the best hyper-parameter values, especially the PAR value.

- Group 1: Unimodal Functions (F1–F7)

For this group of functions, which possess only a single global optimum, the core challenge for the algorithm is the speed and precision of convergence to that optimum. As illustrated by the heatmap for F1 in Figure 7, all functions in this group exhibited the best performance (dark green) at high HMCR values (0.9–0.99). Also, although PAR did not show a clear trend in the heatmap, Figure 35 shows that the top-performing PAR values for F1–F7 are consistently above 0.5, and in most cases exceed 0.7, indicating that for unimodal functions, a PAR setting of no less than 0.5 is generally preferable. This can be interpreted as follows: since the solution space contains no ‘traps’ (local optima), exploration would only lead to unnecessary wandering. Instead, a strong exploitation-dominant strategy, which continuously references good solutions via a high HMCR, proves to be the most efficient approach.

- Group 2: Multimodal Functions (F8–F23)

Group 2 can be further subdivided. First, F8–F13 have a vast, 30-dimensional search space, whereas F14–F23 have fewer dimensions, ranging from 2 to 6. However, the functions in F8–F13 are symmetrical and have a relatively simple structure, while those in F14–F23 are highly complex and feature deceptive landscapes with intentionally placed, difficult traps (local optima).

The results for F8–F13 were largely similar to those for the unimodal functions (F1–F7). The influence of PAR appeared minimal, and consistently superior performance was demonstrated with high HMCR values (≥0.9).

In contrast, for F14–F23, the trends were less consistent, and the green areas in the heatmaps were distributed over a broader range of HMCR values. For instance, the results for F16 in Figure 22 show the best performance at HMCR = 0.6. Similarly, for F17 in Figure 23, while not as pronounced as in F16, numerous cases of good performance were observed at HMCR values of 0.6 or 0.7. This implies that for challenging functions like those in F14–F23, it is often necessary to make significant leaps to escape local optima through random generation, highlighting the greater importance of the exploration-exploitation balance compared to the functions in F1–F13.

Furthermore, whereas the influence of PAR was previously almost invisible, the second set of heatmaps in Figure 22, Figure 23 and Figure 24 revealed that a PAR of 0.3–0.6 yielded good performance, and Figure 25, Figure 26, Figure 27, Figure 28 and Figure 29 showed good performance with a PAR of 0.1–0.3. This can be understood as follows: when viewing HMCR over a wide range (0–1), its dominant influence masks the effect of PAR. However, when the focus is narrowed to a small range like HMCR = 0.8–1.0, the differences in HMCR become less significant, thereby revealing the previously hidden influence of PAR. This demonstrates that while the dominant impact of HMCR in the first heatmaps may obscure the effect of PAR, the second heatmaps reveal that PAR was indeed exerting a subtle influence.

According to Figure 35, which only examines the peak performance points, most of the functions F18–F23 also performed best at HMCR values above 0.9, although some, such as F15–F18, performed best at 0.6 or 0.8.

Additionally, F14, F17, and F20–F24 performed best at PAR values lower than 0.5.

- Group 3: Real-World Design Optimization Problems (F24–F28)

In Group 3, the variations observed in the challenging problem set of F14–F23 became even more pronounced. For problems 1, 3, and 4 (F24, F26, and F27), better performance was achieved at lower HMCR values, such as 0.3. Problem 2 (F25) showed no clear trend, while for problem 5 (F28), good results were mostly obtained when HMCR was 0.8.

Looking at the best-performing points in Figure 35, high HMCR values between 0.8 and 0.9 perform well for most of these problems; F24 is the only one whose best performance occurs at a low HMCR of 0.2.

The group-wise analysis is summarized in Table 2.

Table 2. Summary of Parameter Influence across Function Groups.

5. Conclusions and Future Works

This study empirically analyzed the influence of the HS algorithm’s core parameters, HMCR and PAR, on its performance. Extensive experiments were conducted on 23 benchmark functions with diverse characteristics and 5 real-world optimization problems, with the results presented visually through rank-based heatmaps.

The sensitivity analysis revealed that the performance of the HS algorithm is substantially more sensitive to the HMCR value than to PAR. Specifically, it was experimentally established that setting a high HMCR value (≥0.9) is advantageous for securing overall algorithmic stability and performance. It is crucial to note, however, that the extreme case of setting HMCR to 1.0 could lead to severe performance degradation. This is attributed to the complete elimination of random generation, which significantly increases the likelihood of the algorithm becoming trapped in local optima.

The role of PAR manifested differently depending on the topographical complexity of the problem. For problems with a single optimum or relatively simple multiple optima (F1–F13), a high HMCR value (0.9–0.99) had a dominant influence, while a low to medium PAR (0.1–0.3) showed efficient performance. This signifies the importance of an exploitation strategy that leverages existing superior solutions when the solution space complexity is low. Conversely, for complex functions containing numerous local optima (F14–F23) and some real-world problems (F24–F28), a relatively higher PAR value (0.3–0.5) proved more effective for global optimum search. This result indicates that for high-difficulty problems, an adequate exploration capability, that is, securing solution diversity through pitch adjusting, is effective for escaping local optima.

Through these experimental results, this study reinforces the empirical foundation for setting the HMCR and PAR parameters in the HS algorithm. By providing practical guidelines for parameter tuning based on problem characteristics, this research contributes to enhancing the algorithm’s efficiency and applicability.

Future research is expected to devise methods to further enhance the robustness and convergence speed of the HS algorithm based on the insights from this preliminary but fundamental study. Additionally, future work will investigate dynamic parameter setting methodologies that have been studied in various other metaheuristic algorithms and adapt them for the HS algorithm. For example, in the early iterations, where global exploration is expected to be more important, the HMCR could be maintained at a relatively low level. As the iterations proceed, its value may be gradually increased up to around 0.9, while ensuring that it does not reach 1.0, to enhance exploitation in the later stages.
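One possible shape for such a dynamic schedule is sketched below in Python. The study does not specify a schedule, so the function name `linear_hmcr`, the starting value of 0.5, and the linear ramp are purely illustrative assumptions; only the target of about 0.9 and the requirement to stay below 1.0 come from the text.

```python
def linear_hmcr(iteration, n_iterations, start=0.5, end=0.9):
    """Linearly ramp HMCR from `start` to `end` over the run.

    `start`, `end`, and the linear shape are illustrative assumptions.
    """
    if n_iterations <= 1:
        return end
    # Fraction of the run completed, clamped to [0, 1].
    fraction = min(max(iteration / (n_iterations - 1), 0.0), 1.0)
    return start + (end - start) * fraction
```

A schedule like this would be evaluated once per improvisation and its value used in place of the fixed HMCR when deciding between the HMC and RG operators.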

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/math13203248/s1, Table S1: Friedman test, Table S2: Top three parameter pairs for benchmark function F1, Table S3: Top three parameter pairs for benchmark function F8, Table S4: Top three parameter pairs for benchmark function F15, Table S5: Top three parameter pairs for benchmark function F24, Table S6: OS, Hardware and Simulation information; Programming Code (MS-Excel VBA).

Author Contributions

Conceptualization, Z.W.G. and G.L.; methodology, Z.W.G. and G.L.; software, G.L.; validation, G.L. and Z.W.G.; formal analysis, G.L.; data curation, G.L. and Z.W.G.; writing—original draft preparation, G.L.; writing—review and editing, G.L. and Z.W.G.; visualization, G.L.; supervision, Z.W.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Institute of Energy Technology Evaluation and Planning (KETEP) and the Ministry of Trade, Industry & Energy, Republic of Korea (RS-2024-00441420; RS-2024-00442817).

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).