Joint Scheduling and Placement for Vehicular Intelligent Applications Under QoS Constraints: A PPO-Based Precedence-Preserving Approach

Abstract

1. Introduction

- Hierarchical VEC Architecture with DAG Task Modeling: We propose a cloud–edge–vehicle architecture that models each vehicular application as a DAG of subtasks, capturing both sequential and parallel execution.

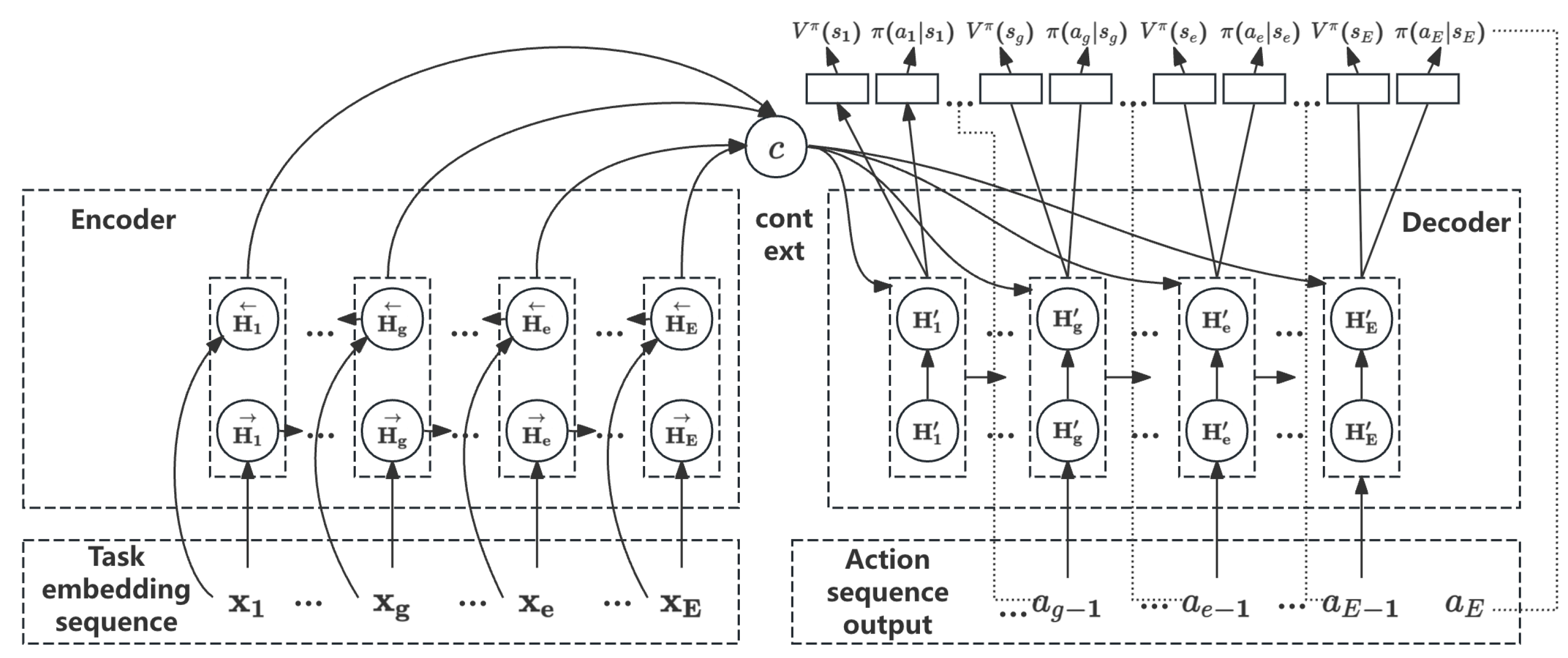

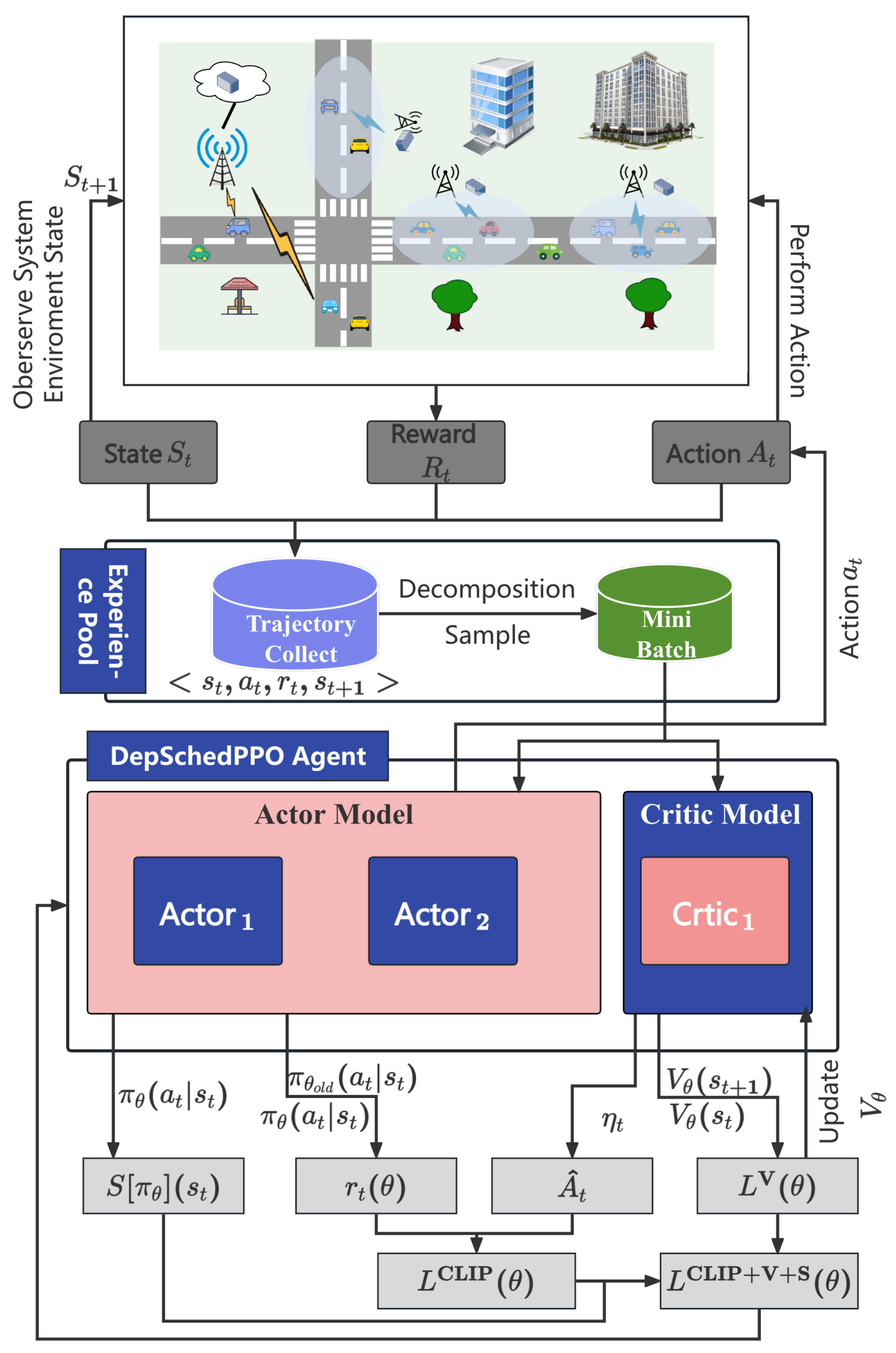

- Seq2Seq-Based Policy Network for Dependency-Aware Scheduling: Unlike conventional DRL methods that use feedforward DNNs, we design a novel Seq2Seq policy network to capture task dependencies and historical scheduling decisions, improving offloading accuracy and system performance.

- PPO-Based Optimization for QoS Maximization: We adopt Proximal Policy Optimization (PPO) to ensure stable learning and maximize the quality of service (QoS) in dynamic vehicular environments.

- Comprehensive Performance Evaluation: Extensive simulations demonstrate that DepSchedPPO outperforms existing methods in reducing task latency and energy consumption under diverse network conditions.

2. Related Work

2.1. Vehicular Edge Computing Architectures

2.2. Task Offloading

2.3. Summary

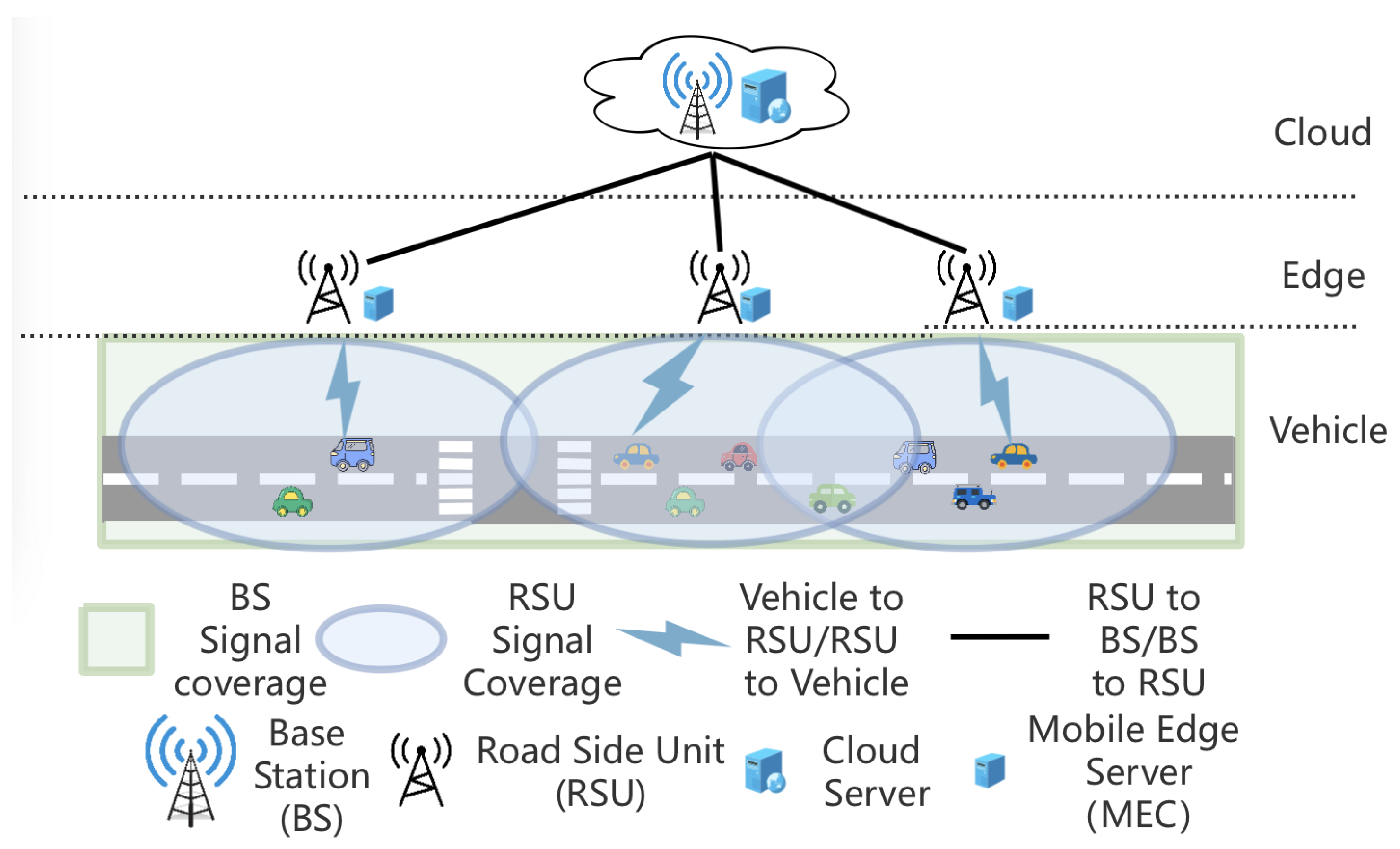

3. System Model

- Vehicle layer: on-road vehicles with limited computing capacity that communicate with base stations (BSs) and roadside units (RSUs).

- Edge layer: roadside nodes, each pairing an RSU (deployed with non-overlapping coverage to avoid interference) with an MEC server that offers moderate computing and storage capabilities.

- Cloud layer: large-scale computing resources reached from the BSs over wired backhaul links, providing centralized capacity when edge resources are insufficient.
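To make the three layers concrete, the sketch below reduces each one to its computing capability and computes the pure execution time of a subtask as required CPU cycles divided by CPU speed. The capabilities (vehicle 1 GHz, edge server 10 GHz, cloud server 80 GHz) come from the simulation parameter table; the names `Tier`, `TIERS`, and `execution_time` are hypothetical, and transmission and queuing are deliberately left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    """One layer of the cloud-edge-vehicle hierarchy, reduced to its CPU speed."""
    name: str
    cpu_hz: float  # computing capability in CPU cycles per second

# Computing capabilities from the simulation parameter table.
TIERS = {
    "vehicle": Tier("vehicle", 1e9),  # 1 GHz vehicle terminal
    "edge": Tier("edge", 10e9),       # 10 GHz MEC server
    "cloud": Tier("cloud", 80e9),     # 80 GHz cloud server
}

def execution_time(cycles: float, tier: Tier) -> float:
    """Processing time in seconds for a subtask that needs `cycles` CPU cycles."""
    return cycles / tier.cpu_hz

if __name__ == "__main__":
    work = 2e9  # illustrative subtask of 2 Gcycles
    for tier in TIERS.values():
        print(f"{tier.name:>7}: {execution_time(work, tier):.3f} s")
```

Execution time alone always favours the cloud; the transmission and queuing delays modeled in Section 3.2 are what can make local or edge execution preferable.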

3.1. Problem Assumptions

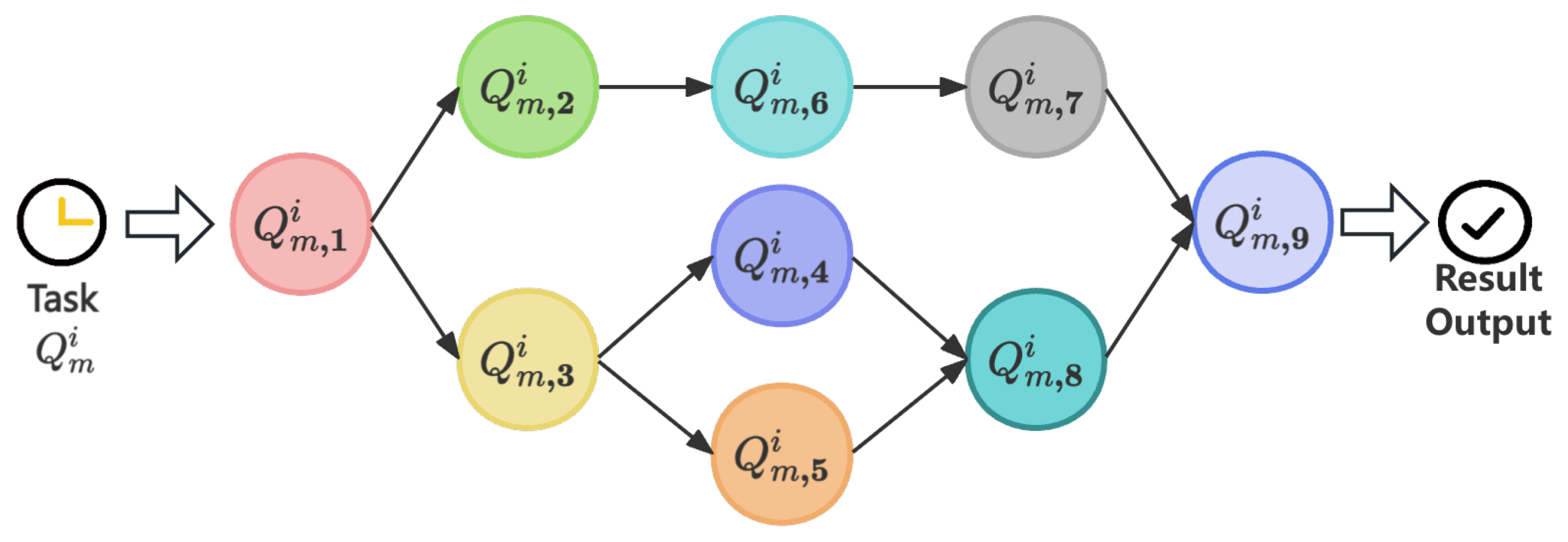

- The considered tasks are dependency-aware, representing intelligent vehicular applications composed of multiple interdependent subtasks. Each subtask is atomic, meaning it cannot be further divided and must be executed in a single stage without interruption.

- The vehicular edge computing system operates in a time-division manner, providing services to all vehicles in discrete time slots. It is assumed that tasks generated by vehicles can be completed within a single time slot.

- Due to data dependencies among subtasks, the output of a predecessor subtask may serve as input for its successor. Consequently, the return of intermediate results must be considered during task offloading to ensure correct execution of downstream subtasks.

- The dependency structure among subtasks may permit partial parallelism, meaning that some subtasks can be executed concurrently. This increases the number of subtasks that may be offloaded to remote servers at the same time. However, because the communication channels of the MEC server have limited bandwidth, offloaded subtasks may experience queuing delays when the channel is occupied.
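The assumptions above imply that a subtask may start only after every predecessor has finished and returned its intermediate result. A minimal sketch, assuming the DAG is given as a list of directed edges between integer subtask indices, derives a precedence-preserving order with Kahn's algorithm. This is one standard way to linearise a DAG before processing it sequentially, not necessarily the ordering rule used by DepSchedPPO; the function name `topological_order` is hypothetical.

```python
from collections import deque

def topological_order(num_subtasks, edges):
    """Return subtask indices so that every predecessor precedes its successors.

    edges: iterable of (u, v) pairs meaning subtask v consumes the output of u.
    Raises ValueError if the graph contains a cycle (i.e. it is not a DAG).
    """
    successors = [[] for _ in range(num_subtasks)]
    in_degree = [0] * num_subtasks
    for u, v in edges:
        successors[u].append(v)
        in_degree[v] += 1

    # Entry subtasks have no predecessors and are ready immediately.
    ready = deque(i for i in range(num_subtasks) if in_degree[i] == 0)
    order = []
    while ready:
        u = ready.popleft()
        order.append(u)
        for v in successors[u]:
            in_degree[v] -= 1          # one more input of v is now available
            if in_degree[v] == 0:
                ready.append(v)

    if len(order) != num_subtasks:
        raise ValueError("dependency graph contains a cycle")
    return order

if __name__ == "__main__":
    # Subtask 0 fans out to 1 and 2 (which may run in parallel); both feed subtask 3.
    print(topological_order(4, [(0, 1), (0, 2), (1, 3), (2, 3)]))  # [0, 1, 2, 3]
```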

3.2. Problem Modeling

- Transmission Phase: The period required to send the computation task to the MEC server. Because channel bandwidth is limited, a task may have to wait for an idle channel before transmitting; the delay in this phase therefore comprises both the waiting time for channel availability and the wireless transmission time.

- Execution Phase: The time the MEC server takes to process the assigned task.

- Download Phase: The time needed to deliver the computation results back to the vehicle terminal. Because the returned data are much smaller than the uploaded input, bandwidth limitations on the downlink channel are neglected in this phase.

- Transmission Phase: The period required to transmit the computation task to the cloud server. Due to abundant communication resources at the cloud server, tasks do not need to wait for an idle channel; transmission starts immediately upon readiness.

- Execution Phase: The time the cloud server takes to execute the task. Because the cloud server has ample computational resources, subtasks are executed immediately upon arrival.

- Download Phase: The time taken to return the computation results to the vehicle terminal. As in the MEC model, the returned data are assumed to be small, so channel bandwidth limitations are ignored.
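The three phases listed above can be combined into one completion-time estimate per subtask. The sketch below assumes a single shared MEC uplink channel, tracked by the time it next becomes idle: an MEC upload waits for that channel, whereas a cloud upload starts as soon as the subtask is ready, and neither download is queued. It further assumes the MEC server starts processing as soon as the upload ends. Sizes are in bits and rates in bits per second; the function names `mec_finish` and `cloud_finish` are hypothetical rather than the paper's notation.

```python
def mec_finish(ready, channel_free, data_bits, cycles, out_bits,
               up_bps, down_bps, cpu_hz):
    """Finish time of a subtask offloaded to the MEC server.

    Returns (finish_time, channel_free_after). The upload waits until the
    shared uplink channel is idle; execution and result download follow
    immediately, and the download is not queued.
    """
    upload_start = max(ready, channel_free)         # wait for an idle channel
    upload_end = upload_start + data_bits / up_bps  # wireless transmission
    exec_end = upload_end + cycles / cpu_hz         # processing on the MEC server
    finish = exec_end + out_bits / down_bps         # return of results
    return finish, upload_end                       # channel is busy only while uploading

def cloud_finish(ready, data_bits, cycles, out_bits, up_bps, down_bps, cpu_hz):
    """Finish time of a subtask offloaded to the cloud: no channel or CPU waiting."""
    return ready + data_bits / up_bps + cycles / cpu_hz + out_bits / down_bps

if __name__ == "__main__":
    # Rates and CPU speeds from the parameter table; sizes and cycles are illustrative.
    mec = dict(data_bits=0.9e6, cycles=1e9, out_bits=0.9e6,
               up_bps=9e6, down_bps=9e6, cpu_hz=10e9)
    channel_free = 0.0
    for k in range(2):  # two parallel subtasks, both ready at t = 0
        done, channel_free = mec_finish(0.0, channel_free, **mec)
        print(f"MEC subtask {k} finishes at {done:.2f} s")  # 0.30 s, then 0.40 s
    cloud = cloud_finish(0.0, 0.9e6, 1e9, 0.9e6, 6e6, 6e6, 80e9)
    print(f"cloud subtask finishes at {cloud:.4f} s")
```

The second MEC subtask finishes 0.1 s later than the first purely because it queues behind the first upload, which is the channel-contention effect that parallel subtasks introduce.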

3.3. Problem Formulation

4. Algorithm Design

4.1. Markov Decision Process Elements

4.2. Seq2Seq Neural Network Architecture

4.3. DepSchedPPO Algorithm Architecture

4.4. DepSchedPPO Training and Testing Algorithms

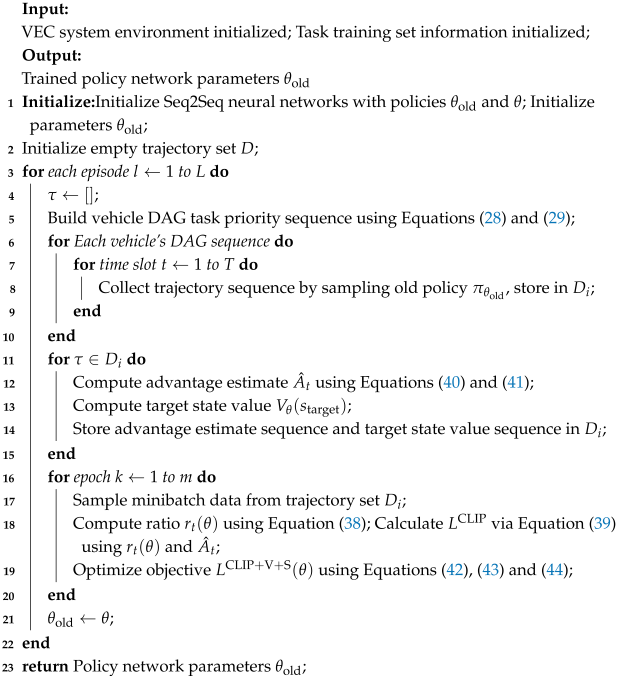

Algorithm 1: DepSchedPPO training algorithm
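Algorithm 1 trains the policy with PPO [62]. As a hedged illustration of the two quantities a PPO update typically relies on, the sketch below computes generalized advantage estimates (GAE) and the clipped surrogate loss of Schulman et al., using the clip range (0.2), GAE factor (0.95), discount factor (0.99), value loss coefficient (0.5), and entropy coefficient (0.01) listed in the training hyperparameter table. It is plain Python over per-step lists rather than the authors' Seq2Seq implementation, and the function names `gae` and `ppo_loss` are hypothetical.

```python
import math

# Values from the hyperparameter table; the learning rate is not needed for the loss itself.
GAMMA, GAE_LAMBDA = 0.99, 0.95
CLIP_RANGE, VF_COEF, ENT_COEF = 0.2, 0.5, 0.01

def gae(rewards, values, last_value, gamma=GAMMA, lam=GAE_LAMBDA):
    """Generalized advantage estimates for one trajectory without mid-episode resets.

    values[t] is the critic's estimate V(s_t); last_value bootstraps V(s_T)
    (pass 0.0 when the episode terminated after the last step).
    """
    advantages = [0.0] * len(rewards)
    next_value, running = last_value, 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD residual
        running = delta + gamma * lam * running               # exponentially weighted sum
        advantages[t] = running
        next_value = values[t]
    return advantages

def ppo_loss(new_logp, old_logp, advantages, returns, value_preds, entropies,
             clip=CLIP_RANGE, vf_coef=VF_COEF, ent_coef=ENT_COEF):
    """Scalar loss to minimise: -clipped surrogate + vf_coef * value MSE - ent_coef * entropy."""
    n = len(advantages)
    surrogate = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)                 # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip), 1.0 + clip)
        surrogate += min(ratio * adv, clipped * adv) / n  # pessimistic (clipped) bound
    value_loss = sum((r - v) ** 2 for r, v in zip(returns, value_preds)) / n
    entropy = sum(entropies) / n
    return -surrogate + vf_coef * value_loss - ent_coef * entropy

if __name__ == "__main__":
    rewards, values = [1.0, 0.0, 2.0], [0.5, 0.4, 1.0]
    adv = gae(rewards, values, last_value=0.0)
    returns = [a + v for a, v in zip(adv, values)]  # regression targets for the critic
    print([round(a, 3) for a in adv], [round(r, 3) for r in returns])
```

Clipping the probability ratio to [0.8, 1.2] caps how far a single update can move the policy away from the one that collected the data, which is the stability property the paper attributes to PPO.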

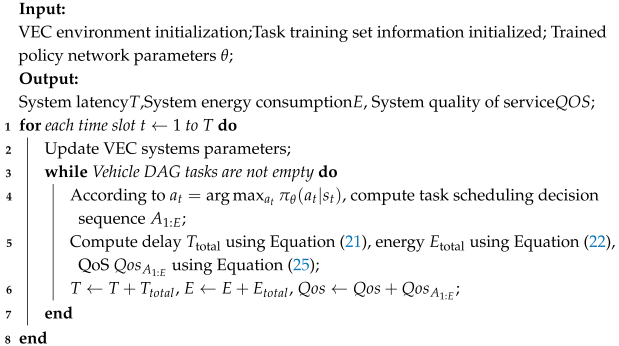

Algorithm 2: DepSchedPPO validating algorithm

5. Simulation and Result Analysis

5.1. Experimental Setup

- Task Offloading Simulation: Task graphs generated for each vehicle are used to simulate offloading across three execution environments: local vehicle processing, MEC servers, and cloud servers. The DepSchedPPO algorithm makes offloading decisions based on real-time information, including network state, vehicle position, and available resources.

- Dynamic Mobility and Network Conditions: The simulation models time-varying vehicle mobility and network conditions, which influence offloading decisions through bandwidth fluctuations and changes in edge server status.

- Evaluation Metrics: We evaluate the algorithm using three metrics: (i) task latency, the time from task initiation to the return of results; (ii) energy consumption, the total energy used by the vehicle, MEC servers, and cloud; and (iii) Quality of Service (QoS), a composite metric that balances latency and energy consumption.
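The text characterizes QoS only as a composite of latency and energy. One common way to build such a metric, shown here purely as an assumption and not necessarily the paper's definition, is a weighted sum of the relative latency and energy savings over all-local execution (the ASTOTL baseline). The function name `qos_score` and the trade-off parameter `weight` are hypothetical.

```python
def qos_score(latency, energy, local_latency, local_energy, weight=0.5):
    """Hypothetical composite QoS: weighted relative savings versus all-local execution.

    Positive values mean the schedule beats running every subtask on the vehicle.
    `weight` shifts emphasis toward latency savings (-> 1) or energy savings (-> 0).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    latency_gain = (local_latency - latency) / local_latency
    energy_gain = (local_energy - energy) / local_energy
    return weight * latency_gain + (1.0 - weight) * energy_gain

if __name__ == "__main__":
    # Offloading halves latency (1.2 s -> 0.6 s) but raises total energy (2.0 J -> 3.0 J).
    for w in (0.2, 0.5, 0.8):
        print(w, round(qos_score(0.6, 3.0, 1.2, 2.0, weight=w), 3))
```

Normalizing each term by the local baseline makes latency (seconds) and energy (joules) commensurable, so the weight expresses a pure preference rather than compensating for unit scales.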

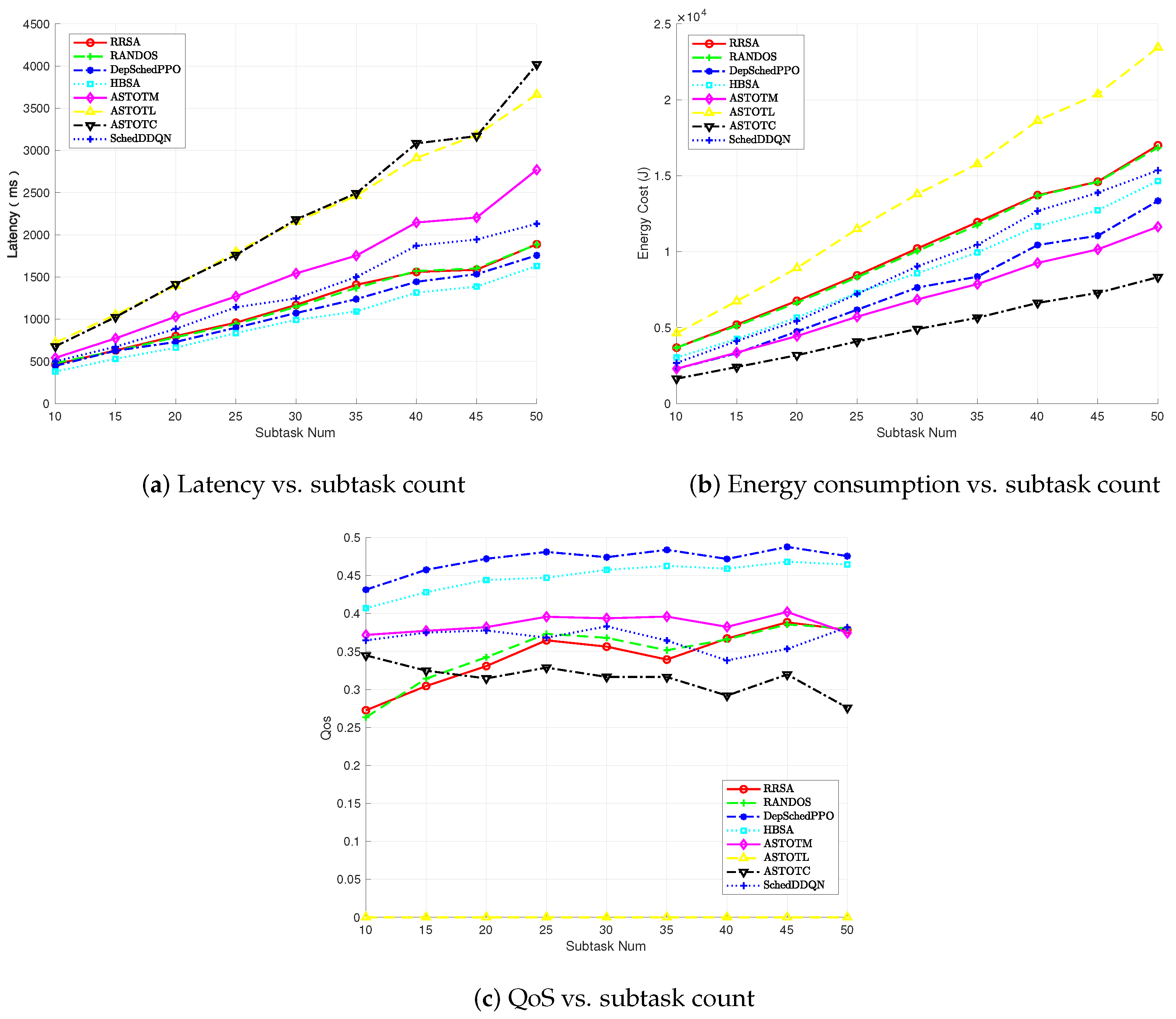

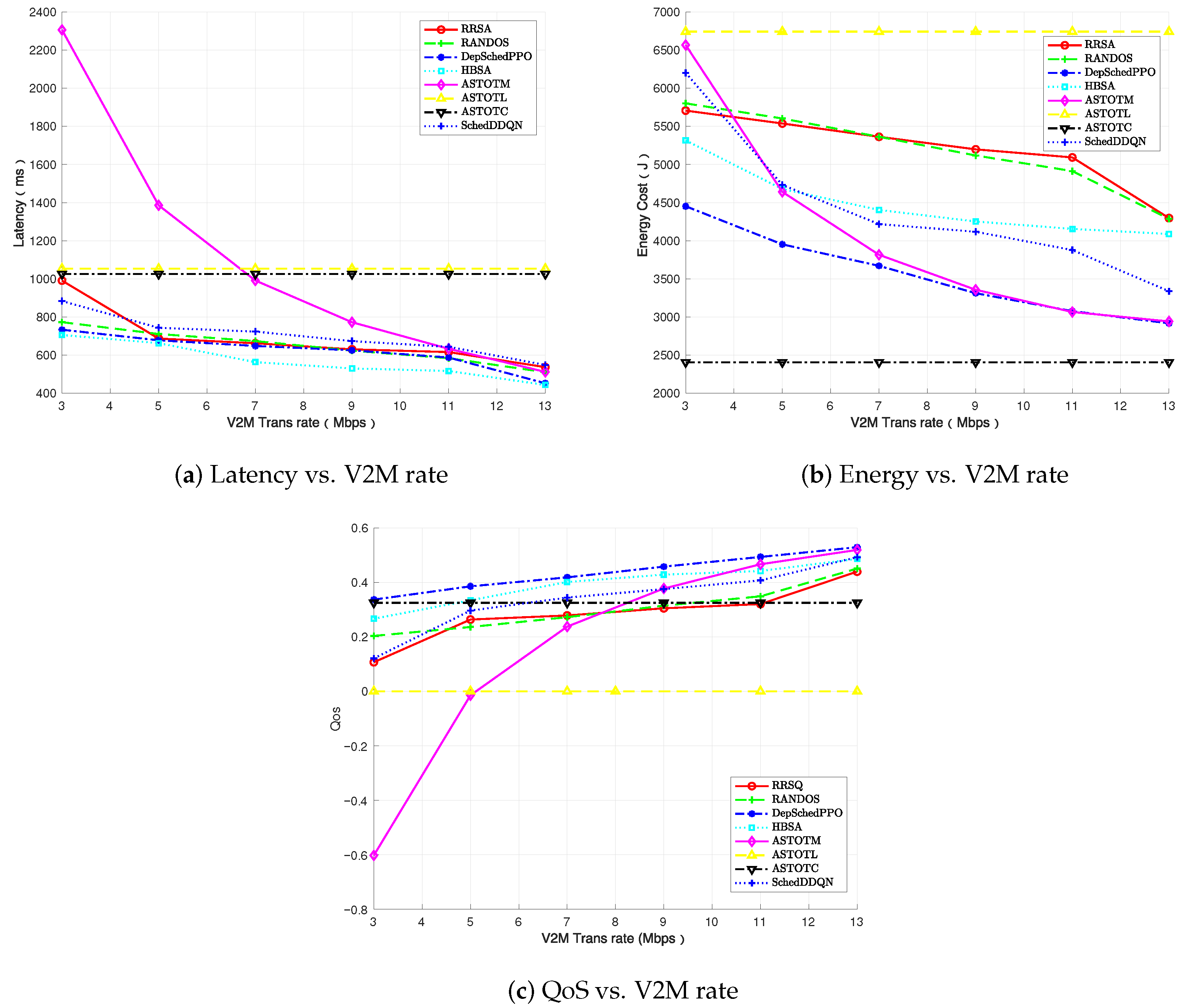

5.2. Results and Analysis

5.2.1. Effectiveness of DepSchedPPO

5.2.2. Efficiency of DepSchedPPO

- ASTOTL [67]: All subtasks execute locally on vehicles (a baseline for quantifying the benefit of offloading).

- ASTOTM [67]: All subtasks are offloaded to MEC servers.

- ASTOTC [60]: All subtasks are offloaded to cloud servers (abundant computing resources but long transmission delays).

- RANDOS [60]: Random offloading strategy (a lower-bound reference).

- RRSA [68]: A round-robin scheduling algorithm suited to fairness-oriented scenarios; subtasks are offloaded in rotation to the vehicle terminal, the MEC server, and the cloud.

- HBSA [69]: A HEFT-based scheduling algorithm that accounts for both the computation and communication costs of tasks. Starting from the exit node of the DAG task graph, it recursively computes the upward rank of each task from its average execution and communication times. It then processes tasks in decreasing order of upward rank and assigns each one to the resource that yields its earliest possible completion time. The start time of a task is set according to the availability of its allocated resource and the completion times of all its predecessors.

- SchedDDQN [27]: An improved variant of DQN that aims to increase learning stability and accuracy by decoupling action selection from action evaluation. Specifically, SchedDDQN combines the dueling deep Q-network and double DQN techniques, but it does not consider long-term dependencies between tasks. It uses the behavior (online) network to select the next action and the target network to evaluate the Q-value of that action.
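The prioritisation step that HBSA inherits from HEFT can be sketched with the standard upward-rank recursion of Topcuoglu et al. [45]: the rank of a task is its average execution time plus the largest value, over its successors, of average communication time plus the successor's rank; an exit task's rank is just its average execution time. The sketch assumes average execution and communication times are already known, covers only the ranking phase (not the earliest-finish-time resource selection), and uses hypothetical function names.

```python
from functools import lru_cache

def upward_ranks(avg_exec, avg_comm, successors):
    """HEFT upward rank of every task.

    rank_u(i) = avg_exec[i] + max over successors j of (avg_comm[(i, j)] + rank_u(j)),
    which reduces to avg_exec[i] for an exit task.
    avg_exec:   list, mean execution time of each task over all resources
    avg_comm:   dict mapping edge (i, j) to its mean communication time
    successors: list of lists, direct successors of each task
    """
    @lru_cache(maxsize=None)
    def rank(i):
        tail = max((avg_comm[(i, j)] + rank(j) for j in successors[i]), default=0.0)
        return avg_exec[i] + tail

    return {i: rank(i) for i in range(len(avg_exec))}

def heft_priority_order(avg_exec, avg_comm, successors):
    """Tasks sorted by decreasing upward rank (HEFT's task-prioritising phase)."""
    ranks = upward_ranks(avg_exec, avg_comm, successors)
    return sorted(ranks, key=ranks.get, reverse=True)

if __name__ == "__main__":
    # Diamond DAG: 0 -> {1, 2} -> 3, with a costly transfer on edge 0 -> 2.
    exec_t = [2.0, 3.0, 1.0, 2.0]
    comm_t = {(0, 1): 1.0, (0, 2): 4.0, (1, 3): 1.0, (2, 3): 1.0}
    succ = [[1, 2], [3], [3], []]
    print(upward_ranks(exec_t, comm_t, succ))         # {0: 10.0, 1: 6.0, 2: 4.0, 3: 2.0}
    print(heft_priority_order(exec_t, comm_t, succ))  # [0, 1, 2, 3]
```

With positive execution times, a task's upward rank always exceeds that of each of its successors, so the priority list is itself a valid precedence-preserving order.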

6. Conclusions and Future Work

- Dependency-Aware Task Scheduling and Offloading: We introduce a novel approach that models vehicular tasks as Directed Acyclic Graphs (DAGs), ensuring that task offloading respects sequential constraints while exploiting parallelism, thereby improving system performance under realistic conditions.

- Deep Reinforcement Learning with PPO: We leverage the PPO algorithm to stabilize learning and improve offloading decisions, enabling effective scheduling in high-dimensional, dynamic environments.

- Integration of Task Dependencies and QoS Optimization: Unlike previous work, which often oversimplifies task dependencies, our approach models inter-subtask relationships and integrates Quality of Service (QoS) metrics to minimize latency and energy consumption, providing a more accurate representation of real-world vehicular applications.

- Comprehensive Evaluation: We conduct detailed evaluations under varying vehicular mobility and network conditions, showing that our method outperforms existing benchmarks and adapts robustly to changing conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ma, Y.; Wang, Z.; Yang, H.; Yang, L. Artificial intelligence applications in the development of autonomous vehicles: A survey. IEEE/CAA J. Autom. Sin. 2020, 7, 315–329.

- Wang, Z.; Zhong, Z.; Zhao, D.; Ni, M. Vehicle-Based Cloudlet Relaying for Mobile Computation Offloading. IEEE Trans. Veh. Technol. 2018, 67, 11181–11191.

- Tran, T.X.; Hajisami, A.; Pandey, P.; Pompili, D. Collaborative Mobile Edge Computing in 5G Networks: New Paradigms, Scenarios, and Challenges. IEEE Commun. Mag. 2017, 55, 54–61.

- Zhao, J.; Li, W.; Zhu, B.; Zhang, P.; Tang, R. A Tachograph-Based Approach to Restoring Accident Scenarios From the Vehicle Perspective for Autonomous Vehicle Testing. IEEE Trans. Intell. Transp. Syst. 2025, 26, 13909–13926.

- Chen, Y.; Zhao, F.; Chen, X.; Wu, Y. Efficient Multi-Vehicle Task Offloading for Mobile Edge Computing in 6G Networks. IEEE Trans. Veh. Technol. 2022, 71, 4584–4595.

- Yin, L.; Luo, J.; Qiu, C.; Wang, C.; Qiao, Y. Joint Task Offloading and Resources Allocation for Hybrid Vehicle Edge Computing Systems. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10355–10368.

- Liang, J.; Tan, C.; Yan, L.; Zhou, J.; Yin, G.; Yang, K. Interaction-Aware Trajectory Prediction for Safe Motion Planning in Autonomous Driving: A Transformer-Transfer Learning Approach. IEEE Trans. Intell. Transp. Syst. 2025; early access.

- Liu, Y.; Yan, B.; Wang, B.; Sun, Q.; Dai, Y. Computation Offloading Strategy Based on Improved Polar Lights Optimization Algorithm and Blockchain in Internet of Vehicles. Appl. Sci. 2025, 15, 7341.

- Zhang, K.; Zhu, Y.; Leng, S.; He, Y.; Maharjan, S.; Zhang, Y. Deep Learning Empowered Task Offloading for Mobile Edge Computing in Urban Informatics. IEEE Internet Things J. 2019, 6, 7635–7647.

- Ma, G.; Wang, X.; Hu, M.; Ouyang, W.; Chen, X.; Li, Y. DRL-Based Computation Offloading With Queue Stability for Vehicular-Cloud-Assisted Mobile Edge Computing Systems. IEEE Trans. Intell. Veh. 2023, 8, 2797–2809.

- Zhang, J.; Guo, H.; Liu, J.; Zhang, Y. Task Offloading in Vehicular Edge Computing Networks: A Load-Balancing Solution. IEEE Trans. Veh. Technol. 2020, 69, 2092–2104.

- Song, T.J.; Jeong, J.; Kim, J.H. End-to-End Real-Time Obstacle Detection Network for Safe Self-Driving via Multi-Task Learning. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16318–16329.

- Wu, J.; Zou, Y.; Zhang, X.; Liu, J.; Sun, W.; Du, G. Dependency-Aware Task Offloading Strategy via Heterogeneous Graph Neural Network and Deep Reinforcement Learning. IEEE Internet Things J. 2025, 12, 22915–22933.

- Shang, Y.; Li, Z.; Li, S.; Shao, Z.; Jian, L. An Information Security Solution for Vehicle-to-Grid Scheduling by Distributed Edge Computing and Federated Deep Learning. IEEE Trans. Ind. Appl. 2024, 60, 4381–4395.

- Li, Y.; Yang, C.; Chen, X.; Liu, Y. Mobility and dependency-aware task offloading for intelligent assisted driving in vehicular edge computing networks. Veh. Commun. 2024, 45, 100720.

- Wang, J.; Zhang, X.; He, X.; Sun, Y. Bandwidth Allocation and Trajectory Control in UAV-Assisted IoV Edge Computing Using Multiagent Reinforcement Learning. IEEE Trans. Reliab. 2023, 72, 599–608.

- Li, C.; Wu, J.; Zhang, Y.; Wan, S. Energy-Latency Tradeoff for Joint Optimization of Vehicle Selection and Resource Allocation in UAV-Assisted Vehicular Edge Computing. IEEE Trans. Green Commun. Netw. 2025, 9, 445–458.

- Cui, Y.; Du, L.; He, P.; Wu, D.; Wang, R. Cooperative vehicles-assisted task offloading in vehicular networks. Trans. Emerg. Telecommun. Technol. 2022, 33, 4472–4488.

- Li, M.; Gao, J.; Zhao, L.; Shen, X. Adaptive Computing Scheduling for Edge-Assisted Autonomous Driving. IEEE Trans. Veh. Technol. 2021, 70, 5318–5331.

- Fan, W.; Liu, J.; Hua, M.; Wu, F.; Liu, Y. Joint Task Offloading and Resource Allocation for Multi-Access Edge Computing Assisted by Parked and Moving Vehicles. IEEE Trans. Veh. Technol. 2022, 71, 5314–5330.

- Wang, H.; Lv, T.; Lin, Z.; Zeng, J. Energy-Delay Minimization of Task Migration Based on Game Theory in MEC-Assisted Vehicular Networks. IEEE Trans. Veh. Technol. 2022, 71, 8175–8188.

- Zhou, W.; Fan, L.; Zhou, F.; Li, F.; Lei, X.; Xu, W.; Nallanathan, A. Priority-Aware Resource Scheduling for UAV-Mounted Mobile Edge Computing Networks. IEEE Trans. Veh. Technol. 2023, 72, 9682–9687.

- Tran-Dang, H.; Kim, D.S. FRATO: Fog Resource Based Adaptive Task Offloading for Delay-Minimizing IoT Service Provisioning. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 2491–2508.

- Ning, Z.; Zhang, K.; Wang, X.; Guo, L.; Hu, X.; Huang, J.; Hu, B.; Kwok, R.Y.K. Intelligent Edge Computing in Internet of Vehicles: A Joint Computation Offloading and Caching Solution. IEEE Trans. Intell. Transp. Syst. 2021, 22, 2212–2225.

- Gu, B.; Zhou, Z. Task Offloading in Vehicular Mobile Edge Computing: A Matching-Theoretic Framework. IEEE Veh. Technol. Mag. 2019, 14, 100–106.

- Ning, Z.; Huang, J.; Wang, X.; Rodrigues, J.J.P.C.; Guo, L. Mobile Edge Computing-Enabled Internet of Vehicles: Toward Energy-Efficient Scheduling. IEEE Netw. 2019, 33, 198–205.

- Tang, H.; Wu, H.; Qu, G.; Li, R. Double Deep Q-Network Based Dynamic Framing Offloading in Vehicular Edge Computing. IEEE Trans. Netw. Sci. Eng. 2023, 10, 1297–1310.

- Hui, Y.; Su, Z.; Luan, T.H.; Li, C. Reservation Service: Trusted Relay Selection for Edge Computing Services in Vehicular Networks. IEEE J. Sel. Areas Commun. 2020, 38, 2734–2746.

- Li, J.; Liang, W.; Xu, W.; Xu, Z.; Jia, X.; Zhou, W.; Zhao, J. Maximizing User Service Satisfaction for Delay-Sensitive IoT Applications in Edge Computing. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 1199–1212.

- He, X.; Lu, H.; Du, M.; Mao, Y.; Wang, K. QoE-Based Task Offloading With Deep Reinforcement Learning in Edge-Enabled Internet of Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 2252–2261.

- Wan, S.; Li, X.; Xue, Y.; Lin, W.; Xu, X. Efficient computation offloading for Internet of Vehicles in edge computing-assisted 5G networks. J. Supercomput. 2019, 76, 2518–2547.

- Xu, X.; Gu, R.; Dai, F.; Qi, L.; Wan, S. Multi-objective computation offloading for Internet of Vehicles in cloud-edge computing. Wirel. Netw. 2020, 26, 1611–1629.

- Xun, Y.; Qin, J.; Liu, J. Deep Learning Enhanced Driving Behavior Evaluation Based on Vehicle-Edge-Cloud Architecture. IEEE Trans. Veh. Technol. 2021, 70, 6172–6177.

- Dai, P.; Hu, K.; Wu, X.; Xing, H.; Teng, F.; Yu, Z. A Probabilistic Approach for Cooperative Computation Offloading in MEC-Assisted Vehicular Networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 899–911.

- Zhou, J.; Tian, D.; Wang, Y.; Sheng, Z.; Duan, X.; Leung, V.C. Reliability-Optimal Cooperative Communication and Computing in Connected Vehicle Systems. IEEE Trans. Mob. Comput. 2020, 19, 1216–1232.

- Liu, L.; Feng, J.; Mu, X.; Pei, Q.; Lan, D.; Xiao, M. Asynchronous Deep Reinforcement Learning for Collaborative Task Computing and On-Demand Resource Allocation in Vehicular Edge Computing. IEEE Trans. Intell. Transp. Syst. 2023, 24, 15513–15526.

- Fu, J.; Zhu, P.; Hua, J.; Li, J.; Wen, J. Optimization of the energy efficiency in Smart Internet of Vehicles assisted by MEC. EURASIP J. Adv. Signal Process. 2022, 2022, 1–17.

- Jia, Y.; Zhang, C.; Huang, Y.; Zhang, W. Lyapunov Optimization Based Mobile Edge Computing for Internet of Vehicles Systems. IEEE Trans. Commun. 2022, 70, 7418–7433.

- Han, D.; Chen, W.; Fang, Y. A Dynamic Pricing Strategy for Vehicle Assisted Mobile Edge Computing Systems. IEEE Wirel. Commun. Lett. 2019, 8, 420–423.

- Feng, W.; Zhang, N.; Li, S.; Lin, S.; Ning, R.; Yang, S.; Gao, Y. Latency Minimization of Reverse Offloading in Vehicular Edge Computing. IEEE Trans. Veh. Technol. 2022, 71, 5343–5357.

- Ma, C.; Zhu, J.; Liu, M.; Zhao, H.; Liu, N.; Zou, X. Parking Edge Computing: Parked-Vehicle-Assisted Task Offloading for Urban VANETs. IEEE Internet Things J. 2021, 8, 9344–9358.

- Qin, P.; Fu, Y.; Tang, G.; Zhao, X.; Geng, S. Learning Based Energy Efficient Task Offloading for Vehicular Collaborative Edge Computing. IEEE Trans. Veh. Technol. 2022, 71, 8398–8413.

- Xiao, Z.; Shu, J.; Jiang, H.; Min, G.; Chen, H.; Han, Z. Overcoming Occlusions: Perception Task-Oriented Information Sharing in Connected and Autonomous Vehicles. IEEE Netw. 2023, 37, 224–229.

- Rajak, R.; Kumar, S.; Prakash, S.; Rajak, N.; Dixit, P. A novel technique to optimize quality of service for directed acyclic graph (DAG) scheduling in cloud computing environment using heuristic approach. J. Supercomput. 2023, 79, 1956–1979.

- Topcuoglu, H.; Hariri, S.; Wu, M.Y. Performance-effective and low-complexity task scheduling for heterogeneous computing. IEEE Trans. Parallel Distrib. Syst. 2002, 13, 260–274.

- Sundar, S.; Liang, B. Offloading Dependent Tasks with Communication Delay and Deadline Constraint. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 37–45.

- Choudhary, A.; Gupta, I.; Singh, V.; Jana, P.K. A GSA based hybrid algorithm for bi-objective workflow scheduling in cloud computing. Future Gener. Comput. Syst. 2018, 83, 14–26.

- Dong, S.; Xia, Y.; Kamruzzaman, J. Quantum Particle Swarm Optimization for Task Offloading in Mobile Edge Computing. IEEE Trans. Ind. Inform. 2023, 19, 9113–9122.

- Lin, B.; Lin, K.; Lin, C.; Lu, Y.; Huang, Z.; Chen, X. Computation offloading strategy based on deep reinforcement learning for connected and autonomous vehicle in vehicular edge computing. J. Cloud Comput. 2021, 10, 33.

- Liu, G.; Dai, F.; Huang, B.; Qiang, Z.; Wang, S.; Li, L. A collaborative computation and dependency-aware task offloading method for vehicular edge computing: A reinforcement learning approach. J. Cloud Comput. 2022, 11, 68.

- Liu, S.; Yu, Y.; Lian, X.; Feng, Y.; She, C.; Yeoh, P.L.; Guo, L.; Vucetic, B.; Li, Y. Dependent Task Scheduling and Offloading for Minimizing Deadline Violation Ratio in Mobile Edge Computing Networks. IEEE J. Sel. Areas Commun. 2023, 41, 538–554.

- Zhao, L.; Huang, S.; Meng, D.; Liu, B.; Zuo, Q.; Leung, V.C.M. Stackelberg-Game-Based Dependency-Aware Task Offloading and Resource Pricing in Vehicular Edge Networks. IEEE Internet Things J. 2024, 11, 32337–32349.

- Luo, K.; Wang, Y.; Liu, Y.; Zhu, K. Collaborative Integration of Vehicle and Roadside Infrastructure Sensor for Temporal Dependency-Aware Task Offloading in the Internet of Vehicles. Int. J. Intell. Syst. 2025.

- Sun, Z.; Mo, Y.; Yu, C. Graph-Reinforcement-Learning-Based Task Offloading for Multiaccess Edge Computing. IEEE Internet Things J. 2023, 10, 3138–3150.

- Yan, J.; Bi, S.; Zhang, Y.J.A. Offloading and Resource Allocation With General Task Graph in Mobile Edge Computing: A Deep Reinforcement Learning Approach. IEEE Trans. Wirel. Commun. 2020, 19, 5404–5419.

- Zhang, J.; Wang, X.; Yuan, P.; Dong, H.; Zhang, P.; Tari, Z. Dependency-Aware Task Offloading Based on Application Hit Ratio. IEEE Trans. Serv. Comput. 2024, 17, 3373–3386.

- Chen, M.; Wang, T.; Zhang, S.; Liu, A. Deep reinforcement learning for computation offloading in mobile edge computing environment. Comput. Commun. 2021, 175, 1–12.

- Lu, H.; He, X.; Du, M.; Ruan, X.; Sun, Y.; Wang, K. Edge QoE: Computation Offloading With Deep Reinforcement Learning for Internet of Things. IEEE Internet Things J. 2020, 7, 9255–9265.

- Sun, H.; Zhang, X.; Zhang, B.; Sha, K.; Shi, W. Optimal Task Offloading and Trajectory Planning Algorithms for Collaborative Video Analytics With UAV-Assisted Edge in Disaster Rescue. IEEE Trans. Veh. Technol. 2024, 73, 6811–6828.

- Chen, J.; Yang, Y.; Wang, C.; Zhang, H.; Qiu, C.; Wang, X. Multitask Offloading Strategy Optimization Based on Directed Acyclic Graphs for Edge Computing. IEEE Internet Things J. 2022, 9, 9367–9378.

- Zhan, W.; Luo, C.; Wang, J.; Wang, C.; Min, G.; Duan, H.; Zhu, Q. Deep-Reinforcement-Learning-Based Offloading Scheduling for Vehicular Edge Computing. IEEE Internet Things J. 2020, 7, 5449–5465.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Sharma, N.; Ghosh, A.; Misra, R.; Das, S.K. Deep Meta Q-Learning Based Multi-Task Offloading in Edge-Cloud Systems. IEEE Trans. Mob. Comput. 2024, 23, 2583–2598.

- Arabnejad, H.; Barbosa, J.G. List Scheduling Algorithm for Heterogeneous Systems by an Optimistic Cost Table. IEEE Trans. Parallel Distrib. Syst. 2014, 25, 682–694.

- Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint Multi-Task Offloading and Resource Allocation for Mobile Edge Computing Systems in Satellite IoT. IEEE Trans. Veh. Technol. 2023, 72, 7783–7795.

- Guo, Z.; Bhuiyan, A.; Liu, D.; Khan, A.; Saifullah, A.; Guan, N. Energy-Efficient Real-Time Scheduling of DAGs on Clustered Multi-Core Platforms. In Proceedings of the 2019 IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Montreal, QC, Canada, 16–18 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 156–168.

- Sahni, Y.; Cao, J.; Yang, L.; Ji, Y. Multihop Offloading of Multiple DAG Tasks in Collaborative Edge Computing. IEEE Internet Things J. 2021, 8, 4893–4905.

- Zhang, X.; Li, R.; Zhao, H. A Parallel Consensus Mechanism Using PBFT Based on DAG-Lattice Structure in the Internet of Vehicles. IEEE Internet Things J. 2023, 10, 5418–5433.

- Xiao, Q.z.; Zhong, J.; Feng, L.; Luo, L.; Lv, J. A Cooperative Coevolution Hyper-Heuristic Framework for Workflow Scheduling Problem. IEEE Trans. Serv. Comput. 2022, 15, 150–163.

| Study | Objective | Methodology | Key Findings | Research Gap/Contribution of This Work |
|---|---|---|---|---|
| Xun et al. [33] | VEC architecture | Cloud–edge–vehicle collaboration | Vehicle behavior evaluation using cloud-trained models | Does not address task dependencies or dynamic offloading based on mobility. |
| Liu et al. [36] | Cooperative task computing | Multi-resource cooperative framework | Effective task allocation, but limited to static resources | No dynamic adaptation based on vehicle movement and network conditions. |
| Dai et al. [34] | Task allocation with MEC | Asynchronous deep reinforcement learning | Minimizes task delay via cloud–edge collaboration | Focuses only on delay minimization, whereas our approach also optimizes energy and QoS. |
| Han et al. [39] | Reduce latency using edge computing | Distributed task offloading to neighboring vehicles | Reduced latency by leveraging nearby vehicle resources | Does not address energy consumption or QoS optimization. |
| Zhao et al. [52] | Task offloading in dynamic networks | Stackelberg game-based model | Improved utility for vehicles and controllers | Does not incorporate task dependencies or adjust based on urgency. |
| Ma et al. [41] | Multi-vehicle task offloading | Lyapunov optimization for task offloading | Delays reduced via cooperative task offloading between vehicles | Does not handle task dependencies or energy efficiency. |
| Feng et al. [40] | Reverse offloading strategy | Reverse offloading decision optimization | Optimizes reverse offloading to reduce VEC load | Neglects task dependency modeling and its impact on QoS and latency. |
| Qin et al. [42] | Resource allocation in VEC | Cooperative vehicle-side resource allocation | Efficient offloading and resource sharing | Does not model task dependencies or optimize QoS. |
| Sun et al. [54] | GNN for task offloading | GNNs and reinforcement learning | Encodes task dependencies for offloading decisions | Lacks QoS optimization and dynamic adaptation to vehicle conditions. |
| Yan et al. [55] | Task offloading using actor–critic and GNNs | Actor–critic with GNN task modeling | Enhances task offloading efficiency using GNNs | Focuses on static task offloading, whereas our approach adapts dynamically. |
| Zhang et al. [56] | Multi-agent Q-learning for offloading | Q-learning with greedy scheduling | Improved QoS by optimizing task offloading decisions | Does not model task dependencies or handle dynamic adjustments. |
| Our Work | Dependency-aware task offloading optimization | Proximal Policy Optimization (PPO) with dependency modeling | Reduces task latency and energy, optimizing QoS in dynamic environments | Integrates dependency-aware task offloading with reinforcement learning for VEC, optimizing latency, energy, and QoS. |

| Symbol | Description |
|---|---|
| | Set of RSUs (roadside units) |
| | Set of vehicles |
| | The e-th subtask of the m-th task from vehicle i |
| | Data volume of the subtask |
| | Computational complexity of the subtask |
| | Maximum tolerable latency of the task |
| / | Uplink and downlink transmission rates between vehicle and MEC server (V2M/M2V) |
| / | Uplink and downlink transmission rates between vehicle and cloud (V2C/C2V) |
| / | Computing power cost of the vehicle terminal and MEC server |
| / | Transmission power cost of the MEC uplink and downlink channels |
| / | Transmission power cost of the cloud uplink and downlink channels |
| // | Computing capability of the vehicle, MEC server, and cloud |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Training vehicles | 500 | Test vehicles | 100 |
| V2M_up | 9 Mbps | V2M_down | 9 Mbps |
| V2C_up | 6 Mbps | V2C_down | 6 Mbps |
| Subtask data | [10, 50] KB | Subtask complexity C | [100, 500] Gcycles |
| Subtask output | [10, 50] KB | Vehicle terminal | 1 GHz |
| Edge server | 10 GHz | Cloud server | 80 GHz |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Neural network layers | 2 | Hidden layer units | 256 |
| Clip range | 0.2 | Learning rate | |
| GAE factor | 0.95 | Discount factor | 0.99 |
| Value loss coefficient | 0.5 | Entropy coefficient | 0.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shi, W.; Chen, B. Joint Scheduling and Placement for Vehicular Intelligent Applications Under QoS Constraints: A PPO-Based Precedence-Preserving Approach. Mathematics 2025, 13, 3130. https://doi.org/10.3390/math13193130
