Abstract

This study investigates the evolution of online public opinion during the COVID-19 pandemic by integrating topic mining with sentiment analysis. To overcome the limitations of traditional short-text models and improve the accuracy of sentiment detection, we propose a novel hybrid framework that combines a GloVe-enhanced Biterm Topic Model (BTM) for semantic-aware topic clustering with a RoBERTa-TextCNN architecture for deep, context-rich sentiment classification. The framework is specifically designed to capture both the global semantic relationships of words and the dynamic contextual nuances of social media discourse. Using a large-scale corpus of more than 550,000 Weibo posts, we conducted comprehensive experiments to evaluate the model’s effectiveness. The proposed approach achieved an accuracy of 92.45%, significantly outperforming a transformer-based baseline representative of advanced contextual embedding models across multiple evaluation metrics, including precision, recall, F1-score, and AUC. These results confirm the robustness and stability of the hybrid design and demonstrate its advantages in balancing precision and recall. Beyond methodological validation, the empirical analysis provides important insights into the dynamics of online public discourse. User engagement is highest for topics directly tied to daily life, with discussions about quarantine conditions alone accounting for 42.6% of total discourse. Moreover, public sentiment proves to be highly volatile and event-driven; for example, the announcement of Wuhan’s reopening produced an 11% surge in positive sentiment, reflecting a collective emotional uplift at a major turning point of the pandemic. Taken together, these findings demonstrate that online discourse evolves in close connection with both societal conditions and government interventions.
The proposed topic–sentiment analysis framework not only advances methodological research in text mining and sentiment analysis but also has the potential to serve as a practical tool for real-time monitoring of online opinion. By capturing fluctuations in public sentiment and identifying emerging themes, this study aims to provide insights that could inform policymaking, suggesting strategies to guide emotional contagion, strengthen crisis communication, and promote constructive public debate during health emergencies.

Keywords:

major public health emergencies; online public opinion; topic model; deep learning; public opinion governance

MSC: 68T01

1. Introduction

The COVID-19 pandemic was the most widespread, fastest-spreading, and most prolonged major public health emergency since the founding of the People’s Republic of China [1]. It not only seriously threatened the physical health and lives of the population but also posed multifaceted challenges to social governance through the public discourse it generated. Public opinion and emotions expressed in online comments can easily stir social sentiment and influence the trajectory of events, sometimes with serious social consequences [2]. Therefore, following the outbreak of a major public health emergency such as COVID-19, it is essential to adopt timely and effective prevention strategies while also attending to the guidance of public opinion.

Enhancing the supervision and guidance of public opinion, especially online opinion, is a vital part of emergency management and a key step in ensuring the orderly progress of pandemic prevention and control. To supervise and guide online discourse effectively, it is necessary to collect user comments from online platforms in a timely manner, mine and analyze them, uncover the evolution patterns of events, and track fluctuations in public sentiment. Such real-time understanding enables more effective public opinion management. Moreover, recent advances in medical AI show that Transformer-based architectures can achieve superior performance in high-stakes tasks such as cancer detection, offering methodological inspiration for applying advanced deep learning frameworks to the monitoring of online public opinion [3].

In response, this paper integrates deep learning methods into traditional topic mining and sentiment analysis techniques, combining the analysis of hot topics and emotional content during major public health emergencies. This integration addresses the limitations of traditional methods, improves the ability to extract hot topics, and enhances sentiment analysis accuracy, thereby supporting the governance of online public opinion during public health crises.

2. Related Work

With the rise of the digital network era, online public opinion has become an important component of social discourse, and its influence continues to grow. In the context of major pandemic outbreaks, guiding and managing online public opinion is not only a crucial aspect of pandemic prevention, but also directly impacts public confidence and social stability. Faced with increasingly complex online discourse, more scholars have turned their attention to researching and analyzing public opinion online, focusing on topic mining and sentiment analysis [4].

Topic mining of online public opinion usually employs unsupervised learning to cluster latent semantic information in text datasets. This approach helps identify the central themes of major online events and understand the nature and progression of public discourse [5]. Many researchers in China and abroad currently use traditional or improved topic models, such as Latent Dirichlet Allocation (LDA) [6] and the Biterm Topic Model (BTM) [7], to recognize public opinion themes. For instance, Wang Yamin et al. combined a weighted BTM with an improved TF-IDF algorithm for feature extraction and similarity measurement, mitigating the high dimensionality and sparsity of short texts and thereby improving the quality of hot topic identification [8]. Zeng Ming et al. applied an improved LLDA-WK model to analyze the evolution of public opinion themes and the communication patterns among different actors in online discourse [9]. Wu Peng et al. integrated BTM with association rules to mine hot topics in Weibo posts and user comments, gaining deeper insight into the public’s true attitudes and needs [10].

To further improve clustering accuracy, some researchers have sought to address a key limitation of traditional text clustering methods: their failure to consider latent semantic relationships. New models have been proposed that incorporate semantic information through deep learning. For example, Randhawa et al. introduced Vec2Topic, which uses high-dimensional distributed word embeddings to identify important topics within a corpus [11]. Jiang et al. developed a text representation model based on topic-enhanced CBOW, integrating deep neural networks, topic information, and word order to achieve strong classification results [12]. Peng Min et al. proposed a probabilistic topic model with bidirectional LSTM-based semantic reinforcement, which strengthened the relationships between semantic features [13]. Outside text mining, Habchi et al. showed that Transformers can accurately capture long-distance dependencies and global contextual relationships in large cancer-related datasets [3].

Sentiment analysis, also known as opinion mining, refers to the process of analyzing, processing, summarizing, and reasoning about subjective texts with emotional connotations [14]. During the COVID-19 pandemic, many scholars studied online sentiment and produced valuable findings. For example, Zhang Huiming et al. combined the BosonNLP sentiment dictionary with an emoji sentiment dictionary to explore emotional trends in online public opinion and identify hot spots of negative sentiment [15]. Zhang Chen et al. used the SnowNLP sentiment tool to analyze comments under People’s Daily’s daily COVID-19 reports and found that user emotion moved from anxiety and fear to calm confidence and then to renewed tension and concern [16]. Lu Heng also employed SnowNLP to examine the emotional trajectory of online discourse during major pandemics, revealing sentiment evolution through emotional attributes [17].

As deep learning has matured, such techniques have increasingly replaced traditional sentiment dictionaries and machine learning methods [18,19]. Han Keke et al. used a BiLSTM model to analyze early-stage Weibo posts and found that Chinese netizens generally showed a positive attitude toward the pandemic, although emotional states varied significantly across regions [20]. Pan Wenhao et al. applied CNN, LSTM, and BiLSTM models to analyze the emotional tendencies of COVID-related Weibo posts and observed changes in user sentiment across different periods [21]. Zhuang Muni et al. further improved BERT’s pretraining tasks and achieved more accurate sentiment classification while simulating emotional changes in online opinion during the pandemic [22].

In summary, both domestic and international scholars have conducted extensive research on the evolution of online public opinion using topic mining and sentiment analysis. While these studies provide a strong foundation, few have deeply integrated topic and sentiment analysis from a multi-angle perspective to explore the true dynamics of online discourse. Thus, this paper leverages deep learning algorithms to optimize traditional models and constructs a topic–sentiment analysis framework that is specifically tailored to the characteristics of online discourse during the emergency response phase of the COVID-19 pandemic. This framework aims to uncover latent emotional and thematic information, offering practical insights for targeted public opinion guidance and governance.

3. Methodology

3.1. Deep Learning-Based Topic–Sentiment Analysis Model

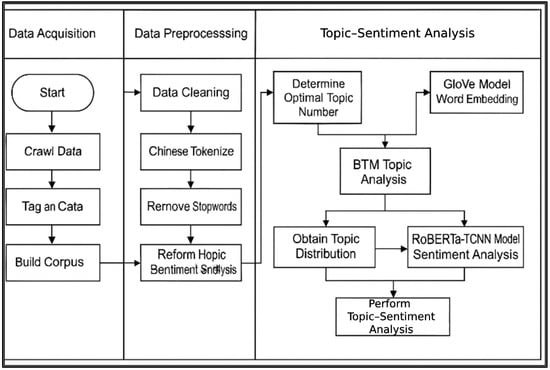

Traditional topic models lack the ability to understand the overall semantics of a text and tend to focus more on the lexical meanings of individual words. This limitation hampers their effectiveness in extracting key topics and performing sentiment analysis in the context of concise and semantically dynamic Chinese social media discourse. In contrast, deep learning models based on word embeddings can partially overcome these shortcomings, improving the robustness and effectiveness of the models, and making them more suitable for online public opinion analysis. Therefore, this paper proposes a novel Weibo topic–sentiment analysis model that integrates the G-BTM hotspot topic detection model and an improved RoBERTa-TextCNN sentiment analysis model. The GloVe algorithm is used to extract semantic information, thereby enhancing the BTM’s clustering capability on short Weibo texts [23]. Meanwhile, the RoBERTa model is optimized and combined with the TextCNN model to achieve deep extraction of global features [24], which further improves both the classification of hot topics and the semantic sentiment analysis of Weibo posts. This combined model contributes to more accurate guidance and governance of online public opinion. The architecture of the proposed topic–sentiment analysis model is illustrated in Figure 1.

Figure 1.

Workflow of the proposed hybrid topic–sentiment analysis framework. The model operates in two parallel streams: (1) the Topic Modeling Branch (left), where the raw corpus is processed by the GloVe-enhanced Biterm Topic Model (G-BTM) to assign topic labels and extract key themes; and (2) the Sentiment Analysis Branch (right), where the same raw text is fed into the RoBERTa-TCNN model for sentiment classification. The final integration of topic and sentiment results occurs statistically during empirical analysis, not within the model architecture itself.

3.2. Optimized Model Methodology

3.2.1. G-BTM Topic Model

GloVe is a word embedding model developed as an alternative to, and improvement on, Word2Vec [25]. Whereas Word2Vec learns primarily from local context windows and therefore captures mostly local semantic information, GloVe combines the strengths of global matrix factorization methods such as Latent Semantic Analysis (LSA), which exploit corpus-wide co-occurrence statistics, with those of local context-window learning. This allows it to capture richer semantic and syntactic information and to better represent relationships between semantically similar words.
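To make "global co-occurrence statistics" concrete, the sketch below counts distance-weighted co-occurrences X_ij within a symmetric context window, where a word pair d tokens apart contributes 1/d as in the original GloVe formulation, and evaluates GloVe's weighting function f(x) = (x/x_max)^α for x < x_max (else 1) with the published defaults x_max = 100 and α = 0.75. GloVe then fits word vectors, context vectors, and biases by minimizing Σ f(X_ij)(w_iᵀw̃_j + b_i + b̃_j − log X_ij)²; that optimization is omitted. The window size, toy posts, and function names are illustrative assumptions, not the study's settings.

```python
from collections import defaultdict

def cooccurrence_counts(docs, window=2):
    """Distance-weighted co-occurrence counts X[(w_i, w_j)] within a symmetric window."""
    counts = defaultdict(float)
    for tokens in docs:
        for i, center in enumerate(tokens):
            # Scan up to `window` tokens to the right and record the pair in both directions.
            for d in range(1, window + 1):
                j = i + d
                if j >= len(tokens):
                    break
                context = tokens[j]
                counts[(center, context)] += 1.0 / d  # pairs d tokens apart contribute 1/d
                counts[(context, center)] += 1.0 / d
    return dict(counts)

def glove_weight(x, x_max=100.0, alpha=0.75):
    """GloVe weighting f(x): down-weights rare pairs and caps frequent ones at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0

# Toy, already-segmented posts (illustrative only).
docs = [["wuhan", "lockdown", "lifted"], ["wuhan", "lockdown", "news"]]
X = cooccurrence_counts(docs, window=2)
print(X[("wuhan", "lockdown")], X[("wuhan", "lifted")])
print(glove_weight(X[("wuhan", "lockdown")]))
```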

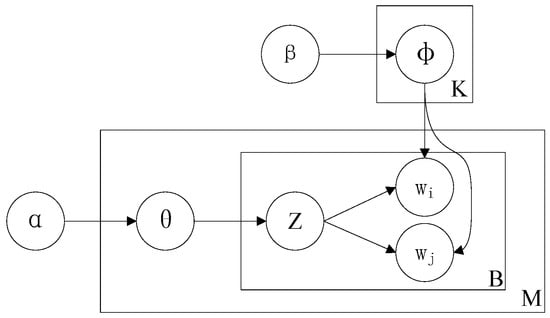

In this study, the GloVe model is trained on the pre-processed Weibo corpus to generate word embeddings that reflect global semantics. These embeddings are used to improve the efficiency and accuracy of the Biterm Topic Model (BTM). The BTM is a variant of the Latent Dirichlet Allocation (LDA) model designed specifically to address LDA’s shortcomings on short texts. By modeling word co-occurrence pairs (biterms) pooled across the entire corpus rather than word counts within individual documents, the BTM alleviates the data sparsity commonly encountered in short-text topic modeling. A schematic diagram of the BTM is shown in Figure 2.

Figure 2.

The core idea of the Biterm Topic Model (BTM) for short texts. Unlike traditional models that analyze single words, BTM generates topics by modeling the co-occurrence of word pairs (biterms, e.g., “lockdown” and “Wuhan”) across the entire corpus. This approach effectively overcomes data sparsity, a common challenge in short-text topic modeling.
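The biterm construction in Figure 2 can be sketched as follows. This is a minimal illustration assuming posts are already segmented into tokens; the function names are hypothetical, and BTM's Gibbs sampling over the pooled biterms is not shown.

```python
from itertools import combinations

def extract_biterms(tokens):
    """All unordered word pairs (biterms) from distinct positions in one short text."""
    # Sort each pair so ("a", "b") and ("b", "a") are the same biterm type.
    return [tuple(sorted(pair)) for pair in combinations(tokens, 2)]

def corpus_biterms(docs):
    """Pool biterms from every post into one corpus-level collection, as BTM does."""
    pooled = []
    for tokens in docs:
        pooled.extend(extract_biterms(tokens))
    return pooled

docs = [["lockdown", "wuhan", "reopening"], ["wuhan", "lockdown"]]
biterms = corpus_biterms(docs)
print(len(biterms), biterms.count(("lockdown", "wuhan")))
```

Pooling is what counters sparsity: a pair such as ("lockdown", "wuhan") gathers evidence from every post in which it appears, even though each individual post is only a few words long.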

After obtaining the corpus vectorized using the GloVe model, the optimal number of topics is determined through topic perplexity and topic coherence metrics [26]. The Biterm Topic Model (BTM) is then applied to train the corpus, enabling the extraction of latent thematic structures from Weibo posts. Ultimately, this process identifies key public opinion topics on Weibo related to the COVID-19 pandemic.

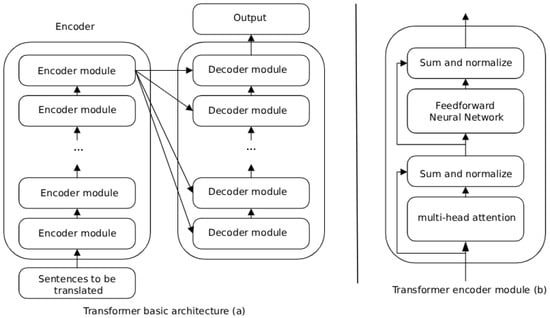

3.2.2. Introduction to Transformer-Based Models

To address the limitations of traditional models in capturing deep semantic context and long-range dependencies within text, this study leverages the Transformer architecture. Originally proposed by Vaswani et al. [27], the Transformer model utilizes a self-attention mechanism to compute representations of each word in relation to all other words in a sentence, thereby effectively capturing global contextual information. This capability overcomes the constraints of earlier models like RNNs and LSTMs, which often struggle with long-distance dependencies and computational inefficiency.
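The self-attention computation described above can be sketched in a few lines of NumPy. This is a single-head, unmasked illustration with random projection matrices (assumptions for the example); real models such as BERT and RoBERTa add multiple heads, learned weights, residual connections, and layer normalization.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V: every token attends to every other token."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                 # (n, n) pairwise relevance scores
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row is a probability distribution
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 4, 8
X = rng.normal(size=(n_tokens, d_model))           # stand-in token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
out, attn = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, attn.shape)
```

Because each output row is a weighted mix of all value vectors, a token's representation depends on the whole sentence, which is what makes the resulting embeddings context-dependent.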

In contrast to word embedding models such as GloVe, which generate static vector representations for each word, Transformer-based models like BERT (Bidirectional Encoder Representations from Transformers) [27] and RoBERTa [24] produce dynamic, context-aware embeddings. This means that the same word can have different vector representations depending on its surrounding context, significantly enhancing the model’s ability to understand polysemy and complex semantic nuances. Such a characteristic is particularly advantageous for analyzing informal and succinct social media language, where context is crucial for accurate interpretation.

Given their superior performance across a wide range of natural language processing tasks, Transformer-based models have become a cornerstone of modern sentiment analysis and text representation. In this study, we adopt RoBERTa, an optimized version of BERT with improved pre-training procedures, as the foundational architecture of our sentiment analysis module. Its robust contextual understanding provides a strong basis for the subsequent deep feature extraction and classification steps. The choice to integrate RoBERTa with convolutional components is informed by the documented strengths of Transformer-based models in capturing long-range dependencies and complex semantic patterns, as demonstrated in critical applications such as cancer detection [3]. The self-attention mechanism at the core of these models is illustrated in Figure 3.

Figure 3.

The Transformer architecture’s core self-attention mechanism (based on [27]). The diagram illustrates how the model computes representations for each word by attending to all other words in the sequence, thereby capturing global contextual dependencies. The multi-head attention blocks allow the model to jointly attend to information from different representation subspaces, which is crucial for understanding complex semantic nuances in text.

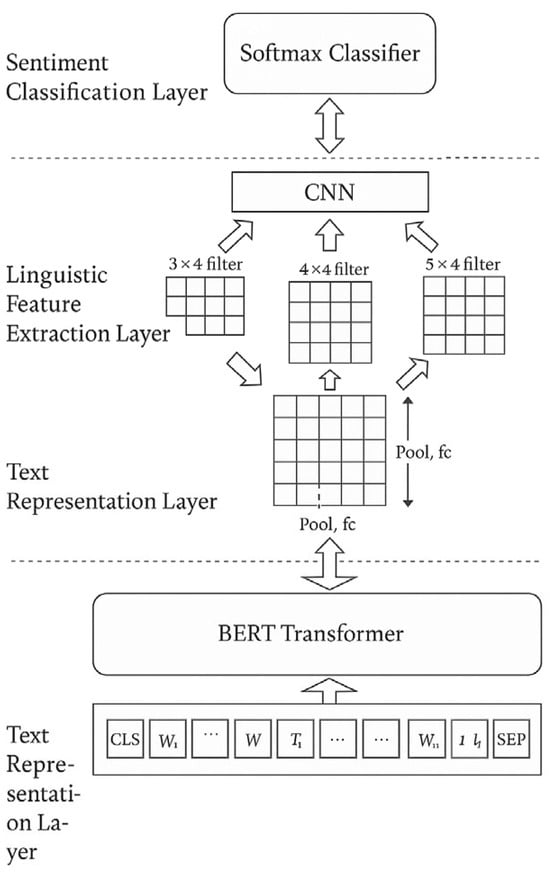

3.2.3. RoBERTa-TextCNN Sentiment Analysis Model

During sentiment analysis, this study links each Weibo post’s predicted sentiment to the topic assigned to it, establishing a connection between the emotional content of the text and its thematic context. By jointly analyzing sentiment and topic labels, the framework aims to identify the emotional polarity of online discourse with greater granularity and accuracy. This approach enables both fine-grained sentiment analysis and a deeper understanding of emotional expressions embedded in complex textual structures.

To analyze sentiment in Weibo posts that have already undergone topic classification, we adopt the RoBERTa model, an enhanced version of BERT [27] that improves upon BERT’s pre-training methodology and expands the pre-training corpus, thereby achieving stronger performance in natural language understanding tasks. Building on RoBERTa’s sentence-level semantic feature extraction, we integrate the TextCNN model to perform a secondary feature extraction step. This allows for the capture of deeper semantic sentiment signals, enhancing the model’s overall sentiment classification performance. The overall architecture of the improved RoBERTa-TextCNN sentiment analysis model is shown in Figure 4.

Figure 4.

Detailed architecture of the RoBERTa-TextCNN (RoBERTa-TCNN) sentiment classifier. The input text is first tokenized and processed by the RoBERTa encoder to generate context-rich embeddings for each token. These embeddings are then treated as a feature matrix and fed into a TextCNN module. TextCNN uses multiple convolutional filters of different sizes to extract salient local features from the sequence of embeddings. These features are max-pooled, concatenated, and passed through a final fully connected layer to produce the sentiment probability distribution over the six classes: joy, sadness, fear, anger, surprise, neutral.
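The TextCNN stage of Figure 4 can be sketched in NumPy as follows. The RoBERTa encoder is replaced by a random placeholder matrix `H`, and all weights are random and untrained, so the printed prediction carries no meaning. The window sizes (2, 3, 4), the filter count, and the function name are illustrative assumptions, since these hyperparameters are not reported here; in practice `H` would hold the fine-tuned RoBERTa model's last-layer token outputs and the weights would be learned during fine-tuning.

```python
import numpy as np

LABELS = ["joy", "sadness", "fear", "anger", "surprise", "neutral"]

def textcnn_head(H, filters, W_out, b_out):
    """Classify one post from its (seq_len, hidden) matrix H of contextual token embeddings.

    filters maps a window size k to (W, b), with W of shape (n_filters, k, hidden)
    and b of shape (n_filters,).
    """
    pooled = []
    for k, (W, b) in sorted(filters.items()):
        # Convolution: one dot product per filter for every k-token window.
        feats = np.stack([
            np.tensordot(W, H[i:i + k], axes=([1, 2], [0, 1])) + b
            for i in range(H.shape[0] - k + 1)
        ])                                    # (n_windows, n_filters)
        feats = np.maximum(feats, 0.0)        # ReLU
        pooled.append(feats.max(axis=0))      # max-pool over positions -> (n_filters,)
    z = np.concatenate(pooled)                # concatenate features from all window sizes
    logits = W_out @ z + b_out                # fully connected layer
    exp = np.exp(logits - logits.max())       # numerically stable softmax
    return exp / exp.sum()

rng = np.random.default_rng(42)
seq_len, hidden, n_filters = 16, 32, 4
H = rng.normal(size=(seq_len, hidden))        # placeholder for RoBERTa token outputs
filters = {k: (0.1 * rng.normal(size=(n_filters, k, hidden)), np.zeros(n_filters))
           for k in (2, 3, 4)}
W_out = 0.1 * rng.normal(size=(len(LABELS), n_filters * len(filters)))
b_out = np.zeros(len(LABELS))
probs = textcnn_head(H, filters, W_out, b_out)
print(LABELS[int(probs.argmax())], probs.round(3))
```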

Integration of Topic Information: It is critical to clarify that the integration of topic information occurs at the empirical analysis stage rather than within the neural network architecture itself. The sentiment analysis module (RoBERTa-TCNN) processes the raw text of each Weibo post independently. It does not directly take the topic labels or embeddings from the G-BTM module as input features. Instead, after both topic clustering and sentiment classification are completed for the entire corpus, the results are merged statistically. Specifically, each post is tagged with both a topic label (from G-BTM) and a sentiment label (from RoBERTa-TCNN). This allows for a macro-level analysis of the distribution of sentiments within each topic, which is the core of our empirical findings in Section 4.
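Because the two branches are joined only after prediction, the merge described above amounts to a grouped count. A minimal sketch, with hypothetical toy labels standing in for the G-BTM topic IDs and RoBERTa-TCNN sentiment predictions:

```python
from collections import Counter, defaultdict

def sentiment_by_topic(records):
    """records: iterable of (topic_label, sentiment_label) pairs, one per post."""
    counts = defaultdict(Counter)
    for topic, sentiment in records:
        counts[topic][sentiment] += 1
    # Convert raw counts into within-topic sentiment proportions.
    return {
        topic: {s: n / sum(c.values()) for s, n in c.items()}
        for topic, c in counts.items()
    }

# Hypothetical per-post labels.
topics = [7, 7, 7, 2, 2]
sentiments = ["neutral", "joy", "neutral", "fear", "neutral"]
dist = sentiment_by_topic(zip(topics, sentiments))
print(dist)
```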

To train and evaluate the sentiment analysis model, a labeled dataset was required. We randomly sampled 10,000 comments from the total corpus of over 550,000 posts for manual annotation. Three trained annotators familiar with social media discourse independently labeled each sample into one of six sentiment categories: joy, sadness, fear, anger, surprise, and neutral. Inter-annotator agreement, measured by Cohen’s kappa, reached 0.82, indicating high agreement. This annotated dataset was used to fine-tune the RoBERTa-TextCNN model and to evaluate its classification performance.

The sentiment analysis then proceeds in four steps. First, RoBERTa is fine-tuned on the annotated six-class dataset to improve its classification accuracy on Weibo texts. Second, the pre-processed corpus is passed through the fine-tuned RoBERTa encoder to obtain contextual semantic representations of each post. Third, these representations are fed into the TextCNN network, which extracts deeper semantic features and predicts the sentiment class. Finally, the classified posts are summarized by topic to analyze the emotional trends associated with each topic.
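For reference, Cohen's κ compares the observed agreement p_o with the agreement p_e expected from each annotator's label frequencies, κ = (p_o − p_e)/(1 − p_e). Cohen's κ is defined for two annotators, and the text does not state how agreement among the three annotators was aggregated, so the sketch below averages pairwise κ as one common option (an assumption). The labels are hypothetical.

```python
from collections import Counter
from itertools import combinations

def cohen_kappa(a, b):
    """Cohen's kappa between two annotators' label sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n      # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[k] * cb[k] for k in ca) / (n * n)    # agreement expected by chance
    return (p_o - p_e) / (1 - p_e)

def mean_pairwise_kappa(annotations):
    """Average Cohen's kappa over all annotator pairs (one common multi-rater summary)."""
    pairs = list(combinations(annotations, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)

# Hypothetical labels from three annotators on four posts.
r1 = ["joy", "fear", "neutral", "neutral"]
r2 = ["joy", "fear", "neutral", "joy"]
r3 = ["joy", "fear", "neutral", "neutral"]
print(round(cohen_kappa(r1, r2), 3), round(mean_pairwise_kappa([r1, r2, r3]), 3))
```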

Beyond overall sentiment trends, it is also important to note that Weibo metadata contain geographic information. Different regions in China experienced varying levels of exposure to COVID-19, and local policies differed in lockdown intensity, reopening pace, and health communication strategy. These regional differences shaped local discourse and contributed to distinct emotional patterns in public opinion. For example, areas under stricter lockdowns displayed higher proportions of negative sentiment, while regions that reopened earlier tended to show a more positive emotional trajectory.

Accordingly, policy recommendations should be more nuanced and closely tied to these empirical findings. Instead of adopting one-size-fits-all measures, authorities can tailor their communication strategies based on the specific emotional dynamics of each region. For instance, in areas where negative sentiment spikes due to prolonged restrictions, targeted information campaigns and empathetic communication could help alleviate public anxiety. Conversely, in regions where positive sentiment is rising, amplifying success stories and highlighting effective measures can further consolidate public confidence. Such region-sensitive governance strategies would ensure that online sentiment monitoring translates more directly into actionable and adaptive policy responses.

3.2.4. Comparative Training and Model Evaluation

To evaluate the effectiveness of our proposed hybrid architecture, we selected a strong baseline representative of standard transformer-based approaches for this task: a fine-tuned RoBERTa model. RoBERTa shares its core transformer architecture with BERT, which underlies more complex models such as BERTopic and BERT-LDA. By outperforming this baseline, we show that our integration of global semantic features (GloVe-BTM) provides a significant advantage over relying solely on contextualized embeddings.

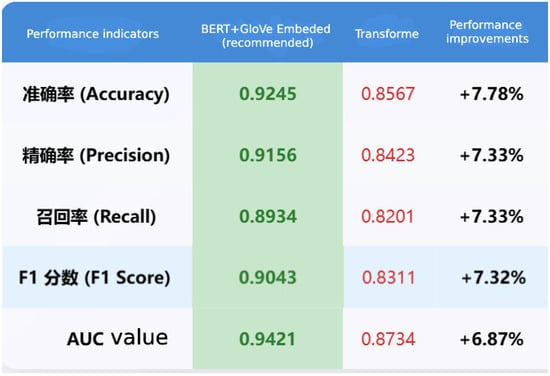

A comparative experiment was conducted to evaluate the proposed hybrid model (BERT + GloVe Embedding) against a baseline model based on the standard Transformer architecture. As shown in Figure 5, the proposed model consistently outperforms the Transformer baseline across all evaluation metrics.

Figure 5.

Performance comparison between the proposed BERT + GloVe Embedding model and the Transformer baseline model across evaluation metrics.

Specifically, the proposed model achieved an accuracy of 92.45%, an improvement of 6.78 percentage points over the Transformer model (85.67%), indicating substantially higher overall prediction correctness. For fine-grained classification performance, the proposed model’s precision (91.56%) and recall (89.34%) both exceeded those of the baseline, with a reported improvement of 7.33%. This result indicates that the model not only identifies positive cases more accurately but also covers a larger share of relevant instances. The F1-score, the harmonic mean of precision and recall, also improved by 7.32% (proposed: 0.9043), further evidencing a robust balance between precision and recall.

Furthermore, the AUC of the proposed model reached 0.9421, markedly higher than the Transformer’s 0.8734, an improvement of 6.87 percentage points. This indicates that the hybrid model has a stronger ability to distinguish between categories.
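The margins above can be recomputed from the reported figures. In the sketch below, the accuracy and AUC gaps are absolute differences in percentage points (the relative AUC gain is about 7.9%). The F1 line takes the harmonic mean of the reported precision and recall; it comes out close to the reported 0.9043 but need not match it exactly if the reported metrics are macro-averaged over the six sentiment classes, which is an assumption since the averaging scheme is not stated.

```python
# Figures reported in the comparison above, as proportions.
proposed = {"accuracy": 0.9245, "precision": 0.9156, "recall": 0.8934, "auc": 0.9421}
baseline = {"accuracy": 0.8567, "auc": 0.8734}

def pp_gap(metric):
    """Absolute difference between the two models, in percentage points."""
    return round((proposed[metric] - baseline[metric]) * 100, 2)

acc_gap = pp_gap("accuracy")
auc_gap = pp_gap("auc")
auc_relative = round((proposed["auc"] / baseline["auc"] - 1) * 100, 2)  # relative gain in %

p, r = proposed["precision"], proposed["recall"]
f1_from_pr = 2 * p * r / (p + r)  # harmonic mean of the aggregate precision and recall

print(acc_gap, auc_gap, auc_relative, round(f1_from_pr, 4))
```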

In conclusion, the proposed BERT and GloVe embedding hybrid model, which integrates BERT’s powerful contextual semantic understanding with the stable semantic representations of GloVe’s global word vectors, effectively mitigates the limitations of a standalone Transformer model for this specific task. Its superior and consistent performance across all key metrics—accuracy, precision, recall, F1-score, and AUC—validates its effectiveness and advanced capabilities for online public opinion analysis (Figure 5).

These results indicate that by fusing BERT’s dynamic contextual encoding with GloVe’s global static word vectors, our model can capture deep semantic and sentiment features in text more comprehensively and stably. This hybrid strategy combines the strengths of both representation paradigms: it leverages BERT’s capacity for modeling polysemy and complex contexts while incorporating the lexical prior knowledge about semantic relationships that GloVe learns from large-scale corpora. Consequently, it achieves more accurate and robust performance on complex short social media texts than the standard Transformer model. This provides both quantitative evidence and a more reliable technical foundation for building practical public opinion governance systems.

4. Empirical Study

4.1. Data Source

To obtain a sufficient volume of Weibo corpus data, this study employed a Python 3.10-based web crawler to collect relevant posts from the WAP version of Sina Weibo. To explore key points in public discourse and the evolution of sentiment during the COVID-19 pandemic, we used keywords such as “pandemic prevention,” “Wuhan reopening,” and other COVID-19-related terms to guide the data collection. The data collection period spans from 21 February 2020 to 28 April 2020. The collected dataset includes around 80,000 Weibo posts and contains key metadata fields such as posting time, location, username, user ID, content, and number of comments.

After obtaining the corpus, data preprocessing was conducted to prepare for vectorization and model training. The preprocessing steps included data cleaning, stop-word removal, high/low-frequency word filtering, and Chinese word segmentation. First, meaningless content such as blank posts, pure symbols or images, and advertisements were removed. Then, a customized stop-word list was built based on the Baidu stop-word dictionary, supplemented with colloquial terms from the Weibo platform and phrases frequently used during the pandemic.

For word segmentation, we utilized the Jieba tokenizer in search engine mode and incorporated domain-specific terms related to the pandemic into the custom dictionary. The newly constructed stop-word list was also used during segmentation. Finally, we performed a secondary cleaning step to filter out empty, irrelevant, or advertising content, resulting in a final cleaned corpus of 78,154 valid Weibo entries.
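The cleaning and filtering steps above can be sketched in plain Python. The sketch assumes posts have already been segmented (in the described setup, by Jieba in search-engine mode, e.g. `jieba.lcut_for_search`, with the custom dictionary loaded via `jieba.load_userdict`). The regular expressions, the document-frequency thresholds `min_df` and `max_df_ratio`, and the function names are hypothetical choices for illustration, not the study's actual settings.

```python
import re
from collections import Counter

NOISE = re.compile(r"https?://\S+|@\S+")              # URLs and @mentions
MEANINGFUL = re.compile(r"[0-9A-Za-z\u4e00-\u9fff]")  # digits, Latin letters, CJK ideographs

def clean_post(text):
    """Remove URLs/@mentions; return None for posts that are empty or symbols only."""
    text = NOISE.sub(" ", text).strip()
    return text if MEANINGFUL.search(text) else None

def filter_tokens(segmented_docs, stopwords, min_df=2, max_df_ratio=0.5):
    """Drop stop words, then words that are too rare or too common by document frequency."""
    docs = [[t for t in doc if t.strip() and t not in stopwords] for doc in segmented_docs]
    df = Counter(w for doc in docs for w in set(doc))
    n_docs = len(docs)
    keep = {w for w, c in df.items() if c >= min_df and c / n_docs <= max_df_ratio}
    docs = [[t for t in doc if t in keep] for doc in docs]
    return [doc for doc in docs if doc]                # secondary cleaning: drop emptied posts

# Toy, already-segmented posts.
posts = [["武汉", "解封", "了"], ["武汉", "复工", "了"], ["疫苗", "进展", "了"], ["武汉", "疫苗"]]
print(filter_tokens(posts, stopwords={"了"}, min_df=2, max_df_ratio=0.8))
print(clean_post("@某用户 武汉解封了 http://t.cn/abc"))
```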

The data were collected from publicly accessible pages of Sina Weibo through automated scripts, in compliance with Chinese legal and ethical standards. Only publicly available text content was retrieved, no personal identifiers or sensitive information were retained, and the data were used exclusively for academic research purposes, without infringing on user privacy or rights. Although emojis and images were excluded during preprocessing, potentially omitting certain affective signals, the Weibo corpus remains a timely, rich, and reliable foundation for studying the evolution of online public sentiment during major public health emergencies.

4.2. Clustering of Hot Public Opinion Topics

4.2.1. Preprocessing for Topic Modeling

In the topic classification stage, we employed an improved Biterm Topic Model (BTM), which addresses the data sparsity problem commonly encountered in short-text applications of LDA. The BTM is better suited to the linguistic characteristics of online social platforms. Before applying the BTM, it is necessary to determine the optimal number of topics within the corpus. This process consists of three main steps: calculating perplexity, computing topic coherence, and visualizing topic distributions.

First, the perplexity values for different numbers of topics are calculated to evaluate the generalizability of the model’s predictions. Perplexity assesses the likelihood distribution of the model and quantifies how well the model predicts unseen data. The lower the perplexity, the better the model’s performance in representing the data. The formula for perplexity is as follows:
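Assuming the standard held-out perplexity used for LDA-family topic models, where $\mathbf{w}_d$ denotes the words of document $d$ and $N_d$ its length, the formula reads as follows (how $p(\mathbf{w}_d)$ is evaluated under BTM is not specified here):

```latex
\mathrm{Perplexity}(D) = \exp\left( - \frac{\sum_{d \in D} \log p(\mathbf{w}_d)}{\sum_{d \in D} N_d} \right)
```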

Next, the appropriate number of topics is determined by calculating topic coherence, which measures whether the semantic meaning of the top keywords for each topic is highly consistent and easily interpretable. Topic coherence evaluates the degree of semantic similarity between high-probability words within a topic based on their co-occurrence patterns. The formal expression for topic coherence is as follows:
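The symbols defined below (the top-$M$ word set $W^{(t)}$ and the document counts $D(\cdot)$) match the UMass coherence measure. Assuming that is the measure used, the coherence of topic $t$ is:

```latex
C\left(t; W^{(t)}\right) = \sum_{m=2}^{M} \sum_{l=1}^{m-1} \log \frac{D\left(w^{(t)}_m, w^{(t)}_l\right) + 1}{D\left(w^{(t)}_l\right)}
```

Higher (less negative) values indicate more coherent topics; the added 1 avoids taking the logarithm of zero.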

Here, $W^{(t)} = \{w^{(t)}_1, w^{(t)}_2, \dots, w^{(t)}_M\}$ is the set of the top $M$ most relevant words under topic $t$, $D(w^{(t)}_i)$ is the document frequency of word $w^{(t)}_i$ (the number of documents containing it), and $D(w^{(t)}_i, w^{(t)}_j)$ is the number of documents in which $w^{(t)}_i$ and $w^{(t)}_j$ co-occur.
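The coherence score can be computed directly from tokenized posts. A minimal sketch, assuming document-level co-occurrence counts, top words listed in decreasing probability order, and that every top word occurs in at least one document; the toy documents and function name are illustrative:

```python
import math

def umass_coherence(top_words, docs):
    """UMass coherence of one topic from its top-M words (ordered by decreasing probability)."""
    doc_sets = [set(d) for d in docs]

    def df(*words):
        # Number of documents containing all of the given words.
        return sum(all(w in s for w in words) for s in doc_sets)

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            w_m, w_l = top_words[m], top_words[l]
            score += math.log((df(w_m, w_l) + 1) / df(w_l))
    return score

docs = [["wuhan", "lockdown"], ["wuhan", "reopening"], ["vaccine", "trial"]]
print(umass_coherence(["wuhan", "lockdown", "reopening"], docs))  # words that co-occur
print(umass_coherence(["wuhan", "vaccine"], docs))                # words that never co-occur
```

In the toy corpus, the topic whose words appear together scores higher than the one whose words never co-occur, which is the behavior the metric relies on when comparing candidate topic counts.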

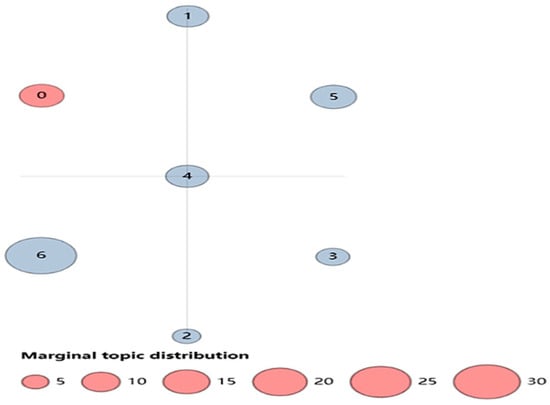

Finally, topic visualization is used to check for potential overfitting. As shown in Figure 6, the identified topics do not overlap, indicating that the topic model has not overfitted and that the semantic boundaries between topics are clear. Taking the perplexity, coherence, and visualization results together, the optimal number of topics for this study was set to seven.

Figure 6.

Topic visualization distribution.

4.2.2. Topic Result Visualization and Analysis

After determining the optimal number of topics, the GloVe word embeddings, which encode global co-occurrence semantics, were fed into the BTM for training. Through unsupervised learning, the model assigned each Weibo post to a topic and identified the 20 most relevant keywords for each topic. These keywords serve as concise summaries of each topic’s content: by analyzing them, we reconstructed the core viewpoints of each topic and identified the key concerns of netizens during the COVID-19 outbreak, laying the groundwork for deeper public opinion analysis and governance. The Top 20 keywords for each topic are shown in Table 1 below.
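Once BTM is trained, a post's topic affiliation follows from the corpus-level parameters. The sketch below applies the document-topic inference rule of the original BTM formulation, P(z|d) = Σ_b P(z|b) P(b|d), and reads off top keywords from the topic-word distributions. The toy θ and φ values, the function names, and the fallback for posts with fewer than two known words are illustrative assumptions; how the GloVe embeddings enter BTM training is not reproduced here.

```python
import numpy as np
from itertools import combinations

def doc_topic_distribution(tokens, vocab_index, theta, phi):
    """P(z|d) for one post under trained BTM parameters.

    theta: (K,) corpus-level topic proportions; phi: (K, V) topic-word distributions.
    """
    ids = [vocab_index[t] for t in tokens if t in vocab_index]
    biterms = list(combinations(ids, 2))
    if not biterms:
        # Hypothetical fallback for posts with fewer than two known words.
        return theta / theta.sum()
    p_z_d = np.zeros_like(theta, dtype=float)
    for i, j in biterms:
        p_z_b = theta * phi[:, i] * phi[:, j]  # unnormalized P(z|b)
        p_z_d += p_z_b / p_z_b.sum()
    return p_z_d / len(biterms)                # averaging over occurrences = weighting by P(b|d)

def top_keywords(phi, vocab, n=20):
    """Top-n words per topic, ordered by decreasing P(w|z)."""
    return [[vocab[v] for v in np.argsort(-row)[:n]] for row in phi]

# Toy parameters (illustrative, not from the trained model).
vocab = ["wuhan", "reopening", "quarantine", "work"]
vocab_index = {w: i for i, w in enumerate(vocab)}
theta = np.array([0.5, 0.5])
phi = np.array([[0.4, 0.4, 0.1, 0.1],
                [0.1, 0.1, 0.4, 0.4]])
p = doc_topic_distribution(["quarantine", "work", "wuhan"], vocab_index, theta, phi)
print(p.round(3), "assigned topic:", int(p.argmax()))
print(top_keywords(phi, vocab, n=2))
```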

Table 1.

Top 20 keywords for each topic.

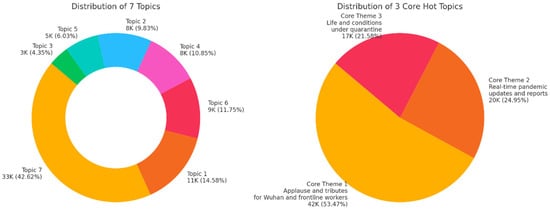

Based on these keywords, the seven identified topics were further condensed into three core themes, which summarize the main areas of public concern:

- Applause and tributes for Wuhan and frontline epidemic prevention workers;

- Real-time information and reports on the COVID-19 pandemic;

- Life and conditions under quarantine during the pandemic.

The main content of each topic and its corresponding core theme are summarized in Table 2.

Table 2.

Theme information and theme condensation.

Based on the topic keywords and the distribution of Weibo posts across topics, two months after the outbreak online public opinion had shifted toward the lives of residents under quarantine, including their work and the process of returning to work. Topic 7 alone accounted for 42.6% of posts, and Core Theme 3 (life and conditions under quarantine) was the most discussed of the three core themes. This suggests that as the pandemic progressed, public attention gradually shifted from the outbreak itself to the way of life it brought about.

At the same time, netizens remained concerned about the course of the pandemic. Unlike in the early stages, however, their attention extended beyond domestic affairs such as Wuhan to the pandemic's global progression: while the situation in China had been brought under effective control, the outbreak was still escalating in many other countries. As public understanding of the virus deepened, the development of a COVID-19 vaccine also became a major concern. Pandemic-related information and news reports thus remained a daily focus of interest, and Core Theme 2 (real-time updates and reports on COVID-19) accounted for about 25% of the Weibo corpus.

During this two-month period, people across China united to support Wuhan and frontline healthcare workers until the city was officially reopened. Consequently, the internet was also filled with posts expressing encouragement for Wuhan, tributes to frontline workers, and memorials for martyrs who sacrificed their lives in the fight against the pandemic, reflecting netizens’ profound gratitude and respect.
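The shares reported above (Topic 7 at 42.6%, Core Theme 2 at about 25%) are proportions of posts. We assume here that each post carries a single topic label, for example the most probable topic under BTM. The topic-to-theme mapping in the sketch is hypothetical: it is inferred from the discussion in Sections 4.2.2 and 4.3.1, and Table 2 gives the authoritative assignment. The post labels are toy data.

```python
from collections import Counter

# Hypothetical topic-to-theme mapping inferred from Sections 4.2.2 and 4.3.1;
# Table 2 gives the authoritative assignment.
TOPIC_TO_THEME = {1: 1, 2: 1, 3: 2, 4: 2, 6: 2, 5: 3, 7: 3}


def shares(labels):
    """Fraction of items carrying each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def theme_shares(topic_labels, mapping=TOPIC_TO_THEME):
    """Aggregate per-post topic labels into core-theme shares."""
    return shares(mapping[topic] for topic in topic_labels)


# Toy per-post topic assignments (not the study's data).
posts_topics = [7, 7, 7, 5, 1, 2, 3, 6, 4, 7]
print(shares(posts_topics)[7])  # 0.4
print(theme_shares(posts_topics))
```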

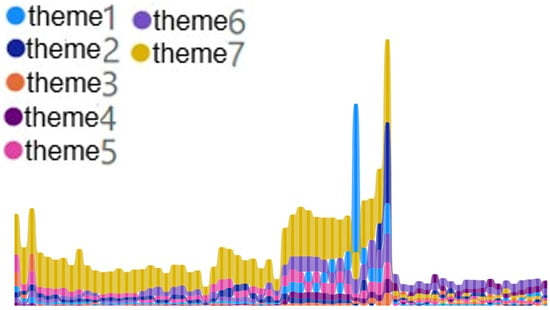

After gaining a macro-level understanding of the topic distribution during the emergency response phase of the COVID-19 pandemic (Figure 7), we further explored how the topics that netizens focused on and discussed changed over the course of the pandemic’s development, as illustrated in Figure 8.

Figure 7.

Distribution of topics and hot topics.

Figure 8.

Evolution of topic popularity over time. The time axis begins in mid-February 2020; topic activity peaks in early to mid-April.

Topic 1 did not attract much attention in February or early March. However, as medical teams gradually withdrew from Hubei Province in late March, discussions around healthcare workers and volunteers began to heat up, peaking on April 4.

Topic 2 was also not a major focus during the initial stage of emergency response, but as news of Wuhan’s reopening began to circulate in early April, it gradually gained traction, eventually peaking on April 8, the day of the official reopening.

Topics 3 and 4 remained relatively minor. Topic 3 received a certain level of attention early in the emergency phase, while Topic 4 gained more interest after Wuhan reopened, with the public focusing more on the development of COVID-19 vaccines.

Topic 5 maintained a steady level of attention before Wuhan reopened, with discussions centered on working from home during quarantine. However, interest in this topic gradually declined as Wuhan reopened and the pandemic came under better control nationwide.

Topic 6 received steady attention throughout the emergency response stage. Its popularity began to rise in late March and, like Topic 2, peaked on April 8. After the reopening, it remained among the most discussed topics.

Topic 7 was the most widely discussed topic on Weibo before Wuhan’s official reopening and peaked sharply on the day of the reopening, reflecting the real-life conditions of netizens living under lockdown.

The overall posting volume and topic popularity trends show that the reopening of Wuhan was a major milestone and turning point of the emergency response stage of the COVID-19 pandemic. It markedly reshaped online public discourse and is therefore a key inflection point for public opinion analysis.
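The topic trajectories and peak dates above (for example, April 4 for Topic 1 and April 8 for Topics 2 and 6) can be read off daily per-topic post counts. The sketch assumes that "popularity" in Figure 8 means raw daily post counts; the figure may instead use smoothed counts or daily shares. The posts listed are toy data.

```python
from collections import Counter, defaultdict
from datetime import date


def daily_topic_counts(posts):
    """posts: iterable of (day, topic) pairs -> {day: Counter(topic -> number of posts)}, in date order."""
    per_day = defaultdict(Counter)
    for day, topic in posts:
        per_day[day][topic] += 1
    return dict(sorted(per_day.items()))


def peak_day(posts, topic):
    """Day on which `topic` had the most posts (earliest day wins ties)."""
    counts = daily_topic_counts(posts)
    return max(counts, key=lambda day: counts[day][topic])


# Toy posts (not the study's data).
posts = [
    (date(2020, 4, 4), 1),
    (date(2020, 4, 7), 7),
    (date(2020, 4, 8), 7),
    (date(2020, 4, 8), 7),
    (date(2020, 4, 8), 2),
]
print(peak_day(posts, 7))  # 2020-04-08
```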

4.3. Evolution of Online Public Sentiment

4.3.1. Overall Sentiment Trends

In this study, we used the Chinese-RoBERTa-wwm-ext-base model [28] to generate semantic representations of the collected Weibo posts, and TextCNN to extract features from these representations for sentiment classification. Applying the resulting classifier to the corpus yielded the temporal evolution of Weibo sentiment from 21 February to 28 April 2020, shown in Figure 9.
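The RoBERTa-TextCNN combination passes token-level contextual vectors to a TextCNN head (Kim [18]). That head applies convolution filters of several widths, ReLU, max-over-time pooling and a softmax layer. The sketch below is a forward pass of such a head written in plain Python. Random vectors stand in for the last hidden layer of Chinese-RoBERTa-wwm-ext. The kernel widths, filter counts and dimensions are hypothetical. The six-class label set follows the emotions listed for Figure 9. Training is not shown.

```python
import math
import random

# Emotion classes as described for Figure 9.
EMOTIONS = ["neutral", "joy", "fear", "sadness", "anger", "surprise"]


def conv_max_pool(embeddings, kernel, bias):
    """One TextCNN filter: slide a (width x dim) kernel over the tokens, apply ReLU, then max-pool over time."""
    width = len(kernel)
    activations = []
    for start in range(len(embeddings) - width + 1):
        total = bias
        for offset in range(width):
            total += sum(w * x for w, x in zip(kernel[offset], embeddings[start + offset]))
        activations.append(max(0.0, total))  # ReLU
    return max(activations, default=0.0)  # too-short sequences yield 0.0 here; real models pad


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def textcnn_head(embeddings, filters, out_weights, out_bias):
    """Pooled filter outputs -> fully connected layer -> softmax over emotion classes."""
    features = [conv_max_pool(embeddings, kernel, bias) for kernel, bias in filters]
    logits = [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(out_weights, out_bias)]
    return softmax(logits)


rng = random.Random(0)
dim = 8  # RoBERTa-base hidden states are 768-dimensional; kept tiny here
# Stand-in for RoBERTa's last hidden layer over a 12-token post (random, illustration only).
tokens = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(12)]
# Two filters for each hypothetical kernel width 2, 3 and 4.
filters = [
    ([[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(width)], 0.0)
    for width in (2, 3, 4)
    for _ in range(2)
]
out_weights = [[rng.gauss(0, 0.1) for _ in filters] for _ in EMOTIONS]
probs = textcnn_head(tokens, filters, out_weights, [0.0] * len(EMOTIONS))
print(dict(zip(EMOTIONS, (round(p, 3) for p in probs))))
```

Max-over-time pooling gives each filter a single feature regardless of post length. This is one reason TextCNN suits variable-length short texts such as Weibo posts.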

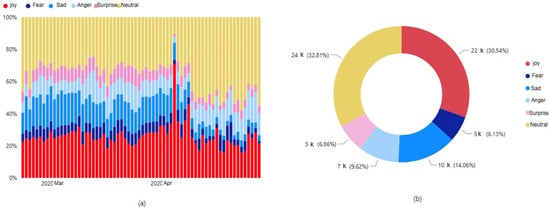

As shown in Figure 9b, the overall sentiment distribution is relatively balanced among neutral sentiment, negative sentiment (fear, sadness, and anger), and positive sentiment (joy), each accounting for approximately 30% of the corpus. Surprise is treated separately: because it can convey either positive or negative emotion depending on context, it is assigned to neither category.

Figure 9.

Evolution of online emotions. (a) Daily proportion of emotions from February to April 2020. (b) Overall emotion distribution across the dataset.

Taken together, neutral and positive emotions outweighed negative ones in Chinese online sentiment during this stage. Although negative emotions accounted for a substantial share, they did not dominate, suggesting that Chinese netizens generally maintained a relatively optimistic attitude toward the development of the pandemic.

From the temporal perspective (Figure 9a), neutral emotions dominated around February 21, accounting for about 40% of all posts. In March, negative emotions increased while neutral ones declined. March was also the most critical period of China’s nationwide pandemic response: high-intensity containment measures significantly disrupted daily life, which contributed to the elevated share of negative sentiment. From early April, as news of Wuhan’s reopening emerged and pandemic control improved nationwide, negative emotions fell rapidly. Joy spiked, peaking at about 70% of daily posts, before gradually stabilizing and giving way to neutral expression, whose share rose to about 50%.
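The valence grouping behind Figure 9b is easy to misread, because surprise falls into neither the positive nor the negative group. The minimal sketch below makes that grouping explicit. It assumes one predicted emotion label per post. The labels are toy data. Applying the same function to each day's posts would give daily valence proportions; Figure 9a shows these at the level of individual emotions.

```python
from collections import Counter

# Valence grouping described for Figure 9b; surprise stays separate because its valence depends on context.
VALENCE = {
    "joy": "positive",
    "fear": "negative",
    "sadness": "negative",
    "anger": "negative",
    "neutral": "neutral",
    "surprise": "surprise",
}


def valence_shares(emotion_labels):
    """Share of posts in each valence group, given one predicted emotion label per post."""
    counts = Counter(VALENCE[label] for label in emotion_labels)
    total = sum(counts.values())
    return {group: counts[group] / total for group in ("positive", "negative", "neutral", "surprise")}


# Toy predictions (not the study's data).
labels = ["joy", "anger", "fear", "neutral", "neutral", "surprise", "joy", "sadness"]
print(valence_shares(labels))
# {'positive': 0.25, 'negative': 0.375, 'neutral': 0.25, 'surprise': 0.125}
```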

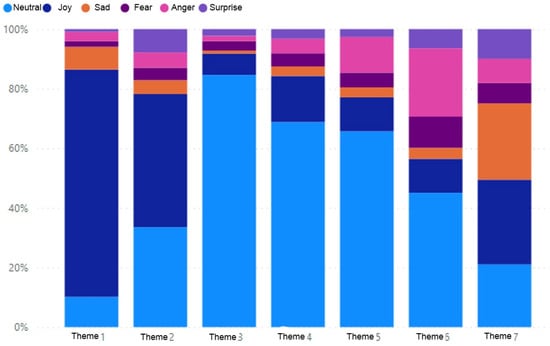

To better understand how netizens’ emotions vary across circumstances, and to support more targeted public opinion guidance and governance, we analyzed sentiment separately for each of the seven main topics and three core themes. Netizens expressed markedly different emotions depending on the subject matter, and Figure 10 shows clear emotional differences across topics:

Figure 10.

Emotional distribution across various topics.

Positive emotions dominate Topics 1 and 2. In these topics, which pay tribute to frontline heroes and martyrs of the pandemic, uplifting emotions serve as a source of motivation for the public. Accordingly, posts in the core theme of “applause and tributes for Wuhan and frontline workers” predominantly convey positive sentiment and merit promotion to wider audiences.

In Topics 3 and 4, neutral emotion accounts for over 60%, as these topics primarily concern global pandemic updates and vaccine development, where netizens typically maintain an objective attitude.

In Topic 6, negative emotions notably increase, with anger ranking second only to neutral emotions. This suggests significant public dissatisfaction with the global pandemic situation. Within the hot theme of “real-time pandemic updates and reports,” although neutral sentiment is dominant, negative sentiment surpasses positive, with anger being the most prominent negative emotion.

Regarding the hot theme of “life and conditions under quarantine,” Topics 5 and 7 show distinct emotional expressions, though both are closely related to daily life and thus more directly reflect netizens’ true feelings about the pandemic.

In Topic 5, neutral sentiment dominates, indicating that many netizens show understanding and acceptance, though some anger is also present.

In contrast, Topic 7 contains a larger share of negative sentiment, mainly sadness about life under lockdown. Notably, however, joy is even more frequent than sadness, suggesting that Wuhan residents maintained a notably optimistic outlook despite strict lockdown conditions.

The emotional distribution expressed by netizens varies markedly by topic. Targeted guidance and governance strategies should therefore be applied accordingly: promoting positive sentiment to a broader audience while managing the spread of negative sentiment, so as to steer public attention toward constructive and hopeful themes.
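The per-topic comparison in Figure 10 amounts to a row-normalized cross-tabulation of topic against emotion. Each topic's emotion shares sum to one, so topics of very different sizes (Topic 7 versus Topics 3 and 4) can be compared directly. The minimal sketch below assumes one (topic, emotion) pair per post; the pairs are toy data.

```python
from collections import Counter, defaultdict


def emotion_by_topic(posts):
    """posts: iterable of (topic, emotion) -> {topic: {emotion: share within that topic}} (row-normalized)."""
    table = defaultdict(Counter)
    for topic, emotion in posts:
        table[topic][emotion] += 1
    result = {}
    for topic, counts in sorted(table.items()):
        total = sum(counts.values())
        result[topic] = {emotion: n / total for emotion, n in counts.items()}
    return result


def ranked_emotions(distribution):
    """Emotions ordered from most to least frequent within one topic."""
    return sorted(distribution, key=distribution.get, reverse=True)


# Toy (topic, emotion) pairs, not the study's data.
posts = [(1, "joy"), (1, "joy"), (1, "neutral"), (6, "neutral"), (6, "neutral"), (6, "anger")]
by_topic = emotion_by_topic(posts)
print(ranked_emotions(by_topic[6]))  # ['neutral', 'anger']
```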

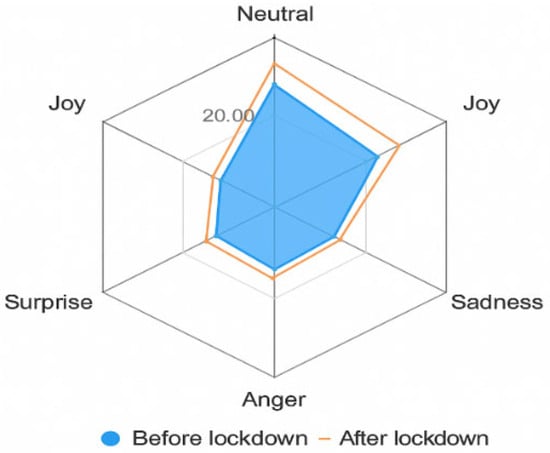

4.3.2. The Impact of Wuhan’s Reopening on Online Sentiment

We also observed that the reopening of Wuhan had a significant emotional impact on netizens, so we analyzed how online sentiment changed before and after the announcement of the reopening. As shown in Figure 9, although Wuhan officially lifted its lockdown on April 8, the news had begun circulating earlier; April 1 was therefore selected as the dividing date for this analysis. The resulting emotional changes are presented in Table 3 and Figure 11.

Table 3.

Emotional distribution before and after Wuhan’s reopening.

Figure 11.

Radar chart of emotional changes before and after Wuhan’s lifting of lockdown.

After Wuhan’s reopening, the share of joy rose to 37.1%, an increase of 11 percentage points over the pre-reopening period, surpassing neutral sentiment to become the dominant emotion. This indicates that the reopening was a highly encouraging event for netizens, symbolizing a milestone victory in China’s fight against the pandemic.

Before the reopening, negative sentiment accounted for 33.4% of posts, well above positive sentiment, reflecting a generally pessimistic and anxious tone in public opinion during the lockdown. After the reopening, every negative emotion declined, with sadness showing the largest drop (6.2 percentage points). This suggests that the reopening substantially lifted public morale and reduced the prevalence of negative emotions.

Nevertheless, negative sentiment still exceeded 20% of posts, indicating that continued attention and timely guidance remain necessary to address lingering concerns. Neutral sentiment stayed relatively stable at around 30% before and after the event. Surprise declined slightly, with much of it apparently shifting toward joy, which further contributed to the rise in positive sentiment.

In summary, the reopening of Wuhan acted as a strong positive stimulus to public discourse, shifting sentiment so that positive emotions took the lead in shaping online public opinion.
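The comparison in Table 3 splits posts at a dividing date and reports how each emotion's share changed, in percentage points. The minimal sketch below assumes that posts dated on the dividing day itself fall into the "after" group; the text does not specify this. The posts are toy data.

```python
from collections import Counter
from datetime import date


def before_after_shift(posts, split_day):
    """posts: iterable of (day, emotion).

    Posts dated before `split_day` form the "before" group and the rest the "after" group
    (counting the split day as "after" is an assumption). Returns each emotion's change
    in share, in percentage points (after minus before).
    """
    before, after = Counter(), Counter()
    for day, emotion in posts:
        (before if day < split_day else after)[emotion] += 1
    n_before, n_after = sum(before.values()), sum(after.values())
    return {
        emotion: 100 * (after[emotion] / n_after - before[emotion] / n_before)
        for emotion in sorted(set(before) | set(after))
    }


# Toy posts (not the study's data), split at April 1, 2020 as in the text.
posts = [
    (date(2020, 3, 20), "sadness"), (date(2020, 3, 25), "sadness"),
    (date(2020, 3, 28), "joy"), (date(2020, 3, 30), "neutral"),
    (date(2020, 4, 2), "joy"), (date(2020, 4, 8), "joy"),
    (date(2020, 4, 9), "neutral"), (date(2020, 4, 12), "sadness"),
]
print(before_after_shift(posts, date(2020, 4, 1)))
# {'joy': 25.0, 'neutral': 0.0, 'sadness': -25.0}
```

Reporting differences in percentage points, rather than relative change, keeps the numbers directly comparable with the shares in Table 3.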

5. Discussion and Implications

Theoretical Contribution and Practical Implications. The contributions of this study are twofold. Methodologically, it shows that a hybrid framework integrating global semantic knowledge (GloVe) with contextual understanding (RoBERTa) outperforms baseline models in short-text analysis. Empirically, it offers a nuanced, data-driven account of the interplay between public discourse topics and emotional valence during a major crisis.

For policymakers and public health authorities, our findings suggest several actionable insights: (1) Targeted Communication: resources should be prioritized to address the practical and emotional needs of those affected by lockdowns, since life under quarantine was the core of public concern (Topic 7 alone accounted for 42.6% of posts). (2) Timely Intervention: real-time sentiment monitoring systems such as the one proposed can serve as an early warning mechanism for spikes in negative emotion around specific topics, enabling timely countermeasures. (3) Hope-Based Messaging: announcements of positive milestones (such as a city’s reopening) can be used strategically to boost public morale and foster resilience.
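The early-warning mechanism in item (2) could be driven by a simple trigger on the daily share of negative posts. The sketch below uses a trailing-window rule of our own choosing: a day is flagged when its negative share exceeds the mean of the previous `window` days by more than `k` standard deviations. This rule is not part of the proposed framework. The parameters are hypothetical, and the input is assumed to be daily negative-sentiment shares computed as in Section 4.3.1.

```python
from statistics import mean, stdev


def negative_spikes(daily_negative_share, window=7, k=2.0):
    """Indices of days whose negative share exceeds the trailing-window mean by more than k standard deviations.

    `window` (must be at least 2) and `k` are hypothetical defaults, not values tuned in this study.
    """
    alerts = []
    for i in range(window, len(daily_negative_share)):
        history = daily_negative_share[i - window:i]
        if daily_negative_share[i] > mean(history) + k * stdev(history):
            alerts.append(i)
    return alerts


# Toy daily negative-sentiment shares (not the study's data).
series = [0.30, 0.31, 0.29, 0.30, 0.32, 0.30, 0.31, 0.45, 0.30]
print(negative_spikes(series))  # [7]
```

Running the same check per topic, instead of on the whole corpus, would match the topic-specific alerts described in item (2).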

First, a collaborative governance model for online public opinion should be developed. Online sentiment evolves through the interaction of multiple stakeholders, whose combined actions drove the formation and dissemination of online narratives during the COVID-19 crisis. It is therefore critical to plan and implement a coordinated governance mechanism, led by the government, that aligns the various actors in cyberspace in guiding and regulating public discourse. The government should build a responsive, multi-department mechanism involving diverse participants while strengthening communication with digital stakeholders. By promoting information sharing, integrating resources, and leveraging complementary strengths, it could disseminate key information more efficiently, respond more promptly to trending topics, and help guide public opinion in a positive direction.

Second, digital monitoring of online sentiment should be further strengthened. During an outbreak, a multitude of opinions and emotions emerge across online platforms. Artificial intelligence (AI), big data analytics, and other emerging technologies make it possible to track the emotional landscape and discourse of the internet in real time. These technologies can mine users’ core concerns, identify their focal issues, and detect fluctuations and patterns in their emotional expression, giving insight into their needs and motivations and supporting more effective management of public sentiment crises. Such smart governance systems could enable more timely responses to public concerns and provide evidence-based guidance for government and relevant institutions as they refine their online public opinion governance strategies.

Lastly, emphasis should be placed on effective communication and emotional resonance. Managing online sentiment requires not only macro-level strategies and governance models but also refined guidance methods and communication techniques that enhance public understanding and receptiveness. In terms of mitigation methods, the government should address the core emotional concerns of netizens with empathy and emotional support, engaging in effective dialog from the perspective of the public. By reaching consensus on key issues, mutual trust can be established, fostering emotional resonance. As for communication strategies, the government should adopt new media formats and communication styles to clearly convey its policies and initiatives through various media channels. This enhances information transmission speed and expands reach. Through effective communication and strong emotional connection, a positive public opinion environment can be cultivated, leading to a favorable online atmosphere and improved public opinion governance.

6. Conclusions

Notwithstanding the theoretical and practical contributions outlined above, this study has several methodological and contextual limitations that should be considered when interpreting its findings and that point to productive avenues for future inquiry.

Firstly, the exclusive reliance on Weibo as a data source, while justified by its prominence in Chinese social discourse, inherently constrains the demographic and ideological diversity of our corpus. Populations with lower digital literacy or limited access to social media—such as the elderly, rural communities, and socio-economically disadvantaged groups—are systematically underrepresented. This selection bias potentially skews our understanding of the true spectrum of public concerns and emotional responses, as the discourse captured may disproportionately reflect the views of younger, urban, and more technologically engaged segments of society.

Secondly, in pursuit of analytical tractability, our preprocessing pipeline deliberately excluded non-textual modalities, notably emojis, memes, images, and videos. This constitutes a significant methodological shortfall, as affective communication on platforms like Weibo is increasingly multimodal. Emojis serve as potent emotional amplifiers and disambiguators, while shared images and videos can become focal points for collective sentiment, often conveying meaning more powerfully than text alone. The omission of these rich semiotic resources likely results in an attenuated and less nuanced representation of public emotion, particularly for subtle or ironic expressions that rely on visual context.

Thirdly, the model and findings are embedded in China’s distinctive socio-political, cultural, and media landscape. The government’s centralized crisis response, the role of state-affiliated media in shaping discourse, and culturally specific communication norms all likely shaped the observed opinion dynamics. Consequently, the direct generalizability (external validity) of our conclusions to national contexts with different governance structures, media ecologies, and cultural values (e.g., individualistic societies with more decentralized media systems) is limited. Both the model’s performance and the nature of the topic–sentiment interplay may differ substantially in other environments.

Finally, a more technical limitation lies in the computational intensity of the proposed hybrid framework, which may hinder its deployment for real-time monitoring in resource-constrained settings.

These limitations also point to clear pathways for future research:

Multi-Platform Validation: Subsequent studies should aggregate data from a more diverse array of platforms, including messaging apps (e.g., WeChat), video-sharing platforms (e.g., Douyin/TikTok), and online forums. This would mitigate selection bias and yield a more holistic and representative panorama of digital public opinion.

Multimodal Integration: A key future direction is the development of multimodal frameworks that fuse textual analysis with computer vision techniques for emoji, image, and video sentiment analysis, capturing the full richness of online affective expression.

Cross-Cultural Comparative Analysis: Employing a comparative research design to apply this framework to datasets from other countries would be invaluable. Such studies could disentangle universal patterns of crisis communication from culturally specific phenomena, significantly advancing the theoretical understanding of global public opinion evolution.

Model Optimization: Future work could focus on developing distilled or more efficient versions of the model architecture to facilitate real-world, low-latency applications for government agencies and public health organizations.
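One common route to the distilled models mentioned in the last item is knowledge distillation. A small student network is trained to match the temperature-softened output distribution of the full RoBERTa-TextCNN teacher, while also fitting the true labels. The choice of student architecture is left open here. The sketch below shows the standard per-example loss in plain Python. The `temperature` and `alpha` defaults are illustrative and were not tuned in this study.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [v / temperature for v in logits]
    top = max(scaled)
    exps = [math.exp(v - top) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(label, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * soft + (1 - alpha) * hard


# Toy logits over three classes (hypothetical values).
student = [2.0, 0.5, 0.1]
print(distillation_loss(student, teacher_logits=[2.5, 0.3, 0.0], label=0))
```

The T² factor keeps the soft-target term on a scale comparable to the hard-label term as the temperature changes.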

Author Contributions

Methodology, J.W.; Resources, Y.Y.; Writing—original draft, Y.Y.; Writing—review and editing, J.W. and M.L.; Supervision, M.L.; Funding acquisition, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (72171119), the Humanities and Social Science Fund of the Ministry of Education of China (23YJAZH074), and the Social Science Foundation of Jiangsu Province (24GLA001).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tian, X. A Comparative Study on Emergency Management of SARS and COVID-19 by the Chinese Government. Master’s Thesis, Hubei University, Wuhan, China, 2021.

- Chen, X.; Duan, S.; Wang, L. Research on clustering analysis of Internet public opinion. Clust. Comput. 2019, 22, 5997–6007.

- Habchi, Y.; Kheddar, H.; Himeur, Y.; Belouchrani, A.; Serpedin, E.; Khelifi, F.; Chowdhury, M.E. Advanced deep learning and large language models: Comprehensive insights for cancer detection. Image Vis. Comput. 2025, 138, 105495.

- Ruan, J.; Liu, Y.; Ren, T.; Zhang, B.; Ji, H. New models and trends in statistical research under the impact of COVID-19: A summary of the “Scientific anti-pandemic, statistical responsibility” national online forum. Math. Stat. Manag. 2020, 39, 4–15.

- Deerwester, S.; Dumais, S.T.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407.

- Blei, D.M.; Ng, A.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Yan, X.; Guo, J.; Lan, Y.; Cheng, X. A biterm topic model for short texts. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; ACM: New York, NY, USA, 2013; pp. 1445–1456.

- Wang, Y.; Hu, Y. Hotspot detection of Weibo public opinion based on BTM. J. Inf. 2016, 35, 7–15.

- Zeng, M.; Jiang, H. Evolution of Weibo public opinion themes in online mass incidents. Inf. Manag. Res. 2021, 6, 13–22.

- Wu, P.; Shi, T.; Ling, C. Topic discovery of COVID-19 vaccine on Weibo platform. Inf. Sci. 2022, 40, 8–16.

- Randhawa, R.S.; Jain, P.; Madan, G. Topic modeling using distributed word embeddings. arXiv 2016, arXiv:1603.04747.

- Jiang, Z.; Gao, S.; Chen, L. Study on text representation method based on deep learning and topic information. Computing 2020, 102, 623–642.

- Peng, M.; Yang, S.; Zhu, J. Topic modeling based on bi-directional LSTM semantic enhancement. J. Chin. Inf. Process. 2018, 32, 10–18.

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

- Yang, J.; Zhang, H. Evolution of online public opinion in public health emergencies based on theme-sentiment fusion analysis. Inf. Explor. 2021, 286, 14–21.

- Zhang, S.; Ma, X.Y.; Zhou, Y.; Guo, R.Z. Analysis of COVID-19 public opinion evolution based on user sentiment changes. J. Geo-Inf. Sci. 2021, 23, 341–350.

- Lu, H.; Zhang, X.; Yan, W. Multi-attribute evolution analysis of online public opinion during major epidemics. Inf. Sci. 2022, 1, 158–165, 192.

- Kim, Y. Convolutional neural networks for sentence classification. arXiv 2014, arXiv:1408.5882.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Han, K.; Xing, Z.; Liu, Z.; Liu, J.; Zhang, X. Research on public opinion analysis methods in major public health events: A case study of COVID-19. J. Geo-Inf. Sci. 2021, 23, 331–340.

- Pan, W.; Li, J.; He, B.; Zhao, S. Analysis of emotion and psychological dynamics of online users in public health emergencies: A case study of COVID-19. Media Watch 2020, 11, 13–22.

- Zhuang, M.; Li, Y.; Tan, X.; Mao, T.; Lan, K.; Xing, L. Simulation of COVID-19 online public opinion evolution based on BERT-LDA model. J. Syst. Simul. 2021, 33, 13–20.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global vectors for word representation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

- Mimno, D.; Wallach, H.M.; Talley, E.; Leenders, M.; McCallum, A. Optimizing semantic coherence in topic models. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing (EMNLP), Edinburgh, UK, 27–31 July 2011; pp. 262–272.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).