Abstract

In order to test within-group homogeneity for numerical or ordinal variable groupings, we have introduced a family of discrete probability distributions, related to the Gini mean difference, that we now study in a deeper way. A member of such a family is the law of a statistic that operates on the ranks of the values of the random variables by considering the sums of the inter-subgroups ranks of the variable grouping. Being so, a law of the family depends on several parameters such as the cardinal of the group of variables, the number of subgroups of the grouping of variables, and the cardinals of the subgroups of the grouping. The exact distribution of a law of the family faces computational challenges even for moderate values of the cardinal of the whole set of variables. Motivated by this challenge, we show that an asymptotic result allowing approximate quantile values is not possible based on the hypothesis observed in particular cases. Consequently, we propose two methodologies to deal with finite approximations for large values of the parameters. We address, in some particular cases, the quality of the distributional approximation provided by a possible finite approximation. With the purpose of illustrating the usefulness of the grouping laws, we present an application to an example of within-group homogeneity grouping analysis to a grouping originated from a clustering technique applied to cocoa breeding experiment data. The analysis brings to light the homogeneity of production output variables in one specific type of soil.

MSC:

62E10; 62E20; 62E17; 62Gxx

1. Introduction

In this work, taking as a starting point a statistic introduced in [], we formally define and study a family of discrete finite probability laws that are useful to test the within-group homogeneity of groupings—or clusters—of observations for a finite set of random variables.

We call an element of this family of probability laws a grouping distribution. A grouping distribution, of the family we are interested in, is related to Gini’s mean difference (for information on this statistic, see [,,,]). It also has some affinity with the distribution used in the Kruskal–Wallis test (see []); however, while that test compares ranks across multiple groups and focuses on the between-group variance of ranks, the grouping distribution focuses on the within-group dispersion.

The best-known homogeneity test is based on the Chi-square statistic and is applied to samples of numerical variables to determine if the samples originate from the same distribution. In this sense, homogeneity is an intergroup property, that is, the groups are homogeneous to one another, or not. In the same vein, the work [] studies a family of nonparametric tests to decide if two independent samples have a common distribution with the same density; the tests rest on a family of distances between densities. In this work, we consider “homogeneity” in the sense of the homogeneity of elements within the groups; the principle is that, using some criteria, we first partition the data into groups, and then we test whether the partition criteria give rise to groups that are homogeneous by themselves, or not.

In our approach, a grouping distribution is applied to a finite set of ranks of observations of random variables and so, as a consequence, a fruitful study of the grouping statistic law seems to rely heavily on combinatorics. To the best of our knowledge, the distribution of a random variable with the grouping probability law can only be determined with computational help due to the large number of events.

Among the tools available for the study of probability laws, the transform methods such as Laplace, Fourier or z-transform, for instance, are usually effective. The study of the Fourier transform by means of signal theory does not appear to be useful since the patterns observed in the graphical representations of the Fourier transforms of the laws result from an artifact—the French battement—resulting from the interference of trigonometric waves with frequencies close to one another.

The idea of considering semigroups generated either by the values taken by the distributions or by the probabilities of those values can be motivated by the description of sums of independent random variables having a common grouping law. We will deal with this idea in another article.

Let us briefly describe the contents and main contributions of this work.

- In Section 2, we define the family of grouping distributions—which, in our opinion, should be denominated Mexia distributions, honoring the statistician extraordinaire João Tiago Mexia who proposed them for the first time—we detail some properties of the family and present tables of quantiles of the grouping distributions for moderate values of parameters computed from the full discrete distribution, and we state some remarks that highlight the motivation for the problems studied in the following.

- In Section 3, we present some results on moment generating functions that may have an independent interest, namely, Theorem 3, showing, under some general hypotheses, that the Kolmogorov distance between probability distributions is bounded by the distance between the corresponding moment generating functions. We prove a negative asymptotic result based on observed properties of some fully computable grouping distributions and we propose two methodologies to build approximate laws for grouping distributions with large parameter values; these methodologies may be considered open implementation problems due to technical difficulties.

- In Section 4, we present an application of a grouping distribution to assess the within-group homogeneity of clusters of observations from a cocoa breeding experiment. The clusters are obtained by the well-known KMeans clustering technique applied to the whole set of values of the variables. We show that the use of the grouping distribution allows us to recover some important features of the observations, namely, the homogeneity of production variables in one type of soil, which other statistical methods (such as ANOVA) do not bring to light.

The figures and the computations are performed using Wolfram’s Mathematica™.

2. The Definition of the Grouping Probability Law

In this section, we define—in a constructive way, that is, by giving the values and the algorithm to obtain the correspondent probabilities—the family of the grouping probability laws.

Definition 1

(The family of grouping probability laws). The grouping test statistic law—denoted by —is the probability law defined by the following:

- 1. The set of integers ;

- 2. The set of all partitions of in p subsets such that

- 3. The values of the following function , defined on : from which we can obtain the corresponding frequencies of those values.

Let us detail some important observations about this family of probability laws. Firstly, it is a useful family for a statistical diagnostic of lack of homogeneity in groups; an example of such a statistical application is presented in Section 4. Secondly, the actual computation of values and respective frequencies is a challenge even for moderate values of N, say, for example ; in fact, for , , , and , we have that = 17,383,860, a number that can bring a computational challenge. Let us look first at some aspects of the possible statistical applications.

2.1. Statistical Relevance of Grouping Distributions

We first observe an elementary fact that is relevant for the control of any algorithm computing the law of a grouping distribution, in the sense that it allows us to estimate some of the worst-case scenarios.

Remark 1

(The largest value for the partitions). Since the number of ways of depositing N distinct objects into p distinct bins, with objects in the first bin, objects in the second bin, and so on, is given by the multinomial coefficient and since , we obtain that

and so takes one of its largest values whenever we have

See Appendix A.4 for an idea of a proof.
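The count in Remark 1 is easy to check numerically. The following is a minimal sketch, not the authors' implementation; the function name `multinomial` is introduced here for illustration only. With two subgroups of sizes 4 and 5 it returns 126, which agrees with the number of partitions quoted in Remark 2 if those are the sizes used there (an assumption on our part).

```python
from math import factorial


def multinomial(sizes):
    """Number of ways to place sum(sizes) distinct objects into ordered
    bins that hold sizes[0], sizes[1], ... objects respectively."""
    count = factorial(sum(sizes))
    for n in sizes:
        # Each successive division is exact, so integer division is safe.
        count //= factorial(n)
    return count


print(multinomial((4, 5)))     # 126
print(multinomial((3, 3, 3)))  # 1680
```

Evaluating the function on candidate size vectors before launching a full enumeration gives a quick estimate of the computational cost.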

Remark 2

(Notation and example). We write to denote a random variable X that follows the law , that is, X takes the values taken by , defined in Formula (1), with the corresponding frequencies or probabilities. Let us consider the law . The number of possible partitions is equal, according to Formula (2), to 126. But the set of values taken by has only 16 different values; these values, together with the corresponding probabilities or frequencies of occurrence, are displayed in Table 1.

Table 1.

Values and corresponding probabilities for .

Neither the values nor the frequencies of occurrence of these values, in Table 1, convey a discernible pattern of regularities allowing for an a priori description of values and corresponding probabilities for the law . The quest for regularities allowing such an a priori description is a very interesting theme of research not considered in this work.

Remark 3

(On the statistical use of the grouping law). Given a collection of N random variables with completely ordered values—for instance, numerical random variables—it may be advisable to consider the partition of the N random variables in p groups of these random variables; the reason may be multifold: common origins, common data collecting structure, common structural characteristics, or even as a result of some clustering process. In order to test the statistical significance of the within-group homogeneity of the grouping, we consider, for each group, the global ranks of each of the variables of the group; such an attribution of ranks is possible since the variables have completely ordered values. This grouping procedure defines an initial family of subsets of —such that —for which the function has a determined value. The grouping will have statistical significance if the value of is small enough with respect to all the other possible values of the function taken on all possible partitions of in different p subsets such that . Once we have the law we can define lower quantiles of this law and perform the adequate statistical test.
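The procedure in Remark 3 can be sketched as an exhaustive enumeration. The code below is a hedged illustration only: the statistic `within_group_spread` is a placeholder of our own choosing (a Gini-type sum of absolute rank differences inside each subgroup) and is not claimed to be the function of Formula (1); any other statistic on blocks of ranks can be passed in its place. All function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations


def partitions_with_sizes(items, sizes):
    """Yield each ordered partition of `items` into blocks of the given sizes."""
    if not sizes:
        if not items:
            yield ()
        return
    for block in combinations(items, sizes[0]):
        chosen = set(block)
        rest = tuple(x for x in items if x not in chosen)
        for tail in partitions_with_sizes(rest, sizes[1:]):
            yield (block,) + tail


def within_group_spread(blocks):
    """PLACEHOLDER statistic (assumption, not Formula (1)):
    sum of |r - s| over all pairs of ranks lying in the same block."""
    return sum(abs(r - s) for block in blocks for r, s in combinations(block, 2))


def exact_law(sizes, statistic=within_group_spread):
    """Exact law of `statistic` over all partitions of the ranks 1..N."""
    ranks = tuple(range(1, sum(sizes) + 1))
    counts = Counter(statistic(b) for b in partitions_with_sizes(ranks, sizes))
    total = sum(counts.values())
    return {value: Fraction(c, total) for value, c in sorted(counts.items())}


def lower_quantile(law, alpha):
    """Smallest value v of the law such that P(X <= v) >= alpha."""
    cumulative = Fraction(0)
    for value, prob in law.items():  # keys were inserted in increasing order
        cumulative += prob
        if cumulative >= alpha:
            return value
    return max(law)


law = exact_law((4, 5))
print(len(law), lower_quantile(law, Fraction(5, 100)))
```

Exact fractions are used for the probabilities so that cumulative sums are free of rounding error; the price is that the enumeration is only feasible for small N.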

Let us look at two tables of quantiles —Table 2 and Table 3—of the grouping distributions , for and , observing that due to the fact that there are only two subgroups, , there is a symmetry, allowing us to reduce the number of cases presented. In this case, a statistical analysis focuses on testing the homogeneity of groups.

Table 2.

Quantiles and for groupings in sets having as elements .

Table 3.

Quantiles and for groupings in sets having as elements .

Using the quantiles in Table 2 and Table 3, the statistical test may be performed in the following way. Consider a set of values, corresponding to the observation of random variables, with a number of elements between 8 and 19, say, for example, N = 13 elements; this set can be decomposed into two groups corresponding to such that as follows: (2,11), (3,10), (4,9), (5,8) and (6,7). Suppose we have to test the homogeneity of a decomposition of the set of 13 values in two groups, for example, the decomposition (4,9), that is, one subgroup with 4 values and the other subgroup with 9 values. In Table 3, we obtain the quantiles = 332 and = 356. We then order the 13 values and consider the ranks of these values. Considering the subset of ranks corresponding to the subgroup with 4 values and the subset of ranks of the subgroup with 9 values, we can compute the value of the statistic defined in Formula (1) and compare it with a quantile, say or . Since a more pronounced within-group homogeneity of the grouping corresponds to a smaller value of the statistic , an observed value of the statistic smaller than one of the chosen quantiles provides an indication of within-group homogeneity.
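The steps just described (rank the pooled values, split the ranks by subgroup, evaluate the statistic, compare with a lower quantile) can be sketched as follows. This is an illustration under stated assumptions: the statistic `within_group_spread` is a placeholder chosen by us and is not claimed to be Formula (1), the function names are hypothetical, and the threshold passed as `quantile` must come from the law of whichever statistic is actually used, so the tabulated values 332 and 356 do not apply to the placeholder.

```python
from itertools import combinations


def global_ranks(values):
    """Ranks 1..N of the values in the pooled sample (values assumed distinct)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        ranks[index] = rank
    return ranks


def within_group_spread(blocks):
    """PLACEHOLDER statistic (assumption, not Formula (1)):
    sum of |r - s| over all pairs of ranks lying in the same block."""
    return sum(abs(r - s) for block in blocks for r, s in combinations(block, 2))


def grouping_test(values, labels, quantile, statistic=within_group_spread):
    """Return the observed statistic and whether it falls below `quantile`."""
    groups = {}
    for rank, label in zip(global_ranks(values), labels):
        groups.setdefault(label, []).append(rank)
    observed = statistic(tuple(tuple(g) for g in groups.values()))
    return observed, observed < quantile


# Made-up data: 4 values in subgroup "a", 9 values in subgroup "b".
values = [1.2, 0.7, 1.9, 2.4, 4.8, 6.0, 5.5, 4.9, 6.3, 5.0, 7.1, 6.8, 5.8]
labels = ["a"] * 4 + ["b"] * 9
print(grouping_test(values, labels, quantile=150))  # 150 is a hypothetical threshold
```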

2.2. Computational Issues for the Groupings Statistic Family

We first observe that the number of ways to partition a set of N objects into p non-empty subsets, without the restriction of having a determined number of elements in each subset as in Definition 1 and Remark 1, is the Stirling number of the second kind (or Stirling partition number), given by

for and (see [], p. 258, for substantial information on this subject). It is clear that for , we have , and so we have exponential growth, with N, of the number of evaluations, for , of the statistic for the family of probability laws when r varies.

Moreover, summing in Formula (3), over all possible p, we obtain the total number of partitions of N, that is, the Bell number, a number that grows super exponentially (see [], pp. 374, 493, 603).
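The growth rates mentioned above can be inspected numerically. The sketch below uses the standard explicit formula S(N, p) = (1/p!) Σ_{j=0}^{p} (−1)^{p−j} C(p, j) j^N for the Stirling numbers of the second kind, which we assume is the content of Formula (3), and obtains the Bell number by summing over p. The function names are ours.

```python
from math import comb, factorial


def stirling2(n, p):
    """Stirling number of the second kind via the inclusion-exclusion formula."""
    total = sum((-1) ** (p - j) * comb(p, j) * j ** n for j in range(p + 1))
    return total // factorial(p)  # the alternating sum equals p! * S(n, p)


def bell(n):
    """Bell number: total number of partitions of a set with n elements."""
    return sum(stirling2(n, p) for p in range(n + 1))


for n in (5, 10, 15, 20):
    print(n, stirling2(n, 2), 2 ** (n - 1) - 1, bell(n))
```

The second and third printed columns coincide, which is the well-known identity S(N, 2) = 2^(N−1) − 1 behind the exponential growth in the two-subgroup case.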

This observation suggests two approaches. A first approach is to have at our disposal a set of asymptotic results allowing us to approximate the laws , for large values of N, by more easily computable distributions. We present a negative result in Section 3 that leads to the proposal of two methodologies to obtain approximations for the grouping laws for large parameter values. A second approach consists of studying the structure of the distributions in order to find regularities allowing for amenable computations; we defer to a later publication some preliminary results with this approach, where we use elementary numerical semigroup facts to study convolutions of grouping laws.

3. On an Asymptotic Result for Some Grouping Distributions

Suppose that we have a random variable X with grouping distribution taking the values with probabilities with . We know that the higher numbers of different configurations of groupings occur when for all . In this case, it is to be expected that both m, that is, the number of different values taken by X—denoted by —and, simultaneously, these values taken by X, will all be large, while, concurrently, the values of the probabilities such that will be uniformly small. In the case of N and p being simultaneously large, the computational burden of computing the discrete finite valued distribution is excessive, and so it would be useful to have an asymptotic result for the X grouping distribution.

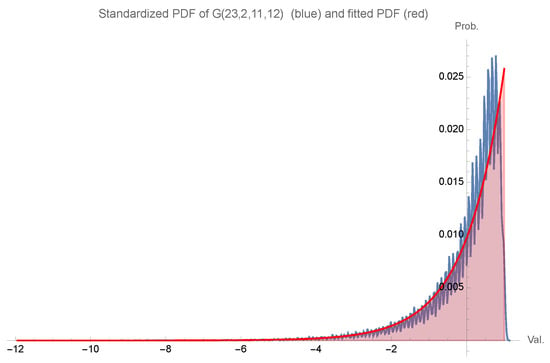

Let us firstly detail some of the challenges we face in the quest for an asymptotic result. We take as an example a random variable X with the grouping distribution ; we first consider the values taken by X and the respective probabilities of occurrence and, from those, we obtain the probability law of the corresponding standardized random variable Y from which we can derive the empirical distribution. The PDF of the empirical distribution of Y is depicted in blue color in Figure 1.

Figure 1.

Empirical distribution PDF of standardized (blue) and fitting of the standardized data of the law of by a normal distribution.

We also fitted the discrete data of the logarithm of , the PDF function of Y, by a function of the form , thus obtaining, upon the exponentiation of and the normalization of , a fitting by a normally distributed random variable of the standardized data of X. The PDF of the normal distribution fitting the PDF of the empirical distribution of Y is depicted in red color also in Figure 1. We observe that the PDF in blue is more akin to a trajectory of a stochastic process having as a mean the PDF in red. The lower irregularity in the left tail of the blue PDF is why, in our perspective, we can only expect an asymptotic normality result that can give acceptable results only for the lower quantiles of the law .

3.1. Some Auxiliary Results on Moment Generating Functions

We first prove an auxiliary result (Theorem 3 ahead) that shows that if we have some control over the behavior of a sequence of moment generating functions, then we also have control over the sequence of the corresponding distribution functions. For that purpose, in the sequel, we consider the following set of main hypotheses.

We have probability laws and with respective densities and and the correspondent moment generating functions (MGFs) defined by

Let us suppose that the following holds:

- The densities and have exponential decay that isThis is equivalent to the fact that the interior of the sets and —that is, respectively, and —are non-empty sets (see Proposition 5.1 in []). The sets and are, in fact, intervals.

- Also as a consequence, we have that and are holomorphic (analytic) in a strip of the complex plane defined by (see Proposition 5.3 in []).

- For every in the complex plane strip defined by , we have that

- There exists an integrable function G in the variable such that, for every in the complex plane strip defined by , we have

- In order to address the inversion formula, we recall that, by the definition in Formula (4), we can extend the MGF to a strip in the complex plane, with defined, for , by since in the right-hand side we always have an integrable function. Formula (6) shows that the extension of the MGF to the strip defined by can be considered a family of Fourier transforms, namely, the Fourier transforms of the family . Now by the standard results on the inversion of the Fourier transform—or the characteristic function—we have, for instance, that if for all the function is integrable, then by the inversion theorem (see [], p. 185 or [], p. 126), we obtain that almost surely in x, that is, with , where the right-hand expression is the usual one for the inversion of the Laplace transform (see [], pp. 60–66), with the integral being taken in the principal value sense. We have similar expressions for all . The inversion of the MGF in Formula (7) may hold with a more general hypothesis. It must be noticed that by reason of the Cauchy theorem on contour integrals, the integral in the right-hand side of Formula (7) does not depend on (see [], p. 226).

We secondly recall two important results needed in the sequel allowing the determination of MGF. The first guarantees the existence of a limit distribution from the convergence of a sequence of moments (see [], p. 145).

Theorem 1

(Weak convergence from convergence of moments). Let be a sequence of probability measures with all elements of the sequence admitting a finite sequence of raw moments such that, for all , there exists such that

and moreover for some ,

Then, there exists a probability measure μ having as raw moments the sequence and such that the sequence converges weakly to μ.

Proof.

Due to the importance of this result, we present a proof that differs from the proof suggested in ([], p. 145). The existence of having as its moment sequence is a consequence of Hamburger’s moment theorem. In fact, we consider an arbitrary sequence of real numbers and we observe that for all ,

thus showing that the sequence is positive semidefinite. By Hamburger’s theorem, there exists a positive measure over the reals, having the sequence as its moment sequence (see [], p. 63). Moreover, the measure is a probability measure since

using the fact that all measures are probability measures. Now the condition in Formula (8) shows that , that is, the MGF of the probability measure is defined in an interval around zero I with a non-empty interior. As a consequence, for t in a compact interval around zero with a non-empty interior , we have that

since the convergence of the series of moments defining is uniform in J by reason of the condition in Formula (8). Now, by a well-known result (see again [], p. 145), since the sequence of moment generating functions converges to for , which is a non-degenerate interval around zero, then the sequence converges weakly to , and the theorem is proved. □

The next result, a companion of the previous one, gives Carleman’s condition for the uniqueness of the limit distribution (see [], p. 123, for a proof).

Theorem 2

(The Carleman sufficient condition for the uniqueness of the solution of the moment problem). Under the conditions and notations of Theorem 1, if we have that

then there is at most one probability measure admitting as its moment sequence.

The next theorem considers the case of probability laws admitting densities with respect to the Lebesgue measure over and gives a bound on the Kolmogorov distance in terms of an integral of the difference of the MGF.

Theorem 3

(Convergence on the Kolmogorov distance from the convergence of the moment generating functions). Suppose that the hypotheses above are verified. Then, for an arbitrary such that , there exists a constant such that, with and ,

Formula (11) shows that the sequence of distribution functions associated with the sequence of probability measures converges in the Kolmogorov distance to the distribution function of the probability measure μ. This happens provided that the sequence of the moment generating functions of the sequence of probability measures converges almost surely to the moment generating function of the probability measure μ, and the difference of the MGF is bounded by an integrable function in the imaginary variable.

Proof.

We take the inversion formula in (7), recalling that the definition is taken in the principal value sense with . We also recall that the inversion formula does not depend on . We then have, for and ,

And knowing that is symmetric around zero, for and taking ,

Since, by Formula (5), the MGF difference is bounded by the integrable function G, we have that for all

and so, for a constant that depends on an arbitrary such that ,

Now, since we have that

by the hypotheses above and the Lebesgue dominated convergence theorem, the final statement of the theorem is proved. □

Remark 4

(On the application of Theorem 3). We observe that, by an application of the Cauchy theorem, Formula (11) can be rewritten as

that is, considering in the integral. Moreover, the bound in Formula (5) is verified if, for instance, there is such that,

and then the integral on the right-hand side of Formula (12) is finite. The bound in Formula (13) occurs for normally distributed random variables since, for instance, if , then .

3.2. A Negative Asymptotic Result on the Limit Law of Grouping Distributions

We next formulate a negative result that shows that, from a set of hypotheses that we can derive from the observation of the examples analyzed, there is not an asymptotic probability distribution for the grouping laws. Firstly, as a motivation, we report some empirical observations that are relevant for the set of hypotheses underlying the stated result.

Example 1

(Observations of a particular behavior of ). For the case of with and the specific pairs that give the maximum of the number of configurations for the partition in two set groups, we compute the ratios of the moments of the standardized random variables :

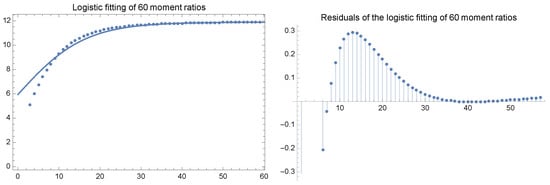

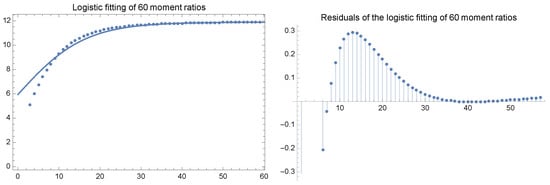

We fit logistic functions of the form given in Formula (14), to the cases of for ,

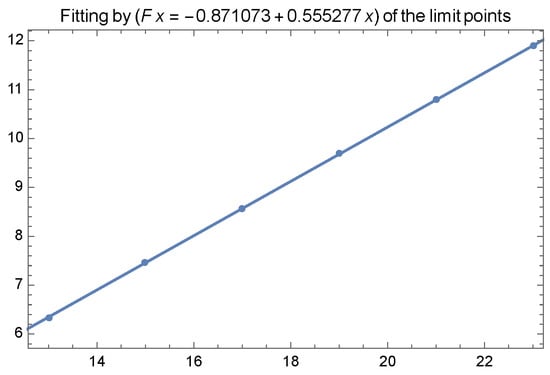

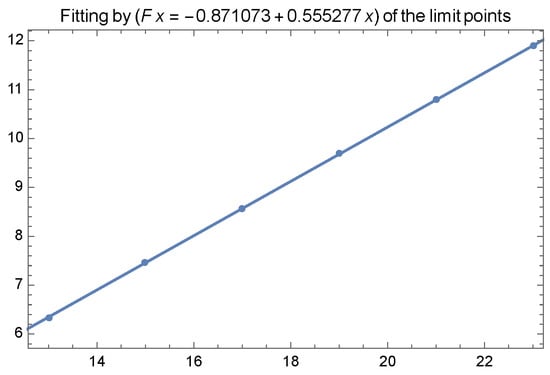

and we obtain the results in Table 4 that show that as seen, also, in Figure 2.

Table 4.

Limit points for moment ratio logistic fittings of for .

Figure 2.

Linear fitting of the limit points of the logistic fittings for considering .

The logistic fitting of the first absolute values of moment ratios for , and the corresponding residuals of the fitting, can be observed, for instance, in Figure 3. The fittings, obtained for small parameter values, give important information for the larger values of the moments, and this information is extremely relevant for the theorem on the asymptotic behavior.

Figure 3.

Logistic fitting of the first absolute values of moment ratios for (left) and correspondent residuals (right).

As already pointed out, we observe that the variation of L with N represented in Figure 2 corresponds to a linear fitting with L, verifying:

The observations reported in Example 1 are reflected in Hypothesis B (by taking into consideration the results in Table 4) and also in Hypothesis C, both formulated in the following. We stress that Hypothesis D is also a reflection of observed facts, although these facts are not explicitly reported in this paper. It would be interesting to have a formal proof for all possible cases of the law of .

Hypothesis 1

(Observed hypothesis on for large N and p). Consider to be the sequence of standardized versions of , a sequence of random variables with grouping probability laws denoted by verifying the following hypotheses:

- A.

- .

- B.

- For all , there exists a sequence such that, with , for we have

- C.

- For , there exists such that

- D.

- We finally suppose that for all m and ,

These hypotheses are verified in the cases analyzed and referred to in Example 1.

If we assume the set of conditions in Hypothesis 1, we have the following negative result.

Theorem 4

(On the asymptotic behavior of for large N and p). If we have that

then there is a unique generalized function —that is not a probability measure—giving rise to a moment generating function defined by

for t in a non-empty interval centered at zero.

Proof.

We will show that a contradiction comes from the hypothesis in Formula (16). Recall that since the random variables are standardized and since we are interested in the asymptotic behavior, we consider that, by Hypothesis B, we have for and ,

Now, using the hypothesis in Formula (16) and considering for a constant depending on m such that

that is, originated from the product limit in Formula (18), we have that

by the alternating signs of the moments in Formula (15). Since we have that, for all ,

by Theorem 1, there should exist a probability law such that the sequence of laws given by converges weakly to the law . Being so, if we have a random variable with this law, that is , the raw moments of this random variable are given by

Moreover, since the condition in Formula (10) of Theorem 2 is easily seen to be verified, there should exist only one probability distribution with this moment sequence. As a consequence, the MGF of the probability law should be given by

We are now going to show that for all c, the objects giving rise to the sequence of moments in Formula (19) (and the consequent moment generating function in Formula (17)) are not probability measures but, instead, distributions of finite order 1 and 2. These distributions of finite order 1 and 2 are Schwartz generalized functions given by derivatives of measures for which the MGF is also well defined (see again [], p. 226). Consider to be the Dirac probability measure placed in a, and and as its first and second derivatives. We have the following two cases.

- I

- If , we have thatthis case encompasses the extreme case , where the number of groups converges to the number of elements to group, and so asymptotically, all the groups have only one element.

- II

- As a consequence, if , we have thata case which encompasses the case where the number of groups stays fixed while the number of elements to group goes to infinity and so asymptotically at least one of the subgroups has an unbounded number of elements.

- We observe that in both cases, the generalized function presented—such that its MGF is given by Formula (17)—is unique. If , we want to recover Formula (17) by the rules of calculus of the generalized functions. We notice that , given by Formula (21), is a generalized function with compact support and so, its definition domain is the test space of indefinitely differentiable functions over . Recall that if the generalized function with compact support K is given by a locally integrable function f with support K, we have that for every indefinitely differentiable function ,

We stress that the rules of calculus with general generalized functions may be seen as extensions of this particular case. We notice that the variable x corresponds to the integration variable. Since is an indefinitely differentiable function of the variable x, with t being a parameter, we have that

a result in accordance with Formula (20). If , then Formula (17) is , and we then have

We note that the uniqueness is a consequence of a general property of the Laplace transforms of generalized functions (see [], p. 80). We can conclude the following. Assuming Hypothesis 1 and, in particular, that is equal to c, entails a contradiction between the consequence of Theorem 1 and the observations just made above, namely, that the object having as moments the expressions in Formula (19) is not a measure but instead a generalized function of order greater than one. □

Remark 5

(On an interpretation of the result in Theorem 4). We thus have that, although the set of conditions in Hypothesis 1 may be a reasonable extrapolation from the observations (in the cases where computations are feasible), these conditions entail a contradiction. As a consequence of this contradiction, these conditions are not tenable as a general hypothesis on grouping laws. A possible asymptotic result should rely on a different set of conditions.

Although, for the moment, we do not have an asymptotic result, we may nevertheless take advantage of approximate laws. The next remark shows several examples of such approximations.

Remark 6

(On the error committed by using the normal distribution quantiles). The use of the grouping distribution allows us to test the significance of a grouping by considering the lower quantiles of the distribution. In Table 5, we present the quantiles for for , the corresponding quantiles obtained by inverting the distribution function of the normal law with the mean and variance of the corresponding , and the relative error committed by taking the normal quantiles instead of the proper distribution quantiles.

Table 5.

Quantiles and for for , quantiles and from the normal distribution and corresponding relative errors and .

We observe that the error for the quantile decreases with increasing , as expected, while the error for the quantile is always bounded by 0.2% and oscillates, which may be revealing a slower rate of convergence. A caveat for the use of the quantiles deduced from the normal approximation is that their computation requires the computation of the mean and standard deviation of the law and, for the moment, this can only be achieved exactly by the computation of the values and respective probabilities of the distribution.
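The comparison reported in Remark 6 can be sketched for any finite discrete law. The code below is a minimal illustration and not the computation behind Table 5: it uses a toy stand-in law (the sum of two fair dice) instead of a grouping distribution, and the function names are ours. It computes the exact lower quantile, the quantile of the normal law with the same mean and standard deviation, and the relative error between the two.

```python
from fractions import Fraction
from math import sqrt
from statistics import NormalDist


def mean_and_std(law):
    """Mean and standard deviation of a finite law given as {value: probability}."""
    mean = sum(v * p for v, p in law.items())
    variance = sum((v - mean) ** 2 * p for v, p in law.items())
    return float(mean), sqrt(float(variance))


def lower_quantile(law, alpha):
    """Smallest value v of the law such that P(X <= v) >= alpha."""
    cumulative = 0
    for value in sorted(law):
        cumulative += law[value]
        if cumulative >= alpha:
            return value
    return max(law)


def normal_quantile_error(law, alpha):
    """Exact quantile, normal-approximation quantile, and relative error."""
    mu, sigma = mean_and_std(law)
    exact = lower_quantile(law, alpha)
    approx = NormalDist(mu, sigma).inv_cdf(alpha)
    return exact, approx, abs(approx - exact) / abs(exact)


# Toy stand-in law (assumption for illustration): sum of two fair dice.
toy_law = {s: Fraction(6 - abs(s - 7), 36) for s in range(2, 13)}
print(normal_quantile_error(toy_law, 0.05))
```

As noted in the remark, the mean and standard deviation are taken from the exact law here; for large parameter values they would have to be estimated by other means.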

3.3. Determining Approximate Laws for Grouping Distributions with Large Parameter Values

In the following, we take into consideration the approximate distributions presented in Remark 6 and a consequence of Theorem 4, namely, that it is irrelevant to assume having in view an asymptotic result. We will consider, for practical purposes, that we know a sequence of approximate values for the moments of for large N and p, and we will provide approximations for the correspondent laws. We stress that an adequate sequence of approximate values for the moments can be determined by a simulation procedure and, being so, does not require the determination of the law of the grouping distribution. Next, we briefly describe methodologies—see Methodologies 1 and 2—to achieve approximations to the unknown laws.
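The simulation procedure alluded to above can be sketched as follows: draw uniformly random partitions with the prescribed subgroup sizes by shuffling the ranks and cutting them into consecutive blocks, evaluate the statistic, and estimate the raw moments of the standardized values. This is a hedged illustration; `within_group_spread` is a placeholder statistic of our own choosing and not Formula (1), and the sizes, number of draws and seed are arbitrary.

```python
import random
from itertools import combinations


def within_group_spread(blocks):
    """PLACEHOLDER statistic (assumption, not Formula (1)):
    sum of |r - s| over all pairs of ranks lying in the same block."""
    return sum(abs(r - s) for block in blocks for r, s in combinations(block, 2))


def simulate_statistic(sizes, n_draws, rng, statistic=within_group_spread):
    """Values of `statistic` on uniformly random partitions with the given sizes."""
    ranks = list(range(1, sum(sizes) + 1))
    draws = []
    for _ in range(n_draws):
        rng.shuffle(ranks)  # a uniform permutation induces a uniform partition
        blocks, start = [], 0
        for size in sizes:
            blocks.append(tuple(ranks[start:start + size]))
            start += size
        draws.append(statistic(blocks))
    return draws


def standardized_moments(draws, max_order):
    """Empirical raw moments of orders 1..max_order of the standardized draws."""
    n = len(draws)
    mean = sum(draws) / n
    std = (sum((x - mean) ** 2 for x in draws) / n) ** 0.5
    z = [(x - mean) / std for x in draws]
    return [sum(v ** k for v in z) / n for k in range(1, max_order + 1)]


rng = random.Random(0)  # fixed seed so the run is reproducible
draws = simulate_statistic((10, 10, 10), 2000, rng)
print(standardized_moments(draws, 6))
```

With three subgroups of 10 elements the number of partitions is of the order of 10^12, so full enumeration is out of reach while the simulation runs in moments; the accuracy of the higher-order moment estimates degrades quickly and should be checked by increasing the number of draws.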

From now on, we consider the hypothesis formulated in Remark 1 and assume that, for the random variable , we have approximations to the raw moments of this random variable given by

with . We now recall an important result, instrumental for a choice between one of two methodologies aiming at the determination of approximate laws for for large . The result allows the possible determination of a finite support for a probability measure (see [], p. 64).

Theorem 5

(A condition for a representing measure to have a finite support). Consider the Hankel matrix of order —presented in Formula (23)—of a positive semidefinite sequence and its determinant denoted by :

Then, the following properties are equivalent:

- I. The sequence is a moment sequence represented by a measure supported on n points.

- II. We have that
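The finite-support criterion can be illustrated on a small example. The sketch below computes, with exact rational arithmetic, the Hankel determinants D_k = det (s_{i+j})_{i,j=0..k} of the moment sequence of a law supported on three points; the indexing convention is our assumption and may differ from the one used in Formula (23). For a law supported on exactly n points, the determinants are positive for k < n and vanish from k = n onwards.

```python
from fractions import Fraction


def raw_moments(values, probs, count):
    """Raw moments s_0, ..., s_{count-1} of a finite discrete law."""
    return [sum(p * v ** k for v, p in zip(values, probs)) for k in range(count)]


def determinant(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in matrix]
    n, det = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap changes the sign of the determinant
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det


def hankel_determinants(moments, max_order):
    """D_k for k = 0..max_order; needs moments s_0, ..., s_{2 * max_order}."""
    return [
        determinant([[moments[i + j] for j in range(k + 1)] for i in range(k + 1)])
        for k in range(max_order + 1)
    ]


values = (0, 1, 3)
probs = (Fraction(1, 5), Fraction(1, 2), Fraction(3, 10))
dets = hankel_determinants(raw_moments(values, probs, 9), 4)
print(dets)  # three positive determinants followed by zeros
```

With approximate moments the determinants will not vanish exactly, so in practice a tolerance has to be chosen, which is one of the technical difficulties of the approach.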

Now, we consider the sequence of raw moments of the random variable . It is a positive semidefinite sequence as shown in Formula (9). In principle, we only know approximations to this sequence given by Formula (22) and we have approximate values of the corresponding Hankel matrices and of their determinants; for instance, , the approximate Hankel matrix of order 6, is given by

with being a sequence of variable correcting terms issued from Formula (18) and given by

We now propose a two-way methodology to fit a probability distribution to the approximate sequence of moments given in Formula (22) for large N and p in the grouping law . The following methodological description may be taken as a formulation of an open technical implementation problem.

Methodology 1

(Fitting a discrete law with finite support to the approximated moment sequence). Suppose that, for the sequence of the determinants of the approximated Hankel matrices , the condition given in Formula (24) is verified for a given . We consider the problem of determining a discrete law specified by the values it takes, given by the vector , and by the corresponding vector of probabilities , with moments given by the vector . This problem is tantamount to solving the matrix equation given by , with being the Vandermonde matrix associated with the vector A, that is, the matrix equation given by

in the unknown vectors A and P, given the vector M. A natural assumption for the matrix problem in Formula (25) is that the components of the vector A—that is, the values taken by the random variable with the unknown law—are all distinct; this assumption entails that the determinant of the Vandermonde matrix is non-zero and so the matrix has an inverse.
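As an illustration of the matrix problem in Formula (25), the following is a minimal sketch of a classical Prony-type procedure; it is not the implementation used in this work. It assumes that the first 2n raw moments are known exactly and that the n atoms are distinct: the atoms are then the roots of a polynomial whose coefficients solve a Hankel linear system, and the probabilities follow from the Vandermonde system. The function name `fit_discrete_law` and the toy three-point law are hypothetical, and with merely approximate moments both linear systems may be badly conditioned.

```python
import numpy as np

def fit_discrete_law(moments, n):
    """Recover n atoms and their probabilities from raw moments m_0..m_{2n-1}.

    Hypothetical helper: a Prony-type sketch, assuming exact moments
    and distinct atoms.
    """
    m = np.asarray(moments, dtype=float)
    # Hankel system: sum_j c_j * m_{i+j} = -m_{i+n}, for i = 0..n-1
    H = np.array([[m[i + j] for j in range(n)] for i in range(n)])
    c = np.linalg.solve(H, -m[n:2 * n])
    # The atoms are the roots of x^n + c_{n-1} x^{n-1} + ... + c_0
    atoms = np.sort(np.roots(np.concatenate(([1.0], c[::-1]))).real)
    # Vandermonde system: sum_i atoms_i^k * probs_i = m_k, for k = 0..n-1
    V = np.vander(atoms, N=n, increasing=True).T
    probs = np.linalg.solve(V, m[:n])
    return atoms, probs

# Toy check on an invented three-point law (illustration only)
true_atoms = np.array([1.0, 2.0, 4.0])
true_probs = np.array([0.2, 0.5, 0.3])
toy_moments = [float(np.sum(true_probs * true_atoms**k)) for k in range(6)]
atoms, probs = fit_discrete_law(toy_moments, 3)
print(atoms, probs)
```

Solving for the atoms first and the probabilities second splits the nonlinear problem into two linear solves and one root-finding step, which is the reason for choosing this route in the sketch.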

It may happen that the condition in Formula (24) is not verified for the determinants of the approximated Hankel matrices . In that case we have, as an alternative, to look for an absolutely continuous approximation law. This approach is detailed in Methodology 2. Again, we stress that the following methodological description may be taken as a formulation of an open technical implementation problem.
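The choice between the two methodologies rests on the determinants of the approximated Hankel matrices. A minimal sketch of how such determinants could be computed from a list of raw moments is given below. The function name `hankel_determinants` and the toy law are hypothetical, and deciding that a floating-point determinant vanishes requires a tolerance chosen by the user, which is an assumption and not something fixed in this work.

```python
import numpy as np

def hankel_determinants(moments):
    """Return [D_0, D_1, ...] with D_k = det of the Hankel matrix (m_{i+j}), 0 <= i, j <= k.

    Hypothetical helper; uses as many orders as the supplied moments allow.
    """
    m = np.asarray(moments, dtype=float)
    k_max = (len(m) - 1) // 2
    dets = []
    for k in range(k_max + 1):
        H = np.array([[m[i + j] for j in range(k + 1)] for i in range(k + 1)])
        dets.append(float(np.linalg.det(H)))
    return dets

# Toy law on the three points 0, 1, 2 with probabilities 1/4, 1/2, 1/4
toy_moments = [1.0] + [0.5 + 0.25 * 2**k for k in range(1, 9)]
for order, d in enumerate(hankel_determinants(toy_moments)):
    print(order, d)
```

For a law supported on three points, the Hankel matrices built from four or more rows are singular, so in this toy case the printed determinants drop to numerical zero from index 3 onwards.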

Methodology 2

(Fitting a continuous law to the approximated moment sequence). We now suppose that for the sequence of the determinants of the approximated Hankel matrices , we observe that the condition given in Formula (24) is not verified. Then, having in view Proposition 3.11 in [] (p. 65), it is natural to look for an absolutely continuous probability distribution. A first natural choice is a mixture with weights in the interval of Gaussian laws for . We recall that the moments of such a law are given by

In order to determine the parameters of this mixture law, we can consider solving the matrix equation given by

in the unknown vectors , and W, given the known vector of approximate moments on the right-hand side of the matrix equation. Let us suppose that is an absolutely continuous probability law that is a solution to the matrix equation in Formula (26). This means that , the sequence of the first moments of the mixture law, is approximately equal to the sequence of moments in the given vector M. Let us consider Formula (12) in order to estimate the Kolmogorov distance between , the distribution function of the random variable , and the distribution function of the probability law . We observe that, since is a finite mixture of Gaussian laws, the hypothesis in Formula (13) is verified. As a consequence, for all , there exist such that

If, as a consequence of the numerical method used to solve the matrix equation in Formula (26), we can guarantee that

then the sequence of laws will approach , that is, the law of , in the Kolmogorov distance.
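To make the moment-matching step of Methodology 2 concrete, the sketch below computes the raw moments of a finite Gaussian mixture and the largest discrepancy with a target moment vector. It uses the standard recurrence for Gaussian raw moments, m_k = mu m_{k-1} + (k-1) sigma^2 m_{k-2}. The function names and the toy parameters are hypothetical, and no particular solver for the matrix equation is implied; the discrepancy function is only the quantity that such a solver would try to make small.

```python
import numpy as np

def gaussian_raw_moments(mu, sigma, order):
    """Raw moments E[X^k], k = 0..order, of a Gaussian law with mean mu and std sigma."""
    m = [1.0, mu]
    for k in range(2, order + 1):
        m.append(mu * m[k - 1] + (k - 1) * sigma**2 * m[k - 2])
    return np.array(m[: order + 1])

def mixture_raw_moments(weights, mus, sigmas, order):
    """Raw moments of the mixture: weighted sum of the component moments."""
    w = np.asarray(weights, dtype=float)
    comp = np.array([gaussian_raw_moments(mu, s, order) for mu, s in zip(mus, sigmas)])
    return w @ comp

def moment_mismatch(weights, mus, sigmas, target):
    """Largest absolute gap between the mixture moments and a target moment vector."""
    target = np.asarray(target, dtype=float)
    fitted = mixture_raw_moments(weights, mus, sigmas, len(target) - 1)
    return float(np.max(np.abs(fitted - target)))

# Toy example: equal-weight mixture of two unit-variance Gaussians centred at -1 and 1
print(mixture_raw_moments([0.5, 0.5], [-1.0, 1.0], [1.0, 1.0], 4))
```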

Remark 7

(Further studies in asymptotic results). We intend to further develop Methodologies 1 and 2 in future work, starting with a study of the laws in Example 1. It is possible that an asymptotic result exists under a different, more adequate set of hypotheses; if that is the case, it may be useful to apply established techniques for approximating the limit distribution by finite-dimensional ones (see [,,]).

4. An Application of the Grouping Statistic to a Clustering Analysis

We present in the following an application of the grouping statistic to cocoa plant data from an agricultural station in Ghana, already analyzed in []. The data consist of 144 five-component vectors, with the first component indicating the plant variety, the second component indicating the type of soil, and the remaining three components giving the observed measurements of the variables plant height, stem diameter, and dry matter; these variables allow the assessment of the performance of the plantation, linking it to the adequacy between soil and plant variety. The twelve plant varieties are listed in Table A1; the descriptions of the four soils, which give the generic location of each soil in Ghana, are in Table A2. A summary of the main chemical characteristics of the four soils is given in Table A3.

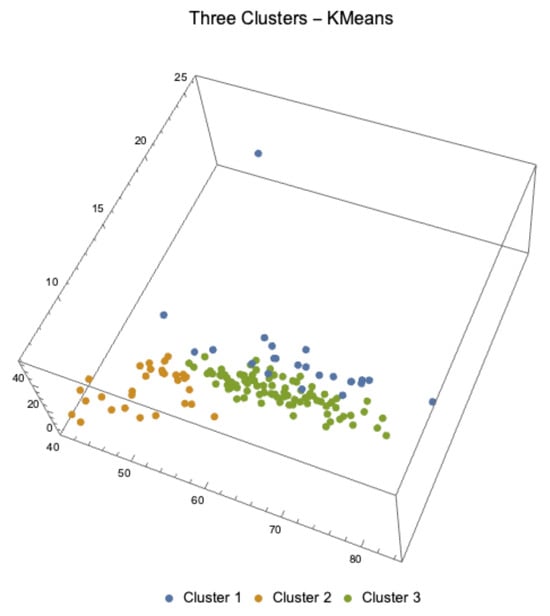

Our purpose with the following analysis is to use an adequate grouping statistic to extract information from the data. In the previous approach, presented in [], the grouping statistic was applied to a natural grouping of the varieties that took into account their genetic ancestry. In this work, we first look for a grouping obtained by a clustering technique, namely, the KMeans clustering methodology, applied directly to the set of values of the three variables. The proposed analysis method goes as follows:

1. We first determine the data grouping in three clusters using the KMeans clustering classification method. A good introduction to classification techniques can be read in []. The results of the classification are presented in Table 6, and a graphical representation of the 3-dimensional clusters of observations is presented in Figure 4.

Table 6. Subsets of varieties present in each cluster according to the 3 variables and 4 soils.

Figure 4. Graphical representation of the 3 KMeans defined clusters in the three variables plant height, stem diameter and dry matter.

2. As a consequence of the classification, we then use the grouping statistics and to assess for each one of the four soils the quality of the grouping. The values obtained are given in Table 7.

Table 7. Values of the grouping statistic according to the 3 variables and 4 soils.

3. We then present some conclusions reached by the use of the statistics and to the results of the KMeans grouping.
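The first step of the analysis relies on KMeans clustering. For readers unfamiliar with it, a minimal sketch of the Lloyd iteration is given below. It is not the software used to produce Table 6; the function name `kmeans_lloyd` and the explicit `init_idx` argument are hypothetical, and the toy data are invented for illustration only.

```python
import numpy as np

def kmeans_lloyd(points, init_idx, n_iter=100):
    """Plain Lloyd iteration; init_idx selects the rows used as initial centers."""
    X = np.asarray(points, dtype=float)
    centers = X[list(init_idx)].copy()
    k = len(centers)
    for _ in range(n_iter):
        # Squared Euclidean distance from every point to every center
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # Move each center to the mean of its cluster (keep it if the cluster is empty)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Final assignment with the last computed centers
    dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1), centers

# Invented three-variable toy data with three well-separated groups
toy = np.array([[0.0, 0.1, 0.0], [0.2, 0.0, 0.1],
                [5.0, 5.1, 5.0], [5.2, 5.0, 4.9],
                [9.0, 0.0, 9.1], [9.1, 0.2, 9.0]])
labels, centers = kmeans_lloyd(toy, init_idx=[0, 2, 4])
print(labels)
```

The initial centers are passed explicitly so that the sketch is deterministic; library implementations usually draw several random initializations, since the Lloyd iteration may stop at a local optimum.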

Remark 8

(Analysis of the KMeans grouping in Table 6). It is relevant to notice that for all the three variables—plant height, stem diameter, and dry matter—the three clusters contain the same varieties. With the exception of Soil 2, the set of varieties is always partitioned into two groups—each group in its own cluster—to wit, the set and the complement of this set with respect to , that is, . The exception of Soil 2 is that the set of varieties has variety in a cluster that is empty in the case of the other soils. Since the grouping statistic is applied to ranks of the varieties, obtained from the sums of all the observations in each variety, we discard the observation variety in cluster 1 for Soil 2. For Soil 2, the alternative of considering, instead of , the grouping statistic —corresponding to having one more rank—does not change the final result since the rank value corresponding to the variable is always equal to 13.
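Remark 8 recalls that the grouping statistic operates on ranks of the varieties obtained from the sums of all the observations in each variety. A minimal sketch of this ranking step follows. The function name `ranks_from_sums`, the toy labels, and the convention that rank 1 goes to the largest sum are assumptions made for illustration; ties are broken by label order, which the text does not specify.

```python
import numpy as np

def ranks_from_sums(values, variety_labels):
    """Rank varieties by the sum of their observations (rank 1 = largest sum, an assumption)."""
    labels = np.asarray(variety_labels)
    values = np.asarray(values, dtype=float)
    varieties = np.unique(labels)
    sums = np.array([values[labels == v].sum() for v in varieties])
    # Stable sort on the negated sums: larger sums come first
    order = np.argsort(-sums, kind="stable")
    ranks = np.empty(len(varieties), dtype=int)
    ranks[order] = np.arange(1, len(varieties) + 1)
    return dict(zip(varieties.tolist(), ranks.tolist()))

# Invented observations for three hypothetical varieties "a", "b", "c"
toy_ranks = ranks_from_sums([1, 2, 5, 5, 3, 1], ["a", "a", "b", "b", "c", "c"])
print(toy_ranks)
```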

Remark 9

(Analysis of the statistic results in Table 7). Let us consider a statistical test using the statistical distribution , where the null hypothesis is given as follows: the grouping produces inhomogeneous groups of varieties. The rejection region for this test consists of the values of the statistic that correspond to sufficiently rank-homogeneous groups. If the within-group ranks are homogeneous, the values of the statistic, given by Formula (1), are smaller; hence, given that the 5% quantile for the distribution is , we reject the null hypothesis that the group is not homogeneous for all three variables, that is, plant height, stem diameter, and dry matter, in Soil 3. This allows us to conclude that Soil 3 provides homogeneous production characteristics as given by the values of the three variables. In order to have a more detailed look at the particular behavior of Soil 3, we present in Table 8 the ranks of the varieties in each cluster for all three variables.

Table 8.

Subsets of ranks of varieties present in each cluster according to the 3 variables and 4 soils.
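The decision rule of Remark 9 rejects the null hypothesis of inhomogeneity for small values of the statistic. One possible way to express this as a lower-tail test, given the exact probability mass function of a grouping law, is sketched below. The function names and the toy law are hypothetical and do not reproduce any distribution tabulated in this work.

```python
def lower_tail_p_value(support, probs, observed):
    """P(S <= observed) for a discrete law given by its support and probabilities."""
    return sum(p for s, p in zip(support, probs) if s <= observed)

def reject_inhomogeneity(support, probs, observed, level=0.05):
    """Reject the null hypothesis (inhomogeneous groups) when the lower tail is small."""
    return lower_tail_p_value(support, probs, observed) <= level

# Invented four-point law, for illustration only
toy_support = [0, 1, 2, 3]
toy_probs = [0.02, 0.08, 0.40, 0.50]
print(reject_inhomogeneity(toy_support, toy_probs, observed=0))
print(reject_inhomogeneity(toy_support, toy_probs, observed=1))
```

For a discrete law, rejecting when the lower-tail probability is at most the level is the same as comparing the observed value with a lower quantile, up to the convention used to define quantiles at jump points.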

Remark 10

(Analysis of the ranks of the varieties per clusters in Table 8). It is clear from the observation of the ranks (in red color) that the varieties in cluster 3 for Soil 3——perform homogeneously worse than the remaining varieties of the set since the ranks are, in general, larger, the exception being variety for the variable stem diameter. Varieties have the second characteristic string CRG0314 in common—see Table A1 in the Appendix A—and so this may be an indication of the inadequacy of this ancestor characteristic of varieties to Soil 3.

5. Discussion

As stated in the first line of the introduction, a particular example of a grouping distribution was used in [], initially to allow the application of a well-known statistical methodology and, secondly, to extract within-group homogeneity information from the grouping. In the present work, we studied the whole family of grouping distributions. Having observed severe computational challenges in the computation of the laws of grouping distributions for large parameter values, we explored the possibility of an asymptotic result that would allow the determination of an approximate distribution for large parameter values. In order to obtain such an asymptotic result, we assumed some hypotheses heuristically deduced from the observation of the behavior of the law for small parameter values. It came as a surprise that, under such hypotheses, the asymptotic limit is a generalized function and not a measure that may be considered a probability distribution. Left with the problem of obtaining approximate distributions for the laws of grouping distributions in the case of large parameter values, we proposed two methodologies that must be further studied since they present difficult technical implementation problems. In the context of Big Data, the grouping of variables and the determination of potential within-group homogeneities may represent a useful tool.

In this work, we deepened the study of some grouping distributions that find an important usage in testing the internal homogeneity, that is, the within-group homogeneity, of subgroups of a given group. An example of such usage with real data was presented, and the comparison between the use of grouping statistics and the use of classic ANOVA highlighted the power of the grouping distributions to bring forward new information from the data. We pointed out the computational challenges arising from large values of the cardinal of the set of values to be grouped and of the cardinal of the set of subgroups to consider, which are the main parameters of the grouping distribution, and we aimed at a better understanding of the properties of the grouping distributions for large parameter values in several ways. In one of those ways, we proved that there is no asymptotic result giving a limit probability law when the main parameters tend to infinity under a set of hypotheses that were observed in a significant set of examples. To circumvent this negative result, we proposed, as open implementation problems, the further study of two methodologies aimed at providing distribution approximations for large values of the main parameters.

6. Conclusions and Future Work

In this work, we formally defined and studied a family of discrete probability distributions, already introduced in a previous applied work, that may be used to assess the within-group homogeneity of a partition of observations into groups according to some criterion; examples of such criteria are the variables sharing a common genetic ancestor, and the values of the variables being grouped according to some clustering method such as KMeans. For the smaller values of the parameters, for which the computational effort is manageable, we provided tables of quantiles that allow a test to be performed; the proposed test has as its null hypothesis that the group is not homogeneous. We also further developed a moment generating function result with a twofold purpose: firstly, to analyze the possibility of an asymptotic result for the grouping distributions for large values of the parameters under some natural hypotheses, and secondly, to assess the quality of a continuous approximation of the grouping distribution by a mixture of normal distributions. We presented an analysis of cocoa breeding experiment data, using an adequate grouping distribution, and we were able to extract information on the homogeneous behavior of cocoa plant production variables in one specific type of soil. By further analyzing the behavior of the grouping, we were able to draw conclusions on the possible inadequacy of two varieties in that specific type of soil.

There are at least three directions of development in the study of the grouping distributions. The first avenue is the development of the two methodologies proposed to provide distribution approximations for large values of the main parameters; this development may require an ingenious use of some optimization techniques. The second avenue is the investigation of the properties of the numerical semigroups associated with the sequence of convolutions of the grouping laws. The third avenue is the quest for asymptotic results under different sets of hypotheses.

Author Contributions

Conceptualization, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; methodology, M.L.E.; software, M.L.E.; validation, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; formal analysis, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; investigation, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; resources, M.L.E.; data curation, M.L.E.; writing—original draft preparation, M.L.E.; writing—review and editing, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; visualization, M.L.E., N.P.K., C.N., K.O.-A. and P.P.M.; supervision, M.L.E.; project administration, M.L.E.; funding acquisition, M.L.E. and C.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by national resources through the FCT, Fundação para a Ciência e a Tecnologia, I.P., under the scope of the project UIDB/00297/2020 (https://doi.org/10.54499/UIDB/00297/2020, accessed on Monday 30 September 2024) and project UIDP/00297/2020 (https://doi.org/10.54499/UIDP/00297/2020, accessed on Monday 30 September 2024) (Centre for Mathematics and Applications, University Nova de Lisboa) and also through project UIDB/00212/2020 (https://doi.org/10.54499/UIDB/00212/2020, accessed on Thursday 13 September 2025) and project UIDP/00212/2020 (https://doi.org/10.54499/UIDP/00212/2020, accessed on Thursday 13 September 2024) (Centre for Mathematics and Applications, Universidade da Beira Interior). We thank Jerome Agbesi Dogbatse of the Cocoa Research Institute of Ghana for allowing us to use the data in this study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| MGF | Moment Generating Function |

| ANOVA | Analysis of Variance |

| PDF | Probability Density Function |

| KMeans | Clustering classification methodology |

Appendix A. Data Characteristics

In this appendix, we detail some information available on the data and on the ANOVA analysis on the data.

Appendix A.1. On the Varieties of Plants of Cocoa in the Data

According to Table A1, there are 12 different varieties: 4 from the ascendant PA150, 5 from T63/967, and 3 other varieties with no common ascendant.

Table A1.

List of varieties.

| Code | Variety | Code | Variety | Code | Variety |

|---|---|---|---|---|---|

| Standard Variety | |||||

Appendix A.2. On the Soils of the Data

The surface soil (0–15 cm) of three soil series, namely, Soil 1, Soil 2, and Soil 3, was collected from secondary forests in the Western Region. Soil 4 is from the Eastern Region. This region has the recommended soil pH required for cocoa production and is considered to be one of the best cocoa-growing soils and so was also included in the study. The four surface soils were passed through a 2 mm mesh sieve and a subsample characterized through the initial laboratory analysis. A specification of the origin of the soils is given in Table A2.

Table A2.

List of soils.

| Name | Region of Provenance in Ghana |
|---|---|
| Soil 1 | Forest Ochrosols-Oxysols intergrades (Saamang) |
| Soil 2 | Forest Oxysol (Samreboi) |
| Soil 3 | Forest Ochrosol (Boako) |
| Soil 4 | Forest Rubrisols-Ochrosol intergrade (Tafo) |

In general, all forest Ochrosols are acidic in nature, but the management practices applied—e.g., fertilizer application—may change the levels of chemical properties measured. The chemical properties of the different soils are specified in Table A3.

Table A3.

Chemical properties of different soils.

| Soil Name | pH (a) | OC (b) (%) | TN (c) (%) | AP (d) (mg/kg) | K (e) (cmolc/kg) | Mg (f) (cmolc/kg) | Ca (g) (cmolc/kg) |
|---|---|---|---|---|---|---|---|
| Soil 1 | 4.991 | 3.36 | 0.327 | 17.593 | 0.263 | 0.55 | 7.98 |
| Soil 2 | 4.208 | 0.5 | 0.095 | 20.715 | 0.569 | 3.142 | 1.729 |
| Soil 3 | 5.072 | 2.34 | 0.309 | 19.849 | 0.329 | 2.861 | 6.309 |
| Soil 4 | 5.635 | 0.76 | 0.123 | 23.845 | 0.57 | 3.009 | 6.473 |
| Critical min | 5.6 | 3.5 | 0.09 | 20 | 0.25 | 1.33 | 7.5 |

(a) pH—potential of hydrogen. (b) OC—Organic Carbon. (c) TN—Total Nitrogen. (d) AP—Available Phosphorus. (e) K—Exchangeable Potassium. (f) Mg—Exchangeable Magnesium. (g) Ca—Exchangeable Calcium.

Appendix A.3. ANOVA of the Data

We present the results of the ANOVA of the data with the purpose of establishing a contrast, in terms of production performance, of the influence of the factors variety and soil on the three variables plant height, stem diameter, and dry matter. To allow a correct perspective on what follows, we must stress the distinct nature of the two statistical approaches presented: the application of the grouping distribution and the ANOVA. The ANOVA is focused on comparing the means of variables and so gives information on differences; in contrast, the grouping statistic operates on ranks of the sums of all the values of the variables and gives information on the within-group homogeneity.

Remark A1

(Analysis of the results in Table A4). As usual, we have that the null hypothesis for each factor is that there is no significant difference between groups of that factor and so, a sufficiently small p-value—values coloured in red in the table—allows us to reject the null hypothesis. We may then conclude that the type of soil factor is always relevant for the production performance in the three variables, that the variety factor is only relevant for the plant height variable, and that the interaction in factors variety–soil is relevant for the dry matter variable. As a consequence, the results of the statistical clustering analysis concerning Soil 3 acquire relevance.

Table A4.

ANOVA p-values for factors variety, soil, and interaction variety–soil.

| Factors | Plant Height | Stem Diameter | Dry Matter |
|---|---|---|---|
| Variety | | | |
| Soil | | | |
| Variety–Soil | | | |

Remark A2

(Analysis of the results in Table A5). The results in this table should be compared with the results of Table 8 where we see that, in general, the ranks in Cluster 3 for varieties , , and are almost always the worst possible ranks obtained from the sum of all observations, the exception being for the variable stem diameter, a case for which variety has the first rank.

Nevertheless, we can observe that, in several other cases, the averages for some varieties surpass the global averages. The values in the table show that for the variable plant height, the average in Soil 3 is larger than the global average for all three varieties; for the variable stem diameter, the average in Soil 3 is larger than the global average for varieties and ; and for the variable dry matter, the average in Soil 3 is larger than the global average for varieties and .

Moreover, we have that for the variable plant height, the average plant height in Soil 3 is larger than the global average of plant height in Soil 3 for the variety ; for the variable stem diameter, the average stem diameter in Soil 3 is larger than the global average of the stem diameter in Soil 3 also for the variety ; for the variable dry matter, the average dry matter in Soil 3 is larger than the global average of dry matter in Soil 3 for the varieties and .

These results dealing with averages complement the results based on the ranks of total outputs in each of the variables and varieties.

Table A5.

Average values for the variables plant height, stem diameter, and dry matter globally and in Soil 3.

| Variety | A (PH) (a) | Plant Height (b) | A (SD) (c) | Stem Diameter (d) | A (DM) (e) | Dry Matter (f) |

|---|---|---|---|---|---|---|

| 67.2778 | 10.4125 | 34.1715 | ||||

| 59.7215 | 11.297 | 32.4229 | ||||

| 64.3505 | 10.2296 | 31.4934 |

A (PH)(a): Average plant height for each variety. Plant Height(b): The global average plant height in Soil 3 is 67.395. A (SD)(c): Average stem diameter for each variety. Stem Diameter(d): The global average stem diameter in Soil 3 is 10.765. A (DM)(e): Average dry matter for each variety. Dry Matter(f): The global average dry matter for Soil 3 is 34.4709.

Appendix A.4. A Proof of a Referred Result

In this appendix, we collect the proof of a result that was quoted in the main text and that may have some interest on its own. The following proof is well known and is presented only for the completeness of the work.

Theorem A1.

The multinomial coefficient is maximized when the values of are as close to each other as possible.

Proof.

The multinomial coefficient is defined by

where . Stirling's approximation for the factorial of a large integer n is the following:

Applying Stirling’s approximation to the multinomial coefficient gives

Simplifying this expression, we obtain

We are now going to perform an analysis of the logarithmic terms. To maximize the multinomial coefficient, we maximize its logarithm:

Using Stirling’s approximation for logarithms

So,

Since , we have

We now use Jensen’s Inequality. The function is convex for . By Jensen’s inequality, for such that :

therefore,

This implies that:

Hence, the expression is maximized when are as equal as possible. □
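Theorem A1 can also be checked by brute force for small parameter values. The sketch below, which is an illustration and not part of the proof, enumerates the ways of splitting an integer n into p positive parts and returns the split with the largest multinomial coefficient. The function names `multinomial` and `best_split`, and the restriction to positive parts, are assumptions made for this example.

```python
from itertools import combinations_with_replacement
from math import factorial

def multinomial(parts):
    """Multinomial coefficient (sum of parts)! / (product of the factorials of the parts)."""
    coeff = factorial(sum(parts))
    for k in parts:
        coeff //= factorial(k)  # each partial quotient is an exact integer
    return coeff

def best_split(n, p):
    """Nondecreasing split of n into p positive parts with the largest multinomial coefficient."""
    candidates = (c for c in combinations_with_replacement(range(1, n + 1), p)
                  if sum(c) == n)
    best = max(candidates, key=multinomial)
    return best, multinomial(best)

print(best_split(10, 3))
print(best_split(7, 2))
```

In both toy cases the maximizing split has parts that differ by at most one, in agreement with the statement of the theorem.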

References

- Opoku-Ameyaw, K.; Nunes, C.; Esquível, M.L.; Mexia, J.T. CMMSE: A nonparametric test for grouping factor levels: An application to cocoa breeding experiments in acidic soils. J. Math. Chem. 2023, 61, 652–672.

- Yitzhaki, S. Gini’s mean difference: A superior measure of variability for non-normal distributions. Metron 2003, 61, 285–316.

- Zenga, M.; Polisicchio, M.; Greselin, F. The variance of Gini’s mean difference and its estimators. Statistica 2004, 64, 455–475.

- Haye, R.L.; Zizler, P. The Gini mean difference and variance. Metron 2019, 77, 43–52.

- Baz, J.; Pellerey, F.; Díaz, I.; Montes, S. Stochastic ordering of variability measure estimators. Statistics 2024, 58, 26–43.

- Conover, W.J. Practical Nonparametric Statistics, 3rd ed.; John Wiley & Sons, Inc.: New York, NY, USA, 1999; pp. viii+584.

- Butucea, C.; Tribouley, K. Nonparametric homogeneity tests. J. Stat. Plan. Inference 2006, 136, 597–639.

- Graham, R.L.; Knuth, D.E.; Patashnik, O. Concrete Mathematics: A Foundation for Computer Science, 2nd ed.; Addison-Wesley Publishing Company: Reading, MA, USA, 1994; pp. xiv+657.

- Esquível, M.L. Probability generating functions for discrete real-valued random variables. Teor. Veroyatn. Primen. 2007, 52, 129–149.

- Rudin, W. Real and Complex Analysis, 3rd ed.; McGraw-Hill Book Co.: New York, NY, USA, 1987; pp. xiv+416.

- Katznelson, Y. An Introduction to Harmonic Analysis; Dover Publications, Inc.: New York, NY, USA, 1976; corrected edition; pp. xiv+264.

- Widder, D.V. The Laplace Transform; Princeton Mathematical Series; Princeton University Press: Princeton, NJ, USA, 1941; Volume 6, pp. x+406.

- Schwartz, L. Mathematics for the Physical Sciences; Dover Publications, Inc.: Mineola, NY, USA, 1966; p. 358.

- Kallenberg, O. Foundations of Modern Probability, 3rd ed.; Probability Theory and Stochastic Modelling; Springer: Cham, Switzerland, 2021; Volume 99, pp. xii+946.

- Schmüdgen, K. The Moment Problem; Graduate Texts in Mathematics; Springer: Cham, Switzerland, 2017; Volume 277, pp. xii+535.

- Durrett, R. Probability—Theory and Examples, 5th ed.; Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 2019; Volume 49, pp. xii+419.

- Beffa, F. Weakly Nonlinear Systems—With Applications in Communications Systems; Understanding Complex Systems; Springer: Cham, Switzerland, 2024; pp. xiv+371.

- Lindner, M. An introduction to the limit operator method. In Infinite Matrices and Their Finite Sections; Frontiers in Mathematics; Birkhäuser Verlag: Basel, Switzerland, 2006; pp. xv+191.

- Ran, A.C.M.; Serény, A. The finite section method for infinite Vandermonde matrices. Indag. Math. 2012, 23, 884–899.

- Hernández-Pastora, J.L. On the solutions of infinite systems of linear equations. Gen. Relativ. Gravit. 2014, 46, 1622.

- Scitovski, R.; Sabo, K.; Martínez-Álvarez, F.; Ungar, Š. Cluster Analysis and Applications; Springer: Cham, Switzerland, 2021.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).