Abstract

In this paper, the adaptive consensus problem of unknown nonlinear multi-agent systems (MAs) with communication noises under Markov switching topologies is studied. Based on adaptive control theory, a novel distributed control protocol for nonlinear multi-agent systems is designed. It consists of the local interfered relative information and an estimate of the unknown dynamics. Radial basis function neural networks (RBFNNs) approximate the nonlinear dynamics, and the estimated weight matrix is updated using the measurable state information. Then, using the stochastic Lyapunov analysis method, conditions for attaining consensus are derived on the consensus gain and the weights of the RBFNNs. The main findings of this paper are as follows: the consensus control of multi-agent systems under more complicated and practical circumstances, including unknown nonlinear dynamics, Markov switching topologies and communication noises, is discussed; the nonlinear dynamics are approximated based on the RBFNNs and the local interfered relative information; the consensus gain k must be small to guarantee the consensus performance; and the proposed algorithm is finally validated by numerical simulations.

MSC:

93A16; 68T42

1. Introduction

Multi-agent systems consist of multiple interacting agents with simple structures and limited abilities; together, these agents can complete complex tasks that a single agent or a monolithic system cannot accomplish by itself. Because of their strong autonomy and low cost, multi-agent systems are widely applied in various fields, such as spacecraft [1,2], wireless sensor networks [3], biological systems [4] and microgrids [5,6].

As a fundamental research issue of multi-agent systems, consensus control refers to designing a valid distributed protocol that drives all agents to asymptotically reach an agreement at the state (position, velocity) level [7]. Numerous significant studies have focused on the consensus control of multi-agent systems. Olfati-Saber et al. [8,9] and subsequent works [10,11,12,13] considered the consensus problems of several common linear continuous-time and discrete-time multi-agent systems and derived conditions for achieving consensus, but they assumed that the communications among agents are ideal, i.e., that each agent receives accurate state information from its neighboring agents. However, because of various factors, such as measurement errors, channel fading and thermal noises, the information that each agent receives from its neighbors is inevitably corrupted by communication noises, and systems with communication noises cannot achieve consensus using the conventional state feedback protocols adopted in [8,9,10,11,12,13]. Based on stochastic approximation theory, Huang et al. [14] introduced a decreasing step size to reduce the effects of the communication noises and obtained mean square consensus conditions for discrete-time systems in three cases: a symmetric network, a general network and a leader-following network. Following Huang's research, Li and Zhang [15] and Cheng et al. [16,17] introduced time-varying gains to attenuate the communication noises and established necessary and sufficient conditions for achieving mean square consensus of integer-order continuous-time multi-agent systems. Subsequently, numerous significant works focused on the consensus control of multi-agent systems with communication noises in distinct scenarios, such as Markov switching topologies [18,19,20,21], time delays [22,23], event-triggered control [24,25], heterogeneous agents [26,27] and collaborative–competitive interactions [28,29].

The studies mentioned above all concern multi-agent systems with linear dynamics. However, because of the complexity and uncertainty of real environments, most practical systems are nonlinear, and their dynamics may even be unknown. Although there has been research on the consensus control of nonlinear multi-agent systems with communication noises, such as [30,31,32,33], the noises considered in these papers are multiplicative, the nonlinear parts of the systems and the noise coefficients are usually assumed to satisfy Lipschitz conditions, and the Lipschitz constant L is assumed to be known, which means that information on the nonlinear dynamics is available. In [34], although additive noises are considered, the Lipschitz constant must still be known a priori. For nonlinear multi-agent systems with coupling parametric uncertainties and stochastic measurement noises, Huang [35] designed a distributed adaptive mechanism consisting of two parts, namely adaptive individual estimates of the uncertainties and local relative information multiplied by a time-varying gain, which guarantees asymptotic consensus of nonlinear multi-agent systems with parametric uncertainties. The results show that the proposed protocol prevents the inherent nonlinearities from causing finite-time explosion and ensures almost sure consensus.

Currently, neural networks (NNs) are increasingly widely applied in many fields, such as artificial intelligence, system control, image recognition and language processing. In the field of system control, neural networks play an important role in model predictive control [36], fuzzy control [37] and adaptive control [38], and they have become powerful tools in the stability analysis of nonlinear multi-agent systems because of their excellent approximation abilities [38,39,40,41,42,43]. In [38], Chen et al. proposed an NN control approach for nonlinear multi-agent systems with time delay, in which the nonlinear dynamics are approximated by RBFNNs and the time delay is compensated by a Lyapunov–Krasovskii functional. Ma et al. [39] considered nonlinear multi-agent systems with time delays and external noises. Meng et al. [40] proposed a robust consensus protocol that alleviates the effects of external disturbances and of the residual error generated by the approximation term, which guarantees uniform ultimate consensus of the system. Mo et al. [41] proposed an integer-order adaptive protocol for fractional-order multi-agent systems with unknown nonlinear dynamics and external disturbances and verified its effectiveness by constructing the Riemann–Liouville fractional-order integral, and the finite-time tracking consensus problems of high-order nonlinear multi-agent systems were studied in [42,43]. Although significant results have been obtained on the consensus control of nonlinear multi-agent systems [38,39,40,41,42,43], these works did not take communication noises into account.

The purpose of the current paper is, therefore, to solve the consensus problem of multi-agent systems with unknown nonlinear dynamics and communication noises. Inspired by the works above, we consider the consensus problem of unknown nonlinear multi-agent systems with communication noises and Markov switching topologies. First, the interfered local relative information is constructed to ensure the consensus performance, and RBFNNs are used to model the nonlinearity. Then, by using stochastic stability theory, conditions for reaching consensus are derived. The main contributions of this paper are as follows: (1) the nonlinear dynamics are unknown and are approximated by RBFNNs; (2) conditions for reaching consensus on the consensus gain and the weights of the RBFNNs are obtained, and the consensus gain k needs to be small enough to guarantee the consensus performance; (3) a communication environment with communication noises and Markov switching topologies is considered, which is more practical, since communication noises are inevitable.

The rest of this paper is organized as follows. The problem description and preliminary knowledge, including graph theory, RBFNNs and the Markov process, are introduced in Section 2. Section 3 presents the protocol design and a detailed convergence analysis. Three simulation examples are presented in Section 4, and conclusions are drawn in Section 5.

Notations: $\mathbb{R}$ denotes the set of real numbers; $\mathbb{R}^{m\times n}$ denotes the set of $m\times n$ real matrices; $I_n$ denotes the $n$-dimensional identity matrix; $\mathbf{0}$ represents the zero vector or matrix of corresponding dimension; $\lambda_i(L)$ represents the $i$th eigenvalue of the matrix $L$; $\lambda_{\max}(L)$ and $\lambda_{\min}(L)$ represent the maximum and minimum eigenvalues of matrix $L$, respectively; $\|\cdot\|_F$ denotes the Frobenius norm; ⊗ denotes the Kronecker product; $\mathrm{tr}(A)$ denotes the trace of matrix $A$; $o(x)$ represents the equivalent infinitesimal of $x$.

2. Problem Description and Preliminaries

2.1. Graph Theory

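The interaction and communication among agents can be characterized by an undirected graph $\mathcal{G}=(\mathcal{V},\mathcal{E},\mathcal{A})$. An undirected graph consists of the vertex set $\mathcal{V}=\{1,2,\ldots,N\}$, where vertex $i$ represents the $i$th agent, the edge set $\mathcal{E}\subseteq\mathcal{V}\times\mathcal{V}$ and the adjacency matrix $\mathcal{A}=[a_{ij}]\in\mathbb{R}^{N\times N}$ of $\mathcal{G}$, where $a_{ij}$ is the element in the $i$th row and $j$th column: $a_{ij}=a_{ji}>0$ if $(j,i)\in\mathcal{E}$, and $a_{ij}=0$ otherwise, with $a_{ii}=0$. Agent $j$ is called a neighbor of agent $i$ if $i$ can obtain the state information of $j$, i.e., $a_{ij}>0$, and $\mathcal{N}_i=\{j\in\mathcal{V}:(j,i)\in\mathcal{E}\}$ denotes the neighbor set of $i$. The degree of $i$ is $d_i=\sum_{j\in\mathcal{N}_i}a_{ij}$, the degree matrix is $D=\mathrm{diag}(d_1,\ldots,d_N)$, and the Laplacian matrix is defined as $L=D-\mathcal{A}$. $\mathcal{G}$ is connected if, for each pair of agents $i$ and $j$, there exists a path between them [44].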
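As a minimal sketch of these definitions, the Laplacian L = D − A can be built from a symmetric adjacency matrix with NumPy. The function name `laplacian` and the four-agent path graph are hypothetical illustrations, not one of the topologies used in this paper.

```python
import numpy as np

def laplacian(adjacency: np.ndarray) -> np.ndarray:
    """Return L = D - A for an undirected weighted graph."""
    a = np.asarray(adjacency, dtype=float)
    if a.shape[0] != a.shape[1]:
        raise ValueError("adjacency matrix must be square")
    if not np.allclose(a, a.T):
        raise ValueError("undirected graph requires a symmetric adjacency matrix")
    if np.any(np.diag(a) != 0):
        raise ValueError("self-loops are not allowed (a_ii must be 0)")
    degrees = a.sum(axis=1)          # d_i = sum_j a_ij
    return np.diag(degrees) - a      # L = D - A

# Hypothetical 4-agent path graph 1-2-3-4 with unit weights.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]])
L = laplacian(A)
print(L)
print(L.sum(axis=1))  # every row of L sums to zero
```

Because every row of L sums to zero, the all-ones vector always lies in its null space, which is the starting point of Lemma 1.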

For undirected graphs, the following lemma holds:

Lemma 1

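([10]). For a connected undirected graph $\mathcal{G}$, $\mathbf{1}_N=[1,\ldots,1]^T$ is an eigenvector of $L$ associated with the zero eigenvalue, and all the eigenvalues of $L$ can be written as $0=\lambda_1<\lambda_2\le\cdots\le\lambda_N$. Moreover, there exists an orthogonal matrix $T$ such that $T^TLT=\mathrm{diag}(0,\lambda_2,\ldots,\lambda_N)$.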
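Lemma 1 can be checked numerically on a small example. The sketch below assumes a hypothetical connected four-agent ring and uses `numpy.linalg.eigh`, which returns the eigenvalues of a symmetric matrix in ascending order together with an orthonormal eigenvector matrix; that eigenvector matrix plays the role of the orthogonal matrix in the lemma.

```python
import numpy as np

# Laplacian of a hypothetical connected 4-agent ring (unit weights).
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A
N = L.shape[0]

# eigh: ascending eigenvalues and orthonormal eigenvectors of a symmetric matrix.
eigvals, T = np.linalg.eigh(L)
ones = np.ones(N)

print(np.round(eigvals, 6))                        # 0 = lambda_1 < lambda_2 <= ... <= lambda_N
print(np.allclose(L @ ones, 0))                    # 1_N is an eigenvector for eigenvalue 0
print(np.allclose(T.T @ T, np.eye(N)))             # T is orthogonal
print(np.allclose(T.T @ L @ T, np.diag(eigvals)))  # T^T L T = diag(0, lambda_2, ..., lambda_N)
```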

2.2. Problem Description

Consider a multi-agent system with unknown nonlinear dynamics described as follows:

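where $x_i\in\mathbb{R}^n$ and $u_i\in\mathbb{R}^n$ represent the state and input of agent $i$, respectively, $f_i:\mathbb{R}^n\to\mathbb{R}^n$ is the unknown nonlinear vector function, and $i=1,2,\ldots,N$.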

The state information of i-th agent received from neighbor agent j is:

where , , are independent standard white noises and finite noise intensities, respectively.

Assumption 1.

System (1) is measurable; that is, each agent can measure the state information of its neighbors, although these measurements are corrupted by communication noises.

Definition 1.

Remark 1.

Denote , , , . Obviously, Equation (3) is equivalent to . Definition 1 is a rigorous description of consensus; from a practical control point of view, it can be relaxed as follows.

Definition 2.

For any chosen , if there exists a distributed protocol that satisfies

the consensus is achieved.

Remark 2.

In [15], all agents converge to a random variable that is determined by their initial states. However, unlike the mean square average consensus of linear multi-agent systems in [15], such results are not available for systems with nonlinear dynamics. In this paper, we require only that the final states of all agents reach consensus, without requiring them to converge to a fixed random variable.

For an ergodic Markov process with a finite state space , defined on a complete probability space , the state transition probability matrix is defined as:

where .

Since the Markov process is ergodic, there exists a stationary distribution , and .
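The stationary distribution of an ergodic chain can be computed by solving the balance equations together with the normalization constraint. The sketch below assumes a discrete-time, row-stochastic transition matrix, so the balance equations read πP = π (for a continuous-time chain described by a generator Q they would read πQ = 0 instead). The function name and the three-state matrix are hypothetical.

```python
import numpy as np

def stationary_distribution(P: np.ndarray) -> np.ndarray:
    """Solve pi P = pi with sum(pi) = 1 for a row-stochastic matrix P."""
    P = np.asarray(P, dtype=float)
    s = P.shape[0]
    if not np.allclose(P.sum(axis=1), 1.0):
        raise ValueError("rows of P must sum to 1")
    # Stack the balance equations (P^T - I) pi = 0 with the normalization 1^T pi = 1.
    lhs = np.vstack([P.T - np.eye(s), np.ones((1, s))])
    rhs = np.concatenate([np.zeros(s), [1.0]])
    pi, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return pi

# Hypothetical 3-state ergodic chain (not the matrix used in the paper).
P = np.array([[0.5, 0.3, 0.2],
              [0.2, 0.6, 0.2],
              [0.3, 0.3, 0.4]])
pi = stationary_distribution(P)
print(pi, pi.sum())
```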

In this paper, the topologies of the multi-agent system switch among , and the switching is governed by an ergodic Markov process.

Assumption 2.

Each graph is unconnected, but their union graph is connected.
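Assumption 2 can be illustrated by counting zero eigenvalues, since the multiplicity of the zero eigenvalue of a Laplacian equals the number of connected components of the graph. In the hypothetical example below, neither graph is connected on its own, yet their union (obtained by adding the Laplacians, i.e., the edge weights) is connected; any positive weighting of the individual Laplacians, for example by stationary probabilities, has the same zero-eigenvalue count.

```python
import numpy as np

def laplacian(a):
    a = np.asarray(a, dtype=float)
    return np.diag(a.sum(axis=1)) - a

def num_zero_eigenvalues(L, tol=1e-9):
    """Multiplicity of eigenvalue 0, i.e., the number of connected components."""
    return int(np.sum(np.abs(np.linalg.eigvalsh(L)) < tol))

# Two hypothetical 4-agent graphs, each disconnected:
# G1 has edges {1-2, 3-4}; G2 has the single edge {2-3}.
A1 = np.array([[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]])
A2 = np.array([[0, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]])

L1, L2 = laplacian(A1), laplacian(A2)
L_union = L1 + L2  # Laplacian of the union graph (edge weights add)

print(num_zero_eigenvalues(L1))       # 2 components
print(num_zero_eigenvalues(L2))       # 3 components (agents 1 and 4 isolated)
print(num_zero_eigenvalues(L_union))  # 1 component: the union is connected
```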

2.3. RBFNNs

Neural networks have an excellent ability to approximate unknown functions, and RBFNNs are particularly effective in the analysis of consensus control. An unknown nonlinear function can be written in the approximate form

where , is the weight matrix, is the basis function vector, and

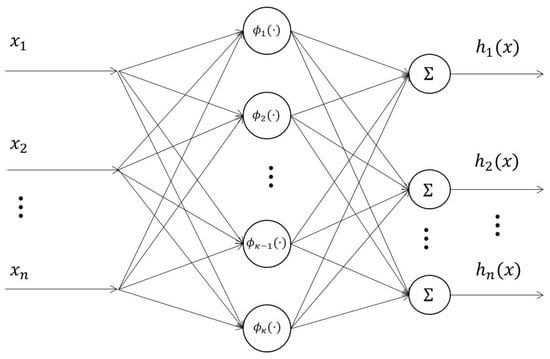

where represents the center of the receptive field, is the input of the RBFNNs, and is the width of the Gaussian function. Figure 1 shows the structure of the RBFNNs, where , , , , represent the input layer, neuron layer and output layer, respectively.

Figure 1.

Structure of the RBFNNs.
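A Gaussian RBF network of the kind described above can be sketched as follows. The exact basis form is an assumption here: s_j(z) = exp(−‖z − μ_j‖²/φ²) with a common width φ (some texts divide by 2φ² or use neuron-specific widths). The names `rbf_basis` and `rbf_output` and all numerical values are hypothetical.

```python
import numpy as np

def rbf_basis(z, centers, width):
    """Gaussian basis vector S(z) with s_j(z) = exp(-||z - mu_j||^2 / width^2).

    z: input of shape (n,); centers: (l, n) array of receptive-field centers mu_j.
    """
    z = np.asarray(z, dtype=float)
    sq_dist = np.sum((centers - z) ** 2, axis=1)  # ||z - mu_j||^2 for each neuron j
    return np.exp(-sq_dist / width**2)

def rbf_output(W, z, centers, width):
    """Network output W^T S(z); W has shape (l, m) for an m-dimensional output."""
    return W.T @ rbf_basis(z, centers, width)

# Hypothetical network: 5 neurons, 2-D input, 2-D output.
rng = np.random.default_rng(0)
centers = np.linspace(-2.0, 2.0, 5)[:, None] * np.ones((1, 2))  # centers on the diagonal
W = rng.normal(size=(5, 2))
z = np.array([0.3, -0.1])

S = rbf_basis(z, centers, width=1.0)
print(S)                               # each entry lies in (0, 1]
print(rbf_output(W, z, centers, 1.0))  # 2-D approximation of f(z)
```

Each neuron responds most strongly (value 1) when the input sits exactly on its center, and its response decays with distance at a rate set by the width.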

It has been proved that any continuous function can be approximated to arbitrary accuracy over a compact set by a neural network with sufficiently many neurons [45]. Therefore, for a given unknown nonlinear function and any approximation error , the following equation holds:

where is the ideal weight matrix and the approximation error satisfies . In practice, the ideal weight matrix is unknown and needs to be estimated. Define as the estimate of ; then can be written in the following form:

and the estimate is updated online, using the state information of x, to approximate the ideal weight matrix.
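The approximation property cited from [45] can be illustrated numerically: with centers spread over a compact interval, the worst-case error of an RBF fit shrinks as neurons are added. This sketch uses an offline least-squares fit purely as a stand-in for the ideal weight matrix; it is not the online update used in this paper, and the test function, the width rule and the helper names are hypothetical.

```python
import numpy as np

def design_matrix(x, centers, width):
    # Row k holds S(x_k)^T: Gaussian activations of every neuron at sample x_k.
    return np.exp(-((x[:, None] - centers[None, :]) ** 2) / width**2)

def fit_rbf(x, y, n_neurons, lo=-3.0, hi=3.0):
    centers = np.linspace(lo, hi, n_neurons)
    width = (hi - lo) / (n_neurons - 1)          # width tied to the center spacing
    Phi = design_matrix(x, centers, width)
    w, *_ = np.linalg.lstsq(Phi, y, rcond=None)  # least-squares weights
    return lambda xq: design_matrix(xq, centers, width) @ w

f = lambda x: np.sin(2 * x) + 0.5 * x            # hypothetical "unknown" function
x_train = np.linspace(-3, 3, 200)
x_test = np.linspace(-2.9, 2.9, 157)             # compact set inside the training range

errors = {}
for l in (3, 6, 12, 24):
    f_hat = fit_rbf(x_train, f(x_train), l)
    errors[l] = np.max(np.abs(f_hat(x_test) - f(x_test)))
    print(l, errors[l])
```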

Lemma 2

([46]). For a continuous and bounded function , if satisfies , and , then .

3. Consensus Analysis

In this paper, we aim to derive an appropriate adaptive control protocol based on the local interfered relative state information and the RBFNNs for each agent of the system, such that the effect of communication noises is reduced and the consensus of non-linear multi-agent systems (1) can be achieved simultaneously.

For convenience, is omitted hereafter when there is no confusion.

We denote the local consensus error of system (1) as

where represents the Markov process. Since the communication topology switches according to the switching signal , is adopted to describe the communication among agents.
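The local consensus error is built from relative states. Assuming, for illustration only, the noise-free form e_i = Σ_j a_ij (x_j − x_i) on a fixed graph (the error used in this paper is built from the interfered information and the switching Laplacian), the per-agent sum coincides with the stacked Kronecker form −(L ⊗ I_n)x, as the sketch below checks. The function name `local_errors` and the three-agent path graph are hypothetical.

```python
import numpy as np

def local_errors(A, X):
    """e_i = sum_j a_ij (x_j - x_i) for states X of shape (N, n)."""
    A = np.asarray(A, dtype=float)
    return A @ X - A.sum(axis=1, keepdims=True) * X

# Hypothetical 3-agent path graph 1-2-3 with 2-D states.
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
X = np.array([[1.0, 0.0], [2.0, 1.0], [4.0, -1.0]])
E = local_errors(A, X)

# Same quantity in stacked form: e = -(L ⊗ I_n) x, with x = [x_1^T, ..., x_N^T]^T.
L = np.diag(A.sum(axis=1)) - A
e_stacked = -np.kron(L, np.eye(X.shape[1])) @ X.reshape(-1)
print(E)
print(np.allclose(E.reshape(-1), e_stacked))
```

For an undirected graph the local errors sum to zero over all agents, because each relative term a_ij (x_j − x_i) is cancelled by its mirror a_ji (x_i − x_j).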

Next, define the positive semidefinite scalar function

where .

Since the communication topology is disconnected, graph theory and matrix theory imply that the Laplacian matrix has at least two zero eigenvalues. If we assume that the number of zero eigenvalues is , then the eigenvalues can be written as , and the eigenvalues of can be written as , where represents the minimum nonzero eigenvalue of ; then

where , , , .

If the dynamics are exactly known, there are no communication noises, and the communication topology of system (1) is connected and fixed, then taking the derivative of gives

In this case, the consensus problem of system (1) can be solved by designing a simple adaptive control protocol , which yields , with equality only when . Hence, can be regarded as a Lyapunov function and, since , Lyapunov stability theory implies that system (1) achieves consensus when .
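To illustrate this idealized case, suppose that the known dynamics are exactly cancelled, so that the closed loop reduces to ẋ = −k(L ⊗ I_n)x on a fixed connected graph. This reduction is an assumption made for the sketch, not the protocol of this paper. Forward-Euler integration then shows the disagreement vanishing while the average state is preserved; the ring graph, gain and step size are hypothetical.

```python
import numpy as np

def simulate_consensus(A, x0, k=1.0, dt=0.01, steps=2000):
    """Forward-Euler integration of dx/dt = -k (L ⊗ I_n) x (noise-free, fixed graph)."""
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    x = np.array(x0, dtype=float)  # shape (N, n): row i is agent i's state
    for _ in range(steps):
        x = x - dt * k * (L @ x)   # L @ x applies L ⊗ I_n to the stacked state
    return x

def disagreement(x):
    """Distance of the states from their common average."""
    return np.linalg.norm(x - x.mean(axis=0))

A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]])       # hypothetical connected ring
x0 = np.array([[1.0, -2.0], [3.0, 0.5], [-1.0, 4.0], [0.0, 1.0]])
xT = simulate_consensus(A, x0)
print(disagreement(x0), disagreement(xT))
print(xT.mean(axis=0), x0.mean(axis=0))  # the average is invariant for undirected graphs
```

The step size must satisfy dt·k·λ_max < 2 for forward Euler to remain stable; here λ_max = 4, so dt = 0.01 is well inside that bound.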

However, owing to the unknown dynamics , the communication noises and the topology switching, this simple adaptive control protocol is no longer sufficient to achieve consensus of system (1).

Based on the above analysis, the proposed distributed adaptive control scheme is as follows.

Since are unknown nonlinear functions, protocol (9) cannot be used directly for the analysis. According to the description of Section 2.3, for any given constant , can be rewritten as:

where is the ideal weight matrix, is the estimation of , , , represent the number of neurons, .

Remark 3.

In [30,32,33], the nonlinear dynamics are usually assumed to satisfy the Lipschitz condition , which means that the Lipschitz constant is known, and the conditions for achieving consensus contain the Lipschitz constant L. However, because the nonlinear functions here are unknown, the Lipschitz constant is also unknown, so the protocols used in these references cannot be applied to system (1). Therefore, RBFNNs are adopted in this paper to approximate because of their excellent approximation ability.

Let be the estimate of the ideal weight matrix ; then, based on the RBFNNs, we propose the following adaptive control protocol:

where , is a predetermined constant, , are positive parameters to be determined.

Because the ideal weight matrix is also unknown, the neural networks are introduced to estimate , so that the nonlinear dynamics can be replaced by . The estimated matrix is then updated using the measurable state information.

In protocol (11), is the feedback controller that drives all agents to consensus. It contains the state information of the ith agent and of its neighbors, which is the essence of distributed control. When consensus is eventually achieved, inequality (4) in Definition 2 holds.

The update law (12) is designed to drive as time tends to infinity, which means that estimates the ideal matrix accurately, i.e., approximates the nonlinear dynamics accurately. The term can be regarded as the scalar product of and : when , the angle between and is smaller than , and should be decreased; otherwise, should be increased. When the angle between and has been adjusted to , , the ideal weight matrix is well estimated; that is, the nonlinear dynamics are well approximated.
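The sign intuition above, adjusting a weight estimate according to whether the basis vector and the error are aligned, is shared by gradient-type adaptation laws. The sketch below uses a plain least-mean-squares (LMS) identification law, Ŵ ← Ŵ + γ e S(z), for a hypothetical scalar function. It is a simplified stand-in and not the update law (12), which is driven by the consensus error rather than by a direct measurement of f; the gain, centers and test function are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
centers = np.linspace(-3.0, 3.0, 12)          # 12 hypothetical neuron centers
width = centers[1] - centers[0]
f = lambda z: np.sin(z) + 0.1 * z**2          # hypothetical unknown scalar dynamics

def basis(z):
    """Gaussian basis vector S(z) for a scalar input z."""
    return np.exp(-((z - centers) ** 2) / width**2)

def rms_error(w, grid):
    preds = np.array([w @ basis(z) for z in grid])
    return np.sqrt(np.mean((preds - f(grid)) ** 2))

w_hat = np.zeros_like(centers)  # initial estimate W_hat(0) = 0
gamma = 0.2                     # adaptation gain (hypothetical)
grid = np.linspace(-2.5, 2.5, 101)
err_before = rms_error(w_hat, grid)

for _ in range(20000):
    z = rng.uniform(-3.0, 3.0)  # measured state sample
    S = basis(z)
    e = f(z) - w_hat @ S        # prediction error from measurable data
    w_hat += gamma * e * S      # weights aligned with S grow when e > 0 and shrink when e < 0
err_after = rms_error(w_hat, grid)
print(err_before, err_after)
```

The gain is chosen so that γ‖S(z)‖² stays well below 2, the usual stability bound for LMS.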

Then, according to the theory of stochastic differential equations, (13) can be rewritten as

where , , are standard Brownian motions.
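Rewriting white noise in terms of Brownian motion is what makes Euler–Maruyama simulation possible: over a step dt, the term σ η dt is replaced by σ ΔW with ΔW ~ N(0, dt). The sketch below applies this to a simplified noisy consensus model with scalar agents, dx_i = k Σ_j a_ij (x_j − x_i) dt + k Σ_j a_ij σ_ji dW_ji, in which the nonlinear term is omitted. The model, the function name `em_noisy_consensus` and all parameter values are assumptions for illustration.

```python
import numpy as np

def em_noisy_consensus(A, sigma, x0, k, dt, steps, rng):
    """Euler-Maruyama for dx_i = k*sum_j a_ij (x_j - x_i) dt + k*sum_j a_ij sigma_ji dW_ji.

    Scalar agents (hypothetical simplified model, nonlinear term omitted).
    sigma[j, i] is the intensity of the noise on the link j -> i, and
    dW[i, j] ~ N(0, dt) is the Brownian increment for that link.
    """
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    x = np.array(x0, dtype=float)
    traj = [x.copy()]
    for _ in range(steps):
        dW = rng.normal(0.0, np.sqrt(dt), size=A.shape)  # one independent increment per link
        noise = k * np.sum(A * sigma.T * dW, axis=1)      # row i: sum_j a_ij sigma_ji dW[i, j]
        x = x - k * dt * (L @ x) + noise
        traj.append(x.copy())
    return np.array(traj)

rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)  # hypothetical connected ring
sigma = 0.5 * np.ones((4, 4))             # hypothetical noise intensities
x0 = np.array([5.0, -3.0, 1.0, 8.0])
traj = em_noisy_consensus(A, sigma, x0, k=0.5, dt=0.01, steps=3000, rng=rng)
spread = traj.max(axis=1) - traj.min(axis=1)  # max_i x_i - min_i x_i at each step
print(spread[0], spread[-1])
```

With a constant gain, the spread shrinks quickly but does not decay to zero: it settles at a noise-driven level, and the average state itself drifts randomly.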

Theorem 1.

Proof.

Choose the Lyapunov function candidate:

where , , , if and , otherwise. Obviously .

From (7), we obtain

where represent the minimum nonzero eigenvalue and maximum eigenvalue of , respectively.

Following Theorem 1 in [39], we obtain

By Young’s inequality, we obtain

Since the Markov process is ergodic, it visits all states and its distribution eventually tends to the stationary distribution , and because the union topology of is connected, there exists an orthogonal matrix , .

Thus, when ,

where , , , , . From Lemma 2, we obtain

Furthermore,

where .

Then,

, which means that is bounded when . Moreover, for , by choosing , and the value of properly, and owing to the arbitrariness of , there exists .

Furthermore,

which means that system (1) asymptotically achieves consensus. □

Remark 4.

The analysis of Theorem 1 shows that the consensus gain k simultaneously determines the convergence speed and the attenuation of the communication noise. It should be neither too small, in which case the control effect is weak, nor too large, in which case the communication noises cannot be attenuated. The values of k and can be chosen to make a trade-off between the convergence rate and noise reduction.
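The trade-off in Remark 4 can be seen in a single disagreement mode of a linear, fixed-topology simplification of the noisy model: dξ = −kλξ dt + kσ dW, where λ > 0 is a Laplacian eigenvalue. This Ornstein–Uhlenbeck process converges at rate kλ, but its stationary variance is (kσ)²/(2kλ) = kσ²/(2λ), so a larger k speeds up convergence while amplifying the residual noise. The sketch below is an illustration under these assumptions, not the nonlinear closed loop of Theorem 1; the values of λ and σ are hypothetical.

```python
import numpy as np

def mode_statistics(k, lam, sigma):
    """Decay rate and stationary variance of d xi = -k*lam*xi dt + k*sigma dW."""
    decay_rate = k * lam                         # faster convergence for larger k
    stationary_var = k * sigma**2 / (2.0 * lam)  # larger residual noise for larger k
    return decay_rate, stationary_var

def simulate_var(k, lam, sigma, dt=0.01, steps=4000, paths=4000, seed=0):
    """Euler-Maruyama estimate of Var[xi(T)] over many independent paths."""
    rng = np.random.default_rng(seed)
    xi = np.zeros(paths)
    for _ in range(steps):
        xi += -k * lam * xi * dt + k * sigma * rng.normal(0.0, np.sqrt(dt), size=paths)
    return xi.var()

lam, sigma = 2.0, 1.0  # hypothetical eigenvalue and noise intensity
for k in (0.1, 0.5, 2.0):
    rate, var = mode_statistics(k, lam, sigma)
    print(f"k={k}: decay rate {rate:.2f}, stationary variance {var:.3f}")

# Monte Carlo check of the closed-form variance for k = 0.5.
print(simulate_var(0.5, lam, sigma), mode_statistics(0.5, lam, sigma)[1])
```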

Remark 5.

The analysis in [16,17] is based on solving the stochastic differential equations explicitly; unfortunately, this is hard or even impossible for nonlinear systems, so those control protocols are not applicable to system (1). Therefore, RBFNNs are adopted to approximate the nonlinear dynamics, and they have proved effective.

Remark 6.

In [38,39,40,42,43], nonlinear multi-agent systems without communication noise were studied and conditions for achieving consensus were obtained; those results show that a larger consensus gain k yields a faster convergence rate. However, the analysis of Theorem 1 shows that, to attenuate the communication noises and achieve better consensus performance, the consensus gain k should be sufficiently small.

4. Simulations

Example 1.

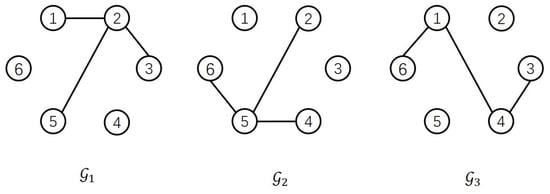

Consider a nonlinear multi-agent system consisting of six agents. The communication topologies are shown in Figure 2; the switching of the topologies is governed by a Markov process whose transition probability matrix is , and the switching frequency is .

Figure 2.

The communication topologies of Example 1.

The nonlinear dynamic is described as follows:

where , , , the noise intensities are , , , , . The initial states are .

In this example, we choose an RBFNN with 12 neurons whose centers are random and uniformly distributed in the range of , and , , , , ,

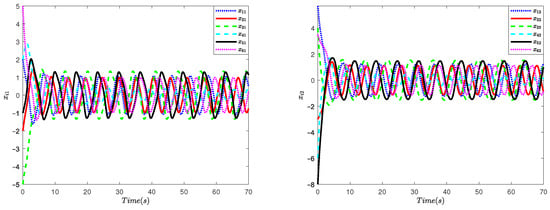

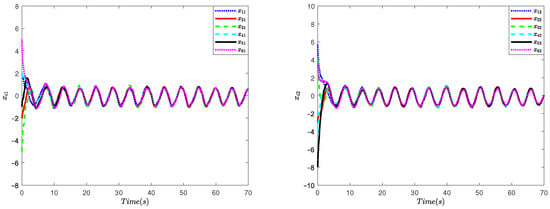

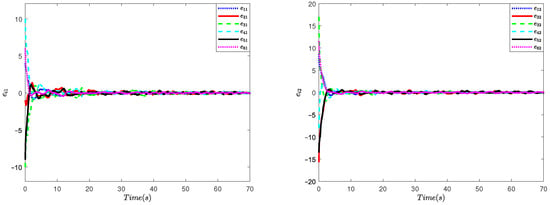

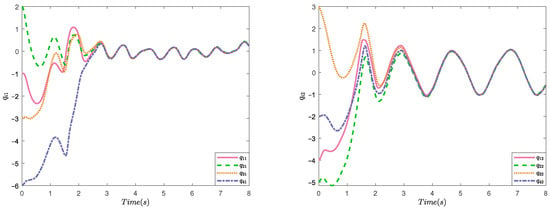

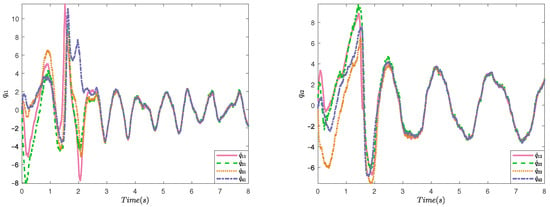

Figure 3 shows the trajectories of all agents without control input; Figure 4 and Figure 5 show the states of all agents and the consensus errors when , from which we can see that all agents achieve consensus and the consensus errors eventually tend to zero. Figure 6 and Figure 7 show the state trajectories of all agents and the consensus errors when ; they show that when k is not small enough, the communication noises are not attenuated and consensus cannot be achieved, which is consistent with Remark 4.

Figure 3.

State trajectories of , without protocol.

Figure 4.

State trajectories of , when .

Figure 5.

The trajectories of consensus errors , when .

Figure 6.

State trajectories of , when .

Figure 7.

The performance of consensus errors , when .
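The Markov switching used in Example 1 can be sketched as a piecewise-constant signal that jumps at a fixed switching frequency according to the transition probability matrix. Treating the switching as a discrete-time chain sampled every 1/f seconds is an assumption of this sketch; the three-state matrix and the function names are hypothetical. For an ergodic chain, the fraction of time spent in each topology approaches the stationary distribution.

```python
import numpy as np

def sample_switching_signal(P, t_final, switch_freq, initial_state=0, seed=None):
    """Piecewise-constant switching signal driven by a Markov chain.

    Assumption: the chain jumps once every 1/switch_freq seconds using the
    row-stochastic transition matrix P; the state indexes the active topology.
    """
    rng = np.random.default_rng(seed)
    n_switches = int(np.floor(t_final * switch_freq))
    states = np.empty(n_switches + 1, dtype=int)
    states[0] = initial_state
    for m in range(1, n_switches + 1):
        states[m] = rng.choice(len(P), p=P[states[m - 1]])
    switch_times = np.arange(n_switches + 1) / switch_freq
    return switch_times, states

def active_topology(t, switch_times, states):
    """Return the topology index active at time t (last switch at or before t)."""
    idx = np.searchsorted(switch_times, t, side="right") - 1
    return states[idx]

P = np.array([[0.2, 0.5, 0.3],
              [0.4, 0.2, 0.4],
              [0.3, 0.4, 0.3]])  # hypothetical 3-topology chain, not the paper's matrix
times, states = sample_switching_signal(P, t_final=500.0, switch_freq=10.0, seed=0)
freq = np.bincount(states, minlength=3) / len(states)
print(freq)  # close to the stationary distribution of P
```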

Example 2.

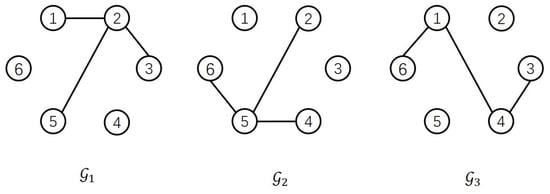

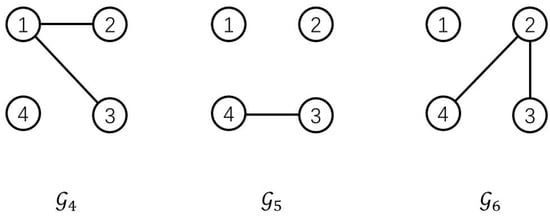

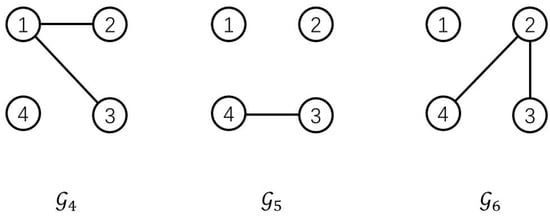

Multi-manipulator systems have wide applications in industrial production, such as automobile assembly and freight handling. In this example, we consider a multi-manipulator system consisting of four single-link manipulators, each of which is regarded as an agent. The communication topologies switch among , as shown in Figure 8, with transition probability matrix P, and the dynamics of each manipulator can be found in [47].

Figure 8.

The communication topologies of Example 2 and Example 3.

All noise intensities are set to 1, an RBFNN with six neurons is chosen, and its centers are uniformly distributed in the range of , , , , , , The initial states are set as .

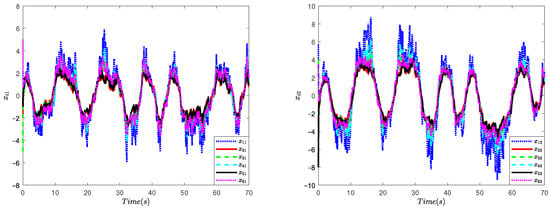

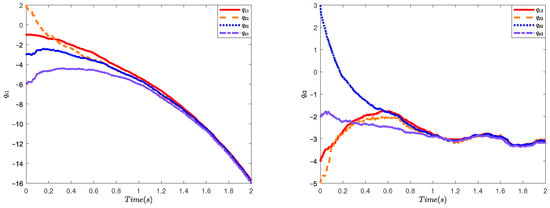

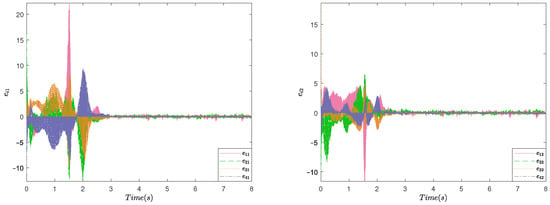

Figure 9 and Figure 10 show the trajectories of when and , respectively. These results show that a smaller value of k yields better consensus performance.

Figure 9.

The trajectories of , when .

Figure 10.

The trajectories of , when .

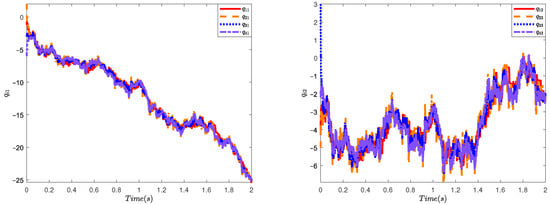

Example 3.

To further validate the effectiveness of the proposed protocol, we consider a multi-manipulator system consisting of four two-link manipulators and assume that the communication topologies and the switching are the same as in Example 2. The dynamics of each manipulator can be found in [48].

We denote ; then, the system model can be transformed into the form of (1).

All noise intensities are set to 2, and an RBFNN with six neurons is chosen with the same settings as in Example 2, , , , The initial states are also set as and .

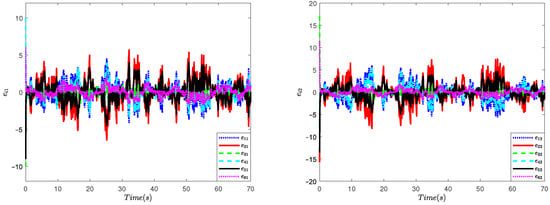

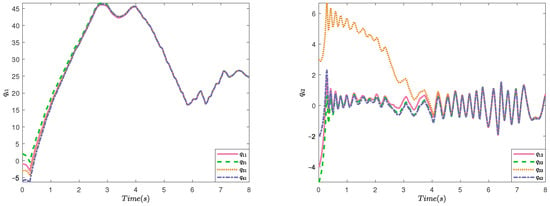

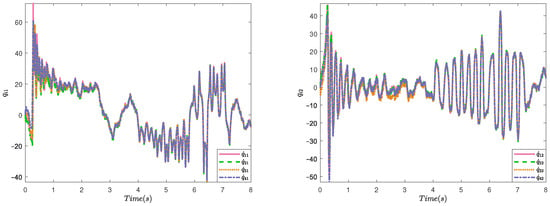

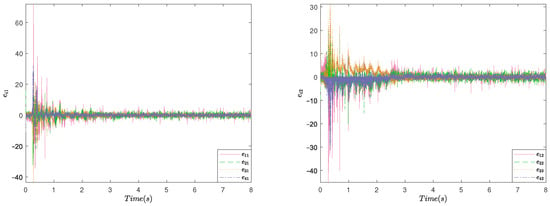

Figure 11, Figure 12 and Figure 13 show the trajectories of and when , and Figure 14, Figure 15 and Figure 16 show the trajectories of and when . Clearly, the multi-manipulator system performs more stably with a smaller k.

Figure 11.

State trajectories of , when .

Figure 12.

The trajectories of , when .

Figure 13.

The trajectories of consensus errors , when .

Figure 14.

State trajectories of , when .

Figure 15.

The trajectories of , when .

Figure 16.

The trajectories of consensus errors , when .

The three examples validate the effectiveness of our protocol. Furthermore, although k needs to be small enough, the appropriate value of k depends on the system model. A smaller k performs better in terms of noise reduction; however, it sacrifices the convergence rate. Therefore, a trade-off should be made in practical applications.

5. Conclusions

In this paper, we consider the consensus control of unknown nonlinear multi-agent systems with communication noises under Markov switching topologies. The unknown dynamics are approximated using RBFNNs, and the estimated weight matrix is updated online using the local interfered state information. Based on the stochastic Lyapunov analysis method, consensus conditions on the consensus gain and the weight matrix of the NNs are derived, and the results show that the consensus gain should be small enough to guarantee consensus and attenuate the communication noises simultaneously. In future work, we will consider designing a better protocol that ensures the convergence rate while reducing the noise effect.

Additionally, this paper mainly focuses on the design of the distributed control protocol and the derivation of the consensus conditions without using specific information on the nonlinear dynamics. Although the NNs perform well in the control protocol, there are uncertainties in the NN weights because of insufficient knowledge of the nonlinear dynamics, the Markov switching signal and the communication noises, which may degrade the performance of the protocol or even destabilize the system. Accordingly, quantifying these uncertainties (as in [49]) to help design more efficient control protocols is another topic that we will pursue in future work.

Author Contributions

Conceptualization, L.X.; Methodology, S.G. and L.X.; Software, S.G.; Validation, S.G.; Formal analysis, S.G.; Writing—original draft, S.G.; Writing—review & editing, L.X.; Supervision, L.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 52075177, in part by the National Key Research and Development Program of China under Grant 2021YFB3301400, in part by the Research Foundation of Guangdong Province under Grant 2019A050505001 and Grant 2018KZDXM002, in part by Guangzhou Research Foundation under Grant 202002030324 and Grant 201903010028.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to the ongoing study.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MAs | Multi-agent systems |
| RBFNNs | Radial Basis Function Neural Networks |
| NN | Neural Network |

References

- Bae, J.H.; Kim, Y.D. Design of optimal controllers for spacecraft formation flying based on the decentralized approach. Int. J. Aeronaut. Space 2009, 10, 58–66. [Google Scholar] [CrossRef][Green Version]

- Kankashvar, M.; Bolandi, H.; Mozayani, N. Multi-agent Q-Learning control of spacecraft formation flying reconfiguration trajectories. Adv. Space Res. 2023, 71, 1627–1643. [Google Scholar] [CrossRef]

- Kar, S.; Moura, J.M.F. Distributed consensus algorithms in sensor networks with imperfect communication: Link failures and channel noise. IEEE Trans. Signal Process. 2008, 57, 355–369. [Google Scholar] [CrossRef]

- Gancheva, V. SOA based multi-agent approach for biological data searching and integration. Int. J. Biol. Biomed. Eng. 2019, 13, 32–37. [Google Scholar]

- Zhang, B.; Hu, W.; Ghias, A.M.Y.M. Multi-agent deep reinforcement learning based distributed control architecture for interconnected multi-energy microgrid energy management and optimization. Energy Convers. Manag. 2023, 277, 116647. [Google Scholar] [CrossRef]

- Fan, Z.; Zhang, W.; Liu, W. Multi-agent deep reinforcement learning based distributed optimal generation control of DC microgrids. IEEE Trans. Smart. Grid 2023, 14, 3337–3351. [Google Scholar] [CrossRef]

- Jadbabaie, A.; Lin, J.; Morse, A.S. Coordination of groups of mobile autonomous agents using nearest neighbor rules. IEEE Trans. Autom. Contr. 2003, 48, 988–1001. [Google Scholar] [CrossRef]

- Olfati-Saber, R.; Murray, R.M. Consensus problems in networks of agents with switching topology and time-delays. IEEE Trans. Autom. Contr. 2004, 49, 1520–1533. [Google Scholar] [CrossRef]

- Olfati-Saber, R.; Fax, J.A.; Murray, R.M. Consensus and cooperation in networked multi-agent systems. Proc. IEEE 2007, 95, 215–233. [Google Scholar] [CrossRef]

- Ren, W.; Beard, R.W. Consensus seeking in multiagent systems under dynamically changing interaction topologies. IEEE Trans. Autom. Contr. 2005, 50, 655–661. [Google Scholar] [CrossRef]

- Cao, Z.; Li, C.; Wang, X. Finite-time consensus of linear multi-agent system via distributed event-triggered strategy. J. Franklin Inst. 2018, 355, 1338–1350. [Google Scholar] [CrossRef]

- Ma, Q.; Xu, S. Intentional delay can benefit consensus of second-order multi-agent systems. Automatica 2023, 147, 110750. [Google Scholar] [CrossRef]

- Zhang, S.; Zhang, Z.; Cui, R. Distributed Optimal Consensus Protocol for High-Order Integrator-Type Multi-Agent Systems. J. Franklin. Inst. 2023, 360, 6862–6879. [Google Scholar] [CrossRef]

- Huang, M.; Manton, J.H. Coordination and consensus of networked agents with noisy measurements: Stochastic algorithms and asymptotic behavior. SIAM J. Control. Optim. 2009, 48, 134–161. [Google Scholar] [CrossRef]

- Li, T.; Zhang, J.F. Mean square average-consensus under measurement noises and fixed topologies: Necessary and sufficient conditions. Automatica 2009, 45, 1929–1936. [Google Scholar] [CrossRef]

- Cheng, L.; Hou, Z.G.; Tan, M. Necessary and sufficient conditions for consensus of double-integrator multi-agent systems with measurement noises. IEEE Trans. Autom. Contr. 2011, 56, 1958–1963. [Google Scholar] [CrossRef]

- Cheng, L.; Hou, Z.G.; Tan, M. A mean square consensus protocol for linear multi-agent systems with communication noises and fixed topologies. IEEE Trans. Autom. Contr. 2013, 59, 261–267. [Google Scholar] [CrossRef]

- Miao, G.; Xu, S.; Zhang, B. Mean square consensus of second-order multi-agent systems under Markov switching topologies. IMA J. Math. Control. Inf. 2014, 31, 151–164. [Google Scholar] [CrossRef]

- Ming, P.; Liu, J.; Tan, S. Consensus stabilization of stochastic multi-agent system with Markovian switching topologies and stochastic communication noise. J. Franklin Inst. 2015, 352, 3684–3700. [Google Scholar] [CrossRef]

- Li, M.; Deng, F.; Ren, H. Scaled consensus of multi-agent systems with switching topologies and communication noises. Nonlinear Anal. Hybrid. 2020, 36, 100839. [Google Scholar] [CrossRef]

- Guo, H.; Meng, M.; Feng, G. Mean square leader-following consensus of heterogeneous multi-agent systems with Markovian switching topologies and communication delays. Int. J. Robust. Nonlin. 2023, 33, 355–371. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, R.; Zhao, W. Stochastic leader-following consensus of multi-agent systems with measurement noises and communication time-delays. Neurocomputing 2018, 282, 136–145. [Google Scholar] [CrossRef]

- Zong, X.; Li, T.; Zhang, J.F. Consensus conditions of continuous-time multi-agent systems with time-delays and measurement noises. Automatica 2019, 99, 412–419. [Google Scholar] [CrossRef]

- Sun, F.; Shen, Y.; Kurths, J. Mean-square consensus of multi-agent systems with noise and time delay via event-triggered control. J. Franklin Inst. 2020, 357, 5317–5339. [Google Scholar] [CrossRef]

- Xing, M.; Deng, F.; Li, P. Event-triggered tracking control for multi-agent systems with measurement noises. Int. J. Syst. Sci. 2021, 52, 1974–1986. [Google Scholar] [CrossRef]

- Guo, S.; Mo, L.; Yu, Y. Mean-square consensus of heterogeneous multi-agent systems with communication noises. J. Franklin Inst. 2018, 355, 3717–3736. [Google Scholar] [CrossRef]

- Chen, W.; Ren, G.; Yu, Y. Mean-square output consensus of heterogeneous multi-agent systems with communication noises. IET Control Theory Appl. 2021, 15, 2232–2242. [Google Scholar] [CrossRef]

- Wang, C.; Liu, Z.; Zhang, A. Stochastic Bipartite Consensus for Second-Order Multi-Agent Systems with Communication Noise and Antagonistic Information. Neurocomputing 2023, 527, 130–142. [Google Scholar] [CrossRef]

- Du, Y.; Wang, Y.; Zuo, Z. Bipartite consensus for multi-agent systems with noises over Markovian switching topologies. Neurocomputing 2021, 419, 295–305. [Google Scholar] [CrossRef]

- Zhou, R.; Li, J. Stochastic consensus of double-integrator leader-following multi-agent systems with measurement noises and time delays. Int. J. Syst. Sci. 2019, 50, 365–378. [Google Scholar] [CrossRef]

- Zhang, R.; Zhang, Y.; Zong, X. Stochastic leader-following consensus of discrete-time nonlinear multi-agent systems with multiplicative noises. J. Franklin Inst. 2022, 359, 7753–7774. [Google Scholar] [CrossRef]

- Zong, X.; Li, T.; Zhang, J.F. Consensus of nonlinear multi-agent systems with multiplicative noises and time-varying delays. In Proceedings of the 2018 IEEE Conference on Decision and Control (CDC), Miami Beach, FL, USA, 17–19 December 2018; pp. 5415–5420. [Google Scholar]

- Chen, K.; Yan, C.; Ren, Q. Dynamic event-triggered leader-following consensus of nonlinear multi-agent systems with measurement noises. IET Control Theory Appl. 2023, 17, 1367–1380. [Google Scholar] [CrossRef]

- Tariverdi, A.; Talebi, H.A.; Shafiee, M. Fault-tolerant consensus of nonlinear multi-agent systems with directed link failures, communication noise and actuator faults. Int. J. Control 2021, 94, 60–74.

- Huang, Y. Adaptive consensus for uncertain multi-agent systems with stochastic measurement noises. Commun. Nonlinear Sci. 2023, 120, 107156.

- Bao, Y.; Chan, K.J.; Mesbah, A. Learning-based adaptive-scenario-tree model predictive control with improved probabilistic safety using robust Bayesian neural networks. Int. J. Robust Nonlin. 2023, 33, 3312–3333.

- Ma, T.; Zhang, Z.; Cui, B. Impulsive consensus of nonlinear fuzzy multi-agent systems under DoS attack. Nonlinear Anal. Hybr. 2022, 44, 101155.

- Chen, C.L.P.; Wen, G.X.; Liu, Y.J. Adaptive consensus control for a class of nonlinear multiagent time-delay systems using neural networks. IEEE Trans. Neur. Netw. Lear. Syst. 2014, 25, 1217–1226.

- Ma, H.; Wang, Z.; Wang, D. Neural-network-based distributed adaptive robust control for a class of nonlinear multiagent systems with time delays and external noises. IEEE Trans. Syst. Man. Cybern. Syst. 2015, 46, 750–758.

- Meng, W.; Yang, Q.; Sarangapani, J. Distributed control of nonlinear multiagent systems with asymptotic consensus. IEEE Trans. Syst. Man. Cybern. Syst. 2017, 47, 749–757.

- Mo, L.; Yuan, X.; Yu, Y. Neuro-adaptive leaderless consensus of fractional-order multi-agent systems. Neurocomputing 2019, 339, 17–25.

- Wen, G.; Yu, W.; Li, Z. Neuro-adaptive consensus tracking of multiagent systems with a high-dimensional leader. IEEE Trans. Cybern. 2016, 47, 1730–1742.

- Liu, J.; Wang, C.; Cai, X. Adaptive neural network finite-time tracking control for a class of high-order nonlinear multi-agent systems with powers of positive odd rational numbers and prescribed performance. Neurocomputing 2021, 419, 157–167.

- Godsil, C.; Royle, G.F. Algebraic Graph Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001.

- Stone, M.H. The generalized Weierstrass approximation theorem. Math. Mag. 1948, 21, 237–254.

- Ge, S.S.; Wang, C. Adaptive neural control of uncertain MIMO nonlinear systems. IEEE Trans. Neur. Netw. 2004, 15, 674–692.

- Jiang, Y.; Liu, L.; Feng, G. Adaptive optimal control of networked nonlinear systems with stochastic sensor and actuator dropouts based on reinforcement learning. IEEE Trans. Neur. Netw. Lear. Syst. 2022, 22, 1–14.

- Wang, D.; Ma, H.; Liu, D. Distributed control algorithm for bipartite consensus of the nonlinear time-delayed multi-agent systems with neural networks. Neurocomputing 2016, 174, 928–936.

- Bao, Y.; Velni, J.M.; Shahbakhti, M. Epistemic uncertainty quantification in state-space LPV model identification using Bayesian neural networks. IEEE Control Syst. Lett. 2020, 5, 719–724.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).