Abstract

Internet of Things (IoT) services and applications generate large amounts of sensor data. Sending all of these data to the cloud is challenging because it requires very high network bandwidth. Fog computing has emerged as a solution to this challenge: it extends the cloud computing model to the edge of the network, enabling a new class of services and applications with faster responses than the cloud can provide. Cloud and fog computing offer huge amounts of resources to their clients and devices, especially in IoT environments. However, inactive resources and the large number of applications and servers in cloud and fog data centers waste a huge amount of electricity. This paper proposes a Dynamic Power Provisioning (DPP) system for fog data centers, consisting of a multi-agent system that manages the power consumption of fog resources in local data centers. The DPP system was tested using the CloudSim and iFogSim tools. The results show that employing the DPP system in local fog data centers reduced the power consumption of fog resource providers.

Keywords:

cloud computing; fog computing; Internet of Things; big data; Cloud of Things; energy efficiency; machine-to-machine networks

MSC:

68M10; 68M99

1. Introduction

The term IoT refers to a state in which many of the entities around us are able to interconnect with each other through the Internet with no human involvement [1]. The cloud computing model enables on-demand network access to shared, configurable computing resources. Fog computing complements it: instead of sending all IoT data to the cloud, the fog offers secure storage and management close to the IoT devices.

Fog computing and cloud computing both provide a variety of resources and services on demand. The service providers in both models can operate numerous physical and virtual machines (VMs). A growing number of cloud service providers are expanding their data centers worldwide in order to meet the rapidly growing demand for data management capabilities and storage among clients and entities. Cloud data centers typically contain a large number of linked resources, including physical machines as well as virtual machines, that consume large amounts of electricity for their activities [2,3]. The system proposed in this paper (DPP) relies on switching inactive machines to a lower power state (Sleep/Wakeup or switched off) by migrating VMs between local data centers in a fog environment.

The construction and management of data centers must be cost-effective for cloud providers. As cloud computing scales up, power consumption increases, resulting in higher operational costs. Approximately 45% of the total cost of a data center is attributed to physical resources (such as CPU, memory, and storage) [4], and energy costs make up 15% of operating costs, according to [5]. Data centers have doubled their energy consumption in the past five years; infrastructure and energy costs will account for 75% of the overall operating cost [6]. It is therefore important for cloud data centers to reduce their energy consumption.

Virtualization technology is now used by most of the physical servers in cloud data centers. VMs are placed on different hosts and communicate with each other based on the service-level agreement (SLA) with cloud providers. It is important to maintain application performance isolation and security for each VM by providing it with enough resources, including CPU, memory, storage, and bandwidth. Virtualization technology allows for the running of multiple virtual servers on a single physical machine (PM), which helps with resource utilization and energy efficiency. Likewise, virtualization provides an efficient way to manage resources and reduce energy consumption, enabling cloud managers to deploy resources on-demand and in an orderly manner [7].

IaaS (infrastructure as a service) is one of the major services of public clouds with virtualization. Cloud providers optimize resource allocation by deploying virtual machines (VMs) on physical machines (PMs) based on tenants’ SLA requirements. As different mappings between VMs and PMs lead to different resource utilizations, cloud providers face the challenge of placing multiple VMs required by tenants efficiently on physical servers to minimize the number of active physical resources and energy consumption, resulting in reduced operation and management costs. VM placement has become a hot topic in recent years.

1.1. Internet of Things (IoT)

Initially, the IoT was intended to reduce human interaction and effort by employing several types of sensors that could gather data from the surrounding environment, organize it, and allow storage and management of that information [8,9]. The IoT is a modern innovation of the Internet. It allows entities (things) to gain access to information that was aggregated by other entities, or to take part in complex services [10,11]. The IoT is designed to enable entities to be connected anytime, anywhere, and with anything, using any network or service. A general overview of the IoT is shown in Figure 1 [12].

Figure 1.

IoT general concept [12].

IoT architecture comprises multiple layers of technologies to support IoT operations. The first layer consists of smart entities equipped with sensors. Sensors provide a way of linking physical and digital objects so that real-time data can be collected and managed. Sensors are available in different types for a variety of uses. In addition to measuring air quality and temperature, sensors can record pressure, humidity, flow, electricity, and movement. Sensors also have memory for storing a certain amount of data. Usually, the sensors embedded in IoT systems produce a huge volume of data. The majority of sensors need connectivity to a gateway in order to transfer the data collected from the surrounding environment. The gateways range from Wide Area Networks and Personal Area Networks to Local Area Networks. In the current network environment, networks, applications, and services between machines are supported by a variety of protocols. As the demand for services and applications continues to grow, it is becoming increasingly necessary to integrate multiple networks with different protocols and technologies to provide a wide range of IoT applications and services in a heterogeneous environment [12].

Considering the limitations of the IoT in terms of processing power and storage, collaboration between the IoT and cloud computing is necessary. This collaboration, referred to as the Cloud of Things (CoT), is one of the most effective solutions to many of the challenges associated with the IoT [13].

1.2. Cloud Computing

As a result of cloud computing, individuals and businesses have the opportunity to access dynamic and distributed computer resources such as storage, processing power, and services via the Internet on demand. Third parties control and administer these resources remotely. Webmail, social networking sites, and online storage services are all examples of cloud services [14].

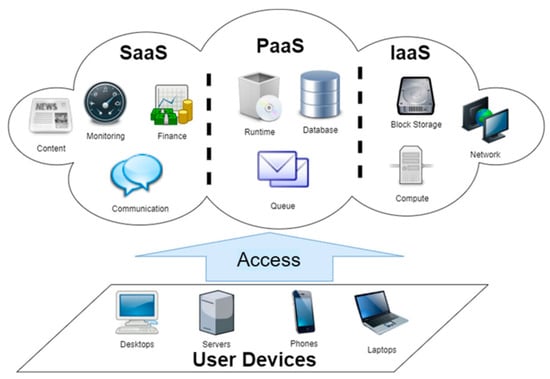

In most cases, a cloud provider is responsible for operating and administering a large data center, which is used to house and host cloud resources, as well as providing computing resources to cloud clients. These resources can be reached from anywhere by anyone via the Internet on demand [15]. The cloud computing infrastructure is illustrated in Figure 2.

Figure 2.

Cloud computing infrastructure [16].

IaaS, SaaS, and PaaS are the service models offered by cloud providers. Infrastructure as a Service (IaaS) is a business and service model that provides on-demand virtualized resources [17]. In this paradigm, the provider owns the machines and the applications; cloud clients do not own them but use them virtually. Under this model, the client rents the services on a pay-per-use basis [18]. Cloud users can access a cloud resource via a web-based interface.

The most popular cloud service model is Software as a Service (SaaS), in which cloud users can access the services and applications of the cloud and use them with no need to purchase or download them. It is also a storage service model, in which cloud users can store their data on a rental basis [19]. As in IaaS, cloud clients access and use SaaS applications and services through web-based services.

A third cloud service model is Platform as a Service (PaaS), in which clients rent infrastructure, software, and hardware from the cloud provider in order to run their applications on the Internet [20]. Applications can be developed and tested in this environment, which is particularly useful for application developers.

Presently, cloud computing is growing rapidly across different services and applications. It has become one of the most significant technologies for computing infrastructure, applications, and services [21].

1.3. Fog Computing

The Cloud of Things (CoT) is a combination of the Internet of Things and cloud computing. The reason behind this collaboration is that the IoT is limited in terms of privacy, performance, reliability, security, processing power, and storage. The combination is one of the useful choices for solving most IoT challenges [22]. In addition to simplifying IoT data transfer, the CoT facilitates the installation and integration of complicated data between entities [23,24]. It is, however, challenging to create new IoT services and applications due to the large number of IoT devices and the variety of paradigms they follow. The large volumes of data generated by IoT devices and sensors must be analyzed and processed in order to determine the correct action to be taken. A high-bandwidth network is therefore needed to transfer all of the data into a cloud environment. Fog computing can be used to solve this problem [24,25].

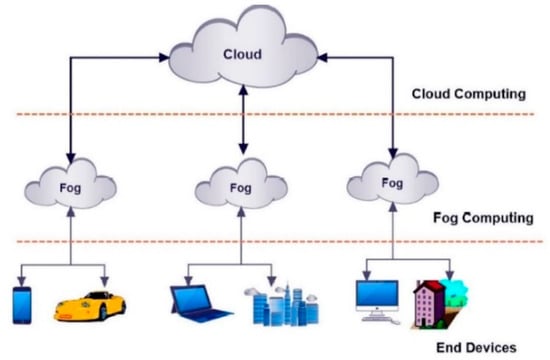

Fog computing, a concept introduced by Cisco [26], has the IoT as one of its principal applications. It offers a number of benefits to a wide range of fields, particularly the Internet of Things. Like cloud computing, fog computing provides storage and processing to IoT devices, but it does so locally rather than transferring the data to a cloud server. In general, fog computing is an extension of cloud computing that is located closer to end users (endpoints) [22], as shown in Figure 3.

Figure 3.

Fog computing is an expansion of the cloud but closer to end devices [25].

With fog computing in IoT environments, performance improves and the amount of data transferred to the cloud for storage and processing decreases. Instead of sending sensors’ data to the cloud, network end devices process, analyze, and store the data locally. As a result, network traffic and latency are reduced [27].

The following points clarify how fog computing functions. Data from IoT devices are collected by the fog devices closest to the network edge. Afterwards, the fog IoT application selects the best location for analyzing the data. In general, there are three kinds of data [22,28]:

- The most time-sensitive data: these are processed on the fog node closest to the entity that generated the data.

- Data that can wait seconds or minutes for an action or response: these are passed to a fog node for processing, assessment, and response.

- Data that are less time-sensitive and can tolerate longer delays: these are sent to the cloud, where they are archived, analyzed, and permanently stored.
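The three-way routing described above can be sketched in Python as follows. This is only an illustration, not the implementation used in the paper: the names `Tier` and `route_by_latency` and the two numeric bounds (one second and five minutes) are assumptions chosen to make the example concrete, since the text only speaks of "seconds or minutes".

```python
from enum import Enum


class Tier(Enum):
    NEAREST_FOG_NODE = "nearest fog node"
    FOG_NODE = "fog node"
    CLOUD = "cloud"


# Hypothetical bounds; the paper does not give numeric limits.
URGENT_LIMIT_S = 1.0   # data that must be handled almost immediately
FOG_LIMIT_S = 300.0    # data that can wait seconds or minutes


def route_by_latency(max_delay_s: float) -> Tier:
    """Pick a processing tier from the delay (in seconds) the data can tolerate."""
    if max_delay_s < 0:
        raise ValueError("max_delay_s must be non-negative")
    if max_delay_s <= URGENT_LIMIT_S:
        return Tier.NEAREST_FOG_NODE
    if max_delay_s <= FOG_LIMIT_S:
        return Tier.FOG_NODE
    return Tier.CLOUD
```

Under these assumed bounds, a reading that must be acted on within 50 ms stays on the nearest fog node, while data that can wait an hour is forwarded to the cloud.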

Our paper’s contributions can be summarized in the following points:

- Proposing a Dynamic Power Provisioning (DPP) system in fog data centers, which consists of a multi-agent system that manages the power consumption for fog resources in the local data centers.

- The proposed system manages, monitors, and coordinates the jobs running in fog data centers by using a fog service broker. This broker integrates the IoT environment with the Edge-Fog on one side and with the cloud environment on the other.

- Reducing the amount of data transferred to the cloud environment.

- The outputs of the proposed system show that employing the DPP system in the local fog data centers reduced power consumption for fog resource providers.

The research method of this paper is experimental. The paper describes what was done to answer the research question, how it was done, the rationale for the experimental design, and how the results were analyzed.

This paper is organized as follows: The second section provides a literature review. The third section presents the proposed DPP system model, and the fourth section is its evaluation. The conclusion is the last section of the paper.

2. Related Works

There is a lot of research regarding how the cloud and fog computing models monitor their resources and how they can reduce the power consumption during their jobs. The purpose of this section is to introduce several models that have been introduced in the field of fog computing and cloud computing.

Al-Ayyoub et al. [29] presented the Dynamic Resources Provisioning and Monitoring (DRPM) system, which organizes cloud resources through the use of multi-agent systems. The DRPM system also takes into consideration the QoS for cloud customers. Moreover, it incorporates a VM selection algorithm known as Host Fault Detection (HFD) that reduces the amount of power consumed by cloud providers by migrating VMs within their data centers. The DRPM system was assessed using the CloudSim simulator.

Patel and Patel in [2] proposed switching inactive physical machines to lower power states (sleep/wake-up or switched off) while maintaining the performance requirements of customers in cloud data centers. The machines operating in a data center are classified into three categories based on their usage: overloaded machines, whose usage is above a specific level; under-loaded machines, whose usage is below a specific level; and normal machines, which are all the rest. The resource provider shifts workloads between machines without overloading the target machines. It selects some virtual machines from each overloaded machine and moves them to other machines so that the source machine becomes normal while the target machine does not become overloaded. The system also tries to move all virtual machines off each under-loaded machine so that, once all of them have been migrated successfully, the machine can be turned off to save energy.

Katal et al. in [30] explained that there are different models for assessing power consumption in storage devices, servers, and network devices. The paper suggested a three-step model (reconfiguration, optimization, and monitoring) to optimize power consumption. According to the paper, the energy enhancement policy could be controlled by power consumption estimation models, which could result in a 20% reduction in energy consumption. Jiang et al. in [31] examined the problem of power management in virtualized data centers in the early days of cloud computing. The objective was to use virtualization technologies in data centers to apply power-control methods and policies dynamically. In this method, the resource administrator incorporates two elements: a local administrator and a global administrator. Local policies are the power management policies of the guest OS. Global policies, on the other hand, are derived from the local administrator policies for the location of the VM.

H. Zhao et al. in [32] framed the dynamic placement of applications and services in containers as a power-aware problem. The cost and size of containers can be altered, and live migrations take place at regular intervals to move virtual machines from one machine to another. Jeba et al. in [33] suggested defining lower and upper utilization levels (thresholds) to identify under-loaded and overloaded servers. To reduce the likelihood of SLA violations when a machine’s utilization is above the upper level, the paper proposed moving some virtual machines off that machine. Similarly, if utilization falls below the lower level, all VMs on the under-loaded machine are relocated and the machine is turned off in order to reduce energy consumption. However, the paper presented no exact method for determining the lower and upper levels. A summary of related work is shown in Table 1.

Table 1.

A Summary of Related Work.

Singh et al. in [39] presented fog computing along with real-time applications related to it. In addition to demonstrating that fog computing is capable of handling and processing the big data generated by IoT devices, the paper stated that fog computing is capable of addressing latency and congestion concerns associated with fog models. M. Chiang and T. Zhang [40] described how fog computing is used in conjunction with the Internet of Things. They considered the emerging interest in developing IoT systems and the ways in which the challenges related to present networking and computing models can be solved.

Hong et al. in [27] concluded that combining fog computing with the Internet of Things gives rise to fog as a service (FaaS). In FaaS, the service provider is responsible for building and maintaining a network of fog nodes across a range of geographies and hosts various clients from different sectors. Each fog node has its own local storage, computation, and networking capabilities. Unlike the cloud, which is normally managed by large businesses that are able to build and manage large data centers, FaaS offers a variety of computing, storage, and management services that can be established and operated by large and small businesses at different levels according to customer needs.

The power management systems and frameworks reviewed above were proposed for cloud computing; none of them provides a system or mechanism that can be deployed on fog computing. The system proposed in this paper (the DPP system) is a dynamic system that can reduce and organize the power consumption of data centers in the fog environment.

3. System Model

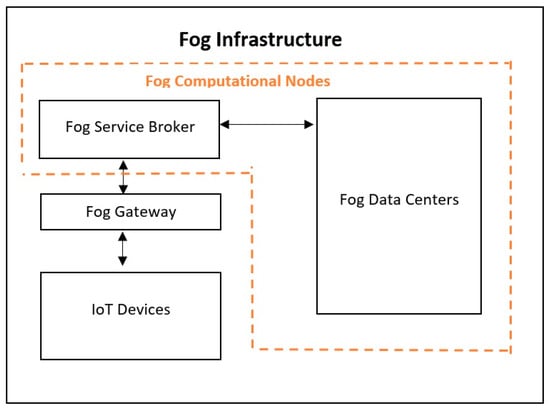

In this study, the main goal of the system model is to reduce the energy consumption in fog data centers. Thus, it is necessary to first describe the fog infrastructure in order to illustrate this model. Figure 4 shows the fog infrastructure and components.

Figure 4.

Fog infrastructure and components.

The fog service broker is essentially an agent manager that monitors and coordinates the jobs running in the fog data centers. This component is the point at which the IoT environment is integrated with the Edge-Fog on one side and with the cloud environment on the other. IoT devices translate instructions into physical actions based on what they sense in the surrounding environment. Several wireless or wired transmission protocols can be used to connect IoT devices to nearby gateways. IoT devices and fog service brokers are connected through a fog gateway. In some situations, the fog gateway may also be able to connect and transfer data directly to the cloud environment without first contacting the fog service broker. This can happen when the data to be transferred are less time-sensitive and can tolerate delay.

Fog data centers are in charge of application execution, data storage, and other operations’ control. They can be used for storing the users’ credentials, reserving the application list, storing the data from IoT devices, and improving the storage capacity of the cloud for the purpose of supporting data management.

Generally, fog service brokers or fog gateways determine the optimum way to handle the data received from IoT applications and devices. When the data are highly time-sensitive, they are processed by the fog nodes closest to the entity that generated the data. If the data can wait seconds or minutes for an action or response, they are passed to a fog node for processing, assessment, and action. Finally, if the data are less time-sensitive and can tolerate longer delays, they are usually transferred to the cloud for storage and analysis, including historical and archival data analysis and big data analytics.

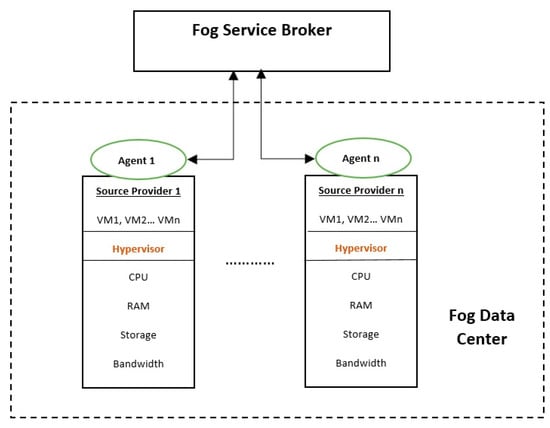

Due to the rapid increase in IoT devices’ requests for data storage and processing, fog resource providers have created a number of data centers near the IoT environment. A fog data center can contain many physical and virtual machines (VMs). Figure 5 shows our proposed system for the fog data center. The hypervisor on each machine is used to create and organize the VMs on top of the physical machine.

Figure 5.

Proposed system.

In our proposed system, each physical machine has an agent that is responsible for sending feedback about the status of the jobs running on the machine to the fog service broker. The agent also decides whether VMs need to be migrated between physical machines so that inactive machines can be turned off to reduce power consumption within data centers.

Machines operating in fog data centers are categorized into three types depending on their utilization: overloaded machines, whose usage is above a specific level; under-loaded machines, whose usage is below a specific level; and normal machines, which are all the rest.
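The three categories can be expressed as a small Python function. This is a minimal sketch: the function name `classify_host` and the idea of passing both thresholds as arguments are assumptions made for illustration, and the example values 0.2 and 0.8 used in the note below are placeholders rather than thresholds taken from the paper.

```python
def classify_host(utilization: float, lower: float, upper: float) -> str:
    """Label a host by its CPU utilization (all values are fractions in [0, 1])."""
    if not 0.0 <= lower < upper <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= lower < upper <= 1")
    if utilization > upper:
        return "overloaded"
    if utilization < lower:
        return "under-loaded"
    return "normal"
```

With the placeholder thresholds 0.2 and 0.8, a host at 90% utilization is labeled overloaded, one at 5% is labeled under-loaded, and one at 50% is labeled normal.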

There are many physical and virtual machines in the fog data centers, and they consume a considerable amount of electricity as a result of their operation. Our proposed approach to decreasing energy consumption in fog data centers is adapted from [2], where it was proposed for cloud data centers. While maintaining customers’ performance requirements in fog data centers, the proposed DPP system uses a mechanism that switches inactive physical machines into lower power states (Sleep/Wakeup or switched off). This operation is performed by the fog resource providers’ agents.

The agent at the fog resource provider shifts workloads between machines without overloading the target machines. It selects some virtual machines from each overloaded machine and moves them to other machines so that the source machine becomes normal while the target machine does not become overloaded. The system also tries to move all virtual machines off each under-loaded machine so that, once all of them have been migrated successfully, the machine can be turned off to save energy. To do so, several factors should be considered, such as the current workload, resource availability on target machines, and migration feasibility, while ensuring that the migration process does not overload the target machines during the transfer.
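The evacuation of under-loaded machines can be sketched as follows. This is a simplified illustration under stated assumptions, not the DPP implementation: hosts are assumed to be identical, each VM is described only by the share of one host’s CPU it uses (in percent), migration cost is ignored, and the packing rule (place each VM on the busiest host that still stays at or below the upper threshold) is one possible heuristic. The name `plan_evacuation` and both threshold parameters are hypothetical.

```python
from typing import Dict, List, Tuple

Migration = Tuple[str, str, float]  # (source host, target host, VM load)


def plan_evacuation(
    hosts: Dict[str, List[float]], lower: float, upper: float
) -> Tuple[List[Migration], List[str]]:
    """Return (migrations, hosts that can be switched off).

    `hosts` maps a host name to the CPU loads of the VMs it runs.
    """
    placement = {name: list(vms) for name, vms in hosts.items()}
    migrations: List[Migration] = []
    switched_off: List[str] = []

    # Visit hosts from least to most utilized (order fixed at the start).
    for source in sorted(placement, key=lambda h: sum(placement[h])):
        if sum(placement[source]) >= lower:
            continue  # not under-loaded, leave it alone
        # Trial loads of every other host that is still switched on.
        trial = {
            h: sum(vms)
            for h, vms in placement.items()
            if h != source and h not in switched_off
        }
        moves: List[Migration] = []
        feasible = True
        for vm in sorted(placement[source], reverse=True):
            fits = [t for t in trial if trial[t] + vm <= upper]
            if not fits:
                feasible = False  # a VM has nowhere to go: keep the host on
                break
            target = max(fits, key=lambda t: trial[t])
            trial[target] += vm
            moves.append((source, target, vm))
        if not feasible:
            continue
        # Commit the plan only when the host can be emptied completely.
        for _, target, vm in moves:
            placement[target].append(vm)
        placement[source] = []
        migrations.extend(moves)
        switched_off.append(source)
    return migrations, switched_off
```

A host is switched off only if every one of its VMs finds a target, which mirrors the requirement that target machines must not become overloaded. Relieving overloaded machines would need a separate step that is not shown here.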

When mapping virtual machines (VMs) to physical machines in a fog computing environment, it is necessary to develop an allocation strategy that optimizes resource utilization, minimizes energy consumption, and ensures efficient processing. Such a strategy should take into account resource utilization, load balancing, dynamic mapping, fault tolerance, redundancy, optimization, and security.

To determine which machines are considered under-loaded, overloaded, or normal, the Lower Threshold (T Lower), which identifies the lowest-utilized hosts, is used. First, the total workload (WTotal) of all available active machines (hosts) in the data center is calculated using Equation (1). The maximum number of virtual machines to be migrated (HMax) depends on the total workload (WTotal) in the data center, as in Equation (2). Afterward, the lower threshold value (T Lower) is calculated as in Equation (3). Once T Lower has been determined, the system attempts to move all the virtual machines off the lowest-utilized hosts. The target host should have sufficient resources to contain the migrated VMs.

where WTotal: total workload of the data center; Ui: utilization of host i; Htotal: number of hosts.

HMax: maximum number of hosts that can be migrated.

T Lower: lower threshold.

4. Experiments and Results

To evaluate the performance of the proposed DPP system, CloudSim [41] and iFogSim [42] were employed. iFogSim is considered one of the most popular simulators for fog computing, and CloudSim is a popular simulator for cloud computing environments.

The characteristics of the physical machines and virtual machines used in this simulation are presented in Table 2 and Table 3. The simulation was conducted in two parts, each with the same settings. It comprised 300 physical machines and 900 virtual machines. Real power consumption data were taken from the SPECpower benchmark [43], as shown in Table 4. Utilization here refers to CPU utilization.

Table 2.

Physical machine specification.

Table 3.

Specification of virtual machines.

Table 4.

Power consumption (watts) at different load levels.

The power consumption of physical machines can be estimated from a linear relationship between power consumption and CPU utilization. As Table 4 shows, even a lightly utilized machine still consumes a significant amount of energy. Therefore, it is essential to turn off such inactive machines, or to reduce the number of virtual machines running on physical machines that are not in use, in order to reduce the power consumed by the physical machines themselves.
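A linear power model of this kind is commonly written as P(u) = P_idle + (P_max − P_idle) · u, where u is the CPU utilization between 0 and 1. The sketch below illustrates it in Python. The function names and the wattage figures in the note that follows are placeholders chosen for the example and are not the values of Table 4.

```python
def linear_power(utilization: float, p_idle: float, p_max: float) -> float:
    """Estimated power draw in watts at a CPU utilization in [0, 1]."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # Idle draw plus a share of the dynamic range proportional to the load.
    return p_idle + (p_max - p_idle) * utilization


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy used when drawing `power_watts` for `hours`, in kilowatt-hours."""
    return power_watts * hours / 1000.0
```

For a hypothetical host with `p_idle=100.0` and `p_max=200.0`, the model gives 150 W at 50% utilization and still 100 W when the host is completely idle, which is why consolidating VMs and switching idle hosts off saves energy.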

The following steps describe the main phases of our simulation:

- In IoT, devices generate and send data in accordance with the surrounding environment. These data are sent through fog gateways to the fog service broker.

- Fog service brokers receive data from IoT devices and search for the most appropriate data centers to process the data.

- Later, the fog service broker transmits the data to the appropriate resource providers (data centers) and contacts the agent associated with each provider.

- The fog service broker may divide the data between several resource providers inside the data center for the purpose of reducing costs and accelerating the process.

- While the data are being processed inside the data center, the provider’s agent periodically sends the fog service broker messages regarding the progress of the running jobs. In this manner, the fog service broker can determine whether the data processing has been successful, which helps it move the data to a different provider if a failure occurs.

- Simultaneously, the agent attached to the provider inside the data center periodically checks the CPU utilization of the machines inside the data center.

- The fog data centers apply the DPP system to identify the machines that are inactive or whose utilization falls below the Lower Threshold, migrate their VMs to other physical machines, and then shut those machines down.

- Finally, the fog service broker transmits the data outputs to the IoT environment.
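The interaction between the fog service broker and the providers’ agents in the steps above can be sketched as follows. This is a toy model under stated assumptions, not the simulation code: the class `FogServiceBroker`, its method names, the round-robin assignment of jobs, and the restart of moved jobs from zero progress are all illustrative choices that the paper does not specify.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FogServiceBroker:
    providers: List[str]
    assignments: Dict[str, str] = field(default_factory=dict)  # job -> provider
    progress: Dict[str, float] = field(default_factory=dict)   # job -> 0.0..1.0

    def dispatch(self, jobs: List[str]) -> None:
        """Spread jobs over the providers in round-robin order (an assumption)."""
        for i, job in enumerate(jobs):
            self.assignments[job] = self.providers[i % len(self.providers)]
            self.progress[job] = 0.0

    def report(self, job: str, fraction_done: float) -> None:
        """Called periodically by a provider's agent with the job's progress."""
        self.progress[job] = fraction_done

    def handle_failure(self, failed: str) -> List[str]:
        """Move unfinished jobs off a failed provider and return the moved jobs."""
        healthy = [p for p in self.providers if p != failed]
        if not healthy:
            raise RuntimeError("no healthy provider left")
        moved: List[str] = []
        for job, provider in list(self.assignments.items()):
            if provider == failed and self.progress[job] < 1.0:
                self.assignments[job] = healthy[len(moved) % len(healthy)]
                self.progress[job] = 0.0  # restart on the new provider
                moved.append(job)
        self.providers = healthy
        return moved
```

In this sketch the progress reports are what allow the broker to tell finished jobs from unfinished ones, so only the unfinished jobs are moved when a provider fails.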

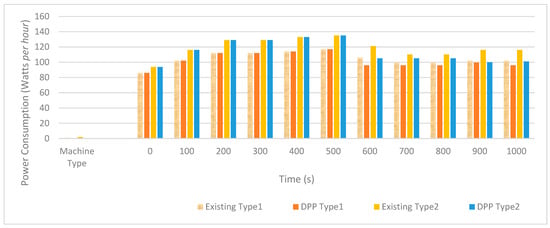

Figure 6 shows the simulation results of our experiment. The DPP (Dynamic Power Provisioning) system significantly reduced the power consumed by physical machines, particularly post-500, which lowered energy consumption within the data center. When physical machines became under-utilized or inactive, the DPP system migrated or shut down the virtual machines running on them so that those machines could be switched to a lower power state. By adapting to the changing utilization of the physical resources in this way, the DPP system reduced the overall power consumption of the entire data center. This outcome shows how a dynamic system such as DPP can manage resources, adapt to fluctuations in load, and help reduce the environmental footprint of data centers.

Figure 6.

Simulation results.

5. Conclusions

IoT services and applications generate a large amount of data from sensors and other machines. Fog computing extends the cloud computing model to the edge of the network. Cloud and fog computing offer a huge amount of resources to their clients and devices, especially in IoT environments. Most of these resources are added to large data centers in order to meet the growing number of requests coming from cloud clients, IoT devices, and applications. In some cases, these resources remain inactive for quite some time before new tasks are sent to cloud or fog servers for processing, which wastes a huge amount of electricity. This paper focused on implementing a Dynamic Power Provisioning (DPP) system within fog data centers. The DPP system comprises a multi-agent framework designed to oversee and regulate the power consumption of fog resources within local data centers, with the aim of optimizing resource utilization and reducing energy waste. The proposed system was evaluated using CloudSim and iFogSim, simulation tools for cloud and fog computing environments, respectively. The results indicate a reduction in power consumption for fog resource providers when the DPP system is deployed in local fog data centers, which supports the effectiveness of the proposed DPP framework in managing power usage. By implementing systems such as DPP, fog data centers can adapt power consumption dynamically, aligning it more closely with actual resource demands. This not only enhances operational efficiency but also reduces electricity waste, thereby supporting sustainability objectives.

Author Contributions

Conceptualization, M.A.M. and T.A.; methodology, M.A.M.; validation, M.A.M. and T.A.; formal analysis, T.A.; investigation, T.A.; resources, M.A.M.; data curation, M.A.M. and T.A.; writing—original draft preparation, M.A.M. and T.A.; writing—review and editing, M.A.M. and T.A.; visualization, T.A.; supervision, M.A.M. and T.A.; funding acquisition, M.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was funded by Institutional Fund Projects under grant no. IFPIP: 1122-849-1443.

Data Availability Statement

There is no statement regarding the data.

Acknowledgments

The authors gratefully acknowledge technical and financial support provided by the Ministry of Education and King Abdulaziz University, DSR, Jeddah, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Atlam, H.F.; Alenezi, A.; Walters, R.J.; Wills, G.B. An Overview of Risk Estimation Techniques in Risk-based Access Control for the Internet of Things. In Proceedings of the 2nd International Conference on Internet of Things, Big Data and Security (IoTBDS 2017), Porto, Portugal, 24–26 April 2017; pp. 254–260.

- Patel, N.; Patel, H. Energy efficient strategy for placement of virtual machines selected from underloaded servers in compute Cloud. J. King Saud Univ.-Comput. Inf. Sci. 2020, 32, 700–708.

- Alwada’n, T.; Alkhdour, T.; Alarabeyyat, A.; Rodan, A. Enhanced Power Utilization for Grid Resource Providers. Adv. Sci. Technol. Eng. Syst. 2020, 5, 341–346.

- Griner, C.; Zerwas, J.; Blenk, A.; Ghobadi, M.; Schmid, S.; Avin, C. Cerberus: The Power of Choices in Datacenter Topology Design—A Throughput Perspective. Proc. ACM Meas. Anal. Comput. Syst. 2021, 5, 38.

- Jin, C.; Bai, X.; Zhang, X.; Xu, X.; Tang, Y.; Zeng, C. A measurement-based power consumption model of a server by considering inlet air temperature. Energy 2022, 261, 125126.

- Belady, C.L. In the Data Center, Power and Cooling Costs More than the IT Equipment It Supports. Electronics Cooling. Available online: https://www.electronics-cooling.com/2007/02/in-the-data-center-power-and-cooling-costs-more-than-the-it-equipment-it-supports/ (accessed on 12 December 2023).

- Wei, C.; Hu, Z.; Wang, Y. Exact algorithms for energy-efficient virtual machine placement in data centers. Future Gener. Comput. Syst. 2020, 106, 77–91.

- Kumar, D.; Rishu; Annam, S. Fog Computing Applications with Decentralized Computing Infrastructure—Systematic Review. In Proceedings of the Seventh International Conference on Mathematics and Computing: ICMC 2021, Kolkata, India, 12–15 January 2021; Springer: Singapore, 2022; pp. 499–509.

- Atlam, H.F.; Walters, R.J.; Wills, G.B.; Daniel, J. Fuzzy logic with expert judgment to implement an adaptive risk-based access control model for IoT. Mob. Netw. Appl. 2021, 26, 2545–2557.

- Alouffi, B.; Hasnain, M.; Alharbi, A.; Alosaimi, W.; Alyami, H.; Ayaz, M. A Systematic Literature Review on Cloud Computing Security: Threats and Mitigation Strategies. IEEE Access 2021, 9, 57792–57807.

- Abd El-Mawla, N.; Badawy, M. Eco-Friendly IoT Solutions for Smart Cities Development: An Overview. In Proceedings of the 2023 1st International Conference on Advanced Innovations in Smart Cities (ICAISC), Jeddah, Saudi Arabia, 23 January 2023; IEEE: Washington, DC, USA, 2023; pp. 1–6.

- Laghari, A.A.; Wu, K.; Laghari, R.A.; Ali, M.; Khan, A.A. A review and state of art of Internet of Things (IoT). Arch. Comput. Methods Eng. 2021, 29, 1395–1413.

- Atlam, H.F.; Alenezi, A.; Alharthi, A.; Walters, R.; Wills, G. Integration of cloud computing with internet of things: Challenges and open issues. In Proceedings of the 2017 IEEE International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Exeter, UK, 21–23 June 2017; pp. 670–675.

- Golightly, L.; Chang, V.; Xu, Q.A.; Gao, X.; Liu, B.S. Adoption of cloud computing as innovation in the organization. Int. J. Eng. Bus. Manag. 2022, 14, 18479790221093992.

- Caterino, M.; Fera, M.; Macchiaroli, R.; Pham, D.T. Cloud remanufacturing: Remanufacturing enhanced through cloud technologies. J. Manuf. Syst. 2022, 64, 133–148.

- Chen, C.; Chiang, M.; Lin, C. The High Performance of a Task Scheduling Algorithm Using Reference Queues for Cloud-Computing Data Centers. Electronics 2020, 9, 371.

- Abbasi, A.A.; Abbasi, A.; Shamshirband, S.; Chronopoulos, A.T.; Persico, V.; Pescapè, A. Software-defined cloud computing: A systematic review on latest trends and developments. IEEE Access 2019, 7, 93294–93314.

- Sharma, R.K. The Ultimate Guide to Cloud Computing Technology. Web: February 2020. Available online: https://www.netsolutions.com/insights/what-is-cloud-computing/ (accessed on 12 July 2020).

- Loukis, E.; Janssen, M.; Mintchev, I. Determinants of software-as-a-service benefits and impact on firm performance. Decis. Support Syst. 2019, 117, 38–47.

- Stone, T.W.; Alarcon, J.L. Platform as a Service in Software Development: Key Success Factors. In Proceedings of the Ninth International Conference on Engaged Management Scholarship, Antwerp, Belgium, 5–7 September 2019.

- Alwada’n, T.; Al-Tamimi, A.K.; Mohammad, A.H.; Salem, M.; Muhammad, Y. Dynamic congestion management system for cloud service broker. Int. J. Electr. Comput. Eng. 2023, 13, 872–883.

- Al Masarweh, M.; Alwada’n, T.; Afandi, W. Fog Computing, Cloud Computing and IoT Environment: Advanced Broker Management System. J. Sens. Actuator Netw. 2022, 11, 84.

- Hamdan, S.; Ayyash, M.; Almajali, S. Edge-computing architectures for internet of things applications: A survey. Sensors 2020, 20, 6441.

- Avasalcai, C.; Murturi, I.; Dustdar, S. Edge and fog: A survey, use cases, and future challenges. Fog Comput. Theory Pract. 2020, 43–65.

- Sabireen, H.; Neelanarayanan, V.J. A review on fog computing: Architecture, fog with IoT, algorithms and research challenges. ICT Express 2021, 7, 162–176.

- Alli, A.A.; Alam, M.M. The fog cloud of things: A survey on concepts, architecture, standards, tools, and applications. Internet Things 2020, 9, 100177.

- Hong, X.; Zhang, J.; Shao, Y.; Alizadeh, Y. An Autonomous Evolutionary Approach to Planning the IoT Services Placement in the Cloud-Fog-IoT Ecosystem. J. Grid Comput. 2022, 20, 32.

- Cisco. Fog Computing and the Internet of Things: Extend the Cloud to Where the Things Are. April 2015. Available online: https://www.cisco.com/c/dam/en_us/solutions/trends/iot/docs/computing-overview.pdf (accessed on 14 July 2020).

- Al-Ayyoub, M.; Jararweh, Y.; Daraghmeh, M.; Althebyan, Q. Multi-agent based dynamic resource provisioning and monitoring for cloud computing systems infrastructure. Clust. Comput. 2015, 18, 919–932.

- Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data centers: A survey on software technologies. Clust. Comput. 2023, 26, 1845–1875.

- Jiang, C.; Wang, Y.; Ou, D.; Li, Y.; Zhang, J.; Wan, J.; Luo, B.; Shi, W. Energy efficiency comparison of hypervisors. Sustain. Comput. Inform. Syst. 2019, 22, 311–321.

- Zhao, H.; Wang, J.; Liu, F.; Wang, Q.; Zhang, W.; Zheng, Q. Power-aware and performance-guaranteed virtual machine placement in the cloud. IEEE Trans. Parallel Distrib. Syst. 2018, 29, 1385–1400.

- Jeba, J.A.; Roy, S.; Rashid, M.O.; Atik, S.T.; Whaiduzzaman, M. Towards green cloud computing an algorithmic approach for energy minimization in cloud data centers. In Research Anthology on Architectures, Frameworks, and Integration Strategies for Distributed and Cloud Computing; IGI Global: Hershey, PA, USA, 2021; pp. 846–872.

- Naranjo, P.G.V.; Baccarelli, E.; Scarpiniti, M. Design and energy-efficient resource management of virtualized networked Fog architectures for the real-time support of IoT applications. J. Supercomput. 2018, 74, 2470–2507.

- Nan, Y.; Li, W.; Bao, W.; Delicato, F.C.; Pires, P.F.; Dou, Y.; Zomaya, A.Y. Adaptive Energy-Aware Computation Offloading for Cloud of Things Systems. IEEE Access 2017, 5, 23947–23957.

- Conti, S.; Faraci, G.; Nicolosi, R.; Rizzo, S.A.; Schembra, G. Battery Management in a Green Fog-Computing Node: A Reinforcement-Learning Approach. IEEE Access 2017, 5, 21126–21138.

- Wang, S.; Huang, X.; Liu, Y.; Yu, R. CachinMobile: An energy-efficient users caching scheme for fog computing. In Proceedings of the 2016 IEEE/CIC International Conference on Communications in China (ICCC), Chengdu, China, 27–29 July 2016; IEEE: Washington, DC, USA, 2016; pp. 1–6.

- Yang, Y.; Wang, K.; Zhang, G.; Chen, X.; Luo, X.; Zhou, M.T. Maximal energy efficient task scheduling for homogeneous fog networks. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Honolulu, HI, USA, 15–19 April 2018; IEEE: Washington, DC, USA, 2018; pp. 274–279.

- Singh, S.P.; Nayyar, A.; Kumar, R.; Sharma, A. Fog computing: From architecture to edge computing and big data processing. J. Supercomput. 2019, 75, 2070–2105.

- Chiang, M.; Zhang, T. Fog and IoT: An Overview of Research Opportunities. IEEE Internet Things J. 2016, 3, 854–864.

- Bergmayr, A.; Breitenbücher, U.; Ferry, N.; Rossini, A.; Solberg, A.; Wimmer, M.; Kappel, G.; Leymann, F. A systematic review of cloud modeling languages. ACM Comput. Surv. (CSUR) 2018, 51, 1–38.

- Mahmud, R.; Pallewatta, S.; Goudarzi, M.; Buyya, R. iFogSim2: An extended iFogSim simulator for mobility, clustering, and microservice management in edge and fog computing environments. J. Syst. Softw. 2022, 190, 111351.

- Arshad, U.; Aleem, M.; Srivastava, G.; Lin, J.C. Utilizing power consumption and SLA violations using dynamic VM consolidation in cloud data centers. Renew. Sustain. Energy Rev. 2022, 167, 112782.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).