Abstract

Generalization is challenging in small-sample-size regimes with over-parameterized deep neural networks, and a better representation is generally beneficial for generalization. In this paper, we present a novel method for controlling the internal representation of deep neural networks from a topological perspective. Leveraging the power of topological data analysis (TDA), we study the push-forward probability measure induced by the feature extractor, and we formulate, for the first time, a notion of “separation” that characterizes a property of this measure in terms of persistent homology. Moreover, we analyze this property theoretically and prove that enforcing it leads to better generalization. To impose this property, we propose a novel weight function to extract topological information, and we introduce a new regularizer consisting of three terms to guide representation learning in a topology-aware manner. Experimental results on a point cloud optimization task show that our method produces the intended mass concentration and separation effects. Furthermore, results on image classification show that our method outperforms previous methods by a significant margin.

Keywords:

deep neural network; representation space; persistent homology; push-forward probability measure

MSC: 68T07

1. Introduction

Although over-parameterized deep neural networks generalize well in practice when sufficient data are provided, generalization is more difficult in small-sample-size regimes and requires careful consideration. Since the ability to learn task-specific representations is beneficial for generalization, considerable effort has been dedicated to imposing structure on the latent (representation) space via additional regularizers [1,2], guiding the mapping from the input space into the internal space, or controlling properties of the internal representations [3]. However, internal representations are high-dimensional, discrete, sparse, incomplete and noisy, and extracting information from such data is challenging.

Several families of methods can be used to explore and control internal representations: (1) For algebraic methods based on vector spaces [4], coordinates are not natural, the power of linear transformations is limited, and low-dimensional visualizations cannot faithfully characterize the data. (2) For statistical methods [5], a small sample size limits the power of analysis, inference and computation; asymptotic statistics cannot be used; and the results may exhibit large variance. (3) For geometric methods based on distances [6] or manifold assumptions [7], it is difficult to capture the global picture, and the metrics are not theoretically justified [8] (for neural networks, the notion of distance is constructed by the feature extractor, which is hard to interpret). Individual parameter choices may significantly influence the results, and constraints based on geometric information, such as pairwise distances, may be too strict to be realistic. (4) Methods based on calculus [9,10] can capture local information in a small neighborhood of each point, but their performance is questionable when high-dimensional data are sparse and the sample size is small.

Beyond these traditional methods, topological data analysis (TDA) offers a fundamentally different and comparatively unexplored perspective. The advantages of TDA methods [8,11,12,13] are as follows: (1) TDA studies the global “shape” of data and explores the underlying topological and geometric structures of point clouds. As a complement to localized and generally more rigid geometric features, topological features are suitable for capturing multi-scale, global and intrinsic properties of data. (2) Topological methods study geometric features in a way that is less sensitive to the choice of metric; this insensitivity is beneficial when the metric is not well understood or is only coarsely determined [8], as in neural networks. (3) Topological methods are coordinate-free and focus only on the intrinsic geometric properties of the objects under study. (4) Instead of fixing a single spatial scale at which to understand and control the data, persistent homology collects information over the whole range of parameter values and creates a summary in which features that persist over a wide range of spatial scales are considered more likely to represent true features of the underlying space than artifacts of sampling, noise or a particular choice of parameters.

1.1. Related Works

Previous work related to ours can be divided into two categories. The first category focuses on regularization using statistical information of internal representations. Cogswell et al. [1] proposed a regularizer that encourages diverse or non-redundant representations by minimizing the cross-covariance of internal representations. Choi et al. [2] designed two class-wise regularizers to enforce the desired characteristic for each class: one reduces the covariance of the representations of samples from the same class, and the other uses variance instead of covariance to improve compactness.

The second category studies deep neural networks using tools from algebraic topology, in particular, persistent homology. Brüel-Gabrielsson et al. [14] presented a differentiable topology layer to extract topological features, which can be used to promote topological structure or incorporate a topological prior via regularization. Kim et al. [15] proposed a topological layer for generative deep models to feed critical topological information into subsequent layers and provided an adaptation for the distance-to-measure (DTM) function-based filtration. Hajij et al. [16] defined and studied the classification problem in machine learning in a topological setting and showed when the classification problem is or is not possible in the context of neural networks. Li et al. [17] proposed an active learning algorithm that characterizes decision boundaries using their homology. Chen et al. [18] proposed measuring the complexity of the classification boundary via persistent homology and used this topological complexity to control the decision boundary via regularization. Vandaele et al. [19] introduced a novel set of topological losses to topologically regularize data embeddings in unsupervised feature learning, which can efficiently incorporate a topological prior. Hofer et al. [20] considered the problem of representation learning, treated each mini-batch as a point cloud, and controlled the connectivity of the latent space via a novel topological loss. Moor et al. [3] extended this work and proposed a loss term that harmonizes the topological features of the input space with those of the latent space. This approach also acts on the level of mini-batches, computes persistence diagrams (PDs) for both the input space and the latent space, and encourages the two diagrams to be similar via a regularization term. Wu et al. [21] explored the rich spatial behavior of data in the latent space, proposed a topological filter to filter out noisy labels, and proved that the method is guaranteed to collect clean data with high probability. These works show empirically or theoretically that enforcing a certain topological structure on the representation space can be beneficial for learning tasks.

Hofer et al. [22] proposed an approach that regularizes the internal representation to control the topological properties of the internal space, and proved that this approach enforces mass concentration effects that are beneficial for generalization. However, their analysis relies on selecting a loss function that yields a large margin in the representation space, because the mass concentration effect is only beneficial if the reference set lies sufficiently far from the decision boundary; this large-margin assumption may be violated in practice.

1.2. Contribution

In this paper, we apply the TDA method, in particular, persistent homology from algebraic topology, to analyze and control the global topology of the internal representations of the training points, which reveals the intrinsic structure of the representation space.

When TDA is combined with statistics, data are deemed to be generated from some unknown distribution instead of lying on some underlying manifold, and TDA methods are used to infer topological features of the underlying distribution, especially of its support. Inspired by [22], we combine statistics with topological data analysis: our work focuses on the probability measure induced by the feature extractor and treats the representations of the training points in a mini-batch as point cloud data, from which we extract topological information by computing the persistence diagram of the persistent homology. Specifically, we consider the topological properties of samples from the product measure of two classes to enforce intra-class mass concentration and inter-class separation simultaneously. We extend the definitions and techniques in [22] to formalize the separation between two classes via persistent homology. We argue that if this separation property is encouraged, then both mass concentration and separation are enforced, and we propose a novel weight function and construct a novel loss to control the topological properties of the representation space.

In summary, our contributions are as follows:

(1) We characterize a separation property between two classes in representation space in terms of persistent homology (Section 3.2).

(2) We prove that a topological constraint on the samples of the push-forward probability measure in the representation space leads to mass separation (Section 3.2).

(3) We propose a novel weight function based on DTM. Using our weight function, the weighted Rips filtration can be built on top of training samples from class pairs in a mini-batch. The stability of the persistence diagram with respect to the proposed weight function is presented (Section 3.4).

(4) We propose three regularization terms, namely a birth loss, a margin loss and a length loss, which operate on a persistence diagram obtained via persistent homology computations on mini-batches, to encourage mass separation (Section 3.4).

The remainder of this paper is structured as follows: In Section 2, we present some topological preliminaries relevant to our work. Section 3 gives our main results, including the separation property, the weight function and the regularization method. Section 4 shows the experimental results on synthetic data and benchmark datasets. Finally, Section 5 gives the conclusion.

2. Topological Preliminaries

Generally, in topological data analysis, the point clouds are thought to be finite samples taken from an underlying geometric object. To extract topological and geometric information, a natural way is to “connect” data points that are close to each other to build a global continuous shape on top of the data. This section contains a brief introduction to the relevant topological notions. More details can be found in several excellent introductions and surveys [8,11,12,13,23].

2.1. Simplicial Complex, Persistent Homology and Persistence Diagrams

A simplicial complex K is a discrete structure built over a finite set of samples to provide an approximation of the underlying topology or geometry. The Čech complex and the Vietoris-Rips complex are widely used in TDA. Below, for any $x \in \mathbb{R}^d$ and $r > 0$, let $B(x, r)$ be the open ball of radius $r$ centered at $x$.

Definition 1

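(Čech complex [11]). Let $X \subset \mathbb{R}^d$ be finite and $r > 0$. The Čech complex $\check{C}_r(X)$ is the simplicial complex

$$ \check{C}_r(X) = \Big\{ \sigma \subseteq X : \bigcap_{x \in \sigma} B(x, r) \neq \emptyset \Big\}. \tag{1} $$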

Definition 2

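(Vietoris-Rips complex [11]). Let $X \subset \mathbb{R}^d$ be finite and $r > 0$. The Vietoris-Rips complex $R_r(X)$ is the simplicial complex

$$ R_r(X) = \big\{ \sigma \subseteq X : B(x, r) \cap B(y, r) \neq \emptyset \ \text{for all } x, y \in \sigma \big\}. \tag{2} $$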

For a simplicial complex K, the k-th homology group of K, denoted by $H_k(K)$, characterizes the k-dimensional topological features of K. The k-th Betti number $\beta_k$ of K is the dimension of the vector space $H_k(K)$ and counts the number of k-dimensional features of K. For example, $\beta_0$ counts the number of connected components, $\beta_1$ counts the number of one-dimensional holes (loops), and so on.

For Definitions 1 and 2, it is difficult to choose a proper r without prior domain knowledge. The main insight of persistent homology is to compute topological features of a space at different spatial resolutions. In general, the assumption is that features that persist for a wide range of parameters are “true” features. Features persisting for only a narrow range of parameters are presumed to be noise.

A filtration of a simplicial complex K is a collection of subcomplexes approximating the data points at different spatial resolutions, formally defined as follows:

Definition 3

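(Filtration [11]). Let K be a simplicial complex, and $T \subseteq \mathbb{R}$. A family of subcomplexes $(K_t)_{t \in T}$ of K is said to be a filtration of K if it satisfies

(1) $K_s \subseteq K_t$ for $s \le t$;

(2) $\bigcup_{t \in T} K_t = K$.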

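Given $\varepsilon \ge 0$, two filtrations $(V_t)_{t \in T}$ and $(W_t)_{t \in T}$ of K are $\varepsilon$-interleaved [24] if, for every $t \in T$, $V_t \subseteq W_{t+\varepsilon}$ and $W_t \subseteq V_{t+\varepsilon}$. The interleaving pseudo-distance between $(V_t)$ and $(W_t)$ is defined as the infimum of such $\varepsilon$:

$$ d_i\big((V_t), (W_t)\big) = \inf\{\varepsilon \ge 0 : (V_t) \text{ and } (W_t) \text{ are } \varepsilon\text{-interleaved}\}. \tag{3} $$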

Let X be a finite point set in $\mathbb{R}^d$. The family $(\check{C}_r(X))_{r > 0}$ forms the Čech filtration of X, and the family $(R_r(X))_{r > 0}$ forms the Rips filtration. Since the Čech complex is expensive to compute, the Rips filtration, which depends only on pairwise distances, is frequently used instead to investigate the topology of X.

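In the construction of Čech filtrations, the radii of the balls increase uniformly. We can also let the radii increase non-uniformly. Let $f: \mathbb{R}^d \to \mathbb{R}_{\ge 0}$ be a continuous function and $p \in [1, +\infty)$. For $x \in X$, we define a function $r_x: \mathbb{R}_{\ge 0} \to \mathbb{R} \cup \{-\infty\}$ by

$$ r_x(t) = \begin{cases} \left(t^p - f(x)^p\right)^{1/p} & \text{if } t \ge f(x), \\ -\infty & \text{otherwise}. \end{cases} \tag{4} $$

By modifying the definition of $B(x, r)$, we set $B_f(x, t) = B(x, r_x(t))$, with the convention that $B(x, -\infty) = \emptyset$, and define a simplicial complex by

$$ \check{C}_t[X, f] = \Big\{ \sigma \subseteq X : \bigcap_{x \in \sigma} B_f(x, t) \neq \emptyset \Big\}. \tag{5} $$

For each fixed t, $\check{C}_t[X, f]$ is a Čech complex built from balls with point-dependent radii. The family $(\check{C}_t[X, f])_{t \ge 0}$ forms a filtration, which is called the weighted Čech filtration. We can also construct the weighted Rips filtration in a similar way, by requiring only that the balls $B_f(x, t)$, $x \in \sigma$, intersect pairwise.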
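For p = 1, the setting used later in this paper, the entry values of the weighted Rips filtration have a simple closed form. The short derivation below is our own worked example, not a result stated in the text; it only uses the fact that, with p = 1, the ball around x at scale $t \ge f(x)$ has radius $t - f(x)$. A vertex x enters at $t = f(x)$. The balls around x and y meet once the sum of their radii reaches $\|x - y\|$:

$$ (t - f(x)) + (t - f(y)) \ge \|x - y\| \iff t \ge \frac{f(x) + f(y) + \|x - y\|}{2}, $$

and both balls must already exist, so the edge $\{x, y\}$ enters the weighted Rips filtration at

$$ t_{\{x,y\}} = \max\Big\{ f(x),\ f(y),\ \frac{f(x) + f(y) + \|x - y\|}{2} \Big\}. $$

With $f \equiv 0$ this reduces to $\|x - y\|/2$, the entry value of the edge in the ordinary Rips filtration indexed by ball radius.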

For a filtration $(K_t)_{t \in T}$ and each non-negative integer k, we keep track of when k-dimensional homological features appear and disappear in the filtration. If a homological feature appears at $b$ and disappears at $d$, then we say that it is born at $b$ and dies at $d$. By considering these pairs $(b, d)$ as points in the plane, we obtain the (k-dimensional) persistence diagram.
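For 0-dimensional homology, the diagram of any filtration in which each vertex and edge has an entry value can be computed by a union-find pass over the edges in increasing order, applying the elder rule: when two components merge, the one born later dies. The Python sketch below illustrates this standard procedure; the function name `zero_dim_persistence` and its input format (vertex birth values plus `(i, j, value)` edges) are our own assumptions rather than part of the method described here.

```python
import math
from typing import List, Sequence, Tuple


def zero_dim_persistence(
    births: Sequence[float],
    edges: Sequence[Tuple[int, int, float]],
) -> List[Tuple[float, float]]:
    """0-dimensional (birth, death) pairs of a filtration on a graph.

    births[v] is the value at which vertex v enters; each edge (i, j, value)
    enters at `value`, which must be >= births[i] and births[j].
    """
    parent = list(range(len(births)))
    comp_birth = list(births)  # birth of the oldest vertex, stored at each root

    def find(v: int) -> int:
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    pairs = []
    for i, j, value in sorted(edges, key=lambda e: e[2]):
        ri, rj = find(i), find(j)
        if ri == rj:
            continue  # the edge closes a loop: no 0-dimensional event
        if comp_birth[ri] > comp_birth[rj]:
            ri, rj = rj, ri
        # elder rule: the component rooted at rj (born later) dies at `value`
        if value > comp_birth[rj]:  # skip zero-length pairs
            pairs.append((comp_birth[rj], value))
        parent[rj] = ri
    roots = {find(v) for v in range(len(births))}
    pairs.extend((comp_birth[r], math.inf) for r in roots)
    return sorted(pairs)


# Rips filtration of three points on a line, indexed by edge length.
xs = [0.0, 1.0, 3.0]
line_edges = [(i, j, abs(xs[i] - xs[j])) for i in range(3) for j in range(i + 1, 3)]
print(zero_dim_persistence([0.0, 0.0, 0.0], line_edges))
# [(0.0, 1.0), (0.0, 2.0), (0.0, inf)]
```

For a plain Rips filtration all births are 0; for a weighted filtration the vertex births are the function values, so intervals can start at different heights.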

Definition 4

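(Bottleneck distance [15]). Given two persistence diagrams D and D′, their bottleneck distance $d_B(D, D')$ is defined by

$$ d_B(D, D') = \inf_{\gamma \in \Gamma} \sup_{p \in \bar{D}} \| p - \gamma(p) \|_\infty, \tag{6} $$

where $\|\cdot\|_\infty$ is the usual $L_\infty$-norm, $\bar{D} = D \cup \mathrm{Diag}$ and $\bar{D}' = D' \cup \mathrm{Diag}$ with Diag being the diagonal $\{(x, x) : x \in \mathbb{R}\}$ with infinite multiplicity, and the set $\Gamma$ consists of all the bijections $\gamma: \bar{D} \to \bar{D}'$.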
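For small diagrams, the bottleneck distance can be computed exactly by testing candidate thresholds in increasing order and checking whether a perfect matching exists that uses only pairs whose cost is at most the threshold. The sketch below uses the standard construction: each diagram is augmented with one diagonal slot per point of the other diagram, a point matched to the diagonal costs half its lifetime in the $L_\infty$-norm, and two diagonal slots match at cost 0. It is a brute-force illustration with hypothetical function names, intended only for small diagrams. Points with infinite death are simply dropped, so essential classes would have to be compared separately.

```python
import math
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]


def _linf(p: Pair, q: Pair) -> float:
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))


def _has_perfect_matching(cost: List[List[float]], eps: float) -> bool:
    """Kuhn's augmenting-path test: can every row get a column with cost <= eps?"""
    size = len(cost)
    match_of_col = [-1] * size

    def augment(row: int, seen: List[bool]) -> bool:
        for col in range(size):
            if cost[row][col] <= eps and not seen[col]:
                seen[col] = True
                if match_of_col[col] == -1 or augment(match_of_col[col], seen):
                    match_of_col[col] = row
                    return True
        return False

    return all(augment(row, [False] * size) for row in range(size))


def bottleneck_distance(dgm1: Sequence[Pair], dgm2: Sequence[Pair]) -> float:
    a = [p for p in dgm1 if math.isfinite(p[1])]
    b = [q for q in dgm2 if math.isfinite(q[1])]
    n, m = len(a), len(b)
    size = n + m
    # rows: points of a, then m diagonal slots; columns: points of b, then n diagonal slots
    cost = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            if i < n and j < m:
                cost[i][j] = _linf(a[i], b[j])
            elif i < n:
                cost[i][j] = (a[i][1] - a[i][0]) / 2  # a[i] to its diagonal projection
            elif j < m:
                cost[i][j] = (b[j][1] - b[j][0]) / 2  # b[j] to its diagonal projection
    # the optimal bottleneck value is one of the matrix entries
    for eps in sorted({c for row in cost for c in row}):
        if _has_perfect_matching(cost, eps):
            return eps
    return 0.0  # both diagrams are empty
```

For example, the diagrams {(0, 1)} and {(0, 1.2)} are at distance 0.2, whereas {(0, 1)} and the empty diagram are at distance 0.5, because the single point has to be matched to the diagonal.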

2.2. DTM Function

Despite strong stability properties, distance-based methods in TDA, such as the Čech or Vietoris-Rips filtrations, are sensitive to outliers and noise. To address this issue, [24] introduced an alternative distance function, i.e., the DTM function. Details of DTM-based filtrations are studied in [25]. We only list the properties of the DTM that will be used here.

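Let $\mu$ be a probability measure over $\mathbb{R}^d$, and $m \in (0, 1)$ a parameter. For every $u \in [0, 1)$, let $\delta_{\mu,u}$ be the function defined on $\mathbb{R}^d$ by $\delta_{\mu,u}(x) = \inf\{ r \ge 0 : \mu(\bar{B}(x, r)) > u \}$, where $\bar{B}(x, r)$ denotes the closed ball of radius $r$ centered at $x$.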

Definition 5

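(Distance-to-measure [24]). The distance-to-measure function (DTM) with parameter $m \in (0, 1)$ and power $q \ge 1$ is the function $d_{\mu,m,q}: \mathbb{R}^d \to \mathbb{R}$ defined by

$$ d_{\mu,m,q}(x) = \left( \frac{1}{m} \int_0^m \delta_{\mu,u}(x)^q \, du \right)^{1/q}, \tag{7} $$

and if not specified, $q = 2$ is used as a default and omitted, i.e., $d_{\mu,m} = d_{\mu,m,2}$.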

From Definition 5, it can be seen that, for every $x \in \mathbb{R}^d$, $d_{\mu,m}(x)$ is at least the distance from $x$ to the support of $\mu$.

Proposition 1

([24]). For every probability measure $\mu$ and $m \in (0, 1)$, $d_{\mu,m}$ is 1-Lipschitz.

Proposition 2

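([24]). Let $\mu$ and $\nu$ be two probability measures, and $m \in (0, 1)$. Then

$$ \| d_{\mu,m} - d_{\nu,m} \|_\infty \le m^{-1/2}\, W_2(\mu, \nu), \tag{8} $$

where $W_2(\mu, \nu)$ is the (2-)Wasserstein distance between $\mu$ and $\nu$.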

In practice, the measure $\mu$ is usually unknown, and we only have a finite set of samples $X = \{x_1, \dots, x_n\}$; a natural way to approximate the DTM from X is to plug the empirical measure $\mu_n$ (the uniform measure on X) into Definition 5 in place of $\mu$, which yields the “distance to the empirical measure (DTEM)”. For $m = k/n$, the DTEM satisfies

$$ d_{\mu_n, m}(x)^2 = \frac{1}{k} \sum_{j=1}^{k} \| x - p_j(x) \|^2, \tag{9} $$

where $\| x - p_j(x) \|$ denotes the distance between x and its j-th nearest neighbor $p_j(x)$ in X. This quantity is easy to compute in practice since it only requires the distances between x and the sample points.
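The DTEM formula above translates directly into code. The following plain-Python sketch, with the hypothetical function name `dtem`, averages the k smallest squared distances from x to the sample points (so m = k/n) and takes the square root.

```python
import math
from typing import Sequence


def dtem(x: Sequence[float], samples: Sequence[Sequence[float]], k: int) -> float:
    """Distance to the empirical measure of `samples` with m = k / n (power 2).

    If x is itself one of the samples, it counts as its own first neighbor
    (distance 0), exactly as when the empirical measure is plugged into the DTM.
    """
    if not 1 <= k <= len(samples):
        raise ValueError("k must satisfy 1 <= k <= number of samples")
    sq_dists = sorted(math.dist(x, s) ** 2 for s in samples)
    return math.sqrt(sum(sq_dists[:k]) / k)


cloud = [(0.0, 0.0), (3.0, 4.0)]
print(dtem((0.0, 0.0), cloud, k=1))  # 0.0
print(dtem((0.0, 0.0), cloud, k=2))  # sqrt((0 + 25) / 2), approximately 3.5355
```

Larger k averages over more neighbors, which is what makes the DTM less sensitive to isolated outliers than the plain distance to the sample.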

3. Topological Regularization

Let $\mathcal{X}$ be the input space, $\mathcal{Y} = \{1, \dots, C\}$ the label space for C classes, and $\mathcal{Z}$ the internal representation space before the classifier. We formulate the neural network as a compositional mapping $N = \gamma \circ \varphi$, where $\varphi: \mathcal{X} \to \mathcal{Z}$ represents a feature extractor and $\gamma: \mathcal{Z} \to \mathcal{Y}$ represents a classifier that maps the internal representation to the predicted label. Assume the representation space $\mathcal{Z}$ is equipped with a metric $d_{\mathcal{Z}}$. Let $P$ be the probability measure on $\mathcal{X}$ and $Q = \varphi_{\#} P$ be the push-forward probability measure induced by $\varphi$ on the Borel $\sigma$-algebra defined by $d_{\mathcal{Z}}$ on $\mathcal{Z}$.

We focus on the internal representation space; in particular, we study the push-forward probability measure $Q$ induced by the feature extractor on $\mathcal{Z}$, identify a property of $Q$ that is beneficial for generalization and propose a regularization method to enforce this property.

3.1. Push-Forward Probability Measure and Generalization

Let represent the deterministic mapping from the support of to the label space, and be a training sample, where are m i.i.d. draws from , and .

For a neural network and , we define the generalization error by , where

To study the property of , we consider the class-specific probability measure as in [22], define the restriction of (i.e., the push-forward of via ) to class k by

where is the representation of class k in .

If the probability mass of class k’s decision region, measured via , tends towards one, it may lead to better generalization. Reference [22] formulated this notion by establishing a direct link between and the generalization error.

Proposition 3

([22]). For any class , let be its internal representation and be its decision region in w.r.t. . If, for ,, then .

Proposition 3 links generalization to a condition depending on . Intuitively, increasing the probability of mapping a sample of class k into the correct decision region can improve generalization.

Based on this observation, [22] introduced the definition of a -connected set to characterize connectivity via 0-dimensional (Vietoris-Rips) persistent homology and proved that a corresponding property for probability measure would be beneficial for generalization.

Definition 6

(-connected [22]). Let . A set is -connected iff all 0-dimensional death-times of its Vietoris-Rips persistent homology are in the open interval .
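Definition 6 can be checked directly for a finite point set. Since the finite 0-dimensional death times of the Vietoris-Rips filtration indexed by pairwise distance are exactly the edge lengths of a Euclidean minimum spanning tree, a short sketch using Prim's algorithm suffices. The sketch takes the endpoints of the open interval as arguments `lo` and `hi`; the function names are hypothetical, and if the filtration is indexed by ball radius instead, all death times are halved.

```python
import math
from typing import List, Sequence


def rips_zero_dim_deaths(points: Sequence[Sequence[float]]) -> List[float]:
    """Finite 0-dim death times of the Vietoris-Rips filtration (distance-indexed).

    They coincide with the edge lengths of a Euclidean minimum spanning tree,
    computed here with Prim's algorithm in O(n^2) distance evaluations.
    """
    n = len(points)
    if n < 2:
        return []
    in_tree = [False] * n
    in_tree[0] = True
    # best[v]: length of the shortest edge from v to the current tree
    best = [math.dist(points[0], points[v]) for v in range(n)]
    deaths = []
    for _ in range(n - 1):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        deaths.append(best[u])
        for v in range(n):
            if not in_tree[v]:
                best[v] = min(best[v], math.dist(points[u], points[v]))
    return sorted(deaths)


def deaths_in_open_interval(points: Sequence[Sequence[float]], lo: float, hi: float) -> bool:
    """True iff every finite 0-dim death time lies in the open interval (lo, hi)."""
    return all(lo < d < hi for d in rips_zero_dim_deaths(points))
```

For the points 0, 1 and 3 on a line, the death times are 1 and 2, so they lie in (0, 2.5) but not in (0, 1.5).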

However, [22] assumed a large margin in the representation space, an assumption that may be violated in deep neural networks. In the following, we extend their work and identify a property of the probability measure that can enhance the separation between classes.

Note that we can also write Proposition 3 in an alternate form, because the probability mass of all classes’ decision region measured via sums to one, we have , which means that for each class k, the sum of the probability mass of other classes’ decision region, measured via , tends towards zero. Intuitively, decreasing the probability of mapping a sample of class k into other incorrect decision regions can improve generalization.

Therefore, in order to decrease , we take class pairs and into consideration and formulate a notion of separation in terms of persistent homology as follows.

3.2. Probability Mass Separation

In this section, we show that a certain topological constraint on the pair will lead to probability mass separation. More precisely, given a reference set , let , our topological constraint provides a non-trivial upper bound on in terms of .

In order to enforce the separation between two classes, we extend Definition 6 to characterize the separation between two sets:

Definition 7.

Let,. Considering two sets,and, we denote the death-times of’s 0-dimensional Vietoris-Rips persistent homology asand order the indexing of points by decreasing lifetimes, i.e.,for. Then, we stateandare-separated, if and only if the following two conditions are satisfied:

(1)andare both-connected;

(2)is in the open interval.

Then we use this notion to capture the concentration and separation of a (b, b) sample from a pair. For b-sized i.i.d. samples from , we denote the product measure of by , and for (b, b)-sized samples from (b i.i.d. draws from each), we denote the product measure by .

Define the indicator function as follows:

are -separated.

Now we consider the probability of the (b, b)-sized samples from being -separated.

Definition 8.

Let,,, and. We call apair-separated if:

For two classes C1 and C2, consider the restriction of to C1 and C2, i.e., and . Assume is -separated, consider reference set and let , together with the complement set , where . Let , and . According to [22], when is fixed, we can lower bound . In the following, we will provide an approach to upper bound s, which hints at mass separation between different classes.

For a (b, b) sample , consider the distribution of among and N. Let and be the numbers of ’s and ’s that fall within , respectively; i.e., and . Apparently, if the membership assignment satisfies: and , then cannot be -separated.

Thus, we define events that cannot be -separated as follows:

and then we have .

In the following proposition, we compute the probability of event E and derive some useful properties.

Proposition 4.

Let,,and. Denote the following:

Then the probability of E can be expressed in terms ofandas follows:, and for, it holds that

(1)is monotonically increasing on,

(2)is monotonically increasing on.

Proof.

For argument (1), we fix and write

To study the monotonicity properties of , it is sufficient to consider .

We define two auxiliary functions:

Then we have , and that

Hence,

Consequently, is monotonically increasing, and thus, so is .

For argument (2), the proof is similar and omitted. □

Now we can derive the main theorem:

Theorem 1.

Let,,and. Then it holds that

Proof.

The left side includes all the events that violate the separation assumption, and the right side is only a special case among them. Therefore, by combining Definition 8 and Proposition 4, we complete the proof. □

3.3. Ramifications of Theorem 1

According to Theorem 1 and Proposition 4, if covers a certain mass of , we can upper bound the mass it covers of , i.e., because is bounded by and is monotonically increasing in and , if is greater than some , then should be less than some . This is beneficial for generalization if is constructed from the representations of the correctly classified training instances to include some minimal mass of C1.

Assuming that the mass of the reference set is fixed, noting that our Definition 7 is stronger than the mass concentration condition in [22] and then letting , we have

Let be the smallest mass in the extension, and then .

By Theorem 1, we have

and thus is non-empty. Now let identify the largest mass in the extension for which the inequality holds. As increases, decreases; therefore, .

Let , then .

As is monotonically decreasing in , is monotonically increasing in ; furthermore, as is monotonically increasing in s, is monotonically decreasing in . These facts motivate our regularization goal of increasing . In other words, increasing would both boost mass concentration within a class and enforce mass separation between two classes.

Suppose M is constructed mainly by training samples from C1, i.e., we choose and to include many training samples from C1; then we can ask the following: how much mass of C1 should M contain at least to boost the separation?

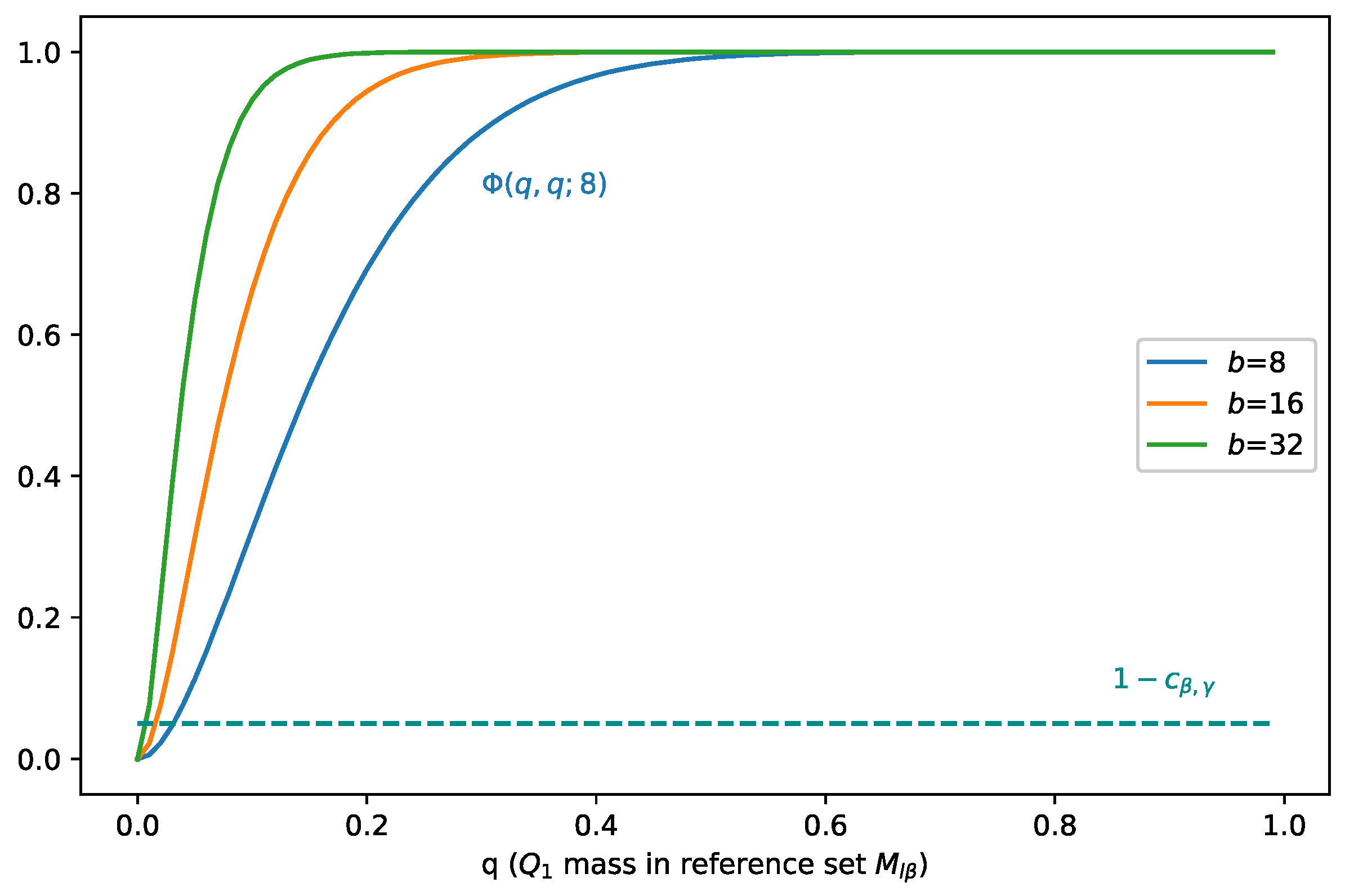

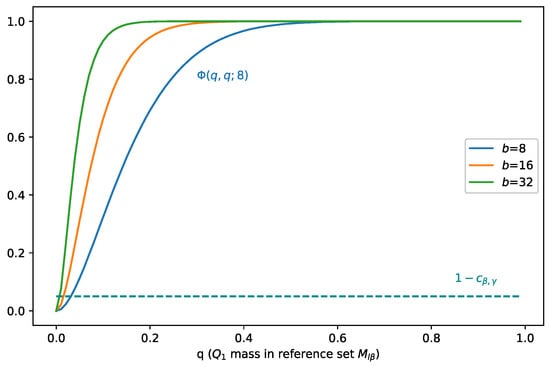

We plot in Figure 1, and we can see that at point (0.049, 0.049), i.e., . At this point, the mass separation effect starts to occur. To satisfy Inequality (21), when increases, should decrease, which means that as covers more mass of , it covers less mass of . In addition, as the batch size b increases, the least mass of that should cover decreases.

Figure 1.

Illustration of when holds, i.e., when the mass separation effect starts to occur. When increases, should decrease, which means that as covers more mass of , it covers less mass of . As the batch size b increases, the least mass of that should cover decreases.

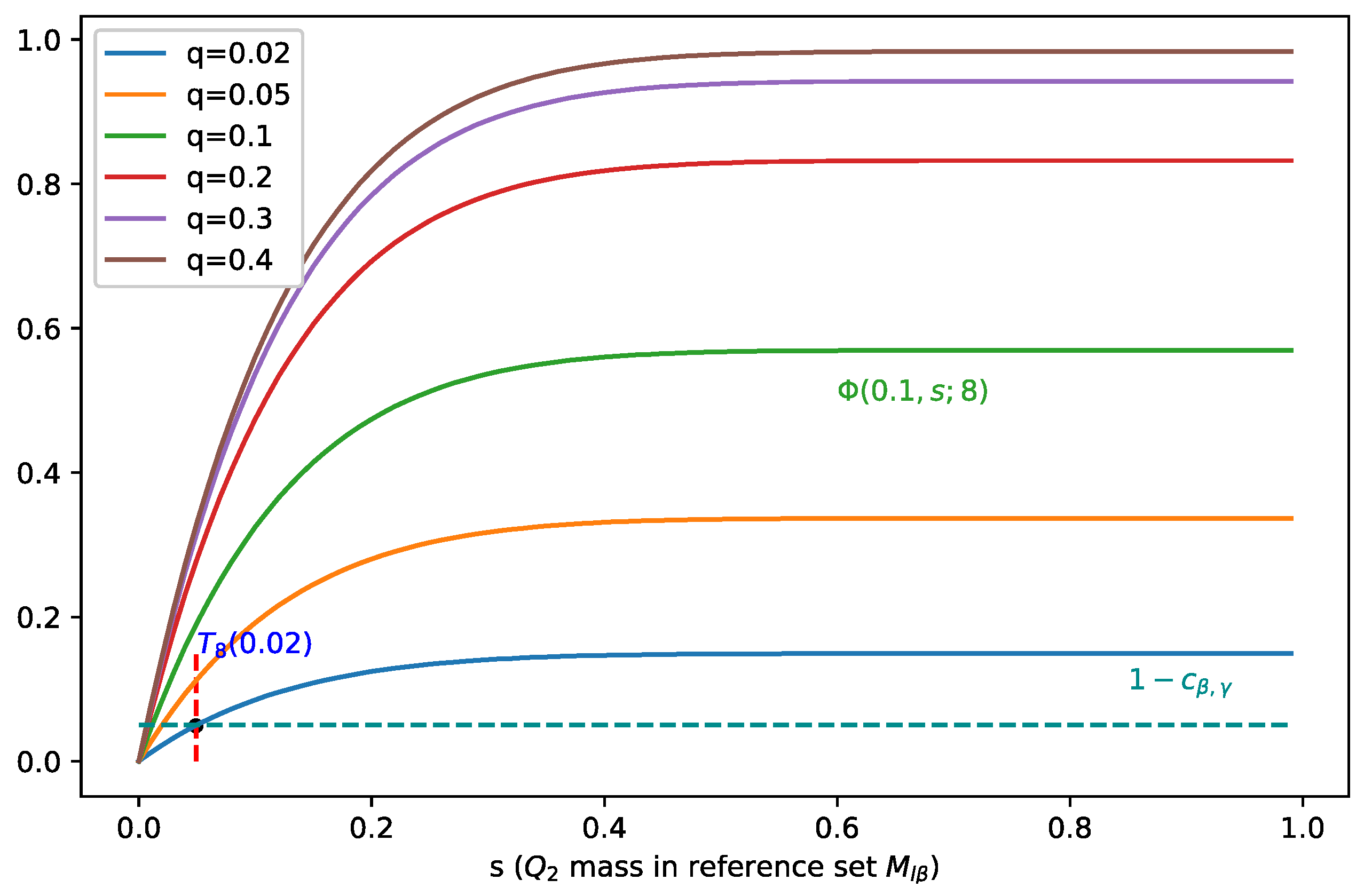

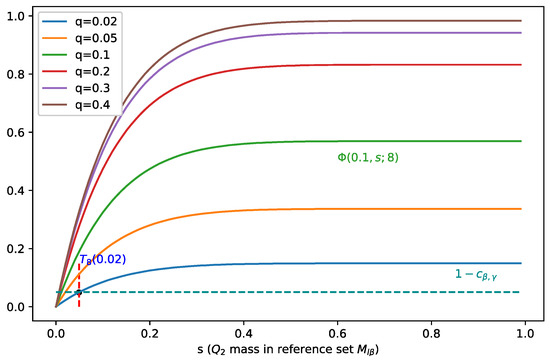

In Figure 2, we fix the batch size to 8 and visualize as a function of (for different values of ), and we can see that when increases, moves towards zero, which indicates a smaller , i.e., covers less mass of , and therefore, it leads to a better separation.

Figure 2.

Illustration of for b = 8 and different values of . Points at which holds are marked by dots. When increases, moves towards zero, which indicates a smaller , i.e., covers less mass of .

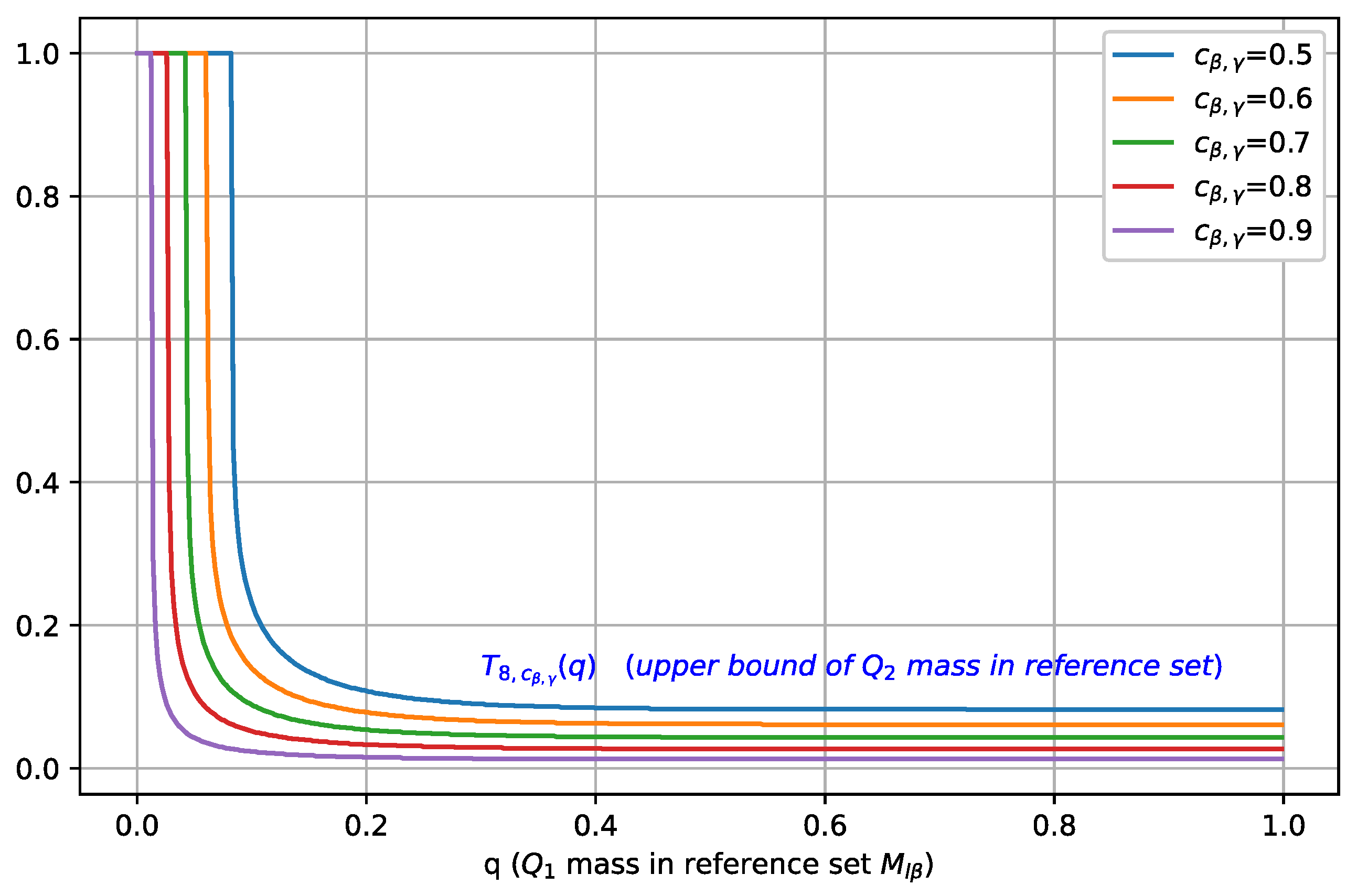

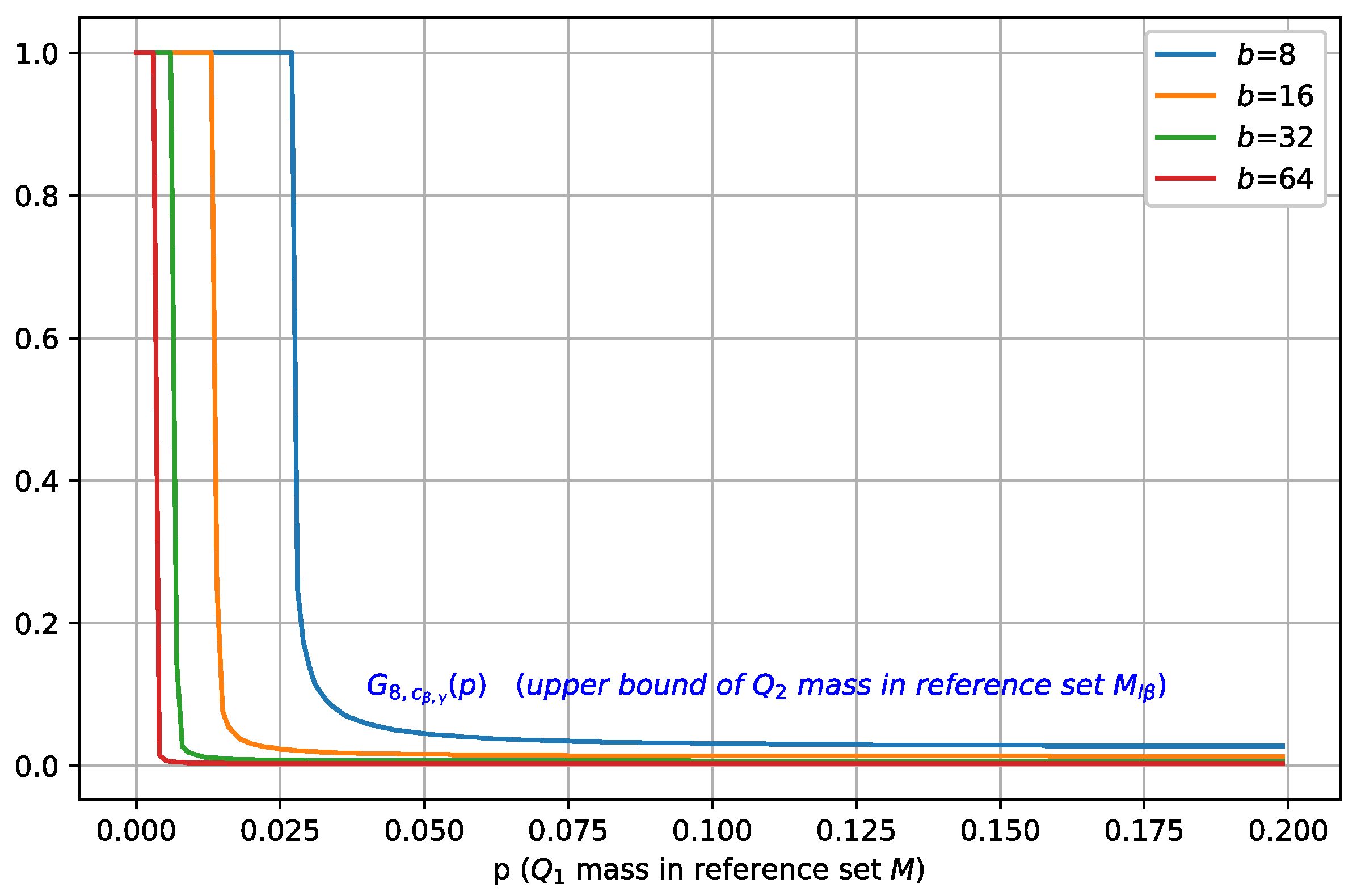

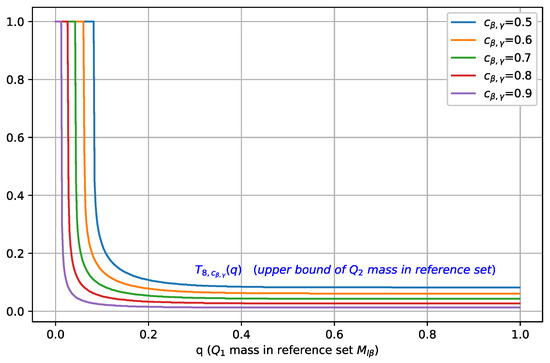

In Figure 3, we plot as a function of for different values of , where . As is increased, the maximal mass of contained in , characterized by , shifts towards a smaller value, which indicates that a better separation between classes C1 and C2 is achieved.

Figure 3.

Illustration of , i.e., the upper bound on , plotted as a function of the mass (for b = 8 and different values of ). For a fixed , as is increased, the maximal mass of contained in decreases, i.e., better separation is achieved.

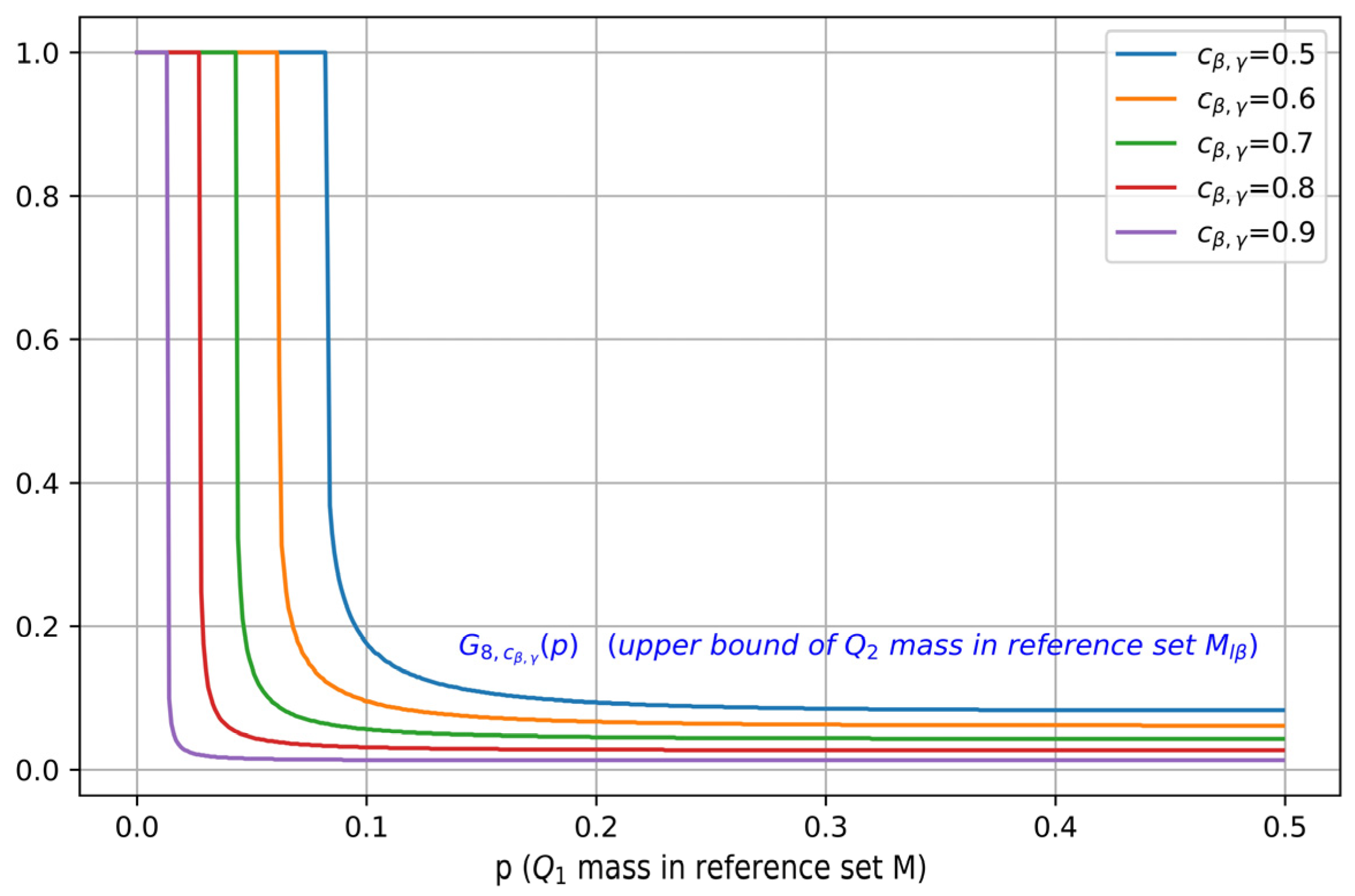

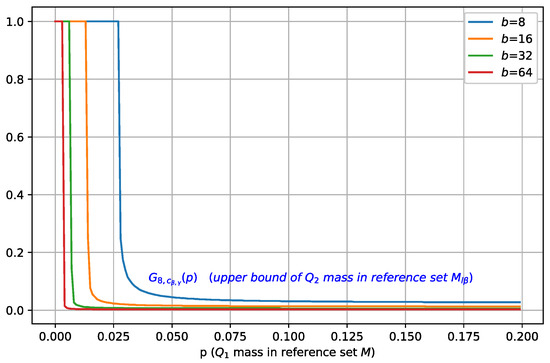

Figure 4 visualizes as a function of for different values of , where . It can be seen that as is increased, the maximal mass of contained in , characterized by , also shifts towards a smaller value, which indicates a better separation.

Figure 4.

Illustration of , i.e., the upper bound on , plotted as a function of the mass (for b = 8, and different values of ). For a fixed , as is increased, the maximal mass of contained in decreases.

Figure 5 plots as a function of for different values of batch size b, where . As b is increased, the maximal mass of contained in , characterized by , also shifts towards a smaller value, which indicates a better separation, and in order to achieve separation, M only needs to cover a small mass of .

Figure 5.

Illustration of , i.e., the upper bound on , plotted as a function of the mass (for , and different values of b). For a fixed , as the batch size is increased, the maximal mass of contained in decreases.

3.4. Weighted Rips Filtration and Regularization

In Section 3.2, we showed that a topological constraint on a pair leads to probability mass concentration and separation. To impose this constraint, we propose a function that is used to construct the filtration; we then compute the 0-dimensional persistence diagram and construct the loss terms that regularize the internal representation.

Our method acts on the level of mini-batches; as in [22], we construct each mini-batch B as a collection of n sub-batches, i.e., B = (B1, …, Bn). Each sub-batch consists of b samples from the same class, and thus the resulting mini-batch B contains n·b samples. Our regularizer consists of three terms and penalizes deviations from a -separated arrangement of for all sub-batch pairs (Bi, Bj).
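The mini-batch construction can be sketched as follows. This is a minimal sketch under stated assumptions: labels are integers, the n classes of a mini-batch are distinct (drawn without replacement), and each sub-batch is drawn without replacement from its class; the function name `sample_sub_batches` is hypothetical. The sub-batch pairs (Bi, Bj) to which the regularizer is applied are then all unordered pairs of sub-batch indices.

```python
import itertools
import random
from collections import defaultdict
from typing import Dict, List, Optional, Sequence


def sample_sub_batches(
    labels: Sequence[int], n: int, b: int, rng: Optional[random.Random] = None
) -> List[List[int]]:
    """Indices of a mini-batch B = (B1, ..., Bn) of n single-class sub-batches of size b."""
    rng = rng or random.Random()
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    eligible = sorted(c for c, idxs in by_class.items() if len(idxs) >= b)
    if len(eligible) < n:
        raise ValueError("fewer than n classes have at least b samples")
    classes = rng.sample(eligible, n)  # assumption: n distinct classes per mini-batch
    return [rng.sample(by_class[c], b) for c in classes]


labels = [0] * 10 + [1] * 10 + [2] * 10
batch = sample_sub_batches(labels, n=3, b=4, rng=random.Random(0))
pairs = list(itertools.combinations(range(len(batch)), 2))  # sub-batch pairs (i, j)
```

Keeping the sub-batches class-pure is what allows each pair (Bi, Bj) to be read as a (b, b) sample from two class-conditional measures.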

3.4.1. A Weight Function for Weighted Rips Filtration

To construct a proper filtration to deal with samples from two different classes, we define a function :

where T is the temperature that controls the magnitude and is the DTM function defined in Equation (7).

Considering the mass separation for two classes, we denote the data instances of class by , and then for a class pair , the training samples can be written as follows: . Let and be the restriction of (i.e., the push-forward of via ) to classes i and j, respectively. In order to construct filtration with Equation (24), firstly, we need to compute for . Note that and can be computed with Equation (9), the DTEM, where is approximated by and is approximated by . According to Equation (24), for a good classifier, points from class i should have smaller function values than points from class j. Then we plug into Equation (4) to compute the weighted Rips filtration (we set p = 1 for Equation (4) in this research), and obtain the 0-dimensional persistence diagram, i.e., the multi-set of intervals for homology in dimension 0, . After that, we order the indexing of points by decreasing lifetimes as done in Definition 7; we will use them later to construct the loss item in Section 3.4.3.
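The steps above can be sketched in Python as follows. This is a minimal sketch under explicit assumptions. The weight function of Equation (24) is not reproduced here, so it is passed in as a hypothetical callable `weight(d_i, d_j)` that receives the DTEM of a point with respect to the class-i samples and the class-j samples. The DTEM uses power 2 and m = k/b, the filtration is the weighted Rips filtration with p = 1, and each interval is attributed to the class of the vertex at which its component is born (elder rule), which is one plausible reading of the split described in Section 3.4.3. All names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Sequence[float]
Interval = Tuple[float, float, int]  # (birth, death, index of the birth vertex)


def dtem(x: Point, samples: Sequence[Point], k: int) -> float:
    """Root mean squared distance from x to its k nearest samples."""
    sq = sorted(math.dist(x, s) ** 2 for s in samples)
    return math.sqrt(sum(sq[:k]) / k)


def class_pair_diagram(
    xi: Sequence[Point],
    xj: Sequence[Point],
    weight: Callable[[float, float], float],
    k: int,
) -> Tuple[List[Interval], List[Interval]]:
    """0-dim weighted Rips intervals (p = 1) for one class pair, split by birth class.

    Both returned lists are sorted by decreasing lifetime.
    """
    pts = list(xi) + list(xj)
    f = [weight(dtem(p, xi, k), dtem(p, xj, k)) for p in pts]

    # p = 1: edge {a, b} enters once balls of radii t - f[a] and t - f[b] meet
    edges = []
    for a in range(len(pts)):
        for b in range(a + 1, len(pts)):
            t = max(f[a], f[b], (f[a] + f[b] + math.dist(pts[a], pts[b])) / 2)
            edges.append((t, a, b))

    parent = list(range(len(pts)))
    oldest = list(range(len(pts)))  # per root: vertex with the smallest birth value

    def find(v: int) -> int:
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    intervals: List[Interval] = []
    for t, a, b in sorted(edges):
        ra, rb = find(a), find(b)
        if ra == rb:
            continue
        if f[oldest[ra]] > f[oldest[rb]]:
            ra, rb = rb, ra
        # elder rule: the component rooted at rb (born later) dies at t
        if t > f[oldest[rb]]:
            intervals.append((f[oldest[rb]], t, oldest[rb]))
        parent[rb] = ra
    survivor = oldest[find(0)]
    intervals.append((f[survivor], math.inf, survivor))

    intervals.sort(key=lambda iv: iv[1] - iv[0], reverse=True)
    n_i = len(xi)
    from_i = [iv for iv in intervals if iv[2] < n_i]
    from_j = [iv for iv in intervals if iv[2] >= n_i]
    return from_i, from_j
```

With a constant weight the construction reduces to the ordinary Rips filtration indexed by ball radius, which gives a convenient sanity check: for two well-separated clusters, the longest class-j interval dies exactly when the class-j cluster merges into the class-i cluster.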

3.4.2. Stability

In this section, we establish the stability results for our weight function in Equation (24). In Theorem 2, the stability of the weight function is given, which will later be used in Theorem 3 to ensure the stability of the filtration with respect to the weight function . Proposition 5 is used to ensure the stability of the filtration with respect to . According to persistent homology theory, the stability results for the filtration translate as stability results for the persistence diagrams. We present our main stability results in Theorem 3.

Theorem 2.

Let,, and be four probability measures, and . Then

Proof.

Let and . Then .

Because is 1-Lipschitz, i.e., for all and , , we have

The last inequality is obtained according to Proposition 2. □

In Proposition 5, we consider the stability of the filtration with respect to . For brevity, the subscripts of are omitted.

Proposition 5.

Suppose thatandare compact and that the Hausdorff distance. Then the filtrationsandare k-interleaved with.

Proof.

It suffices to show that for every , .

For , there exists such that , i.e., . From the hypothesis , there exists such that . Then we need to prove that , i.e., .

According to triangle inequality, . Then it suffices to show that .

Using Equation (4), we have

According to Proposition 1, the DTM function is 1-Lipschitz, and then

Therefore,

In the following theorem, we combine the above results to establish the stability of the persistence diagram with respect to and .

Theorem 3.

Consider four measures,,andonwith compact supports,,and, respectively. Let,,and,denotes the bottleneck distance between persistence diagrams. Then

Proof.

Under some regularity conditions, , where denotes the interleaving pseudo-distance between two filtrations as defined in Equation (3).

We use the triangle inequality for the interleaving distance:

For the first part (1) on the right side of Equation (30), it can be seen that from Proposition 5, we have

For the second part (2) on the right side of Equation (30), according to Proposition 3.2 in [25], we have

Using Theorem 2, we have

By combining part (1) and part (2), we complete the proof. □

3.4.3. Regularization via Persistent Homology

We split the persistence intervals obtained in Section 3.4.1 into two subsets:

where consists of the intervals in which the birth time belongs to class i and consists of the intervals in which the birth time belongs to class j.

Now we define three loss items:

- Birth loss

The birth loss measures intra-class distances; it addresses the first requirement of Definition 7 and thereby enforces intra-class mass concentration:

where and are hyperparameters that control the birth times for each class.

- Margin loss

The margin loss measures the “distance” between two classes. Some connected components may be born at points from class i but die due to points from class j; such cases should be penalized. In addition, for class j, the longest interval in would eventually merge into class i’s intervals.

Let ; we define

which means that we penalize margins smaller than , where is also a hyperparameter that controls inter-class separation.

- Length loss

The weighted Rips filtration controls pairwise distances less directly than the standard Rips filtration; therefore, the length loss is used together with the birth loss to penalize large intra-class distances. In addition, we want the two classes to correspond to two connected components that persist over a wide range of parameter values and merge only when the parameter becomes sufficiently large. We also want to prevent from becoming overly dense. To formalize this intuition, we define

where is a hyperparameter.

Finally, our regularization term can be written as follows:

where the weights , and can be set so that the loss is comparable in range to the cross-entropy loss, or selected via cross-validation.

4. Experiments

In this section, we evaluate the proposed regularizer experimentally. We first consider point cloud optimization to build intuition about the behavior of the loss in Equation (35), and then we evaluate our approach on the image classification task.

4.1. Point Cloud Optimization

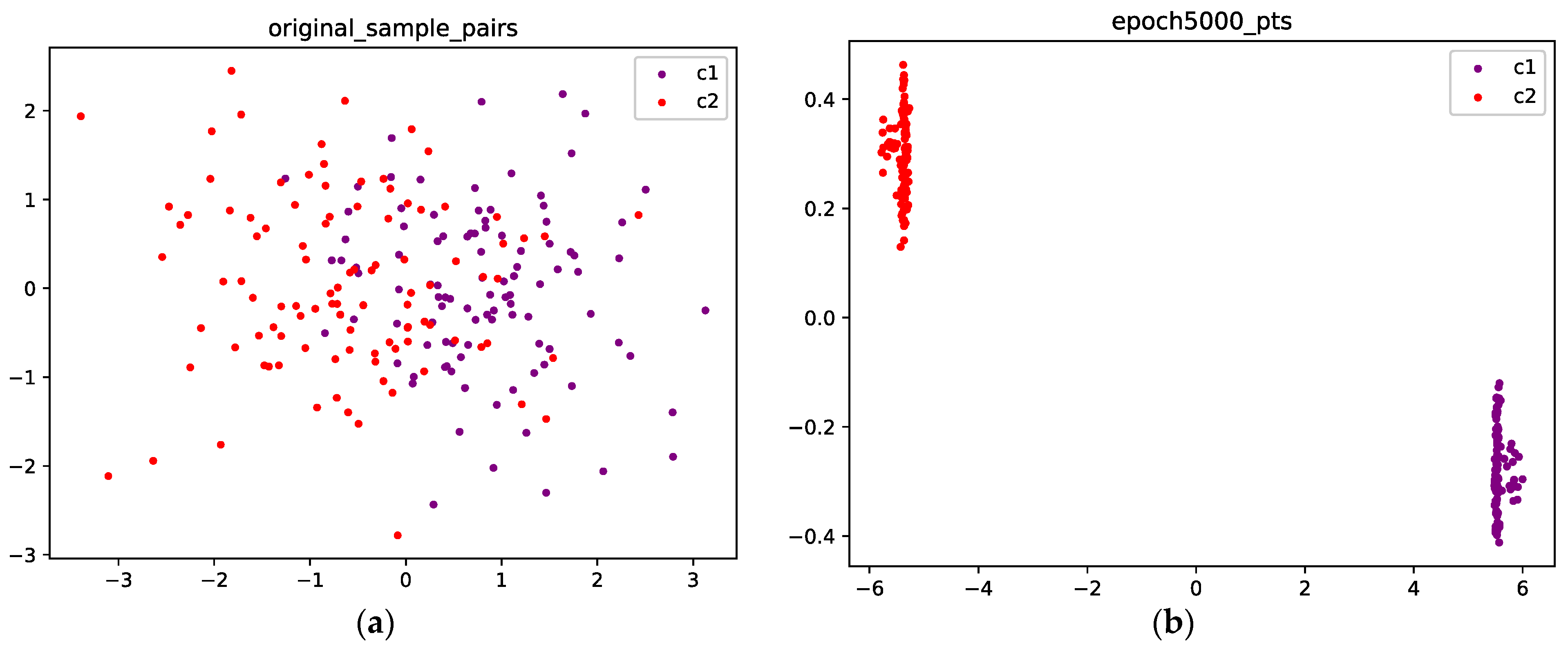

To validate our approach, as an illustrative example, we perform point cloud optimization using only the proposed loss in Equation (35), without any other loss terms. The point clouds are sampled from a Gaussian mixture distribution, and we assume that the points come from two different classes: C1 and C2. In the following figures, purple points represent samples from C1, and red points represent samples from C2.

4.1.1. Gaussian Mixture with Two Components

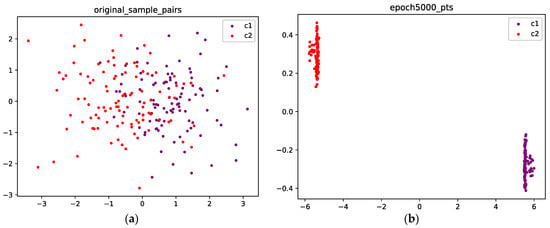

To test the separation effect, we set the centers of the two components to (−0.6, 0) and (0.6, 0), and the covariance matrix to the identity matrix. For the parameters in the weight function of Equation (24), we set and . For the hyperparameters in the three loss terms, we set , , and . To encourage clustering and speed up optimization, we adopt a dynamic update scheme for m, i.e., m is gradually increased during training. The weightings of the three loss terms are set to 9, 1 and 0.3, respectively; these values are chosen based on gradient information from the first epoch.
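The dynamic update of m is described only as gradually increasing m during training. One hypothetical way to realize it is a linear ramp that is clipped at a final value. The function name, start value, end value and ramp length are illustrative assumptions, not the settings used in the experiments.

```python
def ramped_margin(epoch, m_start, m_end, ramp_epochs):
    """Linearly increase the margin m from m_start to m_end, then hold it."""
    if ramp_epochs <= 0:
        return m_end
    progress = min(max(epoch / ramp_epochs, 0.0), 1.0)
    return m_start + progress * (m_end - m_start)
```

A linear ramp keeps early updates gentle while the classes are still mixed; any other non-decreasing schedule would fit the description equally well.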

Figure 6a shows the initial positions of the points; the purple points are sampled from C1, and the red points are sampled from C2. Figure 6b shows the final positions after 5000 epochs; the mass concentration and separation effects are clearly visible, and the points from the two classes are well separated.

Figure 6.

(a) The original points are sampled from a Gaussian mixture with two components; the purple points are sampled from class 1, and the red points are sampled from class 2. (b) Optimized configuration after 5000 epochs; the points from the two classes are well separated.

Since the weight function may lead to imbalanced point configurations for the two classes, we address this issue by alternately swapping the order in which the two sets of points are fed to the persistent homology computation during training. For vision datasets, because our regularizer is used together with the cross-entropy loss and stochastic mini-batch sampling, the imbalance is compensated automatically.
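The alternating order can be implemented by swapping which class is placed first on every other epoch before the points are passed to the persistent homology computation. The helper below is a hypothetical sketch; it only reorders the data and returns the matching class labels (1 for C1, 2 for C2).

```python
import numpy as np


def alternate_class_order(epoch, points_c1, points_c2):
    """Concatenate the two classes, putting C2 first on odd epochs."""
    even = epoch % 2 == 0
    first, second = (points_c1, points_c2) if even else (points_c2, points_c1)
    first_label, second_label = (1, 2) if even else (2, 1)
    points = np.concatenate([first, second], axis=0)
    labels = np.array([first_label] * len(first) + [second_label] * len(second))
    return points, labels
```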

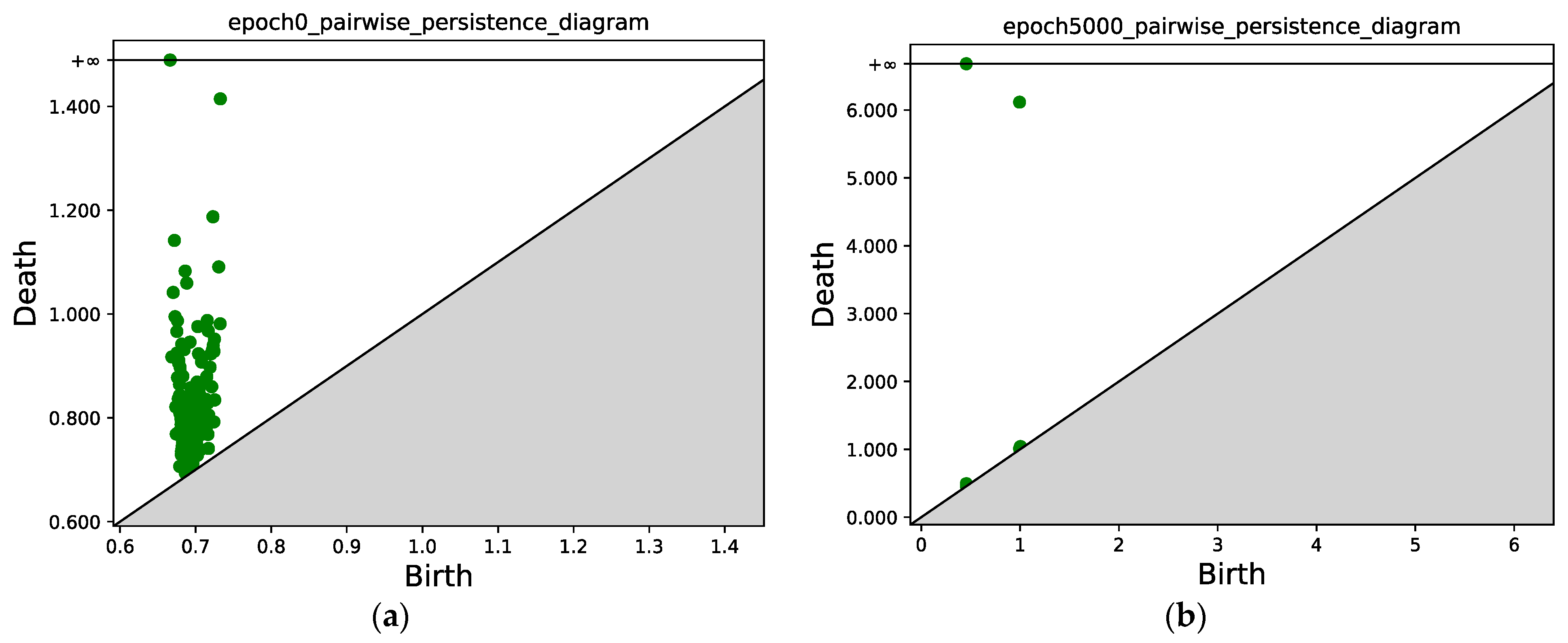

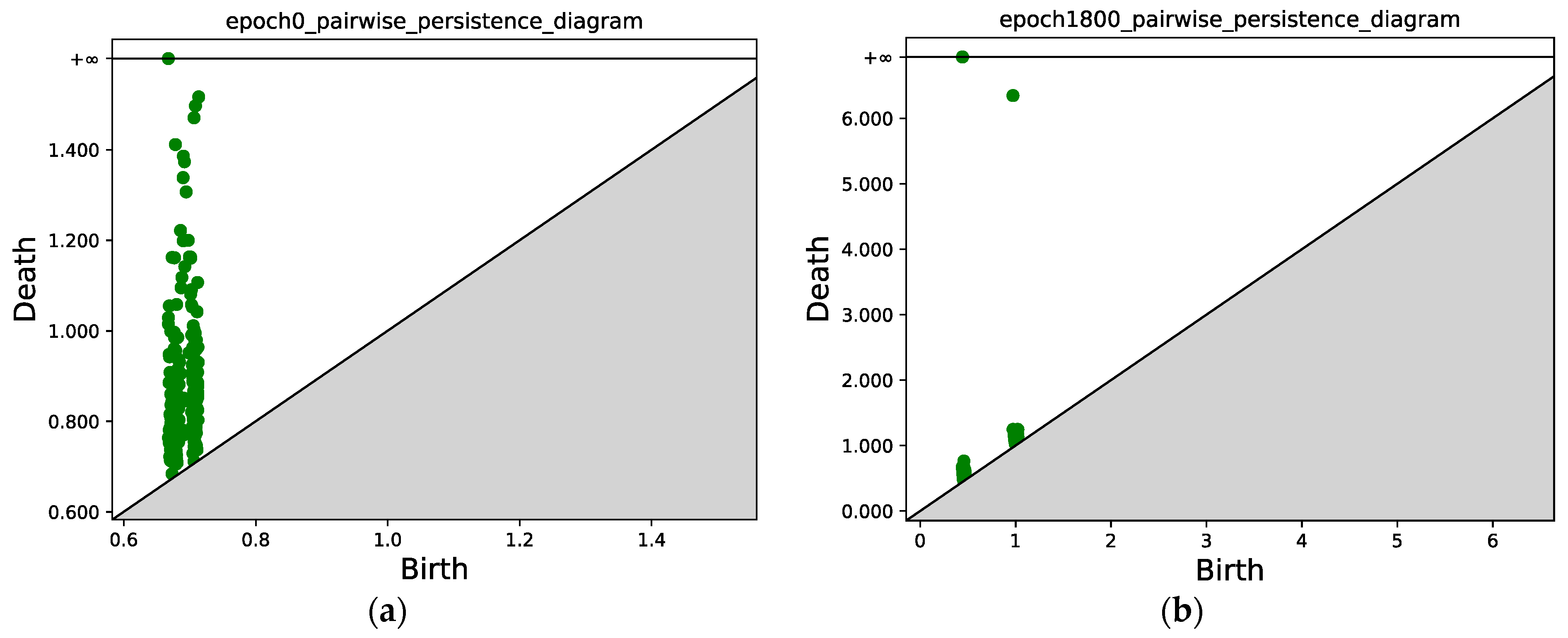

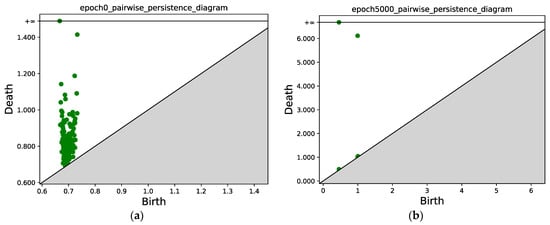

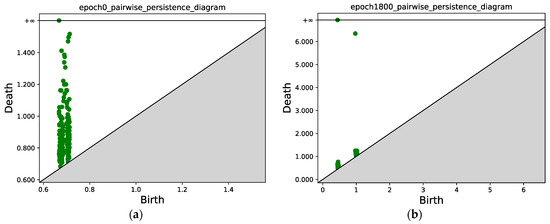

Figure 7 compares the persistence diagrams before and after training. Each green point represents a (birth, death) pair (b_i, d_i). The green point with y = inf represents the single merged component that remains once the filtration value is sufficiently large, and the green point with y > 6 indicates the filtration value at which the two components merge, i.e., one component disappears by merging into the other component, which was born earlier. In Figure 7, after 5000 epochs, the two subsets defined in Section 3.4.3, which correspond to the two classes, are well separated: two connected components can be identified in the persistence diagram. The points from the same class are also concentrated.
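Reading off how many components are clearly present in a persistence diagram amounts to counting intervals whose lifetime exceeds a threshold, with the infinite interval always counted. A minimal sketch follows; the toy diagram is illustrative and not taken from Figure 7, and the threshold is a user-chosen assumption.

```python
import math


def count_persistent_components(diagram, min_lifetime):
    """Count 0-dim intervals (birth, death) living longer than min_lifetime."""
    return sum(1 for birth, death in diagram if death - birth > min_lifetime)


toy_diagram = [(0.5, 0.6), (0.5, 0.7), (1.0, 6.5), (0.5, math.inf)]
print(count_persistent_components(toy_diagram, min_lifetime=2.0))  # 2
```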

Figure 7.

(a) Persistence diagram obtained via persistent homology at epoch 0; (b) Persistence diagram after 5000 epochs. Two connected components can be identified; the points with x ≈ 0.5 correspond to the first connected component (class 1), and the points with x ≈ 1.0 correspond to the second connected component (class 2).

4.1.2. Gaussian Mixture with Four Components

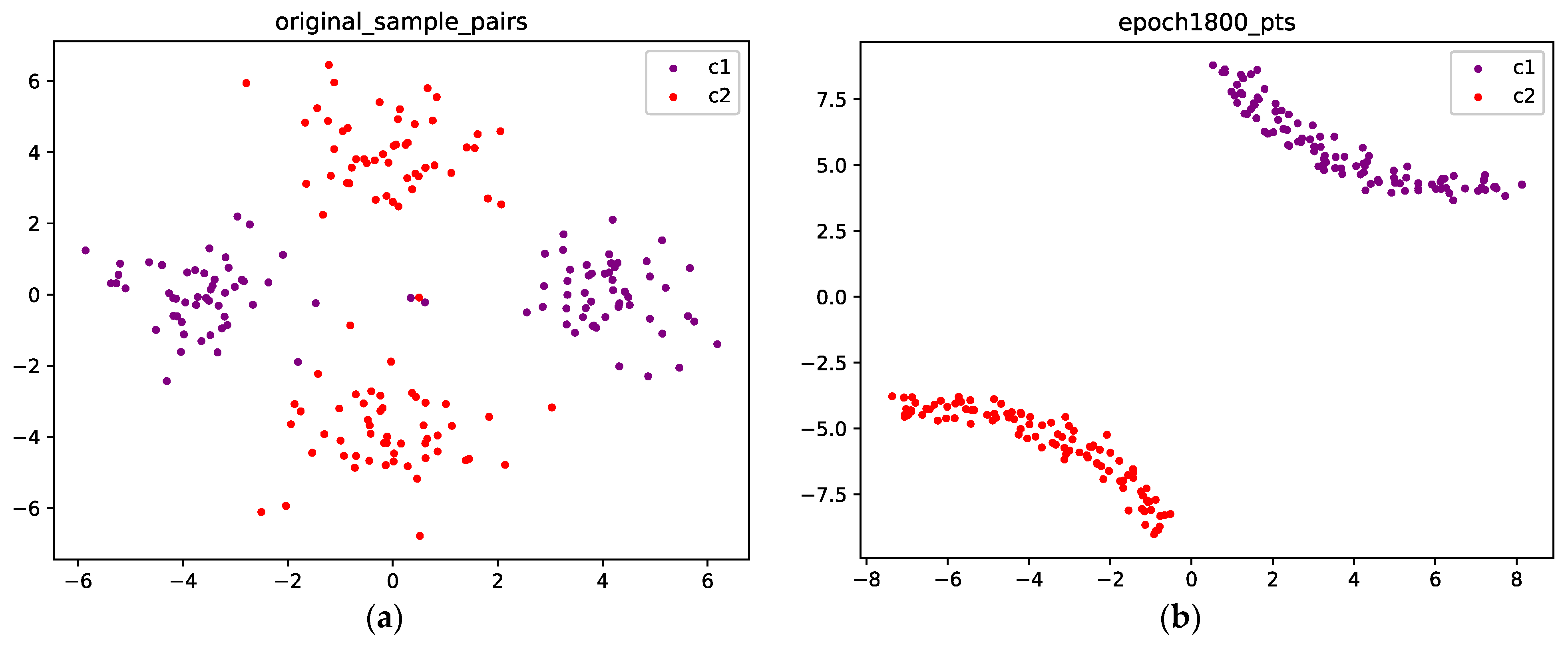

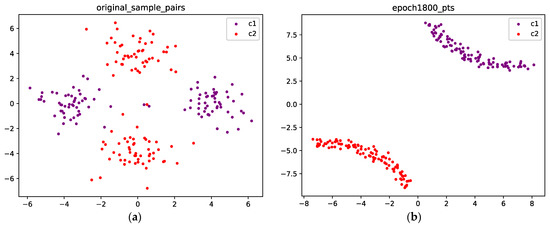

As a more challenging example, we consider a Gaussian mixture with four components. We assume that they represent samples from two different classes, i.e., each class corresponds to two components. For each class, we want the corresponding two components to merge. To achieve this, samples from one class have to travel across samples from the other class, which may temporarily increase the loss. Therefore, to reach the desired configuration, the optimizer has to climb over a ridge in the loss landscape before it arrives at a valley.

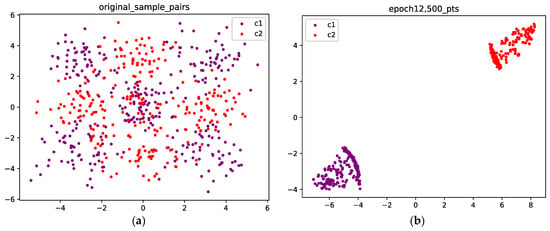

Figure 8 visualizes the points before and after training. Figure 8a shows the initial position of the points. The purple points are sampled from C1, and the red points are sampled from C2. We can see that after 1800 epochs, for each class, the two components merge into a single connected component, as shown in Figure 8b.

Figure 8.

(a) The original points are sampled from a Gaussian mixture with four components, and each class corresponds to two components; the purple points are sampled from class 1, and the red points are sampled from class 2; (b) Optimized configuration after 1800 epochs; the points from the two classes are well separated.

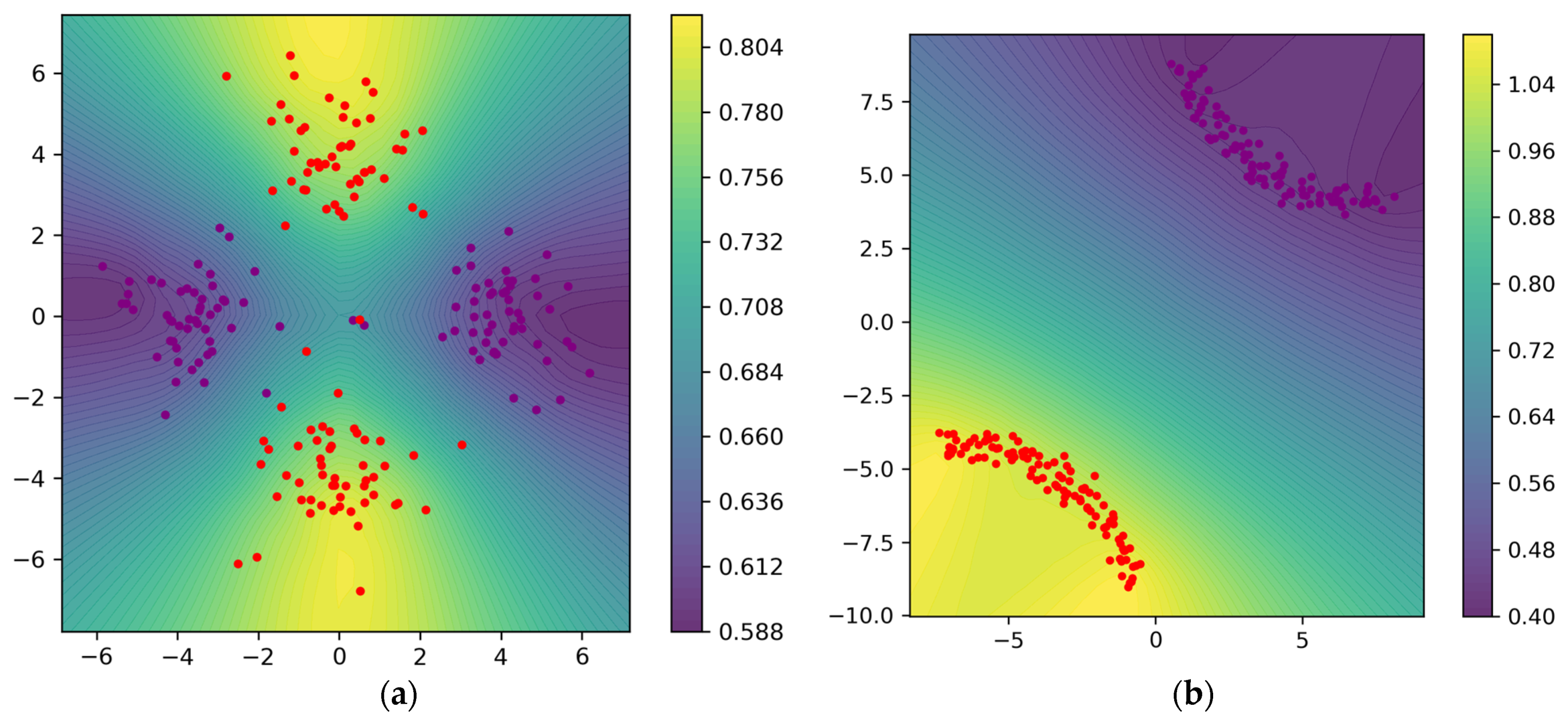

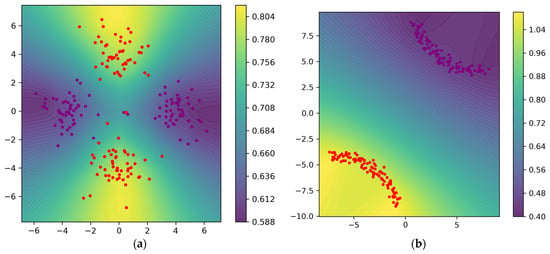

Figure 9 visualizes the values of our weight function in Equation (24). These values are used to construct the weighted Rips filtration via Equation (4), from which topological information is extracted and then used by the regularizer to guide the optimization. Figure 9a shows the function values and contour lines at epoch 0; larger values are assigned to points from C2. Figure 9b shows the function values and contour lines after 1800 epochs.
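Equation (24) is not reproduced in this text, so the sketch below substitutes the empirical distance-to-measure (DTM) of [24] as a stand-in weight function and evaluates it on a regular mesh, which is one way to produce maps like those in Figure 9. Note that the paper's weight function is class-dependent (Figure 9a assigns larger values to C2), whereas this stand-in is not. The function names, mesh bounds and neighbour count k are illustrative assumptions.

```python
import numpy as np


def empirical_dtm(query, points, k):
    """Empirical DTM: root mean squared distance to the k nearest points."""
    query = np.asarray(query, dtype=float)
    points = np.asarray(points, dtype=float)
    sq = ((query[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    nearest = np.sort(sq, axis=1)[:, :k]
    return np.sqrt(nearest.mean(axis=1))


def dtm_on_mesh(points, k, lo=-2.0, hi=2.0, steps=50):
    """Evaluate the stand-in weight function on a steps x steps grid."""
    xs = np.linspace(lo, hi, steps)
    gx, gy = np.meshgrid(xs, xs)
    grid = np.stack([gx.ravel(), gy.ravel()], axis=1)
    values = empirical_dtm(grid, points, k)
    return gx, gy, values.reshape(gx.shape)
```

The returned arrays can be passed to Matplotlib's `contourf(gx, gy, values)` and `contour(gx, gy, values)` to draw the filled map and the contour lines.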

Figure 9.

(a) Weight function values and contour lines evaluated on the mesh at epoch 0; the points from class 2 correspond to larger values. (b) Weight function values and contour lines evaluated on the mesh after 1800 epochs.

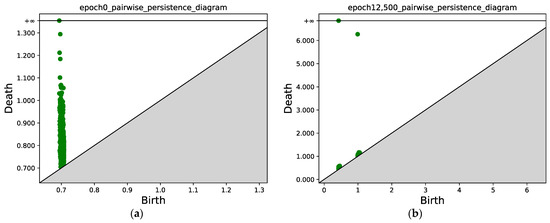

Similar to Figure 7, Figure 10 compares the persistence diagrams before and after training. Figure 10b shows that after 1800 epochs, two connected components can be identified, and the mass concentration and separation effects are clearly visible.

Figure 10.

(a) Persistence diagram obtained via persistent homology at epoch 0. (b) Persistence diagram after 1800 epochs. Two connected components can be identified; the points with x ≈ 0.5 correspond to the first connected component (class 1), and the points with x ≈ 1.0 correspond to the second connected component (class 2).

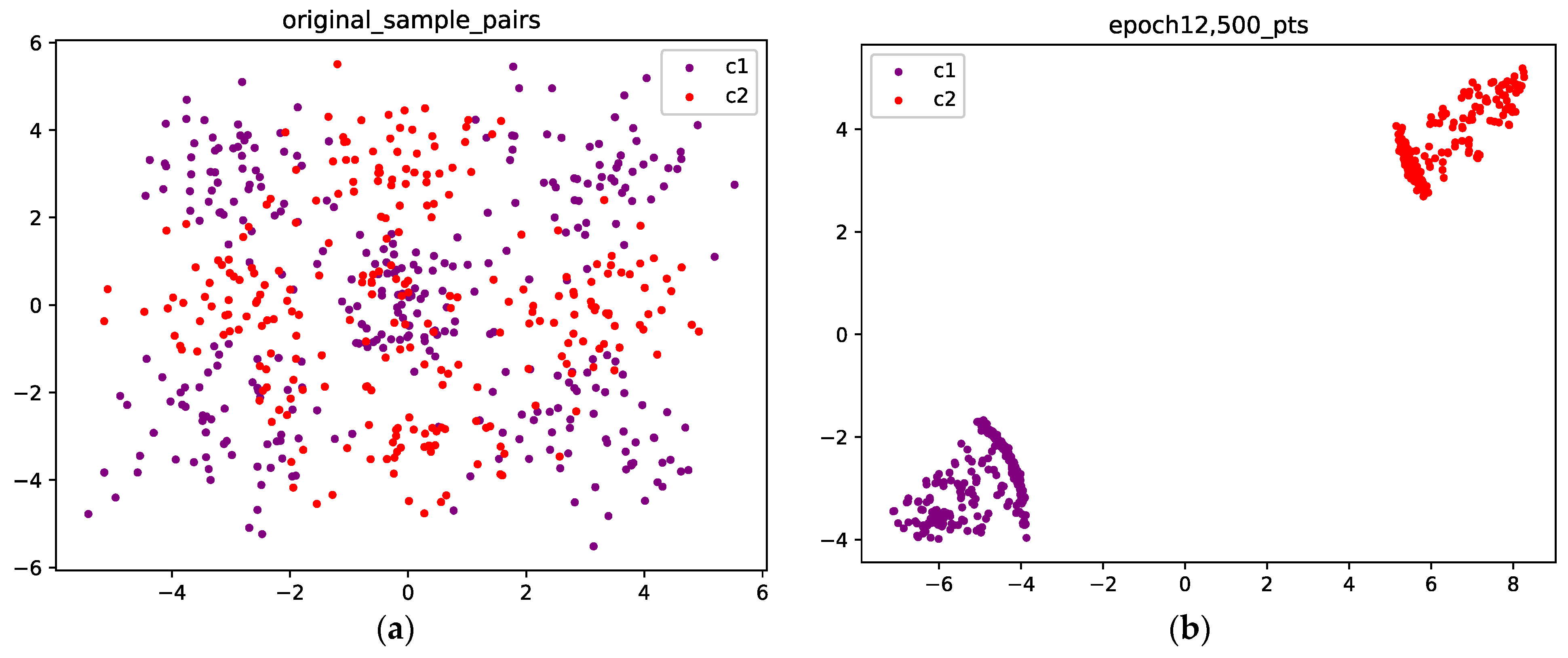

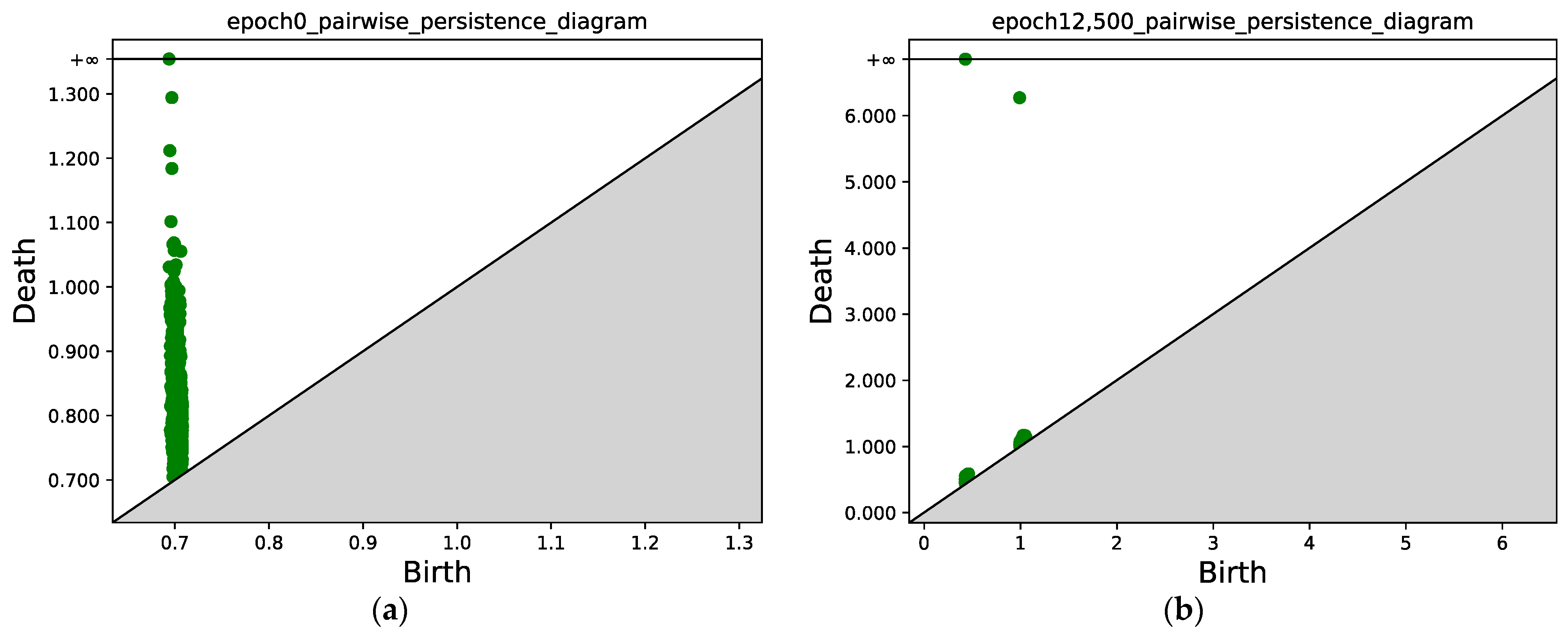

4.1.3. Gaussian Mixture with Nine Components

Figures 11 and 12 present the results for a Gaussian mixture with nine components. Figure 11a shows the initial positions of the points sampled from the two classes, and Figure 11b shows the result after 12,500 epochs. Figure 12 compares the persistence diagrams before and after training; our method effectively separates the samples from the two classes.

Figure 11.

(a) The original points are sampled from a Gaussian mixture with nine components; the purple points are sampled from class 1, and the red points are sampled from class 2. (b) Optimized configuration after 12,500 epochs; the points from the two classes are well separated.

Figure 12.

(a) Persistence diagram obtained via persistent homology at epoch 0. (b) Persistence diagram after 12,500 epochs. Two connected components can be identified; the points with x ≈ 0.5 correspond to the first connected component (class 1), and the points with x ≈ 1.0 correspond to the second connected component (class 2).

4.2. Datasets

In this part, we use the same models and settings as [22] and evaluate our method on three vision benchmark datasets: MNIST [26], SVHN [27] and CIFAR10 [28]. For MNIST and SVHN, 250 instances are used for training; for CIFAR10, 500 and 1000 instances are used.

The CNN-13 architecture [29] is used for CIFAR10 and SVHN, and a simpler CNN architecture is used for MNIST. We use a stochastic gradient descent (SGD) optimizer with a momentum of 0.9 and a cosine annealing learning rate scheduler [30].

When combined with the cross-entropy loss, the weighting of our regularization term is set so that the loss in Equation (35) is comparable to the cross-entropy loss. In our experiments, each batch contains n = 8 sub-batches with a sub-batch size of b = 16, giving a total batch size of 8 × 16 = 128.
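The sketch below builds one batch of n = 8 sub-batches with b = 16 samples each. It assumes, as we understand the sampling scheme of [22], that every sub-batch contains samples of a single class; the function name and the use of NumPy's random generator are illustrative choices.

```python
import numpy as np


def sample_class_subbatches(labels, n_sub=8, sub_size=16, rng=None):
    """Return n_sub * sub_size indices grouped into single-class sub-batches."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    classes = np.unique(labels)
    # Draw distinct classes when there are enough of them.
    chosen = rng.choice(classes, size=n_sub, replace=len(classes) < n_sub)
    batch = []
    for c in chosen:
        pool = np.flatnonzero(labels == c)
        batch.append(rng.choice(pool, size=sub_size, replace=len(pool) < sub_size))
    return np.concatenate(batch)
```

With 250 training instances spread over 10 classes, each class has 25 samples, so a sub-batch of 16 can be drawn without replacement.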

During training, in each epoch, we dynamically select the 10 most significant channels for each class on which to perform the topological computation; the channel selection criterion is similar to that in [31]. To compensate for the imbalance between the two classes induced by the weight function, we weight the two terms in the birth loss (Equation (32)) by the ratio of their derivatives. To meet the stability requirements of the topological computation, we use 0.001 as the minimal differentiable distance between points. The weighting of our regularization term is set to 0.001. For the parameters in the weight function (24), we set and . For the hyperparameters in the three loss terms, we set , , , and ; weight decay on is fixed to 1 × 10⁻³, and weight decay on is fixed to 0.001, except for CIFAR10-1k, for which we set it to 5 × 10⁻⁴. On MNIST, the initial learning rate is set to 0.1; on SVHN and CIFAR10, it is set to 0.5.
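The channel selection and the minimal distance can be sketched as follows. The score used here (activation times gradient, averaged over spatial positions and over the samples of a class) is our assumption of a gradient-based criterion in the spirit of [31], not the exact criterion of the paper; `top_channels_per_class` and `floored_distances` are hypothetical names. The distance floor is applied to the squared distance before the square root, which keeps the gradient of the square root finite when two points coincide.

```python
import numpy as np


def top_channels_per_class(acts, grads, labels, k=10):
    """Pick the k channels with the largest mean activation * gradient per class.

    acts, grads: arrays of shape (N, C, H, W); labels: length-N class labels.
    """
    labels = np.asarray(labels)
    scores = (acts * grads).mean(axis=(2, 3))  # (N, C) per-sample channel scores
    selected = {}
    for c in np.unique(labels):
        class_score = scores[labels == c].mean(axis=0)
        selected[int(c)] = np.argsort(class_score)[::-1][:k]
    return selected


def floored_distances(x, min_dist=1e-3):
    """Pairwise Euclidean distances, never smaller than min_dist."""
    x = np.asarray(x, dtype=float)
    sq = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    return np.sqrt(np.maximum(sq, min_dist ** 2))
```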

Table 1 compares our method with Vanilla (including batch normalization, dropout and weight decay) and the regularizers proposed in related work, in particular, the regularizers based on statistics of representations [1,2] and the topological regularizer proposed in [22]. We also provide results for the Jacobian regularizer [9]. We report the average test error (%) and the standard deviation over 10 cross-validation runs. The number attached to each dataset name indicates the number of training instances used. Our method achieves the lowest average error on MNIST-250, CIFAR10-500 and CIFAR10-1k. On SVHN, its mean error is slightly higher than the result reported in [22], but its variance is lower. In particular, our method outperforms all the regularization methods based on statistical constraints by a significant margin, which demonstrates the advantage of the proposed topology-aware regularizer and supports our claim that mass separation is beneficial.

Table 1.

Comparison to previous regularizers. “Vanilla” includes batch normalization, dropout and weight decay. The average test error and the standard deviation are reported.

5. Conclusions

Traditionally, statistical methods are employed to impose constraints on the internal representation space of deep neural networks, while topological methods remain largely underexploited. In this paper, we took a fundamentally different perspective and controlled the internal representation with tools from TDA. By utilizing persistent homology, we constrained the push-forward probability measure and enhanced mass separation in the internal representation space. Specifically, we formulated, for the first time, a property of this measure that is beneficial for generalization, and we proved that a topological constraint in the representation space leads to mass separation. Moreover, we proposed a novel weight function for the weighted Rips filtration, proved its stability, and introduced a regularizer that operates on the persistence diagram obtained via persistent homology to control the distribution of the internal representations.

We evaluated our approach on the point cloud optimization task and the image classification task. For point cloud optimization, experiments showed that our method separates points from different classes effectively. For image classification, our method achieved the lowest average test error in three of the four benchmark settings and outperformed the regularization methods based on statistical constraints by a significant margin.

In summary, both the theoretical analysis and the experimental results showed that our method can provide an effective learning signal that uses topological information to guide internal representation learning. Our work demonstrated that persistent homology may serve as a novel and powerful tool for promoting topological structure in the internal representation space. Directions for future research include exploring the potential of 1-dimensional persistent homology and developing other topology-aware methods for deep neural networks.

Author Contributions

M.C. and D.W. worked on conceptualization, methodology, software and writing—original draft preparation; S.F. and Y.Z. worked on validation and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 62172086 and Grant No. 62272092).

Data Availability Statement

The data used in this study are available from the references.

Acknowledgments

The authors would like to express their sincere gratitude to the editor and reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cogswell, M.; Ahmed, F.; Girshick, R.; Zitnick, L.; Batra, D. Reducing Overfitting in Deep Networks by Decorrelating Representations. In Proceedings of the International Conference on Learning Representations, San Juan, PR, USA, 2–4 May 2016.

- Choi, D.; Rhee, W. Utilizing Class Information for Deep Network Representation Shaping. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January 2019.

- Moor, M.; Horn, M.; Rieck, B.; Borgwardt, K. Topological Autoencoders. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020.

- Raghu, M.; Gilmer, J.; Yosinski, J.; Sohl-Dickstein, J. SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Littwin, E.; Wolf, L. Regularizing by the variance of the activations’ sample-variances. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 2–8 December 2018.

- Pang, T.Y.; Xu, K.; Dong, Y.P.; Du, C.; Chen, N.; Zhu, J. Rethinking Softmax Cross-Entropy Loss for Adversarial Robustness. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26 April 2020.

- Zhu, W.; Qiu, Q.; Huang, J.; Calderbank, R.; Sapiro, G.; Daubechies, I. LDMNet: Low Dimensional Manifold Regularized Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Carlsson, G. Topology and Data. Bull. Am. Math. Soc. 2009, 46, 255–308.

- Hoffman, J.; Roberts, D.; Yaida, S. Robust Learning with Jacobian Regularization. arXiv 2019, arXiv:1908.02729.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Chazal, F.; Michel, B. An Introduction to Topological Data Analysis: Fundamental and Practical Aspects for Data Scientists. Front. Artif. Intell. 2021, 4, 667963.

- Edelsbrunner, H.; Harer, J. Computational Topology: An Introduction; American Mathematical Society: Providence, RI, USA, 2010.

- Hensel, F.; Moor, M.; Rieck, B. A Survey of Topological Machine Learning Methods. Front. Artif. Intell. 2021, 4, 681108.

- Brüel-Gabrielsson, R.; Nelson, B.J.; Dwaraknath, A.; Skraba, P.; Guibas, L.J.; Carlsson, G. A topology layer for machine learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Online, 26–28 August 2020.

- Kim, K.; Kim, J.; Zaheer, M.; Kim, J.S.; Chazal, F.; Wasserman, L. PLLay: Efficient Topological Layer Based on Persistence Landscapes. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020.

- Hajij, M.; Istvan, K. Topological Deep Learning: Classification Neural Networks. arXiv 2021, arXiv:2102.08354.

- Li, W.; Dasarathy, G.; Ramamurthy, K.N.; Berisha, V. Finding the Homology of Decision Boundaries with Active Learning. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020.

- Chen, C.; Ni, X.; Bai, Q.; Wang, Y. A Topological Regularizer for Classifiers via Persistent Homology. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Naha, Japan, 16–18 April 2019.

- Vandaele, R.; Kang, B.; Lijffijt, J.; De Bie, T.; Saeys, Y. Topologically Regularized Data Embeddings. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022.

- Hofer, C.; Kwitt, R.; Dixit, M.; Niethammer, M. Connectivity-Optimized Representation Learning via Persistent Homology. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Wu, P.; Zheng, S.; Goswami, M.; Metaxas, D.; Chen, C. A Topological Filter for Learning with Label Noise. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020.

- Hofer, C.D.; Graf, F.; Niethammer, M.; Kwitt, R. Topologically Densified Distributions. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020.

- Sizemore, A.E.; Phillips-Cremins, J.; Ghrist, R.; Bassett, D.S. The Importance of the Whole: Topological Data Analysis for the Network Neuroscientist. Netw. Neurosci. 2019, 3, 656–673.

- Chazal, F.; Cohen-Steiner, D.; Merigot, Q. Geometric Inference for Probability Measures. Found. Comput. Math. 2011, 11, 733–751.

- Anai, H.; Chazal, F.; Glisse, M.; Ike, Y.; Inakoshi, H.; Tinarrage, R.; Umeda, Y. DTM-Based Filtrations. In Proceedings of the 35th International Symposium on Computational Geometry, Portland, OR, USA, 18–21 June 2019.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. In Proceedings of the Conference on Neural Information Processing Systems, Granada, Spain, 12–17 December 2011.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

- Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Huang, Z.; Wang, H.; Xing, E.P.; Huang, D. Self-Challenging Improves Cross-Domain Generalization. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).