Abstract

Computing an element of the Clarke subdifferential of a function represented by a program is an important problem in modern non-smooth optimization. Existing algorithms either are computationally inefficient in the sense that the computational cost depends on the input dimension or can only cover simple programs such as polynomial functions with branches. In this work, we show that a generalization of the latter algorithm can efficiently compute an element of the Clarke subdifferential for programs consisting of analytic functions and linear branches, which can represent various non-smooth functions such as max, absolute values, and piecewise analytic functions with linear boundaries, as well as any program consisting of these functions such as neural networks with non-smooth activation functions. Our algorithm first finds a sequence of branches used for computing the function value at a random perturbation of the input; then, it returns an element of the Clarke subdifferential by running the backward pass of the reverse-mode automatic differentiation following those branches. The computational cost of our algorithm is at most that of the function evaluation multiplied by some constant independent of the input dimension n, if a program consists of piecewise analytic functions defined by linear branches, whose arities and maximum depths of branches are independent of n.

MSC:

68Q25; 68W40

1. Introduction

Automatic differentiation refers to various techniques to compute the derivatives of a function represented by a program, based on the well-known chain rule of calculus. It has been widely used across various domains, and diverse practical automatic differentiation systems have been developed [1,2,3]. In particular, reverse-mode automatic differentiation [4] has been a driving force of the rapid advances in numerical optimization [5,6,7].

There are two important properties of reverse-mode automatic differentiation: correctness and efficiency. For programs consisting of smooth functions, it is well known that reverse-mode automatic differentiation always computes the correct derivatives [8,9]. Furthermore, for programs returning a scalar value, it computes their derivatives efficiently in the sense that its computational cost is at most proportional to that of the function evaluation. The additional multiplicative factor is bounded by five for rational functions [10,11] and, more generally, by some constant that depends on the underlying implementation of smooth functions; if the arities of the functions are independent of the input dimension n, then this constant is also independent of n [12]. Such correctness and efficiency of reverse-mode automatic differentiation, referred to as the Cheap Gradient Principle, have been of central importance for modern nonlinear optimization algorithms [13].

In practical problems, a program often involves branches (e.g., max and an absolute value), and the corresponding target function can be non-smooth. In other words, the derivative of the program may not exist at some inputs. In this work, we investigate a Cheap Subgradient Principle: an efficient algorithm that correctly computes an element of the Clarke subdifferential, a generalized notion of the derivative, for scalar programs. One naïve approach is to directly apply reverse-mode automatic differentiation to the Clarke subdifferential. Such a method is computationally cheap as in the smooth case; however, due to the absence of a sharp chain rule for the Clarke subdifferential, it is incorrect in general even if the target function is differentiable [8,14,15].

There have been extensive research efforts to correctly compute an element of the Clarke subdifferential. A notable line of work is based on the lexicographic subdifferential [16], which is a subset of the Clarke subdifferential and for which a sharp chain rule holds under some structural assumptions. Based on this, a series of works [17,18,19,20] has shown that an element of the lexicographic subdifferential can be computed by evaluating n directional derivatives, where n denotes the input dimension. Consequently, in the worst case, this approach incurs a multiplicative factor n in its computational cost compared to that of function evaluation.

To avoid such an input-dimension-dependent factor, a two-step randomized algorithm for programs with branches has been proposed [14]. In the first step, the algorithm chooses a random direction and finds a sequence of branch selections based on the directional derivative with respect to that direction under some qualification condition [14,21]. Then, the second step computes the derivative corresponding to the branches returned in the first step, which is shown to be an element of the Clarke subdifferential. Here, the second step can also be efficiently implemented via reverse-mode automatic differentiation. As a result, this two-step algorithm correctly computes an element of the Clarke subdifferential under the qualification condition, with the computational cost independent of the input dimension. However, this result is only for piecewise polynomial programs, defined by branches and finite compositions of monomials and affine functions.

In this work, we propose an efficient automatic subdifferentiation algorithm by generalizing the algorithm in [14] described above. Our algorithm correctly computes an element of the Clarke subdifferential for programs consisting of any analytic functions (including polynomials) and linear branches. As in the prior efficient automatic (sub)differentiation works, the computational cost of our algorithm is that of the function evaluation multiplied by some constant independent of the input dimension n, if a program consists of piecewise analytic functions (defined by linear branches) whose arities and maximum depths of branches are independent of n (e.g., max and the absolute value).

Related Works

Non-smooth optimization: Although smooth functions are easy to formulate and optimize, they have limited applicability as non-smoothness appears in various science and engineering problems. For example, real-world problems in thermodynamics often involve discrete switching between thermodynamic phases, which can be modeled by non-smooth functions. Dynamic simulation and optimization under these models therefore require handling this non-smoothness [22,23]. In machine learning applications, hinge loss, ReLU, and maxpool operations are often used, which makes the optimization objective non-smooth [6,24,25]. For optimizing convex but non-smooth functions, subgradient methods are widely used for approximating a minimizer [26,27]. However, for non-convex functions, the subgradient does not exist in general, and researchers have investigated generalized notions of derivatives (e.g., the Clarke subdifferential).

Optimization algorithms using generalized derivatives: Recently, the convergence properties of optimization algorithms based on generalized derivatives for non-convex and non-smooth functions have received much attention. Ref. [28] proved that, for locally Lipschitz functions, the stochastic gradient method, where the gradient is chosen from the Clarke subdifferential, converges to a stationary point. However, as we introduced in the previous section, computing an element of the Clarke subdifferential is computationally expensive or can be efficient only for a specific class of programs. Ref. [29] proposed a new notion of gradient called the conservative gradient, which can be efficiently computed; nevertheless, its convergence property is not well understood, especially under practical setups.

2. Problem Setup

2.1. Notations

For , we denote , where . For any set , we use , where denotes the zero-dimensional vector. For any vector and , we denote for the i-th coordinate of x and , where ; similarly, for any and index set with , we use . For any and , we use ; when , we write to denote the standard inner product between u and v. For any , sign if and sign otherwise. For any real-valued vector x, len denotes the length of x, i.e., len if . For any set , we use cl, int, and conv to denote the closure, interior, and convex hull of , respectively. We lastly define the Clarke subdifferential. Given a function and the set of all points at which f is differentiable, the Clarke subdifferential of f at is a set defined as
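For reference, in its standard form for a locally Lipschitz function, this definition reads as follows. The symbols $f:\mathbb{R}^n\to\mathbb{R}$, $\Omega_f$ (the set of points at which $f$ is differentiable), and $\partial^{c} f$ are notation assumed here and may differ from the symbols used elsewhere in this paper.

```latex
\partial^{c} f(x) \;=\; \operatorname{conv}\Bigl\{ \lim_{t\to\infty} \nabla f(x_t) \;:\; x_t \to x,\ x_t \in \Omega_f,\ \text{and the limit exists} \Bigr\}
```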

2.2. Programs with Branches

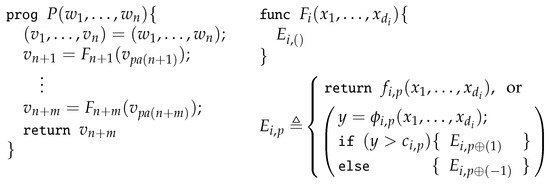

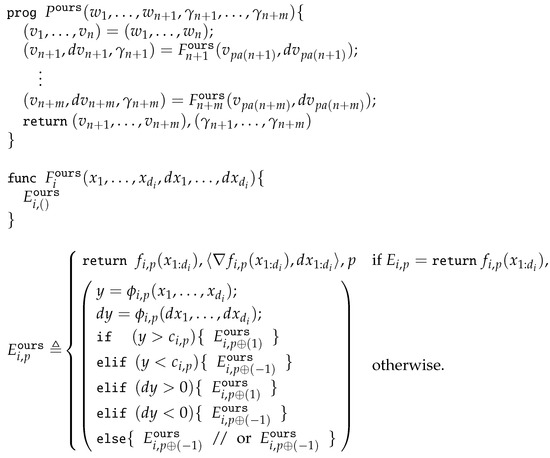

We considered a program P defined in Figure 1 (left). P applies a series of primitive functions to compute intermediate variables and, then, returns the last result . Each primitive function is continuous and defined in an inductive way as in Figure 1 (right): either applies a function or branches via (possibly nested) if–else statements. Namely, is a continuous, piecewise function. If branches with an input , then it first evaluates for some and checks whether or not for some threshold value . If , it executes a code that either applies a function or executes another code or depending on whether or not. The case is handled in a similar way. Here, we assumed that each has finitely many branches. In short, each primitive function first finds a proper piece labeled by and, then, returns . We illustrate a flow chart for a primitive function in Figure 2.

Figure 1.

Definitions of a program P (left) and primitive functions (right).

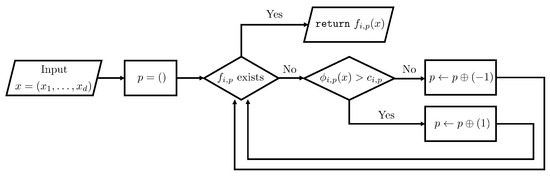

Figure 2.

A flow chart illustrating a primitive function .

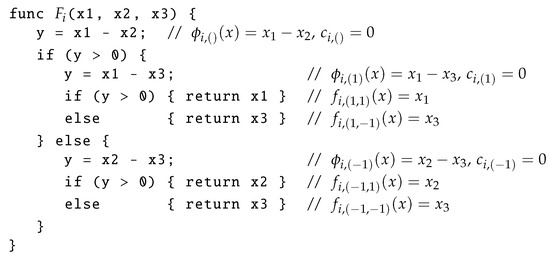

We present an example code for a primitive function that returns in Figure 3. In this example, branches at most twice: its first branch is determined by , which is stored in y in the second line in Figure 3. If (the third line with ), then it executes , which corresponds to lines 4–6. Otherwise, it moves to , which corresponds to the lines 8–10. Suppose is executed (i.e., in line 3). Then, it computes and stores it in y as in line 4. If , then is executed, which returns the value of (i.e., ). Otherwise, is executed, which returns the value of (i.e., ).

Figure 3.

Example code for the max function .
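As a rough illustration of such a nested if–else primitive, the sketch below implements a three-argument max whose branch conditions are all linear in the input. The function name `max3`, the variable names, and the choice of three arguments are assumptions made for illustration; the exact code in Figure 3 may differ, and the line numbers quoted in the text refer to that figure, not to this sketch.

```python
def max3(x1, x2, x3):
    """Maximum of three scalars using only linear branch conditions."""
    y = x1 - x2              # first linear test: compare x1 with x2
    if y > 0:
        y = x1 - x3          # second linear test on the side where x1 > x2
        if y > 0:
            return x1        # piece on which the function equals x1
        else:
            return x3        # piece on which the function equals x3
    else:
        y = x2 - x3          # second linear test on the side where x1 <= x2
        if y > 0:
            return x2        # piece on which the function equals x2
        else:
            return x3        # piece on which the function equals x3
```

Every input passes through at most two tests, so the branch depth of this primitive is two regardless of the dimension of the overall program input.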

We considered each and as a function of an input . Specifically, for all , we use ; and for all , we use and , where with . Under this notation, denotes the target function represented by the program P. We often omit w and write and if it is clear from the context. We denote the gradient (or Jacobian) of functions with respect to an input w by the operator: e.g., D and D.

Throughout this paper, we focus on programs with linear branches; that is, each is linear in . Primitive functions with linear branches can express fundamental non-smooth functions such as max, the absolute value, bilinear interpolation, and any piecewise analytic functions with finitely many linear boundaries. They have been widely used in various fields including machine learning, electrical engineering, and non-smooth analysis. For example, max–min representation (or the abs–normal form) has been extensively studied in non-smooth analysis [30,31]. Furthermore, neural networks using the ReLU activation function and maxpool operations are widely used in machine learning, computer vision, load forecasting, etc. [6,24,25]. The assumption on linear branches will be formally introduced in Assumption 1 in Section 3.1.

2.3. Pieces of Programs

We introduce useful notations here. For each , we define the set of the pieces of as

We also define for the pieces of the overall program. Here, we include an auxiliary piece for the first n indices so that for any and . For each , we define the set of the inputs to that corresponds to the piece , as

Then, forms a partition of . Likewise, we also define the set of the inputs to the overall program P that corresponds to , as

Then, forms a partition of . Lastly, for each , we inductively define the function that corresponds to , but is obtained by using the piece of each , as

where is defined as in . Then, coincides with on for all .

2.4. Reverse-Mode Automatic Differentiation

Reverse-mode automatic differentiation is an algorithm for computing the gradient of the target function (if it exists) by sequentially running one forward pass (Algorithm 1) and one backward pass (Algorithm 2). Given , its forward pass computes and corresponding pieces such that for . Namely, we have for all .

| Algorithm 1 Forward pass of reverse-mode automatic differentiation |


| Algorithm 2 Backward pass of reverse-mode automatic differentiation |


Given and , the backward pass computes by applying the chain rule to the composition of differentiable functions . In particular, it iteratively updates and returns . It is well known that reverse-mode automatic differentiation computes the correct gradient, i.e., coincides with for all , if primitive functions do not have any branches [8,9]. However, if some uses branches, it may return arbitrary values even if the target function is differentiable at w [14,32,33]. In the rest of the paper, we use AD to denote reverse-mode automatic differentiation.
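A commonly used example makes this failure concrete. The sketch below hand-codes the forward and backward passes for f(w) = relu(w) - relu(-w), which equals w everywhere and therefore has derivative 1 at every point. The function names and the convention that the derivative of ReLU at zero is taken from the branch actually executed (here, zero) are assumptions of this illustration.

```python
def relu(x):
    # Branching primitive: returns x on the positive side and 0 otherwise.
    return x if x > 0 else 0.0

def relu_grad(x):
    # Derivative of the branch that relu(x) actually executes. At x == 0 this
    # is the constant-zero branch, so relu'(0) = 0 is the assumed convention.
    return 1.0 if x > 0 else 0.0

def f(w):
    # Target function: relu(w) - relu(-w) is identical to w.
    return relu(w) - relu(-w)

def ad_gradient(w):
    # Forward pass: evaluate and remember the intermediate values.
    v1 = -w
    v2 = relu(w)
    v3 = relu(v1)
    value = v2 - v3
    # Backward pass: chain rule along the branches taken in the forward pass.
    adj_v2, adj_v3 = 1.0, -1.0
    adj_v1 = adj_v3 * relu_grad(v1)
    adj_w = adj_v2 * relu_grad(w) + adj_v1 * (-1.0)
    return value, adj_w
```

Away from zero, `ad_gradient` returns the derivative 1, but at `w = 0.0` both ReLU calls take the zero branch and the returned derivative is 0, although the target function is differentiable there.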

Our algorithm computing an element of the Clarke subdifferential is similar to AD: it first finds some pieces and applies the backward pass of AD (Algorithm 2) to compute its output. Here, we chose the pieces that are used for computing the forward pass with some perturbed input, not the original one. Hence, our pieces and those of AD are different in general, which enables our algorithm to correctly compute an element of the Clarke subdifferential. We provide more details including the intuition behind our algorithm in Section 3.2 and Section 3.3.

3. Efficient Automatic Subdifferentiation

In this section, we present our algorithm for efficiently computing an element of the Clarke subdifferential. To this end, we first introduce a class of primitive functions, which we consider in the rest of this paper. Then, we describe our algorithm after illustrating its underlying intuition via an example. Lastly, we analyze the computational complexity of our algorithm.

3.1. Assumptions on Primitive Functions

We considered primitive functions that satisfy the following assumptions.

Assumption 1.

For any , , and , the following hold:

- .

- is linear, i.e., there exists such that .

- is analytic on .

The first assumption states that, for any and , there exists such that selects at x. In other words, there is no non-reachable piece , i.e., all pieces of are necessary to express . The second assumption requires that all if–else statements of have linear in their conditions. Lastly, we considered , which is analytic on its domain (e.g., polynomials, exp, log, and sin), as stated in the third assumption. From this, is well-defined and analytic on some open set containing cl for all and .

Assumption 1 admits any primitive function that is analytic or piecewise analytic with linear boundaries such as max and bilinear interpolation. Hence, it allows many interesting programs such as nearly all neural networks considered in modern deep learning, e.g., [6,34].

3.2. Intuition Behind Efficient Automatic Subdifferentiation

As in AD, our algorithm first performs one forward pass (Algorithm 3) to compute the intermediate values and to find proper pieces for the given input w. Then, it runs the original backward pass of AD (Algorithm 2) to compute an element of the Clarke subdifferential at w using the intermediate values and the pieces generated by the forward pass. Here, the key component of our algorithm is about how to choose proper pieces in the forward pass so that the backward pass can correctly compute an element of the Clarke subdifferential.

Before describing our algorithm, we explain its underlying intuition. Let be a random vector drawn from a Gaussian distribution (see the initialization of Algorithm 3). Then, there exists unique and some almost surely such that

i.e., a given program takes the same piece for all inputs close to w along the direction of ; see Lemma 7 in Section 4 for the details. Since on and is differentiable, Equation (1) implies that is differentiable at for all . Therefore, the quantity:

is an element of the Clarke subdifferential , and our algorithm computes this very quantity via the backward pass of AD.
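Written out under assumed notation, the argument of this paragraph reads as follows. The symbols $v$ for the random direction, $\gamma$ for the selected pieces, $\mathcal{X}^{\gamma}$ for the corresponding input region, $f^{\gamma}$ for the function obtained by fixing those pieces, and $\partial^{c} f$ for the Clarke subdifferential are illustrative reconstructions of the description above, not a verbatim copy of Equations (1) and (2).

```latex
w + t v \in \operatorname{int}\bigl(\mathcal{X}^{\gamma}\bigr) \ \text{ for all } t \in (0, \varepsilon)
\quad\Longrightarrow\quad
\lim_{t \to 0^{+}} D f(w + t v) \;=\; \lim_{t \to 0^{+}} D f^{\gamma}(w + t v) \;=\; D f^{\gamma}(w) \;\in\; \partial^{c} f(w)
```

The first equality uses that the target function coincides with $f^{\gamma}$ near each point $w + t v$, the second uses the continuity of $D f^{\gamma}$ at $w$, and the membership holds because a limit of gradients taken at points of differentiability converging to $w$ belongs to the Clarke subdifferential at $w$.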

| Algorithm 3 Forward pass of our algorithm |


We now illustrate the main idea behind our forward pass, which enables the backward pass to compute in Equation (2). As an example, consider a program with the following primitive functions: are all analytic, and branches only once with and . For notational simplicity, we use .

If , then it is easy to observe that from the continuity of and u. Likewise, if , then . In the case that , we use the following directional derivatives to determine :

for , which can be easily computed using the chain rule. From the definition of , the linearity of , and the chain rule, it holds that

where denotes the vector of all with . Then, by Taylor’s theorem, (or ) implies (or ). In summary, if or , then the exact can be found, and hence, the backward pass (Algorithm 2) can correctly compute using .

We now consider the only remaining case: and . Unlike the previous cases, it is non-trivial here to find the correct because the first-order Taylor series approximation does not provide any information about whether a small perturbation of w toward increases or not. An important point, however, is that we do not need the exact to compute an element of the Clarke subdifferential; instead, it suffices to compute . Surprisingly, this can be performed by choosing an arbitrary piece of , as shown below.

For simplicity, suppose that , i.e., for some ; the below argument can be easily extended to an arbitrary linear . Let for , i.e., . Then, for any , we have

almost surely, by the chain rule and the following result: implies almost surely (Lemma 5 in Section 4). Here, from the continuity and the definition of , we must have on the hyperplane , and thus, for any and . From this and , we then obtain

for all . By combining Equations (4) and (5), we can finally conclude that

almost surely, where the last equality is from the fact that is either or . To summarize, if and , we can compute the target element of the Clarke subdifferential (i.e., ) by choosing an arbitrary piece of .

3.3. Forward Pass for Efficient Automatic Subdifferentiation

Our algorithm for computing an element of the Clarke subdifferential is based on the observation made in the previous section: it runs one forward pass (Algorithm 3) for computing and some such that and one backward pass of AD (Algorithm 2) for computing .

We now describe our forward pass procedure (Algorithm 3). First, it randomly samples a vector from a Gaussian distribution and initializes for all (line 2). Then, it iterates for as follows. Given and their directional derivatives with respect to , lines 5–13 in Algorithm 3 find a proper piece of by exploring its branches. If the condition in line 6 is satisfied, then it moves to the branch corresponding to (line 7). It moves in a similar way if the condition in line 8 is satisfied. As in our example in Section 3.2, if and (line 10), then our algorithm moves to an arbitrary branch (line 11). Once Algorithm 3 finds a proper piece of , it updates and via the chain rule (line 14). Here, can be correctly computed due to the continuity of , while can also be correctly computed almost surely; see Lemma 8 in Section 4 for details. We remark that our algorithm is a generalization of the algorithm in [14]. The difference occurs in lines 10–11, where the existing algorithm deterministically chooses s based on some qualification condition [14].

As illustrated in Section 3.2, the piece computed by our forward pass satisfies almost surely, and hence, the backward pass using this correctly computes almost surely, which is an element of the Clarke subdifferential. We formally state the correctness of our algorithm in the following theorem; its proof is given in Section 4.
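The two passes can be sketched in Python as follows. This is a minimal illustration under several assumptions, not the paper's Algorithm 3 itself: the program encoding (`leaf`, `branch`, and a list of `(argument indices, branch tree)` pairs), all function names, and the form `<a, x> > c` of the branch tests are hypothetical, and the arbitrary choice in the degenerate case is fixed to the first branch.

```python
import random

def leaf(f, grad):
    """A piece: a smooth function f and its gradient, both taking a list x."""
    return ("leaf", f, grad)

def branch(a, c, pos, neg):
    """A linear test <a, x> > c with sub-trees for the two outcomes."""
    return ("branch", a, c, pos, neg)

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def select_piece(tree, x, dx):
    """Descend the branch tree using the value and the directional
    derivative of each linear test (dx holds the derivatives of x)."""
    while tree[0] == "branch":
        _, a, c, pos, neg = tree
        z, dz = dot(a, x), dot(a, dx)
        if z > c or (z == c and dz > 0):
            tree = pos
        elif z < c or (z == c and dz < 0):
            tree = neg
        else:
            tree = pos  # z == c and dz == 0: any branch may be taken
    return tree

def clarke_element(program, w, rng):
    """Forward pass along a random direction, then the usual backward pass."""
    n = len(w)
    vals = list(w)
    dirs = [rng.gauss(0.0, 1.0) for _ in w]   # random Gaussian direction
    tape = []
    for args, tree in program:
        x = [vals[j] for j in args]
        dx = [dirs[j] for j in args]
        _, f, grad = select_piece(tree, x, dx)
        g = grad(x)
        vals.append(f(x))
        dirs.append(dot(g, dx))               # chain rule for the direction
        tape.append((args, g))
    adj = [0.0] * len(vals)
    adj[-1] = 1.0
    for i in reversed(range(len(tape))):      # backward pass over the tape
        args, g = tape[i]
        for j, gj in zip(args, g):
            adj[j] += adj[n + i] * gj
    return vals[-1], adj[:n]

relu = branch([1.0], 0.0,
              leaf(lambda x: x[0], lambda x: [1.0]),
              leaf(lambda x: 0.0, lambda x: [0.0]))
neg = leaf(lambda x: -x[0], lambda x: [-1.0])
sub = leaf(lambda x: x[0] - x[1], lambda x: [1.0, -1.0])

# f(w) = relu(w) - relu(-w), which equals w, so the only Clarke element is 1.
identity_program = [([0], neg), ([0], relu), ([1], relu), ([2, 3], sub)]
```

Calling `clarke_element(identity_program, [0.0], random.Random(0))` returns the gradient `[1.0]`: whichever sign the sampled direction has, exactly one of the two ReLU calls is routed to its identity branch. For an absolute-value primitive at zero, the same routine returns `[1.0]` or `[-1.0]` depending on the sign of the direction, and both are elements of the Clarke subdifferential.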

Theorem 1.

Suppose that Assumption 1 holds. Then, for any , running Algorithm 3 and then Algorithm 2 returns an element of almost surely.

3.4. Computational Cost

In this section, we analyze the computational cost of our algorithm (both forward and backward passes) on a program P, compared to the cost of running P. Here, we counted the cost of arithmetic operations and function evaluations; memory reads and writes are not counted separately, and their cost is regarded as included in the cost of the operations that perform them. We assumed that elementary operations (), the comparison between two scalar values (), and sampling a value from the standard normal distribution have a unit cost (e.g., cost), while the cost for evaluating an analytic function f is represented by cost. To denote the cost of evaluating a program P with an input w, we use cost. Likewise, for the cost of running our algorithm (i.e., Algorithms 2 and 3) on P and w, we use cost. Under this setup, we bound the computational cost of our algorithm in Theorem 2.

Theorem 2.

Suppose that for all . Then, for any program P and its input , where

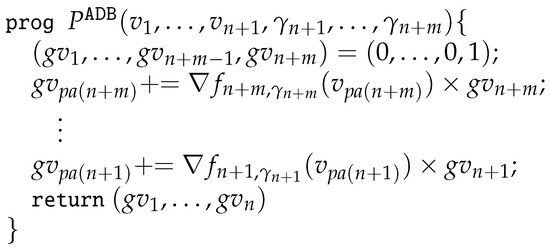

The assumption in Theorem 2 is mild since it is satisfied if at least one distinct operation is applied to each input for evaluating P. The proof of Theorem 2 is presented in Section 5, where we use program representations of Algorithms 2 and 3 (see Figure 4 and Figure 5 in Section 3.4 for the details).

Figure 4.

A program implementing the backward pass of AD (Algorithm 2).

Figure 5.

A program implementing Algorithm 3. Here, .

Suppose that, for each , and (i.e., the arity and the maximum branch depth of ) are independent of n. This condition holds in many practical cases: e.g., the absolute value function has and ; has and . Under this mild condition, , , and are independent of n, and thus, so is , because the numerator in the definition of is independent of n and the denominator is at least one (as ). This implies that is independent of the input dimension n under the above condition.

In practical setups with large n, the computational cost of our algorithm can be much smaller than that of existing algorithms based on the lexicographic subdifferential [17,18,19,20]. For example, modern neural networks have more than a million parameters (i.e., n), where the cost for computing the gradient of each piece in the activation functions (i.e., ) is typically bounded by . Further, the depth of branches in these activation functions is often bounded by a constant (e.g., the depth is one for ReLU). Hence, for those networks, , and our algorithm does not incur much computational overhead. On the other hand, lexicographic-subdifferential-based approaches require at least n computations of [17,18,19,20], which may not be practical when n is large.

4. Proof of Theorem 1

In this section, we prove Theorem 3 under the setup that in Algorithm 3 is given instead of randomly sampled. This theorem directly implies Theorem 1 since the statement of Theorem 3 holds for almost every and the proof of Theorem 1 requires showing the same statement almost surely, where the randomness comes from following an isotropic Gaussian distribution. Namely, proving Theorem 3 suffices for proving Theorem 1. We note that all results in this section are under Assumption 1.

Theorem 3.

Given , Algorithms 3 and 2 compute an element of for almost every .

4.1. Additional Notations

We frequently use the following shorthand notations: the set of indices of branches:

and an auxiliary index set:

For , , and , we use

Note that and are analytic (and, therefore, differentiable) for all , , and . We next define the set of pieces reachable by our algorithm (Algorithm 3) with inputs as :

4.2. Technical Claims

Lemma 1.

For any open , for any analytic, but non-constant , and for any , there exists such that

Furthermore, f is strictly monotone on and strictly monotone on .

Proof of Lemma 1.

Without loss of generality, suppose that . Since f is analytic, f is infinitely differentiable and can be represented by the Taylor series on for some :

where denotes the i-th derivative of f. Since f is non-constant, there exists such that . Let be the minimum such i. Then, by Taylor’s theorem, .

Consider the case that and is odd. Then, we can choose so that

i.e., f is strictly increasing on (e.g., by the mean value theorem), and hence, . One can apply a similar argument to the cases that and is odd, and is even, and and is even. This completes the proof of Lemma 1. □
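The sign argument behind these cases can be made explicit as follows. The notation is assumed for illustration and may differ from the paper's own: the expansion point is taken to be $0$, $k \ge 1$ is the smallest index with $f^{(k)}(0) \neq 0$, and $\delta > 0$ is a sufficiently small radius.

```latex
f'(x) \;=\; \frac{f^{(k)}(0)}{(k-1)!}\, x^{k-1} + o\bigl(|x|^{k-1}\bigr) \quad (x \to 0),
\qquad\text{so}\qquad
\operatorname{sign} f'(x) \;=\; \operatorname{sign}\bigl(f^{(k)}(0)\, x^{k-1}\bigr) \quad \text{for } 0 < |x| < \delta .
```

When $k$ is odd, $x^{k-1} > 0$ on both sides of $0$, so $f$ is strictly monotone in the same direction on $(-\delta, 0)$ and $(0, \delta)$; when $k$ is even, $f'$ changes sign at $0$, so $f$ is strictly monotone on each side but in opposite directions.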

Lemma 2 (Proposition 0 in [35]).

For any , for any open connected , and for any real analytic , if , then for all .

Lemma 3.

For any , for any open connected , and for any real analytic , if , then for all .

Proof of Lemma 3.

The proof directly follows from Lemma 2. □

4.3. Technical Assumptions

Assumption 2.

Given , satisfies the following: for any , , and , if is not a constant function, then

Assumption 3.

Given , satisfies the following: for any , , and ,

4.4. Technical Lemmas

Lemma 4.

Given , almost every satisfies Assumption 2.

Proof of Lemma 4.

Since , if the set of that does not satisfy Assumption 2 has a non-zero measure, then there exist , , and such that is not a constant function and

Without loss of generality, suppose that . Then, from the definition of , is contained in the zero set:

of an analytic function defined as

Namely, . However, from Lemma 2, must be a constant function, which contradicts our assumption that is not a constant function. This completes the proof of Lemma 4. □

Lemma 5.

Given , almost every satisfies Assumption 3.

Proof of Lemma 5.

Since implies for all , we prove the converse. Suppose that . Since the set has zero measure under ,

also has zero measure. This completes the proof of Lemma 5. □
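The measure-zero fact used here can be illustrated numerically: for a fixed nonzero vector, a Gaussian direction is orthogonal to it with probability zero, so a vanishing directional derivative almost surely signals a vanishing gradient. The sketch below uses a hypothetical vector `g` and Python's standard `random` module; it is an illustration only and plays no role in the proof.

```python
import random

def directional_derivative(g, v):
    # Inner product <g, v>: the derivative along v of a function with gradient g.
    return sum(gi * vi for gi, vi in zip(g, v))

def sample_direction(n, rng):
    # Direction with independent standard normal coordinates.
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

rng = random.Random(0)
g = [0.0, 2.0, -1.0]  # hypothetical nonzero gradient

# Count how often the sampled direction is exactly orthogonal to g.
zero_hits = sum(
    directional_derivative(g, sample_direction(len(g), rng)) == 0.0
    for _ in range(1000)
)

# A zero gradient gives a zero directional derivative for every direction.
zero_gradient_value = directional_derivative([0.0, 0.0, 0.0],
                                             sample_direction(3, rng))
```

The hyperplane of directions orthogonal to `g` has Gaussian measure zero, which is why `zero_hits` is expected to stay at zero however many directions are drawn.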

Lemma 6.

For and , suppose that satisfies one of the following for all :

- ;

- .

Then,.

Proof of Lemma 6.

Without loss of generality, assume that . Since we assumed by Assumption 1, there exists , i.e., for all . Define

Since is linear, for and for any , we have

This implies that, for any and , it holds that

Since and for all by the definition of , there exists such that

for all and . Combining Equations (7) and (8) implies that for all , i.e., x is a limit point of . This completes the proof of Lemma 6. □

4.5. Key Lemmas

Lemma 7.

For any and for any satisfying Assumption 2, there exist and such that

Proof of Lemma 7.

We first define some notations: for an analytic function and ,

Under Assumption 2 and by Lemma 1, one can observe that, for any , , and , if and only if is a constant function. In addition, from Lemma 1, if is not a constant function, then . Using Algorithm 4, we iteratively construct and update for each so that

Under our construction of , one can observe that . From our choice of , for any , , and for , the following statements hold:

- If , then is open since is strictly monotone on ;

- If , then are constant functions (i.e., is a constant) due to Assumption 2.

For any , we have , where

Here, is open since each term for the intersection in the above equation is open; it is if , and it is an inverse image of a continuous function of an open set otherwise. This completes the proof of Lemma 7. □

| Algorithm 4 Construction of and |


Corollary 1.

For any and for any satisfying Assumption 2, there exist and such that

Proof of Corollary 1.

This corollary directly follows from Lemma 7. □

Lemma 8.

For any and for any satisfying Assumption 3, it holds that

Proof of Lemma 8.

We use mathematical induction on i to show that and for all . The base case is trivial: and for all . Hence, suppose that since the case that is also trivial. Then, by the induction hypothesis, we have and for all . For notational simplicity, we denote and for all .

Let and . First, by Lemma 6, the definition of , and the induction hypothesis, we have

Due to the continuity of , this implies that

Now, it remains to show . To this end, we define the following:

From the definition of and , . Furthermore, by Assumption 3, for any and , we have if and only if , i.e., for all . Therefore, since , it holds that

In addition, due to the identities

showing the following stronger statement suffices for proving Lemma 8:

By Lemma 1 and the induction hypothesis (), there exists such that, for any and and for ,

Since each is linear (i.e., continuous) and by the definition of , for each , there exists an open neighborhood (open in ) of such that, for any and ,

Here, we claim that, for any ,

First, from the definition of , we have . In addition, by Equation (10) and the definition of , either or for all and ; the same argument also holds for . Hence, by Lemma 6, the LHS of Equation (11) holds. Likewise, we have .

Due to the continuity of , Equation (11) implies that, for any ,

i.e., for all and . Here, and are differentiable at by Equation (11) and Assumption 1. Due to the analyticity of and , this implies that, for any , we have

where and are differentiable at by Equation (11) and Assumption 1. This proves Equation (9) and completes the proof of Lemma 8. □

4.6. Proof of Theorem 3

Under Assumptions 2 and 3, combining Lemmas 4 and 5, Corollary 1, and Lemma 8 completes the proof of Theorem 3.

5. Proof of Theorem 2

Here, we analyze the computational costs based on the program representations in Figure 4 and Figure 5. For simplicity, we use A2 and A3 for the shorthand notations for Algorithm 2 and Algorithm 3, respectively. Under these setups, our cost analysis for and is as follows: for such that , where and are the outputs of and :

This implies the following:

where the first inequality is from the above bound and the second inequality is from the definition of and the assumption . This completes the proof.

6. Conclusions

In this work, we proposed an efficient subdifferentiation algorithm for computing an element of the Clarke subdifferential of programs with linear branches. In particular, we generalized the existing algorithm in [14] and extended its application from polynomials to analytic functions. The computational cost of our algorithm is at most that of the function evaluation multiplied by an input-dimension-independent factor, for primitive functions whose arities and maximum depths of branches are independent of the input dimension. We believe that extending our algorithm to general functions (e.g., continuously differentiable functions), general branches (e.g., nonlinear branches), and general programs (e.g., programs with loops) will be an important future research direction.

Funding

This research was supported by Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2019-0-00079, Artificial Intelligence Graduate School Program, Korea University) and Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2022R1F1A1076180).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The author declares no conflict of interest.

References

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the Symposium on Operating Systems Design and Implementation (OSDI), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the NIPS Autodiff Workshop. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 1 November 2023).

- Frostig, R.; Johnson, M.; Leary, C. Compiling machine learning programs via high-level tracing. In Proceedings of the SysML Conference, Stanford, CA, USA, 15–16 February 2018; Volume 4.

- Speelpenning, B. Compiling Fast Partial Derivatives of Functions Given by Algorithms; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 1980.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 84–90.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Griewank, A.; Walther, A. Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation, 2nd ed.; SIAM: Philadelphia, PA, USA, 2008.

- Pearlmutter, B.A.; Siskind, J.M. Reverse-mode AD in a functional framework: Lambda the ultimate backpropagator. ACM Trans. Program. Lang. Syst. 2008, 30, 1–36.

- Baur, W.; Strassen, V. The complexity of partial derivatives. Theor. Comput. Sci. 1983, 22, 317–330.

- Griewank, A. On automatic differentiation. Math. Program. Recent Dev. Appl. 1989, 6, 83–107.

- Bolte, J.; Boustany, R.; Pauwels, E.; Pesquet-Popescu, B. Nonsmooth automatic differentiation: A cheap gradient principle and other complexity results. arXiv 2022, arXiv:2206.01730.

- Griewank, A. Who invented the reverse mode of differentiation? Doc. Math. Extra Vol. ISMP 2012, 389–400.

- Kakade, S.M.; Lee, J.D. Provably Correct Automatic Sub-Differentiation for Qualified Programs. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Montréal, QC, Canada, 3–8 December 2018; pp. 7125–7135.

- Lee, W.; Yu, H.; Rival, X.; Yang, H. On Correctness of Automatic Differentiation for Non-Differentiable Functions. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Virtual, 6–12 December 2020; pp. 6719–6730.

- Nesterov, Y. Lexicographic differentiation of nonsmooth functions. Math. Program. 2005, 104, 669–700.

- Khan, K.A.; Barton, P.I. Evaluating an element of the Clarke generalized Jacobian of a composite piecewise differentiable function. ACM Trans. Math. Softw. 2013, 39, 1–28.

- Khan, K.A.; Barton, P.I. A vector forward mode of automatic differentiation for generalized derivative evaluation. Optim. Methods Softw. 2015, 30, 1185–1212.

- Barton, P.I.; Khan, K.A.; Stechlinski, P.; Watson, H.A.J. Computationally relevant generalized derivatives: Theory, evaluation and applications. Optim. Methods Softw. 2018, 33, 1030–1072.

- Khan, K.A. Branch-locking AD techniques for nonsmooth composite functions and nonsmooth implicit functions. Optim. Methods Softw. 2018, 33, 1127–1155.

- Griewank, A. Automatic directional differentiation of nonsmooth composite functions. In Proceedings of the Recent Developments in Optimization: Seventh French-German Conference on Optimization, Dijon, France, 27 June–2 July 1995; Springer: Berlin/Heidelberg, Germany, 1995; pp. 155–169.

- Sahlodin, A.M.; Barton, P.I. Optimal campaign continuous manufacturing. Ind. Eng. Chem. Res. 2015, 54, 11344–11359.

- Sahlodin, A.M.; Watson, H.A.; Barton, P.I. Nonsmooth model for dynamic simulation of phase changes. AIChE J. 2016, 62, 3334–3351.

- Hanin, B. Universal function approximation by deep neural nets with bounded width and relu activations. Mathematics 2019, 7, 992.

- Alghamdi, H.; Hafeez, G.; Ali, S.; Ullah, S.; Khan, M.I.; Murawwat, S.; Hua, L.G. An Integrated Model of Deep Learning and Heuristic Algorithm for Load Forecasting in Smart Grid. Mathematics 2023, 11, 4561.

- Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Rehman, H.U.; Kumam, P.; Argyros, I.K.; Shutaywi, M.; Shah, Z. Optimization based methods for solving the equilibrium problems with applications in variational inequality problems and solution of Nash equilibrium models. Mathematics 2020, 8, 822.

- Davis, D.; Drusvyatskiy, D.; Kakade, S.; Lee, J.D. Stochastic subgradient method converges on tame functions. Found. Comput. Math. 2020, 20, 119–154.

- Bolte, J.; Pauwels, E. Conservative set valued fields, automatic differentiation, stochastic gradient methods and deep learning. Math. Program. 2021, 188, 19–51.

- Scholtes, S. Introduction to Piecewise Differentiable Equations; Springer: Berlin/Heidelberg, Germany, 2012.

- Griewank, A.; Bernt, J.U.; Radons, M.; Streubel, T. Solving piecewise linear systems in abs-normal form. Linear Algebra Its Appl. 2015, 471, 500–530.

- Bolte, J.; Pauwels, E. A mathematical model for automatic differentiation in machine learning. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020; pp. 10809–10819.

- Lee, W.; Park, S.; Aiken, A. On the Correctness of Automatic Differentiation for Neural Networks with Machine-Representable Parameters. arXiv 2023, arXiv:2301.13370.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Mityagin, B. The zero set of a real analytic function. arXiv 2015, arXiv:1512.07276.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).