Abstract

In this paper, we study the phase structure of the product of D × D matrices. In each round, we randomly choose a matrix from a finite set of d matrices and multiply it with the product from the previous round. First, we derived a functional equation for the case of matrices with real eigenvalues and correlated choice of matrices, which led to the identification of several phases. Subsequently, we explored the case of uncorrelated choice of matrices and derived a simpler functional equation, again identifying multiple phases. We observed a phase with a smooth steady-state distribution and phases with singularities. For the general case of D-dimensional matrices, we derived a formula for the phase transition point. Additionally, we solved a related evolution model. Moreover, we examined the relaxation dynamics of the considered models. In both the smooth phase and the phases with singularities, the relaxation is exponential. Whether relaxation is faster in the smooth phase depends on the specific case.

MSC:

92D15; 60B20

1. Introduction

The investigation of products of random matrices is a significant area of modern statistical physics of disordered systems and of data science [1,2,3,4,5,6,7,8]. This well-established field of mathematics has connections to quantum condensed matter, Hidden Markov models (an essential tool in data science) [9,10,11,12,13], population dynamics of genetic networks [14], evolution in dynamically changing environments [15,16,17,18,19,20,21], and quantum resetting [22]. Our recent study focused on the phase structure of the product of correlated random matrices with D = 2 [23]. We discovered a transition similar to the localized-delocalized transition previously observed in evolution models [14,24]. The choice of the matrix in the current round can either be correlated with the matrix chosen in the previous round or uncorrelated.

A key objective in the investigation of products of random matrices is to calculate the maximal Lyapunov exponent. This can be achieved using the formalism described in [12,13]: one introduces a vector and, at each round, multiplies it by the matrix type randomly chosen for that round, which generates the n-th vector at the n-th round. The norm of the n-th vector grows exponentially with n, and the rate of this exponential growth is the maximal Lyapunov exponent.
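The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes an uncorrelated choice with fixed probabilities `probs`, renormalizes the vector after every multiplication to avoid overflow, and averages the logarithms of the norms. The function name `max_lyapunov` and its parameters are hypothetical.

```python
import numpy as np


def max_lyapunov(matrices, probs, n_steps=100_000, seed=0):
    """Estimate the maximal Lyapunov exponent of a random matrix product.

    At every round one matrix is drawn independently with probabilities `probs`
    (uncorrelated choice) and applied to the current vector. The vector is
    renormalized after each multiplication, and the logarithms of the norms are
    accumulated; their average is the exponential growth rate of the norm.
    """
    rng = np.random.default_rng(seed)
    dim = matrices[0].shape[0]
    v = np.ones(dim) / np.sqrt(dim)  # arbitrary initial unit vector
    log_growth = 0.0
    for k in rng.choice(len(matrices), size=n_steps, p=probs):
        v = matrices[k] @ v
        norm = np.linalg.norm(v)
        log_growth += np.log(norm)
        v = v / norm
    return log_growth / n_steps


# Example: commuting diagonal matrices chosen with equal probability.
A = np.diag([2.0, 1.0])
B = np.diag([3.0, 1.0])
estimate = max_lyapunov([A, B], [0.5, 0.5])
```

In this example the first component always dominates, so the estimate should be close to (ln 2 + ln 3)/2 ≈ 0.896.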

For the correlated choice of matrix types, a functional equation has recently been derived for the probability distribution of this vector. A similar equation has also been derived for the Hidden Markov model. Further investigation of this equation revealed a phase transition in the steady-state distribution of the rotating reference vector. This transition is directly related to the phase transition found in [14]. In [14], transitions between different types of environments occur in infinitesimal time intervals, which results in a system of ordinary differential equations (ODEs) instead of a functional equation.

In our recent investigation [23], we focused on the product of d = 2 matrices of order D = 2 with correlated type choice. In Section 2, we identify the phase structure for the case of uncorrelated matrices. We also derive formulas for the phase transition points in the case of higher-order matrices. In Section 3, we investigate the related evolution model on a fluctuating fitness landscape, including its relaxation dynamics.

2. The Product of Matrices

2.1. The Uncorrelated Matrices

We have two matrices, A and B, and a probability for choosing the matrix type x. We choose some initial vector , then multiply it by the randomly chosen matrix, obtaining the updated vector . We define the distribution at the n-th round, and . If the vector has the distribution at the n-th round, then at the next step we obtain

For the case of 2 × 2 matrices, we can parameterize the two-dimensional vector by an angular parameter ,

where d is the norm of the vector.

If we have a vector with angle after multiplying it by the matrices , then matrix A moves it to the point , where is the inverse function of f. In other words, matrix A acts on the angle of the vector as a one-dimensional map expressed through the inverse of the function f.

where

then the functional Equation (1) transforms to

Equation (4) is derived under general assumptions.
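To make the angular parameterization concrete, here is a hedged sketch of how a 2 × 2 matrix acts on the angle of a vector. It assumes that angles are taken modulo π (a vector and its negative define the same direction), which may differ from the parameterization used in the text; the helper names `angle_map` and `inverse_angle_map` are hypothetical.

```python
import numpy as np


def angle_map(M, theta):
    """Angle (mod pi) of the vector M @ (cos theta, sin theta)."""
    x = M[0, 0] * np.cos(theta) + M[0, 1] * np.sin(theta)
    y = M[1, 0] * np.cos(theta) + M[1, 1] * np.sin(theta)
    return np.mod(np.arctan2(y, x), np.pi)


def inverse_angle_map(M, theta):
    """Angle that M sends to theta; uses the inverse matrix (M must be invertible)."""
    return angle_map(np.linalg.inv(M), theta)


A = np.array([[2.0, 1.0], [0.5, 1.5]])
theta = np.linspace(0.1, 3.0, 7)  # interior angles, away from the 0 / pi wrap point
# Applying A and then undoing it recovers the original angles.
roundtrip = inverse_angle_map(A, angle_map(A, theta))
```

Working with angles rather than vectors reduces the evolution of the direction to a one-dimensional map, which is why the functional equation for 2 × 2 matrices is one-dimensional.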

For the numerical investigation (see Appendix B for details of the numerical algorithm), it is convenient to consider the probability distributions for fixed matrix types,

For the case of real eigenvalues, we assume a steady-state solution of Equation (4)

First, we need to identify the support on which the solution of Equation (4) is defined. We define as the angles of the eigenvectors associated with the maximal eigenvalues:

We assume that these angles are the special points of the functions .
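A quick numerical check of why eigenvector angles play this role: the direction of an eigenvector is left unchanged by the matrix, so its angle is a fixed point of the induced angle map. For a real 2 × 2 matrix M, the derivative of the angle of M(cos θ, sin θ) with respect to θ equals det M / |M(cos θ, sin θ)|², which at the eigenvector of the largest eigenvalue λ1 reduces to λ2/λ1; when |λ2| < λ1 this fixed point is attracting. The sketch below (hypothetical helper names, example matrix chosen only for illustration) verifies both statements with a finite difference.

```python
import numpy as np


def angle_map(M, theta):
    """Angle (mod pi) of M @ (cos theta, sin theta)."""
    v = M @ np.array([np.cos(theta), np.sin(theta)])
    return np.mod(np.arctan2(v[1], v[0]), np.pi)


def dominant_direction(M):
    """Angle (mod pi) of the eigenvector of the largest eigenvalue, and lambda2 / lambda1.

    Assumes both eigenvalues are real."""
    eigvals, eigvecs = np.linalg.eig(M)
    order = np.argsort(-np.abs(eigvals))
    lam1, lam2 = eigvals[order[0]].real, eigvals[order[1]].real
    e1 = eigvecs[:, order[0]].real
    return np.mod(np.arctan2(e1[1], e1[0]), np.pi), lam2 / lam1


M = np.array([[2.0, 1.0], [0.5, 1.5]])  # eigenvalues 2.5 and 1
theta_star, ratio = dominant_direction(M)
h = 1e-6
# central finite difference of the angle map at the fixed point
slope = (angle_map(M, theta_star + h) - angle_map(M, theta_star - h)) / (2 * h)
```

The same ratio λ2/λ1 is the contraction factor that enters the attraction argument for higher-order matrices in Section 2.3.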

For the steady-state case of Equation (4), we expand the function near the point

We look for an Ansatz

near the points and .

Let us define . We look for solutions with scaling behavior near the corresponding critical points

When the transition rates tend to 0, the Ansatz of Equation (10) transforms to

We obtain from Equation (7)

Thus

The critical cases occur when .

and

We derived Equations (13) and (14) by assuming the scaling Ansatz of Equation (10). Although our numerical simulations show that the scaling Ansatz does not hold in all situations, Equations (13) and (14) are still well confirmed numerically.
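The role of the scaling Ansatz can be illustrated with a deliberately simplified version of the argument; this is our own sketch, not a derivation of Equations (13) and (14). Assume, hypothetically, that a border point x* of the support is the attracting fixed point of the angle map g of one matrix, chosen with probability p, that g has slope c = λ2/λ1 < 1 at x*, and that near x* only this matrix contributes to the steady-state equation. Inserting P(x* + δ) ∝ δ^α into P(θ) = p P(g⁻¹(θ)) (g⁻¹)′(θ) gives p c^(−(α+1)) = 1, i.e. α = ln p / ln c − 1, so under these assumptions the density diverges (α < 0) exactly when p > c.

```python
import math


def edge_exponent(p, c):
    """Exponent alpha in P(x* + delta) ~ delta**alpha under the simplified assumption above.

    p: probability of choosing the matrix whose attracting fixed point is x*.
    c: slope of that matrix's angle map at x*, i.e. lambda2 / lambda1, with 0 < c < 1.
    Solves p * c**(-(alpha + 1)) = 1 for alpha.
    """
    if not (0.0 < p < 1.0 and 0.0 < c < 1.0):
        raise ValueError("need 0 < p < 1 and 0 < c < 1")
    return math.log(p) / math.log(c) - 1.0


def is_singular(p, c):
    """The density diverges at x* when alpha < 0, i.e. when p > c."""
    return edge_exponent(p, c) < 0.0
```

For equal probabilities p = 1/2, this sketch predicts a divergence at the border when λ2/λ1 < 1/2 and a density that vanishes there when λ2/λ1 > 1/2.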

For simplicity, we use the following representation for the matrices

where the superscript denotes the transpose of the vector. We also denote

Then we have

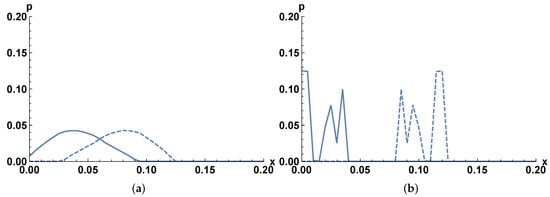

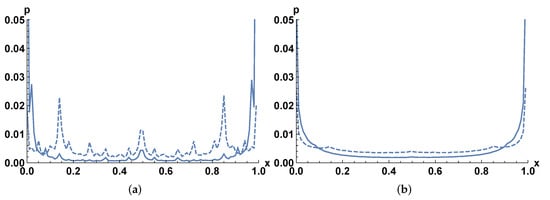

Figures 1 and 2 illustrate the phase transition. In these simulations, both matrices are chosen with equal probability.

Figure 1.

The distributions (the smooth line) and (the dashed line) versus , see Equation (5). , see Equation (15). (a) The case without singularities, , see Equation (15). The right border of the smooth line is . The right border of the dashed line is . The left border of the dashed line is close to the point . (b) A singular distribution. . We identify the left border of the smooth line with , and the right border of the dashed line with . We identify the right border of the smooth line with , and the right border of the dashed line with .

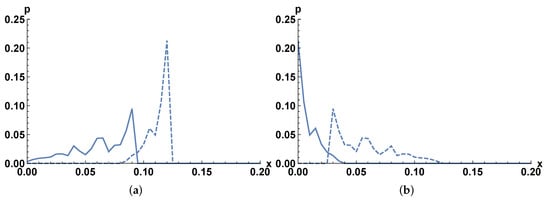

Figure 2.

The distributions (the smooth line) and (the dashed line) versus , see Equation (5). , see Equation (15). (a) . The right border of the smooth line, which has a singularity, is at the point . The left border of the smooth line is , and the right border of the dashed line is . (b) . The singularity point of the dashed line is . The left border of the smooth line is , and the right border of the dashed line is .

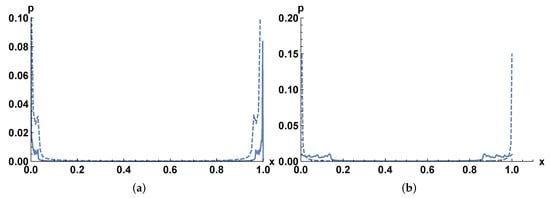

2.2. The Relaxation Dynamics

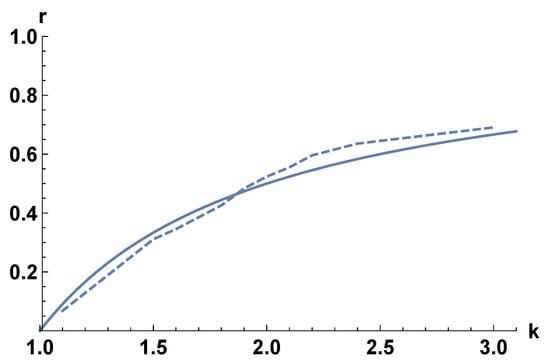

We also studied the relaxation dynamics and confirmed that the relaxation to the steady state is exponentially fast in both phases: the squared distance to the steady state decreases approximately as . Although we were not able to derive a general expression for the relaxation dynamics in all cases, we observed that when we choose , it is reasonable to take r as the gap between the maximal and the next eigenvalues,

where the maximal eigenvalue is 1 (the probability balance condition) and r is the gap between the maximal and the second-largest eigenvalues, see Equation (15). Figure 3 shows the numerical results for r.
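The link between r and the relaxation rate can be seen on any linear iteration p_{n+1} = T p_n with a column-stochastic matrix T (for instance a discretized version of Equation (4)): the largest eigenvalue is 1, the deviation from the steady state decays as |λ2|^n, and so the squared distance decays as (1 − r)^(2n) with r = 1 − |λ2|. The 3 × 3 matrix below is a toy example chosen only because its second eigenvalue, 0.7, is easy to check by hand; the function names are hypothetical.

```python
import numpy as np


def spectral_gap(T):
    """r = 1 - |lambda_2| for a column-stochastic matrix T (largest eigenvalue 1)."""
    moduli = np.sort(np.abs(np.linalg.eigvals(T)))[::-1]
    return 1.0 - moduli[1]


def squared_distances(T, p0, n_steps):
    """||p_n - p_inf||^2 along the iteration p_{n+1} = T p_n."""
    eigvals, eigvecs = np.linalg.eig(T)
    k = np.argmin(np.abs(eigvals - 1.0))
    p_inf = np.real(eigvecs[:, k])
    p_inf = p_inf / p_inf.sum()  # normalize to total probability 1
    p = np.array(p0, dtype=float)
    out = []
    for _ in range(n_steps):
        p = T @ p
        out.append(np.sum((p - p_inf) ** 2))
    return np.array(out)


T = np.array([[0.8, 0.1, 0.1],
              [0.1, 0.8, 0.1],
              [0.1, 0.1, 0.8]])  # every column sums to 1
r = spectral_gap(T)
d = squared_distances(T, [1.0, 0.0, 0.0], n_steps=10)
```

Here each step multiplies the squared distance by (1 − r)² = 0.49, which is the exponential relaxation described above.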

Figure 3.

The relaxation dynamics for the uncorrelated case, the for the model with . The smooth line is our theoretical estimate, and the dashed line is the numerical result.

We also investigated the relaxation dynamics for the correlated choice of matrices: in the next round, the current matrix type is kept with probability q and the alternative type is chosen with probability 1 − q. Figure 4 shows the numerical results for r.
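A minimal sketch of the correlated choice described above, assuming two matrix types coded as 0 and 1: the type is kept with probability q and switched with probability 1 − q, so the type sequence is a two-state Markov chain. The same renormalized-product estimate of the maximal Lyapunov exponent can then be run on that sequence. The function names and example matrices are illustrative only.

```python
import numpy as np


def correlated_types(n_steps, q, seed=0):
    """Matrix types 0/1: keep the current type with probability q, switch with 1 - q."""
    rng = np.random.default_rng(seed)
    types = np.empty(n_steps, dtype=int)
    types[0] = rng.integers(2)  # initial type chosen uniformly
    switch = rng.random(n_steps) >= q  # switch[n] is True with probability 1 - q
    for n in range(1, n_steps):
        types[n] = 1 - types[n - 1] if switch[n] else types[n - 1]
    return types


def max_lyapunov_correlated(A, B, q, n_steps=100_000, seed=0):
    """Renormalized-product estimate of the maximal Lyapunov exponent for correlated choice."""
    mats = (A, B)
    v = np.ones(A.shape[0]) / np.sqrt(A.shape[0])
    log_growth = 0.0
    for k in correlated_types(n_steps, q, seed):
        v = mats[k] @ v
        norm = np.linalg.norm(v)
        log_growth += np.log(norm)
        v = v / norm
    return log_growth / n_steps
```

For q = 1/2 the sequence reduces to the uncorrelated case with equal probabilities; q close to 1 produces long runs of the same matrix type.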

Figure 4.

The numerical results for the relaxation dynamics, the for the model with . The system keeps the current landscape with probability and moves to the alternative one with probability . The change of distribution is at .

2.3. The Identification of the Phase Transition in Higher Dimensions

Consider the case of higher-order matrices. For simplicity, we take the following representation for the matrices

Matrix A has the maximal eigenvalue , and its next eigenvalues are and . Matrix B has the maximal eigenvalue , and its next eigenvalues are and .

If we take a vector , then multiplying it by A we obtain ∼, so the distance to decreases by a factor of . Thus, the attraction factor is

Hence, for the D = 2 case, we identify .

The exact critical point corresponds to the limit

When

The steady-state distribution is expected to concentrate on the eigenvector associated with the maximal eigenvalue. We derived Equations (20) and (21) without any scaling assumption. This derivation is qualitative, in contrast to the rigorous derivation of Equations (13) and (14) based on the Ansatz (10). Here, the state of the system is described by a D-dimensional unit vector .
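The attraction argument above can be checked numerically for a D × D matrix: starting near the eigenvector of the maximal eigenvalue λ1, the distance of the iterated direction from that eigenvector shrinks asymptotically by the factor |λ2|/λ1 per multiplication, where λ2 is the next eigenvalue. The 3 × 3 diagonal matrix below is an arbitrary illustrative choice with λ1 = 3 and λ2 = 1.5, so the ratio should approach 0.5; the helper name is hypothetical.

```python
import numpy as np


def attraction_ratios(M, eps=1e-2, n_steps=30):
    """Successive ratios of the distance between the iterated direction and the
    eigenvector of the largest eigenvalue (assumed real and non-degenerate)."""
    eigvals, eigvecs = np.linalg.eig(M)
    e1 = np.real(eigvecs[:, np.argmax(np.abs(eigvals))])
    e1 = e1 / np.linalg.norm(e1)
    u = e1 + eps * np.ones_like(e1)  # start slightly off the eigenvector
    u = u / np.linalg.norm(u)
    dists = []
    for _ in range(n_steps):
        u = M @ u
        u = u / np.linalg.norm(u)
        # length of the component of u orthogonal to e1 (the sign of u is irrelevant)
        dists.append(np.linalg.norm(u - np.dot(u, e1) * e1))
    dists = np.array(dists)
    return dists[1:] / dists[:-1]


A = np.diag([3.0, 1.5, 1.0])  # lambda1 = 3, next eigenvalue 1.5
ratios = attraction_ratios(A)
```

The component along the third eigenvector decays faster (factor 1/3), so the late-time ratio is governed by the next-to-maximal eigenvalue alone.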

In Figure 5, we take , . Our numerical results confirm the existence of the phase transition according to Equations (21) and (22). We numerically calculate the distribution via for the case where matrix A is chosen, and via for the alternative case.

In the D = 2 case, we observed non-ergodicity with several separated domains. In the current case, however, we do not observe such non-ergodic behavior. We attribute this difference to the one-dimensional character of the functional equation in the D = 2 case: the dimensionality of the functional equation affects whether non-ergodicity and separated domains appear in the system.

Although we are unable to derive a steady-state distribution for similar to the one described by Equation (5), we can still measure the distribution numerically during the iteration and identify the phase transition through the singularities of this distribution.
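One way to measure such distributions during the iteration is sketched below. It assumes, purely for illustration, that the recorded quantity is the first component of the normalized vector (with the overall sign fixed, since v and −v define the same direction), stored separately according to which matrix was applied last; the observable actually used for Figure 5 is the one defined in the text. Histogram peaks that keep growing as the bins are refined would hint at a singularity.

```python
import numpy as np


def component_histograms(A, B, p_a, n_steps=100_000, n_burn=1_000, bins=100, seed=0):
    """Histograms of the first component of the normalized vector, split by the
    matrix applied last (index 0 for A, 1 for B). Uncorrelated choice assumed."""
    rng = np.random.default_rng(seed)
    mats = (A, B)
    u = rng.normal(size=A.shape[0])
    u = u / np.linalg.norm(u)
    samples = ([], [])
    for n in range(n_burn + n_steps):
        k = 0 if rng.random() < p_a else 1
        u = mats[k] @ u
        u = u / np.linalg.norm(u)
        if u[0] < 0:  # v and -v describe the same direction
            u = -u
        if n >= n_burn:  # discard the transient
            samples[k].append(u[0])
    return [np.histogram(s, bins=bins, range=(0.0, 1.0), density=True) for s in samples]


A = np.diag([3.0, 1.5, 1.0])
B = np.array([[1.0, 0.5, 0.2],
              [0.5, 2.0, 0.3],
              [0.2, 0.3, 1.5]])
hists = component_histograms(A, B, p_a=0.5, n_steps=20_000)
```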

2.4. Complex Eigenvalues Case

We assume that the support of the steady-state distribution can be larger than . We take the following matrix

and

When one matrix has a real eigenvalue , while the other matrix has complex eigenvalues, we observe a transition in both distributions, as described by Equation (13); see Figure 6.

Figure 6.

The three-dimensional case, Equation (18). We take 10 random initial vectors and then perform 100,000 iterations. The smooth line is for , and the dashed line is for . . (a) . The probability distribution p versus . Both distributions correspond to the localization case. (b) . The probability distribution p versus . There is no singularity in .

Complex eigenvalues in one matrix introduce additional dynamics and can lead to new transitions in the distributions: the interplay between real and complex eigenvalues influences the behavior of the system and can result in distinct phase transitions.
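In the two-dimensional case, the effect of complex eigenvalues is easy to see: a real 2 × 2 matrix with non-real eigenvalues has no real eigenvector, so no direction is left invariant. For the rotation-scaling matrix r R(a), with eigenvalues r e^(±ia), the angle map is simply a shift by a (mod π), as the hypothetical helpers below confirm; r = 1.2 and a = 0.3 are arbitrary. This is only a 2D illustration and not the matrix used in Figure 6.

```python
import numpy as np


def rotation_scaling(r, a):
    """r * R(a): eigenvalues r * exp(+-1j * a), non-real whenever sin(a) != 0."""
    return r * np.array([[np.cos(a), -np.sin(a)],
                         [np.sin(a), np.cos(a)]])


def angle_shift(M, theta):
    """Change of the direction angle under M, reduced to [-pi/2, pi/2)."""
    v = M @ np.array([np.cos(theta), np.sin(theta)])
    new = np.arctan2(v[1], v[0])
    return np.mod(new - theta + np.pi / 2, np.pi) - np.pi / 2


M = rotation_scaling(1.2, 0.3)
eigvals = np.linalg.eigvals(M)
shifts = [angle_shift(M, t) for t in np.linspace(0.0, np.pi, 50, endpoint=False)]
```

Every angle is shifted by the same amount, so repeated application carries the direction around the whole circle; this is consistent with a support that is not confined between eigenvector angles.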

3. Investigation of Related Evolution Model

In the previous sections, we identified various qualitative behaviors of the steady-state distributions. These behaviors arise from the interplay between random transitions among different types of matrices and the forces of selection (attraction towards the attractor state). In this section, we investigate a related model of the evolution of a population with two genotypes, with random transitions between two fitness landscapes.

The Crow–Kimura evolution model [25,26] considers a population with genome length L and two alleles for each gene. The mutation rate is µ per genome. In this model, the growth rate of a genotype is equal to its fitness. We denote by J the growth rate of the “peak” genotype in the single-peak fitness landscape [27]; the fitnesses of all other genotypes are set to zero. The evolution of the population is driven by mutation and natural selection: genotypes with higher fitness (higher growth rates) have a greater chance of survival and reproduction. The model thus allows us to investigate population dynamics in the presence of mutation and fitness differences among genotypes.

We have the following equation [27] for the fraction of replicators with the high-fitness sequence:

where x is the fraction of the peak genotype in the population.
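To illustrate the single-peak dynamics, here is a hedged numerical sketch. It assumes, as an illustration only, the large-L single-peak form without back mutations, dx/dt = x(J − µ − Jx), whose stable steady state is x = 1 − µ/J for J > µ and x = 0 otherwise; near x = 1 − µ/J the right-hand side is approximately −(J − µ)(x − (1 − µ/J)). This assumed form may differ in detail from the equation of [27]. The code integrates it with a forward Euler scheme; the function names are hypothetical.

```python
def peak_rhs(x, J, mu):
    """Assumed single-peak dynamics: dx/dt = x * (J - mu - J * x)."""
    return x * (J - mu - J * x)


def integrate_peak_fraction(x0, J, mu, dt=1e-3, t_max=50.0):
    """Forward Euler integration of the assumed equation from x(0) = x0."""
    x = x0
    for _ in range(int(round(t_max / dt))):
        x += dt * peak_rhs(x, J, mu)
    return x


x_above = integrate_peak_fraction(0.1, J=2.0, mu=1.0)  # J > mu: approaches 1 - mu / J = 0.5
x_below = integrate_peak_fraction(0.1, J=0.5, mu=1.0)  # J < mu: the peak genotype dies out
```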

Let us consider two fitness landscapes with the same mutation rate . In the first case, the growth rate is , and in the second case, it is . We now introduce random transitions between the landscapes with rate . To describe the process stochastically, we introduce the probability distributions , where x is the fraction of the peak genotype and t is time.

We can derive the following system of equations, similar to the one found in [14]:

The support on which the steady-state solution is nonzero is , .

Consider the steady-state distribution. We again assume a scaling Ansatz similar to the one in Equation (10):

Applying the Ansatz to Equation (25), near the critical point we make the replacement , where is the growth rate of genotype l and is the steady-state position of genotype l. This approximation simplifies the analysis of the system near the critical point.

Setting , we derive the transition points of the system.

When or , we observe singularities in the distribution of near the point , which indicates that the system is in the localized phase. If , there are no singularities, and the system is in the de-localized phase. These phases, characterized by the presence or absence of singularities in the distributions of , were first discovered in [14].
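The scaling argument can be made explicit under an assumption about the form of Equation (25). Suppose, as in [14], that the densities obey ∂t P_l = −∂x[f_l(x) P_l] − γ P_l + γ P_l′ (l′ being the other landscape), with an assumed drift f_l(x) = x(J_l − µ − J_l x), switching rate γ, and J_l > µ. Near x_l = 1 − µ/J_l, f_l(x) ≈ −(J_l − µ)(x − x_l), and substituting P_l ∝ |x − x_l|^α into the steady-state equation gives (J_l − µ)(α + 1) = γ, i.e. α_l = γ/(J_l − µ) − 1, so the density diverges at x_l when γ < J_l − µ. The hypothetical helpers below only evaluate this formula under these assumptions.

```python
def edge_exponents(J1, J2, mu, gamma):
    """Exponents alpha_l with P_l ~ |x - x_l|**alpha_l near x_l = 1 - mu / J_l (assumes J_l > mu)."""
    if min(J1, J2) <= mu:
        raise ValueError("sketch assumes both landscapes are above the error threshold, J_l > mu")
    return tuple(gamma / (J - mu) - 1.0 for J in (J1, J2))


def phase(J1, J2, mu, gamma):
    """'localized' if at least one density diverges at its edge, else 'de-localized'."""
    alphas = edge_exponents(J1, J2, mu, gamma)
    return "localized" if any(a < 0 for a in alphas) else "de-localized"
```

With J1 = 2, J2 = 3 and µ = 1, for example, this sketch gives a localized phase for γ < 2 and a de-localized phase for γ > 2.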

Let us investigate the model numerically. In the numerical algorithm, it is essential to maintain the probability balance (the probabilities of all states sum to 1) at every step of the iteration; see Appendix B for details.

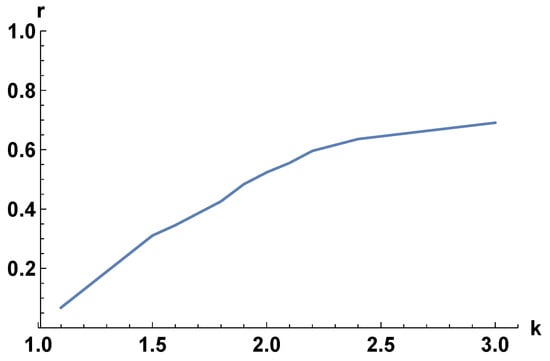

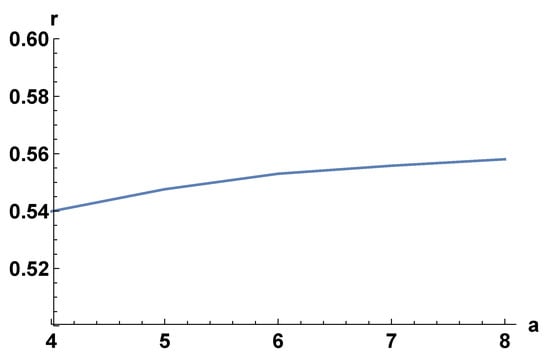

Instead of discretizing the equation directly, we formulate a related random walk model on a strip, with transitions between neighboring points on each chain and between the two chains. The model has L points in the interval, and the probabilities at the grid points are described by two sets of probabilities, . Our numerical simulations show that the relaxation dynamics strongly depend on the phase of the system, as shown in Figure 7.
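The random walk on the strip can be sketched as a continuous-time Markov generator. Everything specific here is an assumption for illustration: the drift f_l(x) = x(J_l − µ − J_l x) (a single-peak form assumed only for this sketch), an upwind rule in which a walker hops to the neighbor in the direction of the drift with rate |f_l|/h, switching between the chains with rate γ, and a grid that starts at x = 1/L so that the extinct state x = 0 does not become absorbing. Each column of the generator sums to zero, the continuous-time form of the probability balance, and the slowest relaxation rate is minus the second-largest real part of its spectrum. All names are hypothetical.

```python
import numpy as np


def strip_generator(J1, J2, mu, gamma, L=200):
    """Generator W (dP/dt = W @ P) of a random walk on two chains of L points.

    State index l * L + i stands for chain (landscape) l and position x_i.
    """
    x = np.linspace(1.0 / L, 1.0, L)
    h = x[1] - x[0]
    W = np.zeros((2 * L, 2 * L))
    for l, J in enumerate((J1, J2)):
        f = x * (J - mu - J * x)  # assumed drift on chain l
        for i in range(L):
            s = l * L + i
            # upwind hop towards the drift direction
            if f[i] > 0 and i < L - 1:
                W[s + 1, s] += f[i] / h
                W[s, s] -= f[i] / h
            elif f[i] < 0 and i > 0:
                W[s - 1, s] += -f[i] / h
                W[s, s] -= -f[i] / h
            other = (1 - l) * L + i  # same position on the other chain
            W[other, s] += gamma
            W[s, s] -= gamma
    return W


def relaxation_rate(W):
    """Slowest decay rate: minus the second-largest real part of the spectrum of W."""
    re = np.sort(np.linalg.eigvals(W).real)[::-1]
    return -re[1]
```

Evaluating `relaxation_rate(strip_generator(J1, J2, mu, gamma))` for switching rates on either side of J_l − µ is one way to probe how the relaxation depends on the phase in this toy discretization.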

Figure 7.

The relaxation dynamics, the for the evolution model with . The change of distribution is at .

4. Conclusions

In this article, we investigated the product of random matrices and a related evolution model, aiming to understand the behavior and dynamics of these systems. We formally define the “localized phase” as a phase characterized by a singularity in the steady-state distribution and the “de-localized phase” as a phase with a smooth distribution. This definition classifies the phases by the behavior of the steady-state distributions. The de-localized phase exhibits a wide distribution, indicating a broader range of possible states of the system. We numerically studied the product of uncorrelated discrete random matrices and identified a phase transition between the localized and de-localized phases. The master Equation (3) derived for this case is simpler than the one for correlated random matrices. By considering matrices with real eigenvalues and complex eigenvalues separately, we obtained analytical formulas for the transition points, including a formula (Equation (19)) for the appearance of singularities in the phase space.

For the case of 2 × 2 matrices, we observed a series of transitions, as shown in Figure 1. However, we found no indication that different phases of the steady state have different expressions for the Lyapunov exponents. For the relaxation dynamics, we investigated both the correlated and uncorrelated cases. We found no transition in the dynamics, but relaxation is faster in the singular phase. Although we answered some questions related to the random matrix problem, certain issues remain unsolved. Two significant open problems are determining the conditions under which relaxation in the localized phase is faster than in the de-localized phase and identifying cases of explicit non-ergodic behavior in the steady-state distribution. We also investigated the related evolution model, which resembles the random matrix product when the matrices have real eigenvalues. In this model, we verified that the relaxation is somewhat faster in the non-singular phase. Although our research focused on the master equation, the existence of the phase transition may have practical implications for related models, such as Hidden Markov Models. In conclusion, our work contributes to a better understanding of the random matrix problem, and we hope that future research will address the remaining difficult problems and clarify the practical implications of the phase transition in related models.

For Hidden Markov Models (HMMs), in functional equations such as Equation (5), the transition probabilities between states depend on the current state of the system. In HMMs, the state of the system is hidden and can only be inferred from the observed outputs, which are emitted with probabilities associated with each state. The transition probabilities in the functional equations determine the probabilities of transitions between hidden states, given the current hidden state. The existence of a phase transition here could therefore have a significant impact. Indeed, the view that learning is especially successful near a phase transition point is supported by a large body of research over the last decades: in various settings, including statistical physics, machine learning, neural networks, and optimization algorithms, the performance of a system has been observed to improve significantly near a phase transition point [28].

The Kalman filter [29] is another interesting model related to the product of correlated random matrices. The Kalman filter is an essential tool in control theory and estimation, used to estimate the state of a dynamic system from noisy measurements. In the linear case, the Kalman filter has a well-defined and efficient solution. In the nonlinear case, however, the optimal solution remains an open problem [30], and the nonlinear Kalman filter is an active area of research in control theory and estimation. The difficulty stems from the nonlinearity of the system dynamics combined with the uncertainty in the process and measurement noise. Nonlinear variants such as the Extended Kalman Filter (EKF) and the Unscented Kalman Filter (UKF) are commonly used as approximations, but they have limitations and are not guaranteed to provide the globally optimal solution. We hope that the methods developed here and in [31] could also be applied to the nonlinear Kalman filter.

Author Contributions

Software, V.S.; Investigation, H.M. and D.B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Russian Science Foundation grant No. 23-11-00116, SCS of Armenia grants No. 21T-1C037, 20TTAT-QTa003 and Faculty Research Funding Program 2022 implemented by the Enterprise Incubator Foundation with the support of PMI Science.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

D.B.S. thanks I. Gornyi and P. Popper for discussions.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix B. Numerical Algorithms

We used two numerical algorithms.

Appendix B.1. The First Algorithm

We take a uniform grid with step and define the probability distribution on the grid as . We replace by , where is the coordinate of the grid point closest to .
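A sketch of this first algorithm for the uncorrelated 2 × 2 case, under our own assumptions: angles are taken modulo π on a uniform grid of cell centres, each matrix k (chosen with probability p_k) sends the grid angle θ_j to an image angle that is replaced by the closest grid point, and the resulting weights form a column-stochastic transfer matrix. Because every column sums to the total probability Σ_k p_k = 1, the probability balance is preserved at each iteration. Names and example matrices are hypothetical.

```python
import numpy as np


def nearest_grid_transfer(matrices, probs, n_grid=1000):
    """Column-stochastic transfer matrix on a uniform grid of angles in [0, pi).

    Grid point j (cell centre theta_j) is mapped by matrix k to an image angle,
    which is replaced by the closest grid point; that entry receives weight p_k.
    """
    step = np.pi / n_grid
    theta = (np.arange(n_grid) + 0.5) * step  # cell centres
    T = np.zeros((n_grid, n_grid))
    cols = np.arange(n_grid)
    for M, p in zip(matrices, probs):
        x = M[0, 0] * np.cos(theta) + M[0, 1] * np.sin(theta)
        y = M[1, 0] * np.cos(theta) + M[1, 1] * np.sin(theta)
        image = np.mod(np.arctan2(y, x), np.pi)
        rows = np.floor(image / step).astype(int) % n_grid  # cell whose centre is closest
        np.add.at(T, (rows, cols), p)
    return theta, T


def steady_state(T, n_iter=2000):
    """Iterate P <- T P starting from the uniform distribution."""
    P = np.full(T.shape[0], 1.0 / T.shape[0])
    for _ in range(n_iter):
        P = T @ P
    return P


A = np.array([[2.0, 1.0], [0.5, 1.5]])
B = np.array([[1.5, 0.5], [1.0, 2.0]])
theta, T = nearest_grid_transfer([A, B], [0.5, 0.5], n_grid=400)
P = steady_state(T)
```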

Appendix B.2. The Second Algorithm

Consider our functions on the interval . Suppose there is a singularity at the point . We then use two grids: points with step between 0 and , and points with step between and . We identify the points .

We have points in the cycle:

Next, we define the probabilities at these points.

We define the total probability as

where

Next, for any j, we define and as the neighbors of the point. Then we define and . Next, we define and . Finally, we define the transition matrix as

where are matrices with the matrix elements .
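The second algorithm can be sketched as follows, under assumptions we label explicitly: the interval is treated as a segment (its endpoints are not identified as a cycle), the grid has one step on [a, c) and another on [c, b] around the singular point c, and the probability sent to an image point y is split between its two neighboring grid points with linear-interpolation weights. These weights are non-negative and sum to one, so every column of the transfer matrix sums to Σ_k p_k = 1 and the probability balance holds. The neighbor-splitting rule is our assumption about the definitions in this appendix; all names and the toy maps are hypothetical.

```python
import numpy as np


def two_step_grid(a, c, b, n_left, n_right):
    """Grid on [a, b] with step (c - a) / n_left on [a, c) and (b - c) / n_right on [c, b]."""
    left = a + (c - a) * np.arange(n_left) / n_left
    right = c + (b - c) * np.arange(n_right + 1) / n_right
    return np.concatenate([left, right])


def split_to_neighbors(grid, y):
    """Bracketing grid indices of y and linear-interpolation weights (non-negative, sum 1)."""
    j = np.clip(np.searchsorted(grid, y, side="right") - 1, 0, len(grid) - 2)
    w_hi = np.clip((y - grid[j]) / (grid[j + 1] - grid[j]), 0.0, 1.0)
    return j, j + 1, 1.0 - w_hi, w_hi


def interpolating_transfer(grid, maps, probs):
    """Column-stochastic transfer matrix: mass at grid[j] moves to maps[k](grid[j])
    with probability probs[k] and is split between the two neighboring grid points."""
    n = len(grid)
    T = np.zeros((n, n))
    cols = np.arange(n)
    for f, p in zip(maps, probs):
        lo, hi, w_lo, w_hi = split_to_neighbors(grid, f(grid))
        np.add.at(T, (lo, cols), p * w_lo)
        np.add.at(T, (hi, cols), p * w_hi)
    return T


grid = two_step_grid(0.0, 0.3, 1.0, n_left=60, n_right=70)  # step 0.005 below c, 0.01 above
maps = [lambda g: 0.5 * g, lambda g: 0.5 * g + 0.5]  # toy maps of [0, 1] into itself
T = interpolating_transfer(grid, maps, [0.5, 0.5])
```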

References

- Furstenberg, H.; Kesten, H. Products of random matrices. Ann. Math. Statist. 1960, 31, 457–469.

- Furstenberg, H. Noncommuting random products. Trans. Am. Math. Soc. 1963, 108, 377–428.

- Bougerol, P.; Lacroix, J. Products of Random Matrices with Applications to Schrödinger Operators; Birkhäuser: Basel, Switzerland, 1985.

- Chamayou, J.F.; Letac, G. Explicit stationary distributions for compositions of random functions and products of random matrices. J. Theor. Prob. 1991, 4, 3–36.

- Crisanti, A.; Paladin, G.; Vulpiani, A. Products of Random Matrices in Statistical Physics; Springer: Berlin/Heidelberg, Germany, 1993.

- Comtet, A.; Luck, J.M.; Texier, C.; Tourigny, Y. The Lyapunov exponent of products of random 2 × 2 matrices close to the identity. J. Stat. Phys. 2013, 150, 13–65.

- Comtet, A.; Texier, C.; Tourigny, Y. Lyapunov exponents, one-dimensional Anderson localization and products of random matrices. J. Phys. A 2013, 46, 254003.

- Comtet, A.; Tourigny, Y. Impurity models and products of random matrices. arXiv 2016, arXiv:1601.01822.

- Ephraim, Y.; Merhav, N. Hidden Markov processes. IEEE Trans. Inform. Theory 2002, 48, 1518–1569.

- Mamon, R.S.; Elliott, R.J. (Eds.) Hidden Markov Models in Finance: Further Developments and Applications, Volume II; Springer Nature: Berlin/Heidelberg, Germany, 2014.

- Saakian, D.B. Exact solution of the hidden Markov processes. Phys. Rev. E 2017, 96, 052112.

- Zuk, O.; Kanter, I.; Domany, E. The entropy of a binary hidden Markov process. J. Stat. Phys. 2005, 121, 343–360.

- Allahverdyan, A.E. Entropy of Hidden Markov Processes via Cycle Expansion. J. Stat. Phys. 2008, 133, 535.

- Hufton, P.G.; Lin, Y.T.; Galla, T.; McKane, A.J. Intrinsic noise in systems with switching environments. Phys. Rev. E 2016, 93, 052119.

- Mayer, A.; Mora, T.; Rivoire, O.; Walczak, A.M. Diversity of immune strategies explained by adaptation to pathogen statistics. Proc. Natl. Acad. Sci. USA 2016, 113, 8630.

- Kussell, E.; Leibler, S. Phenotypic diversity, population growth, and information in fluctuating environments. Science 2005, 309, 2075–2078.

- Skanata, A.; Kussell, E. Evolutionary Phase Transitions in Random Environments. Phys. Rev. Lett. 2016, 117, 038104.

- Wienand, K.; Frey, E.; Mobilia, M. Evolution of a Fluctuating Population in a Randomly Switching Environment. Phys. Rev. Lett. 2017, 119, 158301.

- Rivoire, O.; Leibler, S. The value of information for populations in varying environments. J. Stat. Phys. 2011, 142, 1124–1166.

- Rivoire, O. Informations in models of evolutionary dynamics. J. Stat. Phys. 2016, 162, 1324–1352.

- Saakian, D.B. Semianalytical solution of the random-product problem of matrices and discrete-time random evolution. Phys. Rev. E 2018, 98, 062115.

- Snizhko, K.; Kumar, P.; Romito, A. Quantum Zeno effect appears in stages. Phys. Rev. Res. 2020, 2, 033512.

- Poghosyan, R.; Saakian, D.B. Infinite Series of Singularities in the Correlated Random Matrices Product. Front. Phys. 2021.

- Nilsson, M.; Snoad, N. Error Thresholds for Quasispecies on Dynamic Fitness Landscapes. Phys. Rev. Lett. 2000, 84, 191.

- Crow, J.F.; Kimura, M. An Introduction to Population Genetics Theory; Harper and Row: New York, NY, USA, 1970.

- Baake, E.; Wagner, H. Mutation-selection models solved exactly with methods of statistical mechanics. Genet. Res. 2001, 78, 93–117.

- Saakian, D.B.; Hu, C.-K. Solvable biological evolution model with a parallel mutation-selection scheme. Phys. Rev. E 2004, 69, 046121.

- Xie, R.; Marsili, M. A random energy approach to deep learning. J. Stat. Mech. Theory Exp. 2022, 7, 073404.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35.

- Gustafsson, F.; Hendeby, G. Some Relations between Extended and Unscented Kalman Filters. IEEE Trans. Signal Process. 2012, 60, 555.

- Galstyan, V.; Saakian, D.B. Quantifying the stochasticity of policy parameters in reinforcement learning problems. Phys. Rev. E 2023, 107, 034112.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).