Abstract

This paper presents a robust method for dealing with switching regression problems. Regression models with switch-points are broadly employed in diverse areas. Many traditional methods for switching regressions can falter in the presence of outliers or heavy-tailed distributions because they assume Gaussian errors. Outlier corruption of datasets is often unavoidable, and when misapplied, the Gaussian assumption can lead to incorrect inferences. The Laplace distribution is a longer-tailed alternative to the normal distribution and is connected with the robust least absolute deviation regression criterion. We propose a robust switching regression model with Laplace-distributed errors. To improve robustness further, we extend the Laplace switching model to a fuzzy class model and construct a robust algorithm named FCL through the fuzzy classification maximum likelihood procedure. The robustness properties and the improved resistance against high-leverage outliers are discussed. Simulations and sensitivity analyses illustrate the effectiveness and superiority of the proposed algorithm. The experimental results indicate that FCL is much more robust than the EM-based algorithm. Furthermore, the Laplace-based algorithm is less time-consuming than the t-based procedure. Diverse real-world applications demonstrate the practicality of the proposed approach.

MSC:

62-08

1. Introduction

Switching regression models are widely applied because the relationship between an outcome variable and covariates often changes appreciably at places called switch-points (SPs) or change-points. Switching regression is also referred to as change-point, multiple-phase, or segmented regression. Switching regression problems occur in many areas, such as medicine, finance, ecology, and climatology. For instance, in medical studies, the risk of a baby being born with Down Syndrome (DS) is hardly related to the mother’s age at first, but the risk increases sharply with the mother’s age after a threshold [1]. SPs are critical for signifying changes in incidence rates, which remain low for younger people but increase significantly after an age threshold [2]. Additional applications include assessing switching regressions in finance to learn where and how the market structure is altered by government interventions [3]; investigating climate changes when studying global warming [4]; and revealing danger points where the rate of species loss rises abruptly [5].

Because of the broad range of applications, many recent studies on the methods for solving switching regression issues exist. For instance, [6] proposed a procedure termed wild binary segmentation (WBS), which inherits the merit of binary segmentation and further improves its weakness. Ref. [3] extended the use of WBS to non-stationary time series. Ref. [7] proposed a new technique termed ensemble binary segmentation (EBS) to locate SPs for irregularly spaced data. Notice that these binary-segmentation-based approaches are only applicable to mean shift detection. Moreover, Ref. [8] presented a simultaneous multiscale change-point estimator (SMUCE) for estimating SPs and regression parameters with the possibility of over- and underestimating the number of SPs controlled by a multiscale test. Ref. [9] proposed an extended version, H-SMUCE, for locating changes in a heterogeneous regression model. There are more nonparametric approaches to SP problems, such as [10,11,12]. Some researchers have considered SP detection problems for auto-correlated series, such as [13,14,15]. There have been studies on the computational efficiency, such as [16,17,18].

Despite the increase in studies on the different problems of SP detection, there has been less research on robust methods in the switching regression literature. Switching regression models are usually estimated from experimental data under the assumption that errors are normally distributed (cf. [19,20]). Such assumptions are known to be sensitive to outliers. However, outlier corruption of datasets is often unavoidable because measurement errors occur during data collection and data processing [21]. When misapplied, the Gaussian assumption can lead to serious distortions in regression estimates and incorrect inferences. The impact of atypical observations on the switching regression estimates of Gaussian models is even more severe.

Two methods frequently considered for coping with outlier problems are outlier diagnosis and robust estimation. The diagnostic approach includes the detection and removal of outliers. Aggarwal [22] stated that the outlier diagnostic is indeed a subjective decision on whether a data point is distant enough from most data points to be removed. In particular, the elimination of data that are actually not outliers, as well as the retention of outliers in the dataset, may lead to less reliable inferences in the subsequent analysis. Robust estimation methods have been suggested for regression analysis [23]. These robust approaches exploit estimators that are more resistant than the least squares estimator in the presence of atypical observations. As is well-known, outliers can have considerable influence on the estimations of traditional linear regression models. The effects of outliers on the estimates of switching regression models are even more serious because the estimates of both regression parameters and SPs can be distorted. Although robust procedures are needed to estimate switching regression models, there is little research on robust estimations for switching regressions. In this study, we aim to develop a resistant algorithm for switching regressions.

M-estimators are popular in robust statistics and are defined as minimizers of the sum of a resistant loss function over all data points in a sample. Ref. [19] implemented Tukey’s biweight loss function in a penalized cost criterion for finding the optimal segmentation. Their method is only applicable to datasets with constant variance, an assumption that may not hold in many real applications. Ref. [24] combined M-estimation with the expectation and maximization (EM) algorithm to develop robust algorithms for solving multiphase regression problems. Their EM-based algorithms have the same drawback as EM in that convergence is slow. There have been recent nonparametric studies on robust procedures for switching regressions. Ref. [25] introduced a rank-based bent line regression for segmented regressions of one SP. Ref. [26] combined Muggeo’s [1] linearization procedure with rank-based estimators for continuous piecewise regressions. Ref. [27] suggested a robust Wilcoxon-type estimator for detecting a single change in mean in a short-range dependent process. These methods are restricted to continuous models [25,26] or single change detection [25,27].

Several researchers have implemented t distributions to develop a robust statistical model. For example, Ref. [23] suggested a robust clustering approach through a mixture model of t distributions. Ref. [28] introduced a robust mixture regression model of t noises. Ref. [29] detected a single change-point in a t-regression model on the basis of Schwarz Information Criterion (SIC). Ref. [30] combined Bayesian approaches with t distributions to locate changes in variances for a piecewise regression model assuming the regression coefficients are unvaried. Ref. [31] connected t distributions to construct two robust algorithms to make robust estimations for switching regression models. One concern with t-based approaches is the determination of the degrees of freedom, which can considerably affect the fit of the resulting model.

The least absolute deviation (LAD) criterion is known to be robust among a broad class of criteria. A few researchers have embedded LAD in switching regression models for making resistant inferences [32,33,34]. A robust method connected with LAD is the likelihood approach based on the Laplace distribution, which is a heavy-tailed alternative to the normal distribution. In recent years, the Laplace distribution has received attention because of theoretical developments and computational advancements in LAD regressions. Ref. [35] pointed out that the robustness properties of the Laplace distribution make it desirable in many applied research areas. Several researchers have advocated using the Laplace distribution instead of the Gaussian distribution for the robust fit of a model to a dataset (cf. [36,37,38,39]). In regression analyses, Ref. [40] proposed a robust mixture regression model based on the Laplace distribution. Ref. [41] proposed using a two-phase Laplace regression model and the Schwarz Information Criterion (SIC) to detect a single change-point. Ref. [42] employed Bayesian analysis to locate a single change in a sequence of observations having Laplace distributions. The shortcoming of these two methods is that they apply only to the detection of a single switch-point. Although the Laplace distribution has been considered in many fields for deriving resistant estimators, it is much less utilized in switching regression analyses. This may be partly because the maximizer of the Laplacian likelihood function cannot be obtained in closed form through differentiation, so an iterative numerical scheme is required.

The Laplace distribution has two advantages: its longer-than-normal tail makes it desirable in various applied research areas, and it involves fewer parameters than the t distribution, so the Laplace-based estimation procedure is simpler than the t-based method. We therefore propose a robust switching regression model with Laplace noise. To derive an explicit form of the maximum likelihood estimator (MLE) for the Laplacian model, we regard the switching regression model as a mixture model and exploit the fact that a Laplace distribution is a scale mixture of normal distributions. This greatly simplifies the computations and yields an explicit update for each individual parameter. Because the boundary between two adjoining segments is indefinite, we further embed fuzzy clustering into the switching Laplace regression model. Consequently, the fuzzy-based algorithm is not only more resistant to atypical observations but also more time-efficient.

The article will proceed as follows. In Section 2, a new approach for switching regressions is introduced. The robustness properties and the advancement of the proposed method are also discussed. In Section 3, a simulation study and a sensitivity analysis are conducted to show the effectiveness and advantages of the proposed method. Discussion and conclusions are presented in Section 4.

2. Robust Algorithms for Switching Regression Models

Suppose is a dataset randomly drawn from a switching regression of c segments and the p-dimensional predictor, and the response is denoted by and , respectively. Assume further that the relation between the response y and predictors, , is completely determined by an independent variable, say . We may assume the sample is arranged in the ascending order of and hence denote the switch-points (SPs) by their rank. Suppose the c − 1 changes in the switching regression model occur at . Define and . Given , suppose the observation is in the kth segment, the corresponding regression model can be written as

where has mean 0 and variance and all are independent , . For robust estimations, we assume the switching regression errors follow Laplace distributions with scale parameters , i.e., , , , with the density , .

Thus, the conditional distributions of are

i.e., the conditional densities are

where , , . Next, we develop a robust algorithm for switching regressions of Laplacian errors by using the expectation and maximization (EM) algorithm.
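As a worked illustration of why Laplace errors tie in with the least absolute deviation criterion, the following sketch writes out the density and the log-likelihood for a single segment. The symbols $b$ (Laplace scale), $\beta$ (coefficient vector), and $n$ (number of observations in the segment) are notation assumed here for illustration and may differ from the symbols used in Equations (1) and (2).

$$
f(\varepsilon; b) = \frac{1}{2b}\exp\!\left(-\frac{|\varepsilon|}{b}\right), \qquad \operatorname{Var}(\varepsilon) = 2b^{2},
$$

$$
\ell(\beta, b) = -n\log(2b) - \frac{1}{b}\sum_{i=1}^{n}\left|y_i - x_i^{\top}\beta\right| .
$$

For fixed $b$, maximizing $\ell$ over $\beta$ is equivalent to minimizing $\sum_i |y_i - x_i^{\top}\beta|$, which is the LAD criterion. Setting $\partial \ell / \partial b = 0$ then gives $\hat{b} = n^{-1}\sum_i |y_i - x_i^{\top}\hat{\beta}|$, the mean absolute residual.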

2.1. Robust EML Algorithm

We consider exploiting the mixture likelihood approach to make estimations for switching regressions because locating SPs is parallel to grouping data into clusters of similar objects and the mixture model is a popular framework for modelling heterogeneity in data for clustering.

Given model (1), let with be a collection of c − 1 SPs. Note that changes in switching regressions occur randomly; hence, we regard each potential collection of c − 1 SPs as a random variable denoted by :

with

satisfying

Accordingly, the sample from switching regressions is incomplete with unobserved variables , , . To use EM for estimations, we consider transferring the distribution of SP collections into the membership of observations belonging to each individual segment. Let the vector of indicator variable be defined by

where are independent and identically distributed with a multinomial distribution consisting of one draw on c segments with probabilities , respectively. We write

Given , are a collection of c − 1 SPs, then

thus,

and

Thus, the switching regression model can be viewed as a mixture model with an incomplete sample and unobservable variables . Recall , , thus the “mixed joint density” can be defined as

By Equations (1) and (2), the complete likelihood function for the Laplacian switching model is

where , are mixing proportions satisfying .

The complete log-likelihood function is

Usually an explicit maximum likelihood estimator (MLE) for is not available through the above log-likelihood. For deriving the explicit MLE by EM, we consider expressing the Laplace distribution as a scale mixture of normal distributions (this property with proof is stated as Lemma A1 in the Appendix A). Let be the latent random vector variable, such that

where denotes given . It follows that (see Lemma A1 in the Appendix A)

The complete likelihood function becomes

The complete log likelihood function can be rewritten as

In using EM, given the current estimate at the jth iteration, the calculations in the E-step simplify to calculating and , in accordance with (3) and (4), and the applications of EM for the mixture models illustrated by McLachlan and Peel [43].
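The scale-mixture representation that the derivation relies on can be checked numerically. The sketch below assumes one standard form of that representation: if W is exponential with mean 1 and, given W, the error is normal with mean 0 and variance 2b²W, then the error is Laplace with scale b. The parameterization in Lemma A1 may be written differently, and the function name and the value of `b` are illustrative only.

```python
import math
import random


def sample_laplace_via_mixture(b, n, seed=0):
    """Draw n values that follow Laplace(0, b) using a normal scale mixture."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        w = rng.expovariate(1.0)          # latent mixing variable, Exp(1)
        sd = b * math.sqrt(2.0 * w)       # conditional standard deviation
        draws.append(rng.gauss(0.0, sd))  # error | w ~ N(0, 2 * b^2 * w)
    return draws


b = 1.5  # assumed Laplace scale for the check
eps = sample_laplace_via_mixture(b, 200_000)

# For Laplace(0, b): E|eps| = b and E[eps^2] = 2 * b^2.
mean_abs = sum(abs(e) for e in eps) / len(eps)
second_moment = sum(e * e for e in eps) / len(eps)
print(mean_abs, second_moment)
```

With a large sample, the two printed moments should land close to b and 2b², which is what makes the conditionally normal complete-data likelihood usable in the E- and M-steps.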

An estimate of is given by

with

where .

By Lemma A1 in the Appendix A,

Then, in the M step, we maximize

by taking partial derivatives of with respect to all parameters to derive an explicit solution for . Based on the above, we propose a robust EML algorithm for estimating switching regression models. The workflow of EML includes three parts. First, initialize the parameter estimates. Second, update the estimates by EM. Finally, obtain the estimates of SPs.

EML Algorithm

- (1) Given an initial parameter estimate, execute the following two steps at the (j + 1)th iteration.

- (2) E-step: Compute and by Equations (7) and (8).

- (3) M-step: Update the parameter estimates, where is a diagonal matrix with the ith diagonal element .

- (4) Repeat steps (2) and (3) until convergence is obtained.
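For a single segment with one predictor, the E- and M-steps above reduce to iteratively reweighted least squares: under the normal scale-mixture form of the Laplace distribution, the E-step weight of each observation is proportional to 1/|residual|, and the M-step is a weighted least squares fit. The sketch below is a simplified illustration of that idea, not the full EML algorithm: it has no mixing proportions and no switch-points, and the function name, the `eps` guard against zero residuals, and the toy data are assumptions made here.

```python
def lad_line_irls(x, y, max_iter=500, tol=1e-10, eps=1e-8):
    """Fit y ~ a + b*x under Laplace errors by EM-style reweighting."""
    n = len(x)
    w = [1.0] * n  # unit weights: the first pass is ordinary least squares
    a = b = 0.0
    for _ in range(max_iter):
        # M-step: closed-form weighted least squares for intercept and slope.
        s0 = sum(w)
        s1 = sum(wi * xi for wi, xi in zip(w, x))
        s2 = sum(wi * xi * xi for wi, xi in zip(w, x))
        t0 = sum(wi * yi for wi, yi in zip(w, y))
        t1 = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
        det = s0 * s2 - s1 * s1
        a_new = (t0 * s2 - s1 * t1) / det
        b_new = (s0 * t1 - s1 * t0) / det
        converged = abs(a_new - a) + abs(b_new - b) < tol
        a, b = a_new, b_new
        if converged:
            break
        # E-step: weights proportional to 1/|residual|, floored by eps.
        w = [1.0 / max(abs(yi - a - b * xi), eps) for xi, yi in zip(x, y)]
    # Laplace scale estimate: mean absolute residual at the final fit.
    scale = sum(abs(yi - a - b * xi) for xi, yi in zip(x, y)) / n
    return a, b, scale


# Toy data: nine points exactly on y = 1 + 2x plus one gross outlier.
x = [float(i) for i in range(10)]
y = [1.0 + 2.0 * xi for xi in x]
y[9] = 100.0
intercept, slope, scale = lad_line_irls(x, y)
print(intercept, slope, scale)
```

On this toy data the fit should settle on the line through the nine clean points, whereas an ordinary least squares fit is pulled toward the outlier.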

After the EML algorithm converges, the c − 1 SPs are estimated by

2.2. Robust FCL Algorithm

We consider extending the crisp model to a fuzzy class model to advance the accuracy of estimations and speed up the rate of convergence. Utilizing the idea of [44], we treat each potential SP collection as a partition of data with a fuzzy membership and transfer them into the pseudo-memberships of data lying in each individual subdivision. Consider a switching regression model of c − 1 SPs described by (1). Let , be a collection of SPs with a fuzzy membership under the constraints and . Let represent the fuzzy membership of lying in the kth segment. Referring to [44], we describe by as

Accordingly, the switching regression model becomes a fuzzy class model with a fuzzy c-partition, , , , satisfying . Thus, a fuzzy classification maximum likelihood (FCML) function (c.f. [45]) can be formulated as

subject to , , m > 1, , , , , . The FCML procedure (c.f. [45]) is serviceable for deriving the estimates for regression parameters. Assume the regression errors have Laplace distributions, i.e., , , . That is,

Similarly, express the Laplace density as a scale mixture of normal distributions with a latent vector introduced:

We have the FCML objective function

Replace with given by (8) such that

Then, take the derivative of over , and with a Lagrange multiplier under the constraints . We derive the updated estimates

where is the matrix in having as its ith row; Y is the vector in having yi as its ith component, and is a diagonal matrix with the kth diagonal element . Next, to update the fuzzy membership , we consider a new objective function through the concepts of fuzzy sets and fuzzy c partitions. Given as a collection of SPs, let

where . Define the objective function

with the constraints and . We derive the updated equation

Based on the above, we propose the FCL algorithm as follows.

The workflow of FCL includes three parts. First, initialize the parameter estimates. Second, update the estimates based on the FCML procedure and the concepts of fuzzy sets with fuzzy c partitions. Finally, obtain the estimates of SPs.

FCL Algorithm

- (1) Given the initial value of , , we compute by (12), where and . At the (j + 1)th iteration, we execute the following three steps.

- (2) Compute , , , , by (15)–(17).

- (3) Update with , by (18) and (19).

- (4) Update and by (12) and (8).

- (5) Repeat steps (2)–(4) until convergence is obtained.
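The membership update in step (3) follows the usual pattern for fuzzy c-partitions: minimize a weighted cost subject to the memberships summing to one. The sketch below shows the generic update that results from a Lagrange multiplier argument, assuming positive costs and a fuzzifier m > 1. It is not a transcription of Equations (18) and (19); the function name and the `costs` argument are hypothetical.

```python
def fuzzy_memberships(costs, m=2.0):
    """Minimize sum_k u_k**m * cost_k subject to sum_k u_k = 1 and u_k >= 0."""
    if m <= 1.0:
        raise ValueError("fuzzifier m must exceed 1")
    # A zero cost takes all the membership (shared equally among ties).
    zeros = [k for k, c in enumerate(costs) if c <= 0.0]
    if zeros:
        share = 1.0 / len(zeros)
        return [share if k in zeros else 0.0 for k in range(len(costs))]
    # Stationarity gives u_k proportional to cost_k ** (-1 / (m - 1)).
    inv = [c ** (-1.0 / (m - 1.0)) for c in costs]
    total = sum(inv)
    return [v / total for v in inv]


u = fuzzy_memberships([1.0, 2.0, 4.0], m=2.0)
print(u)  # memberships proportional to 1, 1/2, 1/4
```

Smaller costs receive larger memberships, and as m approaches 1 the memberships approach a crisp assignment, which is the sense in which the fuzzy class model generalizes the crisp one.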

After the algorithm converges, the SPs are estimated by

2.3. The Robustness and Advancement of FCL

We demonstrate the capability of FCL in resisting outliers through the influence function and gross error sensitivity. M-estimators are popular in statistics for estimations, which are derived by minimizing an objective function of the form

where is a function defined for measuring the difference between the true distribution and the one assumed. An M-estimator is usually the solution to the equation

A sample mean is a popular M-estimator associated with the loss function . A maximum likelihood estimator is also an M-estimator with , and is the density of the population data drawn from. The influence curve (IC) is helpful for assessing the relative influence of an individual observation on an estimate. The IC of an M-estimator is proportional to its function

assuming the density function exists. An estimator might be seriously distorted by an outlier when the estimator has an unbounded IC. The gross error sensitivity g* defined as

This is an important tool to measure the robustness of an estimator. can be viewed as the worst effect that an additional tiny point might have on the associated estimator.

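For the proposed method, assuming errors have Laplace distributions, the estimator is essentially an M-estimator associated with the absolute deviation loss function $\rho(x) = |x|$.

The corresponding $\psi$ function is $\psi(x) = \operatorname{sign}(x)$ for $x \neq 0$, which is bounded by 1 in absolute value.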

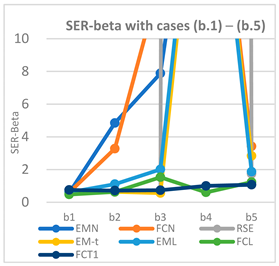

We also find that the loss function associated with normal regressions is the squared errors , thus, . Moreover, the Huber loss and Tukey’s biweight function are also frequently used in statistics for robust estimations. We present these four ICs in Figure 1 for comparisons. Clearly, the IC of squared errors (normal regressions) is unbounded; that is, its gross error sensitivity . Furthermore, even though x deviates from 0 only to a small degree, the value of IC alters remarkably. This implies that the normal-based estimators are sensitive to outliers. On the other hand, the other three ICs in Figure 1 are bounded. The IC associated with Laplace regressions is bounded by 1, which is always less than that of Huber’s loss. On the other hand, the estimator from Tukey’s biweight loss is the most resistant against extreme outliers, but the Laplace-based estimator is more favorable in the presence of mild outliers (in the general range of 1 < |x| < 3, as shown in Figure 1). Thus, from the viewpoint of robust statistics, the Laplace-based estimator is robust enough for atypical observations.

Figure 1.

IC curves of M-estimators with squared loss (Normal), absolute deviation loss (Laplace), Huber loss, and Tukey’s biweight loss.
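The comparison in Figure 1 can be reproduced approximately with the standard ψ functions of the four losses. In the sketch below, ψ is the derivative of the loss ρ, and the gross error sensitivity is approximated by the largest |ψ| over a finite grid. The squared loss is taken as x²/2 so that ψ(x) = x, and the tuning constants 1.345 (Huber) and 4.685 (Tukey) are the commonly used defaults; these choices are assumptions and may not match the constants behind Figure 1.

```python
def psi_squared(x):
    """psi for the squared loss x**2 / 2 (normal errors): unbounded."""
    return x


def psi_absolute(x):
    """psi for the absolute deviation loss |x| (Laplace errors): sign(x)."""
    return float((x > 0) - (x < 0))


def psi_huber(x, k=1.345):
    """psi for the Huber loss: linear near zero, clipped at +/- k."""
    return max(-k, min(k, x))


def psi_tukey(x, c=4.685):
    """psi for Tukey's biweight loss: redescends to zero beyond |x| = c."""
    if abs(x) > c:
        return 0.0
    u = x / c
    return x * (1.0 - u * u) ** 2


# Finite grid on [-10, 10]; the true supremum for psi_squared is infinite.
grid = [i / 100.0 for i in range(-1000, 1001)]


def gross_error_sensitivity(psi):
    """Largest |psi| over the grid, a stand-in for sup over all x."""
    return max(abs(psi(x)) for x in grid)


for name, psi in [("squared", psi_squared), ("absolute", psi_absolute),
                  ("huber", psi_huber), ("tukey", psi_tukey)]:
    print(name, gross_error_sensitivity(psi))
```

The value for the squared loss keeps growing as the grid is widened, while the other three stay fixed, which is the bounded versus unbounded distinction drawn in the text.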

Like many M-estimators in linear regression, the proposed Laplace-based method is robust to outliers in the y-direction but not to outliers with extremely high leverage. When such high-leverage outliers are present, the proposed method may fail to produce adequate estimates, and hence, modifications are required. An obvious approach is to find those extremely high leverage points and then discard them. Referring to linear regression analyses, the leverage score of observation is described by

where , , and are the sample mean and the sample covariance of s, respectively. Considering and are easily affected by outliers, we replace them with the minimum covariance determinant (MCD) estimators (cf. [46]), computed over all subsets of h elements taken from the dataset with h = (n + p + 1)/2. By means of the Fast MCD algorithm (cf. [47]), we trim the dataset by discarding those data whose leverages rank in the top . As to the selection of , a small value such as 0.1 is usually sufficient in practice, since the proposed method is resistant to most outliers and is seriously affected only by a minority of outliers with extremely high leverage.
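The trimming step can be illustrated for a single predictor. The sketch below deliberately swaps in a simpler robust pair, the median and the median absolute deviation (MAD), in place of the MCD estimators, because a full Fast MCD implementation is beyond a short example. The function name and the `alpha` argument (the cut proportion) are hypothetical.

```python
import math
import statistics


def trim_high_leverage(x, alpha=0.1):
    """Return the indices kept after dropping the top alpha share of robust distances."""
    med = statistics.median(x)
    mad = statistics.median([abs(v - med) for v in x])
    spread = mad if mad > 0 else 1.0  # fall back to raw deviations if MAD is 0
    distance = [abs(v - med) / spread for v in x]
    n_drop = math.floor(alpha * len(x) + 1e-9)  # number of points to discard
    # Rank observations from the largest robust distance to the smallest.
    ranked = sorted(range(len(x)), key=lambda i: distance[i], reverse=True)
    dropped = set(ranked[:n_drop])
    return [i for i in range(len(x)) if i not in dropped]


x_values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 100.0]
kept = trim_high_leverage(x_values, alpha=0.1)
print(kept)  # index 9 (x = 100) is discarded
```

The estimation algorithm would then be run on the retained observations only. With several predictors, the same ranking idea applies to robust Mahalanobis-type distances built from robust location and scatter estimates.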

3. Experiments and Applications

3.1. Simulation Study

The efficacy and superiority of the proposed algorithms were examined through comparisons with existing methods. The methods employed include

- EMN: EM algorithm with normally distributed errors [44],

- FCN: FCML algorithm with normally distributed errors [44],

- RSE: Rank-based segmented method [26],

- EM-t: EM robust algorithm using Tukey’s biweight function [24],

- EML: The proposed EML algorithm,

- FCL: The proposed FCL algorithm,

- FCT1: FCML algorithm with t distributed errors and the degrees of freedom equal to 1 [31].

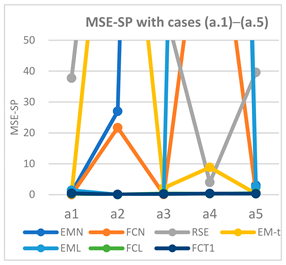

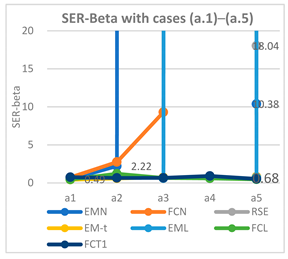

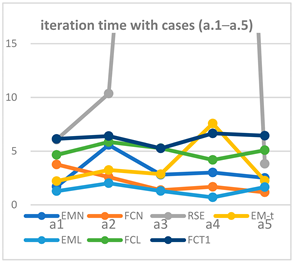

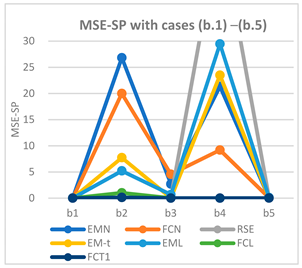

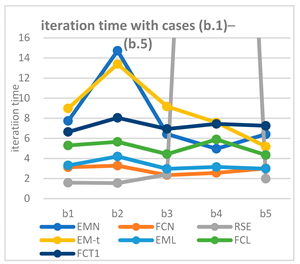

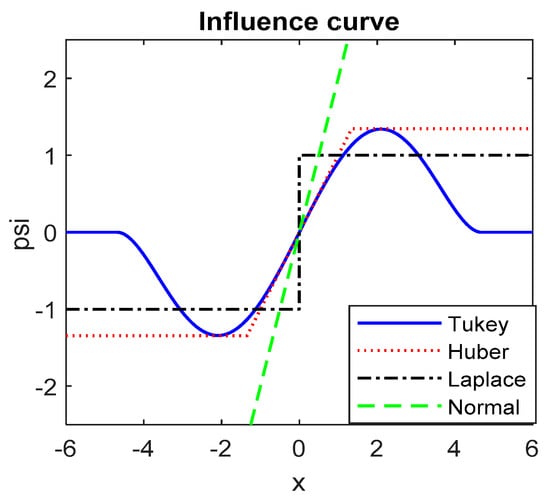

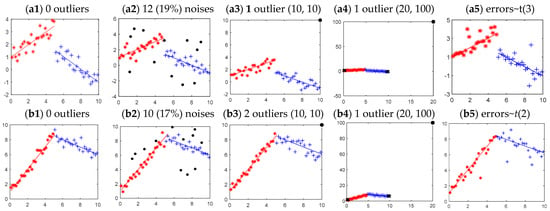

We evaluated the performance of each method under five situations for each model, as shown in Figure 2 and Figure 3. A random sample of size 50 was generated according to each respective model setting with regression errors , i = 1, 2, 3. Data were generated as follows. First, we evenly partitioned the interval [0, 10] into c non-overlapping subintervals, , with and . Next, ni values were generated from the uniform distribution as values of the independent variable x. Then, the values of y were derived according to the model setting with errors simulated from , i = 1, 2, 3. We set the stopping criterion = 0.000005 and the initial membership of each possible combination of c − 1 SPs, , equal to , respectively. The number of replicates in each simulation run was 1000 and 200 for models of one and two switch-points, respectively. The measures for comparing the performances were (1) the mean square error (MSE) of the SP estimate (MSE-SP), ; (2) the sum of MSEs over all the estimates of regression coefficients (SER-Beta), , , where is the estimate of true in the rth replication; (3) the time (in seconds) taken by each simulation run (time).
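The data-generating steps above can be sketched for a model with one switch-point. The coefficient pairs (1, 0.5) and (4, −0.5) are taken from the Figure 2 caption for Model (a) and read here as (intercept, slope). The equal split of the 50 points between the two subintervals, the normal errors with standard deviation `sigma`, and the function names are assumptions, since the exact settings are not recoverable from the text.

```python
import random


def simulate_one_sp(n=50, sp=5.0, seg1=(1.0, 0.5), seg2=(4.0, -0.5),
                    sigma=0.5, seed=0):
    """Simulate (x, y) pairs from a two-segment model on [0, 10] split at sp."""
    rng = random.Random(seed)
    n1 = n // 2  # assumed: equal number of points per subinterval
    # Uniform x-values within each subinterval, arranged in ascending order.
    xs = sorted(rng.uniform(0.0, sp) for _ in range(n1))
    xs += sorted(rng.uniform(sp, 10.0) for _ in range(n - n1))
    data = []
    for i, x in enumerate(xs):
        b0, b1 = seg1 if i < n1 else seg2
        data.append((x, b0 + b1 * x + rng.gauss(0.0, sigma)))
    return data


def mse_sp(estimates, truth):
    """Mean square error of switch-point estimates over replications."""
    return sum((e - truth) ** 2 for e in estimates) / len(estimates)


sample = simulate_one_sp()
print(len(sample), sample[0], sample[-1])
```

Because the sample is sorted by x, the true switch-point can be expressed by rank (25 under the equal-split assumption), and `mse_sp` can be applied to the rank estimates collected over the replications.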

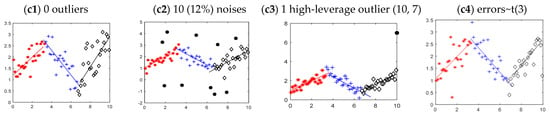

Figure 2.

Plots of Models (a) and (b), true parameters and one simulated series from each respective contamination scenario. Model expression , ; i = 1, 2; Model (a): 1 SP discontinuous model, *: (1, 0.5),+: (4, −0.5), i = 1, 2; Model (b): 1 SP continuous model, *: (1, 1.5), +: (11, −0.5), i = 1, 2.

Figure 3.

Plots of Model (c), true parameters and one simulated series from each respective contamination scenario. Model expression , ; i = 1, 2, 3; *: (1, 0.5), +: (4.3, −0.5), ◇: (−2.4, 0.5), i = 1, 2, 3.

We first considered the comparisons of one SP model in which Model (a) breaks at SP but Model (b) is continuous. For easy understanding, Figure 2 presents the true model setting and one of the simulated series including simulated data, contaminations, and fitted lines for each individual model. The five contamination scenarios considered were the presence of (1) no contaminations, (2) background noises, (3) high-leverage contaminating points, (4) extremely high leverage points, (5) errors having a heavy-tailed distribution. Table 1 and Table 2 report the estimation results of Models (a) and (b), respectively, with both the numerical and graphical presentations.

Table 1.

MSE of the switch-point and regression parameter estimates for Model (a) under scenarios (a1)–(a5).

Table 2.

MSE of the switch-point and regression parameter estimates for Model (b) under scenarios (b1)–(b5).

We see from Table 1 and Table 2 that, in the absence of contaminations (cases a1 and b1), all the methods except RSE provided satisfactory estimates for both models. RSE produced inadequate estimates with a large MSE-SP and SER-Beta for Model (a) because it constrains the two estimated regression lines to be connected, whereas Model (a) is discontinuous at the SP. On the other hand, the proposed FCL performed a little better than EMN and FCN in case (b1), where the data were uncontaminated and normally distributed. In the contaminated scenarios, the normal-based methods, EMN and FCN, were seriously affected and resulted in a large MSE-SP and SER-Beta. In contrast, the proposed FCL produced adequate and competitive estimates for both models under different types of contaminations, such as cases a2, a3, a5, b2, b3, and b5. For data contaminated by extremely high-leverage outliers, such as cases a4 and b4, the modified version, FCLtr with a cut proportion of 0.1 (i.e., removing those data having a leverage ranked in the top 10%), provided adequate estimates of the regression model. Moreover, Table 1 and Table 2 show that FCL and FCT1 were competitive in estimation precision, but the proposed FCL was generally less time-consuming. Overall, FCL compared favorably with the other methods in these one-SP settings.

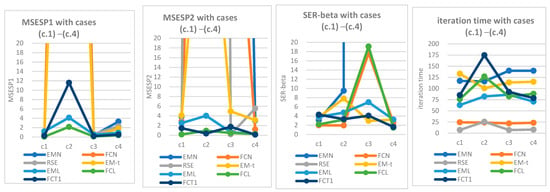

Next, we investigated the estimations for Model (c) with two switch-points. The model setting and contamination scenarios are illustrated in Figure 3, together with one simulated series for each scenario. Estimation results are summarized in Figure 4. As in the previous investigations, all the methods produced adequate estimates for clean data in case (c1). Notice that RSE provided reasonable estimates of SPs in cases (c1) and (c3), as shown by the small MSE-SP, but it failed to give fair estimates of the regression coefficients, as shown by the extremely large SER-Beta. Thus, RSE did not yield good estimates of SPs and regression parameters simultaneously. Furthermore, the estimates of RSE were not good enough for data in the presence of background noises (cases c2 and c4). On the other hand, as expected, the normal-based methods, EMN and FCN, were severely influenced by atypical observations or long-tailed distributions (cases c2–c4), with large MSE-SP and SER-Beta. Both FCL and FCT1, which are based on fuzzy clustering, performed better than EML with crisp clustering. In particular, the performance of FCL was better than or at least comparable with that of FCT1, with smaller MSE-SP and SER-Beta in most scenarios. Moreover, in the case where data were contaminated by extremely high-leverage outliers, such as point (20, 100), the modified version FCLtr was useful for deriving adequate estimates. We do not show the estimation results for Model (c) under extremely high-leverage outliers here because they were similar to those derived from (c1), as with cases a4 and b4 shown in Table 1 and Table 2 for Models (a) and (b).

Figure 4.

MSE of the switch-point and regression estimates for Model (c) under scenarios (c1)–(c4).

In summary, the proposed switching regression model with Laplace-distributed errors was much more resistant than normal-based models when atypical observations or heavy tails were present in the dataset. Embedding fuzzy clustering further improved the ability of the algorithm to resist contaminations. The proposed FCL worked well whether or not abnormal observations were present in the data. In particular, FCL generally performed better than the existing methods, providing less biased estimates of SPs and smaller mean square errors of fitted regression models for models with a single SP or multiple SPs under different kinds of contaminations. Although FCT1 and FCL were competitive in estimation accuracy, FCL took less time in iterations than FCT1. Furthermore, FCL, being based on the Laplace distribution, avoids the need to choose the degrees of freedom. The capability of FCL to withstand high-leverage points was fairly good, and this is important because high-leverage outliers may drastically distort the estimations. In particular, for data in the presence of extremely high-leverage points, the modified version, FCLtr with a cut proportion of 0.1, usually provided satisfactory estimates.

3.2. Sensitivity Study with a Real Dataset

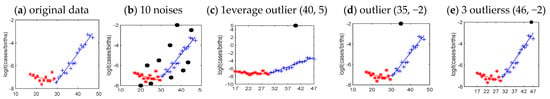

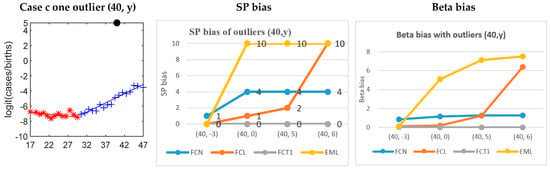

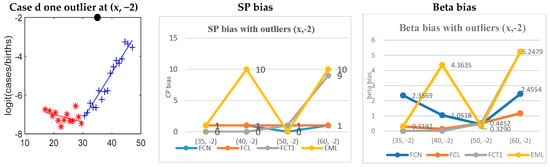

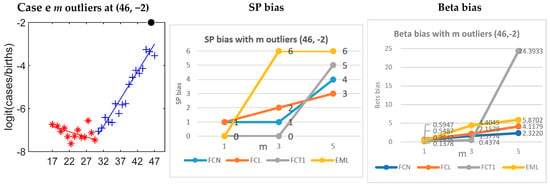

We conducted a sensitivity study to investigate the tolerance of the proposed algorithm to different types of contamination. A real dataset often used for switching regression modeling is the Down Syndrome (DS) data from [48]. The DS data were collected to study how the risk of DS varies with the mother’s age. The main interest was to find the threshold age of mothers above which the risk of DS increases drastically. We applied FCN, FCL, FCT1, and EML to the DS data to compare their capability of withstanding different types of outliers. As in [49,50], we assessed these methods using the DS data with additional outliers. D’Urso et al. [49] stated that, for a robust algorithm, the estimates derived from contaminated data should be close to those from the original data. Similar to D’Urso et al., we evaluated the effect of additional outliers on the estimations by examining the difference between the two estimates from data with and without contaminations. The five situations considered were (a) original data; (b) m background noise points, m = 6, 10; (c) one leverage outlier at (40, y), y = −3, 0, 5, 6; (d) one outlier at (x, −2), x = 35, 40, 50, 60; and (e) m high-leverage outliers at (46, −2), m = 1, 3, 5 (see Figure 5). The noises in case (b) were generated as follows: m x-values were randomly generated from the uniform distribution U(17, 45), and then y was generated based on the fitted lines from (a) with regression errors following N(0, 5²). We report the experimental results with graphical presentations in Figure 6, Figure 7, Figure 8 and Figure 9.

Figure 5.

Plots of the estimated models for DS data with different kinds of additional outliers.

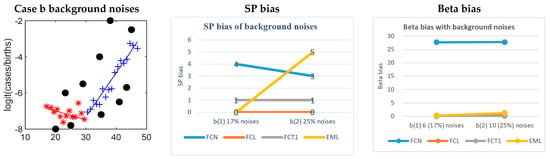

Figure 6.

Comparisons of cases (a) and (b); data in case (b) are contaminated by additional background noises with cases b(1) and b(2) including 6 (17%) and 10 (25%) noises respectively.

Figure 7.

Comparisons of cases (a) and (c); data in case (c) are contaminated by one outlier (40, y), y = −3, 0, 5, 6, respectively.

Figure 8.

Comparisons of cases (a) and (d); data in case (d) are contaminated by one outlier (x, −2), x = 35, 40, 50, 60, respectively.

Figure 9.

Comparisons of cases (a) and (e); data in case (e) are contaminated by m high-leverage outliers (46, −2), m = 1, 3, 5.

To measure the effect of contaminating data on the estimations, we adopted the idea of Maronna et al. [50] to evaluate the influence of m additional data points on the estimates of SP and regression coefficients by the difference and with

where and represent the original dataset and the set with additional data points, respectively; , and , are the estimates of SP and regression coefficients obtained from the original and the contaminated dataset, respectively. We denote the difference and as the SP bias and the Beta bias, respectively, in Figure 6, Figure 7, Figure 8 and Figure 9.

We first evaluated the effect of background noise on the four methods. Figure 6 shows that FCN was noticeably affected, with large differences in both the estimated SP and the estimated betas in cases b(1) and b(2). EML worked well in case b(1), where the data were contaminated by 6 (17%) background noise points, but it failed to provide reasonable estimates when the number of noise points increased to 10 (25%). By contrast, FCL and FCT1 were barely affected, since their estimation biases in both cases b(1) and b(2) were very small (see Figure 6).

We then examined the robustness of the four algorithms with respect to outliers in the y-direction (see Figure 7). We first added an outlier at (40, −3) to the data and then moved y to 0, 5, and 6, one at a time. As y increased, the point (40, y) moved farther from the original data. FCT1 was the most robust, with very small SP and Beta biases and almost no change in any of the estimates. FCL was also very resistant until the contaminating point moved to (40, 5), which was more than 3.7 standard deviations away from the original data. By contrast, FCN and EML were heavily affected by the outlier (40, y) for some of the values of y considered.

We investigated the resistance of the estimators against an outlier at (x, −2) with varied x (x = 35, 40, 50, 60) (see Figure 8). FCL was the most robust: it was only slightly affected in all scenarios as x moved from 35 to 60. In contrast, FCN was not resistant in any of the four situations, and FCT1 was badly affected when x moved to 60. Finally, we investigated the robustness of the estimators in the presence of several high-leverage outliers at the same location (46, −2) (see Figure 9). FCL and FCT1 provided reasonable estimates when the number of high-leverage outliers was no more than 3. With 5 additional high-leverage outliers at (46, −2), FCL was more tolerant than FCT1, showing much smaller SP and Beta biases.

In summary, the sensitivity analysis showed that the Laplace-based FCL and EML were more robust than the normal-based FCN in the presence of different types of contamination. Furthermore, FCL always performed better than EML, which indicates that fuzzy clustering helped to increase the ability to withstand outliers. FCL was generally competitive with FCT1 and produced acceptable estimates in the presence of different types of noisy points. Specifically, FCL was more robust than the other methods to high-leverage outliers (data points with fairly large values in the x-direction). Robustness to high-leverage outliers is important because high-leverage points are very likely to distort the estimates.

3.3. Real Applications

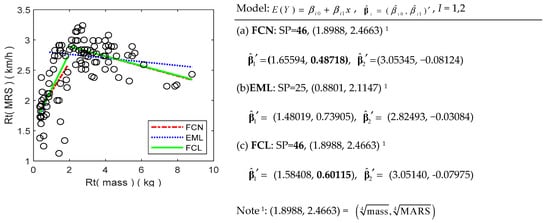

Example 1. We demonstrated the practicability of the proposed FCL using a dataset of 107 land mammals taken from [51] to study the relation between maximal running speed (MRS) and body size. Neither the largest nor the smallest mammals run fastest; thus, the relationship between running speed and body size should change at some point. Ref. [52] pointed out that animals are constructed with similar elasticity, and that the fourth roots of MRS and body mass are linearly dependent. Figure 10 shows the linear dependence of $(\mathrm{MRS})^{1/4}$ on $(\mathrm{mass})^{1/4}$, with a slope change in the linear relation. Furthermore, some exceptionally low points are present on the lower left-hand side. Ref. [25] identified the three slowest points as outliers based on Grubbs’ test. These points are likely to pull the left line down; that is, the slope of the left line may be underestimated. Figure 10 illustrates that EML produced an SP estimate of 25 and a poorly fitting line in the right-hand segment owing to the influence of the low points in the lower left corner. FCL and FCN gave an identical SP estimate of 46, but the slope derived by FCN is clearly lower than that derived by FCL. This means that FCL is more resistant to the outliers at the bottom left than EML and FCN. More importantly, the lines fitted by FCL follow the data well. Thus, FCL was tolerant of atypical observations while still providing a well-fitting model.

Figure 10.

Estimated SP and regression coefficients of FCN, EML, and FCL using MARS data.

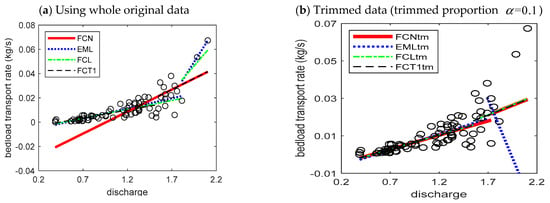

Example 2. We applied the proposed method to a dataset from https://doi.org/10.2737/RDS-2007-0004 (obtained on 26 May 2017) to investigate the relationship between the bedload transport rate (kg/s) and the discharge rate. Bedload transport refers to sediment transport along a streambed. The sediment transport rate is usually decomposed into two phases according to speed, from slow (Phase I) to fast (Phase II) (cf. [53]). The switch-point is important for understanding where and how the transport rate changes. Ref. [53] remarked that two-phase models are suitable and sufficient for describing bedload transport. Figure 11 shows that the bedload transport rate increased with the water discharge and rose much faster for discharge rates above approximately 1.5 $\mathrm{m}^3/\mathrm{s}$. Moreover, Figure 11 exhibits two abnormal points in the upper right-hand section. Ref. [25] determined through Grubbs’ test that these two points are outliers. In particular, they have a significant influence on the estimators because of their high leverage. Figure 11a shows that the SP estimates derived by FCN, EML, and FCL were not reasonable. The SP estimated by FCT1 seems acceptable, but the fitted line on the right is pulled up by the two high-leverage outliers.

Figure 11.

Fitting results of the four methods with original and trimmed data.

We employed the trimmed versions of the four methods with the trimming proportion to alleviate the influence of leverage outliers. Figure 11b and Table 3 show that FCN and EML still did not provide reasonable estimates. In contrast, the trimmed versions FCLtr and FCT1tr gave very close estimates. In particular, their identical SP estimate, at a discharge rate of 1.54858, was close to the SP of 1.539 derived by [25].

Table 3.

Estimated models derived from the four methods with original and trimmed data.

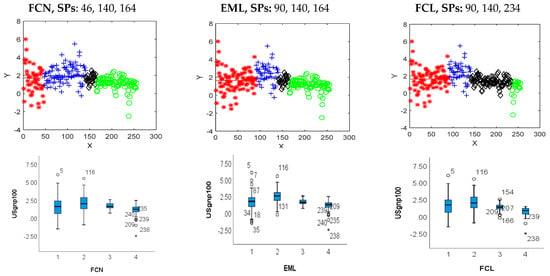

Example 3. We considered the changes in the GNP time series published on the web page of the Federal Reserve Bank of St. Louis (http://research.stlouisfed.org/fred2/series/GNP accessed on 10 August 2022). The quarterly data are seasonally adjusted and presented in billions of dollars. Ref. [3] analyzed the series from 1949:1 to 2013:1 through three methods and found that three changes may have occurred around points 18, 134, and 234. We investigated the same dataset as [3] and likewise examined the first difference of the natural logarithm of the GNP series with respect to its linear trend. The box plots and descriptive statistics of the GNP data in Figure 12a indicate that the dataset contained several outliers and may have a long-tailed distribution, given the large kurtosis and the small p-values of the two normality tests. Hence, based on the extensive simulation results, the proposed FCL should be more suitable for the GNP data than FCN and EML because of its better robustness. Figure 13 presents the estimation results derived by FCL, EML, and FCN. The last two switch-points detected by FCL, 140 and 234 (corresponding to 1984:1 and 2007:3), were near those detected by [3] (134 and 234, i.e., 1982:3 and 2007:3). The second change point, 140 (1984:1), obtained by FCL indicated the start of the Great Moderation, a period when the United States experienced decreased macroeconomic volatility until the financial crisis in 2007. Furthermore, the third change point, 234 (2007:3), signified the reversal of the Great Moderation, with growth rates becoming negative because of the recent economic recession (cf. [54]). Moreover, the first change at 90 (1971:3) produced by FCL was also critical in US financial history. The first SP (1971:3) corresponded to the collapse of the Bretton Woods Agreement and System and the start of the 1970s recession, a period of economic stagnation in many Western countries. (Note that the Bretton Woods Agreement and System had a lasting influence on international currency exchange and trade through its development of the IMF and World Bank (cf. [55]).) This example illustrates that FCL can detect significant changes in the US economy even when the data are not normally distributed and contain numerous outliers. Therefore, FCL is robust in the presence of long tails and atypical observations. More importantly, all three switch-points estimated by FCL were reasonable and interpretable in light of US economic conditions.
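The preprocessing mentioned in this example, taking the first difference of the log series and checking for heavy tails, can be sketched as follows. The helper names and the input values are hypothetical (the numbers are made up and are not GNP figures), and the moment-based kurtosis shown here is only one of several common definitions.

```python
import math


def log_first_difference(series):
    """Return log(x_t) - log(x_{t-1}) for t = 2, ..., n (approximate growth rates)."""
    return [math.log(curr) - math.log(prev) for prev, curr in zip(series, series[1:])]


def kurtosis(values):
    """Moment-based kurtosis m4 / m2^2; it equals 3 for a normal distribution."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m4 / m2 ** 2


# Made-up quarterly levels, not actual GNP figures
levels = [100.0, 110.0, 121.0, 120.0, 132.0]
growth = log_first_difference(levels)
```

A kurtosis well above 3 on the differenced series would point toward a long-tailed error distribution, which is the situation a Laplace-based fit is meant to handle.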

Figure 12.

Plots and descriptive statistics of USGNP data.

Figure 13.

Estimations for USGNP data using FCL, EML, and FCN.

4. Conclusions and Discussion

This article introduced a new robust procedure for switching regression models based on the Laplace distribution. The least absolute deviation (LAD) criterion is often used in regression for making robust inferences, and a robust procedure related to LAD is the likelihood approach using Laplace distributions. The Laplace distribution provides a longer-tailed alternative to the normal distribution. In parallel, theoretical developments and computational advances related to the Laplace distribution have made it popular for building robust statistical models. These advantageous properties make Laplace-based switching regression models resistant to abnormal observations. Moreover, Laplace distributions have fewer parameters than t-distributions; thus, the estimation procedures are simpler than t-based estimation. The switching regression model is regarded as a mixture model with switch-points as unobserved variables, so the EM algorithm can be employed to estimate the switch-points and regression parameters. The computation of EM was simplified by writing the Laplace distribution as a scale mixture of normal distributions. The Laplace-based switching model was further extended to a fuzzy class model, and an algorithm named FCL was proposed through fuzzy clustering procedures. To make the proposed method more tolerant of high-leverage outliers, a trimmed version of FCL was proposed that first removes a moderate number of high-leverage points and then applies FCL to the remaining data. Sensitivity analyses demonstrated the capability of the proposed FCL to resist outliers and long-tailed distributions. Furthermore, FCL was efficient in that it performed as well as the normal-based algorithm (FCN) for normally distributed data without contamination. The simulation study and sensitivity analyses indicated that the proposed FCL was effective and superior to the other methods considered. We observed that the estimators derived from the Laplace-based algorithm (FCL) and the t-based algorithm (FCT1) were generally comparable in estimation precision, but FCL was always more time-efficient than FCT1. Simulation results also showed that the trimmed version of FCL was useful for alleviating the effect of outliers with extremely high leverage.

When using the trimmed FCL, one needs to determine the percentage of high-leverage data to be trimmed. In general, outliers with extremely high leverage rarely make up more than 10% of the data. Based on our experiments, one may set the proportion to 5% initially and then increase it gradually if required. The choice of the trimmed proportion affects the efficacy and efficiency of the trimmed FCL. Thus, a method based on formal statistical tests is needed for making an objective decision on whether a datum is an outlier. In fact, data with high leverage may present small residuals in the fit of a regression model; in such situations, those data can provide helpful information for fitting the model. Thus, further research on connecting the leverage of the data with the resulting residuals may be useful for improving the precision of the estimates from the trimmed method. In addition, the Laplacian switching regression model can be readily generalized by using the asymmetric Laplace distribution so that the new method will also be resistant in the presence of data following asymmetric distributions.
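The trimming step can be illustrated with a minimal sketch. It assumes the classical hat-matrix leverage of a simple linear regression as the leverage measure; the paper's trimmed FCL may rely on a different (for example, robust) measure, and the function names and toy data below are hypothetical.

```python
def leverages(xs):
    """Hat-matrix diagonal for simple linear regression with an intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return [1.0 / n + (x - mean_x) ** 2 / sxx for x in xs]


def trim_high_leverage(points, proportion=0.05):
    """Drop the floor(proportion * n) points with the largest leverage."""
    h = leverages([x for x, _ in points])
    n_trim = int(proportion * len(points))
    ranked = sorted(range(len(points)), key=lambda i: h[i], reverse=True)
    dropped = set(ranked[:n_trim])
    return [p for i, p in enumerate(points) if i not in dropped]


# Toy data: 20 ordinary points plus one point far out in the x-direction
data = [(float(x), 2.0 * x) for x in range(1, 21)] + [(100.0, -2.0)]
kept = trim_high_leverage(data, proportion=0.05)
```

Starting from a proportion of 0.05 and raising it gradually, as suggested above, amounts to calling `trim_high_leverage` with increasing `proportion` and refitting on the retained points each time.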

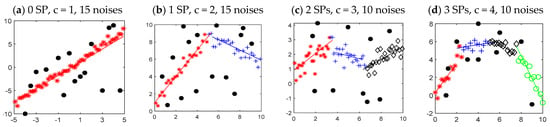

Another concern regarding the proposed method is the determination of the number of segments (subdivisions). The decision on the number of segments is crucial for deriving adequate estimates of switching regression models. It is challenging to establish a theoretical statistical test for determining the number of segments because of the failure of regularity conditions and the limited knowledge of the distribution of likelihood-based tests. Many studies have used information criteria such as the Bayesian information criterion (BIC) or test-like methods for selecting the number of SPs. Here, we used the idea of [56] to observe the successive changes in indexes that evaluate the fit of the estimated regression model: the change in the residual sum of squares (RSS), RSS(c − 1) − RSS(c), where RSS(c) denotes the RSS obtained by fitting the data with a model of c segments; the mean square error (MSE); and negative twice the log of the maximum likelihood (−2lnL). Intuitively, in a regression model that actually has c* segments, the fitted loss should decrease gradually as a smaller c (c < c*) increases to c* and reach its minimum at c*. Thus, the optimal c is likely to be the one before which the adjacent difference of fitted losses is large and right after which it is small. Moreover, the generalized BIC introduced by [57] was also considered. The four models in Figure 14 were used to generate contaminated data to demonstrate the usefulness of the considered indexes. The results are shown in Table 4, in which the index values corresponding to the optimal c are shown in bold. Table 4 shows that MSE provided clear indications of the optimal c: MSE decreased successively for c smaller than the true value, and the decrease diminished once c was larger than the true value. Thus, MSE was helpful for choosing the number of segments. In real applications, the cutting point may not be clear. In such situations, one may try several candidate values of c based on the MSE indexes and then select the best model according to the selection rules often used in regression analysis. Although the proposed approach lacks a theoretical basis and is crude, it is a practical tool.
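The idea of inspecting successive changes in a fit index can be sketched as below. The scoring rule (largest gap between the drop into c and the drop into c + 1) is only one possible way to formalize "large before, small after" and is an assumption, as are the function names and the loss values, which are invented for illustration.

```python
def successive_drops(loss_by_c):
    """Map c -> loss(c - 1) - loss(c) for consecutive segment counts c."""
    cs = sorted(loss_by_c)
    return {c: loss_by_c[prev] - loss_by_c[c] for prev, c in zip(cs, cs[1:])}


def pick_number_of_segments(loss_by_c):
    """Pick the c with a large drop just before it and a small drop just after it."""
    drops = successive_drops(loss_by_c)
    cs = sorted(drops)
    if len(cs) < 2:
        raise ValueError("need losses for at least three values of c")
    # score(c) = drop into c minus drop into c + 1; the largest score marks the elbow
    pairs = list(zip(cs, cs[1:]))
    best = max(pairs, key=lambda pair: drops[pair[0]] - drops[pair[1]])
    return best[0]


# Invented loss values (e.g., MSE) for c = 1, ..., 5 segments
losses = {1: 100.0, 2: 60.0, 3: 20.0, 4: 18.0, 5: 17.0}
print(successive_drops(losses))         # {2: 40.0, 3: 40.0, 4: 2.0, 5: 1.0}
print(pick_number_of_segments(losses))  # 3
```

By construction, this rule cannot select the smallest or the largest candidate c, so the candidate range should bracket the plausible values.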

Figure 14.

Models employed to determine the number of subdivisions through the suggested indices.

Table 4.

Determination of the number of subdivisions of models (a)–(d) in Figure 14.

Author Contributions

Conceptualization and methodology, K.-P.L. and S.-T.C.; software, S.-T.C.; validation, K.-P.L.; formal analysis, S.-T.C.; investigation, K.-P.L.; resources, K.-P.L.; data curation, K.-P.L.; writing—original draft preparation, K.-P.L.; writing—review and editing, K.-P.L. and S.-T.C.; visualization, K.-P.L.; supervision, K.-P.L.; project administration, K.-P.L.; funding acquisition, K.-P.L. and S.-T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by National Science and Technology Council, Taiwan (Grant numbers MOST 110-2118-M-025-001 and MOST 110-2118-M-003-002).

Data Availability Statement

The sources of the datasets are stated in the respective examples in which they are considered (pages 17–22).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The following lemma shows that a Laplace distribution can be expressed as a scale mixture of normal distributions.

Lemma A1.

For , let Y and V be two random variables distributed as , , where has density , .

We claim that

1. Y has a Laplace distribution with mean 0 and scale parameter b, i.e., Y has the density

   $$f(y) = \frac{1}{2b}\exp\left(-\frac{|y|}{b}\right), \quad -\infty < y < \infty. \tag{A1}$$

2. The posterior distribution of V given Y = y has a mean

Proof.

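We first state the inverse Gaussian distribution, which is used in proving Equation (A1). Consider an inverse Gaussian random variable Z distributed as IG($\mu$, $\lambda$) with density

$$f(z) = \sqrt{\frac{\lambda}{2\pi z^{3}}}\,\exp\left\{-\frac{\lambda (z-\mu)^{2}}{2\mu^{2} z}\right\},$$

where $\lambda > 0$ is a shape parameter, $\mu > 0$, and $z > 0$.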

To show (A1), it is equivalent to show for any

For computing the above integration, let , , and , then

Hence,

To prove Equation (A2), consider the posterior distribution of has a density

□
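A quick Monte Carlo check can make the scale-mixture idea concrete. The sketch below uses one standard representation, which may differ from the parametrization in Lemma A1: if V is exponential with mean 2b² and Y given V = v is N(0, v), then E[exp(itY)] = E[exp(−t²V/2)] = 1/(1 + b²t²), which is the characteristic function of a Laplace distribution with mean 0 and scale b. The function name and the chosen values of b and n are illustrative assumptions.

```python
import math
import random


def sample_laplace_via_mixture(b, n, seed=0):
    """Draw n values of Y where V ~ Exponential(mean 2*b^2) and Y | V = v ~ N(0, v)."""
    rng = random.Random(seed)
    rate = 1.0 / (2.0 * b * b)          # expovariate takes the rate, i.e. 1 / mean
    draws = []
    for _ in range(n):
        v = rng.expovariate(rate)        # latent variance
        draws.append(rng.gauss(0.0, math.sqrt(v)))
    return draws


b = 1.5
ys = sample_laplace_via_mixture(b, 200_000)
mean_abs = sum(abs(y) for y in ys) / len(ys)      # Laplace(0, b): E|Y| = b
second_moment = sum(y * y for y in ys) / len(ys)  # Laplace(0, b): Var(Y) = 2 * b^2
print(round(mean_abs, 2), round(second_moment, 2))
```

The two sample moments should land close to b and 2b², respectively, which is what a Laplace(0, b) sample would give.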

References

1. Muggeo, V.M.R. Segmented: An R package to fit regression models with broken-line relationships. R News 2008, 8, 20–25.

2. MacNeill, I.B.; Mao, Y. Change-point analysis for mortality and morbidity rate. In Applied Change Point Problems in Statistics; Sinha, B., Rukhin, A., Ahsanullah, M., Eds.; Nova Science: Hauppauge, NY, USA, 1995; pp. 37–55.

3. Korkas, K.K.; Fryzlewicz, P. Multiple change-point detection for non-stationary time series using wild binary segmentation. Stat. Sin. 2017, 27, 287–311.

4. Werner, R.; Valev, D.; Danov, D.; Guineva, V. Study of structural break points in global and hemispheric temperature series by piecewise regression. Adv. Space Res. 2015, 56, 2323–2334.

5. Toms, J.D.; Villard, M.-A. Threshold detection: Matching statistical methodology to ecological questions and conservation planning objectives. Avian Conserv. Ecol. 2015, 10, 2.

6. Fryzlewicz, P. Wild binary segmentation for multiple change-point detection. Ann. Stat. 2014, 42, 2243–2281.

7. Korkas, K.K. Ensemble binary segmentation for irregularly spaced data with change-points. J. Korean Stat. Soc. 2022, 51, 65–86.

8. Frick, K.; Munk, A.; Sieling, H. Multiscale change point inference. J. R. Stat. Soc. Ser. B Stat. Methodol. 2014, 76, 495–580.

9. Pein, F.; Sieling, H.; Munk, A. Heterogeneous change point inference. J. R. Stat. Soc. Ser. B Stat. Methodol. 2017, 79, 1207–1227.

10. Zou, C.; Yin, G.; Feng, L.; Wang, Z. Nonparametric maximum likelihood approach to multiple change-point problems. Ann. Stat. 2014, 42, 970–1002.

11. Haynes, K.; Fearnhead, P.; Eckley, I.A. A computationally efficient nonparametric approach for changepoint detection. Stat. Comput. 2017, 27, 1293–1305.

12. Sun, J.; Sakate, D.; Mathur, S. A nonparametric procedure for changepoint detection in linear regression. Commun. Stat.-Theory Methods 2021, 50, 1925–1935.

13. Romano, G.; Rigaill, G.; Runge, V.; Fearnhead, P. Detecting Abrupt Changes in the Presence of Local Fluctuations and Autocorrelated Noise. J. Am. Stat. Assoc. 2021, 1–16.

14. Chakar, S.; Lebarbier, E.; Lévy-Leduc, C.; Robin, S. A robust approach for estimating change-points in the mean of an AR(1) process. Bernoulli 2017, 23, 1408–1447.

15. Fryzlewicz, P.; Rao, S.S. Multiple-change-point detection for auto-regressive conditional heteroscedastic processes. J. R. Stat. Soc. Ser. B Stat. Methodol. 2014, 76, 903–924.

16. Killick, R.; Fearnhead, P.; Eckley, I.A. Optimal detection of change points with a linear computational cost. J. Am. Stat. Assoc. 2012, 107, 1590–1598.

17. Maidstone, R.; Hocking, T.; Rigaill, G.; Fearnhead, P. On optimal multiple changepoint algorithms for large data. Stat. Comput. 2017, 27, 519–533.

18. Tickle, S.O.; Eckley, I.A.; Fearnhead, P.; Haynes, K. Parallelization of a Common Changepoint Detection Method. J. Comput. Graph. Stat. 2020, 29, 149–161.

19. Fearnhead, P.; Rigaill, G. Changepoint Detection in the Presence of Outliers. J. Am. Stat. Assoc. 2019, 114, 169–183.

20. Aminikhanghahi, S.; Cook, D.J. A survey of methods for time series change point detection. Knowl. Inf. Syst. 2017, 51, 339–367.

21. Maier, C.; Loos, C.; Hasenauer, J. Robust parameter estimation for dynamical systems from outlier-corrupted data. Bioinformatics 2017, 33, 718–725.

22. Aggarwal, C.C. Outlier analysis. In Data Mining; Springer: New York, NY, USA, 2015.

23. Peel, D.; McLachlan, G. Robust mixture modelling using the t distribution. Stat. Comput. 2000, 10, 339–348.

24. Lu, K.-P.; Chang, S.-T. Robust algorithms for multiphase regression models. Appl. Math. Model. 2020, 77, 1643–1661.

25. Zhang, F.; Li, Q. Robust bent line regression. J. Stat. Plan. Inference 2017, 185, 41–55.

26. Shi, S.; Li, Y.; Wan, C. Robust continuous piecewise linear regression model with multiple change points. J. Supercomput. 2018, 76, 3623–3645.

27. Gerstenberger, C. Robust Wilcoxon-type estimation of change-point location under short range dependence. J. Time Ser. Anal. 2018, 39, 90–104.

28. Yao, W.; Wei, Y.; Yu, C. Robust mixture regression using the t-distribution. Comput. Stat. Data Anal. 2014, 71, 116–127.

29. Osorio, F.; Galea, M. Detection of a change-point in student-t linear regression models. Stat. Pap. 2005, 47, 31–48.

30. Lin, J.-G.; Chen, J.; Li, Y. Bayesian Analysis of Student t Linear Regression with Unknown Change-Point and Application to Stock Data Analysis. Comput. Econ. 2012, 40, 203–217.

31. Lu, K.-P.; Chang, S.-T. Robust Algorithms for Change-Point Regressions Using the t-Distribution. Mathematics 2021, 9, 2394.

32. Bai, J. Estimation of multiple-regime regressions with least absolutes deviation. J. Stat. Plan. Inference 1998, 74, 103–134.

33. Ciuperca, G. Estimating nonlinear regression with and without change-points by the LAD method. Ann. Inst. Stat. Math. 2011, 63, 717–743.

34. Ciuperca, G. Penalized least absolute deviations estimation for nonlinear model with change-points. Stat. Pap. 2011, 52, 371–390.

35. Kozubowski, T.J.; Nadarajah, S. Multitude of Laplace distributions. Stat. Pap. 2010, 51, 127–148.

36. Yavuz, F.G.; Arslan, O. Linear mixed model with Laplace distribution (LLMM). Stat. Pap. 2016, 59, 271–289.

37. Nguyen, H.D.; McLachlan, G.J. Laplace mixture of linear experts. Comput. Stat. Data Anal. 2016, 93, 177–191.

38. Franczak, B.C.; Browne, R.P.; McNicholas, P.D. Mixtures of shifted asymmetric Laplace distributions. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1149–1157.

39. Bhowmick, D.; Davison, A.; Goldstein, D.R.; Ruffieux, Y. A Laplace mixture model for identification of differential expression in microarray experiments. Biostatistics 2006, 7, 630–641.

40. Song, W.; Yao, W.; Xing, Y. Robust mixture regression model fitting by Laplace distribution. Comput. Stat. Data Anal. 2014, 71, 128–137.

41. Yang, F. Robust Mean Change-Point Detecting through Laplace Linear Regression Using EM Algorithm. J. Appl. Math. 2014, 2014, 856350.

42. Jafari, A.; Yarmohammadi, M.; Rasekhi, A. Bayesian analysis to detect change-point in two-phase Laplace model. Sci. Res. Essays 2016, 11, 187–193.

43. McLachlan, G.; Peel, D. Finite Mixture Models; Wiley: New York, NY, USA, 2000.

44. Lu, K.-P.; Chang, S.-T. A fuzzy classification approach to piecewise regression models. Appl. Soft Comput. 2018, 69, 671–688.

45. Yang, M.-S. A survey of fuzzy clustering. Math. Comput. Model. 1993, 18, 1–16.

46. Rousseeuw, P.J.; Leroy, A.M. Robust Regression and Outlier Detection; Wiley-Interscience: New York, NY, USA, 1987.

47. Rousseeuw, P.J.; Van Driessen, K. A fast algorithm for the minimum covariance determinant estimator. Technometrics 1999, 41, 212–223.

48. Davison, A.; Hinkley, D. Bootstrap Methods and Their Application; Cambridge University Press: Cambridge, UK, 1997.

49. D’Urso, P.; Massari, R.; Santoro, A. Robust fuzzy regression analysis. Inf. Sci. 2011, 181, 4154–4174.

50. Maronna, R.A.; Martin, R.D.; Yohai, V.J. Robust Statistics: Theory and Methods; Wiley: New York, NY, USA, 2006.

51. Garland, T. The relation between maximal running speed and body mass in terrestrial mammals. J. Zool. 1983, 199, 157–170.

52. McMahon, T.A. Using body size to understand the structural design of animals: Quadrupedal locomotion. J. Appl. Physiol. 1975, 39, 619–627.

53. Chiodi, F.; Claudin, P.; Andreotti, B. A two-phase flow model of sediment transport: Transition from bedload to suspended load. J. Fluid Mech. 2014, 755, 561–581.

54. Clark, T. Is the Great Moderation over? An empirical analysis. Fed. Reserve Bank Kansas City Econ. Rev. 2009, 94, 5–42.

55. Bernstein, E. Reflections on Bretton Woods, The International Monetary System: Forty Years after Bretton Woods; Federal Reserve Bank of Boston: Boston, MA, USA, 1984.

56. Hawkins, D.M. Fitting multiple change-point models to data. Comput. Stat. Data Anal. 2001, 37, 323–341.

57. Ciuperca, G. A general criterion to determine the number of change-points. Stat. Probab. Lett. 2011, 81, 1267–1275.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).